Abstract

Bloom’s taxonomy provides a well-established framework for categorizing the cognitive complexity of assessment questions, ensuring alignment with course learning outcomes (CLOs). Achieving this alignment is essential for constructing meaningful and valid assessments that accurately measure student learning. However, in higher education, the large volume of questions that instructors must develop each semester makes manual classification of cognitive levels a time-consuming and error-prone process. Despite various attempts to automate this classification, the highest accuracy reported in existing research has not exceeded 93.5%, highlighting the need for further advancements in this area. Furthermore, the best-performing deep learning models only reached an accuracy of 86%. These results emphasize the need for improvement, particularly in the application of deep learning models, which have not been fully exploited for this task. In response to these challenges, our study explores a novel approach to enhance the accuracy of cognitive level classification. We leverage a combination of augmentation through synonym substitution, advanced feature extraction techniques utilizing DistilBERT and TF-IDF, and a robust ensemble model incorporating soft voting. These methods were selected to capture both semantic meaning and term frequency, allowing the model to benefit from contextual depth and statistical relevance. Additionally, Bayesian optimization is employed for hyperparameter tuning to refine the model’s performance further. The novelty of our approach lies in the fusion of sparse TF-IDF features with dense DistilBERT embeddings, optimized through Bayesian search across multiple classifiers. This hybrid design captures both term-level salience and deep contextual semantics, something not fully exploited in prior models focused solely on transformer architectures. Our soft-voting ensemble capitalizes on classifier diversity, yielding more stable and accurate results. 
This integrated approach achieved an accuracy of 96%, outperforming previous configurations, surpassing the current state-of-the-art results, and setting a new benchmark for automated cognitive level classification. These findings have significant implications for the development of high-quality, scalable assessments in educational settings.

1. Introduction

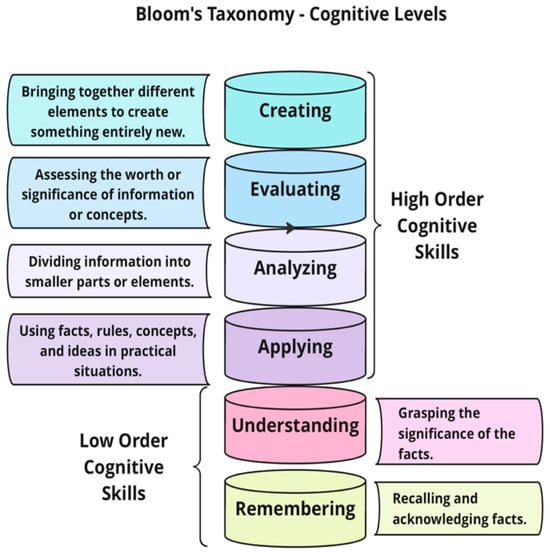

Assessing students’ cognitive abilities is a crucial element in modern pedagogical strategies [1]. Central to this approach is Bloom’s taxonomy, a framework that classifies educational objectives based on a hierarchy of complexity and specificity [2]. This taxonomy was developed by Benjamin Bloom and his colleagues in the mid-20th century. It organizes learning objectives into three domains: cognitive, affective, and psychomotor. The cognitive domain has attracted the most attention, as it focuses on the intellectual skills fundamental to education. The domain is divided into six hierarchical levels: remembering, understanding, applying, analyzing, evaluating, and creating [2,3]. These levels progress from basic recall of facts to more complex tasks such as generating new ideas or products, providing instructors with a structured framework for designing curricula and assessments that foster comprehensive cognitive development [4]. The first two levels, remembering and understanding, are referred to as lower-order thinking skills (LOTS), while the remaining four (applying, analyzing, evaluating, and creating) are categorized as higher-order thinking skills (HOTS). Although LOTS are important, memorization and comprehension alone are insufficient in the modern educational context. HOTS have become increasingly critical for university students and are considered essential for academic success [5].

Assessments play a crucial role in enhancing students’ learning and shaping the skills they acquire. Well-designed assessments enable instructors to improve students’ overall learning experience [6]. For assessments to be effective, they must target different cognitive levels, accurately measure the range of students’ abilities, and align with the CLOs. Thus, it is essential for instructors to classify both their assessment questions and CLOs to ensure quality assessments [7]. The classification of CLOs and assessment questions into different cognitive levels is typically performed by instructors based on their expertise. This process is both time-intensive and error-prone, particularly due to the large volume of questions instructors must create each semester. As a result, researchers have recognized the need to automate this classification process to improve efficiency and minimize bias, employing text classification techniques that include keyword searches, rule-based classification, natural language processing (NLP), and machine learning methods [8,9,10].

Text classification refers to the process of assigning thematic categories to textual content. It has become an essential tool across various fields, including education. In the educational domain, text classification is used for the efficient organization and analysis of large volumes of materials, such as the categorization of digital resources, the automation of open-ended response assessments, the evaluation of student essays, the detection of plagiarism, and the classification of assessment questions based on cognitive level [11]. Advancements in NLP and machine learning technologies have significantly transformed the field of text classification.

Traditional machine learning techniques, such as support vector machines (SVM), decision trees (DT), naive Bayes (NB), and K-nearest neighbors (KNN), have been widely employed for text classification tasks [4,12,13,14]. However, deep learning methods have shown superior performance in many NLP tasks, including text categorization [15]. Deep learning models differ from traditional machine learning models primarily in how they perform feature extraction. Traditional models often rely on manually engineered features and techniques, such as term frequency-inverse document frequency (TF-IDF) or count vectorization, to convert text into numerical representations, which may overlook the relational and semantic nuances between words [16]. In contrast, deep learning models use pre-trained or specifically trained word embeddings, such as word to vector (Word2Vec), FastText, and embeddings from language models (ELMo), to capture richer contextual information and the relationships between words. This enables deep learning models to handle more complex tasks, such as text classification, with greater accuracy [17,18].

Several studies employed various traditional machine learning and deep learning techniques to classify the cognitive levels of assessment questions. Despite these efforts, the highest reported accuracy achieved was 93.5%, utilizing a traditional machine learning model [19]. In contrast, the best-performing deep learning models only reached an accuracy of 86% [20]. These results highlight the need for improvement, particularly in the application of deep learning models, which have not been fully exploited for this task. To address these gaps, our study introduces a hybrid model designed to enhance the classification of cognitive levels in assessment questions. The model was developed and tested using a widely recognized benchmark dataset, which contains essay-style and short-answer questions. The model leverages distilled bidirectional encoder representations from transformers (DistilBERT) and TF-IDF for feature extraction, applies data augmentation through synonym substitution, and integrates an ensemble approach using soft voting. Furthermore, Bayesian optimization is employed to fine-tune hyperparameters, further improving the model’s performance.

The remainder of the paper is organized as follows: Section 2 provides a comprehensive literature review, analyzing key research findings in the field. Section 3 outlines the methodology and framework of the proposed model, detailing its design and implementation for enhancing the automatic classification of assessment questions. Section 4 presents the results, followed by a discussion in Section 6. Finally, Section 7 concludes the paper.

2. Background

2.1. Bloom’s Taxonomy

Bloom’s taxonomy, a framework developed by Benjamin Bloom and his colleagues in the 1950s, remains a foundational tool in education, providing a structured way to categorize educational outcomes by cognitive complexity [4]. The taxonomy was later revised in 2001 by Anderson and Krathwohl [21] to reflect advancements in the understanding of cognitive processes. The revised taxonomy includes six cognitive levels: remember, understand, apply, analyze, evaluate, and create. Each level represents a step in the cognitive development process, guiding instructors in designing assessments and learning activities that foster deeper understanding and mastery of subjects. The taxonomy has been adapted to include verbs and action-oriented language to better align assessment activities with specific cognitive skills, thereby making educational outcomes clearer and more effective [22].

The taxonomy facilitates a systematic approach to developing CLOs, instructional activities, and assessment tasks that target specific cognitive skills [22]. Instructors can design more focused and effective learning experiences by categorizing CLOs into different cognitive levels. A well-balanced course should include a mix of lower-level outcomes, which emphasize recall and comprehension, as well as higher-level outcomes that challenge students to apply knowledge, analyze complex information, evaluate arguments, and generate new ideas or solutions, as illustrated in Figure 1. Assessment questions should then be carefully aligned with the specific outcomes they are intended to assess. For instance, if a learning outcome is at a higher cognitive level, it should not be evaluated using questions that only test lower cognitive skills. Similarly, the questions should not exceed the cognitive level of the intended outcome [23].

Figure 1.

Bloom’s taxonomy graphic description.

2.2. Assessing Cognitive Skills

In contemporary educational frameworks, the creation of assessments that accurately measure student achievement is crucial. Effective assessments are necessary not only for evaluating students’ knowledge, but also for assessing their ability to apply and analyze that knowledge [24]. Utilizing frameworks such as Bloom’s taxonomy to analyze assessment questions helps identify the cognitive demands placed on students [25]. For instance, a study by Muhayimana et al. [26] applied Bloom’s taxonomy to evaluate the cognitive levels of English exam questions and found a predominant emphasis on lower-order thinking skills (LOTS). This discovery underscores the necessity for assessments that equally promote higher-order thinking skills (HOTS) to meet educational objectives and support comprehensive cognitive development in students.

Expanding upon these findings, another study by Tsaparlis et al. [27] examined the Nationwide Chemistry Examination in Greece and observed a tendency to favor HOTS over LOTS. This shift toward more cognitively demanding tasks correlated with lower student performance, suggesting that an overemphasis on HOTS might hinder student success. Tsaparlis et al. advocate for a more balanced approach to assessment, one that challenges students across both HOTS and LOTS to foster well-rounded cognitive growth.

Adams [28] explained that critical thinking can be cultivated across all cognitive levels, but its depth and complexity increase at higher levels. At the LOTS level, tasks might involve identifying relationships or evaluating the relevance of information, laying the groundwork for more advanced thinking. At the HOTS level, students are encouraged to analyze complex problems, evaluate evidence, and synthesize ideas, fostering advanced reasoning. Similarly, Nurmatova and Altun [29] argued that problem-solving abilities progress from applying known concepts at the LOTS level to tackling complex, open-ended problems at the HOTS level. According to Lourdusamy et al. [30], deep conceptual understanding emerges when students move beyond simple memorization at the LOTS level to integrate and evaluate concepts at the HOTS level.

These studies highlight an important educational imperative: the need for a balanced representation of both LOTS and HOTS. Achieving this balance is essential for enhancing assessment practices that better promote critical thinking, problem-solving abilities, and deep conceptual understanding among students. In response to these findings, our research introduces a hybrid model that automatically classifies assessment questions by cognitive level, helping instructors design assessments that are balanced and aligned with educational goals, so that both HOTS and LOTS are adequately represented and measured.

2.3. Related Work

Several studies employed various methodologies to classify the cognitive levels of assessment questions based on Bloom’s taxonomy. These methods can be categorized based on the classification approach they utilized into three main categories: rule-based approaches [22,31], traditional machine learning models [32,33], and deep learning models.

2.3.1. Rule-Based Approaches

In 2023, a study by Goh et al. [34] utilized a rule-based approach involving question preprocessing through verb extraction using the Stanford Part-of-Speech (POS) Tagger and parser to identify significant word tokens (primarily verbs). The WordNet similarity approach was then applied for question classification. The dataset comprised 200 examination questions from various subjects, incorporating both single-sentence and multi-sentence types. The model achieved an overall accuracy of 83%. While rule-based methods are relatively straightforward, they rely heavily on predefined rules, limiting their adaptability to more complex contexts and unseen data.

2.3.2. Traditional Machine Learning Approaches

Several studies explored traditional machine learning techniques for cognitive level classification. In 2022, a study by Patil et al. [19] applied a customized TFPOS-IDF methodology for feature extraction, followed by an ensemble classification technique using KNN, SVM, and NB algorithms. The dataset included 755 short-answer and essay questions. Compared to other configurations, this model demonstrated a superior accuracy of 93.5%, which remains the most successful result among traditional machine learning methods. Another study, conducted by Mohammed et al. in 2020 [35], employed modified TF-IDF (TFPOS-IDF) and Word2Vec for feature extraction, testing models such as KNN, logistic regression (LR), and SVM on two datasets of 141 and 600 open-ended questions. The highest accuracy achieved was 89.7% on the larger dataset, highlighting the effectiveness of feature engineering in improving classification outcomes. Similarly, in 2012, a study by Yahya et al. [36] used data preprocessing, feature extraction, and SVM for classification. The dataset consisted of 600 open-ended questions, and the study reported a classification accuracy of 92.3%.

2.3.3. Deep Learning Approaches

Deep learning models have also been applied to cognitive level classification, though with varying success. A 2023 study by Vankawala et al. [37] employed feature extraction using TF-IDF and evaluated models such as KNN, SVM, multinomial naive Bayes (MNBC), long short-term memory (LSTM), bidirectional recurrent neural network (BiRNN), and bidirectional long short-term memory (BiLSTM) on a dataset of 1500 questions. The BiLSTM model achieved an accuracy of 80.03% under this setup, which, while promising, is still lower than the best traditional machine learning models. Another 2023 study by Gani et al. [20] explored both non-contextual word embeddings, i.e., Word2Vec, global vectors for word representation (GloVe), and FastText, and contextual word embeddings, i.e., BERT, RoBERTa, and efficiently learning an encoder that classifies token replacements accurately (ELECTRA). Using a combined dataset of 2522 questions, the study achieved an accuracy of 86% and a similarly high F1-score, showcasing the potential of contextual embeddings in improving classification accuracy. However, the relatively small dataset size may have constrained the full performance potential of the deep learning models. Table 1 provides a summary of the top-performing studies for each approach.

Table 1.

Summary of the top-performing studies on cognitive level classification of assessment questions.

While traditional machine learning methods achieved the highest reported accuracy of 93.5%, deep learning models have yet to match this performance, with the highest reported accuracy being 86%. These findings suggest that there is still room for improvement, particularly in optimizing deep learning techniques for cognitive level classification. This study introduces a hybrid model that integrates both traditional and deep learning approaches, aiming to push the boundaries of current classification accuracy.

3. The Proposed Methodology

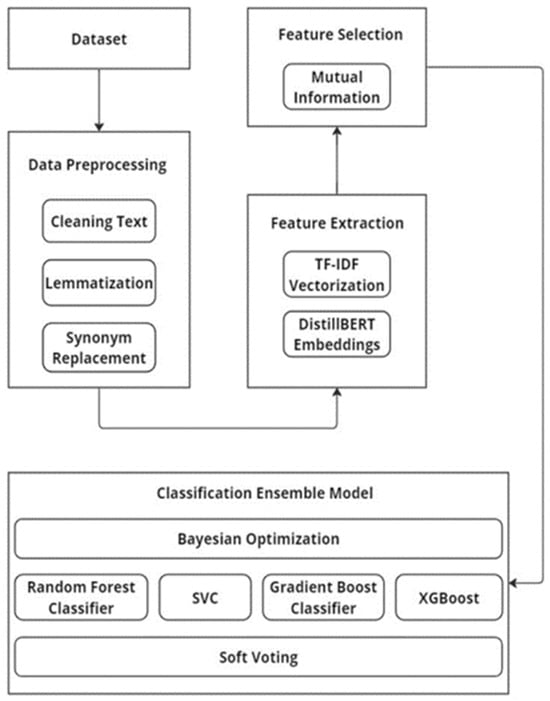

The proposed methodology for classifying the cognitive levels of assessment questions is depicted in Figure 2. The methodology involves multiple stages. It starts with data preprocessing, including text cleaning, lemmatization, and synonym replacement to enhance the dataset. Feature extraction using TF-IDF vectorization and DistilBERT embeddings then captures both frequency-based and semantic information, after which mutual information is used for feature selection. The selected features are input into an ensemble model that combines four classifiers, i.e., random forest (RF), SVM, gradient boosting (GB), and eXtreme gradient boosting (XGBoost), using soft voting for final predictions. Bayesian optimization is applied to fine-tune the model’s performance.

Figure 2.

Classification model.

3.1. Dataset

The dataset used in this study was sourced from a publicly available question bank developed by Yahya et al. [36]. It comprises 600 short-answer and essay questions, evenly distributed across Bloom’s six cognitive levels, with 100 questions assigned to each level. This balanced distribution ensures equal representation of the cognitive categories, making the dataset well suited for training and evaluating machine learning models that classify cognitive levels based on Bloom’s taxonomy. The subject areas covered are diverse, including natural sciences, mathematics, technology, and general knowledge, making the dataset applicable to a broad spectrum of academic disciplines at the higher education level. The questions are written in English and are aimed primarily at undergraduate settings. The dataset has been utilized by the top-performing models discussed in our literature review [19,37,38], making it a reliable benchmark for comparison. This ensures that our evaluation aligns with recognized benchmarking practices in the field and allows for a meaningful assessment of our model’s performance relative to existing approaches. The dataset is publicly available and can be accessed at https://doi.org/10.1371/journal.pone.0230442.s002 (accessed on 3 January 2025).

3.2. Data Preprocessing

3.2.1. Data Cleaning and Lemmatization

The first step in preprocessing the dataset involved cleaning the textual data to remove unnecessary and potentially obscuring elements. We applied regular expressions to eliminate non-alphanumeric symbols from the corpus, ensuring that irrelevant characters were excluded from further analysis. Additionally, we removed stop words—common terms with high frequency but minimal semantic contribution to the overall meaning of the text. After the cleaning process, the corpus was subjected to lemmatization using the spaCy NLP library, as detailed by Alammary [38]. This lemmatization step reduced words to their lemma or root form, standardizing variations of the same word to improve feature extraction and model accuracy.
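The cleaning and lemmatization steps can be sketched as follows. This is a minimal illustration, not the authors' Algorithm 1: the regular expression, the abbreviated stop-word list, and the spaCy model name `en_core_web_sm` are assumptions, since the paper does not specify them. Keeping question words such as "what" and "how" is also our assumption. spaCy is imported lazily so that the cleaning step runs even where spaCy is not installed.

```python
import re

# Hypothetical, abbreviated stop-word list (assumption: the paper does not name its list).
# Question words such as "what" and "how" are kept because they may carry level cues.
STOP_WORDS = {"a", "an", "the", "of", "in", "on", "at", "and", "or",
              "is", "are", "was", "were", "to", "for", "with", "by"}


def clean(text: str) -> list[str]:
    """Lowercase, replace non-alphanumeric symbols with spaces, and drop stop words."""
    text = re.sub(r"[^a-z0-9\s]", " ", text.lower())
    return [tok for tok in text.split() if tok not in STOP_WORDS]


def lemmatize(tokens: list[str], nlp=None) -> list[str]:
    """Reduce each token to its lemma with spaCy (the model name is an assumption)."""
    if nlp is None:
        import spacy  # lazy import: only needed for this step
        nlp = spacy.load("en_core_web_sm", disable=["parser", "ner"])
    return [tok.lemma_ for tok in nlp(" ".join(tokens))]
```

For example, `clean("What is the role of mitochondria in a cell?")` yields `['what', 'role', 'mitochondria', 'cell']`, which `lemmatize` would then pass through spaCy.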

3.2.2. Augmentation Through Synonym Substitution

To address challenges posed by language variability and to enrich the semantic diversity of our dataset, we implemented an augmentation strategy based on synonym substitution. This approach not only enlarged the dataset but also aimed to make the models more resilient to diverse linguistic inputs, thereby improving their predictive robustness [39]. The augmentation process involved substituting selected words in the training questions with their synonyms, a technique that preserves the essential meaning of each question while introducing variations in expression [40]. Through synonym substitution, the size of our training dataset was effectively doubled. Importantly, this augmentation was applied exclusively to the training data. The testing data were kept free from any augmentation to maintain their integrity as representations of unseen, real-world data, ensuring that our evaluations reflect the model’s genuine performance. Data cleaning, lemmatization, and augmentation, as illustrated in Figure 2, were carried out as detailed in Algorithm 1; together, these steps prepare the raw data for feature extraction.
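The doubling of the training split can be sketched as below: each training question is kept and one synonym-substituted copy is appended, while the test split is never passed through this function. The synonym table, the function names, and the choice of replacing one word per question are hypothetical; the paper does not say where its synonyms came from (WordNet is a common source). Because the action verb is often the strongest cue to a question's Bloom level, a real table should map each verb only to synonyms at the same level.

```python
import random

# Hypothetical synonym table; each verb maps to synonyms at the same Bloom level.
SYNONYMS = {
    "explain": ["describe", "clarify"],
    "list": ["enumerate", "name"],
    "compare": ["contrast"],
    "design": ["devise", "create"],
    "role": ["function"],
}


def synonym_substitute(tokens, n_replace=1, rng=None):
    """Return a copy of `tokens` with up to `n_replace` words swapped for synonyms."""
    rng = rng or random.Random(0)
    out = list(tokens)  # never modify the original question
    candidates = [i for i, tok in enumerate(out) if tok in SYNONYMS]
    for i in rng.sample(candidates, min(n_replace, len(candidates))):
        out[i] = rng.choice(SYNONYMS[out[i]])
    return out


def augment_training_set(questions, labels, rng=None):
    """Double the training set: originals plus one augmented copy with the same label."""
    rng = rng or random.Random(0)
    augmented = [synonym_substitute(q, rng=rng) for q in questions]
    return questions + augmented, labels + labels
```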

Algorithm 1.

Pseudo-code of preprocessing and augmentation.

3.3. Feature Extraction

In this study, two methodologies were employed for feature extraction: DistilBERT embeddings and TF-IDF vectorization. Each approach contributes uniquely to the representation of textual data. DistilBERT embeddings capture rich, contextualized word representations by leveraging deep transformer models, while TF-IDF vectorization focuses on the statistical significance of words within the corpus, ensuring that both semantic depth and term frequency relevance are considered in the classification task.

3.3.1. DistilBERT Embeddings

DistilBERT is a smaller, faster version of the original bidirectional encoder representations from transformers (BERT) model, designed to retain much of BERT’s accuracy while improving computational efficiency [41]. Technically, DistilBERT is built through a process known as “knowledge distillation,” where the smaller model (student) learns from a larger pre-trained model (teacher) by approximating its predictions. DistilBERT uses fewer layers (6 compared to the 12 of BERT-base), but retains the same hidden size and number of attention heads, ensuring it still captures complex linguistic patterns. The model operates on the transformer architecture, which processes text bidirectionally, meaning it analyzes the context of a word by looking at both the preceding and succeeding tokens [42,43]. This bidirectional attention mechanism allows DistilBERT to capture deep contextual dependencies between words, making it effective for NLP tasks such as text classification and question answering.

In this study, DistilBERT was employed to extract rich semantic embeddings from the dataset of assessment questions, enhancing our model’s classification accuracy. The model’s ability to interpret not just the words, but also their contextual usage, allows for a more nuanced analysis of question complexity and cognitive demand. The hyperparameter settings for DistilBERT in our experiments were as follows:

- Model variant: ‘distilbert-base-uncased’

- Maximum sequence length: 512 tokens

- Batch size: 16

- Learning rate: 2 × 10⁻⁵

- Number of training epochs: 4

- Optimizer: AdamW with a weight decay of 0.01

These parameters were optimized to balance computational efficiency with predictive performance, ensuring rapid processing without sacrificing accuracy. By leveraging DistilBERT’s capabilities, we aim to refine the assessment of cognitive levels in educational settings, thus providing more precise and actionable insights for educators.
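One vector per question can be obtained with the Hugging Face `transformers` library, as sketched below. This is an assumption-laden sketch rather than the authors' code: the paper lists fine-tuning hyperparameters (batch size, learning rate, epochs, AdamW) but does not say how a single vector per question was derived, so here the pre-trained `distilbert-base-uncased` model is used without fine-tuning and its final hidden states are mean-pooled over non-padding tokens (taking the first token's vector is a common alternative). The heavy imports are deferred into `embed_questions` so that the pooling helper can be checked on its own.

```python
import numpy as np


def mean_pool(hidden: np.ndarray, mask) -> np.ndarray:
    """Average token vectors (batch, seq, dim) over positions where mask == 1."""
    mask = np.asarray(mask, dtype=float)[..., None]   # (batch, seq, 1)
    summed = (hidden * mask).sum(axis=1)              # (batch, dim)
    counts = np.clip(mask.sum(axis=1), 1.0, None)     # guard against empty rows
    return summed / counts


def embed_questions(questions, model_name="distilbert-base-uncased", max_length=512):
    """Return one 768-dimensional vector per question (assumed pooling: token mean)."""
    import torch
    from transformers import AutoModel, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(model_name)
    model = AutoModel.from_pretrained(model_name).eval()
    enc = tokenizer(questions, padding=True, truncation=True,
                    max_length=max_length, return_tensors="pt")
    with torch.no_grad():
        hidden = model(**enc).last_hidden_state       # (batch, seq, 768)
    return mean_pool(hidden.numpy(), enc["attention_mask"].numpy())
```

Calling `embed_questions(["Define osmosis.", "Design an experiment to test osmosis."])` would return a `(2, 768)` array, downloading the model weights on first use. Masking matters because padded positions would otherwise pull shorter questions toward the padding representation.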

3.3.2. TF-IDF Vectorization

TF-IDF vectorization was deployed in our study to quantify the importance of words within the corpus of assessment questions. By converting the textual data into a sparse matrix of weighted term frequencies, TF-IDF allowed us to capture the significance of specific words based on their occurrences across different questions. The TF component measures the frequency of a word within a specific question, normalized over the total number of words in that question, while the IDF component logarithmically scales the inverse proportion of questions containing the word [44]. Mathematically, TF-IDF is calculated as follows:

$$
\text{TF-IDF}(t, d, D) = \text{TF}(t, d) \times \text{IDF}(t, D), \qquad
\text{TF}(t, d) = \frac{f_{t,d}}{\sum_{t' \in d} f_{t',d}}, \qquad
\text{IDF}(t, D) = \log \frac{|D|}{\left|\{ d \in D : t \in d \}\right|}
$$

where $t$ is the term, $d$ is the document (question), $D$ is the corpus [35], and $f_{t,d}$ is the number of occurrences of $t$ in $d$. The weighting balances frequency with distinctiveness, which allows it to emphasize meaningful words that help differentiate between cognitive levels. In our study, TF-IDF was used as a key feature extraction technique, transforming each question into a vector representation that could be input into the classification models. This representation was crucial for identifying the patterns that corresponded to various cognitive levels, supporting the overall accuracy of the model’s predictions.
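The TF and IDF components described above can be computed directly, as in the minimal pure-Python sketch below (the function name and toy questions are ours). Note that library implementations often differ: scikit-learn's `TfidfVectorizer`, for instance, uses raw counts for TF, a smoothed IDF of ln((1 + n)/(1 + df)) + 1, and L2-normalizes each row by default; the paper does not say which variant it used.

```python
import math
from collections import Counter


def tf_idf(docs):
    """Compute TF-IDF weights for a list of tokenized questions.

    TF(t, d)  = occurrences of t in d / number of tokens in d
    IDF(t, D) = log(|D| / number of questions containing t)
    Returns one {term: weight} dict per question.
    """
    n_docs = len(docs)
    doc_freq = Counter()
    for doc in docs:
        doc_freq.update(set(doc))  # count each term once per question
    idf = {term: math.log(n_docs / df) for term, df in doc_freq.items()}
    vectors = []
    for doc in docs:
        counts = Counter(doc)
        vectors.append({term: (c / len(doc)) * idf[term] for term, c in counts.items()})
    return vectors
```

On the toy questions `[["define", "osmosis"], ["explain", "osmosis"], ["design", "experiment", "osmosis"]]`, "osmosis" appears in every question, so its IDF is log 1 = 0 and its weight vanishes, whereas "define" receives (1/2) · log 3. This is how the scheme down-weights words that do not distinguish one question from another.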

3.3.3. Integration of Features

Algorithm 2 illustrates our approach to integrating DistilBERT embeddings and TF-IDF vectorization for feature extraction. As depicted in Figure 2, where it forms the central component of the workflow, this stage processes the preprocessed data to extract DistilBERT embeddings and compute TF-IDF scores, constructing robust representations of the assessment questions. Initially, each question is tokenized and transformed into an embedding vector using the DistilBERT model. Specifically, DistilBERT produces a sentence-level embedding for each question that encapsulates its overall contextual meaning rather than focusing on individual words. This allows the model to grasp the complex interplay of terms within the context of each question. Simultaneously, we compute TF-IDF scores that highlight the statistical relevance of terms within the questions, based on their frequency and distribution across the dataset.

The integration of these two types of features, deep contextual embeddings from DistilBERT and statistical insights from TF-IDF, occurs through concatenation. Each 768-dimensional embedding vector from DistilBERT is concatenated with the corresponding sparse TF-IDF vector, resulting in a comprehensive feature vector that combines semantic depth with term specificity. This combination ensures that our classification model benefits from a rich, nuanced representation of both the contextual and lexical content of each question.
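The concatenation can be sketched as follows, assuming (hypothetically) that each question's TF-IDF weights are stored as a `{term: weight}` dictionary and that a fixed vocabulary defines the column order. The sketch builds a dense matrix for clarity; with a realistic vocabulary, keeping the TF-IDF block sparse and joining it with `scipy.sparse.hstack` would save memory. The function names are ours.

```python
import numpy as np


def tfidf_to_matrix(vectors, vocabulary):
    """Turn per-question {term: weight} dicts into a dense (n_questions, |V|) matrix."""
    index = {term: j for j, term in enumerate(vocabulary)}
    matrix = np.zeros((len(vectors), len(vocabulary)))
    for i, vec in enumerate(vectors):
        for term, weight in vec.items():
            if term in index:  # terms outside the vocabulary are ignored
                matrix[i, index[term]] = weight
    return matrix


def fuse_features(embeddings, tfidf_matrix):
    """Concatenate embeddings (n, 768) with TF-IDF columns (n, |V|), row by row."""
    if embeddings.shape[0] != tfidf_matrix.shape[0]:
        raise ValueError("both feature blocks must describe the same questions")
    return np.hstack([embeddings, tfidf_matrix])
```

Each fused row therefore has 768 + |V| columns: the first 768 carry the contextual embedding and the remainder carry the term weights, which is the combined vector that feature selection then operates on.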

As shown in Figure 2, this feature extraction process is pivotal, linking the initial text preprocessing with the final classification stages. By merging advanced neural network outputs with traditional vectorization techniques, we create a multidimensional feature set that enhances our model’s accuracy in classifying cognitive levels. This methodological integration is essential for leveraging the strengths of both the linguistic context and statistical significance, thereby improving the model’s performance in educational assessment tasks.

Algorithm 2.

Pseudo-code of feature extraction.

3.4. Feature Selection

Feature selection plays a crucial role in optimizing machine learning models by refining the feature set to include only the most informative components. By eliminating irrelevant or redundant features, this process reduces model complexity, enhances computational efficiency, and can improve the model’s generalization performance. In the context of classifying cognitive levels, selecting the most relevant features is vital to ensure that the model focuses on data elements that significantly impact the prediction task [45,46].

In this study, mutual information was employed as the primary technique for feature selection due to its ability to measure the dependency between features and target labels. Unlike linear correlation measures, mutual information can capture both linear and non-linear relationships between variables, making it particularly effective for this task. By evaluating how the presence or absence of specific features reduces uncertainty about the target label, mutual information identifies the features most indicative of the cognitive levels [47]. This approach allows the model to account for complex interactions within the data, leading to more accurate classification.

Feature selection in our study was implemented using the SelectKBest function in combination with the mutual_info_classif function from the scikit-learn library. This approach ranks the features by their mutual information scores and retains only the highest-scoring ones. Specifically, we set the parameter k = 6000, which balances model complexity and the comprehensiveness of the feature set. Algorithm 3 outlines the feature selection procedure, which appears in Figure 2 as the final preparatory step before model training. It details how the top k features were selected, enabling the model to focus on the most relevant predictors for classifying assessment questions by cognitive level.
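The selection step can be sketched with the two scikit-learn components named above. Capping `k` at the number of available columns is our addition, so that the sketch also runs on small toy matrices; the fixed `random_state` is an assumption, passed through `functools.partial` because `mutual_info_classif` adds small random noise to continuous features.

```python
from functools import partial

import numpy as np
from sklearn.feature_selection import SelectKBest, mutual_info_classif


def select_top_k(X, y, k=6000, random_state=0):
    """Keep the k columns of X with the highest mutual information with y."""
    k = min(k, X.shape[1])  # our guard for matrices with fewer than k columns
    score = partial(mutual_info_classif, random_state=random_state)
    selector = SelectKBest(score_func=score, k=k)
    X_selected = selector.fit_transform(X, y)
    return X_selected, selector.get_support(indices=True)
```

In a full pipeline the selector would be fitted on the training split only and then applied to the test split with `selector.transform`, so that test labels never influence which features are kept.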

Algorithm 3.

Pseudo-code of feature selection.

3.5. Model Optimization and Classification

To evaluate and classify the cognitive levels of assessment questions, the dataset was split into training and testing sets using an 80/20 ratio. This split ensured that 80% of the data were used for training the models, while 20% were reserved for evaluating their performance.
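An 80/20 split that preserves the balance of the six levels can be sketched as below. The paper states the ratio but not whether the split was stratified or which seed was used, so both are assumptions here; scikit-learn's `train_test_split(X, y, test_size=0.2, stratify=y)` performs the same operation in one call.

```python
import random
from collections import defaultdict


def stratified_split(questions, labels, test_frac=0.2, seed=42):
    """Split (question, label) pairs so each level keeps the same train/test ratio."""
    by_level = defaultdict(list)
    for q, label in zip(questions, labels):
        by_level[label].append(q)
    rng = random.Random(seed)  # hypothetical seed
    train, test = [], []
    for label, group in by_level.items():
        group = group[:]  # copy before shuffling
        rng.shuffle(group)
        n_test = round(len(group) * test_frac)
        test.extend((q, label) for q in group[:n_test])
        train.extend((q, label) for q in group[n_test:])
    return train, test
```

With the 600-question dataset (100 per level), this yields 480 training and 120 test questions, 20 per level in the test split; doubling the training split through augmentation would then give 960 training examples.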

Four classifiers that demonstrated strong performance in previous studies were selected for the task: random forest (RF), support vector machine (SVM), gradient boosting (GB), and extreme gradient boosting (XGBoost). These algorithms were chosen based on their ability to handle complex classification tasks, their proven success in text classification, and their complementary learning characteristics.

To enhance the performance of each model, Bayesian optimization was employed for hyperparameter tuning. Unlike grid or random search methods, Bayesian optimization builds a probabilistic model of the objective function and uses it to explore the hyperparameter space more intelligently. It balances exploration and exploitation by using information from previous evaluations to guide the search toward better-performing configurations.

In our study, Bayesian optimization was applied independently to each classifier using defined search ranges (as presented in Table 2). The objective was to maximize validation accuracy while minimizing the number of iterations required to find optimal hyperparameters. This approach reduced computational overhead and improved overall model accuracy [48].
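To make the balance between exploration and exploitation concrete, the sketch below implements a minimal one-dimensional Bayesian optimizer from scratch: a Gaussian-process surrogate with an RBF kernel and an expected-improvement acquisition function evaluated on a grid of candidates. It is illustrative only. The paper does not name its optimization library (scikit-optimize's `BayesSearchCV` and Optuna are common choices), the kernel length scale and iteration budget are assumptions, and `toy_validation_accuracy` is a hypothetical stand-in for a classifier's validation accuracy as a function of log10 of an SVM's C.

```python
import math

import numpy as np


def rbf(a, b, length_scale=1.0):
    """Squared-exponential kernel between two 1-D arrays of points."""
    d = a[:, None] - b[None, :]
    return np.exp(-0.5 * (d / length_scale) ** 2)


def gp_posterior(x_obs, y_obs, x_query, noise=1e-6):
    """Zero-mean Gaussian-process posterior mean and standard deviation at x_query."""
    K = rbf(x_obs, x_obs) + noise * np.eye(len(x_obs))
    K_s = rbf(x_obs, x_query)  # (n_obs, n_query)
    mean = K_s.T @ np.linalg.solve(K, y_obs)
    var = 1.0 - np.sum(K_s * np.linalg.solve(K, K_s), axis=0)
    return mean, np.sqrt(np.clip(var, 1e-12, None))


def expected_improvement(mean, std, best):
    """EI for maximization: E[max(f(x) - best, 0)] under the GP posterior."""
    z = (mean - best) / std
    cdf = np.array([0.5 * (1.0 + math.erf(v / math.sqrt(2.0))) for v in z])
    pdf = np.exp(-0.5 * z ** 2) / math.sqrt(2.0 * math.pi)
    return (mean - best) * cdf + std * pdf


def bayes_opt(objective, low, high, n_init=3, n_iter=12, seed=0):
    """Maximize a 1-D objective on [low, high] with a GP surrogate and EI."""
    rng = np.random.default_rng(seed)
    grid = np.linspace(low, high, 200)          # candidate hyperparameter values
    x_obs = rng.uniform(low, high, n_init)      # initial random evaluations
    y_obs = np.array([objective(x) for x in x_obs])
    for _ in range(n_iter):
        scale = y_obs.std() or 1.0
        y_norm = (y_obs - y_obs.mean()) / scale  # standardize observed scores
        mean, std = gp_posterior(x_obs, y_norm, grid)
        x_next = grid[np.argmax(expected_improvement(mean, std, y_norm.max()))]
        x_obs = np.append(x_obs, x_next)
        y_obs = np.append(y_obs, objective(x_next))
    best = int(np.argmax(y_obs))
    return x_obs[best], y_obs[best]


def toy_validation_accuracy(log10_c):
    """Hypothetical stand-in for validation accuracy as a function of log10(C)."""
    return 0.96 - 0.05 * (log10_c - 1.0) ** 2
```

Expected improvement is near zero at points already evaluated (where the posterior is certain) and large where the surrogate is either promising or uncertain, which is what steers each new evaluation; `bayes_opt(toy_validation_accuracy, -3.0, 3.0)` returns the best log10(C) found and its score after 15 evaluations.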

Table 2.

Hyperparameter ranges for model optimization.

After individual optimization, the four classifiers were integrated into an ensemble classifier using soft voting. This ensemble leverages the combined strengths of the classifiers by averaging, optionally with weights, the class probabilities that each one predicts. In contrast to hard voting, where each model casts a single vote for its predicted class regardless of its confidence, soft voting takes into account the confidence level of each classifier’s prediction [49]. In our study, this approach allowed the ensemble to make more informed and nuanced decisions by considering the probability scores generated by each individual model. For example, when the classifiers were divided on their predictions, the model with higher confidence (a higher probability score) influenced the final decision more strongly, leading to improved overall accuracy. This ensemble-based approach effectively boosted the predictive power and generalization of the classification task.
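The divided-classifier case described above can be made concrete with hypothetical probabilities. The NumPy sketch below compares hard and soft voting by hand; the function names are ours. In scikit-learn, `VotingClassifier(voting="soft")` performs the same (optionally weighted) probability averaging, and an `SVC` member needs `probability=True` to expose `predict_proba`.

```python
import numpy as np


def soft_vote(probas, weights=None):
    """Average per-classifier class probabilities (optionally weighted), then argmax."""
    avg = np.average(np.stack(probas), axis=0, weights=weights)  # (n_samples, n_classes)
    return avg.argmax(axis=1), avg


def hard_vote(probas):
    """Majority vote over each classifier's predicted label (ties go to the lower index)."""
    votes = np.stack([p.argmax(axis=1) for p in probas])  # (n_models, n_samples)
    n_classes = probas[0].shape[1]
    counts = np.apply_along_axis(np.bincount, 0, votes, minlength=n_classes)
    return counts.argmax(axis=0)
```

With hypothetical outputs for one question of RF [0.40, 0.35, 0.25], SVM [0.38, 0.36, 0.26], GB [0.39, 0.34, 0.27], and XGBoost [0.02, 0.95, 0.03], hard voting returns class 0 (three weak votes to one), whereas soft voting returns class 1, because its averaged probability (0.50) exceeds that of class 0 (0.2975).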

3.6. Model Evaluation

Four metrics were used to evaluate the model’s effectiveness in classifying the cognitive levels of assessment questions: accuracy, macro precision, macro recall, and macro-F1 [45].

Accuracy measures the percentage of correct predictions across all classes and is defined as follows:

$$\text{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN}$$

where TP, TN, FP, and FN denote the number of true positives, true negatives, false positives, and false negatives, respectively, across all classes. While accuracy provides an overall measure of performance, it can be misleading in multi-class classification, particularly if the model performs well on some cognitive levels but poorly on others.

To ensure balanced performance across all six cognitive levels in Bloom’s taxonomy, we employ macro precision, macro recall, and macro-F1, which treat all classes equally by computing the metric independently for each class and averaging the results [50].

Macro precision is defined as follows:

$$\text{Macro precision} = \frac{1}{N}\sum_{i=1}^{N} \frac{TP_i}{TP_i + FP_i}$$

where N is the total number of classes, and TP_i and FP_i represent the true positives and false positives for class i. This metric evaluates how well the model avoids false positives across all cognitive levels.

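Macro recall is defined as follows:

$$\text{Macro recall} = \frac{1}{N}\sum_{i=1}^{N} \frac{TP_i}{TP_i + FN_i}$$

where FN_i is the number of false negatives for class i. Macro recall reveals whether the model recognizes every cognitive level, rather than disproportionately favoring some levels while neglecting others.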

Macro-F1, which balances precision and recall, is computed as follows:

$$\text{Macro-F1} = \frac{1}{N}\sum_{i=1}^{N} \frac{2\,P_i R_i}{P_i + R_i}$$

where P_i = TP_i / (TP_i + FP_i) and R_i = TP_i / (TP_i + FN_i) are the precision and recall for class i. This metric provides a class-balanced evaluation of model performance, with each cognitive level contributing equally to the overall score. Bloom’s taxonomy classification involves multiple cognitive levels, which are equally represented in the dataset. Macro metrics therefore ensure that the evaluation does not reward a model that favors lower- or higher-order cognitive skills, offering a fairer and more reliable assessment than overall accuracy alone.
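The four metrics above can be computed directly from a confusion matrix, as in the sketch below. It assumes that rows are true levels and columns are predicted levels. Accuracy is taken as correctly classified questions over all questions, which is the usual multi-class reading of the accuracy formula. The macro metrics follow the per-class averaging defined above. The 3×3 matrix is invented for illustration.

```python
def macro_metrics(cm):
    """Accuracy and macro precision/recall/F1 from a square confusion matrix.

    cm[i][j] = number of questions whose true level is i and predicted level is j.
    """
    n = len(cm)
    total = sum(sum(row) for row in cm)
    tp = [cm[i][i] for i in range(n)]
    fp = [sum(cm[r][i] for r in range(n)) - cm[i][i] for i in range(n)]  # column minus diagonal
    fn = [sum(cm[i]) - cm[i][i] for i in range(n)]  # row minus diagonal

    def ratio(a, b):
        return a / b if b else 0.0  # treat 0/0 as 0, a common convention

    precision = [ratio(tp[i], tp[i] + fp[i]) for i in range(n)]
    recall = [ratio(tp[i], tp[i] + fn[i]) for i in range(n)]
    f1 = [ratio(2 * p * r, p + r) for p, r in zip(precision, recall)]
    return {
        "accuracy": sum(tp) / total,
        "macro_precision": sum(precision) / n,
        "macro_recall": sum(recall) / n,
        "macro_f1": sum(f1) / n,
    }


cm = [[8, 2, 0],
      [1, 7, 2],
      [0, 1, 9]]
print(macro_metrics(cm))
```

When every class has the same number of questions, as in this example, macro recall coincides with accuracy (both are 0.8 here). This is because each class contributes the same denominator.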

4. Results

This section presents the performance of the four classifiers (RF, SVM, GB, and XGBoost), both individually and within the ensemble model. The models were evaluated primarily on accuracy and macro-F1, comparing TF-IDF vectorization, DistilBERT embeddings, and a combination of both feature extraction methods. The section also examines how preprocessing and data augmentation affect model performance. The results show the varying degrees of improvement that preprocessing and augmentation brought to each model. They also show the conditions under which combining TF-IDF vectorization and DistilBERT embeddings benefited feature extraction, and the strengths of the ensemble model.

The evaluations of our models, as presented in Table 3, Table 4, Table 5, Table 6 and Table 7, are based on single runs using the optimized parameters from the Bayesian searches. This approach allows us to assess the immediate performance of each model configuration. To verify the reliability and generalizability of our ensemble model, we further employed 7-fold cross-validation. This step was applied after the initial evaluation to test the consistency of the ensemble’s performance, to check for potential overfitting, and to confirm the model’s effectiveness across different data segments. Because the cross-validation results showed no significant variation in performance, only the single-run results are reported. Together, single runs for initial assessment and cross-validation for verification provide a comprehensive evaluation of our predictive models.
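The 7-fold procedure can be sketched as an index splitter plus a summary of per-fold scores. This sketch uses plain shuffled k-fold. Whether the study stratified folds by Bloom level, and which seed it used, are not stated here, so both are assumptions. Each sample lands in exactly one test fold, and fold sizes differ by at most one.

```python
import random
import statistics


def k_fold_indices(n_samples, k=7, seed=42):
    """Yield (train, test) index lists for shuffled k-fold cross-validation."""
    idx = list(range(n_samples))
    random.Random(seed).shuffle(idx)
    folds = [idx[i::k] for i in range(k)]  # fold sizes differ by at most one
    for i, test in enumerate(folds):
        train = [j for f, fold in enumerate(folds) if f != i for j in fold]
        yield sorted(train), sorted(test)


def summarize(scores):
    """Mean and sample standard deviation of per-fold scores."""
    return statistics.mean(scores), statistics.stdev(scores)


if __name__ == "__main__":
    for train, test in k_fold_indices(100, k=7):
        print(len(train), len(test))
```

A small standard deviation across the seven fold scores is what "no significant variation" would look like in practice. A formal test would be needed to claim statistical significance.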

4.1. RF Classifier

As seen in Table 3, the RF classifier’s performance varied considerably depending on the feature extraction method, preprocessing, and data augmentation used. With TF-IDF vectorization combined with both preprocessing and augmentation, the RF classifier performed strongly, achieving an accuracy of 92% and a macro-F1 of 0.92. This result used the following parameters: bootstrap = False, max_depth = 20, max_features = sqrt, min_samples_leaf = 1, min_samples_split = 2, and n_estimators = 200. This configuration was the highest-performing one for this classifier. Notably, combining TF-IDF and DistilBERT did not yield better results: accuracy dropped to 81% even when preprocessing and augmentation were applied.

Omitting either preprocessing or augmentation reduced performance considerably. For instance, with TF-IDF, accuracy fell to 67% in every configuration that lacked one or both steps. With DistilBERT and neither step, accuracy was 52%. The classifier’s lowest accuracy, 48%, occurred with DistilBERT when preprocessing was applied without augmentation, whereas DistilBERT with augmentation alone reached 83%. These results indicate that preprocessing and augmentation are essential for maximizing the performance of the RF classifier, with augmentation being particularly important when using DistilBERT.

Table 3.

Performance of RF classifier with different feature extraction and preprocessing techniques.

| Feat. Extr. | Data Preproc. | Data Augm. | Macro-P | Macro-R | Macro-F1 | Acc.¹ |
|---|---|---|---|---|---|---|
| TF-IDF | Yes | Yes | 0.92 | 0.93 | 0.92 | 0.92 |
| TF-IDF | Yes | No | 0.68 | 0.67 | 0.67 | 0.67 |
| TF-IDF | No | Yes | 0.68 | 0.68 | 0.67 | 0.67 |
| TF-IDF | No | No | 0.68 | 0.71 | 0.67 | 0.67 |
| DistilBERT | Yes | Yes | 0.90 | 0.90 | 0.90 | 0.90 |
| DistilBERT | Yes | No | 0.48 | 0.49 | 0.48 | 0.48 |
| DistilBERT | No | Yes | 0.84 | 0.83 | 0.83 | 0.83 |
| DistilBERT | No | No | 0.51 | 0.58 | 0.52 | 0.52 |
| Combined | Yes | Yes | 0.81 | 0.81 | 0.80 | 0.81 |
| Combined | Yes | No | 0.55 | 0.56 | 0.55 | 0.55 |
| Combined | No | Yes | 0.53 | 0.53 | 0.51 | 0.51 |
| Combined | No | No | 0.53 | 0.53 | 0.51 | 0.51 |

¹ Feat. Extr. = feature extraction; Data Preproc. = data preprocessing (includes text cleaning and lemmatization); Data Augm. = data augmentation (includes synonym replacement); Macro-P = macro precision; Macro-R = macro recall; Macro-F1 = macro F1-score; Acc. = accuracy.

4.2. SVM Classifier

The SVM classifier performed well with TF-IDF as the feature extraction method, provided that both preprocessing and data augmentation were applied. As presented in Table 4, the model achieved its highest accuracy of 93%, alongside a macro-F1 of 0.93, when TF-IDF was combined with both preprocessing and augmentation. Omitting either step reduced accuracy to 68%. This underscores the classifier’s reliance on these enhancements to reach optimal performance.

When DistilBERT was used as the feature extraction method with preprocessing and augmentation, the classifier also achieved an accuracy of 93%, demonstrating the strong potential of contextual embeddings under these conditions. However, without augmentation, accuracy with DistilBERT fell to 55–56%, whereas omitting only preprocessing reduced it to 88%. This indicates a particularly strong dependency on augmentation.

Interestingly, combining TF-IDF with DistilBERT did not improve the SVM classifier’s performance. With both preprocessing and augmentation, accuracy dropped to 80%, and without either, it declined further to 53%. These results suggest that, for the SVM classifier, either feature representation on its own is more effective than the combined approach, provided that preprocessing and augmentation are applied.

Table 4.

Performance of SVM classifier with different feature extraction and preprocessing techniques.

| Feat. Extr. | Data Preproc. | Data Augm. | Macro-P | Macro-R | Macro-F1 | Acc. |
|---|---|---|---|---|---|---|
| TF-IDF | Yes | Yes | 0.93 | 0.80 | 0.93 | 0.93 |
| TF-IDF | Yes | No | 0.70 | 0.72 | 0.68 | 0.68 |
| TF-IDF | No | Yes | 0.70 | 0.69 | 0.68 | 0.68 |
| TF-IDF | No | No | 0.70 | 0.69 | 0.68 | 0.68 |
| DistilBERT | Yes | Yes | 0.93 | 0.94 | 0.93 | 0.93 |
| DistilBERT | Yes | No | 0.56 | 0.56 | 0.55 | 0.55 |
| DistilBERT | No | Yes | 0.89 | 0.88 | 0.88 | 0.88 |
| DistilBERT | No | No | 0.56 | 0.57 | 0.56 | 0.56 |
| Combined | Yes | Yes | 0.81 | 0.81 | 0.80 | 0.80 |
| Combined | Yes | No | 0.60 | 0.61 | 0.60 | 0.60 |
| Combined | No | Yes | 0.54 | 0.54 | 0.53 | 0.53 |
| Combined | No | No | 0.54 | 0.54 | 0.53 | 0.53 |

4.3. GB Classifier

The GB classifier performed exceptionally well when TF-IDF and DistilBERT were combined with preprocessing and augmentation. In this configuration it achieved an accuracy of 94%, the highest among the individual classifiers, a result that GB also matched using TF-IDF alone under the same conditions. This shows that GB can leverage both feature extraction methods effectively when preprocessing is applied. Without augmentation, the combined-feature GB model remained strong at 93% accuracy, whereas TF-IDF alone dropped to 71% in that setting.

In contrast, when preprocessing was omitted, the GB classifier’s accuracy dropped sharply. The combined feature extraction approach fell to 62%, and the standalone TF-IDF model fell to 71%. As summarized in Table 5, preprocessing had a marked impact on the GB model’s performance.

Table 5.

Performance of GB classifier with different feature extraction and preprocessing techniques.

| Feat. Extr. | Data Preproc. | Data Augm. | Macro-P | Macro-R | Macro-F1 | Acc. |
|---|---|---|---|---|---|---|
| TF-IDF | Yes | Yes | 0.94 | 0.94 | 0.94 | 0.94 |
| TF-IDF | Yes | No | 0.735 | 0.71 | 0.71 | 0.71 |
| TF-IDF | No | Yes | 0.735 | 0.71 | 0.71 | 0.71 |
| TF-IDF | No | No | 0.735 | 0.71 | 0.71 | 0.71 |
| DistilBERT | Yes | Yes | 0.90 | 0.91 | 0.90 | 0.90 |
| DistilBERT | Yes | No | 0.50 | 0.51 | 0.48 | 0.48 |
| DistilBERT | No | Yes | 0.86 | 0.85 | 0.85 | 0.85 |
| DistilBERT | No | No | 0.56 | 0.56 | 0.55 | 0.55 |
| Combined | Yes | Yes | 0.94 | 0.94 | 0.94 | 0.94 |
| Combined | Yes | No | 0.93 | 0.93 | 0.93 | 0.93 |
| Combined | No | Yes | 0.64 | 0.62 | 0.62 | 0.62 |
| Combined | No | No | 0.64 | 0.62 | 0.62 | 0.62 |

4.4. XGBoost Classifier

The XGBoost classifier delivered competitive results, particularly when TF-IDF was used with both preprocessing and augmentation. This configuration yielded its highest accuracy of 93%, matching the SVM classifier in the same setting. However, when the combined feature extraction approach (TF-IDF and DistilBERT) was employed with preprocessing and augmentation, accuracy decreased to 83%.

As with the other classifiers, omitting preprocessing and augmentation led to a noticeable decline in performance for XGBoost. For example, the combined feature extraction method without preprocessing yielded an accuracy of 57%, highlighting the importance of preprocessing for this classifier. With TF-IDF, accuracy fell to 67% whenever either preprocessing or augmentation was omitted. In contrast, the combined features with preprocessing retained 83% accuracy even without augmentation, as detailed in Table 6.

Table 6.

Performance of the XGBoost classifier with different feature extraction and preprocessing techniques.

| Feat. Extr. | Data Preproc. | Data Augm. | Macro-P | Macro-R | Macro-F1 | Acc. |
|---|---|---|---|---|---|---|
| TF-IDF | Yes | Yes | 0.93 | 0.93 | 0.93 | 0.93 |
| TF-IDF | Yes | No | 0.67 | 0.67 | 0.67 | 0.67 |
| TF-IDF | No | Yes | 0.67 | 0.67 | 0.67 | 0.67 |
| TF-IDF | No | No | 0.67 | 0.67 | 0.67 | 0.67 |
| DistilBERT | Yes | Yes | 0.90 | 0.76 | 0.90 | 0.90 |
| DistilBERT | Yes | No | 0.60 | 0.59 | 0.57 | 0.57 |
| DistilBERT | No | Yes | 0.81 | 0.80 | 0.80 | 0.80 |
| DistilBERT | No | No | 0.58 | 0.59 | 0.57 | 0.57 |
| Combined | Yes | Yes | 0.83 | 0.84 | 0.83 | 0.83 |
| Combined | Yes | No | 0.83 | 0.84 | 0.83 | 0.83 |
| Combined | No | Yes | 0.58 | 0.58 | 0.57 | 0.57 |
| Combined | No | No | 0.58 | 0.58 | 0.57 | 0.57 |

4.5. Ensemble Classifier with Soft Voting

The ensemble model combined the predictions of the RF, SVM, GB, and XGBoost classifiers through soft voting and outperformed the individual classifiers in most configurations. It reached its peak performance when TF-IDF and DistilBERT features were combined and both preprocessing and augmentation were applied (Table 7), with a macro-F1 of 0.96 and an accuracy of 96%. This result surpasses the best performance of any single classifier (94%, GB), illustrating the advantages of ensemble learning for this task.

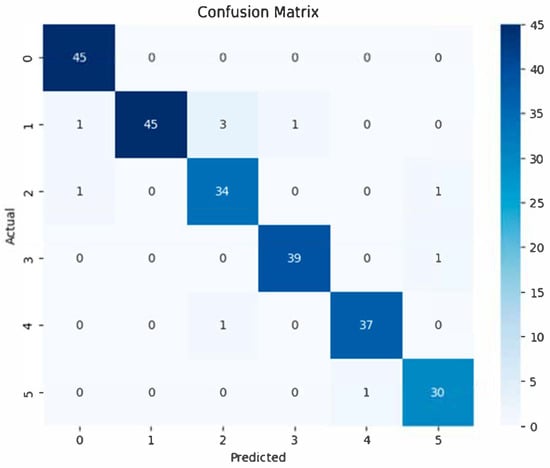

Even without augmentation, the ensemble model remained competitive in some configurations. For instance, with TF-IDF and preprocessing but no augmentation, its macro-F1 remained at 0.75, above the 0.67–0.71 achieved by the individual classifiers in the same setting. With TF-IDF features, the ensemble thus appeared less sensitive to the absence of augmentation than the individual classifiers. This suggests some robustness across feature extraction methods and preprocessing techniques. However, the observation is limited by the use of a single dataset, and further evaluation across multiple datasets is required to substantiate the model’s generalizability. Figure 3 presents the confusion matrix for the best-performing configuration. It illustrates the model’s ability to distinguish between Bloom’s levels and shows occasional confusion between adjacent cognitive categories. Table 8 summarizes how the feature extraction techniques (TF-IDF, DistilBERT, and their combination) affect performance within the ensemble model, further demonstrating the superiority of the combined approach.

Figure 3.

Confusion matrix of the ensemble classifier, “the proposed method”.

Table 7.

Performance of an ensemble classifier with different feature extraction and preprocessing techniques.

| Feat. Extr. | Data Preproc. | Data Augm. | Macro-P | Macro-R | Macro-F1 |
|---|---|---|---|---|---|
| TF-IDF | Yes | Yes | 0.93 | 0.95 | 0.95 |
| TF-IDF | Yes | No | 0.76 | 0.75 | 0.75 |
| TF-IDF | No | Yes | 0.76 | 0.75 | 0.75 |
| TF-IDF | No | No | 0.76 | 0.75 | 0.75 |
| DistilBERT | Yes | Yes | 0.91 | 0.90 | 0.90 |
| DistilBERT | Yes | No | 0.53 | 0.52 | 0.52 |
| DistilBERT | No | Yes | 0.88 | 0.87 | 0.87 |
| DistilBERT | No | No | 0.59 | 0.57 | 0.57 |
| Combined | Yes | Yes | 0.96 | 0.96 | 0.96 |
| Combined | Yes | No | 0.74 | 0.76 | 0.75 |
| Combined | No | Yes | 0.66 | 0.65 | 0.65 |
| Combined | No | No | 0.66 | 0.65 | 0.65 |

Table 8.

“Summary view” performance of an ensemble classifier with different feature extraction and preprocessing techniques.

| Feat. Extr. | Macro-P | Macro-R | Macro-F1 |
|---|---|---|---|
| TF-IDF only | 0.76 | 0.75 | 0.75 |
| DistilBERT only | 0.59 | 0.57 | 0.57 |
| Combined | 0.96 | 0.96 | 0.96 |

5. Practical Application of the Model

To illustrate the practical role of our model, we consider its application in a software engineering course taught to third-year IT students at a Saudi university. The course has five learning outcomes as shown in Table 9, with two at the applying level and one each at the understanding, analyzing, and creating levels. Assessments in this course typically include assignments, projects, presentations, discussion boards, quizzes, mid-semester exams, and final exams, with a total of 90 to 120 questions.

Table 9.

Learning outcomes of the software engineering course.

To better understand the challenges our model seeks to address, we analyzed an offering of this course where students were given six assessments, including an assignment, a project, a discussion board, a quiz, a mid-semester exam, and a final exam. A total of 91 questions were included in these assessments. The analysis revealed a significant imbalance in cognitive level distribution, with 63% of the questions assessing remembering, 16% assessing understanding, and only 21% targeting higher cognitive levels. Given the course’s learning outcomes and its placement as a third-year course, it is evident that a greater emphasis on higher-order cognitive skills was necessary.

A second issue observed was the misalignment between learning outcomes and the questions assessing them. For instance, LO4, classified at the analyzing level, was assessed only through questions targeting remembering and understanding levels, raising concerns about whether this learning outcome was evaluated at the appropriate cognitive depth. Similarly, LO1, set at the understanding level, was assessed using higher-order thinking questions, including applying, analyzing, evaluating, and creating. This misalignment suggests that students may not have been assessed at the appropriate cognitive level, potentially leading to inaccurate performance evaluations. Due to instructors’ busy schedules, the large number of questions, and the lack of tools to monitor assessment structures, lower-order cognitive skills may have been unintentionally overemphasized, and misalignments may have gone unnoticed.

The proposed model could provide an automated solution to these challenges. With an accuracy of 96%, it classifies assessment questions more accurately than previously reported models, giving instructors a clearer picture of the cognitive level distribution within their assessments. The model could be integrated into learning management systems (LMS) or assessment management systems (AMS) to classify questions immediately upon creation. This real-time classification would allow instructors to monitor and adjust the cognitive level distribution dynamically, ensuring that both lower-order and higher-order cognitive skills are adequately represented. By providing immediate feedback, the model would enable instructors to detect and correct cognitive imbalances proactively, before assessments are finalized rather than retrospectively.

The model could also support monitoring the alignment between learning outcomes and their corresponding assessment questions. By classifying and tracking the cognitive levels of both outcomes and their associated questions, instructors can determine whether each outcome is sufficiently assessed at the intended level. If a lower-order learning outcome is assessed by higher-order questions, or if a higher-order outcome is assessed only by lower-order questions, adjustments can be made before the assessments are finalized. This helps ensure that assessments are:

- valid, because they measure the intended cognitive levels;
- reliable, because questions are classified consistently and objectively;
- pedagogically sound, because they balance LOTS and HOTS in a way that supports deeper learning and constructive curriculum alignment [51,52].
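A minimal sketch of the monitoring logic described above follows. It is not a feature of any existing LMS or AMS. The function names are hypothetical, and the predicted question levels are invented to mirror the two misalignment patterns reported for this course. LO1 (understanding) and LO4 (analyzing) take their intended levels from the text. "LO-X" is a hypothetical, correctly aligned outcome.

```python
from collections import Counter

BLOOM_LEVELS = ["remembering", "understanding", "applying",
                "analyzing", "evaluating", "creating"]


def level_distribution(levels):
    """Fraction of questions predicted at each Bloom level."""
    counts = Counter(levels)
    total = len(levels)
    return {lvl: counts[lvl] / total for lvl in BLOOM_LEVELS}


def alignment_flags(outcome_levels, question_levels):
    """Flag outcomes whose questions all sit below, or all above, the intended level."""
    rank = {lvl: i for i, lvl in enumerate(BLOOM_LEVELS)}
    flags = {}
    for lo, target in outcome_levels.items():
        qs = [rank[q] for q in question_levels.get(lo, [])]
        if not qs:
            flags[lo] = "not assessed"
        elif all(q < rank[target] for q in qs):
            flags[lo] = "assessed only below intended level"
        elif all(q > rank[target] for q in qs):
            flags[lo] = "assessed only above intended level"
    return flags


# Illustrative data: question levels are invented; LO-X is hypothetical.
outcomes = {"LO1": "understanding", "LO4": "analyzing", "LO-X": "applying"}
predicted = {
    "LO1": ["applying", "analyzing", "evaluating", "creating"],
    "LO4": ["remembering", "understanding"],
    "LO-X": ["applying", "understanding"],
}
print(alignment_flags(outcomes, predicted))
```

The rule used here flags an outcome only when every question falls on one side of its intended level. Stricter rules, such as requiring at least one question at the intended level, would be equally easy to express.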

6. Discussion

The objective of this study was to improve the accuracy of classifying assessment questions based on cognitive levels as defined by Bloom’s taxonomy. To achieve this, we developed a hybrid model that integrates DistilBERT embeddings and TF-IDF features for feature extraction, supplemented by preprocessing and data augmentation to enhance data quality. Additionally, we employed an ensemble approach with soft voting across multiple classifiers optimized through Bayesian optimization to surpass the performance of existing machine learning and deep learning models for this task.

Our hybrid model outperformed all previously reported models in the literature. It achieved an accuracy of 96%, exceeding the highest reported accuracy of 93.5%, which was obtained using a traditional machine learning approach [19].

This 2.5-percentage-point improvement in classification accuracy is particularly significant in high-stakes domains such as educational assessment, where even small gains can lead to considerable improvements in fairness, instructional alignment, and student learning outcomes [53]. At high accuracy levels, such as those exceeding 90%, further improvements are more difficult to achieve. They are nonetheless highly valuable, because they reduce misclassification rates so that more CLOs and assessment questions are assigned to their correct cognitive levels. In large-scale educational applications, where thousands of questions and CLOs must be categorized, even a small percentage gain can prevent hundreds of misclassifications, thereby enhancing the reliability of assessments [54].
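The arithmetic behind this claim is worked out below. It assumes, purely for illustration, that both reported accuracies carried over unchanged to a hypothetical pool of 10,000 questions and CLOs. Moving from 93.5% to 96% accuracy cuts the error rate by more than a third.

$$
\begin{aligned}
\text{error rate (prior best)} &= 1 - 0.935 = 0.065 \\
\text{error rate (proposed)} &= 1 - 0.960 = 0.040 \\
\text{relative error reduction} &= \frac{0.065 - 0.040}{0.065} \approx 0.385 \\
\text{expected errors per } 10{,}000 \text{ items} &: \; 650 \;\to\; 400 \quad (250 \text{ fewer})
\end{aligned}
$$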

Misclassification can lead to imbalanced assessments, where certain cognitive levels are overrepresented, while others are underrepresented, potentially misguiding instructional strategies [55]. By achieving 96% accuracy, our model provides instructors with a more precise tool for aligning assessments with cognitive levels, ensuring a balanced representation of LOTS and HOTS, ultimately fostering more effective curriculum planning and improved student learning outcomes.

The superior performance of our approach indicates that combining DistilBERT and TF-IDF features with a soft-voting ensemble substantially improved classification performance. The soft-voting ensemble not only aggregated the diverse strengths of multiple classifiers, but also weighted each classifier’s influence by its prediction confidence. This allowed more nuanced decision-making, which was important in cases where classifiers disagreed. Empirically, the ensemble achieved an accuracy of 96% and a macro-F1 of 0.96 when combining TF-IDF and DistilBERT with preprocessing and augmentation. This surpasses the best individual accuracy of 94% (gradient boosting) under the same conditions and quantifies the ensemble’s contribution: the feature representations substantially aid classification, and the ensemble further amplifies their effect. This finding underscores the synergy between feature engineering and strategic classifier integration, and it establishes the ensemble’s central role in achieving the reported accuracy.

While the overall accuracy and macro-F1 scores indicate strong performance, we also analyzed the confusion matrix to better understand misclassification patterns across Bloom’s cognitive levels. Most errors occurred between adjacent levels—particularly between understanding and applying, and between evaluating and creating. These cognitive levels are often linguistically and conceptually close, which can make them more difficult to distinguish, even for human annotators. The low frequency of such misclassifications supports the model’s robustness, yet highlights an opportunity for further refinement, such as incorporating interpretability tools or calibration techniques to improve class separation in borderline cases.
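The adjacent-level error pattern can be quantified with a short sketch. It assumes that rows (true levels) and columns (predicted levels) of the confusion matrix are ordered by Bloom level, so that "adjacent" means neighbouring indices. The study’s actual matrix (Figure 3) is not reproduced here, so the example uses an invented 3×3 matrix.

```python
def adjacent_error_share(cm):
    """Share of off-diagonal counts whose true and predicted levels are neighbors.

    Assumes rows/columns are ordered by Bloom level (remembering ... creating).
    """
    n = len(cm)
    errors = sum(cm[i][j] for i in range(n) for j in range(n) if i != j)
    adjacent = sum(cm[i][j] for i in range(n) for j in range(n) if abs(i - j) == 1)
    return adjacent / errors if errors else 0.0


def most_confused_pairs(cm, labels, top=3):
    """Largest off-diagonal cells as (count, true level, predicted level)."""
    n = len(cm)
    pairs = [(cm[i][j], labels[i], labels[j])
             for i in range(n) for j in range(n) if i != j and cm[i][j] > 0]
    return sorted(pairs, reverse=True)[:top]


# Invented example: rows = true level, columns = predicted level.
labels = ["remembering", "understanding", "applying"]
cm = [[8, 1, 1],
      [1, 7, 2],
      [0, 1, 9]]
print(adjacent_error_share(cm))
print(most_confused_pairs(cm, labels))
```

A share close to 1 would support the observation that errors concentrate between neighbouring levels rather than being spread across the taxonomy.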

Unlike traditional approaches that pair classifiers such as SVM and decision trees with manually engineered features, our model used DistilBERT to capture richer semantic relationships between words in assessment questions. Combining these embeddings with TF-IDF enabled the hybrid model to capture both contextual and frequency-based features, leading to substantial performance improvements.
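The sketch below illustrates the hybrid representation as early fusion: a question’s TF-IDF vector and its dense contextual embedding are concatenated into one feature vector.

- The IDF formula is one common smoothed variant, ln((1 + n)/(1 + df)) + 1, with L2 normalization. This is an assumption, since this section does not specify the TF-IDF settings.
- The four-value "embedding" is a hypothetical stand-in. A DistilBERT base model produces 768-dimensional hidden states.

```python
import math
from collections import Counter


def fit_idf(docs):
    """Vocabulary and smoothed IDF, idf(t) = ln((1 + n) / (1 + df(t))) + 1."""
    n = len(docs)
    df = Counter(term for doc in docs for term in set(doc.split()))
    vocab = sorted(df)
    idf = {t: math.log((1 + n) / (1 + df[t])) + 1.0 for t in vocab}
    return vocab, idf


def tfidf_vector(doc, vocab, idf):
    """Raw term counts weighted by IDF, then L2-normalized (the sparse part)."""
    tf = Counter(doc.split())
    vec = [tf[t] * idf[t] for t in vocab]
    norm = math.sqrt(sum(v * v for v in vec))
    return [v / norm for v in vec] if norm else vec


def fuse(sparse_part, dense_part):
    """Early fusion: concatenate lexical (TF-IDF) and contextual features."""
    return list(sparse_part) + list(dense_part)


docs = ["define the term algorithm",
        "explain how the algorithm works",
        "design a new algorithm"]
vocab, idf = fit_idf(docs)
sparse = tfidf_vector(docs[0], vocab, idf)
# Hypothetical 4-value stand-in for a DistilBERT sentence embedding.
dense = [0.12, -0.40, 0.33, 0.05]
features = fuse(sparse, dense)
print(len(vocab), len(features))
```

Terms shared by every question, such as "algorithm" here, receive the lowest IDF weight, while rarer terms such as "define" receive more. The two blocks can differ markedly in scale and dimensionality, which can matter for margin-based classifiers such as SVM. How the study scaled them is not specified in this section.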

In comparison to previous deep learning models, our model demonstrated a significant performance advantage. The best accuracy achieved by a prior deep learning model, which used a CNN with RoBERTa embeddings, was 86% [20], while another study utilizing a BiLSTM model achieved an accuracy of only 80% [37]. As highlighted earlier, these results underscore the need for improvement in the application of deep learning techniques to this task, which had not been fully exploited in prior studies. Our model addressed this gap by incorporating key enhancements that contributed to its superior performance.

First, unlike the CNN-based and BiLSTM models, which relied primarily on contextual embeddings, our approach combined both TF-IDF and DistilBERT, capturing a broader range of linguistic features—contextual and frequency-based—leading to a more comprehensive representation of assessment questions. This dual-feature extraction helped overcome the limitations of prior deep learning models, which often struggled with the nuances of short, specialized assessment questions.

Second, our soft-voting ensemble combined the strengths of multiple classifiers, resulting in more balanced and robust predictions. By aggregating the outputs of several models, the ensemble mitigated the weaknesses of relying on a single deep learning model such as a CNN or BiLSTM, particularly in handling the diverse linguistic structures of assessment questions. These enhancements demonstrate that deep learning representations can yield substantial improvements when optimized and tailored for this task.

Preprocessing and data augmentation also played critical roles in boosting model performance. By reducing noise and diversifying the training data, these techniques improved the model’s ability to generalize to new, unseen assessment questions. The sharp drop in performance observed when preprocessing and augmentation were omitted further underscores their importance. A major strength of our model lies in its hybrid nature, which combines traditional feature extraction (TF-IDF) with deep contextual embeddings (DistilBERT). This allowed the model to capture both surface-level lexical features and deeper semantic features, contributing to its superior performance. The ensemble method further amplified these advantages by leveraging the diversity of classifiers, improving overall robustness.

The practical implications of our findings are substantial for instructors and institutions looking to automate the classification of cognitive levels in assessments. By reducing the need for manual classification, which is both time-consuming and prone to error, our model can enhance the accuracy and consistency of assessment question classification. This, in turn, can lead to more balanced and effective assessments that are better aligned with CLOs.

7. Conclusions

This study presents a hybrid model that significantly advances the classification of cognitive levels in assessment questions, achieving 96% accuracy on the benchmark dataset. By integrating DistilBERT embeddings, TF-IDF, and an ensemble of classifiers, the model addresses limitations of both traditional machine learning and deep learning methods and provides instructors with a powerful, scalable tool for enhancing assessment quality. The model’s performance demonstrates the potential of combining advanced machine learning techniques with robust data preprocessing and augmentation to drive significant improvements in the automated analysis of assessment questions.

The use of both TF-IDF and DistilBERT in conjunction with ensemble learning allows the model to capture nuanced textual features and context, resulting in higher accuracy than models relying on either approach alone. This dual feature extraction, supported by careful data augmentation, enables the model to generalize well across the question types represented in the dataset, making it a promising solution for educational settings. Moreover, the ensemble approach maximizes the strengths of multiple classifiers, boosting the model’s performance beyond what individual classifiers achieved alone.

Despite its strong performance, this study has several limitations. The first limitation concerns the dataset used, which, while representative and recognized as a robust benchmark due to its balanced distribution and subject diversity, was relatively small. This may limit the generalizability of the findings to other educational contexts or languages. The limited dataset size also poses challenges for deep learning models, which typically require large volumes of data to achieve optimal performance and fully exploit their representational power. Furthermore, the dataset was restricted to essay-style and short-answer questions, excluding other formats such as multiple-choice, problem-solving, and true/false items. Expanding the model’s applicability to these additional question types would enhance its relevance across a broader range of assessment scenarios.

An additional limitation is the exclusive use of DistilBERT for contextual embeddings. While chosen for its efficiency, it may not fully capture the richness of semantic relationships present in assessment language. Future work could explore the integration of more advanced transformer-based models, such as RoBERTa or GPT, which may further improve embedding quality and classification performance. Exploring these architectures represents a promising direction for enhancing the model’s capabilities.

Another limitation relates to the synonym substitution strategy used for data augmentation. Although this approach increased data volume, it may have introduced semantic drift by unintentionally altering the original intent or cognitive level of some questions. No formal quality check was conducted to verify alignment. Future research could address this by using more sophisticated generative models (e.g., T5 or GPT) or involving educators to validate the augmented content for semantic consistency.
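The sketch below shows where semantic drift can enter during synonym substitution. The three-entry synonym table is hypothetical and stands in for whatever lexical resource the study used; this section does not name one. Swapping a question’s key verb, for example "explain" for "describe", may move the question to a different Bloom level, depending on which verb list is consulted. The optional `protected` set, also an assumption and not evaluated in the study, shows one simple guard: action verbs can be excluded from substitution.

```python
import random

# Hypothetical toy synonym table (illustrative only).
SYNONYMS = {
    "explain": ["describe", "clarify"],
    "list": ["enumerate", "name"],
    "design": ["create", "devise"],
}


def synonym_replace(text, synonyms, n_replacements=1, seed=0, protected=frozenset()):
    """Replace up to n_replacements words that have an entry in `synonyms`.

    Words in `protected` (compared in lowercase) are never replaced.
    Replacements are inserted in lowercase; original capitalization is not kept.
    """
    rng = random.Random(seed)
    words = text.split()
    positions = [i for i, w in enumerate(words)
                 if w.lower() in synonyms and w.lower() not in protected]
    rng.shuffle(positions)
    for i in positions[:n_replacements]:
        words[i] = rng.choice(synonyms[words[i].lower()])
    return " ".join(words)


print(synonym_replace("Explain how a hash table works", SYNONYMS))
print(synonym_replace("Explain how a hash table works", SYNONYMS, protected={"explain"}))
```

Protecting Bloom verbs reduces drift, but it also limits augmentation to the rest of the question. An educator review of a sample of augmented items, as suggested above, would remain the more reliable check.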

A further limitation is the absence of a quantitative benchmark against manual classification. The study did not measure key indicators such as annotation time, expert agreement, or inter-rater reliability. Including such benchmarks in future studies would help quantify the practical advantages of automation and reinforce confidence in model outputs. Future work could also include a per-class performance analysis to better understand the model’s ability to distinguish between closely related Bloom’s levels. In addition, while this study highlights the potential for integration into learning management systems (LMS) or assessment management systems (AMS), several implementation challenges remain. These include ensuring low-latency inference, enabling seamless system interoperability (e.g., via an API), designing intuitive user interfaces for educators, and maintaining data privacy. Addressing these practical aspects will be essential for successful deployment in institutional settings.

Furthermore, while mutual information-based feature selection provides a basic level of interpretability, it does not offer insight into individual predictions. Future work should explore post hoc explainability methods such as SHAP or LIME and include visual examples to support educator trust and model transparency.

Author Contributions

Conceptualization, A.A.; methodology, A.A. and S.M.; software, S.M.; validation, A.A. and S.M.; formal analysis, A.A. and S.M.; investigation, A.A. and S.M.; data curation, A.A. and S.M.; writing—original draft preparation, S.M.; writing—review and editing, A.A.; visualization, S.M.; supervision, A.A.; project administration, A.A. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

The dataset used in this study is publicly available and can be accessed at the following link: https://doi.org/10.1371/journal.pone.0230442.s002 (accessed on 3 January 2025).

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Resing, W.C.M. Dynamic Testing and Individualized Instruction: Helpful in Cognitive Education? J. Cogn. Educ. Psychol. 2013, 12, 81–95.

- Ramirez, T.V. On Pedagogy of Personality Assessment: Application of Bloom’s Taxonomy of Educational Objectives. J. Pers. Assess. 2017, 99, 146–152.

- Velichkovsky, B.M. Heterarchy of Cognition: The Depths and the Highs of a Framework for Memory Research. Memory 2002, 10, 405–419.

- Alammary, A.S. Arabic Questions Classification Using Modified TF-IDF. IEEE Access 2021, 9, 95109–95122.

- Tuela, A.I.; Palar, Y.N. Analysis of Higher Order Thinking Skills (HOTS) Based on Bloom Taxonomy in Comprehensive Examination Questions. J. Kependidikan J. Has. Penelit. Kaji. Kepustakaan Bid. Pendidik. Pengajaran Pembelajaran 2022, 8, 957–971.

- Ifelebuegu, A. Rethinking Online Assessment Strategies: Authenticity versus AI Chatbot Intervention. J. Appl. Learn. Teach. 2023, 6, 385–392.

- Jones, K.O.; Harland, J.; Reid, J.M.V.; Bartlett, R. Relationship between Examination Questions and Bloom’s Taxonomy. In Proceedings of the Frontiers in Education Conference, FIE 2009, San Antonio, TX, USA, 18–21 October 2009.

- Shaikh, S.; Daudpota, S.M.; Imran, A.S. Bloom’s Learning Outcomes’ Automatic Classification Using LSTM and Pretrained Word Embeddings. IEEE Access 2021, 9, 117887–117909.

- Mead, A.D.; Zhou, C. Using Machine Learning to Predict Bloom’s Taxonomy Level for Certification Exam Items. J. Appl. Test. Technol. 2022, 23, 53–71.

- Jain, M.; Beniwal, R.; Ghosh, A.; Grover, T.; Tyagi, U. Classifying Question Papers with Bloom’s Taxonomy Using Machine Learning Techniques. Commun. Comput. Inf. Sci. 2019, 1046, 399–408.

- Karathanasi, L.C.; Bazinas, C.; Iordanou, G.; Kaburlasos, V.G. A Study on Text Classification for Applications in Special Education. In Proceedings of the 2021 International Conference on Software, Telecommunications and Computer Networks (SoftCOM), Hvar, Croatia, 23–25 September 2021.

- Balla, H.; Delany, S.J. Exploration of Approaches to Arabic Named Entity Recognition. Conf. Pap. 2020, 2611, 2–16.

- Che, W.; Liu, Y.; Wang, Y.; Zheng, B.; Liu, T. Towards Better UD Parsing: Deep Contextualized Word Embeddings, Ensemble, and Treebank Concatenation. In CoNLL 2018, SIGNLL Conference on Computational Natural Language Learning, Proceedings of the CoNLL 2018 Shared Task: Multilingual Parsing from Raw Text to Universal Dependencies, Brussels, Belgium, 31 October–1 November 2018; Association for Computational Linguistics: Brussels, Belgium, 2018; pp. 55–64.

- Hijazi, M.; Zeki, A.; Science, A.I.-C. Arabic Text Classification Based on Semantic Relations. Int. J. Math. Comput. Sci. 2022, 17, 937–946.

- Chandio, M.T.; Pandhiani, S.M.; Iqbal, R. Bloom’s Taxonomy: Improving Assessment and Teaching-Learning Process. J. Educ. Educ. Dev. 2016, 3, 203–221.

- Devlin, J.; Chang, M.W.; Lee, K.; Toutanova, K. BERT: Pre-Training of Deep Bidirectional Transformers for Language Understanding. In Proceedings of the NAACL HLT 2019, 2019 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Minneapolis, MN, USA, 2–7 June 2019; pp. 4171–4186.

- Zhou, H. Research of Text Classification Based on TF-IDF and CNN-LSTM. J. Phys. Conf. Ser. 2022, 2171, 012021.

- Alammary, A. BERT Models for Arabic Text Classification: A Systematic Review. Appl. Sci. 2022, 12, 5720.

- Patil, N.; Kulkarni, O.; Bhujle, V.; Joshi, A.; Khanchandani, K.; Kambli, M. Automatic Question Classifier. In Proceedings of the 4th International Conference on Cybernetics, Cognition and Machine Learning Applications, ICCCMLA 2022, Goa, India, 8–9 October 2022; pp. 53–58.

- Gani, M.O.; Ayyasamy, R.K.; Sangodiah, A.; Fui, Y.T. Bloom’s Taxonomy-Based Exam Question Classification: The Outcome of CNN and Optimal Pre-Trained Word Embedding Technique. Educ. Inf. Technol. 2023, 28, 15893–15914.

- Anderson, L.; Krathwohl, D.; Airasian, P.; Cruikshank, K.; Mayer, R.; Pintrich, P.; Raths, J.; Wittrock, M. A Taxonomy for Learning, Teaching, and Assessing: A Revision of Bloom’s Taxonomy of Educational Objectives; Pearson: London, UK, 2000.

- Mohammed, M.; Omar, N. Question Classification Based on Bloom’s Taxonomy Using Enhanced TF-IDF. Int. J. Adv. Sci. Eng. Inf. Technol. 2018, 8, 4–6.

- Shannon, T. Teaching and Assessing with Taxonomies. Int. J. Bus. Educ. 2023, 164, 9.

- Schildkamp, K.; van der Kleij, F.M.; Heitink, M.C.; Kippers, W.B.; Veldkamp, B.P. Formative Assessment: A Systematic Review of Critical Teacher Prerequisites for Classroom Practice. Int. J. Educ. Res. 2020, 103, 101602.

- Dutta, A.; Chatterjee, P.; Dey, N.; Moreno-Ger, P.; Sen, S. Cognitive Evaluation of Examinees by Dynamic Question Set Generation Based on Bloom’s Taxonomy. IETE J. Res. 2023, 70, 2570–2582.

- Muhayimana, T.; Kwizera, L.; Nyirahabimana, M.R. Using Bloom’s Taxonomy to Evaluate the Cognitive Levels of Primary Leaving English Exam Questions in Rwandan Schools. Curric. Perspect. 2022, 42, 51–63.

- Tsaparlis, G. Higher and Lower-Order Thinking Skills: The Case of Chemistry Revisited. J. Balt. Sci. Educ. 2020, 19, 467–483.

- Adams, N.E. Bloom’s Taxonomy of Cognitive Learning Objectives. J. Med. Libr. Assoc. 2015, 103, 152–153.

- Nurmatova, S.; Altun, M. A Comprehensive Review of Bloom’s Taxonomy Integration to Enhancing Novice EFL Educators’ Pedagogical Impact. Arab. World Engl. J. 2023, 14.

- Lourdusamy, R.; Magendiran, P.; Fonceca, C.M. Analysis of Cognitive Levels of Questions With Bloom’s Taxonomy: A Case Study. Int. J. Softw. Innov. 2022, 10, 1–22.

- Zhang, J.; Wong, C.; Giacaman, N.; Luxton-Reilly, A. Automated Classification of Computing Education Questions Using Bloom’s Taxonomy. In Proceedings of the 23rd Australasian Computing Education Conference, Auckland, New Zealand, 2–5 February 2021; pp. 58–65.

- Laddha, M.D.; Lokare, V.T.; Kiwelekar, A.W.; Netak, L.D. Classifications of the Summative Assessment for Revised Blooms Taxonomy by Using Deep Learning. Int. J. Eng. Trends Technol. (IJETT) 2021, 69, 211–218.

- Li, Y.; Rakovic, M.; Poh, B.; Gašević, D.; Chen, G. Automatic Classification of Learning Objectives Based on Bloom’s Taxonomy. In Proceedings of the 15th International Conference on Educational Data Mining, EDM 2022, Durham, UK, 24–27 July 2022.

- Goh, T.; Jamaludin, N.; Mohamed, H.; Sciences, M.I.-A. Semantic Similarity Analysis for Examination Questions Classification Using WordNet. Appl. Sci. 2023, 13, 8323.

- Mohammed, M.; Omar, N. Question Classification Based on Bloom’s Taxonomy Cognitive Domain Using Modified TF-IDF and Word2vec. PLoS ONE 2020, 15, e0230442.

- Yahya, A.A.; Toukal, Z.; Osman, A. Bloom’s Taxonomy-Based Classification for Item Bank Questions Using Support Vector Machines. In Modern Advances in Intelligent Systems and Tools; Springer: Berlin/Heidelberg, Germany, 2012.

- Vankawala, S.; Thakkar, A.; Bhatt, N. Advanced Educational Assessments: Automated Question Classification Based on Bloom’s Cognitive Level. In Proceedings of the 2023 International Conference on Evolutionary Algorithms and Soft Computing Techniques (EASCT), Bengaluru, India, 20–21 October 2023; pp. 1–6.

- Alammary, A. LOsMonitor: A Machine Learning Tool for Analyzing and Monitoring Cognitive Levels of Assessment Questions. IEEE Trans. Learn. Technol. 2021, 14, 640–652.

- Cao, C.; Zhou, F.; Dai, Y.; Wang, J.; Zhang, K. A Survey of Mix-Based Data Augmentation: Taxonomy, Methods, Applications, and Explainability. ACM Comput. Surv. 2024, 57, 37.

- Sezer, A.; Sezer, H.B. Deep Convolutional Neural Network-Based Automatic Classification of Neonatal Hip Ultrasound Images: A Novel Data Augmentation Approach with Speckle Noise Reduction. Ultrasound Med. Biol. 2020, 46, 735–749.

- Alammary, A.S. Investigating the Impact of Pretraining Corpora on the Performance of Arabic BERT Models. J. Supercomput. 2025, 81, 187.

- Chen, N.; Mao, J.; Peng, Z.; Yi, J.; Tao, Z.; Wang, Y. Knowledge Distillation-Based Lightweight Network for Power Scenarios Inspection. In Proceedings of the 2023 China Automation Congress (CAC), Chongqing, China, 17–19 November 2023; IEEE: Piscataway, NJ, USA, 2023; pp. 3990–3996.

- Rawat, A.; Kumar, S.; Samant, S.S. A Systematic Review of Question Classification Techniques Based on Bloom’s Taxonomy. In Proceedings of the 14th International Conference on Computing Communication and Networking Technologies (ICCCNT 2023), Delhi, India, 6–8 July 2023.

- Lin, X. Application of an Improved TF-IDF Method in Literary Text Classification. Adv. Multimed. 2022, 2022, 9285324.

- Goutte, C.; Gaussier, E. A Probabilistic Interpretation of Precision, Recall and F-Score, with Implication for Evaluation. In Proceedings of the 27th European Conference on IR Research, ECIR 2005, Santiago de Compostela, Spain, 21–23 March 2005.

- Dhal, P.; Azad, C. A Comprehensive Survey on Feature Selection in the Various Fields of Machine Learning. Appl. Intell. 2022, 52, 4543–4581.

- Abduljabbar, D.A.; Omar, N. Exam Questions Classification Based on Bloom’s Taxonomy Cognitive Level Using Classifiers Combination. J. Theor. Appl. Inf. Technol. 2015, 78, 447.

- Habibeh, M.; Eldred, J. Bayesian Optimization for Accelerator Tuning; US DOE: Washington, DC, USA, 2024.

- Shingavi, Y.; Kumar, A.; Tasnin, W. Advancing Diabetes Prediction: An Ensemble Learning Method for Improved Accuracy Using Soft Voting Classifier. In Proceedings of the 2024 2nd International Conference on Artificial Intelligence and Machine Learning Applications Theme: Healthcare and Internet of Things (AIMLA), Namakkal, India, 15–16 March 2024; IEEE: Piscataway, NJ, USA, 2024; pp. 1–6.

- Takahashi, K.; Yamamoto, K.; Kuchiba, A.; Koyama, T. Confidence Interval for Micro-Averaged F1 and Macro-Averaged F1 Scores. Appl. Intell. 2022, 52, 4961–4972.

- Nitko, A. Educational Assessment of Students; Prentice Hall: Hoboken, NJ, USA, 1996.

- Biggs, J.; Tang, C.; Kennedy, G. Teaching for Quality Learning at University, 5th ed.; McGraw Hill: New York, NY, USA, 2022.

- Van der Kleij, F.M.; Feskens, R.C.; Eggen, T.J. Effects of Feedback in a Computer-Based Learning Environment on Students’ Learning Outcomes: A Meta-Analysis. Rev. Educ. Res. 2015, 85, 475–511.

- Shute, V.J.; Rahimi, S. Review of Computer-Based Assessment for Learning in Elementary and Secondary Education. J. Comput. Assist. Learn. 2017, 33, 1–19.

- Nicol, D.J.; Macfarlane-Dick, D. Formative Assessment and Self-Regulated Learning: A Model and Seven Principles of Good Feedback Practice. Stud. High. Educ. 2006, 31, 199–218.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).