Uncertain Shape and Deformation Recognition Using Wavelet-Based Spatiotemporal Features

Abstract

1. Introduction

2. Methods

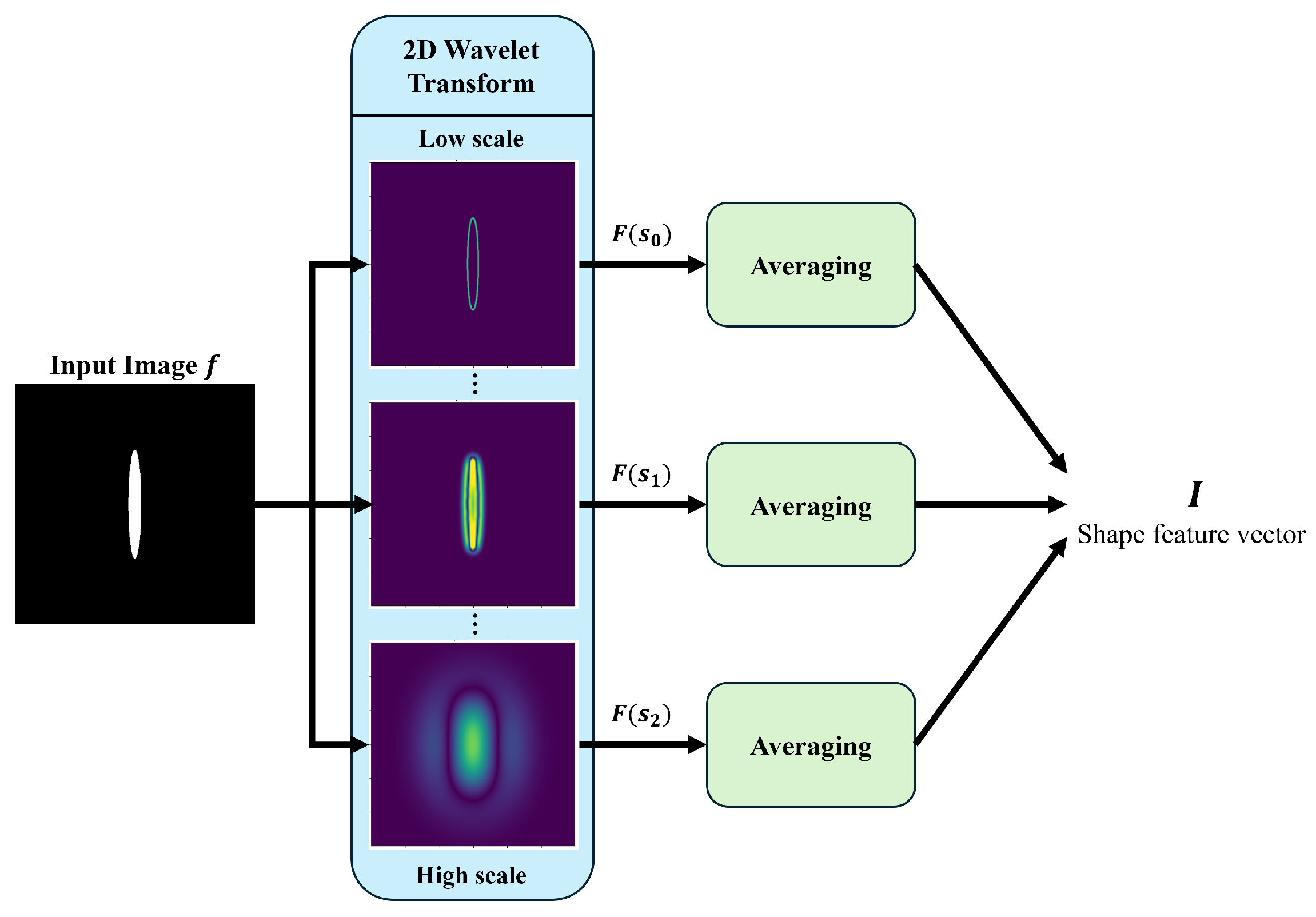

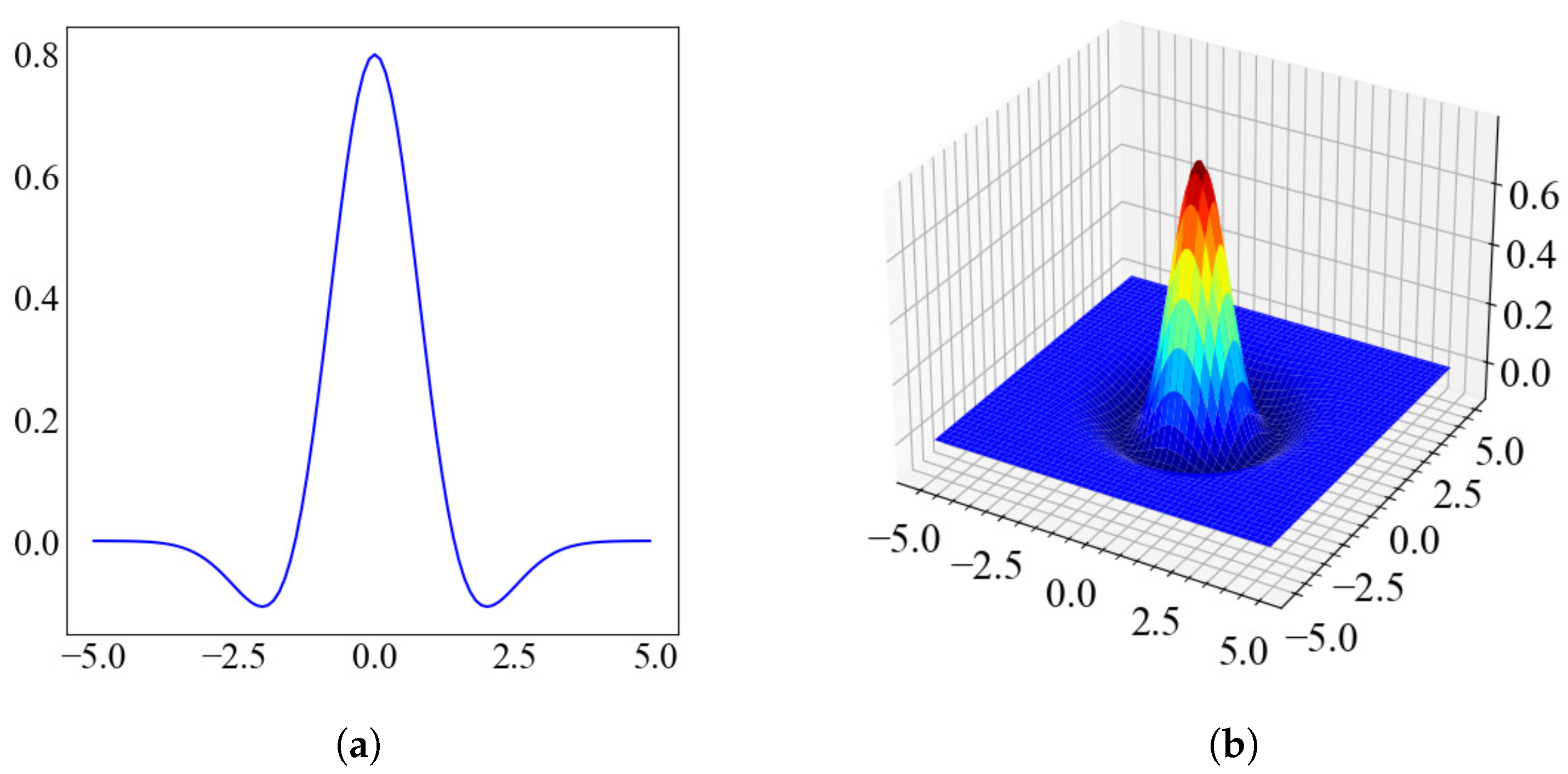

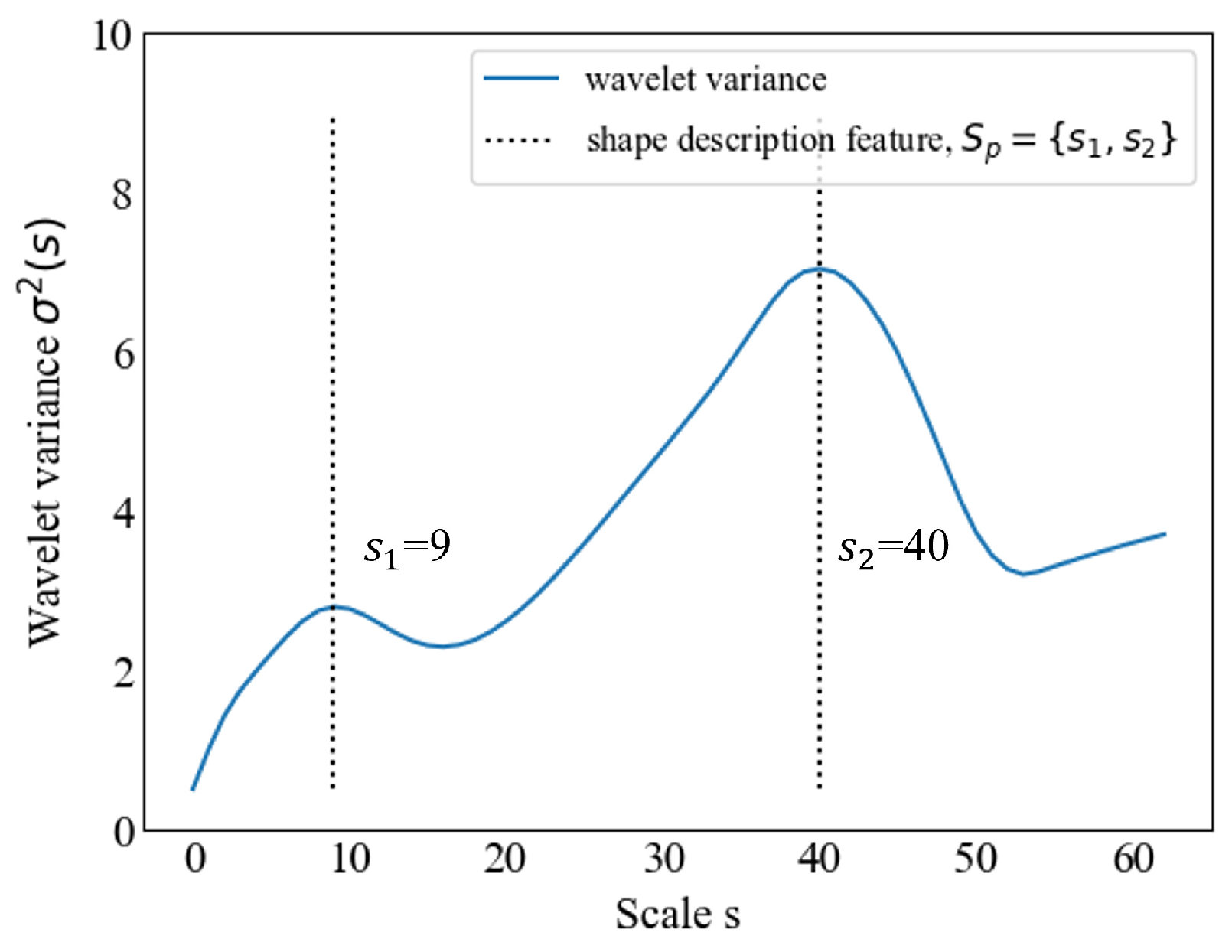

2.1. Robust Features for Uncertain Shapes Using Wavelet Kernels

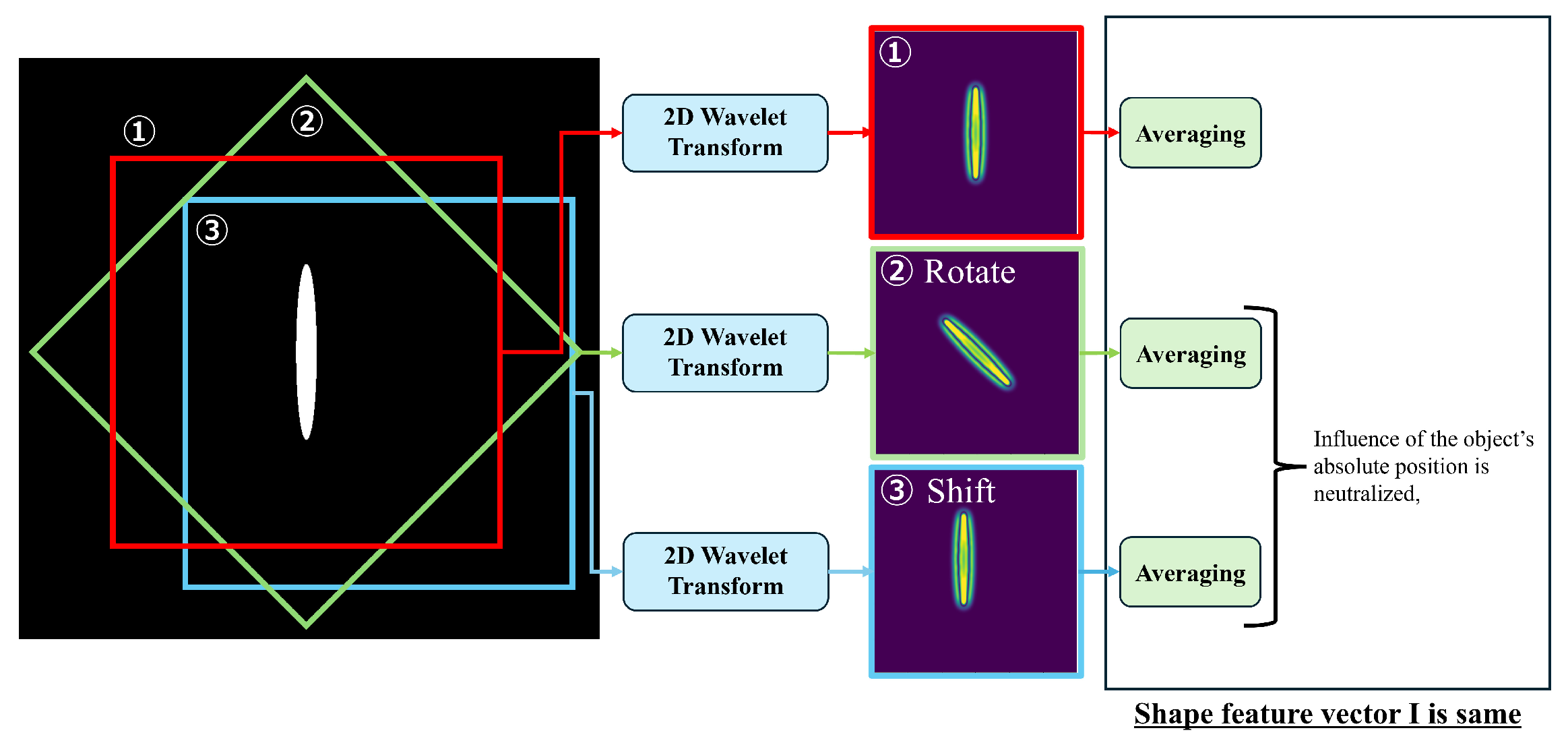

2.2. Translation-Invariant and Rotation-Invariant Shape Features for Recognizing Uncertain and Deforming Shapes

3. Experiments and Results

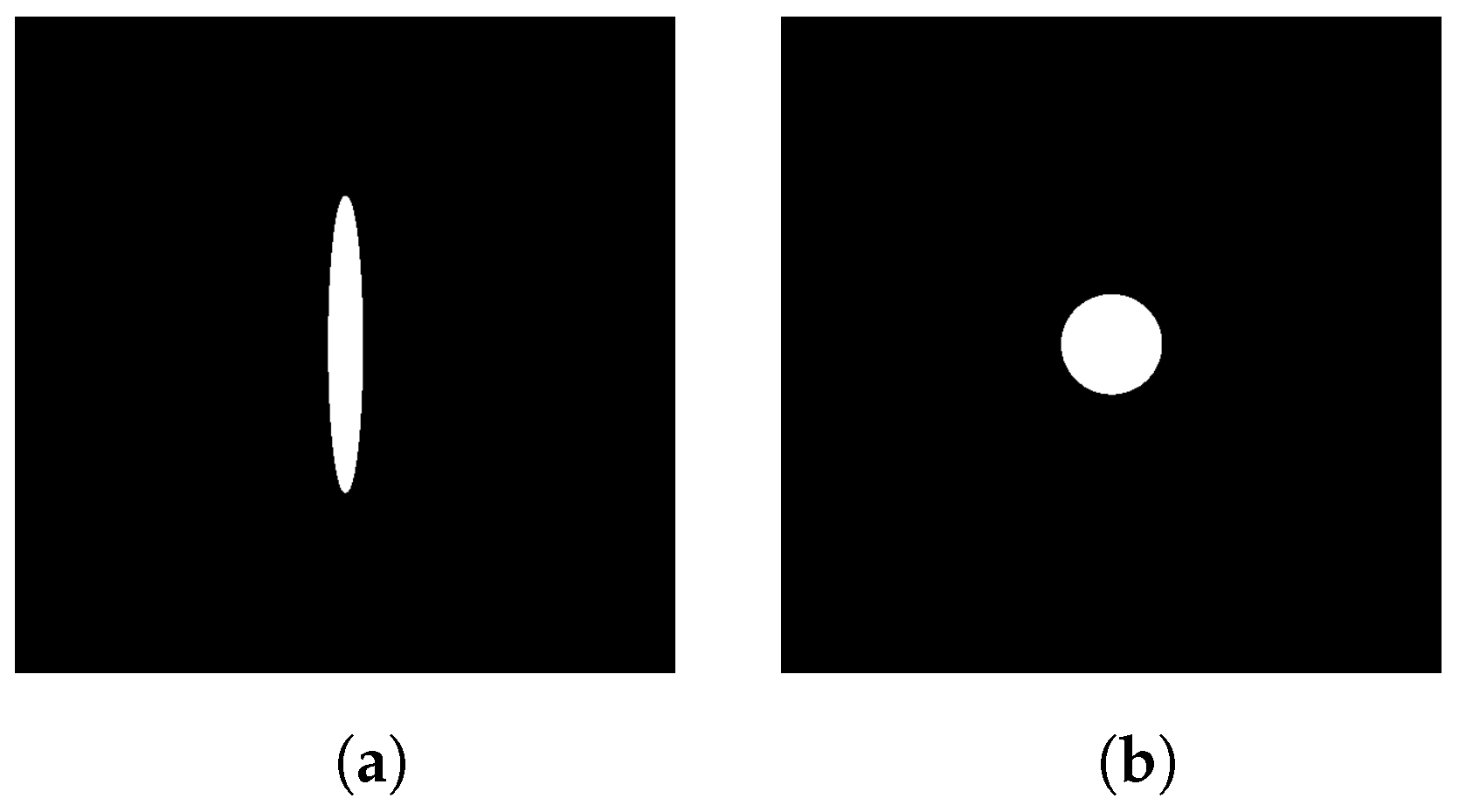

3.1. Shape Representation of an Ellipse and a Circle

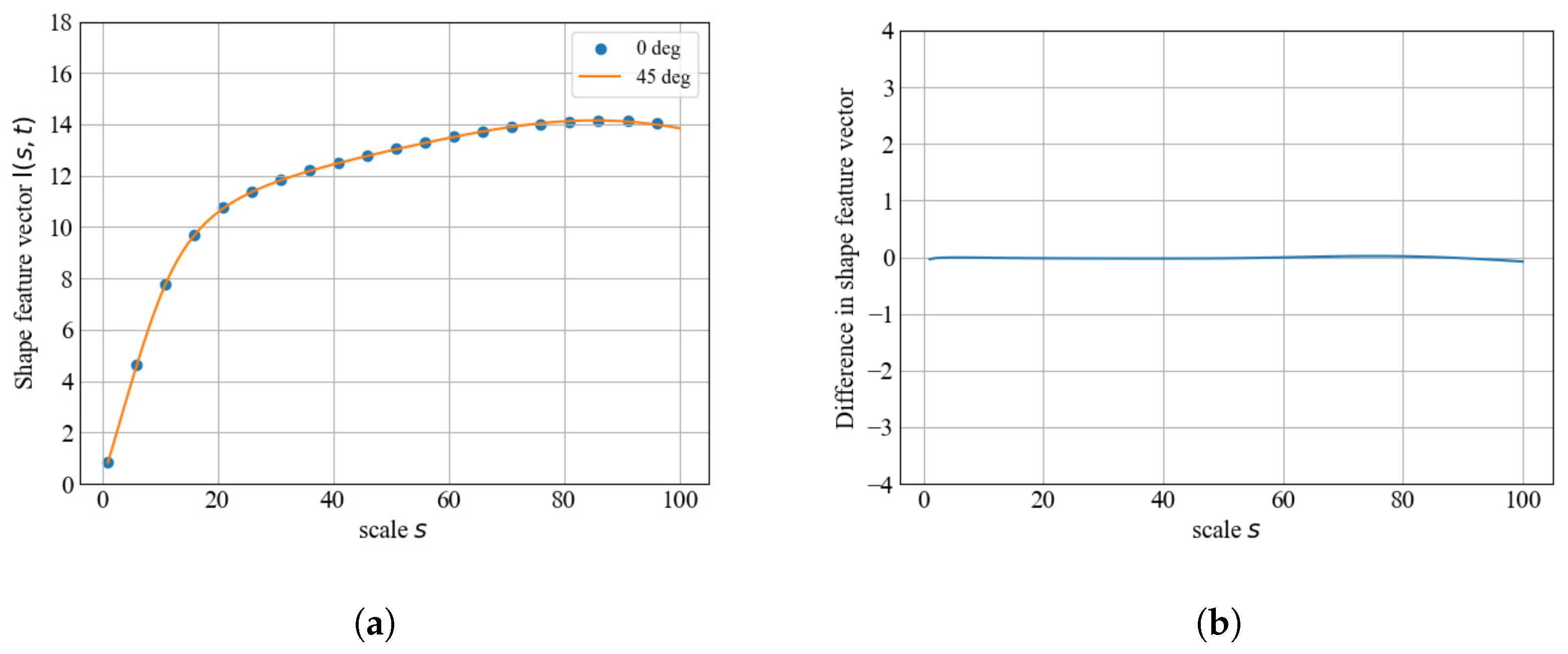

3.2. Shape Representation Under Ellipse Rotation

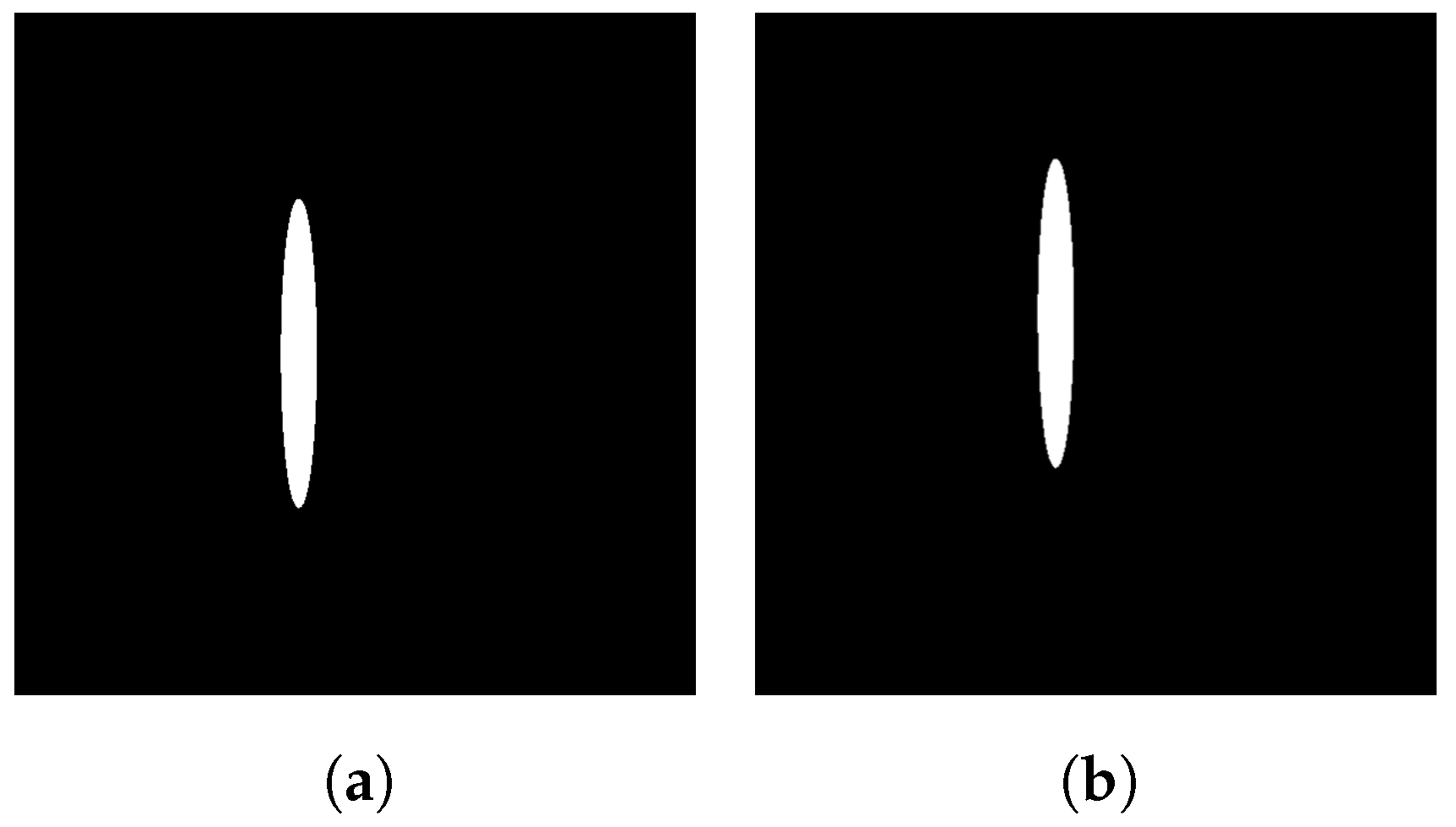

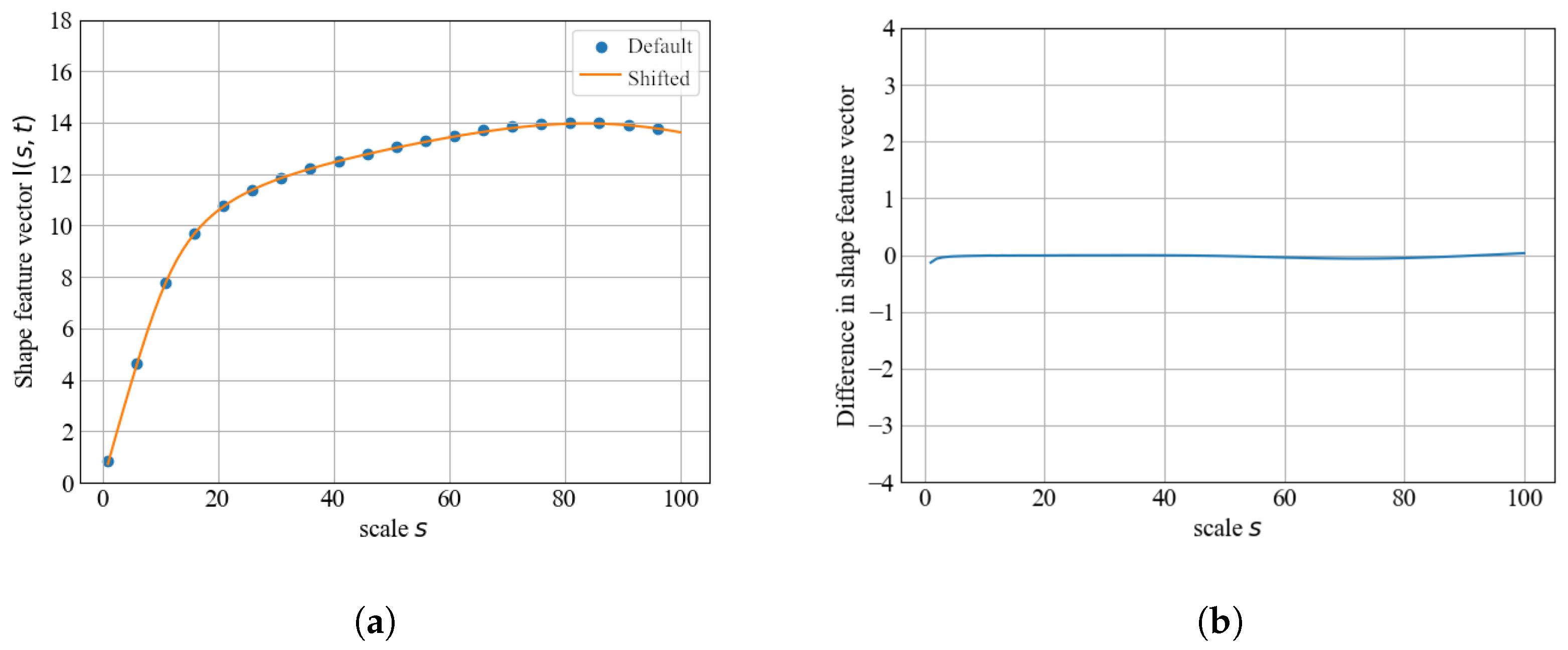

3.3. Shape Representation Under Ellipse Translation

4. Discussion and Application

4.1. Discussion

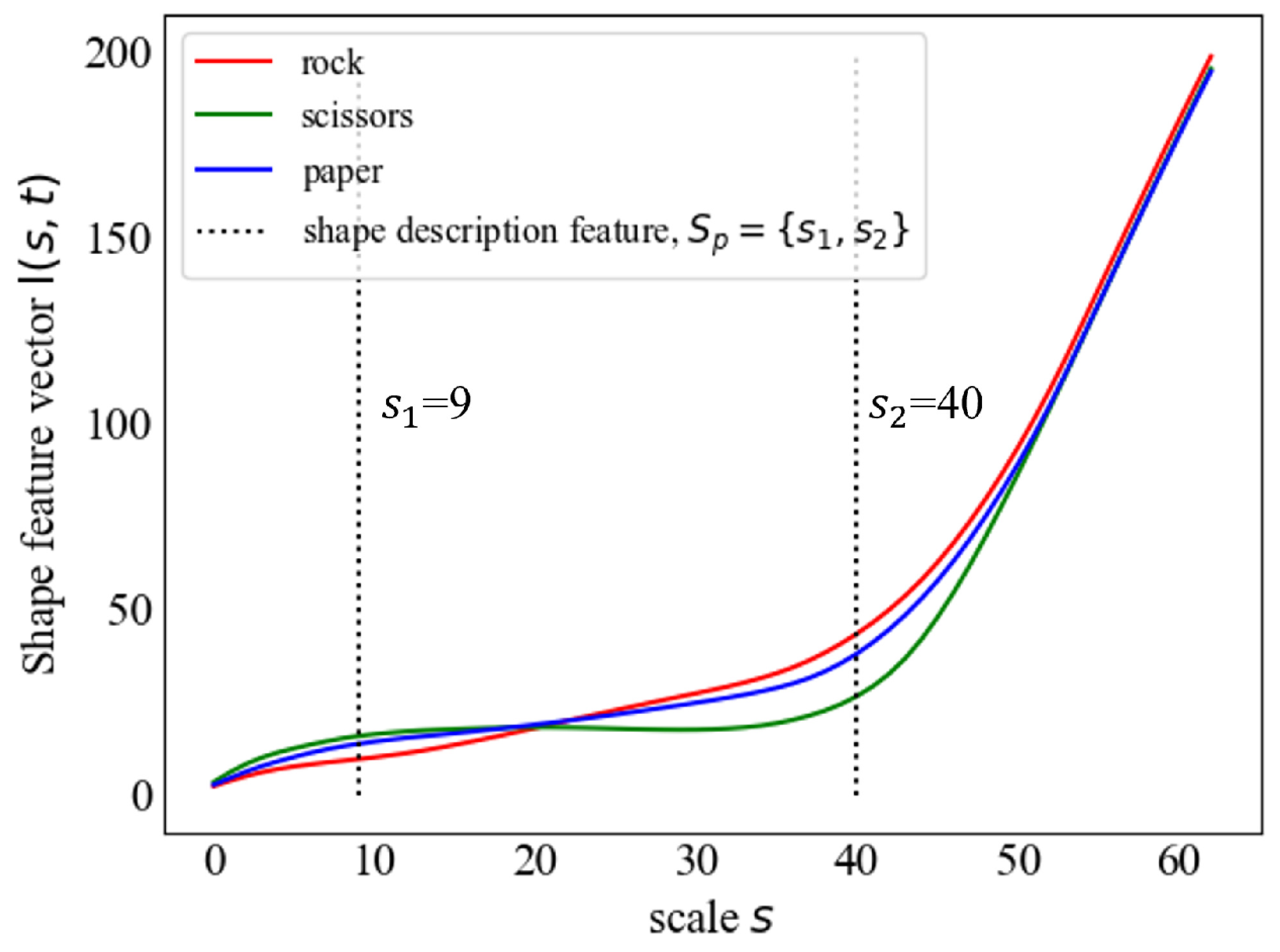

Shape Feature Vectors of Different Shapes

4.2. Rotation-Invariant and Translation-Invariant Shape Feature Vectors

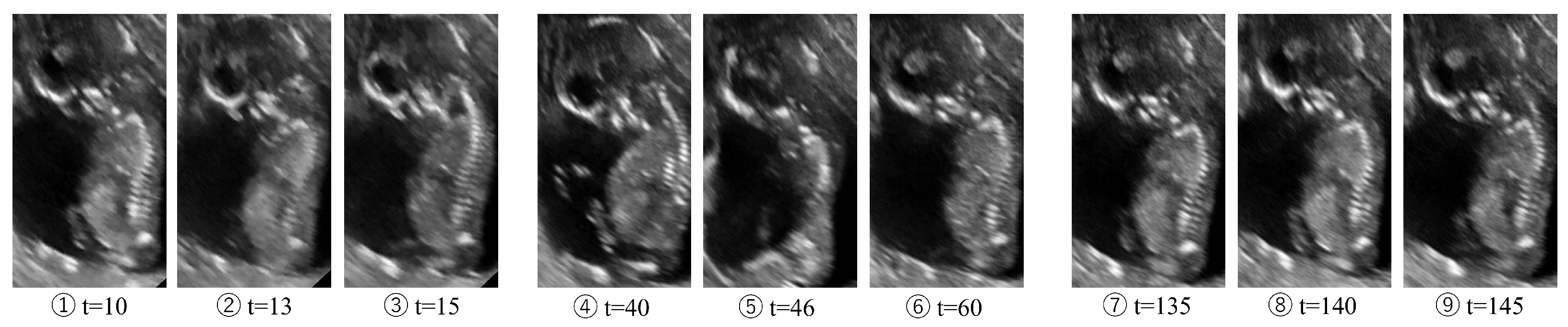

4.3. Application

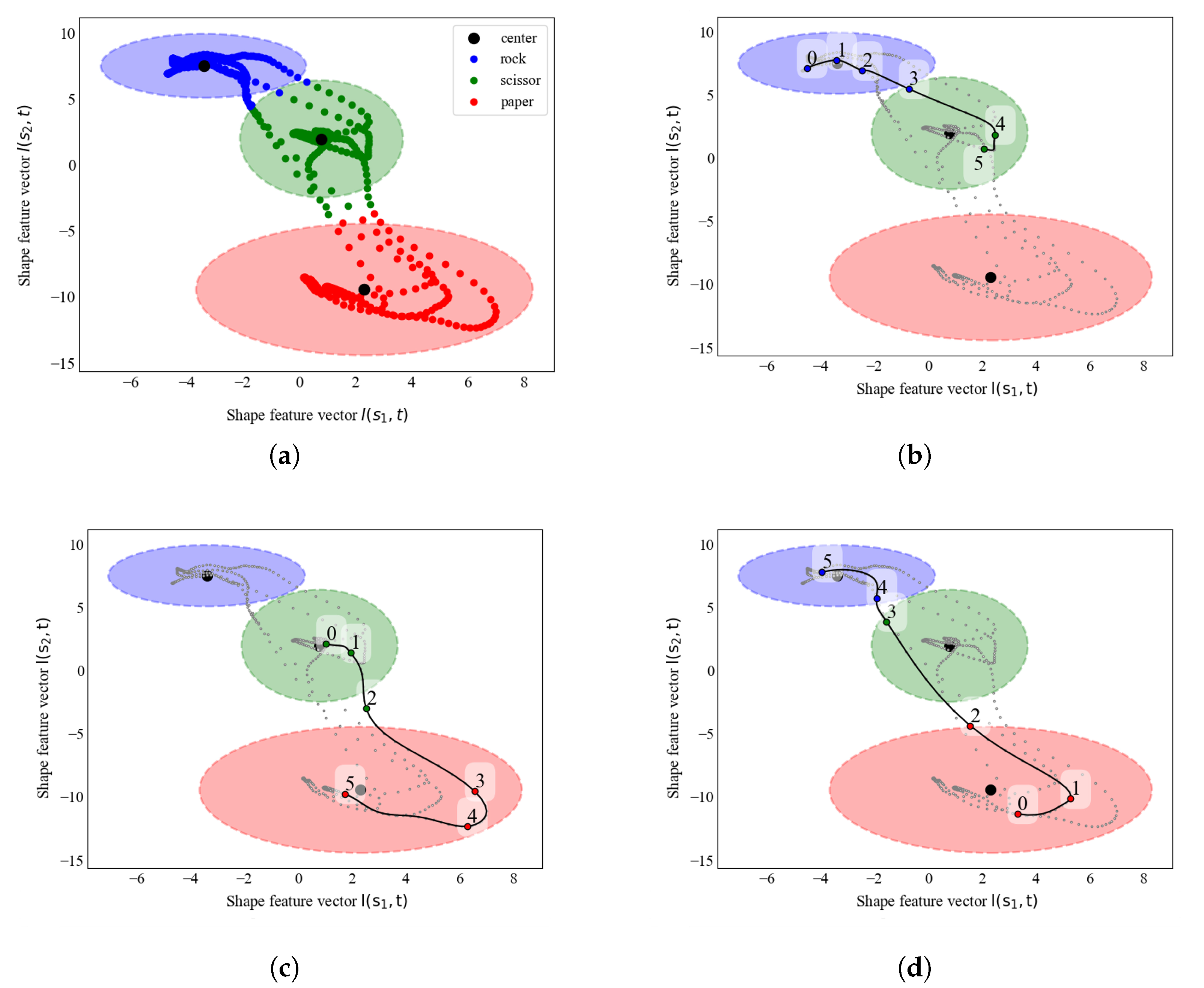

Recognition of Multiple Shapes Using Shape Feature Vector

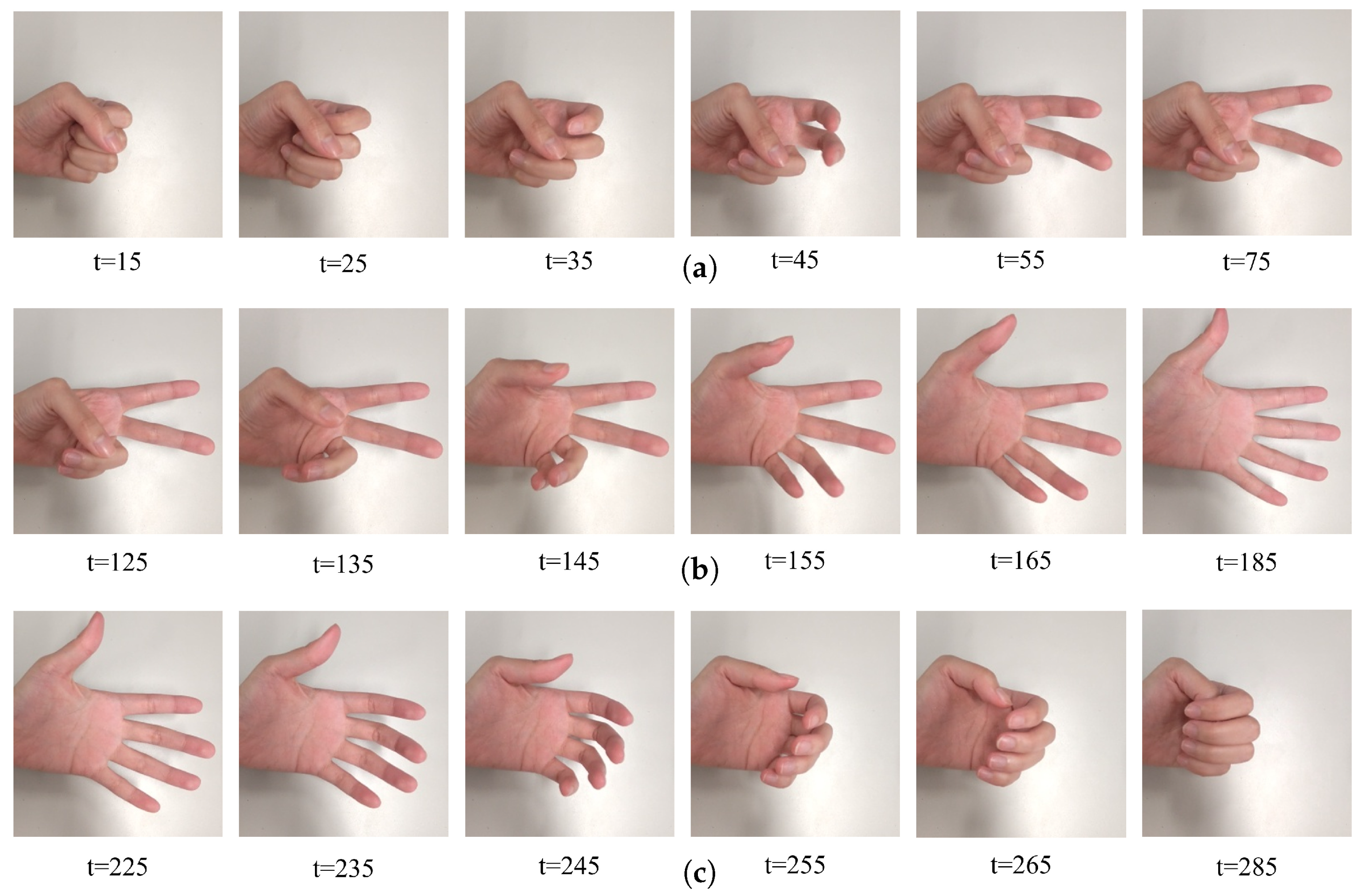

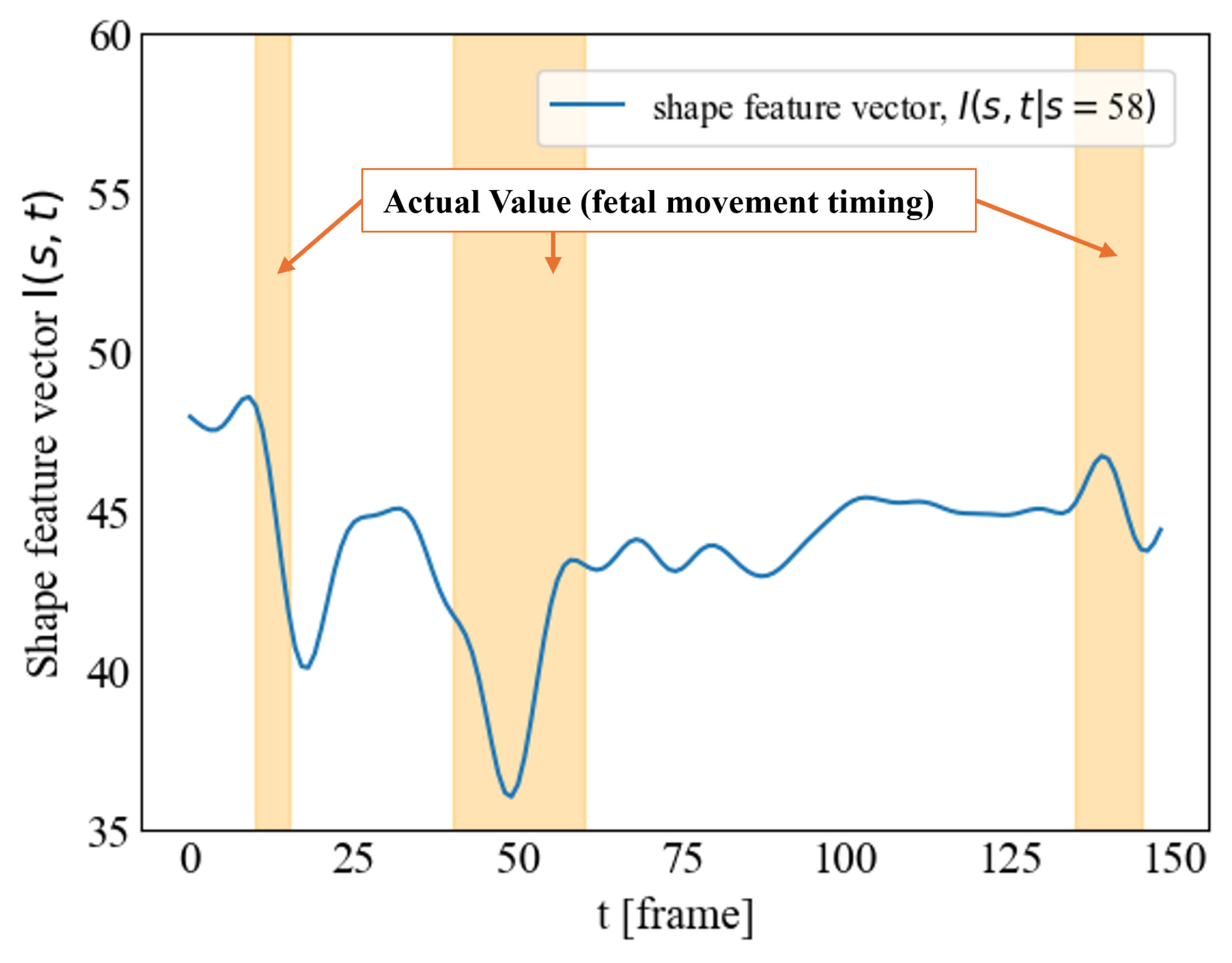

4.4. Application: Intensity of Deformation Estimation

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Appendix A

Algorithm A1 Wavelet-Based Shape Feature Extraction

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Matoba, H.; Kusaka, T.; Shimatani, K.; Tanaka, T. Uncertain Shape and Deformation Recognition Using Wavelet-Based Spatiotemporal Features. Electronics 2025, 14, 2131. https://doi.org/10.3390/electronics14112131
