Abstract

In recent years, a large number of Internet of Things (IoT)-based products, solutions, and services have emerged from the industry to enter the marketplace, improving the quality of service. With the wide adoption of IoT-based systems/applications in real scenarios, the privacy preservation (PP) topic has garnered significant attention from both academia and industry; as a result, many PP solutions have been developed, tailored to IoT-based systems/applications. This paper provides an in-depth analysis of state-of-the-art (SOTA) PP solutions recently developed for IoT-based systems and applications. We delve into SOTA PP methods that preserve IoT data privacy and categorize them into two scenarios: on-device and cloud computing. We categorize the existing PP solutions into privacy-by-design (PbD), such as federated learning (FL) and split learning (SL), and privacy engineering solutions (PESs), such as differential privacy (DP) and anonymization, and we map them to IoT-driven applications/systems. We further summarize the latest SOTA methods that employ multiple PP techniques, such as DP + anonymization or DP + blockchain + FL (rather than employing just one), to preserve IoT data privacy in both PES and PbD categories. Lastly, we highlight quantum-based methods devised to enhance the security and/or privacy of IoT data in real-world scenarios. We discuss the status of current research in PP techniques for IoT data within the scope established for this paper, along with opportunities for further research and development. To the best of our knowledge, this is the first work that provides comprehensive knowledge about PP topics centered on the IoT, and which can provide a solid foundation for future research.

1. Introduction

The Internet of Things (IoT) is a cutting-edge paradigm that links many electronic devices and gadgets to a smart network via the Internet, using advanced communications systems to ensure reliable and seamless connectivity. This massive integration of devices and network systems enables the generation and/or gathering of data from wearables and other sensors, actuating tools, and computing devices, unlocking a world of enormous possibilities [1,2]. The IoT is one of the main pillars of Industry 5.0, which enables the seamless merging of digital and physical domains [3]. Due to widespread adoption and extensive deployment, the global IoT market, which was less than a trillion dollars (i.e., USD 970 billion) in 2022, is expected to reach USD 2.227 trillion by 2028 [4]. There are numerous applications of the IoT in many sectors, and the umbrella for IoT-based systems and applications is expanding at astonishing speed [5]. The number of IoT devices with diverse capabilities is rapidly increasing, and the current 25 billion devices interconnected by IoT systems have been predicted to grow by about 60 billion by the year 2025 [6]. Furthermore, applications of the IoT in some areas (such as healthcare) are growing in large numbers with each passing day. In the health area, the IoT can be used to monitor vital signs [7] and for virtual health monitoring [8], infectious disease prediction [9], medical resource optimization [10], medical data analytics [11], secure and efficient diagnosis [12], etc.

Due to the huge popularity of IoT systems/applications, a wide variety of topics have been researched, such as trust management [13], service scenario enhancement [14], data analytics [15], collaborative learning [16], data collection from diverse sources [17,18], data integrity protection [19], edge AI or edge intelligence [20], secure communications and authentication protocol development [21,22,23], IoT device security [24], threat and vulnerability detection in IoT environments [25], computing and communications overhead reduction [26], web service data analytics [27], service quality enhancement [28], and dataset creation [29], to name just a few. Alongside these topics/areas, privacy protection in the IoT environment has been extensively investigated in recent years due to the extensive deployment of IoT devices and our dependence on digital tools. According to an IBM report, losses due to corporate data leakages in 2021 reached USD 4.35 million, which was the highest figure in the past 17 years [30]. Privacy protection in IoT systems and applications is very challenging due to greater data mobility and the collection of a broad array of information. To preserve privacy, a wide range of methods has been developed to protect the data generated from IoT devices as well as the data stored on cloud platforms.

Many survey papers centering on IoT data privacy have been published. Chanal and Kakkasageri [31] discussed confidentiality, integrity, availability, and authentication in IoT environments. Rasool et al. [32] discussed privacy and security issues in the Internet of Medical Things (IoMT) environment along with potential threats. Barua et al. [33] explored vulnerabilities in the Bluetooth low-energy protocol, which is widely used in IoT environments, and presented their impacts and mitigation techniques. Siraparapu and Azad [34] highlighted the importance of secure devices and networks needed to navigate the higher complexities of IoT environments. Adam et al. [35] highlighted recent advancements in protecting the privacy of sensitive data in IoT environments. Their study provides insight into three interrelated aspects (security, privacy, and trust) in IoT systems. Recently, privacy and security challenges have been discussed when IoT-based systems are used in start-ups [36]. A state-of-the-art (SOTA) review to navigate the complex privacy-aware landscape of the IoT personal data store was offered by Pinto et al. [37]. We affirm the contributions of the above-cited surveys; however, comprehensive knowledge concerning privacy from diverse viewpoints was not covered in previous surveys. Furthermore, hybrid methods to navigate the complex landscape of privacy challenges in IoT environments were not adequately discussed. To bridge this gap, we present a comprehensive analysis of diverse privacy-preserving (PP) solutions developed for IoT-based applications/systems. Our major contributions are as follows.

- We explore recent SOTA methods that have been developed to safeguard the privacy of sensitive IoT data in two different settings. Specifically, we identify and categorize those PP methods into two settings: on-device (OD) and cloud computing (CC).

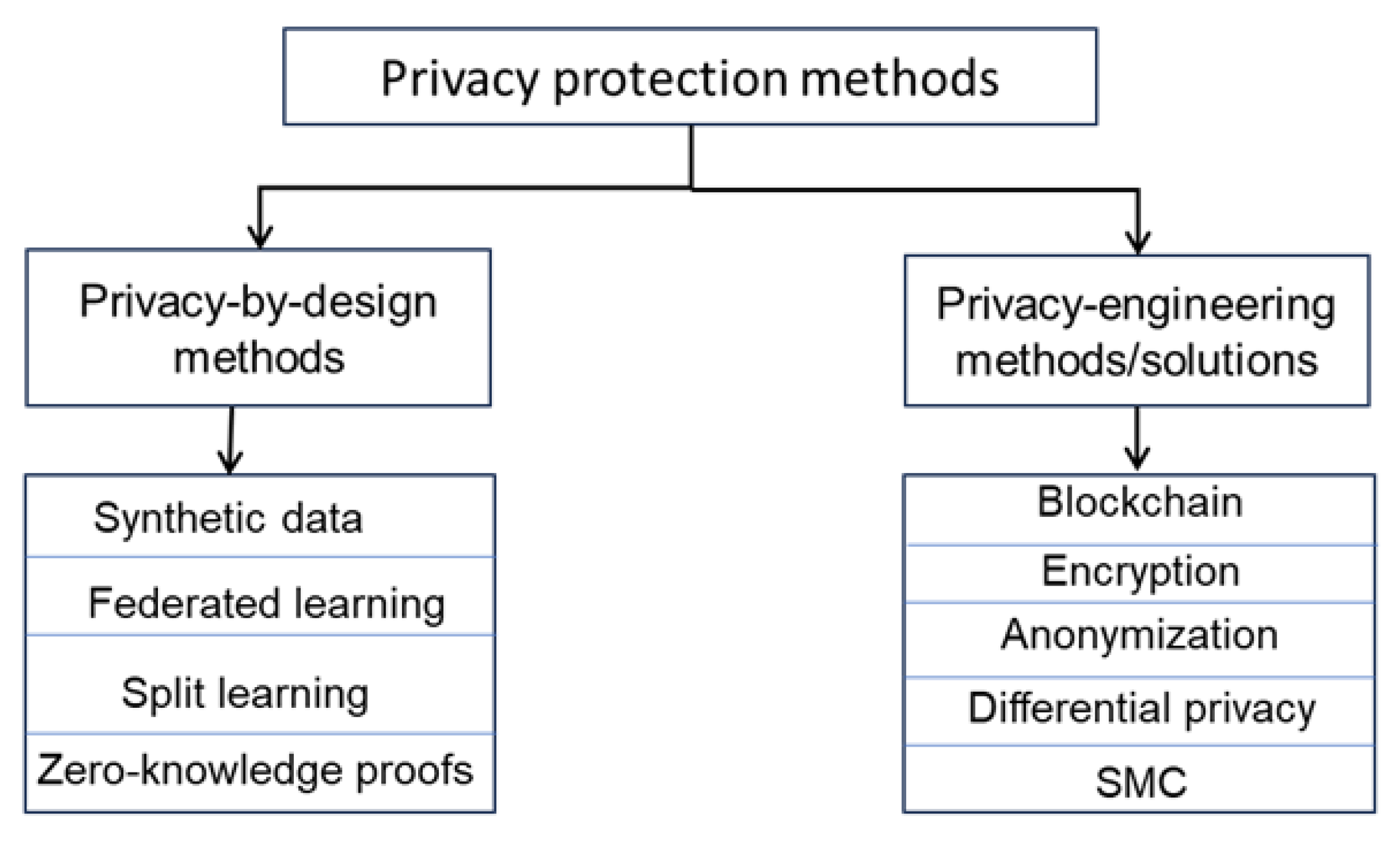

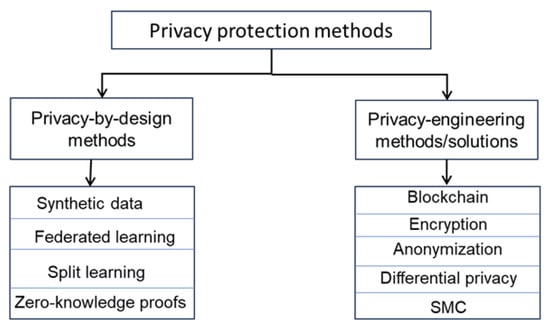

- We categorize existing PP solutions into two broad categories: privacy-by-design (PbD) and privacy engineering solutions (PESs), and we map them to IoT-driven applications/systems. To the best of the authors’ knowledge, this is the first work that introduces this categorization of PES and PbD methods in the IoT domain.

- We summarize the findings of the latest SOTA methods that employ multiple PP techniques (DP + anonymization, DP + blockchain + FL, etc.) rather than employing just one to preserve IoT data privacy. These methods are in huge demand when it comes to navigating the complex landscape of IoT data privacy while fulfilling the demand for analytics in the modern era.

- We highlight quantum-based methods devised to enhance the security and privacy of IoT data in real-world scenarios, along with details of their methodologies.

- We discuss the current status of PP in IoT data within the scope established for this paper, along with potential opportunities for future research and developments.

- To the best of our knowledge, this is the first work to provide comprehensive knowledge about PP topics centered on the IoT, and the insights garnered from this survey provide a valuable resource for researchers and practitioners in developing next-generation robust PP methods for IoT data.

The rest of this paper is structured as follows. Section 2 discusses the preliminaries and background of the topics presented in this paper. Section 3 explains the methodology adopted in performing our systematic analysis of the literature. The SOTA approaches that are used to preserve the privacy of IoT data in OD and CC settings are given in Section 4. The privacy-by-design and privacy engineering solutions centered on the IoT data privacy are discussed in Section 5. Synergized methods to offer stringent privacy guarantees are summarized in Section 6, and quantum-based PP methods for IoT data privacy protection are discussed in Section 7. The lessons learned from this work, challenges, and promising directions for future research, are given in Section 8, and we conclude the paper in Section 9.

2. Preliminaries and Background

Owing to the advancements in Internet and communications technologies, an IoT system can collect diverse types of data and transfer them to the cloud for analytics and/or mining tasks. In a typical IoT system, there can be N devices of similar or dissimilar types that aggregate data from the underlying environments. IoT systems can be designed for real-time services or offline analytics, depending upon the scenario. In the former, the analytics time is relatively short and results can be derived in a few seconds [38]. In the latter, data are first aggregated in the cloud, and obtaining results can take a few hours [39]. However, many optimized techniques can significantly reduce the computing overhead and analytics time [40]. With the advent of IoT systems, machine-to-machine and human-to-machine interactions have become possible, and many IoT-based systems/applications are serving humans in various ways.

2.1. Technologies Originating from the IoT Paradigm/Concept

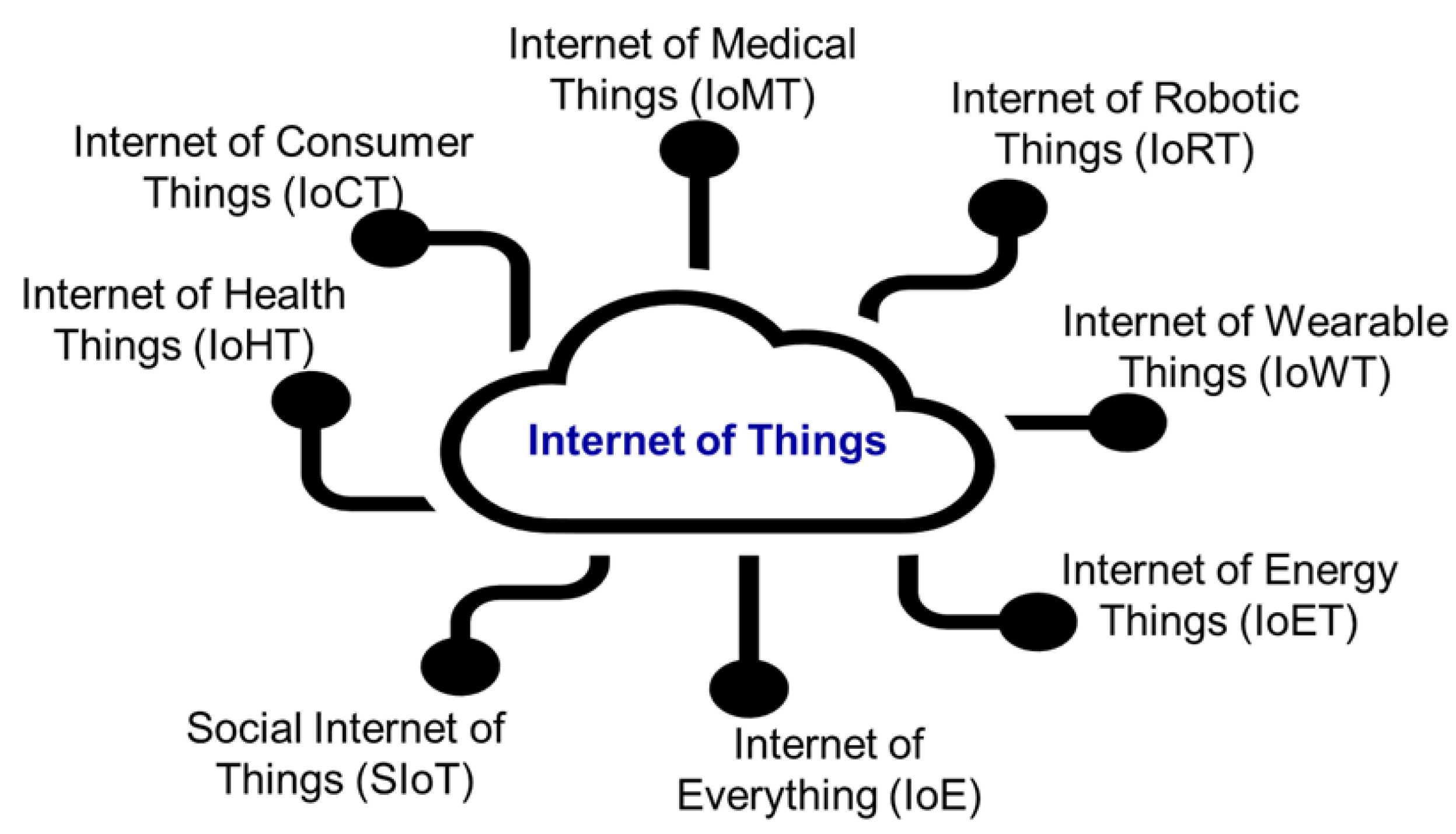

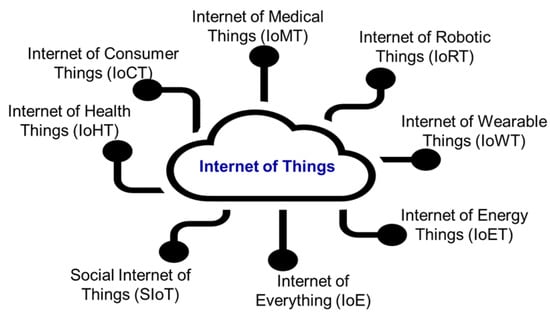

Recently, the IoT has been integrated with numerous other technologies to extend service scenarios as well as to secure IoT data from adversaries or malicious uses [41,42,43,44]. Figure 1 depicts eight well-known technologies that originate from the IoT concept/paradigm.

Figure 1. Technologies originating from the IoT paradigm.

Apart from these technologies, numerous other concepts and technologies have resulted from the IoT, such as mobile IoT applications, the clinical IoT, drones, multimedia, and the industrial IoT.

2.2. Four Main Components of IoT Systems

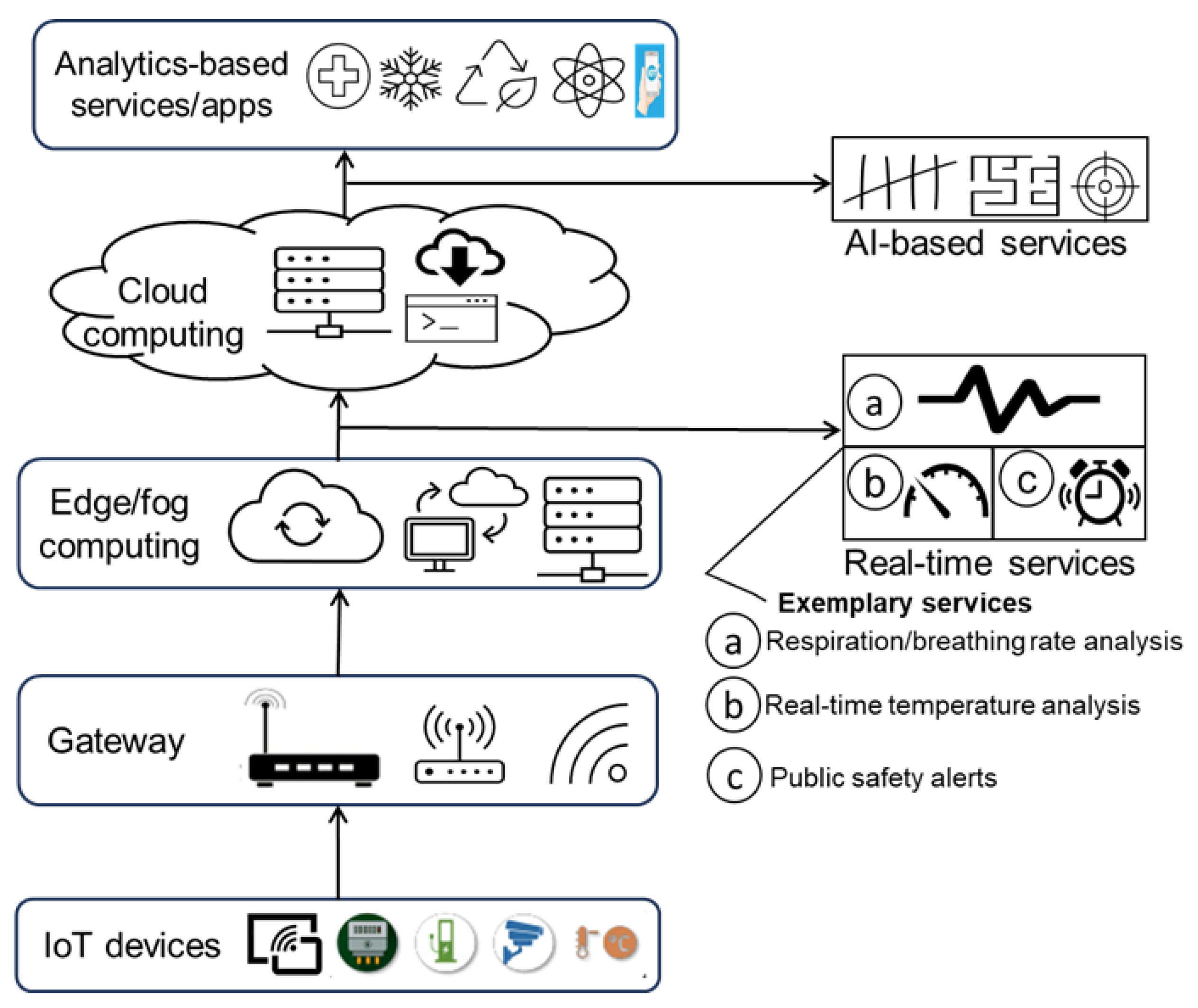

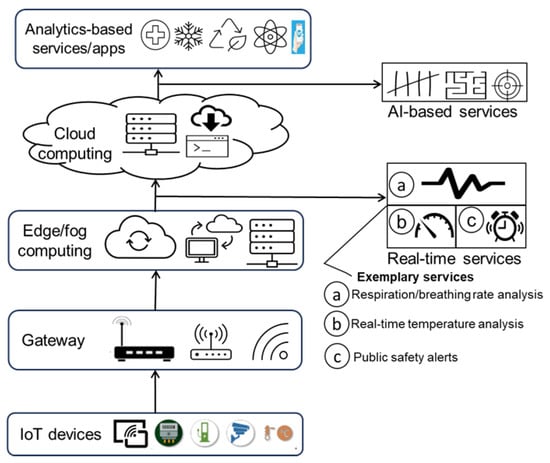

Figure 2 presents a generic IoT system having four crucial components (https://data-flair.training/blogs/how-iot-works/, accessed on: 22 March 2025): sensors and/or devices, network connectivity, computing and processing, and the user interface (downstream services).

Figure 2. Service scenarios of IoT-based systems.

The first component includes various sensors and/or devices that assist in aggregating minute data from the deployed environment. For example, a temperature sensor might acquire readings from the underlying environment. The data collected from these devices (ranging from a tiny temperature-monitoring sensor to a CCTV camera producing a complex, full video feed) can have distinct degrees of complexity. In some cases, a single device may capture data of different types or modalities. For instance, mobile phones have diverse types of sensors (cameras, a GPS, an accelerometer, etc.) that can collect data of different types. Hence, the role of this particular component is to aggregate data from the underlying environment/setting. This particular task is accomplished either using multiple devices or a stand-alone sensor. The second component encompasses the communications and transport devices (e.g., satellite networks, cellular networks, Wi-Fi, wide area networks, or Bluetooth) that assist in sending/transporting the collected data from sensors and/or devices to the cloud/edge infrastructure for analytics. However, it is vital to choose the best communication and transport devices for reliable data transmission without impacting the quality of service (QoS) [45].

The third component performs analytics on the collected data at either the edge/fog layer or in the cloud. In some applications, the response is required in a short time, which cannot be provided through cloud architectures due to the massive number of devices and/or other constraints, like power levels. In addition, the data generated and processed might need to be sent to the cloud for computing/processing, and it might take a long time to respond, which might affect end-user experience or QoS [46]. Edge/fog computing is suitable for time-sensitive applications because a response can be generated in a short time. In contrast, cloud-based systems are suitable when a response in a relatively longer time is acceptable. The fourth component delivers the information to the end user via dashboard and/or mobile phone [47]. This can be accomplished by triggering alerts or notifications through text messages or email. In some cases, users can tune the settings of the access control or other machines from the web interfaces according to their needs.
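The edge-versus-cloud choice described above can be sketched as a simple routing rule. This is a minimal illustration, not a description of any surveyed system: the `Task` class, the `route` function, and the latency constants `EDGE_LATENCY_S` and `CLOUD_LATENCY_S` are hypothetical names and values chosen for this example.

```python
from dataclasses import dataclass

# Assumed round-trip latencies in seconds; illustrative values only.
EDGE_LATENCY_S = 0.05
CLOUD_LATENCY_S = 2.0


@dataclass
class Task:
    name: str
    deadline_s: float  # longest response time the application tolerates


def route(task: Task) -> str:
    """Return the tier that should process a task.

    Use the cloud when it can meet the deadline, fall back to the edge for
    time-sensitive tasks, and report a failure when neither tier is fast enough.
    """
    if task.deadline_s >= CLOUD_LATENCY_S:
        return "cloud"
    if task.deadline_s >= EDGE_LATENCY_S:
        return "edge"
    return "unschedulable"


if __name__ == "__main__":
    for task in (Task("fall-alert", 0.2), Task("monthly-report", 3600.0)):
        print(task.name, "->", route(task))
```

In a real deployment the decision would likely also weigh device power levels and network load, which the text names as constraints; the sketch considers the deadline only.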

2.3. Three Types of IoT Architecture

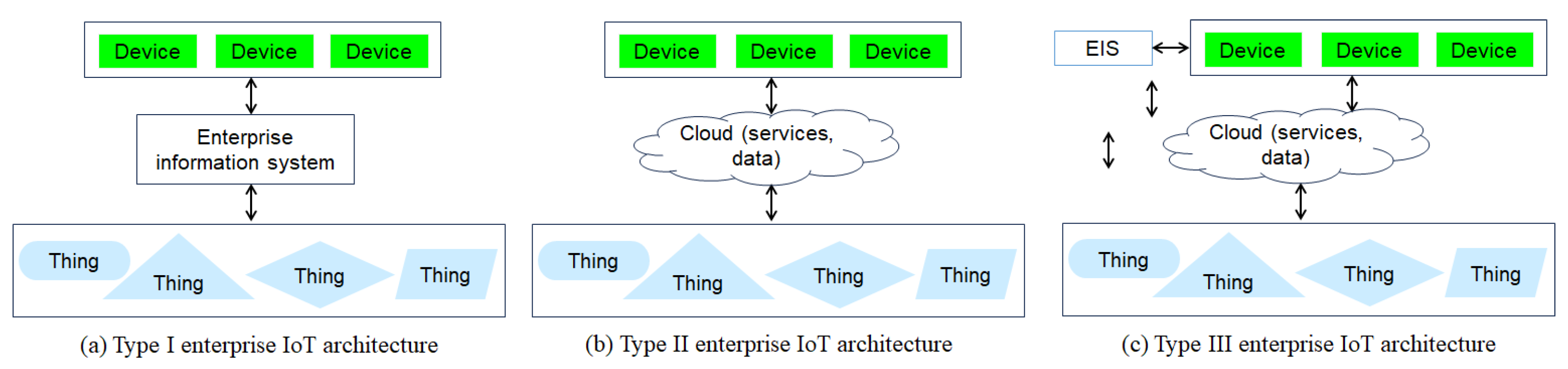

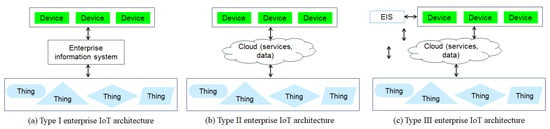

Due to higher ubiquity and real-time experiences, most organizations are making the transition from a conventional enterprise information system (EIS) to IoT-powered systems/applications. In these systems, a huge amount of data is collected and stored in the organization’s databases for downstream analytical tasks. In some cases, the capabilities of cloud computing are utilized to store collected data, and services are delivered to end users seamlessly. In some cases, cloud computing and the EIS jointly perform analytics on the data collected from different devices and/or things. Figure 3 presents three widely used types of IoT architecture.

Figure 3. Three types of IoT architecture used in enterprises (adapted from Li et al. [48]).

The first type of architecture offers legacy system integration, real-time services, data mobility, and visibility. The second type of architecture ensures scalability, real-time services, data mobility, and visibility. The third type of architecture ensures legacy system integration, scalability, real-time services, data mobility, and visibility. Further insight into the developments for each architecture, along with their challenges, was given in [48]. Of late, many enterprises are integrating an edge or fog computing architecture to expand services (or the application stack).

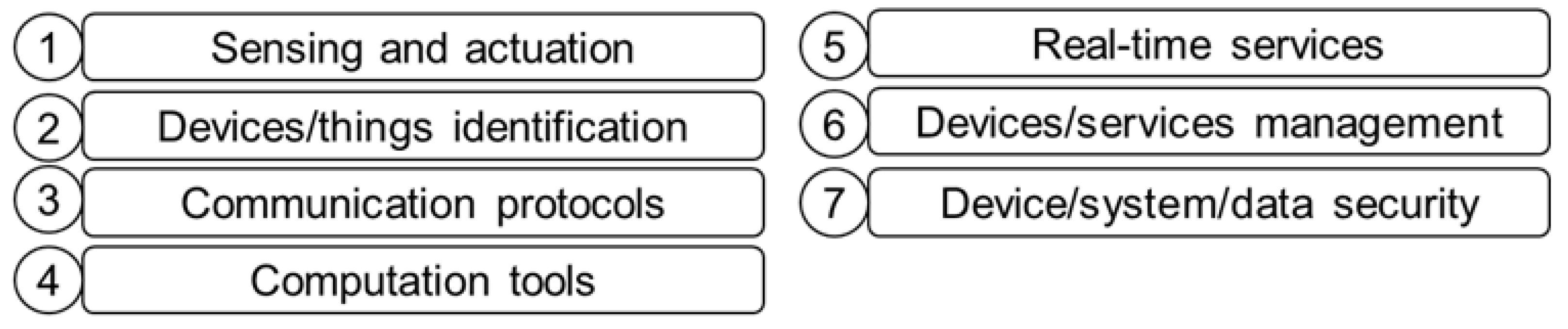

2.4. Key Pillars of the IoT

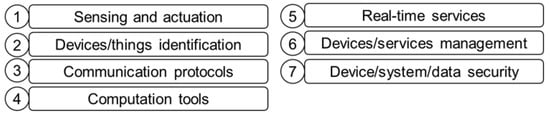

IoT systems encompass a large number of devices to collect heterogeneous data to provide diverse services to end users. They have seven major functional pillars to serve users, as shown in Figure 4. Each pillar has a unique role in IoT systems. For example, the first pillar collects data of diverse types from the underlying environments/settings with the help of sensors, wearable devices, and actuators. IoT sensors and actuators can be chemical, acoustic, pressure, thermal, biological, RFID tags, etc. [49]. The computation pillar facilitates data management, mobility, and knowledge discovery, and the security pillar protects the devices, communications protocols, and services from unauthorized access and/or modification. Further insight into each pillar can be gained from [50]. Due to the versatility of IoT systems, various tools and applications are integrated into each pillar. For example, to accelerate analytics results, fog/cloud computing is integrated with the computation tools pillar. Similarly, to offer better security and privacy, different techniques, including encryption, blockchain, differential privacy, and zero-knowledge proofs (ZKPs) are used in IoT systems.

Figure 4. Main pillars of IoT-based systems (adapted from Swamy et al. [50]).

2.5. High-Impact Applications of the IoT in Diverse Sectors

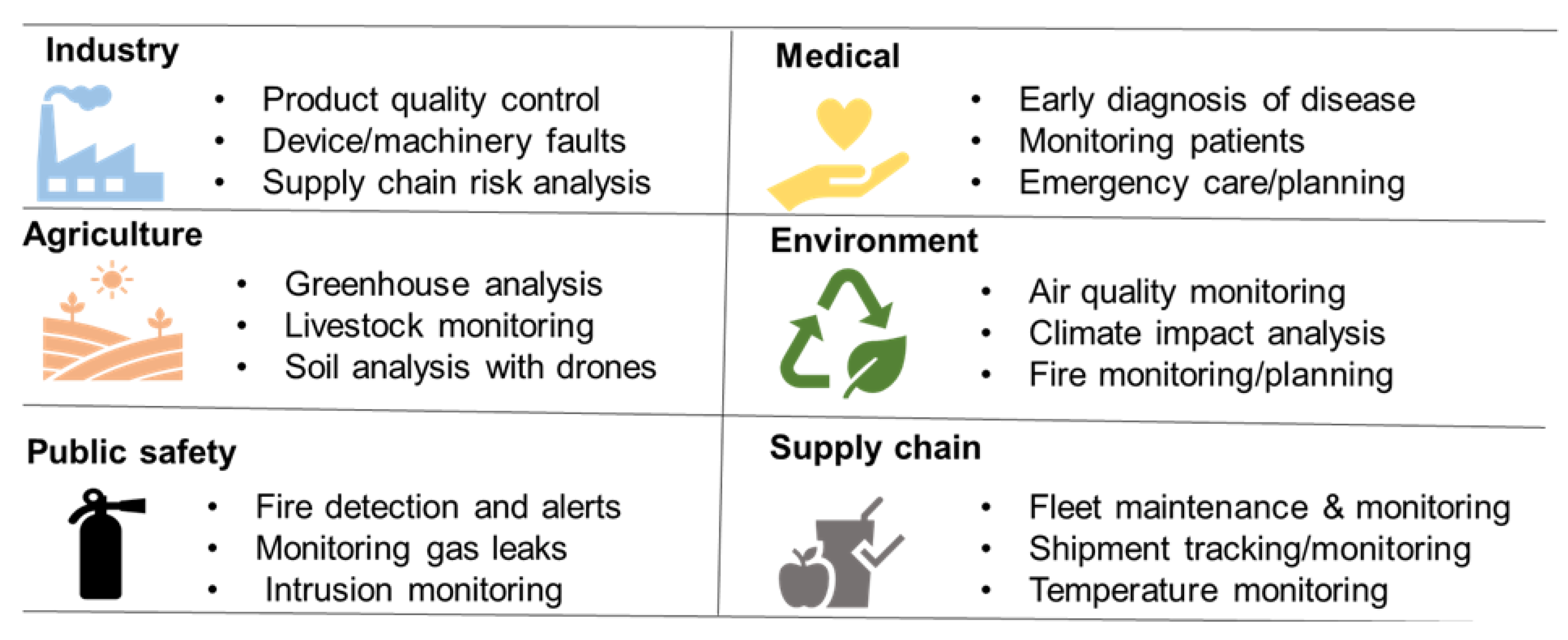

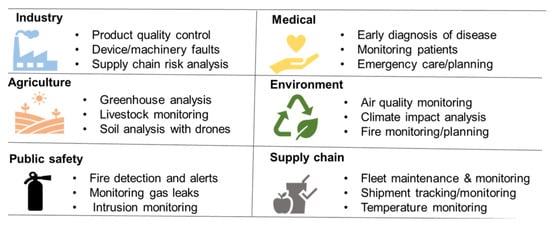

In recent years, the umbrella of IoT applications has expanded to encompass diverse sectors such as medicine, smart cities, surveillance, and agriculture. Figure 5 presents high-impact applications of the IoT in six sectors. In the medical sector, IoT systems can help monitor patients and achieve early diagnosis of diseases. In the agriculture sector, they can be used to monitor the health of plants as well as analyze crop yield. In industry, they can be used for industrial output monitoring and predictive maintenance. Apart from these sectors, IoT systems have potential applications in other sectors, such as smart cities, retail, and transportation. In smart cities, they can monitor water sanitization and identify illegal construction. In the retail sector, they can be used for recommendations by capturing data with CCTV cameras and analyzing it with AI techniques. In smart homes, they can monitor elderly patients’ health. In transportation, they can monitor traffic flow, spot illegal parking, and be used for freight management. In summary, IoT-based systems have diverse applications in each sector, and the array of IoT-based applications is rapidly expanding. Owing to the rapid advancements in AI models and computing resources, the application of IoT-based systems is expanding and creating a big impact on human lives [51,52]. Rapid advancements in real-time data processing and analysis are key enablers behind the success of IoT applications in diverse sectors.

Figure 5. High-impact applications of IoT systems.

3. Review Methodology

In this paper, we conducted an in-depth review to solicit and retrieve SOTA articles for accurate, reliable, and complete conclusions. We applied a systematic approach to find relevant papers from credible databases. We conducted this comprehensive survey by adopting the PRISMA method, which assists in identifying, evaluating, and synthesizing closely relevant studies about specific research questions and/or topics. This review focuses on the research questions below.

- What are the two practical settings followed in the IoT domain in real-world applications, and what privacy-preserving methods exist for each setting?

- What is the difference between the two types of privacy methods (PES and PbD) adopted in digital environments for privacy preservation, and what is the status of current developments in those two methods in the context of the IoT domain?

- What are the different privacy-preserving methods that have been synergized within the PES and PbD categories in order to provide privacy guarantees, and what are the key purposes of these ever-increasing synergies?

- What are the different privacy-preserving methods that have been integrated in PES and PbD in order to provide privacy guarantees, and what are the scenarios in which these integrations have been made?

- How are the recent developments in quantum-based PP methods affecting the IoT domain, and what kinds of problems can likely be resolved with such methods?

- What are the key opportunities associated with advancing the privacy status of the IoT environment in the future in relation to what has been researched already?

In this work, we provide systematic and extensive coverage of the privacy-preserving methods used in the IoT domain in order to help researchers and developers understand recent advancements in IoT privacy preservation and its related topics. To achieve this key goal, we performed an extensive analysis of relevant SOTA studies that provide robust privacy guarantees and that were published in reliable venues.

Scientific databases consulted and the selection criteria: The research articles included in this review were gathered from various scientific databases, including IEEE Xplore, the ACM Digital Library, Springer, MDPI, ScienceDirect, Scopus, and the Web of Science. The main reasons for considering these databases were higher credibility w.r.t. academic integrity, and the availability of various articles related to the scope of this review. To transparently and accurately report the contents and findings of prior studies, we selected peer-reviewed journals, top-tier conferences, technical magazines, and a few highly cited arXiv papers. It is worth noting that arXiv is not a widely used database for reviews, so we chose very few papers from it based on relevance and from exploring citations in the articles we considered from our primary databases in order to cover certain contexts. While selecting papers, we ensured that most of them were in English and that the full text (or metadata) of each paper was easily accessible. Through this systematic method, we ensured that relevant, high-quality, SOTA, and recently published articles were selected.

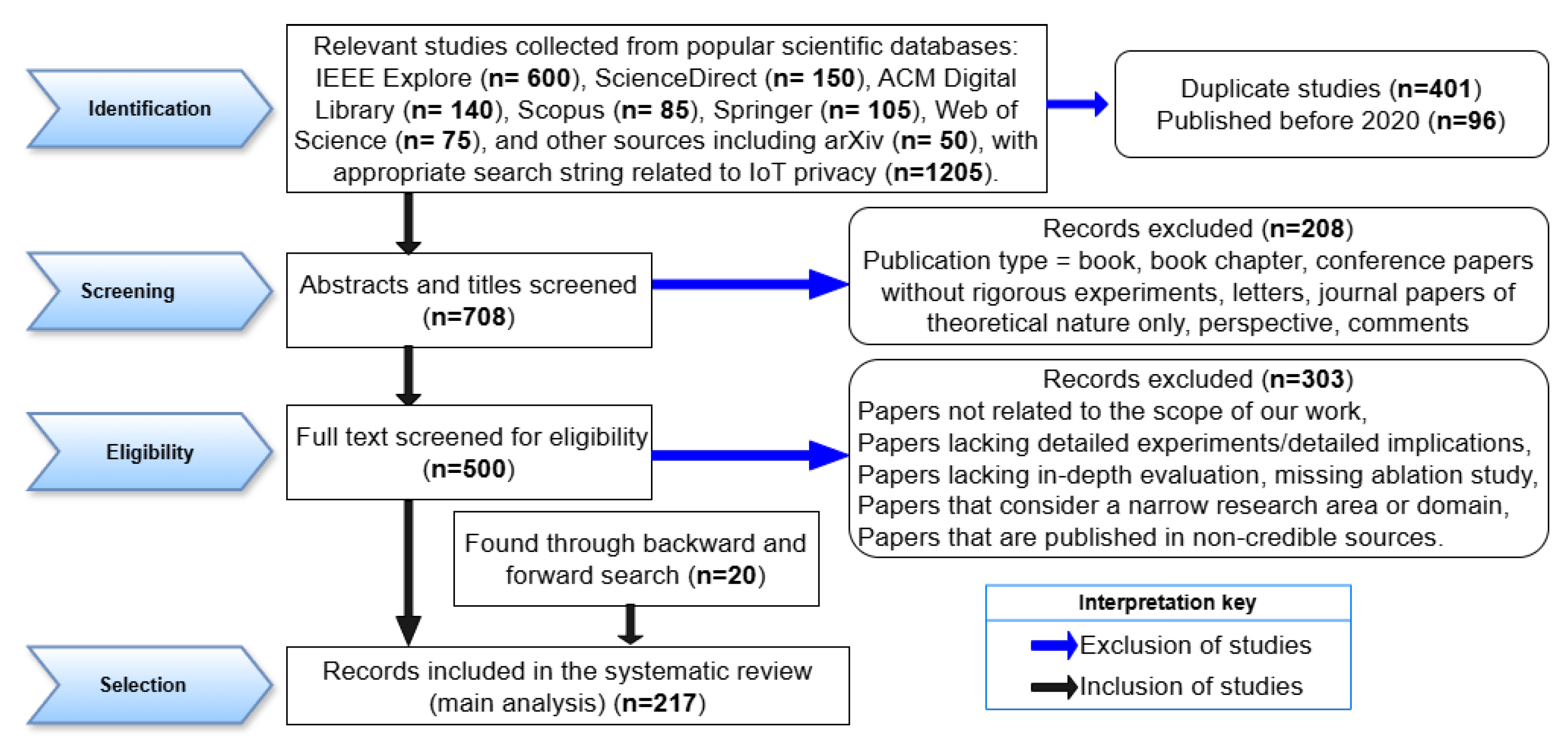

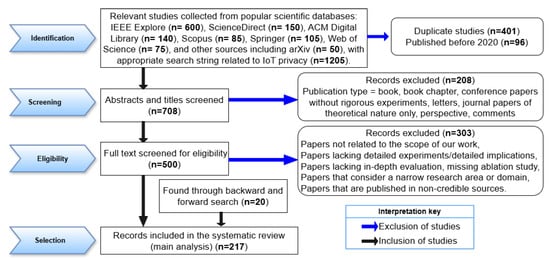

Query approach: We queried relevant studies for this paper from Google Scholar and the search functions available in the above-mentioned databases. We also applied publication year, language, and full-text search criteria to retrieve recent and relevant studies. We performed backward and forward searches of citations in highly cited papers to identify relevant studies for analysis and inclusion in our review. We used different keywords to find relevant studies for our research questions. For example, the main search keywords for identifying studies related to developments in Section 4 were “privacy preservation in IoT”, combined with (AND) “on-device setting/cloud setting” and (OR) “(the application name) (e.g., activity recognition, analytics, healthcare)”. We also used privacy attacks as well as privacy technique names combined with IoT to search relevant studies: ((IoT) AND (privacy disclosure OR inference attacks OR sensitive data leakage OR inference of sensor readings OR identity disclosure) AND (differential privacy OR anonymization OR secure multi-party computation OR blockchain OR federated learning OR split learning OR synthetic data)). We also used the related article function from Google Scholar to find papers that belong to the same category. To find relevant literature for PES and PbD methods, we used “IoT” combined with the name of the PP method. To find related literature for a hybrid method, we used “IoT” combined with the name of two or more privacy technologies that were combined to achieve privacy in an IoT setting. Similarly, we found relevant studies for quantum-based privacy aspects by using the quantum technique’s name, such as quantum key distribution (QKD) or quantum-based privacy, combined with “IoT” as the query. We also searched some papers by combining the words survey, review, or perspective with IoT to cover deep knowledge about established concepts in the field. Lastly, we used performance objective names such as privacy protection with reduced overhead, privacy and utility optimization, privacy and latency reduction, etc., to find related papers. The systematic process utilized to select relevant studies is illustrated in Figure 6.
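The Boolean query quoted above can be assembled programmatically, which helps keep the term lists consistent across databases. The sketch below is illustrative only: the helper names `any_of` and `build_query` are hypothetical, and quoting multi-word phrases is an assumption, since each database has its own query syntax.

```python
# Term lists copied from the query given in the text.
ATTACKS = [
    "privacy disclosure",
    "inference attacks",
    "sensitive data leakage",
    "inference of sensor readings",
    "identity disclosure",
]
TECHNIQUES = [
    "differential privacy",
    "anonymization",
    "secure multi-party computation",
    "blockchain",
    "federated learning",
    "split learning",
    "synthetic data",
]


def any_of(terms):
    """Join terms with OR inside parentheses, quoting multi-word phrases."""
    quoted = [f'"{t}"' if " " in t else t for t in terms]
    return "(" + " OR ".join(quoted) + ")"


def build_query(topic, attacks, techniques):
    """Combine the topic, attack terms, and technique terms with AND."""
    return " AND ".join([f"({topic})", any_of(attacks), any_of(techniques)])


if __name__ == "__main__":
    print(build_query("IoT", ATTACKS, TECHNIQUES))
```

Swapping the technique list for a pair of technique names gives the kind of query described for hybrid methods.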

Figure 6. The systematic procedure used in the selection of studies for in-depth analysis of privacy preservation in the IoT domain (the number of papers filtered at each stage is indicated with blue arrows).

Exclusion and inclusion criteria: As shown in Figure 6, we systematically chose different studies to be included in our review, and excluded those that were either redundant or lacked detailed experimental and/or methodological descriptions. In the initial assessment, we excluded studies that do not closely align with the scope of this paper by carefully checking the titles and abstracts. We also removed papers that were not written in English. In the final assessment, we excluded studies that focus on narrow areas like IoT use in agriculture (or in vehicular networks) or that discuss outdated topics (e.g., device specification privacy or device structure) with minor modifications in the methodologies. We excluded papers for which either the full text or the metadata were unavailable. We also excluded some papers that only discuss general IoT services without privacy aspects. Our selection process initially identified 1205 publications. The above-stated exclusion criteria ensured the relevance and quality of the selected studies, with types such as book chapters, books, low-tier conference papers, letters, and retracted studies being removed from inclusion. Lastly, only journal or top-tier conference articles and reviews/surveys published in English were retained. Only recent studies that have empirical results and are accessible in full text were included in the paper. Specifically, studies that devised PP methods in order to resolve the problem of data privacy in IoT systems/applications were included in our study. This systematic process resulted in a final dataset for analysis encompassing 256 publications. As shown in Figure 6, about 497, 208, and 303 studies were excluded at the identification, screening, and eligibility stages, respectively.

Threats to validity and their mitigation: Two key threats related to the validity of our review are (i) search bias and (ii) quality assessment bias, which can undermine the reliability and completeness of the findings. The former can occur due to two issues: incomplete searches, or searching only some reputable sources. This can result in the exclusion of papers that were published in lower-tier venues. The latter threat is related to criteria employed in assessing the suitability of studies for inclusion or exclusion (bias, lack of clarity, etc.). To address both threats, we included studies from diverse databases and used techniques like snowballing from [53] to prevent search bias. To mitigate the second threat, we evaluated each study’s full text, and examined the methodological and experimental details. We adopted other techniques (e.g., establishing a checklist in terms of questions and scoring each study) from [53] to prevent bias related to quality assessment and to exclude low-quality/irrelevant papers. We analyzed the relevance of each study by carefully contrasting each paper with the objectives and/or scope of our review. Based on the above analysis, it is fair to say that threats to validity were effectively mitigated, and the findings and conclusions are reliable and complete.
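The checklist-and-scoring idea mentioned above can be sketched as follows. This is a hypothetical illustration only: the four questions, the 0/0.5/1 answer scale, and the 0.75 inclusion threshold are assumptions made for the example, not the instrument taken from [53] or used in this review.

```python
# Hypothetical checklist; questions and scale are illustrative assumptions.
CHECKLIST = (
    "states a clear privacy objective",
    "describes the method in reproducible detail",
    "reports empirical results",
    "discusses limitations",
)
ALLOWED = {0.0, 0.5, 1.0}  # no / partly / yes


def quality_score(answers):
    """Average the per-question answers into a score in [0, 1]."""
    if set(answers) != set(CHECKLIST):
        raise ValueError("answers must cover every checklist question")
    if any(value not in ALLOWED for value in answers.values()):
        raise ValueError("each answer must be 0, 0.5, or 1")
    return sum(answers.values()) / len(CHECKLIST)


def include(answers, threshold=0.75):
    """Keep a study only if its score reaches the assumed threshold."""
    return quality_score(answers) >= threshold


if __name__ == "__main__":
    study = dict.fromkeys(CHECKLIST, 1.0)
    study["discusses limitations"] = 0.5
    print(quality_score(study), include(study))
```

Recording the per-question answers, rather than only the final decision, makes it easier for a second reviewer to audit an inclusion or exclusion.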

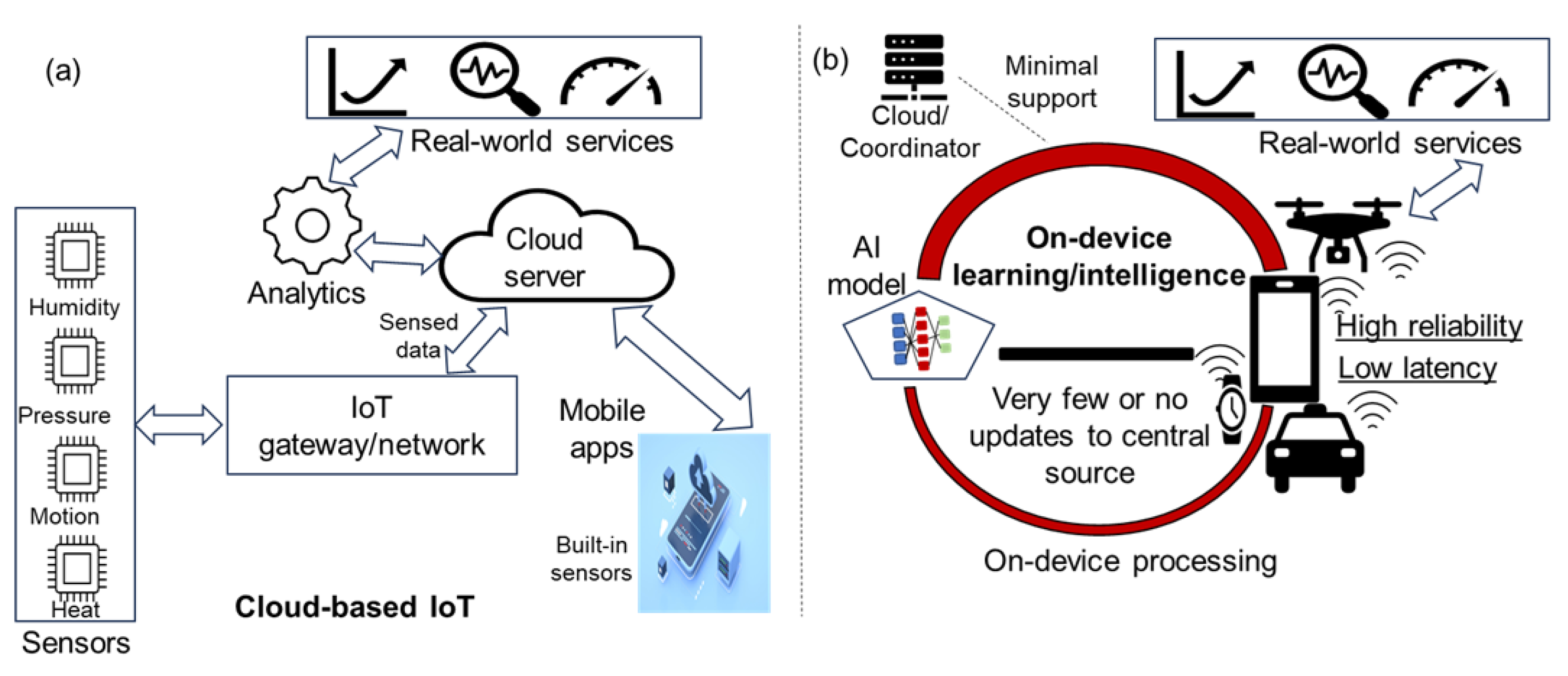

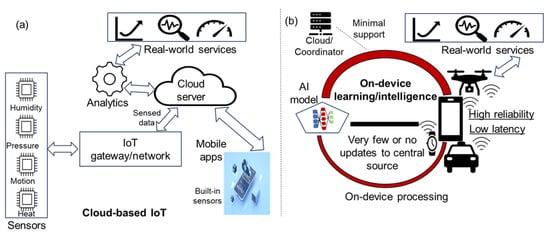

4. Privacy-Preserving Methods for Two IoT Settings

In this section, we describe privacy-preserving methods developed for on-device and cloud-based IoT systems. Both settings provide numerous services to end users, such as medical care, recommendations, environment condition monitoring, localization and tracking, and activity analysis. We illustrate both these settings in Figure 7. In the on-device setting, the data rarely move from device to cloud, and most of the computations are executed locally, as shown in Figure 7b. However, minimal support to execute compute-intensive operations or model updates is provided by back-end servers/coordinators in some scenarios. In addition, minimal generic or formatted data are shared with back-end servers/coordinators in order to serve more users or to resolve latency issues [54]. Due to the widespread use of mobile phones and other wearable devices, on-device services (including AI-based) are on the rise [55]. A practical example of such a system processing data locally is skin cancer recognition [56]. In the cloud setting, data are collected from sensors and/or devices and transferred to cloud servers for further analysis, as shown in Figure 7a. An example is skin health monitoring by using a cloud-enabled IoT [57]. The on-device setting shown in Figure 7b provides better privacy because data are mostly processed on a device, and network connectivity is not required in some cases. However, an attacker can sometimes exploit loopholes in AI models or other configuration files to compromise user privacy [58]. In contrast, the cloud-based IoT has been widely criticized for poor privacy guarantees because data are always aggregated at a central server [59]. To address privacy issues in both settings, wide arrays of solutions have been developed, ranging from conventional anonymization to sophisticated obfuscation.

Figure 7. Overview of the two different IoT settings ((a) denotes the cloud computing setting, and (b) denotes the on-device setting) considered for privacy analysis.

Next, we analyze the SOTA privacy-preserving methods that have been developed for the above two IoT settings. The analysis in this section can assist in understanding recent developments for privacy preservation in IoT settings. Table 1 contrasts the recently developed SOTA privacy-preserving solutions for on-device and cloud-based IoT settings. We analyze and compare the solutions based on four parameters (techniques used, strengths, weaknesses, and applications). In the on-device setting, most of the methods execute privacy-preserving methods on devices, but with minimal support from the edge/cloud. In contrast, the privacy-preserving methods used in the other setting are mostly executed in the cloud. In some cases, stringent privacy requirements are satisfied through the execution of privacy-preserving methods on edge devices as well as in the cloud [60,61]. In some scenarios, privacy is preserved merely by keeping data on the devices and executing some operations locally [62,63]. In some cases, more than one server is employed to better protect the privacy of the data originating from IoT devices [64,65]. In some studies, two- or three-layer privacy solutions are suggested to protect data from adversaries [66]. However, as more devices are connected, and because IoT-based systems are deployed in real-world settings, privacy protection is still challenging [67]. In the future, more robust and practical privacy-preserving methods will be required for all sorts of privacy issues.

Table 1. Analysis of recent and SOTA privacy-preserving methods for two IoT settings.

From an in-depth analysis of the published literature, we found that privacy-preserving methods for on-device settings are relatively few compared to the cloud-based setting. However, the trend towards serverless computing is increasing, and more and more AI models are deployed on cellphones [90,91]; therefore, more developments are expected in the on-device IoT setting. For privacy protection, different techniques such as DP, anonymization, encryption, secure computing, obfuscation, and randomizing have been used. In the on-device setting, lightweight operations such as randomization, reformatting, and data reduction have been applied. In contrast, more complex techniques such as encryption, anonymization, DP, and hybrid solutions have been applied in the cloud-based setting. Most techniques provide the desired privacy guarantees in related scenarios and/or domains. However, there are certain performance objectives and/or overhead issues in each method, as noted in Table 1. From an application’s point of view, each solution provides robust privacy guarantees in either application-specific or generic scenarios. The analysis in Table 1 can pave the way to understanding two practical IoT settings and the privacy-preserving solutions developed for each setting.
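To make the idea of lightweight on-device randomization concrete, the sketch below uses randomized response, a classic local randomization technique. It is chosen here for illustration and is not claimed to be the method of any surveyed study. Each device reports its true bit with probability e^ε / (1 + e^ε) and the flipped bit otherwise; the collector then debiases the aggregate. The function names and the synthetic 30% population are assumptions.

```python
import math
import random


def truth_probability(epsilon):
    """Probability of reporting the true bit under epsilon-local DP."""
    return math.exp(epsilon) / (1.0 + math.exp(epsilon))


def randomize_bit(bit, epsilon, rng):
    """Report the true bit with probability p, otherwise its complement."""
    return bit if rng.random() < truth_probability(epsilon) else 1 - bit


def estimate_rate(reports, epsilon):
    """Debias the observed rate of 1s to estimate the true rate."""
    if epsilon <= 0:
        raise ValueError("epsilon must be positive to invert the randomization")
    p = truth_probability(epsilon)
    observed = sum(reports) / len(reports)
    return (observed - (1.0 - p)) / (2.0 * p - 1.0)


if __name__ == "__main__":
    rng = random.Random(0)
    true_bits = [1] * 3000 + [0] * 7000  # synthetic: 30% of devices hold a 1
    reports = [randomize_bit(b, 1.0, rng) for b in true_bits]
    print(round(estimate_rate(reports, 1.0), 3))
```

The per-device cost is one random draw and one comparison, which is why this family of techniques suits constrained devices; the price is extra variance in the collector's estimate.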

From the analysis in Table 1, it can be observed that some methods yield better results than others. The main reason for the performance disparity is the difference in objective, proposed methodology, and evaluation datasets. Furthermore, some methods are attack-specific and therefore yield better performance against respective attacks only. In addition, some methods make use of optimization strategies such as noise optimization in DP or lightweight operations to yield better performance in both IoT settings. In terms of utility and privacy trade-off, only a few methods resolve this critical issue, while most methods optimize one metric at the expense of another. In some methods, the use of a small privacy budget (ε) yielded better privacy but very poor utility. Furthermore, some methods sustained utility alongside privacy by carefully determining the noise or classifying data into sensitive and non-sensitive parts. In all methods, the computing overheads rise with the increase in data size or complex mathematical operations, yielding poor scalability. There are only a few methods that maintain the balance between performance objectives (e.g., privacy/utility) and computational overheads. Additionally, some methods make use of the encryption technique, which can lead to poor scalability with the increase in data size. Lastly, most methods lack real-world applicability, as the implementation was carried out in the form of test beds or static datasets. Most methods can encounter many configuration and implementation issues in real IoT environments. In the future, it is vital to develop practical and lightweight methods that can maintain the balance between different kinds of trade-offs while offering seamless privacy guarantees against modern-day attacks.
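The privacy-utility trade-off in DP can be illustrated with the standard Laplace mechanism, in which the noise scale equals the query sensitivity divided by the privacy budget ε, so a smaller budget means proportionally larger noise. The sketch below is a minimal illustration under stated assumptions: the readings are synthetic, the clipping bounds of 15 and 30 are arbitrary, the dataset size is treated as public, and `private_mean` is a hypothetical helper rather than code from any surveyed method.

```python
import random
import statistics


def laplace_noise(scale, rng):
    """Sample Laplace(0, scale) as the difference of two exponentials."""
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def private_mean(values, lower, upper, epsilon, rng):
    """Release the mean of clipped values with Laplace noise.

    Clipping to [lower, upper] bounds the sensitivity of the mean at
    (upper - lower) / n when one record is replaced.
    """
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    clipped = [min(max(v, lower), upper) for v in values]
    sensitivity = (upper - lower) / len(clipped)
    return statistics.fmean(clipped) + laplace_noise(sensitivity / epsilon, rng)


if __name__ == "__main__":
    rng = random.Random(42)
    readings = [rng.uniform(18.0, 26.0) for _ in range(200)]  # synthetic data
    true_mean = statistics.fmean(readings)
    for eps in (0.01, 0.1, 1.0):
        errors = [
            abs(private_mean(readings, 15.0, 30.0, eps, rng) - true_mean)
            for _ in range(500)
        ]
        print(f"epsilon={eps}: mean absolute error={statistics.fmean(errors):.3f}")
```

Running the loop shows the error growing as ε shrinks, which is the utility loss referred to above.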

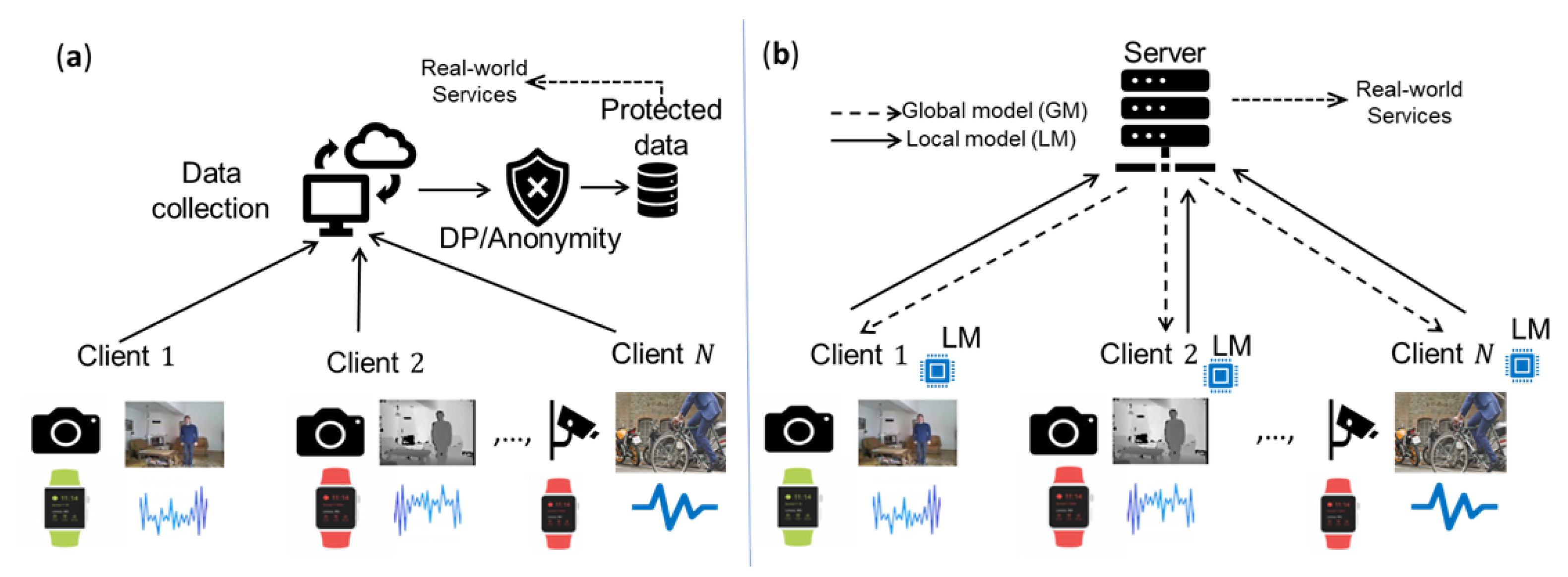

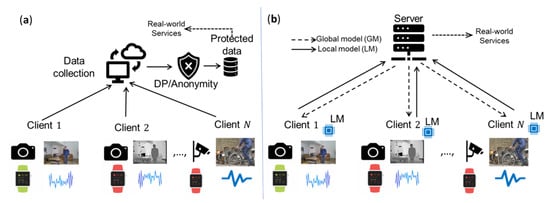

5. Privacy-by-Design and Privacy Engineering Solutions for IoT Data

In this section, we identify and summarize the findings from the two different types of privacy-preserving solutions for IoT data, namely privacy-by-design (PbD) solutions and privacy engineering solutions (PESs). In the former, the raw data are not accessed; instead, the model is trained collaboratively. An example of such a solution is federated learning, where data are not accessed, but knowledge is acquired from all clients (that is, IoT devices) [92,93]. In contrast, in privacy engineering solutions, the data are accessed in raw form and shared for analytics after applying the necessary protection. Examples of such solutions are anonymization and DP, which are applied to data collected from IoT devices and shared for downstream tasks [94]. Further information regarding these solutions (along with examples) can be found in our previous work [95]. In some scenarios, both solutions have been used jointly to meet strict privacy requirements [96]. In this work, we focus on the efficacy of both solutions in terms of privacy preservation in IoT settings/environments. We illustrate both solutions in Figure 8, in which (a) and (b) show PES and PbD, respectively.

Figure 8.

Overview of (a) privacy engineering and (b) privacy-by-design solutions for IoT data.

In recent years, both solutions have been extensively used in IoT environments to protect the privacy of both the data and the models while allowing knowledge extraction [97,98]. In this work, we identify and summarize the findings of both solutions to highlight recent developments in the IoT domain. To the best of our knowledge, developments for both of these solutions have not been presented in a categorized form in the current literature. Table 2 and Table 3 summarize the recent SOTA PES for IoT settings. Specifically, we put the solutions into five broad categories and summarize the findings of different studies in each category. Table 2 and Table 3 show that many privacy-preserving solutions have been proposed for IoT environments. In this analysis, we provide information about data modality along with the strengths and weaknesses of each approach. The analysis in Table 2 and Table 3 contributes to understanding the latest developments for privacy protection in diverse IoT systems and applications. Note that there are hybrid methods in each category, which we thoroughly cover in the next section.

From the analysis in Table 2 and Table 3, it can be observed that some methods yield better results than others due to differences in anonymity/privacy operations, the number of steps in the methodology, and evaluation datasets/metrics. For example, most methods in the anonymization category employ clustering operations to group similar users/records, thereby reducing the information loss. In these methods, privacy can be compromised, particularly when the sensitive information has low diversity. These methods cannot effectively resolve the trade-off between privacy and utility because pre-built generalization taxonomies introduce more changes in the data. Conversely, the computing complexity is not very high in these methods due to simple operations (e.g., data transformations). However, some steps, such as clustering of the data, can lead to higher overheads when the data size is large. These methods lack real-time applicability, as they provide resilience against a limited array of privacy attacks. In contrast, DP-based methods can offer robust privacy guarantees. However, a small value of ε can degrade utility, and therefore, many optimization strategies have been developed to tune ε and resolve the privacy–utility trade-off. Moreover, it is still very challenging to yield better utility while adopting DP-based methods in realistic scenarios. The computing complexity of DP-based methods also rises with the dimensions of the data. DP has been widely used in real-time settings as well as in offline scenarios.
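The observation that anonymized data can still leak when sensitive values lack diversity can be made concrete with a small check. The following is a minimal sketch, not the procedure of any surveyed study: the records, the attribute names, and the helper functions `k_anonymity` and `l_diversity` are hypothetical and chosen only for illustration.

```python
from collections import defaultdict


def group_by_quasi_identifiers(records, quasi_identifiers):
    """Group records into equivalence classes that share quasi-identifier values."""
    groups = defaultdict(list)
    for rec in records:
        key = tuple(rec[q] for q in quasi_identifiers)
        groups[key].append(rec)
    return groups


def k_anonymity(records, quasi_identifiers):
    """Return the size of the smallest equivalence class (the k actually achieved)."""
    groups = group_by_quasi_identifiers(records, quasi_identifiers)
    return min(len(g) for g in groups.values())


def l_diversity(records, quasi_identifiers, sensitive):
    """Return the smallest number of distinct sensitive values in any class."""
    groups = group_by_quasi_identifiers(records, quasi_identifiers)
    return min(len({r[sensitive] for r in g}) for g in groups.values())


# Hypothetical, already-generalized wearable records (illustrative values only).
records = [
    {"age": "30-39", "zip": "130**", "diagnosis": "flu"},
    {"age": "30-39", "zip": "130**", "diagnosis": "asthma"},
    {"age": "40-49", "zip": "148**", "diagnosis": "diabetes"},
    {"age": "40-49", "zip": "148**", "diagnosis": "diabetes"},
]

k = k_anonymity(records, ["age", "zip"])
l = l_diversity(records, ["age", "zip"], "diagnosis")
print(k, l)  # 2 1
```

Here every record is indistinguishable from one other record (k = 2), yet the second class contains a single diagnosis (l = 1), so anyone who can place a user in that class learns the sensitive value.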

The encryption-based methods are usually slow but offer better privacy guarantees at the expense of utility. These methods have been widely used in data transfer from IoT devices to cloud servers without compromising privacy. Recently, some encryption-based methods have enabled knowledge discovery even from ciphertexts, improving the privacy–utility trade-off. The encryption-based methods have poor scalability owing to complex mathematical operations, because their computing complexity can rise rapidly with the data size or key size. However, recent lightweight encryption methods have resolved these issues to some extent. These methods have higher real-time applicability, particularly in data transfer. Blockchain-based methods offer better data privacy but are very computationally expensive. The utility of these methods depends on the downstream tasks. The computing complexity of these methods can also rise with the number of participants as well as the number of transactions/interactions. They have higher real-time applicability owing to the CIA (confidentiality, integrity, and availability) triad. SMC has emerged as one of the PP methods involving multiple parties. However, the use of complex operations (e.g., encryption, masking, etc.) makes this technique computationally expensive. Furthermore, the data undergo various splits and shares, and therefore, the utility can be low. However, optimizations such as protocol choice, precision tuning, and carefully crafted noise can help lower the utility loss. The computing complexity in SMC can also rise with the data size and the number of partitions. Finally, most techniques have not been tested with real IoT data, and therefore, some deployment/configuration issues are likely to emerge in most of these methods in real settings.
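To illustrate how SMC-style aggregation splits data into shares, the sketch below uses additive secret sharing over a prime field. It is an illustrative assumption rather than the protocol of any study in Table 3: the modulus `PRIME`, the sensor readings, the number of servers, and the function names are all hypothetical, and the sketch assumes honest-but-curious servers that do not collude.

```python
import secrets

PRIME = 2**61 - 1  # illustrative field modulus (an assumption, not from the survey)


def share(value, n_parties):
    """Split an integer into n additive shares that sum to value modulo PRIME."""
    shares = [secrets.randbelow(PRIME) for _ in range(n_parties - 1)]
    last = (value - sum(shares)) % PRIME
    return shares + [last]


def reconstruct(shares):
    """Recombine shares; fewer than all shares reveal nothing about the value."""
    return sum(shares) % PRIME


# Hypothetical sensor readings, each split among three aggregation servers.
readings = [72, 65, 80]
n_servers = 3
per_device_shares = [share(r, n_servers) for r in readings]

# Each server adds up the one share it received from every device.
server_sums = [
    sum(device[s] for device in per_device_shares) % PRIME
    for s in range(n_servers)
]

# Only the combined server results reveal the aggregate, never a single reading.
total = reconstruct(server_sums)
print(total)  # 217
```

Each device performs only a few modular additions here, but communication grows with the number of devices times the number of servers, which is consistent with the scalability concern noted above.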

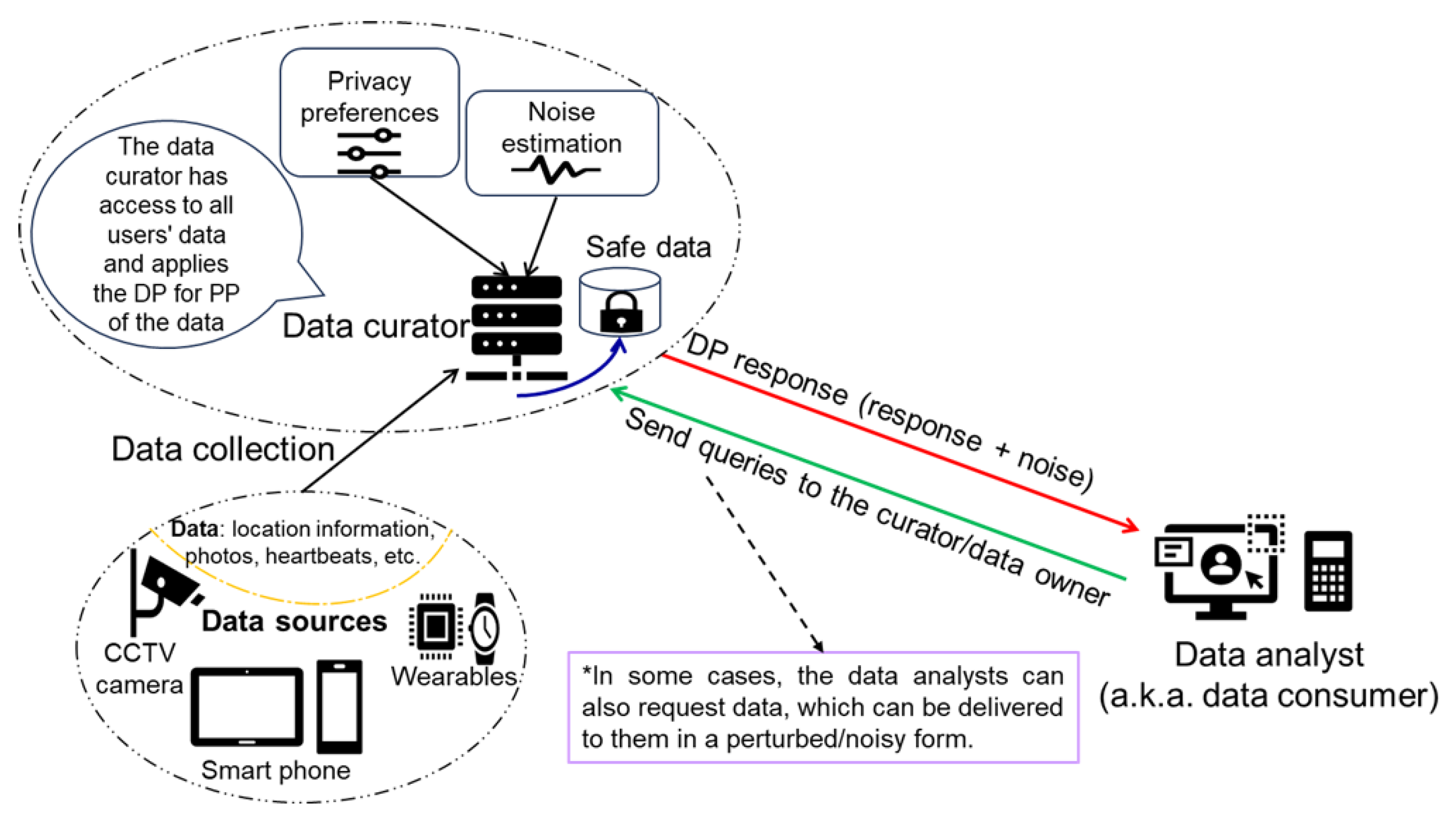

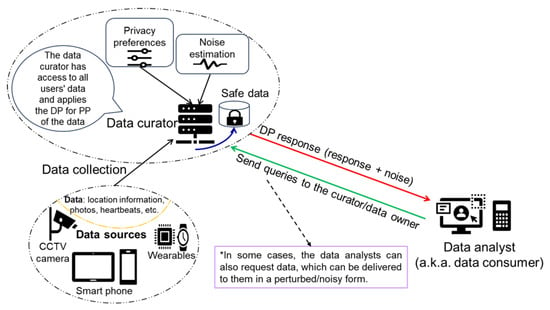

PESs support diverse objectives such as IoT data sharing/analytics, answering queries, pattern extraction, secondary use of IoT data for diverse purposes, training ML models, etc., in a privacy-preserving way. As stated earlier, in these solutions, raw data are usually aggregated at some central place, and PP mechanisms are then applied. In recent years, many customized PESs have been developed to extract meaningful knowledge from IoT data without losing guarantees of privacy. Zhang et al. [99] recently developed an adaptive method based on DP to facilitate data sharing in IoMT environments. The proposed method enables query execution on the data that are aggregated from IoT devices while optimizing the trade-off between utility and computational efficiency. Islam et al. [100] developed a practical framework to prevent privacy leakage in queries by adding carefully determined noise via the DP model for data sharing in IoT systems. The proposed framework secures IoT data by jointly using DP and blockchain while avoiding excessive perturbation of the data. To further clarify the use of PES in IoT, we demonstrate the workflow of DP in IoT data sharing/analytics in Figure 9. As shown in Figure 9, IoT device data are first collected at some central place, after which DP is applied (in some cases, users can give privacy preferences regarding their data processing, which are also taken into account while perturbing data) to make the data accessible to the data analyst. It is worth noting that data analysts obtain noisy answers in the case of queries, and perturbed data instead of real data in the case of data access, thereby preserving the privacy of IoT data.
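The query path of this workflow can be sketched with the standard Laplace mechanism for a counting query. This is a minimal illustration under stated assumptions and not the method of Zhang et al. [99] or Islam et al. [100]: the heart-rate values, the predicate, and the names `laplace_noise` and `dp_count` are hypothetical.

```python
import random


def laplace_noise(scale, rng):
    """Sample Laplace(0, scale) as the difference of two exponential variates."""
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def dp_count(values, predicate, epsilon, rng):
    """Answer a counting query with epsilon-DP via the Laplace mechanism."""
    true_count = sum(1 for v in values if predicate(v))
    sensitivity = 1.0  # adding or removing one record changes a count by at most 1
    return true_count + laplace_noise(sensitivity / epsilon, rng)


def is_elevated(bpm):
    """Hypothetical query predicate: heart rate above 100 bpm."""
    return bpm > 100


# Hypothetical heart-rate readings aggregated from wearables (true count is 4).
heart_rates = [62, 71, 88, 104, 97, 110, 79, 120, 66, 101]

rng = random.Random(0)
for eps in (0.1, 1.0):
    answer = dp_count(heart_rates, is_elevated, eps, rng)
    print(f"epsilon={eps}: noisy count = {answer:.2f}")
```

The noise scale is the sensitivity divided by ε, so the expected absolute error is about 10 for ε = 0.1 and about 1 for ε = 1.0, which is the privacy–utility trade-off the analyst experiences when receiving noisy answers.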

Table 2.

Analysis of the SOTA privacy engineering solutions for IoT settings/environments.

| Technique | Method | Strength(s) | Weakness(es) | Data Modality | Study |
|---|---|---|---|---|---|
| Anonymization | K-VARP | Reduced information loss | Cannot apply to fewer tuples | Table | Otgon et al. [101] |
| Anonymization | k-A clustering | Processing of hybrid data | Leaks privacy in skewed data | Table | Nasab et al. [102] |
| Anonymization | Clustering-based k-A + D-A | End-to-end privacy guarantees | Higher utility loss | Table | Onesimu et al. [103] |
| Anonymization | -anonymity model | Privacy protection in CS setting | Cannot be used on skewed data | Table | Li et al. [104] |
| Anonymization | -sensitive k-anonymity | Protection against SVA and CSA | Higher noise for gain in diversity | Table | Khan et al. [105] |
| Anonymization | Pk-A and temporal DP | Hybrid solution for IoT data | Limited applicability | Time series | Guo et al. [106] |
| Anonymization | k-A + clustering | Protects against identity disclosure | Neglects many critical factors | Sensor data | Liu et al. [107] |
| Anonymization | Dynamic anonymization by separatrices | Prevents attribute disclosure attacks | Prone to other privacy attacks | Table | Coelho et al. [108] |
| Anonymization | IGH + k-A | Protection for same data types | Cannot apply to dynamic scenarios | Hybrid | Mahanan et al. [109] |
| Anonymization | Probabilistic RS + IA | Solves privacy–time trade-off | Limited protection for LA, PA, BKA | Sensor data | Moqurrab et al. [110] |
| Differential Privacy | Local DP | Privacy protection of key-value IoT data | Analysis from a small ε is not done well | Table | Wang et al. [111] |
| Differential Privacy | Laplace + SAA | Privacy–accuracy trade-off | Amount of noise is high | Set value | Huang et al. [112] |
| Differential Privacy | Double randomizer + BT | Privacy protection in range queries | Higher computing complexity | Table | Ni et al. [113] |
| Differential Privacy | DPSmartCity | Data classification and robust PP | Considers limited no. of adversaries | IoT data | Gheisari et al. [114] |
| Differential Privacy | MPDP k-medoids | PUT optimization in IoMT data | Limited applicability to SD | Table | Zhang et al. [115] |
| Differential Privacy | LDP + Count Sketch | Preserves correlations and availability | More noise addition in intermediate steps | Key-valued | Wang and Lee [116] |
| Differential Privacy | EdgeSecureDP | 78% accuracy in DL environment | Higher ε may leak privacy | Graph | Amjath et al. [117] |
| Differential Privacy | Adaptive selection + SW | Protection of WD data | Lack of extensive validation | Streams | Zhao et al. [118] |
| Differential Privacy | Conventional DP | Privacy protection in queries | Prone to inference attacks | Table | Mudassar et al. [119] |
| Differential Privacy | LDP + iterative aggregation | Hides records from TPS | Lack of tests for multiattribute data | Table | Zhou et al. [120] |
| Encryption | Homomorphic encryption | Privacy of DO, CS, and DU in complex IoT environments | Suitable only for small-scale data and high cost | Sensor instances | Ren et al. [121] |
| Encryption | Fully HE | PP computations on encrypted data | Cannot resist inference attacks | ECG records | Ramesh et al. [122] |
| Encryption | Thumbnail PE | Protection for DL-based SRA | Poor results from large-image data | Image data | Yuan et al. [123] |
| Encryption | Searchable SE | Efficient and secure scheme | High overhead with MD queries | File data | Gao et al. [124] |

Abbreviations: K-VARP = K-anonymity for VARied data stream via Partitioning, k-A = k anonymous/anonymity, D-A = disassociation, SAA = sample and aggregation, BT = binary tree, CS = client server, SVA = sensitive variance attack, CSA = categorical similarity attack, DPSmartCity = Differential Privacy-Preserving Smart City, IGH = identical generalization hierarchy, RS = random sampling, IA = Instant_Anonymity, LA = linkage attack, PA = probabilistic attack, BKA = background knowledge attack, MPDP = multiple partition differential privacy, PUT = privacy–utility trade-off, SD = small dataset, DL = distributed learning, SW = square wave, WD = wearable device, TPS = third-party servers, DO = data owner, CS = cloud server, DU = data user, HE = homomorphic encryption, PE = preserving encryption, SRA = super-resolution attack, SE = symmetric encryption, MD = multidimensional, CP-ABE = ciphertext-policy attribute-based encryption, CPT = chosen plain-text.

Table 3.

Remaining part of Table 2.

| Technique | Method | Strength(s) | Weakness(es) | Data Modality | Study |
|---|---|---|---|---|---|
| Encryption | CP-ABE | Privacy guarantees in cloud setting | Protects against CPT attack | Attribute data | Li et al. [125] |
| Encryption | Identity-based encryption | Ensures privilege access for PP | Prone to inference and MS attacks | General data | Yan et al. [126] |
| Encryption | Identity-based encryption | PP with revocation functionality | Low PP when many nodes are C | Actuator data | Sun et al. [127] |
| Encryption | Catalan object | PP via complex ciphertext | Lacks real-life validation | General data | Saravcevic et al. [128] |
| Encryption | Functional encryption + ABC | End-to-end solution for PP | Requires data at centralized place | Sensor data | Sharma et al. [129] |
| Encryption | Chaos-based encryption | Strong PP in data transmission | PP for one data modality only | Biosignal data | Clemente et al. [130] |
| Encryption | Chaos-based encryption | PP via higher indistinguishability | High complexity and sensitive to IC | Image data | Jain et al. [131] |
| Blockchain | MC architecture | In-built privacy for many use cases | Poor utility and data access | Smart device data | Ali et al. [132] |
| Blockchain | Smart contract | PP and better control on devices | Higher storage and update costs | Smart device data | Loukil et al. [133] |
| Blockchain | Data certification | Protection of car mileage data | Heavy reliance on central databases | Sensor data | Chanson et al. [134] |
| Blockchain | ACOMKSVM + ECC | PP in learning process | Prone to inference and re-construction | Image data | Le et al. [135] |
| Blockchain | Chameleon hash functions | Protection against diverse attacks | Poor against Sybil attack | General data | Xu et al. [136] |
| Blockchain | EOS blockchain | Pipeline for securing SH data | No support for analytics or DM | Smart home data | Tchagna et al. [137] |
| Blockchain | BPRPDS | Protection against UP of DUs | Limited support for DM and PS | General IoT data | Li et al. [138] |
| Blockchain | Secret sharing | PP in IoT data sharing scenarios | Prone to data leakage and UP | Node data | Li et al. [139] |
| Blockchain | SecPrivPreserve | Privacy, security, and integrity | High overheads and operations | General IoT data | Padma et al. [140] |
| Blockchain | Smart contract + HE + ZKP | Tamper-proof data sharing | High computing complexity | IoT nodes’ data | Ma et al. [141] |
| SMC | FairplayMP framework | Solves PUT in personal data | Data are divided into various pieces | Sensor data | Tso et al. [142] |
| SMC | Paillier and ElGamal CS | Prevent insider privacy attacks | Very high data processing costs | Sensor data | Yi et al. [143] |
| SMC | PrivacyProtector + SW-SSS | Strong protection against C-L-A | Relies on multiple servers | Log data | Lou et al. [144] |
| SMC | Bloom filter + SMC + BC | Secure data sharing in IoT devices | Poor scalability and efficiency | IoT node data | Ma et al. [145] |
| SMC | Shamir’s secret sharing | PP in aggregated IoT data | Prone to SA prediction attacks | Sensor data | Goyal et al. [146] |
| SMC | SMC + SS | Support for different aggregations | Difficult to choose security parameters | IIoT node data | Liu et al. [147] |
| SMC | Federated MPC | PP in diagnostic applications + SMI | Poor results from imbalanced data | X-ray data | Siddique et al. [148] |
| SMC | MPMQSMT component | PP of identity, location, and health data | Malicious parties can corrupt the data | General IoT data | Bao et al. [149] |

Abbreviations: PP = privacy protection, MS = model serialization, C = corrupted, ABC = attribute-based cryptography, IC = initial condition, MC = modular consortium, ACOMKSVM = Ant Colony optimization with Multi Kernel Support Vector Machine, ECC = Elliptical Curve cryptosystem, SH = smart home, DM = data mining, BPRPDS = blockchain-based privacy-preserving and rewarding private data-sharing scheme, UP = unauthorized profiling, DUs = data users, PS = personalized service, ZKP = zero-knowledge proof, CS = crypto systems, SW-SSS = Slepian–Wolf-coding-based secret sharing scheme, BC = blockchain, SMC = secure multi-party computation, SA = sensitive attribute, SMI = sensitive medical information, MPMQSMT = multi-party multi-query set membership testing.

Figure 9.

Workflow of the IoT data sharing/analytics by using the DP model (partially adopted from Islam et al. [100]). The * in this figure simply means another type of request (e.g., requesting data instead of posing a query).

Apart from the PES analyzed in the above tables, PbD solutions have been recently adopted in IoT and/or IIoT domains to provide robust privacy guarantees and data usability [150]. To this end, federated learning is one of the PbD solutions having numerous applications in IoT environments [151]. Apart from FL, federated analytics (FA) and split learning (among others) are also regarded as PbD solutions because they do not move the data but instead transfer knowledge and/or the model [152,153,154]. To the best of our knowledge, our work here is the first to comprehensively explore the role of PbD solutions in the IoT domain. Specifically, we analyzed different studies that employed these solutions for privacy protection in IoT settings/environments. Table 4 summarizes various SOTA studies that employed PbD solutions for privacy preservation in IoT applications/systems. In Table 4, we compare SOTA PbD-related studies based on four criteria: strength, weakness, modality, and method used. In some studies, explicit details about the datasets were not given, or the solution was analyzed in a testbed, so we list general IoT data in the modality column. A high-level taxonomy of PbD and PES, along with their techniques considered in this work, is shown in Figure 10.

Table 4.

Analysis of SOTA PbD solutions for IoT settings/environments.

Figure 10.

A high-level taxonomy of PbD and PES methods, along with representative techniques.

The above classification of PP methods is based on basic differences between the two categories, such as data mobility, accessibility, and processing. PbD methods such as federated learning and split learning are designed with privacy as a fundamental architectural concept. In these methods, data stay local, and only partial computations or model updates are shared with the server. PES methods, on the other hand, including anonymization and DP, alter the data while still allowing them to be shared with third parties. This difference is significant, as it allows particular methods to be chosen depending on the use case or application scenario in the real-world IoT setting. Table 4 shows that many studies have explored PbD solutions to guarantee the privacy of sensitive data curated and/or exchanged in IoT environments/settings. In these studies, diverse data modalities were considered, and accordingly, relevant privacy mechanisms were explored. In the analysis presented in Table 4, we considered the latest PbD methods that provide privacy guarantees in IoT systems and/or services. Since most of the PbD-related studies adopted some methods from PES, we report only a few studies in this analysis. However, the next section covers this aspect and thoroughly reports the synergies within the PES and PbD categories as well as between them. The analysis presented here can contribute to understanding the mainstream PbD methods and their methodological details in the context of IoT data privacy.
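The idea that data stay local while only model updates reach the server can be sketched with a toy version of federated averaging. This is a simplified illustration under stated assumptions, not the implementation of any study in Table 4: the model is a single scalar weight, the client datasets are hypothetical, and the names `local_update` and `federated_average` are chosen only for this example.

```python
def local_update(w, data, lr=0.01, epochs=5):
    """Run gradient descent on one client's private (x, y) pairs for y ~ w * x."""
    for _ in range(epochs):
        grad = sum(2 * (w * x - y) * x for x, y in data) / len(data)
        w -= lr * grad
    return w


def federated_average(client_weights, client_sizes):
    """Server step: average client weights, weighted by local sample counts."""
    total = sum(client_sizes)
    return sum(w * n for w, n in zip(client_weights, client_sizes)) / total


# Hypothetical local datasets; they never leave the clients.
clients = [
    [(1.0, 2.0), (2.0, 4.1)],
    [(3.0, 5.9), (4.0, 8.2), (5.0, 9.9)],
]

w_global = 0.0
for _ in range(50):
    # Each client trains locally, starting from the current global weight.
    updates = [local_update(w_global, data) for data in clients]
    # Only the updated weights, not the raw readings, reach the server.
    w_global = federated_average(updates, [len(d) for d in clients])

print(round(w_global, 2))
```

The server sees only the per-client weights in `updates`. Those updates can still leak information about local data, which is one reason PbD methods are often combined with PES techniques such as DP.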

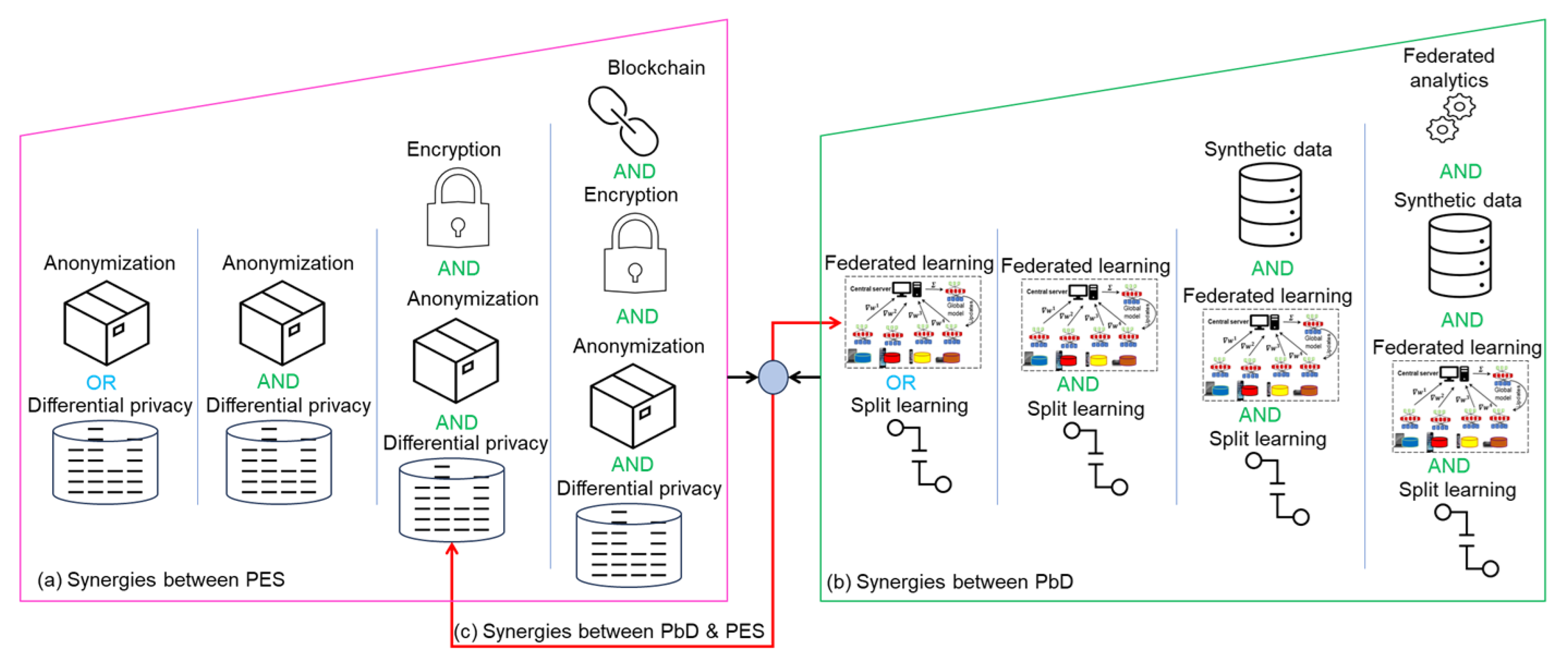

6. Synergy of Multiple Privacy-Preserving Solutions for Privacy Protection of IoT Data

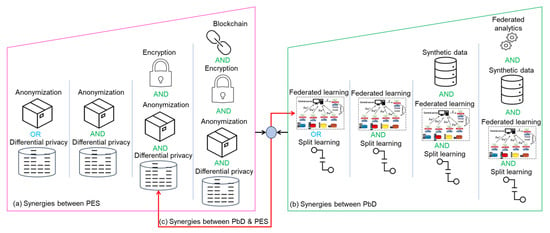

In the past, stand-alone techniques like anonymization, DP, federated learning, etc., were used to protect the privacy of data in IoT systems/services. Of late, many hybrid methods (i.e., synergies between different PP methods) have been developed to meet the stringent privacy requirements in IoT environments/settings. In these hybrid methods, some mechanisms from each technique are combined into a unified framework to meet different privacy-versus-utility requirements. For instance, in some settings, anonymization and pseudonymization are jointly used to protect the privacy of data in the IoT [175]. Similarly, DP has been combined with blockchain to protect the privacy of sensitive data in IoT environments [176]. In a nutshell, there are synergies between different PES and PbD solutions to ensure the privacy of IoT data. In this work, we highlight this aspect for the first time and discuss SOTA literature to provide a systematic analysis of such methods. Figure 11 summarizes the synergies between the PES and PbD methods discussed in the previous section, as well as the synergies within each category. In the literature, most studies combined two methods to yield robust privacy guarantees. However, some recent methods have employed more than two PP methods in diverse IoT scenarios.

Figure 11.

Overview of synergies between PES and PbD methods in the context of the IoT.

Figure 11 shows three types of synergies: among PES methods, among PbD methods, and between PES and PbD methods. In Figure 11, the first levels in parts (a) and (b), separated with OR, indicate stand-alone solutions. In contrast, AND between methods indicates simultaneous use. These synergies were created to meet diverse privacy requirements or to resolve the privacy/utility trade-off. For instance, not all data are sensitive, and therefore, different mechanisms can be combined when considering the sensitivity of the data. In some cases, different users can have different privacy preferences, and therefore, different mechanisms under the same use case (or service scenario) might be required. Next, we summarize recent developments in hybrid methods in the context of the IoT for each type listed in Table 5. We contrast previous developments on four key criteria: the number of methods combined, the methods’ names, the purpose for the synergy, and application scenarios. In some studies, the application scenario was not explicitly reported, and for those studies “General scenarios” appears in the Scenario(s) column. The analysis presented in Table 5 paves the way to understanding the latest developments made to combine different methods from the same category to ensure the privacy of confidential data in IoT settings. It is worth noting that when different methods are integrated, they often lead to diverse technical, implementation, and performance challenges. For example, increased computing cost, implementation complexity, possible compatibility problems between many approaches, and the difficulty in balancing privacy–utility trade-offs when several methods are merged constitute the main obstacles to such synergy. Furthermore, these hybrid solutions could bring fresh risks at integration points and usually require additional knowledge to be implemented properly in real-world scenarios.

Table 5.

Analysis of SOTA synergized privacy solutions for IoT settings/environments.

In Table 5, note that combinations of two or more methods have been extensively used within both the PES and PbD categories for privacy protection in different scenarios. Note that the analysis in Table 5 presents synergies only within the PES and PbD categories, and fewer synergies are reported for PbD because PbD methods are relatively new. In the PES category, synergies of up to four different methods accomplish different performance objectives along with privacy. Recent research has shed light on creating synergies between the different methods to ensure robust privacy guarantees in the IoT [200,201]. Hence, the number of synergies within PbD methods is likely to grow in the coming years. In addition, many synergies are possible by combining PES and PbD methods to strengthen privacy against diverse attacks in IoT settings/environments [202]. Table 6 summarizes the SOTA privacy-preserving methods that have employed both PES and PbD to provide robust privacy guarantees in IoT settings. The comparison criteria are the same as in Table 5, but the methods are from the two different categories.

Table 6.

Analysis of synergized (PES + PbD) privacy solutions for IoT settings.

Table 6 shows that many studies have explored ways to combine different methods to accomplish privacy guarantees along with other objectives (e.g., scalability, utility, overhead, and convergence). For example, FL has been widely integrated with many diverse privacy protection methods in the PES category. Similarly, DP in the PES category has been widely integrated with various PbD methods. In addition, these studies were proposed for generic scenarios as well as specific ones (e.g., healthcare, parking, telemedicine, industrial automation, crowd sensing). The main purpose of each synergy was to ensure stringent privacy for diverse data resulting from IoT settings/environments. In this analysis, we limited ourselves to established research; consequently, the largest synergies we found combine up to four methods. Although all these studies contributed to achieving the desired goals, the complexity of most of the methods was high due to the many complex operations. Also, some studies were not validated in different contexts and/or scenarios, offering limited adoption choices in real-world cases. In some cases, the emphasis was on privacy protection only, which can lead to higher utility loss in realistic scenarios. The analysis presented in this section contributes to understanding the synergies within the PES and PbD categories as well as between them. In the future, more such synergies are expected to ensure robust privacy guarantees for diverse types of data from IoT devices and environments.
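One common form of the FL plus DP synergy is to bound each client's influence by clipping its model update and then add Gaussian noise to the aggregate. The sketch below illustrates that pattern only; it is not taken from any study in Table 6, the parameter names `max_norm` and `noise_multiplier` and the update vectors are hypothetical, and it does not perform the privacy accounting needed to state a formal (ε, δ) guarantee.

```python
import math
import random


def clip(update, max_norm):
    """Scale an update vector so that its L2 norm is at most max_norm."""
    norm = math.sqrt(sum(u * u for u in update))
    factor = min(1.0, max_norm / norm) if norm > 0 else 1.0
    return [u * factor for u in update]


def noisy_aggregate(updates, max_norm, noise_multiplier, rng):
    """Average clipped client updates after adding Gaussian noise to their sum."""
    clipped = [clip(u, max_norm) for u in updates]
    n = len(clipped)
    dim = len(clipped[0])
    sigma = noise_multiplier * max_norm  # noise calibrated to the clipping bound
    noisy_sum = [
        sum(u[i] for u in clipped) + rng.gauss(0.0, sigma) for i in range(dim)
    ]
    return [s / n for s in noisy_sum]


# Hypothetical model updates from three IoT clients (illustrative values only).
client_updates = [[3.0, 4.0], [0.3, 0.4], [-0.6, 0.8]]

rng = random.Random(0)
aggregate = noisy_aggregate(client_updates, max_norm=1.0, noise_multiplier=0.5, rng=rng)
print([round(a, 3) for a in aggregate])
```

Clipping limits how much any single device can shift the global model, and a larger `noise_multiplier` strengthens privacy at the cost of a noisier aggregate, which mirrors the utility loss and added complexity noted above for hybrid methods.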

The thematic synthesis of the SOTA hybrid solutions given in Table 5 and Table 6 in terms of overarching trends, common challenges, and innovation gaps is given below.

- Overarching trends: (i) The synergy of two methods has been well investigated in the current literature, while the synergy of more than two methods has received relatively less attention in IoT data privacy. (ii) DP and FL have been widely integrated with many other methods in the PES and PbD categories. (iii) Most hybrid solutions have been designed for generic IoT applications/systems, whereas hybrid PP solutions for specific domains/applications remain relatively less explored. (iv) Most hybrid solutions are designed to accomplish higher privacy guarantees at the expense of data utility or other performance metrics.

- Common challenges: (i) Most hybrid methods have a very high computing cost owing to a large number of complex operations, particularly when end-to-end privacy guarantees are desirable. (ii) The sequential application of different PP methods in a pipeline induces heavier distortion/modifications in the data. (iii) Most hybrid methods cannot provide end-to-end privacy guarantees due to configuration/parameter differences. (iv) Hybrid solutions involving complex techniques like encryption/SMC drain energy and compute on tiny/resource-constrained IoT nodes/devices. (v) Formal verification of hybrid PP solutions is difficult due to different data representations/transformations.

- Innovation gaps: (i) Most hybrid methods are tested with static datasets that have already been aggregated, lacking real-time applicability. (ii) Parameter values in hybrid solutions are often chosen manually, leading to a less favorable resolution of the privacy–utility trade-off. (iii) Though hybrid methods offer more robust protection than a single method, resistance against diverse attacks cannot be accomplished in most cases due to unrealistic assumptions or narrow threat models. (iv) Most hybrid methods are integrated in their naive form without proper customization, offering limited protection against active adversaries.

7. Quantum-Based Privacy-Preserving Solutions for IoT Data Privacy

With the advent of quantum computers, the conventional PP techniques or protocols used in various IoT environments/settings may not remain secure. Hence, the latest research has explored ways to upgrade classical PP methods with quantum-based solutions because they provide stronger resistance to privacy attacks. In the IoT domain, many quantum-safe methods have been developed that offer more rigorous privacy guarantees than classical methods while keeping overhead low [228,229]. Recent research has addressed quantum threats to IoT privacy and security. Alam et al. [230] examined quantum computing’s impact on traditional encryption like RSA, introducing post-quantum cryptographic alternatives, including lattice-based and code-based approaches. Sheetal and Deepa [231] explored general IoT security measures focusing on authentication and access control, while Irshad et al. [232] proposed a scalable and secure cloud architecture integrating post-quantum cryptography with blockchain for IoT environments. Dileep et al. [233] demonstrated privacy protection in the edge-based IoT by using quantum-enhanced encryption that combines an alternating quantum walk with the Advanced Encryption Standard (AES) for healthcare applications. Hasan et al. [234] used quantum key distribution (QKD) with the Paillier cryptosystem for a smart metering system, showing improved performance against quantum threats. Malina et al. [235] surveyed privacy-enhancing technologies for the IoT with an emphasis on post-quantum cryptography implementation and included case studies of connected vehicles. Li et al. [236] constructed a privacy-preserving query architecture that remains secure even with untrusted devices, and Fernández-Caramés [237] reviewed post-quantum cryptosystems for resource-constrained IoT devices in critical applications. These studies collectively show the progression from theoretical quantum-resistant approaches to practical implementations across various IoT domains, addressing the unique security challenges of IoT systems in preparation for the quantum computing era. Table 7 presents a comparative analysis of recent quantum-based privacy-preserving solutions for IoT data security, highlighting their key features and performance characteristics.

Table 7.

Analysis of the recent quantum-based privacy-preserving solutions for IoT data privacy.

As shown in Table 7, quantum-based PP methods have been integrated with IoT systems to provide robust privacy guarantees while meeting other performance objectives. However, most of the studies in this line of work are theoretical or provide only the architecture without implementation. In addition, validation in real-world cases is lacking in most of the studies due to the absence of advanced computing infrastructures (i.e., quantum computers). In the future, it will be vital to explore this line of work to secure IoT systems against diverse privacy attacks while satisfying the desired constraints or service requirements.

8. Lessons Learned, Current Challenges, and Future Research Directions

Through our extensive analysis of the literature, we found that privacy protection for IoT settings/environments is undeniably a hot research topic. Many reviews, articles, and case studies have been published on IoT data privacy involving different modalities (images, time series, tables, text, etc.), settings (centralized, decentralized, and hybrid), and applications (facial recognition, recommendation, parking, data sharing, knowledge sharing, etc.). Some studies are devised to protect against one type of privacy attack (e.g., data reconstruction), while other studies offer protection against multiple types of privacy attack (e.g., data reconstruction, inference, model stealing). Some studies optimize the trade-off between privacy and utility in IoT settings. In contrast, others prioritize privacy over utility, or vice versa. Some studies use stand-alone methods, while others combine two or more privacy methods to accomplish privacy preservation in the IoT. In addition, some studies try to accomplish multiple objectives (lower latency, less overhead, reduced energy, etc.) along with strong privacy guarantees. Lastly, some studies are validated on real-life datasets while others are validated through testbeds. Among the survey papers, the role of one or two privacy-preserving techniques is extensively discussed, along with recent developments in that line of work [246]. Some case studies highlighted the importance of privacy preservation in IoT environments by using one or more privacy protection techniques [247]. Other studies highlighted the significance of privacy protection in a narrow domain like smart TVs [248]. Recently, some studies have investigated communication security aspects such as TLS interception, replay attacks, and device authentication in the IoT domain [249]. The authors devised various practical tests to verify the resilience of IoT devices to these attacks, underscoring the key privacy/security issues in IoT deployments. In a nutshell, there are diverse studies on IoT privacy covering many important aspects of different use cases or scenarios.

This paper provides extensive information about privacy preservation topics in the IoT by summarizing the latest SOTA studies. In Section 4, we summarized recent SOTA methods along with methodological details for two IoT settings: on-device and cloud computing. We found many studies on cloud-based IoT settings, but relatively few on on-device settings. In Section 5, we analyzed the PES and PbD methods along with their strengths and weaknesses in terms of privacy protection. We found that PES has been investigated thoroughly, whereas PbD is relatively new and leaves room for improvement. We identified four or five broad categories for each. For PES, we focused on anonymization, differential privacy, encryption, SMC, and blockchain. For PbD, we covered federated learning, split learning, zero-knowledge proofs, and synthetic data. In Section 6, we analyzed the synergies between PP methods to provide a deep analysis of the latest methods. Through analysis of the literature, we found that many methods have been combined within PES (e.g., differential privacy and anonymization) and within PbD (federated learning, split learning, etc.). Furthermore, many studies combined methods from both PES and PbD (e.g., federated learning plus differential privacy, or differential privacy plus split learning) to provide strong privacy guarantees. The analysis presented in that section contributes to understanding the hybrid solutions that have been devised to meet stringent privacy requirements and to compensate for the weaknesses of the stand-alone methods. In Section 7, we summarized the quantum-based privacy-preserving methods that have recently been proposed to achieve stronger defense against sophisticated privacy attacks. In each section, we offered details on different components (e.g., modality, application scenario, techniques, and/or method names) to provide a comprehensive understanding of privacy topics in the IoT.
Unlike earlier studies that usually concentrated on particular privacy techniques in isolation [250,251,252], our work offers a dual-categorization framework that methodically analyzes privacy solutions across both on-device and cloud computing scenarios, while also separating PbD from PES. Our study differs from earlier analyses in the following respects.

- We present the first thorough mapping of privacy-preserving techniques across these dual categories, specifically for IoT contexts.

- We specifically examine the synergies among multiple privacy approaches, stressing how combinations of techniques (e.g., DP + blockchain + FL) handle difficult privacy issues in IoT environments.

- We include newly developed quantum-based privacy techniques, which have not received much attention in previous works.

- For IoT environments in particular, we provide thorough comparison tables analyzing strengths, shortcomings, data modalities, and application areas of different privacy techniques.

The knowledge consolidated in this paper offers a clear understanding of the research dynamics and the valuable literature on privacy for diverse IoT systems and applications.
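To make one of the hybrid patterns noted above (federated learning plus differential privacy) more concrete, the following is a minimal sketch, not the method of any surveyed study. It assumes each device sends a model update as a plain list of floats, and it uses the common clip-then-add-Gaussian-noise recipe on the server side. The function names, `clip_norm`, and `noise_multiplier` are illustrative assumptions, and privacy accounting (deriving the resulting privacy budget) is deliberately omitted.

```python
import math
import random
from typing import List

Vector = List[float]


def clip_update(update: Vector, clip_norm: float) -> Vector:
    """Scale an update down so that its L2 norm is at most clip_norm."""
    norm = math.sqrt(sum(x * x for x in update))
    factor = min(1.0, clip_norm / norm) if norm > 0 else 1.0
    return [x * factor for x in update]


def dp_federated_average(updates: List[Vector], clip_norm: float,
                         noise_multiplier: float, rng: random.Random) -> Vector:
    """Average clipped client updates and add Gaussian noise to each coordinate.

    Assumption (illustrative only): the noise standard deviation is
    noise_multiplier * clip_norm / number_of_clients.
    """
    clipped = [clip_update(u, clip_norm) for u in updates]
    n = len(clipped)
    dim = len(clipped[0])
    sigma = noise_multiplier * clip_norm / n
    return [sum(u[i] for u in clipped) / n + rng.gauss(0.0, sigma)
            for i in range(dim)]


# Hypothetical updates from three IoT devices (two model parameters each).
client_updates = [[3.0, 4.0], [0.1, -0.2], [-0.5, 0.5]]
aggregate = dp_federated_average(client_updates, clip_norm=1.0,
                                 noise_multiplier=1.1, rng=random.Random(0))
print(aggregate)
```

Clipping bounds the influence of any single device on the aggregate, and the added noise masks what remains of that influence. A larger `noise_multiplier` strengthens protection at the cost of a less accurate aggregate, which is the privacy/utility tension discussed throughout this section.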

Current challenges in the field: PP in IoT systems and applications has become a hot research area. However, despite many developments, it is still challenging to resolve all kinds of privacy issues in a fine-grained manner. Below, we pinpoint crucial challenges in PP methods for IoT settings.

- Most of the existing PP methods are ad hoc in nature: they do not classify IoT data based on sensitivity, and therefore apply identical protection to entire segments of the data. However, not all data are sensitive in practice, and classifying data in order to reduce the number of anonymity operations or to lessen computing overhead remains challenging in IoT services and/or applications.

- Most of the existing PP methods offer resistance against a limited array of privacy attacks, and they cannot offer the required safeguards in some IoT scenarios, such as on-device IoT settings.

- Most PP methods employ different anonymity operations at different stages of the IoT application, leading to poor data utility and delays in knowledge derivation.

- In IoT systems/applications, devices can generate data in different formats and as continuous streams; however, the current methods cannot adjust their parameters and/or configurations in response to these changes, which can lead to inconsistent privacy protection.

- The current PP methods used in IoT settings are very restrictive, meaning they alter the data properties too much by adding excessive noise or applying other kinds of operations. Highly modified data cannot support the required analytics, and can negatively impact insights and data-driven outcomes.

- Existing PP solutions often combine multiple methods in order to counter only one or two types of privacy attack. While this synergy is useful, it can lead to high computing costs due to the extensive operations involved.

- Most PP methods do not scale well with the increasing number of devices, and they lack validation in realistic IoT cases, which can result in higher overhead and/or poor privacy guarantees in real-world deployments.

- PP in PbD methods comes with different trade-offs, and privacy protection often leads to other kinds of technical problems (e.g., poisoning attacks, prolonged convergence, model stealing, or backdoor attacks).

- Most PP methods work in a black-box manner and lack transparency in terms of data processing, leaving users with little control over, and little insight into, how their data are handled in IoT environments. At present, there is a lack of intuitive privacy interfaces, personalized PP data-processing methods, privacy-preference-aware learning models, etc.

- Most PP methods do not provide robust privacy guarantees for the entire data lifecycle (collection → processing → storage → sharing). Hence, there is a risk of private information leakage at different stages/phases of the data lifecycle.

- Most of the PP methods used in IoT settings are general, which means they cannot offer a concrete solution for privacy preservation in diverse domains (e.g., healthcare, finance, etc.). Due to heterogeneous data and domain requirements, it is challenging to adapt current PP solutions to diverse domains.
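The challenge of excessive noise listed above can be illustrated with the Laplace mechanism from differential privacy. The sketch below is a minimal example under stated assumptions: sensor readings are bounded in a known range, the number of readings is public, and the released statistic is their mean. The function names and the simulated heart-rate data are hypothetical. The noise scale is the query sensitivity divided by the privacy budget epsilon, so a smaller epsilon (stronger privacy) directly produces a larger expected error.

```python
import random
from typing import List


def laplace_scale(sensitivity: float, epsilon: float) -> float:
    """Scale b of the Laplace noise for an epsilon-DP query: b = sensitivity / epsilon."""
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    return sensitivity / epsilon


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Draw one Laplace(0, scale) sample as the difference of two exponentials."""
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def noisy_mean(values: List[float], lower: float, upper: float,
               epsilon: float, rng: random.Random) -> float:
    """Release the mean of bounded readings with Laplace noise.

    Assumption: the number of readings is public, so replacing one reading
    changes the mean by at most (upper - lower) / n.
    """
    clipped = [min(max(v, lower), upper) for v in values]
    sensitivity = (upper - lower) / len(clipped)
    scale = laplace_scale(sensitivity, epsilon)
    return sum(clipped) / len(clipped) + laplace_noise(scale, rng)


# Hypothetical heart-rate readings (beats per minute) from 50 wearables.
rng = random.Random(42)
readings = [rng.uniform(55.0, 110.0) for _ in range(50)]
true_mean = sum(readings) / len(readings)

for epsilon in (0.01, 0.1, 1.0):
    errors = [abs(noisy_mean(readings, 40.0, 180.0, epsilon, rng) - true_mean)
              for _ in range(2000)]
    print(f"epsilon={epsilon}: mean absolute error = {sum(errors) / len(errors):.3f}")
```

Because the expected absolute value of Laplace noise equals its scale, dividing epsilon by ten multiplies the expected error by ten. A fixed, overly conservative epsilon therefore distorts every released statistic regardless of how sensitive the underlying data actually are.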

Apart from the above-cited challenges, consent management for data collection and compliance with regulatory requirements remain very challenging. Privacy preservation is required for different data items (generated content, processed content, and output), which is not the case in conventional data-handling scenarios. Furthermore, an AI model trained on sensitive IoT data can capture unnecessary information that can lead to data reconstruction or privacy-endangering inferences. Lastly, IoT devices can generate a massive amount of data, but not all the data are sensitive in terms of privacy. An in-depth understanding and classification of data can help remove unnecessary data, or it can improve utility by making fewer changes to the less sensitive data. However, it is challenging to classify data based on sensitivity and to learn the required information from only a small segment of the data rather than from the entire body of big data.
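The idea of classifying data by sensitivity and modifying the less sensitive data less can be sketched as follows. This is an illustrative example only: the three tiers, the field-to-tier policy, the field names, and the chosen operations (keyed pseudonymization for highly sensitive fields, range generalization for less sensitive ones) are all assumptions, not a scheme taken from the surveyed literature. Keyed hashing is a form of pseudonymization and does not by itself amount to anonymization.

```python
import hashlib
import hmac
from enum import Enum
from typing import Any, Dict, Tuple


class Tier(Enum):
    HIGH = "highly sensitive"
    LOW = "less sensitive"
    NONE = "not sensitive"


# Hypothetical policy mapping field names to sensitivity tiers.
POLICY: Dict[str, Tier] = {
    "patient_id": Tier.HIGH,
    "heart_rate": Tier.LOW,
    "room_temp": Tier.NONE,
}


def pseudonymize(value: Any, key: bytes) -> str:
    """Replace a value with a truncated keyed hash (HMAC-SHA256)."""
    digest = hmac.new(key, str(value).encode("utf-8"), hashlib.sha256).hexdigest()
    return digest[:16]


def generalize(value: int, width: int = 10) -> str:
    """Replace a number with the range of the given width that contains it."""
    low = (int(value) // width) * width
    return f"{low}-{low + width - 1}"


def protect(record: Dict[str, Any], policy: Dict[str, Tier],
            key: bytes) -> Tuple[Dict[str, Any], int]:
    """Apply tier-specific protection and count the modified fields."""
    protected: Dict[str, Any] = {}
    operations = 0
    for field, value in record.items():
        tier = policy.get(field, Tier.HIGH)  # unknown fields get the strictest tier
        if tier is Tier.HIGH:
            protected[field] = pseudonymize(value, key)
            operations += 1
        elif tier is Tier.LOW:
            protected[field] = generalize(value)
            operations += 1
        else:
            protected[field] = value  # left untouched to preserve utility
    return protected, operations


record = {"patient_id": "p-001", "heart_rate": 72, "room_temp": 21.5}
protected, operations = protect(record, POLICY, key=b"demo-key-not-for-production")
print(protected, operations)
```

In this sketch, only two of the three fields are modified, whereas a uniform policy would transform all of them. Defaulting unknown fields to the strictest tier is a conservative design choice. The hard part identified in the text, namely deciding the tier of each data item reliably, is represented here only by a static lookup table.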

Future research directions: We outline twelve promising directions for future research on privacy preservation in the IoT.

- PP methods for on-device settings: In recent years, many lightweight AI models have been developed for resource-constrained IoT devices. Although these models have various guardrails, there is still a chance of privacy disclosure via side-channel attacks or device behavior analysis. Furthermore, using privacy methods like encryption can increase computing overhead on tiny devices. Developing lightweight privacy protection methods that prevent personal information leaks from IoT devices is therefore a promising research area.

- Development of quantum-based privacy methods: Recently, quantum computing methods have revolutionized the privacy domain, and can provide promising solutions for privacy protection, particularly in intelligent infrastructures [235]. These methods have the potential to address the technical deficiencies in conventional/classical privacy protection methods while protecting user-sensitive information [253]. To this end, devising quantum-based privacy methods for diverse IoT settings, or combining quantum-based methods with conventional ones to protect sensitive data, are very promising areas of research.

- Privacy–utility trade-off optimization in PbD methods: PbD methods such as federated learning, split learning, and federated analytics present promising solutions to address privacy issues in the IoT as well as in general settings. However, balancing privacy and utility with these methods is difficult due to their distributed nature and the risk of poisoning attacks [254,255]. To this end, devising practical methods that can optimize the privacy/utility trade-off despite poisoning attacks or data variability/heterogeneity is a compelling topic for future work.

- Development of less restrictive privacy methods: Most of the privacy methods used in IoT settings are restrictive, meaning they enforce hard parameters on the data to accomplish privacy [256]. However, restrictive parameters can alter the structure of the data too much, and utility can be seriously compromised. Furthermore, heavily distorted data can induce other problems, like bias in downstream tasks. To address this, developing adaptive privacy methods that can reduce the number and extent of modifications in the data without compromising privacy is an active area of research.

- Computing overhead reduction in synergized privacy methods: As discussed in earlier parts of this paper, there are many synergies between PES and PbD methods. This trend is expected to grow because privacy requirements keep increasing. However, in synergized methods, many operations are performed to transform data from one form to another, leading to extensive computing overhead [257]. It is vital to devise lightweight hybrid methods that combine some operations from each category to reduce overhead while offering privacy guarantees. Furthermore, classifying data with respect to privacy risks and eliminating redundant operations is a promising research area.

- Development of personalized privacy methods for diverse IoT settings/scenarios: Most of the previous methods treat all data resulting from IoT devices as risky. However, in practice, not all data are risky, and different data may require different processing. Personalized privacy methods can strike a balance between privacy and utility, which is not the case with methods that treat all data as risky. To that end, devising practical methods that can classify data based on sensitivity and that ensure the required protection for each type (highly sensitive, less sensitive, and not sensitive) is a promising area of research.

- Development of privacy methods with end-to-end privacy guarantees: In IoT-based systems, privacy needs to be ensured at different stages, such as input, processing, and output. Also, privacy methods may differ depending upon the setting and data modality [59]. It is vital to determine the most suitable methods that contribute to privacy guarantees at each stage but with the least computing overhead. In line with such work, developing new methods that can guarantee data privacy when data are stored, moved, and/or transferred from one environment to another is a promising research area.

- Integration of zero trust architectures with privacy-enhancing technologies: In recent years, the zero trust architecture (ZTA) has been extensively used to address security concerns in IoT devices, and has been promoted by John Kindervag and companies like Google. Recently, some privacy-preserving techniques, such as blockchain, have been integrated with the ZTA to provide robust privacy guarantees when large-scale data interactions are desirable [258]. Exploring the role of diverse privacy methods (differential privacy, anonymization, SMC, etc.) in the ZTA is a promising direction for future research.

- Explainable AI and transfer learning for PP IoT analytics: The IoT produces a large amount of sensitive data that must still be utilized, and explainable AI (XAI) can help make privacy mechanisms transparent and understandable to end users and regulatory bodies [259]. However, future work should find ways to provide well-founded explanations without weakening the strong guarantees against information disclosure in IoT systems. Moreover, transfer learning mechanisms may allow privacy models built in one IoT domain [260] (e.g., smart homes) to be reused in new or altered IoT environments (e.g., the industrial IoT) to obtain a good trade-off between privacy protection and analytical usefulness.

- Collaborative privacy frameworks for cross-domain IoT ecosystems: IoT ecosystems often span multiple domains with different vendors and regulatory jurisdictions, leading to high infrastructure complexity [261]. To cope with this complexity, collaborative privacy frameworks should be developed. Such frameworks should include standards for data sharing along with privacy guarantees, anonymization methods for IoT observation imagery, as well as models to help users abide by shifting privacy laws across jurisdictions [262]. In the near future, developing collaborative privacy frameworks that can protect against internal and external adversaries in complex IoT systems is an emerging avenue for research.