Efficiently Exploiting Multi-Level User Initial Intent for Session-Based Recommendation

Abstract

1. Introduction

- In this paper, we propose a new method named Exploring Multi-Level User Initial Intent (EMUI), which further explores the role of user initial intent in the performance of session-based recommendation (SBR).

- We propose the multi-level initial intent generation module (MIGM). This module obtains a more discriminative initial intent representation by mining multi-level user initial intent. In addition, a contrastive learning task is constructed to make full use of the user initial intent at each level.

- We design the interest matching module (IMM). This module retains the part of the user’s initial intent that matches the user’s dynamic interests, which enhances session recommendation.

- Extensive experiments on three datasets demonstrate that our model outperforms state-of-the-art approaches.

2. Related Works

2.1. Traditional Methods

2.2. Deep Learning-Based Methods

2.3. Self-Supervised Learning in RS

3. Methodology

3.1. Problem Statement

3.2. The Proposed EMUI

3.2.1. Item Features Learning Based on HCGN

3.2.2. User Initial Intent Learning

3.2.3. General Intent Learning

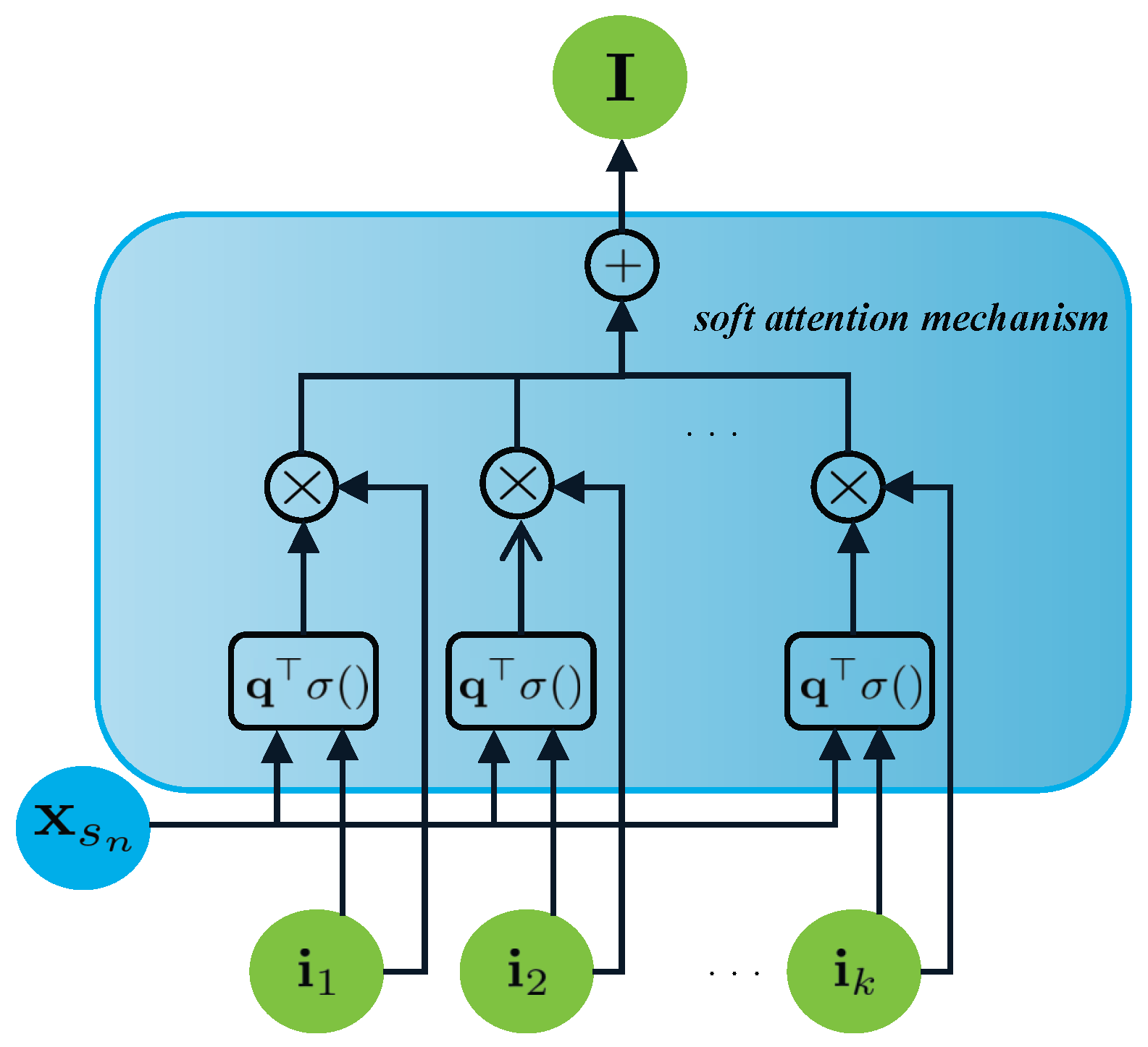

3.2.4. User Intent Dynamic Fusion

3.2.5. Make Recommendations

4. Experiments

- RQ1: How does the performance of EMUI compare with other baseline methods?

- RQ2: How do variants of EMUI affect the final recommendation?

- RQ3: How does the depth of EMUI and MIGM affect the model performance?

- RQ4: How do different fusion methods of user general and initial intent affect the model performance?

- RQ5: How do the hyper-parameters affect the performance of EMUI?

4.1. Experimental Settings

4.1.1. Datasets

4.1.2. Competing Methods

- Item-KNN [15] is a collaborative filtering (CF)-based method that recommends items similar to those the user has clicked, ranked by cosine similarity scores.

- FPMC [14] is a Markov chain (MC)-based method designed for next-basket recommendation. It is adapted to the session recommendation task by ignoring the user latent representation.

- GRU4Rec [6] models sequential transitions within a session using a GRU to capture user preferences.

- NARM [7] combines an RNN with an attention mechanism to learn the user’s main interests and fuses them with the sequential features.

- STAMP [18] uses a multilayer perceptron that considers both the user’s long-term and current interests to extract user preferences.

- SRGNN [20] models the session as a graph structure and learns item features and user interests using a GGNN and a soft attention mechanism.

- GCE-GNN [23] aggregates global contextual information about sessions using a global graph, while retaining the session graph for a more comprehensive session embedding.

- DHCN [24] transforms sessions into a hypergraph and captures the complex transitions between items using a hypergraph convolutional network. Additionally, the method incorporates a self-supervised learning approach to enhance recommendations.

- AMAN [13] treats the first clicked item of a session as the user’s original interest and captures the association of items in different micro-behavioural sequences.

- STGCR [28] constructs a temporal graph view and a temporal hypergraph view, and creates a contrastive learning task that maximizes the mutual information between the two views to improve recommendation performance.
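As a concrete illustration of the simplest baseline in the list above, the following minimal sketch computes item-to-item cosine similarity from a binary session-item matrix and recommends the items most similar to the last clicked one. It is an illustration of the general Item-KNN idea, not the implementation used in the experiments; the function names, the toy matrix, and the choice to rank by the last clicked item are assumptions.

```python
import numpy as np

def item_cosine_similarity(session_item: np.ndarray) -> np.ndarray:
    """Cosine similarity between the item columns of a binary session-item matrix."""
    co_occurrence = session_item.T @ session_item      # (items, items) co-click counts
    norms = np.sqrt(np.diag(co_occurrence))            # length of each item column
    norms[norms == 0] = 1.0                            # guard against items never clicked
    similarity = co_occurrence / np.outer(norms, norms)
    np.fill_diagonal(similarity, 0.0)                  # never recommend the item itself
    return similarity

def recommend(similarity: np.ndarray, last_item: int, k: int) -> list:
    """Return the k items most similar to the last clicked item (hypothetical helper)."""
    return np.argsort(-similarity[last_item], kind="stable")[:k].tolist()

# Toy data (assumption): rows are sessions, columns are items, 1 = clicked.
sessions = np.array([
    [1, 1, 0, 0],
    [1, 1, 1, 0],
    [0, 1, 1, 0],
    [0, 0, 1, 1],
], dtype=float)

sim = item_cosine_similarity(sessions)
top = recommend(sim, last_item=0, k=2)
print(top)
```

Because the similarity depends only on co-occurrence counts, this baseline ignores the order of clicks within a session, which is the limitation the sequential baselines address.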

4.1.3. Parameter Settings

4.1.4. Evaluation Metrics
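The result tables report P@K and M@K for K = 10 and K = 20. Assuming these denote the hit-based precision and the mean reciprocal rank commonly used in session-based recommendation (an assumption, since the definitions are not reproduced here), they can be sketched as follows. The function names and toy data are hypothetical, and values are scaled to percentages to match the tables.

```python
def precision_at_k(ranked_lists, targets, k):
    """Percentage of sessions whose target item appears in the top-k list."""
    hits = sum(1 for ranked, target in zip(ranked_lists, targets) if target in ranked[:k])
    return 100.0 * hits / len(targets)

def mrr_at_k(ranked_lists, targets, k):
    """Mean reciprocal rank of the target item, counting 0 when it is outside the top k."""
    total = 0.0
    for ranked, target in zip(ranked_lists, targets):
        top_k = ranked[:k]
        if target in top_k:
            total += 1.0 / (top_k.index(target) + 1)   # ranks are 1-based
    return 100.0 * total / len(targets)

# Toy data (assumption): one ranked list and one ground-truth item per session.
ranked = [[3, 1, 2], [5, 4, 6], [7, 8, 9]]
targets = [1, 5, 0]

p_at_2 = precision_at_k(ranked, targets, k=2)   # two of the three targets are in the top 2
m_at_2 = mrr_at_k(ranked, targets, k=2)         # (1/2 + 1/1 + 0) / 3
print(p_at_2, m_at_2)
```

Under these definitions P@K rewards any hit in the top K, whereas M@K also rewards placing the target closer to the top of the list.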

4.2. Overall Performance (RQ1)

- Deep learning-based sequential methods (e.g., GRU4Rec, NARM, STAMP) generally achieve large improvements over traditional methods (e.g., Item-KNN, FPMC) on most evaluation metrics, although a few exceptions exist (e.g., GRU4Rec trails FPMC on Tmall). Traditional approaches recommend items based on the user’s most recently clicked items without considering the order dependency in the session, whereas the deep learning-based methods take it into account. This shows the importance of modeling the sequential dependencies of items in a session.

- GNN-based approaches (e.g., SRGNN, DHCN) achieve better results than recurrent neural network-based approaches (e.g., GRU4Rec, NARM). GNN-based methods exploit the graph structure to capture long-range dependencies between items. By modeling complex item transition relationships, they obtain richer item representations and improve recommendation performance.

- Contrastive learning techniques (e.g., STGCR) further enhance session recommendation performance. By maximizing the mutual information between the target and its positive samples while increasing the distance to negative samples, contrastive learning increases the share of useful information in the learned representations and yields more accurate item features. AMAN additionally models the user’s initial intent, which improves recommendation performance to a certain extent compared to other methods.

- Our proposed EMUI outperforms all baseline methods on all metrics across the three datasets. EMUI improves on the initial intent representation in AMAN by extracting multi-level user initial intent through MIGM. To match the initial intent with the user’s dynamic interest, we design IMM to retain the part of the user’s initial intent that matches the dynamic interest. In addition, to further exploit the multi-level user initial intent, we create a contrastive learning task that maximizes the agreement between the final user initial intent and the initial intent at each level. The experimental results show that our model can effectively improve recommendation performance.
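The contrastive task described in the last point pairs the final initial intent of a session with its initial intent at each level. The exact loss is not reproduced in this section, so the sketch below swaps in the widely used InfoNCE objective with in-batch negatives as a stand-in. The function names, the temperature value, and the random toy embeddings are all assumptions.

```python
import numpy as np

def info_nce(anchor: np.ndarray, positive: np.ndarray, temperature: float = 0.2) -> float:
    """InfoNCE with in-batch negatives: row i of `positive` is the positive for row i of `anchor`."""
    a = anchor / np.linalg.norm(anchor, axis=1, keepdims=True)
    p = positive / np.linalg.norm(positive, axis=1, keepdims=True)
    logits = a @ p.T / temperature                     # (batch, batch) scaled cosine similarities
    logits = logits - logits.max(axis=1, keepdims=True)  # subtract row max for numerical stability
    log_prob = logits - np.log(np.exp(logits).sum(axis=1, keepdims=True))
    return float(-np.mean(np.diag(log_prob)))          # positives sit on the diagonal

def multi_level_contrastive_loss(final_intent, level_intents, temperature=0.2):
    """Average the InfoNCE loss between the final intent and each level's intent."""
    losses = [info_nce(final_intent, level, temperature) for level in level_intents]
    return sum(losses) / len(losses)

# Toy data (assumption): 8 sessions, 16-dimensional intents, 3 levels.
rng = np.random.default_rng(0)
final = rng.normal(size=(8, 16))
aligned_levels = [final + 0.01 * rng.normal(size=final.shape) for _ in range(3)]
shifted_levels = [np.roll(level, 1, axis=0) for level in aligned_levels]  # break the pairing

loss_aligned = multi_level_contrastive_loss(final, aligned_levels)
loss_shifted = multi_level_contrastive_loss(final, shifted_levels)
print(loss_aligned, loss_shifted)
```

The loss is low when each session's final intent agrees with its own per-level intents and high when the pairing is broken, which is the behaviour a mutual-information-style objective is meant to encourage.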

4.3. Impact of EMUI Components (RQ2)

4.4. Impact of the Depth of EMUI and MIGM (RQ3)

4.5. Impact of Different Fusion Methods of User General and Initial Intent (RQ4)

4.6. Impact of the Hyper-Parameters (RQ5)

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| SBR | Session-based recommendation |
| RNN | Recurrent neural network |
| GNN | Graph neural network |
| EMUI | Exploring Multi-Level User Initial Intent |
| MIGM | Multi-level initial intent generation module |
| IMM | Interest matching module |

References

- [1] Wang, S.; Cao, L.; Wang, Y.; Sheng, Q.Z.; Orgun, M.A.; Lian, D. A survey on session-based recommender systems. ACM Comput. Surv. (CSUR) 2021, 54, 1–38.

- [2] Jannach, D.; Ludewig, M. When recurrent neural networks meet the neighborhood for session-based recommendation. In Proceedings of the Eleventh ACM Conference on Recommender Systems, Como, Italy, 27–31 August 2017; pp. 306–310.

- [3] Pan, Z.; Cai, F.; Ling, Y.; de Rijke, M. An intent-guided collaborative machine for session-based recommendation. In Proceedings of the 43rd International ACM SIGIR Conference on Research and Development in Information Retrieval, Virtual, 24–25 July 2020; pp. 1833–1836.

- [4] Song, W.; Xiao, Z.; Wang, Y.; Charlin, L.; Zhang, M.; Tang, J. Session-based social recommendation via dynamic graph attention networks. In Proceedings of the Twelfth ACM International Conference on Web Search and Data Mining, Melbourne, Australia, 11–15 February 2019; pp. 555–563.

- [5] Wang, M.; Ren, P.; Mei, L.; Chen, Z.; Ma, J.; de Rijke, M. A collaborative session-based recommendation approach with parallel memory modules. In Proceedings of the 42nd International ACM SIGIR Conference on Research and Development in Information Retrieval, Paris, France, 21–25 July 2019; pp. 345–354.

- [6] Hidasi, B.; Karatzoglou, A.; Baltrunas, L.; Tikk, D. Session-based recommendations with recurrent neural networks. arXiv 2015, arXiv:1511.06939.

- [7] Li, J.; Ren, P.; Chen, Z.; Ren, Z.; Lian, T.; Ma, J. Neural attentive session-based recommendation. In Proceedings of the 2017 ACM on Conference on Information and Knowledge Management, Singapore, 6–10 November 2017; pp. 1419–1428.

- [8] Tan, Y.K.; Xu, X.; Liu, Y. Improved recurrent neural networks for session-based recommendations. In Proceedings of the 1st Workshop on Deep Learning for Recommender Systems, Boston, MA, USA, 15 September 2016; pp. 17–22.

- [9] Xu, C.; Zhao, P.; Liu, Y.; Sheng, V.S.; Xu, J.; Zhuang, F.; Fang, J.; Zhou, X. Graph contextualized self-attention network for session-based recommendation. In Proceedings of the IJCAI, Macao, China, 10–16 August 2019; Volume 19, pp. 3940–3946.

- [10] Chen, T.; Wong, R.C.W. Handling information loss of graph neural networks for session-based recommendation. In Proceedings of the 26th ACM SIGKDD International Conference on Knowledge Discovery & Data Mining, Virtual, 6–10 July 2020; pp. 1172–1180.

- [11] Wang, J.; Ding, K.; Zhu, Z.; Caverlee, J. Session-based recommendation with hypergraph attention networks. In Proceedings of the 2021 SIAM International Conference on Data Mining (SDM), SIAM, Virtual, 29 April–1 May 2021; pp. 82–90.

- [12] Li, A.; Cheng, Z.; Liu, F.; Gao, Z.; Guan, W.; Peng, Y. Disentangled graph neural networks for session-based recommendation. IEEE Trans. Knowl. Data Eng. 2022, 35, 7870–7882.

- [13] Qiao, J.; Wang, L. Modeling user micro-behaviors and original interest via Adaptive Multi-Attention Network for session-based recommendation. Knowl.-Based Syst. 2022, 244, 108567.

- [14] Rendle, S.; Freudenthaler, C.; Schmidt-Thieme, L. Factorizing personalized Markov chains for next-basket recommendation. In Proceedings of the 19th International Conference on World Wide Web, Raleigh, NC, USA, 26–30 April 2010; pp. 811–820.

- [15] Sarwar, B.; Karypis, G.; Konstan, J.; Riedl, J. Item-based collaborative filtering recommendation algorithms. In Proceedings of the 10th International Conference on World Wide Web, Hong Kong, China, 1–5 May 2001; pp. 285–295.

- [16] Zhou, X.; Shu, W.; Lin, F.; Wang, B. Confidence-weighted bias model for online collaborative filtering. Appl. Soft Comput. 2018, 70, 1042–1053.

- [17] Wang, R.; Jiang, Y.; Lou, J. Attention-based dynamic user preference modeling and nonlinear feature interaction learning for collaborative filtering recommendation. Appl. Soft Comput. 2021, 110, 107652.

- [18] Liu, Q.; Zeng, Y.; Mokhosi, R.; Zhang, H. STAMP: Short-term attention/memory priority model for session-based recommendation. In Proceedings of the 24th ACM SIGKDD International Conference on Knowledge Discovery & Data Mining, London, UK, 19–23 August 2018; pp. 1831–1839.

- [19] Luo, A.; Zhao, P.; Liu, Y.; Zhuang, F.; Wang, D.; Xu, J.; Fang, J.; Sheng, V.S. Collaborative self-attention network for session-based recommendation. In Proceedings of the IJCAI, Yokohama, Japan, 11–17 July 2020; pp. 2591–2597.

- [20] Wu, S.; Tang, Y.; Zhu, Y.; Wang, L.; Xie, X.; Tan, T. Session-based recommendation with graph neural networks. In Proceedings of the AAAI Conference on Artificial Intelligence, Honolulu, HI, USA, 27 January–1 February 2019; Volume 33, pp. 346–353.

- [21] Qiu, R.; Li, J.; Huang, Z.; Yin, H. Rethinking the item order in session-based recommendation with graph neural networks. In Proceedings of the 28th ACM International Conference on Information and Knowledge Management, Beijing, China, 3–7 November 2019; pp. 579–588.

- [22] Zeng, J.; Xie, P. Contrastive self-supervised learning for graph classification. In Proceedings of the AAAI Conference on Artificial Intelligence, Virtual, 2–9 February 2021; Volume 35, pp. 10824–10832.

- [23] Wang, Z.; Wei, W.; Cong, G.; Li, X.L.; Mao, X.L.; Qiu, M. Global context enhanced graph neural networks for session-based recommendation. In Proceedings of the 43rd International ACM SIGIR Conference on Research and Development in Information Retrieval, Virtual, 24–25 July 2020; pp. 169–178.

- [24] Xia, X.; Yin, H.; Yu, J.; Wang, Q.; Cui, L.; Zhang, X. Self-supervised hypergraph convolutional networks for session-based recommendation. In Proceedings of the AAAI Conference on Artificial Intelligence, Virtual, 2–9 February 2021; Volume 35, pp. 4503–4511.

- [25] Sun, F.Y.; Hoffmann, J.; Verma, V.; Tang, J. InfoGraph: Unsupervised and semi-supervised graph-level representation learning via mutual information maximization. arXiv 2019, arXiv:1908.01000.

- [26] Ghosh, S.; Roy, M.; Ghosh, A. Semi-supervised change detection using modified self-organizing feature map neural network. Appl. Soft Comput. 2014, 15, 1–20.

- [27] He, X.; Deng, K.; Wang, X.; Li, Y.; Zhang, Y.; Wang, M. LightGCN: Simplifying and powering graph convolution network for recommendation. In Proceedings of the 43rd International ACM SIGIR Conference on Research and Development in Information Retrieval, Virtual, 24–25 July 2020; pp. 639–648.

- [28] Wang, H.; Yan, S.; Wu, C.; Han, L.; Zhou, L. Cross-view temporal graph contrastive learning for session-based recommendation. Knowl.-Based Syst. 2023, 264, 110304.

- [29] Feng, Y.; You, H.; Zhang, Z.; Ji, R.; Gao, Y. Hypergraph neural networks. In Proceedings of the AAAI Conference on Artificial Intelligence, Honolulu, HI, USA, 27 January–1 February 2019; Volume 33, pp. 3558–3565.

- [30] Wu, F.; Souza, A.; Zhang, T.; Fifty, C.; Yu, T.; Weinberger, K. Simplifying graph convolutional networks. In Proceedings of the International Conference on Machine Learning, PMLR, Long Beach, CA, USA, 10–15 May 2019; pp. 6861–6871.

- [31] Rendle, S.; Freudenthaler, C.; Gantner, Z.; Schmidt-Thieme, L. BPR: Bayesian personalized ranking from implicit feedback. In Proceedings of the Twenty-Fifth Conference on Uncertainty in Artificial Intelligence, UAI’09, Arlington, VA, USA, 18–21 June 2009; pp. 452–461.

| Notations | Descriptions |
|---|---|
| V | Item set containing all items |
| S | Session set containing all sessions |
| | An item in the item set |
| | A session in the session set |
| E | Hyperedge set |
| e | A hyperedge in the hyperedge set |
| W | The diagonal matrix of hyperedge weights |
| H | The hypergraph association matrix |
| D | The degree matrix of nodes |
| B | The degree matrix of hyperedges |
| x | Item embedding set |
| | An item embedding |
| I | User initial intent set |
| | k-th user initial intent in a session |
| | The average item embedding in a session |
| | User general intent representation |
| | The final user intent representation |
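To show how the matrices in the notation table typically interact, the block below writes one hypergraph convolution layer in the row-normalized form used by DHCN [24]. This is a sketch under that assumption and is not necessarily the exact propagation rule of EMUI; the layer index $l$ and the embedding matrix $X^{(l)}$ are introduced here for illustration.

$$
X^{(l+1)} = D^{-1} H W B^{-1} H^{\top} X^{(l)}, \qquad
D_{ii} = \sum_{e \in E} W_{ee} H_{ie}, \qquad
B_{ee} = \sum_{i \in V} H_{ie}
$$

Read from right to left, $H^{\top} X^{(l)}$ gathers item embeddings into each hyperedge, $B^{-1}$ averages them over the hyperedge size, $W$ weights the hyperedges, and $D^{-1} H$ averages the hyperedge messages back onto each item.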

| Dataset | Tmall | | | | RetailRocket | | | | Taobao | | | |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Methods | P@10 | M@10 | P@20 | M@20 | P@10 | M@10 | P@20 | M@20 | P@10 | M@10 | P@20 | M@20 |
| Item-KNN | 6.68 | 3.12 | 9.20 | 3.34 | 21.41 | 9.78 | 35.26 | 16.58 | 0.13 | 0.08 | 0.14 | 0.08 |
| FPMC | 13.05 | 7.11 | 16.08 | 7.34 | 20.59 | 8.53 | 32.37 | 13.82 | 0.80 | 0.71 | 0.80 | 0.72 |
| GRU4Rec | 9.50 | 5.75 | 10.98 | 5.92 | 31.01 | 15.37 | 44.01 | 23.67 | 0.87 | 0.77 | 0.89 | 0.77 |
| NARM | 19.21 | 10.39 | 23.35 | 10.68 | 44.74 | 25.54 | 50.22 | 24.59 | 0.90 | 0.75 | 0.94 | 0.75 |
| STAMP | 22.46 | 13.08 | 26.44 | 13.35 | 43.14 | 26.65 | 50.96 | 25.17 | 0.77 | 0.44 | 0.84 | 0.44 |
| SRGNN | 23.49 | 13.47 | 27.65 | 13.76 | 44.88 | 26.95 | 50.32 | 26.65 | 17.17 | 11.96 | 23.48 | 12.03 |
| GCE-GNN | 28.03 | 15.07 | 33.41 | 15.43 | 46.38 | 27.96 | 54.58 | 28.09 | 27.46 | 13.98 | 32.75 | 14.14 |
| DHCN | 26.24 | 14.63 | 31.51 | 15.08 | 46.32 | 27.85 | 53.66 | 27.30 | 25.98 | 13.75 | 30.63 | 14.16 |
| STGCR | 29.13 | 16.45 | 34.28 | 16.86 | 48.84 | 29.40 | 56.45 | 29.40 | 30.13 | 17.26 | 32.14 | 17.35 |
| AMAN | 28.64 | 16.13 | 33.52 | 16.37 | 47.45 | 28.92 | 55.84 | 29.17 | 26.00 | 15.55 | 36.20 | 18.17 |
| EMUI (ours) | 31.19 | 18.58 | 36.64 | 18.86 | 49.30 | 29.47 | 56.82 | 29.99 | 34.72 | 23.61 | 37.37 | 23.78 |

| Dataset | Tmall | | | | RetailRocket | | | | Taobao | | | |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Variant | P@10 | M@10 | P@20 | M@20 | P@10 | M@10 | P@20 | M@20 | P@10 | M@10 | P@20 | M@20 |
| DHCN | 26.24 | 14.63 | 31.51 | 15.08 | 46.32 | 27.85 | 53.66 | 27.30 | 25.98 | 13.75 | 30.63 | 14.16 |
| EMUI w/o MIGM | 28.92 | 16.93 | 34.38 | 17.17 | 47.98 | 29.14 | 55.97 | 29.32 | 32.59 | 21.87 | 35.44 | 22.53 |
| EMUI w/o IMM | 30.12 | 18.03 | 35.99 | 18.34 | 48.57 | 28.66 | 55.89 | 28.94 | 33.75 | 22.74 | 36.11 | 22.81 |
| EMUI w/o CL | 30.25 | 18.12 | 36.24 | 18.55 | 48.88 | 28.92 | 56.13 | 29.08 | 33.94 | 22.81 | 36.16 | 23.05 |
| EMUI | 31.19 | 18.58 | 36.64 | 18.86 | 49.30 | 29.47 | 56.82 | 29.99 | 34.72 | 23.61 | 37.37 | 23.78 |

| Dataset | Tmall | | | | RetailRocket | | | | Taobao | | | |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Methods | P@10 | M@10 | P@20 | M@20 | P@10 | M@10 | P@20 | M@20 | P@10 | M@10 | P@20 | M@20 |
| ours | 31.19 | 18.58 | 36.64 | 18.86 | 49.30 | 29.47 | 56.82 | 29.99 | 34.72 | 23.61 | 37.37 | 23.78 |
| averaging | 30.86 | 18.09 | 36.78 | 18.35 | 49.76 | 29.26 | 56.53 | 29.45 | 34.32 | 24.45 | 37.41 | 23.66 |
| summation | 30.89 | 17.97 | 36.46 | 18.57 | 49.35 | 29.34 | 56.38 | 29.60 | 33.15 | 23.54 | 36.11 | 23.39 |
| concatenation | 26.89 | 15.17 | 32.45 | 15.30 | 46.30 | 27.59 | 54.38 | 27.76 | 30.97 | 20.22 | 33.79 | 20.95 |
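The three alternative fusion strategies compared in the table above can be sketched as follows. This is a minimal illustration of what averaging, summation, and concatenation of the general and initial intent vectors usually mean; the function names, the embedding size, and the linear projection applied after concatenation are assumptions rather than details taken from the experiments.

```python
import numpy as np

def fuse_average(general: np.ndarray, initial: np.ndarray) -> np.ndarray:
    """Element-wise mean of the general and initial intent vectors."""
    return (general + initial) / 2.0

def fuse_sum(general: np.ndarray, initial: np.ndarray) -> np.ndarray:
    """Element-wise sum of the two intent vectors."""
    return general + initial

def fuse_concat(general: np.ndarray, initial: np.ndarray, projection: np.ndarray) -> np.ndarray:
    """Concatenate the two vectors, then project back to the embedding size (assumed step)."""
    return np.concatenate([general, initial], axis=-1) @ projection

# Toy data (assumption): 2 sessions with 4-dimensional intent vectors.
rng = np.random.default_rng(0)
dim = 4
general = rng.normal(size=(2, dim))
initial = rng.normal(size=(2, dim))
projection = rng.normal(size=(2 * dim, dim))   # stands in for a learned weight matrix

avg = fuse_average(general, initial)
total = fuse_sum(general, initial)
cat = fuse_concat(general, initial, projection)
print(avg.shape, total.shape, cat.shape)
```

All three are static rules that treat every dimension of the initial intent the same way for every session.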

| Dataset | Tmall | | | | RetailRocket | | | | Taobao | | | |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Hyper-parameter value | P@10 | M@10 | P@20 | M@20 | P@10 | M@10 | P@20 | M@20 | P@10 | M@10 | P@20 | M@20 |
| 0 | 30.25 | 18.12 | 36.24 | 18.55 | 48.88 | 28.92 | 56.13 | 29.08 | 33.94 | 22.81 | 36.16 | 23.05 |
| 0.0001 | 30.98 | 18.48 | 36.55 | 18.79 | 48.29 | 28.79 | 55.52 | 28.92 | 33.82 | 23.07 | 36.69 | 23.18 |
| 0.001 | 31.19 | 18.58 | 36.64 | 18.86 | 49.30 | 29.47 | 56.82 | 29.99 | 34.72 | 23.61 | 37.37 | 23.78 |
| 0.005 | 30.64 | 18.31 | 36.41 | 18.68 | 48.56 | 28.85 | 55.80 | 28.99 | 33.87 | 22.95 | 36.45 | 23.12 |
| 0.01 | 30.35 | 18.17 | 36.28 | 18.58 | 48.80 | 28.90 | 56.04 | 29.06 | 33.92 | 22.85 | 36.24 | 23.07 |
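The row for the value 0 in the table above coincides with the "EMUI w/o CL" variant, which is consistent with the swept hyper-parameter being a weight on the contrastive loss. Under that assumption, a joint objective of the usual form would read as follows; the symbols $\beta$, $\mathcal{L}_{rec}$, and $\mathcal{L}_{cl}$ are introduced here for illustration and are not taken from the source.

$$
\mathcal{L} = \mathcal{L}_{rec} + \beta \, \mathcal{L}_{cl}
$$

With $\beta = 0$ the contrastive term vanishes and only the recommendation loss is optimized. The best results in the table are obtained at 0.001, and larger values drift back toward the scores of the row without contrastive learning.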


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Ding, J.; Wei, J.; Lu, G. Efficiently Exploiting Muti-Level User Initial Intent for Session-Based Recommendation. Electronics 2025, 14, 207. https://doi.org/10.3390/electronics14010207