Abstract

Link prediction for opportunistic networks faces the challenges of frequent topology changes and complex, variable spatial-temporal information. Most existing studies focus on either temporal or spatial features and overlook much of the latent information in the network. To better capture the spatial-temporal correlations in network evolution and exploit this latent information, a link prediction method based on spatial-temporal attention and a temporal convolutional network (STA-TCN) is proposed. The method slices an opportunistic network into discrete network snapshots and represents each snapshot by a state matrix built from topology information and attribute information. Temporal convolutional networks and spatial-temporal attention mechanisms are then employed to learn spatial-temporal information. Furthermore, to improve link prediction performance, the method converts the auto-correlated error into a non-correlated error. Experimental results on three real opportunistic network datasets, ITC, MIT, and Infocom06, show that the proposed method outperforms the baseline models, with improved AUC and F1-score.

1. Introduction

An opportunistic network [1,2] is a mobile self-organizing network in which the source node is not directly connected to the target node; communication between the two nodes is realized through encounters that arise from node movement, and data are transmitted in a “store-carry-forward” manner. Opportunistic networks have been applied in many fields [3], such as socially oriented services, personal and environmental services, intelligent transportation systems, and earthquake prediction [4]. The goal of link prediction is to predict the future network topology from historical information. Link prediction can support the above applications and is one of the current research hotspots in opportunistic networks. In this paper, we study opportunistic networks whose links have two states: connected and disconnected.

The limitations of current link prediction methods can be summarized in the following three aspects:

- Most temporal models focus on capturing temporal features, neglecting the correlation between spatial and temporal features.

- Many nodes in real networks have rich attributes that change over time, and these attributes are intertwined in both the temporal and spatial dimensions [5]. It is challenging to determine which factor is more important. Although some methods consider both spatial and temporal features, they fail to discriminate the importance of different factors.

- Existing studies do not deal with auto-correlated errors. When networks are sliced into snapshots, auto-correlated errors inevitably arise while the time series is collected and modeled, and they degrade prediction performance.

To alleviate these limitations, this paper proposes a link prediction model based on a Spatial-Temporal Attention mechanism and Temporal Convolutional Networks (STA-TCN). A state matrix is constructed by combining the topology information with the link frequency and link duration of node pairs, so that latent information can be learned more effectively. The spatial-temporal attention mechanism and temporal convolution are integrated into an encoder-decoder architecture to extract spatial-temporal information. Inspired by [6], the auto-correlated error in the prediction process is transformed into a non-correlated error to improve link prediction performance. The main contributions of this paper are as follows:

- A novel network representation method is proposed that characterizes each snapshot by a state matrix constructed from the first-order adjacency matrix, the quadratic adjacency matrix, and the correlation matrix.

- A spatial-temporal dual-attention mechanism is employed to learn node representations in both the spatial and temporal aspects; a different attention weight is assigned to each snapshot and each neighbor to capture spatial-temporal characteristics more accurately.

- An error transformation method is proposed: the auto-correlated error in the STA-TCN model is converted into a non-correlated error to further improve prediction performance.

2. Related Work

Link prediction, as an important task in network data mining, has been widely studied and falls into the following categories: matrix factorization-based, probabilistic model-based, and machine learning-based approaches.

Matrix factorization-based methods decompose the adjacency matrix of a network into the product of low-rank matrices to capture the latent relationships between nodes. Ref. [7] proposed a link prediction framework based on non-negative matrix factorization (NMF) that used different kernel functions to extract local and global features of the network. Ref. [8] introduced a graph-regularized NMF method that captured the local information of snapshots through graph regularization and calculated the importance of nodes in each snapshot with the PageRank algorithm; an NMF model was then jointly optimized to preserve both local and global information. Ref. [9] proposed a robust graph-regularized NMF algorithm that models both the network topology and node attributes.

Probabilistic model-based methods apply probability theory and mathematical statistics to infer unknown phenomena from known data or assumptions. Ref. [10] presented a social network evolution analysis method based on triplets (SNEA), in which the prediction algorithm learns the evolution of the triplet transition probability matrix. Ref. [11] proposed a deep generative model for graphs that learns the likelihood of missing links with autoregressive generative models. Ref. [12] introduced an adaptive method to assess the likelihood of a link between nodes.

In recent years, deep learning-based link prediction models have attracted increasing attention because they can discover features from data automatically [13,14]. Ref. [15] proposed an embedding method called dyngraph2vec, which uses multiple non-linear layers to learn the structural patterns in each snapshot and recurrent layers to learn the temporal transformations of the network; to reduce the number of model parameters and improve learning efficiency, the DynRNN and DynAERNN models were also proposed. Ref. [16] introduced the Evolving Graph Convolutional Network (EvolveGCN), which evolves the graph convolutional parameter matrix with a Recurrent Neural Network (RNN) to capture the dynamics of graph sequences. Ref. [17] proposed an end-to-end link prediction method called Graph Convolution Network Embedded Long Short-Term Memory (GC-LSTM), which embeds Long Short-Term Memory (LSTM) into the graph convolutional network to learn the temporal features of all snapshots of a dynamic network, while the graph convolutional network (GCN) captures the local structural attributes of nodes and their relationships in each snapshot. Ref. [18] proposed an encoder-LSTM-decoder (E-LSTM-D) model for end-to-end dynamic link prediction; by integrating stacked LSTMs into an encoder-decoder architecture, it learns not only low-dimensional, non-linear representations but also the temporal dependencies between consecutive network snapshots. Ref. [19] replaced the LSTMs in E-LSTM-D with Gated Recurrent Units (GRUs) to obtain the E-GRUs-D model, which improves training efficiency at the cost of a small loss in accuracy. Ref. [20] proposed a GRU prediction model combined with structural embedding and designed several loss constraints to improve prediction accuracy. Ref. [21] introduced node-adaptive parameter learning and data-adaptive graph generation modules to enhance the traditional GCN, and further proposed the Adaptive Graph Convolutional Recurrent Network (AGCRN) to automatically capture fine-grained spatial and temporal correlations in traffic sequences.

In summary, the above methods mainly combine graph neural networks with recurrent neural networks to capture topological and temporal information, and they neglect both the rich latent information of nodes and the interactions between the spatial and temporal dimensions. In contrast, the STA-TCN model proposed in this paper uses a state matrix to represent each snapshot, employs a spatial-temporal attention mechanism to better learn spatial and temporal features, and transforms the auto-correlated error into a non-correlated error to further improve link prediction performance.

3. Methodology

3.1. Problem Statement

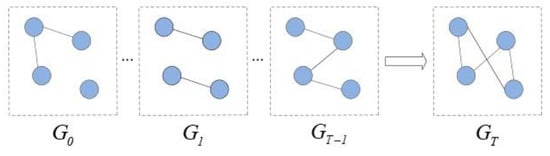

Given a sequence of network snapshots of length T, $\{G_{t-T+1}, \ldots, G_t\}$, let $G_t = (V, E_t)$ denote the snapshot of the network at moment t, where $V = \{v_1, \ldots, v_M\}$ is the set of nodes, M is the total number of nodes, and $E_t$ is the set of links. Opportunistic network link prediction aims to predict $G_{t+1}$ from the sequence of T network snapshots, as shown in Figure 1.

Figure 1.

Link prediction based on snapshots.
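A minimal sketch of how this setup turns a snapshot sequence into supervised examples, assuming the snapshots are held in a Python list in time order. The helper name `make_windows` is hypothetical and not taken from the paper; each example pairs T consecutive snapshots with the snapshot that follows them.

```python
from typing import List, Sequence, Tuple, TypeVar

Snapshot = TypeVar("Snapshot")


def make_windows(snapshots: Sequence[Snapshot], T: int) -> List[Tuple[List[Snapshot], Snapshot]]:
    """Pair every run of T consecutive snapshots with the next snapshot.

    Each element is (history, target): T past snapshots and the one to predict.
    """
    if T < 1:
        raise ValueError("T must be at least 1")
    pairs = []
    for end in range(T, len(snapshots)):
        history = list(snapshots[end - T:end])  # G_{end-T}, ..., G_{end-1}
        target = snapshots[end]                 # the snapshot that follows
        pairs.append((history, target))
    return pairs
```

For example, four snapshots with T = 2 yield two training examples; a sequence shorter than T + 1 yields none.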

3.2. Representation of Opportunistic Network

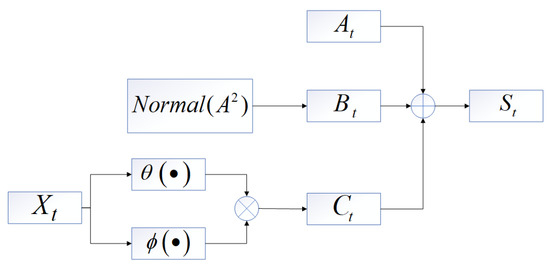

The opportunistic network is an undirected network that can be divided into discrete network snapshots by time slicing. For the snapshot at time t, a first-order adjacency matrix $A^{(1)}_t$, a quadratic adjacency matrix $A^{(2)}_t$, a node attribute matrix $Q_t$, and a correlation matrix $C_t$ are established. In this paper, each snapshot is represented by a state matrix $S_t$, which is constructed from $A^{(1)}_t$, $A^{(2)}_t$, and $C_t$, as shown in Figure 2:

Figure 2.

State matrix.
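The time-slicing step can be sketched as follows, under assumptions the paper does not state: contacts are (node_i, node_j, start, end) tuples with integer node indices and times in seconds, and a node pair counts as linked in a slice if its contact interval [start, end) overlaps that slice. The function `slice_into_snapshots` is a hypothetical helper.

```python
import math
from typing import Iterable, List, Tuple

# Hypothetical contact record: (node_i, node_j, start_s, end_s).
Contact = Tuple[int, int, float, float]


def slice_into_snapshots(contacts: Iterable[Contact], num_nodes: int, t0: float,
                         slice_size: float, num_slices: int) -> List[List[List[int]]]:
    """Build one undirected 0/1 adjacency matrix per time slice.

    A pair is marked as linked in every slice that its contact interval
    [start, end) overlaps.
    """
    snapshots = [[[0] * num_nodes for _ in range(num_nodes)] for _ in range(num_slices)]
    for i, j, start, end in contacts:
        if i == j:
            continue  # ignore self-contacts
        first = int((start - t0) // slice_size)
        # last slice touched by [start, end); a zero-length contact stays in its start slice
        last = max(first, math.ceil((end - t0) / slice_size) - 1)
        for k in range(max(first, 0), min(last, num_slices - 1) + 1):
            snapshots[k][i][j] = 1
            snapshots[k][j][i] = 1  # the network is undirected
    return snapshots
```

Contacts that start before `t0` or end after the last slice are simply clipped to the covered range.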

The following subsections explain how each of these components is constructed.

3.2.1. Topological Feature Representation

(1) First-order adjacency matrix

The first-order adjacency matrix $A^{(1)}_t$ represents the state of the links within network snapshot t. If node $v_i$ and node $v_j$ are linked, then the element $a^{t}_{ij} = 1$; otherwise, $a^{t}_{ij} = 0$.

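(2) Quadratic adjacency matrix

The quadratic adjacency matrix $A^{(2)}_t$ captures higher-order topological information of the network: the first-order adjacency matrix is raised to the power of two and then normalized, which is defined as:

$$A^{(2)}_t = \operatorname{norm}\left( \left(A^{(1)}_t\right)^2 \right) \tag{1}$$

where $\operatorname{norm}(\cdot)$ denotes the normalization operation.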
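A sketch of the quadratic adjacency matrix using plain Python lists. Entry (i, j) of the squared matrix counts the two-hop walks between nodes i and j. The paper does not specify the normalization, so dividing by the largest entry is an assumption here, and the helper names `matmul` and `quadratic_adjacency` are hypothetical.

```python
from typing import List

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Plain-Python matrix product a @ b."""
    n, m, p = len(a), len(b), len(b[0])
    return [[sum(a[i][k] * b[k][j] for k in range(m)) for j in range(p)] for i in range(n)]


def quadratic_adjacency(a1: Matrix) -> Matrix:
    """Square the first-order adjacency matrix and scale it into [0, 1].

    Assumption: normalization divides by the largest entry; the paper does not
    state which normalization it uses.
    """
    a2 = matmul(a1, a1)  # (A^2)[i][j] = number of 2-hop walks from i to j
    peak = max(max(row) for row in a2)
    if peak == 0:
        return a2  # no 2-hop walks: nothing to normalize
    return [[v / peak for v in row] for row in a2]
```

On the path 0-1-2, nodes 0 and 2 are not directly linked but receive a non-zero entry through their shared neighbor 1.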

3.2.2. Attribute Feature Representation

In opportunistic networks, communication between nodes is frequently established and interrupted. The link duration of a node pair indicates how long information can be transferred between the two nodes, and the link frequency represents the strength of their relationship. Changes in link duration and link frequency therefore reveal the dynamic evolution of interaction patterns and relationships between nodes. Accordingly, the node attribute vectors are constructed from the starting link moment $t^{s}_{ij}$, the ending link moment $t^{e}_{ij}$, and the link frequency $f_{ij}$.

When most node pairs within a snapshot have a link duration greater than the slice size, link frequency has little influence on the node attribute features and can be disregarded. In that case, the node attribute matrix is given by:

where the i-th row $q^{t}_{i}$ of $Q_t$ denotes the attribute vector of node $v_i$ in the t-th snapshot.

When most node pairs within a snapshot have a link duration less than the slice size, a node pair may be linked several times within one snapshot, so link frequency must be considered when computing the node attribute vectors. The node attribute matrix is then defined as:
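The per-pair statistics from which the attribute vectors are built (first link start, last link end, and link count inside one slice), together with the majority rule for deciding whether link frequency is needed, can be sketched as below. Formulas (2) and (3) themselves are not reproduced; the contact tuple format and the helper names `pair_link_stats` and `use_link_frequency` are assumptions.

```python
from typing import Dict, Iterable, List, Tuple

Contact = Tuple[int, int, float, float]  # hypothetical: (node_i, node_j, start_s, end_s)
PairStats = Tuple[float, float, int]     # (first start, last end, link count)


def pair_link_stats(contacts: Iterable[Contact], slice_start: float,
                    slice_end: float) -> Dict[Tuple[int, int], PairStats]:
    """Collect start moment, end moment, and link frequency per node pair in one slice."""
    stats: Dict[Tuple[int, int], PairStats] = {}
    for i, j, start, end in contacts:
        s, e = max(start, slice_start), min(end, slice_end)  # clip to the slice
        if s >= e:
            continue  # the contact does not overlap this slice
        key = (min(i, j), max(i, j))  # undirected pair
        if key in stats:
            s0, e0, n = stats[key]
            stats[key] = (min(s0, s), max(e0, e), n + 1)
        else:
            stats[key] = (s, e, 1)
    return stats


def use_link_frequency(avg_durations: List[float], slice_size: float) -> bool:
    """Return False when most node pairs' average link duration exceeds the slice size."""
    longer = sum(1 for d in avg_durations if d > slice_size)
    return longer <= len(avg_durations) / 2
```

When `use_link_frequency` returns False, the frequency field can be ignored, which corresponds to the first case described above.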

With the node attribute vectors obtained, we construct the time-specific correlation matrix $C_t$ [22], which captures the attribute correlation between nodes within network snapshot t. Specifically, the element $c^{t}_{ij}$ captures the extent to which node $v_i$ is affected by node $v_j$. The correlation between the attribute vector $q^{t}_{i}$ of node $v_i$ and the attribute vector $q^{t}_{j}$ of node $v_j$ is computed by a normalization function, as shown in Formula (4):

where $\theta(\cdot)$ and $\phi(\cdot)$ are embedding functions.
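One common way to realize such a normalized correlation is a row-wise softmax over dot products of embedded attribute vectors, as in the sketch below. Treating both embedding functions as linear maps with weight matrices `w_theta` and `w_phi`, and using softmax as the normalization, are assumptions rather than details confirmed by the paper; the function names are hypothetical.

```python
import math
from typing import List

Vector = List[float]
Matrix = List[List[float]]


def linear(w: Matrix, x: Vector) -> Vector:
    """Apply a linear embedding: w @ x."""
    return [sum(wk * xk for wk, xk in zip(row, x)) for row in w]


def correlation_matrix(x: Matrix, w_theta: Matrix, w_phi: Matrix) -> Matrix:
    """C[i][j] = softmax over j of theta(x_i) . phi(x_j).

    Assumption: theta and phi are linear maps and the normalization is a
    row-wise softmax, so each row of C sums to 1.
    """
    theta = [linear(w_theta, xi) for xi in x]
    phi = [linear(w_phi, xj) for xj in x]
    c = []
    for ti in theta:
        scores = [sum(a * b for a, b in zip(ti, pj)) for pj in phi]
        m = max(scores)  # subtract the max for numerical stability
        exps = [math.exp(s - m) for s in scores]
        total = sum(exps)
        c.append([e / total for e in exps])
    return c
```

Because of the row-wise normalization, row i can be read as how strongly node i attends to every other node's attributes; C is generally not symmetric.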

3.2.3. State Matrix Construction

The state matrix $S_t$, which combines the topological information of the network with the node attribute information, is used to represent snapshot t:

$$S_t = w_1 A^{(1)}_t + w_2 A^{(2)}_t + w_3 C_t \tag{5}$$

where $A^{(1)}_t$ is the first-order adjacency matrix, $A^{(2)}_t$ is the quadratic adjacency matrix, and $C_t$ is the correlation matrix. The three matrices are linearly combined with the learnable parameters $w_1$, $w_2$, and $w_3$.
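A sketch of the linear combination that forms the state matrix. In STA-TCN the three weights are learnable; here they are fixed floats so the arithmetic is easy to follow, and the function name `state_matrix` is hypothetical.

```python
from typing import List

Matrix = List[List[float]]


def state_matrix(a1: Matrix, a2: Matrix, c: Matrix,
                 w1: float, w2: float, w3: float) -> Matrix:
    """S = w1 * A1 + w2 * A2 + w3 * C, computed entry by entry."""
    n = len(a1)
    if not (len(a2) == n and len(c) == n):
        raise ValueError("all three matrices must be M x M")
    return [[w1 * a1[i][j] + w2 * a2[i][j] + w3 * c[i][j] for j in range(n)]
            for i in range(n)]
```

In a trainable model, w1, w2, and w3 would be updated by gradient descent together with the other parameters.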

3.3. Link Prediction Model

The model takes the state matrix sequence as input. It encodes the sequence with spatial attention and a TCN to obtain feature vectors, decodes these feature vectors with temporal attention and a TCN to obtain the nodes’ feature matrix, and finally uses a fully connected layer to output the predicted matrix.

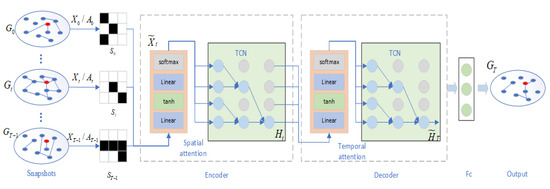

3.3.1. Overall Framework

In this paper, we propose STA-TCN, a link prediction model based on a temporal convolutional network and attention mechanisms, as shown in Figure 3. STA-TCN consists of two components: a spatial attention-based encoder and a temporal attention-based decoder. The encoder applies spatial attention [23] to the input sequence to obtain a weighted matrix sequence, which is fed into a TCN [24] for further encoding to obtain the spatial-temporal features. The decoder applies temporal attention to the encoded features and feeds the result into the decoder TCN for further decoding to obtain the node representations, which finally pass through a fully connected layer to produce the predicted network at the next moment.

Figure 3.

STA-TCN model.

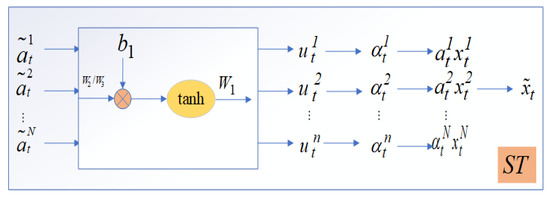

3.3.2. Spatial Attention-Based Encoder

The input to the encoder is the state matrix sequence. Because different neighbors influence a node to different degrees, an attention mechanism is employed to dynamically capture the most important neighbors. To this end, a spatial attention module is designed to identify the significant neighbors of a node, as illustrated in Figure 4. The spatial attention mechanism is defined as follows:

where $W_s$, $U_s$, and $V_s$ are learnable parameter matrices, $s^{t}_{i}$ denotes the i-th row vector of $S_t$, $b_s$ is a bias vector, and $e^{t}_{i}$ denotes the attention value of the i-th node in network snapshot t. The normalized attention weight $\alpha^{t}_{i}$ of node $v_i$ at moment t is:

Figure 4.

Spatial attention.

The node representation vector obtained by spatial attention is:

where $\odot$ denotes the element-wise product.
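The spatial attention step can be sketched as below, assuming an additive form in which each node's state-matrix row is scored as `v . tanh(W s + b)`, the scores are softmax-normalized over nodes, and each row is then multiplied element-wise by the resulting weight vector, so the entry for neighbor j is scaled by that neighbor's weight. This is a simplified reading (it uses one weight matrix instead of three), and all parameter and function names are hypothetical.

```python
import math
from typing import List, Tuple

Vector = List[float]
Matrix = List[List[float]]


def softmax(scores: Vector) -> Vector:
    m = max(scores)  # shift for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def spatial_attention(s: Matrix, w: Matrix, b: Vector, v: Vector) -> Tuple[Vector, Matrix]:
    """s: M x M state matrix; w: H x M; b and v: length H.

    Score node k as e_k = v . tanh(W s_k + b), normalize the scores over nodes
    into alpha, and scale each row of s element-wise by alpha.
    """
    scores = []
    for row in s:
        hidden = [math.tanh(sum(wh * x for wh, x in zip(w_row, row)) + bh)
                  for w_row, bh in zip(w, b)]
        scores.append(sum(vh * h for vh, h in zip(v, hidden)))
    alpha = softmax(scores)
    weighted = [[a * x for a, x in zip(alpha, row)] for row in s]  # alpha * s_i, element-wise
    return alpha, weighted
```

With all-zero weights every node receives the same score, so the attention reduces to uniform scaling by 1/M.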

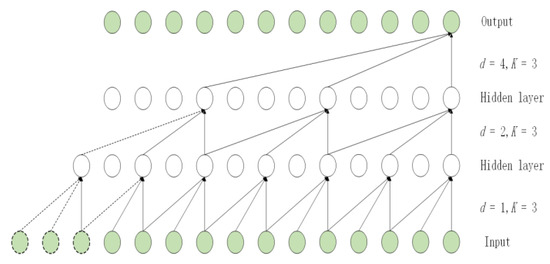

The sequence weighted by spatial attention is fed into a TCN, a hierarchical time-series model composed of multiple layers of dilated causal convolutions that extract temporal relationships. Figure 5 illustrates a TCN with 3 layers of dilated causal convolutions, in which the output of the final layer captures the temporal dynamics of the history. A TCN with L convolutional layers is used to model the temporal behavior of each node $v_i$; each layer includes dilated convolutions, activation functions, and normalization operations. For node $v_i$ at time t, the dilated causal convolution is computed as:

$$F(t) = (x *_d f)(t) = \sum_{k=0}^{K-1} f(k) \, x_{t - d \cdot k} \tag{9}$$

where $F(t)$ denotes the convolution operation at time t, f denotes the filter, K denotes the filter size, and d is the dilation factor.

Figure 5.

Temporal convolutional networks, K = 2.
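A single dilated causal convolution and the receptive field of a stack can be sketched as follows. The convolution follows the standard TCN form F(t) = Σ_{k=0}^{K-1} f(k) x_{t-d·k} with zero padding on the left; activation, normalization, and residual connections are omitted, and the dilation schedule 1, 2, 4 used in the usage note is an assumption. Function names are hypothetical.

```python
from typing import List, Sequence


def dilated_causal_conv(x: Sequence[float], f: Sequence[float], d: int) -> List[float]:
    """F(t) = sum_{k=0}^{K-1} f[k] * x[t - d*k], treating x as zero before t = 0."""
    K = len(f)
    out = []
    for t in range(len(x)):
        acc = 0.0
        for k in range(K):
            idx = t - d * k
            if idx >= 0:  # causal: only the current and past inputs contribute
                acc += f[k] * x[idx]
        out.append(acc)
    return out


def receptive_field(K: int, dilations: Sequence[int]) -> int:
    """Number of past steps (including the current one) visible to the top layer."""
    return 1 + (K - 1) * sum(dilations)
```

For instance, with K = 2 and dilations 1, 2, and 4, `receptive_field(2, [1, 2, 4])` gives 8, so the top layer sees the current snapshot and the seven before it; feeding an impulse through the three layers with filter [1, 1] spreads it over exactly eight steps.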

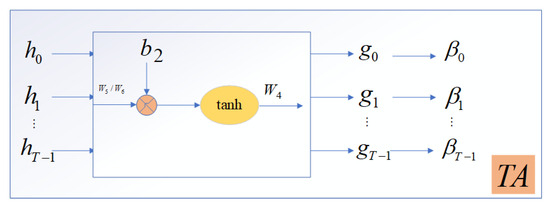

3.3.3. Temporal Attention-Based Decoder

The decoder decodes the spatial-temporal vectors generated by the encoder to obtain the predicted adjacency matrix of the network at the next moment, thereby completing link prediction. Since different snapshots carry different amounts of information, a temporal attention module is designed to automatically assign a different weight to each snapshot, as shown in Figure 6. The temporal attention mechanism calculates the attention value of each snapshot as follows:

where $W_d$, $U_d$, and $V_d$ are learnable parameter matrices, $h_t$ denotes the row vector of the spatial-temporal features at network snapshot t, $b_d$ is a bias vector, and $e_t$ denotes the attention value at snapshot t. The normalized value $\beta_t$ denotes the attention weight at time t. The weighted sum of the encoder’s spatial-temporal features yields the node representation vector $\tilde{h}$, which is fed into the decoder TCN; it is computed as:

Figure 6.

Temporal attention.
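A sketch of temporal attention over T snapshot feature vectors, assuming the same additive scoring form as in the spatial sketch: each snapshot receives a score, the scores are softmax-normalized into weights, and the weighted sum of the feature vectors is the representation passed to the decoder TCN. Parameter and function names are hypothetical.

```python
import math
from typing import List, Tuple

Vector = List[float]


def temporal_attention(h: List[Vector], w: List[Vector], b: Vector,
                       u: Vector) -> Tuple[Vector, Vector]:
    """h: T feature vectors (one per snapshot); w: H x D; b and u: length H.

    Score snapshot t as e_t = u . tanh(W h_t + b), normalize the scores with
    softmax into beta, and return beta with the beta-weighted sum of the h_t.
    """
    scores = []
    for h_t in h:
        hidden = [math.tanh(sum(wi * x for wi, x in zip(w_row, h_t)) + bi)
                  for w_row, bi in zip(w, b)]
        scores.append(sum(ui * hi for ui, hi in zip(u, hidden)))
    m = max(scores)  # shift for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    beta = [e / total for e in exps]
    context = [sum(beta[t] * h[t][k] for t in range(len(h))) for k in range(len(h[0]))]
    return beta, context
```

With zero weights the snapshots are averaged; once the scoring weights favor some snapshots, their features dominate the context vector.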

3.3.4. Output and Loss Function

The decoder TCN outputs $Z \in \mathbb{R}^{M \times O}$, where M denotes the total number of nodes and O is the number of hidden neurons of the decoder TCN. A fully connected layer then outputs the predicted adjacency matrix $\hat{A}_{t+1}$:

where $W_o$ and $b_o$ denote the learnable weight matrix and bias vector, respectively.

The loss function compares the prediction matrix $\hat{A}_{t+1}$ with the first-order adjacency matrix $A^{(1)}_{t+1}$ of the real network topology at the next moment and is defined as:

$$L = L_{mse} + \lambda L_{reg} \tag{14}$$

where $L_{mse}$ is the mean squared error loss, $L_{reg}$ is a penalty term on the model parameters that keeps them from becoming too large or too small during training, and $\lambda$ is a learnable parameter. The mean squared error is minimized by optimizing the model parameters $\Theta$. $L_{mse}$ is calculated as:

where $f(\cdot)$ is the predictive mapping function of the model, and $Y_t = f(X_t) + e_t$, where $e_t$ is the prediction error.
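The output layer and the loss can be sketched as below. The sigmoid activation and the squared-L2 form of the parameter penalty are assumptions (the text states only that a fully connected layer produces the prediction and that a penalty term is added), and `lam` is a fixed float here although the paper treats it as learnable. Function names are hypothetical.

```python
import math
from typing import List, Sequence

Matrix = List[List[float]]


def sigmoid(v: float) -> float:
    """Numerically stable logistic function."""
    if v >= 0:
        return 1.0 / (1.0 + math.exp(-v))
    e = math.exp(v)
    return e / (1.0 + e)


def fully_connected(z: Matrix, w: Matrix, b: Sequence[float]) -> Matrix:
    """z: M x O decoder output; w: O x M weights; b: length-M bias -> M x M scores in (0, 1)."""
    return [[sigmoid(sum(z_row[o] * w[o][j] for o in range(len(w))) + b[j])
             for j in range(len(b))] for z_row in z]


def loss(pred: Matrix, target: Matrix, params: Sequence[float], lam: float) -> float:
    """Mean squared error over all entries plus lam times the squared L2 norm of params."""
    m = len(target)
    mse = sum((pred[i][j] - target[i][j]) ** 2
              for i in range(m) for j in range(m)) / (m * m)
    penalty = sum(p * p for p in params)
    return mse + lam * penalty
```

A prediction of 0.5 everywhere against a 0/1 target gives an MSE of 0.25, to which the weighted penalty is added.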

Time-series prediction usually assumes that the errors are uncorrelated. However, because of the temporal correlation in opportunistic networks, the errors are in fact auto-correlated, which increases the error of the final result and weakens model performance. The p-th-order auto-correlated error is:

$$e_t = \rho_1 e_{t-1} + \rho_2 e_{t-2} + \cdots + \rho_p e_{t-p} + \epsilon_t \tag{16}$$

where $\rho_k$ is the k-th auto-correlation coefficient, $\epsilon_t$ is the non-correlated error, and $|\rho_k| < 1$.

The first-order auto-correlation is the most significant term [6], because the correlation between $e_t$ and $e_{t-k}$ decreases as $k$ increases. Formula (16) can therefore be simplified to $e_t = \rho e_{t-1} + \epsilon_t$ with $\rho = \rho_1$. The error at moment T, $e_T = Y_T - f(X_T)$, can be obtained from the mapping function in Formula (15), and in the same way $e_{T-1} = Y_{T-1} - f(X_{T-1})$; substituting both into the simplified Formula (16) gives Formula (17):

$$Y_T - \rho Y_{T-1} = f(X_T) - \rho f(X_{T-1}) + \epsilon_T \tag{17}$$

where $\epsilon_T$ is the non-correlated error. Because $\rho$ is unknown, it is replaced by the value of a learnable parameter $\hat{\rho}$, which is trained jointly with the model, and the right-hand side of Formula (17) is approximated accordingly. Through this transformation, the propagation of errors along the time series is eliminated, so the current prediction is no longer affected by past errors. The target sequence takes the form of the left-hand side of Formula (17); since the input sequence and the target sequence in time-series prediction should have the same form, Formula (17) can be updated to Formula (18):

Therefore, the input sequence and the target sequence take the forms $X_T - \hat{\rho} X_{T-1}$ and $Y_T - \hat{\rho} Y_{T-1}$, respectively; the model predicts the transformed target, and the auto-correlated error $e_T$ is adjusted to the non-correlated error $\epsilon_T$.
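The effect of the first-order adjustment can be checked numerically: simulate errors that follow e_t = ρ e_{t-1} + ε_t, apply the transform s_t - ρ s_{t-1}, and the non-correlated errors ε_t are recovered. In the paper ρ is a trainable estimate applied to both the input and target sequences; here it is a fixed float on scalar sequences, and the helper names `ar1_transform` and `ar1_errors` are hypothetical.

```python
from typing import List, Sequence


def ar1_transform(seq: Sequence[float], rho: float) -> List[float]:
    """Return s_t - rho * s_{t-1} for t >= 1 (the first element has no predecessor)."""
    return [seq[t] - rho * seq[t - 1] for t in range(1, len(seq))]


def ar1_errors(eps: Sequence[float], rho: float) -> List[float]:
    """Simulate first-order auto-correlated errors e_t = rho * e_{t-1} + eps_t, with e_0 = eps_0."""
    errors = [eps[0]]
    for t in range(1, len(eps)):
        errors.append(rho * errors[-1] + eps[t])
    return errors
```

Applying `ar1_transform` to both the input and the target sequences gives the forms X_T - ρ̂ X_{T-1} and Y_T - ρ̂ Y_{T-1}; for matrix-valued snapshots the same operation would be applied entry by entry.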

4. Experiments

The proposed model is evaluated on three opportunistic network datasets and compared with time-series and autoencoder-based methods.

4.1. Datasets

To verify the effectiveness of the proposed model, we conduct extensive experiments on three opportunistic network datasets: ITC, MIT, and Infocom06 [25].

ITC (Imote-Traces-Cambridge) contains the traces of 50 students carrying small Bluetooth devices (iMotes) in office, conference, and urban environments over a 12-day period. The MIT dataset records the communication of students carrying cell phones on the MIT campus; the experiment collected nearly 9 months of communication records from 97 cell phones. Infocom06 contains the communication records of 97 participants at the IEEE Infocom conference over a period of 4 days. Information on the three datasets is given in Table 1.

Table 1.

Datasets used in this work.

4.2. Evaluation Metrics

The link prediction task can be regarded as a binary classification task. Therefore, in order to comprehensively evaluate the performance of the method, AUC, Precision, and F1-score are used to evaluate the performance of the algorithm. Three metrics are specifically defined below.

(1) Area Under the Curve (AUC) [26]: AUC is a widely used metric in binary classification that represents the area under the receiver operating characteristic (ROC) curve. The ROC curve plots the true positive rate against the false positive rate at various threshold settings. AUC provides an overall measure of the model’s ability to discriminate between positive and negative classes.

(2) Precision: Precision is the proportion of predicted links that actually exist. It is calculated as the ratio of true positive predictions to the sum of true positive and false positive predictions.

(3) F1-score: Because Precision alone cannot fully evaluate a model, the F1-score, the harmonic mean of precision and recall, is used as a complementary metric. Recall is the proportion of links in the test set that are correctly predicted.
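The three metrics can be computed from predicted link scores and 0/1 ground-truth labels as sketched below. The 0.5 decision threshold is an assumption, and AUC is computed as the probability that a randomly chosen positive outranks a randomly chosen negative (ties count one half), which equals the area under the ROC curve. Function names are hypothetical.

```python
from typing import Sequence, Tuple


def precision_recall_f1(scores: Sequence[float], labels: Sequence[int],
                        threshold: float = 0.5) -> Tuple[float, float, float]:
    """Threshold the scores and compare the predicted links with the true links."""
    tp = sum(1 for s, y in zip(scores, labels) if s >= threshold and y == 1)
    fp = sum(1 for s, y in zip(scores, labels) if s >= threshold and y == 0)
    fn = sum(1 for s, y in zip(scores, labels) if s < threshold and y == 1)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


def auc(scores: Sequence[float], labels: Sequence[int]) -> float:
    """Probability that a random positive is scored above a random negative (ties count 1/2)."""
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    if not pos or not neg:
        raise ValueError("AUC needs at least one positive and one negative")
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))
```

The pairwise AUC loop is quadratic in the number of samples; for large adjacency matrices a rank-based computation would be preferable.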

4.3. Results and Analysis

The experiments consist of four parts: deciding whether to include link frequency in the node attribute vector, determining the slice size, ablation studies, and comparing predictive performance with baseline models. The ablation studies validate the spatial-temporal attention and the auto-correlated error adjustment. In all experiments, each dataset is split into a training set and a test set at a 7:3 ratio.
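A sketch of a 7:3 split over the snapshot sequence. The paper does not say whether the split is chronological; keeping time order, as done here, avoids training on snapshots that come after the test period, but it is an assumption. The function name `chronological_split` is hypothetical.

```python
from typing import List, Sequence, Tuple, TypeVar

Item = TypeVar("Item")


def chronological_split(items: Sequence[Item], train_parts: int = 7,
                        test_parts: int = 3) -> Tuple[List[Item], List[Item]]:
    """Split a time-ordered sequence into a leading training part and a trailing test part."""
    cut = len(items) * train_parts // (train_parts + test_parts)  # integer arithmetic, no float rounding
    return list(items[:cut]), list(items[cut:])
```

With 10 snapshots the first 7 are used for training and the last 3 for testing.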

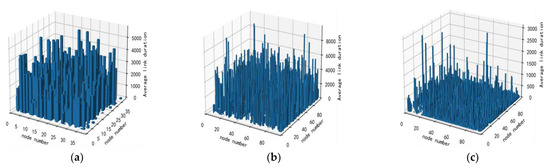

4.3.1. Decision on Whether to Consider Link Frequency

To decide whether to include link frequency in the attribute feature representation, we calculated the average link duration of all node pairs in the three datasets. As shown in Figure 7, the average link duration in the ITC and MIT datasets is markedly higher than that in the Infocom06 dataset.

Figure 7.

Average link duration on different datasets. (a) ITC; (b) MIT; (c) Infocom06.

The datasets contain excessively long links (longer than one hour) and node pairs with excessively low link frequency (fewer than three links), which are inconsistent with the characteristics of mobile device communication; we discard these abnormal communication records. To analyze different time slice sizes [27], we then counted the node pairs whose average link duration exceeds the slice size. Their proportion of all node pairs (after outlier removal) is shown in Table 2.

Table 2.

Proportion of node pairs with the average link duration longer than the slice size.
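The outlier filtering and the statistic reported in Table 2 can be sketched as follows. Applying the one-hour filter to individual contacts before applying the three-link minimum to each pair is an assumed order of operations, as are the contact tuple format and the function name `long_pair_proportion`.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple

Contact = Tuple[int, int, float, float]  # hypothetical: (node_i, node_j, start_s, end_s)


def long_pair_proportion(contacts: Iterable[Contact], slice_size: float,
                         max_duration: float = 3600.0, min_links: int = 3) -> float:
    """Share of retained node pairs whose average link duration exceeds slice_size."""
    by_pair: Dict[Tuple[int, int], List[float]] = defaultdict(list)
    for i, j, start, end in contacts:
        duration = end - start
        if duration > max_duration:
            continue  # drop abnormally long links (> 1 h)
        by_pair[(min(i, j), max(i, j))].append(duration)
    # drop node pairs with too few links (< 3)
    kept = [ds for ds in by_pair.values() if len(ds) >= min_links]
    if not kept:
        return 0.0
    longer = sum(1 for ds in kept if sum(ds) / len(ds) > slice_size)
    return longer / len(kept)
```

Running it once per candidate slice size reproduces one row of a table like Table 2 for a given dataset.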

As can be seen from Table 2, the proportion of node pairs whose average link duration exceeds 600 s reaches 72.76% on ITC and 36.40% on MIT. Thus, only link duration is considered for these two datasets, and the node attribute vector is constructed with Formula (2). On the Infocom06 dataset, node pairs whose average link duration exceeds 240 s account for only 10.77%; therefore, the attribute vector must also take link frequency into account and is constructed with Formula (3).

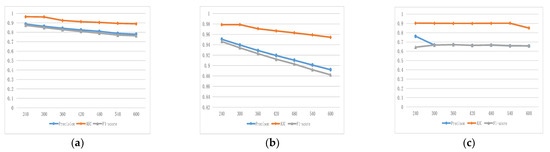

4.3.2. Determination of Slice Size

This experiment determines the optimal slice size of the STA-TCN prediction method on each dataset, evaluated with AUC, Precision, and F1-score. The prediction results depend strongly on the slice size, so an appropriate value must be selected. If the slice size is too small, the network changes little between consecutive snapshots and the input data become redundant; if it is too large, some of the evolutionary features of the network may be lost. Slice sizes from 4 min to 10 min are tested at one-minute intervals, and the results are shown in Figure 8:

Figure 8.

Prediction performance of different slice sizes. (a) ITC; (b) MIT; (c) Infocom06.

As shown in Figure 8, on the ITC and MIT datasets the evaluation metrics decrease as the slice size increases, so the smallest tested size, 240 s, performs best. On the Infocom06 dataset, Precision and AUC peak at a slice size of 240 s, while F1-score peaks at 360 s. Therefore, the slice size is set to 240 s for ITC, MIT, and Infocom06.

4.3.3. Validation of Spatial-Temporal Attention

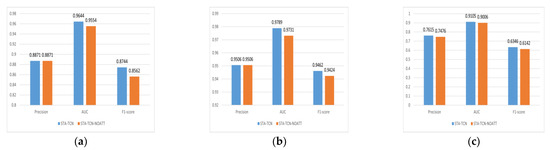

To validate the effectiveness of the spatial-temporal attention module, STA-TCN is compared on the three datasets with STA-TCN-NOATT, a variant without spatial-temporal attention. The slice size is set to 240 s for the ITC, MIT, and Infocom06 datasets, and all other conditions remain the same. The prediction results are shown in Figure 9:

Figure 9.

Comparison with and without spatial-temporal attention. (a) ITC; (b) MIT; (c) Infocom06.

In Figure 9, the y-axis shows the values of the evaluation metrics. On the ITC dataset, STA-TCN and STA-TCN-NOATT achieve the same Precision, but STA-TCN achieves higher AUC and F1-score, indicating that spatial-temporal attention improves performance on ITC. Similarly, on the MIT dataset, both methods achieve the same Precision, yet STA-TCN achieves higher AUC and F1-score, and the improvement is larger than on ITC. Finally, on the Infocom06 dataset, STA-TCN achieves higher Precision, AUC, and F1-score than STA-TCN-NOATT, indicating that spatial-temporal attention effectively enhances prediction performance. Hence, incorporating spatial-temporal attention improves model performance on all three datasets.

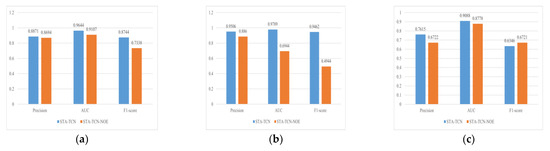

4.3.4. Validation of Non-Autocorrelated Errors

When constructing the loss function, the auto-correlated error is adjusted to a non-correlated error, because the internal and external environments introduce auto-correlated errors into the prediction method and these errors affect the prediction results. To verify the effectiveness of this adjustment, STA-TCN is compared with STA-TCN-NOE, a variant that does not adjust the auto-correlated error. On the ITC, MIT, and Infocom06 datasets, the slice size is set to 240 s and all other conditions are identical; the experimental results are shown in Figure 10:

Figure 10.

Comparison with and without auto-correlated error adjustment. (a) ITC; (b) MIT; (c) Infocom06.

The results in Figure 10 show that STA-TCN outperforms STA-TCN-NOE on the ITC and MIT datasets, with a more pronounced improvement on MIT. On Infocom06, STA-TCN-NOE achieves a higher F1-score than STA-TCN, but its Precision and AUC are lower. Therefore, adjusting the auto-correlated error improves model performance overall.

4.3.5. Comparison of Link Prediction Performance

To ensure the reliability of the experimental results, we conducted 100 rounds of training and testing for each method on the three datasets and compared the average values, as shown in Table 3:

Table 3.

Comparison of our method with other methods.

The compared methods consist of time-series models and their variants. As shown in Table 3, compared with the best baseline methods on the AUC metric, STA-TCN improves the score by 0.2–2% on the three datasets. Its Precision is at an intermediate level on all three datasets, and on the F1 metric it ranks above average on ITC, second on MIT, and first on Infocom06. Temporal models such as TCN, LSTM, DynRNN, and DynAERNN, as well as E-LSTM-D, which combines a temporal model with an autoencoder, only learn the evolution patterns of links in the opportunistic network; they do not consider the interactions between nodes and ignore the spatial dependencies among them, which leads to information loss. AGCRN, which combines a GNN with an RNN, uses an adaptive adjacency matrix to construct initial features but cannot learn the rich latent features of nodes. Our method fully extracts the original features of the opportunistic network through the state matrix and integrates temporal and spatial influences through the spatial-temporal attention mechanism, so STA-TCN achieves better overall results than the other methods.

On the Infocom06 dataset, STA-TCN achieved the highest AUC and F1 values and the second-highest Precision; on the MIT dataset, it achieved the highest AUC and the second-highest F1 value. STA-TCN therefore performs best on Infocom06 and second best on MIT. On the ITC dataset, however, it does not perform as well as single temporal models in Precision and F1, which is likely because ITC is smaller than the other two datasets, so the state matrix and the spatial-temporal attention may introduce redundant information.

Overall, our method achieved the highest AUC on all three datasets. AUC measures the model’s ability to distinguish between positive and negative samples and is a good indicator of overall model performance, with higher values indicating better performance. In addition, our method generally performs at an above-average level on the other evaluation metrics. We therefore conclude that our method improves on the baseline methods.

5. Conclusions

In this paper, we proposed STA-TCN, a link prediction method based on a temporal convolutional network and a spatial-temporal attention mechanism. A state matrix is used to capture the latent node attribute information in the opportunistic network, and the spatial-temporal attention mechanism is employed to capture its spatial-temporal dependencies. During prediction, the auto-correlated error is transformed into a non-correlated error to enhance the model’s predictive capability. On three real opportunistic network datasets, STA-TCN achieves superior AUC as well as good Precision and F1-score values. Therefore, the proposed model can effectively improve the performance of link prediction in opportunistic networks. However, it does not account for the heterogeneity of real-world networks; future research will extend the study to heterogeneous networks for more application scenarios.

Author Contributions

Conceptualization, J.S.; writing—original draft preparation, Y.L.; writing—review and editing, J.S.; software, J.L. and Y.L.; methodology, J.L.; visualization, Y.L. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the National Natural Science Foundation of China (Nos. 62062050 and 62362052) and by the Innovation Foundation for Postgraduate Student of Jiangxi Province (No. YC2023-100).

Data Availability Statement

The datasets used in this publication are available at https://www.crawdad.org/ (accessed on 21 June 2023).

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Pirozmand, P.; Wu, G.; Jedari, B.; Xia, F. Human mobility in opportunistic networks: Characteristics, models and prediction methods. J. Netw. Comput. Appl. 2014, 42, 45–58.

- Trifunovic, S.; Kouyoumdjieva, S.T.; Distl, B.; Pajevic, L.; Karlsson, G.; Plattner, B. A Decade of Research in Opportunistic Networks: Challenges, Relevance, and Future Directions. IEEE Commun. Mag. 2017, 55, 168–173.

- Rajaei, A.; Chalmers, D.; Wakeman, I.; Parisis, G. Efficient Geocasting in Opportunistic Networks. Comput. Commun. 2018, 127, 105–121.

- Avoussoukpo, C.B.; Ogunseyi, T.B.; Tchenagnon, M. Securing and Facilitating Communication within Opportunistic Networks: A Holistic Survey. IEEE Access 2021, 9, 55009–55035.

- Xu, D.; Cheng, W.; Luo, D.; Liu, X.; Zhang, X. Spatio-Temporal Attentive RNN for Node Classification in Temporal Attributed Graphs. In Proceedings of the IJCAI’19, Macao, China, 10–16 August 2019; pp. 3947–3953.

- Sun, F.; Lang, C.; Boning, D. Adjusting for Autocorrelated Errors in Neural Networks for Time Series. In Proceedings of the Thirty-Fifth Conference on Neural Information Processing Systems, Online, 6–14 December 2021; pp. 29806–29819.

- Wang, W.; Feng, Y.; Jiao, P.; Yu, W. Kernel framework based on non-negative matrix factorization for networks reconstruction and link prediction. Knowl.-Based Syst. 2017, 137, 104–114.

- Lv, L.; Bardou, D.; Hu, P.; Liu, Y.; Yu, G. Graph regularized nonnegative matrix factorization for link prediction in directed temporal networks using PageRank centrality. Chaos Solitons Fractals 2022, 159, 112107.

- Nasiri, E.S.; Berahmand, K.; Li, Y. Robust graph regularization nonnegative matrix factorization for link prediction in attributed networks. Multimed. Tools Appl. 2022, 82, 3745–3768.

- Gou, F.; Wu, J. Triad link prediction method based on the evolutionary analysis with IoT in opportunistic social networks. Comput. Commun. 2022, 181, 143–155.

- Tran, C.; Shin, W.; Spitz, A.; Gertz, M. DeepNC: Deep Generative Network Completion. IEEE Trans. Pattern Anal. Mach. Intell. 2022, 44, 1837–1852.

- Li, L.; Wen, Y.; Bai, S.; Liu, P. Link prediction in weighted networks via motif predictor. Knowl.-Based Syst. 2022, 242, 108402.

- Huang, H.; Wei, Q.; Hu, M.; Feng, Y. Links Prediction Based on Hidden Naive Bayes Model. Adv. Eng. Sci. 2016, 48, 150–157.

- Shu, J.; Li, R.; Xiong, T.; Liu, L.; Sun, L. Link Prediction Based on Learning Automaton and Firefly Algorithm. Adv. Eng. Sci. 2021, 53, 133–140.

- Goyal, P.; Chhetri, S.R.; Canedo, A. dyngraph2vec: Capturing network dynamics using dynamic graph representation learning. Knowl.-Based Syst. 2020, 187, 104816.

- Pareja, A.; Domeniconi, G.; Chen, J.; Ma, T.; Suzumura, T.; Kanezashi, H.; Kaler, T.; Leiserson, C.E. EvolveGCN: Evolving Graph Convolutional Networks for Dynamic Graphs. arXiv 2019, arXiv:1902.10191.

- Chen, J.; Wang, X.; Xu, X. GC-LSTM: Graph convolution embedded LSTM for dynamic network link prediction. Appl. Intell. 2022, 52, 7513–7528.

- Chen, J.; Zhang, J.; Xu, X.; Fu, C.; Zhang, D.; Zhang, Q.; Xuan, Q. E-LSTM-D: A Deep Learning Framework for Dynamic Network Link Prediction. IEEE Trans. Syst. Man Cybern. Syst. 2021, 51, 3699–3712.

- Liu, L.; Yu, Z.; Zhu, H. A Link Prediction Method Based on Gated Recurrent Units for Mobile Social Network. J. Comput. Res. Dev. 2023, 60, 705–716.

- Yin, Y.; Wu, Y.; Yang, X.; Zhang, W.; Yuan, X. SE-GRU: Structure Embedded Gated Recurrent Unit Neural Networks for Temporal Link Prediction. IEEE Trans. Netw. Sci. Eng. 2022, 9, 2495–2509.

- Bai, L.; Yao, L.; Li, C.; Wang, X.; Wang, C. Adaptive Graph Convolutional Recurrent Network for Traffic Forecasting. In Proceedings of the 34th International Conference on Neural Information Processing Systems, Vancouver, BC, Canada, 6–12 December 2020; pp. 17804–17815.

- Cirstea, R.; Kieu, T.; Guo, C.; Yang, B.; Pan, S.J. EnhanceNet: Plugin Neural Networks for Enhancing Correlated Time Series Forecasting. In Proceedings of the 2021 IEEE 37th International Conference on Data Engineering (ICDE), Chania, Greece, 19–22 April 2021; pp. 1739–1750.

- Guo, S.; Lin, Y.; Feng, N.; Song, C.; Wan, H. Attention Based Spatial-Temporal Graph Convolutional Networks for Traffic Flow Forecasting. In Proceedings of the Thirty-Third AAAI Conference on Artificial Intelligence and Thirty-First Innovative Applications of Artificial Intelligence Conference and Ninth AAAI Symposium on Educational Advances in Artificial Intelligence, Honolulu, HI, USA, 27 January–1 February 2019; pp. 922–929.

- Lea, C.; Flynn, M.D.; Vidal, R.; Reiter, A.; Hager, G.D. Temporal Convolutional Networks for Action Segmentation and Detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 1003–1012.

- Kotz, D.; Henderson, T. CRAWDAD: A Community Resource for Archiving Wireless Data at Dartmouth. IEEE Pervasive Comput. 2005, 4, 12–14.

- Lü, L.; Zhou, T. Link prediction in complex networks: A survey. Phys. A Stat. Mech. Its Appl. 2011, 390, 1150–1170.

- Shu, J.; Zhang, X.; Liu, L.; Yang, Z. Multi-nodes Link Prediction Method Based on Deep Convolution Neural Networks. Acta Electron. Sin. 2018, 46, 2970–2977.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).