Supporting Immersive Video Streaming via V2X Communication

Abstract

1. Introduction

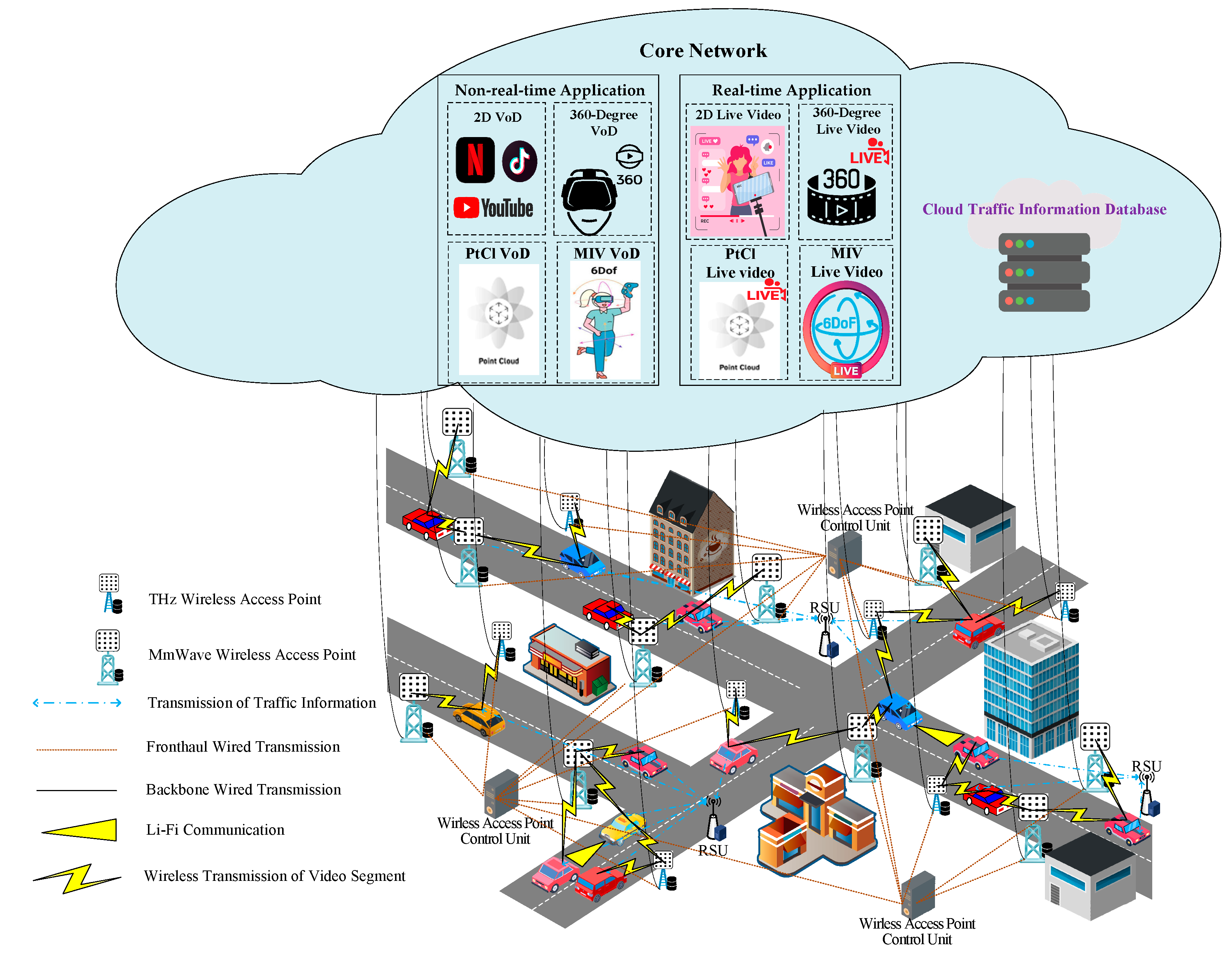

- This study employs THz and mmWave frequency bands and introduces cell-free (CF) XL-MIMO systems to further extend coverage, providing vehicle passengers with efficient and stable bandwidth in a comprehensive vehicular network environment.

- This study adopts different encoding technologies for various types of multimedia applications and dynamically adjusts video quality based on different network conditions to optimize video transmission efficiency.

- In planning bandwidth allocation, the characteristics of applications and user levels are considered, with bandwidth being prioritized for real-time applications. When similar applications compete for bandwidth, premium users are given priority access. Additionally, when network bandwidth is insufficient, V2V communication technology can be utilized to access bandwidth from non-busy road sections, thereby effectively improving the overall utilization of bandwidth resources.

2. Bandwidth Allocation for Diverse Streaming Services in V2X Communication

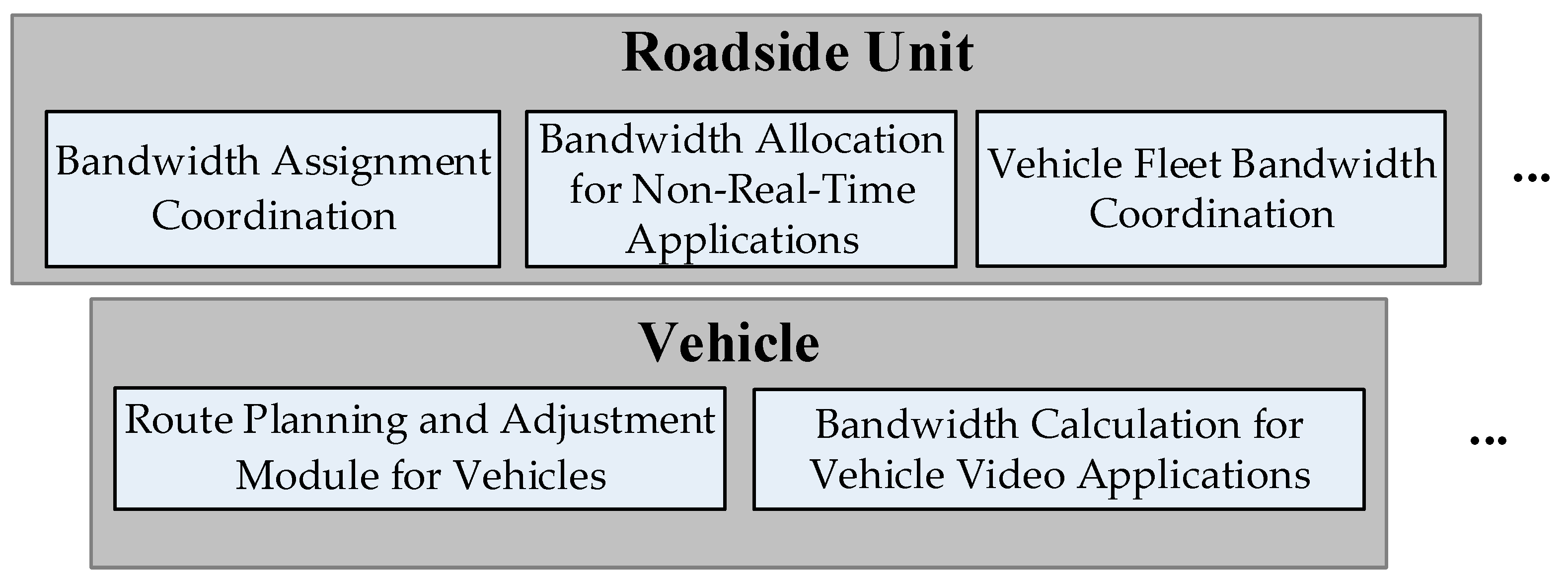

2.1. Route Planning and Adjustment Module for Vehicles

- Step 1:

- Before the vehicle departs, real-time traffic information is retrieved from the cloud. Based on the destination set by the vehicle passenger and the average travel time reported by the RSU of each road segment, the Dijkstra algorithm selects the shortest driving route between the starting point and the final destination of vehicle σ.

- Step 2:

- The arrival time of the vehicle at each road section is calculated, and the estimates are transmitted to the RSUs along the route. Starting from the current time, the arrival time of vehicle σ at the i-th intersection on its route is obtained from two terms for each segment: the time the vehicle needs to pass through the segment connecting two consecutive intersections, and the time it loses to traffic control before entering that segment, both evaluated at the time the vehicle arrives at the first of the two intersections. Both terms are predicted using support vector regression.

- Step 3:

- This module operates in the background execution mode.

- Step 4:

- Whenever the vehicle arrives at the next intersection on the route, correct the estimated passing times for each subsequent segment. The correction starts from the time at which σ arrives at the intersection that begins its current segment and, for each remaining segment, again uses the time needed to pass through the segment and the time lost to traffic control before entering it.

- Step 5:

- After transmitting the corrected arrival times at each intersection to the management RSUs of each segment, return to Step 3 to continue execution.

- Step 6:

- If the vehicle passenger temporarily changes the itinerary, recalculate the shortest driving route using the Dijkstra algorithm. The recalculation runs from the next intersection on σ’s current driving route to the destination and also uses the index value of the previous intersection of the current segment, the length of the current segment, and the distance from σ’s current position to the next intersection. Return to Step 2 to continue execution.
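The route selection in Step 1 and the arrival-time estimation in Step 2 can be sketched as follows. This is a minimal illustration, not the authors' implementation: the graph layout, the function names `shortest_route` and `arrival_times`, and the two predictor callbacks (which stand in for the support vector regression models) are assumptions made for the example.

```python
import heapq


def shortest_route(graph, source, destination):
    """Dijkstra over graph[u][v] = average travel time of segment u -> v (seconds)."""
    dist = {source: 0.0}
    prev = {}
    heap = [(0.0, source)]
    visited = set()
    while heap:
        d, u = heapq.heappop(heap)
        if u in visited:
            continue
        visited.add(u)
        if u == destination:
            break
        for v, w in graph.get(u, {}).items():
            nd = d + w
            if nd < dist.get(v, float("inf")):
                dist[v] = nd
                prev[v] = u
                heapq.heappush(heap, (nd, v))
    if destination not in dist:
        return None, float("inf")
    # Walk the predecessor links back from the destination.
    route = [destination]
    while route[-1] != source:
        route.append(prev[route[-1]])
    route.reverse()
    return route, dist[destination]


def arrival_times(route, now, pass_time, wait_time):
    """Accumulate the arrival time at each intersection of the route.

    pass_time(u, v, t) and wait_time(u, v, t) are hypothetical predictors
    (placeholders for the SVR models) evaluated at arrival time t at u.
    """
    times = [now]
    for u, v in zip(route, route[1:]):
        t = times[-1]
        times.append(t + wait_time(u, v, t) + pass_time(u, v, t))
    return times


# Hypothetical four-intersection road network (travel times in seconds).
graph = {"A": {"B": 60, "C": 150}, "B": {"C": 60, "D": 200}, "C": {"D": 60}, "D": {}}
route, total = shortest_route(graph, "A", "D")
eta = arrival_times(route, 0.0,
                    pass_time=lambda u, v, t: graph[u][v],
                    wait_time=lambda u, v, t: 10.0)
```

Re-running `arrival_times` from the current intersection with the actual arrival time corresponds to the correction in Step 4, and calling `shortest_route` again from the next intersection corresponds to the re-planning in Step 6.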

2.2. Bandwidth Calculation Module for Vehicle Video Applications

- Step 1:

- When the vehicle passenger initiates the video streaming application, this module retrieves the video specifications from the video streaming application software provider’s server. If the video type is a VR application, the user’s historical head motion trajectory is uploaded to the server. This allows the server to predict the FoV and return the specifications required for the requested video’s base layer and enhancement layer.

- Step 2:

- The estimated arrival times at each section of the route, along with the video application requirements and specifications, are uploaded to the RSUs along the route. Subsequently, this module requests the governing RSU over the current road segment to activate its “Bandwidth Assignment Coordination” module and awaits the results.

- Step 3:

- If the results received in the previous step indicate that the required bandwidth can be satisfied, proceed to the next step. Otherwise, proceed to Step 9.

- Step 4:

- If the video quality adjustments have reached the system-set limit, proceed to Step 10; otherwise, continue with the execution.

- Step 5:

- If the video application type is not 6DoF PtCl video, or if the system has reached its maximum allowable quantity of 6DoF PtCl videos, proceed to Step 8. Otherwise, continue with the execution.

- Step 6:

- If the 6DoF PtCl video is live video, increase the point density of the user’s video and return to Step 2. Otherwise, continue to the next step. The adjustment involves the following quantities: the point densities that vehicle passenger σ uploads and downloads for 6DoF live video at a given time; the management RSUs whose upload or download bandwidth is insufficient while vehicle σ passes through the segment connecting two consecutive intersections; the current and ending times of the application; the index values of the i-th intersection and of the destination of the driving route; the system-set maximum point density for vehicle passengers; and the point density required at the level set by the vehicle passenger.

- Step 7:

- Increase the point density of the VoD PtCl for the user, then return to Step 2. The adjustment involves the resolutions of the base-layer and enhancement-layer PtCl that the vehicle passenger uploads for 6DoF VoD, the base-layer and enhancement-layer resolutions that the passenger downloads for 6DoF VoD, the system-set maximum resolution for videos of vehicle passengers, and the bandwidth requirement of the video resolution at the level specified by the vehicle passenger.

- Step 8:

- If the video resolution has not reached the system-set maximum, increase the video resolution and return to Step 2. Otherwise, proceed to Step 10. The adjustment involves the base-layer and enhancement-layer resolutions that the vehicle passenger uploads, the base-layer and enhancement-layer resolutions that the passenger downloads, the system-set maximum resolution for videos of vehicle passengers, and the bandwidth requirement of the video resolution at the specified level.

- Step 9:

- If the bandwidth demand of the application is not yet satisfied and the video resolution has not reached the system-set minimum, decrease the video resolution and return to Step 2. Otherwise, proceed to the next step.

- Step 10:

- Notify the vehicle passenger of the returned result. If the result indicates that the bandwidth demand can be satisfied, continue execution. If not, end this module.

- Step 11:

- If the video application type used is non-real-time, check the download status of video segments. If all video segments for that application have been preloaded, end this module.

- Step 12:

- This module enters background execution mode. When the vehicle updates its driving path or arrives at the next road segment along its route, return to Step 2 to continue execution.
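The raise-or-lower loop in Steps 3 to 9 can be illustrated with a small sketch. The ladder values below are the VoD minimum-bandwidth figures reported in the paper's bandwidth table; everything else is an assumption for illustration: the function name `adapt_level`, the `can_satisfy` callback (which stands in for the reply of the “Bandwidth Assignment Coordination” module), and the `max_adjustments` cap (which stands in for the system-set adjustment limit).

```python
# (label, minimum required bandwidth in Mbps) for VoD, lowest quality first.
VOD_LADDER_MBPS = [("1080P", 1.644), ("2K", 3.288), ("4K", 7.193), ("8K", 16.443)]


def adapt_level(ladder, start, can_satisfy, max_adjustments=8):
    """Return (level index, satisfied flag) after raising or lowering quality.

    can_satisfy(mbps) is a hypothetical stand-in for asking the governing RSU
    whether the route can carry the given bandwidth.
    """
    level = start
    for _ in range(max_adjustments):
        if can_satisfy(ladder[level][1]):
            nxt = level + 1
            if nxt < len(ladder) and can_satisfy(ladder[nxt][1]):
                level = nxt          # demand met: try the next higher quality
                continue
            return level, True       # highest quality the route can carry
        if level == 0:
            return level, False      # even the minimum quality is not met
        level -= 1                   # demand not met: lower quality and retry
    return level, can_satisfy(ladder[level][1])


# Example: the route can carry 8 Mbps, so 4K (7.193 Mbps) is the best fit.
available = 8.0
level, ok = adapt_level(VOD_LADDER_MBPS, 0, lambda mbps: mbps <= available)
```

Starting above the sustainable level (for example at 8K) makes the same function step down until the request fits, which mirrors the path through Step 9.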

2.3. Bandwidth Assignment Coordination

- Step 1:

- After receiving the bandwidth requirements for the vehicle passengers’ application, categorize the type of video application.

- Step 2:

- If the application is real-time video, continue to the next step. Otherwise, activate the “Bandwidth Allocation for Non-Real-Time Applications” module. Then conclude the execution of this module.

- Step 3:

- Based on the specifications of the live video application and the estimated time taken to traverse road sections, calculate the bandwidth required for the real-time application along the vehicle route.

- Step 4:

- Determine whether any CF XL-MIMO access point(s) within the communication range of the vehicle can supply bandwidth for the live video application. A binary flag marks the real-time application as bidirectional or unidirectional, a second binary indicator marks it as a 6DoF PtCl application, and a third indicates that its encoding also includes the enhancement layer. The check covers the period from the current time to the end time of the application and the segments from the i-th intersection to the destination along the driving route. For each time slot, it compares the upload and download bandwidth that the vehicle can be allocated for bidirectional video while passing through the transmission range of a CF XL-MIMO access point with the demand of the application. That demand is determined by the uploaded and downloaded point density for 6DoF PtCl video, by the uploaded and downloaded resolutions of the base layer and the enhancement layer, and by the designated frame rate per second. Each segment is associated with the index value of its management RSU. When the resulting upload or download value is less than zero, the bandwidth provided by the CF XL-MIMO access point(s) for that segment cannot meet the minimum bandwidth requirement of the application; the management RSU of the segment is then recorded, separately for upload and download, as the place where the bandwidth is insufficient at that time slot. The point density of 6DoF PtCl video is divided into levels, the highest of which is the system-set upper limit for vehicle passengers, and the passenger's requirement is set at one of these levels. Likewise, the screen resolution of video streaming for other categories of video has an upper-limit level, and the resolution requirement is set by the vehicle passenger at one of these levels.

- Step 5:

- If the bandwidth requirements of the video can be met, notify the CF XL-MIMO access point control unit to provide the bandwidth expected by the vehicle passenger, return the results to the vehicle passenger, and end the execution of this module. If not, continue.

- Step 6:

- To meet the bandwidth requirements of real-time applications, the module reallocates the bandwidth currently assigned to non-real-time applications during the same time slot. To address any bandwidth deficit that this adjustment leaves for the non-real-time applications, the “Bandwidth Allocation for Non-Real-Time Applications” module is subsequently activated. For each non-real-time application, the reallocation considers the CF XL-MIMO access point that originally allocated bandwidth to it and the upload and download bandwidth that can be reassigned from it to the real-time application. If the updated upload or download values are still less than zero, the reassigned bandwidth cannot yet meet the bandwidth requirements of the real-time application.

- Step 7:

- If the expected bandwidth of the real-time application has been met, notify the vehicle passenger and end the execution of this module; otherwise, continue to the next step.

- Step 8:

- If the passenger is a premium user, proceed to the next step; otherwise, skip to Step 12.

- Step 9:

- Allocate the bandwidth beyond the minimum required by the real-time applications of regular users to the real-time application of the premium user. For each real-time application ς of a regular user, the reallocation considers the CF XL-MIMO access point that originally allocated bandwidth to ς and the upload and download bandwidth that can be reassigned from ς to the premium user’s application. If the updated upload or download values are still less than zero, the bandwidth reassigned from ς cannot yet meet the requirements of the premium user’s application.

- Step 10:

- If the previous step shows that either the upload or the download bandwidth reassigned from ς is greater than zero, part of the bandwidth of the regular user’s real-time application ς has been reassigned to the premium user. In this case, initiate the “Vehicle Fleet Bandwidth Coordination” module to reallocate bandwidth for ς.

- Step 11:

- At this stage, if both the upload and the download bandwidth deficits are zero, the expected bandwidth requirement of the requested application has been met. Notify the vehicle passenger and end the execution of this module. Otherwise, proceed to the next step.

- Step 12:

- Activate the “Vehicle Fleet Bandwidth Coordination” module to identify CF XL-MIMO access points in less congested road sections that can allocate bandwidth for the requesting application.

- Step 13:

- Notify the vehicle passenger of the results and end the execution of this module.
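The priority order in Steps 4 to 12 (free capacity first, then bandwidth held by non-real-time applications, then the surplus above the minimum of regular users' real-time applications when the requester is a premium user) can be sketched as below. The data layout and the names `Allocation` and `admit_realtime` are hypothetical, and a single direction (upload or download) at a single access point and time slot is assumed for brevity.

```python
from dataclasses import dataclass


@dataclass
class Allocation:
    app: str
    realtime: bool
    premium: bool
    allocated: float  # Mbps currently assigned in this time slot
    minimum: float    # Mbps the application needs at its lowest quality


def admit_realtime(free, need, premium, allocations):
    """Return (remaining deficit, {app: Mbps taken}) for a real-time request."""
    deficit = max(0.0, need - free)
    taken = {}

    def take(alloc, amount):
        nonlocal deficit
        amount = min(amount, deficit)
        if amount > 0:
            alloc.allocated -= amount
            taken[alloc.app] = taken.get(alloc.app, 0.0) + amount
            deficit -= amount

    # Step 6: reclaim bandwidth assigned to non-real-time applications.
    for a in allocations:
        if not a.realtime:
            take(a, a.allocated)
    # Step 9: a premium requester may also take what regular users'
    # real-time applications hold above their minimum requirement.
    if premium:
        for a in allocations:
            if a.realtime and not a.premium:
                take(a, a.allocated - a.minimum)
    # A positive deficit would be handed to fleet coordination (Step 12).
    return deficit, taken


def sample_allocations():
    return [
        Allocation("vod", realtime=False, premium=False, allocated=3.0, minimum=1.0),
        Allocation("live_regular", realtime=True, premium=False, allocated=9.0, minimum=6.0),
    ]


premium_result = admit_realtime(2.0, 10.0, True, sample_allocations())
regular_result = admit_realtime(2.0, 10.0, False, sample_allocations())
```

Applications listed in `taken` are the ones that would subsequently need the “Bandwidth Allocation for Non-Real-Time Applications” or “Vehicle Fleet Bandwidth Coordination” module to recover their own deficit.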

2.4. Bandwidth Allocation for Non-Real-Time Applications

- Step 1:

- Considering the specifications of the base and enhancement layers of the non-real-time video, network conditions, the remaining travel time of the vehicle, and the number of remaining segments stored in the vehicle’s memory, calculate the bandwidth allocated to the non-real-time application v for the remaining route, subject to a set of constraints. The calculation uses the following quantities: the number of video segments of v that this module allows to be pre-downloaded; the time at which each video segment s of v is pre-downloaded; the total number of video segments of v; the index of the first video segment that has not yet been downloaded; the bandwidth that a CF XL-MIMO access point provides for pre-downloading at a given time over a given duration; the number of time slots for consecutive downloading of video segments within the communication range of that access point; the time needed to download each video segment of v; the resolutions of the base and enhancement layers of v; the frame rate per second; the current time; the start and end times of playing v; the lower limit of traffic flow at which a road segment is considered congested; the traffic flow on the segment connecting two road sections; the resolution requirement at the level of video screen set by the vehicle passenger; and the pre-downloaded video segments stored in the buffer.

- Step 2:

- Examine whether the CF XL-MIMO access points from the previous step can meet the minimum bandwidth requirements for the base and enhancement layers of v, taking into account the time duration of pre-downloaded video stored in the buffer.

- Step 3:

- If the check in the previous step shows no bandwidth deficit, the video segments for both the base and enhancement layers of v can be pre-downloaded. In this case, the CF XL-MIMO access point control unit is notified, and the result is returned to the vehicle passenger, concluding the execution of this module. Otherwise, proceed to the next step.

- Step 4:

- Calculate the bandwidth available to the non-real-time application over the remaining travel distance based on the specifications of the base layer only. This calculation depends on Equations (37)–(45).

- Step 5:

- If the previous step can meet the bandwidth requirements for the base layer of , then notify the CF XL-MIMO access point control unit to provide the required bandwidth for the base layer to the vehicle, and return the result to the vehicle passenger, concluding the execution of this module. Otherwise, continue to the next step.

- Step 6:

- If the vehicle passenger is categorized as a regular user, proceed to Step 9.

- Step 7:

- Consult the RSUs of the remaining road segments along the route to reallocate the bandwidth originally assigned to the non-real-time applications of regular users to the premium user. This calculation depends on Equations (37)–(45) and, for each non-real-time application of a regular user, uses the bandwidth that the CF XL-MIMO access point provides for pre-downloading that application at a given time over a given duration.

- Step 8:

- If the bandwidth obtained in the previous step meets the bandwidth requirements, return the result to the vehicle passenger, and conclude the execution of this module. Otherwise, continue to the next step.

- Step 9:

- Activate the “Vehicle Fleet Bandwidth Coordination” module to locate CF XL-MIMO access points on less congested road segments for non-real-time applications experiencing bandwidth deficits. Transfer bandwidth to these applications via inter-vehicle communication and notify the vehicle passenger of the outcome.
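The feasibility checks in Steps 2 to 5 can be approximated by a deadline test: each remaining segment must finish downloading before playback reaches it, first with both layers and then with the base layer alone. The sketch below is an illustration under simplifying assumptions (piecewise-constant bandwidth per time slot, equal-length segments, downloads in playback order); the function names and parameters are hypothetical, and the bit rates in the example are the 4K VoD base-layer and enhancement-layer figures from the paper's bandwidth table.

```python
def cumulative_mb(slot_mbps, slot_s, t):
    """Megabits downloadable by time t given per-slot bandwidth (Mbps)."""
    total = 0.0
    for i, bw in enumerate(slot_mbps):
        start = i * slot_s
        if t <= start:
            break
        total += bw * min(slot_s, t - start)
    return total


def can_predownload(slot_mbps, slot_s, seg_s, bitrate_mbps, n_segments, buffered_s):
    """True if every remaining segment arrives before playback needs it.

    buffered_s is the playback time already stored in the buffer, so the
    k-th remaining segment is needed at buffered_s + k * seg_s.
    """
    seg_mb = seg_s * bitrate_mbps
    for k in range(n_segments):
        deadline = buffered_s + k * seg_s
        if cumulative_mb(slot_mbps, slot_s, deadline) + 1e-9 < (k + 1) * seg_mb:
            return False
    return True


def choose_layers(slot_mbps, slot_s, seg_s, base_mbps, enh_mbps, n_segments, buffered_s):
    """Try base + enhancement first, then base only, else report a deficit."""
    if can_predownload(slot_mbps, slot_s, seg_s, base_mbps + enh_mbps, n_segments, buffered_s):
        return "base+enhancement"
    if can_predownload(slot_mbps, slot_s, seg_s, base_mbps, n_segments, buffered_s):
        return "base"
    return "deficit"


# 4K VoD: base layer 5.036 Mbps, enhancement layer 2.157 Mbps.
plan_fast = choose_layers([8, 8, 8], 10, 10, 5.036, 2.157, 3, 10)
plan_slow = choose_layers([6, 6, 6], 10, 10, 5.036, 2.157, 3, 10)
plan_none = choose_layers([2, 2, 2], 10, 10, 5.036, 2.157, 3, 10)
```

A `"deficit"` result corresponds to the cases that Steps 6 to 9 hand over to premium-user reallocation or to the “Vehicle Fleet Bandwidth Coordination” module.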

2.5. Vehicle Fleet Bandwidth Coordination

- Step 1:

- The RSU connects the vehicle requesting bandwidth with other vehicles within the V2V communication range to form a fleet, subject to a set of constraints. Binary flags indicate whether the application is a real-time video and whether it is a bidirectional video. The formulation uses the following quantities: the number of fleets; the f-th fleet that relays bandwidth for the application, and its length; the index of the vehicle at the head of the fleet; vehicle σ, located at the end of the fleet; the CF XL-MIMO access point covering the communication range of the fleet head; the destination of σ; the k-th road segment, connecting two consecutive intersections, on which the application faces a bandwidth deficit; the upload and download bandwidth deficits of a real-time video at a given time while passing through that segment; the index s of the road segment where non-real-time video segments cannot be pre-downloaded at that time; the start and end playback times of the video; the remaining bandwidth that can be uploaded and downloaded from one vehicle to the next at a given time; and the total bandwidth required to upload and download between the two vehicles at that time.

- Step 2:

- If the fleet cannot be established, record the bandwidth deficit of the application and proceed to Step 4. Otherwise, continue to the next step.

- Step 3:

- Once the fleet is formed, bandwidth is forwarded to v using V2V communication. This module evaluates whether the CF XL-MIMO access points supporting the fleet meet v’s bandwidth requirements over the time period during which the application faces a bandwidth deficit. If the resulting upload value is negative, the real-time application does not obtain sufficient bandwidth for uploading video at that time. Similarly, if the download value is negative, the application does not obtain sufficient bandwidth for downloading video at that time. If the value for a non-real-time video segment is negative, the bandwidth for that segment is insufficient at that time.

- Step 4:

- If the fleet formed in Step 1 has met the expected bandwidth for the application after forwarding the bandwidth, notify the vehicle passenger of the result and terminate this module. Otherwise, notify the vehicle passenger of the result and enter background execution mode; at the next intersection on the vehicle’s route, the module resumes at Step 1.
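Fleet formation can be pictured as finding a relay chain from the requesting vehicle to a vehicle whose access point still has spare bandwidth. The sketch below is an assumption-laden illustration rather than the paper's formulation: vehicles sit at one-dimensional positions along a road, the chain is found with a breadth-first search, every V2V hop is given the same capacity, and the names `form_fleet` and `relayed_bandwidth` are hypothetical.

```python
from collections import deque


def form_fleet(positions, requester, v2v_range, spare_mbps):
    """Return the relay chain [head, ..., requester], or None if none exists.

    positions: {vehicle: position in metres}; spare_mbps: {vehicle: Mbps its
    access point can still offer}. Both layouts are assumptions.
    """
    prev = {requester: None}
    queue = deque([requester])
    while queue:
        u = queue.popleft()
        if u != requester and spare_mbps.get(u, 0.0) > 0:
            chain = []
            while u is not None:   # walk back from the fleet head
                chain.append(u)
                u = prev[u]
            return chain
        for v, pos in positions.items():
            if v not in prev and abs(pos - positions[u]) <= v2v_range:
                prev[v] = u
                queue.append(v)
    return None


def relayed_bandwidth(chain, spare_mbps, v2v_link_mbps, deficit):
    """Bandwidth the fleet can forward, limited by the head's spare capacity,
    the (assumed uniform) V2V link capacity, and the requested deficit."""
    if chain is None:
        return 0.0
    return min(deficit, spare_mbps[chain[0]], v2v_link_mbps)


positions = {"v0": 0, "v1": 80, "v2": 150, "v3": 400}
spare = {"v2": 5.0, "v3": 20.0}
chain = form_fleet(positions, "v0", 100, spare)
forwarded = relayed_bandwidth(chain, spare, 50.0, 8.0)
```

If `forwarded` is smaller than the deficit, the remainder corresponds to the negative values described in Step 3, and the module would retry at the next intersection as in Step 4.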

3. Simulation Results and Discussion

4. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Feng, B.; Feng, C.; Feng, D.; Wu, Y.; Xia, X.G. Proactive content caching scheme in urban vehicular networks. IEEE Trans. Commun. 2023, 71, 4165–4180. [Google Scholar] [CrossRef]

- Shin, Y.; Choi, H.S.; Nam, Y.; Cho, H.; Lee, E. Particle Swarm Optimization Video Streaming Service in Vehicular Ad-Hoc Networks. IEEE Access 2022, 10, 102710–102723. [Google Scholar] [CrossRef]

- Alaya, B.; Sellami, L. Multilayer video encoding for QoS managing of video streaming in VANET environment. ACM Trans. Multimed. Comput. Commun. Appl. (TOMM) 2022, 18, 1–19. [Google Scholar] [CrossRef]

- Nguyen, V.L.; Hwang, R.H.; Lin, P.C.; Vyas, A.; Nguyen, V.T. Towards the age of intelligent vehicular networks for connected and autonomous vehicles in 6G. IEEE Netw. 2022, 37, 44–51. [Google Scholar] [CrossRef]

- Guo, H.; Zhou, X.; Liu, J.; Zhang, Y. Vehicular intelligence in 6G: Networking, communications, and computing. Veh. Commun. 2022, 33, 100399. [Google Scholar] [CrossRef]

- Pourazad, M.T.; Doutre, C.; Azimi, M.; Nasiopoulos, P. HEVC: The new gold standard for video compression: How does HEVC compare with H. 264/AVC? IEEE Consum. Electron. Mag. 2012, 1, 36–46. [Google Scholar] [CrossRef]

- Bross, B.; Wang, Y.K.; Ye, Y.; Liu, S.; Chen, J.; Sullivan, G.J.; Ohm, J.R. Overview of the versatile video coding (VVC) standard and its applications. IEEE Trans. Circuits Syst. Video Technol. 2021, 31, 3736–3764. [Google Scholar] [CrossRef]

- ISO/IEC JTC 1/SC 29/WG 04 MPEG Video Coding. Verification Test Report on the Compression Performance of Low Complexity Enhancement Video Coding. Available online: https://lcevc.org/wp-content/uploads/MPEG-Verification-Test-Report-on-the-Compression-Performance-of-LCEVC-Meeting-MPEG-134-May-2021.pdf (accessed on 1 May 2024).

- Tu, Z.; Zong, T.; Xi, X.; Ai, L.; Jin, Y.; Zeng, X.; Fan, Y. Content adaptive tiling method based on user access preference for streaming panoramic video. In Proceedings of the 2018 IEEE International Conference on Consumer Electronics (ICCE), Las Vegas, NV, USA, 12–14 January 2018; IEEE: New York, NY, USA, 2018; pp. 1–4. [Google Scholar]

- Chen, Y.; Hannuksela, M.M.; Suzuki, T.; Hattori, S. Overview of the MVC+ D 3D video coding standard. J. Vis. Commun. Image Represent. 2014, 25, 679–688. [Google Scholar] [CrossRef]

- Li, L.; Wang, R.; Zhang, X. A tutorial review on point cloud registrations: Principle, classification, comparison, and technology challenges. Math. Probl. Eng. 2021, 2021, 1–32. [Google Scholar] [CrossRef]

- Garus, P.; Henry, F.; Jung, J.; Maugey, T.; Guillemot, C. Immersive video coding: Should geometry information be transmitted as depth maps? IEEE Trans. Circuits Syst. Video Technol. 2021, 32, 3250–3264. [Google Scholar] [CrossRef]

- Shin, H.C.; Jeong, J.Y.; Lee, G.; Kakli, M.U.; Yun, J.; Seo, J. Enhanced pruning algorithm for improving visual quality in MPEG immersive video. ETRI J. 2022, 44, 73–84. [Google Scholar] [CrossRef]

- Vadakital VK, M.; Dziembowski, A.; Lafruit, G.; Thudor, F.; Lee, G.; Alface, P.R. The MPEG immersive video standard—Current status and future outlook. IEEE Multimed. 2022, 29, 101–111. [Google Scholar] [CrossRef]

- Boyce, J.M.; Doré, R.; Dziembowski, A.; Fleureau, J.; Jung, J.; Kroon, B.; Salahieh, B.; Vadakital, V.K.M.; Yu, L. MPEG immersive video coding standard. Proc. IEEE 2021, 109, 1521–1536. [Google Scholar] [CrossRef]

- Salahieh, B.; Bhatia, S.; Boyce, J. Multi-Pass Renderer in MPEG Test Model for Immersive Video. In Proceedings of the 2019 Picture Coding Symposium (PCS), Ningbo, China, 12–15 November 2019; IEEE: New York, NY, USA, 2019; pp. 1–5. [Google Scholar]

- Wu, C.H.; Hsu, C.F.; Hung, T.K.; Griwodz, C.; Ooi, W.T.; Hsu, C.H. Quantitative comparison of point cloud compression algorithms with pcc arena. IEEE Trans. Multimed. 2022, 25, 3073–3088. [Google Scholar] [CrossRef]

- Xiong, J.; Gao, H.; Wang, M.; Li, H.; Ngan, K.N.; Lin, W. Efficient geometry surface coding in V-PCC. IEEE Trans. Multimed. 2022, 25, 3329–3342. [Google Scholar] [CrossRef]

- Dumic, E.; da Silva Cruz, L.A. Subjective Quality Assessment of V-PCC-Compressed Dynamic Point Clouds Degraded by Packet Losses. Sensors 2023, 23, 5623. [Google Scholar] [CrossRef] [PubMed]

- Graziosi, D.; Nakagami, O.; Kuma, S.; Zaghetto, A.; Suzuki, T.; Tabatabai, A. An overview of ongoing point cloud compression standardization activities: Video-based (V-PCC) and geometry-based (G-PCC). APSIPA Trans. Signal Inf. Process. 2020, 9, e13. [Google Scholar] [CrossRef]

- Yang, M.; Luo, Z.; Hu, M.; Chen, M.; Wu, D. A Comparative Measurement Study of Point Cloud-Based Volumetric Video Codecs. IEEE Trans. Broadcast. 2023, 69, 715–726. [Google Scholar] [CrossRef]

- Shi, Y.; Venkatram, P.; Ding, Y.; Ooi, W.T. Enabling low bit-rate mpeg v-pcc-encoded volumetric video streaming with 3d sub-sampling. In Proceedings of the 14th Conference on ACM Multimedia Systems, Vancouver, BC, Canada, 7–10 June 2023; pp. 108–118. [Google Scholar]

- Han, B.; Liu, Y.; Qian, F. ViVo: Visibility-aware mobile volumetric video streaming. In Proceedings of the 26th Annual International Conference on Mobile Computing and Networking, London, UK, 21–25 September 2020; pp. 1–13. [Google Scholar]

- Li, J.; Zhang, C.; Liu, Z.; Hong, R.; Hu, H. Optimal volumetric video streaming with hybrid saliency based tiling. IEEE Trans. Multimed. 2022, 25, 2939–2953. [Google Scholar] [CrossRef]

- Khan, A.R.; Jamlos, M.F.; Osman, N.; Ishak, M.I.; Dzaharudin, F.; Yeow, Y.K.; Khairi, K.A. DSRC technology in Vehicle-to-Vehicle (V2V) and Vehicle-to-Infrastructure (V2I) IoT system for Intelligent Transportation System (ITS): A review. In Recent Trends in Mechatronics Towards Industry 4.0: Selected Articles from iM3F 2020, Malaysia; Springer: Berlin/Heidelberg, Germany, 2022; pp. 97–106. [Google Scholar]

- Zheng, K.; Zheng, Q.; Chatzimisios, P.; Xiang, W.; Zhou, Y. Heterogeneous vehicular networking: A survey on architecture, challenges, and solutions. IEEE Commun. Surv. Tutor. 2015, 17, 2377–2396. [Google Scholar] [CrossRef]

- Jiang, W.; Han, B.; Habibi, M.A.; Schotten, H.D. The road towards 6G: A comprehensive survey. IEEE Open J. Commun. Soc. 2021, 2, 334–366. [Google Scholar] [CrossRef]

- Chowdhury, M.Z.; Shahjalal, M.; Ahmed, S.; Jang, Y.M. 6G wireless communication systems: Applications, requirements, technologies, challenges, and research directions. IEEE Open J. Commun. Soc. 2020, 1, 957–975. [Google Scholar] [CrossRef]

- Wu, X.; Soltani, M.D.; Zhou, L.; Safari, M.; Haas, H. Hybrid LiFi and WiFi networks: A survey. IEEE Commun. Surv. Tutor. 2021, 23, 1398–1420. [Google Scholar] [CrossRef]

- Farrag, M.; Al Ayidh, A.; Hussein, H.S. Conditional Most-Correlated Distribution-Based Load-Balancing Scheme for Hybrid LiFi/WiGig Network. Sensors 2023, 24, 220. [Google Scholar] [CrossRef] [PubMed]

- Ma, S.; Sheng, H.; Sun, J.; Li, H.; Liu, X.; Qiu, C.; Safari, M.; Al-Dhahir, N.; Li, S. Feasibility Conditions for Mobile LiFi. IEEE Trans. Wirel. Commun. 2024, 23, 7911–7923. [Google Scholar] [CrossRef]

- Jamuna, R.; Abarna, B.; Kumar, A.S.; Ganesh, L.; Pranesh, H. LIFI for Smart Transportation: Enabling Secure and Safe Vehicular Communication. J. Xi’an Shiyou Univ. Nat. Sci. Ed. 2023, 19, 1–8. [Google Scholar]

- Saravanan, M.; Deepak, R.R.; Ravinkumar, P.L.; Sivabaalavignesh, A. Vehicle communication using visible light (Li-Fi) technology. In Proceedings of the 2022 8th International Conference on Advanced Computing and Communication Systems (ICACCS), Coimbatore, India, 25–26 March 2022; IEEE, New York, NY, USA, 2022; Volume 1, pp. 885–889. [Google Scholar]

- Su, L.; Niu, Y.; Han, Z.; Ai, B.; He, R.; Wang, Y.; Wang, N.; Su, X. Content distribution based on joint V2I and V2V scheduling in mmWave vehicular networks. IEEE Trans. Veh. Technol. 2022, 71, 3201–3213. [Google Scholar] [CrossRef]

- Lin, Z.; Wang, L.; Ding, J.; Xu, Y.; Tan, B. Tracking and transmission design in terahertz V2I networks. IEEE Trans. Wirel. Commun. 2022, 22, 3586–3598. [Google Scholar] [CrossRef]

- Lin, Z.; Fang, Y.; Chen, P.; Chen, F.; Zhang, G. Modeling and analysis of edge caching for 6G mmWave vehicular networks. IEEE Trans. Intell. Transp. Syst. 2022, 24, 7422–7434. [Google Scholar] [CrossRef]

- Elhoushy, S.; Ibrahim, M.; Hamouda, W. Cell-free massive MIMO: A survey. IEEE Commun. Surv. Tutor. 2021, 24, 492–523. [Google Scholar] [CrossRef]

- Zhao, J.; Hu, F.; Gong, Y.; Wang, D. Downlink Resource Intelligent Scheduling in mmWave Cell-Free Urban Vehicle Network. IEEE Trans. Veh. Technol. 2024, 1–14. [Google Scholar] [CrossRef]

- Lu, H.; Zeng, Y.; You, C.; Han, Y.; Zhang, J.; Wang, Z.; Dong, Z.; Jin, S.; Wang, C.-X.; Jiang, T.; et al. A tutorial on near-field XL-MIMO communications towards 6G. IEEE Commun. Surv. Tutor. 2024, 1. [Google Scholar] [CrossRef]

- Liu, Z.; Zhang, J.; Liu, Z.; Xiao, H.; Ai, B. Double-layer power control for mobile cell-free XL-MIMO with multi-agent reinforcement learning. IEEE Trans. Wirel. Commun. 2023, 23, 4658–4674. [Google Scholar] [CrossRef]

- Freitas, M.; Souza, D.; da Costa, D.B.; Borges, G.; Cavalcante, A.M.; Marquezini, M.; Almeida, I.; Rodrigues, R.; Costa, J.C. Matched-decision AP selection for user-centric cell-free massive MIMO networks. IEEE Trans. Veh. Technol. 2023, 72, 6375–6391. [Google Scholar] [CrossRef]

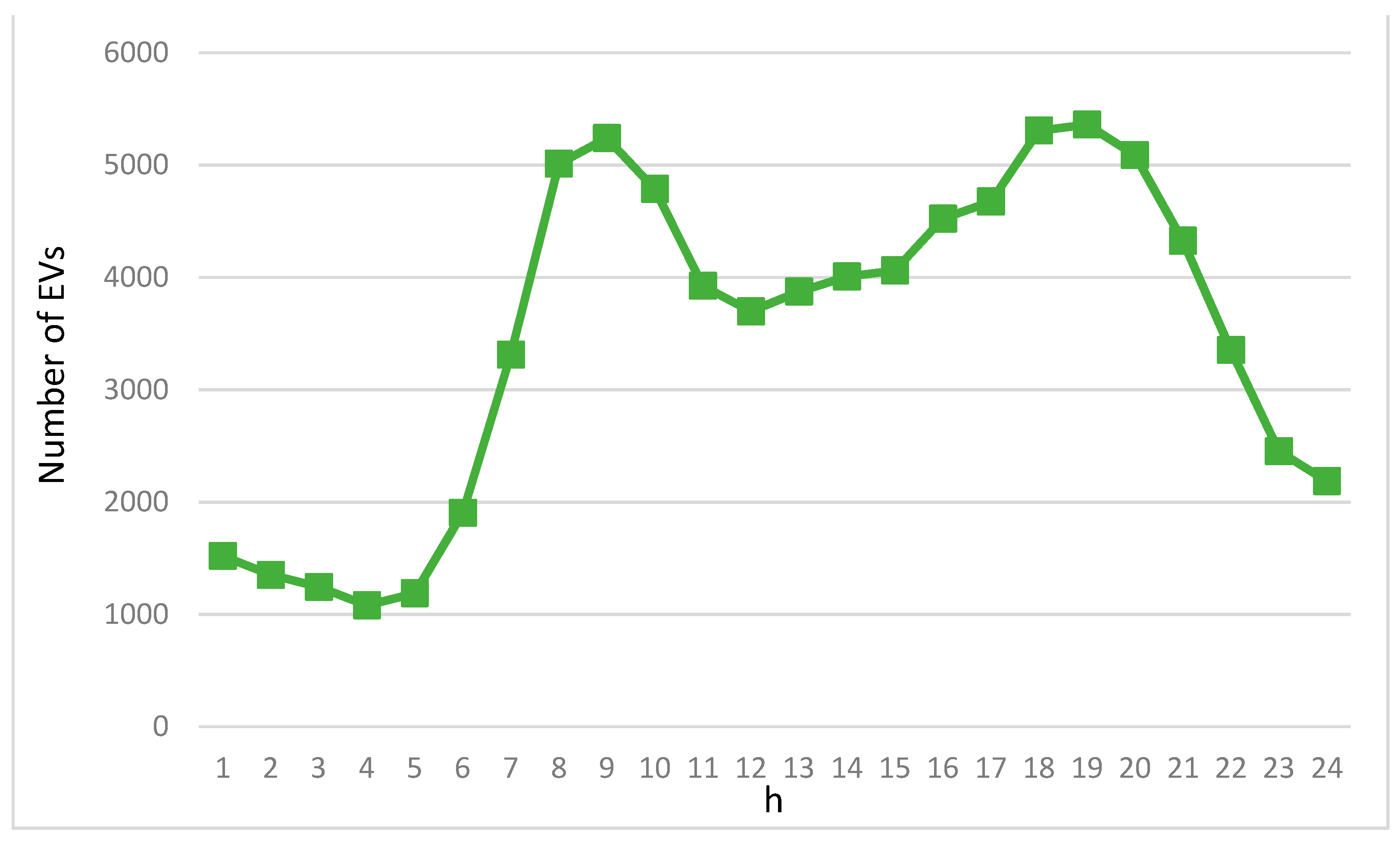

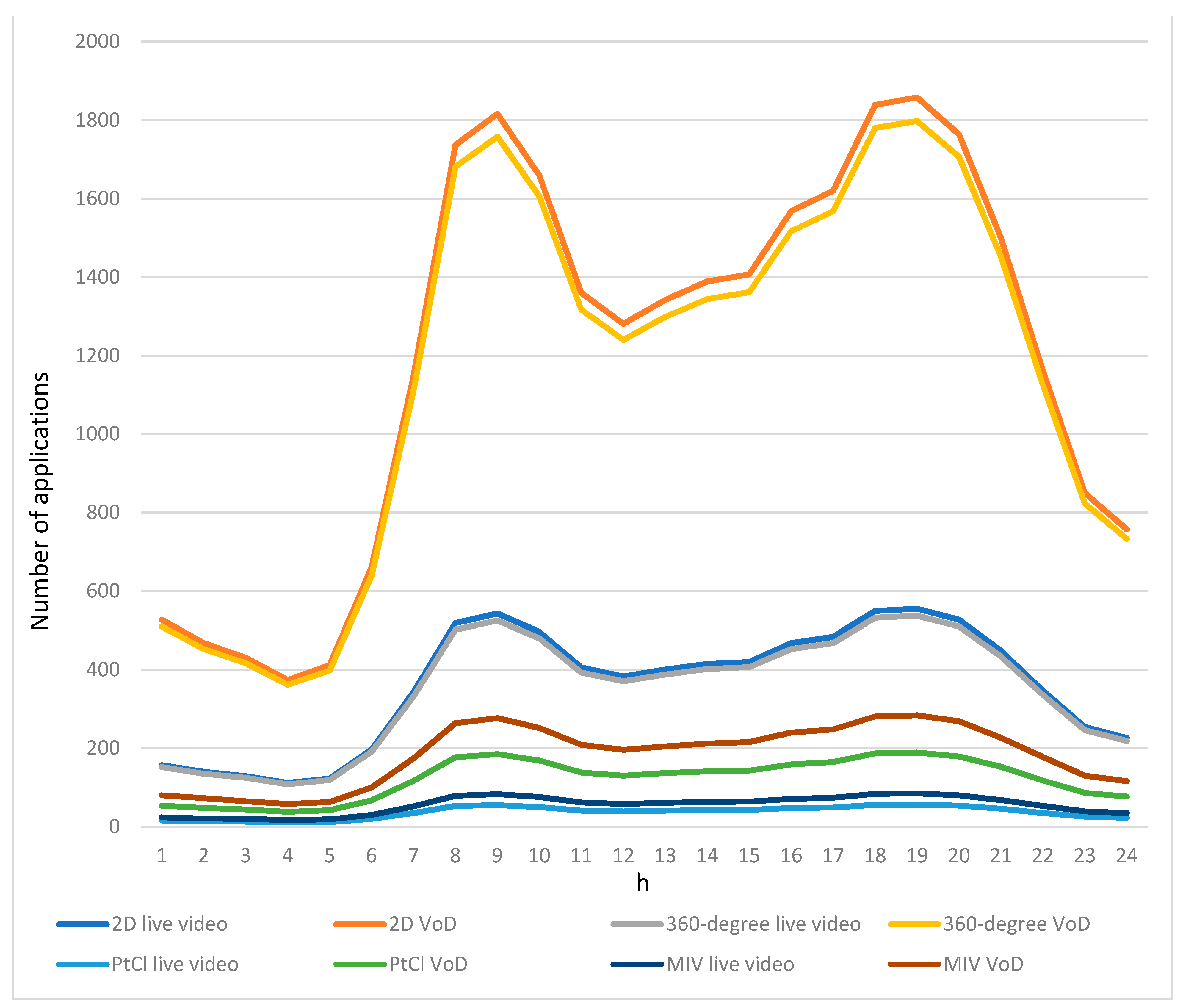

- Vehicle Flow Statistics for New York City. Available online: https://www.nyc.gov/html/dot/html/about/datafeeds.shtml (accessed on 10 January 2024).

- 90+ Powerful Virtual Reality Statistics to Know in 2024. Available online: https://www.g2.com/articles/virtual-reality-statistics (accessed on 3 May 2024).

- Virtual Reality Statistics. Available online: https://99firms.com/blog/virtual-reality-statistics/#gref (accessed on 2 May 2024).

- 47 Latest Live Streaming Statistics For 2024: The Definitive List. Available online: https://bloggingwizard.com/live-streaming-statistics/ (accessed on 5 May 2024).

- YouTube Recommended Upload Encoding Settings. Available online: https://support.google.com/youtube/answer/1722171?hl=en-GB (accessed on 10 January 2024).

- Battista, S.; Meardi, G.; Ferrara, S.; Ciccarelli, L.; Maurer, F.; Conti, M.; Orcioni, S. Overview of the low complexity enhancement video coding (LCEVC) standard. IEEE Trans. Circuits Syst. Video Technol. 2022, 32, 7983–7995. [Google Scholar] [CrossRef]

- Wiredrive 360 Video Specs. Available online: https://support.wiredrive.com/hc/en-us/articles/115000282194-Wiredrive-360-Video-Specs (accessed on 10 January 2024).

- The Best Encoding Settings for Your 4k 360 3D VR Videos + FREE Encoding Tool. Available online: https://headjack.io/blog/best-encoding-settings-resolution-for-4k-360-3d-vr-videos/ (accessed on 10 January 2024).

- Zare, A.; Aminlou, A.; Hannuksela, M.M.; Gabbouj, M. HEVC-compliant tile-based streaming of panoramic video for virtual reality applications. In Proceedings of the 24th ACM international conference on Multimedia, Amsterdam, The Netherlands, 15–19 October 2016; pp. 601–605. [Google Scholar]

- Yu, D.; Chen, R.; Li, X.; Xiao, M.; Zhang, G.; Liu, Y. A GPU-Enabled Real-Time Framework for Compressing and Rendering Volumetric Videos. IEEE Trans. Comput. 2023, 73, 789–800. [Google Scholar] [CrossRef]

- Cao, C. Compression d’objets 3D Représentés par Nuages de Points. Doctoral Dissertation, Institut Polytechnique de Paris, Paris, France, 2021. [Google Scholar]

- Santos, C.; Tavares, L.; Costa, E.; Rehbein, G.; Corrêa, G.; Porto, M. Coding Efficiency and Complexity Analysis of the Geometry-based Point Cloud Encoder. In Proceedings of the 2024 IEEE 15th Latin America Symposium on Circuits and Systems (LASCAS), Punta del Este, Uruguay, 27 February–1 March 2024; IEEE: New York, NY, USA, 2024; pp. 1–5. [Google Scholar]

- Gupta, M.; Navaratna, N.; Szriftgiser, P.; Ducournau, G.; Singh, R. 327 Gbps THz silicon photonic interconnect with sub-λ bends. Appl. Phys. Lett. 2023, 123, 171102. [Google Scholar] [CrossRef]

- Kumar, A.; Gupta, M.; Pitchappa, P.; Wang, N.; Szriftgiser, P.; Ducournau, G.; Singh, R. Phototunable chip-scale topological photonics: 160 Gbps waveguide and demultiplexer for THz 6G communication. Nat. Commun. 2022, 13, 5404. [Google Scholar] [CrossRef]

- Li, X.; Xiao, J.; Yu, J. Long-distance wireless mm-wave signal delivery at W-band. J. Light. Technol. 2015, 34, 661–668. [Google Scholar] [CrossRef]

- LiFi. Available online: https://lifi.co/lifi-speed/ (accessed on 20 May 2024).

- Wang, Z.; Zhang, J.; Du, H.; Niyato, D.; Cui, S.; Ai, B.; Debbah, M.; Letaief, K.B.; Poor, H.V. A tutorial on extremely large-scale MIMO for 6G: Fundamentals, signal processing, and applications. IEEE Commun. Surv. Tutor. 2024, 1.

- Shoaib, M.; Husnain, G.; Sayed, N.; Lim, S. Unveiling the 5G Frontier: Navigating Challenges, Applications, and Measurements in Channel Models and Implementations. IEEE Access 2024, 12, 59533–59560.

- Noor-A-Rahim, M.; Liu, Z.; Lee, H.; Khyam, M.O.; He, J.; Pesch, D.; Moessner, K.; Saad, W.; Poor, H.V. 6G for vehicle-to-everything (V2X) communications: Enabling technologies, challenges, and opportunities. Proc. IEEE 2022, 110, 712–734.

- Liu, Y.; Zhu, K.; Hua, W.; Zhu, Y. Noval Enabling Technology for V2X Network: Blockchain. In Communication, Computation and Perception Technologies for Internet of Vehicles; Springer Nature: Singapore, 2023; pp. 225–244.

| Video Type | Resolution/Frame Rate | Minimum Required Bandwidth | Bandwidth Required for Base Layer | Bandwidth Required for Enhancement Layer |
|---|---|---|---|---|
| VoD | 1080P/30FPS | 1.644 Mbps | 0.987 Mbps | 0.657 Mbps |
| VoD | 2K/30FPS | 3.288 Mbps | 2.304 Mbps | 0.984 Mbps |
| VoD | 4K/30FPS | 7.193 Mbps | 5.036 Mbps | 2.157 Mbps |
| VoD | 8K/30FPS | 16.443 Mbps | 11.511 Mbps | 4.932 Mbps |
| Live video | 720P/30FPS | 2.52 Mbps | 2.142 Mbps | 0.378 Mbps |
| Live video | 1080P/30FPS | 6.3 Mbps | 2.52 Mbps | 3.78 Mbps |
| Live video | 2K/30FPS | 9.45 Mbps | 7.56 Mbps | 1.89 Mbps |
| Live video | 4K/30FPS | 18.9 Mbps | 15.12 Mbps | 3.78 Mbps |

| User Level | Resolution/Frame Rate | Minimum Required Bandwidth | Bandwidth Required for Base Layer | Bandwidth Required for Enhancement Layer |
|---|---|---|---|---|
| Regular | 1080P/30FPS | 0.554 Mbps | 0.387 Mbps | 0.166 Mbps |
| Regular | 2K/30FPS | 1.107 Mbps | 0.774 Mbps | 0.332 Mbps |
| Regular | 4K/30FPS | 1.732 Mbps | 1.212 Mbps | 0.519 Mbps |
| Regular | 8K/30FPS | 6.93 Mbps | 4.851 Mbps | 2.079 Mbps |
| Premium | 1080P/30FPS | 0.986 Mbps | 0.69 Mbps | 0.295 Mbps |
| Premium | 2K/30FPS | 1.97 Mbps | 1.379 Mbps | 0.591 Mbps |
| Premium | 4K/30FPS | 3.083 Mbps | 2.158 Mbps | 0.924 Mbps |
| Premium | 8K/30FPS | 12.332 Mbps | 8.632 Mbps | 3.699 Mbps |
| Premium | 12K/30FPS | 103.09 Mbps | 72.163 Mbps | 30.927 Mbps |

| User Level | Resolution/Frame Rate | Minimum Required Bandwidth | Bandwidth Required for Base Layer | Bandwidth Required for Enhancement Layer |
|---|---|---|---|---|
| Regular | 1080P/30FPS | 1.699 Mbps | 1.444 Mbps | 0.254 Mbps |
| Regular | 2K/30FPS | 2.124 Mbps | 1.805 Mbps | 0.318 Mbps |
| Regular | 4K/30FPS | 3.398 Mbps | 2.888 Mbps | 0.509 Mbps |
| Regular | 8K/30FPS | 13.595 Mbps | 11.555 Mbps | 2.039 Mbps |
| Premium | 1080P/30FPS | 3.024 Mbps | 2.57 Mbps | 0.453 Mbps |
| Premium | 2K/30FPS | 3.78 Mbps | 3.213 Mbps | 0.567 Mbps |
| Premium | 4K/30FPS | 6.048 Mbps | 5.14 Mbps | 0.771 Mbps |
| Premium | 8K/30FPS | 24.192 Mbps | 20.563 Mbps | 3.084 Mbps |
| Premium | 12K/30FPS | 210.924 Mbps | 179.285 Mbps | 31.638 Mbps |

| User Level | Point Density | Minimum Required Bandwidth | Bandwidth Required for Base Layer | Bandwidth Required for Enhancement Layer |
|---|---|---|---|---|
| Regular | Low | 4.545 Mbps | 3.181 Mbps | 1.363 Mbps |
| Regular | Medium | 11.067 Mbps | 7.746 Mbps | 3.32 Mbps |
| Regular | High | 16.32 Mbps | 11.424 Mbps | 4.896 Mbps |
| Premium | Low | 16.16 Mbps | 11.312 Mbps | 4.848 Mbps |
| Premium | Medium | 39.352 Mbps | 27.546 Mbps | 11.805 Mbps |
| Premium | High | 58.026 Mbps | 40.618 Mbps | 17.407 Mbps |

| User Level | Number of PtCl | Minimum Required Bandwidth |
|---|---|---|
| Regular | Low | 33.218 Mbps |
| Regular | Medium | 56.497 Mbps |
| Regular | High | 83.7 Mbps |
| Premium | Low | 541.655 Mbps |
| Premium | Medium | 921.24 Mbps |
| Premium | High | 1364.8 Mbps |

| User Level | Resolution/Frame Rate | Minimum Required Bandwidth | Bandwidth Required for Base Layer | Bandwidth Required for Enhancement Layer |
|---|---|---|---|---|
| Regular | 1080P/30FPS | 12.036 Mbps | 7.221 Mbps | 4.814 Mbps |
| Regular | 2K/30FPS | 24.072 Mbps | 16.85 Mbps | 7.221 Mbps |
| Regular | 4K/30FPS | 52.658 Mbps | 36.86 Mbps | 15.797 Mbps |
| Regular | 8K/30FPS | 180.544 Mbps | 126.38 Mbps | 54.163 Mbps |
| Premium | 1080P/30FPS | 16.056 Mbps | 11.24 Mbps | 4.816 Mbps |
| Premium | 2K/30FPS | 32.112 Mbps | 22.478 Mbps | 14.4 Mbps |
| Premium | 4K/30FPS | 70.245 Mbps | 49.171 Mbps | 21.073 Mbps |
| Premium | 8K/30FPS | 240.84 Mbps | 168.58 Mbps | 72.252 Mbps |

| User Level | Resolution/Frame Rate | Minimum Required Bandwidth | Bandwidth Required for Base Layer | Bandwidth Required for Enhancement Layer |
|---|---|---|---|---|
| Regular | 1080P/30FPS | 36.892 Mbps | 31.358 Mbps | 5.533 Mbps |
| Regular | 2K/30FPS | 46.116 Mbps | 39.198 Mbps | 6.917 Mbps |
| Regular | 4K/30FPS | 73.785 Mbps | 62.717 Mbps | 11.067 Mbps |
| Premium | 1080P/30FPS | 49.2 Mbps | 41.82 Mbps | 7.38 Mbps |
| Premium | 2K/30FPS | 61.512 Mbps | 52.285 Mbps | 9.22 Mbps |
| Premium | 4K/30FPS | 98.419 Mbps | 83.656 Mbps | 14.762 Mbps |

| Wireless Communication Technology | Transmission Distance | Maximum Bandwidth Achievable |
|---|---|---|
| THz | 400 m | 327 Gbps |
| mmWave | 1.7 km | 20 Gbps |
| Li-Fi | 10 m | 224 Gbps |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Huang, C.-J.; Hu, K.-W.; Jian, M.-E.; Lien, Y.-H.; Cheng, H.-W. Supporting Immersive Video Streaming via V2X Communication. Electronics 2024, 13, 2796. https://doi.org/10.3390/electronics13142796
