Detecting Fake Accounts on Social Media Portals—The X Portal Case Study

Abstract

1. Introduction

- Matrimonial fraud;

- Phishing (impersonating another person or institution to obtain essential data);

- Hacking (e.g., breaking into a user’s computer and taking control of it);

- Cyberstalking (online harassment).

- Present a distinctive visual-based approach to account classification;

- Create an image dataset of platform X accounts;

- Validate the created dataset;

- Test the detection of the authenticity of an X portal account by using the selected machine learning model.

2. Related Works

3. Creating a Dataset of Twitter Accounts

3.1. Definition of Various Types of Accounts

3.2. Feature Engineering to Generate a Dataset

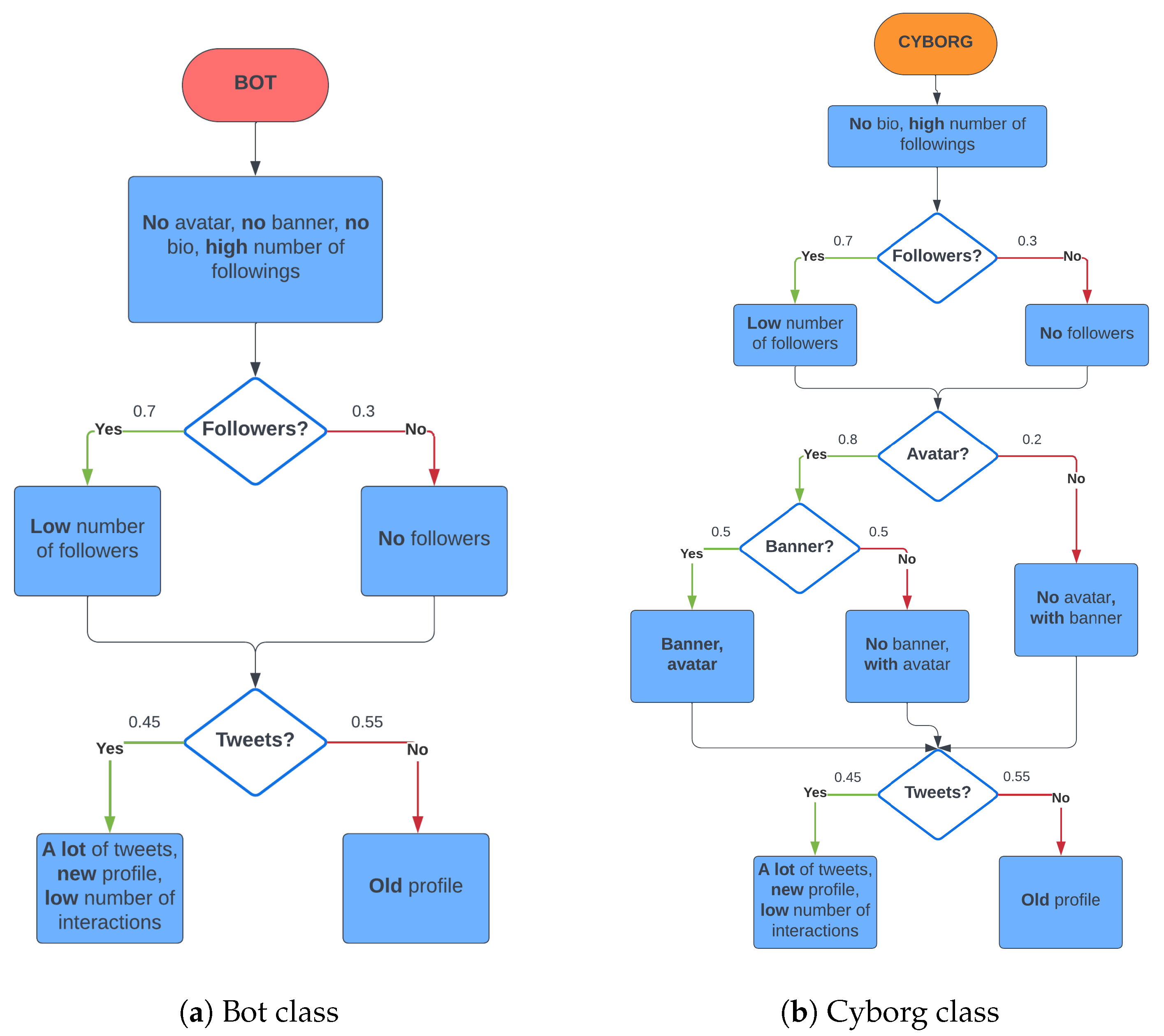

3.3. Types and Characteristics of Generated Accounts on X Portal

3.4. Generation and Presentation of Accounts’ Images

4. Experiments and Results

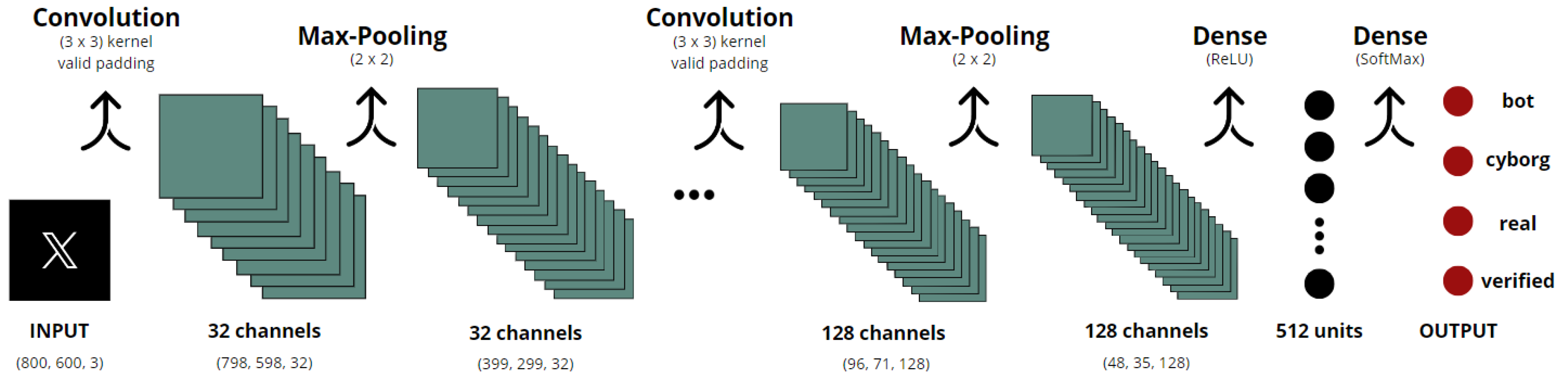

4.1. Machine Learning Model Selection and Optimization

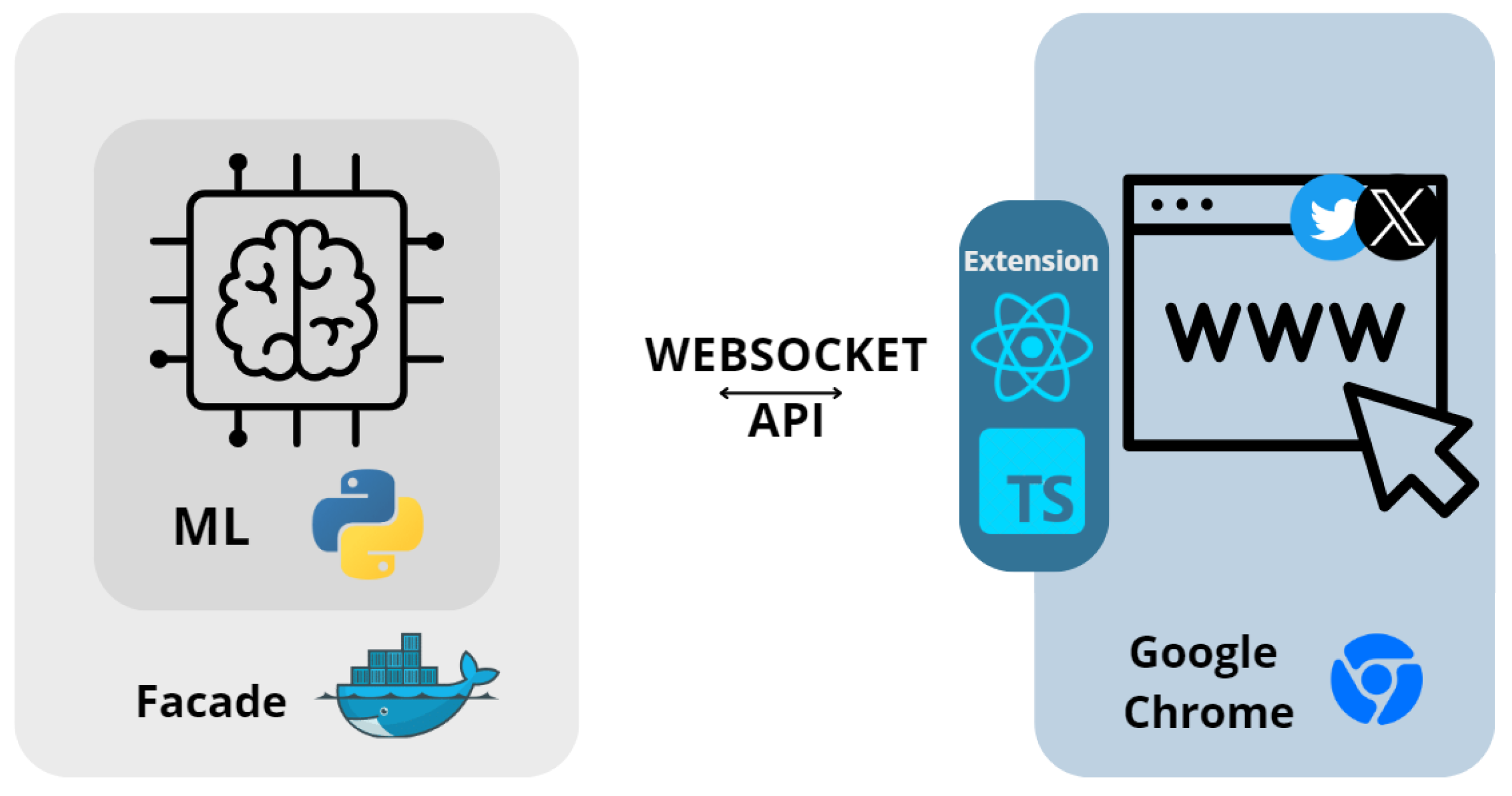

4.2. Detection of Fake Accounts

- Upon first launching the extension, the user navigates to the X social network profile of their interest (Figure 11a);

- Within the extension, the user selects the button to detect if the account is fake (Figure 11b);

- The extension takes a screenshot of the web page element containing the profile on the portal;

- The image is sent to the facade component by the WebSocket API;

- The facade forwards the image as the input to the machine learning model;

- The model predicts whether the analyzed account is fake and returns the results;

- Through the facade, the results are sent back to the end user (Figure 11c);

- The probability of the account being fake is displayed to the user in the extension interface.
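
The facade steps listed above can be sketched as follows. This is a minimal illustration, not the authors' implementation: the message format (a JSON object with a base64-encoded `image` field), the names `handle_message` and `dummy_model`, and the rule that bot and cyborg probabilities together form the fake probability are all assumptions made for the example. The WebSocket transport itself is left out so the sketch stays self-contained.

```python
import base64
import json
from typing import Callable, Dict

# Hypothetical model interface: raw screenshot bytes in, one probability per class out.
Model = Callable[[bytes], Dict[str, float]]


def dummy_model(image: bytes) -> Dict[str, float]:
    """Stand-in for the trained classifier; always returns the same probabilities."""
    return {"bot": 0.7, "cyborg": 0.2, "human": 0.08, "verified": 0.02}


def handle_message(raw_message: str, model: Model) -> str:
    """Facade step: decode the screenshot, run the model, build the reply."""
    request = json.loads(raw_message)
    image = base64.b64decode(request["image"])
    probabilities = model(image)
    # Assumption for this sketch: bot and cyborg accounts are the ones treated as fake.
    fake_probability = probabilities["bot"] + probabilities["cyborg"]
    return json.dumps(
        {
            "fake_probability": round(fake_probability, 4),
            "probabilities": probabilities,
        }
    )


# Usage: the extension would send a message like this one over the WebSocket.
message = json.dumps({"image": base64.b64encode(b"screenshot-bytes").decode("ascii")})
reply = json.loads(handle_message(message, dummy_model))
print(reply["fake_probability"])
```

Keeping the model behind a plain callable means the facade can be exercised with a stub, as here, before a trained classifier is plugged in.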

4.3. Tests Performed on Fake Account Detection Tool

4.3.1. Testing in Implemented Copy of X Environment

4.3.2. Testing in Original X Environment

5. Discussion

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

1. Meltwater, W.A.S. Digital 2023 Global Overview Report. Available online: https://datareportal.com/reports/digital-2023-global-overview-report (accessed on 13 June 2024).

2. Almadhoor, L. Social media and cybercrimes. Turk. J. Comput. Math. Educ. (TURCOMAT) 2021, 12, 2972–2981.

3. Di Domenico, G.; Sit, J.; Ishizaka, A.; Nunan, D. Fake news, social media and marketing: A systematic review. J. Bus. Res. 2021, 124, 329–334.

4. Shu, K.; Bhattacharjee, A.; Alatawi, F.; Nazer, T.H.; Ding, K.; Karami, M.; Liu, H. Combating disinformation in a social media age. Wiley Interdiscip. Rev. Data Min. Knowl. Discov. 2020, 10, 1–39.

5. Social Media Use Statistics. Available online: https://gs.statcounter.com/social-media-stats (accessed on 4 March 2024).

6. Umbrani, K.; Shah, D.; Pile, A.; Jain, A. Fake Profile Detection Using Machine Learning. In Proceedings of the 2024 ASU International Conference in Emerging Technologies for Sustainability and Intelligent Systems (ICETSIS), Manama, Bahrain, 28–29 January 2024; pp. 966–973.

7. Durga, P.; Sudhakar, D.T. The use of supervised machine learning classifiers for the detection of fake Instagram accounts. J. Pharm. Negat. Results 2023, 14, 267–279.

8. Prakash, O.; Kumar, R. Fake Account Detection in Social Networks with Supervised Machine Learning. In International Conference on IoT, Intelligent Computing and Security. Lecture Notes in Electrical Engineering; Agrawal, R., Mitra, P., Pal, A., Sharma Gaur, M., Eds.; Springer: Singapore, 2023; Volume 982, pp. 287–295.

9. Kanagavalli, N.; Sankaralingam, B.P. Social Networks Fake Account and Fake News Identification with Reliable Deep Learning. Intell. Autom. Soft Comput. 2022, 33, 191–205.

10. Bhattacharyya, A.; Kulkarni, A. Machine Learning-Based Detection and Categorization of Malicious Accounts on Social Media. In Social Computing and Social Media. HCII 2024. Lecture Notes in Computer Science; Coman, A., Vasilache, S., Eds.; Springer: Cham, Switzerland, 2024; Volume 14703, pp. 328–337.

11. Goyal, B.; Gill, N.S.; Gulia, P.; Prakash, O.; Priyadarshini, I.; Sharma, R.; Obaid, A.J.; Yadav, K. Detection of Fake Accounts on Social Media Using Multimodal Data With Deep Learning. IEEE Trans. Comput. Soc. Syst. 2023, 1–12.

12. Wani, M.A.; Agarwal, N.; Jabin, S.; Hussain, S.Z. Analyzing real and fake users in Facebook network based on emotions. In Proceedings of the 2019 11th International Conference on Communication Systems & Networks (COMSNETS), Bengaluru, India, 7–11 January 2019; pp. 110–117.

13. Gupta, A.; Kaushal, R. Towards detecting fake user accounts in facebook. In Proceedings of the 2017 ISEA Asia Security and Privacy (ISEASP), Surat, India, 29 January–1 February 2017; pp. 1–6.

14. Boshmaf, Y.; Logothetis, D.; Siganos, G.; Lería, J.; Lorenzo, J.; Ripeanu, M.; Beznosov, K. Integro: Leveraging victim prediction for robust fake account detection in OSNs. In Proceedings of the Network and Distributed System Security Symposium 2015 (NDSS’15), San Diego, CA, USA, 8–11 February 2015; pp. 1–15.

15. Conti, M.; Poovendran, R.; Secchiero, M. Fakebook: Detecting fake profiles in on-line social networks. In Proceedings of the 2012 International Conference on Advances in Social Networks Analysis and Mining, Istanbul, Turkey, 26–29 August 2012; pp. 1071–1078.

16. Sheikhi, S. An Efficient Method for Detection of Fake Accounts on the Instagram Platform. Rev. d’Intell. Artif. 2020, 34, 429–436.

17. Akyon, F.C.; Esat Kalfaoglu, M. Instagram Fake and Automated Account Detection. In Proceedings of the 2019 Innovations in Intelligent Systems and Applications Conference (ASYU), Izmir, Turkey, 31 October–2 November 2019; pp. 1–7.

18. Zarei, K.; Farahbakhsh, R.; Crespi, N. Deep dive on politician impersonating accounts in social media. In Proceedings of the 2019 IEEE Symposium on Computers and Communications (ISCC), Barcelona, Spain, 29 June–3 July 2019; pp. 1–6.

19. Harris, P.; Gojal, J.; Chitra, R.; Anithra, S. Fake Instagram Profile Identification and Classification using Machine Learning. In Proceedings of the 2021 2nd Global Conference for Advancement in Technology (GCAT), Bangalore, India, 1–3 October 2021; pp. 1–5.

20. Adikari, S.; Dutta, K. Identifying fake profiles in linkedin. In Proceedings of the Pacific Asia Conference on Information Systems (PACIS), Chengdu, China, 24–28 June 2014; pp. 1–30.

21. Xiao, C.; Freeman, D.; Hwa, T. Detecting Clusters of Fake Accounts in Online Social Networks. In Proceedings of the 8th ACM Workshop on Artificial Intelligence and Security, Denver, CO, USA, 16 October 2015; pp. 91–101.

22. Rostami, R.R. Detecting Fake Accounts on Twitter Social Network Using Multi-Objective Hybrid Feature Selection Approach. Webology 2020, 17, 1–18.

23. Sahoo, S.R.; Gupta, B.B. Real-Time Detection of Fake Account in Twitter Using Machine-Learning Approach. In Advances in Computational Intelligence and Communication Technology. Advances in Intelligent Systems and Computing; Springer: Singapore, 2020; Volume 1086, pp. 149–159.

24. Prabhu Kavin, B.; Karki, S.; Hemalatha, S.; Singh, D.; Vijayalakshmi, R.; Thangamani, M.; Haleem, S.L.A.; Jose, D.; Tirth, V.; Kshirsagar, P.R.; et al. Machine Learning-Based Secure Data Acquisition for Fake Accounts Detection in Future Mobile Communication Networks. Wirel. Commun. Mob. Comput. 2022, 2022, 6356152.

25. Van Der Walt, E.; Eloff, J. Using Machine Learning to Detect Fake Identities: Bots vs Humans. IEEE Access 2018, 6, 6540–6549.

26. Roy, P.K.; Chahar, S. Fake Profile Detection on Social Networking Websites: A Comprehensive Review. IEEE Trans. Artif. Intell. 2020, 1, 271–285.

27. Krishnamurthy, B.; Gill, P.; Arlitt, M.F. A few chirps about twitter. In Proceedings of the WOSN ’08: Proceedings of the First Workshop on Online Social Networks, Seattle, WA, USA, 17–22 August 2008; pp. 19–24.

28. Chu, Z.; Gianvecchio, S.; Wang, H.; Jajodia, S. Who is Tweeting on Twitter: Human, Bot, or Cyborg? In Proceedings of the 26th Annual Computer Security Applications Conference, Austin, TX, USA, 6–10 December 2010; pp. 21–30.

29. Meta Open Source React. The Library for Web and Native User Interfaces. 2023. Available online: https://react.dev/ (accessed on 13 June 2024).

30. Microsoft Corp. Playwright. 2023. Available online: https://playwright.dev/ (accessed on 13 June 2024).

31. Faker Open Source, Faker. 2023. Available online: https://fakerjs.dev/ (accessed on 13 June 2024).

32. Marby, D.; Yonskai, N. Lorem Picsum. Images. 2023. Available online: https://picsum.photos/images (accessed on 13 June 2024).

33. Khaled, S.; El-Tazi, N.; Mokhtar, H.M.O. Detecting Fake Accounts on Social Media. In Proceedings of the IEEE International Conference on Big Data (Big Data), Seattle, WA, USA, 10–13 December 2018; pp. 3672–3681.

34. O’Shea, K.; Nash, R. An introduction to convolutional neural networks. arXiv 2015, arXiv:1511.08458.

35. Pal, M. Random forest classifier for remote sensing classification. Int. J. Remote. Sens. 2005, 26, 217–222.

36. Rish, I. An empirical study of the naive Bayes classifier. In Proceedings of the IJCAI 2001 Workshop on Empirical Methods in Artificial Intelligence, Seattle, WA, USA, 4–6 August 2001; Volume 3, pp. 41–46.

37. Lubbers, P.; Albers, B.; Salim, F. Using the WebSocket API. In Pro HTML5 Programming; Springer: Berlin/Heidelberg, Germany, 2011; pp. 159–191.

38. Chrome.tabs API, On-Line Documentation. Available online: https://developer.chrome.com/docs/extensions/reference/api/tabs (accessed on 4 March 2024).

| No. | Paper | Problem | Approach |
|---|---|---|---|
| 1 | [10] | Fake X account detection | Text-based solution |
| 2 | [11] | Fake X, Instagram, and Facebook account detection | Combination of text, visual, and network factors |
| 3 | [22] | Fake X account detection | Text-based solution |
| 4 | [23] | Fake X account detection | Text-based solution |
| 5 | [24] | Fake X and Facebook account detection | Text-based solution |
| 6 | [25] | Fake X human-created account detection | Text-based solution |
| 7 | This paper | Fake X account detection | Image-based |

| No. | Selected Feature | Description of Feature |
|---|---|---|
| 1 | Username | Unique identifier/name of the user’s account |
| 2 | Biography | Short introduction written by users about themselves, their achievements, expertise, and other important information |
| 3 | Profile photo (avatar) | One of the main features of an account; it makes it quicker and easier to recognize a person by their appearance |
| 4 | Header photo (banner) | A photo shown in addition to the profile photo, introduced by Twitter to make the user’s account more attractive |
| 5 | Date of creation | The date when the user created their account and became active on the portal |
| 6 | Website | URL of the user’s website or of their profile on another platform |
| 7 | Number of tweets (Twitter posts) | An essential feature for fake profile detection; it indicates the user’s level of activity |
| 8 | Number of followers | Number of other accounts that follow the user |
| 9 | Following count | Number of other accounts that the user follows |
| 10 | Number of likes | An important feature indicating the number of profiles that liked the content created by the user |
| 11 | Number of views | Number of profiles that have seen the content created by the user, showing how wide their audience is |
| 12 | Number of retweets | Number of times the user’s content was shared on Twitter and other platforms |
| 13 | Number of replies | Number of comments on the user’s posts |
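
One way to hold the thirteen features from the table above in code is a single record type. The sketch below is an illustration only: the class name `AccountFeatures`, the field names, and the choice of which fields may be absent are assumptions, not part of the original dataset definition.

```python
from dataclasses import dataclass, fields
from datetime import date
from typing import Optional


@dataclass
class AccountFeatures:
    """One account, described by the 13 selected features (field names are hypothetical)."""

    username: str
    biography: Optional[str]          # None when the account has no description
    profile_photo_url: Optional[str]  # None for a blank or default avatar
    header_photo_url: Optional[str]   # None when no banner is set
    created_on: date
    website: Optional[str]
    tweet_count: int
    follower_count: int
    following_count: int
    like_count: int
    view_count: int
    retweet_count: int
    reply_count: int


# Usage: an invented example account with no biography, photos, or website.
example = AccountFeatures(
    username="example_user",
    biography=None,
    profile_photo_url=None,
    header_photo_url=None,
    created_on=date(2023, 1, 15),
    website=None,
    tweet_count=0,
    follower_count=3,
    following_count=250,
    like_count=0,
    view_count=0,
    retweet_count=0,
    reply_count=0,
)
print(len(fields(AccountFeatures)))
```

The values in `example` are made up for illustration and do not come from the dataset.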

| Characteristics | Bot | Cyborg | Real | Verified |
|---|---|---|---|---|
| Profile photo | Blank or default (initials) | Blank or default (initials) or both or only profile | Blank or default (initials) + header or both or only profile photo | Yes |
| Header photo | No | Yes | | |
| Account description | No | No | Yes | Yes |
| Account website | No | No | Website URL or no website | |
| Number of followers | Low number of followers or no accounts following a given profile | Average | High | |
| Number of followings | High | Average | | |
| Date of creation | Large post No. + low interactions No. (close date) or no posts (former date of account creation) | Former | Former | |
| Number of posts | Average | High | | |
| Number of interactions | Average | High | | |
| Verification | No | No | No | Standard (blue icon) or business (yellow icon) or institutional (gray icon) |

| Classifier | Accuracy (%) | Avg. Precision (%) | Avg. Recall (%) | Avg. F1-Score (%) |
|---|---|---|---|---|
| Convolutional Neural Network | 96.5 | 96.59 | 96.40 | 96.49 |
| Naive Bayes | 87.27 | 89.1 | 87.29 | 86.89 |
| Random Forest | 80.26 | 85.35 | 80.25 | 79.31 |

| Actual class | Classified as Bot | Classified as Cyborg | Classified as Human | Classified as Verified | Total | True Pos. % |
|---|---|---|---|---|---|---|
| Bot | 992 | 0 | 8 | 0 | 1000 | 99.2% |
| Cyborg | 24 | 956 | 20 | 0 | 1000 | 95.6% |
| Human | 2 | 1 | 997 | 0 | 1000 | 99.7% |
| Verified | 0 | 18 | 58 | 924 | 1000 | 92.4% |

| Actual class | Classified as Bot | Classified as Cyborg | Classified as Human | Classified as Verified | Total | True Pos. % |
|---|---|---|---|---|---|---|
| Bot | 46 | 0 | 4 | 0 | 50 | 92% |
| Cyborg | 0 | 45 | 5 | 0 | 50 | 90% |
| Human | 0 | 13 | 37 | 0 | 50 | 74% |
| Verified | 0 | 6 | 12 | 32 | 50 | 64% |
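
The per-class "True Pos. %" values and the overall accuracy can be recomputed from the two confusion matrices above. The sketch below assumes that rows are actual classes and columns are classified classes, as the tables indicate; the names `FIRST_MATRIX`, `SECOND_MATRIX`, and `summarize` are chosen for this example only.

```python
from typing import Dict, List

LABELS = ["Bot", "Cyborg", "Human", "Verified"]

# Rows: actual class; columns: classified class (values copied from the two tables).
FIRST_MATRIX = [
    [992, 0, 8, 0],
    [24, 956, 20, 0],
    [2, 1, 997, 0],
    [0, 18, 58, 924],
]
SECOND_MATRIX = [
    [46, 0, 4, 0],
    [0, 45, 5, 0],
    [0, 13, 37, 0],
    [0, 6, 12, 32],
]


def summarize(matrix: List[List[int]]) -> Dict[str, object]:
    """Return overall accuracy plus per-class recall and precision."""
    n = len(matrix)
    total = sum(sum(row) for row in matrix)
    correct = sum(matrix[i][i] for i in range(n))
    recall: Dict[str, float] = {}
    precision: Dict[str, float] = {}
    for i, label in enumerate(LABELS):
        row_total = sum(matrix[i])                        # all samples of this actual class
        col_total = sum(matrix[r][i] for r in range(n))   # all samples classified as this class
        recall[label] = matrix[i][i] / row_total if row_total else 0.0
        precision[label] = matrix[i][i] / col_total if col_total else 0.0
    return {"accuracy": correct / total, "recall": recall, "precision": precision}


for name, matrix in (("first", FIRST_MATRIX), ("second", SECOND_MATRIX)):
    summary = summarize(matrix)
    print(name, summary["accuracy"], summary["recall"])
```

Per-class recall here is the same quantity as the "True Pos. %" column. In the first matrix 3869 of 4000 samples lie on the diagonal, and in the second 160 of 200.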

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Dracewicz, W.; Sepczuk, M. Detecting Fake Accounts on Social Media Portals—The X Portal Case Study. Electronics 2024, 13, 2542. https://doi.org/10.3390/electronics13132542
