Abstract

Swarm exploration by multi-agent systems relies on stable inter-agent communication. However, despite their strong interdependency in such systems, exploration and communication have so far mainly been considered separately. In this paper, we present the first steps towards a framework that unifies both realms through a “tight” integration. We propose to make exploration “communication-aware” and communication “exploration-aware” by using tools of probabilistic learning and semantic communication, thus enabling the coordination of knowledge and action in multi-agent systems. We anticipate that, with a “tight” integration of the communication chain, the exploration strategy will balance the inference objective of the swarm with exploration-tailored, i.e., semantic, inter-agent communication. Such a semantic communication design may thus improve communication efficiency in terms of latency, required data rate, energy, and complexity. With this in mind, the research proposed in this work addresses challenges in the development of future distributed sensing and data processing platforms (sensor networks or mobile robotic swarms consisting of multiple agents) that can collect, communicate, and process spatially distributed sensor data.

1. Introduction

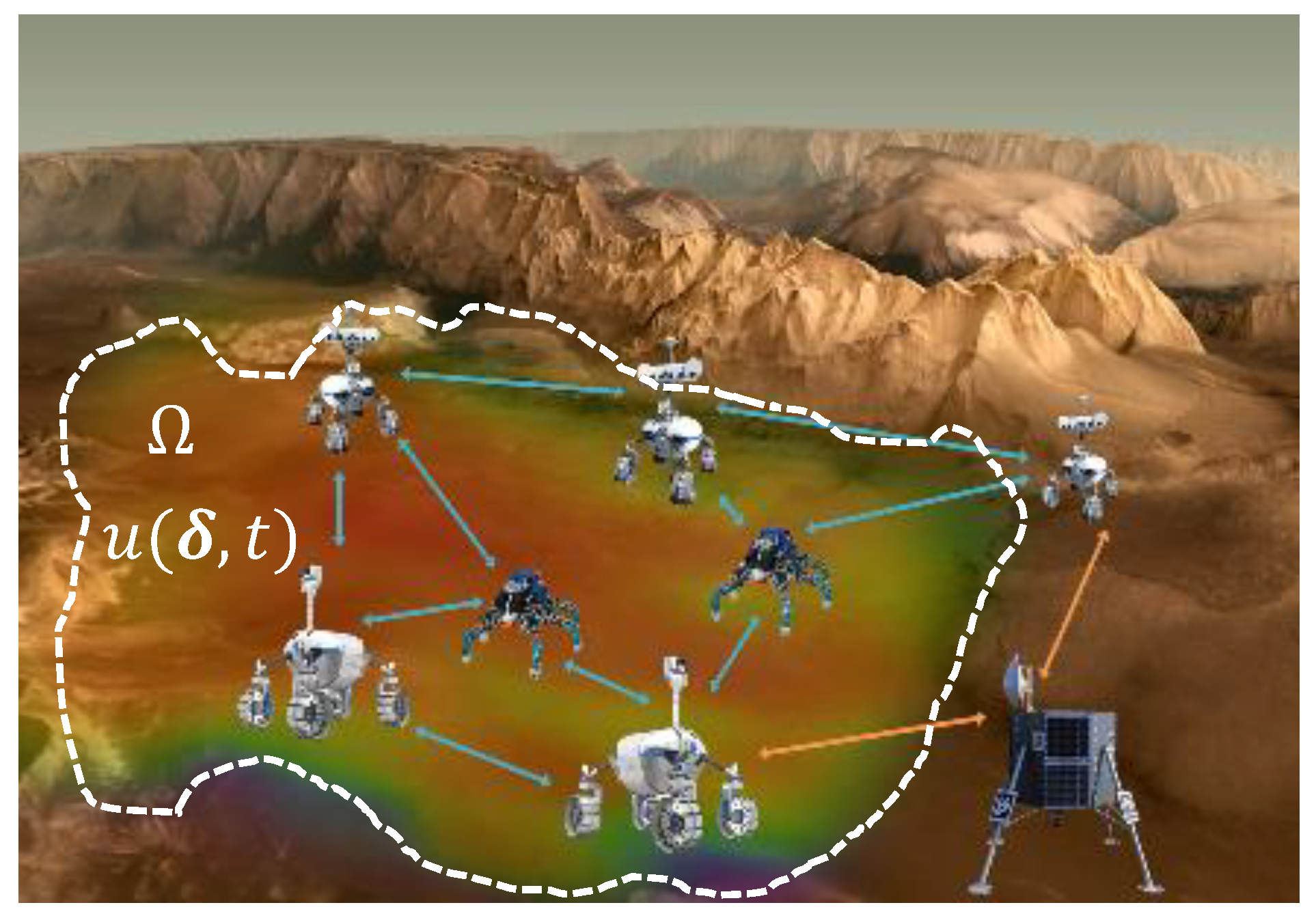

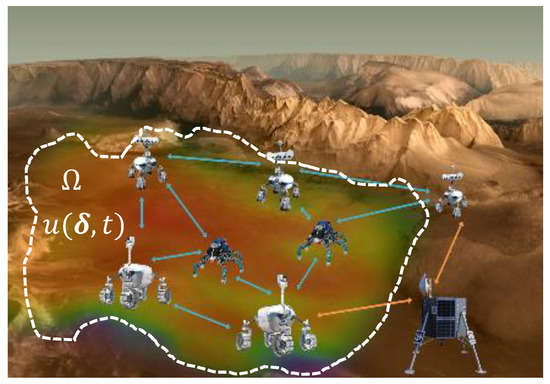

In hazardous or inhospitable environments, exploration and monitoring tasks pose high risks to human operators. Typical examples include emergency scenarios caused by nuclear or toxic accidents, as well as exploration scenarios in extraterrestrial environments [1,2]. In such cases, mobile robotic systems are required. Cooperation in a multi-agent system, such as a swarm, can significantly accelerate such reconnaissance missions or mapping tasks [3]. An example of swarm exploration on an extraterrestrial surface, e.g., on Mars, is shown in Figure 1: Agents distribute and process sensed data along the arrows with the aim of reconstructing an unknown physical or chemical process of interest $u(\mathbf{x},t)$ at position $\mathbf{x}$ and time $t$, or relevant parameters of such processes, in the domain $\Omega$. For instance, the process of interest can be the spatio-temporal distribution of gas concentration; a relevant process parameter is then the location of gas sources.

Figure 1.

A swarm of autonomous agents explores an unknown physical process $u(\mathbf{x},t)$ over spatial coordinate $\mathbf{x}$ and time $t$ in the spatial domain $\Omega$.

To achieve this goal, swarm exploration incorporates methods for distributed sensing, optimized (intelligent) information gathering [4], and agent movement/action coordination (exploitation). In particular, it requires the communication of locally and instantaneously available exploration measurements between agents. The underlying communication network acts as a data exchange backbone and is the tool that eventually enables the “diffusion” of local information to all agents and, hence, assists global decision-making. Communication is therefore always an integral part of swarm exploration.

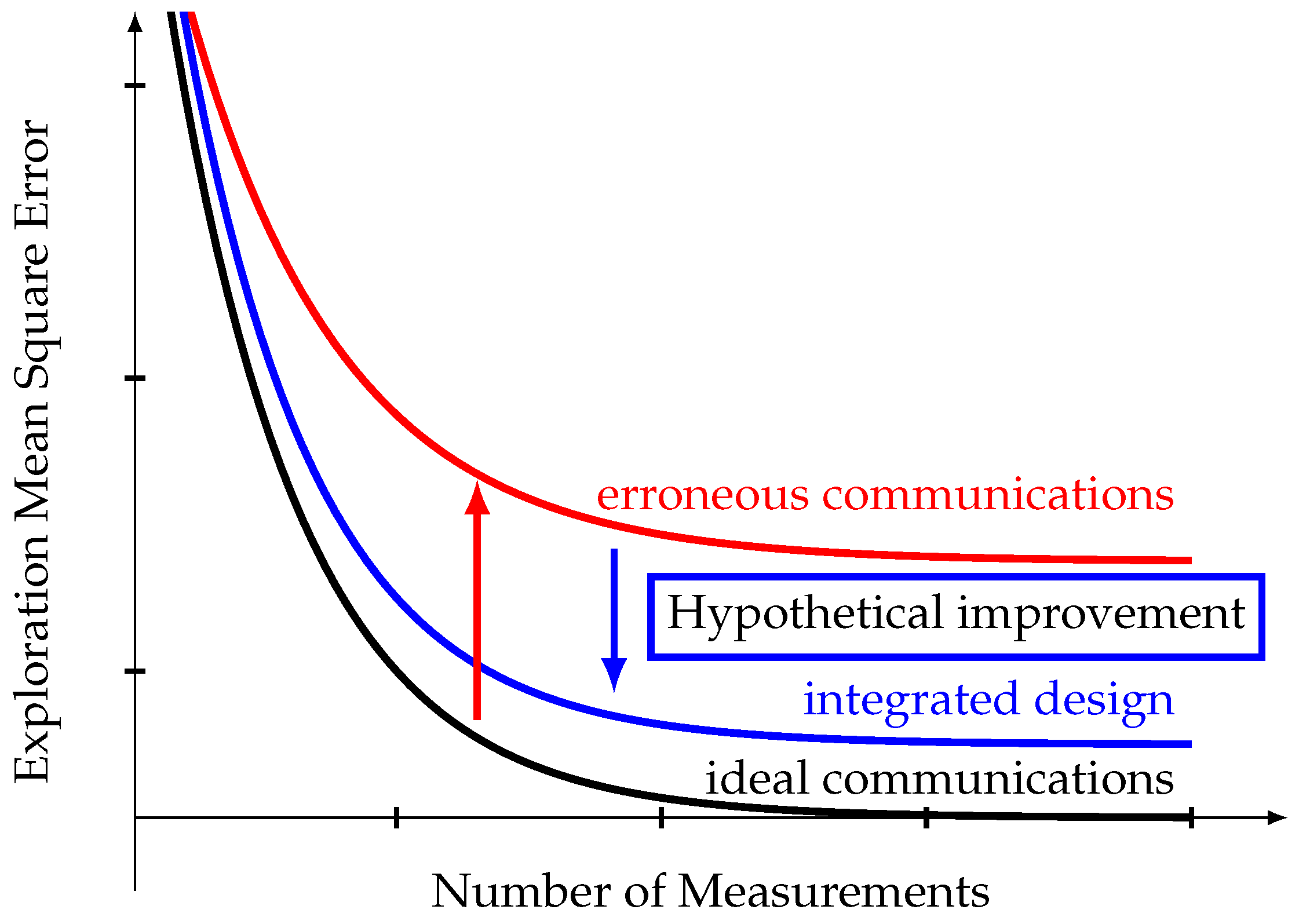

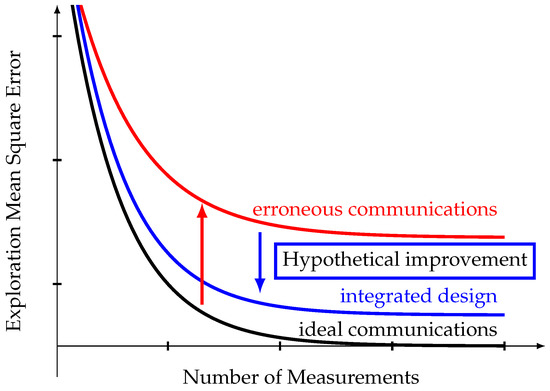

Swarm exploration studies often assume reliable, error-free communication, i.e., ideal links. However, communication systems add uncertainty to the exchanged information. Hence, studies so far paint an optimistic picture of exploration performance metrics such as the Mean Square Error, which in fact degrades with erroneous links, as illustrated in Figure 2. For instance, communication uncertainty needs to be considered when predicting new sampling positions for agents, since locations causing severe communication degradation are useless for distributed information processing and exploration.

Figure 2.

We integrate communications into exploration through probabilistic Machine Learning (ML), aiming at a (hypothetical) enhancement of exploration performance in practice.

Likewise, communication systems are designed for error-free transmission of measurements or processing results, but they are aware neither of the relevance of these data for learning the explored process nor of the confidence in the data to be transmitted. Our key objective is hence to integrate this semantic understanding of the communicated messages into the communication and swarm system with respect to the overall exploration objective, such that an exploration task can be completed with higher accuracy and/or speed. An integrated design could bring exploration performance closer to that achieved with ideal links, as sketched in Figure 2.

In summary, by using the framework of semantic communication [5,6,7,8,9] for the data exchanged among the agents, we avoid classical error-free digital transmission of data and instead open up the possibility for a swarm to “learn” a semantic understanding of the communicated messages with respect to the overall exploration objective. By integrating the physical layer directly into the exploration task, and thus possibly bypassing higher communication protocol layers, we may also improve communication efficiency in terms of latency, data rate, energy, and complexity compared to semantics-agnostic state-of-the-art protocols.

These considerations raise two fundamental questions:

- How to integrate exploration and communication into a single framework?

- How to ensure mutual awareness? In other words, which information should be exchanged between the agents, and how can it be exploited?

The aim of this paper is to present possible approaches that address these two fundamental questions and to outline potential research directions in the area of mutually-aware exploration and communication.

Main Contribution

In this article, we propose and investigate a conceptually new, “tight” coupling of “exploration-aware” communication and “communication-aware” exploration by using appropriate Artificial Intelligence (AI) tools such as probabilistic Machine Learning (ML). This implies that both communication systems and exploration techniques are learned from data and adapt their parameters to measured data. We hypothesize that this will enhance exploration performance, as sketched in Figure 2.

Our main contribution is the development of a framework and methodology as well as the design of this “tight” integration, intended as a starting point for further investigations on modeling and algorithms. We anticipate that, with a “tight” integration of the communication chain, the exploration strategy will balance the inference objective of the swarm with exploration-tailored, i.e., semantic, inter-agent communication. In doing so, we replace the “classical” transmission of raw data with its semantic counterpart, thus reducing the cost in terms of required data rate, latency, energy, and complexity, while preserving the desired functionality of the whole distributed system.

At its core, we pursue two key objectives to obtain a mutually-aware design in which all domains of the exploration task are connected through probabilistic ML:

- Modeling of the physical process by means of Factor Graphs (FGs) and design of ML-based “communication-aware” swarm exploration algorithms that follow active inference principles [10].

- Investigation and design of “exploration-aware” wireless communication methods and algorithms in the framework of ML. Here, we focus on the meaning, i.e., semantics, of the messages to be transmitted between robots instead of the raw data. To link exploration to communication, a promising approach is the framework of semantic communication [5,6,7,8,9] and in particular [11], as it may enable a tight integration.

2. State-of-the-Art

In the following section, we give a brief overview of state-of-the-art techniques in the areas of ML-based exploration and communications to highlight our contribution.

2.1. Distributed Multi-Agent Exploration

Distributed exploration requires cooperative computational techniques, which are also referred to as “in-network processing” [12]. Each node performs “local” computations and shares intermediate results with its neighboring nodes. The key to these computations is a decomposition of a network-global objective function into a sum of “local” sub-objectives, typically with additional constraints that ensure network-wide convergence to a specific solution. A special class of such algorithms is called consensus-based algorithms, see, e.g., [13,14,15,16,17,18]. This class of algorithms enforces consensus over the whole network, i.e., each node converges to the same solution. Here, the Alternating Direction Method of Multipliers (ADMM) [19] has gained popularity for in-network processing due to its ability to handle different types of constraints on model parameters.
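As an illustration of consensus-based in-network processing, the following minimal Python/NumPy sketch applies the global-consensus form of ADMM to a toy distributed least-squares problem. The quadratic local costs, the function name `consensus_admm`, and the penalty parameter `rho` are illustrative assumptions. The consensus variable is computed here as a plain average, which in a fully decentralized network would itself have to be obtained through neighbor exchanges.

```python
import numpy as np


def consensus_admm(A_list, b_list, rho=1.0, iters=1000):
    """Global-consensus ADMM (scaled dual form) for
    min sum_l 0.5 * ||A_l theta_l - b_l||^2  s.t.  theta_l = z for all agents l."""
    L = len(A_list)
    dim = A_list[0].shape[1]
    theta = np.zeros((L, dim))  # local estimates theta_l
    w = np.zeros((L, dim))      # scaled dual variables
    z = np.zeros(dim)           # consensus variable
    # (A_l^T A_l + rho I) does not change across iterations, so invert it once.
    local_inv = [np.linalg.inv(A.T @ A + rho * np.eye(dim)) for A in A_list]
    for _ in range(iters):
        for l in range(L):
            # Local least-squares step, pulled toward the current consensus value.
            theta[l] = local_inv[l] @ (A_list[l].T @ b_list[l] + rho * (z - w[l]))
        # Consensus step: a plain average here (hypothetical fusion step).
        z = np.mean(theta + w, axis=0)
        w += theta - z  # dual update on the consensus residual theta_l - z


    return z, theta


# Usage: four agents, each observing a different noise-free linear view
# of the same (hypothetical) parameter vector.
rng = np.random.default_rng(0)
theta_true = np.array([1.0, -2.0, 0.5])
A_list = [rng.standard_normal((10, 3)) for _ in range(4)]
b_list = [A @ theta_true for A in A_list]
z, theta = consensus_admm(A_list, b_list)
```

After convergence, every local estimate `theta[l]` agrees with the consensus value `z`, which is exactly what the consensus constraint enforces.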

As an alternative, diffusion-based approaches (see, e.g., [20,21,22] and references therein) have been proposed that estimate a quantity in a distributed fashion within a network without enforcing consensus. These approaches also solve an optimization problem whose network objective function is decomposable. One application of interest for swarm exploration is seismic imaging of subsurface structures. In particular, distributed subsurface imaging techniques based on full waveform inversion and traveltime tomography have recently been proposed that can be directly applied to decentralized multi-agent networks, see [22,23]. Full waveform inversion is a high-resolution geophysical imaging method based on the wave equation [24]. For a distributed implementation of this method, a global cost function is decomposed over the receivers, and local gradients and subsurface images are computed. Following the diffusion-based information exchange, these gradients and images are exchanged among the receivers in order to obtain a global estimate of the subsurface image.
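For contrast, a diffusion strategy can be sketched with the adapt-then-combine (ATC) diffusion LMS recursion: each node first takes a local LMS step on its own data and then averages the intermediate estimates of its neighbors, without any explicit consensus constraint. The ring topology, the step size `mu`, and the noise-free linear data model below are hypothetical choices made only to keep the example short.

```python
import numpy as np


def atc_diffusion_lms(w_true, A, mu=0.05, iters=2000, seed=0):
    """Adapt-then-combine diffusion LMS. A[l, k] is the weight node k assigns
    to neighbour l's intermediate estimate (each column sums to one)."""
    rng = np.random.default_rng(seed)
    K, dim = A.shape[0], w_true.size
    w = np.zeros((K, dim))  # one estimate per node
    for _ in range(iters):
        u = rng.standard_normal((K, dim))  # fresh regressor at every node
        d = u @ w_true                     # noise-free local measurements
        err = d - np.sum(u * w, axis=1)    # local a-priori errors
        psi = w + mu * err[:, None] * u    # adapt: local LMS step
        w = A.T @ psi                      # combine: w_k = sum_l A[l, k] * psi_l
    return w


# Usage: ring of four nodes, each combining itself and its two neighbours.
K = 4
A = np.zeros((K, K))
for k in range(K):
    for l in (k - 1, k, k + 1):
        A[l % K, k] = 1.0 / 3.0
w_true = np.array([0.7, -1.2])
w_est = atc_diffusion_lms(w_true, A)
```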

For the exploration of complex physical processes that are described by Partial Differential Equations (PDEs), classical approaches typically do not provide a direct assessment of the statistical quality of the estimated parameters. In contrast, Bayesian inference methods, which stem from the domain of machine learning [25], treat the parameters of interest as random variables. As such, parameter distributions are computed instead of point estimates. FGs can be used to describe probabilistic relationships between all model parameters [26], and parameter estimation is then realized using message passing schemes [27]. Bayesian tools have been used in the past for inverse PDE problems (see, e.g., [3,28]). In [3], the authors use FGs for inverse PDE modeling in a distributed setting and to localize gas sources based on concentration measurement samples. In essence, random variables represent the gas concentration in each mesh cell of the discretized PDE. An FG is then applied to capture temporal and spatial dependencies between the concentration variables.

Having inferred the model parameters, one can then design a movement planning strategy that exploits the statistics of the estimated model parameters to guide agents optimally to new, more informative sampling locations and thereby accelerate the exploration process. We categorize such strategies as exploitation. For example, methods of optimal experiment design [29] can be used for the optimal selection of sampling locations; in [30], they were used to plan optimal and safe trajectories for multiple agents. The work of [31] proposes information-driven approaches that guide agents based on mutual information or entropy. Furthermore, some swarm exploration approaches use (deep) Reinforcement Learning (RL) for the movement strategy of the agents [32,33,34]. However, the success of these methods relies heavily on the availability of suitable training data to learn an adequate movement strategy. Especially in applications with scarce training data, such approaches are likely to fail or perform unreliably in real environments: The use of synthetic training data introduces a model mismatch that is learned by the system. Furthermore, the learned behavior cannot easily be corrected a posteriori, since the underlying Deep Neural Network (DNN) is not interpretable. Therefore, in our framework, we focus on model-based approaches for exploration in scarce data regimes, as they typically involve fewer parameters to infer than purely data-driven methods.
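A minimal sketch of an information-driven selection rule, assuming a Gaussian-process (GP) surrogate with a squared-exponential kernel over a 1-D domain (both illustrative assumptions, not the models used in [29,30,31]). For a Gaussian predictive distribution the entropy is monotone in the variance, so maximizing entropy amounts to picking the candidate location with the largest posterior variance. The helper names `rbf` and `next_sampling_location` are hypothetical.

```python
import numpy as np


def rbf(a, b, length=1.0):
    """Squared-exponential covariance between 1-D location arrays a and b."""
    return np.exp(-0.5 * (a[:, None] - b[None, :]) ** 2 / length**2)


def next_sampling_location(sampled, candidates, noise_var=1e-2, length=1.0):
    """Return the candidate with the largest GP posterior variance, which for a
    Gaussian predictive distribution is also the one with the largest entropy."""
    K = rbf(sampled, sampled, length) + noise_var * np.eye(sampled.size)
    k_star = rbf(sampled, candidates, length)  # shape (n_sampled, n_candidates)
    # Posterior variance k(x, x) - k_*^T K^{-1} k_*, with k(x, x) = 1.
    post_var = 1.0 - np.sum(k_star * np.linalg.solve(K, k_star), axis=0)
    return candidates[np.argmax(post_var)], post_var


# Usage: three samples clustered near the origin of the domain [0, 10].
sampled = np.array([0.0, 1.0, 2.0])
candidates = np.linspace(0.0, 10.0, 101)
x_next, post_var = next_sampling_location(sampled, candidates)
```

The rule sends the agent away from the already sampled region; it ignores communication entirely, which is exactly the gap the communication-aware design in Section 4 targets.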

All aforementioned methods for distributed exploration and path planning rely heavily on agent-to-agent communication of data or messages. Hence, the quality of the inter-agent communication links has a direct impact on the exploration result. However, the majority of state-of-the-art methods for distributed exploration do not sufficiently take into account the erroneous nature of the communication links. Studies that do consider erroneous inter-agent links mostly integrate noise and link failures into the link model, see, e.g., [35,36], and then adapt the algorithmic solutions to these erroneous links.

So far, no framework has been proposed that unifies communication and distributed exploration and optimizes both realms with respect to the overall exploration target. It is here that we propose a framework for the joint design of inter-agent communication and exploration with respect to the exploration target, in order to develop more robust and flexible algorithmic solutions for distributed exploration.

2.2. Machine Learning for Communications

The probabilistic view often used in exploration is also vital for the field of communications. Describing both by probabilistic models allows us to connect exploration and communications closely. Since Claude Elwood Shannon laid the theoretical foundation of communications and information theory [37], probabilistic models have found their way not only into exploration but also into a field of prominent recent research interest: Artificial Intelligence (AI), in particular its subdomain Machine Learning (ML).

In the last decade, ML saw the emergence of powerful (probabilistic) models known as Deep Neural Networks (DNNs). Thanks to their ability to approximate functions arbitrarily well and to learn abstract features, DNNs have led to several breakthroughs in research areas where explicit domain knowledge is lacking but data can be collected, e.g., pattern recognition, generative modeling, and RL [38]. Although DNNs were previously considered intractable to optimize, automatic differentiation on dedicated Graphics Processing Units (GPUs) and innovative architectures now enable their data-driven training.

These impressive results, showing human-level or even superhuman performance, have not gone unnoticed by the communications community. Thus, much of the recent literature focuses on the data-driven design of the physical layer with DNNs, e.g., for wireless, molecular, and fiber-optical channels [38]. One prominent early example of such an approach is the autoencoder (AE), in which a complete communication system is interpreted as one DNN and trained end-to-end [39].

In wireless communications, a number of channel models have been proposed and are widely used, so the key gains from ML are expected in approximating optimal algorithmic structures that are otherwise numerically too complex to be realized (algorithm deficit). For example, the computational complexity of Maximum A-Posteriori (MAP) decoding of large block-length codes or of MAP detection, e.g., in massive Multiple Input Multiple Output (MIMO) systems, grows exponentially with the code/system dimensions. Using plain DNNs for decoding, for instance, can lower the decoding complexity while approximately maintaining the MAP error rate [40]. To improve generalization and reduce training complexity, more recent works focus on the idea of deep unfolding [41,42,43]. In deep unfolding, the parameters of a model-based iterative algorithm with a fixed number of iterations are untied across iterations and enriched with additional weights as well as non-linearities. The resulting DNN can be optimized for performance improvements in MIMO detection [42,44] and belief propagation decoding [42]. An example of an algorithm deficit at a higher level, beyond the physical layer, is resource allocation, where it is difficult to express the true objective function analytically or to find the global optimum. Here, deep RL has proven to be a suitable tool [45].
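To make the exponential growth concrete, the following sketch performs exhaustive maximum-likelihood (ML) detection of a BPSK vector in a toy $N \times N$ linear MIMO model $\mathbf{y} = \mathbf{H}\mathbf{x} + \mathbf{n}$; with equiprobable symbols and Gaussian noise, ML detection coincides with MAP detection. The channel, the alphabet, and the function name `ml_detect_bpsk` are illustrative assumptions; the point is only that the search visits $2^N$ candidates.

```python
import itertools

import numpy as np


def ml_detect_bpsk(y, H):
    """Exhaustive ML detection of x in {-1, +1}^N from y = H x + n.
    With equiprobable symbols and Gaussian noise, ML equals MAP."""
    N = H.shape[1]
    best, best_metric, visited = None, np.inf, 0
    for cand in itertools.product((-1.0, 1.0), repeat=N):  # all 2^N hypotheses
        x = np.array(cand)
        metric = np.sum((y - H @ x) ** 2)  # squared Euclidean distance
        visited += 1
        if metric < best_metric:
            best, best_metric = x, metric
    return best, visited


# Usage: noise-free 8 x 8 toy channel; the search still visits 2^8 candidates.
rng = np.random.default_rng(1)
N = 8
H = rng.standard_normal((N, N))
x_true = rng.choice([-1.0, 1.0], size=N)
x_hat, visited = ml_detect_bpsk(H @ x_true, H)
```

Doubling `N` squares the number of visited candidates, which is why approximations such as DNN-based or unfolded detectors become attractive for large systems.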

Semantic Communication

In contrast to wireless channels, molecular and fiber-optical channels suffer from a model deficit, i.e., accurate yet tractable channel models are lacking. A model deficit also applies in particular to the topic of this article: the integration of semantic context, here exploration, into communication system design. The idea of semantic communication emerged in the early 1950s [46,47,48] but has only recently attracted considerable research interest with the rise of ML applications to the physical layer [5,6,7,8,9].

The notion traces back to Weaver [46], who reviewed Shannon’s information theory [37] in 1949 and added considerations regarding the semantic content of messages. Often quoted is his statement that “there seem to be [communication] problems at three levels” [46]:

- Level A: How accurately can the symbols of communication be transmitted? (The technical problem).

- Level B: How precisely do the transmitted symbols convey the desired meaning? (The semantic problem).

- Level C: How effectively does the received meaning affect conduct in the desired way? (The effectiveness problem).

Weaver recognized the broad applicability of Shannon’s theory as early as 1949 and argued that the theory developed at Level A is general enough to cover all levels [49].

Weaver's generic model was revisited by Bao, Basu et al. in [48,50], where the authors define semantic information sources and semantic channels. In [48], the authors consider joint semantic compression and channel coding at Level B with the classic transmission system, i.e., Level A, as the (semantic) channel. In this way, they derive semantic counterparts of the source and channel coding theorems.

Recently, drawing inspiration from Weaver, Bao, Basu et al. [46,48,50] and enabled by the rise of ML in communications research, DNN-based natural language processing techniques, in particular transformer networks, have been introduced into AEs for text and speech transmission [11,51,52,53]. These techniques learn compressed hidden representations of the semantic content of sentences to improve communication efficiency, while exact recovery of the source (text) remains the main objective. This leads to performance improvements in semantic metrics compared to classical digital transmission, especially at low Signal-to-Noise Ratio (SNR).

Nevertheless, semantic communication is still a nascent field: It remains unclear what exactly the term means and, in particular, how it differs from Joint Source-Channel Coding (JSCC) [9,51,54]. As a result, many survey papers aim to provide an interpretation, see, e.g., [5,6,7,8,9].

In summary, both model and algorithm deficits apply to the open research topic of joint modeling or integrated design of communications and exploration. Therefore, we believe that the ability of ML-based design to handle such deficits is crucial for developing a first prototype of tight integration, as outlined in our main contribution. Further, the inherent flexibility of DNNs should allow for quick design iterations.

3. Distributed Exploration Problem

In the following, we briefly describe the exploration problem, which requires data exchange for a distributed solution and therefore motivates a unified framework with communications. Consider a multi-agent system (a swarm) of $L$ autonomous mobile agents. Their objective is to learn model parameters of an unknown process $u(\mathbf{x},t)$ by taking samples of $u(\mathbf{x},t)$ at different locations and times (see Figure 1). Here, $\mathbf{x}$ is a spatial coordinate vector and $t$ is time. Exploration is understood as the inference of all (or some) relevant process parameters, such as positions of physical sources and material or medium parameters, that cannot be directly observed from measurements. In this work, we assume that the process of interest is described by a PDE. Hence, the physical quantity at position $\mathbf{x}$ and time $t$ can be modeled by a function $u(\mathbf{x},t) = F(\mathbf{x},t;\boldsymbol{\theta})$, where $\boldsymbol{\theta}$ is a parameter vector of the PDE and the function $F$ computes the forward solution of the PDE for $\boldsymbol{\theta}$. For instance, in the wave equation, $\boldsymbol{\theta}$ can describe the spatial distribution of the wave velocity; in the diffusion equation, it can describe the locations of diffusive sources. The exploration problem can then be formulated in generic terms as an inverse parameter estimation or optimization problem over all agents:

$$\min_{\boldsymbol{\theta}_1,\dots,\boldsymbol{\theta}_L} \; \sum_{l=1}^{L} J_l(\boldsymbol{\theta}_l), \tag{1}$$

where the variable $\boldsymbol{\theta}_l$ is the estimate of $\boldsymbol{\theta}$ at agent $l$. The function $J_l(\cdot)$ is the local cost at agent $l$ and usually evaluates a residual error between measured samples of $u(\mathbf{x},t)$ and estimated samples generated using the forward solution $F(\mathbf{x},t;\boldsymbol{\theta}_l)$ of the PDE. For a consensus solution, one usually adds the constraint $\boldsymbol{\theta}_1 = \boldsymbol{\theta}_2 = \dots = \boldsymbol{\theta}_L$. Doing so enforces convergence to the same parameter estimate for all agents in the network and results in iterative updates that require data from neighboring agents. Hence, solutions to the corresponding optimization problem naturally require agents to cooperate, which includes baseband/physical-layer communication of processing results between the agents.

As an example, for the distributed full waveform inversion proposed in [22], the local cost is the squared residual between the measured seismic response and synthetically generated seismic data based on the local model $\boldsymbol{\theta}_l$. To evaluate this residual, synthetic seismic data need to be generated, i.e., the wave equation needs to be solved in a forward manner by computing $F(\mathbf{x},t;\boldsymbol{\theta}_l)$. Then, to enable a distributed estimation of the global model $\boldsymbol{\theta}$, gradients and models are exchanged among connected agents and fused locally.
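The exchange-and-fuse pattern can be sketched, under simplifying assumptions, with a decentralized gradient descent (DGD) update: every agent first fuses its neighbors' current models with fixed combination weights and then descends along its own local gradient. The quadratic local costs stand in for the far more expensive full-waveform-inversion residuals of [22], and the ring topology, the step size, and the function name `dgd` are hypothetical.

```python
import numpy as np


def dgd(A_list, b_list, W, step=0.01, iters=5000):
    """Decentralized gradient descent for sum_l 0.5 * ||A_l theta - b_l||^2.
    Row l of W holds the fusion weights agent l applies to its neighbours."""
    theta = np.zeros((len(A_list), A_list[0].shape[1]))  # one model per agent
    for _ in range(iters):
        # Local gradients, each computed only from the agent's own data.
        grads = np.stack([A.T @ (A @ th - b) for A, b, th in zip(A_list, b_list, theta)])
        theta = W @ theta - step * grads  # fuse neighbours' models, then descend
    return theta


# Usage: ring of four agents with equal weights on itself and two neighbours.
rng = np.random.default_rng(2)
theta_true = np.array([0.3, -1.0, 2.0])
A_list = [rng.standard_normal((10, 3)) for _ in range(4)]
b_list = [A @ theta_true for A in A_list]
W = np.zeros((4, 4))
for l in range(4):
    for k in (l - 1, l, l + 1):
        W[l, k % 4] = 1.0 / 3.0
theta = dgd(A_list, b_list, W)
```

The example converges exactly only because all local costs share the same minimizer (noise-free, consistent data); with inconsistent local data, DGD with a constant step size reaches only a neighborhood of the optimum.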

As mentioned earlier, the main objective of this study is the design of a joint framework that targets the exploration problem while considering the underlying imperfect communication conditions between the agents. To this end, we integrate both communications and exploration into a unifying framework by using FGs in a probabilistic setting.

4. Proposed Framework

As the main contribution of this article, we now describe in more detail a new design approach that considers communications and exploration jointly. To make the exploration “communication-aware” and communications “exploration-aware”, we use tools of probabilistic learning and FGs [26,27].

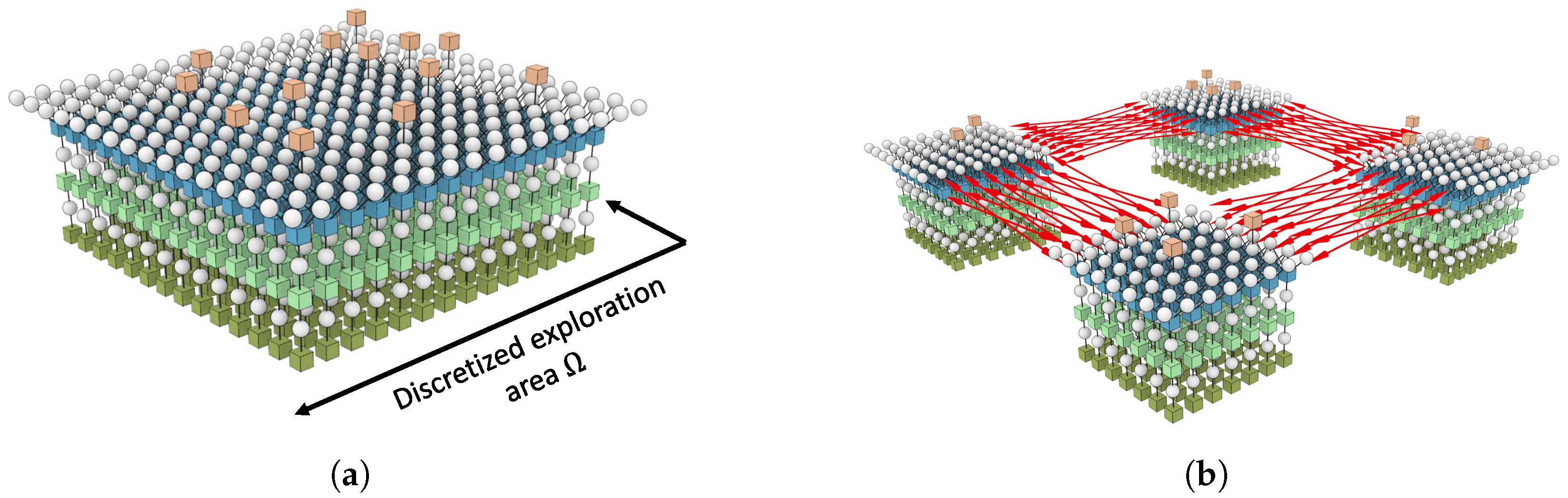

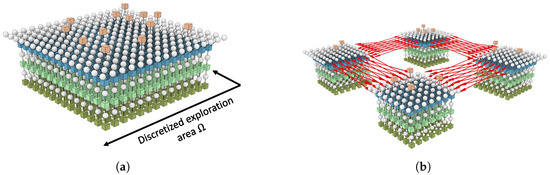

4.1. Design Approach: Factor Graphs

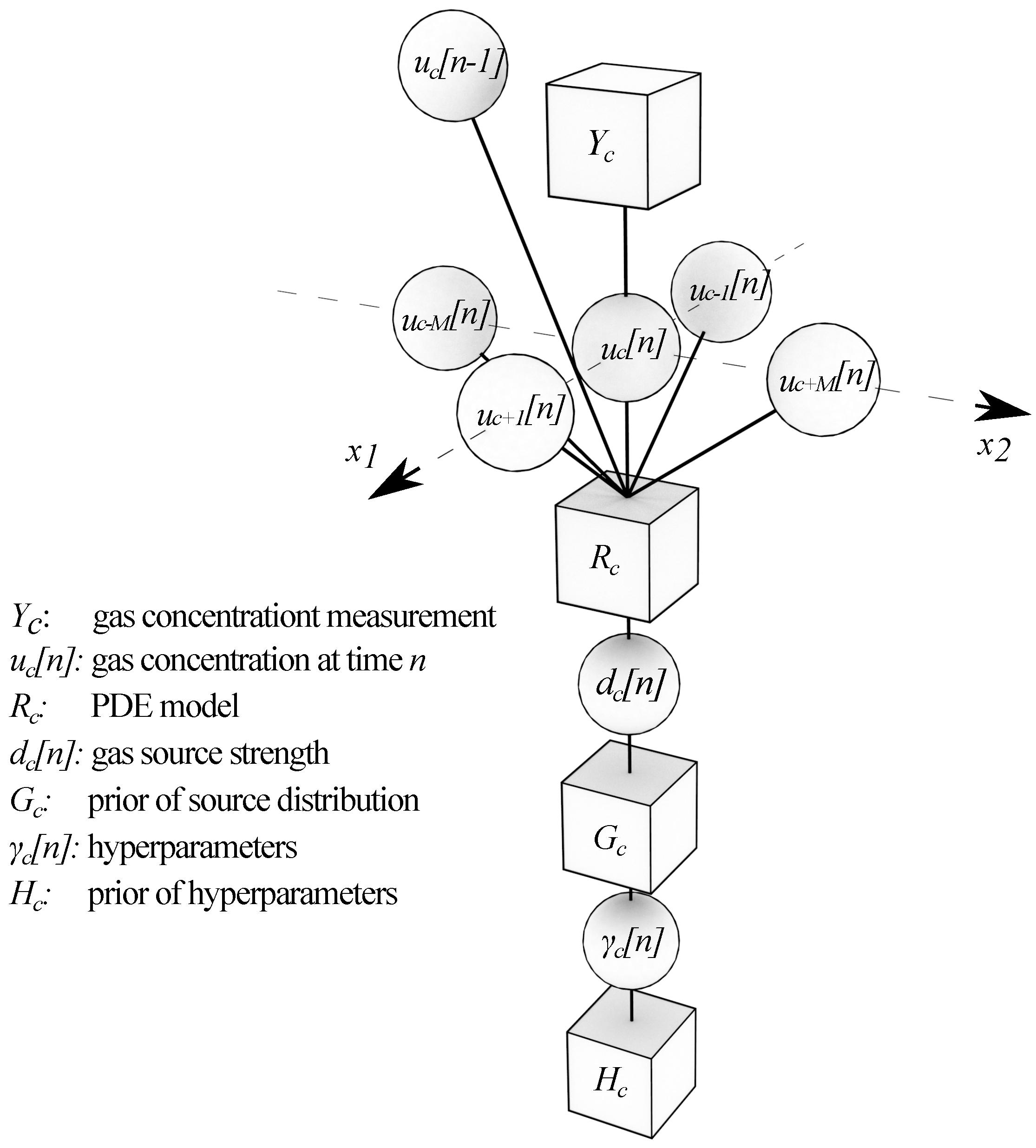

We first illustrate how FGs can be used to solve inverse PDE problems in a distributed fashion. In [55], the PDE given by the diffusion equation was modeled by an FG, and the parameter of interest, namely the position of a diffusive source, was inferred by a message passing algorithm over the FG. To enable a distributed implementation in a multi-agent network, the FG was split along its connections such that each agent performs inference within a specific geometric region. An illustration of this approach is shown in Figure 3: Figure 3a shows the complete FG over a geometric region of interest, which models the gas concentration at a specific position. The multiple layers of nodes describe the probabilistic relationship of the gas diffusion in the respective grid cell, while skin-colored nodes at the top indicate that measurements of gas concentration have been taken at the respective positions. Details regarding the probabilistic modeling are described in Section 5.1.1. To enable distributed inference over this FG, the FG is split into several sub-graphs, as can be seen in Figure 3b. Each sub-graph is then assigned to one agent, which infers the parameters of interest using approximate message passing schemes, see, e.g., [56]. The red arrows between the sub-graphs indicate where information needs to be exchanged between agents.

Figure 3.

(a) FG model of a discretized PDE describing a gas diffusion process at a fixed time instant over grid cells in the spatial domain $\Omega$. At each grid cell, a layer of nodes describes the probabilistic relationship of the gas diffusion process in this specific cell. The skin-colored nodes at the top represent measurements of gas concentration at a specific location. (b) The FG model is partitioned into four smaller FGs on four agents to enable distributed inference. Red arrows indicate where information needs to be exchanged between the smaller FGs in order to realize inference.
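The bookkeeping behind a split like the one in Figure 3b can be sketched as follows, assuming a regular grid whose cells are coupled to their four direct neighbors (as in a five-point finite-difference stencil) and a block-wise assignment of cells to four agents. Both assumptions and the helper names `partition_grid` and `inter_agent_couplings` are hypothetical; the sketch only identifies which couplings cross agent boundaries, i.e., where messages must travel over communication links.

```python
def partition_grid(nx, ny, ax=2, ay=2):
    """Assign each cell (i, j) of an nx-by-ny grid to one of ax * ay agents
    by cutting the grid into rectangular blocks."""
    return {
        (i, j): (i * ax // nx) * ay + (j * ay // ny)
        for i in range(nx)
        for j in range(ny)
    }


def inter_agent_couplings(nx, ny, owner):
    """List the 4-neighbour couplings whose two cells belong to different
    agents; messages along these couplings must cross a communication link."""
    cut = []
    for i in range(nx):
        for j in range(ny):
            for di, dj in ((1, 0), (0, 1)):  # visit each coupling only once
                ni, nj = i + di, j + dj
                if ni < nx and nj < ny and owner[(i, j)] != owner[(ni, nj)]:
                    cut.append(((i, j), (ni, nj)))
    return cut


owner = partition_grid(6, 6)
cut = inter_agent_couplings(6, 6, owner)
```

For this 6 x 6 grid, 12 of the 60 couplings cross agent boundaries; each of them corresponds to a place where a communication block would have to be inserted.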

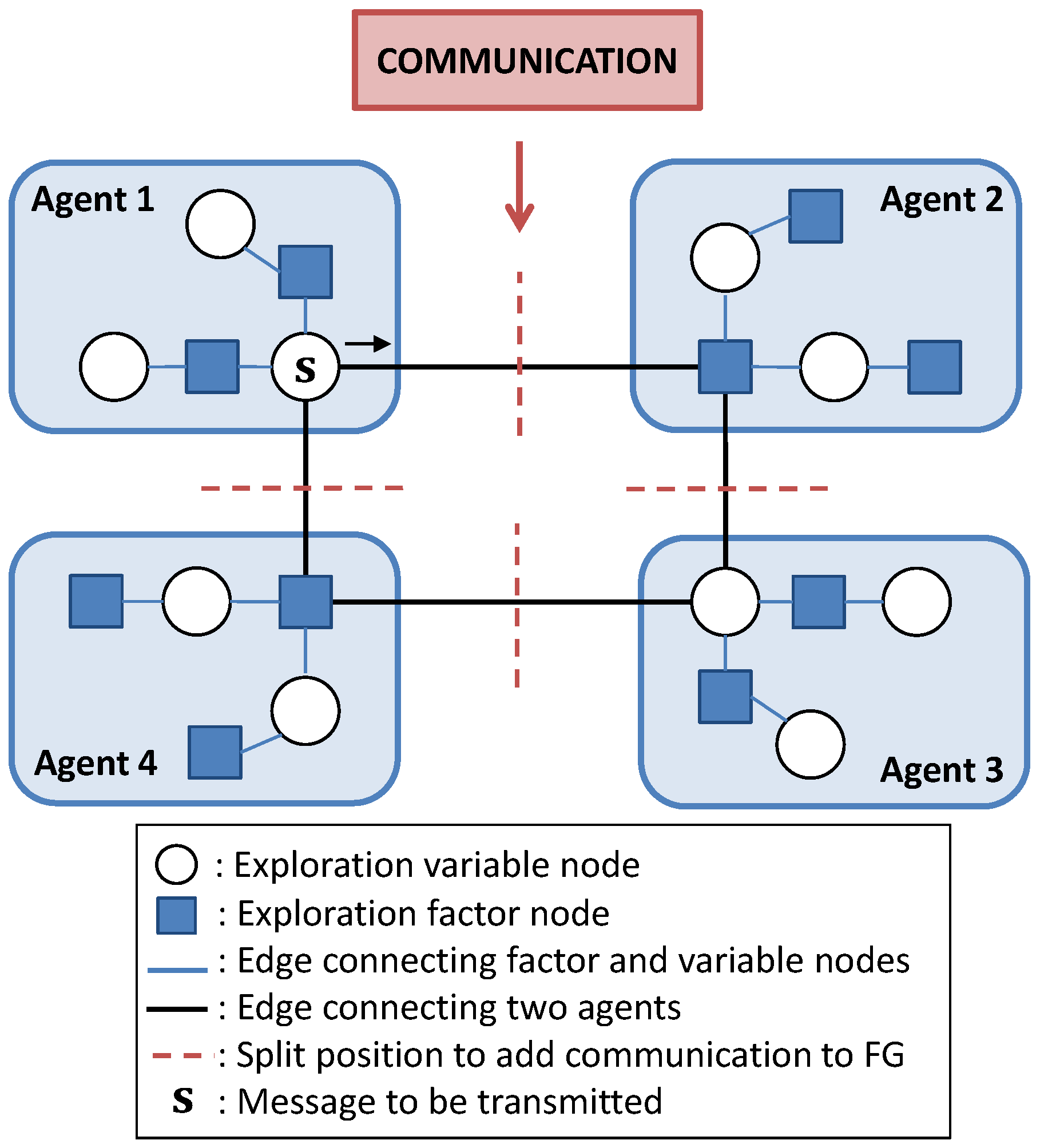

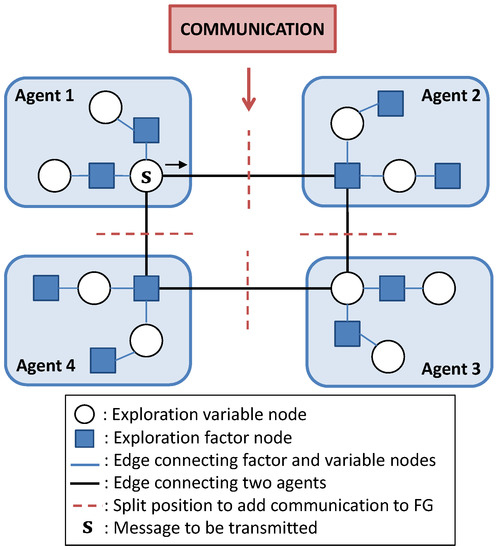

To connect both realms of exploration and communication, we propose to use FGs for both the exploration and the communication blocks. This is illustrated in Figure 4: Each agent owns a local graph that is part of a global FG distributed over all agents, analogous to Figure 3b. The global FG models spatial and temporal interrelations of a PDE over the geometric area $\Omega$. Hence, each agent is responsible for the inference of the PDE over a sub-region of $\Omega$. In the local FG of each agent, a variable node contains the quantity to be exchanged with neighboring agents. Data needs to be exchanged along each edge between two agents; hence, a block representing the communication FG is placed between two connected agents. Since the communication block is also described by means of an FG (see [57] for an example), it can be readily placed between the FGs of two agents. Note that the specific structure of the FG depends on whether an agent is transmitting or receiving, because an FG is bipartite: a variable node is always connected to a factor node and vice versa. With this approach, it remains to be shown how the communication FG is implemented and integrated.

Figure 4.

A schematic diagram of a joint FG for exploration and communication. The split of the FG w.r.t. spatially separated and distributed agents requires the inclusion of communications.

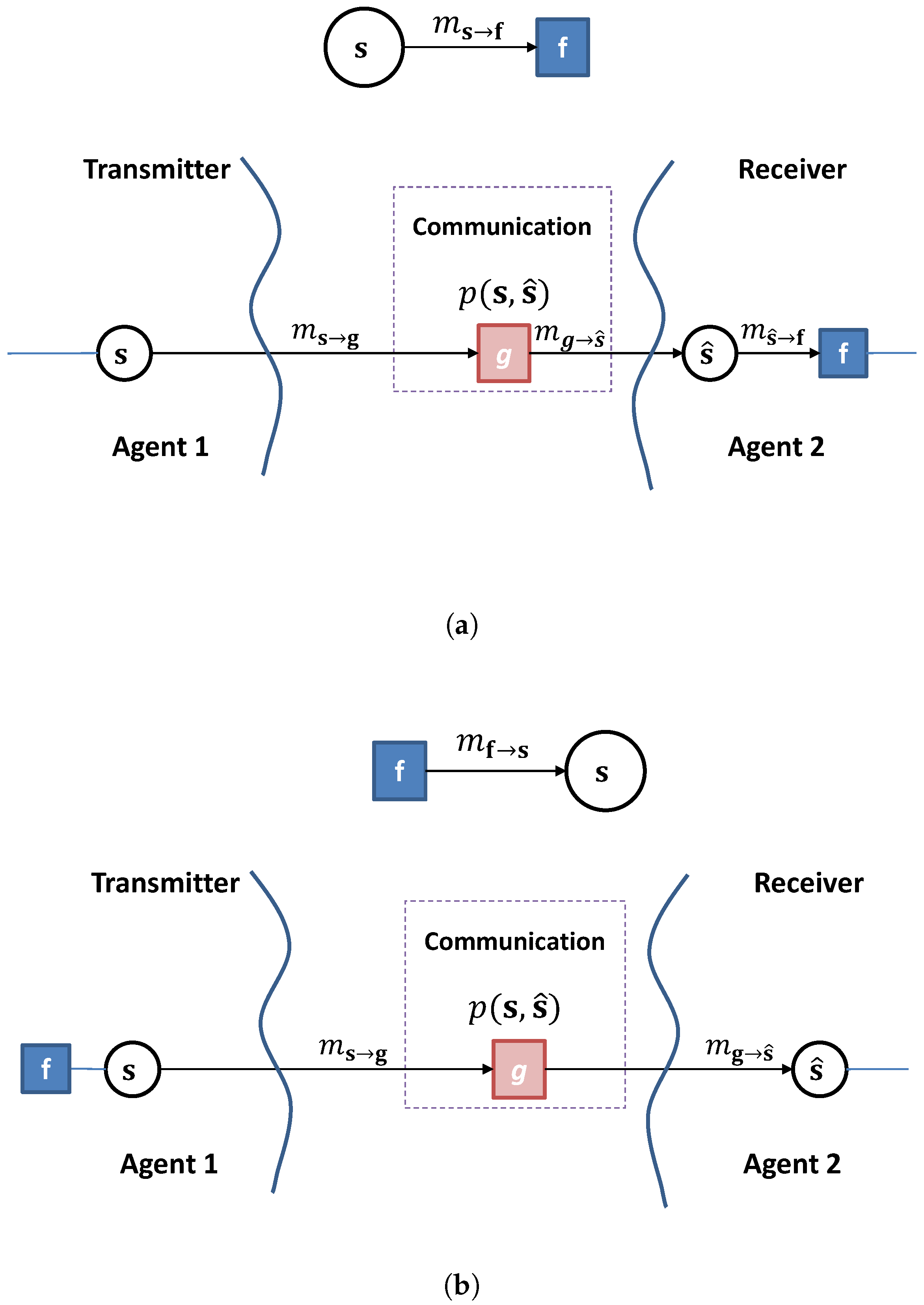

4.2. Integration of Communication as Factor Node

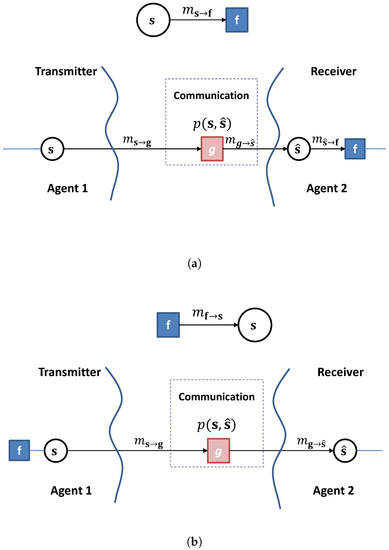

To illustrate a possible integration of the communication FG into the exploration FG, consider the following: In the distributed setting, each agent implements a message passing algorithm over its local FG. In general, inference on FGs requires the exchange of two types of messages: messages $\mu_{x\to f}$ from a variable node $x$ to a factor node $f$, and messages $\mu_{f\to x}$ from a factor node $f$ to a variable node $x$ (see Figure 5). We now include the physical transmission of a variable-to-factor message by incorporating a factor node $g$ into the FG. This factor represents the full communication chain between two agents. To properly account for the new factor, we modify the graph as shown in Figure 5a: A message is now sent from variable node $x$ to the communication factor node $g$ between Agent 1 and Agent 2. At the receiver side, a new variable $\hat{x}$ represents the received message; it is set to the belief of the received and decoded message. The transmission of a factor-to-variable message is constructed similarly, yet this time the transmitter is augmented with a “copy” of a variable that effectively belongs to the receiver FG of Agent 2 (see Figure 5b). With the modified FG shown in Figure 5, communication is fully integrated into the exploration FG. The whole communication chain, including transmitter, channel, and receiver, is hence modeled by a factor node $g$ that reflects the communication uncertainties in a probability density function $p(\hat{x} \mid x)$. Beyond point estimates, this information makes the estimation process more informative, e.g., by allowing approximations of, or bounds on, learned parameters to be exchanged. All of these enable the exploration to be “communication-aware”.

Figure 5.

Example of possible modifications to the FG to integrate communication as a factor node between Agent 1 and Agent 2. (a) FG for transmitting the message from Agent 1 to Agent 2. (b) FG for transmitting the message from Agent 1 to Agent 2.
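A minimal numerical sketch of this idea, assuming (hypothetically) that the whole communication chain can be summarized by a scalar Gaussian factor linking the transmitted variable $x$ and its received counterpart $\hat{x}$, i.e., $\mathcal{N}(\hat{x}; x, \sigma_{\mathrm{c}}^2)$, and that all messages are Gaussian. Passing a message through this factor inflates its variance by $\sigma_{\mathrm{c}}^2$, and the receiver's product with its local belief then automatically down-weights information that arrived over a poor link. The function names and numbers are illustrative.

```python
def through_link(mean, var, link_var):
    """Sum-product message out of the Gaussian factor
    g(x_hat, x) = N(x_hat; x, link_var) when the incoming message on x is
    N(mean, var): integrating out x adds the link variance."""
    return mean, var + link_var


def fuse(m1, v1, m2, v2):
    """Product of two Gaussian messages at the receiver: precisions add and
    the mean is the precision-weighted average."""
    prec = 1.0 / v1 + 1.0 / v2
    return (m1 / v1 + m2 / v2) / prec, 1.0 / prec


local = (0.0, 1.0)  # Agent 2's own belief (mean, variance)
sent = (2.0, 1.0)   # Agent 1's message before transmission
good = fuse(*local, *through_link(*sent, link_var=0.0))   # ideal link
bad = fuse(*local, *through_link(*sent, link_var=99.0))   # very noisy link
```

Over the ideal link the receiver's belief moves halfway toward the incoming message, whereas over the noisy link it barely changes, which mirrors the behavior argued for in the following paragraph.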

For the transmission of the exchanged variable, we propose to use semantic communication that is aware of its importance or relevance. For instance, if data is transmitted over a highly unreliable communication channel between two agents, the receiving agent should be aware of the poor data quality and take this information into account in its local inference. The agent can either assign a very low priority to the received data in the inference procedure or discard it completely. Poor data quality can also indicate that the agent should change its position to improve the condition of the communication channel. If data received from another agent is highly relevant, the receiving agent should adjust its position such that the communication condition is kept stable or improved. As such, intelligent exploration strategies can be designed that select new sampling locations by optimizing the relevance/confidence of the variables of interest.
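One simple, purely illustrative way to make the choice of a new sampling location communication-aware is to multiply the expected information gain of each candidate by a link-quality term. Below, the link quality to a partner agent is modeled hypothetically as $\exp(-d/d_0)$ in the distance $d$; the values of `info_gain` and `d0` are invented for the example and are not taken from any measurement.

```python
import numpy as np


def comm_aware_choice(candidates, info_gain, partner_pos, d0=5.0):
    """Pick the candidate maximizing information gain times a (hypothetical)
    link-quality model exp(-distance / d0) to a partner agent."""
    link_quality = np.exp(-np.abs(candidates - partner_pos) / d0)
    score = info_gain * link_quality
    return candidates[np.argmax(score)], score


candidates = np.array([1.0, 5.0, 20.0])
info_gain = np.array([0.2, 0.5, 1.0])        # invented values for illustration
best, score = comm_aware_choice(candidates, info_gain, partner_pos=0.0)
agnostic = candidates[np.argmax(info_gain)]  # communication-agnostic choice
```

The communication-agnostic rule would send the agent to the most informative but poorly connected location at 20.0, whereas the combined score prefers 5.0, where the gathered data can still reach the partner.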

5. Key Challenges and Possible Approaches

5.1. Exploration

In the design approach from Section 4, we proposed the use of FGs to model the underlying PDE of the physical process and to incorporate inter-agent communication into the exploration procedure as factor and variable nodes. From the proposed design approach, we derive the following two key challenges w.r.t. a “communication-aware” exploration:

- Probabilistic description of PDE by factor graphs: How to describe the PDE model in a Bayesian framework and conduct inference using FGs?

- Process prediction for exploitation: How to design a “communication-aware” exploration objective to determine new measurement positions for the agents?

These challenges give rise to the following possible approaches for the exploration framework.

5.1.1. Probabilistic Description of PDE by Factor Graphs

For the exploration of the physical process $u(\mathbf{x},t)$, we first need to numerically solve the PDE that describes this process. To this end, a broad variety of methods is available in the literature, e.g., Finite-Difference Methods (FDM) and Finite Element Methods (FEM). FEM allows for higher flexibility in spatial resolution, since it operates on unstructured meshes that can be refined locally. Based on the discretized PDE, one can employ an FG in the Bayesian framework to model and eventually solve the PDE. Here, variable nodes represent parameter estimates at the corresponding mesh vertices (when using Lagrange elements in FEM). Factor nodes describe the spatial interrelation between two mesh vertices as modeled by the PDE. The complexity of the mesh directly influences the size of the FG, since the number of mesh vertices corresponds to the number of variable nodes. Hence, it is crucial to optimize the FEM mesh, e.g., by removing redundant vertices. Here, adaptive discretization methods that learn to change the mesh depending on the spatial resolution required in certain areas of the physical process could be investigated.

As an example of how an inverse PDE problem can be described and solved using FGs, we consider the exploration of a gas field. Details can be found in [55]. The gas field is described by the diffusion equation

$$\frac{\partial f(\mathbf{r},t)}{\partial t} - \kappa \nabla^2 f(\mathbf{r},t) = u(\mathbf{r},t), \tag{2}$$

where $f(\mathbf{r},t)$ is the gas concentration at position $\mathbf{r}$ and time $t$, $\kappa$ is a gas diffusion coefficient, $u(\mathbf{r},t)$ is the gas source distribution, and $\nabla^2$ is the spatial Laplace operator. The problem in gas exploration is to determine both $f$ and $u$ from gas concentration measurements at distinct sampling positions. To this end, the diffusion Equation (2) first needs to be solved numerically. As mentioned before, FDM or FEM can be used for this purpose. The authors of [55] used FDM, yet independent of the discretization used, we obtain a system of linear equations that needs to be solved. In the case of FDM, the unknowns of this system are the concentration and the source strength in each cell of the discretized spatial domain. We collect these concentration values and source strengths in the vectors $\mathbf{f}_n$ and $\mathbf{u}_n$, respectively, at discrete time index $n$. If we define a measurement vector $\mathbf{y}_n$ that stacks the measurements of the $L$ agents in the network, we can model the gas measurement process via

$$\mathbf{y}_n = \mathbf{M}_n \mathbf{f}_n + \boldsymbol{\varepsilon}_n, \tag{3}$$

where $\mathbf{M}_n$ is a spatial sampling matrix that selects the respective gas concentration values from $\mathbf{f}_n$ at the agent positions and $\boldsymbol{\varepsilon}_n$ is zero-mean white Gaussian noise.
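To make the resulting linear system tangible, the following minimal sketch discretizes a 1D version of the diffusion equation with an explicit (forward Euler) finite-difference scheme and then applies a binary sampling matrix to obtain noisy agent measurements. The 1D grid, the zero-flux boundaries, and all parameter values are illustrative assumptions and are not taken from [55].

```python
import random

def fdm_step(f_prev, u, kappa, dt, dx):
    """One explicit (forward Euler) FDM step of df/dt = kappa * d2f/dx2 + u on a 1D grid.

    Zero-flux boundaries are modeled by mirroring the boundary cell.
    Stability of the explicit scheme requires kappa * dt / dx**2 <= 0.5.
    """
    n_cells = len(f_prev)
    f_next = []
    for c in range(n_cells):
        left = f_prev[c - 1] if c > 0 else f_prev[c]
        right = f_prev[c + 1] if c < n_cells - 1 else f_prev[c]
        laplacian = (left - 2.0 * f_prev[c] + right) / dx**2
        f_next.append(f_prev[c] + dt * (kappa * laplacian + u[c]))
    return f_next

def sampling_matrix(agent_cells, n_cells):
    """Binary matrix with one row per agent that selects the concentration of its cell."""
    return [[1.0 if c == cell else 0.0 for c in range(n_cells)] for cell in agent_cells]

def measure(M, f, noise_std, rng):
    """Noisy measurements y = M f + eps with zero-mean white Gaussian eps."""
    return [sum(m_c * f_c for m_c, f_c in zip(row, f)) + rng.gauss(0.0, noise_std)
            for row in M]

# Hypothetical setup: 20 cells, one constant source in cell 5, agents in cells 5 and 15
n_cells = 20
u = [0.0] * n_cells
u[5] = 1.0
f = [0.0] * n_cells
for _ in range(200):
    f = fdm_step(f, u, kappa=0.1, dt=0.1, dx=1.0)

M = sampling_matrix([5, 15], n_cells)
y = measure(M, f, noise_std=0.01, rng=random.Random(0))
print(y)
```

Each step is linear in the concentration and source vectors, which is why the discretized PDE leads to a linear system. With zero-flux boundaries, the total mass grows by `dt` times the total source strength per step, which gives a simple sanity check.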

Now, to transfer the gas exploration problem into a probabilistic framework, we first model the discretized gas concentration $\mathbf{f}_n$ and gas source strength $\mathbf{u}_n$ as random vectors. Then we model the gas measurement stage via a Gaussian probability density function (pdf) $p(\mathbf{y}_n \mid \mathbf{f}_n)$, which follows from the Gaussian noise assumption in the measurement model. Furthermore, we define a conditional pdf of the gas concentration, denoted as $p(\mathbf{f}_n \mid \mathbf{f}_{n-1}, \mathbf{u}_n)$, that represents the discretized PDE. Additionally, we define two prior pdfs, one for the initial gas concentration $p(\mathbf{f}_0)$ and one for the source $p(\mathbf{u}_n \mid \boldsymbol{\gamma})$. For the initial gas concentration, we use a Gaussian pdf with zero mean and high variance, since in the beginning no information about the gas concentration is available. For the source prior, we assume that gas sources are spatially sparse and make use of sparse Bayesian learning techniques from the domain of compressed sensing [58]. To this end, hyperparameters $\gamma_c$ are introduced that describe the precision of the prior of the source distribution in each cell $c$. They are also random variables that need to be estimated. In other words, for the source strength $u_{c,n}$ in cell $c$, a Gaussian pdf with variance $\gamma_c^{-1}$ is assumed, and for $\gamma_c$, a Gamma pdf is selected. A detailed derivation of this approach is given in [55]. In short, the hierarchical prior favors sparse source distributions. Based on these pdfs, one can then formulate the desired posterior pdf using Bayes' theorem:

$$p(\mathbf{f}_{0:N}, \mathbf{u}_{1:N}, \boldsymbol{\gamma} \mid \mathbf{y}_{1:N}) \propto p(\mathbf{f}_0) \prod_{c=1}^{C} p(\gamma_c) \prod_{n=1}^{N} p(\mathbf{y}_n \mid \mathbf{f}_n)\, p(\mathbf{f}_n \mid \mathbf{f}_{n-1}, \mathbf{u}_n) \prod_{c=1}^{C} p(u_{c,n} \mid \gamma_c), \tag{4}$$

for a total of $N$ time steps and $C$ mesh cells in the discretized spatial domain.
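The claim that the hierarchical Gaussian-Gamma prior favors sparse source distributions can be checked numerically. Integrating out a Gamma-distributed precision $\gamma$ (shape $a$, rate $b$) from a zero-mean Gaussian source prior yields a Student-t marginal for the source strength $u$ of a cell. The sketch below, with arbitrarily chosen $a$, $b$ and toy source vectors (assumptions, not values from [55]), compares a sparse and a dense source vector of equal energy: a plain Gaussian prior cannot distinguish them, whereas the marginalized hierarchical prior assigns a higher density to the sparse one.

```python
import math

def gaussian_logpdf(u, precision):
    """log N(u | 0, 1/precision)."""
    return 0.5 * math.log(precision / (2 * math.pi)) - 0.5 * precision * u * u

def student_t_logpdf(u, a, b):
    """log of the integral of N(u | 0, 1/gamma) * Gamma(gamma | shape a, rate b) over gamma."""
    return (math.lgamma(a + 0.5) - math.lgamma(a) + a * math.log(b)
            - 0.5 * math.log(2 * math.pi) - (a + 0.5) * math.log(b + 0.5 * u * u))

def log_prior(vec, logpdf, **params):
    """Independent prior per cell: sum of the per-cell log densities."""
    return sum(logpdf(u, **params) for u in vec)

sparse = [3.0, 0.0, 0.0, 0.0]  # one active source cell
dense = [1.5, 1.5, 1.5, 1.5]   # same squared norm (9.0), spread over all cells

gauss_gap = (log_prior(sparse, gaussian_logpdf, precision=1.0)
             - log_prior(dense, gaussian_logpdf, precision=1.0))
sbl_gap = (log_prior(sparse, student_t_logpdf, a=0.5, b=0.5)
           - log_prior(dense, student_t_logpdf, a=0.5, b=0.5))
print(f"Gaussian prior:     log p(sparse) - log p(dense) = {gauss_gap:.3f}")
print(f"Hierarchical prior: log p(sparse) - log p(dense) = {sbl_gap:.3f}")
```

The heavy tails and the sharp peak at zero of the Student-t marginal are what make many near-zero cells plus a few large ones more probable than many moderate values.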

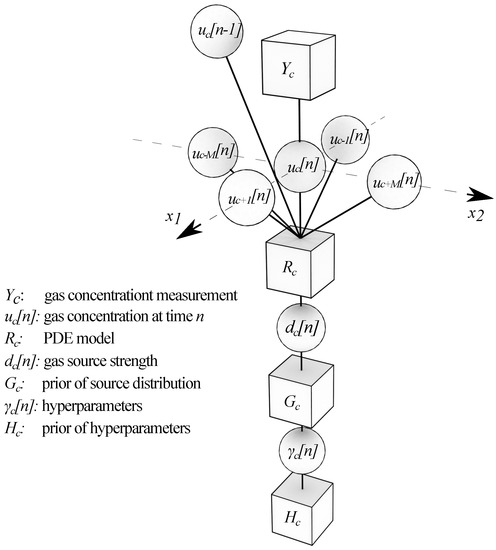

The posterior pdf can be represented graphically by an FG. Figure 6 shows the part of the FG that describes the relations of one cell. Here, variable nodes are shown as circles and represent the random variables of concentration, source strength, and hyperparameter in cell $c$ at time instant $n$. The measurement factor node models a measurement taken in cell $c$ and corresponds to the likelihood function of this measurement. The PDE factor node describes the PDE model discretized with the finite-difference method: it relates the concentration value in cell $c$ to those in neighboring grid cells and at preceding time instants. Furthermore, it relates the source strength in cell $c$ to the concentration according to the right-hand side of the PDE. The remaining two factors represent the parametric prior of the sparse source distribution and the prior of the hyperparameters.

Figure 6.

Factor graph representation of posterior pdf for one grid cell c in the spatial domain under consideration.

To perform inference on the FG, i.e., to obtain the marginals of the posterior pdf, one can use message passing. Here, messages that represent pdfs are exchanged between factor and variable nodes. Through an iterative exchange of such messages, the belief at each variable node, i.e., the product of its incoming messages, converges to the marginal distribution of the respective variable (exactly on tree-structured FGs and approximately on FGs with cycles). For the FG model in Figure 6, the messages connected to the measurement and PDE factors can be calculated using the sum-product algorithm. These messages are Gaussian pdfs, which are parameterized by mean and variance. In particular, the mean and precision of each message can be computed in closed form and are the only quantities that need to be communicated over the edges. We collect the messages, or rather their characterizing parameters, in a message vector. In contrast, messages for the two prior factors of the source and the hyperparameters are not computable in closed form. However, variational message passing [56] can be used to obtain an analytical approximation of these specific messages, cf. [55]. To enable distributed inference, the complete FG covering all mesh cells can be partitioned into several parts, each corresponding to a different 2D region of the spatial domain, as shown previously in Figure 3.
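For Gaussian messages, the sum-product computations at a variable node reduce to simple arithmetic on exactly the parameters that are communicated: precisions add up, and the mean is the precision-weighted average of the incoming means. A minimal sketch of the belief and of an outgoing message, using a hypothetical `GaussianMessage` type that is not part of [55]:

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class GaussianMessage:
    mean: float
    precision: float  # inverse variance

def multiply(messages):
    """Normalized product of Gaussian messages, e.g., the belief at a variable node."""
    precision = sum(m.precision for m in messages)
    mean = sum(m.precision * m.mean for m in messages) / precision
    return GaussianMessage(mean, precision)

def outgoing(messages, exclude):
    """Sum-product message from a variable node to neighbor `exclude`:
    the product of all incoming messages except the one from that neighbor."""
    return multiply([m for i, m in enumerate(messages) if i != exclude])

incoming = [GaussianMessage(1.0, 1.0), GaussianMessage(3.0, 1.0), GaussianMessage(2.0, 2.0)]
belief = multiply(incoming)
to_first = outgoing(incoming, exclude=0)
print(belief, to_first)
```

Only two floats per message need to cross an agent boundary, which is what makes the communicated message vector compact.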

5.1.2. Process Prediction for Exploitation

The second key challenge concerns the design of a communication-aware exploration criterion that decides on the optimal sampling positions of the agents. In particular, the exploration criterion needs to account for the reliability of inter-agent communication, guiding the agents to measurement positions that are both informative about the physical process and reliable in terms of communication. Hence, this criterion needs to respect both exploration objectives and communication constraints. To this end, we intend to utilize the FG to predict process properties or message/variable certainties at arbitrary measurement locations. These predictions are essential for exploitation and should include uncertainty measures from communications; they form a formal basis for determining new measurement locations of the agents. Such uncertainty measures are contained in the conditional pdf $p(\mathbf{m} \mid \hat{\mathbf{m}})$, which can be extracted from the joint pdf $p(\mathbf{m}, \hat{\mathbf{m}})$ of transmitted and received messages $\mathbf{m}$ and $\hat{\mathbf{m}}$. This information is provided by the semantic communication system to the respective receiving agent, e.g., via marginalization or a point estimate. By properly exploiting this pdf, one can thus decide on new sampling positions of the agents. Specifically, given (i) a trained FG $\mathrm{FG}(\cdot)$ with estimated marginal pdfs and (ii) measurement data $\mathbf{y}$, we aim at finding new measurement positions $\mathbf{r}_1, \dots, \mathbf{r}_L$ for the $L$ agents as the solution of the following optimization problem

$$\{\mathbf{r}_1^\ast, \dots, \mathbf{r}_L^\ast\} = \arg\max_{\mathbf{r}_1, \dots, \mathbf{r}_L} J\big(\mathbf{r}_1, \dots, \mathbf{r}_L \mid \mathrm{FG}(\cdot), \mathbf{y}\big), \tag{5}$$

where $J(\cdot)$ is some chosen exploration criterion. Different choices for $J(\cdot)$ can be investigated, e.g., information-theoretic measures like mutual information, entropy, or entropy rate. Such measures are attractive mainly because optimizing them indirectly maximizes the information gathered by the swarm.
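As a toy illustration of a communication-aware criterion, assume that the FG provides a Gaussian predictive variance at each candidate position and that a measurement only reaches the swarm if the link succeeds with some probability. The expected information delivered is then the link probability times the mutual information $\frac{1}{2}\ln(1 + \sigma_{\text{pred}}^2/\sigma_{\text{noise}}^2)$ of a noisy Gaussian measurement. The sketch below greedily picks one position per agent; the criterion, the candidate values, and the link probabilities are hypothetical placeholders, not a method proposed in this work.

```python
import math

def information_gain(pred_var, noise_var):
    """Mutual information (nats) between a Gaussian process value with predictive
    variance pred_var and a measurement corrupted by Gaussian noise of variance noise_var."""
    return 0.5 * math.log(1.0 + pred_var / noise_var)

def select_positions(candidates, n_agents, noise_var):
    """Greedily pick n_agents distinct positions maximizing the expected information
    delivered to the swarm, assuming a measurement is lost if its link fails."""
    def expected_gain(pos):
        pred_var, link_prob = candidates[pos]
        return link_prob * information_gain(pred_var, noise_var)
    remaining = dict(candidates)
    chosen = []
    for _ in range(n_agents):
        best = max(remaining, key=expected_gain)
        chosen.append(best)
        del remaining[best]
    return chosen

# Hypothetical candidates: position -> (predictive variance, link success probability)
candidates = {
    (0, 0): (4.0, 0.2),   # very uncertain, but poor link
    (5, 0): (2.0, 0.9),
    (0, 5): (1.0, 0.95),
    (5, 5): (0.1, 1.0),   # already well known
}
print(select_positions(candidates, n_agents=2, noise_var=0.5))
```

The most uncertain position is skipped because its poor link makes the expected delivered information small. Treating the gains as independent keeps the example short; with correlated predictions, each new gain would have to be conditioned on the positions already chosen.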

5.2. Semantic Communication

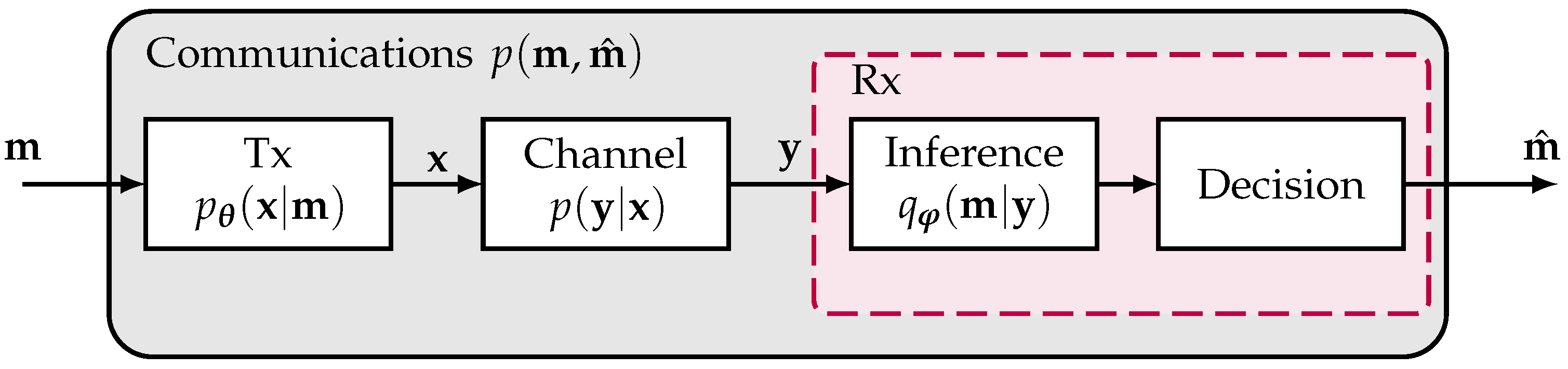

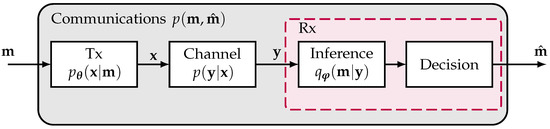

Following the design approach of Section 4.2, communication is modeled as a factor node that consists of the main communication blocks shown in Figure 7. As input, we have a semantic Random Variable (RV) $\mathbf{m}$, which is set to the message vector to be transmitted.

Figure 7.

Typical communications design, here adapted for the transmission of the semantic or message variable $\mathbf{m}$. For exploration, the communication system is a black box that only needs to be abstracted as a factor node with joint pdf $p(\mathbf{m}, \hat{\mathbf{m}})$ for the purpose of integration.

For the remainder of the article, note that the domain of all RVs may be either discrete or continuous. Further, the definition of entropy differs for discrete and continuous RVs. For example, the differential entropy of a continuous RV may be negative, whereas the entropy of a discrete RV is always non-negative [59]. Without loss of generality, we thus assume that all RVs are of the same type, i.e., either all discrete or all continuous. To avoid notational clutter, we use the expected value operator: when integrals are replaced by summations over discrete RVs, the equations remain valid for discrete RVs, and vice versa.
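The difference between discrete and differential entropy noted above is easy to see numerically. The following sketch (standard textbook formulas, in nats) shows that a deterministic discrete RV has zero entropy, while a uniform RV on a short interval or a Gaussian RV with small variance has negative differential entropy.

```python
import math

def discrete_entropy(pmf):
    """Shannon entropy (nats) of a discrete distribution; never negative."""
    return sum(-p * math.log(p) for p in pmf if p > 0)

def uniform_differential_entropy(width):
    """Differential entropy (nats) of a uniform RV on an interval of the given width."""
    return math.log(width)

def gaussian_differential_entropy(variance):
    """Differential entropy (nats) of a Gaussian RV with the given variance."""
    return 0.5 * math.log(2 * math.pi * math.e * variance)

print("deterministic RV:", discrete_entropy([1.0]))
print("fair coin:", discrete_entropy([0.5, 0.5]))
print("uniform on an interval of width 0.5:", uniform_differential_entropy(0.5))
print("Gaussian with variance 0.01:", gaussian_differential_entropy(0.01))
```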

The message $\mathbf{m}$ could, e.g., consist of samples of a belief (pdf) or of its parameters, i.e., mean and variance in the case of a Gaussian distribution. These parameters are themselves subject to stochastic perturbations when computed or measured. After encoding with the transmitter $p_{\boldsymbol{\theta}}(\mathbf{x} \mid \mathbf{m})$, we transmit the signals $\mathbf{x}$ over the wireless channel $p(\mathbf{y} \mid \mathbf{x})$ to the receiver side. There, the decoder infers an estimate $\hat{\mathbf{m}}$ of the message from the received signals $\mathbf{y}$. Overall, communication is modeled by its distribution $p(\mathbf{m}, \hat{\mathbf{m}})$. Since the semantic context is included in the communication system design via the RV $\mathbf{m}$, we enter the field of semantic communication, which has recently attracted considerable research interest [6,7,9,11,46,47,48,53]. In this work, we adapt and modify the promising approach of [11], as detailed in Section 5.2.2.

As an alternative, we could follow the approach of [49]: there, the authors model semantics by means of hidden random variables and define the semantic communication task as the data-reduced and reliable transmission of a communications source over a communication channel such that semantics is best preserved. They cast this task as an end-to-end Information Bottleneck problem, which allows for compression while preserving relevant information. As a solution approach, the authors propose the ML-based semantic communication system SINFONY and analyze its performance in a distributed multipoint scenario where the meaning behind image sources is to be transmitted, revealing a rate-normalized SNR shift of up to 20 dB compared to classically designed communication systems. Adapted to our scenario, this means that we would aim to reconstruct the exploration RV directly instead of $\mathbf{m}$. How this translates into a different integration strategy is an open question that we leave for future work.

To make communications semantic/application-aware, we have to master two key challenges:

- Exploration integration: How can the meaning of exploration variables be exploited in communications when using tools of probabilistic learning?

- Exploration interface design: Which information should be passed to the exploration to reflect the uncertainty or reliability of communication, and how can we design this output by using tools from probabilistic learning?

We now propose how these challenges can be approached.

5.2.1. Model Selection

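In order to tackle a semantic design of both transmitter and receiver in the considered exploration scenario, we first need to define a well-suited communication or machine learning model. From a probabilistic ML viewpoint, this design is equivalent to an unsupervised learning problem. Since we want to learn a hidden representation $\mathbf{y}$ of our input data $\mathbf{m}$, our aim is to learn a probabilistic encoder or discriminative model $p_{\boldsymbol{\theta}}(\mathbf{y} \mid \mathbf{m})$ parametrized by a parameter vector $\boldsymbol{\theta}$. It includes both the transmitter $p_{\boldsymbol{\theta}}(\mathbf{x} \mid \mathbf{m})$ and the channel model $p(\mathbf{y} \mid \mathbf{x})$, i.e., $p_{\boldsymbol{\theta}}(\mathbf{y} \mid \mathbf{m}) = \mathbb{E}_{p_{\boldsymbol{\theta}}(\mathbf{x} \mid \mathbf{m})}\left[p(\mathbf{y} \mid \mathbf{x})\right]$. Note that the transmitter $p_{\boldsymbol{\theta}}(\mathbf{x} \mid \mathbf{m})$ is probabilistic here, although it is usually assumed to be deterministic since we aim for uncertainty reduction at the receiver, and that the channel $p(\mathbf{y} \mid \mathbf{x})$ is independent of $\boldsymbol{\theta}$.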

As transmitters, application-adapted DNNs are predominantly analyzed in the literature [11,53]. For exploration as well, we propose to use DNNs, mainly for a generic and flexible design of ML-based prototype transceivers, since DNNs can approximate a broad class of functions arbitrarily well (universal approximation theorem) and can be easily optimized using automatic differentiation frameworks. However, alternative forms of learning are not excluded from future implementations.

The channel model in mobile communications is typically assumed to be a frequency-selective Rayleigh fading channel. For basic research, this assumption is often further abstracted to Additive White Gaussian Noise (AWGN) or Multiple Input Multiple Output (MIMO) channels. In contrast, time selectivity can be neglected on Earth for slowly moving agents, owing to the long coherence time. Hence, we expect mainly frequency-selective channels, an assumption that should also hold for the exploration of extraterrestrial environments like the Moon or Mars when assuming rural area models [60,61].

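With this likelihood model $p_{\boldsymbol{\theta}}(\mathbf{y} \mid \mathbf{m})$ and the semantic prior distribution $p(\mathbf{m})$ in mind, the whole generative model is given by the joint pdf $p_{\boldsymbol{\theta}}(\mathbf{m}, \mathbf{y}) = p_{\boldsymbol{\theta}}(\mathbf{y} \mid \mathbf{m})\, p(\mathbf{m})$ usually assumed in communication systems. Furthermore, we will assume these distributions to belong to the exponential family, which leads to efficient learning and inference algorithms.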

The last remaining communications component is the receiver. From a Bayesian perspective, the receiver infers $\mathbf{m}$ given the received signal $\mathbf{y}$ based on the posterior distribution $p_{\boldsymbol{\theta}}(\mathbf{m} \mid \mathbf{y})$, which follows from $p_{\boldsymbol{\theta}}(\mathbf{y} \mid \mathbf{m})$ and $p(\mathbf{m})$ via Bayes' theorem.

Now, we are able to answer Challenge 1 (exploration integration): it is the prior $p(\mathbf{m})$ that allows us to model the message (see, e.g., Figure 5a). In data-driven transceiver design, samples from this prior are used as training data. In other words, through this prior, the design of the communication system becomes “exploration-aware”.

5.2.2. Learning of the Semantic Communication System

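To define an unsupervised learning criterion for our discriminative model or encoder $p_{\boldsymbol{\theta}}(\mathbf{y} \mid \mathbf{m})$, it is useful to follow the infomax principle from information theory [59]. This means that we aim to find a representation $\mathbf{y}$ that retains a significant amount of information about the input $\mathbf{m}$, i.e., we maximize the mutual information w.r.t. the encoder [49,62]:

$$
\begin{aligned}
\boldsymbol{\theta}^\ast &= \arg\max_{\boldsymbol{\theta}}\; I_{\boldsymbol{\theta}}(\mathbf{m}; \mathbf{y}) && \text{(6)}\\
&= \arg\max_{\boldsymbol{\theta}}\; H(\mathbf{m}) - H_{\boldsymbol{\theta}}(\mathbf{m} \mid \mathbf{y}) && \text{(7)}\\
&= \arg\max_{\boldsymbol{\theta}}\; H(\mathbf{m}) + \mathbb{E}_{p_{\boldsymbol{\theta}}(\mathbf{m}, \mathbf{y})}\left[\ln p_{\boldsymbol{\theta}}(\mathbf{m} \mid \mathbf{y})\right] && \text{(8)}\\
&= \arg\max_{\boldsymbol{\theta}}\; \mathbb{E}_{p(\mathbf{m})\, p_{\boldsymbol{\theta}}(\mathbf{y} \mid \mathbf{m})}\left[\ln p_{\boldsymbol{\theta}}(\mathbf{m} \mid \mathbf{y})\right] && \text{(9)}
\end{aligned}
$$

where $H(\cdot)$ denotes the entropy and $\mathbb{E}_{p}[\cdot]$ the expected value w.r.t. $p$, for both discrete and continuous RVs. Here, we also used the fact that $H(\mathbf{m})$ is independent of $\boldsymbol{\theta}$ and that $p_{\boldsymbol{\theta}}(\mathbf{m}, \mathbf{y}) = p(\mathbf{m})\, p_{\boldsymbol{\theta}}(\mathbf{y} \mid \mathbf{m})$. Further, note that the form of $p_{\boldsymbol{\theta}}(\mathbf{y} \mid \mathbf{m})$ has to be constrained to avoid learning a trivial identity mapping. In communications, the channel $p(\mathbf{y} \mid \mathbf{x})$, e.g., AWGN, indeed constrains $p_{\boldsymbol{\theta}}(\mathbf{y} \mid \mathbf{m})$.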

If the calculation of the posterior in (9) is intractable, we can replace it by a variational distribution $q_{\boldsymbol{\varphi}}(\mathbf{m} \mid \mathbf{y})$ with parameters $\boldsymbol{\varphi}$. As for the transmitter, DNNs are usually used in the semantic communication literature [11,53] to design the approximate posterior at the receiver. To improve the performance-complexity trade-off, deep unfolding can be considered, a model-driven learning approach that exploits model knowledge of $p_{\boldsymbol{\theta}}(\mathbf{y} \mid \mathbf{m})$ to construct $q_{\boldsymbol{\varphi}}(\mathbf{m} \mid \mathbf{y})$ [43,44].

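With $q_{\boldsymbol{\varphi}}(\mathbf{m} \mid \mathbf{y})$, we can define a Mutual Information Lower BOund (MILBO) [62], similar to the well-known Evidence Lower BOund (ELBO) [38]:

$$I_{\boldsymbol{\theta}}(\mathbf{m}; \mathbf{y}) \geq H(\mathbf{m}) + \mathbb{E}_{p_{\boldsymbol{\theta}}(\mathbf{m}, \mathbf{y})}\left[\ln q_{\boldsymbol{\varphi}}(\mathbf{m} \mid \mathbf{y})\right] \triangleq \mathcal{L}_{\boldsymbol{\theta}, \boldsymbol{\varphi}}. \tag{10}$$

Optimization of $\boldsymbol{\theta}$ and $\boldsymbol{\varphi}$ can now be done w.r.t. this lower bound, i.e.,

$$
\begin{aligned}
\{\boldsymbol{\theta}^\ast, \boldsymbol{\varphi}^\ast\} &= \arg\max_{\boldsymbol{\theta}, \boldsymbol{\varphi}}\; \mathcal{L}_{\boldsymbol{\theta}, \boldsymbol{\varphi}} && \text{(11)}\\
&= \arg\min_{\boldsymbol{\theta}, \boldsymbol{\varphi}}\; \mathbb{E}_{p_{\boldsymbol{\theta}}(\mathbf{y})}\left[H\big(p_{\boldsymbol{\theta}}(\mathbf{m} \mid \mathbf{y}), q_{\boldsymbol{\varphi}}(\mathbf{m} \mid \mathbf{y})\big)\right] && \text{(12)}
\end{aligned}
$$

Here, $H(p, q) = -\mathbb{E}_{p}[\ln q]$ is the cross entropy between two pdfs $p$ and $q$. We note that the MILBO in (10) is equivalent to the negative amortized cross entropy in (12), up to the constant $H(\mathbf{m})$. This means that approximate maximization of the mutual information justifies the minimization of the cross entropy in the Auto Encoder (AE) approach [39], which is often seen in recent semantic communication literature [11,53]. Thus, the idea is to learn parametrizations of the transmitter discriminative model and of the variational receiver posterior, e.g., by AEs or RL.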
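To see the MILBO (10) at work in the simplest possible setting, the sketch below assumes a uniform binary message sent over a binary symmetric channel with crossover probability `eps` (a hypothetical stand-in for the encoder) and a variational receiver that believes the crossover probability is `delta`. The bound is tight only when `delta == eps`, i.e., when the variational posterior matches the true posterior.

```python
import math

def joint(eps):
    """p(m, y) for a uniform binary m over a binary symmetric channel."""
    return {(m, y): 0.5 * ((1 - eps) if m == y else eps) for m in (0, 1) for y in (0, 1)}

def mutual_information(eps):
    """I(m; y) in nats = H(m) - H(m | y) = ln 2 - H_b(eps)."""
    h_b = -sum(p * math.log(p) for p in (eps, 1 - eps) if p > 0)
    return math.log(2) - h_b

def milbo(eps, delta):
    """H(m) + E_{p(m,y)}[ln q(m | y)] with q(m | y) = 1 - delta if m == y else delta."""
    expected_log_q = sum(p * math.log((1 - delta) if m == y else delta)
                         for (m, y), p in joint(eps).items())
    return math.log(2) + expected_log_q

eps = 0.1
for delta in (0.05, 0.1, 0.3):
    print(f"delta={delta}: MILBO={milbo(eps, delta):.4f}, I={mutual_information(eps):.4f} nats")
```

Maximizing the MILBO over `delta` therefore recovers the true posterior, which is the mechanism behind learning the receiver by cross-entropy minimization.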

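Furthermore, we can rewrite the amortized cross entropy as [63,64]:

$$
\begin{aligned}
\mathbb{E}_{p_{\boldsymbol{\theta}}(\mathbf{y})}\left[H\big(p_{\boldsymbol{\theta}}(\mathbf{m} \mid \mathbf{y}), q_{\boldsymbol{\varphi}}(\mathbf{m} \mid \mathbf{y})\big)\right] &= H_{\boldsymbol{\theta}}(\mathbf{m} \mid \mathbf{y}) + \mathbb{E}_{p_{\boldsymbol{\theta}}(\mathbf{y})}\left[D_{\mathrm{KL}}\big(p_{\boldsymbol{\theta}}(\mathbf{m} \mid \mathbf{y}) \,\|\, q_{\boldsymbol{\varphi}}(\mathbf{m} \mid \mathbf{y})\big)\right] && \text{(13)}\\
&= H(\mathbf{m}) - I_{\boldsymbol{\theta}}(\mathbf{m}; \mathbf{y}) + \mathbb{E}_{p_{\boldsymbol{\theta}}(\mathbf{y})}\left[D_{\mathrm{KL}}\big(p_{\boldsymbol{\theta}}(\mathbf{m} \mid \mathbf{y}) \,\|\, q_{\boldsymbol{\varphi}}(\mathbf{m} \mid \mathbf{y})\big)\right] && \text{(14)}
\end{aligned}
$$

This means that optimizing the MILBO balances the minimization of the Kullback-Leibler (KL) divergence $D_{\mathrm{KL}}$ and the maximization of the mutual information $I_{\boldsymbol{\theta}}(\mathbf{m}; \mathbf{y})$. The former can be seen as a regularization term: among encoders with high mutual information, it favors those for which decoders can be learned that are close to the true posterior.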
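The decomposition of the amortized cross entropy into $H(m) - I(m; y)$ plus the expected KL divergence can be verified on a small discrete example. The sketch below uses an arbitrary, hypothetical joint pmf $p(m, y)$ and an arbitrary mismatched receiver $q(m \mid y)$; only the identity itself is taken from the text.

```python
import math

# Hypothetical joint pmf p(m, y) over m, y in {0, 1, 2} (rows: m, columns: y)
p_my = [[0.20, 0.05, 0.05],
        [0.05, 0.25, 0.05],
        [0.02, 0.03, 0.30]]
# Hypothetical mismatched receiver q(m | y); each column sums to one
q_m_given_y = [[0.6, 0.2, 0.1],
               [0.3, 0.6, 0.2],
               [0.1, 0.2, 0.7]]

M, Y = len(p_my), len(p_my[0])
p_m = [sum(p_my[m]) for m in range(M)]
p_y = [sum(p_my[m][y] for m in range(M)) for y in range(Y)]
post = [[p_my[m][y] / p_y[y] for y in range(Y)] for m in range(M)]  # true p(m | y)

H_m = -sum(p * math.log(p) for p in p_m)
H_m_given_y = -sum(p_my[m][y] * math.log(post[m][y]) for m in range(M) for y in range(Y))
mutual_info = H_m - H_m_given_y

# Amortized cross entropy E_{p(y)}[H(p(m|y), q(m|y))] = -E_{p(m,y)}[ln q(m|y)]
cross_entropy = -sum(p_my[m][y] * math.log(q_m_given_y[m][y])
                     for m in range(M) for y in range(Y))
expected_kl = sum(p_y[y] * sum(post[m][y] * math.log(post[m][y] / q_m_given_y[m][y])
                               for m in range(M))
                  for y in range(Y))

print(f"amortized cross entropy = {cross_entropy:.6f}")
print(f"H(m) - I(m;y) + E[KL]   = {H_m - mutual_info + expected_kl:.6f}")
```

With a fixed encoder, $H(m)$ and $I(m; y)$ are constants here, so only the KL term depends on the receiver, which is exactly the special case discussed next.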

Note that our communications design and optimization based on probabilistic modeling can, in general, also be applied to semantics-agnostic settings. In this article, however, we introduce semantics, i.e., messages, into the exploration-tailored design and aim for accurate analog transmission of $\mathbf{m}$. In fact, we adopt and modify the idea of [11], where the authors originally designed a semantic communication system for the transmission of written language/text, similar to [51], using transformer networks:

- We replace the text with the messages $\mathbf{m}$.

- The objective in [11] is to reconstruct $\mathbf{m}$ (sentences) as accurately as possible while preserving as much information about $\mathbf{m}$ in $\mathbf{y}$ as possible. Optimization is done w.r.t. a loss function consisting of two parts: the cross entropy between language input $\mathbf{m}$ and output $\hat{\mathbf{m}}$, as well as an additional scaled mutual information term between transmit signal $\mathbf{x}$ and received signal $\mathbf{y}$. We omit the latter in our approach (12).

This view resembles JSCC and is backed by the extensive survey in [9]. After optimization, the authors of [11] measure semantic performance with the BiLingual Evaluation Understudy (BLEU) score and semantic similarity.

We note that computation of the MILBO (10) (or cross entropy (12)) leads to problems similar to those of the ELBO [59]: if the expected value cannot be computed analytically or is computationally intractable, we can approximate it using Monte Carlo sampling. For optimization based on stochastic gradient descent, i.e., the AE approach, the gradient w.r.t. $\boldsymbol{\varphi}$ can then be calculated by the backpropagation algorithm in automatic differentiation frameworks like TensorFlow. Computing the gradient w.r.t. $\boldsymbol{\theta}$ with the so-called REINFORCE (score-function) estimator leads to a high variance of the gradient estimate, since we sample from a pdf that depends on $\boldsymbol{\theta}$. Typically, the reparametrization trick is used to overcome this problem [59].
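The variance gap between the REINFORCE (score-function) estimator and the reparametrization trick shows up already in one dimension. The sketch below assumes $z \sim \mathcal{N}(\mu, \sigma^2)$ and a hypothetical objective $g(z) = z^2$, whose exact gradient is $\partial_\mu \mathbb{E}[z^2] = 2\mu$. Both estimators are unbiased, but their per-sample variances differ considerably (theoretically 30 versus 4 for $\mu = \sigma = 1$).

```python
import random
import statistics

def gradient_samples(mu, sigma, n, seed=0):
    """Per-sample estimates of d/dmu E[z**2] with z ~ N(mu, sigma**2)."""
    rng = random.Random(seed)
    reinforce, reparam = [], []
    for _ in range(n):
        eps = rng.gauss(0.0, 1.0)
        z = mu + sigma * eps  # reparametrized sample
        # Score function: g(z) * d/dmu log N(z | mu, sigma^2) = z**2 * (z - mu) / sigma**2
        reinforce.append(z * z * (z - mu) / sigma**2)
        # Reparametrization: d/dmu g(mu + sigma * eps) = 2 * (mu + sigma * eps)
        reparam.append(2.0 * z)
    return reinforce, reparam

reinforce, reparam = gradient_samples(mu=1.0, sigma=1.0, n=100_000)
for name, grads in (("REINFORCE", reinforce), ("reparametrization", reparam)):
    print(f"{name}: mean={statistics.fmean(grads):.3f}, "
          f"variance={statistics.variance(grads):.2f}")
```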

As a final remark, we arrive at a special case of the infomax principle if we fix the encoder $p_{\boldsymbol{\theta}}(\mathbf{y} \mid \mathbf{m})$, i.e., $\boldsymbol{\theta}$, and hence the transmitter. Then, only the receiver's approximate posterior needs to be optimized in (14). In this case, maximization of the MILBO is equivalent to a supervised learning problem, namely the minimization of the KL divergence between the true and the approximate posterior [44]. This setup has several benefits: in practice, we avoid the REINFORCE gradient and, in particular, we do not need any ideal connection between transmitter and receiver. Further, even today in 5G, we can apply a semantic receiver design to standardized systems with fixed transmitter capabilities to possibly achieve semantic performance gains. As a first step, we thus suggest that research should focus on the design of ML-based receivers, given a non-learning state-of-the-art transmitter. We give a first example in Section 5.3. Finally, we note that such state-of-the-art transmitters were designed for the digital transmission of bits, requiring near-deterministic links. From a semantic perspective, this may not be needed and wastes resources. Hence, it is also worth considering adapting the transmitter to use the bandwidth more efficiently and to increase the data rate.
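With a fixed transmitter, learning the receiver reduces to supervised learning, as stated above. As a minimal sketch, assume equiprobable BPSK symbols ($+1$ for bit 0, $-1$ for bit 1) over an AWGN channel with noise variance $\sigma_n^2$, and a hypothetical logistic-regression receiver $q(b = 0 \mid y) = \mathrm{sigmoid}(w y + c)$ trained by minimizing the empirical cross entropy. The true posterior has the log-likelihood ratio $2y/\sigma_n^2$, so the learned parameters should approach $w = 2/\sigma_n^2$ and $c = 0$. Model, data size, and learning rate are illustrative assumptions.

```python
import math
import random

def make_data(n, noise_var, seed=0):
    """Labeled pairs (y, t): t = 1 if bit 0 (x = +1) was sent, t = 0 if bit 1 (x = -1)."""
    rng = random.Random(seed)
    data = []
    for _ in range(n):
        bit = rng.randint(0, 1)
        x = 1.0 - 2.0 * bit
        y = x + rng.gauss(0.0, math.sqrt(noise_var))
        data.append((y, 1.0 - bit))
    return data

def sigmoid(t):
    return 1.0 / (1.0 + math.exp(-t))

def train_receiver(data, lr=1.0, iterations=300):
    """Fit q(b = 0 | y) = sigmoid(w * y + c) by full-batch gradient descent
    on the mean binary cross entropy between labels and receiver output."""
    w, c = 0.0, 0.0
    n = len(data)
    for _ in range(iterations):
        grad_w = grad_c = 0.0
        for y, t in data:
            err = sigmoid(w * y + c) - t  # derivative of the cross entropy w.r.t. the logit
            grad_w += err * y
            grad_c += err
        w -= lr * grad_w / n
        c -= lr * grad_c / n
    return w, c

noise_var = 1.0
w, c = train_receiver(make_data(2000, noise_var))
print(f"learned w = {w:.3f} (ideal {2.0 / noise_var:.3f}), c = {c:.3f} (ideal 0)")
```

No gradient has to flow through the channel or the transmitter: labeled pairs of transmitted bits and received samples suffice.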

5.2.3. Interface to the Application

Based on the learned posterior $q_{\boldsymbol{\varphi}}(\mathbf{m} \mid \mathbf{y})$, we have full information about our model at the receiver side, but we still need to design an interface to the application, e.g., by inferring the transmitted messages/beliefs $\hat{\mathbf{m}}$. For efficient integration as a factor node, the posterior should hence belong to the exponential family. Further, we can define an interface by finding the optimal estimator w.r.t. a loss function that measures the quality of the inference prediction $\hat{\mathbf{m}}$. Common choices are, e.g., the quadratic error loss, which leads to the posterior mean, or the 0-1 loss, which leads to the MAP estimator [38]. If the variance needs to be computed for the exploration part but is intractable, we can lower bound it by the inverse of the Fisher information, i.e., the Cramér–Rao bound [65].

In summary, we are able to answer Challenge 2 (exploration interface design): possible approaches for the output design include equipping the receiver with the capability to learn an approximation of its posterior $p_{\boldsymbol{\theta}}(\mathbf{m} \mid \mathbf{y})$, e.g., a Gaussian with mean and variance, or bounding the estimator's variance, e.g., via the Fisher information.

5.3. First Numerical Example: Semantic Receiver

In order to show the benefits of an exploration-oriented communication design, we present a first numerical toy simulation using the application example of distributed full waveform inversion from [22] mentioned in Section 3. There, we make a first change to the error-free digital communication system and introduce a semantics-aware receiver to improve communication efficiency, as proposed in Section 5.2.2.

Since it is beyond the scope of this first investigation, we will investigate neither the exact effect of semantic communication on the overall exploration performance nor the tight integration of communications into exploration, e.g., via an interface as outlined in Section 5.2.3. Still, we can gain insight into why such a design may improve communication efficiency w.r.t. the exploration task.

5.3.1. Exploration Scenario and Data

As simulation data, we use the local models and gradients of the agents after the second iteration of the distributed full waveform inversion from Section 3 as communicated messages, which are used to compute the global model, i.e., to execute the exploration task [22]. Note that the global model is equivalent to the exploration RV. Both local models and gradients are summarized in the semantic RV $m$, which is continuous-valued but processed as a floating point number with $N_b$ bits on digital hardware. The size of the dataset provided by [22] is 1,147,000. In every iteration of the distributed seismic exploration algorithm, these discrete floating point numbers are exchanged between the agents and need to be communicated.

With modern digital error-free protocols, each bit would be considered equally important and equally likely. However, given the floating point representation of $m$ and its data distribution $p(m)$, this is in fact not the case: the bits are mapped into the real-valued domain, i.e., the semantic space of the exploration task, by a function that computes a weighted sum of the bits. To explain what we mean by semantic space, consider the example of language. Words like “neat” and “fine” have similar meanings and are thus close in the semantic space. If we confuse the two words, the change in meaning is minor. In our example, this means that there is room for imperfect transmission of bit sequences as long as their meaning remains close, i.e., as long as the decoded value stays close to the transmitted one. With the semantic space being the real-valued domain and without any further detailed knowledge about the exploration task, i.e., utilizing low-level semantics [49], it is reasonable to require that our receiver estimates $\hat{m}$ be close to the true transmit value $m$ in the Mean Square Error (MSE) sense. By doing so, we expect that the exploration task can still be completed with high accuracy while communication efficiency increases. As an important remark, the model of this scenario resembles the one proposed in [49], as it distinguishes between the communications source and the semantic source and assumes a deterministic bijective semantic channel. Further, in contrast to [11], we both optimize and measure semantic performance with the MSE metric.
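The unequal importance of bits can be made concrete with IEEE 754 half precision. The sketch below assumes a 16-bit float format purely for illustration (it does not state the resolution used in the simulations). It flips each bit of a value and reports the resulting absolute error: flips in the exponent change the value by orders of magnitude, whereas flips in the least significant mantissa bits barely change its meaning.

```python
import struct

def to_bits(value):
    """IEEE 754 half-precision bit pattern of value as an int (bit 15 = sign)."""
    return struct.unpack("<H", struct.pack("<e", value))[0]

def from_bits(bits):
    """Half-precision value encoded by a 16-bit pattern."""
    return struct.unpack("<e", struct.pack("<H", bits))[0]

def bit_flip_errors(value):
    """Absolute error caused by flipping each of the 16 bits (index 0 = LSB)."""
    original = from_bits(to_bits(value))  # value rounded to half precision
    return [abs(from_bits(to_bits(value) ^ (1 << i)) - original) for i in range(16)]

errs = bit_flip_errors(0.3)
for i, err in enumerate(errs):
    print(f"bit {i:2d}: |error| = {err:.3g}")
```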

5.3.2. Transmission Model

Since we want to focus on the key aspect of introducing semantics into the communications design at the receiver side, we use a simple abstraction of the digital transmission system in this first investigation and neglect details of modern communication protocols, e.g., strong LDPC or Polar codes: we assume an uncoded Binary Phase Shift Keying (BPSK) transmission of the bits of each floating point number over an AWGN channel with noise variance $\sigma_n^2$ to a receiver.
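The transmission model can be sketched in a few lines. The example below simulates uncoded BPSK with unit symbol energy over a real AWGN channel with per-bit hard decisions and compares the bit error rate with the textbook expression $Q(\sqrt{2E_b/N_0})$. It is parametrized by $E_b/N_0$ with $\sigma_n^2 = 1/(2E_b/N_0)$, which is an assumption of this sketch; the SNR definition used for the figures is not reproduced here.

```python
import math
import random

def bpsk_awgn_ber(n_bits, ebn0_db, seed=0):
    """Monte Carlo bit error rate of uncoded BPSK (unit symbol energy) over real AWGN."""
    rng = random.Random(seed)
    ebn0 = 10 ** (ebn0_db / 10)
    sigma = math.sqrt(1.0 / (2.0 * ebn0))  # noise std per real dimension: N0/2 = 1/(2 Eb/N0)
    errors = 0
    for _ in range(n_bits):
        bit = rng.randint(0, 1)
        x = 1.0 - 2.0 * bit               # bit 0 -> +1, bit 1 -> -1
        y = x + rng.gauss(0.0, sigma)
        errors += (y < 0) != (bit == 1)   # hard decision on each bit separately
    return errors / n_bits

def bpsk_ber_theory(ebn0_db):
    """Q(sqrt(2 Eb/N0)) = 0.5 * erfc(sqrt(Eb/N0))."""
    return 0.5 * math.erfc(math.sqrt(10 ** (ebn0_db / 10)))

print(bpsk_awgn_ber(200_000, 4.0), bpsk_ber_theory(4.0))
```

Such per-bit hard decisions treat every bit as equally important, which is precisely what the semantic receivers below avoid.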

5.3.3. Methodology

Given this transmitter, we now focus on the design of the receiver, as explained in Section 5.2. Based on the statistics/prior of the simulation data, we can compute the ideal posterior $p(m \mid \mathbf{y})$ by marginalizing the joint distribution of the transmitted bits and the received signal. From our simulations, we note that for computational tractability, the resolution needs to be lower than bits.

To reduce computational complexity, we can introduce an approximate posterior $q_{\boldsymbol{\varphi}}(m \mid \mathbf{y})$. Assuming a Gaussian approximate posterior with fixed variance, a member of the exponential family, minimization of the cross entropy (12), i.e., maximization of the mutual information (6), reduces to minimization of the MSE loss w.r.t. the receiver parameters $\boldsymbol{\varphi}$ [59]. Thus, from this general perspective, the choice of the MSE loss for both semantic receiver optimization and the semantic performance metric is well motivated.
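The step from cross entropy to MSE can be written out explicitly. Assuming a Gaussian approximate posterior whose mean is the receiver output $\hat{m}_{\boldsymbol{\varphi}}(\mathbf{y})$ and whose variance $\sigma_q^2$ is fixed (an assumption made for this derivation), the amortized cross entropy becomes

$$\mathbb{E}_{p(m, \mathbf{y})}\left[-\ln q_{\boldsymbol{\varphi}}(m \mid \mathbf{y})\right] = \frac{1}{2\sigma_q^2}\,\mathbb{E}_{p(m, \mathbf{y})}\left[\big(m - \hat{m}_{\boldsymbol{\varphi}}(\mathbf{y})\big)^2\right] + \frac{1}{2}\ln\left(2\pi\sigma_q^2\right).$$

The second term does not depend on $\boldsymbol{\varphi}$, so minimizing the cross entropy w.r.t. $\boldsymbol{\varphi}$ is equivalent to minimizing the MSE between $m$ and the receiver output.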

We examine the following approaches for the final decision/estimation of $\hat{m}$ (see Figure 7) based on the computed posterior $p(m \mid \mathbf{y})$ or $q_{\boldsymbol{\varphi}}(m \mid \mathbf{y})$:

- MAP detection: Optimal for error-free transmission of bit sequences $\mathbf{b}$, since it minimizes the error rate of the bit sequence.

- Mean estimator: Optimal for estimation of the semantics m in the MSE sense.

- Single-bit detector: As usually assumed in classic digital communications, every bit is considered stochastically independent, i.e., $p(\mathbf{b}) = \prod_i p(b_i)$, and detected separately. We assume that the prior probability of every single bit is known. Subsequently, we compute the estimate $\hat{m}$ from the detected bits.

- Analog transmission: Analog transmission of m over the AWGN channel is used as a reference curve. We assume power-normalized channel uses with subsequent averaging for a fair comparison.

- DNN estimator: For approximate estimation, we set the mean of a Gaussian approximate posterior to the output of a small DNN with input $\mathbf{y}$, 2 dense intermediate ReLU layers of width and a linear output layer for estimation of m. We take the mean, i.e., the output of the DNN, as the estimate $\hat{m}$.
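The exact estimators listed above can be compared on a toy problem small enough for enumeration. The sketch assumes a hypothetical 4-bit fixed-point format (value = integer level / 16) instead of the floating point format of the paper, a hypothetical bell-shaped prior over the 16 levels (so that bits are statistically dependent), and uncoded BPSK over AWGN. It computes the exact posterior over all bit patterns and derives the MAP, posterior-mean, and single-bit estimates; the DNN estimator is not included.

```python
import math
import random
from itertools import product

N_BITS = 4
PATTERNS = list(product((0, 1), repeat=N_BITS))  # bit tuples, MSB first

def value(bits):
    """Hypothetical fixed-point mapping of a bit tuple to a real value in [0, 1)."""
    return sum(b * 2.0 ** -(i + 1) for i, b in enumerate(bits))

def level(bits):
    return int(round(value(bits) * 2 ** N_BITS))

# Hypothetical bell-shaped prior over the 16 levels; it does not factorize over the bits
_w = {bits: math.exp(-((level(bits) - 6) ** 2) / 8.0) for bits in PATTERNS}
PRIOR = {bits: w / sum(_w.values()) for bits, w in _w.items()}
# Marginal prior probability that bit i equals 1 (used by the single-bit detector)
P1 = [sum(p for bits, p in PRIOR.items() if bits[i] == 1) for i in range(N_BITS)]

def posterior(y, noise_var):
    """Exact posterior over all bit patterns for BPSK (0 -> +1, 1 -> -1) over AWGN."""
    logs = {}
    for bits in PATTERNS:
        ll = -sum((yi - (1.0 - 2.0 * b)) ** 2 for yi, b in zip(y, bits)) / (2.0 * noise_var)
        logs[bits] = math.log(PRIOR[bits]) + ll
    peak = max(logs.values())  # subtract the maximum for numerical stability
    unnorm = {bits: math.exp(l - peak) for bits, l in logs.items()}
    z = sum(unnorm.values())
    return {bits: p / z for bits, p in unnorm.items()}

def map_estimate(post):
    return value(max(post, key=post.get))

def mean_estimate(post):
    return sum(p * value(bits) for bits, p in post.items())

def single_bit_estimate(y, noise_var):
    """Per-bit decisions assuming independent bits with known marginal priors."""
    bits = []
    for i, yi in enumerate(y):
        llr = math.log((1.0 - P1[i]) / P1[i]) + 2.0 * yi / noise_var  # ln p(b=0|y)/p(b=1|y)
        bits.append(0 if llr >= 0 else 1)
    return value(tuple(bits))

def simulate(noise_var, trials=5000, seed=1):
    """Empirical MSE of the three estimators."""
    rng = random.Random(seed)
    patterns, probs = zip(*PRIOR.items())
    sq_err = {"MAP": 0.0, "mean": 0.0, "single-bit": 0.0}
    for _ in range(trials):
        bits = rng.choices(patterns, weights=probs)[0]
        y = [(1.0 - 2.0 * b) + rng.gauss(0.0, math.sqrt(noise_var)) for b in bits]
        post = posterior(y, noise_var)
        m = value(bits)
        sq_err["MAP"] += (map_estimate(post) - m) ** 2
        sq_err["mean"] += (mean_estimate(post) - m) ** 2
        sq_err["single-bit"] += (single_bit_estimate(y, noise_var) - m) ** 2
    return {name: s / trials for name, s in sq_err.items()}

mse = simulate(noise_var=0.5)
print(mse)
```

Enumerating all $2^{N_b}$ patterns is what limits the exact posterior to low resolutions, which motivates the approximate DNN estimator.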

We trained the DNN with MSE loss for epochs with the stochastic gradient descent variant Adam and a batch size of . To optimize the receiver over a wider SNR range, we choose the SNR to be uniformly distributed within dB where with noise variance .

5.3.4. Results

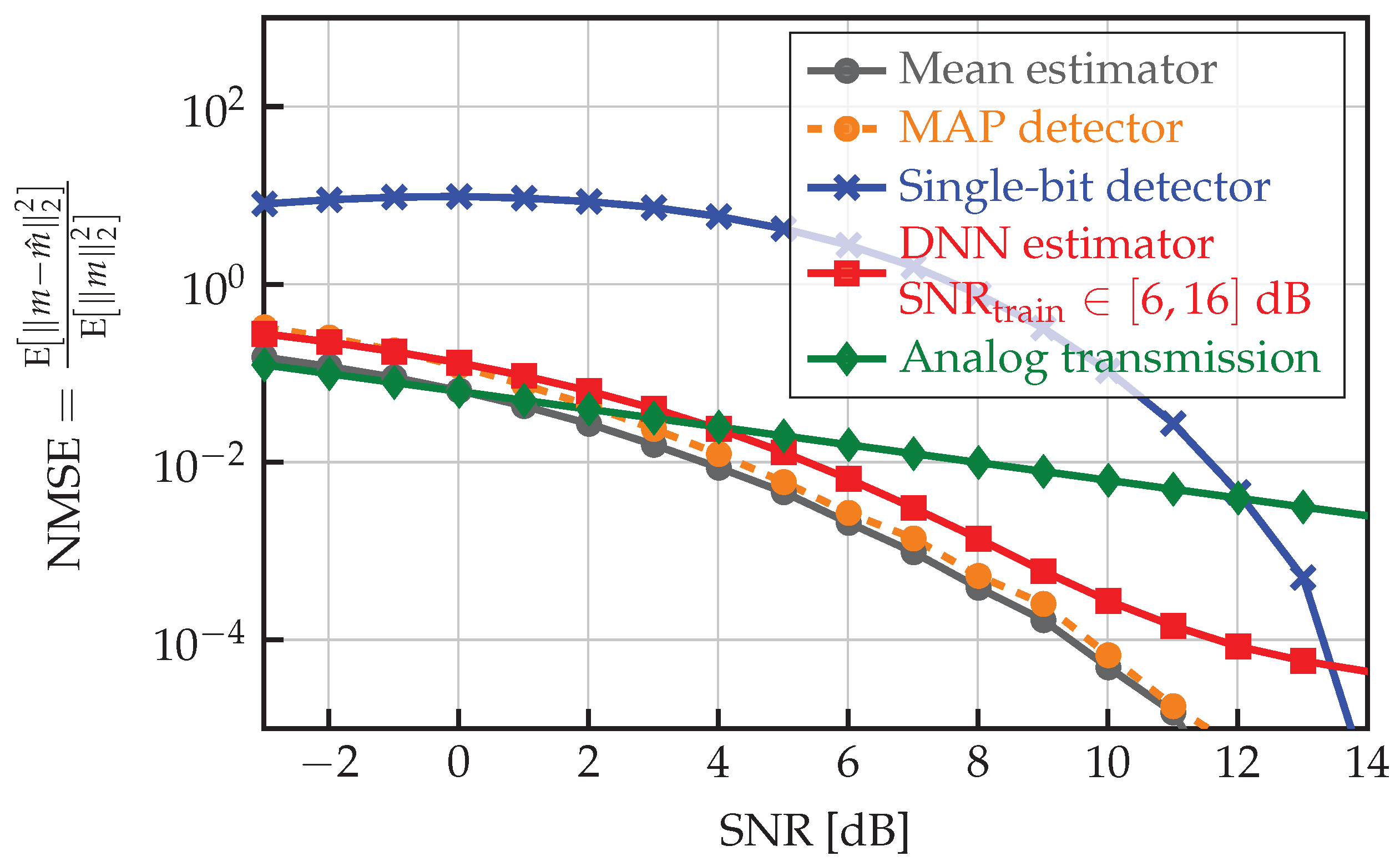

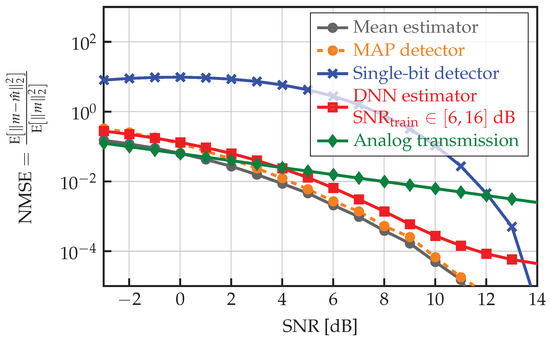

In Figure 8, we show the Normalized MSE (NMSE) performance of the considered (sub) optimal receiver approaches as a function of SNR for floating point resolution.

Figure 8.

Normalized MSE (NMSE) as a function of Signal-to-Noise Ratio (SNR) for different non- and semantic exploration data receiver approaches. We assume uncoded digital BPSK transmission of gradients and models m of the distributed full seismic waveform inversion [22] over an AWGN channel.

The classic single-bit approach is clearly inferior in the considered SNR range. Even the approximate DNN estimator clearly outperforms it. Notably, we observe only a 2 dB SNR shift compared to the mean estimator, i.e., the optimal approach, but at much lower computational complexity.
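For reference, a minimal sketch of the NMSE metric as we read it, i.e., the squared error normalized by the power of the transmitted values (the normalization by signal power is our assumption of the standard definition), together with the conversion needed to interpret SNR shifts in linear scale.

```python
import math

def nmse(true_values, estimates):
    """Normalized MSE: sum of squared errors divided by the sum of squared true values."""
    err = sum((m - m_hat) ** 2 for m, m_hat in zip(true_values, estimates))
    power = sum(m * m for m in true_values)
    return err / power

def to_db(x):
    return 10 * math.log10(x)

# A 2 dB SNR shift corresponds to a factor of 10**(2/10), roughly 1.58, in linear scale
snr_factor = 10 ** (2 / 10)
print(nmse([1.0, -1.0], [1.1, -0.9]), to_db(0.01), snr_factor)
```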

5.3.5. Discussion

By just adapting the receiver to account for semantics in this first investigation of a simple digital transmission scheme, we achieved a notable semantic performance gain. Further, we are able to achieve near-optimal semantic performance with a low-complexity DNN and hence with short training and inference times, possibly allowing for a real-time implementation. We thus conclude that a semantic communication design is beneficial and can be realized with manageable effort. It is still an open question which NMSE is required to achieve satisfactory performance on the task of distributed full waveform inversion. But provided that the given NMSE values of, e.g., , are accurate enough, we could avoid the high latency, complexity, and energy consumption of modern communication protocols and increase the data rate, even with this simplified transmission scheme and by just adapting the receiver.

6. Conclusions

In this work, we presented an approach to make exploration and communications mutually aware. In particular, we proposed to use probabilistic machine learning models to enable a unified description of both exploration and communications in one framework and made a first attempt towards integrating both areas using factor graphs. By using a factor graph description, we can integrate communications as a factor node between two communicating agents, with the aim of improving communication efficiency in terms of latency, bandwidth, data rate, energy, and complexity. A first numerical example of integrating exploration data into semantic communications showed promising semantic performance gains.

We note that exploration is just one example application of the proposed framework. It can naturally be applied to other domains with communication links as well, e.g., control engineering problems. This philosophy of designing communications explicitly for a particular application lies at the heart of the recent research interest in semantic communication. The introduction of the semantic aspect holds the promise of increased data rates in 6G networks. Further research is required to develop first prototype algorithms that lay the foundation for a “tight” integration of exploration and communications. Nevertheless, we hope that this work stimulates the research required to close the gap between both realms.

Author Contributions

Conceptualization, E.B., B.-S.S., S.W., D.S. and A.D.; methodology, E.B., B.-S.S., D.S. and A.D.; software, E.B., B.-S.S. and T.W.; validation, E.B., B.-S.S., S.W., D.S. and A.D.; formal analysis, E.B. and T.W.; investigation, E.B., B.-S.S. and T.W.; resources, B.-S.S. and T.W.; data curation, E.B. and B.-S.S.; writing—original draft preparation, E.B. and B.-S.S.; writing—review and editing, E.B., B.-S.S., S.W., T.W., D.S. and A.D.; visualization, E.B., B.-S.S., S.W., T.W. and D.S.; supervision, D.S. and A.D.; project administration, D.S. and A.D.; funding acquisition, D.S. and A.D. All authors have read and agreed to the published version of the manuscript.

Funding

This work was partly funded by the Federal State of Bremen and the University of Bremen as part of the Human on Mars Initiative.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
|---|---|
| AE | Auto Encoder |
| AI | Artificial Intelligence |
| ADMM | Alternating Directions Method of Multipliers |
| AWGN | Additive White Gaussian Noise |
| BPSK | Binary Phase Shift Keying |
| DNN | Deep Neural Network |
| FDM | Finite-Difference Methods |
| FEM | Finite Element Methods |
| FG | Factor Graph |
| GPU | Graphics Processing Unit |
| JSCC | Joint Source-Channel Coding |
| MAP | Maximum A-Posteriori |
| MIMO | Multiple Input Multiple Output |
| ML | Machine Learning |
| MSE | Mean Square Error |
| NMSE | Normalized Mean Square Error |
| PDE | Partial Differential Equation |
| pdf | probability density function |
| RL | Reinforcement Learning |
| SNR | Signal-to-Noise Ratio |

References

- Burgués, J.; Marco, S. Environmental chemical sensing using small drones: A review. Sci. Total Environ. 2020, 748, 141172. [Google Scholar] [CrossRef] [PubMed]

- Tzoumas, G.; Pitonakova, L.; Salinas, L.; Scales, C.; Richardson, T.; Hauert, S. Wildfire detection in large-scale environments using force-based control for swarms of UAVs. Swarm Intell. 2022, 17, 89–115. [Google Scholar] [CrossRef]

- Wiedemann, T.; Manss, C.; Shutin, D. Multi-agent exploration of spatial dynamical processes under sparsity constraints. Auton. Agents Multi-Agent Syst. 2018, 32, 134–162. [Google Scholar] [CrossRef]

- Viseras, A. Distributed Multi-Robot Exploration under Complex Constraints. Ph.D. Thesis, Universidad Pablo de Olavide, Seville, Spain, 2018. [Google Scholar]

- Popovski, P.; Simeone, O.; Boccardi, F.; Gündüz, D.; Sahin, O. Semantic-Effectiveness Filtering and Control for Post-5G Wireless Connectivity. J. Indian Inst. Sci. 2020, 100, 435–443. [Google Scholar] [CrossRef]

- Calvanese Strinati, E.; Barbarossa, S. 6G networks: Beyond Shannon towards semantic and goal-oriented communications. Comput. Netw. 2021, 190, 107930. [Google Scholar] [CrossRef]

- Lan, Q.; Wen, D.; Zhang, Z.; Zeng, Q.; Chen, X.; Popovski, P.; Huang, K. What is Semantic Communication? A View on Conveying Meaning in the Era of Machine Intelligence. J. Commun. Inf. Netw. 2021, 6, 336–371. [Google Scholar] [CrossRef]

- Uysal, E.; Kaya, O.; Ephremides, A.; Gross, J.; Codreanu, M.; Popovski, P.; Assaad, M.; Liva, G.; Munari, A.; Soret, B.; et al. Semantic Communications in Networked Systems: A Data Significance Perspective. IEEE/ACM Trans. Netw. 2022, 36, 233–240. [Google Scholar] [CrossRef]

- Gündüz, D.; Qin, Z.; Aguerri, I.E.; Dhillon, H.S.; Yang, Z.; Yener, A.; Wong, K.K.; Chae, C.B. Beyond Transmitting Bits: Context, Semantics, and Task-Oriented Communications. IEEE J. Sel. Areas Commun. 2023, 41, 5–41.

- Parr, T.; Pezzulo, G.; Friston, K.J. Active Inference: The Free Energy Principle in Mind, Brain, and Behavior; The MIT Press: Cambridge, MA, USA, 2022.

- Xie, H.; Qin, Z.; Li, G.Y.; Juang, B.H. Deep Learning Enabled Semantic Communication Systems. IEEE Trans. Signal Process. 2021, 69, 2663–2675.

- Schizas, I.D.; Mateos, G.; Giannakis, G.B. Distributed LMS for consensus-based in-network adaptive processing. IEEE Trans. Signal Process. 2009, 57, 2365–2382.

- Kar, S.; Moura, J.M. Distributed consensus algorithms in sensor networks with imperfect communication: Link failures and channel noise. IEEE Trans. Signal Process. 2008, 57, 355–369.

- Pereira, S.S. Distributed Consensus Algorithms for Wireless Sensor Networks: Convergence Analysis and Optimization. Ph.D. Thesis, Universitat Politècnica de Catalunya-Barcelona Tech, Barcelona, Spain, 2012.

- Talebi, S.P.; Werner, S. Distributed Kalman Filtering and Control Through Embedded Average Consensus Information Fusion. IEEE Trans. Autom. Control 2019, 64, 4396–4403.

- Wang, S.; Shin, B.S.; Shutin, D.; Dekorsy, A. Diffusion Field Estimation Using Decentralized Kernel Kalman Filter with Parameter Learning over Hierarchical Sensor Networks. In Proceedings of the IEEE International Workshop on Machine Learning for Signal Processing (MLSP), Espoo, Finland, 21–24 September 2020.

- Shin, B.S.; Shutin, D. Distributed blind deconvolution of seismic signals under sparsity constraints in sensor networks. In Proceedings of the IEEE International Workshop on Machine Learning for Signal Processing (MLSP), Espoo, Finland, 21–24 September 2020.

- Shutin, D.; Shin, B.S. Variational Bayesian Learning for Decentralized Blind Deconvolution of Seismic Signals Over Sensor Networks. IEEE Access 2021, 9, 164316–164330.

- Boyd, S.; Parikh, N.; Chu, E. Distributed Optimization and Statistical Learning via the Alternating Direction Method of Multipliers; Now Publishers Inc.: Delft, The Netherlands, 2011.

- Sayed, A.H. Adaptation, learning, and optimization over networks. Found. Trends Mach. Learn. 2014, 7, 311–801.

- Shin, B.S.; Yukawa, M.; Cavalcante, R.L.G.; Dekorsy, A. Distributed adaptive learning with multiple kernels in diffusion networks. IEEE Trans. Signal Process. 2018, 66, 5505–5519.

- Shin, B.S.; Shutin, D. Adapt-then-combine full waveform inversion for distributed subsurface imaging in seismic networks. In Proceedings of the 2021 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Toronto, ON, Canada, 6–11 June 2021; pp. 4700–4704.

- Shin, B.S.; Shutin, D. Distributed Traveltime Tomography Using Kernel-based Regression in Seismic Networks. IEEE Geosci. Remote Sens. Lett. 2022, 19, 1–5.

- Fichtner, A. Full Seismic Waveform Modelling and Inversion; Springer: Berlin/Heidelberg, Germany, 2011.

- Bishop, C.M. Pattern Recognition and Machine Learning; Springer: Berlin/Heidelberg, Germany, 2006.

- Loeliger, H.A. An introduction to factor graphs. IEEE Signal Process. Mag. 2004, 21, 28–41.

- Kschischang, F.R.; Frey, B.J.; Loeliger, H.A. Factor graphs and the sum-product algorithm. IEEE Trans. Inf. Theory 2001, 47, 498–519.

- Wang, J.; Zabaras, N. Hierarchical Bayesian models for inverse problems in heat conduction. Inverse Probl. 2004, 21, 183.

- Pukelsheim, F. Optimal Design of Experiments; SIAM: Philadelphia, PA, USA, 2006.

- Viseras, A.; Shutin, D.; Merino, L. Robotic active information gathering for spatial field reconstruction with rapidly-exploring random trees and online learning of Gaussian processes. Sensors 2019, 19, 1016.

- Julian, B.J.; Angermann, M.; Schwager, M.; Rus, D. Distributed robotic sensor networks: An information-theoretic approach. Int. J. Robot. Res. 2012, 31, 1134–1154.

- Hüttenrauch, M.; Šošić, A.; Neumann, G. Deep Reinforcement Learning for Swarm Systems. J. Mach. Learn. Res. 2019, 20, 1–31.

- Zhu, X.; Zhang, F.; Li, H. Swarm Deep Reinforcement Learning for Robotic Manipulation. Procedia Comput. Sci. 2022, 198, 472–479.

- Kakish, Z.; Elamvazhuthi, K.; Berman, S. Using Reinforcement Learning to Herd a Robotic Swarm to a Target Distribution. In Distributed Autonomous Robotic Systems, Proceedings of the 15th International Symposium, Brussels, Belgium, 19–24 September 2022; Springer International Publishing: Berlin, Germany, 2022; pp. 401–414.

- Schizas, I.D.; Ribeiro, A.; Giannakis, G.B. Consensus in ad hoc WSNs with noisy links—Part I: Distributed estimation of deterministic signals. IEEE Trans. Signal Process. 2008, 56, 350–364.

- Zhao, X.; Tu, S.; Sayed, A. Diffusion Adaptation over Networks Under Imperfect Information Exchange and Non-Stationary Data. IEEE Trans. Signal Process. 2012, 60, 3460–3475.

- Shannon, C.E. A Mathematical Theory of Communication. Bell Syst. Tech. J. 1948, 27, 379–423.

- Simeone, O. A Very Brief Introduction to Machine Learning with Applications to Communication Systems. IEEE Trans. Cogn. Commun. Netw. 2018, 4, 648–664.

- O’Shea, T.; Hoydis, J. An Introduction to Deep Learning for the Physical Layer. IEEE Trans. Cogn. Commun. Netw. 2017, 3, 563–575.

- Gruber, T.; Cammerer, S.; Hoydis, J.; Brink, S.t. On deep learning-based channel decoding. In Proceedings of the 51st Annual Conference on Information Sciences and Systems (CISS 2017), Baltimore, MD, USA, 22–24 March 2017; pp. 1–6.

- Monga, V.; Li, Y.; Eldar, Y.C. Algorithm Unrolling: Interpretable, Efficient Deep Learning for Signal and Image Processing. IEEE Signal Process. Mag. 2021, 38, 18–44.

- Balatsoukas-Stimming, A.; Studer, C. Deep Unfolding for Communications Systems: A Survey and Some New Directions. In Proceedings of the IEEE International Workshop on Signal Processing Systems (SiPS 2019), Nanjing, China, 20–23 October 2019; pp. 266–271.

- Farsad, N.; Shlezinger, N.; Goldsmith, A.J.; Eldar, Y.C. Data-Driven Symbol Detection Via Model-Based Machine Learning. In Proceedings of the 2021 IEEE Statistical Signal Processing Workshop (SSP), Rio de Janeiro, Brazil, 11–14 July 2021; pp. 571–575.

- Beck, E.; Bockelmann, C.; Dekorsy, A. CMDNet: Learning a Probabilistic Relaxation of Discrete Variables for Soft Detection with Low Complexity. IEEE Trans. Commun. 2021, 69, 8214–8227.

- Gracla, S.; Beck, E.; Bockelmann, C.; Dekorsy, A. Robust Deep Reinforcement Learning Scheduling via Weight Anchoring. IEEE Commun. Lett. 2023, 27, 210–213.

- Weaver, W. Recent Contributions to the Mathematical Theory of Communication. In The Mathematical Theory of Communication; The University of Illinois Press: Champaign, IL, USA, 1949; Volume 10, pp. 261–281.

- Carnap, R.; Bar-Hillel, Y. An Outline of a Theory of Semantic Information; Research Laboratory of Electronics, Massachusetts Institute of Technology: Cambridge, MA, USA, 1952; p. 54.

- Bao, J.; Basu, P.; Dean, M.; Partridge, C.; Swami, A.; Leland, W.; Hendler, J.A. Towards a theory of semantic communication. In Proceedings of the 2011 IEEE Network Science Workshop, New York, NY, USA, 22–24 June 2011; pp. 110–117.

- Beck, E.; Bockelmann, C.; Dekorsy, A. Semantic Information Recovery in Wireless Networks. arXiv 2023, arXiv:2204.13366.

- Basu, P.; Bao, J.; Dean, M.; Hendler, J. Preserving quality of information by using semantic relationships. Pervasive Mob. Comput. 2014, 11, 188–202.

- Farsad, N.; Rao, M.; Goldsmith, A. Deep Learning for Joint Source-Channel Coding of Text. In Proceedings of the 2018 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Calgary, AB, Canada, 15–20 April 2018; pp. 2326–2330.

- Weng, Z.; Qin, Z.; Li, G.Y. Semantic Communications for Speech Signals. In Proceedings of the 2021 IEEE International Conference on Communications (ICC), Montreal, QC, Canada, 14–18 June 2021; pp. 1–6.

- Sana, M.; Strinati, E.C. Learning Semantics: An Opportunity for Effective 6G Communications. In Proceedings of the 2022 IEEE 19th Annual Consumer Communications & Networking Conference (CCNC), Las Vegas, NV, USA, 8–11 January 2022; pp. 631–636.

- Bourtsoulatze, E.; Kurka, D.B.; Gündüz, D. Deep Joint Source-Channel Coding for Wireless Image Transmission. IEEE Trans. Cogn. Commun. Netw. 2019, 5, 567–579.

- Wiedemann, T.; Shutin, D.; Lilienthal, A.J. Model-based gas source localization strategy for a cooperative multi-robot system—A probabilistic approach and experimental validation incorporating physical knowledge and model uncertainties. Robot. Auton. Syst. 2019, 118, 66–79.

- Winn, J.; Bishop, C. Variational message passing. J. Mach. Learn. Res. 2005, 6, 661–694.

- Woltering, M.; Wübben, D.; Dekorsy, A. Factor graph-based equalization for two-way relaying with general multi-carrier transmissions. IEEE Trans. Wirel. Commun. 2017, 17, 1212–1225.

- Beck, E.; Bockelmann, C.; Dekorsy, A. Compressed Edge Spectrum Sensing: Extensions and Practical Considerations. at-Automatisierungstechnik 2019, 67, 51–59.

- Simeone, O. A Brief Introduction to Machine Learning for Engineers. Found. Trends Signal Process. 2018, 12, 200–431.

- Chukkala, V.; DeLeon, P.; Horan, S.; Velusamy, V. Radio frequency channel modeling for proximity networks on the Martian surface. Comput. Netw. 2005, 47, 751–763.

- Zhang, S. Autonomous Swarm Navigation. Ph.D. Thesis, University of Kiel, Kiel, Germany, 2020.

- Vincent, P.; Larochelle, H.; Lajoie, I.; Bengio, Y.; Manzagol, P.A. Stacked Denoising Autoencoders: Learning Useful Representations in a Deep Network with a Local Denoising Criterion. J. Mach. Learn. Res. 2010, 11, 3371–3408.

- Stark, M.; Aoudia, F.A.; Hoydis, J. Joint Learning of Geometric and Probabilistic Constellation Shaping. In Proceedings of the 2019 IEEE Globecom Workshops (GC Wkshps), Waikoloa, HI, USA, 9–13 December 2019; pp. 1–6.

- Cammerer, S.; Aoudia, F.A.; Dörner, S.; Stark, M.; Hoydis, J.; Brink, S. Trainable Communication Systems: Concepts and Prototype. IEEE Trans. Commun. 2020, 68, 5489–5503.

- Jiang, Z.; He, Z.; Chen, S.; Molisch, A.F.; Zhou, S.; Niu, Z. Inferring Remote Channel State Information: Cramér-Rao Lower Bound and Deep Learning Implementation. In Proceedings of the IEEE GLOBECOM, Abu Dhabi, United Arab Emirates, 9–13 December 2018.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).