A Non-Intrusive Load Monitoring Method Based on Feature Fusion and SE-ResNet

Abstract

1. Introduction

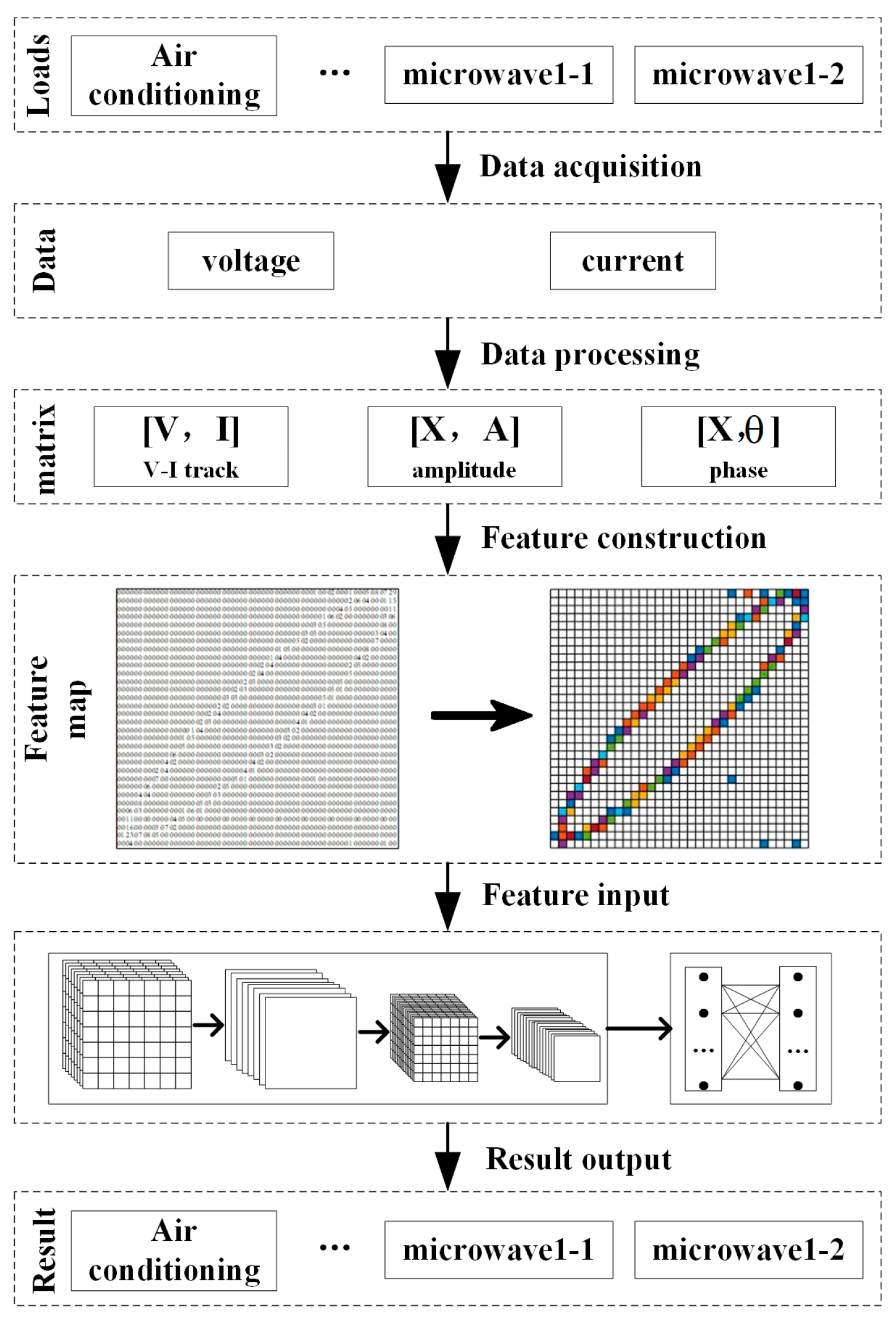

2. Feature Construction

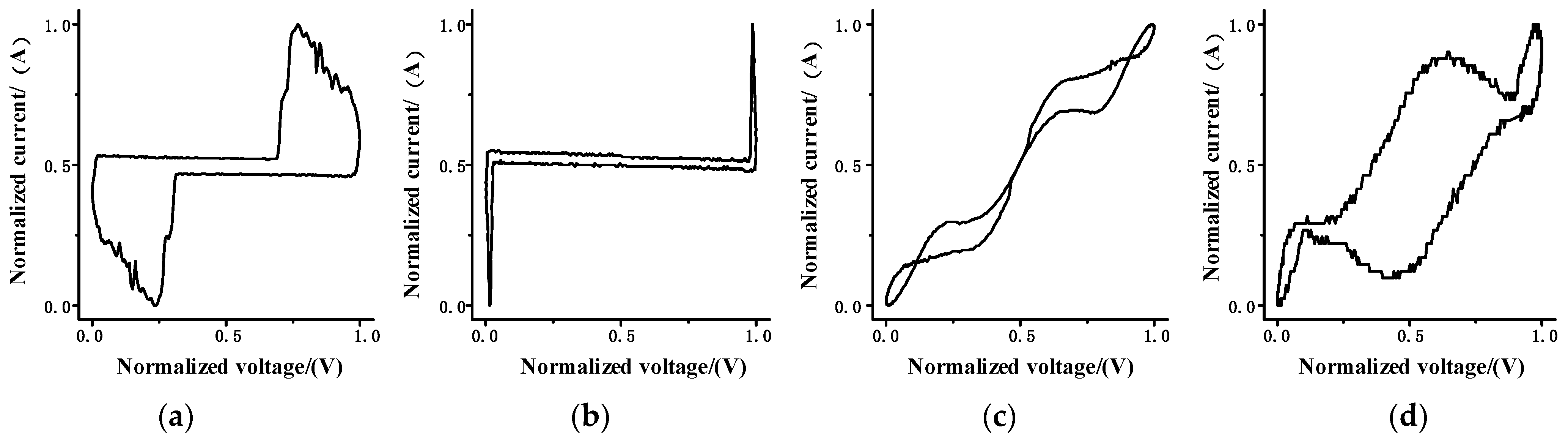

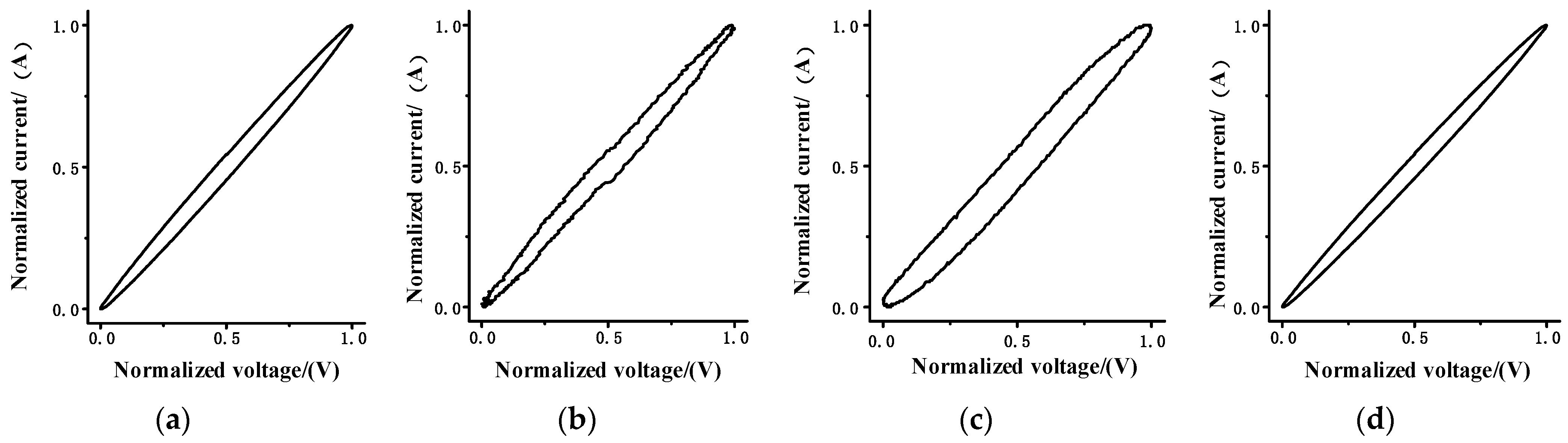

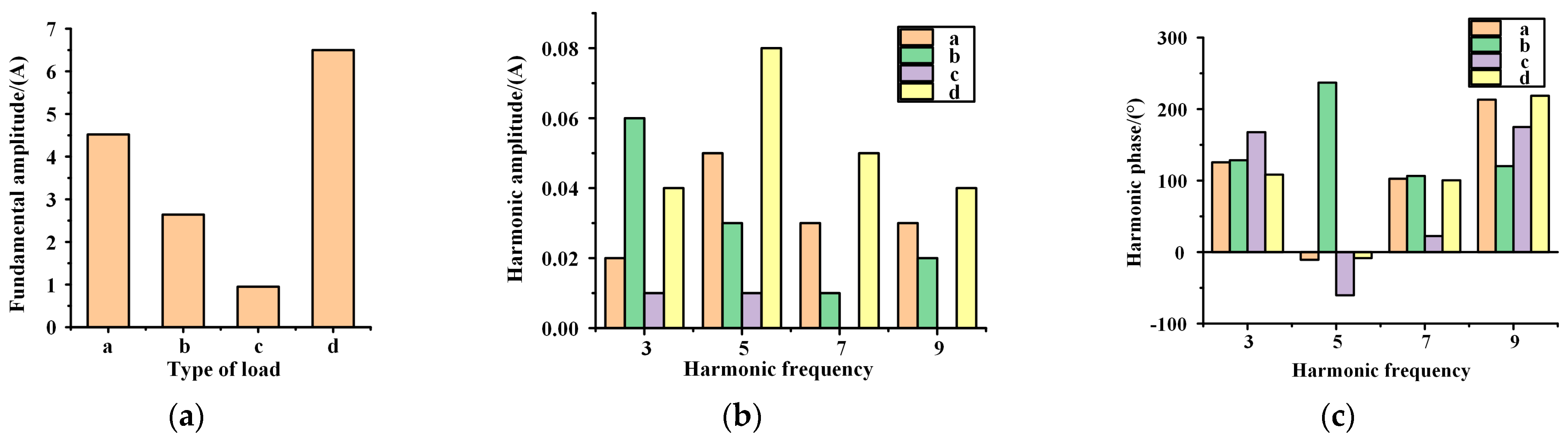

2.1. Single-Class Feature Processing

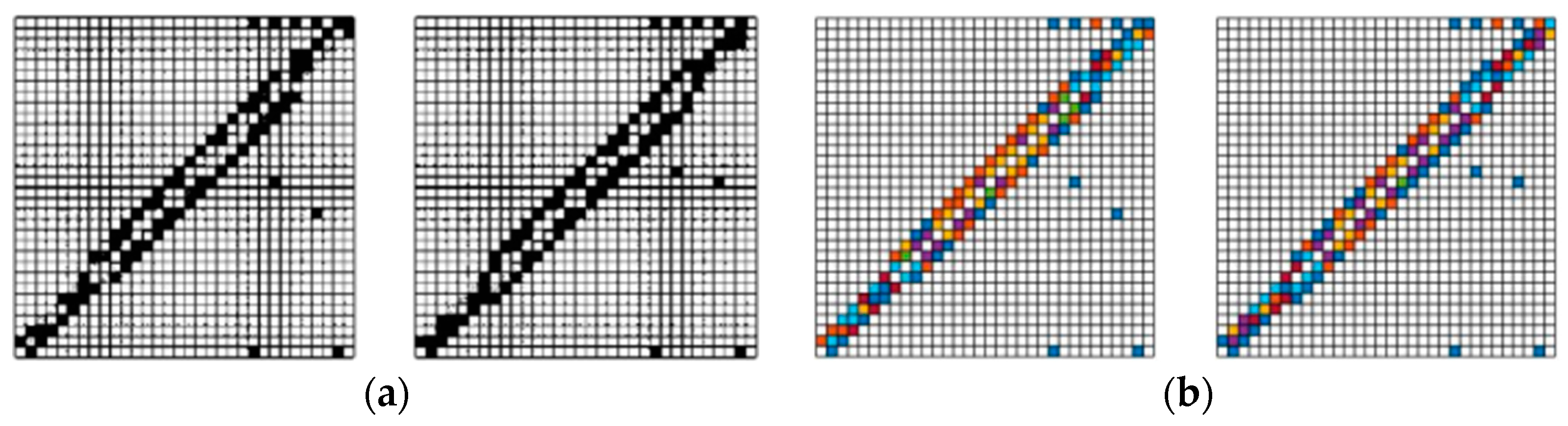

2.2. Matrix Heat Map Construction

3. Identification Network Construction

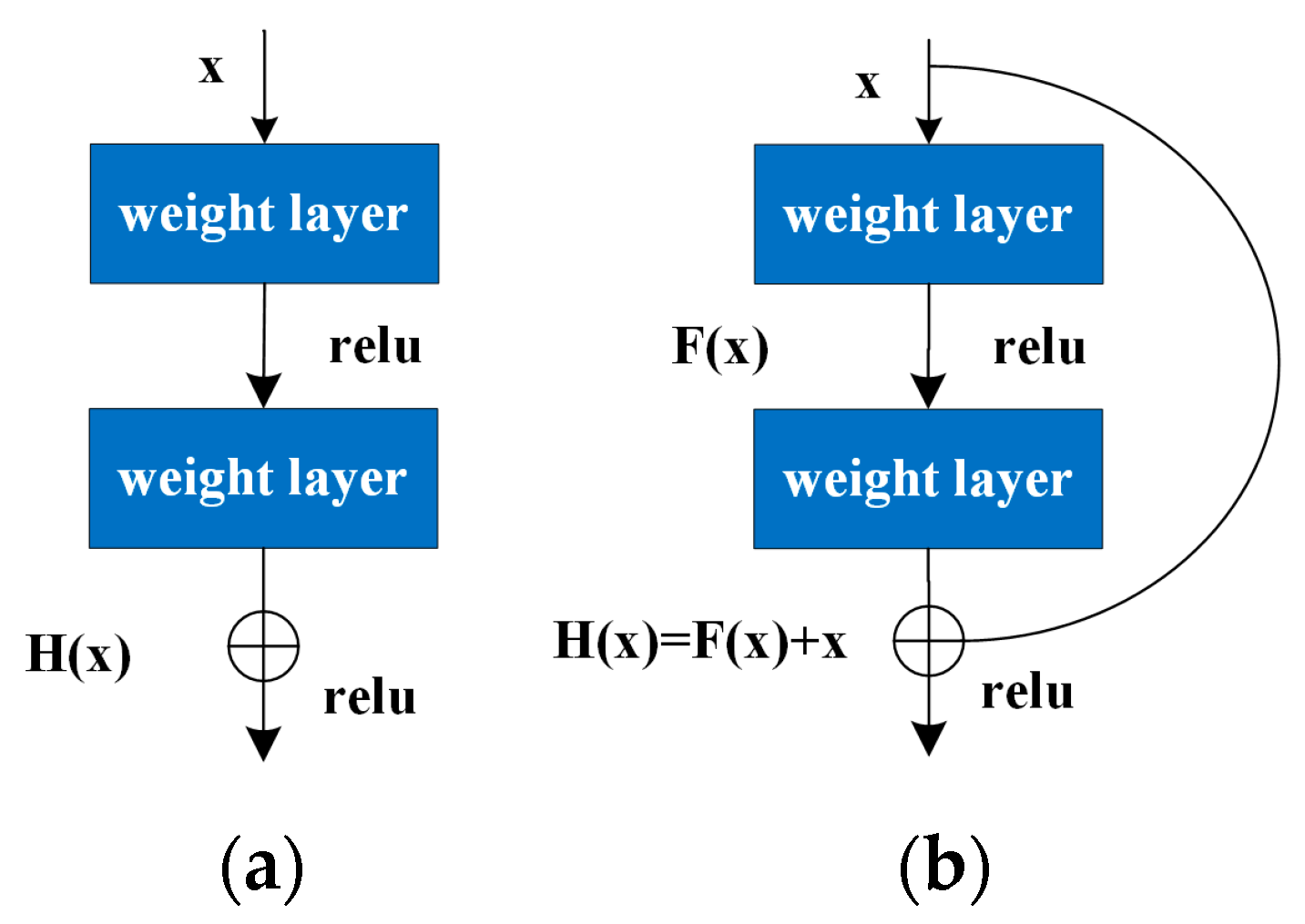

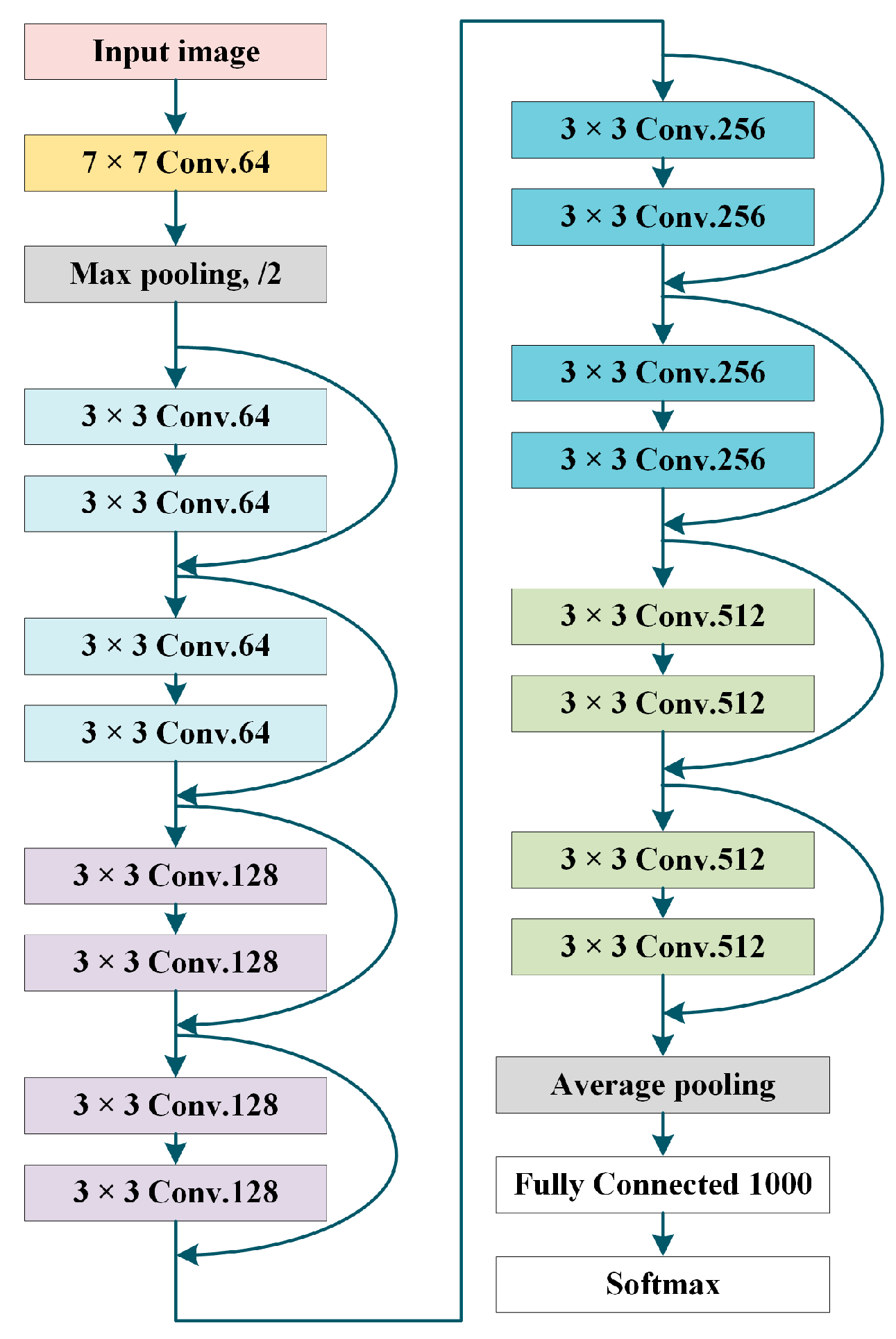

3.1. ResNet Network Structure

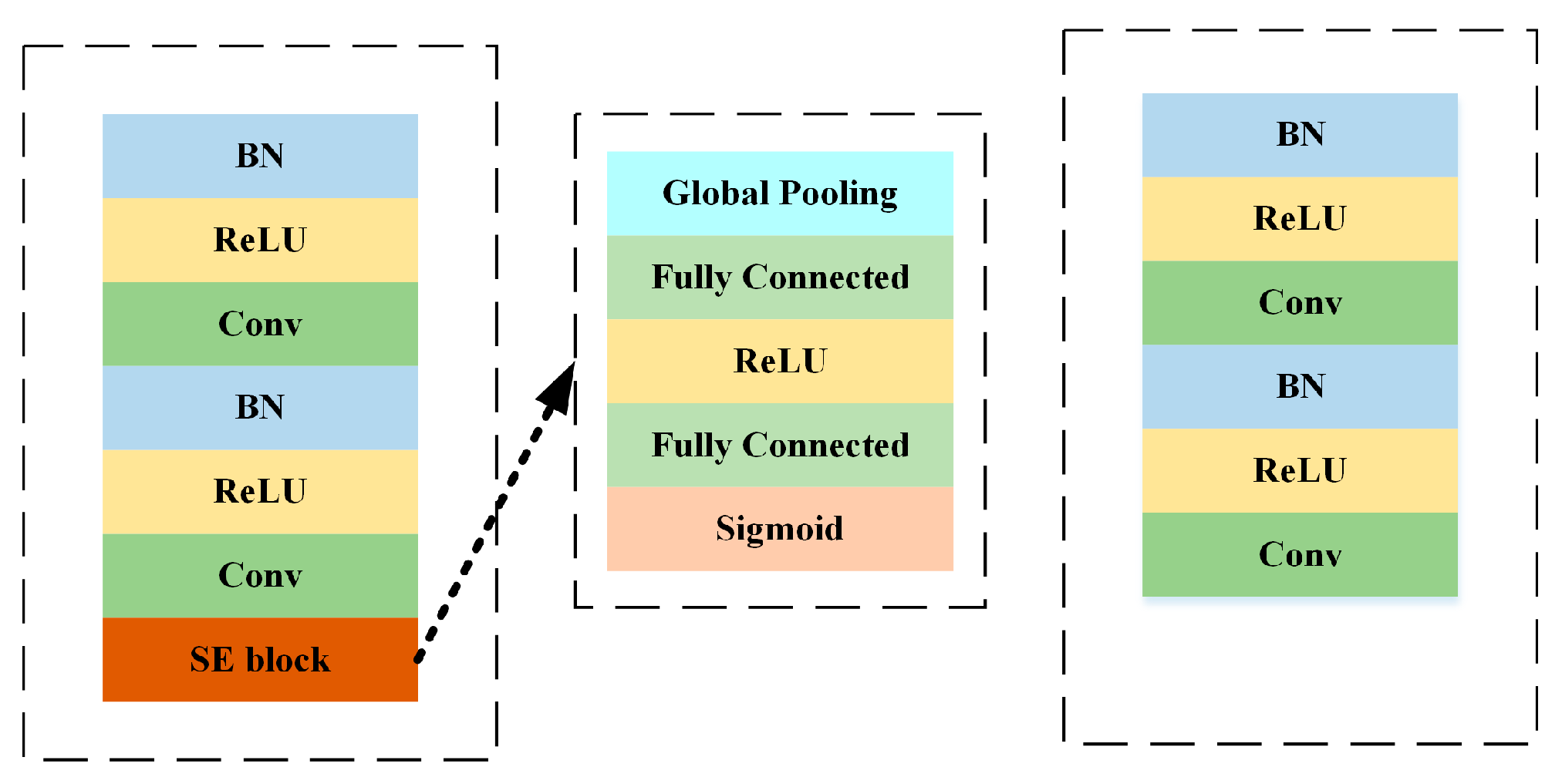
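
The core idea of the ResNet architecture is the identity shortcut. Each residual block learns a correction F(x) and outputs ReLU(F(x) + x), so a block that is not needed can simply pass its input through. Below is a minimal NumPy sketch of a basic block. The dense weight matrices stand in for the block's two convolution and batch-normalization stages, and the vector size and weights are hypothetical. They are not the configuration used in this paper.

```python
import numpy as np

def relu(x: np.ndarray) -> np.ndarray:
    return np.maximum(x, 0.0)

def residual_block(x: np.ndarray, w1: np.ndarray, w2: np.ndarray) -> np.ndarray:
    """Basic residual block y = ReLU(F(x) + x) with F(x) = W2 @ ReLU(W1 @ x).

    The two dense layers are a stand-in (an assumption for brevity) for the
    two 3x3 convolutions, each followed by batch normalization, of a real
    ResNet basic block.
    """
    residual = w2 @ relu(w1 @ x)  # residual branch F(x)
    return relu(residual + x)     # identity shortcut added before the final activation

# Hypothetical 8-dimensional feature vector and weights.
rng = np.random.default_rng(0)
x = rng.standard_normal(8)
w1 = rng.standard_normal((8, 8)) * 0.1
# If the branch learns F(x) = 0, the block simply passes ReLU(x) through.
print(np.allclose(residual_block(x, w1, np.zeros((8, 8))), relu(x)))  # True
```

Because the shortcut is an identity mapping, gradients reach earlier layers unchanged through the addition. This is what allows residual networks to be trained at much greater depth than plain stacked networks.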

3.2. SE-ResNet
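
SE-ResNet inserts a standard squeeze-and-excitation (SE) block into each residual unit. The SE block works in three steps. It squeezes each channel to a single number by global average pooling. It then passes that vector through a small FC-ReLU-FC-sigmoid bottleneck. Finally, it rescales every channel of the residual branch by the resulting weight before the shortcut addition. The NumPy sketch below is illustrative only. The channel count, the reduction ratio r = 4, the toy residual branch and the omission of biases and batch normalization are assumptions, not the layer configuration of this paper.

```python
import numpy as np

def sigmoid(z: np.ndarray) -> np.ndarray:
    return 1.0 / (1.0 + np.exp(-z))

def se_block(u: np.ndarray, w1: np.ndarray, w2: np.ndarray) -> np.ndarray:
    """Squeeze-and-excitation recalibration of a feature map u of shape (C, H, W).

    w1 has shape (C // r, C) and w2 has shape (C, C // r) for a reduction
    ratio r; biases are omitted to keep the sketch short.
    """
    z = u.mean(axis=(1, 2))                    # squeeze: global average pooling -> (C,)
    s = sigmoid(w2 @ np.maximum(w1 @ z, 0.0))  # excitation: FC -> ReLU -> FC -> sigmoid
    return u * s[:, None, None]                # rescale each channel by its weight in (0, 1)

def se_residual_unit(x: np.ndarray, residual_fn, w1: np.ndarray, w2: np.ndarray) -> np.ndarray:
    """SE-ResNet unit: the SE block rescales the residual branch before the shortcut add."""
    return np.maximum(x + se_block(residual_fn(x), w1, w2), 0.0)

# Hypothetical sizes: C = 16 channels, reduction ratio r = 4, 8 x 8 feature map.
rng = np.random.default_rng(0)
C, r = 16, 4
x = rng.standard_normal((C, 8, 8))
w1 = rng.standard_normal((C // r, C)) * 0.1
w2 = rng.standard_normal((C, C // r)) * 0.1
y = se_residual_unit(x, lambda t: 0.5 * t, w1, w2)  # toy stand-in for the conv branch
print(y.shape)  # (16, 8, 8)
```

The sigmoid gate lets the network learn which feature channels matter for a given input. The extra cost is only two small fully connected layers per unit.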

3.3. Identification Process

4. Experimental Results of REDD and PLAID

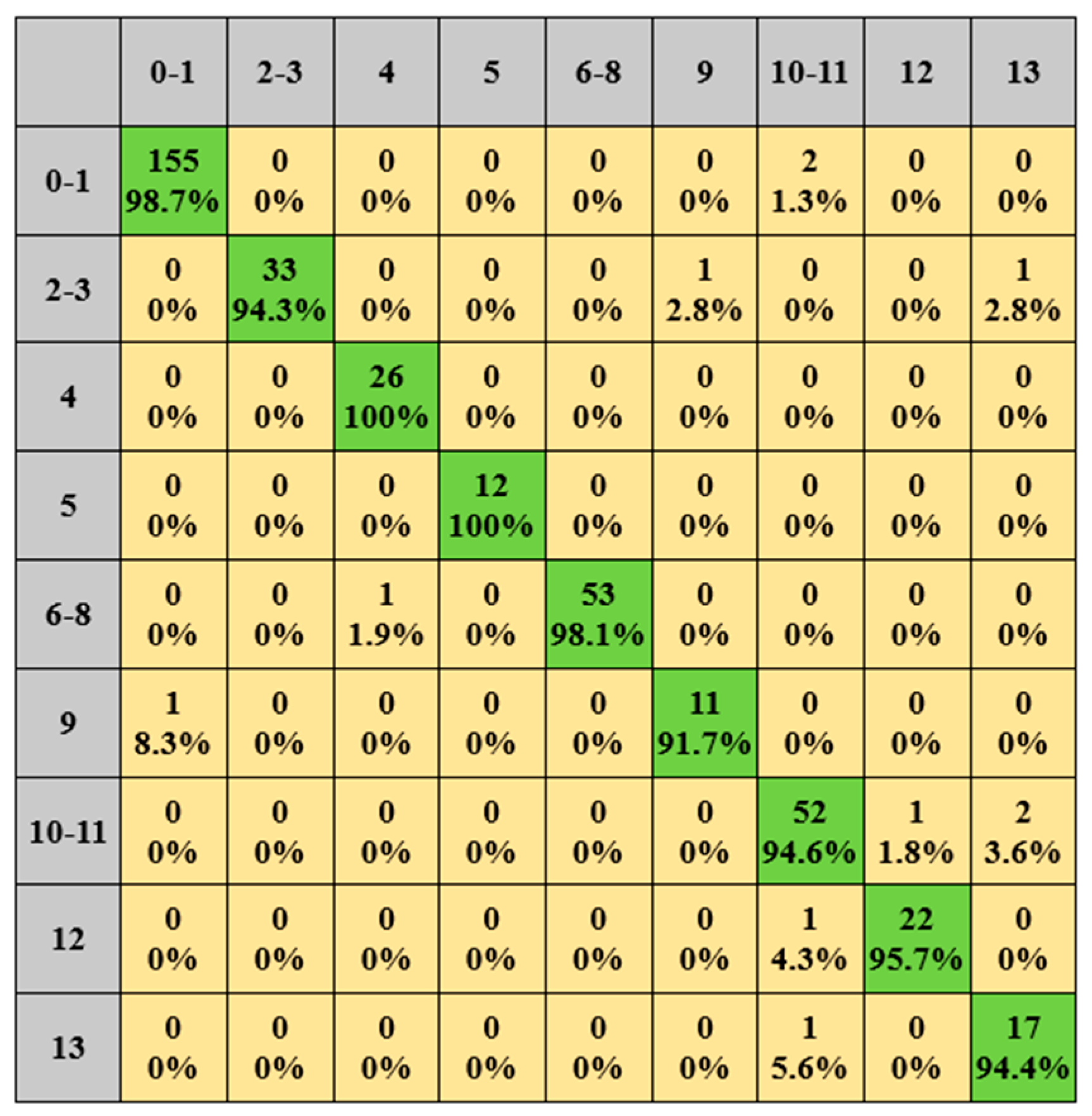

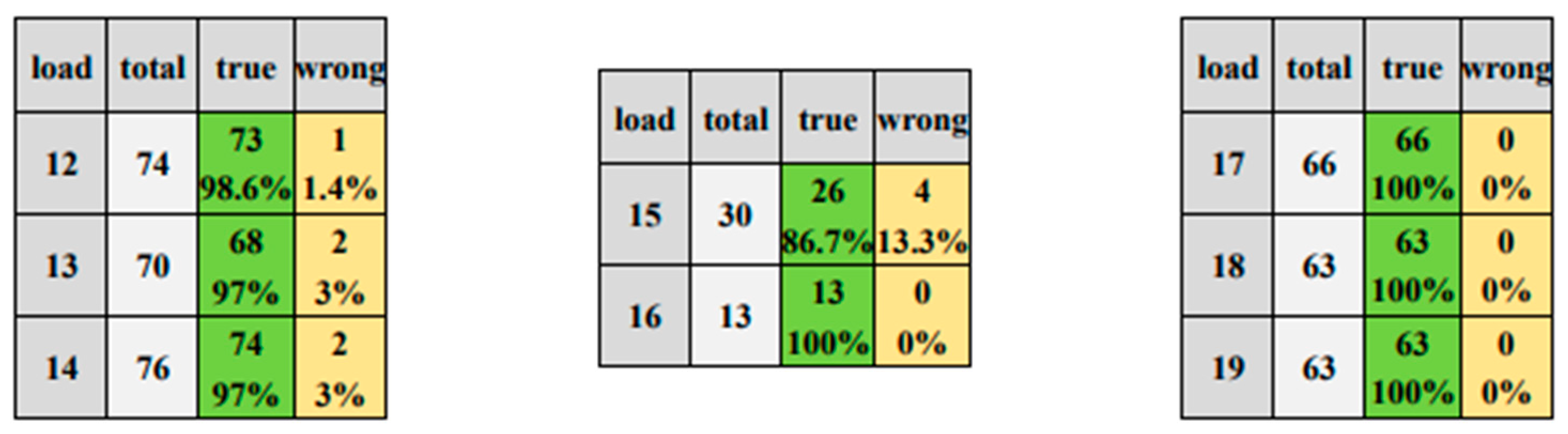

4.1. REDD

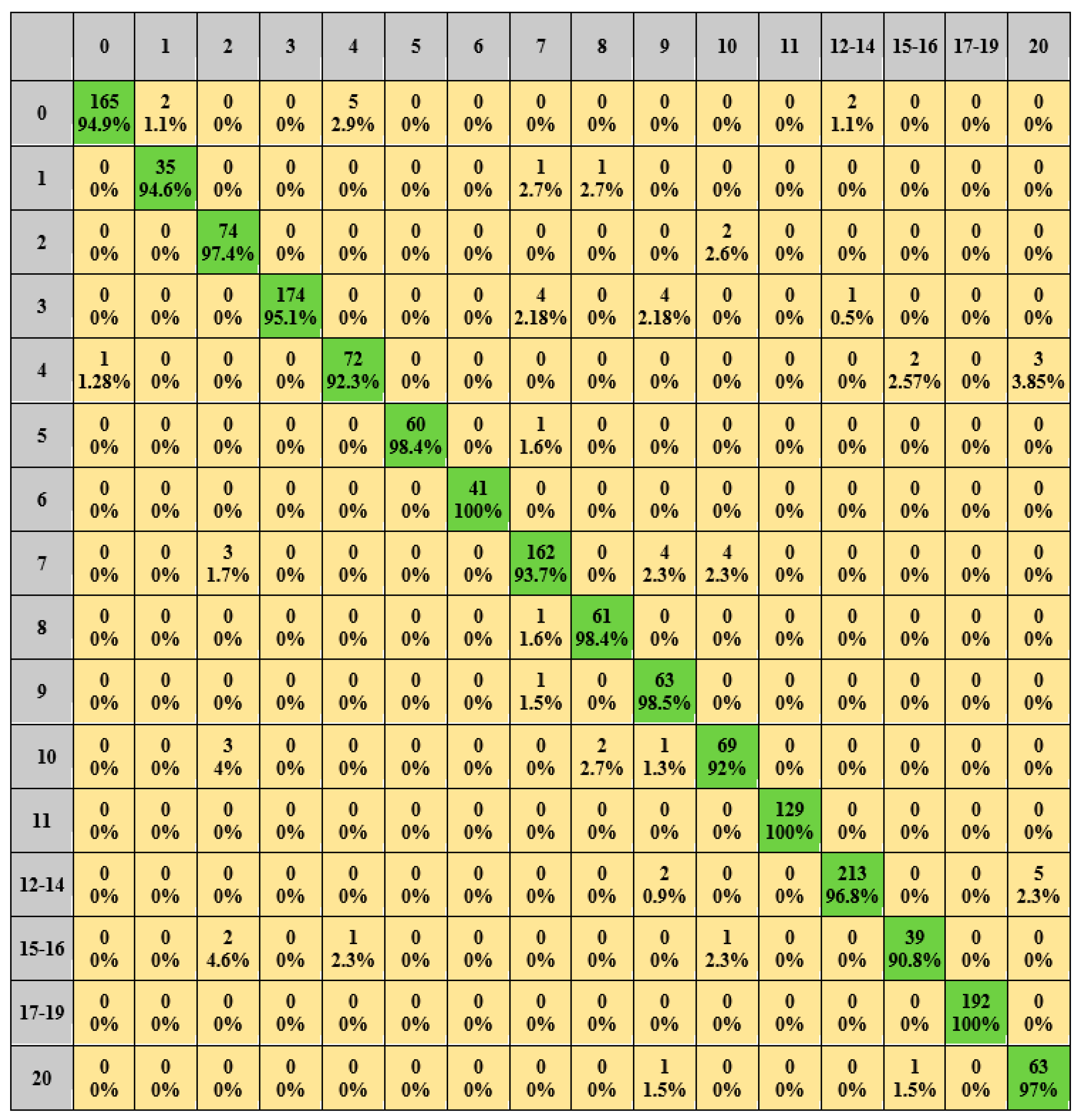

4.2. PLAID

4.3. Comparative Analysis of Results

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Tekler, Z.D.; Low, R.; Zhou, Y.; Yuen, C.; Blessing, L.; Spanos, C. Near-real-time plug load identification using low-frequency power data in office spaces: Experiments and applications. Appl. Energy 2020, 275, 115391.

- Deng, X.P.; Zhang, G.Q.; Wei, Q.L.; Wei, P.; Li, C.D. A survey on the non-intrusive load monitoring. Acta Autom. Sin. 2022, 48, 644–663.

- Shaw, S.R.; Leeb, S.B.; Norford, L.K.; Cox, R.W. Nonintrusive load monitoring and diagnostics in power systems. IEEE Trans. Instrum. Meas. 2008, 57, 1445–1454.

- Tekler, Z.D.; Low, R.; Yuen, C.; Blessing, L. Plug-Mate: An IoT-based occupancy-driven plug load management system in smart buildings. Build. Environ. 2022, 223, 109472.

- Ridi, A.; Gisler, C.; Hennebert, J. A survey on intrusive load monitoring for appliance recognition. In Proceedings of the 2014 22nd International Conference on Pattern Recognition, Stockholm, Sweden, 24–28 August 2014; IEEE: Piscataway, NJ, USA, 2014; pp. 3702–3707.

- Tekler, Z.D.; Low, R.; Blessing, L. User perceptions on the adoption of smart energy management systems in the workplace: Design and policy implications. Energy Res. Soc. Sci. 2022, 88, 102505.

- Bonfigli, R.; Principi, E.; Fagiani, M.; Severini, M.; Squartini, S.; Piazza, F. Non-intrusive load monitoring by using active and reactive power in additive Factorial Hidden Markov Models. Appl. Energy 2017, 208, 1590–1607.

- Liang, J.; Ng, S.K.; Kendall, G.; Cheng, J.W. Load signature study-Part I: Basic concept, structure, and methodology. IEEE Trans. Power Deliv. 2009, 25, 551–560.

- He, D.; Du, L.; Yang, Y.; Harley, R.; Habetler, T. Front-end electronic circuit topology analysis for model-driven classification and monitoring of appliance loads in smart buildings. IEEE Trans. Smart Grid 2012, 3, 2286–2293.

- Wichakool, W.; Avestruz, A.T.; Cox, R.W.; Leeb, S.B. Modeling and estimating current harmonics of variable electronic loads. IEEE Trans. Power Electron. 2009, 24, 2803–2811.

- Mulinari, B.M.; de Campos, D.P.; da Costa, C.H.; Ancelmo, H.C.; Lazzaretti, A.E.; Oroski, E.; Lima, C.R.; Renaux, D.P.; Pottker, F.; Linhares, R.R. A new set of steady-state and transient features for power signature analysis based on VI trajectory. In Proceedings of the 2019 IEEE PES Innovative Smart Grid Technologies Conference-Latin America (ISGT Latin America), Gramado, Brazil, 15–18 September 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 1–6.

- Gao, J.; Kara, E.C.; Giri, S.; Bergés, M. A feasibility study of automated plug-load identification from high-frequency measurements. In Proceedings of the 2015 IEEE Global Conference on Signal and Information Processing (GlobalSIP), Orlando, FL, USA, 13–16 December 2015; IEEE: Piscataway, NJ, USA, 2015; pp. 220–224.

- Yi, S.; Can, C.; Jun, L.; Jianhong, H.; Xiangjun, L. A Non-Intrusive Household Load Monitoring Method Based on Genetic Optimization. First Power Syst. Technol. 2016, 40, 3912–3917.

- Zheng, Z.; Chen, H.; Luo, X. A supervised event-based non-intrusive load monitoring for non-linear appliances. Sustainability 2018, 10, 1001.

- Wang, S.X.; Guo, L.Y.; Chen, H.W.; Deng, X.Y. Non-intrusive Load Identification Algorithm Based on Feature Fusion and Deep Learning. Autom. Electr. Power Syst. 2020, 44, 103–110.

- De Baets, L.; Develder, C.; Dhaene, T.; Deschrijver, D. Automated classification of appliances using elliptical fourier descriptors. In Proceedings of the 2017 IEEE International Conference on Smart Grid Communications (SmartGridComm), Dresden, Germany, 23–27 October 2017; IEEE: Piscataway, NJ, USA, 2017; pp. 153–158.

- Chen, J.; Wang, X.; Zhang, H. Non-intrusive load recognition using color encoding in edge computing. Chin. J. Sci. Instrum. 2020, 41, 12–19.

- Xiang, Y.; Ding, Y.; Luo, Q.; Wang, P.; Li, Q.; Liu, H.; Fang, K.; Cheng, H. Non-Invasive Load Identification Algorithm Based on Color Coding and Feature Fusion of Power and Current. Front. Energy Res. 2022, 10, 534.

- Gai, R.L.; Cai, J.R.; Wang, S.Y. Research Review on Image Recognition Based on Deep Learning. J. Chin. Comput. Syst. 2021, 42, 1980–1984.

- Ji, T.Y.; Liu, L.; Wang, T.S.; Lin, W.B.; Li, M.S.; Wu, Q.H. Non-Intrusive Load Monitoring Using Additive Factorial Approximate Maximum a Posteriori Based on Iterative Fuzzy c-Means. IEEE Trans. Smart Grid 2019, 10, 6667–6677.

- Culler, D. BuildSys’15: The 2nd ACM International Conference on Embedded Systems for Energy-Efficient Built Environments, Seoul, Republic of Korea, 4–5 November 2015; Association for Computing Machinery: New York, NY, USA, 2015.

- Athanasiadis, C.L.; Papadopoulos, T.A.; Doukas, D.I. Real-time non-intrusive load monitoring: A light-weight and scalable approach. Energy Build. 2021, 253, 111523.

- De Baets, L.; Ruyssinck, J.; Develder, C.; Dhaene, T.; Deschrijver, D. Appliance classification using VI trajectories and convolutional neural networks. Energy Build. 2018, 158, 32–36.

| Load | Symbol | Load | Symbol |
|---|---|---|---|
| fridge 1-1 | 0 | microwave 1-2 | 7 |
| fridge 1-2 | 1 | microwave 1-3 | 8 |
| light 1-1 | 2 | CE appliance | 9 |
| light 1-2 | 3 | sockets 1-1 | 10 |
| light 2 | 4 | sockets 1-2 | 11 |
| light 3 | 5 | washer dryer | 12 |
| microwave 1-1 | 6 | electric furnace | 13 |

| Symbol | Precision (P) | Recall (R) | F1 | Accuracy (A) |
|---|---|---|---|---|
| 0–1 | 0.994 | 0.987 | 0.990 | 0.992 |
| 2–3 | 1.000 | 0.943 | 0.971 | 0.995 |
| 4 | 0.963 | 1.000 | 0.981 | 0.997 |
| 5 | 1.000 | 1.000 | 1.000 | 1.000 |
| 6–8 | 1.000 | 0.981 | 0.990 | 0.997 |
| 9 | 0.917 | 0.917 | 0.917 | 0.995 |
| 10–11 | 0.929 | 0.945 | 0.937 | 0.982 |
| 12 | 0.957 | 0.957 | 0.957 | 0.995 |
| 13 | 0.850 | 0.944 | 0.895 | 0.990 |
| average | 0.956 | 0.964 | 0.969 | 0.993 |
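
The REDD results table supports two quick consistency checks, sketched below in Python with the values copied from it. First, each F1 should equal the harmonic mean 2PR/(P + R) of its own row. Second, the average row can be compared with an unweighted (macro) mean over the nine rows. The paper does not state how its average was computed (per row, per class, or weighted by sample count), so the macro mean here is only one possible reading. The names `redd`, `f1` and `macro` are illustrative.

```python
# Per-class rows (P, R, F1, A) copied from the REDD results table.
redd = {
    "0-1":   (0.994, 0.987, 0.990, 0.992),
    "2-3":   (1.000, 0.943, 0.971, 0.995),
    "4":     (0.963, 1.000, 0.981, 0.997),
    "5":     (1.000, 1.000, 1.000, 1.000),
    "6-8":   (1.000, 0.981, 0.990, 0.997),
    "9":     (0.917, 0.917, 0.917, 0.995),
    "10-11": (0.929, 0.945, 0.937, 0.982),
    "12":    (0.957, 0.957, 0.957, 0.995),
    "13":    (0.850, 0.944, 0.895, 0.990),
}

def f1(p: float, r: float) -> float:
    """F1 score: the harmonic mean of precision and recall."""
    return 2 * p * r / (p + r) if p + r > 0 else 0.0

# Check 1: each reported F1 against the value implied by its own P and R.
for label, (p, r, f, _) in redd.items():
    print(f"{label:>5}: reported F1 = {f:.3f}, 2PR/(P+R) = {f1(p, r):.3f}")

# Check 2: unweighted (macro) mean of each column over the nine rows.
columns = list(zip(*redd.values()))
macro = [sum(col) / len(col) for col in columns]
print("macro P, R, F1, A =", [round(m, 3) for m in macro])
```

Every row's F1 agrees with 2PR/(P + R) to within rounding, and the macro mean reproduces the reported average recall of 0.964. The macro mean of the F1 column, however, comes to about 0.960 rather than the 0.969 in the average row.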

| Load | Symbol | Load | Symbol |
|---|---|---|---|
| air conditioning | 0 | laptop | 11 |
| blender | 1 | microwave 1-1 | 12 |
| coffee maker | 2 | microwave 1-2 | 13 |
| compact fluorescent lamp | 3 | microwave 1-3 | 14 |
| fan | 4 | soldering iron 1-1 | 15 |
| fridge | 5 | soldering iron 1-2 | 16 |
| hair curler | 6 | vacuum 1-1 | 17 |
| hair dryer | 7 | vacuum 1-2 | 18 |
| heater | 8 | vacuum 1-3 | 19 |
| incandescent light bulb | 9 | washing machine | 20 |
| kettle | 10 | | |

| Symbol | Precision (P) | Recall (R) | F1 | Accuracy (A) |
|---|---|---|---|---|
| 0 | 0.990 | 0.929 | 0.959 | 0.996 |
| 1 | 0.974 | 0.974 | 0.974 | 0.997 |
| 2 | 0.925 | 0.974 | 0.949 | 0.993 |
| 3 | 1.000 | 0.925 | 0.961 | 0.996 |
| 4 | 0.935 | 0.923 | 0.929 | 0.993 |
| 5 | 1.000 | 0.983 | 0.991 | 0.999 |
| 6 | 1.000 | 1.000 | 1.000 | 1.000 |
| 7 | 0.925 | 0.940 | 0.932 | 0.994 |
| 8 | 0.953 | 0.934 | 0.943 | 0.997 |
| 9 | 0.820 | 0.984 | 0.895 | 0.990 |
| 10 | 0.900 | 0.920 | 0.910 | 0.990 |
| 11 | 1.000 | 1.000 | 1.000 | 1.000 |
| 12–14 | 0.986 | 0.968 | 0.977 | 0.993 |
| 15–16 | 0.979 | 0.933 | 0.955 | 0.990 |
| 17–19 | 1.000 | 1.000 | 1.000 | 1.000 |
| 20 | 0.955 | 0.969 | 0.962 | 0.997 |
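
The per-class P, R, F1 and A values in the results tables can all be derived from a multi-class confusion matrix by scoring each class one-vs-rest. The pure-Python sketch below assumes A is the one-vs-rest accuracy (TP + TN)/N. This assumption is consistent with every class in both tables scoring at least 0.98 on A. The 3 × 3 matrix is a hypothetical toy example, not data from the paper, and `one_vs_rest_metrics` is an illustrative helper.

```python
def one_vs_rest_metrics(cm):
    """Per-class precision, recall, F1 and accuracy from a square confusion matrix.

    cm[i][j] counts samples of true class i predicted as class j. Each class is
    scored one-vs-rest, so its accuracy also credits the true negatives.
    Assumes every class occurs and is predicted at least once (no zero division).
    """
    k = len(cm)
    n = sum(sum(row) for row in cm)
    results = []
    for c in range(k):
        tp = cm[c][c]
        fp = sum(cm[i][c] for i in range(k)) - tp  # other classes predicted as c
        fn = sum(cm[c]) - tp                       # class c predicted as something else
        tn = n - tp - fp - fn
        p = tp / (tp + fp)
        r = tp / (tp + fn)
        f1 = 2 * p * r / (p + r)
        results.append((p, r, f1, (tp + tn) / n))
    return results

# Hypothetical 3-class confusion matrix, not data from the paper.
cm = [[48, 2, 0],
      [1, 45, 4],
      [0, 3, 47]]
for c, (p, r, f1, a) in enumerate(one_vs_rest_metrics(cm)):
    print(f"class {c}: P={p:.3f} R={r:.3f} F1={f1:.3f} A={a:.3f}")
```

True negatives make up most of N once there are many classes, so one-vs-rest accuracy stays close to 1 even when P and R are noticeably lower. PLAID class 9 in the table illustrates this: its F1 is 0.895 but its A is 0.990.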

| Characteristic | Algorithm | REDD F1 |
|---|---|---|
| V-I trajectories | SoftMax | 0.880 |
| | SE-ResNet | 0.855 |
| V-I binary graph | CNN | 0.826 |
| | SE-ResNet | 0.83 |
| (V-I + harmonics) binary diagram | ResNet | 0.872 |
| | SE-ResNet | 0.895 |
| (V-I + power) vector characteristic | ResNet | 0.879 |
| | SE-ResNet | 0.964 |

| Characteristic | Algorithm | PLAID accuracy |
|---|---|---|
| V-I trajectories | Random forest [15] | 77% |
| | Random forest [7] | 81.75% |
| Amplitude, phase angle | Multi-layer perceptron [16] | 73% |
| V-I binary graph | CNN [17] | 75.3% |
| | ResNet | 76.1% |
| (V-I + harmonics) binary diagram | CNN | 80.25% |
| | ResNet | 84.37% |
| (V-I + power) vector characteristic | BP neural network [10] | 83.7% |
| | SE-ResNet | 85.3% |
| (V-I + power) vector characteristic | CNN | 89.35% |
| | SE-ResNet | 96.24% |
| True color feature map | CNN [13] | 82.87% |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Chen, T.; Qin, H.; Li, X.; Wan, W.; Yan, W. A Non-Intrusive Load Monitoring Method Based on Feature Fusion and SE-ResNet. Electronics 2023, 12, 1909. https://doi.org/10.3390/electronics12081909

Chen T, Qin H, Li X, Wan W, Yan W. A Non-Intrusive Load Monitoring Method Based on Feature Fusion and SE-ResNet. Electronics. 2023; 12(8):1909. https://doi.org/10.3390/electronics12081909

Chicago/Turabian Style: Chen, Tie, Huayuan Qin, Xianshan Li, Wenhao Wan, and Wenwei Yan. 2023. "A Non-Intrusive Load Monitoring Method Based on Feature Fusion and SE-ResNet" Electronics 12, no. 8: 1909. https://doi.org/10.3390/electronics12081909

APA Style: Chen, T., Qin, H., Li, X., Wan, W., & Yan, W. (2023). A Non-Intrusive Load Monitoring Method Based on Feature Fusion and SE-ResNet. Electronics, 12(8), 1909. https://doi.org/10.3390/electronics12081909