Abstract

The ItemCF algorithm is currently the most widely used recommendation algorithm in commercial applications. In the early days of recommender systems, most recommendation algorithms ran on a single machine rather than in parallel. Combined with the rapid growth of user behavior data in the big data era, this single-machine approach has become a bottleneck for the execution efficiency of recommender systems. With the vigorous development of distributed technology, distributed ItemCF algorithms have become a research hotspot. Hadoop is a very popular distributed system infrastructure. MapReduce, which provides large-scale data computing, and Hive, a data warehousing tool, are two key components of the Hadoop ecosystem, each with its own advantages and applicable scenarios. Scholars have already used MapReduce and Hive to parallelize the ItemCF algorithm. However, these studies use either MapReduce or Hive alone and do not fully leverage the strengths of both. As a result, existing parallel ItemCF recommendation algorithms have struggled to combine a simple, easy-to-implement design with high running efficiency. To address this issue, we propose a distributed ItemCF recommendation algorithm that combines MapReduce and Hive, named HiMRItemCF. The algorithm divides ItemCF into six steps: (1) deduplication; (2) obtaining the preference matrix of all users; (3) obtaining the co-occurrence matrix of all items; (4) multiplying the two matrices to generate a three-dimensional matrix; (5) aggregating the data of the three-dimensional matrix to obtain the recommendation scores of all users for all items; and (6) sorting the scores in descending order. Hive carries out steps 1 and 6, and MapReduce carries out the other four steps, which involve more complex calculations and operations. The Hive jobs and MapReduce jobs are linked through Hive’s external tables. 
We implemented the proposed algorithm in Java and ran the program on three publicly available user shopping behavior datasets. Compared with algorithms that use only MapReduce jobs, the program implementing the proposed algorithm has fewer lines of source code and lower cyclomatic and Halstead complexity. It also achieves a higher speedup ratio and parallel computing efficiency on all datasets. These results indicate that the proposed parallel and distributed ItemCF algorithm, which combines MapReduce and Hive, offers both concise, easy-to-understand code and high time efficiency.

1. Introduction

With the rapid development of the Internet, everyone can access a large amount of information. The challenge now is how to obtain truly useful information from such vast amounts of data. To address this issue, personalized recommendation systems are increasingly applied in areas such as commercial websites and electronic libraries [1]. In the era of big data, commercial websites, electronic libraries, and other platforms generate vast amounts of user behavior data every moment [2,3]. Traditional recommendation algorithms that run on a single machine encounter severe performance bottlenecks when processing massive data, and their long running times make it difficult to meet practical computing demands [4,5]. Given sufficient computing resources, a distributed recommendation process can significantly shorten the processing time and improve the algorithm’s performance. Collaborative filtering is the most successful technology in the field of personalized recommendation, and in recent years many studies have begun to focus on distributed versions of this algorithm.

Hadoop, developed by the Apache Software Foundation, has become the most influential open-source distributed development platform of the big data era and is, in fact, the standard tool for big data processing [6]. It can be deployed on inexpensive computer clusters. With Hadoop, programmers can easily write distributed parallel programs and run them on computer clusters to store and process massive data [7]. Many scholars have successfully implemented distributed collaborative filtering recommendation algorithms using Hadoop. The two core components of Hadoop are HDFS for massive data storage and MapReduce for large-scale data processing. In addition, the Hadoop ecosystem provides components such as Sqoop, Mahout, Hive, Pig, HBase, Flume, and Oozie. These build on the underlying storage and computing layers to accomplish tasks such as data integration, data mining, data security protection, data management, and user experience enhancement. Among these components, the parallel computing framework MapReduce and the data warehouse Hive are the most widely used for implementing collaborative filtering algorithms [8,9,10,11,12,13,14,15]. This paper focuses on the item-based collaborative filtering (ItemCF) algorithm; the parallelization of the user-based collaborative filtering (UserCF) algorithm is left for future research.

The current literature on distributed ItemCF algorithms using MapReduce and Hive typically uses either MapReduce or Hive exclusively. MapReduce excels in flexibility and efficiency, whereas Hive offers superior convenience and ease of use [16]. Because these algorithms are not built from cooperating MapReduce and Hive jobs, they cannot fully exploit the individual strengths of both.

To address these issues, this paper explores a new distributed ItemCF recommendation algorithm called HiMRItemCF. Hive’s external tables link MapReduce jobs and Hive jobs, so the ItemCF algorithm can be divided into several sequentially executed MapReduce and Hive jobs. This makes it possible to harness the respective strengths of both when parallelizing the algorithm. The algorithm is divided into six steps, with input and output paths set for each step: deduplication, obtaining the preference matrix of all users, obtaining the co-occurrence matrix of all items, multiplying the two matrices to generate a three-dimensional matrix, aggregating the data of the three-dimensional matrix to obtain the recommendation scores of all users for all items, and sorting the scores in descending order. On this basis, Hive external tables and HiveQL statements perform the distributed deduplication in step 1 and the descending sort of scores in step 6. The HDFS locations of the data tables are set as the input paths of these two steps, and the results of the HiveQL statements are saved to their output paths. MapReduce implements distributed computing for the other four steps, which are linked to the two Hive jobs through input and output paths. The proposed algorithm achieves simpler and more efficient programming, a lower likelihood of errors, and better time efficiency at the same time. The algorithm also has limitations. HiveQL has limited expressive power, so some complex calculations are difficult to express in it. Most steps therefore still require MapReduce jobs, and the Hive jobs in HiMRItemCF play only a supporting role; as a result, the advantages of Hive are not fully realized. 
This paper attempts to combine the advantages of MapReduce and Hive to build a distributed ItemCF algorithm with both concise, easy-to-understand code and high time efficiency. However, Hive jobs are ultimately converted into MapReduce jobs for execution, so the underlying program of the proposed algorithm is still MapReduce. Therefore, the improvement in execution efficiency over distributed ItemCF algorithms based entirely on MapReduce is not very significant.

The main contributions and innovations of this paper are as follows:

- (1)

- Through Hive’s external tables, the input and output of MapReduce jobs are associated with the data in Hive tables. This lays the theoretical and practical foundation for combining MapReduce and Hive to parallelize the ItemCF recommendation algorithm, and it offers a new direction for solving this problem. It is the basis of the research in this paper and one of its main innovations.

- (2)

- Exploiting the respective advantages of MapReduce and Hive, the ItemCF algorithm is divided into six steps. Each step is parallelized with whichever of Hive or MapReduce is better suited to that kind of operation. The result is a distributed ItemCF recommendation algorithm that is simple and quick to implement, less prone to errors, and highly time-efficient.

The rest of this paper is organized as follows:
- Section 2 describes the theoretical background and related work.
- Section 3 introduces related knowledge and technologies such as the MapReduce framework and collaborative filtering.
- Section 4 presents the basic process of the HiMRItemCF algorithm.
- Section 5 details the two Hive jobs of HiMRItemCF.
- Section 6 details its four MapReduce jobs.
- Section 7 reports experiments on three public user shopping behavior datasets and discusses the results.
- Finally, the paper is summarized and future work is outlined.

2. Theoretical Background and Related Works

This section briefly describes the theoretical background and related works of this paper. Firstly, the shortcomings and limitations of the ItemCF algorithm and its research status are introduced. Then, the distributed ItemCF algorithm is summarized, and the research trends and existing problems and shortcomings of ItemCF technologies based on the Hadoop platform are analyzed and discussed.

2.1. Cons and Limitations of the ItemCF Algorithm and Its Recent Studies

ItemCF, otherwise known as item-based collaborative filtering, is a commonly used approach in recommendation systems. However, while this method is widely applied, it also has several significant disadvantages and limitations, including:

- (1)

- Cold-start problem: This is a common issue in collaborative filtering systems. For ItemCF, user cold start is not serious: as soon as a new user interacts with an item, the algorithm can recommend other items related to it. For new items that few users have interacted with, however, it is usually difficult to compute reliable item similarities, so ItemCF tends to underperform when recommending such items.

- (2)

- Data sparsity problem: Most users interact with only a very small portion of the items in the system, which makes the user–item matrix very sparse. This sparsity can make it very difficult to calculate reliable similarities between items.

- (3)

- Scalability problem: When dealing with large-scale data, calculating and storing the similarity of all item pairs is a challenge. The number of item pairs grows quadratically with the number of items, so the problem worsens as the catalog grows.

- (4)

- Long-tail problem: Popular items have rich behavioral data, so they tend to gain more weight when item similarity is calculated. This biases recommendation results towards popular items and works against the recommendation of long-tail (unpopular) items.

- (5)

- Static nature: The ItemCF algorithm typically calculates the similarity among items based on historical data. While this can reflect item similarity to some degree, it does not consider the changes in user interests over time.

Scholars have done extensive research to address the above shortcomings and limitations of ItemCF:

- (1)

- Handling the cold-start problem: New users or items lack enough interaction data to estimate their true preferences, so accurate recommendations cannot be made for them. This issue has been studied by, for example, Lei et al. [17] and Barman et al. [18].

- (2)

- Dealing with data sparsity: Another research focus is the sparsity problem, that is, the lack of user behavior data, which leads to poor results. Many researchers address this problem with techniques such as matrix factorization [19] and latent factor models [20].

- (3)

- Algorithm optimization and improvement: Although the ItemCF algorithm is quite mature, different application environments and business characteristics require it to be optimized and improved. For example, to optimize ItemCF for big data environments, suitable data structures and parallel computing techniques are used to improve computing performance. This is also the main concern of this paper.

- (4)

- Dealing with the long-tail problem: A few popular items are recommended to a large number of users, while the many long-tail (unpopular) items are rarely recommended, which reduces the diversity of recommendations. This is also a focus of current ItemCF research, for example, by Sreepada et al. [21].

- (5)

- Time dynamics: In real e-commerce environments, users’ interests change dynamically, so ItemCF needs to take the time factor into account. Time-aware ItemCF is therefore also a research direction, for example, in the work by Zhang et al. [22].

- (6)

- Integrating other technologies: ItemCF is often combined with other technologies and methods, such as machine learning [23], deep learning [24], and genetic algorithms [25,26]. These combinations mine deep features and correlations more effectively and improve the accuracy and personalization of the recommendation system.

In this paper, we focus on improving the execution efficiency of ItemCF through distributed computing.

2.2. Distributed ItemCF Algorithm

According to the above discussion, optimizing the ItemCF algorithm with distributed technology to improve computing performance in big data environments is an important research direction in the field of recommendation algorithms. Distributed ItemCF algorithms focus on large-scale data processing and real-time requirements. Building on the traditional ItemCF algorithm, they process data with distributed computing frameworks such as Apache Hadoop, Apache Spark, Apache Flink, and GraphLab (now called Turi), which improves the efficiency and processing capacity of the algorithm. Examples include collaborative filtering implemented on Spark [27], an open-source distributed computing system, and on Flink [28], an open-source stream processing framework. Implementing the ItemCF algorithm with the Hadoop framework is discussed in detail in the next subsection.

2.3. ItemCF Technologies Based on Hadoop Platform

In the current research on the distributed ItemCF algorithm, the Hadoop framework is the most widely used. These studies mainly implement the parallel computing of ItemCF based on the MapReduce and Hive components of the Hadoop framework.

Yan et al. [8] parallelized the item-based collaborative filtering recommendation algorithm using the MapReduce model. Their algorithm consists of three MapReduce processes. Map1 obtains each item ID and the rating given to it by each user, and Reduce1 calculates the average rating of each item. Map2 computes all pairs of items, and Reduce2 calculates the similarity between items. Map3 identifies all other items similar to a given item, and Reduce3 calculates the predicted ratings of unrated items.

Li et al. [9] decomposed the item-based CF algorithm into six steps: mapping product (item) IDs, mapping user IDs, generating a preference matrix, building a co-occurrence matrix, calculating the dot product of the preference matrix and the co-occurrence matrix, and producing a recommendation vector. The algorithm is implemented with 12 MapReduce jobs in total.

Jia et al. [10] proposed a method with seven steps: setting up the platform in the Hadoop cluster, preprocessing data logs, building an average rating vector, building a user vector, building a co-occurrence matrix, matrix multiplication, and generating recommendations. The last five of these steps are each implemented as a separate MapReduce job (i.e., in a distributed manner).

Cheng et al. [11] proposed a new collaborative filtering algorithm to address data sparsity and system scalability in traditional collaborative filtering. They use the ratings given by users to different items to obtain an item rating matrix. They then classify items with a naive Bayes classifier and find the nearest-neighbor set of each item within the same class using a modified cosine similarity measure. Each of these steps is implemented as a separate MapReduce job.

Ying et al. [12] implemented the ItemCF algorithm with the MapReduce programming model. Three MapReduce jobs calculate the user–item co-occurrence matrix, the item–item co-occurrence matrix, and the user–interest matrix, respectively, and two batch processing steps compute the item cosine similarity matrix and optimize the recommendation results.

Ghuli et al. [13] divided the most time-consuming calculation in the collaborative filtering (CF) algorithm into three MapReduce stages. The first combines items and ratings with users, the second obtains co-occurring items and computes their similarity, and the third ranks items and outputs the pairwise similarity between items. Using these pairwise item similarities, their recommendation module can recommend the top-N items to users.

Building the similarity matrix is the most time-consuming task in collaborative filtering and the main cause of its performance bottlenecks. Therefore, Kim et al. [14] proposed ConSimMR, an efficient parallel similarity matrix construction algorithm based on MapReduce. It consists of three rounds of MapReduce: dividing items into disjoint groups, computing item–item similarity within groups, and computing group-to-group similarity. The last round uses each user’s rating list instead of each item’s rating list, which enables parallel similarity calculation and improves performance.

Liu et al. [15] used HiveQL, the query language of the data warehouse Hive (multi-table join queries and other statements), to calculate the user–item ratings, the item–item similarity matrix, and the table of recommended items for each user, thereby completing the critical tasks of the recommendation algorithm.

ItemCF algorithms implemented with the MapReduce framework require a high-level programming language such as Java to preprocess data, build scoring matrices, sort similarities, and implement other business logic. As a result, the program logic of these algorithms is mostly complicated and difficult to understand and implement. In some of these algorithms (such as Yan et al. [8], Ying et al. [12], Ghuli et al. [13], and Kim et al. [14]), MapReduce parallel computing is used only for steps such as building scoring matrices, building co-occurrence matrices, and matrix multiplication. More time-consuming processes, such as sorting similarities (recommendation scores) and obtaining the top-N items, are still performed sequentially, because these two processes are not easily implemented through MapReduce programming. Some researchers have also used Hive to implement distributed ItemCF. Hive provides HiveQL, a query language similar to SQL in relational databases. HiveQL can implement simple MapReduce statistics quickly without the need to develop specialized MapReduce applications [16]. In other words, parallelizing ItemCF with HiveQL is simple and convenient. However, implementing ItemCF with Hive involves a large number of multi-table join queries, and the queries must be translated into MapReduce programs; both factors limit its time performance.

3. Related Knowledge and Technologies

3.1. MapReduce Framework [29]

MapReduce is a core component framework of Hadoop. Applications developed with this framework can process large amounts of data (more than 1 TB) in parallel on large clusters (with thousands of nodes) in a reliable and fault-tolerant manner. They can also perform tasks such as big data processing, mining, and optimization.

The MapReduce model contains two important tasks, Map and Reduce. The Map task processes, in parallel, the small datasets generated by splitting a large dataset, and its core execution method is map(). The Reduce task merges the results computed on the small datasets, also in parallel, and its core execution method is reduce(). MapReduce abstracts the parallel computing process into the map() and reduce() methods. Programmers therefore only need to write these two methods. When required, they can also override other hooks such as setup(), or supply a combiner and a custom sort comparator. All other complex problems of parallel programs, such as distributed storage, job scheduling, load balancing, and fault tolerance, are left to the MapReduce framework.

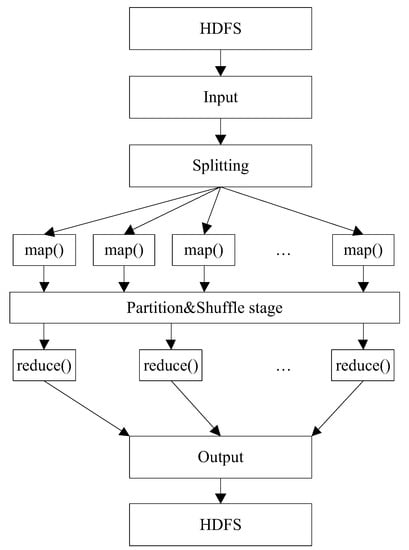

The design logic behind MapReduce is that we can obtain a large input dataset from HDFS, then divide it into multiple small datasets and perform parallel calculations on them, and in the end, aggregate the calculation results of the small datasets to obtain the final result and output it back to HDFS (as shown in Figure 1).

Figure 1.

MapReduce architecture.
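The following sketch makes the map, shuffle, and reduce phases concrete. It is a minimal single-machine simulation in plain Java, the language of the paper's implementation. It does not use the Hadoop API, and the class `MiniMapReduce` and its method names are hypothetical. The in-memory grouping only imitates what the framework does across a cluster. Word count serves as the example job.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;
import java.util.function.BiFunction;
import java.util.function.Function;

/** In-memory simulation of the MapReduce data flow: map, shuffle (group by key), reduce. */
class MiniMapReduce {
    static <I, K extends Comparable<K>, V, R> Map<K, R> run(
            List<I> inputs,
            Function<I, List<Map.Entry<K, V>>> mapper,
            BiFunction<K, List<V>, R> reducer) {
        // Map phase: every input record independently yields zero or more key-value pairs.
        // Shuffle phase: intermediate values are grouped by key (keys sorted, as Hadoop does).
        Map<K, List<V>> groups = new TreeMap<>();
        for (I input : inputs) {
            for (Map.Entry<K, V> kv : mapper.apply(input)) {
                groups.computeIfAbsent(kv.getKey(), k -> new ArrayList<>()).add(kv.getValue());
            }
        }
        // Reduce phase: one reducer call per distinct key.
        Map<K, R> output = new TreeMap<>();
        groups.forEach((k, vs) -> output.put(k, reducer.apply(k, vs)));
        return output;
    }

    /** Word count, the canonical MapReduce example. */
    static Map<String, Integer> wordCount(List<String> lines) {
        return MiniMapReduce.<String, String, Integer, Integer>run(
                lines,
                line -> {
                    List<Map.Entry<String, Integer>> pairs = new ArrayList<>();
                    for (String word : line.toLowerCase().split("\\s+")) {
                        if (!word.isEmpty()) pairs.add(Map.entry(word, 1));
                    }
                    return pairs;
                },
                (word, ones) -> ones.stream().mapToInt(Integer::intValue).sum());
    }
}
```

For example, `MiniMapReduce.wordCount(List.of("big data big", "data hive"))` yields `{big=2, data=2, hive=1}`. In Hadoop, the same map and reduce logic would run on many nodes, with the framework performing the grouping step.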

3.2. Collaborative Filtering [2]

As the earliest and most well-known recommendation algorithm, collaborative filtering has seen not only extensive study in the academic community but also a wide range of industrial applications. It can be divided into user-based collaborative filtering (UserCF) and item-based collaborative filtering (ItemCF).

3.2.1. UserCF

UserCF is the algorithm used by the earliest recommender systems, and its birth can be said to mark the birth of recommender systems. First proposed in 1992, it remains one of the most famous algorithms in the field of recommender systems.

The UserCF algorithm conforms to our intuition of “similar taste”: users with similar interests tend to like the same or similar items. When the target user needs personalized recommendations, a viable option is to find a group of users whose interests resemble the target user’s. The algorithm then recommends items that this group likes but that the target user has not yet encountered. This method is called “user-based collaborative filtering”.

The implementation of the UserCF algorithm contains two main steps:

- (1)

- Find a group of users with similar interests to the target user.

- (2)

- Find items that are preferred by the users in the group but not known to the target user, and recommend them to the target user.
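The two UserCF steps can be illustrated with a small in-memory Java sketch. It assumes implicit feedback, where each user is represented by the set of items they interacted with. It uses cosine similarity over these sets, which is one common choice rather than something specified in this paper. The class name and the parameters `k` (number of neighbours) and `n` (list length) are hypothetical.

```java
import java.util.ArrayList;
import java.util.Comparator;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.Set;

/** Minimal single-machine UserCF sketch on implicit feedback (user -> set of items). */
class UserCfSketch {
    /** Cosine similarity between two users' item sets (binary feedback). */
    static double similarity(Set<String> a, Set<String> b) {
        if (a.isEmpty() || b.isEmpty()) return 0.0;
        long common = a.stream().filter(b::contains).count();
        return common / Math.sqrt((double) a.size() * b.size());
    }

    /** Recommends up to n items liked by the k most similar users but unseen by the target. */
    static List<String> recommend(Map<String, Set<String>> userItems, String target, int k, int n) {
        Set<String> seen = userItems.getOrDefault(target, Set.of());
        // Step (1): rank all other users by similarity to the target user.
        List<String> others = new ArrayList<>(userItems.keySet());
        others.remove(target);
        others.sort(Comparator.comparingDouble((String v) -> -similarity(seen, userItems.get(v)))
                .thenComparing(Comparator.naturalOrder()));
        // Step (2): score unseen items by summing the similarities of the neighbours who have them.
        Map<String, Double> scores = new HashMap<>();
        for (String v : others.subList(0, Math.min(k, others.size()))) {
            double w = similarity(seen, userItems.get(v));
            if (w <= 0) continue;
            for (String item : userItems.get(v)) {
                if (!seen.contains(item)) scores.merge(item, w, Double::sum);
            }
        }
        List<String> ranked = new ArrayList<>(scores.keySet());
        ranked.sort(Comparator.comparingDouble((String i) -> -scores.get(i))
                .thenComparing(Comparator.naturalOrder()));
        return ranked.subList(0, Math.min(n, ranked.size()));
    }
}
```

Suppose u1 has {A, B}, u2 has {A, B, C}, and u3 has {D}. Then u2 is u1's only similar neighbour, so C is the single recommendation for u1.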

3.2.2. ItemCF

ItemCF is currently the most widely used algorithm in the field, including serving as the foundation algorithm for recommender systems of major companies such as Amazon and Netflix.

The purpose of the ItemCF algorithm is to recommend items that are similar to those the user previously preferred. In this algorithm, item similarity is calculated mainly from user behavior records rather than from the content attributes of the items. The algorithm assumes that items A and B are highly similar when most users who like item A also like item B. For example, the algorithm will recommend Machine Learning in Action to a user who has purchased Introduction to Data Mining. The reason is that most users who have previously purchased Introduction to Data Mining have also purchased Machine Learning in Action.

Similar to UserCF, the implementation of ItemCF also contains two main steps:

- (1)

- Calculate the similarity between items.

- (2)

- Generate a recommendation list for the user based on the similarity of items and the user’s historical behavior.
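The two ItemCF steps can likewise be sketched in plain Java on a single machine. The sketch assumes implicit feedback and normalizes co-occurrence counts with cosine similarity, w(i, j) = |N(i) ∩ N(j)| / sqrt(|N(i)| · |N(j)|), where N(i) is the set of users who interacted with item i. This weighting is a common textbook choice and an assumption here; HiMRItemCF, described next, works directly with co-occurrence counts and preference scores. The class name and example data are hypothetical, and the data mirrors the book example above.

```java
import java.util.ArrayList;
import java.util.Comparator;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.Set;

/** Minimal single-machine ItemCF sketch on implicit feedback (user -> set of items). */
class ItemCfSketch {
    /** Step (1): item-item cosine similarity computed from co-occurrence counts. */
    static Map<String, Map<String, Double>> similarities(Map<String, Set<String>> userItems) {
        Map<String, Integer> popularity = new HashMap<>();          // number of users per item
        Map<String, Map<String, Integer>> cooc = new HashMap<>();   // users shared by items i and j
        for (Set<String> items : userItems.values()) {
            for (String i : items) {
                popularity.merge(i, 1, Integer::sum);
                for (String j : items) {
                    if (!i.equals(j)) {
                        cooc.computeIfAbsent(i, x -> new HashMap<>()).merge(j, 1, Integer::sum);
                    }
                }
            }
        }
        Map<String, Map<String, Double>> sim = new HashMap<>();
        cooc.forEach((i, row) -> row.forEach((j, c) ->
                sim.computeIfAbsent(i, x -> new HashMap<>())
                   .put(j, c / Math.sqrt((double) popularity.get(i) * popularity.get(j)))));
        return sim;
    }

    /** Step (2): score unseen items by summing their similarity to the user's items. */
    static List<String> recommend(Map<String, Set<String>> userItems, String user, int n) {
        Map<String, Map<String, Double>> sim = similarities(userItems);
        Set<String> seen = userItems.getOrDefault(user, Set.of());
        Map<String, Double> scores = new HashMap<>();
        for (String i : seen) {
            sim.getOrDefault(i, Map.of()).forEach((j, w) -> {
                if (!seen.contains(j)) scores.merge(j, w, Double::sum);
            });
        }
        List<String> ranked = new ArrayList<>(scores.keySet());
        ranked.sort(Comparator.comparingDouble((String j) -> -scores.get(j))
                .thenComparing(Comparator.naturalOrder()));
        return ranked.subList(0, Math.min(n, ranked.size()));
    }
}
```

Consider four users who all bought DataMining, where two of them also bought MLInAction and one also bought Stats. For a user who bought only DataMining, the sketch ranks MLInAction (similarity 2/sqrt(8), about 0.71) ahead of Stats (0.5).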

4. The Basic Process of HiMRItemCF Algorithm

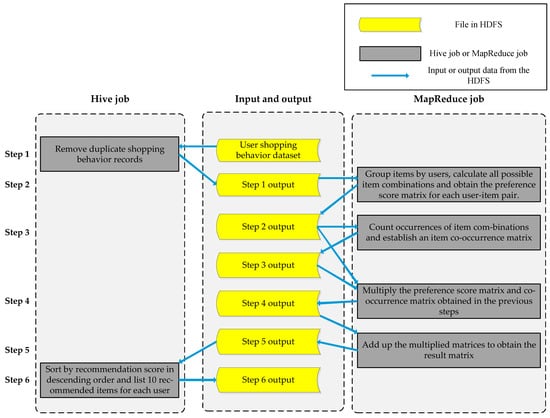

Based on the ItemCF algorithm implemented with MapReduce in [30], this paper proposes a MapReduce + Hive-based ItemCF recommendation algorithm called HiMRItemCF. The algorithm is divided into six steps (two Hive jobs and four MapReduce jobs):
- Step 1 uses Hive to remove duplicate shopping records.
- Step 2 uses MapReduce to group the output of step 1 by user and compute each user’s preference score for each item, producing the user preference score matrix.
- Step 3 uses MapReduce to count the occurrences of item combinations in the output of step 2 and build the item co-occurrence matrix.
- Step 4 uses MapReduce to multiply the preference score matrix from step 2 by the co-occurrence matrix from step 3.
- Step 5 uses MapReduce to sum the products obtained in step 4 into the result matrix.
- Step 6 uses Hive to sort the output of step 5 in descending order of recommendation score and list 10 recommended items for each user.

The basic process flow of these steps is shown in Figure 2.

Figure 2.

Basic process of HiMRItemCF algorithm.
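Steps 4 and 5 together compute, for each user u and item j, the score r(u, j) = Σ_i p(u, i) · c(i, j). Here p is the preference score from step 2 and c is the co-occurrence count from step 3. The single-machine Java sketch below shows only this arithmetic; the nested-map representation and the class name are assumptions for illustration. The sketch does not distribute the work, and it does not filter out items the user has already interacted with.

```java
import java.util.Map;
import java.util.TreeMap;

/** Single-machine sketch of steps 4 and 5: multiply preference by co-occurrence, then sum. */
class ScoreAggregationSketch {
    /**
     * @param prefs user -> (item i -> preference score), i.e. the output of step 2
     * @param cooc  item i -> (item j -> co-occurrence count), i.e. the output of step 3
     * @return user -> (item j -> recommendation score)
     */
    static Map<String, Map<String, Double>> scores(Map<String, Map<String, Double>> prefs,
                                                   Map<String, Map<String, Integer>> cooc) {
        Map<String, Map<String, Double>> result = new TreeMap<>();
        prefs.forEach((user, row) -> row.forEach((i, p) ->
                // Step 4: each (user, i, j) triple yields one partial product p(u, i) * c(i, j).
                cooc.getOrDefault(i, Map.of()).forEach((j, c) ->
                        // Step 5: partial products for the same (user, j) pair are summed.
                        result.computeIfAbsent(user, u -> new TreeMap<>())
                              .merge(j, p * c, Double::sum))));
        return result;
    }
}
```

As an example, take p(u1, A) = 2 and p(u1, B) = 1, with co-occurrence rows A → {A: 2, B: 1, C: 1} and B → {A: 1, B: 1}. The scores for u1 are then A = 5, B = 3, and C = 2.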

The pseudocode of the HiMRItemCF algorithm is described as Algorithm 1.

| Algorithm 1: The HiMRItemCF algorithm |
| --- |
| Input: user behavior data |
| Output: recommendation results |
| HiMRItemCF() |
| 1: Initialize the Configuration class object config |
| 2: Set the input path for step 1 and add it to paths |
| 3: Set the output path for step 1 and add it to paths |
| 4: Set the input path for step 2 as the output path of step 1 and add it to paths |
| 5: Set the output path for step 2 and add it to paths |
| 6: Set the input path for step 3 as the output path of step 2 and add it to paths |
| 7: Set the output path for step 3 and add it to paths |
| 8: Set the input path for step 4 as the output paths of step 2 and step 3, and add it to paths |
| 9: Set the output path for step 4 and add it to paths |
| 10: Set the input path for step 5 as the output path of step 4 and add it to paths |
| 11: Set the output path for step 5 and add it to paths |
| 12: Set the input path for step 6 as the output path of step 5 and add it to paths |
| 13: Set the output path for step 6 and add it to paths |
| 14: Step1_Hive.RUN(paths) |
| 15: Step2_MapReduce.RUN(config, paths) |
| 16: Step3_MapReduce.RUN(config, paths) |
| 17: Step4_MapReduce.RUN(config, paths) |
| 18: Step5_MapReduce.RUN(config, paths) |
| 19: Step6_Hive.RUN(paths) |
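The path bookkeeping in lines 2 to 13 of Algorithm 1 can be sketched in plain Java as follows. The base directory and directory names are hypothetical, because the paper does not give its HDFS layout. The sketch shows only that each step's input is an earlier step's output, and that step 4 reads the outputs of both steps 2 and 3.

```java
import java.util.ArrayList;
import java.util.List;

/** Sketch of Algorithm 1's path wiring: each step reads the output directory of earlier steps. */
class PipelinePaths {
    /** Returns one entry per step: {input paths..., output path}. Directory names are hypothetical. */
    static List<String[]> build(String base) {
        String[] out = new String[7];                 // out[s] = output directory of step s (1..6)
        for (int s = 1; s <= 6; s++) out[s] = base + "/step" + s + "_out";
        List<String[]> paths = new ArrayList<>();
        paths.add(new String[] {base + "/raw_behavior", out[1]});  // step 1 (Hive): deduplication
        paths.add(new String[] {out[1], out[2]});                  // step 2 (MapReduce job 1)
        paths.add(new String[] {out[2], out[3]});                  // step 3 (MapReduce job 2)
        paths.add(new String[] {out[2], out[3], out[4]});          // step 4 (MapReduce job 3) reads steps 2 and 3
        paths.add(new String[] {out[4], out[5]});                  // step 5 (MapReduce job 4)
        paths.add(new String[] {out[5], out[6]});                  // step 6 (Hive): sort, top 10
        return paths;
    }
}
```

Keeping the output path as the last element of each entry lets every job driver read its inputs and output the same way, whichever framework runs the step.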

5. Hive Jobs

The HiMRItemCF algorithm consists mainly of two Hive jobs and four MapReduce jobs. The basic ideas and pseudocode of the two Hive jobs are described as follows.

5.1. The 1st Hive Job

5.1.1. Basic Idea

The first Hive job is to remove duplicate user behavior records. Its basic idea is as follows:

Create a Hive database (if none exists) and an external table named “user_behavior_table” (if none exists), then use the DISTINCT clause of HiveQL to remove duplicates and save the results.

5.1.2. Pseudocode Description

The RUN() function for the first Hive task is defined in the Step1_Hive class; its pseudocode is given in Algorithm 2.

| Algorithm 2: The RUN() function for the first Hive task |
| --- |
| Input: input and output paths, user behavior data |
| Output: deduplicated user behavior data |
| RUN(paths) |
| 1: Get the input and output paths for step 1 from paths |
| 2: Execute a HiveQL statement to create a Hive database (if none exists) |
| 3: Execute a HiveQL statement to create the external table “user_behavior_table” (if none exists) in the database, specifying its HDFS location as the input path for step 1 |
| 4: Use the DISTINCT clause in HiveQL to remove duplicate rows from the table |
| 5: Use the INSERT OVERWRITE DIRECTORY clause in HiveQL to save the deduplicated results to the output path for step 1 |
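The HiveQL behind Algorithm 2 might look like the statements assembled below, shown alongside an in-memory equivalent of SELECT DISTINCT. This is a sketch under stated assumptions. The database name `himr_db`, the column names, and the comma delimiter are hypothetical; the column order (item, user, action) follows the split in Algorithm 4. The statements are only built as strings here and are not submitted to Hive.

```java
import java.util.ArrayList;
import java.util.LinkedHashSet;
import java.util.List;

/** Sketch of Hive job 1: HiveQL text plus an in-memory equivalent of SELECT DISTINCT. */
class Step1HiveSketch {
    /** Builds the HiveQL statements; database name, column names and delimiter are hypothetical. */
    static List<String> hiveQl(String inputPath, String outputPath) {
        return List.of(
            "CREATE DATABASE IF NOT EXISTS himr_db",
            // External table over the raw data: dropping it later leaves the HDFS files in place.
            "CREATE EXTERNAL TABLE IF NOT EXISTS himr_db.user_behavior_table ("
                + "item_id STRING, user_id STRING, action STRING) "
                + "ROW FORMAT DELIMITED FIELDS TERMINATED BY ',' "
                + "LOCATION '" + inputPath + "'",
            // Write the deduplicated rows as comma-separated text for MapReduce job 1.
            "INSERT OVERWRITE DIRECTORY '" + outputPath + "' "
                + "ROW FORMAT DELIMITED FIELDS TERMINATED BY ',' "
                + "SELECT DISTINCT item_id, user_id, action FROM himr_db.user_behavior_table");
    }

    /** What SELECT DISTINCT computes, on one machine: drop exact duplicate records. */
    static List<String> distinct(List<String> records) {
        return new ArrayList<>(new LinkedHashSet<>(records));
    }
}
```

The ROW FORMAT clause on INSERT OVERWRITE DIRECTORY matters for the link to MapReduce. Without it, Hive separates fields with its default \001 (Ctrl-A) character, which would not match the comma split in Algorithm 4. Hive also does not guarantee the row order of a DISTINCT result, whereas the in-memory version keeps first-seen order.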

5.2. The 2nd Hive Job

5.2.1. Basic Idea

The second Hive task is to list ten recommended items for each user by sorting the items in descending order of the recommendation scores (that is, the total recommendation degree of each item calculated by MapReduce job 4 in Section 6.4). Its basic idea is as follows:

Create an external table “recommendation_score_table” in Hive (if none exists) over the output of step 5. Then, in a query, use the ROW_NUMBER() window function with PARTITION BY user ID and ORDER BY recommendation score in descending order to number each user’s items. Finally, select the top 10 rows for each user and save the results.

5.2.2. Pseudocode Description

The RUN() function for the second Hive task is defined in the Step6_Hive class; its pseudocode is given in Algorithm 3.

| Algorithm 3: The RUN() function for the second Hive task |
| --- |
| Input: input and output paths, the recommendation scores |
| Output: recommendation results |
| RUN(paths) |
| 1: Obtain the input and output paths for step 6 from paths |
| 2: Execute a HiveQL statement to create the external table “recommendation_score_table” in the database, specifying its HDFS location as the input path for step 6 |
| 3: Partition the rows of “recommendation_score_table” by user ID using the PARTITION BY clause, sort each partition by recommendation score in descending order using the ORDER BY clause, and number the rows with the window function ROW_NUMBER() |
| 4: Retrieve all rows whose row number is less than or equal to 10 |
| 5: Use the INSERT OVERWRITE DIRECTORY clause in HiveQL to save the retrieved rows to the output path of step 6 |
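Algorithm 3 corresponds to a window query with ROW_NUMBER(). The sketch below builds plausible HiveQL strings and mirrors the query with an in-memory top-N per user. The table schema (user_id, item_id, score), the database name, and the comma-delimited format of the step 5 output are assumptions, not details taken from the paper.

```java
import java.util.ArrayList;
import java.util.Comparator;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

/** Sketch of Hive job 2: a ROW_NUMBER() top-10 query and its in-memory equivalent. */
class Step6HiveSketch {
    /** Builds the HiveQL statements; schema, names and delimiter are hypothetical. */
    static List<String> hiveQl(String inputPath, String outputPath) {
        return List.of(
            "CREATE EXTERNAL TABLE IF NOT EXISTS himr_db.recommendation_score_table ("
                + "user_id STRING, item_id STRING, score DOUBLE) "
                + "ROW FORMAT DELIMITED FIELDS TERMINATED BY ',' "
                + "LOCATION '" + inputPath + "'",
            "INSERT OVERWRITE DIRECTORY '" + outputPath + "' "
                + "ROW FORMAT DELIMITED FIELDS TERMINATED BY ',' "
                + "SELECT user_id, item_id, score FROM ("
                + "SELECT user_id, item_id, score, "
                + "ROW_NUMBER() OVER (PARTITION BY user_id ORDER BY score DESC) AS rn "
                + "FROM himr_db.recommendation_score_table) ranked "
                + "WHERE rn <= 10");
    }

    /** In-memory equivalent: rows are {user, item, score}; keep the n best items per user. */
    static Map<String, List<String>> topN(List<String[]> rows, int n) {
        Map<String, List<String[]>> byUser = new TreeMap<>();          // PARTITION BY user_id
        for (String[] row : rows) byUser.computeIfAbsent(row[0], u -> new ArrayList<>()).add(row);
        Map<String, List<String>> result = new TreeMap<>();
        byUser.forEach((user, list) -> {
            list.sort(Comparator.comparingDouble((String[] r) -> -Double.parseDouble(r[2]))
                    .thenComparing(r -> r[1]));                       // ORDER BY score DESC
            List<String> items = new ArrayList<>();
            for (int k = 0; k < Math.min(n, list.size()); k++) items.add(list.get(k)[1]); // rn <= n
            result.put(user, items);
        });
        return result;
    }
}
```

In Hive, ROW_NUMBER() orders tied scores arbitrarily. The Java version breaks ties by item ID so that its output is deterministic.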

6. MapReduce Jobs

The other four steps of the HiMRItemCF algorithm are completed by four MapReduce jobs. The basic idea, input and output formats and pseudocode description of the algorithms for the four MapReduce jobs are discussed separately below.

6.1. MapReduce Job 1

6.1.1. Basic Idea

MapReduce job 1 is to obtain the preference score matrix of all users. The basic idea is as follows:

The Map task extracts the item ID, user ID, and behavior from each input record and outputs each user’s behavior for each item. For each user, the Reduce task collects the items the user has interacted with and their accumulated preference scores.

6.1.2. Input and Output Formats

Based on the above flow, we design the input and output formats of our first MapReduce job as follows:

- (1)

- Map input and output formats

Map takes the output of the first Hive job as input. It emits the user ID as the output key and the combination of item ID and action (item:action) as the output value.

- (2)

- Reduce input and output formats

Reduce takes the aggregated output of Map as input, the user ID as the output key, and the list of combinations of item IDs and item preference scores as the output value.

6.1.3. Pseudocode Description

The pseudocode of the map() function of the first MapReduce is described as Algorithm 4.

| Algorithm 4: The map() function of the first MapReduce |

| Input: the output of the first Hive job |

| Output: the behavior of each user for each item |

| map(key, value, context) |

| 1: Convert the value separated by “,” into an array and assign it to temp |

| 2: item ← temp [0] |

| 3: user ← temp [1] |

| 4: action ← temp [2] |

| 5: output(user, item:action) |
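To trace Algorithm 4 in code, here is a plain-Java sketch of the same parsing logic with the Hadoop types stripped away. It assumes that each input value is a comma-separated record in the order item ID, user ID, action, as Algorithm 4 implies. The class name `Job1Map` is hypothetical. Inside an actual Hadoop `Mapper`, the returned pair would instead be written to the context as a `Text` key and value.

```java
import java.util.Map;

/** Plain-Java sketch of the map() logic of MapReduce job 1 (Algorithm 4); Hadoop types omitted. */
final class Job1Map {
    private Job1Map() {}

    /** Parses "itemID,userID,action" and returns the pair (userID, "itemID:action"). */
    static Map.Entry<String, String> map(String value) {
        String[] temp = value.split(",");
        String item = temp[0].trim();
        String user = temp[1].trim();
        String action = temp[2].trim();
        // Keying by user ID sends all of a user's records to the same reduce() call.
        return Map.entry(user, item + ":" + action);
    }
}
```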

The pseudocode of the reduce() function of the first MapReduce job is given in Algorithm 5.

| Algorithm 5: The reduce() function of the first MapReduce job |

| Input: the aggregated output of Map |

| Output: the preference score matrix of all users |

| reduce(key, values, context) |

| 1: for v:values |

| 2: Convert the v separated by ":" into an array and assign it to tmp |

| 3: item ← tmp[0] |

| 4: action ← tmp[1] |

| 5: if item_prefScore.get(item) ≠ null |

| 6: action ← item_prefScore.get(item) + action (add the item's accumulated preference score) |

| 7: else |

| 8: action ← 0 + action |

| 9: Add (item, action) to item_prefScore |

| 10: Traverse item_prefScore; for each element, join its key and value with “:”, append “,”, and append the resulting string to V |

| 11: output(key, V) |
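Steps 5–9 of Algorithm 5 are a per-key accumulation, which `Map.merge` expresses in a single call. The sketch below assumes that the action values are integer scores and that the map is created afresh for each reduce key, so that one user's scores do not leak into the next user's. `Job1Reduce` is a hypothetical name, and Hadoop's `Reducer` plumbing is omitted.

```java
import java.util.LinkedHashMap;
import java.util.Map;

/** Plain-Java sketch of the reduce() logic of MapReduce job 1 (Algorithm 5). */
final class Job1Reduce {
    private Job1Reduce() {}

    /** Sums "item:action" values for one user and serialises them as "item1:s1,item2:s2,". */
    static String reduce(Iterable<String> values) {
        // Fresh map per reduce key (user), in the order items are first seen.
        Map<String, Integer> itemPrefScore = new LinkedHashMap<>();
        for (String v : values) {
            String[] tmp = v.split(":");
            String item = tmp[0];
            int action = Integer.parseInt(tmp[1]);
            // merge() adds to the existing score, or inserts it if the item is new (steps 5-9).
            itemPrefScore.merge(item, action, Integer::sum);
        }
        StringBuilder out = new StringBuilder();
        for (Map.Entry<String, Integer> e : itemPrefScore.entrySet()) {
            out.append(e.getKey()).append(':').append(e.getValue()).append(',');
        }
        return out.toString();
    }
}
```

For example, the values `i1:1`, `i2:3`, `i1:4` produce `i1:5,i2:3,`.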

The pseudocode of the RUN function of the first MapReduce job is given in Algorithm 6.

| Algorithm 6: The RUN function of the first MapReduce job |

| Input: MapReduce configuration, input and output paths |

| Output: whether the MapReduce job is successful |

| RUN(config, paths) |

| 1: Build MapReduce job 1 object job |

| 2: Set the Mapper class of job |

| 3: Set the output type of key and value for job's Map |

| 4: Set the Reducer class of job |

| 5: Set the output type of key and value for job's Reduce |

| 6: Get the input path and output path of MapReduce job 1 from paths, and set them as the input path and output path of job |

| 7: Submit job to the Hadoop cluster, wait for it to finish, and return whether it completed successfully |

6.2. MapReduce Job 2

6.2.1. Basic Idea

MapReduce job 2 builds the co-occurrence (similarity) matrix for items. The basic idea is as follows:

The Map task constructs item pairs and emits key–value pairs in which the key is an item pair and the value is 1, recording one co-occurrence of that pair. The Reduce task sums these values to obtain the number of times each pair of items co-occurs.

6.2.2. Input and Output Formats

According to the above flow, the input and output formats of the second MapReduce job are designed as follows:

- (1)

- Map input and output formats

Map takes the output of the first MapReduce job as input and emits each pairwise combination (itemA:itemB) of the items operated on by the same user as the output key, with 1 as the output value.

- (2)

- Reduce input and output formats

Reduce takes the aggregated output of Map as input and emits the pairwise combination (itemA:itemB) as the output key and the number of times the combination occurs (i.e., the number of users who operated on both items) as the output value.

6.2.3. Pseudocode Description

The pseudocode of the map() function of MapReduce job 2 is described as Algorithm 7.

| Algorithm 7: The map() function of MapReduce job 2 |

| Input: the output of the first MapReduce job |

| Output: item pairs |

| map(key, value, context) |

| 1: Convert the value separated by tab into an array and assign it to temp |

| 2: Convert the string in temp[1] separated by "," into an array and assign it to items |

| 3: for i ← 0 to items.length − 1 |

| 4: itemA ← the item ID in items[i] (the part before “:”) |

| 5: for j ← 0 to items.length − 1 |

| 6: itemB ← the item ID in items[j] (the part before “:”) |

| 7: output(itemA:itemB, 1) |
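The nested loops of Algorithm 7 can be sketched in plain Java as follows. The sketch assumes the tab-separated line format produced by MapReduce job 1 (the user ID, then `item:score` entries each followed by a comma). It returns the emitted keys instead of writing them to a Hadoop context. `Job2Map` is a hypothetical name. Because both loops run over all items, a user with n items produces n² keys, including self-pairs such as `i1:i1`, exactly as the pseudocode is written.

```java
import java.util.ArrayList;
import java.util.List;

/** Plain-Java sketch of the map() logic of MapReduce job 2 (Algorithm 7). */
final class Job2Map {
    private Job2Map() {}

    /** For "userID\titem1:s1,item2:s2,", returns every key "itemA:itemB" that map() emits with value 1. */
    static List<String> pairs(String value) {
        String[] temp = value.split("\t");
        // split(",") drops the trailing empty string left by the final comma.
        String[] items = temp[1].split(",");
        List<String> keys = new ArrayList<>();
        for (int i = 0; i < items.length; i++) {
            String itemA = items[i].split(":")[0];
            for (int j = 0; j < items.length; j++) {
                String itemB = items[j].split(":")[0];
                keys.add(itemA + ":" + itemB);
            }
        }
        return keys;
    }
}
```

For the line `u1\ti1:5,i2:3,` the sketch returns `i1:i1`, `i1:i2`, `i2:i1`, and `i2:i2`.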

The pseudocode of the reduce() function of MapReduce job 2 is given in Algorithm 8.

| Algorithm 8: The reduce() function of MapReduce job 2 |

| Input: the aggregated output of Map |

| Output: the co-occurrence (similarity) matrix for items |

| reduce(key, values, context) |

| 1: sum ← 0 |

| 2: for v:values |

| 3: sum ← sum + 1 |

| 4: output(key, sum) |
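To show how the shuffle and Algorithm 8 together yield co-occurrence counts, the sketch below groups the map output keys of several users and sums the values for each key. It is an in-memory stand-in for Hadoop's shuffle, not the paper's code. `Job2Reduce` is a hypothetical name. It adds each value v rather than a constant 1. The two are equivalent here because every value is 1, but adding v would also remain correct if a combiner were introduced, which the paper does not mention.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

/** In-memory sketch of MapReduce job 2's shuffle and reduce() (Algorithm 8). */
final class Job2Reduce {
    private Job2Reduce() {}

    /** reduce(): sums the values for one "itemA:itemB" key. */
    static int reduce(Iterable<Integer> values) {
        int sum = 0;
        for (int v : values) {
            sum += v; // equals the value count, since every value emitted by map() is 1
        }
        return sum;
    }

    /** Groups each user's map output keys by key (the shuffle), then applies reduce(). */
    static Map<String, Integer> cooccurrence(List<List<String>> pairKeysPerUser) {
        Map<String, List<Integer>> grouped = new TreeMap<>();
        for (List<String> keys : pairKeysPerUser) {
            for (String key : keys) {
                grouped.computeIfAbsent(key, k -> new ArrayList<>()).add(1);
            }
        }
        Map<String, Integer> counts = new TreeMap<>();
        grouped.forEach((key, ones) -> counts.put(key, reduce(ones)));
        return counts;
    }
}
```

If user u1 operated on items i1 and i2 and user u2 only on i1, the count for `i1:i1` is 2 and the count for `i1:i2` is 1.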

The pseudocode of the RUN function of MapReduce job 2 is similar to that of MapReduce job 1 (Algorithm 6).

6.3. MapReduce Job 3

6.3.1. Basic Idea

MapReduce job 3 multiplies the obtained co-occurrence matrix and the preference score matrix. The basic idea is as follows:

The setup() function determines which file the current input comes from. In the Map task, a record from the co-occurrence matrix produces a key–value pair whose key is the first item ID of the pair and whose value combines the second item ID with the co-occurrence count. A record from the preference score matrix produces, for each item the user has operated on, a key–value pair whose key is the item ID and whose value combines the user ID with the user's preference score for the item. All values for the same item therefore reach the same Reduce call. That call multiplies the similarity between this item and each other item (i.e., the corresponding element of the co-occurrence matrix) by each user's preference score for this item, and it emits the products keyed by user ID.

6.3.2. Input and Output Formats

According to the above flow, the input and output formats of the third MapReduce job are designed as follows:

- (1)

- Map input and output formats

Map takes the output of the first or second MapReduce job as input. If the input is the output file of the first MapReduce job, the item ID is used as the output key, and the combination (B:userID,prefScore) of the user ID and the preference score is used as the output value. If the input is the output file of the second MapReduce job, the first item of the pair (itemIDA) is used as the output key, and the combination (A:itemIDB,num) of the second item and the co-occurrence count (similarity) is used as the output value.

- (2)

- Reduce input and output formats

Reduce takes the aggregated output of Map as input, the user ID as the output key, and the combination of the corresponding item ID and the user’s recommendation degree for the item as the output value.

6.3.3. Pseudocode Description

The pseudocode of the setup() function of MapReduce job 3 is described as Algorithm 9.

| Algorithm 9: The setup() function of MapReduce job 3 |

| Input: the MapReduce task context |

| Output: the data origin flag |

| setup(context) |

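| 1: Determine from the input split which file is being read, and set flag to “step2” for the preference score matrix (output of MapReduce job 1) or to “step3” for the co-occurrence matrix (output of MapReduce job 2) |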

The pseudocode of the map() function of MapReduce job 3 is given in Algorithm 10.

| Algorithm 10: The map() function of MapReduce job 3 |

| Input: the output of the first or second MapReduce job |

| Output: the user's preference score for an item or the similarity of two items |

| map(key, value, context) |

| 1: Convert the value separated by tab or comma into an array and assign it to temp |

| 2: if flag = “step2” |

| 3: userID ← temp[0] |

| 4: for i ← 1 to temp.length − 1 |

| 5: Convert the string in temp[i] separated by “:” into an array and assign it to item_prefScore |

| 6: itemID ← item_prefScore[0] |

| 7: prefScore ← item_prefScore[1] |

| 8: output(itemID, B:userID,prefScore) |

| 9: else if flag = "step3" |

| 10: Convert the string in temp[0] separated by “:” into an array and assign it to v |

| 11: itemIDA ← v[0] |

| 12: itemIDB ← v[1] |

| 13: num ← temp[1] |

| 14: output(itemIDA, A:itemIDB,num) |
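A plain-Java sketch of Algorithm 10 follows. The `flag` argument stands in for the value that setup() records in Algorithm 9. In a Hadoop job, one common way to obtain it is to cast `context.getInputSplit()` to `FileSplit` and inspect `getPath()`, but the paper does not say how it does so. The sketch assumes the line formats produced by MapReduce jobs 1 and 2 (`userID\titem:score,...` and `itemA:itemB\tnum`). `Job3Map` is a hypothetical name.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;

/** Plain-Java sketch of the map() logic of MapReduce job 3 (Algorithm 10). */
final class Job3Map {
    private Job3Map() {}

    /** flag is "step2" for a preference-matrix line and "step3" for a co-occurrence line. */
    static List<Map.Entry<String, String>> map(String flag, String value) {
        String[] temp = value.split("[\t,]"); // split on tab or comma
        List<Map.Entry<String, String>> out = new ArrayList<>();
        if (flag.equals("step2")) {
            // "userID\titem1:s1,item2:s2," -> one (itemID, "B:userID,prefScore") per item
            String userID = temp[0];
            for (int i = 1; i < temp.length; i++) {
                String[] itemPrefScore = temp[i].split(":");
                out.add(Map.entry(itemPrefScore[0], "B:" + userID + "," + itemPrefScore[1]));
            }
        } else if (flag.equals("step3")) {
            // "itemA:itemB\tnum" -> (itemA, "A:itemB,num")
            String[] v = temp[0].split(":");
            out.add(Map.entry(v[0], "A:" + v[1] + "," + temp[1]));
        }
        return out;
    }
}
```

The “A:” and “B:” tags let the reducer tell the two kinds of values apart, since both arrive under the same item key.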

The pseudocode of the reduce() function of MapReduce job 3 is given in Algorithm 11.

| Algorithm 11: The reduce() function of MapReduce job 3 |

| Input: the aggregated output of Map |

| Output: the product of preference score matrix and co-occurrence matrix |

| reduce(key, values, context) |

| 1: for v: values |

| 2: if v starts with “A:” |

| 3: Remove the “A:” prefix from v, split the remainder by “,” into an array, and assign it to kv |

| 4: Add (kv[0], kv[1]) to A |

| 5: else if v starts with “B:” |

| 6: Remove the “B:” prefix from v, split the remainder by “,” into an array, and assign it to kv |

| 7: Add (kv[0], kv[1]) to B |

| 8: Set itera as an iterator for A |

| 9: while itera.hasNext() |

| 10: ka ← itera.next() |

| 11: num ← A.get(ka) |

| 12: Set iterb as an iterator for B |

| 13: while iterb.hasNext() |

| 14: kb ← iterb.next() |

| 15: prefScore ← B.get(kb) |

| 16: result ← num * prefScore |

| 17: output(kb, ka + "," + result) |
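The core of Algorithm 11 is a per-item outer product of two small maps. The sketch below follows the corrected steps and strips the “A:” or “B:” tag before splitting. It assumes that numeric values parse as `double`. `Job3Reduce` is a hypothetical name. Because `HashMap` iteration order is unspecified, the order of the emitted pairs is also unspecified. This does not matter, since MapReduce job 4 groups the pairs by user again.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

/** Plain-Java sketch of the reduce() logic of MapReduce job 3 (Algorithm 11). */
final class Job3Reduce {
    private Job3Reduce() {}

    /**
     * Values for one item X: "A:itemB,num" (co-occurrence of X with itemB) and
     * "B:userID,prefScore" (the user's preference for X). Emits (userID, "itemB,num*prefScore").
     */
    static List<Map.Entry<String, String>> reduce(Iterable<String> values) {
        Map<String, Double> a = new HashMap<>(); // itemB  -> co-occurrence count with X
        Map<String, Double> b = new HashMap<>(); // userID -> preference score for X
        for (String v : values) {
            Map<String, Double> target = v.startsWith("A:") ? a : v.startsWith("B:") ? b : null;
            if (target == null) {
                continue; // ignore untagged values
            }
            // Drop the two-character tag before splitting, so kv[0] is a bare ID.
            String[] kv = v.substring(2).split(",");
            target.put(kv[0], Double.parseDouble(kv[1]));
        }
        List<Map.Entry<String, String>> out = new ArrayList<>();
        for (Map.Entry<String, Double> ka : a.entrySet()) {
            for (Map.Entry<String, Double> kb : b.entrySet()) {
                double result = ka.getValue() * kb.getValue();
                out.add(Map.entry(kb.getKey(), ka.getKey() + "," + result));
            }
        }
        return out;
    }
}
```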

The pseudocode of the RUN function of MapReduce job 3 is similar to that of MapReduce job 1 (Algorithm 6).

6.4. MapReduce Job 4

6.4.1. Basic Idea

MapReduce job 4 sums the partial products produced by MapReduce job 3 to obtain the final recommendation score matrix. The basic idea is as follows:

The Map task re-emits the output of MapReduce job 3 unchanged, keyed by user ID. For each user, the Reduce task sums the recommendation degrees of each item (i.e., the products computed in MapReduce job 3) to obtain the item's final total recommendation degree.

6.4.2. Input and Output Formats

According to the above flow, the input and output formats of the fourth MapReduce job are designed as follows:

- (1)

- Map input and output formats

Map takes the output of the third MapReduce job as input, the user ID as the output key, and the combination of the item ID and the recommendation degree as the output value.

- (2)

- Reduce input and output formats

Reduce takes the aggregated output of Map as input, the user ID as the output key, and the combination of the itemID and the item’s total recommendation degree (itemID, rcmdDeg) as the output value.

6.4.3. Pseudocode Description

The pseudocode of the map() function of MapReduce job 4 is given in Algorithm 12.

| Algorithm 12: The map() function of MapReduce job 4 |

| Input: the output of the third MapReduce job |

| Output: the same as the output of the third MapReduce job |

| map(key, value, context) |

| 1: Convert the value, which is separated by tab or comma, into an array and assign it to temp |

| 2: k ← temp[0] |

| 3: v ← temp[1] + “,” + temp[2] |

| 4: output(k, v) |

The pseudocode of the reduce() function of MapReduce job 4 is given in Algorithm 13.

| Algorithm 13: The reduce() function of MapReduce job 4 |

| Input: the aggregated output of Map |

| Output: all users' recommendation degrees for all items |

| reduce(key, values, context) |

| 1: for v: values |

| 2: Convert v, which is separated by “,”, into an array and assign it to tmp |

| 3: itemID ← tmp[0] |

| 4: rcmdDeg ← tmp[1] |

| 5: if map.containsKey(itemID) |

| 6: Add (itemID, map.get(itemID) + rcmdDeg) to the map |

| 7: else |

| 8: Add (itemID, rcmdDeg) to the map |

| 9: Set itera as an iterator over the keys of map |

| 10: while itera.hasNext() |

| 11: itemID ← itera.next() |

| 12: rcmdDeg ← map.get(itemID) |

| 13: output(key, itemID + “,” + rcmdDeg) |
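The containsKey/get/put branch of Algorithm 13 reduces to one `Map.merge` call in Java. The sketch assumes that the job 3 values for one user arrive as `itemID,partialScore` strings. `Job4Reduce` is a hypothetical name. Together with MapReduce job 3, it computes, for user u and item j, the sum over items x of cooc(x, j) · pref(u, x). This is entry j of the co-occurrence matrix multiplied by the user's preference vector, since the co-occurrence matrix is symmetric.

```java
import java.util.LinkedHashMap;
import java.util.Map;

/** Plain-Java sketch of the reduce() logic of MapReduce job 4 (Algorithm 13). */
final class Job4Reduce {
    private Job4Reduce() {}

    /** Sums the partial scores "itemID,partialScore" of one user into itemID -> total score. */
    static Map<String, Double> reduce(Iterable<String> values) {
        Map<String, Double> totals = new LinkedHashMap<>(); // fresh per user (reduce key)
        for (String v : values) {
            String[] tmp = v.split(",");
            // merge() adds to an existing total, or inserts the first partial score (steps 5-8).
            totals.merge(tmp[0], Double.parseDouble(tmp[1]), Double::sum);
        }
        return totals;
    }
}
```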

The pseudocode of the RUN function of MapReduce job 4 is similar to that of MapReduce job 1 (Algorithm 6).

7. Experiment and Result Analysis

To evaluate the complexity and time efficiency of the proposed approach, we used Java to implement both the proposed HiMRItemCF algorithm and the MapReduce-based ItemCF algorithm [30]. We ran both programs on three publicly available user shopping behavior datasets and analyzed the results in terms of program complexity and time efficiency.

7.1. Experimental Environment and Dataset

Using Java and the Eclipse 3.81 integrated development environment, we implemented the HiMRItemCF algorithm proposed in this paper and the MapReduce-based ItemCF algorithm [30] on the Hadoop 2.10.0 platform. The experimental environment was a cluster of six Tencent Cloud servers, one serving as the master node and the other five as worker nodes. Each server had the following configuration: Ubuntu Server 18.04.1 LTS (64-bit), 8 cores, 32 GB of memory, 5 Mbps of bandwidth, and a 100 GB hard disk.

Our experiments used three datasets. Two of them, the large-scale dataset “raw_user.csv” and the small dataset “small_user.csv”, come from the user shopping behavior archive “user.zip”. The third is the Taobao user behavior dataset UserBehavior.csv provided by Alibaba. Table 1 lists the number of samples and the number of fields of each dataset.

Table 1. Experimental datasets.

7.2. Evaluation Indicators

To evaluate the complexity of the program implementing the proposed algorithms, we used three indicators: lines of code, cyclomatic complexity, and Halstead complexity.

- (1)

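- Lines of code: the number of source code lines, excluding blank lines, comments, package imports, and declarations of members, types, and namespaces.

- (2)

- Cyclomatic complexity: McCabe's measure of the number of linearly independent paths through a program's control-flow graph. It grows with the number of decision points in the code.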

- (3)

- Halstead complexity: In this study, we considered five metrics, namely, the length of the program, the program vocabulary length, the predicted length, the information content (volume), and the predicted number of errors.

Please refer to [31,32] for detailed explanations of the above metrics. Program complexity was measured on the core algorithm code only.
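The Halstead measures listed above follow from four basic counts: the numbers of distinct operators (n1) and distinct operands (n2), and their total occurrences (N1 and N2). The sketch below uses the textbook definitions and estimates the predicted number of errors as B = V / 3000, which is one of several common variants. This choice is an assumption, although the totals reported for the two programs are consistent with it (2178.05 / 3000 ≈ 0.73 and 759.24 / 3000 ≈ 0.25). How operators and operands are counted depends on the measuring tool. The class `Halstead` is hypothetical.

```java
/** Halstead metrics computed from operator/operand counts (textbook definitions). */
final class Halstead {
    final int vocabulary;          // n = n1 + n2
    final int length;              // N = N1 + N2
    final double predictedLength;  // N^ = n1*log2(n1) + n2*log2(n2)
    final double volume;           // V = N * log2(n)
    final double predictedErrors;  // B = V / 3000 (assumed variant)

    /**
     * @param n1    number of distinct operators
     * @param n2    number of distinct operands
     * @param bigN1 total occurrences of operators
     * @param bigN2 total occurrences of operands
     */
    Halstead(int n1, int n2, int bigN1, int bigN2) {
        vocabulary = n1 + n2;
        length = bigN1 + bigN2;
        predictedLength = xLog2x(n1) + xLog2x(n2);
        volume = vocabulary == 0 ? 0.0 : length * log2(vocabulary);
        predictedErrors = volume / 3000.0;
    }

    private static double log2(double x) {
        return Math.log(x) / Math.log(2.0);
    }

    /** x * log2(x), taken as 0 for x = 0 so that an empty operator or operand set does not yield NaN. */
    private static double xLog2x(int x) {
        return x == 0 ? 0.0 : x * log2(x);
    }
}
```

For instance, 4 distinct operators and 4 distinct operands, each occurring 8 times in total, give n = 8, N = 16, V = 48, and B = 0.016.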

In terms of measuring algorithm time efficiency, we used two metrics: the speedup ratio and parallel computing efficiency.

- (1)

- The speedup ratio is the ratio of the time a single-processor system needs to complete a task to the time a parallel system needs to complete the same task. It measures the performance of parallel systems and the effectiveness of program parallelization. It is calculated as $S = T_s / T_p$, where $T_s$ is the time required to solve the problem in a single-machine environment and $T_p$ is the time required to solve the same problem with the parallel algorithm.

- (2)

- The parallel computing efficiency measures processor utilization and is calculated as $E = S / p$, where $S$ is the speedup ratio and $p$ is the number of nodes in the cluster.
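The two time-efficiency metrics are simple ratios. The sketch below computes them from measured running times. The class and method names are hypothetical, and the times in the usage note are made-up inputs, not measurements from this paper.

```java
/** Speedup ratio and parallel computing efficiency as defined in Section 7.2. */
final class ParallelMetrics {
    private ParallelMetrics() {}

    /** S = Ts / Tp: single-machine running time divided by parallel running time. */
    static double speedup(double ts, double tp) {
        if (ts <= 0 || tp <= 0) {
            throw new IllegalArgumentException("running times must be positive");
        }
        return ts / tp;
    }

    /** E = S / p, where p is the number of nodes in the cluster. */
    static double efficiency(double ts, double tp, int p) {
        if (p <= 0) {
            throw new IllegalArgumentException("p must be positive");
        }
        return speedup(ts, tp) / p;
    }
}
```

With hypothetical times of Ts = 120 s and Tp = 48 s on three nodes, S = 2.5 and E ≈ 0.83. As a cross-check, the HiMRItemCF speedup of 2.63 reported for three nodes on small_user.csv gives E = 2.63 / 3 ≈ 0.88, which matches the reported efficiency.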

7.3. Result Analysis

We wrote programs to implement the HiMRItemCF algorithm proposed in this paper and the MapReduce-based ItemCF algorithm [30].

The proposed HiMRItemCF algorithm and the MapReduce-based ItemCF algorithm [30] differ mainly in the first and sixth of the six steps, while their other modules (including the main module) contain essentially the same code. We therefore measured only the two modules corresponding to the first and sixth steps (referred to as Module 1 and Module 2, respectively). Table 2 lists the lines of code, cyclomatic complexity, and Halstead complexity of Module 1 and Module 2 for both programs.

Table 2. Complexity of both programs.

As shown in Table 2, the totals over the two modules of the MapReduce-based ItemCF algorithm [30] were 150 lines of code, a cyclomatic complexity of 8, a program vocabulary length of 154, a program length of 337, a predicted program length of 943.55, a program volume of 2178.05, and 0.73 predicted errors. The proposed algorithm, by contrast, still uses MapReduce for the complex computation logic but delegates some tasks to Hive, whose convenience and ease of use considerably reduce program complexity. The corresponding totals for the proposed algorithm were reduced to 87 lines of code, a cyclomatic complexity of 0, a program vocabulary length of 79, a program length of 143, a predicted program length of 389.17, a program volume of 759.24, and 0.25 predicted errors. This amounts to 42% fewer lines of code and a 65% smaller program volume. The reduction in complexity is particularly evident for the more complex Module 2.

To measure the time efficiency of the algorithms, we ran the MapReduce-based ItemCF algorithm [30] and the HiMRItemCF algorithm on the three datasets (small_user.csv, raw_user.csv, and UserBehavior.csv). By increasing the number of nodes in the cluster from 1 to 3 and then to 6, we obtained the running time, speedup ratio, and parallel computing efficiency of each algorithm on each dataset, as shown in Table 3, Table 4, and Table 5.

Table 3. Performance comparison of the two algorithms on small_user.csv.

Table 4. Performance comparison of the two algorithms on raw_user.csv.

Table 5. Performance comparison of the two algorithms on UserBehavior.csv.

According to Table 3, on the small_user.csv dataset with only one data node, the speedup ratios of the MapReduce-based ItemCF algorithm [30] and the HiMRItemCF algorithm were 0.81 and 0.85, respectively, and their parallel computing efficiencies were likewise 0.81 and 0.85. In other words, both were slower than the non-parallel ItemCF algorithm running on a single machine. This is because data transmission and communication between nodes in the distributed cluster incur additional time overhead. When the number of nodes increased to three, the speedup ratios of the MapReduce-based ItemCF algorithm [30] and the HiMRItemCF algorithm increased to 2.51 and 2.63, respectively, and their parallel computing efficiencies increased to 0.84 and 0.88. Both algorithms were now considerably faster than the non-parallel ItemCF algorithm on a single machine, and HiMRItemCF outperformed the MapReduce-based ItemCF algorithm [30]. This suggests that converting HiveQL statements into MapReduce jobs takes some time, but that the lower-level performance optimizations Hive applies to the generated jobs more than compensate for this overhead. As a result, the HiMRItemCF algorithm runs faster overall than the MapReduce-based ItemCF algorithm [30]. When the number of nodes increased to six, the speedup ratios of the two algorithms increased to 5.45 and 5.53, respectively, and their parallel computing efficiencies increased to 0.91 and 0.92. The proposed algorithm again performed better in this case.

Similarly, as shown in Table 4, on the raw_user.csv dataset with only one node, both the MapReduce-based ItemCF algorithm [30] and the proposed algorithm were slower than the non-parallel ItemCF algorithm on a single machine. With three and six nodes, however, the HiMRItemCF algorithm achieved a higher speedup ratio and parallel computing efficiency than the MapReduce-based ItemCF algorithm [30]. Its values were also higher than those it achieved on the smaller small_user.csv dataset with the same number of nodes. On the larger UserBehavior.csv dataset with three and six nodes, the speedup ratio/parallel computing efficiency of the HiMRItemCF algorithm reached 2.73/0.91 and 5.71/0.95, respectively. These values are clearly higher than those of the MapReduce-based ItemCF algorithm [30], and also higher than those of the HiMRItemCF algorithm with the same number of nodes on the small_user.csv and raw_user.csv datasets.

Based on the above analysis, we conclude that the proposed HiMRItemCF algorithm has both lower program complexity and higher time efficiency than the MapReduce-based ItemCF algorithm [30], and that its speedup ratio and parallel computing efficiency improve as the dataset grows.

8. Conclusions

Existing Hadoop-based ItemCF algorithms use either MapReduce or Hive alone, without fully leveraging both the flexibility and efficiency of MapReduce and the convenience and usability of Hive. In contrast, the HiMRItemCF algorithm proposed in this paper uses Hive jobs for deduplication, sorting, and other operations to which Hive is well suited, while leaving more complex calculations and operations such as matrix multiplication to MapReduce. This approach yields simpler code, a lower likelihood of errors, and better time efficiency at the same time.

This work has certain limitations that leave room for further extension and improvement, mainly in the following directions:

- (1)

- Improving experimental resources. Our experiments used a cluster with a relatively small number of nodes, all of which were purchased low-configuration cloud servers. In addition, owing to practical constraints such as limited time, the datasets we selected are not large. Future experiments should use larger-scale datasets and clusters composed of more high-configuration PCs.

- (2)

- Extending the proposed idea of combining MapReduce and Hive from the parallelization of the ItemCF algorithm to the parallelization of the UserCF algorithm and of other data mining algorithms such as k-nearest neighbor (KNN), k-means, support vector machine (SVM), and deep learning algorithms.

- (3)

- Currently, the idea of combining MapReduce and Hive proposed in this paper cannot be applied directly to similar problems that require multiple parallel tasks to be executed in sequence (i.e., it is not yet universally applicable). In the future, we will develop a parallel computing framework based on this idea, named HiMapReduce, that can be applied to all such problems. We will also encapsulate common Hive functions, such as file merging, deduplication, sorting, and averaging, in HiMapReduce to enhance the usability of the framework.

Author Contributions

Conceptualization, L.W.; methodology, L.W.; software, L.W. and Y.F.; validation, L.W. and Y.F.; investigation, L.W. and Y.F.; resources, Y.F.; data curation, L.W.; writing—original draft preparation, L.W. and Y.F.; writing—review and editing, L.W. and Y.F.; visualization, L.W. and Y.F.; supervision, L.W.; project administration, L.W.; funding acquisition, L.W. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the National Natural Science Foundation of China (NSFC), grant number 61932004; the China University Industry University Research Innovation Fund, grant number 2021LDA04003; the Open Research Fund Program of Key Laboratory of Industrial Internet and Big Data, China National Light Industry, Beijing Technology and Business University, grant number IIBD-2021-KF10; and the Open Fund Project of Shaanxi Key Laboratory of Intelligent Processing for Big Energy Data, grant number IPBED22.

Data Availability Statement

The user.zip dataset is available at https://pan-yz.chaoxing.com/external/m/file/323080072910897152 (accessed on 28 January 2023); the UserBehavior.csv dataset is available at https://pan-yz.chaoxing.com/external/m/file/664436719294349312 (accessed on 25 July 2022).

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Guo, F. Research on Key Technologies of P2P-Based Distributed Personalized Recommendation System. Master’s Thesis, Xiamen University, Xiamen, China, 2005.

2. Mayer-Schonberger, V.; Cukier, K. Big Data: A Revolution That Will Transform How We Live, Work and Think; Eamon Dolan/Houghton Mifflin Harcourt: Boston, MA, USA; New York, NY, USA, 2013.

3. Mei, H. Introduction to Big Data; Higher Education Press: Beijing, China, 2018.

4. Gan, W.; Lin, J.C.; Chao, H.; Zhan, J. Data mining in distributed environment: A survey. Wiley Interdiscip. Rev. Data Min. Knowl. Discov. 2017, 7, e1216.

5. Jo, Y.Y.; Jang, M.H.; Kim, S.W.; Han, K. Efficient processing of recommendation algorithms on a single-machine-based graph engine. J. Supercomput. 2020, 76, 7985–8002.

6. White, T. Hadoop: The Definitive Guide, 4th ed.; O’Reilly Media, Inc.: Sebastopol, CA, USA, 2015.

7. Lin, Z. Principle and Application of Big Data Technology, 2nd ed.; Posts & Telecom Press: Beijing, China, 2017.

8. Yan, C.; Ji, G. Design and Implementation of Item-Based Parallel Collaborative Filtering as a Recommendation Algorithm. J. Nanjing Norm. Univ. 2014, 37, 71–75.

9. Li, W.; Xu, S. Design and implementation of recommendation system for E-commerce on Hadoop. Comput. Eng. Des. 2014, 35, 130–136+143.

10. Jia, C.; Xu, B.; Sun, Y.; Zhang, F.; Chen, S. An ItemCF Recommendation Method Implemented Using Hadoop. CN107180063A, 19 September 2017.

11. Cheng, X.; Chen, J. Collaborative Filtering Algorithm Based on MapReduce and Item Classification. Comput. Eng. 2016, 42, 194–198.

12. Ying, Y.; Liu, Y.; Chen, C. Personalized Recommendation System Based on Cloud Computing Technology. Comput. Eng. Appl. 2015, 51, 111–117.

13. Ghuli, P.; Ghosh, A.; Shettar, R. A collaborative filtering recommendation engine in a distributed environment. In Proceedings of the 2014 International Conference on Contemporary Computing and Informatics (IC3I), Mysore, India, 27–29 November 2014; IEEE: Washington, DC, USA, 2015; pp. 568–574.

14. Kim, S.; Kim, H.; Min, J.K. An efficient parallel similarity matrix construction on MapReduce for collaborative filtering. J. Supercomput. 2019, 75, 123–141.

15. Liu, Y.; Sun, Y.; Han, T.; Tang, C. A Hive-Based Collaborative Filtering Recommendation Method. CN110532330A, 12 March 2019.

16. Lin, Z. Fundamentals of Big Data: Programming, Experimentation and Cases; Tsinghua University Press: Beijing, China, 2017.

17. Lei, Q. Research on Cold Start Problem in Personalized Recommender Systems. Master’s Thesis, Beijing Jiaotong University, Beijing, China, 2023.

18. Barman, S.D.; Hasan, M.; Roy, F. A Genre-Based Item-Item Collaborative Filtering: Facing the Cold-Start Problem. In Proceedings of the 2019 8th International Conference on Software and Computer Application, Penang, Malaysia, 19–21 February 2019; Association for Computing Machinery: New York, NY, USA, 2019; pp. 258–262.

19. Anwar, T.; Uma, V.; Srivastava, G. Rec-CFSVD++: Implementing Recommendation System Using Collaborative Filtering and Singular Value Decomposition (SVD)++. Int. J. Inf. Technol. Decis. Mak. 2021, 20, 1075–1093.

20. Xu, Q. Research on Personalized Recommendation System Based on Latent Factor Model. Master’s Thesis, Guangdong University of Technology, Guangzhou, China, 2019.

21. Sreepada, R.S.; Patra, B.K. Enhancing long tail item recommendation in collaborative filtering: An econophysics-inspired approach. Electron. Commer. Res. Appl. 2021, 49, 101089.

22. Zhang, Z.; Kudo, Y.; Murai, T.; Ren, Y. Enhancing Recommendation Accuracy of Item-Based Collaborative Filtering via Item-Variance Weighting. Appl. Sci. 2019, 9, 1928.

23. Ren, L.; Wang, W. An SVM-based collaborative filtering approach for Top-N web services recommendation. Future Gener. Comput. Syst. 2017, 78, 531–543.

24. Fu, M.; Qu, H.; Yi, Z.; Lu, L.; Liu, Y. A Novel Deep Learning-Based Collaborative Filtering Model for Recommendation System. IEEE Trans. Cybern. 2019, 49, 1084–1096.

25. Stitini, O.; Kaloun, S.; Bencharef, O. An Improved Recommender System Solution to Mitigate the Over-Specialization Problem Using Genetic Algorithms. Electronics 2022, 11, 242.

26. Stitini, O.; Kaloun, S.; Bencharef, O. The Use of a Genetic Algorithm to Alleviate the Limited Content Issue in a Content-Based Recommendation System. In Proceedings of the International Conference on Artificial Intelligence and Smart Environment, Errachidia, Morocco, 4–26 November 2022; Springer: Cham, Switzerland, 2022; pp. 776–782.

27. Tao, J.; Gan, J.; Wen, B. Collaborative Filtering Recommendation Algorithm based on Spark. Int. J. Perform. Eng. 2019, 15, 930–938.

28. Hazem, H.; Awad, A.; Hassan, A. A Distributed Real-Time Recommender System for Big Data Streams. arXiv 2022.

29. Zhang, W. Practicing Big Data Development Using Hadoop; Tsinghua University Press: Beijing, China, 2019.

30. CSDN. Implementation of Item-Based Collaborative Filtering (ItemCF) Algorithm Using MapReduce. Available online: https://blog.csdn.net/u011254180/article/details/80353543 (accessed on 17 May 2018).

31. Zhang, H.; Mou, Y. Introduction to Software Engineering, 6th ed.; Tsinghua University Press: Beijing, China, 2013.

32. Pressman, R.S. Software Engineering: A Practitioner’s Approach, 9th ed.; McGraw-Hill Companies: New York, NY, USA, 2019.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).