An Improved LSTNet Approach for State-of-Health Estimation of Automotive Lithium-Ion Battery

Abstract

1. Introduction

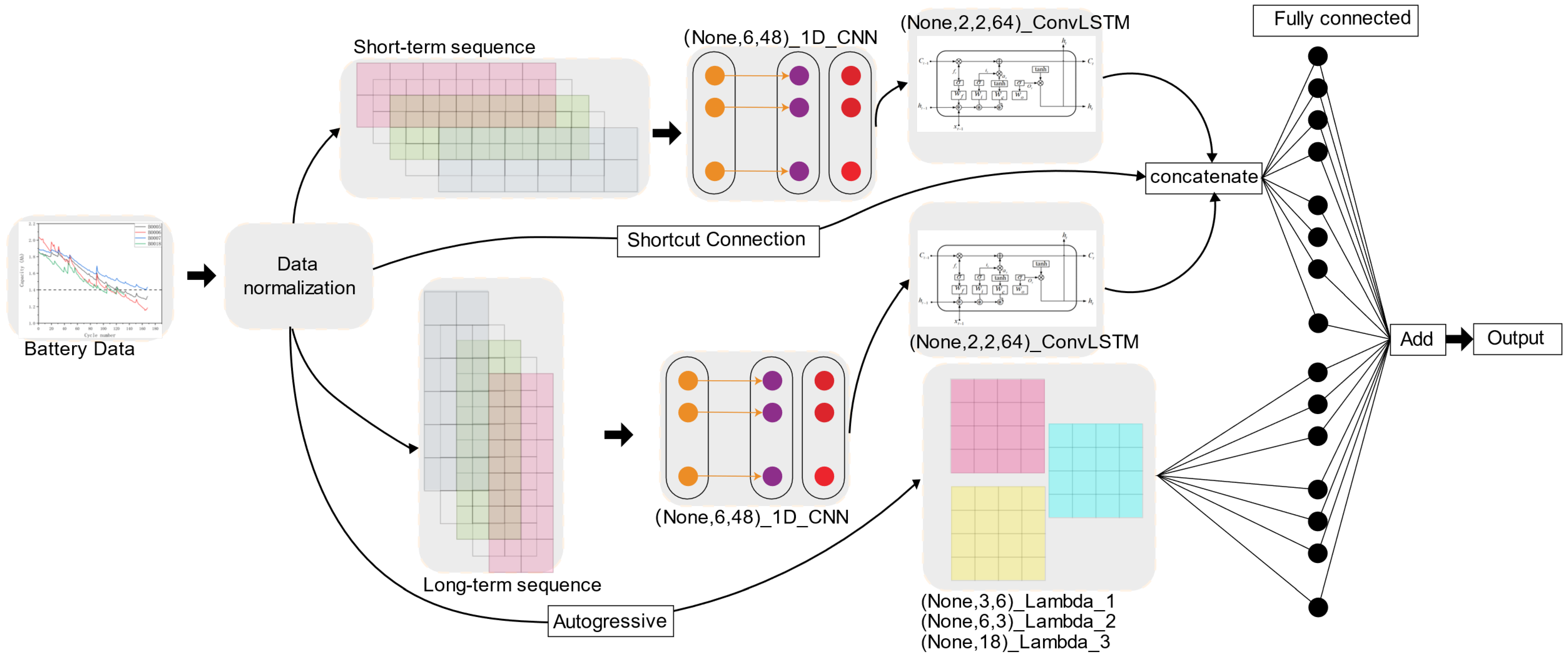

- This paper proposes an approach that directly handles battery capacity data by dividing the raw data into long-term and short-term sequences, thereby effectively learning the hidden features of the original data;

- Due to the complexity of the original data, ConvLSTM is introduced in this study to endow the model with spatial feature analysis capabilities. Shortcut connections are also incorporated to expedite model convergence, improving performance;

- A method for estimating the SOH of automotive LIBs is proposed based on an improved LSTNet. The experimental results demonstrate that the proposed method achieves higher accuracy without extensive preprocessing steps.
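The first contribution above, dividing raw capacity data into long-term and short-term sequences, can be pictured with a minimal sketch. The window lengths (`long_len`, `short_len`), the function name, and the synthetic capacity series are illustrative assumptions, not settings taken from this paper.

```python
import numpy as np

def make_long_short_windows(capacity, long_len=16, short_len=4):
    """Build (long window, short window, target) samples from a 1-D capacity series.

    long_len and short_len are hypothetical values chosen for illustration.
    """
    capacity = np.asarray(capacity, dtype=float)
    if short_len > long_len:
        raise ValueError("short_len must not exceed long_len")
    n_samples = len(capacity) - long_len
    if n_samples <= 0:
        raise ValueError("series is shorter than the long window")
    # Each long-term sequence covers long_len consecutive cycles.
    long_x = np.stack([capacity[i:i + long_len] for i in range(n_samples)])
    # The short-term sequence is the most recent tail of each long window.
    short_x = long_x[:, -short_len:]
    # The target is the capacity of the cycle right after each window.
    y = capacity[long_len:]
    return long_x, short_x, y

# Synthetic, linearly fading capacity (Ah), used only to exercise the function.
demo_capacity = np.linspace(2.0, 1.4, 50)
long_x, short_x, y = make_long_short_windows(demo_capacity)
print(long_x.shape, short_x.shape, y.shape)
```

In this sketch both views are cut from the same raw series, so no separate feature-extraction or decomposition step is needed before the model sees the data.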

2. Methods

2.1. 1D-CNN

2.2. ConvLSTM

2.3. Fully Connected Layer and AR Model
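
As a rough illustration of the idea named in this heading: in the original LSTNet design, the final prediction is the sum of a nonlinear part (the output of the fully connected layer) and a linear autoregressive (AR) part computed from the most recent observations. The sketch below assumes that structure; the function names, weights, and numbers are hypothetical and are not parameters reported in this paper.

```python
import numpy as np

def ar_component(recent_values, ar_weights, ar_bias=0.0):
    """Linear autoregressive estimate from the most recent observations."""
    recent_values = np.asarray(recent_values, dtype=float)
    ar_weights = np.asarray(ar_weights, dtype=float)
    window = recent_values[-len(ar_weights):]
    if len(window) != len(ar_weights):
        raise ValueError("need at least len(ar_weights) observations")
    return float(window @ ar_weights + ar_bias)

def final_prediction(dense_output, recent_values, ar_weights, ar_bias=0.0):
    """Sum of the nonlinear (dense layer) part and the linear AR part."""
    return float(dense_output) + ar_component(recent_values, ar_weights, ar_bias)

# Hypothetical numbers, chosen only to exercise the functions.
recent_capacity = [1.80, 1.79, 1.78]   # last three capacity values (Ah)
ar_weights = [0.2, 0.3, 0.5]           # hypothetical learned AR weights
dense_output = -0.01                   # hypothetical dense-layer output
prediction = final_prediction(dense_output, recent_capacity, ar_weights)
print(round(prediction, 4))
```

The AR term keeps the output sensitive to the scale of the recent inputs, which a purely nonlinear network can track poorly.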

2.4. Shortcut Connection
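
A shortcut (residual) connection, in its generic form, adds a block's input back to its output so that the block only has to learn a correction to the identity mapping. The following is a minimal NumPy sketch of that generic form; the `tanh` transformation, the shapes, and the names are assumptions for illustration and do not describe how the shortcut is wired in the proposed network.

```python
import numpy as np

def transform(x, weight, bias):
    """A stand-in for any learned layer: affine map followed by tanh."""
    return np.tanh(x @ weight + bias)

def with_shortcut(x, weight, bias):
    """Identity shortcut: the input bypasses the layer and is added back."""
    return transform(x, weight, bias) + x

rng = np.random.default_rng(0)
x = rng.normal(size=(2, 4))          # two samples, four features
zero_w = np.zeros((4, 4))            # a layer that has learned nothing yet
zero_b = np.zeros(4)

# With zero weights the transformation outputs 0, so the block passes x through.
out = with_shortcut(x, zero_w, zero_b)
print(np.allclose(out, x))
```

Because an untrained block already behaves like the identity, gradients reach earlier layers directly, which is the usual explanation for faster convergence.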

3. Results and Discussion

3.1. Experimental Setup

3.1.1. Dataset Introduction

- Data Reliability: The NASA LIB dataset, provided by the National Aeronautics and Space Administration, exhibits high reliability and authenticity. This dataset is carefully collected and recorded, encompassing battery operational data from various real-world applications and environmental conditions. It provides meaningful training and evaluation data for battery life prediction models;

- High Dimensionality and Richness: The NASA LIB dataset typically includes a wealth of sensor measurements, such as current, voltage, and temperature, as well as battery state information, such as charge status and capacity degradation. These high-dimensional and rich data provide comprehensive feature information that aids in developing accurate battery life prediction models;

- Academic Reference: The NASA LIB dataset is widely used in academic research and has become a benchmark test dataset for battery life prediction algorithms and models. Choosing this dataset ensures the comparability of research results and enables comparison and validation against the work of other researchers.

3.1.2. Experimental Data and Parameter Settings

- Selecting LSTM and GRU models to evaluate whether the proposed model outperforms commonly used LSTM models and their variants;

- Selecting a CNN-LSTM to verify whether the proposed model is superior to structurally similar network models;

- Selecting a WD-2DCNN and BLS-LSTM to evaluate whether the proposed model outperforms methods with preprocessing steps.

3.2. Model Evaluation Indicators
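
The result tables report RMSE, MAE, and MAPE. A minimal sketch of these three indicators under their standard definitions is given below. MAPE is computed here as a fraction rather than a percentage, which is an assumption based on the magnitude of the tabulated values; the sample arrays are made up for illustration.

```python
import numpy as np

def rmse(y_true, y_pred):
    """Root mean squared error."""
    y_true, y_pred = np.asarray(y_true, float), np.asarray(y_pred, float)
    return float(np.sqrt(np.mean((y_true - y_pred) ** 2)))

def mae(y_true, y_pred):
    """Mean absolute error."""
    y_true, y_pred = np.asarray(y_true, float), np.asarray(y_pred, float)
    return float(np.mean(np.abs(y_true - y_pred)))

def mape(y_true, y_pred):
    """Mean absolute percentage error, returned as a fraction (assumed convention)."""
    y_true, y_pred = np.asarray(y_true, float), np.asarray(y_pred, float)
    return float(np.mean(np.abs((y_true - y_pred) / y_true)))

# Made-up values, used only to exercise the functions.
y_true = [2.0, 1.0]
y_pred = [1.8, 1.1]
print(rmse(y_true, y_pred), mae(y_true, y_pred), mape(y_true, y_pred))
```

Lower values are better for all three indicators; RMSE penalizes large individual errors more heavily than MAE.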

3.3. Experimental Results and Comparative Analysis

3.3.1. Comparison with Traditional Models

3.3.2. Sensitivity Analysis

3.3.3. Comparison with Advanced Methods

3.4. Generalizability Verification

4. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Method | Hidden Layer Structure | Hidden Layer Settings | Dropout | Filter Size | Optimizer | Batch Size |
|---|---|---|---|---|---|---|
| LSTM | One LSTM layer and one dense layer | LSTM nodes: 100 and fully connected layer nodes: none, 1 | 0.5 | - | Adam | 8 |
| GRU | One GRU layer and one dense layer | GRU nodes: 100 and fully connected layer nodes: none, 1 | 0.5 | - | Adam | 8 |
| CNN-GRU | One 1D-CNN, one max-pooling, one GRU, and one fully connected layer | The number of filters is 48, GRU nodes: 100, and fully connected layer nodes: none, 1 | 0.5 | 3 | Adam | 8 |
| LSTNet | As shown in Figure 1 | The number of filters is 48, GRU nodes: 64, and fully connected layer nodes: none, 1 | 0.5 | 3 | Adam | 8 |

| Battery Number | Percentage of Training Set | Method | RMSE | MAE | MAPE |
|---|---|---|---|---|---|
| B0005 | 50% | LSTM | 0.01732 | 0.01592 | 0.01161 |
| B0005 | 50% | GRU | 0.02247 | 0.01888 | 0.01393 |
| B0005 | 50% | CNN-GRU | 0.01620 | 0.01298 | 0.00962 |
| B0005 | 50% | LSTNet | 0.00492 | 0.00313 | 0.00232 |
| B0005 | 40% | LSTM | 0.01246 | 0.00972 | 0.00715 |
| B0005 | 40% | GRU | 0.01078 | 0.00797 | 0.00586 |
| B0005 | 40% | CNN-GRU | 0.00341 | 0.00254 | 0.00182 |
| B0005 | 40% | LSTNet | 0.00563 | 0.00369 | 0.00261 |
| B0005 | 60% | LSTM | 0.00935 | 0.00775 | 0.00579 |
| B0005 | 60% | GRU | 0.00549 | 0.00425 | 0.00319 |
| B0005 | 60% | CNN-GRU | 0.00806 | 0.00773 | 0.00560 |
| B0005 | 60% | LSTNet | 0.00064 | 0.00051 | 0.00038 |
| B0006 | 50% | LSTM | 0.01435 | 0.01360 | 0.01022 |
| B0006 | 50% | GRU | 0.01079 | 0.01042 | 0.00783 |
| B0006 | 50% | CNN-GRU | 0.03268 | 0.03132 | 0.02389 |
| B0006 | 50% | LSTNet | 0.00370 | 0.00180 | 0.00146 |
| B0006 | 40% | LSTM | 0.02107 | 0.01885 | 0.01436 |
| B0006 | 40% | GRU | 0.01303 | 0.01097 | 0.00847 |
| B0006 | 40% | CNN-GRU | 0.04118 | 0.03821 | 0.02890 |
| B0006 | 40% | LSTNet | 0.00650 | 0.00580 | 0.00435 |
| B0006 | 60% | LSTM | 0.00902 | 0.00723 | 0.00566 |
| B0006 | 60% | GRU | 0.00927 | 0.00698 | 0.00563 |
| B0006 | 60% | CNN-GRU | 0.00853 | 0.00710 | 0.00549 |
| B0006 | 60% | LSTNet | 0.00193 | 0.00116 | 0.00090 |
| B0007 | 50% | LSTM | 0.02158 | 0.01806 | 0.01234 |
| B0007 | 50% | GRU | 0.02191 | 0.02015 | 0.01364 |
| B0007 | 50% | CNN-GRU | 0.01769 | 0.01572 | 0.01065 |
| B0007 | 50% | LSTNet | 0.00218 | 0.00085 | 0.00059 |
| B0007 | 40% | LSTM | 0.01479 | 0.01177 | 0.00800 |
| B0007 | 40% | GRU | 0.01746 | 0.01431 | 0.00972 |
| B0007 | 40% | CNN-GRU | 0.00597 | 0.00489 | 0.00327 |
| B0007 | 40% | LSTNet | 0.00261 | 0.00129 | 0.00086 |
| B0007 | 60% | LSTM | 0.00526 | 0.00491 | 0.00334 |
| B0007 | 60% | CNN-GRU | 0.00744 | 0.00625 | 0.00414 |
| B0007 | 60% | GRU | 0.00617 | 0.00503 | 0.00346 |
| B0007 | 60% | LSTNet | 0.00339 | 0.00127 | 0.00090 |
| B0018 | 50% | LSTM | 0.00324 | 0.00287 | 0.00202 |
| B0018 | 50% | GRU | 0.00341 | 0.00330 | 0.00233 |
| B0018 | 50% | CNN-GRU | 0.00505 | 0.00462 | 0.00324 |
| B0018 | 50% | LSTNet | 0.00059 | 0.00026 | 0.00018 |
| B0018 | 40% | LSTM | 0.00925 | 0.00883 | 0.00619 |
| B0018 | 40% | GRU | 0.00236 | 0.00195 | 0.00137 |
| B0018 | 40% | CNN-GRU | 0.00830 | 0.00783 | 0.00547 |
| B0018 | 40% | LSTNet | 0.00145 | 0.00052 | 0.00037 |
| B0018 | 60% | LSTM | 0.00191 | 0.00169 | 0.00121 |
| B0018 | 60% | GRU | 0.00239 | 0.00222 | 0.00158 |
| B0018 | 60% | CNN-GRU | 0.00280 | 0.00255 | 0.00181 |
| B0018 | 60% | LSTNet | 0.00602 | 0.00349 | 0.00255 |

| Method | Number of Neurons | Batch Size | Epochs | RMSE | MAE | MAPE |
|---|---|---|---|---|---|---|
| LSTNet | 8 | 4 | 20 | 0.00969 | 0.00649 | 0.00483 |
| LSTNet | 128 | 64 | 300 | 0.01064 | 0.00661 | 0.00496 |
| LSTNet | 8 | 4 | 300 | 0.00916 | 0.00534 | 0.00404 |
| LSTNet | 8 | 64 | 300 | 0.01136 | 0.00709 | 0.00534 |
| LSTNet | 8 | 64 | 20 | 0.01295 | 0.00814 | 0.00613 |
| LSTNet | 128 | 64 | 20 | 0.01418 | 0.00931 | 0.00698 |
| LSTNet | 128 | 4 | 20 | 0.00918 | 0.00690 | 0.00511 |
| LSTNet | 128 | 4 | 300 | 0.00927 | 0.00576 | 0.00341 |
| LSTNet | 64 | 8 | 300 | 0.00492 | 0.00313 | 0.00232 |
| CNN-GRU | 8 | 4 | 20 | 0.16497 | 0.12308 | 0.07404 |
| CNN-GRU | 128 | 64 | 300 | 0.02195 | 0.02162 | 0.01548 |
| CNN-GRU | 8 | 4 | 300 | 0.04300 | 0.03624 | 0.02667 |
| CNN-GRU | 8 | 64 | 300 | 0.01992 | 0.01585 | 0.01171 |
| CNN-GRU | 8 | 64 | 20 | 0.30365 | 0.29070 | 0.21020 |
| CNN-GRU | 128 | 64 | 20 | 0.16846 | 0.15989 | 0.11581 |
| CNN-GRU | 128 | 4 | 20 | 0.03641 | 0.03505 | 0.02453 |
| CNN-GRU | 128 | 4 | 300 | 0.06779 | 0.05828 | 0.04281 |
| CNN-GRU | 64 | 8 | 300 | 0.01620 | 0.01298 | 0.00962 |

| Battery Number | Percentage of Training Set | Method | RMSE | MAE |
|---|---|---|---|---|
| B0005 | 40% | WD-2DCNN | 0.0102 | 0.0065 |
| B0005 | 40% | BLS-LSTM | - | - |
| B0005 | 40% | LSTNet | 0.00563 | 0.00369 |
| B0005 | 50% | WD-2DCNN | 0.0103 | 0.0045 |
| B0005 | 50% | BLS-LSTM | 0.0067 | 0.0069 |
| B0005 | 50% | LSTNet | 0.00492 | 0.00313 |
| B0006 | 40% | WD-2DCNN | 0.0215 | 0.0154 |
| B0006 | 40% | BLS-LSTM | - | - |
| B0006 | 40% | LSTNet | 0.00650 | 0.00580 |
| B0006 | 50% | WD-2DCNN | 0.0106 | 0.0102 |
| B0006 | 50% | BLS-LSTM | 0.0055 | 0.0067 |
| B0006 | 50% | LSTNet | 0.00370 | 0.00180 |
| B0007 | 40% | WD-2DCNN | 0.0098 | 0.0045 |
| B0007 | 40% | BLS-LSTM | - | - |
| B0007 | 40% | LSTNet | 0.00261 | 0.00129 |
| B0007 | 50% | WD-2DCNN | 0.0089 | 0.0061 |
| B0007 | 50% | BLS-LSTM | - | - |
| B0007 | 50% | LSTNet | 0.00218 | 0.00085 |
| B0018 | 40% | WD-2DCNN | 0.0114 | 0.0063 |
| B0018 | 40% | BLS-LSTM | - | - |
| B0018 | 40% | LSTNet | 0.00145 | 0.00052 |
| B0018 | 50% | WD-2DCNN | 0.0072 | 0.0102 |
| B0018 | 50% | BLS-LSTM | - | - |
| B0018 | 50% | LSTNet | 0.00059 | 0.00026 |

| Battery Number | Percentage of Training Set | Method | RMSE | MAE | MAPE |
|---|---|---|---|---|---|
| CH5 | 40% | LSTNet | 0.004878 | 0.004483 | 0.004939 |
| CH5 | 50% | LSTNet | 0.004972 | 0.004751 | 0.005253 |
| CH10 | 40% | LSTNet | 0.011803 | 0.010935 | 0.010024 |
| CH10 | 50% | LSTNet | 0.011642 | 0.010217 | 0.011529 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Ping, F.; Miao, X.; Yu, H.; Xun, Z. An Improved LSTNet Approach for State-of-Health Estimation of Automotive Lithium-Ion Battery. Electronics 2023, 12, 2647. https://doi.org/10.3390/electronics12122647