Abstract

The multilinear system framework allows the system identification problem to be approached from different perspectives in a variety of applications, such as nonlinear acoustic echo cancellation, multi-party audio conferencing, and video conferencing, in which the system can be modeled through parallel or cascaded filters. In this paper, we introduce several structures, without and with memory, that are described from a bilinear perspective. Building on the structures with memory, we develop the multilinear recursive least-squares algorithm using the Kronecker product decomposition. We have performed a set of simulations in the context of echo cancellation, targeting both long impulse responses and the reverberation effect.

1. Introduction

In the field of system identification, many applications involve adaptive filtering algorithms [1,2]. One of them is the echo cancellation problem, which has raised many challenges over the years [3,4]. Based on the input-output relation, a dynamic system (i.e., the echo path) should be determined, considering various parameters and external factors that must be estimated. These dynamic systems are modeled linearly through an adaptive filter with a finite-impulse-response (FIR) structure [5,6]. The main performance bottlenecks, in terms of computational complexity, tracking, and convergence rate, arise when the length of the impulse response reaches hundreds or thousands of coefficients. The literature presents many approaches to improve the overall performance, also taking into account the fact that the echo paths are sparse in nature [7,8,9,10,11,12,13]. Recently, in our previous work [14], we introduced an approach that splits a long impulse response into several shorter impulse responses, aiming to reduce the computational complexity while maintaining the overall performance. Another challenge arises when the echo path produces multiple reflections, an effect called reverberation. From a mathematical point of view, this effect could be described (to some extent) by using the Kronecker product decomposition of the impulse response [15,16].

In this paper, we extend our study on cascaded adaptive filters, aiming to reduce the computational complexity when dealing with both long impulse responses and the reverberation effect. Our approach is based on multilinear structures and the Kronecker product decomposition. The main goal is to outline the features of this development and its potential.

The rest of the paper is organized as follows. Section 2 presents the background for different bilinear structures without memory, while Section 3 introduces bilinear structures with memory. In Section 4, the new development is combined with the recursive least-squares (RLS) algorithm, thus resulting in a practical solution based on adaptive filtering. We perform an experimental study in Section 5 and conclude the paper in Section 6.

2. Bilinear Structures without Memory

In order to introduce the bilinear structures with memory and the development based on the Kronecker product decomposition, let us start by presenting the bilinear structure without memory [14,17,18], defined as

where is the multiple input data matrix of size , with

and

is an input signal vector containing the most recent data at the discrete-time index n, while the superscript T is the transpose operator. The two impulse responses and have and coefficients, respectively. In other words, the input-output equation in (1) describes a system with inputs and a single output. In order to facilitate the graphical representation, let us rewrite (1) as

where

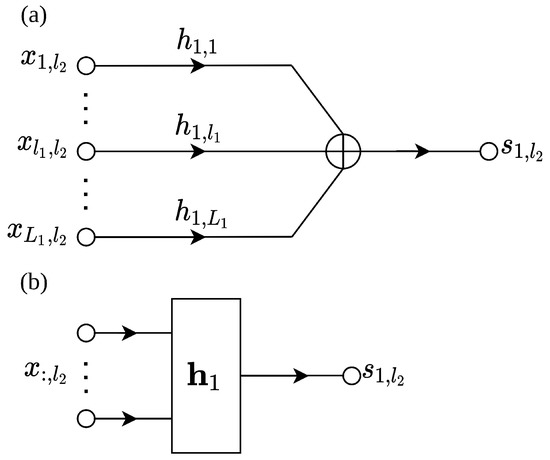

is the output of a memoryless weighted adder with inputs at the discrete-time index n. We can transpose (5) in a graphical representation as shown in Figure 1.

Figure 1.

(a) The structure of [see (5)] and (b) the symbolic representation of the memoryless weighted adder.

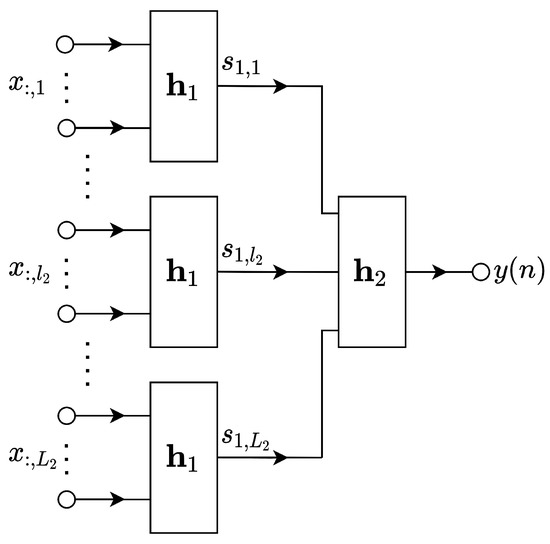

Based on (4) and Figure 1, we can introduce the graphical representation of the multiple-input single-output (MISO) system as shown in Figure 2. Overall, this structure consists of two levels of combiners.

Figure 2.

The two combiner levels structure (i.e., a MISO system).
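The two combiner levels can be illustrated numerically. The following is a minimal sketch, assuming that the bilinear relation has the form y = hᵀXg used in [17,18]; the names `h`, `g`, `X` and the sizes `L` and `M` are illustrative choices, not notation confirmed by the text above.

```python
import numpy as np

def bilinear_output(h, X, g):
    """Two-level combiner, assuming the bilinear form y = h^T X g."""
    # First level: one memoryless weighted adder per column of X,
    # all sharing the same weights h.
    first_level = h @ X            # shape (M,)
    # Second level: a single weighted adder with weights g.
    return first_level @ g

rng = np.random.default_rng(0)
L, M = 4, 3                        # hypothetical sizes
h = rng.standard_normal(L)
g = rng.standard_normal(M)
X = rng.standard_normal((L, M))    # one input vector of length L per column

y = bilinear_output(h, X, g)

# The same output written as a linear form in the Kronecker product g (x) h,
# applied to the column-wise vectorization of X.
y_kron = np.kron(g, h) @ X.flatten(order="F")
```

Under this assumption, both expressions give the same output, which is the property that links the bilinear structure to the Kronecker product used later in the paper.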

3. Bilinear Structures with Memory

By introducing a delay line, the structure described by inputs and a single output can be transformed into a single-input single-output (SISO) structure. This results in the following input signal vector:

Thus, the input data matrix has the following structure:

Hence,

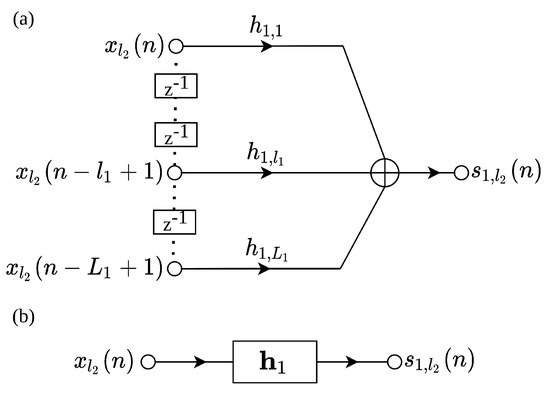

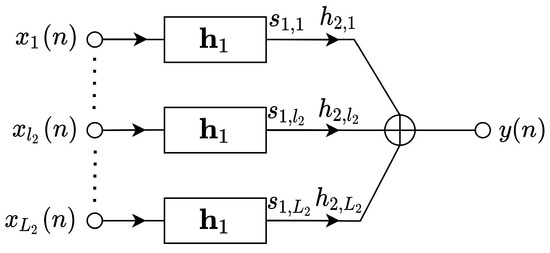

is a structure associated with a transversal filter, with the weighted function , having as input the vector . The graphical representation of the new structure is shown in Figure 3. Also, Figure 4 outlines the two-level combiner structure based on transversal filters.

Figure 3.

(a) The structure of with delay line and (b) its symbolic representation.

Figure 4.

The two combiner levels structure based on transversal filters.

A more efficient form in terms of correlation between the columns of the input matrix can be obtained if we consider successive data related to the columns, and is defined as

The input signal vector becomes a sequence of successive data applied to a FIR filter of length , so that

where denotes the vectorization operation and

with . In terms of the z-transform, we can write (11) as

Next, the output of the global system in the z-transform domain is

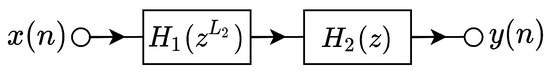

where

Equation (12) describes a SISO structure of two cascaded filters as shown in Figure 5. The first filter is obtained through interpolation with zeroes by factor of the function and its length is . Indeed, it has only non-zero coefficients, from the total of , (hence a certain degree of sparsity), according to its impulse response

where is the impulse response of and denotes the modulo operation. The second filter, , is of length . Consequently, the total length of the global filter is .

Figure 5.

SISO system in cascaded configuration.
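The equivalence between the cascade and a Kronecker-structured impulse response can be checked numerically. The sketch below is only an illustration under stated assumptions: the names `short`, `sparse_src`, `L1`, and `L2` are hypothetical, the ordering of the Kronecker product is one possible convention, and trailing zeros of the interpolated filter are dropped.

```python
import numpy as np

def upsample(coeffs, factor):
    """Insert factor-1 zeros between consecutive coefficients (no trailing zeros)."""
    out = np.zeros((len(coeffs) - 1) * factor + 1)
    out[::factor] = coeffs
    return out

rng = np.random.default_rng(1)
L1, L2 = 3, 4                          # hypothetical lengths
short = rng.standard_normal(L1)        # short filter, length L1
sparse_src = rng.standard_normal(L2)   # filter to be interpolated by the factor L1

interp = upsample(sparse_src, L1)      # sparse filter: only L2 non-zero coefficients
cascade = np.convolve(interp, short)   # impulse response of the two cascaded filters
global_ir = np.kron(sparse_src, short) # Kronecker-structured impulse response, length L1*L2

print(np.allclose(cascade, global_ir))
```

With zero-based indexes, the product `sparse_src[j] * short[i]` sits at position `j * L1 + i` of the global impulse response, which is why the interpolation factor equals the length of the short filter.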

Based on this configuration, let us consider the two vectors:

and the Kronecker product:

Having as coefficients the elements of this vector, the polynomial form is developed as

and can be factored in the form described in (14). While the position of an element for the indexes is , we have

4. Cascaded Multilinear RLS Algorithm Using Kronecker Product Decomposition

Based on the development from Section 3, we introduce the set of equations for the RLS algorithm in a multilinear manner, following the Kronecker product decomposition [14,19]. Our approach is developed within the system identification framework. In this context, the output of the MISO system is

where N denotes the multilinear degree and represents the multiplication operation by the dimension . The input data are described in an N-degree tensorial form as with the real-valued elements . The vector of length stores the impulse response of the ith cascaded filter, . Based on the () impulse responses of the MISO system, the rank-1 tensor of dimension is

where ∘ denotes the outer product. Usually, in the context of system identification, the desired signal results from the output signal corrupted by an additive noise, , which in our development is a zero-mean Gaussian signal, so that

Consequently, the output signal described by (18) results in

where

and

with and representing the frontal slices of and , respectively. The two new vectors and consist of elements. Also, the output of the system can be rewritten as

Then, the a priori error signal is computed as

where represents an estimate of the output signal. Following the least-squares (LS) error criterion, we can introduce the cost functions:

where , , represent the forgetting factors and

with denoting the identity matrix of size , . Following the minimization of the cost functions , ,…, , the update equations of the RLS algorithm in the multilinear approach result:

where the a priori errors are defined as

with

and

In fact, the equations from (26) represent a multilinear optimization strategy, where impulse responses are considered fixed during the optimization of the remaining one [20]. In other words, in each of the cost functions from Equation (24), for the optimization of , we consider that the other , with , are fixed.

The initialization of the RLS-based algorithms is influenced by the initialization of the matrix , which represents a recursive estimate of the inverse of the covariance matrix of the input signal [21]. This initialization is the factor that controls the initial convergence of the algorithm. Usually, this initialization is , where is the so-called regularization parameter and is the identity matrix of size . This regularization parameter depends on the length of the filter and the power of the input signal. In the case of the RLS-CKD algorithm, the matrices from (29) should be initialized in a similar manner. However, even if the initialization of the conventional RLS and RLS-CKD algorithms could be different from this point of view, the regularization parameters do not bias the overall performance, since their influence (for n large enough) is negligible due to the forgetting factors (i.e., for the conventional RLS and for the RLS-CKD algorithm), which are positive constants smaller than 1.

Finally, the cascaded multilinear RLS algorithm based on the Kronecker product decomposition (RLS-CKD) is defined by Equations (26)–(29). While the classical RLS algorithm involves matrices of size , the RLS-CKD algorithm solves the system identification problem by splitting the long impulse response into shorter impulse responses, so that it involves matrices of sizes , , where . The classical RLS algorithm has a computational complexity of . In the case of the RLS-CKD algorithm, the computational complexity results as a sum of . Following the presented approach, the computational complexity of the RLS-CKD is reduced to , with . The extra computational amount is due to the Kronecker product operations. At this point, we can observe a drastic reduction in computational complexity for the RLS-CKD algorithm as compared to that of the classical RLS algorithm, especially for long impulse responses (as in echo cancellation).
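To make the alternating optimization more concrete, the following is a minimal sketch of the bilinear case (N = 2), not the authors' implementation. It assumes a global impulse response of the form kron(h2, h1), a shared a priori error, inverse-covariance matrices initialized as scaled identities, and forgetting factors of the form 1 − 1/(K·Lᵢ). The function name `rls_ckd_bilinear`, the parameters `K` and `delta`, the initial vectors, and all sizes are hypothetical.

```python
import numpy as np

def rls_ckd_bilinear(x, d, L1, L2, K=10.0, delta=1e-2):
    """Alternating RLS sketch for an impulse response modeled as kron(h2, h1)."""
    # Non-zero initialization (assumed scaling): all-zeros would make both
    # reduced input vectors zero and stall the updates.
    h1 = np.zeros(L1)
    h1[0] = 1.0
    h2 = np.ones(L2) / L2
    P1 = np.eye(L1) / delta            # L1 x L1 instead of (L1*L2) x (L1*L2)
    P2 = np.eye(L2) / delta
    lam1 = 1.0 - 1.0 / (K * L1)        # assumed form of the forgetting factors
    lam2 = 1.0 - 1.0 / (K * L2)
    xbuf = np.zeros(L1 * L2)
    for n in range(len(x)):
        xbuf = np.concatenate(([x[n]], xbuf[:-1]))   # most recent sample first
        X = xbuf.reshape((L1, L2), order="F")        # column j holds block j of xbuf
        x1 = X @ h2                    # reduced input when h2 is kept fixed
        x2 = X.T @ h1                  # reduced input when h1 is kept fixed
        e = d[n] - h1 @ x1             # a priori error (identical for both filters)
        k1 = P1 @ x1 / (lam1 + x1 @ P1 @ x1)
        k2 = P2 @ x2 / (lam2 + x2 @ P2 @ x2)
        P1 = (P1 - np.outer(k1, x1 @ P1)) / lam1
        P2 = (P2 - np.outer(k2, x2 @ P2)) / lam2
        h1 = h1 + k1 * e
        h2 = h2 + k2 * e
    return h1, h2

rng = np.random.default_rng(0)
L1, L2, n_samples = 8, 4, 3000         # hypothetical sizes
h1_true = rng.standard_normal(L1)
h2_true = 0.5 ** np.arange(L2)         # hypothetical exponential decay
h_true = np.kron(h2_true, h1_true)

x = rng.standard_normal(n_samples)     # white Gaussian input
echo = np.convolve(x, h_true)[:n_samples]
d = echo + np.sqrt(np.var(echo) / 1000.0) * rng.standard_normal(n_samples)  # 30 dB ENR

h1_hat, h2_hat = rls_ckd_bilinear(x, d, L1, L2)
h_hat = np.kron(h2_hat, h1_hat)
mis_db = 20.0 * np.log10(np.linalg.norm(h_true - h_hat) / np.linalg.norm(h_true))
print(f"normalized misalignment: {mis_db:.1f} dB")
```

With these hypothetical sizes, the single matrix of the classical RLS algorithm would have 32 × 32 = 1024 elements, while the two matrices of the sketch have 8 × 8 + 4 × 4 = 80 elements in total, which illustrates where the reduction in complexity comes from (the extra Kronecker-related operations are not counted here).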

5. Simulation Results

In order to simulate the RLS-CKD algorithm, we have chosen two different multilinear degrees, (bilinear) and (trilinear), considering the echo cancellation framework. As input signals, we have used white Gaussian noise (i.e., a random process with standard normal distribution, zero mean, and unit variance), an AR(1) process produced by filtering a white Gaussian noise through a first-order system , and a speech sequence, at a sample rate of 8 kHz. For the purpose of these simulations, we have considered that the output of the target system (i.e., the echo signal) is corrupted by white Gaussian noise [i.e., ], considering an echo-to-noise ratio (ENR) of 20 dB when the input signal is a white Gaussian noise or an AR(1) process, and 30 dB when the input signal is a speech sequence. In order to measure the performance, we have used the normalized misalignment in dB, defined as

where denotes the Euclidean norm and

As initialization we have used (i.e., the first coefficient is equal to one, which is followed by zeros), while the other impulse responses , with are initialized as

where denotes a column vector with all its elements equal to one. The conventional all-zeros initialization specific to most of the adaptive filtering algorithms cannot be used in the case of tensor-based algorithms, due to the connection between the individual filters, as shown in Equation (25). In this case, the initialization () would stall the algorithm.
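The performance measure and the noise setup described above can be sketched as follows. This is an illustration under assumptions: the ENR is taken as the ratio between the echo power and the noise power, the misalignment is evaluated on the global (Kronecker-structured) impulse response, and the helper names `normalized_misalignment_db` and `add_noise_at_enr`, as well as all sizes, are hypothetical.

```python
import numpy as np

def normalized_misalignment_db(h_true, h_est):
    """20*log10(||h_true - h_est|| / ||h_true||), using Euclidean norms."""
    return 20.0 * np.log10(np.linalg.norm(h_true - h_est) / np.linalg.norm(h_true))

def add_noise_at_enr(echo, enr_db, rng):
    """Add white Gaussian noise so that var(echo)/var(noise) matches enr_db (assumed definition)."""
    noise_var = np.var(echo) / 10.0 ** (enr_db / 10.0)
    noise = np.sqrt(noise_var) * rng.standard_normal(echo.shape)
    return echo + noise, noise

rng = np.random.default_rng(2)
h1 = rng.standard_normal(8)            # hypothetical component impulse responses
h2 = rng.standard_normal(4)
h = np.kron(h2, h1)                    # global impulse response (assumed ordering)

# Scaling ambiguity: rescaled components give the same global impulse response,
# so the measure is evaluated on the Kronecker product, not on each component.
h_rescaled = np.kron(2.0 * h2, h1 / 2.0)

# An estimate with a 10% relative error corresponds to -20 dB.
mis_example = normalized_misalignment_db(h, 0.9 * h)

x = rng.standard_normal(50_000)        # white Gaussian input
echo = np.convolve(x, h)[: len(x)]
d, noise = add_noise_at_enr(echo, 20.0, rng)
enr_measured = 10.0 * np.log10(np.var(echo) / np.var(noise))
```

Because only the product of the individual filters is identifiable, comparing each estimated component with its true counterpart could be misleading, whereas the global impulse response is free of this ambiguity.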

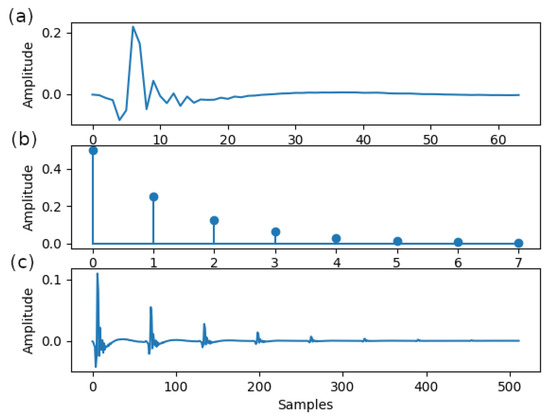

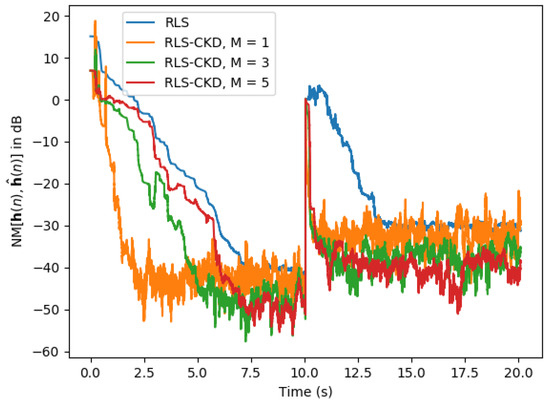

For the first set of simulations, which involves the bilinear approach, we have considered the impulse responses depicted in Figure 6. In the first plot, Figure 6a, the first impulse response from the G.168 Recommendation [22] is represented (i.e., a cluster of 64 coefficients). Next, Figure 6b depicts the second impulse response , evaluated as , with , where . The third impulse response is the target that must be determined and is obtained as the Kronecker product between the first two impulse responses, i.e., . This impulse response is similar to the echo produced by an acoustic environment characterized by a reverberation effect and its length is coefficients. Here, we consider the case of a linearly separable system, which is the benchmark of our approach, and show how it can be efficiently exploited in the framework of system identification problems. The impulse response from Figure 6c could correspond to a channel with echoes. This repetitive (but not periodic) structure could also result if a certain impulse response is followed by its reflections, e.g., as in wireless transmissions. The method allows temporal localization and magnitude estimation of the reflections, considering a temporal grid, without any restrictions of periodicity. However, the tensor-based adaptive algorithms can efficiently model the separable part of the system. The forgetting factor used for the RLS algorithm is computed as , with in the bilinear context and in the trilinear context, while for the RLS-CKD algorithm it is computed as , , with and .

Figure 6.

Impulse responses for the bilinear setup: (a) , first impulse response from the G.168 Recommendation [22]; (b) , exponentially generated impulse response; and (c) impulse response of the target system, .
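The separable structure of such a target can be sketched numerically. In the example below, `h1` is a random stand-in of 64 coefficients (not the actual G.168 data), while the length `L2 = 8`, the decay base 0.5, and the ordering kron(h2, h1) are hypothetical choices made only for illustration.

```python
import numpy as np

rng = np.random.default_rng(3)
L1, L2 = 64, 8                      # L1 matches the 64-coefficient cluster; L2 is hypothetical
h1 = rng.standard_normal(L1)        # random stand-in, NOT the G.168 impulse response
h2 = 0.5 ** np.arange(L2)           # hypothetical exponentially decaying impulse response

h = np.kron(h2, h1)                 # target impulse response of length L1*L2

# Reshaping the target column-wise gives the matrix h1 h2^T, which has rank 1
# for a linearly separable system.
H = h.reshape((L1, L2), order="F")
singular_values = np.linalg.svd(H, compute_uv=False)
print(singular_values[:3])          # only the first value is significantly non-zero

# Each block of L1 coefficients is a scaled copy of h1, i.e., a "reflection"
# placed on a temporal grid with step L1.
second_block = h[L1 : 2 * L1]
```

The scaled copies explain the repetitive (but not periodic) shape of the target impulse response: the blocks share the same shape while their magnitudes follow the second impulse response.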

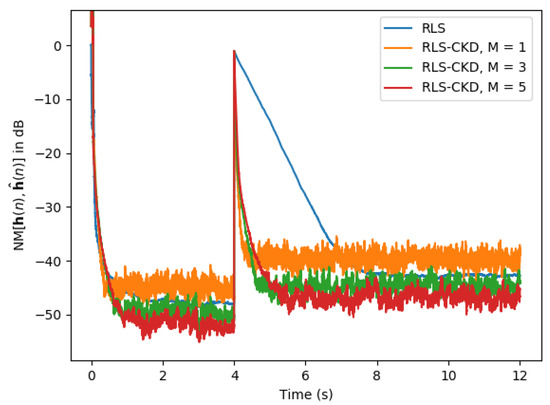

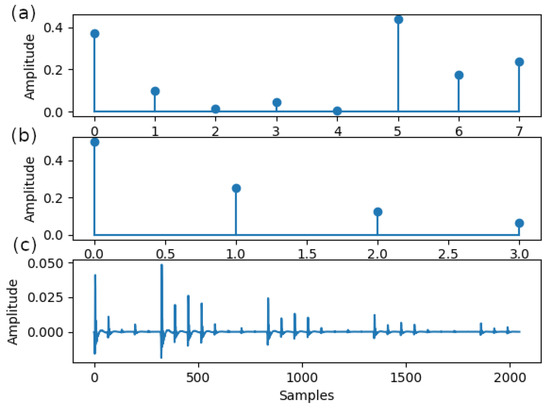

In the first simulation, represented in Figure 7, we compare the performance of the RLS-CKD algorithm with that of the classical RLS algorithm. The echo path changes after 4 s of simulation, when the impulse response is replaced with a random impulse response of the same length, with samples between 0 and 0.5. In the first part of the plot, we can observe that the RLS-CKD algorithm achieves a convergence rate similar to that of the classical RLS algorithm. Regarding the tracking capability, when the echo path changes, the RLS-CKD algorithm outperforms the RLS algorithm. The RLS-CKD achieves a normalized misalignment of −30 dB in less than 200 ms. We can also observe that the constant value M only affects the normalized misalignment level when the echo path changes.

Figure 7.

Normalized misalignment of the classical RLS () and RLS-CKD (, ) algorithms. The input signal is white Gaussian noise and the impulse response changes after 4 s of simulation.

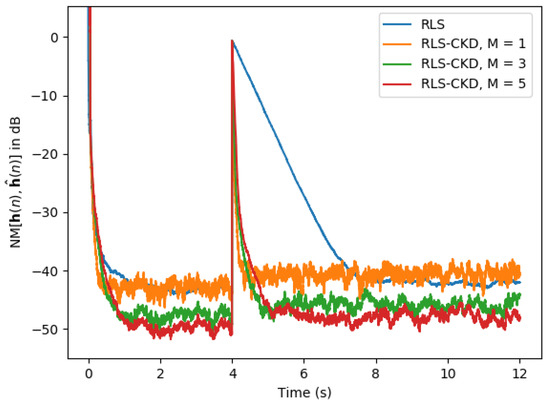

Next, in Figure 8, we analyze the behavior of the RLS-CKD algorithm in a scenario where the input signal is an AR(1) process. The echo path changes in the same manner as in the previous scenario. In this case, the RLS-CKD algorithm achieves a normalized misalignment that is lower by almost 10 dB (e.g., when ) than that of the RLS algorithm. When the echo path changes, the values of M do not impact the RLS-CKD algorithm too much. This time, the RLS-CKD achieves a normalized misalignment of −40 dB in less than 500 ms, while the RLS algorithm requires at least 3 s to achieve a comparable level.

Figure 8.

Normalized misalignment of the classical RLS () and RLS-CKD (, ) algorithms. The input signal is an AR(1) process and the impulse response changes after 4 s of simulation.

We conclude the first set of simulations with the scenario depicted in Figure 9, where the input signal is a speech sequence and the echo path changes in the middle of the simulation in the same manner.

Figure 9.

Normalized misalignment of the classical RLS () and RLS-CKD (, ) algorithms. The input signal is a speech sequence and the impulse response changes in the middle of the simulation.

As we can notice in Figure 9, the steady-state misalignment of the conventional RLS algorithm (the blue curve) is similar to the misalignment of the RLS-CKD algorithm using , while the initial convergence rate and tracking of the proposed algorithm are much better. A larger value of M influences only the initial convergence rate of the RLS-CKD algorithm, while the fast tracking reaction is preserved. On the other hand, the steady-state misalignment of the RLS-CKD improves for a larger value of M (i.e., for larger values of the forgetting factors , closer to 1).

Furthermore, we continue the simulations with the trilinear approach, based on the impulse responses from Figure 10. In this case, we have considered an even longer echo path of thousands of coefficients. The echo path of the system that must be identified is obtained as , of size , with () from Figure 6a, () from Figure 10a, and () from Figure 10b. The second impulse response (i.e., ) is randomly generated, with samples between 0 and 0.5, while the third impulse response (i.e., ) is obtained as , with , where .

Figure 10.

Impulse responses for the trilinear setup: (a) , randomly generated impulse response; (b) , exponentially generated impulse response; and (c) impulse response of the target system, .

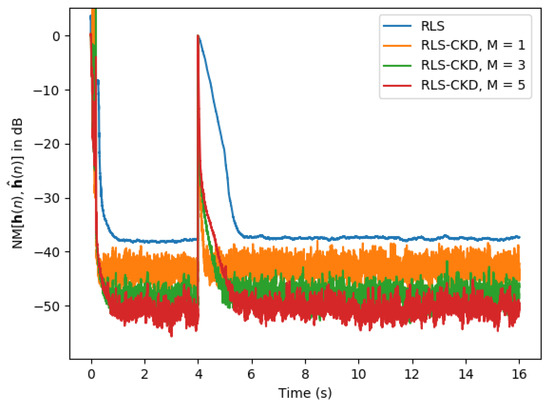

Figure 11 shows the first simulation in the trilinear scenario. The input signal is white Gaussian noise and the echo path changes by generating as a random impulse response after 4 s, so this impacts the whole system. It is worth noting that the RLS-CKD algorithm presents a slightly faster convergence rate than the RLS algorithm and, for , a normalized misalignment that is lower by at least 10 dB. In terms of tracking, the RLS-CKD algorithm succeeds in re-estimating the new echo path and we can see that the smaller the forgetting factor is (i.e., ), the faster the tracking.

Figure 11.

Normalized misalignment of the classical RLS () and RLS-CKD (, , ) algorithms. The input signal is white Gaussian noise and the impulse response changes after 4 s.

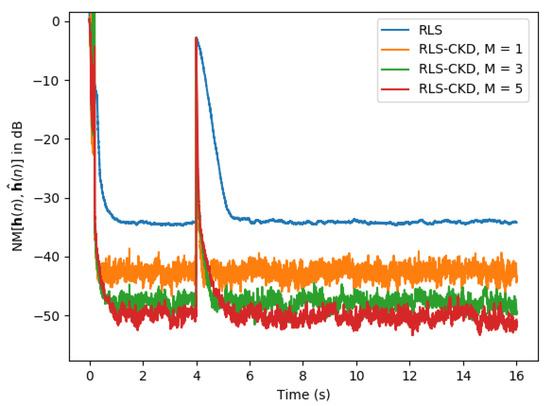

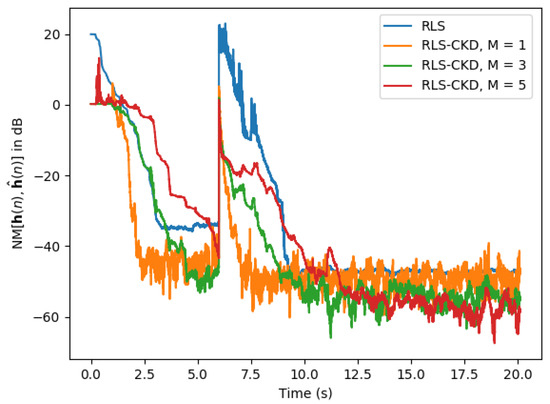

In the scenario represented in Figure 12, the input signal is an AR(1) process and the echo path changes by regenerating after 4 s. The RLS-CKD algorithm outperforms the classical RLS algorithm in terms of convergence rate, normalized misalignment, and tracking capability, with a much lower computational complexity. Finally, in Figure 13, we conclude the set of simulations with a scenario where the input signal is a speech sequence. Again, the echo path changes by regenerating after 6 s of simulation. While the classical RLS algorithm requires more than 3 s to achieve a reasonable normalized misalignment level, the RLS-CKD algorithm succeeds at estimating the target system, presenting a good tracking capability even when the echo path changes. However, for a faster convergence rate, the RLS-CKD algorithm requires a much lower forgetting factor (e.g., ). Also, the steady-state misalignment of the conventional RLS algorithm (after the change of the system) is similar to the misalignment of the RLS-CKD algorithm using .

Figure 12.

Normalized misalignment of the classical RLS () and RLS-CKD (, , ) algorithms. The input signal is an AR(1) process and the impulse response changes after 4 s.

Figure 13.

Normalized misalignment of the classical RLS () and RLS-CKD (, , ) algorithms. The input signal is a speech sequence and the impulse response changes after 6 s.

6. Conclusions

In this paper, we have introduced several structures, without and with memory, described by a bilinear input-output relation. Based on this approach, we have obtained, from a MISO system, a SISO system that is a cascade of shorter filters. We then developed the multilinear RLS algorithm using the Kronecker product decomposition and outlined the resulting reduction in computational complexity. Finally, we have presented a set of simulations comparing the newly developed RLS-CKD algorithm with the classical RLS algorithm. In these simulations, the RLS-CKD algorithm outperformed the classical RLS algorithm in terms of convergence rate, normalized misalignment, and tracking capability. We can conclude that the RLS-CKD algorithm is a good candidate for real-time applications that involve long impulse responses and systems characterized by reverberation.

Author Contributions

Conceptualization, A.-G.R.; Formal analysis, S.C.; Software, C.P.; Methodology, J.B. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by a grant of the Romanian Ministry of Education and Research, CNCS-UEFISCDI, project number: PN-III-P1-1.1-TE-2019-0420, within PNCDI III.

Data Availability Statement

The data presented in this study are available on request from the corresponding author.

Conflicts of Interest

The authors declare that they have no conflict of interest.

References

- Haykin, S. Adaptive Filter Theory, 4th ed.; Prentice-Hall: Upper Saddle River, NJ, USA, 2002.

- Benesty, J.; Huang, Y. (Eds.) Adaptive Signal Processing–Applications to Real-World Problems; Springer: Berlin/Heidelberg, Germany, 2003.

- Gay, S.L.; Benesty, J. (Eds.) Acoustic Signal Processing for Telecommunication; Kluwer Academic Publisher: Boston, MA, USA, 2000.

- Benesty, J.; Gaensler, T.; Morgan, D.R.; Sondhi, M.M.; Gay, S.L. Advances in Network and Acoustic Echo Cancellation; Springer: Berlin/Heidelberg, Germany, 2001.

- Tsoulos, I.G.; Stavrou, V.; Mastorakis, N.E.; Tsalikakis, D. GenConstraint: A programming tool for constraint optimization problems. SoftwareX 2019, 10.

- Stavrou, V.N.; Tsoulos, I.G.; Mastorakis, N.E. Transformations for FIR and IIR Filters’ Design. Symmetry 2021, 13, 533.

- Duttweiler, D.L. Proportionate normalized least-mean-squares adaptation in echo cancelers. IEEE Trans. Speech Audio Process. 2000, 8, 508–518.

- Benesty, J.; Gay, S.L. An improved PNLMS algorithm. In Proceedings of the 2002 IEEE International Conference on Acoustics, Speech, and Signal Processing, Orlando, FL, USA, 13–17 May 2002; pp. II-1881–II-1884.

- Deng, H.; Doroslovački, M. Proportionate adaptive algorithms for network echo cancellation. IEEE Trans. Signal Process. 2006, 54, 1794–1803.

- Loganathan, P.; Khong, A.W.; Naylor, P. A class of sparseness-controlled algorithms for echo cancellation. IEEE Trans. Audio Speech Lang. Process. 2009, 17, 1591–1601.

- Paleologu, C.; Benesty, J.; Ciochină, S. Sparse Adaptive Filters for Echo Cancellation; Morgan & Claypool Publishers: San Rafael, CA, USA, 2010.

- Yang, Z.; Zheng, Y.R.; Grant, S.L. Proportionate affine projection sign algorithms for network echo cancellation. IEEE Trans. Audio Speech Lang. Process. 2011, 19, 2273–2284.

- Liu, J.; Grant, S.L. Proportionate adaptive filtering for block-sparse system identification. IEEE/ACM Trans. Audio Speech Lang. Process. 2016, 24, 623–630.

- Rusu, A.-G.; Ciochină, S. Cascaded adaptive filters in a bilinear approach for system identification. In Proceedings of the 2020 International Symposium on Electronics and Telecommunications (ISETC), Timisoara, Romania, 5–6 November 2020; pp. 1–4.

- Van Loan, C.F. The ubiquitous Kronecker product. J. Comput. Appl. Math. 2000, 123, 85–100.

- Benesty, J.; Cohen, I.; Chen, J. Array Processing–Kronecker Product Beamforming; Springer: Cham, Switzerland, 2019.

- Benesty, J.; Paleologu, C.; Ciochină, S. On the identification of bilinear forms with the Wiener filter. IEEE Signal Process. Lett. 2017, 24, 653–657.

- Paleologu, C.; Benesty, J.; Ciochină, S. Adaptive filtering for the identification of bilinear forms. Digital Signal Process. 2018, 75, 153–167.

- Dogariu, L.-M.; Stanciu, C.L.; Elisei-Iliescu, C.; Paleologu, C.; Benesty, J.; Ciochină, S. Tensor-based adaptive filtering algorithms. Symmetry 2021, 13, 481.

- Bertsekas, D.P. Nonlinear Programming, 2nd ed.; Athena Scientific: Belmont, MA, USA, 1999.

- Benesty, J.; Paleologu, C.; Ciochină, S. Regularization of the RLS algorithm. IEICE Trans. Fundam. 2011, E94-A, 1628–1629.

- Digital Network Echo Cancellers; ITU-T Recommendation G.168; ITU: Geneva, Switzerland, 2002.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).