1. Introduction

Drones are increasingly used in many fields of human activity. Ljungblat et al. [1] group the domains in which research on human-drone interaction is already carried out, such as sport, construction, and rescue operations. Reddy Maddikunta et al. [2] and Lambertini et al. [3] point out important applications in agriculture. The European Union Aviation Safety Agency (EASA) has published proposals to implement in Europe a special low-altitude U-Space airspace available to unmanned aerial vehicles [4]. Drones have also become tools commonly used in television and cinematography [5,6,7,8,9,10] as well as in autonomous target tracking [11]. Levine [6] already stated that unmanned aerial vehicles would transform journalism and the market of information transfer. In the media studies literature, unmanned aerial vehicles have already started to be included in the group of mobile media. As an example, Hildebrand [12] describes drones in a variety of aspects as mobile media that make it possible not only to access physical, digital, and social spaces but also to shape them. Adams [7] presents ways in which drone pilots influence the content and the production of journalistic material. Therefore, technical aspects of applying new technology to the operation of an aerial vehicle (among others, examining the sight activity of drone pilots) are currently of interest to researchers, including those dealing with media.

TV drone flights during which the drone pilot or the observer maintains eye contact with the unmanned aerial vehicle are permitted in many countries around the world once specific conditions are met [13,14,15]. Visual Line of Sight (VLOS) flight is an air operation in which the pilot maintains visual contact with the drone to ensure the safety of flight [16]. In Poland, during a VLOS flight, the pilot is allowed to look away from the drone to check the flight parameters on the drone operator’s screen [17]. During television productions at low flight altitudes, among many terrain obstacles, VLOS flight may prove more efficient and safer than BVLOS (Beyond Visual Line of Sight) flight. Using a drone in this manner makes it possible to capture significant shots that open or close a given thread of a news item, coverage, or documentary, instead of using only descriptive shots from high altitudes.

The article analyses a special situation in which the pilot is at the same time the drone camera operator. In camera and film crews that need to be as compact and mobile as possible, television crews among others, the pilot of the drone is usually also the drone camera operator. In big commercial news channels in Poland (such as TVN24, TVN Discovery, Polsat News), ground camera operators who have obtained an adequate certificate of professional competence use drones as pilot-cameramen, which speeds up the TV reaction to an event and extends the possibility of using drones in everyday news materials. For the sake of completeness, it is worth adding that a flight with both a pilot and a separate drone camera operator is highly effective: the pilot can focus on observing the drone in airspace, while the drone camera operator focuses on filming. Another situation is a flight with an observer, who informs the pilot of possible collision courses and of the drone’s position relative to the pilot. Thanks to this, the pilot can focus more on the images from the drone and can easily regain sight of the drone after completing a shot. Flights of this type can be carried out as VLOS and BVLOS operations depending on legal considerations and the qualifications of the pilot.

The authors of the article have narrowed down their research area to a VLOS operation carried out single-handedly by a television pilot (Figure 1). The goal was to determine the actual conditions of a VLOS drone flight while filming at low altitudes in a narrow maneuver corridor. A difficult combined flight and camera task carried out by pilot-operators, which nearly ended in crashing the drone, made it possible, among other things, to isolate two VLOS flight coefficients. The authors’ scientific contribution is an innovative approach to the study and analysis of the drone operator’s behavior. Our motivation for the research was to analyze the behavior, and thus the situational awareness, of the drone pilot during VLOS operation. We have limited the research to one aspect of this awareness, related to the mutable observation of the drone.

The structure of the paper is as follows: in the introduction, we explain the difference between a VLOS flight and a BVLOS flight and point out the perceptual consequences for a television drone pilot filming during VLOS operations; next, we analyze the state of research, quote the theoretical VLOS model, and introduce the problem of mutable visual observation; then, we describe the experiment, including data acquisition; the paper closes with a discussion of the obtained results. The paper concludes that the experiment made it possible to establish two coefficients related to the effectiveness of a VLOS flight aimed at filming from the drone. The results also point to clear differences in the screen perception styles used by drone television pilots. We also emphasize that the outcomes may help to optimize the process of aerial filming with a drone, carried out for television, film, and other media, as well as the simulation of such a flight for research and training.

2. Analysis of Research Status and the Related Work

The authors’ literature analysis showed that the current state of knowledge focuses mainly on the safety of the drone flight [18]; on technical aspects related to, among others, the flight stability of the UAV [19]; or on the security of the data (instructions sent to the vehicle, authentication, data integrity, and vehicle reactions), e.g., when using drones in a delivery system or when deploying these devices in heterogeneous wireless 5G networks [20,21]. Many publications raise various issues related to the safety [14,22,23,24] and security [25] of UAVs in BVLOS flight, including anti-collision systems [26,27], mobile edge computing and augmented reality [28,29], the operating range of the drone in the presence of terrain obstacles [30,31,32,33], or engaging additional observers in EVLOS (Extended Visual Line of Sight) flight [34].

The research results presented in the paper come from the authors’ new concept. To the authors’ knowledge, there are no other similarly constructed and conducted studies that would allow a direct comparison of results. The literature supporting the authors’ research is cited and discussed in the remainder of the paper.

In the theoretical model, the drone pilot is an integral element of the unmanned vehicle flight control system. In our model, the pilot, who is also the drone camera operator, processes information coming from the drone’s measuring instruments and from the airspace in which the unmanned vehicle is observed, and the pilot’s contact with the aircraft may be direct or indirect. We have paid attention to the significant role of the pilot’s level of training in the techniques of television image execution. While analyzing the mutable observation of the drone by the television pilot, we have noticed that independent drone pilots have to combine two orders of observation: observing the drone in airspace and observing the image from the drone on the controller screen. Such perceptual actions require skills in both piloting the drone and cinematography. We have distinguished two types of vision that characterize the image operator’s perception (light and shade vision vs. contour vision). The review of research into pilots’ visual activity and equipment ergonomics showed how eye trackers and other research tools have been used to study drone pilots’ perception, as well as how much strain the display of information on the controller can place on the eyes. With reference to studies of manned aerial vehicles, we assumed that the pilot who operates the drone camera performs two complementary activities: filming and navigating the drone. We argue that using drones as mobile, interactive media requires developed skills of perception in the area of mutable observation.

2.1. Theoretical Model of the Pilot Taking into Account the VLOS Flight Filming Process

Adamski [35] presents a general theoretical model of operating unmanned aerial vehicles (UAV) in his book: “While carrying out tasks, a pilot-operator should be considered an integral element of the unmanned aerial vehicle flight steering system. We can distinguish two basic subsystems enabling cooperation between a human and an unmanned aerial vehicle (UAV). Information on the parameters of a UAV (such as flight parameters, navigation data, and engine parameters) comes from measuring and processing collected data devices (treated as a subsystem ‘input’). Pilot as the steering system operator passes back the adequately processed commands to executive systems” (see [35], p. 45). In this model, the pilot, as an integral element of the steering system, processes input information coming from the measuring devices of the drone.

During VLOS flight filming, the pilot-operator has direct visual (and, up to a certain distance, auditory) contact with the aircraft to ensure the safety of attempted maneuvers. At the moment of losing visual contact with the drone (while observing the drone image on the display), “the contact of the pilot-operator with the aircraft is indirect and usually takes place through signals that are carriers of individual pieces of information. Those signals are considered actual features of the control object and constitute the basis for its decision-making process. As a result, the decision taken, translated into controls movements, depends on the pilot’s operating environment and environment in general.” (see [35], p. 45). According to Adamski’s typology, the factors influencing the pilot-operator while operating a UAV are, among others: training factors (methodology of training, training level, using the simulator), tactical factors (low altitude, collision hazard, high altitude, high speed, time deficit), technical factors (technological level of the construction, avionics equipment, equipment failure), biological and physical factors (oxygen deficiency, reduced threshold of color discrimination, fatigue), and psychological factors (personal situation, professional situation, material situation, environmental situation) (see [35], p. 46). Additional training factors influencing a pilot who is also the drone camera operator are the level of training in television and filmmaking techniques and professional experience in this scope. Technical factors include, among others, the obstacle sensors installed in drones (sensor operation is limited to specific, low cruising speeds and to maneuver corridors of a specific minimum width).

2.2. Mutable Visual Observation Maintained by the Drone Pilot during VLOS Flight

The authors of the article define the term ‘observation’ with reference to the concept of observation and observer created by Niklas Luhmann, the author of the Social Systems Theory. To this end, they use the dictionary of terms developed by Krause [36]. “Also according to Luhmann,” writes Krause, “observing has something to do with seeing, watching the subject, following the events. The question is if at all and how can you see and what do you see, when you see” (see [36], p. 71; translated from German by the author). The question about the distinction that is at the base of observation arises from defining observation as “extracting distinction and naming the distinguished” (see [36], p. 72; translated from German by the author). In this concept of observation, the pilot consciously perceives the drone speedometer on the controller screen if distinguishing the drone’s speed is important to him at that moment. The observation includes the decision. “Anything that wasn’t distinguished and named is excluded. The other side of distinction is not known, however, it contributes, as what has not been distinguished, to distinguished clarification” (see [36], p. 72; translated from German by the author). An observer understood as a subject is replaced in the systems theory with an observer understood as a system. In this regard, the observer is not the final instance of observation, but is specified and distinguished by the observation of another observer or by self-observation. Luhmann uses the terms first-order and second-order observer. “The first-order observer cannot see how he sees the object of his observation. The second-order observer can see that the first-order observer uses a distinction invisible to him and only thus can see his object. The distinction of the second-order observer is typical for him, not a type of double distinction of the first-order observer. (…) There must be at least a minimal time difference (…) between the first and second-order observation” (see [36], p. 78; translated from German by the author). First-order observation is subject to the question (level) of ‘what?’, while second-order observation is subject to the question ‘how?’. In this sense, observing the drone image on the controller screen is, to some degree, second-order observation, since the pilot who is filming with the drone should recognize the potential meaning contained in the shot. Such identification is possible thanks to, among others, bringing out the distinctions that are at the core of the observation of these shots by a potential spectator. Observing the drone in airspace also assumes a certain meta-level. An aerial vehicle that maintains a given altitude while moving away from the observer can be perceived as descending. To control this illusion, the pilot should be able to distinguish a real loss of height from the illusion caused by perspective. For this purpose, the pilot can distinguish the altimeter readings visible on the drone controller touchscreen, and so on.

Solo drone pilots must combine two types of visual surveillance: (1) observing the drone in the airspace is supposed to ensure flight safety and compliance with VLOS flight procedures, and (2) observing the image from the drone on the controller screen is necessary for the execution of proper shots. The necessity to see light and shade as well as contours in the drone camera image competes with a different type of observation, which keeps the drone in real airspace and is focused on flight safety. This results in a specific visual perception, which in the first approximation we called a mutable observation of the drone pilot. In this way, the drone pilot carries out two complementary activities: filming and navigating the drone. An additional aspect is the pilot’s situational awareness at the location from which he controls the unmanned aerial vehicle. In our study, the pilot did not have to move around. However, in many real-world situations, the space behind the pilot’s back becomes important when, for example, the pilot follows the drone. Schmitz [37] conducted phenomenological deliberations on the perception of space behind human backs. In the conducted study, we tried to neutralize the impact of this space on pilots.

2.3. Contour and Light and Shade Vision in the Work of a Cinematographer

The model of a drone pilot (compared to Adamski’s data [35]) needs to be completed with forms of vision, i.e., strategies of observation significant during the execution of television and film shots in general (not only with the use of a drone). Creating the composition of a frame by adequately locating the camera requires image operators to be capable of contour vision. Such vision is facilitated by the black-and-white viewfinders frequently used in professional television cameras. Strzemiński [38], writing about the art of indigenous peoples, introduces the term contour vision as the earliest type of visual awareness. In Strzemiński’s theory of vision [38], contour vision “used as a tool for a battle for survival, rejects all unnecessary, complicating visual sensations and stops at those that allow confirming the existence or non-existence of an object, on the contour drawn around the object” (see [38], p. 23). Contour vision allows image operators to reduce the information content of a frame to its composition potential.

Framing space by image operators to a high degree consists of observing the edges of a frame. Looking into the frame to assess image quality is a different kind of look: light and shade vision, which allows image operators to notice, among others, the exposure level of a shot (black noise, white balance, etc.). Using baroque paintings as an example, Strzemiński [38] explains the new visual awareness of light and shade vision: “Light and shade visual awareness blurred the contours of images, introduced the color of shade (from the background), shattered the existing local unity of color. The individuality of the object, individuality of its character as a sample of a product, had to give way to see the whole, for the benefit of the process of seeing” (see [38], p. 125). According to the researcher, the light and shade visual awareness causes “the line of contour to disappear not only in the shade. It also disappears in passages from shadow to light and becomes torn in several places. The object ceases to possess one continuous contour line” (see [38], p. 117). Light and shade vision is characteristic of film productions, but it constitutes an additional difficulty for the safe navigation of the drone by a pilot who is simultaneously a camera operator, as in our experiment.

2.4. Examining Sight Activity of Drone Pilots and Equipment Ergonomics

Hoepf et al. [39] described data related to the eye movements of a drone pilot (eye blinking rate, blink duration, pupil diameter) and pointed out severe eyestrain during piloting. High eye workload resulted in a lower eye blinking rate, shorter blink duration, and dilated pupils. According to the researchers, it would be a good idea to introduce an automated system for monitoring physiological changes (eye movements and heart rate) with the potential to detect an upcoming decrease in the work efficiency of a drone pilot in high eye workload conditions.

On the other hand, McKinley et al. [40] used the time of complete eye closure to detect the presence of fatigue during the simulated use of a drone. The authors did not find any fatigue effects during the simulated use of a drone after a period of sleep deprivation. However, they did notice fatigue effects after carrying out tasks in a traditional flight simulator in the case of two specific tasks of a drone flight (defining objectives and a psychomotor vigilance task). According to the authors, those two tasks proved to be more complex and difficult for drone pilots in comparison to other tasks and, as a result, led to the so-called optimal arousal, which, in turn, led to the overall better performance of drone pilots.

Moreover, McIntire et al. [41] demonstrated that eye-tracking tests might be used to monitor changes in drone pilots’ vigilance levels. More frequent and longer blinking and longer eye closure reflected a weak focus of attention during drone pilots’ vigilance tasks. The researchers additionally noted that until then no scientific papers had been published that compared eye-scanning strategies between drone pilots and manned aircraft pilots.

While steering and flying a drone, it is also important to visually control its display. Tvaryanas [42] researched how drone pilots visually scanned data from the RQ1 Predator drone flight controller. According to Tvaryanas [42], focusing the sight was a heavy strain on the pilot’s eyes due to the way information was presented on the RQ1 Predator controller. The author stated, for example, that the information was displayed in frames that kept moving up and down the screen linearly. Tvaryanas [42] concluded that such a method of data display on the drone flight controller proved inefficient due to a flawed design, which did not take into consideration the limitations of human perception.

Jin et al. [43] also analyzed the efficiency of a display/controller, in this case that of the Mission Planner. They conducted an experiment in which the usability of the original drone flight controller interface was compared with that of an altered version of the interface. According to the researchers, the main problems with controller usability concern, among others, the flexibility of the system, user activity, and minimum memory load. An analysis of the results for a few factors, such as task execution time, mouse clicks, and fixation points, showed that, according to respondents, the reprogrammed system interface worked more efficiently.

Kumar et al. [44] proposed an innovative camera system, Gazeguide, which offers the possibility to control the movements of a drone-mounted camera through the sight of a remote user. A video recorded by the drone camera is sent to eye-tracking glasses with a display function worn on the user’s head. The test involved filming both static and mobile elements in 3D space. According to the test’s authors, comparing the Gazeguide system with a system using a classic flight controller shows better results for Gazeguide.

One of the most recent studies, conducted by Wang et al. [45], showed that researchers see the necessity of changing the philosophy of designing drone pilot equipment. The research was aimed at assessing the ergonomics of the flight controller/display using eye tracking. The researchers also pointed to the necessity of combining methods that examine the drone pilot’s sight. Apart from measuring sight activity with eye tracking, the test on 12 pilots also used the method of expert evaluation.

2.5. Studying Pilots’ Complementary Activities during a Complicated Flight Situation

The experiment conducted by the authors of the article involved modeling a complicated flight situation and studying complementary activities during drone filming, which made it possible to tie flight safety directly to effective filming. The aerial filming task was made highly difficult on the assumption that in complicated flight conditions the drone pilots would be forced to use their entire potential to execute it. The complicated drone maneuver they had to carry out to capture the model shot should reveal any shortcomings in eye-head system activity, flight strategy, and interface ergonomics. (Gregory [46] describes the operation of two movement perception systems, which he calls “image-retina system and eye-head system.” “(…) The image-retina system: when eyes are immobile, the image of a mobile object is running on the retina, which creates movement information as a consequence of another receptor discharge on the route of a moving image. (…) In the eye-head system, when the eyes are following a moving object, the image does not move on the retina, yet we see movement. Both systems may sometimes produce non-compliant information, which is the source of peculiar illusions.” (see [46], p. 106).)

In the research of Łomow and Płatonow [47] on the psychology, physiology, and safety of flights, it is possible to distinguish the two most typical and universal models of complicated experimental flight situations. The first is modeling the conditions of complementary activities, where attention is divided between two essential tasks requiring active perception and operation. “Such is the situation of a low altitude flight in the regime of searching for ground-based orientation points, where it is necessary to join piloting with activities related to solving emergencies or controlling and assessing the state of aircraft systems (…). The second model consists of modeling emergencies evoked by technical elements refusing to work or receiving incomplete or imprecise information. In this case, it is essential to maintain the confidentiality of the experiment’s goal. This model should be aimed at determining the reliability of the cooperation between the pilot and the devices in a technical failure situation (…)” (see [47], p. 348).

Researchers of aviation psychology Łomow and Płatonow [47] point out that “in simple flight conditions the pilot manages inactivated reserves, which allows compensating for some equipment shortcomings (…). Examining the influence of a complicated situation on the pilot provides knowledge of the performance properties of the pilot in an emergency, on changing the structure of activities, characteristics of the intellectual and perceptive process, allows to determine what is the critical component of activity, what especially disrupts the cooperation of the pilot and the aircraft, which activity component needs to be trained in the training process or support it with technical measures (…)” (see [47], pp. 348–349). It is worth noting here that, as van Dijk [48] writes: “Taking full advantage of the opportunities offered by the new media requires well-developed visual, auditory, verbal, logical and analytical skills” (see [48], p. 298).

3. Hypothesis, Research Method, and Description of the Experiment, Including Data Collection

A safe and effective visual line of sight drone flight, during which the pilot is also the operator of the camera mounted on the unmanned aerial vehicle, can be a heavy burden for the pilot’s perception. At higher altitudes (within 100 m), if drone pilots find that there are no obstacles in the vicinity of the unmanned aerial vehicle, they usually focus on the image from the drone displayed on the controller screen. However, in this case, the pilot should simultaneously observe the drone in the airspace to ensure separation from other aircraft, birds, or objects that may unexpectedly appear. (To decrease the risk related to drones moving in controlled airspace, the Polish Air Navigation Services Agency is developing an innovative system, PansaUTM, to manage, control, and integrate unmanned air traffic with controlled air traffic (see [15,49]).) Filming with a drone at low altitudes carries a greater risk of collision with terrain obstacles, but at the same time it extends the possibilities of visual narration. With appropriate lighting and spatial conditions, the image quality from a small drone may not differ noticeably from that of professional ground cameras. This encourages TV and film producers to include drone footage in their productions. Small, foldable drones that provide good-quality recordings and are easy to transport and use are most often piloted by independent pilots.

To sum up: the basic research problem can be formulated in the form of a question about the impact of mutable observation of the drone and the controller screen (during the VLOS flight) on the efficiency, quality, and safety of aerial photography. The research problem posed has led to the distinction of two VLOS flight coefficients that affect the course of the flight and the quality of the shots taken.

Research hypothesis: During a solo VLOS flight, the more experienced pilots gaze towards the unmanned aerial vehicle (UAV) more rarely and for shorter periods and focus more on observing the screen content of the controller than the less advanced pilots.
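The quantities named in the hypothesis (how often and for how long a pilot looks at the UAV, and how much attention goes to the controller screen) can be illustrated with a minimal sketch. It assumes that each fixation exported from the eye tracker has already been labeled with an area of interest; the labels `drone`, `screen`, and `other`, the `Fixation` record, the `glance_summary` function, and the sample values are hypothetical and introduced here only for illustration. The measures are generic glance statistics, not the two VLOS flight coefficients of the study.

```python
from dataclasses import dataclass
from itertools import groupby


@dataclass(frozen=True)
class Fixation:
    start_s: float  # fixation onset, seconds from the start of the shot
    end_s: float    # fixation offset, seconds from the start of the shot
    aoi: str        # hypothetical area-of-interest label: "drone", "screen", "other"


def glance_summary(fixations, target="drone"):
    """Count glances at `target` and summarize their duration.

    A glance is taken to be a run of consecutive fixations on the same
    area of interest; its duration is the sum of the fixation durations
    in that run (gaps between fixations are ignored in this sketch).
    """
    ordered = sorted(fixations, key=lambda f: f.start_s)
    total = sum(f.end_s - f.start_s for f in ordered)
    glances = [
        sum(f.end_s - f.start_s for f in run)
        for aoi, run in groupby(ordered, key=lambda f: f.aoi)
        if aoi == target
    ]
    dwell = sum(glances)
    return {
        "glance_count": len(glances),
        "mean_glance_s": dwell / len(glances) if glances else 0.0,
        "dwell_share": dwell / total if total else 0.0,
    }


# Invented sample data, not taken from the experiment.
sample = [
    Fixation(0.0, 2.0, "screen"),
    Fixation(2.0, 3.0, "drone"),
    Fixation(3.0, 3.5, "drone"),
    Fixation(3.5, 8.0, "screen"),
    Fixation(8.0, 9.0, "drone"),
    Fixation(9.0, 10.0, "other"),
]

drone_stats = glance_summary(sample, "drone")
screen_stats = glance_summary(sample, "screen")
```

Under the hypothesis, an advanced pilot would be expected to show a lower `glance_count` and `mean_glance_s` for the drone and a higher `dwell_share` for the screen than a beginner flying the same shot.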

The test of drone pilots was conducted under the regime of a VLOS flight at low altitudes among obstacles such as buildings, TV antennas, and trees. The research material was recorded on 8 and 9 June 2019 in Warsaw, and the experiment was conducted with the use of a Tobii Pro Glasses 2 mobile eye tracker, a Sony X70 camera, a DJI Mavic Pro drone, a Blackmagic VideoAssist recording monitor, and a DJI Crystal Sky 5.5-inch touchscreen. Detailed information about all the software components (including version numbers) and all the hardware platforms is provided in Appendix B. Eight television pilot-operators were qualified for the test and divided into two categories, advanced and beginners, based on the declared number of hours spent piloting drones.

In choosing the location for the experiment, the degree of shading of the drones’ takeoff site turned out to be an important factor. To maintain and control the lighting conditions, the subjects were divided into two subgroups of four persons. Both groups were subjected to an identical test on two consecutive days, in the same time frames. The local weather and lighting conditions were very similar. At the start of the test, a participant was shown the model shot and asked to replicate it at least three times within a specified time frame. (The model shot was executed by the co-author of the article S. Strzelecki, who, in addition to scientific work, is a professional drone operator and pilot cooperating with the most important Polish TV broadcasters: TVP and TVN Discovery.)

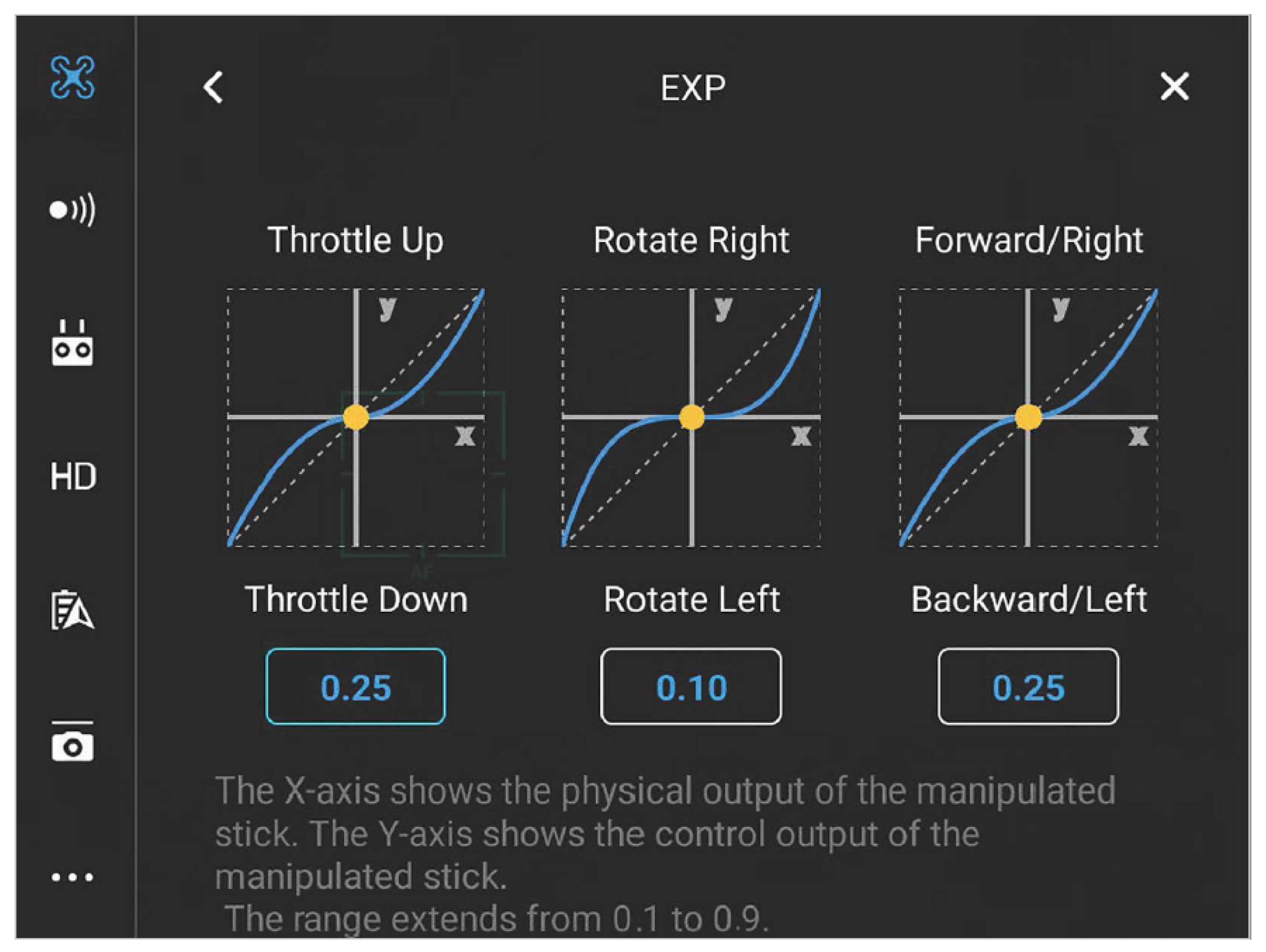

The shot lasted 50 s. The parameter values presented in Table 1 come from the DJI Go ver. 4 software used during the realization of the model shot. The screenshot presented in Figure 2 shows the characteristics of the knobs during the model shot of the experiment. The characteristics were softened compared to the default settings. Due to the nature of the shot, the characteristics on the axis of rotation of the drone (yaw) were set as softly as possible (the middle graph). During the research, the RTH (return to home) system was active; it returns the drone to the home base when the battery drops to the critical level, but this never happened during our research. The model shot was complicated: it started at an altitude of 3 m, which grew to 22 m, and it required many techniques of executing aerial imagery with a drone. Therefore, various aspects of its completion are representative of many types of shots used in television and film. Repeating a shot seen earlier creates more problems for the pilot than executing a shot of his own. This made the task more difficult and required the pilots to use most of their perceptual reserves. Therefore, we did not study the creativity of the pilots as image operators, but the limits of their technical and perceptual abilities when executing the shots. Thus, apart from navigational difficulties, such as maneuvering in a narrow air corridor, the necessity to repeat the model shot was an additional difficulty for the tested pilots. In the experiment, the researchers designed a complex flight and filming situation to study the perception of pilots in extreme VLOS flight conditions, which may occur in various other situations of aerial photography from a drone. Following the assumptions of the aviation psychology of Łomow and Płatonow [47], described in detail in Section 2.5, it was assumed that in complicated flight conditions the drone pilots would be forced to use their full potential to perform the task. During the experiment, the conditions of complementary activities were modeled (in the presented study, piloting the drone and filming with it). The study aimed to assess the effectiveness of human-machine cooperation, i.e., the cooperation of drone pilots (beginners and advanced) with the classic controller system with a touchscreen for shot execution in the VLOS flight mode.

The eye-tracking analysis was carried out on seven shots included in the research based on a selection made by peer reviewers. (Peer review method: a method of objectifying assessments collected during quantitative research, in which a group of people with similar competencies in a given field analyses and assesses the research material (see [50]). Three peer reviewers took part in the analysis of the collected research material. The competencies of the reviewers were due to their employment in the Laboratory of Television and Film Studies at the Faculty of Journalism and Book Studies at the University of Warsaw.) For each of the seven subjects included in the sample, the peer reviewers indicated one best shot (the closest to the model shot, with a similar duration and visual course). According to the same criteria, the peer reviewers selected the two best shots in the whole group. Additionally, the analysis included the third shot of pilot P6, during which the drone collided with a treetop. None of the shots taken by pilot P8 were taken into account, because no shot was completed and their visual course differed significantly from the model shot.

To allow the peer reviewers to select the shot most similar to the model shot and to conduct the eye-tracking analysis in the iMotions 7 software, the researchers developed their own video compositing method. It consisted in combining four time-synchronized video recordings on one screen: the footage recorded by the eye tracker, two videos recorded by the drone, and a shot from the Sony X70 camera archiving the operator’s behavior. The researchers marked the beginning and end of each shot by displaying a number in the center of the screen.

Figure 3 presents a screenshot of the research footage. The upper left window contains the image from the eye tracker camera with fixations; the upper right window contains the image from the drone’s camera along with the graphical user interface (GUI); the lower left window shows the pilot and additional information: pilot number, number of flying hours, shot date, and time code of the entire flight; the lower right window, which appears when the pilot starts recording, contains additional information about the drone’s position along with the camera parameters.

Each pilot completed the survey immediately after finishing the drone flight. A detailed methodological description of the entire experiment can be found in Kożdoń-Dębecka and Strzelecki [51] (paper accessible in English and Polish), where the authors focused primarily on the research methodology.

4. Findings

4.1. Results of Surveys

The analysis of the surveys made it possible to group the drone pilots’ opinions and proposals, their preferred methods of operation in VLOS flight, their previous experiences, and the new technological solutions for the drone interface that they proposed.

Table A1 in Appendix A presents only those answers that were given by at least two of the surveyed pilots.

To summarize the opinions, experiences, and findings of the pilots, it is worth recalling that the aerial-film task prepared for them had a high level of difficulty from the start: the maneuver space in the air corridor was limited and the camera was set to double zoom before the flight. These elements made it hard for the pilots to maneuver the drone based on the camera feed and forced them to observe the drone in the airspace. In their surveys, the pilots admitted that zoom filming and limited maneuver space, together with their limited competencies, caused the greatest difficulties. One operator also mentioned a drone visibility problem, since the drone blended in with its surroundings. The pilots mentioned the stability of the drone as its advantage. They mainly steered the control rods with a thumb grip. The thumb and index finger grip, which in the authors’ opinion provides more control, was less popular among the pilots. The intelligent flight modes used (outside the experiment) by some professional pilots are Tripod, in which the drone reacts calmly to steering movements; Terrain Follow, in which the drone automatically reacts to land elevations and depressions; and Point of Interest, in which the drone circles a designated point. Some pilots noted that it should be possible to turn off the display of some flight parameters on the controller screen. In an emergency, the remaining flight time on battery and the distance from the takeoff site were the most significant for the pilots. The pilots also noticed the need for better distance sensors, since the current sensors can stop the drone in front of an obstacle only at a limited speed of the UAV.

4.2. Eye-Tracking and Video-Analytical Tests

Two VLOS flight factors influencing drone maneuver safety and efficiency of operation of the drone pilot’s eye-head system during a VLOS flight were distinguished based on the analysis of variables: visual contact efficiency factor (VCEF) and eye-head system efficiency factor (EHEF).

Equation (1) defines the Visual Contact Efficiency Factor (VCEF), the efficiency factor of visual contact with the drone while filming during a VLOS flight. It is the ratio of the number of eye contacts with the drone (ECD) to the sum of the number of eye contacts with the drone (ECD), the number of full gazes on the drone controller display (FGC), and the number of gazes outside the system of the drone and the controller display (OUT) during the shot execution, expressed as a percentage. Based on the hypothesis, it can be assumed that the efficiency of eye contact with the drone increases the closer the VCEF value is to the maximum. The maximum and minimum critical values of the VCEF depend on the size of the maneuver corridor in a given airspace and the perceptual-motor capabilities of the drone pilot.

$$\mathrm{VCEF} = \frac{\mathrm{ECD}}{\mathrm{ECD} + \mathrm{FGC} + \mathrm{OUT}} \times 100\% \qquad (1)$$

where:

VCEF: Visual Contact Efficiency Factor,

ECD: Eye Contacts with the Drone (the number of),

FGC: Full Gazes on the Controller Display (the number of),

OUT: Gazes Outside the System (the number of).
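The ratio described for the VCEF can be checked against the counts reported later in Sections 4.4 and 4.5. The following is a minimal sketch, not the authors' analysis code; the function name `vcef` and the zero-gaze guard are assumptions made for illustration.

```python
def vcef(ecd: int, fgc: int, out: int = 0) -> float:
    """Visual Contact Efficiency Factor in percent: ECD / (ECD + FGC + OUT) * 100.

    ecd: number of eye contacts with the drone
    fgc: number of full gazes on the controller display
    out: number of gazes outside the drone-controller system
    """
    total = ecd + fgc + out
    if total == 0:
        # Assumption: a shot with no recorded gazes has no defined VCEF.
        raise ValueError("at least one gaze must be recorded")
    return 100.0 * ecd / total


# Counts taken from the text; OUT was 0 in all analyzed shots.
print(round(vcef(15, 18), 1))  # pilot P4: 45.5
print(round(vcef(8, 38), 1))   # pilot P6, shot AS4: 17.4
```

Because OUT appears only in the denominator, any gaze outside the drone-controller system lowers the factor, which matches the behavior the text describes for real-life flights.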

Gazes within the drone-controller system include: (a) drone vision (central or peripheral) within the scope of possibility to assess the drone’s position relative to terrain obstacles, and (b) drone controller display vision (central or peripheral) within the scope that enables navigation based on the drone camera feed.

In the analyzed cases of the experiment, the number of detected gazes outside the system visible in the denominator amounted to 0. We assume that in a real-life situation it may be necessary to gaze outside of the drone-controller display system to, for instance, observe other flying or ground objects or in case there is a need to change the position or location of the operator. In such a situation, while the number of gazes outside the system increases, the efficiency of visual contact with the drone decreases.

Equation (2) defines the Eye-Head Efficiency Factor (EHEF) while filming during a VLOS flight. It is the ratio of the total time spent on full gazes on the drone controller display (FPV) to the time of flight with no drone visibility (BVLOS), expressed as a percentage. Based on the hypothesis, it can be assumed that the efficiency of the eye-head system increases the closer the EHEF value is to the maximum. The maximum and minimum critical values of the EHEF depend on the size of the maneuver corridor in a given airspace and the perceptual-motor skills of the drone pilot.

$$\mathrm{EHEF} = \frac{\mathrm{FPV}}{\mathrm{BVLOS}} \times 100\% \qquad (2)$$

where:

EHEF: Eye-Head Efficiency Factor,

FPV: Time Spent on Full Gazes on the Drone Controller Display,

BVLOS: Time of Flight Beyond Visual Line of Sight (flight time with no drone visibility).
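The EHEF ratio can be sketched in the same way. This is an illustrative sketch only: the function name `ehef`, the input validation, and the example durations are assumptions and are not values from the study.

```python
def ehef(fpv_seconds: float, bvlos_seconds: float) -> float:
    """Eye-Head Efficiency Factor in percent: FPV / BVLOS * 100.

    fpv_seconds: total time of full gazes on the controller display
    bvlos_seconds: total flight time with no drone visibility
    """
    if bvlos_seconds <= 0:
        raise ValueError("BVLOS time must be positive")
    if fpv_seconds < 0:
        raise ValueError("FPV time cannot be negative")
    return 100.0 * fpv_seconds / bvlos_seconds


# Hypothetical durations, chosen only to illustrate the arithmetic:
# 30 s of full gazes on the display within 40 s without drone visibility.
fpv, bvlos = 30.0, 40.0
print(ehef(fpv, bvlos))  # 75.0
print(bvlos - fpv)       # 10.0 s spent moving the gaze between drone and display
```

The difference `bvlos - fpv` is the time in which the pilot sees neither the drone nor the display in full, so a smaller difference gives an EHEF closer to 100%.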

Table 2 presents the values of the VCEF and EHEF coefficients calculated for the best shots (in the assessment of the peer reviewers) made by individual pilots. The reviewers’ criteria for assessing the shots were a similar time and visual course compared to the model shot. Columns P1 and P4 (marked in green) show the results for the two best shots in the entire study group. The last column contains the VLOS flight data of pilot P6 for his third attempt, during which the drone collided with a tree crown after 35 s of flight (after the collision, the drone remained in the air). The analysis of the eye-tracking record superimposed on the video image showed that some of the fixation clusters of pilots P1 and P5 fell outside the selected area of the controller’s screen (AOI). This situation is marked with an exclamation mark in the table. Therefore, the values of the eye-head system efficiency (EHEF) and visual contact efficiency (VCEF) for these pilots may differ from the actual effectiveness of their perceptual systems as part of their screen observation methods (cf. Section 4.3, Anomalies in Eye-Tracking Results).

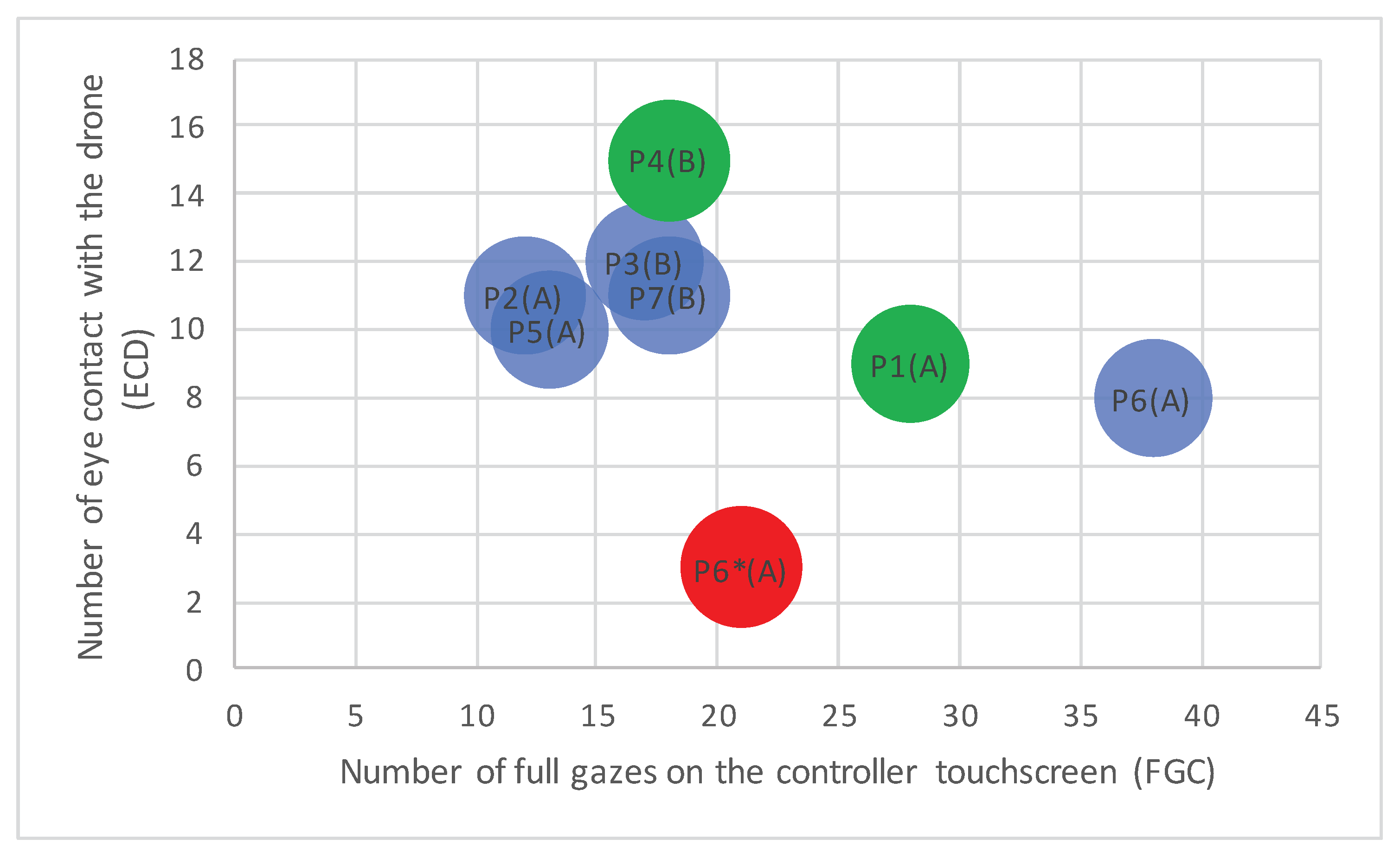

Figure 4 presents the relationship between the number of full gazes at the controller and the number of eye contacts with the drone. In the diagram, the blue bubbles denote each pilot’s best shot selected for analysis, the best shots in the whole group according to the peer reviewers are marked in green, and the collision flight is marked in red. The diagram shows that the best shots from the entire study group (green) were taken by the beginner pilot P4 (B), who had the most eye contacts with the drone and used intra-screen observation of the controller (IS), and by the advanced pilot P1 (A), who had about half as many eye contacts with the drone and used peri-screen observation of the controller (PS). It can be seen that, depending on the flight technique, shots may reach the same level of execution, but for the advanced pilot P1 (A) the flight was more burdensome. This is evident in the video material attached to the article and is probably due to the pilot’s use of peri-screen observation (PS). The diagram also shows that, for the professional drone pilots, the number of eye contacts with the controller screen in most cases exceeded the number of eye contacts with the drone; however, with too few eye contacts with the drone, the drone collided with the crown of the tree (red bubble P6*(A)). The shots made by pilots P1 (A) (green bubble), P4 (B), and P6 (A) (red bubble) are included in the video material in three separate files. The number appearing in the center of the screen indicates the start and end of the shot and its number.

Based on the results of the analysis of the best shots from Table 2, the average BVLOS flight time was calculated as 77.4% of the shot time for advanced pilots and 67.4% for beginner pilots. It follows that both beginner and advanced pilots did not look at the drone for more than half of the flight time (BVLOS flight time relative to the shot time is greater than 50%). The minimum flight time with no drone visibility was 67.9% of the shot time for advanced pilots and 58.2% for beginner pilots. The maximum was 88.3% for advanced pilots and 77.4% for beginners. The results show that, despite the rather narrow air corridor in which the drone could safely maneuver during the VLOS flight, the pilots spent more time observing the image from the drone than the aircraft itself.

The authors of the article noted that the total flight time with no drone visibility differs from the total time spent looking at the drone controller screen. It takes time to change the view angle from the drone to the controller. The smaller the difference between these times, the greater the efficiency of the eye-head system. It is worth noting that one of the best shots in the entire group was taken by the P4 pilot, for whom the efficiency of the eye-head system (EHEF) was 51.2%. Pilot P2 with an efficiency factor (EHEF) of 78.9% had problems reproducing the model shot during his flight. The skill of the pilot as a camera operator is not directly correlated with the EHEF factor. Perhaps there is a limit value for the work of the eye-head system, beyond which the work of the system becomes over-effective and overburdens the pilot’s perception. The maximum critical value of this factor should be determined in separate research.

It is worth paying attention to the differences in the way individual pilots held the controller. The advanced pilot P5 and the beginner pilot P4 held the controller high compared to the other pilots. Observing the image on a controller held high made it possible to reduce the amplitude of up-and-down head movements. It can be assumed that, thanks to this, the pilots consciously used peripheral vision to observe the direction of the drone’s movement. (Gregory [46] points out that the periphery of the retina allows the perception of movement and its direction, but the viewer is not able to determine the type of object; when the movement stops, the object becomes invisible. According to the researcher, this experience is closest to the most primal forms of perception. “The periphery of the retina is, therefore, an early warning device that rotates the eyes so that the object-recognizing part of the system is aimed at objects that may turn out to be friendly or hostile, at least not indifferent” (see [46], p. 106).)

The results of the video image analysis confirm the research hypothesis: both advanced and beginner pilots did not look at the drone for more than half of the shooting time during the VLOS flight.

4.3. Anomalies in Eye-Tracking Results

Pilot P1 made 28 full gazes (revisits) in the tested AOI (FGC), which together lasted 4 s of the 57 s shot. For 84.2% of the shot execution time (BVLOS flight) he was not looking at the drone, yet he spent only 7% of the shot time (FPV) actively focusing on the interface display (the highlighted AOI). The analysis of the eye-tracking recording superimposed on the video showed that some of this pilot’s fixations fell outside the highlighted AOI. The analysis of all fixations of the pilot shows that in some of them he was visually scanning the upper frame of the screen, focusing his sight slightly above it.

The advanced P5 pilot also looked beyond the screen while focusing on the drone controller. Such perceptive effects caused pilots P1 and P5 to have very short total times of full gazes calculated by the eye-tracker (FPV) in comparison to the time of the BVLOS flight. Hence, the values of eye-head system efficiency factors (EHEF) for advanced pilots P1 and P5 may diverge from the real efficiency of their perception systems within the executed methods of screen perception. The research results only initially point to possible relations between visual contact efficiency and eye-head efficiency and the quality and safety of executed shots.

4.4. Pilot Perception Techniques

The comparison of shots captured by individual operators (carried out by peer review) distinguished the shots taken by two pilots, the advanced pilot P1 and the beginner pilot P4, as the best and most closely resembling the model shot (similar timing and visual course). The advanced pilot P1, with many flight hours (around 160 h), was not looking at the drone for 84.2% of the shot execution time; during the shot he had 9 eye contacts with the drone and executed 28 full gazes on the drone controller display. Hence, his visual contact efficiency factor was low (VCEF = 24.3%). The beginner pilot P4, with very few flight hours (13 h), was not looking at the drone for 77.4% of the shot execution time. This pilot had 15 eye contacts with the drone and executed 18 full gazes in the tested AOI (FGC). Hence, his visual contact efficiency factor was quite high (VCEF = 45.5%). Despite his lack of experience in filming from an unmanned aerial vehicle, pilot P4 captured, like the advanced pilot P1, one of the best shots in the entire tested group. (Pilot P4 did not press ‘record’, hence the shot was not recorded by the drone. It was, however, analyzed by peer review, since the image with the interface, transmitted from the drone controller directly to an independent video recording monitor, was recorded.) What aided him was most probably his experience in using a video game console, similar to some degree to the drone controller used in the experiment (pilot P4 mentioned using a video game console in the talks after the experiment). There were also significant differences between pilots P1 and P4 in their techniques of perceiving the image on the drone controller display. The beginner pilot P4 focused his vision in the middle of the controller display, which allowed him to see the whole frame, including its periphery, on the 5.5” screen. His saccades (rapid, involuntary movements of the eyes between fixation points) appeared mainly on the vertical axis. The advanced pilot P1 quickly and frequently scanned the upper frame of the screen, focusing his vision slightly above the frame, and made many horizontal saccades. In both cases, the pilots were probably effectively using their peripheral and contour vision, described in the theoretical part of the study, but fixated their sight in different spots.

The perception technique of the beginner pilot P4 may be called intra-screen observation (IS), and the technique of the advanced pilot P1 peri-screen observation (PS). Intra-screen observation (IS) was also observed in the advanced pilot P2 and the beginner pilot P7. Compared to the rest of the group, the flight efficiency factors were high in pilot P2’s performance (EHEF = 78.9%, VCEF = 47.8%). However, according to the peer reviewers, the shots executed by these pilots were not the best.

The intra-screen observation of pilot P4 makes it possible to combine contour vision with light and shade vision (discussed in Section 2.3) more effectively than the scanning perception of pilot P1, since the fixation points fall within a limited area of the screen. Such intra-screen observation (IS) is probably a smaller burden for the pilot’s perception systems than peri-screen observation (PS). The advanced pilot P1 reported problems with shot execution, and his facial expressions showed signs of perceptual difficulty. It is worth recalling that the shots of both pilots P1 and P4 were assessed as the closest to the model shot. It should also be mentioned that the thumb-only grip of the control rod, indicated in the surveys as preferred by pilot P1, differed from the grip preferred by the beginner pilot P4, who held the rod between the thumb and index finger.

4.5. Risky Drone Filming during VLOS Flight of the Pilot P6 (EHEF = 94.3%; VCEF = 12.5%)

Collision situations appeared when the BVLOS flight time (BVLOS Time) exceeded 90% of the shot execution time. During his second attempt, the advanced pilot P6 (170 flying hours) did not look at the drone at any point during the execution of the shot. This led to a highly dangerous situation in the 35th second of the shot execution (18th minute of the flight): too wide a left arc almost caused the drone to collide with a building, which suddenly appeared on the controller display at a distance of around 2 m from the drone. The pilot managed to bring the aircraft under control, but it came close to a collision. During the following, third attempt (analyzed shot AS3, last column of Table 2), pilot P6 gazed at the drone three times (ECD = 3). He was not observing the drone for 94% of the shot execution time (EHEF = 94.3%, VCEF = 12.5%). In the 30th second of the third attempt (AS3), the drone got caught in some tree leaves as a result of performing too wide a left arc, similar to the one in the second attempt. The drone flew through the leaves and remained airborne. In the fourth attempt (AS4), the pilot safely executed a shot, which turned out to be the most similar to the model shot. The BVLOS flight time (BVLOS Time) decreased from 94.3% to 88.3%, and the pilot increased the number of eye contacts with the drone (ECD) from three to eight. The analysis of pilot P6’s best shot (AS4) showed that the visual contact efficiency factor (VCEF) was as low as 17.4%: during the execution of this shot, the pilot had 8 eye contacts with the drone and made 38 full gazes on the drone controller display. This shows that the pilot preferred to navigate the drone using the interface and limited his looking at the drone in the airspace. Compared to the other pilots, pilot P6 compensated for the limited number of eye contacts with the drone with an accurate analysis of the drone video. However, three eye contacts with the drone in the first thirty seconds of the third shot (AS3) were not enough to safely navigate the drone among the buildings.

4.6. General Finding

The experimental conditions of the VLOS flight required the pilots to constantly assess the distance of the drone from the buildings and other objects being filmed. All pilots participating in the study spent more than half of the shot time without looking at the drone (flight time in the BVLOS mode > 50%). The most effective flights were those in which the share of flight time without drone visibility ranged between 70% and 80%. It follows that during the execution of complicated drone shots close to the filmed objects, pilots spend most of the time observing the image from the drone visible on the controller and only about 20–30% of the time observing the drone in the airspace. In such a flight model (70% BVLOS and 30% VLOS), pilots with practical television experience can perform a complicated shot of closely filmed objects in a relatively safe manner.
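The observations reported in this study about the share of BVLOS time can be encoded as a rough lookup. The sketch below is an assumption-laden illustration: the function name `bvlos_band` and the band labels are invented here, and the boundaries only restate what was observed in this small sample; they are not validated safety thresholds.

```python
def bvlos_band(share_percent: float) -> str:
    """Map the share of shot time without drone visibility to the
    observation reported in this study (illustrative only)."""
    if not 0.0 <= share_percent <= 100.0:
        raise ValueError("share must be between 0 and 100 percent")
    if share_percent > 90.0:
        # Above 90%, collision situations occurred (pilot P6).
        return "collision situations observed"
    if 70.0 <= share_percent <= 80.0:
        # The most effective flights fell in this range.
        return "most effective range"
    return "no specific finding"


print(bvlos_band(94.3))  # P6, third attempt (AS3)
print(bvlos_band(77.4))  # P4, best shot
print(bvlos_band(88.3))  # P6, fourth attempt (AS4)
```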

The shots of two pilots (P1 and P4) were indicated by the peer reviewers as the two best shots in the entire test group. The execution of the shot by the beginner pilot P4, who nevertheless had experience in using a video game console, was more effective and thus safer (VCEF = 45.5%). The shot strategy of the advanced pilot P1 (VCEF = 24.3%) also resulted in a very good shot, but it was less effective because it burdened the pilot’s perception more.

However, it should be noted that all pilots participating in the experiment were professional camera operators, had undergone appropriate training for VLOS flights, and had practice in taking shots for television. The experiment also showed that when the time pilots spend observing the image on the drone controller exceeds 90% of the shot time, collision situations can occur (P6). Evaluating the distance of the drone from the filmed objects based only on the image from the drone camera turned out to be insufficient in the designed VLOS flight situation. The double zoom of the drone’s camera, which allowed for more interesting shots from the operator’s point of view, made assessing the distance from the camera image even more difficult.

In our experiment, the pilots repeated a model shot that required complicated drone maneuvers close to the subjects being filmed. Thanks to this, the conclusions from the study can be representative of shots taken at low altitudes (up to about 30 m) near the filmed objects during the VLOS flight. A limitation of the study is that the results do not apply to televised VLOS operations at large distances from terrain obstacles, as well as sideways or backward flights, where pilots cannot use the drone camera image to assess the distance to potential terrain obstacles. The results of the study also do not apply to situations in which the drone pilot (during the flight with VLOS visibility of the drone) has the support of the drone observer (EVLOS—Extended Visual Line of Sight). In such situations, the critical values of flight coefficients may differ from those described in our experiment. Nevertheless, in many TV productions using a drone in VLOS flight, the values of the coefficients can have a significant impact on the safety, efficiency, and aesthetics of aerial shots.

5. Discussion

Any considerations on the safety of drone flights in the UK, the European Union, and the United States of America are related to the legal conditions systematized in [13,14]. The authors of [13] also classified the types of UAV flight operations, i.e., VLOS, EVLOS, and BVLOS, and pointed out that drone flight operations require a direct connection between the UAS and the remote pilot. Moreover, the remote pilot is responsible for the safety of all operations. In our paper, we agree that, apart from the quality of the television shot, safety plays a key role during flight operations. The numbers of gazes at the drone and at the controller are the main components of the VCEF coefficient proposed in our paper. The VCEF defines the quality and safety of the shots. Our research on VLOS coefficients can contribute to increasing the safety of flights and the quality of shots taken within U-Space [15].

The article [8] discusses the challenges of filming with drones. Indeed, UAVs, originally used primarily by the military, have introduced disruptive innovation in broadcasting information, entertainment, sport, etc. However, their use involves many challenges, such as navigation in three-dimensional space, whereas a typical TV camera operator has so far operated in two-dimensional space. The article discusses many related problems, e.g., covering the filming area, which is directly related to the additional degree of freedom; determining the flight trajectory [5,9,52]; limitations of battery capacity; the weight of the UAV; and transmitting high-resolution images to the ground data repository. Moreover, a pilot who intends to fly the drone straight ahead and simultaneously turn the drone with the camera about the vertical axis (yaw) must compensate for the movement of the rudder knob with the second (aileron) knob. Automatic film trajectories of the drone flight may help the pilot execute shots that require framing the image, for example, by rotating the drone about the vertical axis [10,53]. What our article has in common with the issues discussed above is, above all, the operator’s determination of the correct flight trajectory in real time, and thus the analysis of his behavior in the context of safety and the quality of the shot.

Our study may support the plausibility of monitoring flight coefficients that depend on the pilot’s perception, to keep him informed of the limits of his perceptual capabilities. At the beginning of our paper, we referred to research by Hoepf et al. [39] suggesting the introduction of an automated system to monitor physiological changes in drone pilots. For the coefficients described here, such a system should monitor the pilot’s eye and head movements. At the same time, monitoring eye movement enables eye control. Kumar et al. [44] point to the high efficiency of drone camera control through sight with the use of opaque virtual reality goggles, which enforce a BVLOS flight. (A flight with VR goggles, during which the pilot does not see the drone, may be considered a VLOS flight if an observer is present who informs the pilot of possible collision courses and other threats.) Eye control in virtual reality should concern, first of all, such camera parameters as focus, aperture, shutter, white balance, and zoom. Eye control of other functions of the drone should concern only those parameters that do not have a direct and immediate impact on the flight trajectory of the drone. It is easy to imagine a situation in which an operator is executing a shot with the sun behind the drone, which causes the operator to be momentarily blinded. For that reason, the controller interface with built-in control rods should be retained. Optionally, it could make the sticks respond to overloading of the drone and transfer the drone engine sound, providing the pilot with information about the flight dynamics of the unmanned aerial vehicle. The longer the focal length of the drone camera (the greater the zoom), the gentler the sticks’ response characteristics should be.

Tvaryanas [42], who analyzed the operation of the graphical interface of a reconnaissance version of the Predator military drone, drew attention to the burden placed on the pilot’s perception by the graphic layout of information displayed on the screen. Imperfections of this type are also visible in a popular drone flight application (DJI GO 4). For instance, observing the drone’s speed indicator during a solo VLOS flight poses an additional difficulty for an independent pilot, since he needs to simultaneously observe the image from the drone and the aircraft itself. On the one hand, it is fairly easy to imagine better graphic solutions (e.g., user-modifiable ones); on the other hand, many operators have become accustomed to the present solutions in the user interface. A new technology that could improve drone-filming possibilities is, in our opinion, an adequately designed augmented reality system that takes into account verbal communication, engine noise, and visualization of the interface elements in the operator’s line of sight.

Professional television drone pilots can independently execute complicated outdoor daytime shots in a narrow maneuver corridor during a VLOS flight. This is difficult, as the flight is partially carried out among terrain obstacles. Over the entire flight trajectory, the pilots are not able to keep their gaze on the drone for the majority of the shot execution time. The experiment showed that if a pilot focused on the drone controller display does not look at the aircraft for over 90% of the shot execution time, the unmanned aerial vehicle may collide with terrain obstacles and the risk of an accident increases. The best shots (BS, closest to the model shot) were executed when the pilots made on average over a dozen eye contacts with the drone and were not observing the aircraft for close to 80% of the shot execution time, focusing their attention on the drone controller display. The biggest difficulties related to the execution of the task, reported in the surveys, concerned the necessity to film with a double zoom and the limited maneuver space.

Determining the maximum and minimum critical values of the VLOS flight factors (VCEF and EHEF) requires a separate study. The results of pilots P2 and P7 show that a high level of VLOS flight factors does not always go hand in hand with the quality of the executed shot, as assessed by the peer reviewers.

Within the conducted experiment, the best compromise between visual contact efficiency, eye-head system efficiency, and filming effectiveness is shown by the data collected during the flight of pilot P4: eye-head efficiency factor EHEF = 51.2%, visual contact efficiency factor VCEF = 45.5%, a low amplitude of up-down head movements, and effective intra-screen observation (IS), which makes it possible to combine contour vision with light and shade vision in a single act of perception. The beginner pilot P4, despite his lack of experience in flying a drone, executed (as assessed by the peer reviewers) one of the best shots in the entire tested group (timing and visual course closest to the model shot). The advanced pilot P1 executed another of the best shots in the entire group; however, his low visual contact efficiency factor (VCEF = 24.3%) introduced additional flight risk in a narrow maneuver corridor, which was related to the peri-screen observation (PS) used by this pilot.

Filming in VLOS flight by one person requires a great deal of commitment. In this type of situation, the pilot paradoxically loses sight of the drone many times in order to check the controller screen. In light of the conducted research, devoting half of the shooting time to drone observation seems to be a good compromise between the safety of shooting and the quality of shots taken at low altitudes among many terrain obstacles. More advanced pilots can spend less time observing the drone, but the time during which the pilot loses sight of the drone, even for very advanced pilots, should not exceed 80% of the shooting time.
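The two thresholds discussed above (about half of the shooting time as a compromise, and 80% of the shooting time as an upper bound on losing sight of the drone) can be illustrated with a minimal sketch. It assumes that eye-tracking data have already been segmented into intervals labeled as on-drone or off-drone. The names `GazeInterval`, `off_drone_share`, and `classify_share` are hypothetical, and the computed share is a plain time fraction, not the VCEF or EHEF formula used in the article.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class GazeInterval:
    """One labeled gaze segment (hypothetical structure, for illustration only)."""
    start_s: float   # segment start, seconds from the beginning of the shot
    end_s: float     # segment end, seconds from the beginning of the shot
    on_drone: bool   # True if the pilot was looking at the drone


def off_drone_share(intervals):
    """Return the fraction of shot time during which the drone was not observed."""
    total = sum(i.end_s - i.start_s for i in intervals)
    if total <= 0:
        raise ValueError("shot duration must be positive")
    off = sum(i.end_s - i.start_s for i in intervals if not i.on_drone)
    return off / total


def classify_share(share, compromise=0.5, limit=0.8):
    """Map an off-drone share to the bands suggested in the discussion.

    The default thresholds are taken from the text; treating them as hard
    cut-offs is an assumption of this sketch.
    """
    if share > limit:
        return "exceeds limit"
    if share > compromise:
        return "advanced pilots only"
    return "within compromise"


# Example: 40 s shot with 20 s spent looking at the controller display.
shot = [
    GazeInterval(0.0, 10.0, True),
    GazeInterval(10.0, 30.0, False),
    GazeInterval(30.0, 40.0, True),
]
print(classify_share(off_drone_share(shot)))
```

The bands are deliberately coarse; determining critical values for the flight factors themselves would, as noted earlier, require a separate study.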

The results can help, for example, to improve the efficiency of aerial photography taken from a drone for television, film, and other media, or serve training purposes (for example, in simulating such a flight). Due to the difficulties and danger of shooting at low altitudes under the VLOS flight regime, it seems justified to simulate the conditions of this flight in the safe space of augmented reality. In such a simulator, the flight coefficients, monitored in real time, can identify a critical moment in the pilot's perception. The system can react by immobilizing the drone or by informing the pilot with a control lamp that he has reached the limits of his stimulus integration capacity.
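The real-time monitoring idea can be sketched as a sliding-window check. This is only an illustration under stated assumptions: gaze samples arrive at a roughly uniform rate, each sample carries a flag saying whether the drone is in view, and the 80% figure from the discussion is reused as an alert threshold over a short window (the article states it for the whole shot). The class `GazeMonitor` and its parameters are hypothetical and do not describe an existing simulator.

```python
from collections import deque


class GazeMonitor:
    """Sliding-window monitor of the off-drone gaze share (illustrative sketch)."""

    def __init__(self, window_s=10.0, limit=0.8):
        self.window_s = window_s   # length of the sliding window, in seconds
        self.limit = limit         # alert when the off-drone share exceeds this
        self._samples = deque()    # (timestamp_s, on_drone) pairs, oldest first

    def update(self, t_s, on_drone):
        """Add one gaze sample and return True if an alert should be raised."""
        self._samples.append((t_s, on_drone))
        # Drop samples that have fallen out of the window.
        while self._samples[0][0] < t_s - self.window_s:
            self._samples.popleft()
        off = sum(1 for _, seen in self._samples if not seen)
        # With uniform sampling, the sample ratio approximates the time share.
        return off / len(self._samples) > self.limit


# Example: 1 Hz samples; the pilot stops looking at the drone after 5 s.
monitor = GazeMonitor(window_s=4.0, limit=0.8)
alerts = [monitor.update(float(t), t < 5) for t in range(10)]
print(alerts)
```

How the simulator reacts to an alert, whether by immobilizing the drone or by lighting a control lamp, is left outside this sketch.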

Further research would require the creation of appropriate virtual drone flight spaces representative of many real movie sets. When such spaces are designed so that the operators executing the shots are expected to reach specific coefficient values, the degree of difficulty of the shots can be related to the VLOS flight coefficients described in the article. VLOS flight factors can therefore help design suitable spaces for a VLOS flight simulator in virtual reality.

Another conclusion concerns the drone's flight assist functions. The current assisted flight functions often do not support low-altitude television shots. The assisted flight functions in the popular DJI GO 4 application used by more experienced drone operators in news television are, above all, Point of Interest, Active Track, Follow Me Mode, and Course Lock. None of these assisted flight modes could produce the footage taken during the experiment, as it was necessary to change the flight path in the horizontal/vertical plane while simultaneously rotating the drone. These are maneuvers that can be carried out successfully and creatively by properly trained television and film drone pilots.

6. Conclusions

Drones allow us to create shots that are more and more important in the narrative of the image, but using the full potential of aerial photography requires pilots to be highly competent in controlling the drone and executing shots. Media are a challenge to the abilities of their users, as van Dijk [48] aptly noted when defining media in the spirit of McLuhan: “Media and computers can be treated as extensions or even substitutes of human perception, cognition, and communication. They extend in time and space and reduce the consequences of limitations imposed on us by the body and mind” (see [48], p. 301). Drones used for filming, as mobile media of the present day, require pilots to combine two orders of observation that are important in a TV or film narrative, i.e., pragmatic vision focused on the assessment of distance and risk related to flight, and film vision aimed at recognizing the meanings in the image from the drone.

In our article, we have indicated the risks associated with VLOS flight filming, which result from the pilot's need for alternating supervision on two levels, i.e., film and air. We have narrowed down the research area to a VLOS operation carried out single-handedly by a television pilot. Our scientific contribution is a novel approach to the study and analysis of the drone operator's behavior. The results of the research presented in the article may help, among other things, to improve the effectiveness of aerial photos taken from a drone for television, film, and other media, as well as to simulate flight for training and research purposes. The factors we describe primarily affect the efficiency of drone filming and flight safety. Monitoring these factors can be important when building a VLOS virtual reality (VR) flight simulator model. A simulator based on VR goggles would be able to reliably reproduce VLOS flight conditions and monitor specific flight coefficients. The design of virtual spaces in such a simulator should take into account the potential values of flight coefficients for specific drone maneuvers. At the training level, monitoring of the relevant flight coefficients can signal to the pilot that he has reached the limits of his stimulus integration capacity and should modify his flight method. At the research level, the simulator will make it possible to optimize the process of alternating observation during filming in flight with drone visibility.

Society is on the threshold of a new era in which machines will no longer be separate, lifeless mechanisms but will be extensions of the human body. Drones equipped with appropriate controllers expand human observation capabilities, but at the same time require the use of many perceptual reserves. The enforced alternating observation through the medium of the drone allows humans to see from an avian perspective, but inadequate surveillance of the aircraft carries the risk of crashing it.