SlowFast Action Recognition Algorithm Based on Faster and More Accurate Detectors

Abstract

1. Introduction

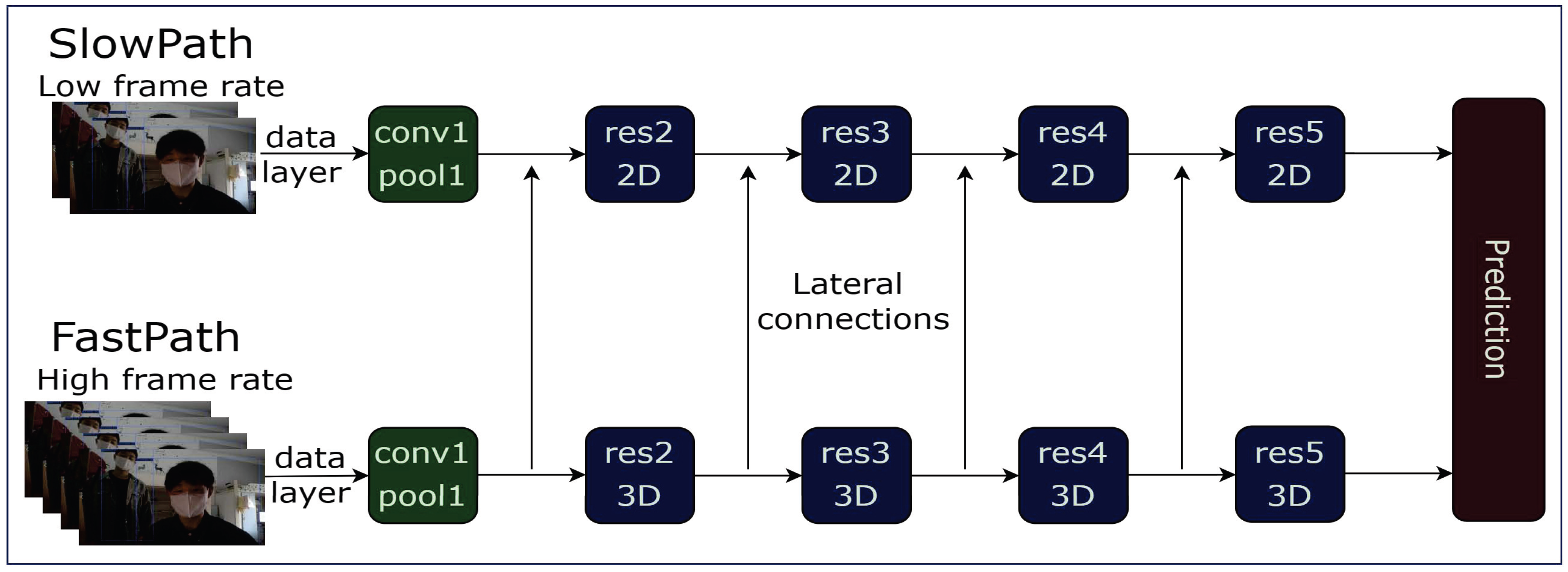

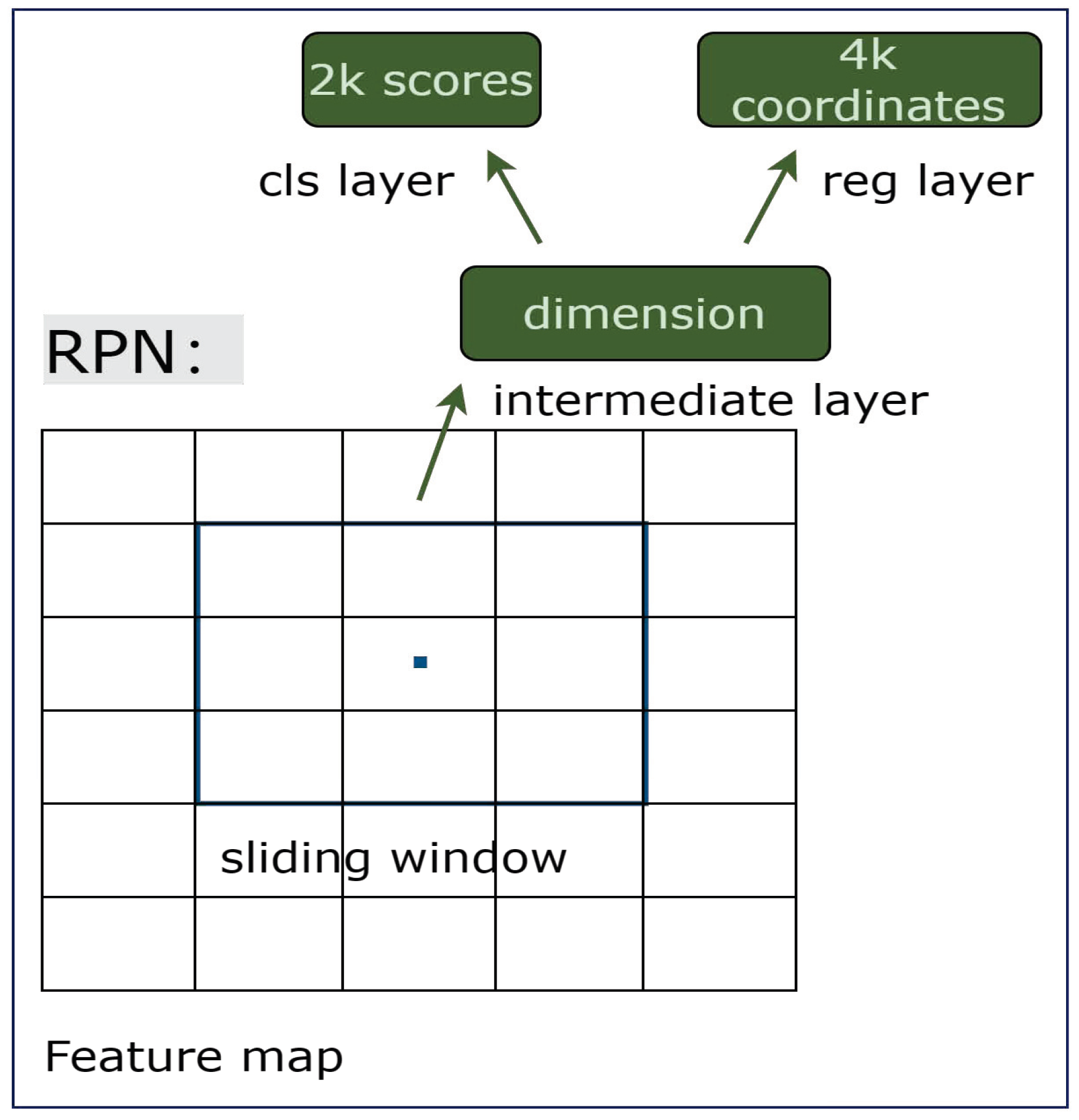

2. Related Works and Methods

3. Experiments

3.1. Datasets

3.2. Experiment Settings

3.3. Evaluation Metrics

3.4. Results and Analysis

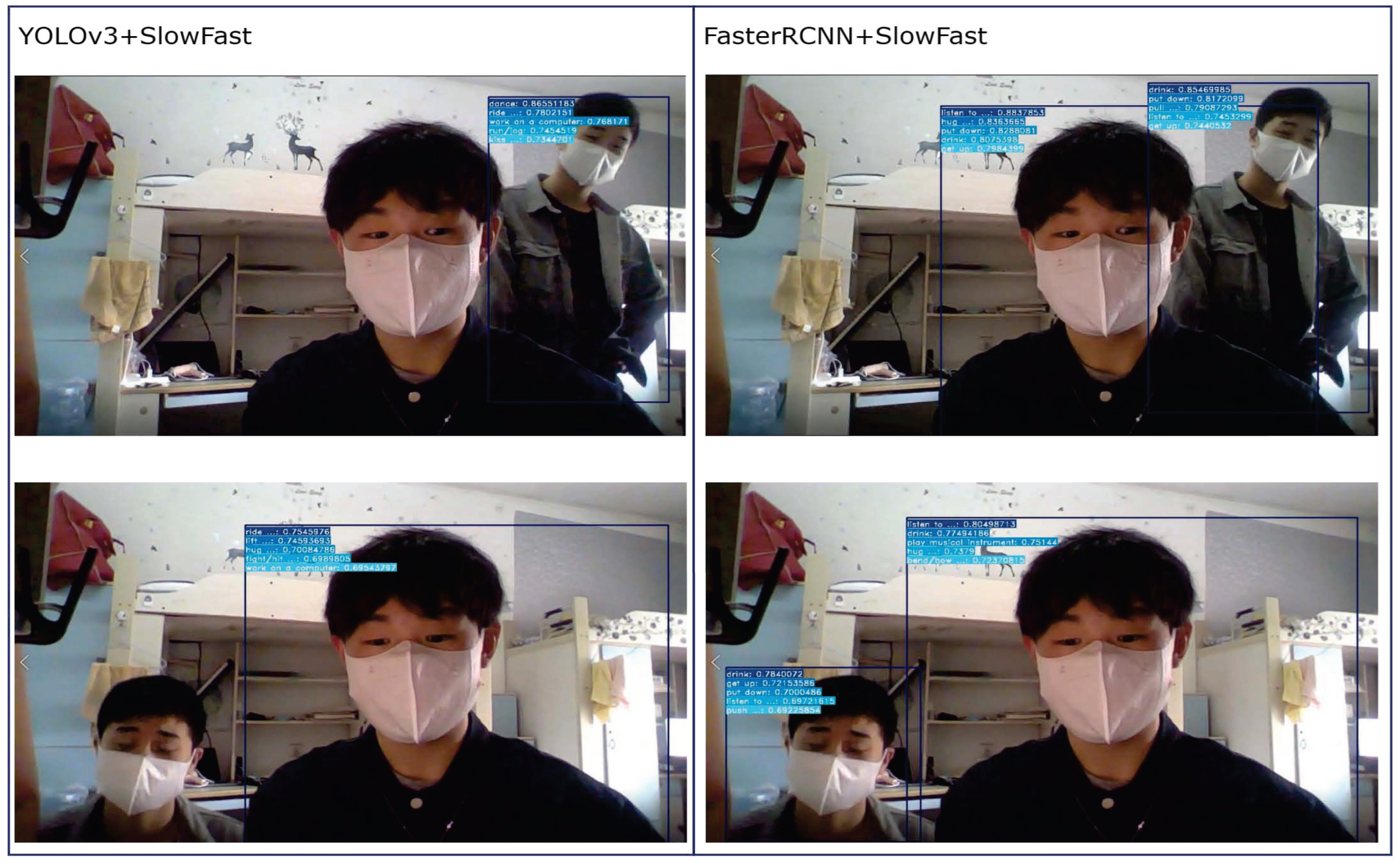

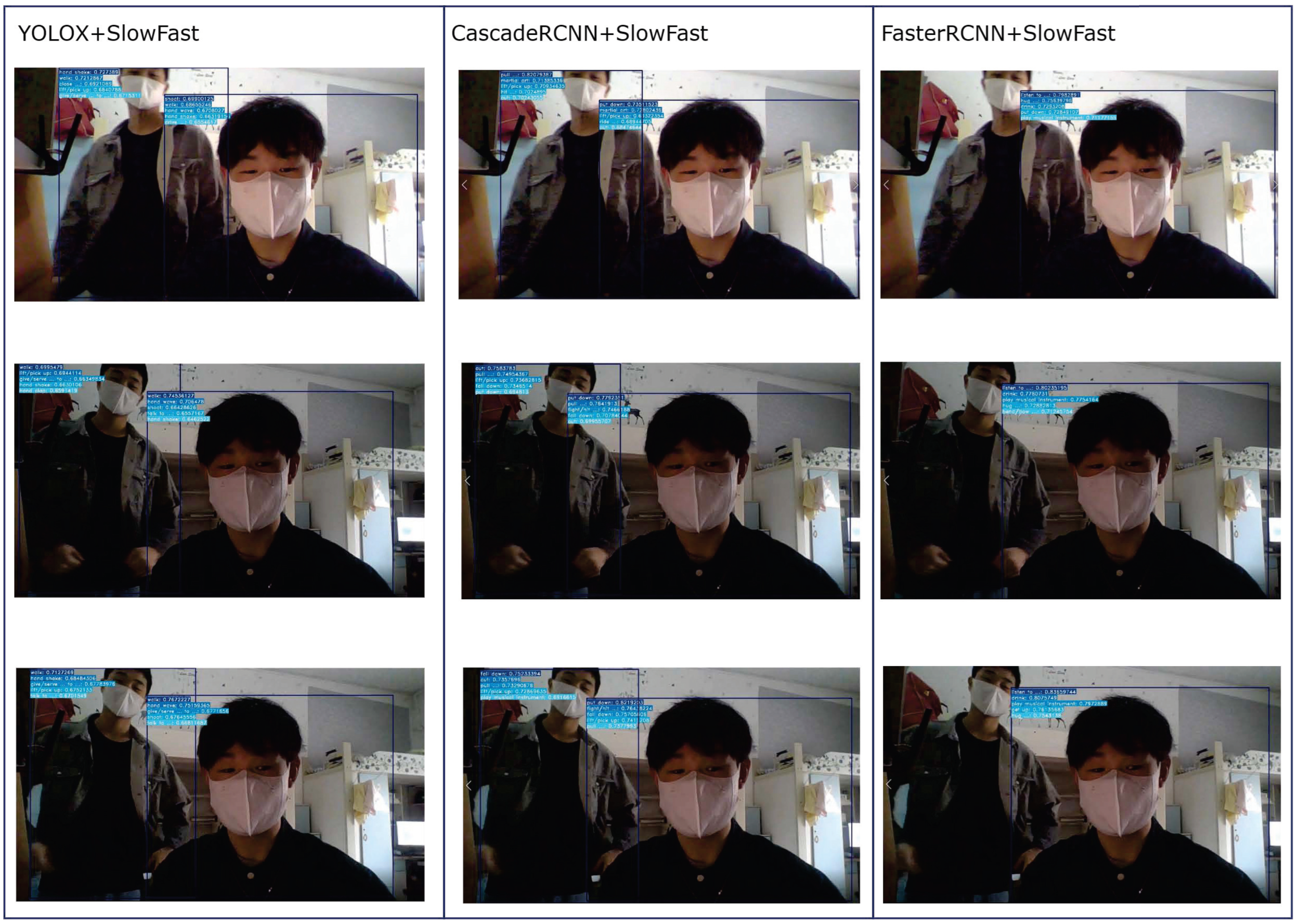

3.5. Action Recognition Effect

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Conflicts of Interest


| Method | Backbone | Evaluation Time | AP0.50–0.95 | AP50 | AP75 | APS | APM | APL |
|---|---|---|---|---|---|---|---|---|
| FasterRCNN | ResNet50 | 2.51 s | 0.514 | 0.810 | 0.564 | 0.102 | 0.312 | 0.601 |
| YOLOv3 (Ours) | DarkNet53 | 1.92 s | 0.486 | 0.790 | 0.537 | 0.123 | 0.282 | 0.586 |

| Method | AP50 | Score-Threshold | Precision | Recall | LAMR | F1-Score |
|---|---|---|---|---|---|---|
| FasterRCNN | 0.8114 | 0.5 | 0.4702 | 0.9172 | 0.41 | 0.62 |
| YOLOv3 (Ours) | 0.7789 | 0.5 | 0.7614 | 0.7050 | 0.46 | 0.73 |
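The F1-Score column in the table above can be cross-checked from the Precision and Recall columns, since F1 is the harmonic mean of the two. The following is a minimal sketch of that check; the function name `f1_score` and the dictionary layout are illustrative assumptions, not part of the original work.

```python
def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall; returns 0.0 when both are zero."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# Precision and recall copied from the table above (score threshold 0.5).
rows = {
    "FasterRCNN": (0.4702, 0.9172),
    "YOLOv3 (Ours)": (0.7614, 0.7050),
}

# Round to two decimals to match the precision of the reported F1-Score column.
f1_by_method = {name: round(f1_score(p, r), 2) for name, (p, r) in rows.items()}
print(f1_by_method)  # {'FasterRCNN': 0.62, 'YOLOv3 (Ours)': 0.73}
```

Both values agree with the reported F1-Score column. The check also shows why YOLOv3 scores higher on F1 despite a lower AP50: at this threshold its precision and recall are far more balanced.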

| Method | AP0.50–0.95 | AP50 | AP75 | APS | APM | APL |
|---|---|---|---|---|---|---|
| IOU | 0.449 | 0.694 | 0.493 | 0.145 | 0.287 | 0.510 |
| CIOU | 0.435 | 0.694 | 0.471 | 0.127 | 0.269 | 0.500 |
| EIOU | 0.454 | 0.709 | 0.495 | 0.176 | 0.297 | 0.515 |
| LIOU(ours) | 0.459 | 0.717 | 0.510 | 0.174 | 0.315 | 0.517 |
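The regression losses compared in the table above all build on the plain intersection over union (IoU) between a predicted and a ground-truth box. As a reference point, here is a minimal sketch of that base quantity for axis-aligned boxes in `(x1, y1, x2, y2)` form. The box format and the function name `iou` are assumptions made for illustration, and the LIOU formulation is not reproduced here because this section does not state it.

```python
from typing import Tuple

# Assumed box format: (x1, y1, x2, y2) with x1 <= x2 and y1 <= y2.
Box = Tuple[float, float, float, float]


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    # Corners of the overlap rectangle (may be empty).
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    # Clamp to zero so disjoint boxes contribute no intersection area.
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


# Two 2x2 boxes overlapping in a 1x1 square: intersection 1, union 7.
print(iou((0, 0, 2, 2), (1, 1, 3, 3)))
```

Plain IoU is zero for every pair of disjoint boxes regardless of how far apart they are, which is one motivation for variants that add further penalty terms.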

| Method | AP0.50–0.95 | AP50 | AP75 | APS | APM | APL |
|---|---|---|---|---|---|---|
| FasterRCNN | 0.348 | 0.627 | 0.348 | 0.182 | 0.428 | 0.518 |
| YOLOv3 (Ours) | 0.233 | 0.512 | 0.182 | 0.079 | 0.260 | 0.429 |
| YOLOX (Ours) | 0.353 | 0.654 | 0.340 | 0.203 | 0.435 | 0.493 |
| CascadeRCNN (Ours) | 0.379 | 0.628 | 0.388 | 0.182 | 0.468 | 0.577 |

| Method | Human Detection Time | Spatio-Temporal Action Detection Time |
|---|---|---|
| FasterRCNN + SlowFast | 17 s | 12.5 s |
| YOLOv3 + SlowFast | 6.5 s | 5.7 s |
| YOLOX + SlowFast | 6.6 s | 7.9 s |
| CascadeRCNN + SlowFast | 16.6 s | 12.4 s |
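To make the timing table above easier to read, the following sketch converts the reported times into speedup factors relative to the FasterRCNN + SlowFast row. The choice of baseline and the helper name `speedups` are assumptions made for illustration. The times are taken as reported, and the measurement conditions are not stated in this section.

```python
from typing import Dict, Tuple

# (human detection time, spatio-temporal action detection time) in seconds,
# copied from the table above.
timings: Dict[str, Tuple[float, float]] = {
    "FasterRCNN + SlowFast": (17.0, 12.5),
    "YOLOv3 + SlowFast": (6.5, 5.7),
    "YOLOX + SlowFast": (6.6, 7.9),
    "CascadeRCNN + SlowFast": (16.6, 12.4),
}


def speedups(
    table: Dict[str, Tuple[float, float]], baseline: str
) -> Dict[str, Tuple[float, float]]:
    """Return baseline_time / method_time for both columns (values > 1 are faster)."""
    base_det, base_act = table[baseline]
    return {
        name: (base_det / det, base_act / act)
        for name, (det, act) in table.items()
    }


result = speedups(timings, "FasterRCNN + SlowFast")
for name, (det, act) in result.items():
    print(f"{name}: detection x{det:.2f}, action detection x{act:.2f}")
```

On these numbers, the YOLOv3 and YOLOX pipelines run human detection roughly 2.6 times faster than the baseline, while CascadeRCNN is close to parity.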

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Zeng, W.; Huang, J.; Zhang, W.; Nan, H.; Fu, Z. SlowFast Action Recognition Algorithm Based on Faster and More Accurate Detectors. Electronics 2022, 11, 3770. https://doi.org/10.3390/electronics11223770