Semantic Conceptual Framework for Environmental Monitoring and Surveillance—A Case Study on Forest Fire Video Monitoring and Surveillance

Abstract

1. Introduction

- assess the state of the environment at the moment of planning;

- assess the desired goals or desired state; and

- create an optimal plan (or steps) for reaching the desired state from the current state.

- Planning sensing and communication technology—by determining the values of the system attributes (e.g., scale, precision, area of coverage and feature of interest) required by the application domain, and selecting only the technologies that can offer the needed features.

- Designing the communication architecture of the monitoring software—by analyzing communication patterns one can group paths of communication, eliminate redundant communication and design more efficient data communication architectures.

- Designing monitoring and surveillance system functional requirements—choosing the functionalities from the taxonomy items of the framework can lead to unambiguous descriptions of the functional requirements.

- Designing testing procedures—validation criteria for functional and nonfunctional requirements (e.g., the covered area and precision) can be extracted from the formal description, which can lead to formal validation procedures.

- Implementing procedures for spatio-temporal retrieval of surveillance data—the spatial and temporal constraints of the observation data are built into the framework and can be easily queried.

- Designing interoperability protocols for software that uses data from the observation system—modeling, forecasting or classification software that consumes real-time observation data can receive it together with all the metadata known to the system.
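The first item above, selecting only the technologies whose attribute values match the application domain, can be sketched as a simple filter. This is a minimal illustration under stated assumptions, not the framework's own procedure: the attribute names (`precision_m`, `coverage_km2`, `features`), their units and all candidate values are hypothetical, and the scale attribute is omitted for brevity.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Technology:
    """A candidate sensing technology and the attribute values it can offer (hypothetical)."""
    name: str
    precision_m: float        # smallest resolvable detail in metres (lower is better)
    coverage_km2: float       # area a single deployment can observe
    features: frozenset[str]  # features of interest it can observe


@dataclass(frozen=True)
class Requirement:
    """Attribute values demanded by the application domain."""
    max_precision_m: float
    min_coverage_km2: float
    features: frozenset[str]


def select_technologies(candidates: list[Technology], req: Requirement) -> list[Technology]:
    """Keep only the technologies that satisfy every required attribute value."""
    return [
        t for t in candidates
        if t.precision_m <= req.max_precision_m
        and t.coverage_km2 >= req.min_coverage_km2
        and req.features <= t.features  # every required feature is observable
    ]


# Illustrative values only, not measurements of real products.
candidates = [
    Technology("PTZ camera network", 10.0, 500.0, frozenset({"smoke", "flame"})),
    Technology("Wireless temperature sensors", 1.0, 1.0, frozenset({"temperature", "humidity"})),
    Technology("Satellite imagery", 300.0, 1_000_000.0, frozenset({"smoke", "burned area"})),
]
req = Requirement(max_precision_m=50.0, min_coverage_km2=100.0, features=frozenset({"smoke"}))
selected = [t.name for t in select_technologies(candidates, req)]
print(selected)  # ['PTZ camera network']
```

Relaxing the precision requirement to 500 m would also admit the satellite option, which shows how the formal attribute values, rather than informal judgement, drive the choice.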

Related Work

2. Conceptual Framework of an Environment Observation System

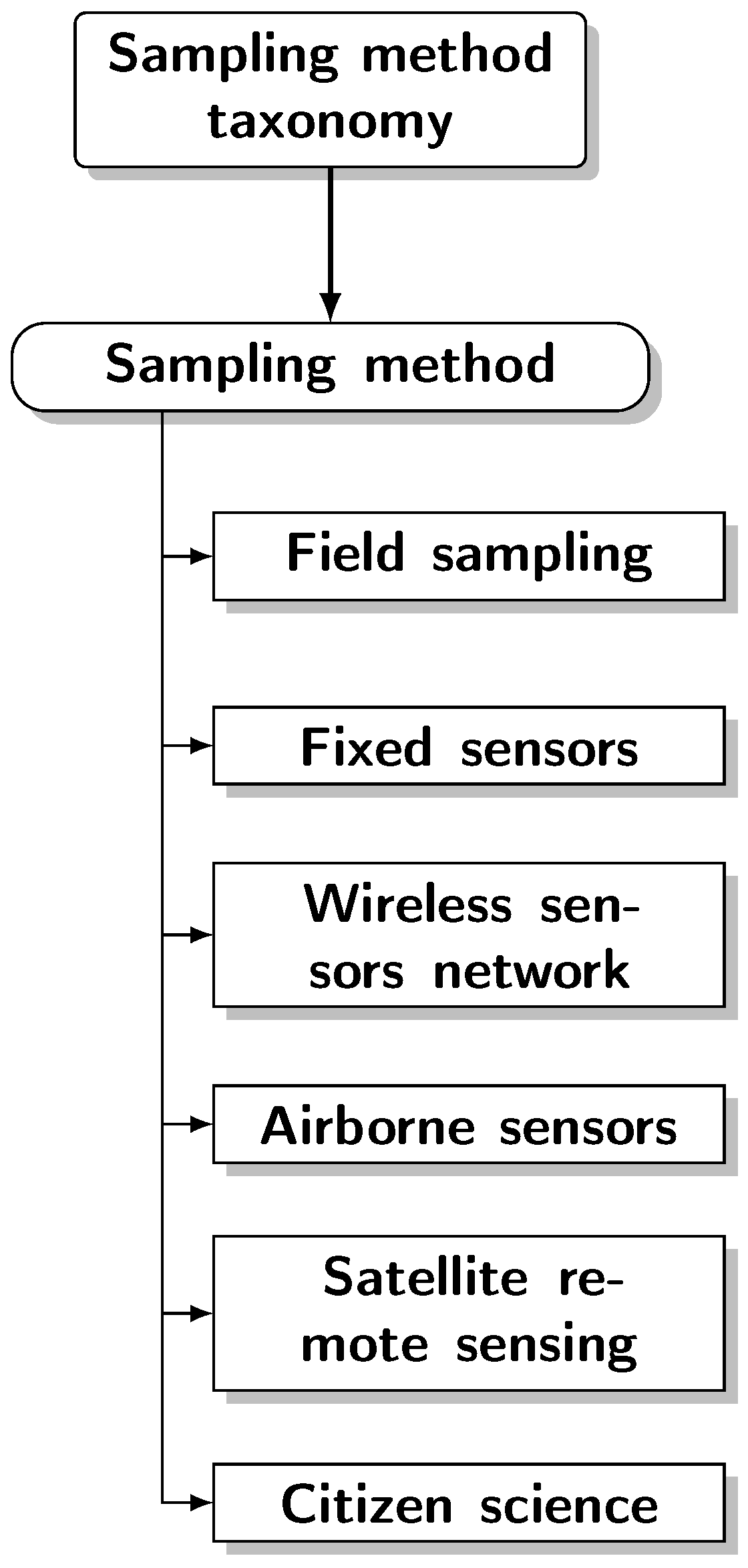

3. Observation System Taxonomies

- a sampling method taxonomy;

- a value format taxonomy; and

- a functionality query taxonomy.

3.1. Sampling Method Taxonomy

- Field and manual data collection—a traditional, non-automated method that is still sometimes used;

- Fixed sensors—these sensors have a fixed location and can rely on existing infrastructure where it is available, which gives them access to more reliable power and network connections;

- Wireless sensors, powered by batteries or by solar or wind energy harvesting, which measure selected aspects of the environment;

- Airborne sensors mounted on aerial vehicles;

- Satellite-based remote sensing; and

- Citizen science, community sensing, crowdsourcing and social networks.

3.1.1. Field Manual Data Collection

3.1.2. Fixed Sensors

3.1.3. Wireless Sensor Network

3.1.4. Airborne Sensors

3.1.5. Satellite Remote Sensing

3.1.6. Citizen Science

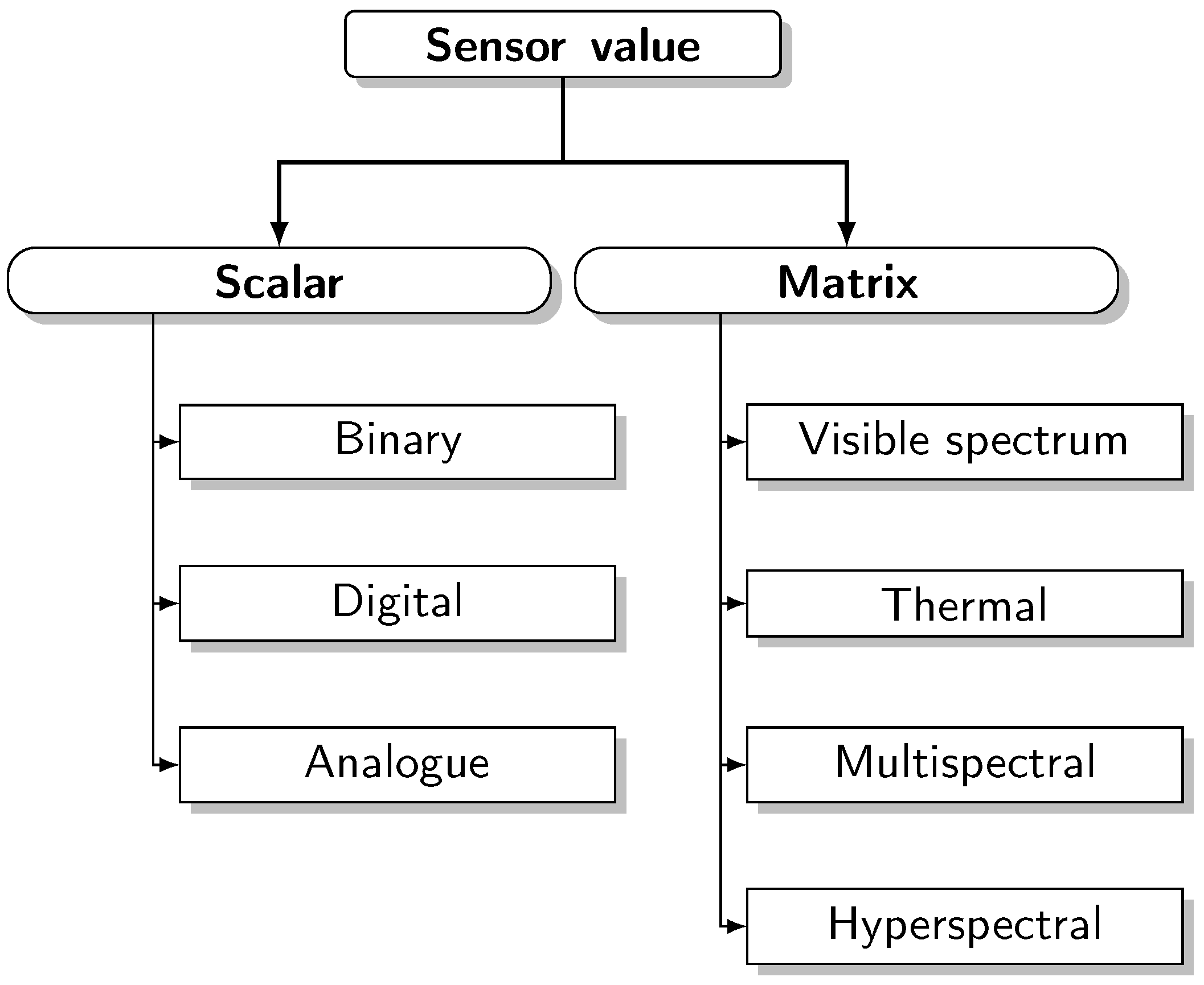

3.2. Sensor Value Taxonomy

- scalar sensors;

- visible spectrum cameras;

- thermal or thermographic cameras;

- bispectral cameras;

- hyperspectral cameras; and

- multispectral cameras.
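The value format matters for storage and communication design: a scalar sensor yields one number per reading, whereas a camera yields an image of width × height pixels with one value per spectral band. The sketch below counts values per reading for each item in the list above. The resolutions and band counts are hypothetical examples, and modelling a bispectral camera as one visible image paired with one thermal image is an assumption.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class ScalarFormat:
    unit: str  # e.g. "degC" for an air temperature sensor

    def values_per_reading(self) -> int:
        return 1  # a scalar sensor reports a single number


@dataclass(frozen=True)
class ImageFormat:
    width: int
    height: int
    bands: int  # spectral channels per pixel

    def values_per_reading(self) -> int:
        return self.width * self.height * self.bands


@dataclass(frozen=True)
class BispectralFormat:
    visible: ImageFormat  # assumed: one visible-spectrum image ...
    thermal: ImageFormat  # ... paired with one thermal image

    def values_per_reading(self) -> int:
        return self.visible.values_per_reading() + self.thermal.values_per_reading()


# Hypothetical resolutions and band counts; real devices vary widely.
rgb = ImageFormat(1920, 1080, 3)
thermal = ImageFormat(640, 480, 1)
formats = {
    "scalar sensor": ScalarFormat("degC"),
    "visible spectrum camera": rgb,
    "thermal camera": thermal,
    "bispectral camera": BispectralFormat(rgb, thermal),
    "multispectral camera": ImageFormat(1280, 960, 5),
    "hyperspectral camera": ImageFormat(640, 512, 200),
}
for name, fmt in formats.items():
    print(f"{name}: {fmt.values_per_reading():,} values per reading")
```

Even with these rough numbers, one hyperspectral frame carries tens of millions of values compared with a single value from a scalar sensor, which is why the value format feeds directly into the communication architecture.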

3.2.1. Scalar Sensors

3.2.2. Cameras

3.2.3. PTZ Cameras

3.2.4. Airborne Cameras

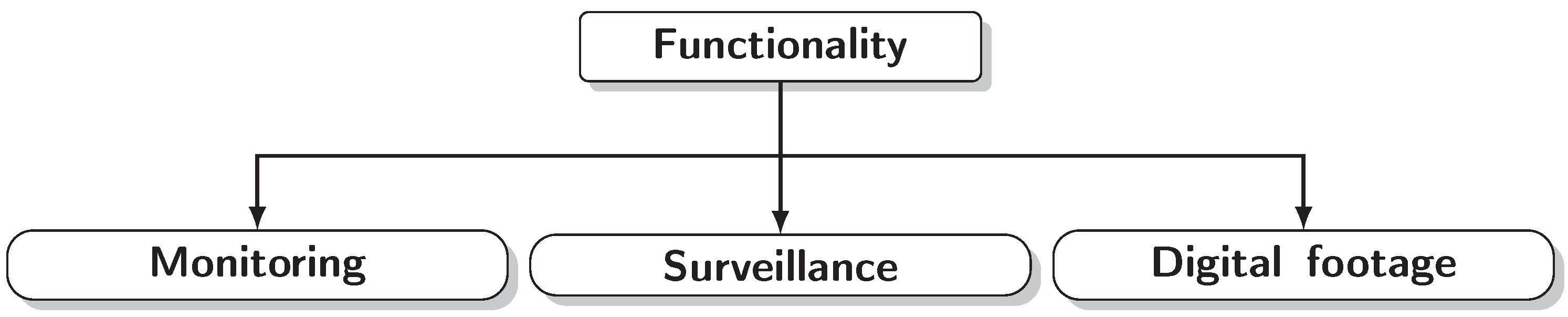

3.3. Functionality Taxonomy

- Monitoring;

- Surveillance; and

- Digital footage and reconstruction.
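Digital footage and reconstruction depends on retrieving archived observations by place and time. A minimal sketch of such a spatio-temporal filter over an in-memory archive follows. The `Observation` fields, the storage path format and the coordinates are hypothetical, each observation is reduced to a single point (filtering by a camera's field-of-view footprint would need polygon intersection), and the bounding box does not handle boxes that cross the antimeridian.

```python
from __future__ import annotations

from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class Observation:
    sensor_id: str
    lat: float       # degrees, WGS84
    lon: float
    time: datetime   # timezone-aware acquisition time
    uri: str         # where the archived value or image is stored (hypothetical)


@dataclass(frozen=True)
class BoundingBox:
    min_lat: float
    min_lon: float
    max_lat: float
    max_lon: float

    def contains(self, lat: float, lon: float) -> bool:
        return self.min_lat <= lat <= self.max_lat and self.min_lon <= lon <= self.max_lon


def retrieve(archive: list[Observation], box: BoundingBox,
             start: datetime, end: datetime) -> list[Observation]:
    """Observations inside `box` with start <= time < end, oldest first."""
    hits = [o for o in archive if box.contains(o.lat, o.lon) and start <= o.time < end]
    return sorted(hits, key=lambda o: o.time)


def at(hour: int, minute: int = 0) -> datetime:
    return datetime(2021, 7, 1, hour, minute, tzinfo=timezone.utc)


archive = [
    Observation("cam-3", 43.30, 16.65, at(12, 30), "archive/cam-3/0042.jpg"),
    Observation("cam-1", 43.51, 16.44, at(12, 0), "archive/cam-1/0017.jpg"),
    Observation("cam-2", 45.81, 15.98, at(12, 5), "archive/cam-2/0008.jpg"),  # outside the box
    Observation("cam-1", 43.51, 16.44, at(11, 0), "archive/cam-1/0016.jpg"),  # before the window
]
box = BoundingBox(min_lat=43.0, min_lon=16.0, max_lat=44.0, max_lon=17.0)
result = retrieve(archive, box, at(11, 30), at(13, 0))
print([o.uri for o in result])  # ['archive/cam-1/0017.jpg', 'archive/cam-3/0042.jpg']
```

A linear scan is enough to show the semantics; an operational archive would more likely push the same constraints down to a spatially indexed database.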

3.3.1. Environmental Monitoring

3.3.2. Event Surveillance

3.3.3. Digital Footage and Reconstruction

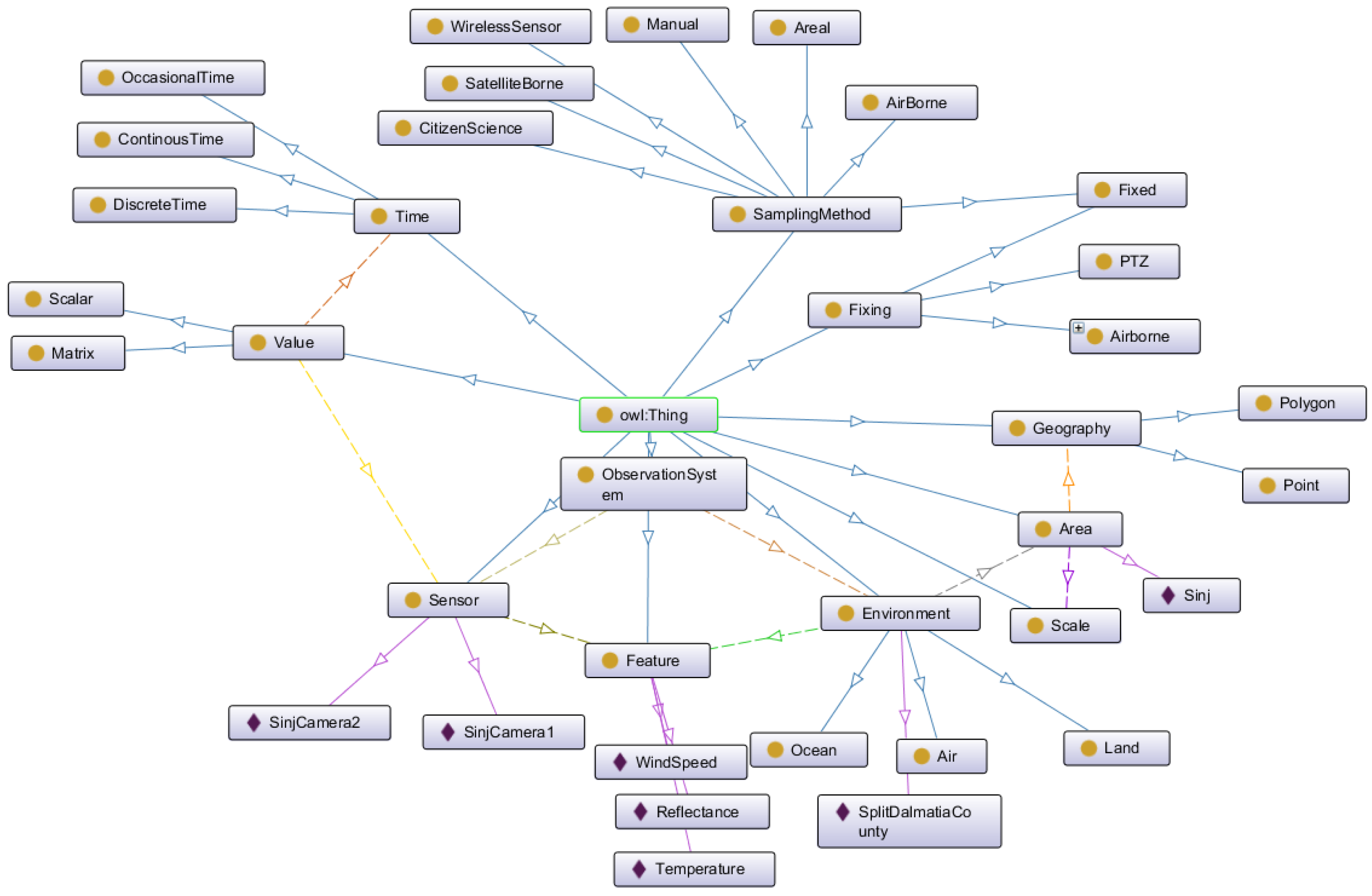
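The three taxonomies of this section are hierarchies, so a query such as "is a thermal camera a kind of value format?" reduces to walking up parent links, which is what an ontology reasoner does with subclass axioms. The sketch below encodes the items listed in Sections 3.1 to 3.3 in that way. The identifiers are hypothetical, and placing the five camera types under a common `Camera` node is an assumption based on the split between Sections 3.2.1 and 3.2.2.

```python
# Parent links: concept -> broader concept (hypothetical identifiers).
TAXONOMY = {
    # Sampling method taxonomy (Section 3.1)
    "FieldManualCollection": "SamplingMethod",
    "FixedSensor": "SamplingMethod",
    "WirelessSensor": "SamplingMethod",
    "AirborneSensor": "SamplingMethod",
    "SatelliteRemoteSensing": "SamplingMethod",
    "CitizenScience": "SamplingMethod",
    # Value format taxonomy (Section 3.2); the Camera grouping is an assumption
    "ScalarSensor": "ValueFormat",
    "Camera": "ValueFormat",
    "VisibleSpectrumCamera": "Camera",
    "ThermalCamera": "Camera",
    "BispectralCamera": "Camera",
    "HyperspectralCamera": "Camera",
    "MultispectralCamera": "Camera",
    # Functionality taxonomy (Section 3.3)
    "Monitoring": "Functionality",
    "Surveillance": "Functionality",
    "DigitalFootageAndReconstruction": "Functionality",
}


def is_a(concept: str, ancestor: str) -> bool:
    """True if `concept` equals `ancestor` or lies below it in the hierarchy."""
    current = concept
    while current is not None:
        if current == ancestor:
            return True
        current = TAXONOMY.get(current)  # None once we pass a root concept
    return False


def leaves_under(ancestor: str) -> list[str]:
    """Concepts without children that are subsumed by `ancestor`, sorted by name."""
    parents = set(TAXONOMY.values())
    return sorted(c for c in TAXONOMY if c not in parents and is_a(c, ancestor))


print(is_a("ThermalCamera", "ValueFormat"))     # True
print(is_a("ThermalCamera", "SamplingMethod"))  # False
print(leaves_under("Camera"))
```

Queries like `leaves_under("SamplingMethod")` are the kind of lookup that lets a system designer pick functionalities or technologies directly from taxonomy items.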

4. Implementation of an Environment Observation System Ontology

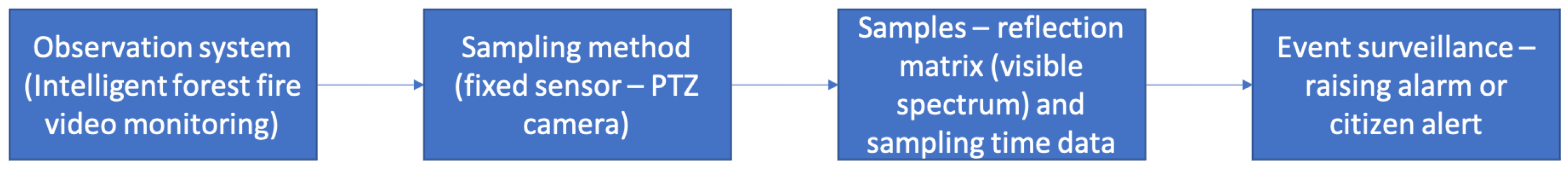

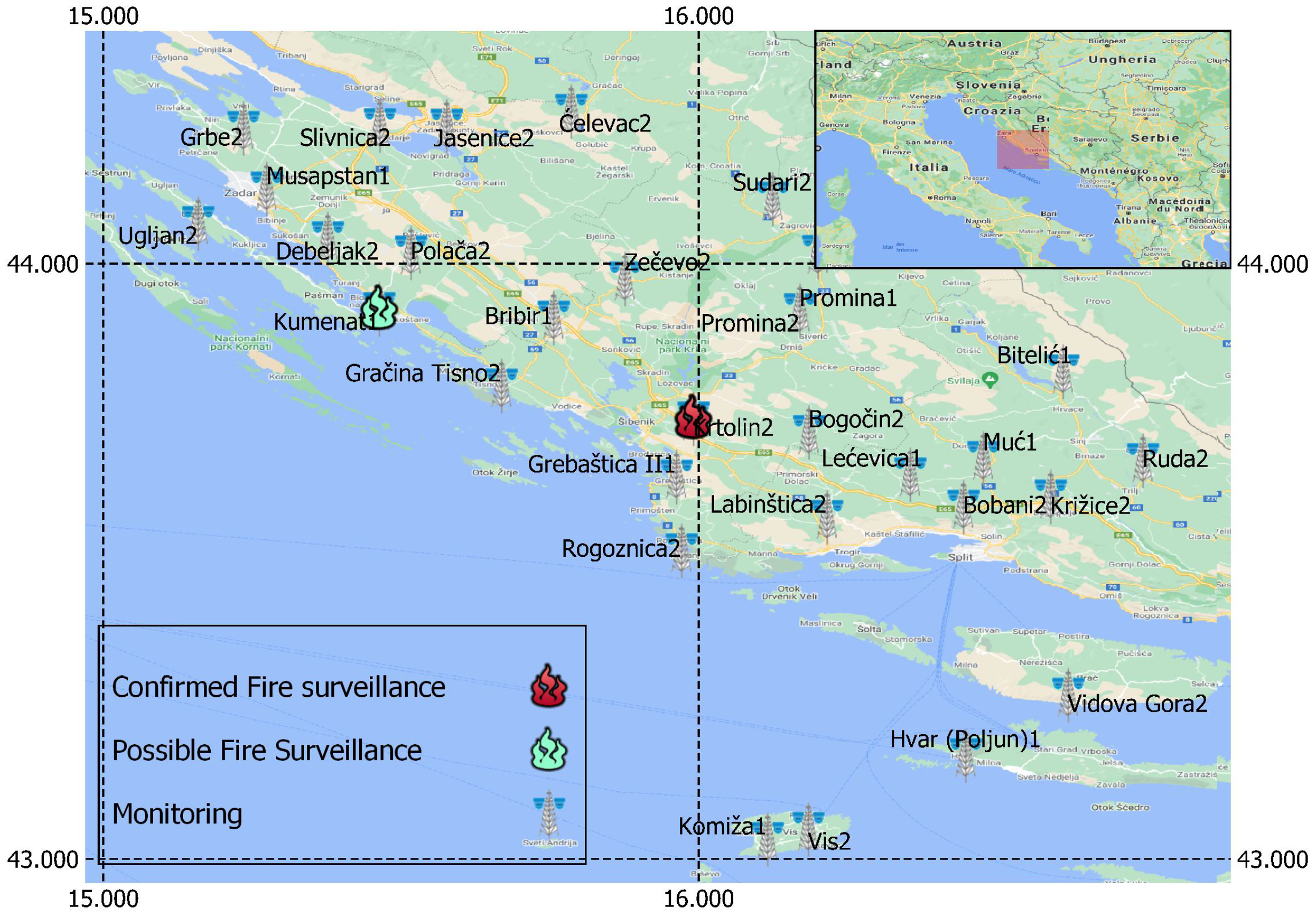

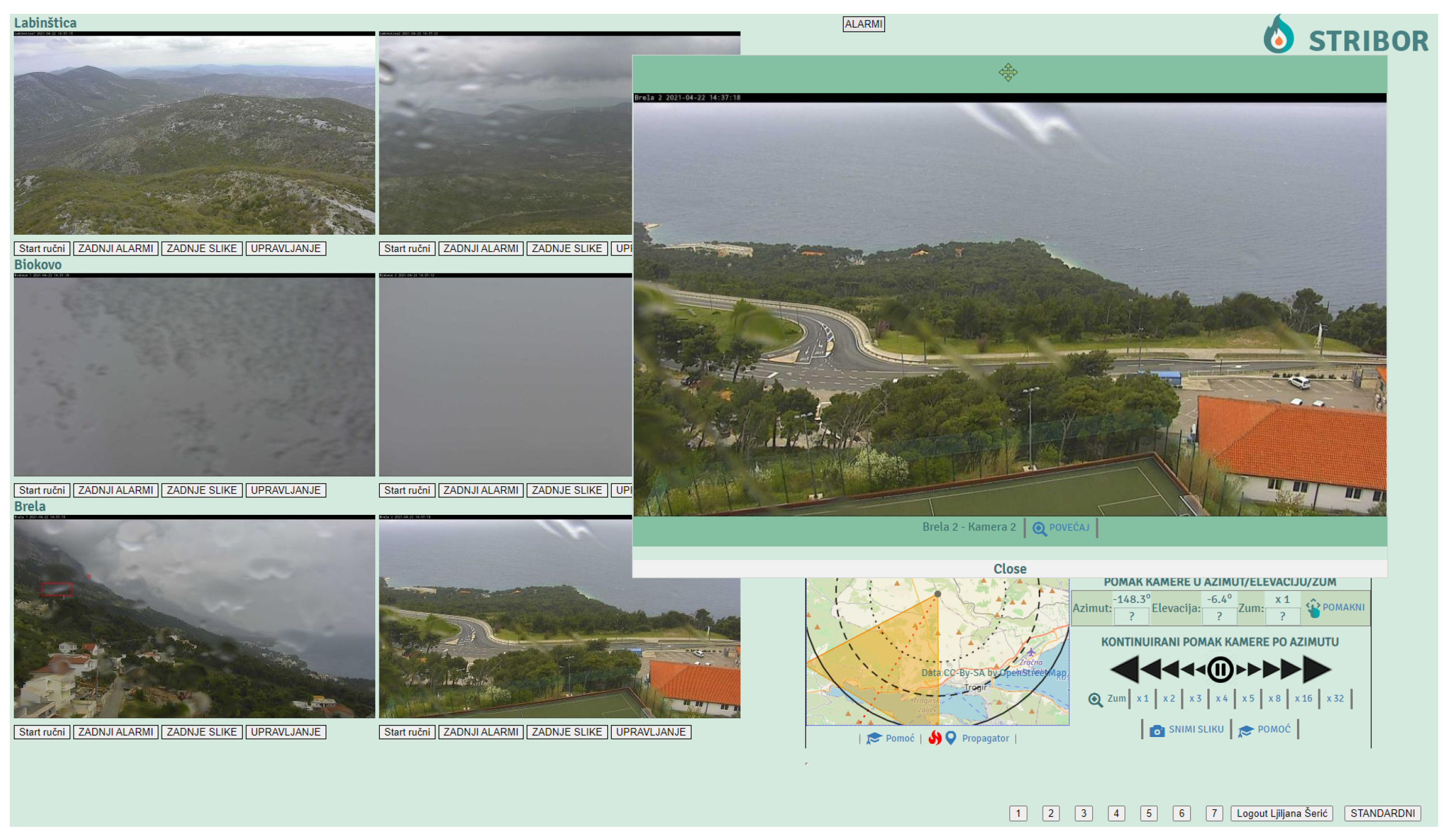

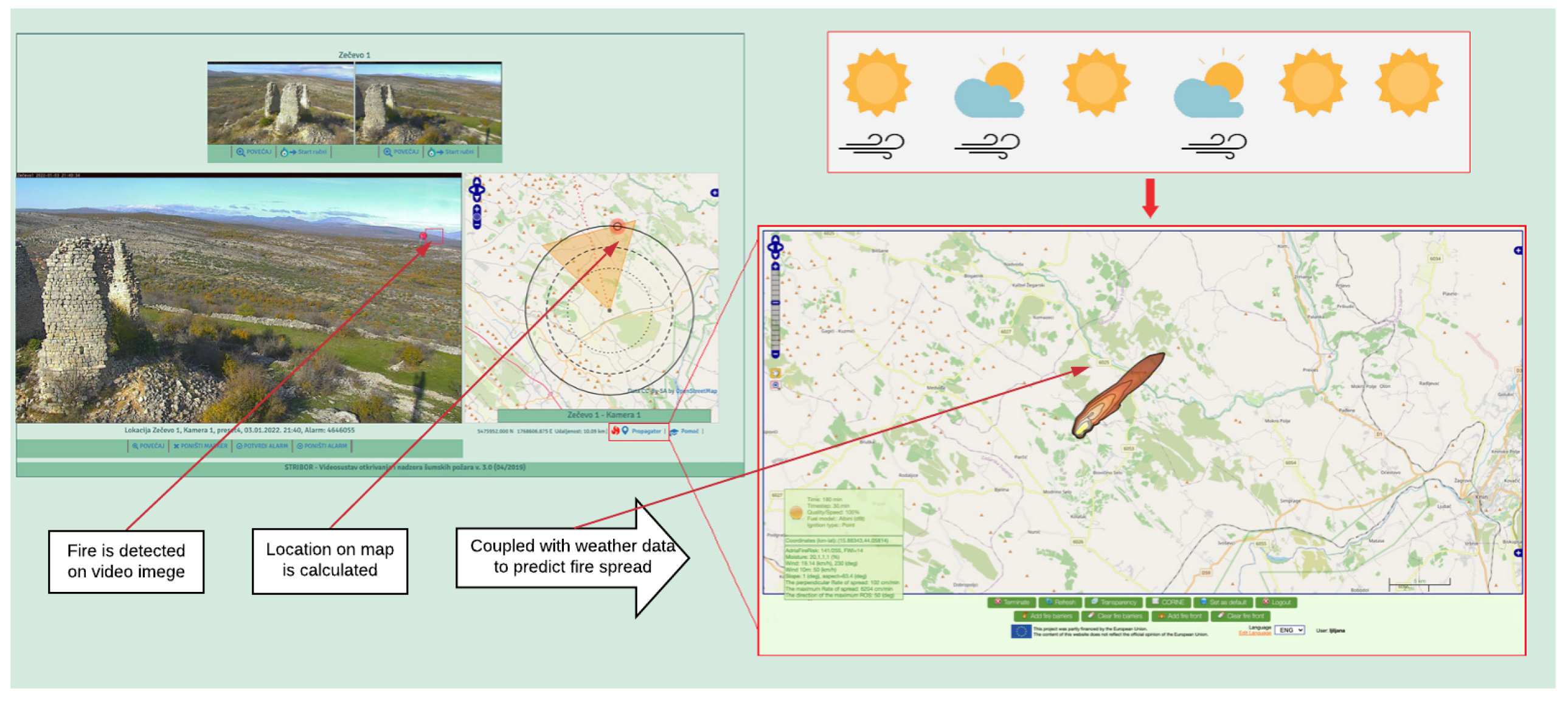
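The abbreviation list names SOSA, the W3C lightweight ontology for Sensors, Observations, Samples and Actuators. To illustrate how one reading from an observation system could be expressed with SOSA terms, the sketch below serialises a scalar observation as Turtle using `sosa:Observation`, `sosa:madeBySensor`, `sosa:observedProperty`, `sosa:hasFeatureOfInterest`, `sosa:hasSimpleResult` and `sosa:resultTime`. The `ex:` namespace and all identifiers are hypothetical, and the ontology implemented in this section may model observations differently. Plain string formatting keeps the sketch dependency-free; an RDF library such as rdflib would normally handle escaping and validation.

```python
from datetime import datetime, timezone

SOSA = "http://www.w3.org/ns/sosa/"
XSD = "http://www.w3.org/2001/XMLSchema#"
EX = "http://example.org/observation-system/"  # hypothetical namespace for this sketch


def observation_to_turtle(obs_id: str, sensor_id: str, observed_property: str,
                          feature_of_interest: str, result: float,
                          result_time: datetime) -> str:
    """Serialise one scalar observation as Turtle using W3C SOSA terms.

    Identifiers must already be valid Turtle local names (no spaces or escaping needed).
    """
    return "\n".join([
        f"@prefix sosa: <{SOSA}> .",
        f"@prefix xsd: <{XSD}> .",
        f"@prefix ex: <{EX}> .",
        "",
        f"ex:{obs_id} a sosa:Observation ;",
        f"    sosa:madeBySensor ex:{sensor_id} ;",
        f"    sosa:observedProperty ex:{observed_property} ;",
        f"    sosa:hasFeatureOfInterest ex:{feature_of_interest} ;",
        f'    sosa:hasSimpleResult "{result}"^^xsd:double ;',
        f'    sosa:resultTime "{result_time.isoformat()}"^^xsd:dateTime .',
    ])


ttl = observation_to_turtle(
    "obs-001", "station-7", "airTemperature", "forestPlot-3",
    23.5, datetime(2021, 7, 1, 12, 0, tzinfo=timezone.utc),
)
print(ttl)
```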

5. A Case Study—Intelligent Forest Fire Video Monitoring and Surveillance in Croatia

6. Conclusions

- Monitoring of a large area with a network of PTZ cameras and triggering alarms when a forest fire is detected;

- Surveillance of an event by adjusting the position of one camera to focus on the area where the event takes place;

- Interoperability with a fire propagation modeling system by sending it the location of the fire ignition and adjusting the area to be surveilled based on the fire propagation forecast; and

- Retrieval of archived data with spatio-temporal filtering.
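Surveillance of an event, as listed above, means re-aiming one PTZ camera at the event location. The geometric core of that step can be sketched as converting camera and target coordinates into a pan angle, a tilt angle and a ground distance, using the great-circle initial bearing and the haversine distance. This is not the case-study system's implementation: the calibration parameter `pan_zero_azimuth_deg` is hypothetical, the tilt ignores Earth curvature and atmospheric refraction, and sending the angles to a real camera through its vendor interface is not shown.

```python
import math

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius


def pan_tilt_to_target(cam_lat, cam_lon, cam_alt_m, tgt_lat, tgt_lon, tgt_alt_m,
                       pan_zero_azimuth_deg=0.0):
    """Return (pan_deg, tilt_deg, ground_distance_m) needed to aim a camera at a target.

    pan_zero_azimuth_deg is the compass azimuth the camera faces at pan = 0
    (a hypothetical calibration value). Tilt ignores Earth curvature and refraction.
    """
    phi1, phi2 = math.radians(cam_lat), math.radians(tgt_lat)
    dphi = phi2 - phi1
    dlmb = math.radians(tgt_lon - cam_lon)

    # Initial great-circle bearing, clockwise from true north.
    y = math.sin(dlmb) * math.cos(phi2)
    x = math.cos(phi1) * math.sin(phi2) - math.sin(phi1) * math.cos(phi2) * math.cos(dlmb)
    bearing = math.degrees(math.atan2(y, x)) % 360.0

    # Haversine ground distance.
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    distance = 2 * EARTH_RADIUS_M * math.atan2(math.sqrt(a), math.sqrt(1 - a))

    # Elevation angle of the line of sight; negative means the camera looks down.
    tilt = math.degrees(math.atan2(tgt_alt_m - cam_alt_m, distance))
    pan = (bearing - pan_zero_azimuth_deg) % 360.0
    return pan, tilt, distance


# Hypothetical camera on a 500 m hill and a fire reported 0.01 degrees further north at 100 m.
pan, tilt, dist = pan_tilt_to_target(43.0, 16.0, 500.0, 43.01, 16.0, 100.0)
print(f"pan={pan:.1f} deg, tilt={tilt:.1f} deg, distance={dist:.0f} m")
```

The ground distance also gives a starting point for choosing the zoom level, since a more distant event needs a narrower field of view to fill the frame.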

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| ICT | Information and communication technologies |
| GIS | Geographic information system |
| DPSIR | Drivers, Pressures, State, Impact and Response |
| PSR | Pressures, State and Response |
| OECD | Organisation for Economic Co-operation and Development |
| SOSA | Sensors, Observations, Samples and Actuators |
| IoT | Internet of Things |
| OS | Observation System |
| UAV | Unmanned Aerial Vehicle |
| PTZ | Pan-Tilt-Zoom |
| RGB | Red Green Blue |


Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Šerić, L.; Ivanda, A.; Bugarić, M.; Braović, M. Semantic Conceptual Framework for Environmental Monitoring and Surveillance—A Case Study on Forest Fire Video Monitoring and Surveillance. Electronics 2022, 11, 275. https://doi.org/10.3390/electronics11020275
