Abstract

Autonomous maneuver decision-making by an unmanned combat air vehicle (UCAV) is a critical part of air combat that requires both flight safety and tactical maneuvering. In this paper, a UCAV air combat maneuver decision method based on the proximal policy optimization (PPO) algorithm is proposed. First, a motion model of the UCAV and a situation assessment model of air combat were established to describe the motion and situation of the UCAV. An enemy maneuver policy based on situation assessment and a greedy algorithm was also designed as an air combat opponent to verify the performance of the PPO algorithm. Then, an action space based on a basic maneuver library and a state observation space for the PPO algorithm were constructed, and a reward function with situation reward shaping was designed to accelerate convergence. Finally, air combat confrontation simulations were carried out, which showed that the agent using the PPO algorithm learned to combine a series of basic maneuvers, such as diving, climbing and circling, into tactical maneuvers and eventually defeated the enemy. The winning rate of the PPO algorithm reached 62%, and the corresponding losing rate was only 11%.

1. Introduction

As a critical part of modern warfare, air combat epitomizes the trend of emerging technologies being widely applied in war. Autonomous decision-making by unmanned combat air vehicles (UCAVs) is one of the main research directions in air combat. In the 1960s, the National Aeronautics and Space Administration (NASA) began experiments using artificial intelligence (AI) to replace human pilots in making air combat decisions [1,2]. In 2016, Psibernetix Inc., the Air Force Research Laboratory and the University of Cincinnati unveiled an AI called ALPHA, which controls the flight of UCAVs in air combat missions [3]. In 2019, the Defense Advanced Research Projects Agency (DARPA) launched the Air Combat Evolution (ACE) program, which aims to develop trusted, scalable, human-level, AI-driven autonomy for air combat [4].

The main advantages of UCAV autonomous air combat are as follows: improving pilots' combat proficiency through man–machine confrontation training; easing pilots' operational workload through decision support in air combat; and reducing training time, costs and casualties through autonomous or human–machine collaborative combat.

The theory and technology of autonomous air combat maneuver decision-making have been evolving in recent years. Traditional research methods include game theory, expert systems and optimization theory.

Differential game theory is a representative game-theoretic method for air combat maneuver decision-making. The differential game of pursuit and evasion was introduced by Isaacs [5]. Leitmann [6] derived sufficient conditions for qualitative differential games that guarantee the termination of a pursuit–evasion problem at a particular target. A maneuver algorithm based on differential game theory and following a hierarchical decision structure was developed in [7]. The algorithm establishes a scoring function matrix computed from the relative geometry, relative distance and velocities of the two aircraft, and then evaluates this matrix using differential game theory to determine the optimal maneuvers in a dynamic and challenging combat situation. The combat results and maneuvers obtained in a controlled computational environment are similar to those of real air combat.

A rule-based expert system, which requires less engineering effort and offers high interpretability, constructs production rules in predicate logic of the IF–THEN–ELSE form. An air combat decision expert system was established in [8]; the system matches the collected air combat situation information with the rules in an expert knowledge base and then outputs the corresponding strategies. A systematic decision method based on an expert system was proposed in [9]. The method designs an auxiliary maneuver selection system composed of a knowledge base, a tactical planner, a maneuver method selector, an execution planner, a special case processor and a man–machine interface. The knowledge base is constructed from expert evaluations of air combat decisions, while the other parts of the system are mainly built with fuzzy logic. This method can help pilots realize the potential combat capability of the aircraft and meet operational performance requirements within time limits. In [10], a rule base was established according to the potential air combat situations faced by UCAVs, and the constraints of air combat decisions were set with a priori knowledge. In [11], an evolutionary expert system tree method was proposed to address the inability of traditional expert systems to handle unexpected situations.

Air combat decision methods based on optimization algorithms formulate decision-making as multiobjective optimization problems. In [12], an air combat situation assessment based on Bayesian inference was used to adjust the weights of the maneuver decision factors, and fuzzy-logic functions of these factors were established to enhance the robustness and effectiveness of the decision results. The simulation results demonstrated the intensity of the air combat game and revealed the drawbacks of the elemental maneuver method. An intelligent tactical aid decision system based on linear discriminant analysis (LDA) and improved glowworm swarm optimization (IGSO) was proposed in [13]. The simulation results showed that the resulting LDA-IGSO-ELM algorithm, which also employs an extreme learning machine (ELM), can quickly and accurately solve the threat assessment problem and provide effective support for firepower allocation and maneuver decisions. The biological immune system protects the body against intruders by recognizing and destroying harmful cells or molecules. Inspired by this, [14] employed genetic and evolutionary algorithms that imitate the immune mechanism to select and construct air combat maneuvers. The test results demonstrated the potential of immunized maneuver selection for constructing effective motion-based trajectories over a relatively short period (1–2 s). Reference [15] modeled air combat based on optimal control and game theory, and then transformed one-on-one air combat into an optimization problem using parameterized curves. The problem was solved with a moving time horizon scheme to generate optimal strategies for the aircraft. The results from different scenarios showed that the method can provide air combat solutions for autonomous aerial vehicles.

Traditional air combat decision methods require accurate modeling of a complex and changeable air combat process, which is usually difficult to achieve. As the decision space expands, they also face the curse of dimensionality, which makes the problems difficult to solve and degrades real-time performance. Emerging methods represented by deep reinforcement learning (DRL) train an agent through a trial-and-error mechanism, which removes the need for accurate modeling and generates maneuver decisions from data with a neural network, thereby improving solution efficiency [16].

The feasibility of autonomous maneuver decision-making based on reinforcement learning (RL) was demonstrated in [17]. However, UCAV motion was only considered in a 2D plane, and motion in 3D space was not studied. An intelligent tactical decision method based on a deep Q-network (DQN) was proposed in [18]; to verify the validity of the method, an enemy based on the Min-Max algorithm was also designed. The simulation results showed that the DQN approach made decisions faster and more effectively than the Min-Max recursive approach. However, velocity was not included as a feature in the input of the neural network, which may have affected the convergence rate of the method. In [19], a heuristic Q-network method was proposed to improve the efficiency of reinforcement learning; it enables self-learning of air confrontation maneuver strategies and helps fighters make reasonable maneuver decisions independently in different air confrontation situations. However, as in [17], the influence of altitude changes on UCAV motion was not considered. In [20], an autonomous maneuver decision model based on a deep Q-network was proposed for UCAVs in short-range air combat; however, velocity and angles were coupled in the definition of the air combat situational advantage, which may interfere with learning optimal strategies through the reward function. A novel maneuver strategy generation algorithm based on a state-adversarial deep deterministic policy gradient algorithm was proposed in [21], but its experimental results only demonstrated the algorithm's effectiveness in a 2D plane.

There are two main shortcomings in existing research on air combat maneuver decision-making based on deep reinforcement learning: first, the training environment of the agent differs from actual air combat, which limits the applicability of the results; second, the factors affecting air combat are not fully considered, which reduces the learning efficiency of the agent. In this paper, a novel UCAV air combat maneuver decision method based on the proximal policy optimization (PPO) algorithm [22] is proposed. First, a general UCAV short-range air combat confrontation framework was established, including a motion model of a fixed-wing aircraft and a situation assessment model of air combat. To verify the effectiveness of the method, an enemy maneuver strategy based on a greedy algorithm was designed. Then, the action space based on a basic maneuver library and the state observation space of the agent were constructed, and the maneuver policy was generated by training with the PPO algorithm. A reward function based on situation reward shaping was designed to accelerate the convergence of the algorithm. Finally, simulations of one-on-one short-range air combat in stochastic situations were carried out to verify the effectiveness of the PPO algorithm. The winning rate of the PPO algorithm reached 62%, and the corresponding losing rate was only 11%. The simulation results showed that the proposed maneuver decision method enables UCAVs to learn maneuver policies autonomously and defeat the enemy.

The paper is organized as follows: the general UCAV short-range air combat confrontation framework is constructed in Section 2; the agent training method based on deep reinforcement learning is designed in Section 3; the simulation analysis is presented in Section 4; and Section 5 summarizes the research.

2. Air Combat Confrontation Framework

In this section, the simulation environment for general UCAV short-range air combat is constructed; it mainly includes a UCAV motion model, an air combat situation assessment model and an enemy maneuver strategy based on situation assessment. The UCAV motion model provides the research object for air combat maneuver decision-making. The air combat situation assessment model is the basis for evaluating the advantages and disadvantages of air combat maneuver decisions. The enemy maneuver strategy based on situation assessment makes the simulation environment adversarial and serves as a training opponent to verify the effectiveness of air combat maneuver decision-making based on deep reinforcement learning.

2.1. Unmanned Combat Air Vehicle Motion Model

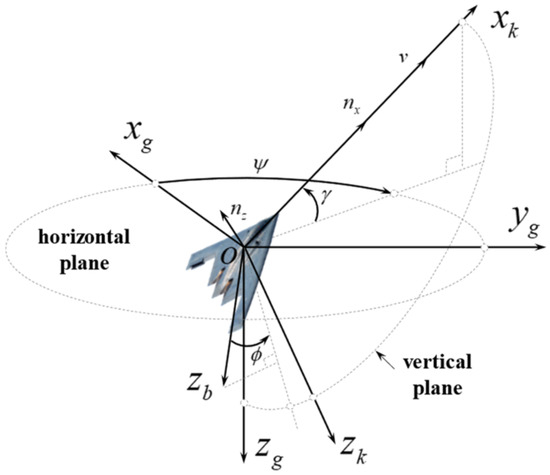

The velocities and positions of UCAVs are the main factors affecting short-range air combat. Therefore, a three-degree-of-freedom point-mass model of a fixed-wing aircraft was employed in this paper, which both reflects the air combat situation and improves simulation efficiency. Assuming that the angle of attack and the angle of sideslip are small enough to be neglected, the dynamic model of a UCAV in the NED (north-east-down) coordinate system can be expressed as [23]:

$$
\begin{cases}
\dot{x} = v\cos\theta\cos\psi \\
\dot{y} = v\cos\theta\sin\psi \\
\dot{z} = -v\sin\theta \\
\dot{v} = g\,(n_x - \sin\theta) \\
\dot{\theta} = \dfrac{g}{v}\,(n_z\cos\mu - \cos\theta) \\
\dot{\psi} = \dfrac{g\,n_z\sin\mu}{v\cos\theta}
\end{cases}
$$

where $(x, y, z)$ represents the position, and the flight altitude of the UCAV is $h = -z$; $\dot{x}$, $\dot{y}$ and $\dot{z}$ denote the north velocity, the east velocity and the vertical velocity, respectively; $v$ represents the airspeed; $\theta$ and $\psi$ denote the climb angle and the azimuth angle of the flight path, respectively; and $g$ is the gravitational acceleration. The state variable is:

$$\mathbf{s} = [x, y, z, v, \theta, \psi]^{\mathrm{T}}$$

The control input of a UCAV is $\mathbf{u} = [n_x, n_z, \mu]^{\mathrm{T}}$, where $n_x$ is the longitudinal overload, directed along the velocity vector; $n_z$ is the normal overload, directed along the lift; and $\mu$ is the roll angle about the velocity vector, defined by the right-hand rule. The velocity magnitude can be changed by $n_x$, and the velocity direction can be changed by $n_z$ and $\mu$. Therefore, in theory, any desired maneuver of a UCAV can be achieved with an appropriate $\mathbf{u}$. Considering the real conditions of the short-range air combat environment and the limited maneuverability of a UCAV, several limits were set on the position (in m), the airspeed (in m/s) and further flight variables. The parameters of the UCAV motion model are shown in Figure 1.

Figure 1.

UCAV motion model.
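To illustrate how such a 3-DOF model can be stepped inside a simulation, the following is a minimal Python sketch. It assumes the standard point-mass form in NED coordinates ($\dot{x} = v\cos\theta\cos\psi$, $\dot{y} = v\cos\theta\sin\psi$, $\dot{z} = -v\sin\theta$, $\dot{v} = g(n_x - \sin\theta)$, $\dot{\theta} = (g/v)(n_z\cos\mu - \cos\theta)$, $\dot{\psi} = g n_z\sin\mu/(v\cos\theta)$), with $\theta$ the climb angle and $\psi$ the azimuth, explicit Euler integration, and a 0.1 s step matching the simulation step size reported in Section 4. The names `UcavState` and `step` are hypothetical, and the position, airspeed and control limits are not enforced here.

```python
import math
from dataclasses import dataclass

G = 9.81  # gravitational acceleration in m/s^2


@dataclass
class UcavState:
    x: float      # north position, m
    y: float      # east position, m
    z: float      # down position, m (altitude h = -z)
    v: float      # airspeed, m/s
    theta: float  # climb angle of the flight path, rad
    psi: float    # azimuth angle of the flight path, rad


def step(s: UcavState, n_x: float, n_z: float, mu: float, dt: float = 0.1) -> UcavState:
    """Advance the assumed 3-DOF point-mass model by one explicit Euler step."""
    x_dot = s.v * math.cos(s.theta) * math.cos(s.psi)
    y_dot = s.v * math.cos(s.theta) * math.sin(s.psi)
    z_dot = -s.v * math.sin(s.theta)  # NED: climbing makes z more negative
    v_dot = G * (n_x - math.sin(s.theta))
    theta_dot = G / s.v * (n_z * math.cos(mu) - math.cos(s.theta))
    psi_dot = G * n_z * math.sin(mu) / (s.v * math.cos(s.theta))
    return UcavState(
        x=s.x + x_dot * dt,
        y=s.y + y_dot * dt,
        z=s.z + z_dot * dt,
        v=s.v + v_dot * dt,
        theta=s.theta + theta_dot * dt,
        psi=s.psi + psi_dot * dt,
    )


start = UcavState(0.0, 0.0, -3000.0, 200.0, 0.0, 0.0)
level = step(start, n_x=0.0, n_z=1.0, mu=0.0)           # steady level flight
right_turn = step(start, n_x=0.0, n_z=2.0, mu=math.pi / 3)  # n_z cos(mu) = 1: level turn
```

At zero climb angle, $n_x = 0$ and $n_z = 1$ keep the airspeed, climb angle and azimuth constant, while a positive roll angle with $n_z\cos\mu = 1$ turns the aircraft to the right (toward east) without changing its climb angle.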

2.2. Air Combat Situation Assessment Model

An air combat situation can be understood as a qualitative or quantitative description of various indicators of the UCAVs, including their positions, velocities and firepower, as well as their relative positions and velocity differences. Because the air combat situation is dynamic, its description may differ between evaluation systems.

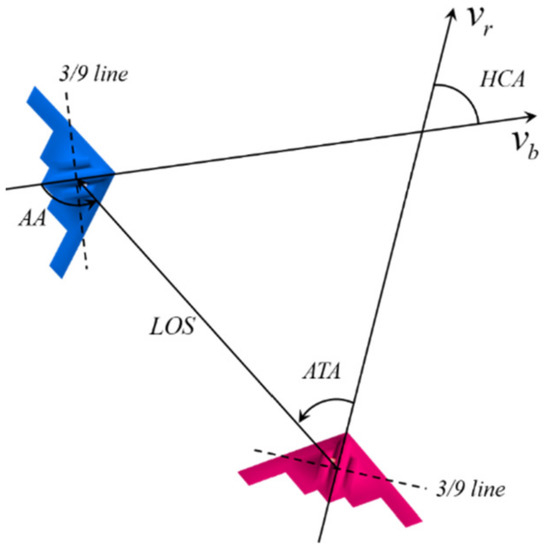

In short-range air combat, the geometry between two UCAVs is determined by their relative angles and distance, as shown in Figure 2. The red aircraft represents the UCAV trained by deep reinforcement learning, and the blue aircraft represents the enemy UCAV. The line of sight (LOS) is the vector from the center of mass of the red UCAV to that of the blue UCAV; its magnitude, $D$, is the distance between the two UCAVs. The antenna train angle (ATA) is the angle between the velocity vector of the red UCAV and the LOS, $\mathrm{ATA} \in [0, \pi]$. The aspect angle (AA) is the angle between the velocity vector of the blue UCAV and the LOS, $\mathrm{AA} \in [0, \pi]$. The heading crossing angle (HCA) is the angle between the velocity vectors of the two UCAVs, $\mathrm{HCA} \in [0, \pi]$. The three-nine line is the line through the 3 and 9 o'clock directions of an aircraft; it is used to determine whether the other aircraft is located in the front or rear hemisphere of the aircraft.

Figure 2.

Geometry of air combat.

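Let $\mathbf{p}_r$ and $\mathbf{v}_r$ denote the position and the velocity vector of the red UCAV, respectively, and $\mathbf{p}_b$ and $\mathbf{v}_b$ denote the position and the velocity vector of the blue UCAV, respectively. The LOS vector $\mathbf{D}_{\mathrm{LOS}}$, the distance $D$ and the three angles are then given by:

$$\mathbf{D}_{\mathrm{LOS}} = \mathbf{p}_b - \mathbf{p}_r, \qquad D = \lVert \mathbf{D}_{\mathrm{LOS}} \rVert$$

$$\mathrm{ATA} = \arccos\frac{\mathbf{v}_r \cdot \mathbf{D}_{\mathrm{LOS}}}{\lVert \mathbf{v}_r \rVert\, D}, \qquad \mathrm{AA} = \arccos\frac{\mathbf{v}_b \cdot \mathbf{D}_{\mathrm{LOS}}}{\lVert \mathbf{v}_b \rVert\, D}, \qquad \mathrm{HCA} = \arccos\frac{\mathbf{v}_r \cdot \mathbf{v}_b}{\lVert \mathbf{v}_r \rVert\, \lVert \mathbf{v}_b \rVert}$$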
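The LOS, the distance and the three angles defined for Figure 2 can be computed from the position and velocity vectors with dot products, as in the following minimal sketch (plain Python, no external dependencies; the function names are hypothetical). Each angle is the arccosine of a normalized dot product, so it lies in $[0, \pi]$; the cosine is clamped to guard against rounding errors just outside $[-1, 1]$.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]


def _dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def _angle(a: Vec3, b: Vec3) -> float:
    """Angle between two non-zero vectors, in [0, pi] rad."""
    cos_ab = _dot(a, b) / math.sqrt(_dot(a, a) * _dot(b, b))
    return math.acos(max(-1.0, min(1.0, cos_ab)))  # clamp rounding errors


def relative_geometry(p_r: Vec3, v_r: Vec3, p_b: Vec3, v_b: Vec3) -> Tuple[float, float, float, float]:
    """Return (distance, ATA, AA, HCA) of the red UCAV relative to the blue UCAV."""
    los = (p_b[0] - p_r[0], p_b[1] - p_r[1], p_b[2] - p_r[2])  # red -> blue
    distance = math.sqrt(_dot(los, los))
    ata = _angle(v_r, los)  # red velocity vs. line of sight
    aa = _angle(v_b, los)   # blue velocity vs. line of sight
    hca = _angle(v_r, v_b)  # red velocity vs. blue velocity
    return distance, ata, aa, hca


# Red directly behind blue, both flying north at the same altitude (NED coordinates).
tail_chase = relative_geometry((0.0, 0.0, -3000.0), (200.0, 0.0, 0.0),
                               (1000.0, 0.0, -3000.0), (200.0, 0.0, 0.0))
# Head-on: blue flies south, straight toward red.
head_on = relative_geometry((0.0, 0.0, -3000.0), (200.0, 0.0, 0.0),
                            (1000.0, 0.0, -3000.0), (-200.0, 0.0, 0.0))
```

In the tail chase, ATA and AA are both zero, which is the most favorable geometry for the red UCAV; in the head-on case, AA and HCA are both $\pi$.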

The situation assessment was divided into two parts: one was the conditions for the end of air combat including the attack zone, altitude limit and velocity limit; the other part was the situation assessment in the process of air combat including the angle, velocity and altitude situations.

2.2.1. Conditions for the End of Air Combat

Short-range air combat, also known as a dogfight, usually covers an operational range of 10 km, and the aim is to shoot down enemy aircraft or make them lose their combat capability. Therefore, whether the air combat is over can be judged by the following conditions:

- Shooting down the enemy or being shot down;

- Stalling or crashing.

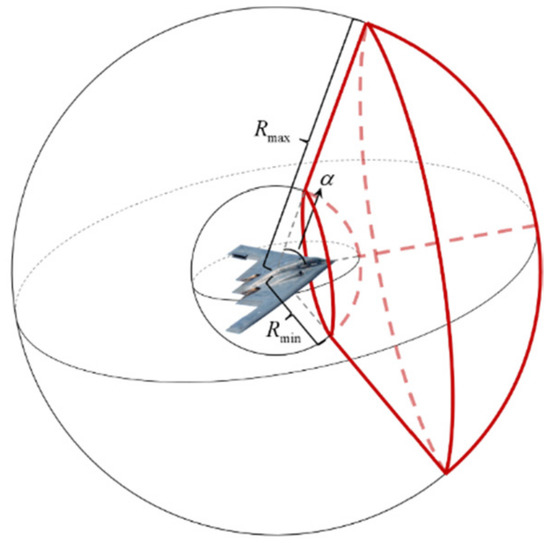

The attack zone of a UCAV is limited by the type and performance of its weapons. In this paper, the attack zone was simplified to the region bounded by the red lines in Figure 3. Here, $\varphi_{\max}$ denotes the maximum attack angle of a UCAV, $d_{\min}$ denotes the minimum attack range, and $d_{\max}$ denotes the maximum attack range. If the ATA does not exceed $\varphi_{\max}$ and the distance $D$ between the two UCAVs is greater than $d_{\min}$ and less than $d_{\max}$, the blue UCAV is in the attack zone of the red UCAV, and the red UCAV fires and shoots down the blue UCAV. Conversely, if the red UCAV is in the attack zone of the blue UCAV, the red UCAV is shot down. The attack zone of the red UCAV can be expressed as:

$$\mathrm{ATA} \le \varphi_{\max}, \qquad d_{\min} < D < d_{\max}$$

Figure 3.

Attack zone of a UCAV.
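A minimal check of the simplified attack zone for both sides could look like the sketch below. The zone parameters in `ZONE` are hypothetical placeholders, not the values used in this paper. For the blue UCAV, the angle between its velocity and the direction toward the red UCAV is $\pi - \mathrm{AA}$, because it looks along the reversed LOS, so the same test can be reused for both sides.

```python
import math


def in_attack_zone(angle_off_nose: float, distance: float,
                   phi_max: float, d_min: float, d_max: float) -> bool:
    """True if the target lies inside the simplified attack zone."""
    return angle_off_nose <= phi_max and d_min < distance < d_max


def red_can_fire(ata: float, distance: float, **zone: float) -> bool:
    # Seen from the red UCAV, the angle between its velocity and the target is the ATA.
    return in_attack_zone(ata, distance, **zone)


def blue_can_fire(aa: float, distance: float, **zone: float) -> bool:
    # The blue UCAV sees the red UCAV along -LOS, so its angle off the nose is pi - AA.
    return in_attack_zone(math.pi - aa, distance, **zone)


# Hypothetical attack-zone parameters, for illustration only.
ZONE = dict(phi_max=math.radians(30.0), d_min=200.0, d_max=2000.0)
```

A call such as `red_can_fire(0.0, 1000.0, **ZONE)` tests a target dead ahead at 1 km; it returns `False` once the distance drops below `d_min` or the ATA exceeds `phi_max`.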

Aircraft with an extremely low velocity or altitude are not allowed in air combat. Therefore, the other condition for judging whether the air combat is over can be expressed as:

$$v < v_{\min} \quad \text{or} \quad h < h_{\min}$$

where $v_{\min}$ and $h_{\min}$ denote the minimum allowable velocity and altitude of a UCAV, respectively.

In addition, the number of steps per episode had to be limited in the simulation. Therefore, another condition for judging whether the air combat is over can be expressed as:

$$t \ge t_{\max}$$

where $t$ is the current step and $t_{\max}$ denotes the maximum number of steps per episode.
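The termination conditions can be combined into a per-step judgment, sketched below. The outcome labels follow the definitions of winning, losing and drawing given in Section 4, seen from the red UCAV. Treating any simultaneous loss of both sides (for example, one UCAV firing while the other violates its flight limits) as a draw is an assumption of this sketch, as are the function names.

```python
def violates_flight_limits(v: float, h: float, v_min: float, h_min: float) -> bool:
    """Stall or crash condition: airspeed or altitude below its allowed minimum."""
    return v < v_min or h < h_min


def episode_result(red_fires: bool, blue_fires: bool,
                   red_violates: bool, blue_violates: bool,
                   step: int, step_max: int) -> str:
    """Classify the current step as 'win', 'lose', 'draw', 'timeout' or 'ongoing' for red."""
    red_lost = blue_fires or red_violates    # shot down, or stalled/crashed
    blue_lost = red_fires or blue_violates
    if red_lost and blue_lost:
        return "draw"
    if blue_lost:
        return "win"
    if red_lost:
        return "lose"
    if step >= step_max:
        return "timeout"
    return "ongoing"
```

Checking the engagement outcomes before the step limit means that a kill on the final permitted step still counts as a win or a loss rather than a timeout.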

2.2.2. Situation Assessment in the Process of Air Combat

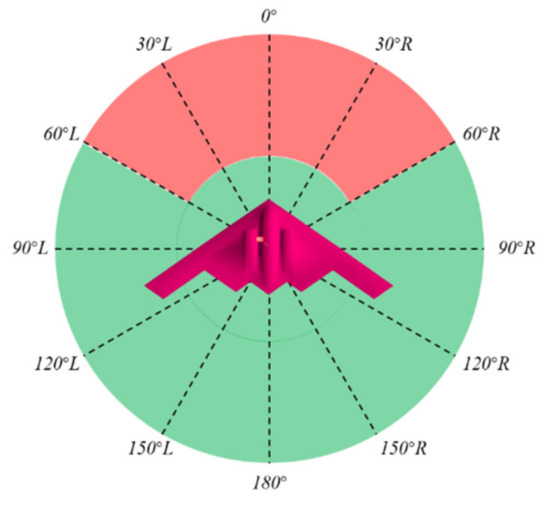

This section takes the red UCAV as an example. In essence, the whole process of air combat can be understood as the red UCAV maneuvering to avoid entering the attack zone of the blue UCAV while bringing the blue UCAV into its own attack zone. Figure 4 shows a top view of the attack zone, in which the red and green parts represent the attack zone and the safe zone, respectively.

Figure 4.

Top view of the attack zone.

According to Figure 2 and Figure 4, the smaller the ATA and AA, the more favorable the situation is for the red UCAV, all other conditions being equal. In addition, according to Section 2.2.1, flight safety is also a key factor affecting the situation. Therefore, the air combat situation model is divided into four parts, which are switched according to the state of the UCAV. The switching process is as follows (Algorithm 1).

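| Algorithm 1 Switching Process of an Air Combat Situation |
| --- |
| If the velocity or altitude is less than its threshold: the first part takes effect |
| Else if …: the second part takes effect |
| Else if the distance is greater than the safety threshold: the third part takes effect |
| Else: the fourth part takes effect |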
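The if/else chain of Algorithm 1 maps onto a short dispatcher that returns which of the four parts of the situation model is active. In this sketch, the test for the second branch is passed in as the boolean `second_branch` (a hypothetical parameter) instead of being re-derived, and all thresholds are supplied by the caller.

```python
def active_situation_part(v: float, h: float, distance: float,
                          v_threshold: float, h_threshold: float,
                          d_safe: float, second_branch: bool) -> int:
    """Return the index (1-4) of the active part of the situation model, per Algorithm 1."""
    if v < v_threshold or h < h_threshold:
        return 1  # flight safety takes priority
    if second_branch:
        return 2  # guide the UCAV toward the enemy
    if distance > d_safe:
        return 3  # set up attack conditions through the relative angle
    return 4      # too close: move toward a safer state
```

Because the branches are evaluated in order, flight safety always overrides the tactical parts, which mirrors the priority expressed in Algorithm 1.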

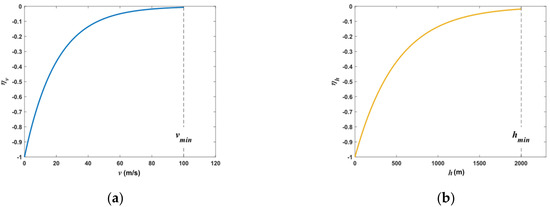

The first part of the situation model takes the velocity and altitude of the UCAV as variables. When the velocity or altitude is less than its threshold, this part takes effect to ensure flight safety. Its velocity index and altitude index are as follows:

The first part of the situation model is then defined as:

where two constants are used to adjust the shape of the index curves; the velocity index and the altitude index are shown in Figure 5.

Figure 5.

(a) Velocity index; (b) altitude index.

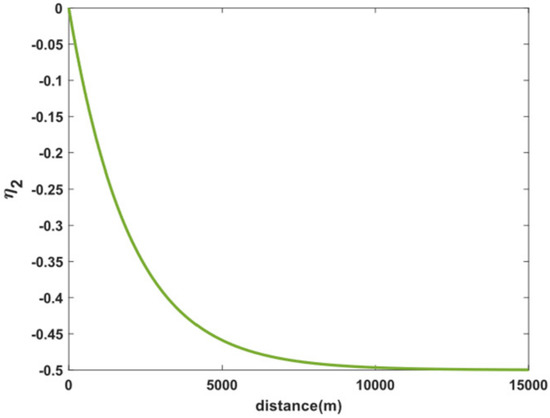

The second part of the situation model takes the distance between the UCAVs as its variable. It takes effect when the UCAV flies at a normal velocity and altitude and the second branch condition of Algorithm 1 holds; its aim is to guide the UCAV to approach the enemy quickly and reach attack conditions. It is defined as:

where a constant is used to adjust the shape of the curve. The change in the second part with the distance between the two UCAVs is shown in Figure 6.

Figure 6.

Change in the second part of the situation model with the distance between two UCAVs.

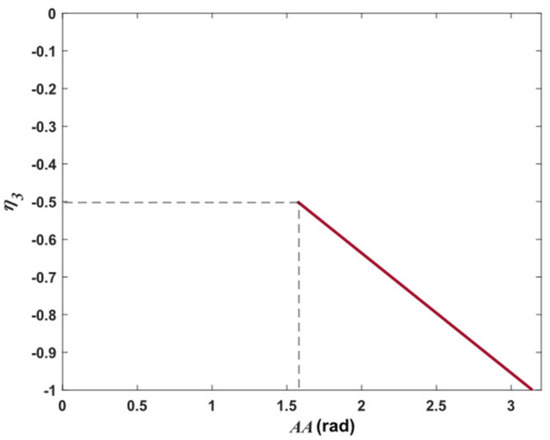

The third part of the situation model takes a relative angle between the UCAVs as its variable. When the UCAV flies at a normal velocity and altitude, the second branch condition of Algorithm 1 does not hold, and the distance between the two UCAVs is greater than the safety threshold, this part takes effect; its aim is to set up attack conditions for the UCAV. It is defined as:

The change in the third part with this angle is shown in Figure 7.

Figure 7.

Change in the third part of the situation model with the relative angle.

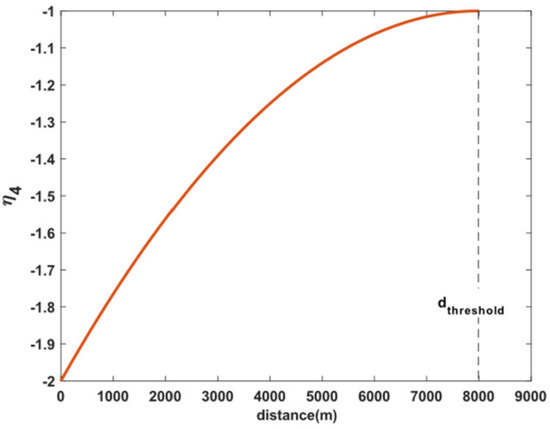

The fourth part of the situation model takes the distance between the UCAVs as its variable. When the UCAV flies at a normal velocity and altitude, the second branch condition of Algorithm 1 does not hold, and the distance between the two UCAVs is less than the safety threshold, this part takes effect; its aim is to guide the UCAV to safer states so that the enemy loses its attack conditions. It is defined as:

where a constant is used to adjust the shape of the curve. The change in the fourth part with the distance between the two UCAVs is shown in Figure 8.

Figure 8.

Change in the fourth part of the situation model with the distance between two UCAVs.

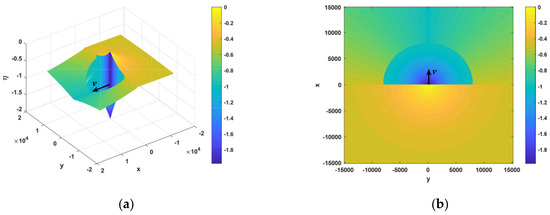

With the constants of the situation model fixed and for a given enemy velocity, the air combat situation at the same altitude can be plotted with the enemy as the origin, as shown in Figure 9.

Figure 9.

Air combat situation at the same altitude: (a) stereoscopic view of the air combat situation at the same altitude; (b) plan view of the air combat situation at the same altitude.

Figure 9 shows that, at positions with a high air combat situation value, it is difficult for the enemy to achieve attack conditions, while the UCAV is relatively safe and well placed to achieve attack conditions against the enemy.

2.3. Enemy Maneuver Policy

Real air combat is highly adversarial; that is, the enemy will attack with all its strength. To verify the effectiveness of the deep reinforcement learning method, an enemy maneuver strategy based on a greedy algorithm is designed in this section. Let $\mathbf{s}_r^t$ and $\mathbf{s}_b^t$ denote the states of the red and the blue UCAVs, respectively, at decision step $t$. First, the air combat situation resulting from the blue UCAV executing each available control input $\mathbf{u}_i$ separately is calculated from the blue UCAV's perspective and recorded as $\eta_i$, $i = 1, 2, \ldots, n$. Then, the control input corresponding to the maximum $\eta_i$ is taken as the actual control input of the blue UCAV. The maneuver strategy based on a greedy algorithm is defined as:

$$\mathbf{u}_b^t = \arg\max_{\mathbf{u}_i} \eta_i, \qquad i = 1, 2, \ldots, n$$

To meet the real-time requirements of air combat confrontation and to improve the search efficiency of the greedy algorithm, the set of available control inputs had to be limited. Complex maneuvers can be decomposed into a series of simple basic maneuvers; NASA designed seven elemental maneuvers from which complex maneuvers of aircraft in 3D space can be composed [24]. On this basis, a basic maneuver library containing 13 maneuvers was designed, as shown in Table 1. Each action $a_i$ in the library corresponds to a control input $\mathbf{u}_i$, $i = 1, 2, \ldots, 13$.

Table 1.

Basic maneuver library.

The effectiveness of the maneuver strategy based on a greedy algorithm was verified in the simulation in Section 4.
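The greedy rule amounts to a one-step lookahead over the maneuver library. In the sketch below, `propagate` (for example, a motion model integrated over one decision step) and `situation` (the situation function evaluated from the blue UCAV's perspective) are supplied by the caller; keeping the red UCAV's state fixed during the lookahead is an assumption of this sketch. `EXAMPLE_LIBRARY` is a hypothetical seven-entry library in the spirit of NASA's elemental maneuvers, with illustrative control values; it is not Table 1.

```python
import math
from typing import Any, Callable, Sequence, Tuple

Control = Tuple[float, float, float]  # (n_x, n_z, mu)

# Hypothetical maneuver library with illustrative control values.
EXAMPLE_LIBRARY = [
    (0.0, 1.0, 0.0),                  # steady level flight
    (2.0, 1.0, 0.0),                  # maximum acceleration
    (-1.0, 1.0, 0.0),                 # maximum deceleration
    (0.0, 5.0, -math.acos(1 / 5)),    # level left turn at n_z = 5
    (0.0, 5.0, math.acos(1 / 5)),     # level right turn at n_z = 5
    (0.0, 5.0, 0.0),                  # pull-up
    (0.0, -1.0, 0.0),                 # push-over
]


def greedy_control(state_r: Any, state_b: Any, library: Sequence[Control],
                   propagate: Callable[[Any, Control], Any],
                   situation: Callable[[Any, Any], float]) -> int:
    """Return the index of the maneuver that maximizes blue's one-step situation value."""
    best_index, best_value = 0, -math.inf
    for i, u in enumerate(library):
        next_b = propagate(state_b, u)      # blue executes u for one decision step
        value = situation(next_b, state_r)  # situation seen from the blue UCAV
        if value > best_value:
            best_index, best_value = i, value
    return best_index
```

For a level turn at zero climb angle, $n_z\cos\mu = 1$, which is why the turn entries use $\mu = \pm\arccos(1/5)$. The cost of one decision grows linearly with the library size, which is one reason for keeping the library small.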

3. Maneuver Decision Method Based on a Proximal Policy Optimization Algorithm

3.1. Proximal Policy Optimization Algorithm

Reinforcement learning is a branch of machine learning [25]. Unlike supervised and unsupervised learning, reinforcement learning lets an agent learn a policy by interacting with the environment. The general model of reinforcement learning is the Markov decision process (MDP), shown in Figure 10, which is composed of an environment, an agent, states, rewards and actions. The environment includes the motion laws of the object and related constraints; it receives the action, updates the state and feeds back a reward to the agent. The agent carries the reinforcement learning algorithm and outputs actions according to the state of the environment and the reward.

Figure 10.

General model of reinforcement learning.

At each step $t$, the agent executes action $a_t$ according to the policy $\pi$, and the state then transitions from $s_t$ to $s_{t+1}$ with the state transition probability $P(s_{t+1} \mid s_t, a_t)$. At the same time, the environment feeds back a reward $r_t$ to the agent. The goal of reinforcement learning is to find the optimal policy that maximizes the expected discounted cumulative return, called the value function [25], which is defined as:

$$V^{\pi}(s) = \mathbb{E}_{\pi}\left[\sum_{k=0}^{\infty} \gamma^{k} r_{t+k} \,\middle|\, s_t = s\right]$$

where $\gamma \in [0, 1)$ denotes the discount factor. The optimal value function $V^{*}(s)$, i.e., the maximum expected discounted cumulative return, is then defined as:

$$V^{*}(s) = \max_{\pi} V^{\pi}(s)$$
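Because the value function is an expectation of discounted returns, it can be estimated by averaging the returns of sampled episodes that start in the same state (a Monte Carlo estimate). The following is a minimal sketch; the function names are hypothetical.

```python
from statistics import fmean
from typing import Sequence


def discounted_return(rewards: Sequence[float], gamma: float) -> float:
    """Sum over k of gamma**k * r_{t+k}, accumulated backwards."""
    g = 0.0
    for r in reversed(rewards):
        g = r + gamma * g
    return g


def monte_carlo_value(episodes: Sequence[Sequence[float]], gamma: float) -> float:
    """Estimate V(s) as the mean discounted return of episodes starting in s."""
    return fmean(discounted_return(ep, gamma) for ep in episodes)
```

With $\gamma = 0.5$, the reward sequence (1, 1, 1) gives $1 + 0.5 + 0.25 = 1.75$; averaging it with an all-zero episode gives an estimate of 0.875. A smaller $\gamma$ shortens the effective planning horizon.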

Deep reinforcement learning combines deep learning and reinforcement learning, using the parameters of neural networks to represent and update the reinforcement learning policy. It is advantageous for problems with high task complexity, high-dimensional solution spaces and redundant state information.

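The proximal policy optimization algorithm (PPO) is an on-policy deep reinforcement learning algorithm based on policy gradient methods [26]. Policy gradient methods compute an estimator of the policy gradient and plug it into a stochastic gradient ascent algorithm. The objective of PPO, which is maximized during training (its negative serves as the loss function), is defined as [22]:

$$L^{\mathrm{CLIP+VF+H}}(\omega) = \hat{\mathbb{E}}_t\left[L_t^{\mathrm{CLIP}}(\omega) - c_1 L_t^{\mathrm{VF}}(\omega) + c_2\,\mathcal{H}[\pi_\omega](s_t)\right]$$

where $\omega$ denotes the neural network parameters; $c_1$ and $c_2$ are coefficients; $L_t^{\mathrm{VF}}(\omega) = \left(V_\omega(s_t) - V_t^{\mathrm{targ}}\right)^2$ is the squared-error value-function loss; and $\mathcal{H}[\pi_\omega](s_t)$ denotes the entropy bonus of the policy [27,28]. The clipped surrogate objective $L^{\mathrm{CLIP}}$ is the core of the PPO algorithm and is defined as [22]:

$$L_t^{\mathrm{CLIP}}(\omega) = \min\left(\rho_t(\omega)\hat{A}_t,\ \operatorname{clip}\left(\rho_t(\omega), 1-\epsilon, 1+\epsilon\right)\hat{A}_t\right), \qquad \rho_t(\omega) = \frac{\pi_\omega(a_t \mid s_t)}{\pi_{\omega_{\mathrm{old}}}(a_t \mid s_t)}$$

where $\epsilon$ denotes the clip range, which is used to clip the probability ratio $\rho_t$ to avoid excessively large policy updates, and $\hat{A}_t$ is an estimator of the advantage function, which can be calculated by generalized advantage estimation (GAE) [29]:

$$\hat{A}_t = \sum_{l=0}^{\infty} (\gamma\lambda)^{l}\,\delta_{t+l}$$

where $\lambda$ is the GAE factor, $\gamma$ is the discount factor, and $\delta_t$ is defined as [29]:

$$\delta_t = r_t + \gamma V(s_{t+1}) - V(s_t)$$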
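In practice, the GAE sum is truncated at the end of each rollout and computed backwards with the recursion $\hat{A}_t = \delta_t + \gamma\lambda\hat{A}_{t+1}$. The sketch below assumes a `dones` flag that stops bootstrapping across episode boundaries; $\gamma$ and $\lambda$ are left as arguments rather than fixed values, and the function name is hypothetical.

```python
from typing import List, Sequence


def gae(rewards: Sequence[float], values: Sequence[float], last_value: float,
        dones: Sequence[bool], gamma: float, lam: float) -> List[float]:
    """Generalized advantage estimates for one rollout of length T.

    values[t] is V(s_t); last_value is V(s_T), used to bootstrap the final step;
    dones[t] is True if the episode terminated after step t (no bootstrapping).
    """
    advantages = [0.0] * len(rewards)
    running = 0.0
    for t in reversed(range(len(rewards))):
        next_value = last_value if t == len(rewards) - 1 else values[t + 1]
        not_done = 0.0 if dones[t] else 1.0
        delta = rewards[t] + gamma * next_value * not_done - values[t]  # TD residual
        running = delta + gamma * lam * not_done * running              # (gamma*lambda)-weighted sum
        advantages[t] = running
    return advantages
```

Setting $\lambda = 0$ reduces each estimate to the one-step TD residual $\delta_t$, while $\lambda = 1$ recovers the discounted return minus the value baseline; intermediate values trade bias against variance.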

During training, the PPO algorithm updates the parameters of the neural networks by optimizing the loss function, thereby updating the policy.
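The clipped surrogate and the combined objective can be evaluated on a batch of samples as follows. This is a plain-Python sketch for readability; in a PyTorch implementation the same expression would be computed on tensors, and its negative would be minimized by the optimizer. The per-sample log-probabilities, value predictions, value targets and entropies are assumed to be given, and the coefficient values in any call are illustrative.

```python
import math
from statistics import fmean
from typing import Sequence


def ppo_objective(logp_new: Sequence[float], logp_old: Sequence[float],
                  advantages: Sequence[float], v_pred: Sequence[float],
                  v_target: Sequence[float], entropy: Sequence[float],
                  clip_eps: float, c1: float, c2: float) -> float:
    """Sample estimate of L^CLIP - c1 * L^VF + c2 * entropy (to be maximized)."""
    clip_terms = []
    for lp_new, lp_old, adv in zip(logp_new, logp_old, advantages):
        ratio = math.exp(lp_new - lp_old)                         # pi_new / pi_old
        clipped = min(max(ratio, 1.0 - clip_eps), 1.0 + clip_eps)
        clip_terms.append(min(ratio * adv, clipped * adv))        # pessimistic bound
    l_clip = fmean(clip_terms)
    l_vf = fmean((vp - vt) ** 2 for vp, vt in zip(v_pred, v_target))
    return l_clip - c1 * l_vf + c2 * fmean(entropy)
```

For a sample with probability ratio 1.5, advantage 1 and $\epsilon = 0.2$, the clipped term is $\min(1.5, 1.2) = 1.2$, so the update gains nothing from pushing the ratio beyond $1 + \epsilon$; with a negative advantage, the minimum keeps the more pessimistic (clipped) value instead.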

3.2. Action Space

The action space can be either discrete or continuous. The discrete action space, $\mathcal{A}_d$, can be composed directly of the basic maneuver library in Table 1, i.e., $\mathcal{A}_d = \{a_1, a_2, \ldots, a_{13}\}$. A discrete action space based on the maneuver library is equivalent to providing a priori experience to the agent. Compared with the discrete action space, the continuous action space, $\mathcal{A}_c$, can make better use of the maneuverability of a UCAV, but it may increase the difficulty of flight control and reduce learning efficiency. Since reinforcement learning actions generally need to be normalized, the control input $\mathbf{u}$ has to be obtained from the normalized action by a linear mapping. Therefore, the continuous action space can be expressed as:

$$\mathcal{A}_c = \left\{\mathbf{a} \;\middle|\; u_j = f(a_j),\ j = 1, 2, 3\right\}$$

where $f(\cdot)$ denotes the linear mapping, and $a_j$ denotes the $j$th element of action $\mathbf{a}$.
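One common choice of linear mapping, assumed here, takes each normalized action component from $[-1, 1]$ to the interval $[u_{j,\min}, u_{j,\max}]$ of the corresponding control input. The bounds `U_MIN` and `U_MAX` below are hypothetical, not the limits used in this paper.

```python
import math
from typing import Sequence, Tuple

# Hypothetical control bounds for (n_x, n_z, mu).
U_MIN = (-1.0, -3.0, -math.pi)
U_MAX = (2.0, 8.0, math.pi)


def action_to_control(action: Sequence[float],
                      u_min: Sequence[float] = U_MIN,
                      u_max: Sequence[float] = U_MAX) -> Tuple[float, ...]:
    """Linearly map a normalized action in [-1, 1]^3 to the control input u."""
    control = []
    for a, lo, hi in zip(action, u_min, u_max):
        a = max(-1.0, min(1.0, a))  # policy outputs may fall slightly outside the box
        control.append(lo + (a + 1.0) * 0.5 * (hi - lo))
    return tuple(control)
```

An action of $-1$ maps to the lower bound, $+1$ to the upper bound and $0$ to the midpoint, so a zero action here corresponds to $(n_x, n_z, \mu) = (0.5, 2.5, 0)$.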

A comparative experiment between the discrete action space and the continuous action space is carried out in Section 4.

3.3. State Observation Space

The state observation space affects the convergence of the algorithm, so it should be designed to be concise and efficient. On the one hand, incomplete information in the state observation space may make it difficult for the algorithm to converge; on the other hand, redundant information may cause overfitting. In addition, properly refining the raw state information into quantities highly relevant to decision-making can reduce training time. Therefore, a 14-dimensional state observation space is designed in this section, and the information is scaled to facilitate feature extraction by the neural network.

Taking the red UCAV as an example, the state observation space is shown in Table 2.

Table 2.

State observation space.
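Scaling each observation to a comparable range can be as simple as dividing by a reference value and clipping, as in the sketch below. The feature names and reference scales are hypothetical placeholders chosen for illustration; Table 2 defines the actual 14 observations.

```python
import math
from typing import Dict, List

# Hypothetical features and reference scales (Table 2 lists the actual observations).
SCALES: Dict[str, float] = {
    "distance": 10_000.0,  # m, on the order of the short-range combat envelope
    "ata": math.pi,        # rad
    "aa": math.pi,         # rad
    "altitude": 10_000.0,  # m
    "airspeed": 300.0,     # m/s
}


def scale_observation(raw: Dict[str, float]) -> List[float]:
    """Divide each raw feature by its reference scale and clip the result to [-1, 1]."""
    return [max(-1.0, min(1.0, raw[name] / scale)) for name, scale in SCALES.items()]
```

Clipping keeps rare extreme values (for example, a very large separation) from dominating the network inputs, at the cost of losing resolution beyond the reference scale.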

3.4. Reward Function with Situation Reward Shaping

The design of the reward function is key to whether the algorithm converges. In air combat, victory or defeat can be determined only when one side's UCAV is destroyed, which can be abstracted as a sparse reward problem in reinforcement learning. The sparse reward problem means that the agent finds it difficult to learn tasks with high exploration complexity due to the lack of feedback signals [30]. To make the algorithm converge within a limited time, a reward function with situation reward shaping based on the air combat situation assessment is designed in this section. Using the air combat situation assessment makes the reward function continuous. The idea of situation reward shaping is to assign rewards according to the agent's situation, so that the agent reaches the terminal state faster and incurs less punishment. The situation guidance reward is defined as:

The situation guidance reward reflects the current state of the UCAV. When the reward is positive, the UCAV is in an offensive situation, and when the reward is negative, the UCAV is in a defensive situation.
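The combination of a dense situation guidance term with sparse terminal rewards can be sketched as follows. The weight, the terminal reward values and the use of the situation value directly as the guidance term are hypothetical choices for illustration, not the reward defined in this paper.

```python
# Hypothetical terminal rewards, keyed by the episode result from the red UCAV's view.
TERMINAL_REWARD = {"win": 10.0, "lose": -10.0, "draw": 0.0, "timeout": 0.0}


def shaped_reward(situation_value: float, result: str, weight: float = 0.1) -> float:
    """Dense situation guidance term plus a sparse terminal term."""
    reward = weight * situation_value            # > 0 offensive, < 0 defensive
    reward += TERMINAL_REWARD.get(result, 0.0)   # 'ongoing' adds nothing
    return reward
```

Keeping the per-step guidance weight small relative to the terminal rewards is one way to stop the agent from preferring to accumulate situation reward over actually ending the engagement.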

4. Simulation Results

4.1. Simulation Platform

The simulation environment in this paper was built with Python, and the algorithm was implemented based on PyTorch. The real-time confrontation display was based on Tacview. The experimental equipment was a desktop computer configured with an Intel(R) Xeon(R) Gold 6254 CPU @ 3.10 GHz and a single NVIDIA GeForce RTX 3090 GPU.

In order to ensure the fairness of the confrontation, the blue UCAV adopted the same parameters as the red UCAV. The relevant parameter settings are shown in Table 3.

Table 3.

Relevant parameters of air combat.

After tuning, the deep reinforcement learning parameters, including the neural network parameters, were set as follows. The policy network and the value network were both fully connected networks with two hidden layers of 64 units each and tanh activation functions. The PPO algorithm used eight environments to collect data in parallel. The number of steps run in each environment per update was 4096, the minibatch size was 128, and the number of epochs for optimizing the surrogate loss was 10. The discount factor , the value function squared-error loss coefficient , the entropy bonus coefficient , the clip range and the GAE factor .
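The rollout settings above imply the following amount of data and optimization per PPO update, and the stated network sizes imply the parameter counts below. This arithmetic sketch assumes separate policy and value networks with linear output layers, the 14-dimensional observation of Section 3.3, and the 13 discrete actions of Table 1.

```python
N_ENVS, N_STEPS, MINIBATCH, EPOCHS = 8, 4096, 128, 10
OBS_DIM, N_ACTIONS, HIDDEN = 14, 13, [64, 64]


def mlp_param_count(sizes):
    """Weights plus biases of a fully connected network with the given layer sizes."""
    return sum(n_in * n_out + n_out for n_in, n_out in zip(sizes[:-1], sizes[1:]))


samples_per_update = N_ENVS * N_STEPS                     # transitions per rollout
minibatches_per_epoch = samples_per_update // MINIBATCH
gradient_steps_per_update = minibatches_per_epoch * EPOCHS
policy_params = mlp_param_count([OBS_DIM, *HIDDEN, N_ACTIONS])  # logits over 13 maneuvers
value_params = mlp_param_count([OBS_DIM, *HIDDEN, 1])           # scalar state value

print(samples_per_update, minibatches_per_epoch, gradient_steps_per_update)
print(policy_params, value_params)
```

Each update therefore processes 32,768 transitions in 256 minibatches per epoch, or 2,560 gradient steps over 10 epochs; at this rate, the 2 M training time steps mentioned in Section 4.2 correspond to roughly 61 policy updates. Both networks are small (5965 and 5185 parameters under these assumptions).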

4.2. Results and Analysis

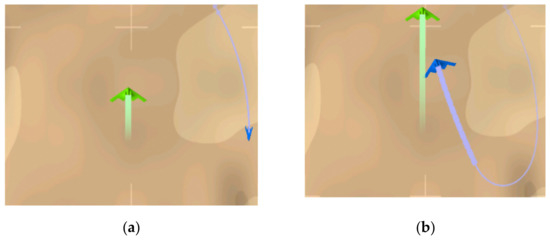

Figures 11 and 12 show different stages of the blue UCAV, controlled by the greedy algorithm, chasing a green target moving in the horizontal plane and the vertical plane, respectively. As Figures 11 and 12 show, the blue UCAV successfully shot down the green target through a series of maneuvers such as turning and climbing. Moreover, the blue UCAV was also able to avoid entering the attack zone of the target, which verifies the effectiveness of the maneuver strategy based on the greedy algorithm.

Figure 11.

Blue UCAV chasing the green target moving in the horizontal plane: (a) early stage of air combat; (b) later stage of air combat.

Figure 12.

Blue UCAV chasing the green target moving in the vertical plane: (a) early stage of air combat; (b) later stage of air combat.

To ensure a fair confrontation and remain consistent with an actual combat environment, the positions, velocities and attitudes of the UCAVs were initialized randomly within specified ranges during training. The initial state settings of the UCAVs are shown in Table 4.

Table 4.

Initial states setting.

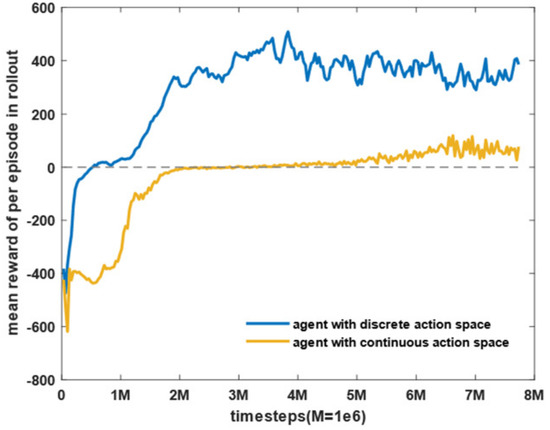

Figure 13 shows the training curves of the agents with different action spaces. The horizontal axis is the number of training time steps, and the vertical axis is the average reward per episode in the rollouts of the PPO algorithm, which reflects the convergence of training. As Figure 13 shows, the agent using the discrete action space quickly improved its average reward per episode, which stabilized at approximately 300. In contrast, the agent using the continuous action space had no prior experience and needed to learn flight control first; its average reward therefore increased slowly and stabilized at only approximately 50 in the later stage. The subsequent experiments in this paper used the discrete action space.

Figure 13.

Training curve of the agents using different action spaces.

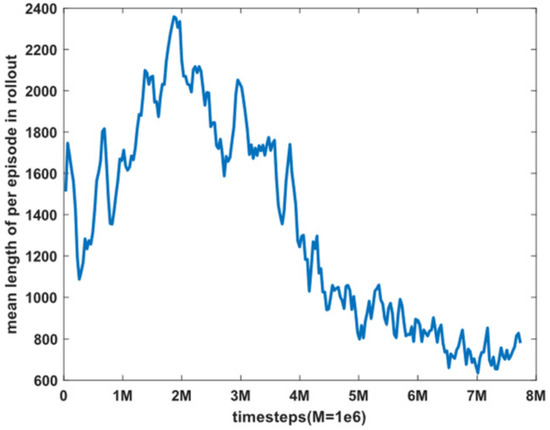

Figure 14 shows the average episode length, in steps, during training. The average episode length increased gradually in the early stage of training, decreased gradually once the number of training time steps exceeded 2 M, and finally stabilized at approximately 700 steps. Meanwhile, the average reward per episode in Figure 13 first rose and then stabilized; since the episodes became shorter while the total reward per episode held steady, the average reward received by the agent at each step gradually increased.

Figure 14.

Mean length per episode in the training process.

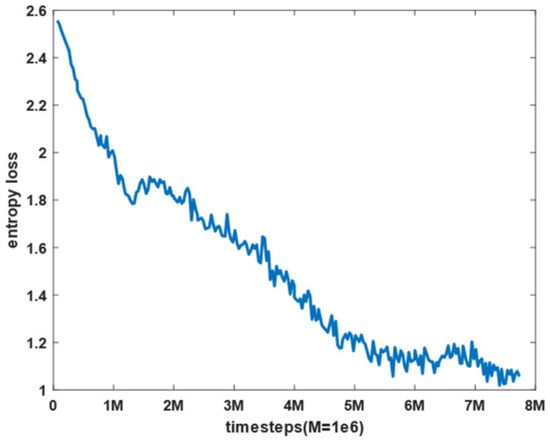

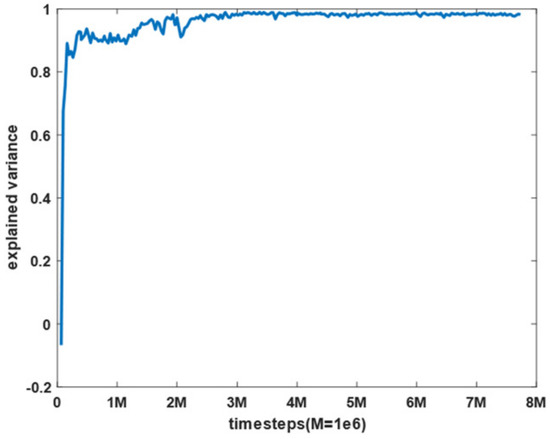

Figures 15 and 16 show the entropy loss and the explained variance of the PPO algorithm during training, respectively. The entropy loss reflects the randomness of the agent's actions. As Figure 15 shows, the entropy loss gradually dropped to a lower level after training began, which indicates that the agent's policy became relatively stable in the later stage of training. The explained variance reflects how accurately the value network of the PPO algorithm fits the returns; the closer it is to one, the higher the accuracy. Figure 16 shows that the explained variance rose rapidly at the beginning of training, continued to increase with the training time steps, and finally stabilized at approximately one. Together, Figures 15 and 16 indicate that the agent's networks converged to a high level of performance after training.

Figure 15.

Entropy loss of the PPO algorithm in the training process.

Figure 16.

Explained variance of the PPO algorithm in the training process.

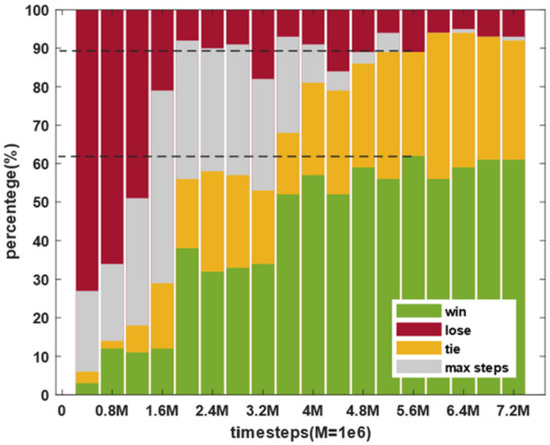

Figure 17 shows the results of 100 episodes of air combat confrontation between the agent and the blue UCAV using the greedy policy. The confrontation results are divided into four types: winning, losing, drawing and reaching the maximum number of steps of the episode. Winning means that the agent's UCAV shot down the enemy or the enemy flew beyond the boundaries of the combat area. Losing means that the enemy shot down the agent's UCAV or the agent's UCAV flew beyond the boundaries. Drawing means that the two UCAVs met the firing conditions at the same time and shot each other down, or both UCAVs flew beyond the boundaries. The horizontal axis is the number of training time steps, and the vertical axis is the percentage of each result. Green represents winning, red represents losing, yellow represents drawing, and grey represents reaching the maximum number of steps. As Figure 17 shows, the winning rate of the agent gradually rose as the number of training time steps increased and then stabilized. After training, the winning rate reached 62%, and the corresponding losing rate was only 11%. In addition, the average decision time per step was 0.98 ms for the PPO algorithm and 1.62 ms for the greedy algorithm, both far below the simulation step size of 0.1 s (100 ms), thus meeting the real-time requirements. It should be pointed out that even in the later stage of training, the greedy algorithm still maintained a non-negligible unbeaten rate for the blue UCAV, which shows that it also had a strong autonomous decision ability.
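The outcome rules above can be written as a small classifier plus a tally. This Python sketch is hypothetical: the function and argument names are ours, and treating any episode in which both sides are lost in the same step (for example, one UCAV shot down while the other leaves the combat area) as a draw is our assumption, since the text only defines the two symmetric cases.

```python
from collections import Counter
from enum import Enum
from typing import Optional


class Outcome(Enum):
    WIN = "win"
    LOSS = "loss"
    DRAW = "draw"
    MAX_STEPS = "max_steps"


def classify(agent_shot_down: bool, agent_out: bool,
             blue_shot_down: bool, blue_out: bool,
             step: int, max_steps: int) -> Optional[Outcome]:
    """Classify one episode from the agent's side; None means still running."""
    agent_lost = agent_shot_down or agent_out
    blue_lost = blue_shot_down or blue_out
    if agent_lost and blue_lost:
        # Assumption: any same-step loss on both sides counts as a draw.
        return Outcome.DRAW
    if blue_lost:
        return Outcome.WIN
    if agent_lost:
        return Outcome.LOSS
    if step >= max_steps:
        return Outcome.MAX_STEPS
    return None


def outcome_rates(outcomes: list) -> dict:
    """Percentage of each outcome type over a batch of finished episodes."""
    if not outcomes or any(o is None for o in outcomes):
        raise ValueError("need a non-empty list of finished episodes")
    counts = Counter(outcomes)
    return {o: 100.0 * counts[o] / len(outcomes) for o in Outcome}
```

Classifying each of the 100 evaluation episodes and passing the results to `outcome_rates` yields the four percentages plotted at each point of Figure 17.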

Figure 17.

Air combat confrontation results.

Figure 14 and Figure 17 show that in the early stage of training the agent lacked attack skills but learned to evade attacks from the blue UCAV, increasing its cumulative reward by surviving for more steps. As a result, episodes in the early stage of training often reached the maximum number of steps. Once training reached a certain level, the agent gradually learned attack skills, which allowed it both to defeat the blue UCAV and to finish episodes in fewer time steps. These results show that the maneuver decision method proposed in this paper enables a UCAV to learn a maneuver policy autonomously and defeat the enemy.

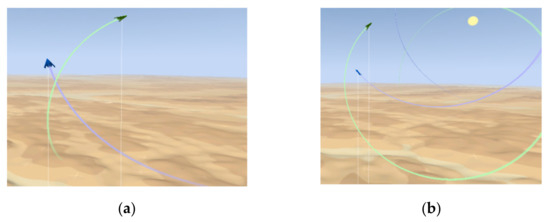

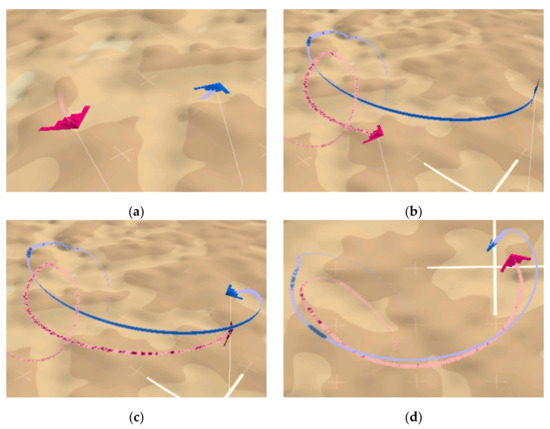

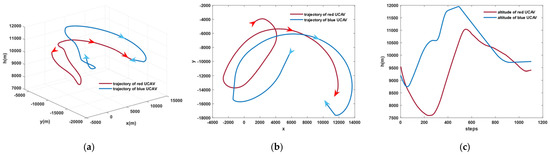

In the experiments, the air combat situation was displayed in real time by sending data to the Tacview software over the TCP protocol. Figure 18 shows an example of the real-time display, and Figure 19 shows the recorded trajectories of the two UCAVs for the same example. As Figure 18 and Figure 19 show, the agent had learned to combine a series of basic maneuvers, such as diving, climbing and circling, into tactical maneuvers and finally defeated the enemy.
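For readers reproducing the display, the sketch below builds the text lines of a Tacview ACMI 2.2 stream for one time frame. It reflects our understanding of the public ACMI text format (header lines, `#` time stamps, a `T=` transform listing longitude, latitude, altitude, roll, pitch and yaw) and should be checked against Tacview's documentation. The object IDs, names and colors are illustrative, the conversion from simulation coordinates to longitude and latitude is assumed to happen elsewhere, and the handshake Tacview requires for real-time telemetry over TCP is not shown.

```python
def acmi_header(reference_time: str) -> list:
    """Header of an ACMI 2.2 text stream (format as we understand it)."""
    return [
        "FileType=text/acmi/tacview",
        "FileVersion=2.2",
        # Object 0 carries global properties such as the reference time.
        f"0,ReferenceTime={reference_time}",
    ]


def acmi_frame(t: float, objects: dict) -> list:
    """Lines for one time frame.

    objects maps a hexadecimal object ID (illustrative) to
    (lon_deg, lat_deg, alt_m, roll_deg, pitch_deg, yaw_deg, name, color).
    """
    lines = [f"#{t:.2f}"]  # seconds elapsed since ReferenceTime
    for obj_id, (lon, lat, alt, roll, pitch, yaw, name, color) in objects.items():
        transform = f"{lon:.6f}|{lat:.6f}|{alt:.1f}|{roll:.1f}|{pitch:.1f}|{yaw:.1f}"
        lines.append(f"{obj_id},T={transform},Type=Air+FixedWing,Name={name},Color={color}")
    return lines
```

At each simulation step of 0.1 s, one frame per step would be joined with newlines and written to the telemetry connection after the header.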

Figure 18.

Real-time display example of air combat confrontation: (a) early stage of air combat; (b) middle stage of air combat; (c) later stage of air combat; (d) top view of the air combat.

Figure 19.

Trajectories for the air combat confrontation example: (a) two UCAVs; (b) top view of the two UCAVs; (c) altitudes of the two UCAVs.

5. Conclusions

In this paper, a UCAV air combat maneuver decision method based on the PPO algorithm was proposed. Firstly, a general framework for short-range UAV air combat confrontation was established, including a motion model of a fixed-wing aircraft and a situation assessment model of air combat. To verify the effectiveness of the method, an enemy maneuver policy based on a greedy algorithm was designed. Then, an action space based on a basic maneuver library and a state observation space were constructed for the agent, and a maneuver policy was generated by training with the PPO algorithm. A reward function with situation reward shaping was designed to accelerate the convergence of the algorithm. Finally, simulations of one-to-one short-range air combat in stochastic situations were carried out to verify the effectiveness of the PPO algorithm. The comparison of agents using different action spaces showed that a discrete action space may reduce the difficulty of flight control and improve learning efficiency. In 100 episodes of air combat confrontation against a blue UCAV whose greedy algorithm chases a moving target, the greedy algorithm showed a strong autonomous decision ability, but the PPO algorithm performed better: its winning rate reached 62%, and the corresponding losing rate was only 11%. In addition, the average decision time per step of the PPO algorithm was less than the simulation step size of 0.1 s, meeting the real-time requirements. The real-time display example showed that the agent learned to combine a series of basic maneuvers, such as diving, climbing and circling, into tactical maneuvers. The simulation results show that the proposed maneuver decision method enables a UCAV to learn a maneuver policy autonomously and defeat the enemy.

In addition, the proposed UCAV maneuver decision method is essentially a motion control method for a UAV. Therefore, it also has potential applications in other fields, including monitoring, communication organization, search and transportation [31,32,33].

Author Contributions

Conceptualization, K.Y. and W.D.; methodology, K.Y. and W.D.; software, K.Y. and M.C.; validation, K.Y., W.D. and M.C.; formal analysis, K.Y.; investigation, M.C.; resources, S.J. and R.L.; data curation, K.Y.; writing—original draft preparation, K.Y.; writing—review and editing, K.Y. and W.D.; visualization, K.Y. and M.C.; supervision, W.D., S.J. and R.L.; project administration, W.D., S.J. and R.L.; funding acquisition, W.D. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Experience-Based Reinforcement Learning Approach for UAV Control (grant number: 61806217).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Acknowledgments

Thank you to our partners for their trust and collaboration and for facilitating our work’s completion. Thank you to the editors for their patience, guidance and corrections. Thank you to Wenhan Dong, who supported us in this work the entire time.

Conflicts of Interest

The authors declare no conflict of interest.

Nomenclature

| Description | Symbol |
|---|---|
| position vector of a UCAV | |
| longitudinal overload | |
| normal overload | |
| control input | |
| line of sight | |
| antenna train angle | |
| aspect angle | |
| heading crossing angle | |
| maximum attack angle of a UCAV | |
| attack range of a UCAV | |
| air combat situation | |
| maneuver policy of a UCAV | |
| action vector of a UCAV | |
| value function of reinforcement learning | |
| loss function of a neural network | |
| probability ratio of a policy update | |
| action space of reinforcement learning | |
| state observation space of reinforcement learning | |
| discount factor of reinforcement learning | |
| value function squared-error loss coefficient | |
| entropy bonus coefficient | |
| clip range of PPO | |
| generalized advantage estimation factor | |

References

1. McManus, J.W.; Chappell, A.R.; Arbuckle, P.D. Situation Assessment in the Paladin Tactical Decision Generation System. In AGARD Conference AGARD-CP-504: Air Vehicle Mission Control and Management; NATO: Amsterdam, The Netherlands, 1992.

2. Burgin, G.H. Improvements to the Adaptive Maneuvering Logic Program; NASA CR-3985; NASA: Washington, DC, USA, 1986.

3. Ernest, N.; Carroll, D.; Schumacher, C.; Clark, M.; Cohen, K.; Lee, G. Genetic Fuzzy based Artificial Intelligence for Unmanned Combat Aerial Vehicle Control in Simulated Air Combat Missions. J. Def. Manag. 2016, 6, 1–7.

4. DARPA. Air Combat Evolution. Available online: https://www.darpa.mil/program/air-combat-evolution (accessed on 24 June 2022).

5. Vajda, S. Differential Games. A Mathematical Theory with Applications to Warfare and Pursuit, Control and Optimization. By Rufus Isaacs. Pp. xxii, 384. 113s. 1965. (Wiley). Math. Gaz. 1967, 51, 80–81.

6. Mendoza, L. Qualitative Differential Equations. Dict. Bioinform. Comput. Biol. 2004, 68, 421–430.

7. Park, H.; Lee, B.-Y.; Tahk, M.-J.; Yoo, D.-W. Differential Game Based Air Combat Maneuver Generation Using Scoring Function Matrix. Int. J. Aeronaut. Space Sci. 2016, 17, 204–213.

8. Bullock, H.E. ACE: The Airborne Combat Expert Systems: An Exposition in Two Parts. Master’s Thesis, Defense Technical Information Center, Fort Belvoir, VA, USA, 1986.

9. Chin, H.H. Knowledge-based system of supermaneuver selection for pilot aiding. J. Aircr. 1989, 26, 1111–1117.

10. Wang, R.; Gao, Z. Research on Decision System in Air Combat Simulation Using Maneuver Library. Flight Dyn. 2009, 27, 72–75.

11. Xuan, W.; Weijia, W.; Kepu, S.; Minwen, W. UAV Air Combat Decision Based on Evolutionary Expert System Tree. Ordnance Ind. Autom. 2019, 38, 42–47.

12. Huang, C.; Dong, K.; Huang, H.; Tang, S.; Zhang, Z. Autonomous air combat maneuver decision using Bayesian inference and moving horizon optimization. J. Syst. Eng. Electron. 2018, 29, 86–97.

13. Cao, Y.; Kou, Y.-X.; Xu, A.; Xi, Z.-F. Target Threat Assessment in Air Combat Based on Improved Glowworm Swarm Optimization and ELM Neural Network. Int. J. Aerosp. Eng. 2021, 2021, 4687167.

14. Kaneshige, J.; Krishnakumar, K. Artificial immune system approach for air combat maneuvering. In Proceedings of the SPIE 6560, Intelligent Computing: Theory and Applications V, Orlando, FL, USA, 9–13 April 2007; Volume 6560, p. 656009.

15. Başpinar, B.; Koyuncu, E. Assessment of Aerial Combat Game via Optimization-Based Receding Horizon Control. IEEE Access 2020, 8, 35853–35863.

16. François-Lavet, V.; Henderson, P.; Islam, R.; Bellemare, M.G.; Pineau, J. An Introduction to Deep Reinforcement Learning. Found. Trends Mach. Learn. 2018, 11, 219–354.

17. McGrew, J.S.; How, J.; Williams, B.C.; Roy, N. Air-Combat Strategy Using Approximate Dynamic Programming. J. Guid. Control Dyn. 2010, 33, 1641–1654.

18. Liu, P.; Ma, Y. A Deep Reinforcement Learning Based Intelligent Decision Method for UCAV Air Combat. In Modeling, Design and Simulation of Systems, Proceedings of the 17th Asia Simulation Conference, AsiaSim 2017, Malacca, Malaysia, 27–29 August 2017; Communications in Computer and Information Science; Springer: Singapore, 2017; pp. 274–286.

19. Zhang, X.; Liu, G.; Yang, C.; Wu, J. Research on Air Confrontation Maneuver Decision-Making Method Based on Reinforcement Learning. Electronics 2018, 7, 279.

20. Yang, Q.; Zhang, J.; Shi, G.; Hu, J.; Wu, Y. Maneuver Decision of UAV in Short-Range Air Combat Based on Deep Reinforcement Learning. IEEE Access 2019, 8, 363–378.

21. Kong, W.; Zhou, D.; Yang, Z.; Zhao, Y.; Zhang, K. UAV Autonomous Aerial Combat Maneuver Strategy Generation with Observation Error Based on State-Adversarial Deep Deterministic Policy Gradient and Inverse Reinforcement Learning. Electronics 2020, 9, 1121.

22. Schulman, J.; Wolski, F.; Dhariwal, P.; Radford, A.; Klimov, O. Proximal Policy Optimization Algorithms. arXiv 2017, arXiv:1707.06347.

23. Hu, J.; Wang, L.; Hu, T.; Guo, C.; Wang, Y. Autonomous Maneuver Decision Making of Dual-UAV Cooperative Air Combat Based on Deep Reinforcement Learning. Electronics 2022, 11, 467.

24. Austin, F.; Carbone, G.; Falco, M.; Hinz, H.; Lewis, M. Automated maneuvering decisions for air-to-air combat. In Proceedings of the Guidance, Navigation and Control Conference, Monterey, CA, USA, 17–19 August 1987.

25. Sutton, R.S.; Barto, A.G. Reinforcement Learning: An Introduction; MIT Press: Cambridge, MA, USA, 2018.

26. Paczkowski, M. Low-Friction Composite Creping Blades Improve Tissue Properties. In Proceedings of the Pulp and Paper, Stockholm, Sweden, 9 October 1996; Volume 70.

27. Williams, R.J. Simple Statistical Gradient-Following Algorithms for Connectionist Reinforcement Learning. Mach. Learn. 1992, 8, 229–256.

28. Mnih, V.; Badia, A.P.; Mirza, M.; Graves, A.; Lillicrap, T.; Harley, T.; Silver, D.; Kavukcuoglu, K. Asynchronous Methods for Deep Reinforcement Learning. In Proceedings of the 33rd International Conference on Machine Learning, ICML 2016, New York, NY, USA, 19–24 June 2016; Volume 48, pp. 1928–1937.

29. Schulman, J.; Moritz, P.; Levine, S.; Jordan, M.; Abbeel, P. High-Dimensional Continuous Control Using Generalized Advantage Estimation. In Proceedings of the 4th International Conference on Learning Representations, ICLR 2016–Conference Track Proceedings, San Juan, Puerto Rico, 2–4 May 2016; pp. 1–14.

30. Riedmiller, M.; Hafner, R.; Lampe, T.; Neunert, M.; Degrave, J.; Van De Wiele, T.; Mnih, V.; Heess, N.; Springenberg, J.T. Learning by Playing Solving Sparse Reward Tasks from Scratch. In Proceedings of the 35th International Conference on Machine Learning, ICML 2018, Stockholm, Sweden, 10–15 July 2018; Volume 80, pp. 4344–4353.

31. Mukhamediev, R.I.; Symagulov, A.; Kuchin, Y.; Zaitseva, E.; Bekbotayeva, A.; Yakunin, K.; Assanov, I.; Levashenko, V.; Popova, Y.; Akzhalova, A.; et al. Review of Some Applications of Unmanned Aerial Vehicles Technology in the Resource-Rich Country. Appl. Sci. 2021, 11, 10171.

32. Agarwal, A.; Kumar, S.; Singh, D. Development of Neural Network Based Adaptive Change Detection Technique for Land Terrain Monitoring with Satellite and Drone Images. Def. Sci. J. 2019, 69, 474–480.

33. Smith, M.L.; Smith, L.N.; Hansen, M.F. The quiet revolution in machine vision–A state-of-the-art survey paper, including historical review, perspectives, and future directions. Comput. Ind. 2021, 130, 103472.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).