Crime Scene Shoeprint Image Retrieval: A Review

Abstract

1. Introduction

- (1) Each kind of method is reviewed and compared in terms of its feature extraction method, performance, and other aspects. This may help newcomers get started in this research area quickly.

- (2) Publicly available datasets are described in terms of their attributes, size, and other details.

- (3) Current research issues and challenges associated with these methods are discussed comprehensively.

- (4) Potential directions for future work are explored to advance research in this area.

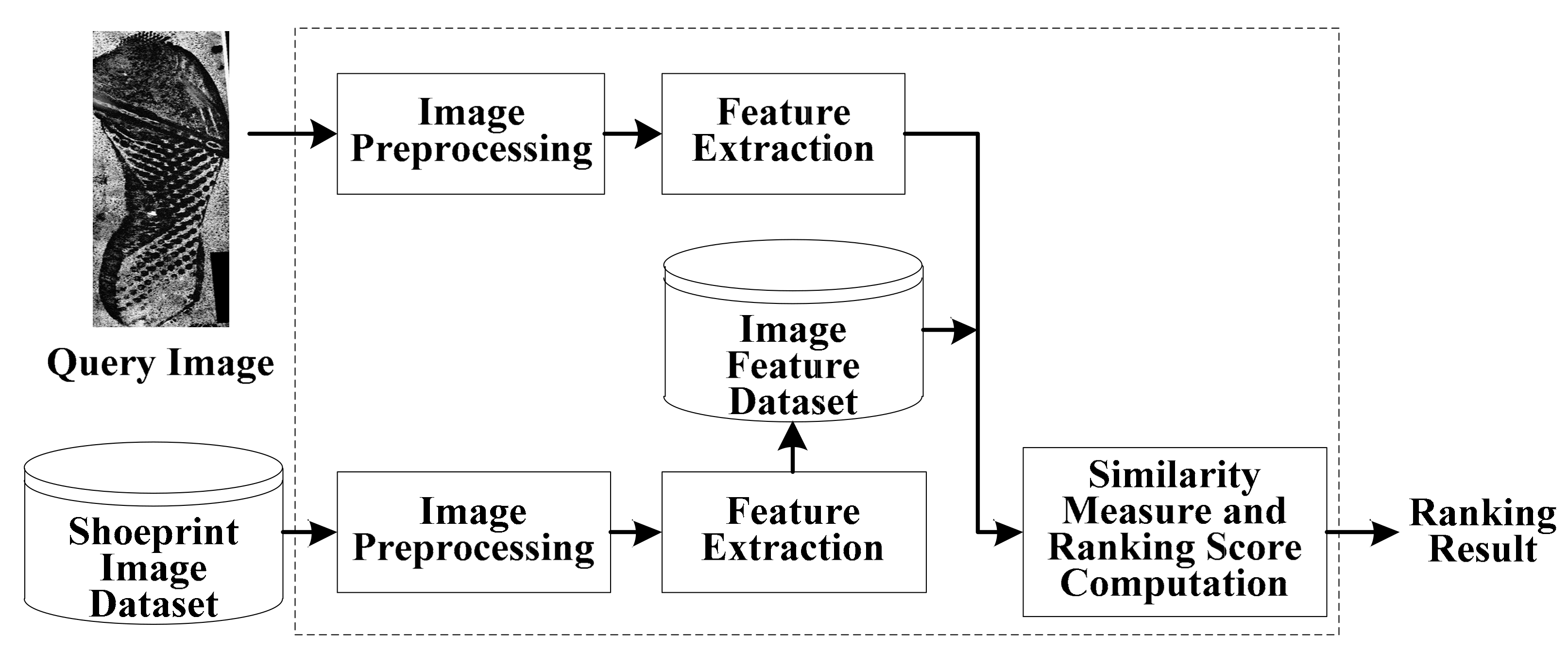

2. Shoeprint Retrieval Method

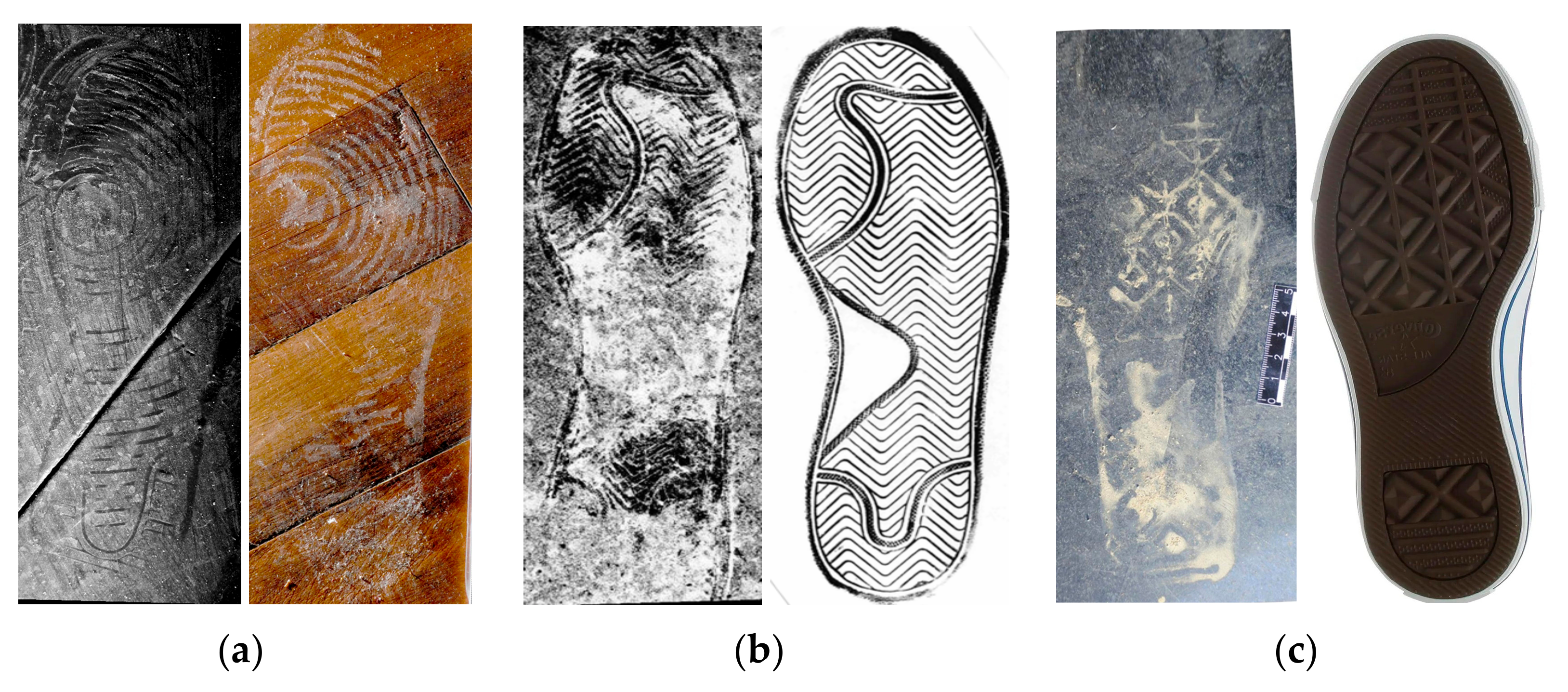

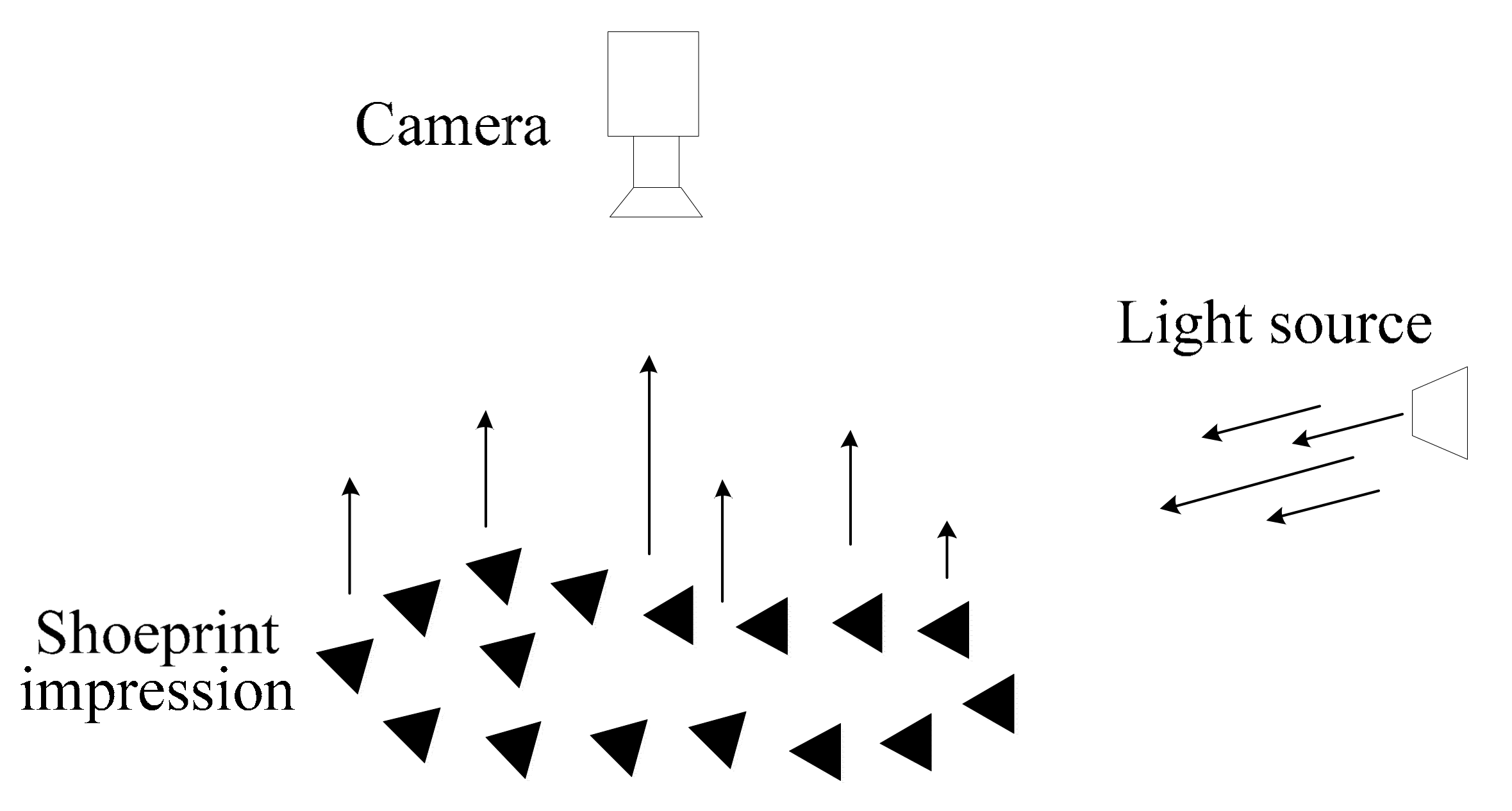

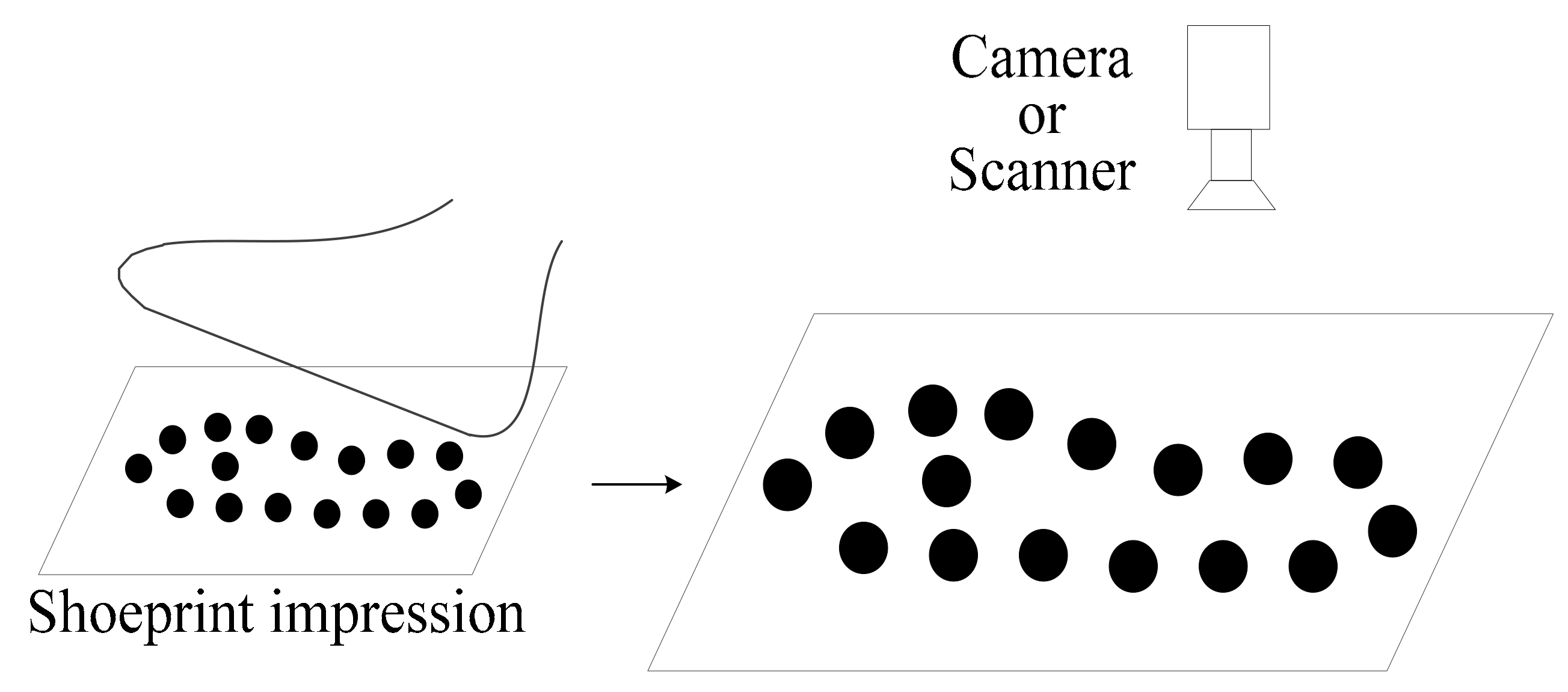

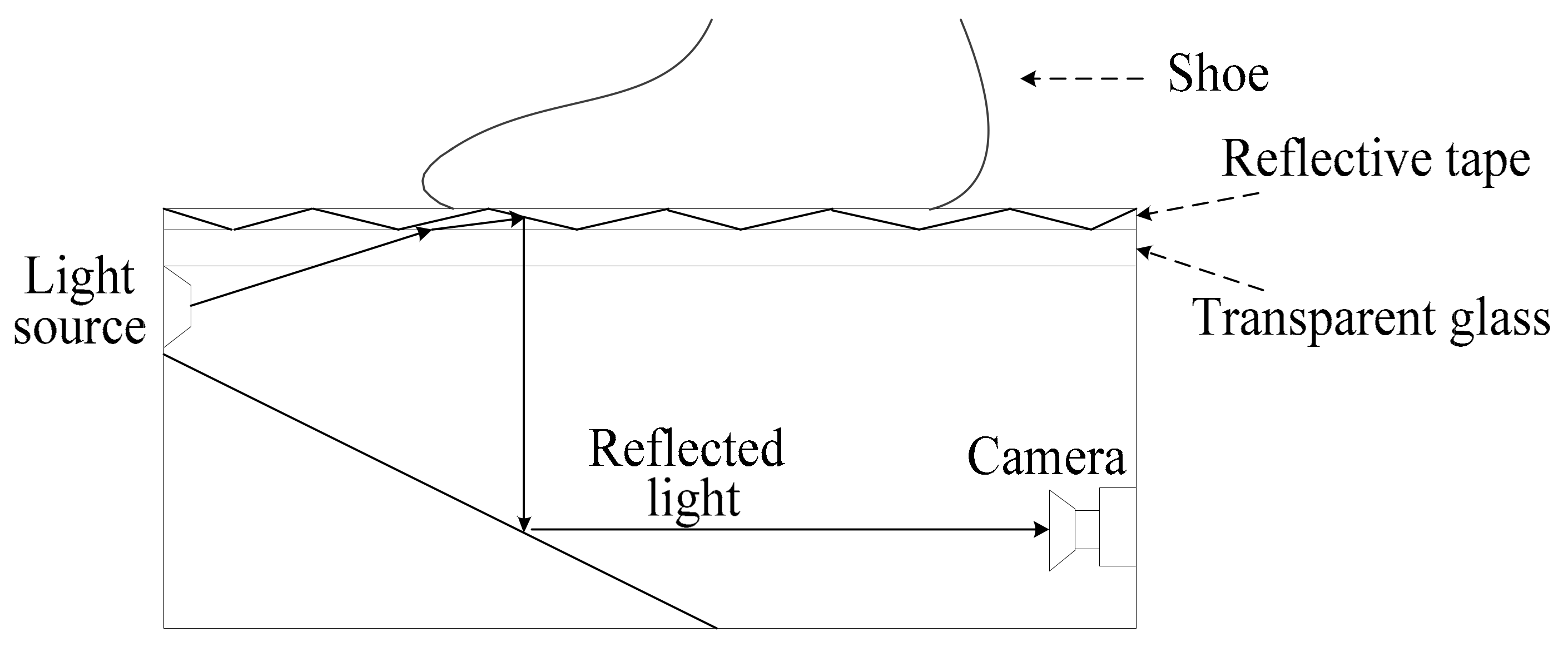

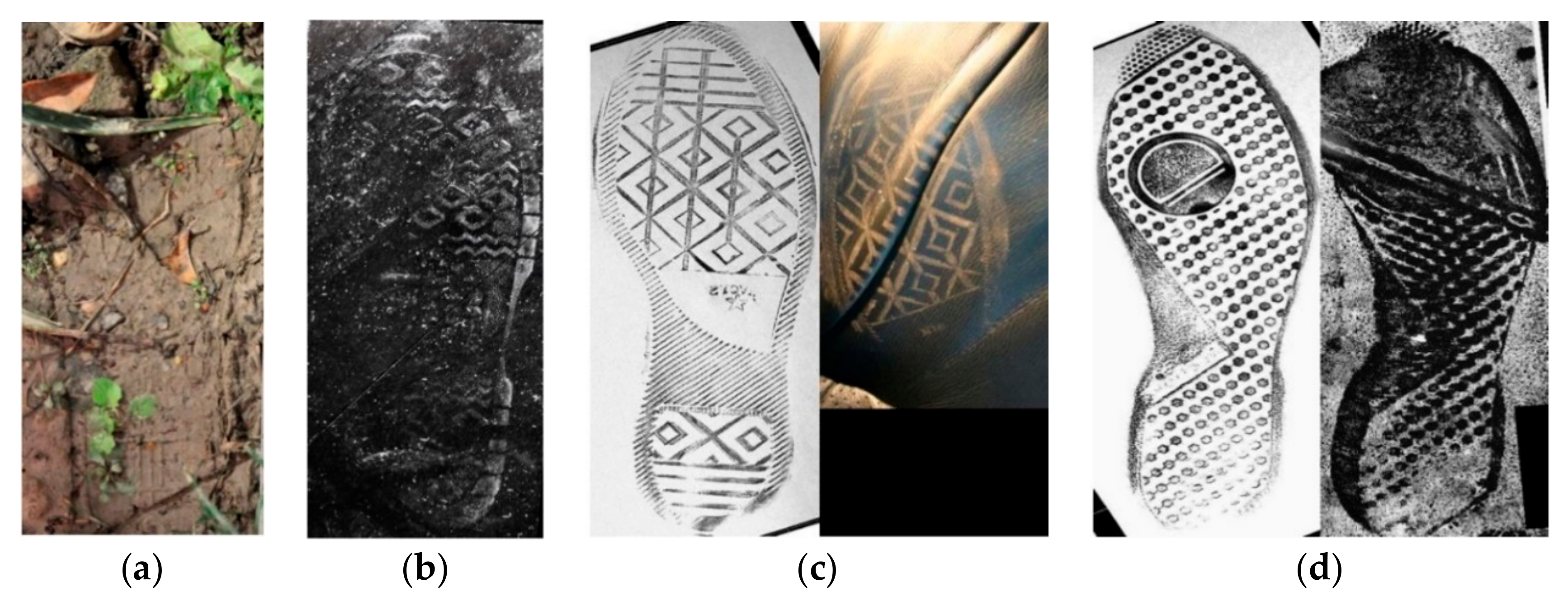

2.1. Shoeprint Image Acquisition

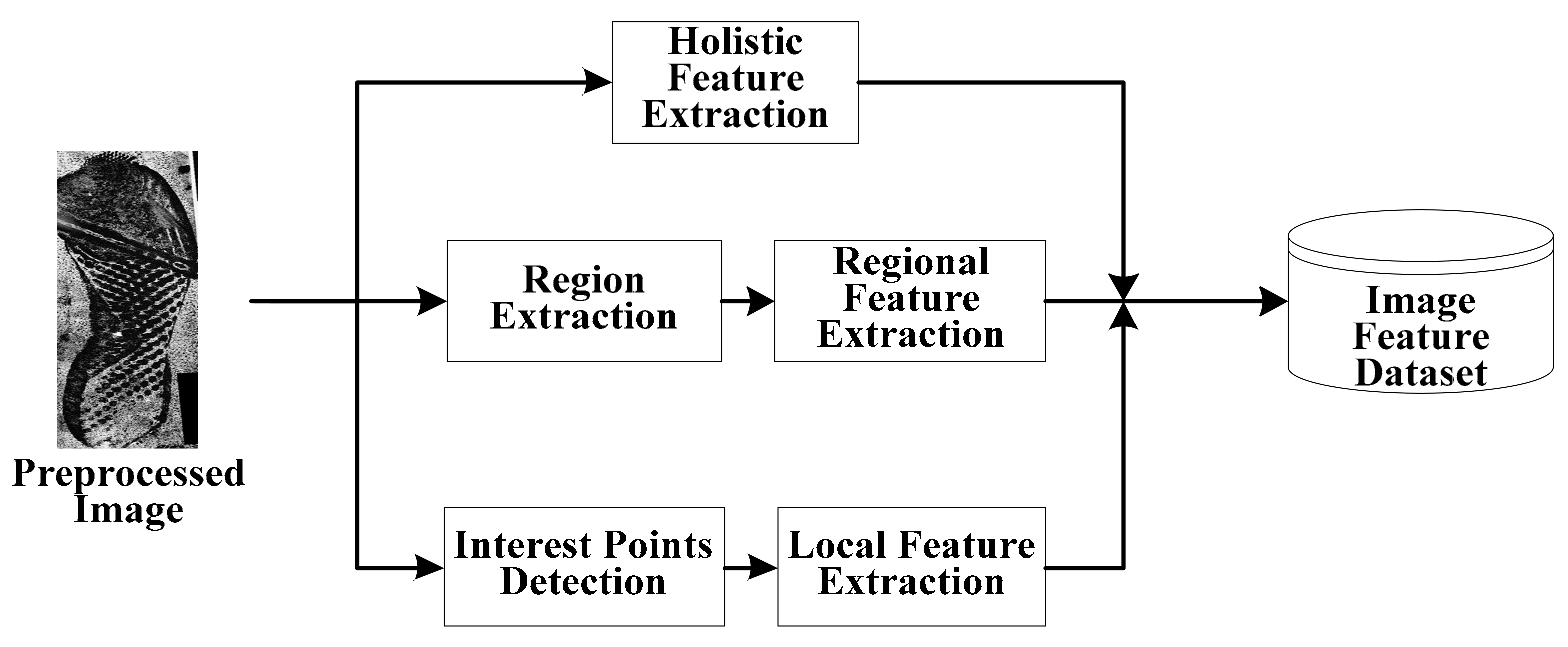

2.2. Feature Extraction Methods

2.2.1. Holistic-Feature-Based Methods
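
Holistic features summarize the whole print with a single vector. The comparison table of representative methods lists Fourier–Mellin features for several entries, so a rough illustration of that idea follows. It is a sketch under stated assumptions, not the pipeline of any cited paper: the input is a grayscale image held in a NumPy array, log-polar resampling uses nearest-neighbour lookup, and the helper name `fourier_mellin_descriptor` and its grid sizes are hypothetical. The FFT magnitude removes dependence on translation; resampling it on a log-polar grid turns rotation and scaling into shifts along the angle and log-radius axes; a second FFT magnitude then discards those shifts.

```python
import numpy as np


def fourier_mellin_descriptor(img, n_radii=32, n_angles=64):
    """Hypothetical Fourier-Mellin-style holistic descriptor (illustrative only)."""
    img = np.asarray(img, dtype=float)
    img = img - img.mean()  # remove the DC term so it does not dominate the spectrum

    # Step 1: |FFT| is unchanged by a (circular) translation of the print.
    mag = np.abs(np.fft.fftshift(np.fft.fft2(img)))

    # Step 2: resample |FFT| on a log-polar grid (nearest neighbour), so that
    # rotation and scaling of the print become shifts along the two grid axes.
    h, w = mag.shape
    cy, cx = h // 2, w // 2
    max_r = min(cy, cx) - 1
    radii = np.exp(np.linspace(0.0, np.log(max_r), n_radii))
    # Half a turn suffices: the magnitude spectrum of a real image is point-symmetric.
    angles = np.linspace(0.0, np.pi, n_angles, endpoint=False)
    rr, aa = np.meshgrid(radii, angles, indexing="ij")
    ys = np.clip(np.rint(cy + rr * np.sin(aa)).astype(int), 0, h - 1)
    xs = np.clip(np.rint(cx + rr * np.cos(aa)).astype(int), 0, w - 1)
    log_polar = np.log1p(mag[ys, xs])  # compress the dynamic range

    # Step 3: |FFT| of the log-polar map discards those shifts
    # (exactly for circular shifts, only approximately for real rotation/scale).
    desc = np.abs(np.fft.fft2(log_polar)).ravel()
    return desc / (np.linalg.norm(desc) + 1e-12)  # unit-length feature vector
```

Two prints could then be compared with, for example, a correlation coefficient between their descriptors. Because of discrete sampling and image borders, invariance to real rotations and scale changes is only approximate.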

2.2.2. Regional-Feature-Based Methods
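
One simple way to describe a print through its parts rather than one global vector is to split it into a grid of blocks and concatenate a histogram per block. The comparison table includes a local binary pattern (LBP) method, so the sketch below uses a basic 8-neighbour LBP operator with 256-bin histograms. This is an assumption-laden illustration, not the modified multi-block LBP of the cited work: the image is a plain 2-D list of gray values, and the helper names `lbp_codes` and `block_lbp_histogram` and the default 2 x 2 grid are hypothetical.

```python
def lbp_codes(img):
    """Basic 8-neighbour LBP code for every interior pixel of a 2-D list."""
    h, w = len(img), len(img[0])
    # Neighbour offsets, clockwise from the top-left pixel; each sets one bit.
    offsets = [(-1, -1), (-1, 0), (-1, 1), (0, 1), (1, 1), (1, 0), (1, -1), (0, -1)]
    codes = []
    for y in range(1, h - 1):
        row = []
        for x in range(1, w - 1):
            centre = img[y][x]
            code = 0
            for bit, (dy, dx) in enumerate(offsets):
                if img[y + dy][x + dx] >= centre:
                    code |= 1 << bit
            row.append(code)
        codes.append(row)
    return codes


def block_lbp_histogram(img, grid=(2, 2)):
    """Concatenate one normalised 256-bin LBP histogram per grid block."""
    codes = lbp_codes(img)
    h, w = len(codes), len(codes[0])
    gy, gx = grid
    feature = []
    for by in range(gy):
        for bx in range(gx):
            hist = [0] * 256
            for y in range(by * h // gy, (by + 1) * h // gy):
                for x in range(bx * w // gx, (bx + 1) * w // gx):
                    hist[codes[y][x]] += 1
            total = sum(hist) or 1  # guard against empty blocks
            feature.extend(v / total for v in hist)
    return feature
```

Keeping one histogram per block preserves coarse spatial layout that a single global histogram would lose, at the cost of sensitivity to misalignment between query and reference.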

2.2.3. Interest-Point-Feature-Based Methods
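
Interest-point methods typically detect keypoints (the comparison table lists Harris, Hessian and SIFT), match their descriptors, and then verify the matches geometrically, with RANSAC named for several of the listed methods. The sketch below covers only that verification step, under assumptions that are not taken from any cited paper: putative matches are already given as coordinates stored as complex numbers `x + 1j*y`, the geometric model is a 2-D similarity transform (rotation, uniform scale, translation) fitted from two correspondences, and the inlier count serves as the match score. The function names, tolerance and iteration count are illustrative.

```python
import random


def fit_similarity(p1, p2, q1, q2):
    """Fit q = a * p + b from two correspondences (points as complex numbers).

    The complex factor a encodes rotation and uniform scale; b is the translation.
    Returns None when the two source points coincide.
    """
    if p1 == p2:
        return None
    a = (q2 - q1) / (p2 - p1)
    return a, q1 - a * p1


def ransac_similarity(src, dst, n_iter=200, tol=1.0, seed=0):
    """RANSAC over putative matches src[k] -> dst[k].

    Returns (best_model, inlier_indices); len(inlier_indices) can serve as a
    match score between a query print and a reference print.
    """
    rng = random.Random(seed)
    best_model, best_inliers = None, []
    for _ in range(n_iter):
        i, j = rng.sample(range(len(src)), 2)  # minimal sample: two matches
        model = fit_similarity(src[i], src[j], dst[i], dst[j])
        if model is None:
            continue
        a, b = model
        # Keep matches whose transformed source point lands within tol of its destination.
        inliers = [k for k, (p, q) in enumerate(zip(src, dst)) if abs(a * p + b - q) < tol]
        if len(inliers) > len(best_inliers):
            best_model, best_inliers = model, inliers
    return best_model, best_inliers
```

In a complete system the putative matches would come from descriptor matching, and refitting the model on all inliers by least squares is a common final step.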

2.3. Similarity Evaluation and Ranking Score Computation
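
Once every print is represented by a feature vector, retrieval amounts to scoring each reference image against the query and sorting the scores. The matching methods in the comparison table include a chi-squared test and normalized cross-correlation; the sketch below shows a symmetric chi-squared histogram distance, a zero-mean normalized cross-correlation (NCC) between flat vectors, and a ranking step. The helper names and the small epsilon are assumptions, and applying NCC to flat vectors is a simplification chosen for brevity.

```python
import math


def chi_squared_distance(h1, h2, eps=1e-12):
    """Symmetric chi-squared distance between two histograms (smaller = more similar)."""
    return 0.5 * sum((a - b) ** 2 / (a + b + eps) for a, b in zip(h1, h2))


def ncc(u, v):
    """Zero-mean normalized cross-correlation of two equal-length vectors, in [-1, 1]."""
    n = len(u)
    mu, mv = sum(u) / n, sum(v) / n
    du = [a - mu for a in u]
    dv = [b - mv for b in v]
    num = sum(a * b for a, b in zip(du, dv))
    den = math.sqrt(sum(a * a for a in du) * sum(b * b for b in dv))
    return num / den if den > 0 else 0.0


def rank_gallery(query, gallery, score, higher_is_better):
    """Return gallery indices ordered from best to worst match."""
    scores = [score(query, g) for g in gallery]
    return sorted(range(len(gallery)), key=lambda i: scores[i], reverse=higher_is_better)
```

The `higher_is_better` flag matters because a distance such as chi-squared ranks ascending, while a similarity such as NCC ranks descending.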

3. Datasets and Evaluation Metrics

3.1. Publicly Available Datasets

3.1.1. FID-300 Dataset

3.1.2. CS Dataset

3.2. Evaluation Metrics
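
The Performance column of the comparison table reports values such as 93.5%@2% or 97%@5. A common reading of this notation, and the one assumed here, is the cumulative match score: the fraction of queries whose correct reference appears within the top k positions of the ranked list, or within the top k percent of the gallery. Plotting it for increasing k gives the cumulative match characteristic (CMC) curve used in the FERET evaluation methodology (Phillips et al., in the references). The sketch below computes both variants from the 1-based rank of the correct reference for each query; the helper names and the rounding up of the percentage cut-off are assumptions.

```python
import math


def cumulative_match_score(true_ranks, k):
    """Fraction of queries whose correct match is ranked within the top k (1-based ranks)."""
    return sum(1 for r in true_ranks if r <= k) / len(true_ranks)


def cms_at_percent(true_ranks, gallery_size, percent):
    """Cumulative match score within the top `percent` % of the gallery (cut-off rounded up)."""
    k = math.ceil(gallery_size * percent / 100)
    return cumulative_match_score(true_ranks, k)


def cmc_curve(true_ranks, max_rank):
    """Cumulative match characteristic: the score for k = 1..max_rank."""
    return [cumulative_match_score(true_ranks, k) for k in range(1, max_rank + 1)]
```

For example, with a gallery of 1175 reference images, a top-1% cut-off corresponds to the first 12 ranked images under this rounding convention.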

4. Research Challenges and Discussions

4.1. Research Challenges

4.1.1. Limited Data

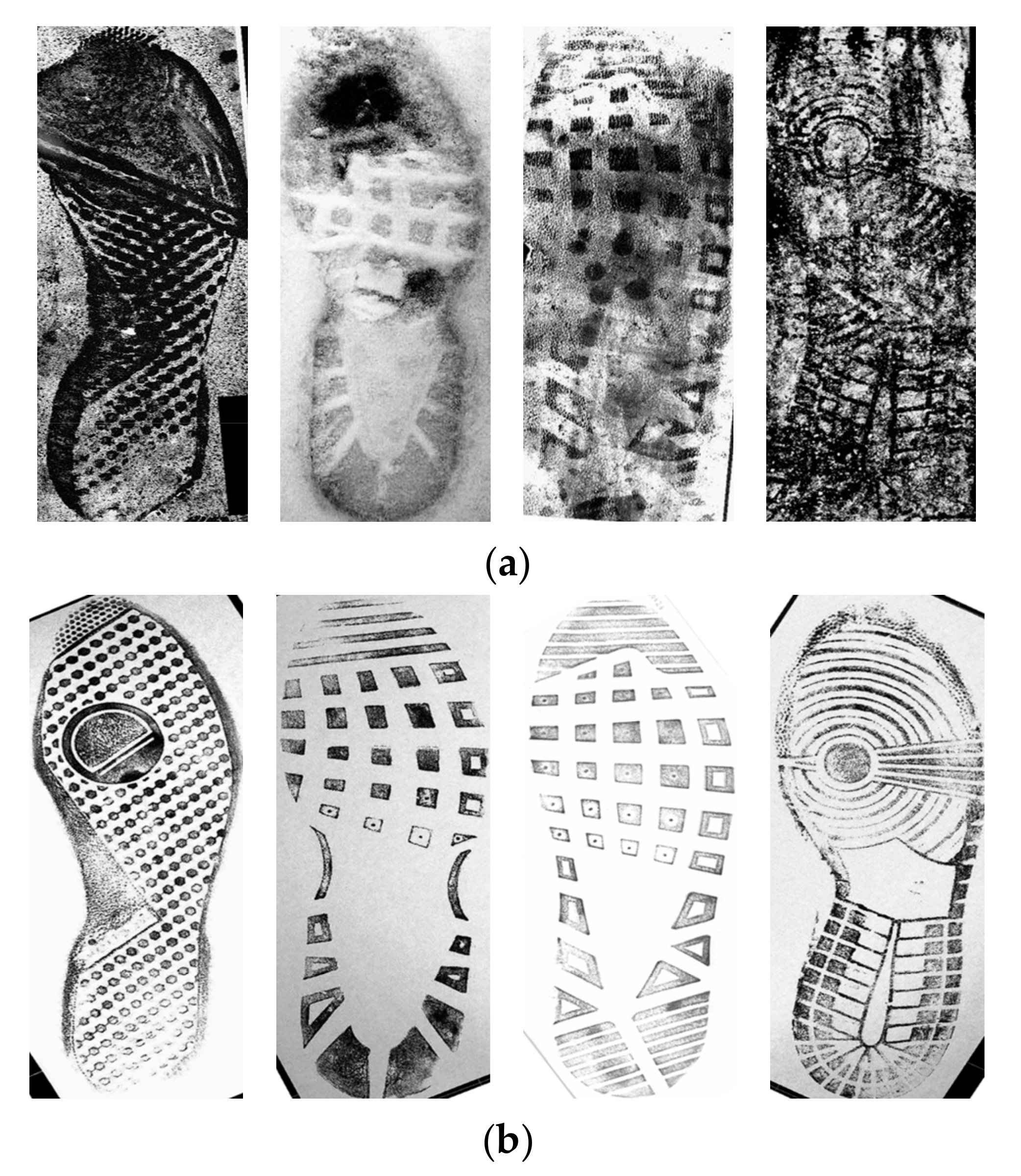

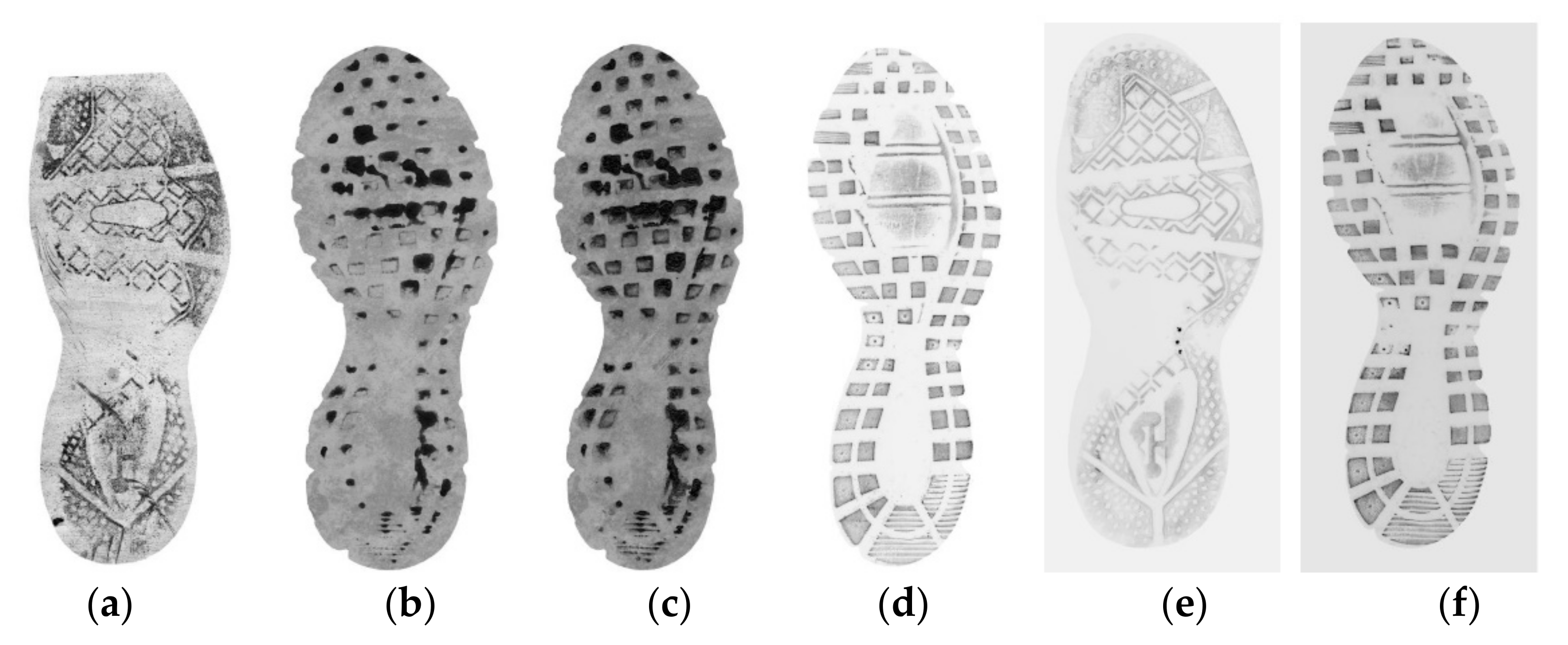

4.1.2. Degraded Images

4.1.3. Large Intra-Class Variations

4.2. Discussions

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

1. Liu, Y.; Hu, D.; Fan, J.; Wang, F.; Zhang, D. Multi-feature fusion for crime scene investigation image retrieval. In Proceedings of the IEEE International Conference on Digital Image Computing: Techniques and Applications, Sydney, NSW, Australia, 29 November–1 December 2017; pp. 1–7.

2. Benecke, M. DNA typing in forensic medicine and in criminal investigations: A current survey. Naturwissenschaften 1997, 84, 181–188.

3. Robertson, J.R. Forensic Examination of Hair; CRC Press: Boca Raton, FL, USA, 2002.

4. Buckleton, J.S.; Bright, J.-A.; Taylor, D. Forensic DNA Evidence Interpretation; CRC Press: Boca Raton, FL, USA, 2016.

5. Robertson, B.; Vignaux, G.A.; Berger, C.E. Interpreting Evidence: Evaluating Forensic Science in the Courtroom; John Wiley & Sons: Hoboken, NJ, USA, 2016.

6. Locard, E. The analysis of dust traces. Am. J. Police Sci. 1930, 1, 276–298.

7. Cervelli, F.; Dardi, F.; Carrato, S. Comparison of footwear retrieval systems for synthetic and real shoe mark. In Proceedings of the International Symposium on Image and Signal Processing and Analysis, Salzburg, Austria, 16–18 September 2009; pp. 534–542.

8. Bodziak, W.J. Footwear Impression Evidence: Detection, Recovery and Examination, 2nd ed.; CRC Press: Boca Raton, FL, USA, 2000.

9. Rankin, B. Footwear marks-a step by step review. Forensic Sci. Soc. 1998, 32, 54–70.

10. Thompson, T.; Black, S. Forensic Human Identification: An Introduction; CRC Press: Boca Raton, FL, USA, 2006.

11. Hassan, M.; Wang, Y.; Wang, D.; Li, D.; Liang, Y.; Zhou, Y.; Xu, D. Deep learning analysis and age prediction from shoeprints. Forensic Sci. Int. 2021, 327, 110987.

12. Francis, X.; Sharifzadeh, H.; Newton, A.; Baghaei, N.; Varastehpour, S. Learning wear patterns on footwear outsoles using convolutional neural networks. In Proceedings of the 18th IEEE International Conference on Trust, Security and Privacy in Computing and Communications/13th IEEE International Conference on Big Data Science and Engineering, Rotorua, New Zealand, 5–8 August 2019; pp. 450–457.

13. Speir, J.A.; Richetelli, N.; Fagert, M.; Hite, M.; Bodziak, W.J. Quantifying randomly acquired characteristics on outsoles in terms of shape and position. Forensic Sci. Int. 2016, 266, 399–411.

14. Ribaux, O.; Girod, A. Forensic intelligence and crime analysis. Law Probab. Risk 2003, 2, 47–63.

15. Ribaux, O.; Baylon, A.; Roux, C.; Delémont, O.; Lock, E.; Zingg, C.; Margot, P. Intelligence-led crime scene processing. Part I: Forensic intelligence. Forensic Sci. Int. 2010, 195, 10–16.

16. Geradts, Z.; Keijzer, J. The image-database REBEZO for shoeprint with developments on automatic classification of shoe outsole designs. Forensic Sci. Int. 1996, 79, 21–23.

17. Budka, M.; Ashraf, A.W.U.; Bennett, M.; Neville, S.; Mackrill, A. Deep multilabel CNN for forensic footwear impression descriptor identification. Appl. Soft Comput. 2021, 109, 107496.

18. Srihari, S.N. Analysis of Footwear Impression Evidence. 2011. Available online: https://www.ojp.gov/pdffiles1/nij/grants/233981.pdf (accessed on 10 July 2022).

19. Rida, I.; Al-Maadeed, N.; Al-Maadeed, S.; Bakshi, S. A comprehensive overview of feature representation for biometric recognition. Multimed. Tools Appl. 2020, 79, 4867–4890.

20. Ramakrishnan, V.; Srihari, S. Extraction of shoe-print patterns from impression evidence using conditional random fields. In Proceedings of the 19th IEEE International Conference on Pattern Recognition (ICPR), Tampa, FL, USA, 8–12 December 2008; pp. 1–4.

21. Francis, X.; Sharifzadeh, H.; Newton, A.; Baghaei, N.; Varastehpour, S. Feature enhancement and denoising of a forensic shoeprint dataset for tracking wear-and-tear effects. In Proceedings of the IEEE International Symposium on Signal Processing and Information Technology, Ajman, United Arab Emirates, 10–12 December 2019; pp. 1–5.

22. Guo, T.; Tang, Y.; Guo, W. Planar shoeprint segmentation based on the multiplicative intrinsic component optimization. In Proceedings of the 3rd International Conference on Image, Vision and Computing, Chongqing, China, 27–29 June 2018; pp. 283–287.

23. Wang, X.N.; Wu, Y.J.; Zhang, T. Multi-Layer Feature Based Shoeprint Verification Algorithm for Camera Sensor Images. Sensors 2019, 19, 2491.

24. Rida, I.; Fei, L.; Proença, H.; Nait-Ali, A.; Hadid, A. Forensic shoe-print identification: A brief survey. arXiv 2019, arXiv:1901.01431.

25. Kong, B.; Supančič, J.; Ramanan, D. Cross-Domain forensic shoeprint matching. In Proceedings of the British Machine Vision Conference, London, UK, 4–7 September 2017; pp. 128–135.

26. Kong, B.; Supančič, J.; Ramanan, D.; Fowlkes, C.C. Cross-Domain Image Matching with Deep Feature Maps. Int. J. Comput. Vis. 2019, 127, 1738–1750.

27. Richetelli, N.; Lee, M.C.; Lasky, C.A.; Gump, M.E.; Speir, J.A. Classification of footwear outsole patterns using Fourier transform and local interest points. Forensic Sci. Int. 2017, 275, 102–109.

28. Kortylewski, A.; Albrecht, T.; Vetter, T. Unsupervised footwear impression analysis and retrieval from crime scene data. In Proceedings of the Asian Conference on Computer Vision, Singapore, 1–5 November 2014; pp. 644–658.

29. Kortylewski, A.; Vetter, T. Probabilistic Compositional Active Basis Models for Robust Pattern Recognition. In Proceedings of the 27th British Machine Vision Conference (BMVC), York, UK, 19–22 September 2016.

30. Kortylewski, A. Model-Based Image Analysis for Forensic Shoe Print Recognition. Ph.D. Dissertation, Department Computer Graphic Bilder Kennung, University of Basel, Basel, Switzerland, 2017.

31. Alizadeh, S.; Kose, C. Automatic retrieval of shoeprint images using blocked sparse representation. Forensic Sci. Int. 2017, 277, 103–114.

32. Ma, Z.; Ding, Y.; Wen, S.; Xie, J.; Jin, Y.; Si, Z.; Wang, H. Shoe-Print Image Retrieval with Multi-Part Weighted CNN. IEEE Access 2019, 7, 59728–59736.

33. Rathinavel, S.; Arumugam, S. Full shoe print recognition based on pass band DCT and partial shoe print identification using overlapped block method for degraded images. Int. J. Comput. Appl. 2011, 26, 16–21.

34. Hasegawa, M.; Tabbone, S. A local adaptation of the histogram Radon transform descriptor: An application to a shoe print dataset. In Proceedings of the 2012 Joint IAPR International Conference on Structural, Syntactic, and Statistical Pattern Recognition, Hiroshima, Japan, 7–9 November 2012; pp. 675–683.

35. Almaadeed, S.; Bouridane, A.; Crookes, D.; Nibouche, O. Partial shoeprint retrieval using multiple point-of-interest detectors and SIFT descriptors. Integr. Comput. Aided Eng. 2015, 22, 41–58.

36. Alexander, A.; Bouridane, A.; Crookes, D. Automatic classification and recognition of shoeprints. In Proceedings of the International Conference on Image Processing and its Applications, Manchester, UK, 24–28 October 1999; pp. 638–641.

37. Bouridane, A.; Alexander, A.; Nibouche, M.; Crookes, D. Application of fractals to the detection and classification of shoeprints. In Proceedings of the International Conference on Image Processing, Vancouver, BC, Canada, 10–13 September 2000; pp. 474–477.

38. Hu, M.K. Visual pattern recognition by moment invariants. IRE Trans. Inf. Theory 1962, 8, 179–187.

39. Teague, M.R. Image analysis via the general theory of moments. J. Opt. Soc. Am. 1980, 70, 920–930.

40. Teh, C.H.; Chin, R.T. On image analysis by the methods of moments. IEEE Trans. Pattern Anal. Mach. Intell. 1988, 10, 496–513.

41. Algarni, G.; Amiane, M. A novel technique for automatic shoeprint image retrieval. Forensic Sci. Int. 2008, 181, 10–14.

42. Khotanzad, A.; Hong, Y.H. Invariant image recognition by Zernike moments. IEEE Trans. Pattern Anal. Mach. Intell. 1990, 12, 489–497.

43. Wei, C.H.; Gwo, C.Y. Alignment of core point for shoeprint analysis and retrieval. In Proceedings of the International Conference on Information Science, Electronics and Electrical Engineering, Sapporo City, Hokkaido, Japan, 26–28 April 2014; pp. 1069–1072.

44. Gwo, C.Y.; Wei, C.H. Shoeprint retrieval: Core point alignment for pattern comparison. Sci. Justice 2016, 56, 341–350.

45. Huynh, C.; de Chazal, P.; McErlean, D.; Reilly, R.; Hannigan, T.; Fleury, L. Automatic classification of shoeprints for use in forensic science based on the Fourier transform. In Proceedings of the International Conference on Image Processing, Barcelona, Spain, 14–18 September 2003; pp. 569–572.

46. de Chazal, P.; Flynn, J.; Reilly, R.B. Automated processing of shoeprint images based on the Fourier transform for use in forensic science. IEEE Trans. Pattern Anal. Mach. Intell. 2005, 27, 341–350.

47. Gueham, M.; Bouridane, A.; Crookes, D.; Nibouche, O. Automatic recognition of shoeprints using Fourier-Mellin transform. In Proceedings of the NASA/ESA Conference on Adaptive Hardware and Systems, Noordwijk, The Netherlands, 22–25 June 2008; pp. 487–491.

48. Dardi, F.; Cervelli, F.; Carrato, S. An automatic footwear retrieval system for shoe marks from real crime scenes. In Proceedings of the International Symposium on Image and Signal Processing and Analysis, Salzburg, Austria, 16–18 September 2009; pp. 668–672.

49. Dardi, F.; Cervelli, F.; Carrato, S. A texture based shoe retrieval system for shoe marks of real crime scenes. In Proceedings of the International Conference on Image Analysis and Processing, Trieste, Italy, 7–10 November 2009; pp. 384–393.

50. Cervelli, F.; Dardi, F.; Carrato, S. A translational and rotational invariant descriptor for automatic footwear retrieval of real cases shoe marks. In Proceedings of the European Signal Processing Conference, Aalborg, Denmark, 23–27 August 2010; pp. 1665–1669.

51. Cervelli, F.; Dardi, F.; Carrato, S. A texture recognition system of real shoe marks taken from crime scenes. In Proceedings of the IEEE International Conference on Image Processing, Cairo, Egypt, 7–10 November 2009; pp. 2905–2908.

52. Dardi, F.; Cervelli, F.; Carrato, S. A combined approach for footwear retrieval of crime scene shoe marks. In Proceedings of the 3rd International Conference on Crime Detection and Prevention (ICDP), London, UK, 3 December 2009; pp. 1–6.

53. Crookes, D.; Bouridane, A.; Su, H.; Gueham, M. Following the Footsteps of Others: Techniques for Automatic Shoeprint Classification. In Proceedings of the Second NASA/ESA Conference on Adaptive Hardware and Systems, Edinburgh, UK, 5–8 August 2007; pp. 67–74.

54. Jing, M.Q.; Ho, W.J.; Chen, L.H. A novel method for shoeprints recognition and classification. In Proceedings of the IEEE International Conference on Machine Learning and Cybernetics, Baoding, China, 7–15 July 2009; pp. 2846–2851.

55. Daugman, J.G. Two-dimensional spectral analysis of cortical receptive field profiles. Vis. Res. 1980, 20, 847–856.

56. Daugman, J.G. Uncertainty relation for resolution in space, spatial frequency, and orientation optimized by two-dimensional visual cortical filters. J. Opt. Soc. Am. A 1985, 2, 1160–1169.

57. Patil, M.P.; Kulkarni, V.J. Rotation and intensity invariant shoeprint matching using Gabor transform with application to forensic science. Pattern Recognit. 2009, 42, 1308–1317.

58. Deshmukh, M.P.; Patil, M.P. Automatic shoeprint matching system for crime scene investigation. Int. J. Comput. Sci. Commun. Technol. 2009, 2, 281–287.

59. Li, X.; Wu, M.; Shi, Z. The retrieval of shoeprint images based on the integral histogram of the Gabor transform domain. In Proceedings of the International Conference on Intelligent Information Processing, Hangzhou, China, 3–6 October 2014; pp. 249–258.

60. Pei, W.; Zhu, Y.; Na, Y.; He, X. Multiscale Gabor wavelet for shoeprint image retrieval. In Proceedings of the 2nd IEEE International Congress on Image and Signal Processing (CISP), Tianjin, China, 17–19 October 2009; pp. 1–5.

61. Kong, X.; Yang, C.; Zheng, F. A novel method for shoeprint recognition in crime scenes. In Proceedings of the 9th Chinese Conference on Biometric Recognition, Shenyang, China, 7–9 November 2014; pp. 498–505.

62. Vagač, M.; Povinský, M.; Melicherčík, M. Detection of shoe sole features using DNN. In Proceedings of the 14th IEEE International Scientific Conference on Informatics, Poprad, Slovakia, 14–16 November 2017; pp. 416–419.

63. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

64. Zhang, Y.; Fu, H.; Dellandréa, E.; Chen, L. Adapting convolutional neural networks on the shoeprint retrieval for forensic use. In Proceedings of the Chinese Conference on Biometric Recognition, Shenzhen, China, 28–29 October 2017; pp. 520–527.

65. Simonyan, K.; Zisserman, A. Very deep convolutional networks for large-scale image recognition. In Proceedings of the International Conference on Learning Representations, San Diego, CA, USA, 7–9 May 2015.

66. Zhang, L.; Allinson, N. Automatic shoeprint retrieval system for use in forensic investigations. In Proceedings of the UK Workshop on Computational Intelligence, London, UK, 5–7 September 2005; pp. 137–142.

67. Tang, Y.; Srihari, S.N.; Kasiviswanathan, H.; Corso, J.J. Footwear print retrieval system for real crime scene marks. In Proceedings of the International Workshop on Computational Forensics, Tokyo, Japan, 11–12 November 2010; pp. 88–100.

68. Tang, Y.; Srihari, S.N.; Kasiviswanathan, H. Similarity and Clustering of Footwear Prints. In Proceedings of the IEEE International Conference on Granular Computing, San Jose, CA, USA, 14–16 August 2010; pp. 459–464.

69. Tang, Y.; Kasiviswanathan, H.; Srihari, S.N. An efficient clustering-based retrieval framework for real crime scene footwear marks. Int. J. Granul. Comput. Rough Sets Intell. Syst. 2012, 2, 327–360.

70. Pavlou, M.; Allinson, N.M. Automatic extraction and classification of footwear patterns. In Proceedings of the International Conference on Intelligent Data Engineering and Automated Learning, Burgos, Spain, 20–23 September 2006; pp. 2088–2095.

71. Pavlou, M.; Allinson, N.M. Automated encoding of footwear patterns for fast indexing. Image Vis. Comput. 2009, 27, 402–409.

72. Wang, X.N.; Sun, H.H.; Yu, Q.; Zhang, C. Automatic shoeprint retrieval algorithm for real crime scenes. In Proceedings of the Asian Conference on Computer Vision, Singapore, 1–5 November 2014; pp. 399–413.

73. Wang, X.; Zhang, C.; Wu, Y.; Shu, Y. A manifold ranking based method using hybrid features for crime scene shoeprint retrieval. Multimed. Tools Appl. 2017, 76, 21629–21649.

74. Wu, Y.; Wang, X.; Nankabirwa, N.L.; Zhang, T. LOSGSR: Learned Opinion Score Guided Shoeprint Retrieval. IEEE Access 2019, 7, 55073–55089.

75. Wu, Y.J.; Wang, X.N.; Zhang, T. Crime Scene Shoeprint Retrieval Using Hybrid Features and Neighboring Images. Information 2019, 10, 45.

76. Tang, C.; Dai, X. Automatic shoe sole pattern retrieval system based on image content of shoeprint. In Proceedings of the IEEE International Conference on Computer Design and Applications (ICCDA), Qinhuangdao, China, 25–27 June 2010; pp. 602–605.

77. Ghouti, L.; Bouridane, A.; Crookes, D. Classification of shoeprint images using directional filter banks. In Proceedings of the International Conference on Visual Information Engineering (VIE), Bangalore, India, 26–28 September 2006; pp. 167–173.

78. Alizadeh, S.; Jond, H.B.; Nabiyev, V.V. Automatic Retrieval of Shoeprints Using Modified Multi-Block Local Binary Pattern. Symmetry 2021, 13, 296.

79. Wang, J.; Sun, K.; Cheng, T.; Jiang, B.; Deng, C.; Zhao, Y.; Liu, D.; Mu, Y.; Tan, M.; Wang, X.; et al. Deep High-Resolution Representation Learning for Visual Recognition. IEEE Trans. Pattern Anal. Mach. Intell. 2021, 43, 3349–3364.

80. Cao, Z.; Hidalgo, G.; Simon, T.; Wei, S.E.; Sheikh, Y. OpenPose: Realtime Multi-Person 2D Pose Estimation Using Part Affinity Fields. IEEE Trans. Pattern Anal. Mach. Intell. 2021, 43, 172–186.

81. Ma, W.; Shen, J.; Zhu, H.; Zhang, J.; Zhao, J.; Hou, B.; Jiao, L. A Novel Adaptive Hybrid Fusion Network for Multiresolution Remote Sensing Images Classification. IEEE Trans. Geosci. Remote Sens. 2022, 60, 5400617.

82. Huang, W.; Yang, H.; Liu, X.; Li, C.; Zhang, I.; Wang, R.; Zheng, H.; Wang, S. A Coarse-to-Fine Deformable Transformation Framework for Unsupervised Multi-Contrast MR Image Registration with Dual Consistency Constraint. IEEE Trans. Med. Imaging 2021, 40, 2589–2599.

83. Zhang, L.; Liang, R.; Yin, J.; Zhang, D.; Shao, L. Scene Categorization by Deeply Learning Gaze Behavior in a Semisupervised Context. IEEE Trans. Cybern. 2021, 51, 4265–4276.

84. Girshick, R.; Donahue, J.; Darrell, T.; Malik, J. Rich feature hierarchies for accurate object detection and semantic segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 580–587.

85. Girshick, R.; Donahue, J.; Darrell, T.; Malik, J. Region-based convolutional networks for accurate object detection and segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 2016, 38, 142–158.

86. Calonder, M.; Lepetit, V.; Strecha, C.; Fua, P. BRIEF: Binary robust independent elementary features. In Proceedings of the European Conference on Computer Vision, Heraklion, Crete, Greece, 5–11 September 2010; pp. 778–792.

87. Rublee, E.; Rabaud, V.; Konolige, K.; Bradski, G. ORB: An efficient alternative to SIFT or SURF. In Proceedings of the IEEE International Conference on Computer Vision, Barcelona, Spain, 6–13 November 2011; pp. 2564–2571.

88. Alahi, A.; Ortiz, R.; Vandergheynst, P. FREAK: Fast retina keypoint. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Providence, RI, USA, 16–21 June 2012; pp. 510–517.

89. Leutenegger, S.; Chli, M.; Siegwart, R. BRISK: Binary robust invariant scalable keypoints. In Proceedings of the IEEE International Conference on Computer Vision, Barcelona, Spain, 6–13 November 2011; pp. 2548–2555.

90. Ke, Y.; Sukthankar, R. PCA-SIFT: A more distinctive representation for local image descriptors. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Washington, DC, USA, 27 June–2 July 2004; pp. 506–513.

91. Bay, H.; Tuytelaars, T.; Van Gool, L. SURF: Speeded up robust features. In Proceedings of the European Conference on Computer Vision, Graz, Austria, 7–13 May 2006; pp. 404–417.

92. Li, Z.; Wei, C.; Li, Y.; Sun, T. Research of shoeprint image stream retrieval algorithm with scale-invariance feature transform. In Proceedings of the International Conference on Multimedia Technology, Hangzhou, China, 26–28 July 2011; pp. 5488–5491.

93. Wang, H.; Fan, J.; Li, Y. Research of shoeprint image matching based on SIFT algorithm. J. Comput. Methods Sci. Eng. 2016, 16, 349–359.

94. Nibouche, O.; Bouridane, A.; Gueham, M.; Laadjel, M. Rotation invariant matching of partial shoeprints. In Proceedings of the 13th International Machine Vision and Image Processing Conference, Dublin, Ireland, 2–4 September 2009; pp. 94–98.

95. Su, H.; Crookes, D.; Bouridane, A.; Gueham, M. Local Image Features for Shoeprint Image Retrieval. In Proceedings of the British Machine Vision Conference, Warwick, UK, 10–13 September 2007; pp. 1–10.

96. Bouridane, A. Techniques for Automatic Shoeprint Classification; Springer: Boston, MA, USA, 2009.

97. Gueham, M.; Bouridane, A.; Crookes, D. Automatic Recognition of Partial Shoeprints Based on Phase-Only Correlation. In Proceedings of the IEEE International Conference on Image Processing, San Antonio, TX, USA, 16–19 September 2007; pp. 441–444.

98. Gueham, M.; Bouridane, A.; Crookes, D. Automatic classification of partial shoeprints using advanced correlation filters for use in forensic science. In Proceedings of the 19th IEEE International Conference on Pattern Recognition (ICPR), 8–11 December 2008; pp. 1–4.

99. Chiu, H.-C.; Chen, C.-H.; Yang, W.-C.; Jiang, J. Automatic Full and Partial Shoeprint Retrieval System for Use in Forensic Investigations. In Proceedings of the 12th International Congress on Image and Signal Processing, BioMedical Engineering and Informatics (CISP-BMEI), Suzhou, China, 17–19 October 2019; pp. 1–6.

100. Phillips, P.J.; Moon, H.; Rizvi, S.A.; Rauss, P.J. The FERET Evaluation Methodology for Face-Recognition Algorithms. IEEE Trans. Pattern Anal. Mach. Intell. 2000, 22, 1090–1104.

| Methods | Features | Matching Methods | Performance | Dataset |
|---|---|---|---|---|
| Kortylewski, et al., 2014 [28] | Periodical Texture | Defined Similarity Measure | 27.1%@1% | #S:170, #R:1175 |
| Wang, et al., 2014 [72] | Fourier–Mellin | Correlation Coefficient | 87.5%@2% | #S:72, #S:10096 |
| Almaadeed, et al., 2015 [35] | Harris + Hessian + SIFT | RANSAC | 68.5%@10 | #R:400, #R:400 |
| Kortylewski, et al., 2016 [29] | Original Pixels | Probabilistic Model | 71%@20% | #S:300, #R:1175 |
| Wang, et al., 2017 [73] | Fourier–Mellin | Manifold Ranking | 93.5%@2% | #S:72, #S:10096 |
| Richetelli, et al., 2017 [27] | SIFT | RANSAC | 97%@5 | #R:272, #R:100 |
| Alizadeh, et al., 2017 [31] | Original Pixels | Sparse Representation for Classification | 99.5%@1 | #R:190, #R:190 |
| Kong, et al., 2017 [25] | Deep Features | Normalized Cross-Correlation | 92.5%@20% | #S:300, #R:1175 |
| Kong, et al., 2019 [26] | Deep Features | Normalized Cross-Correlation | 94%@20% | #S:300, #R:1175 |
| Ma, et al., 2019 [32] | Deep Features | Deep Neural Networks | 89.8%@10% | #S:300, #R:1175 |
| Wu, et al., 2019 [74] | Fourier–Mellin | Manifold Ranking | 96.6%@2% | #S:72, #S:10096 |
| Wu, et al., 2019 [75] | Fourier–Mellin | Manifold Ranking | 92.5%@2% | #S:72, #S:10096 |
| Alizadeh, et al., 2021 [78] | Local Binary Pattern | Chi-squared Test | 97.6%@1 | #R:190, #R:760 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Wu, Y.; Dong, X.; Shi, G.; Zhang, X.; Chen, C. Crime Scene Shoeprint Image Retrieval: A Review. Electronics 2022, 11, 2487. https://doi.org/10.3390/electronics11162487


Chicago/Turabian Style: Wu, Yanjun, Xianling Dong, Guochao Shi, Xiaolei Zhang, and Congzhe Chen. 2022. "Crime Scene Shoeprint Image Retrieval: A Review" Electronics 11, no. 16: 2487. https://doi.org/10.3390/electronics11162487
