Virtual Reality for Safe Testing and Development in Collaborative Robotics: Challenges and Perspectives

Abstract

1. Introduction

2. Human-Robot Collaboration, Safety and Acceptability

3. The Use of Virtual Reality for Testing Human–Robot Collaboration

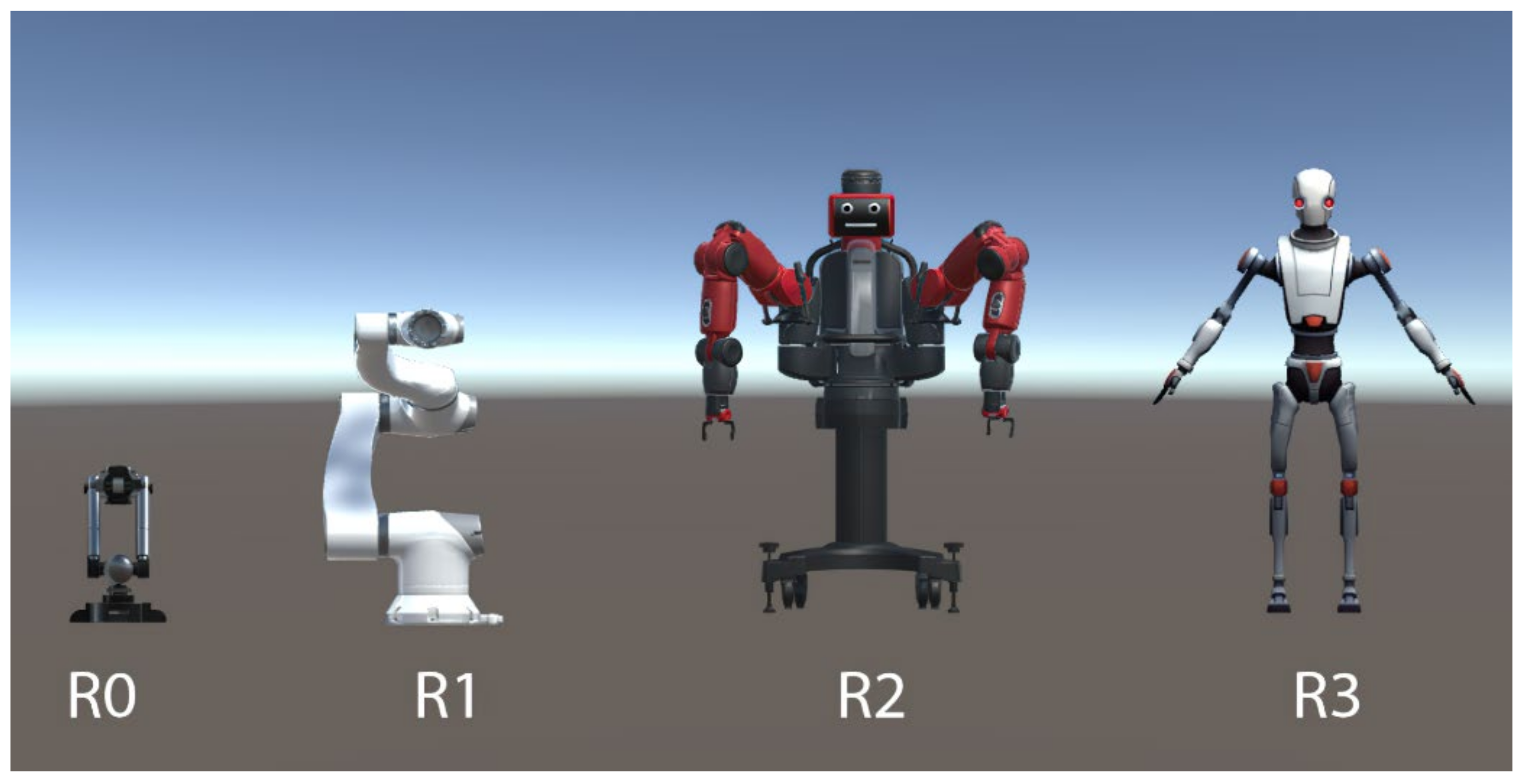

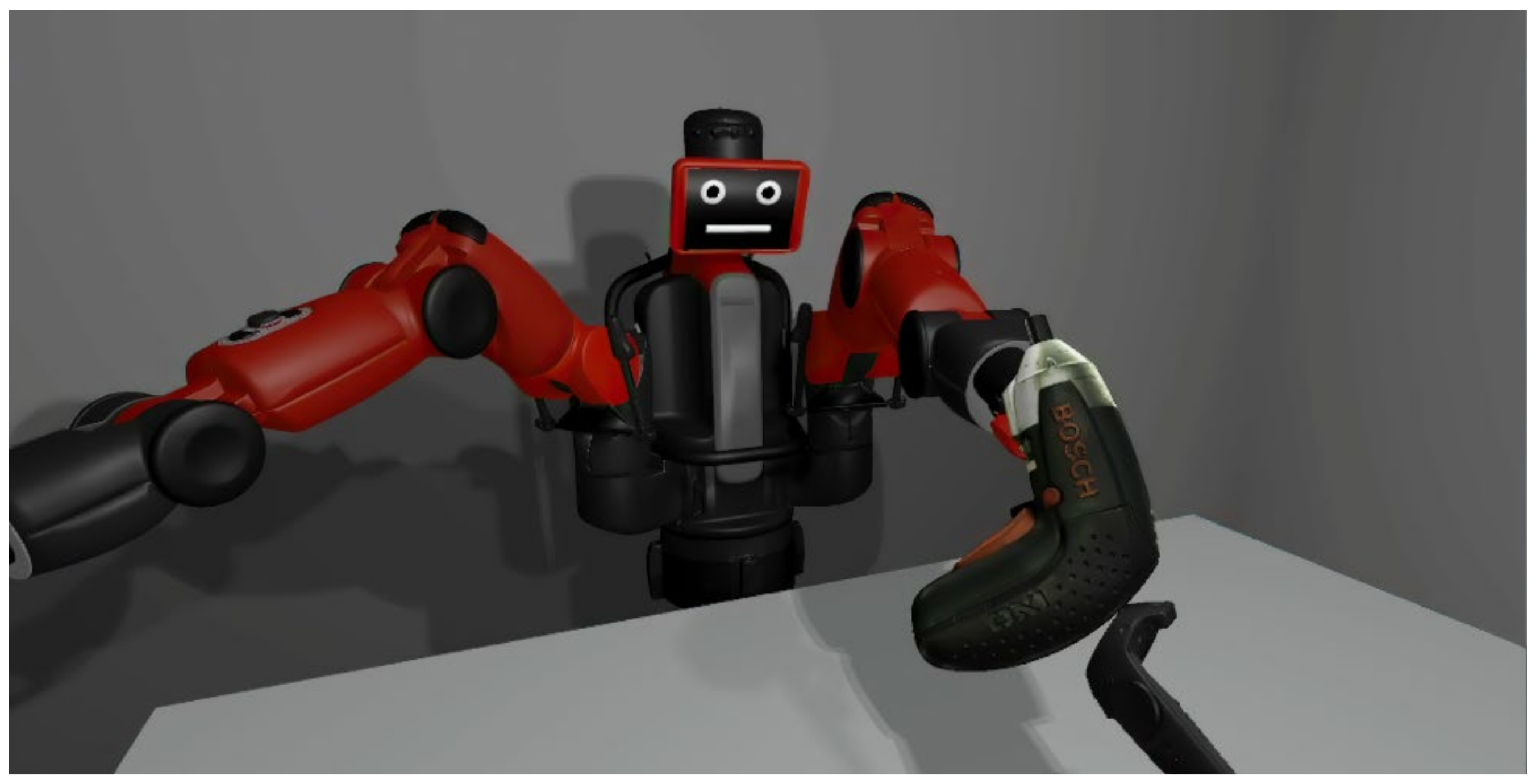

4. A Framework for Extended Reality in Testing Human–Robot Collaboration

5. VR in Testing Cognitive and Social Aspects of Collaboration

6. Combined Use of User Experience Questionnaires and Objective Measures in HRC

7. Telepresence and Teleoperation Scenarios

8. The Use of Augmented Reality

9. Considering Operator Gender and Age in Cobot Testing

10. Conclusions and Future Directions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest


| Level of Collaboration | 1 Cell | 2 Coexistence | 3 Sequential Collaboration | 4 Cooperation | 5 Responsive Collaboration |
|---|---|---|---|---|---|
| Requirement for intrinsic safety features vs. external sensors | Fenced robot | No fence but no shared workspace | Robot and worker both active in the workspace but movements are sequential | Robot and worker work on the same part at the same time, both in motion | Robot responds in real time to the movement of the operator |

| Critical Variables for HRC Experiments | | | | |
|---|---|---|---|---|
| Manipulated (Independent) | | | Measured (Dependent) | |
| Cobot | Environment | User | Subjective | Objective |

| Issues in HRC Studies and Proposed Remedies for VR Experiments | |
|---|---|
| Problem | Suggested Remedy |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Badia, S.B.i.; Silva, P.A.; Branco, D.; Pinto, A.; Carvalho, C.; Menezes, P.; Almeida, J.; Pilacinski, A. Virtual Reality for Safe Testing and Development in Collaborative Robotics: Challenges and Perspectives. Electronics 2022, 11, 1726. https://doi.org/10.3390/electronics11111726