Abstract

Virtual reality (VR) and augmented reality (AR) are engaging interfaces that can benefit rehabilitation therapy. However, they are still not widely used, and the use of surface electromyography (sEMG) signals with them is not established. Our goal is to explore whether a standardized protocol exists for therapeutic applications, since few methodological reviews focus on sEMG control/feedback. A systematic literature review was conducted following the PRISMA (preferred reporting items for systematic reviews and meta-analyses) methodology. A Boolean search of databases was performed applying inclusion/exclusion criteria; articles older than five years and duplicates were excluded. A total of 393 articles were selected for screening, of which 66.15% were excluded; of the 131 eligible records, 69.46% used neither VR/AR interfaces nor sEMG control, and 40 articles remained. The articles were categorized by application: neurological motor rehabilitation (70%) and prosthesis training (30%); by processing algorithm: artificial intelligence (40%) and direct control (20%); by hardware: Myo Armband (22.5%), Delsys (10%), and proprietary (17.5%); and by VR/AR interface: training scene model (25%), videogame (47.5%), and first-person (20%). Applications focus on motor neurorehabilitation after stroke or amputation; however, there is no consensus regarding signal processing or classification criteria. Future work should propose guidelines to standardize these technologies for their adoption in clinical practice.

1. Introduction

Rehabilitation therapies currently include a variety of techniques and approaches that have enabled specialized care of impaired patients, up to personalized therapy, which is becoming a leading strategy in public health [1]. Among them, a new category of rehabilitation systems and virtual environments for therapy has arisen, ranging from telemedicine [2] and adapted user interfaces and videogame controllers [3] to serious games [4], virtual reality (VR), and augmented reality (AR) [5]. Conventional physical therapy (CPT) and VR/AR therapies are believed to have a symbiotic relationship: the latter increase patients' engagement and help them immerse themselves in therapy, while CPT stimulates tactile and proprioceptive pathways by means of mobilization, strengthening, and stretching. Therefore, combining both approaches could benefit patients by providing a more comprehensive, integrated, and clinically useful treatment [5]. VR/AR environments for rehabilitation are mainly used for post-stroke rehabilitation therapy and prosthesis control training.

Furthermore, the control of the interface and the feedback received by the user are crucial to stimulate neurological pathways, which helps in cases such as neuromotor rehabilitation [6,7] or learning how to use a new prosthetic device [8].

The use of biological signals such as surface electromyography (sEMG), the electrical manifestation of muscle activity, further enhances the biofeedback benefits of therapy, with advantages such as avoiding fatigue [9,10].

A virtual environment is experienced through an interface, and VR interfaces should meet certain conditions: no significant lag, so that the interaction is perceived as real time; seamless digitalization; a behavioral interface (engaging sensory and motor skills); and effective immersion, as close as possible to reality [11]. The use of VR technologies for rehabilitation purposes has recently increased [12,13,14,15], especially for motor rehabilitation applications [16,17]. In the literature, the main uses of these technologies fall into two areas: motor neurological rehabilitation [15,18,19] and training for prosthesis control [20,21].

Feedback is very important during the rehabilitation of new neuromotor pathways, since it helps the user to correct the direction of the movement or intention towards the right track [15,20,22,23,24,25]. Instant feedback tells the brain and the body how to recalibrate, in the same way that they learned the movement the first time [22]. Moreover, as user interaction in rehabilitation systems moves towards a closed-loop approach, a wider variety of feedback strategies is needed, whether visual and audiovisual [26], tactile [27], or haptic [28]. Some studies centered on feedback report closed-loop [29,30] and open-loop algorithms, among other combinations.

sEMG signals have long been used as control signals for rehabilitation systems and applications [31]. This approach has several advantages regarding signal acquisition, allowing the user to move freely depending on the hardware used, which includes wearable arrays, wireless systems, and even implantable electrodes [32,33,34]. Additionally, a variety of electrode types, shapes, and arrays can suit different applications and needs [35,36].

The use of sEMG signals as a control strategy has been widely explored in myoelectric prosthesis research, but only in recent years have these control techniques migrated towards other therapy applications, for example, the control of computer interfaces and environments such as VR and AR [37]. sEMG signals enable complex multichannel/multiclass control algorithms, which in turn allow the implementation of more intuitive user interfaces for the patient [38]. This is important because it closes the control/feedback interaction loop, a property that promotes the emergence of neuroplastic pathways [39]. However, using biosignals in VR/AR applications requires more effort than control strategies based on other sensor measurements or motion analysis.

On the one hand, sEMG signals have been widely explored for control purposes [31]; on the other hand, VR/AR interfaces have been explored to improve the outcomes of physical rehabilitation therapies [40,41]. Furthermore, as mentioned above, several feedback techniques have been considered recently, but mainly as sensors that record a variable and return a quantitative measure to the experimenter, or that provide the user with feedback that might feel unnatural [26,27,28].

The literature suggests that using sEMG signals to control VR/AR interfaces can provide better outcomes when paired with CPT. In 2018, Meng et al. [9] performed a 20-day follow-up experiment to evaluate the effectiveness of a rehabilitation training system based on sEMG feedback and virtual reality, and showed that the system has a positive effect on the recovery and evaluation of upper limb motor function in stroke patients. In 2019, Dash et al. [42] carried out an experiment with healthy subjects and post-stroke patients aimed at increasing grip strength. Both groups improved in task-related performance score, physiological measures (using sEMG features), and dynamometer readings; according to the latter, both groups at least doubled their grip ability. Later, in 2021, Hashim et al. [43] found a significant correlation between training time and Box and Block Test score when testing healthy subjects and amputee patients in 10 sessions over a 4-week period using a videogame-based rehabilitation protocol. They demonstrated improved muscle strength, coordination, and control in both groups. Moreover, these improvements, together with induced neuroplasticity, led to better scores in this test, which is related to readiness to use a myoelectric prosthesis. More recently, in 2022, Seo et al. [44] assessed the feasibility of training with sEMG-controlled games to improve muscle control. They found improved completion times for the proposed activities of daily living; interestingly, however, they report no significant changes in the Box and Block Test. These contrasting results should be further investigated for specific clinical instruments or experimental settings.

The literature supports the hypothesis that sEMG signals can be a robust biofeedback method for VR/AR interfaces that can potentially boost therapy effects. Beyond increasing patients' motivation and adherence to rehabilitation therapy [43], the method also gives patients a different type of awareness of their own rehabilitation progress. Furthermore, this type of therapy offers quantitative data to the therapist, potentially allowing a better understanding of patient progress and bringing more certainty to the process.

Nonetheless, the combination of sEMG signals as control or feedback with VR/AR interfaces has not been thoroughly investigated, and even less so in the form of a systematic literature review (SLR). Patients can interpret sEMG signals more naturally as a means of control, and the visual feedback completes the natural pathway they lost and are trying to regain through rehabilitation therapy.

Some SLRs have analyzed VR and AR interfaces used for hand rehabilitation, but some lack an adequate treatment of feedback techniques [45], whereas others include feedback and focus on the similarity of techniques between VR interfaces for rehabilitation therapy and CPT [5]. Some studies use a computer screen interface to deliver virtual rehabilitation therapies [46]. Although several articles individually report the use of sEMG signals and VR/AR interfaces [9,43,44,47], there are no SLRs focused on the use of sEMG signals for these interfaces.

Although some studies pair sEMG signals with VR/AR environments, only a few discuss whether there are advantages in clinical results compared with conventional therapy groups, and standardized protocols are lacking when sEMG signals are used for rehabilitation therapy purposes [37,48,49]. Hence, there is not enough information in the literature to determine whether these biosignals, used as control or feedback of VR/AR systems, improve the outcomes of neuromotor rehabilitation therapies and whether they promote, e.g., neuroplasticity, or support training of myoelectric control for prosthesis fitting. It is also important to know whether the hardware used is commercially available or proprietary (in-house developed), and whether the signal processing techniques used are similar enough to be compared. Likewise, it is important to identify the rehabilitation targets to which this technology has been applied, whether it has been tested with healthy subjects or patients, and whether it is aimed at a clinical environment or only at research protocols.

To address these matters, our goal and contribution to the field is to explore and analyze whether sEMG signals can be used as control and/or biofeedback for VR/AR interfaces, and to find out whether the proposed techniques converge on a standard-of-care protocol, since few methodological reviews to date focus on sEMG control/feedback. Therefore, we considered it necessary to carry out an exhaustive review of the published scientific literature on this topic. In this paper, we applied the PRISMA (preferred reporting items for systematic reviews and meta-analyses) methodology [50] for an SLR to find out how AR and VR interfaces controlled through sEMG signals are used in rehabilitation applications. To this end, we collected relevant articles dealing with state-of-the-art AR and VR environments used for rehabilitation purposes, with sEMG signals used as control or biofeedback.

2. Materials and Methods

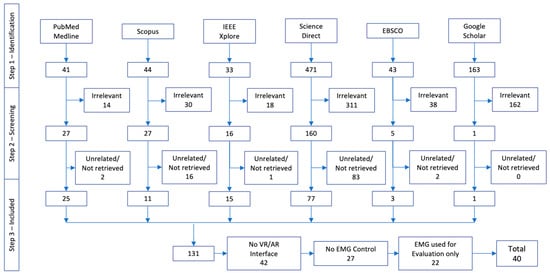

The PRISMA methodology was followed to conduct the SLR search [50]. Six academic and scientific databases were searched: PubMed/Medline, IEEE Xplore, Science Direct, Scopus, EBSCO, and Google Scholar. The search covered titles, abstracts, and keywords of articles written in English, over the period from January 2017 to March 2022.

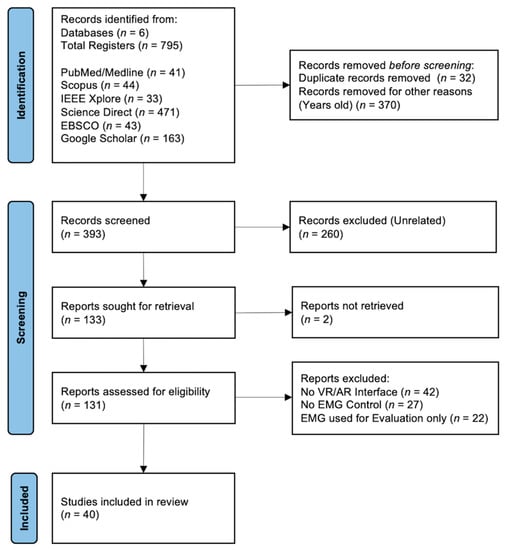

Search query and selection criteria: The aim of the SLR was to find and analyze the state of the art of motor neurological rehabilitation based on VR/AR interfaces, focusing on those using sEMG control, including feature extraction and classification algorithms. The search was performed in three steps (Figure 1). Step 1 (Identification): the titles, abstracts, and keywords of the articles returned by the Boolean keyword search of the databases were reviewed to eliminate duplicates and unrelated articles. Step 2 (Screening eligibility): articles were selected if they dealt with any form of VR/AR interface for rehabilitation controlled by sEMG, excluding those that could not be retrieved, those with a different research focus, those that do not use sEMG as control or feedback, those that use sEMG for assessment purposes, and those that do not include a virtual interface. Step 3 (Including): the filtered articles were selected for analysis after full-text reading.

Figure 1.

PRISMA flow diagram for the systematic literature review.

Research questions: The main information to be extracted from the SLR about the use of sEMG control for rehabilitation with VR/AR applications is summarized in the following research questions (RQs):

- RQ1: What is the share in the use of VR and AR interfaces in rehabilitation?
- RQ2: Which is the target anatomical region aimed to be rehabilitated?
- RQ3: What type of rehabilitation therapy is the interface used for?
- RQ4: What are the characteristics of VR/AR interfaces when used for rehabilitation?
- RQ5: How are sEMG signals used to interact with VR/AR interfaces for rehabilitation?
- RQ6: What hardware is used for signal acquisition?

Inclusion and exclusion criteria: The keywords used for the Boolean search of the databases were ((Virtual Reality) OR (Augmented Reality)) AND (Rehabilitation) AND (Surface Electromyography) AND (Control OR Feedback). Articles from peer-reviewed conference proceedings, indexed scientific journals, books, and book chapters were included. After this examination, duplicated articles and articles unrelated to the scope of this paper were removed. The remaining articles were explored for other related keywords, such as interface, videogame, stroke, and prosthesis. Those considered relevant and representative of recent advances in the techniques of interest were selected for analysis in the SLR.

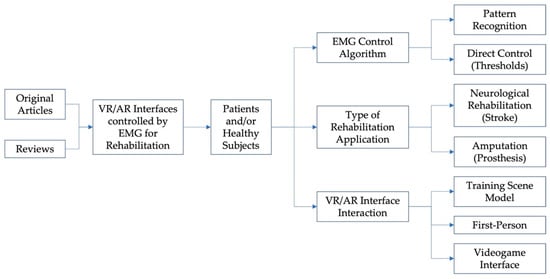

Data extraction and analysis: This section describes the proposed classification of the selected articles, including original and review articles (Figure 2). The articles were sorted into three classes. The first class consisted of sEMG control algorithms and was subdivided into pattern recognition and direct control. The second class was the rehabilitation application, which can be either neurological rehabilitation (e.g., after stroke) or amputation rehabilitation in the form of training for prosthesis control. Finally, the third class comprised the categories of VR/AR interface interaction: the training scene model, first-person mode, and videogame interfacing.

Figure 2.

Taxonomy of the SLR: sEMG control algorithms for VR/AR interfaces for motor rehabilitation.

3. Results

The Boolean keyword search of electronic databases yielded 795 studies (Step 1: Identification). Articles dealing with any form of VR/AR interface for rehabilitation controlled by sEMG signals were selected (Figure 3). First, irrelevant articles were removed, including duplicates and articles published before January 2017, amounting to 573 articles.

Figure 3.

Number of records identified from each database for the systematic literature review.

Then, articles unrelated to the focus topic and those that could not be retrieved were eliminated (Step 2: Screening eligibility), removing 104 additional articles.

Finally, articles that do not use sEMG as control or feedback (27), those that use sEMG signals only for evaluation (22), and those that do not include a virtual or augmented reality interface (42) were excluded (Step 3: Including), leaving 40 articles for full-text reading and analysis.
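As a quick consistency check, the Step 3 exclusions can be reconciled with the 131 eligible records reported in the Abstract:

$$
27 + 22 + 42 = 91, \qquad 131 - 91 = 40, \qquad \frac{91}{131} \approx 0.695
$$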

Of the 40 works analyzed, 2 were review articles, 2 were book chapters, and the remaining 36 were original articles. Some 82.5% (33) of the articles were oriented to the upper limb and 17.5% (7) to the lower limb. Patients were included in 15 articles (37.5%): 7 with amputee patients and 8 with post-stroke patients. A total of 67.5% (27) of the articles included able-bodied healthy subjects in their trials. All 40 articles used visual feedback through the VR or AR interfaces, and a few used a second type of feedback: 2 articles included fatigue and closed-loop feedback to regulate intensity [51,52], another 2 used audio feedback [47,53], 2 relied on tactile feedback [53,54,55], 1 had haptic feedback, and 1 asked the subject to think of the movement (to be detected through electroencephalography (EEG)) as well as to perform it [56]. Only 3 articles mentioned exoskeletons for movement assistance triggered by sEMG signals [56,57,58], and 1 article used functional electrical stimulation (FES) for movement assistance [59]; all 4 belong to neurorehabilitation applications.

3.1. RQ1: What Is the Share in the Use of VR and AR Interfaces in Rehabilitation?

Of the analyzed articles, 57.5% (23) use a VR interface environment for rehabilitation purposes (Table 1). Some 27.5% (11) propose a virtual interface that operates as a computer interface (CI) (Table 2), whereas 4 (10%) use AR interfaces as biofeedback (Table 3).

Mostly, VR and CI interfaces show an environment that the user controls to complete an action or repetitions of different movements. There are three main variations: videogame interfaces (11 for VR, 7 for CI), imitation tasks, named training scene models (2 articles each for VR and CI), and first-person interfaces, e.g., outreach tasks (10 for VR and 11 for CI). Of the AR interfaces, 3 correspond to videogame or serious game interfaces [60,61,62] and 1 to a training scene model [63].

In total, 18 (52.94%) of the articles concerning VR or CI interfaces use a videogame or a serious game as the interface; of these, 7 CI articles show tests performed by patients with positive performance results [42,43,47,51,64,65,66], as do 3 VR articles [53,67,68].

Two VR and two CI articles present interfaces based on a training scene model, and only 2 of them show results for patient use [69,70]. Meanwhile, 5 (13.8%) articles use a first-person approach for their interface; however, only 3 show results with patients, and they do not report performance metrics [29,56,70,71,72].

Only [61] reports using the AR interface with patients (five amputees). Melero et al. [60] use a Microsoft Kinect to locate the upper limbs of three able-bodied subjects; when the sEMG signals show that the activity was performed perfectly, the subject scores a point. They report 77% accuracy in hand gesture classification.

There is a widespread conception of what a VR interface should include and look like. However, most articles use a conventional videogame computer interface, and only 8 (22.2%) report using a headset [61,69,72,73,74], a VR environment [65,68], or immersive VR [75].

3.2. RQ2: Which Is the Target Anatomical Region Aimed to Be Rehabilitated?

The VR/AR interfaces target two types of conditions: post-stroke paresis, addressed through rehabilitation, and limb loss, addressed through training for myoelectric prosthesis use. Among the articles that include patients in their studies (40%), the patients are mainly upper limb amputees (50%) [6,43,56,61,65,67,68,70] and post-stroke patients with hemiparesis (43.75%), who may need upper and/or lower limb rehabilitation [42,47,51,53,64,66,69]; in 1 study, the authors tested their environment with a patient with bilateral upper-limb congenital transverse deficiency [72].

Most developments focus on upper limb rehabilitation (82.5%), including all the AR interfaces described above. Even though lower limb amputations and paresis are more common than upper limb amputations [76], upper limb disability has been reported as a larger burden than lower limb impairment or loss [77].

3.3. RQ3: What Type of Rehabilitation Therapy Is the Interface Used for?

Neurological motor rehabilitation is the goal of 28 (70%) of the analyzed articles, while 12 (30%) present interfaces used for training the amputee patient for future myoelectric prosthesis use.

3.4. RQ4: What Are the Characteristics of VR/AR Interfaces When Used for Rehabilitation?

We found the interfaces can be divided into three types: videogame, first-person, and training scene model.

The least common interface is the training scene model (15% of analyzed articles), used in only 2 (8.69%) VR interfaces, 2 (18.18%) CI interfaces, and 2 (50%) AR interfaces. Here, the user is shown an arm and/or hand performing the movements that the user is sending for control or biofeedback. The next type of interface is first-person, with 16 (40%) interfaces found. This type of interface tries to embed the user in the environment, as if they were moving through it; most of the time, the user can see either their arms and hands or a tool used to attain the goal of the game. Finally, the videogame interface is based on moving a character to perform a given task within a designed environment, and each virtual movement is related to a real movement of the impaired hand. The articles show 11 (27.5%) videogame-type VR interfaces and 7 (17.5%) videogame-type CI interfaces; no videogame interfaces were reported for AR interfaces in the analyzed articles.

3.5. RQ5: How Are sEMG Signals Used to Interact with VR/AR Interfaces for Rehabilitation?

Regarding user interaction with the interface, the main aspect to describe is sEMG control, which is based on acquiring and classifying muscle activity to detect volitional activity and, in some cases, to identify which hand gesture is being performed. To accomplish this, the analyzed articles present several types of classifiers.
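Both detecting volitional activity and recognizing gestures usually start by segmenting the continuous recording into short, overlapping analysis windows. The following is a minimal sketch in Python; `sliding_windows` is a hypothetical helper, and the 200 ms window and 50 ms step are assumed illustrative values, not parameters reported by the reviewed articles.

```python
from typing import List, Sequence


def sliding_windows(signal: Sequence[float], fs: float,
                    win_ms: float = 200.0, step_ms: float = 50.0) -> List[List[float]]:
    """Split one sEMG channel into overlapping analysis windows.

    fs is the sampling rate in Hz; win_ms and step_ms are illustrative
    defaults (assumptions), not values reported by the reviewed articles.
    """
    win = int(round(fs * win_ms / 1000.0))
    step = int(round(fs * step_ms / 1000.0))
    if win < 1 or step < 1:
        raise ValueError("window and step must each span at least one sample")
    # Only full windows are returned; trailing samples that do not fill a window are dropped.
    return [list(signal[start:start + win])
            for start in range(0, len(signal) - win + 1, step)]


if __name__ == "__main__":
    fs = 1000.0                      # hypothetical 1 kHz acquisition
    recording = [0.0] * 1000         # 1 s of placeholder samples
    windows = sliding_windows(recording, fs)
    print(len(windows), len(windows[0]))  # 17 200
```

Each window would then be reduced to a feature vector and passed to a classifier or a threshold rule; shorter steps give smoother control at a higher computational cost.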

Regarding signal processing algorithms, Li et al. [55] reported using the wavelet transform to process sEMG signals and extract features for subsequent classification with support vector machines (SVM). Overall, 13 articles (32.5%) reported using pattern/gesture recognition to differentiate among hand grasps.
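To illustrate wavelet-based feature extraction of the kind attributed to Li et al. [55], the following sketch computes a multilevel Haar discrete wavelet transform and uses the energy at each decomposition level as a feature. This is a deliberate simplification and not their method: Li et al. used singular values of the wavelet coefficients, whereas this sketch substitutes per-level energy, and the Haar wavelet and three levels are assumptions. The resulting vectors could then be passed to a classifier such as an SVM.

```python
import math
from typing import List, Sequence, Tuple

INV_SQRT2 = 1.0 / math.sqrt(2.0)


def haar_dwt(x: Sequence[float]) -> Tuple[List[float], List[float]]:
    """One level of the orthonormal Haar DWT (a trailing odd sample is dropped)."""
    n = len(x) - len(x) % 2
    approx = [(x[i] + x[i + 1]) * INV_SQRT2 for i in range(0, n, 2)]
    detail = [(x[i] - x[i + 1]) * INV_SQRT2 for i in range(0, n, 2)]
    return approx, detail


def wavelet_energy_features(window: Sequence[float], levels: int = 3) -> List[float]:
    """Detail-coefficient energy per level, followed by the final approximation energy."""
    features: List[float] = []
    approx = list(window)
    for _ in range(levels):
        if len(approx) < 2:
            break
        approx, detail = haar_dwt(approx)
        features.append(sum(d * d for d in detail))
    features.append(sum(a * a for a in approx))
    return features
```

Because the orthonormal Haar transform preserves energy, the features of a window whose length is a multiple of `2**levels` sum to the window's total energy, which is a convenient sanity check.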

An SVM is used in 4 (10%) articles, of which only 3 report performance (96.3%, 95%, and 99.5% (healthy subjects)/94.75% (stroke patients), respectively) [9,51,52,55]. Another 4 (10%) articles use neural networks: 1 reports 97.5% performance using a convolutional neural network [63], another uses a deep learning model [73], and another a probabilistic neural network [64]. Only 1 article uses linear discriminant analysis (LDA) to classify grasps [67]. The Myo Software® is used to classify hand grasps in 2 articles [59,61], and [6] reports a modified Kalman-filter-based decoding algorithm. The above-mentioned techniques are state-of-the-art classification methods for sEMG control based on pattern/gesture recognition, which accounts for 32.5% (13) of the papers analyzed.

Furthermore, classic control approaches such as proportional control and threshold-based classification are found within the analyzed articles: 2 articles use the former [47,60], which also takes into account the strength of the muscle contraction, and 4 (10%) use the latter [54,56,62,78]. Additionally, 4 (10%) articles [53,68,78,79] consider the intention of motion to generate a control or activation signal. The remaining articles either do not specify their classification method or describe it ambiguously.
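The two classic approaches can be combined in a few lines: a window counts as active only if its RMS exceeds an onset threshold (threshold-based control), and above that threshold the command scales with contraction strength up to the subject's maximum voluntary contraction (MVC) level (proportional control). This is a minimal sketch; the mean + k SD onset rule with k = 3, the linear mapping, and the function names are assumptions, not the methods used in [47,60] or [54,56,62,78].

```python
import math
from statistics import mean, pstdev
from typing import Sequence


def rms(window: Sequence[float]) -> float:
    """Root mean square amplitude of one analysis window."""
    return math.sqrt(sum(v * v for v in window) / len(window))


def activation_threshold(rest_rms: Sequence[float], k: float = 3.0) -> float:
    """Onset threshold = mean + k * SD of RMS values recorded at rest (k is an assumption)."""
    return mean(rest_rms) + k * pstdev(rest_rms)


def control_command(window_rms: float, threshold: float, mvc_rms: float) -> float:
    """Map one window's RMS to a command in [0, 1].

    Below the threshold the output is 0 (on/off, threshold-based control).
    Above it, the output grows linearly with contraction strength
    (proportional control) and saturates at 1 at the MVC level.
    """
    if mvc_rms <= threshold:
        raise ValueError("MVC level must exceed the activation threshold")
    if window_rms <= threshold:
        return 0.0
    return min((window_rms - threshold) / (mvc_rms - threshold), 1.0)


if __name__ == "__main__":
    thr = activation_threshold([0.01, 0.02, 0.01, 0.02])  # about 0.03 (0.015 + 3 * 0.005)
    for r in (0.02, 0.53, 1.40):
        print(round(control_command(r, thr, mvc_rms=1.03), 3))
```

The resulting command could drive, for example, the speed of a virtual object, while a zero output leaves the interface idle.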

3.6. RQ6: What Hardware Is Used for Signal Acquisition?

For sEMG signal acquisition, 9 (22.5%) of the analyzed articles report using a Myo Armband (Thalmic Labs, Kitchener, Canada), all in applications related to the use and training of upper limb prostheses; this accounts for 75% of the upper limb prosthesis applications reported. Another 4 (10%) articles used a Delsys© sEMG acquisition system (Delsys Inc., Natick, MA, USA); both hardware systems are considered among the three best acquisition systems regarding signal quality and the high classification accuracy achieved with them [80]. A further 7 (17.5%) articles present applications using proprietary hardware. Refs. [73,74] use the Leap Motion (Ultraleap, San Francisco, CA, USA) to acquire arm/hand movements as an extra input for system control, and Melero et al. used the Microsoft® Kinect as a second acquisition input for control [60].

Finally, exoskeletons are used by [56,57,58], triggered by the events detected from sEMG signals, to promote correct trajectories during rehabilitation therapy.

For biofeedback, visual interfaces are used in all cases, but some articles also incorporate other types of feedback. For instance, Wang et al. [51] use fatigue to adapt the difficulty level of the videogame interface; Dash et al. [47] present an audiovisual stimulus to the user, as do Llorens et al. [53], who add tactile feedback to the audiovisual modality; Li et al. [29] use electrotactile feedback for a closed-loop control application with a VR environment; Ruiz-Olaya et al. [58] use visual and haptic feedback; and Covaciu et al. [81] use visualization of the functional movement through the VR interface as feedback.
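The fatigue index used by Wang et al. [51] is not detailed here. As an illustration only, the following sketch assumes the median frequency (MDF), a spectral index well known to shift toward lower frequencies as a muscle fatigues, and pairs it with a hypothetical rule that lowers the game level when MDF falls more than 10% below a baseline. The DFT is written out directly to keep the example dependency-free, and pure sinusoids stand in for real sEMG windows.

```python
import cmath
import math
from typing import List, Sequence, Tuple


def power_spectrum(x: Sequence[float], fs: float) -> Tuple[List[float], List[float]]:
    """Power at non-negative frequency bins via a direct DFT (O(n^2), fine for short windows)."""
    n = len(x)
    offset = sum(x) / n
    centered = [v - offset for v in x]  # remove the DC component before the transform
    freqs: List[float] = []
    power: List[float] = []
    for k in range(n // 2 + 1):
        s = sum(centered[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
        freqs.append(k * fs / n)
        power.append(abs(s) ** 2)
    return freqs, power


def median_frequency(x: Sequence[float], fs: float) -> float:
    """Frequency that splits the spectral power into two equal halves (MDF)."""
    freqs, power = power_spectrum(x, fs)
    half = sum(power) / 2.0
    cumulative = 0.0
    for f, p in zip(freqs, power):
        cumulative += p
        if cumulative >= half:
            return f
    return freqs[-1]


def adjust_difficulty(level: int, mdf_now: float, mdf_baseline: float,
                      drop: float = 0.10, min_level: int = 1, max_level: int = 10) -> int:
    """Hypothetical rule: ease the game when MDF falls more than `drop` below baseline."""
    if mdf_now < (1.0 - drop) * mdf_baseline:
        return max(min_level, level - 1)  # fatigue detected: make the task easier
    return min(max_level, level + 1)      # no fatigue detected: progress to a harder level


if __name__ == "__main__":
    fs = 1000.0
    # Toy signals: pure tones standing in for fresh and fatigued sEMG windows.
    fresh = [math.sin(2 * math.pi * 120 * t / fs) for t in range(200)]
    tired = [math.sin(2 * math.pi * 80 * t / fs) for t in range(200)]
    base, now = median_frequency(fresh, fs), median_frequency(tired, fs)
    print(base, now, adjust_difficulty(5, now, base))  # 120.0 80.0 4
```

In practice, a windowed spectral estimate (e.g., Welch's method) would usually replace the raw DFT, and the baseline would be taken from the first minutes of the session.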

The following tables show the most relevant characteristics of the analyzed articles. When an article did not include information on a certain topic, the cell is marked with a dash or left blank. The first table shows results for VR interfaces (Table 1), the next one summarizes articles dealing with AR interfaces (Table 2), and the last one covers the computer interfaces found among the analyzed articles (Table 3).

Table 1.

VR interfaces used for motor rehabilitation based on sEMG Control.


| First Author, Year | Type of Rehabilitation | Type of Interface | Interaction | Subjects | Anatomical Region | Acquisition Hardware | Feature Extraction | Classification Algorithm and Performance | Feedback | |

|---|---|---|---|---|---|---|---|---|---|---|

| Healthy Subject | Patient | |||||||||

| Mazzola, S., 2020 [73] | Neurological Motor Rehabilitation | VR 3D Upper Limbs Precision-based block staking task | Comparison of sEMG signals by means of RMS Voltage | 24 | - | Upper limb/ flexor carpi radialis, extensor digitorum, biceps brachii, triceps brachii bilateral | Vive Pro HMDLeap Motion Delsys Trigno wireless Electrodes 4-channels | RMS from sEMG + | Compare RMS + level from sEMG Signal (with and without the VR Interface) | Visual feedback |

| Lydakis, A., 2017 [69] | Neurological Motor Rehabilitation | VR Videogame Interface Movement Imitation | 3D Avatar (1) Assessment experiment (2) Action observation (3) Combined motor imagery and action observation | - | 4 post-stroke | Upper limb/ Musculi flexor pollicis longus, flexor digitorum superficialis and flexor carpi radialis | Myo Armband+ R7 AR Glasses + IMU g.Hlamp | RMS from sEMG + | Thresholds | Visual feedback |

| Woodward, R.B., 2019 [67] | Prosthesis Training | VR Virtual forest virtual crossbow in real-time | sEMG control of real-time hand grasps | 16 | 4 amputees (3 transradial, 1 wrist dislocation) | Upper limb/ Forearm Hand gestures (no motion, hand open, hand close, wrist pronation, wrist supination, wrist flexion, and wrist extension) | Custom-fabricated sEMG acquisition armbands included six pairs of stainless-steel dome electrodes TI ADS1299 bioinstrumentation chip | Movement velocity (advanced proportional control algorithm) Speed (smoothed) MRV, WVL, ZC SSC, and ARF from sEMG + | Pattern recognition 3D Target Achievement Control Test LDA *** | Visual feedback |

| Summa, S., 2019 [74] | Neurological Motor Rehabilitation | VR Robotic platform (Dynamic Oriented Rehabilitative Integrated System–DORIS) + motion analysis + sEMG | Training of equilibrium and gait Game experiences for VR | - | - | Lower limb Core Equilibrium and gait | Unreal VR Headset + Leap Motion + Vicon/sEMG Server – DORIS | - | - | Visual feedback |

| Kluger, D.T., 2019 [6] | Prosthesis Training | VR Virtual Modular Prosthetic Limb | Closed-loop virtual task | - | 2 amputees (transradial) | 19 contact sensors at the hand | MAV + from sEMG | Modified Kalman-filter-based decode | Visual feedback | |

| Meng, Q., 2019 [9] | Neurological Motor Rehabilitation | VR Rehabilitation Game | Virtual rehabilitation game | 8 | - | Upper limb/ Wrist flexion– extension | Property Design | Moving average window Autoregressive model (AR)parameter model in time domain | SVM *** Recognition of action 96.3% | Visual feedback |

| Nissler, C., 2019 [68] | Prosthesis Training | VR Environment Serious Games (Unity) | Box and Block Test At virtual living room and kitchen | 15 | 1 amputee (uses prosthesis) | Upper limb | Myo Armband | - | Intent detection | Visual feedback |

| Covaciu, F., 2021 [81] | Neurological Motor Rehabilitation | VR Collect Apples | Foot movements | 10 | - | Lower limb/ Ankle | Gyroscope Accelerometer Myoware | - | KNN *** 5-fold cross-validation 81.35% | Visual/functional feedback |

| Llorens, R., 2021 [53] | Neurological Motor Rehabilitation | VR Pick up Apples that grow before they disappeared | Intention of action while administering transcranial direct current stimulation | - | 29 | Upper limb/ brachioradialis, palmaris longus, and flexors and extensors of the fingers | Myo Armband | - | Intention of action while administering transcranial direct current stimulation | Audiovisual and tactile feedback |

| Li, K., 2019 [29] | Neurological Motor Rehabilitation | VR Environment Virtual Hand | Control with sEMG Electrotactile stimulation module Force proportional to intensity | 10 | - | Upper limb | Multichannel sEMG Acquisition System Elonxi Ltd. | sEMG intensity | - | Visual Feedback: Numerical indicators of force and deformation ElectrotactileStimulation Closed-loop |

| Cardoso, V.F., 2019 [75] | Neurological Motor Rehabilitation | VR Immersive Serious Game | EEG sEMG Robotic Monocycle | 8 (5 males) | - | Lower limb | Property sEMG acquisition 4-channels | - | - | Visual feedback |

| Li, X., 2019 [55] | Neurological Motor Rehabilitation | VR Kitchen Scene (open door, clean table, ventilator, cut food) | Control with sEMG | 4 | - | Upper limb | Wireless acquisition module | MAV, RMS, SD from sEMG + MAV, singular values of wavelet coefficients | SVM, PNN *** 95% for wavelet coefficients | Visual feedback |

| Bank, P., 2017 [78] | Neurological Motor Rehabilitation | VR Imitation Game | Control with sEMG | 18 | - | Upper limb/ wrist flexor carpi radialis and extensor carpi radialis | Porti7 22 bits A/D fs = 2000 Hz | MVC from sEMG + | Task Performance 96.6% Effort 100% Co-contraction 99.8% | Visuomotor tracking |

| Ruiz-Olaya, A.F., 2019 [58] | Neurological Motor Rehabilitation | VR Environments and/or Headsets | Control left/right position of virtual car High-density surface sEMG EEG | - | - | Upper limb, lower limb, full body Exoskeletons | Several | - | . | Visual feedback Haptic Exoskeleton |

| Castellini, C., 2020 [82] | Neurological Motor Rehabilitation | VR/AR Avatar Upper Limb Interaction | Control with sEMG | - | - | Upper limb | - | MVC from sEMG + | ML *** Pattern Recognition | Visual feedback (Positive psychological effects) |

| Raz, G., 2020 [71] | Neurological Motor Rehabilitation | VR Environment | Headset Sit in real table Arms represented in virtual world | - | - | Upper limb | - | - | - | Visual feedback |

| Bhagat, N.A., 2020 [56] | Neurological Motor Rehabilitation | VR Outreach task | BMI detects motion intention from sEMG and EEG motor intent to trigger exoskeleton for assistance | - | 10 chronic post-stroke | Upper limb/ biceps brachii, triceps brachii | Proprietary sEMG | RMS from sEMG + | sEMG threshold + EEG motor intent | Think of movement Visual feedback |

| Heerschop, A., 2020 [83] | Prosthesis Training | VR Serious Games: control a grabber, free catching task, following task | Control from sEMG | 43 | - | Upper limb/ flexor-extensor of wrist | Otto Bock 13E200 Electrodes 2-channels | - | - | Visual feedback |

| Liew, S.L., 2022 [59] | Neurological Motor Rehabilitation | VR Serious Games | Control from sEMG to trigger FES * | - | - | Upper limb/ lower limb | Several | - | - | Visual feedback FES * activation |

| Ida, H., 2022 [84] | Neurological Motor Rehabilitation | VR Videogame | Postural adjustment after perturbation (real vs. VR); single-leg obstacle avoidance task | 10 | - | Lower limb | Myopac RUN | Mean + SD from sEMG + | - | Visual feedback |

| Montoya-Vega, M.F., 2019 [52] | Prosthesis Training | VR Serious Games Force Defense | Change difficulty of videogame depending on fatigue | 12 | - | Upper limb/ biceps brachii | Myo Armband | Fatigue | Motor Learning | Muscle fatigue as feedback |

| Mazzola, S., 2020 [73] | Neurological Motor Rehabilitation | VR Gesture-level hand tracking | Stack blocks using dominant hand | 24 | - | Upper limb/ flexor carpi radialis, extensor digitorum, biceps brachii and triceps brachii Bilateral | Delsys Trigno wireless electrodes | Amplitude RMS from sEMG + Completion Task Time | - | Visual feedback |

| Kisiel-Sajewicz, K., 2020 [72] | Neurological Motor Rehabilitation | VR Headset Virtual Upper Extremity | Reaching task precision fine grasping | 1 | 1 | Upper limb | OTbioLab ELSCHO064LS | MVC, Sub-MVC (20% MVC) from sEMG + | - | Visual feedback |

* IMU, inertial measurement unit; FES, functional electrical stimulation. *** SVM, support vector machine; ML, machine learning; ANN, artificial neural network; LDA, linear discriminant analysis; DL, deep learning; KNN, K-nearest neighbor; PNN, probabilistic neural network; CNN, convolutional neural network. + Feature acronyms: SSC, slope sign changes; ZC, zero crossings; RMS, root mean square; WL, waveform length; MDF, median frequency; MNF, mean frequency; MAV, mean absolute value; MPF, mean power frequency; SE, self-ordering entropy; MRV, mean relative value; WVL, waveform vertical length; ARF, auto-regressive features; MVC, maximum voluntary contraction; ASS, absolute value of the summation of square root; MSR, mean value of square root.
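Most studies in the tables report time-domain amplitude features computed on sEMG windows. As a minimal sketch, the function below computes MAV, RMS, WL, ZC, and SSC for one analysis window using their common textbook definitions. The dead-zone threshold `eps`, which suppresses noise-induced crossings and slope changes, is an illustrative assumption and not a value reported by the reviewed articles.

```python
import math
from typing import Dict, Sequence


def time_domain_features(window: Sequence[float], eps: float = 0.0) -> Dict[str, float]:
    """Compute common sEMG time-domain features for one analysis window.

    eps is a hypothetical dead-zone threshold: sample differences smaller
    than eps are ignored when counting zero crossings and slope sign changes.
    """
    n = len(window)
    if n < 3:
        raise ValueError("window must contain at least 3 samples")

    # MAV: mean absolute value of the samples
    mav = sum(abs(x) for x in window) / n
    # RMS: root mean square amplitude
    rms = math.sqrt(sum(x * x for x in window) / n)
    # WL: waveform length, the cumulative absolute sample-to-sample change
    wl = sum(abs(window[i + 1] - window[i]) for i in range(n - 1))

    # ZC: sign changes between consecutive samples whose difference reaches eps
    zc = sum(
        1
        for i in range(n - 1)
        if window[i] * window[i + 1] < 0 and abs(window[i] - window[i + 1]) >= eps
    )

    # SSC: samples where the slope changes sign (local peaks and troughs)
    ssc = 0
    for i in range(1, n - 1):
        d_prev = window[i] - window[i - 1]
        d_next = window[i] - window[i + 1]
        if d_prev * d_next > 0 and (abs(d_prev) >= eps or abs(d_next) >= eps):
            ssc += 1

    return {"MAV": mav, "RMS": rms, "WL": wl, "ZC": zc, "SSC": ssc}
```

In practice, such features are computed on short sliding windows and concatenated into the feature vector passed to a classifier such as SVM, LDA, or KNN.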

Table 2.

CI interfaces used for motor rehabilitation based on sEMG control.

| First Author, Year | Type of Rehabilitation | Type of Interface | Interaction | Healthy Subjects | Patients | Anatomical Region | Acquisition Hardware | Feature Extraction | Classification Algorithm and Performance | Feedback |
|---|---|---|---|---|---|---|---|---|---|---|
| Wang, L., 2017 [51] | Neurological Motor Rehabilitation | VR Videogame Interface | Training scene model | 4 | 4 post-stroke | Upper limb/ hand gestures | eego™sports, Delsys | SE, MPF from sEMG + | SVM *** to identify action patterns: 99.5% healthy subjects (4), 94.75% stroke patients (4) | EEG and sEMG fatigue status to adapt level of difficulty |
| Lai, J., 2017 [79] | Prosthesis Training | VR Real-time interaction | Visual feedback of real-time response to sEMG control | 1 | - | Upper Limb/ Forearm | Danyang Prosthetic Electrodes 4-channel and NI USB-6351 | ×5 20 repetitions trials | SVM *** Training and pattern recognition | Visual feedback |
| Dash, A., 2019 [47] | Neurological Motor Rehabilitation | VR Videogame Interface Basketball tower, 3 goal posts | sEMG biofeedback for strength inference and EDA for tonic mean | 6 | 6 post-stroke hemiplegic | Upper limb/ flexor carpi radialis and extensor carpi radialis longus | Biopac MP150 fs = 1000 Hz | MAV from sEMG + | Levels of strength | Audio-visual feedback |
| Trifonov, A.A., 2020 [57] | Prosthesis Training | VR Movement Imitation | Replicates movements in VR; sEMG used as input of an exoskeleton that places the limb at given coordinates | 1 | - | Upper limb | Proprietary AD8232 2-channels, exoskeleton | RMS, MAV from sEMG + | ANN *** (two layers: Kohonen and Grossberg) | Visual feedback |
| Nasri, N., 2020 [64] | Neurological Motor Rehabilitation | VR (Unity) Serious Games | sEMG control | - | 4 | Upper limb/ hand gestures | Myo Armband | - | DL *** model, Conv-GRU architecture | Visual feedback |
| Dash, A., 2020 [42] | Neurological Motor Rehabilitation | VR (Unity) Fountains, Basketball court | sEMG-triggered grip exercise (move VR objects according to hand gesture) | 8 | 12 post-stroke | Upper limb | Biopac MP150 | MAV from sEMG +; sEMG-controlled dynamic positioning of VR object | - | Visual feedback |
| Lukyanenko, P., 2021 [70] | Prosthesis Training | VR Representation of a prosthetic hand | Activate virtual hand using EMG | - | 2 | Upper limb | Chronically implanted EMG (ciEMG) electrodes; Ripple Grapevine system collected ciEMG, fs = 2000 Hz, 15–350 Hz filter | MAV from EMG + | KNN *** mapping technique | Visual feedback |
| Hashim, N., 2021 [43] | Neurological Motor Rehabilitation | VR Videogames: Crate Whacker, Race the Sun, Fruit Ninja, and Kaiju Carnage | 1-h sessions; 4-week rehabilitation program; Box and Block Test; sEMG assessment | 5 | 5 transradial amputees | Upper limb/ forearm | Myo Armband | MVC from sEMG +, to randomly select a game | - | Visual feedback; timer and score visible |
| Quinayás, C., 2019 [65] | Prosthesis Training | VR Environment to locate & grasp object (Unity) | Hand grasps: rest, open hand, power, and precision grip; 20 trials | - | 1 | Upper limb/ forearm | Proprietary sEMG bracelet, fs = 1000 Hz | ASS, MSR from sEMG + | Online recognition of motion intention: 86.6% | Visual feedback |
| Yassin, M.M., 2021 [85] | Neurological Motor Rehabilitation | VR Cellphone Apps (patient + therapist), car game (gauge & bar) | Control from sEMG | 5 | - | Upper limb | Proprietary sEMG; microcontroller based on 32-bit ARM Cortex-M3 architecture | RMS from sEMG + | - | Visual feedback (gauge and bar) |
| Ma, L., 2018 [66] | Neurological Motor Rehabilitation | VR Videogame Interface (Hamster, Flappy Bird) | Picture guidance; gesture recognition of hand movement generates game character movement | 6 (validation only) | 9 post-stroke (pre-, mid-, post-rehab; 5 with right hemiplegia) | Upper limb/ hand grasps (relax, open hand, close hand) | Delsys 4-channels, dry electrode, fs = 2000 Hz | SSC, ZC, RMS, WL, MDF, and MNF from sEMG + | ML *** stacking (2-fold model fusion): 95% in healthy subjects, 90% on the hemiplegic side of 2 post-rehab patients | Visual feedback |

*** SVM, support vector machine; ML, machine learning; ANN, artificial neural network; LDA, linear discriminant analysis; DL, deep learning; KNN, K-nearest neighbor; PNN, probabilistic neural network; CNN, convolutional neural network. + Feature acronyms: SSC, slope sign changes; ZC, zero crossings; RMS, root mean square; WL, waveform length; MDF, median frequency; MNF, mean frequency; MAV, mean absolute value; MPF, mean power frequency; SE, self-ordering entropy; MRV, mean relative value; WVL, waveform vertical length; ARF, auto-regressive features; MVC, maximum voluntary contraction; ASS, absolute value of the summation of square root; MSR, mean value of square root.
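Frequency-domain features such as MNF and MDF (used, for example, by Ma et al. [66]) are computed from the power spectrum of an sEMG window. The sketch below assumes the one-sided power spectrum has already been estimated (for instance with an FFT or Welch's method) and is supplied as parallel lists of frequencies and power values; the spectral estimation step itself is not shown.

```python
from typing import Sequence, Tuple


def mean_and_median_frequency(
    freqs: Sequence[float], power: Sequence[float]
) -> Tuple[float, float]:
    """Return (MNF, MDF) from a one-sided power spectrum.

    MNF is the power-weighted mean frequency. MDF is the frequency that
    splits total spectral power into two halves; on a discrete spectrum it
    is taken here as the first bin where cumulative power reaches 50 %.
    """
    if len(freqs) != len(power) or not freqs:
        raise ValueError("freqs and power must be non-empty and equally long")
    total = sum(power)
    if total <= 0:
        raise ValueError("total power must be positive")

    # MNF: sum(f_i * P_i) / sum(P_i)
    mnf = sum(f * p for f, p in zip(freqs, power)) / total

    # MDF: first frequency bin at which cumulative power reaches half the total
    cumulative = 0.0
    mdf = freqs[-1]
    for f, p in zip(freqs, power):
        cumulative += p
        if cumulative >= total / 2:
            mdf = f
            break
    return mnf, mdf
```

Because MDF is resolved only to the spectral bin width, finer frequency resolution (longer windows or interpolation) gives a smoother MDF trend over a session.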

Table 3.

AR Interfaces used for motor rehabilitation based on sEMG control.

| First Author, Year | Type of Rehabilitation | Type of Interface | Interaction | Healthy Subjects | Patients | Anatomical Region | Acquisition Hardware | Feature Extraction | Classification Algorithm and Performance | Feedback |
|---|---|---|---|---|---|---|---|---|---|---|
| Melero, M., 2019 [60] | Prosthesis Training | AR Visualization of muscle activity, dance game, imitation | Score when movements are performed correctly; perform choreographed dance containing hand gestures involved in upper limb rehabilitation therapy | 3 | - | Upper limb/ hand gestures | Wired intramuscular sEMG recording implant 4-channels; Myo Armband; Microsoft Kinect | 10 trials | Myo Armband software: 77% accuracy in hand gesture classification | Visual feedback |
| Gazzoni, M., 2021 [62] | Neurological Motor Rehabilitation | AR Superimposed muscles, smartglasses | Control from sEMG | 1 | - | Upper limb/ lower limb | Due 14-channels | RMS from sEMG + | Threshold sEMG | |
| Liu, L., 2020 [63] | Prosthesis Training | AR Imitation game | Exercise finger movements | 100 | - | Upper limb/ hand | Myo Armband | Spectrogram | CNN * pattern recognition, 10 gestures: 97.8% | Visual feedback |
| Palermo, F., 2019 [61] | Prosthesis Training | AR Portable Environment | AR environment renders a table, a hand and bottle, a screwdriver, tennis ball, pen, can; control with sEMG | 5 | 5 transradial amputees | Upper limb | Microsoft HoloLens; Myo Armband | - | Pattern recognition with Myo software | Visual feedback |

* CNN, convolutional neural network. + Feature acronyms: SSC, slope sign changes; ZC, zero crossings; RMS, root mean square; WL, waveform length; MDF, median frequency; MNF, mean frequency; MAV, mean absolute value; MPF, mean power frequency; SE, self-ordering entropy; MRV, mean relative value; WVL, waveform vertical length; ARF, auto-regressive features; MVC, maximum voluntary contraction; ASS, absolute value of the summation of square root; MSR, mean value of square root.
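Several entries rely on direct threshold control ("Threshold sEMG" in Gazzoni et al. [62], "sEMG threshold" in Bhagat et al. [56]) or on MVC normalization. The sketch below shows one common way to implement such on/off control: the RMS of each window is normalized by the RMS recorded during a maximum voluntary contraction and compared with two thresholds forming a hysteresis band. The 20 % MVC on-level and 10 % MVC off-level are hypothetical values chosen for illustration, not parameters taken from the reviewed studies.

```python
import math
from typing import Iterable, List, Sequence


def rms(window: Sequence[float]) -> float:
    """Root mean square amplitude of one sEMG window."""
    return math.sqrt(sum(x * x for x in window) / len(window))


def threshold_control(
    windows: Iterable[Sequence[float]],
    mvc_rms: float,
    on_level: float = 0.20,   # hypothetical: activate at or above 20 % MVC
    off_level: float = 0.10,  # hypothetical: release at or below 10 % MVC
) -> List[bool]:
    """Map successive sEMG windows to on/off commands for a VR/AR object.

    Each window's RMS is divided by the MVC RMS. Using separate on and off
    thresholds (hysteresis) keeps the command from flickering when the
    normalized level hovers around a single threshold.
    """
    if mvc_rms <= 0:
        raise ValueError("mvc_rms must be positive")
    active = False
    commands = []
    for window in windows:
        level = rms(window) / mvc_rms
        if not active and level >= on_level:
            active = True
        elif active and level <= off_level:
            active = False
        commands.append(active)
    return commands
```

A window at 15 % MVC, for instance, keeps an already active command on but does not switch an inactive one on, which is the intended effect of the hysteresis band.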

4. Discussion

VR/AR technologies can be used as a visual guide to perform an activity or to immerse the user in a different environment, but they can also be controlled using a variety of sensors or biosignals, so that a natural movement of the body generates a response in the environment, displayed as movement or control of an avatar [11]. A simple example would be to adapt the environment so that, when the subject walks, the environment moves too, allowing the subject to explore it. Several rehabilitation strategies can emerge from these interactions [2,7,9,17,86,87]. In this paper, we presented the analysis of 40 articles that use sEMG signals to control, or provide feedback through, a VR/AR interface for rehabilitation purposes, providing a global framework of the most common applications found.

VR is characterized as an immersive interface or environment [11]. Nevertheless, 11 of the 34 articles (32.35%) proposing VR interfaces interact with the user through a computer interface. The effects of this type of interface should be further investigated, since it presents advantages (lower cost, ready to use, less space required) and disadvantages (lack of immersion, susceptibility to distractions).

AR technology has more demanding requirements, since it must emulate virtual items over real-life environments, such as a room, furniture, or an open field. This visualization is commonly achieved through a screen that shows a virtual object projected over a real-time image or video of the experimentation room [88]. Overcoming this dependence on a screen may require technology as advanced as a holographic projector. On the other hand, this type of interface is much more immersive: as its name suggests, it is closer to reality and tries to erase the limits between the virtual and the real world. Therefore, an AR interface could potentially have a greater impact on the user’s brain and, consequently, on rehabilitation therapy [5,41,88].

Videogame and first-person interfaces engage patients by allowing them to train actively, in contrast to traditional rehabilitation therapy, where monotonous repetitions are typical. This therapy approach is also replicated by training scene model interfaces.

Moreover, VR/AR technologies have great potential, since they can completely change the user’s perception of their own motor functions, potentially restructuring body proprioception, which is vital for neurorehabilitation, neuroplasticity, and motor rehabilitation in general. For example, Osumi et al. found that VR therapy helped alleviate phantom limb pain effectively in comparison with CPT [87]. The interaction achieved with these technologies greatly improves patient engagement with therapy, adherence to treatment, and motivation to return [9,42,43,82]. Specifically, Castellini et al. [82] reported a positive psychological effect of VR/AR interfaces used in rehabilitation.

Acquisition hardware is a sensitive subject because it is the first link to the user; an error at this stage propagates through the rest of the system. Melero et al. [60] and Palermo et al. [61] use the Myo Armband for signal acquisition, which allows them to have a more compact and portable system. In total, 9 articles report the use of this band. Different models of the Delsys acquisition system are reported in other articles [51,66,73]. Both systems are not only among the top three devices for sEMG signal acquisition [80] but are also small, portable, and convenient to use, which makes it easier to use this technology in a clinical environment and, consequently, to involve more patients in trials. Several authors chose to develop their own hardware, which has advantages (a design tailored to specific needs) and disadvantages (manufacturing can be problematic, especially miniaturizing the electronics).

Surprisingly, 32.5% of the articles (13 of 40) did not mention the processing algorithms, type of classifier used, classification performance, etc., and a few more mentioned them only ambiguously: they reported neither the protocol followed for therapy, nor the evaluations performed on the selected technique, nor the effectiveness of the technique for therapy purposes. Reporting these data is highly important, regardless of the clinical results. The use of sEMG signals and their processing for rehabilitation applications is still scattered and heterogeneous, and there is no consensus on the methodology for selecting processing algorithms, signal features, classification approaches, and performance evaluation. We consider it very important for authors to state the signal processing and classification algorithms designed or used, together with their performance metrics; this could be among the technical guidelines proposed to homogenize the protocols.
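To illustrate the kind of information that is often missing, the following sketch classifies sEMG feature vectors with a K-nearest neighbor rule (the technique reported by Lukyanenko et al. [70]) and reports overall accuracy together with a per-class confusion matrix. It is a generic KNN, not the mapping used in [70]; the feature vectors and gesture labels in the usage are hypothetical, and a real report would also state the windowing, feature set, and validation scheme.

```python
import math
from collections import Counter, defaultdict
from typing import Dict, List, Sequence, Tuple

Sample = Tuple[Sequence[float], str]  # (feature vector, gesture label)


def knn_predict(train: List[Sample], x: Sequence[float], k: int = 3) -> str:
    """Predict the label of x by majority vote among its k nearest neighbors."""
    nearest = sorted(train, key=lambda s: math.dist(s[0], x))[:k]
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0]


def evaluate(train: List[Sample], test: List[Sample], k: int = 3):
    """Return (accuracy, confusion), where confusion[true][predicted] counts trials."""
    if not test:
        raise ValueError("test set must not be empty")
    confusion: Dict[str, Dict[str, int]] = defaultdict(lambda: defaultdict(int))
    correct = 0
    for x, true_label in test:
        predicted = knn_predict(train, x, k)
        confusion[true_label][predicted] += 1
        correct += predicted == true_label
    return correct / len(test), confusion
```

Reporting the confusion matrix alongside accuracy shows which gestures are confused, which a single accuracy figure hides.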

All these environments are controlled using sEMG signals and provide feedback through a visual, tactile, or functional stimulus. Like CPT, virtual therapy is based on repetition and practice; what makes it as engaging and addictive as ludic videogames is the way the patient interacts with it.

Motor rehabilitation and neurorehabilitation are both intended to help the brain adjust to a new way of functioning, to relearn how to control an impaired limb, and even to generate new pathways to communicate with the limb, a process known as neuroplasticity [89]. Neuroplasticity is based on principles such as goal-oriented practice, multisensory stimulation, explicit feedback, implicit knowledge of performance, and action observation, among others [89]. These qualities are inherent to the use of VR/AR interfaces [5], and when they are combined with improvements in muscle control, coordination, and control of movements or contractions [43], therapy outcomes can improve in the best interest of the patient. A notable aspect is that patient progress is recorded quantitatively, which could ultimately guide specific changes to the therapy targeting the aspects that need the most attention.

Some authors [16] describe sEMG signals as a popular form of biofeedback; nevertheless, there have been developments that combine two biosignals [51,90,91]. Electroencephalography (EEG) and sEMG are non-invasive biopotentials that offer plenty of information regarding brain and muscle activity in clinical and daily-life contexts. Interestingly, sEMG signals are commonly considered an undesired noise source in EEG recordings. Cortico-muscular (EEG–sEMG) coherence is a relatively new analysis tool that studies the functional connection between the electrical activity of the brain (EEG) and that of the muscles (sEMG). EEG–sEMG coherence has been used to assess neuronal recovery [91] in rehabilitation applications, including those based on virtual reality [92]. Moreover, it has been shown that EEG–sEMG coherence, measured from a single EEG and a single sEMG channel, can be used as a control signal to distinguish hand movements [93], with potential for rehabilitation applications. Furthermore, simultaneous recording and analysis of multiple sEMG and EEG signals at key body and scalp locations can help to evaluate how the types and parameters of AR/VR used during rehabilitation interventions affect, and interrelate with, the activity of central and peripheral nervous system structures related to movement control, planning, and execution. Finally, since VR/AR technologies are oriented to visual stimulation during body movements, EEG–sEMG coherence or other combined parameters could be an alternative way to evaluate the relations between visual attention to objects in the VR/AR interface, visual information processing in the brain, and motor responses in the body.
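Cortico-muscular coherence is commonly estimated as the magnitude-squared coherence C_xy(f) = |S_xy(f)|² / (S_xx(f) · S_yy(f)), where S_xy is the EEG–sEMG cross-spectrum and S_xx, S_yy are the auto-spectra, each averaged over several data segments. The minimal pure-Python sketch below evaluates this at one DFT bin k, whose frequency is k · fs / seg_len for sampling rate fs. It omits the tapering windows and segment overlap used by practical Welch estimators such as `scipy.signal.coherence`, so it illustrates the formula rather than serving as an analysis tool.

```python
import cmath
import math
from typing import Sequence


def dft_bin(segment: Sequence[float], k: int) -> complex:
    """Discrete Fourier transform coefficient of one segment at bin k."""
    n = len(segment)
    return sum(x * cmath.exp(-2j * math.pi * k * i / n) for i, x in enumerate(segment))


def coherence_at_bin(eeg: Sequence[float], emg: Sequence[float], seg_len: int, k: int) -> float:
    """Magnitude-squared coherence between EEG and sEMG at DFT bin k.

    Both signals are split into non-overlapping segments of seg_len samples,
    and the auto- and cross-spectra are averaged over segments (a simplified
    Welch estimate without tapering or overlap). Averaging is essential:
    with a single segment the estimate is always 1.
    """
    if len(eeg) != len(emg):
        raise ValueError("signals must have equal length")
    n_seg = len(eeg) // seg_len
    if n_seg < 2:
        raise ValueError("need at least two segments")
    sxx = syy = 0.0
    sxy = 0j
    for s in range(n_seg):
        a = dft_bin(eeg[s * seg_len:(s + 1) * seg_len], k)
        b = dft_bin(emg[s * seg_len:(s + 1) * seg_len], k)
        sxx += abs(a) ** 2
        syy += abs(b) ** 2
        sxy += a * b.conjugate()
    if sxx == 0 or syy == 0:
        return 0.0
    return abs(sxy) ** 2 / (sxx * syy)
```

A value near 1 indicates a consistent phase and amplitude relation between the two signals across segments at that frequency; a value near 0 indicates that the relation varies from segment to segment.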

Considering the above evidence, it is not surprising that only a few developments have reached the market and, therefore, clinical application [94]. All the analyzed articles are still at a research and development stage and do not report their use in therapy; on the contrary, they propose larger studies as future work, which means that, even though this is a promising technology, more and larger studies are necessary to prove its efficacy.

Access to a wide variety of human movements and dynamic interactions through VR/AR therapy is an additional benefit with the potential to generate new solutions in rehabilitation. This information could be especially useful if there were specific guidelines or protocols to standardize the acquisition of other sensors or signals, as there are for sEMG signals [95], as well as of other data used for control and feedback. Such guidelines would make it possible to design a database housing standardized reports that document signal recordings, acquisition hardware, environmental or user conditions, and experimentation.
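As an illustration of what such a standardized report could contain, the sketch below defines a record covering the items this review found inconsistently reported (acquisition hardware, sampling rate, muscles, features, classifier, and performance) and serializes it to JSON for storage in a shared database. The class and all field names are hypothetical; they are not taken from the guidelines cited in [95] or from any existing schema.

```python
import json
from dataclasses import asdict, dataclass, field
from typing import List, Optional


@dataclass
class SemgVrStudyReport:
    """Hypothetical minimal record for one sEMG-controlled VR/AR experiment."""

    interface: str                    # e.g., "VR", "AR"
    rehabilitation_type: str          # e.g., "Prosthesis Training"
    acquisition_hardware: str
    sampling_rate_hz: Optional[float]
    channels: int
    muscles: List[str] = field(default_factory=list)
    features: List[str] = field(default_factory=list)
    classifier: Optional[str] = None  # None for direct or threshold control
    accuracy: Optional[float] = None  # fraction in [0, 1], if reported
    healthy_subjects: int = 0
    patients: int = 0

    def missing_items(self) -> List[str]:
        """List key items left unreported in this record."""
        missing = []
        if self.sampling_rate_hz is None:
            missing.append("sampling_rate_hz")
        if not self.features:
            missing.append("features")
        if self.classifier is not None and self.accuracy is None:
            missing.append("accuracy")
        return missing

    def to_json(self) -> str:
        """Serialize the record with stable key order for database storage."""
        return json.dumps(asdict(self), sort_keys=True)
```

For example, the Table 2 entry for Ma et al. [66] (Delsys, 4 channels, fs = 2000 Hz, six time- and frequency-domain features, stacking classifier) fills every field, whereas entries reporting only "Proprietary sEMG" would be flagged by `missing_items()`.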

Finally, there is a major gap regarding standard-of-care protocols or guidelines for performing VR/AR therapies for rehabilitation. To further evaluate the advantages of these developments, a structured methodology should be proposed and followed. It could include session time and frequency, maximum number of activations per limb, proper guides for using external aids such as exoskeletons, FES, orthoses, or prostheses, and the type of movements to be commanded according to the pathology or target therapeutic application, among other requirements.
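A structured protocol of this kind could be encoded so that the VR/AR application enforces it during a session. The sketch below is a hypothetical example: the class name and all default limits are placeholders, not recommendations, since this review found no consensus on such values.

```python
from dataclasses import dataclass, field
from typing import Dict


@dataclass
class SessionProtocol:
    """Hypothetical therapy-session limits enforced by a VR/AR application."""

    max_minutes: float = 30.0            # placeholder session length
    sessions_per_week: int = 3           # placeholder; recorded, not enforced here
    max_activations_per_limb: int = 100  # placeholder activation cap
    activations: Dict[str, int] = field(default_factory=dict)

    def register_activation(self, limb: str, elapsed_minutes: float) -> bool:
        """Count one sEMG-triggered activation; return False if it must be refused."""
        if elapsed_minutes >= self.max_minutes:
            return False
        used = self.activations.get(limb, 0)
        if used >= self.max_activations_per_limb:
            return False
        self.activations[limb] = used + 1
        return True
```

Keeping the limits in one declarative object means they can be reported verbatim with study results, which is the kind of transparency the structured methodology above calls for.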

Future Directions

Future directions in sEMG-based control of VR/AR interfaces for rehabilitation applications include several aspects. Acquisition hardware that comes closer to a wearable device, in which most of the system is integrated, will be significant. Hybrid multisignal inputs, accompanied by signal processing algorithms that incorporate the contributions of several body systems analyzed simultaneously, could be the basis of a novel approach to complex and robust control that adapts to the patient’s dexterity level and moves up and down through difficulty levels with them.
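One simple form of hybrid input, similar in spirit to the combination of an sEMG threshold with EEG motor intent reported by Bhagat et al. [56], is to trigger an assistive action only when both signals agree within a short time window. The sketch below assumes each detector already outputs event timestamps in seconds; the 0.5 s coincidence window is a hypothetical value, and the exact fusion rule used in [56] is not reproduced here.

```python
from bisect import bisect_left
from typing import List, Sequence


def fuse_events(
    eeg_intent_times: Sequence[float],
    emg_onset_times: Sequence[float],
    window_s: float = 0.5,  # hypothetical coincidence window in seconds
) -> List[float]:
    """Return the sEMG onset times confirmed by a preceding EEG intent event.

    An sEMG onset at time t triggers the action only if an EEG motor-intent
    event occurred in the interval [t - window_s, t].
    """
    intents = sorted(eeg_intent_times)
    triggers = []
    for t in sorted(emg_onset_times):
        # index of the first intent event at or after t - window_s
        i = bisect_left(intents, t - window_s)
        if i < len(intents) and intents[i] <= t:
            triggers.append(t)
    return triggers
```

Requiring agreement between two independent detectors trades some sensitivity for fewer false activations, which matters when the trigger drives an exoskeleton or FES.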

It will be very important to go beyond the visual feedback currently widespread in these systems; one option could be to incorporate tactile and haptic feedback, based on information from gyroscope and accelerometer sensors. These hardware systems, combined with VR/AR interfaces, will provide a richer environment for developing rehabilitation therapies, in which several metrics related to the patient’s movement could be monitored and used as feedback to promote motor rehabilitation and neuroplasticity. Some applications have shown that exoskeletons or FES can help the patient train muscles and neural pathways to practice the correct movement trajectories during therapy. Moreover, more cohesive technologies (hardware and software) are needed to allow the user, patient, and care provider to perform this type of therapy in a real-life environment. For this technology to become a regular therapy, it must be integrated and ready to use, without the complications of too many wires or lengthy donning and doffing procedures.

Personalized therapy is also within reach by means of VR/AR technologies, since these interfaces can adapt their complexity level to patient performance and be updated as the patient improves their control over the impaired limb. In this paper, we have examined examples in which researchers use biofeedback to adjust the complexity of the task based on fatigue, correct position (proprioception), or repetition performance, such as the TAC test proposed by Simon et al. [96]. Personalization includes videogame difficulty levels for the VR/AR interfaces, which can be controlled as in a regular ludic videogame, except that in therapy the user can also move down levels. This feature could be very useful in case of muscular fatigue, which is very common during therapy. This small detail might allow patients to complete more repetitions or to endure longer therapy sessions; changes in sEMG signals during therapy can also be taken into account, e.g., by retraining the control algorithm mid-session to lower the muscular strength demanded from the patient.
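A minimal sketch of fatigue-driven level adjustment: a sustained drop in sEMG median frequency (MDF) relative to the start of a session is a widely used indicator of localized muscle fatigue, so the level is lowered when MDF has fallen by more than a chosen fraction and raised when the patient succeeds on most repetitions without signs of fatigue. The 10 % drop threshold, the 80 % success criterion, and the level bounds are hypothetical values; Montoya-Vega et al. [52] and Wang et al. [51] used their own fatigue criteria, which this sketch does not reproduce.

```python
def adjust_level(
    level: int,
    baseline_mdf: float,
    current_mdf: float,
    success_rate: float,
    min_level: int = 1,
    max_level: int = 10,
    fatigue_drop: float = 0.10,  # hypothetical: a 10 % MDF drop signals fatigue
    promote_rate: float = 0.80,  # hypothetical: 80 % successful repetitions
) -> int:
    """Return the next difficulty level for a VR/AR rehabilitation game.

    Fatigue takes priority: if MDF has dropped by more than fatigue_drop
    relative to baseline, the level goes down one step. Otherwise, a
    success rate at or above promote_rate moves it up one step. The result
    is clamped to [min_level, max_level].
    """
    if baseline_mdf <= 0:
        raise ValueError("baseline_mdf must be positive")
    relative_drop = (baseline_mdf - current_mdf) / baseline_mdf
    if relative_drop > fatigue_drop:
        level -= 1
    elif success_rate >= promote_rate:
        level += 1
    return max(min_level, min(max_level, level))
```

Giving fatigue priority over performance reflects the point made above: in therapy, moving down a level is a feature that can let patients complete more repetitions.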

5. Conclusions

This SLR provides a global framework of the most common applications of sEMG signals for control/feedback of VR/AR interfaces. Currently, the use of these signals for rehabilitation is still scattered and heterogeneous. There is no consensus regarding the methodology for selecting sEMG signal processing algorithms, signal features, classification approaches, and performance evaluation, and even less regarding their use in rehabilitation applications. There are no reports of these interfaces being adopted in clinical practice. Future work should aim to propose a set of guidelines to standardize these technologies for clinical therapies.

Author Contributions

Conceptualization, C.L.T.-P. and J.G.-M.; methodology, C.L.T.-P., G.V.-M., J.A.M.-G. and J.G.-M.; software, C.L.T.-P.; validation, C.L.T.-P., G.V.-M., J.A.M.-G. and J.G.-M.; formal analysis, C.L.T.-P., G.V.-M. and J.A.M.-G.; investigation, C.L.T.-P., G.V.-M. and J.A.M.-G.; resources, C.L.T.-P., G.V.-M., J.A.M.-G., G.R.-R., A.V.-H., L.L.-S. and J.G.-M.; data curation, C.L.T.-P., G.V.-M., J.A.M.-G., G.R.-R., A.V.-H., L.L.-S. and J.G.-M.; writing—original draft preparation, C.L.T.-P., G.V.-M., J.A.M.-G., G.R.-R., A.V.-H., L.L.-S. and J.G.-M.; writing—review and editing, C.L.T.-P., G.V.-M., J.A.M.-G., G.R.-R., A.V.-H., L.L.-S. and J.G.-M.; visualization, C.L.T.-P., G.V.-M., J.A.M.-G., G.R.-R., A.V.-H., L.L.-S. and J.G.-M.; supervision, G.R.-R., A.V.-H., L.L.-S. and J.G.-M.; project administration, L.L.-S. and J.G.-M.; funding acquisition, L.L.-S. and J.G.-M. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by CYTED-DITECROD-218RT0545, Proyecto IV-8 call AMEXcid-AUCI 2018-2022.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Stucki, G. Advancing the Rehabilitation Sciences. Front. Rehabil. Sci. 2021, 1, 617749. [Google Scholar] [CrossRef]

- Reddy, N.P.; Unnikrishnan, R. EMG Interfaces for VR and Telematic Control Applications. IFAC Proc. Vol. 2001, 34, 443–446. [Google Scholar] [CrossRef]

- Mejia, J.A.; Hernandez, G.; Toledo, C.; Mercado, J.; Vera, A.; Leija, L.; Gutierrez, J. Upper Limb Rehabilitation Therapies Based in Videogames Technology Review. In Proceedings of the 2019 Global Medical Engineering Physics Exchanges/Pan American Health Care Exchanges (GMEPE/PAHCE) 2019, Buenos Aires, Argentina, 26–31 March 2019; pp. 1–5. [Google Scholar]

- González-González, C.S.; Toledo-Delgado, P.A.; Muñoz-Cruz, V.; Torres-Carrion, P.V. Serious games for rehabilitation: Gestural interaction in personalized gamified exercises through a recommender system. J. Biomed. Inform. 2019, 97, 103266. [Google Scholar] [CrossRef] [PubMed]

- Cerritelli, F.; Chiera, M.; Abbro, M.; Megale, V.; Esteves, J.; Gallace, A.; Manzotti, A. The Challenges and Perspectives of the Integration Between Virtual and Augmented Reality and Manual Therapies. Front. Neurol. 2021, 12, 700211. [Google Scholar] [CrossRef] [PubMed]

- Kluger, D.T.; Joyner, J.S.; Wendelken, S.M.; Davis, T.S.; George, J.A.; Page, D.M.; Hutchinson, D.T.; Benz, H.L.; Clark, G.A. Virtual Reality Provides an Effective Platform for Functional Evaluations of Closed-Loop Neuromyoelectric Control. IEEE Trans. Neural Syst. Rehabil. Eng. 2019, 27, 876–886. [Google Scholar] [CrossRef]

- Huang, J.; Lin, M.; Fu, J.; Sun, Y.; Fang, Q. An Immersive Motor Imagery Training System for Post-Stroke Rehabilitation Combining VR and EMG-based Real-Time Feedback. In Proceedings of the 2021 43rd Annual International Conference of the IEEE Engineering in Medicine & Biology Society (EMBC), Guadalajara, Mexico, 1–5 November 2021; pp. 7590–7593. [Google Scholar]

- Muri, F.; Carbajal, C.; Echenique, A.M.; Fernández, H.; López, N.M. Virtual reality upper limb model controlled by EMG signals. J. Phys. Conf. Ser. 2013, 477, 012041. [Google Scholar] [CrossRef]

- Meng, Q.; Zhang, J.; Yang, X. Virtual Rehabilitation Training System Based on Surface EMG Feature Extraction and Analysis. J. Med. Syst. 2019, 43, 48. [Google Scholar] [CrossRef] [PubMed]

- Montoya, M.F.; Munoz, J.E.; Henao, O.A. Enhancing Virtual Rehabilitation in Upper Limbs With Biocybernetic Adaptation: The Effects of Virtual Reality on Perceived Muscle Fatigue, Game Performance and User Experience. IEEE Trans. Neural Syst. Rehabil. Eng. 2020, 28, 740–747. [Google Scholar] [CrossRef]

- Thériault, L.; Robert, J.-M.; Baron, L. Virtual Reality Interfaces for Virtual Environments. Virtual Reality International Conference. Available online: https://www.researchgate.net/publication/259576863 (accessed on 20 April 2022).

- Liang, Y.; Wu, D.; Ledesma, D.; Davis, C.; Slaughter, R.; Guo, Z. Virtual Tai-Chi System: A smart-connected modality for rehabilitation. Smart Health 2018, 9–10, 232–249. [Google Scholar] [CrossRef]

- Chen, P.-J.; Penn, I.-W.; Wei, S.-H.; Chuang, L.-R.; Sung, W.-H. Augmented reality-assisted training with selected Tai-Chi movements improves balance control and increases lower limb muscle strength in older adults: A prospective randomized trial. J. Exerc. Sci. Fit. 2020, 18, 142–147. [Google Scholar] [CrossRef]

- Muñoz, J.E.; Montoya, M.F.; Boger, J. From Exergames to Immersive Virtual Reality Systems: Serious Games for Supporting Older Adults, 1st ed.; Elsevier Inc.: Amsterdam, The Netherlands, 2021; pp. 141–204. [Google Scholar]

- Barrett, A.M.; Oh-Park, M.; Chen, P.; Ifejika, N.L. Neurorehabilitation: Five new things. Neurol. Clin. Pract. 2013, 3, 484–492. [Google Scholar] [CrossRef] [Green Version]

- Giggins, O.M.; Persson, U.M.; Caulfield, B. Biofeedback in rehabilitation. J. Neuroeng. Rehabil. 2013, 10, 60. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Dosen, S.; Markovic, M.; Somer, K.; Graimann, B.; Farina, D. EMG Biofeedback for online predictive control of grasping force in a myoelectric prosthesis. J. Neuroeng. Rehabil. 2015, 12, 55. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Ogourtsova, T.; Archambault, P.S.; Lamontagne, A. Let’s do groceries-a novel virtual assessment for post-stroke unilateral spatial neglect Effects of virtual scene complexity and knowledge translation initiatives. In Proceedings of the 2017 International Conference on Virtual Rehabilitation (Icvr), Montreal, QC, Canada, 19–22 June 2017. [Google Scholar]

- Tao, G.; Archambault, P.S.; Levin, M.F. Evaluation of Kinect skeletal tracking in a virtual reality rehabilitation system for upper limb hemiparesis. In Proceedings of the 2013 International Conference on Virtual Rehabilitation, ICVR 2013, Philadelphia, PA, USA, 26–29 August 2013; pp. 164–165. [Google Scholar]

- Wada, T.; Takeuchi, T. A Training System for EMG Prosthetic Hand in Virtual Environment. In Proceedings of the Human Factors and Ergonomics Society Annual Meeting, New York, NY, USA, 22–26 September 2008; Volume 52, pp. 2112–2116. [Google Scholar]

- Sime, D.W. Potential Application of Virtual Reality for Interface Customisation (and Pre-training) of Amputee Patients as Preparation for Prosthetic Use. Adv. Exp. Med. Biol. 2019, 1120, 15–24. [Google Scholar] [CrossRef] [PubMed]

- Clemente, F.; D’Alonzo, M.; Controzzi, M.; Edin, B.B.; Cipriani, C. Non-Invasive, Temporally Discrete Feedback of Object Contact and Release Improves Grasp Control of Closed-Loop Myoelectric Transradial Prostheses. IEEE Trans. Neural Syst. Rehabil. Eng. 2016, 24, 1314–1322. [Google Scholar] [CrossRef] [PubMed]

- Markovic, M.; Schweisfurth, M.A.; Engels, L.F.; Bentz, T.; Wüstefeld, D.; Farina, D.; Dosen, S. The clinical relevance of advanced artificial feedback in the control of a multi-functional myoelectric prosthesis. J. Neuroeng. Rehabil. 2018, 15, 28. [Google Scholar] [CrossRef] [Green Version]

- Thomas, G.P.; Jobst, B.C. Feedback-Sensitive and Closed-Loop Solutions; Elsevier Inc.: Amsterdam, The Netherlands, 2017. [Google Scholar]

- Svensson, P.; Wijk, U.; Björkman, A.; Antfolk, C. A review of invasive and non-invasive sensory feedback in upper limb prostheses. Expert Rev. Med. Devices 2017, 14, 439–447. [Google Scholar] [CrossRef]

- Earnshaw, R.; Liggett, S.; Excell, P.; Thalmann, D. Technology, Design and the Arts-Opportunities and Challenges; Springer International Publishing: Cham, Switzerland, 2020. [Google Scholar]

- Casellato, C.; Ambrosini, E.; Galbiati, A.; Biffi, E.; Cesareo, A.; Beretta, E.; Lunardini, F.; Zorzi, G.; Sanger, T.D.; Pedrocchi, A. EMG-based vibro-tactile biofeedback training: Effective learning accelerator for children and adolescents with dystonia? A pilot crossover trial. J. Neuroeng. Rehabil. 2019, 16, 150. [Google Scholar] [CrossRef] [PubMed]

- Gutiérrez, Á.; Sepúlveda-Muñoz, D.; Gil-Agudo, Á.; de los Reyes Guzman, A. Serious Game Platform with Haptic Feedback and EMG Monitoring for Upper Limb Rehabilitation and Smoothness Quantification on Spinal Cord Injury Patients. Appl. Sci. 2020, 10, 963. [Google Scholar] [CrossRef] [Green Version]

- Li, K.; Boyd, P.; Zhou, Y.; Ju, Z.; Liu, H. Electrotactile Feedback in a Virtual Hand Rehabilitation Platform: Evaluation and Implementation. IEEE Trans. Autom. Sci. Eng. 2019, 16, 1556–1565. [Google Scholar] [CrossRef] [Green Version]

- Markovic, M.; Varel, M.; Schweisfurth, M.A.; Schilling, A.F.; Dosen, S. Closed-Loop Multi-Amplitude Control for Robust and Dexterous Performance of Myoelectric Prosthesis. IEEE Trans. Neural Syst. Rehabil. Eng. 2020, 28, 498–507. [Google Scholar] [CrossRef] [PubMed]

- Parker, P.; Englehart, K.; Hudgins, B. Myoelectric signal processing for control of powered limb prostheses. J. Electromyogr. Kinesiol. 2006, 16, 541–548. [Google Scholar] [CrossRef] [PubMed]

- Youn, W.; Kim, J. Development of a compact-size and wireless surface EMG measurement system. In Proceedings of the ICCAS-SICE 2009-ICROS-SICE International Joint Conference 2009, Fukuoka, Japan, 18–21 August 2009; pp. 1625–1628. [Google Scholar]

- Lowery, M.; Weir, R.; Kuiken, T. Simulation of Intramuscular EMG Signals Detected Using Implantable Myoelectric Sensors (IMES). IEEE Trans. Biomed. Eng. 2006, 53, 1926–1933. [Google Scholar] [CrossRef] [PubMed]

- Reategui, J.; Callupe, R. Surface EMG multichannel array using active dry sensors for forearm signal extraction. In Proceedings of the 2017 IEEE 24th International Congress on Electronics, Electrical Engineering and Computing, INTERCON 2017, Cusco, Peru, 15–18 August 2017; pp. 1–4. [Google Scholar]

- Drost, G.; Stegeman, D.F.; van Engelen, B.G.; Zwarts, M.J. Clinical applications of high-density surface EMG: A systematic review. J. Electromyogr. Kinesiol. 2006, 16, 586–602.

- Xie, L.; Yang, G.; Xu, L.; Seoane, F.; Chen, Q.; Zheng, L. Characterization of dry biopotential electrodes. In Proceedings of the 2013 35th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Osaka, Japan, 3–7 July 2013; pp. 1478–1481.

- Roche, A.D.; Rehbaum, H.; Farina, D.; Aszmann, O.C. Prosthetic Myoelectric Control Strategies: A Clinical Perspective. Curr. Surg. Rep. 2014, 2, 44.

- Cordella, F.; Ciancio, A.L.; Sacchetti, R.; Davalli, A.; Cutti, A.G.; Guglielmelli, E.; Zollo, L. Literature Review on Needs of Upper Limb Prosthesis Users. Front. Neurosci. 2016, 10, 209.

- Pallavicini, F.; Ferrari, A.; Mantovani, F. Video Games for Well-Being: A Systematic Review on the Application of Computer Games for Cognitive and Emotional Training in the Adult Population. Front. Psychol. 2018, 9, 2127.

- Reilly, C.A.; Greeley, A.B.; Jevsevar, D.S.; Gitajn, I.L. Virtual reality-based physical therapy for patients with lower extremity injuries: Feasibility and acceptability. OTA Int. Open Access J. Orthop. Trauma 2021, 4, e132.

- Gil, M.J.V.; Gonzalez-Medina, G.; Lucena-Anton, D.; Perez-Cabezas, V.; Ruiz-Molinero, M.D.C.; Martín-Valero, R. Augmented Reality in Physical Therapy: Systematic Review and Meta-analysis. JMIR Serious Games 2021, 9, e30985.

- Dash, A.; Lahiri, U. Design of Virtual Reality-Enabled Surface Electromyogram-Triggered Grip Exercise Platform. IEEE Trans. Neural Syst. Rehabil. Eng. 2020, 28, 444–452.

- Hashim, N.A.; Razak, N.A.A.; Gholizadeh, H.; Osman, N.A.A. Video Game–Based Rehabilitation Approach for Individuals Who Have Undergone Upper Limb Amputation: Case-Control Study. JMIR Serious Games 2021, 9, e17017.

- Seo, N.J.; Barry, A.; Ghassemi, M.; Triandafilou, K.M.; Stoykov, M.E.; Vidakovic, L.; Roth, E.; Kamper, D.G. Use of an EMG-Controlled Game as a Therapeutic Tool to Retrain Hand Muscle Activation Patterns Following Stroke: A Pilot Study. J. Neurol. Phys. Ther. 2022, 46, 198–205.

- Pereira, M.F.; Prahm, C.; Kolbenschlag, J.; Oliveira, E.; Rodrigues, N.F. Application of AR and VR in hand rehabilitation: A systematic review. J. Biomed. Inform. 2020, 111, 103584.

- Merians, A.S.; Jack, D.; Boian, R.; Tremaine, M.; Burdea, G.C.; Adamovich, S.V.; Recce, M.; Poizner, H. Virtual Reality–Augmented Rehabilitation for Patients Following Stroke. Phys. Ther. 2002, 82, 898–915.

- Dash, A.; Yadav, A.; Lahiri, U. Physiology-sensitive Virtual Reality based Strength Training Platform for Post-stroke Grip Task. In Proceedings of the 2019 IEEE EMBS International Conference on Biomedical & Health Informatics (BHI), Chicago, IL, USA, 19–22 May 2019; pp. 1–4.

- Peng, L.; Hou, Z.G.; Peng, L.; Luo, L.; Wang, W. Robot assisted upper limb rehabilitation training and clinical evaluation: Results of a pilot study. In Proceedings of the 2017 IEEE International Conference on Robotics and Biomimetics, ROBIO 2017, Macau, Macao, 5–8 December 2017; pp. 1–6.

- Wei, X.; Chen, Y.; Jia, X.; Chen, Y.; Xie, L. Muscle Activation Visualization System Using Adaptive Assessment and Forces-EMG Mapping. IEEE Access 2021, 9, 46374–46385.

- Page, M.J.; McKenzie, J.E.; Bossuyt, P.M.; Boutron, I.; Hoffmann, T.C.; Mulrow, C.D.; Shamseer, L.; Tetzlaff, J.M.; Akl, E.A.; Brennan, S.E.; et al. The PRISMA 2020 statement: An updated guideline for reporting systematic reviews. BMJ 2021, 372, n71.

- Wang, L.; Du, S.; Liu, H.; Yu, J.; Cheng, S.; Xie, P. A virtual rehabilitation system based on EEG-EMG feedback control. In Proceedings of the 2017 Chinese Automation Congress (CAC), Jinan, China, 20–22 October 2017; pp. 4337–4340.

- Vega, M.F.M.; Henao, O.A. Cross-validation of a classification method applied in a database of sEMG contractions collected in a body interaction videogame. J. Phys. Conf. Ser. 2019, 1247, 012049.

- Llorens, R.; Fuentes, M.A.; Borrego, A.; Latorre, J.; Alcañiz, M.; Colomer, C.; Noé, E. Effectiveness of a combined transcranial direct current stimulation and virtual reality-based intervention on upper limb function in chronic individuals post-stroke with persistent severe hemiparesis: A randomized controlled trial. J. Neuroeng. Rehabil. 2021, 18, 108.

- Li, Y.; Chen, J.; Yang, Y. A Method for Suppressing Electrical Stimulation Artifacts from Electromyography. Int. J. Neural Syst. 2019, 29, 1850054.

- Li, X.; Zhou, Z.; Liu, W.; Ji, M. Wireless sEMG-based identification in a virtual reality environment. Microelectron. Reliab. 2019, 98, 78–85.

- Bhagat, N.A.; Yozbatiran, N.; Sullivan, J.L.; Paranjape, R.; Losey, C.; Hernandez, Z.; Keser, Z.; Grossman, R.; Francisco, G.E.; O’Malley, M.K.; et al. Neural activity modulations and motor recovery following brain-exoskeleton interface mediated stroke rehabilitation. Neuroimage Clin. 2020, 28, 102502.

- Trifonov, A.A.; Kuzmin, A.A.; Filist, S.A.; Degtyarev, S.V.; Petrunina, E.V. Biotechnical System for Control to the Exoskeleton Limb Based on Surface Myosignals for Rehabilitation Complexes. In Proceedings of the 2020 IEEE 14th International Conference on Application of Information and Communication Technologies (AICT), Tashkent, Uzbekistan, 7–9 October 2020.

- Ruiz-Olaya, A.F.; Lopez-Delis, A.; da Rocha, A.F. Upper and lower extremity exoskeletons. In Handbook of Biomechatronics; Elsevier: Amsterdam, The Netherlands; pp. 283–317.

- Liew, S.-L.; Lin, D.J.; Cramer, S.C. Interventions to Improve Recovery After Stroke, 7th ed.; Elsevier Inc.: Amsterdam, The Netherlands, 2022.

- Melero, M.; Hou, A.; Cheng, E.; Tayade, A.; Lee, S.C.; Unberath, M.; Navab, N. Upbeat: Augmented Reality-Guided Dancing for Prosthetic Rehabilitation of Upper Limb Amputees. J. Healthc. Eng. 2019, 2019, 2163705.

- Palermo, F.; Cognolato, M.; Eggel, I.; Atzori, M.; Müller, H. An augmented reality environment to provide visual feedback to amputees during sEMG data acquisitions. In Lecture Notes in Computer Science (including subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics); Springer: Cham, Switzerland, 2019; Volume 11650, pp. 3–14.

- Gazzoni, M.; Cerone, G.L. Augmented Reality Biofeedback for Muscle Activation Monitoring: Proof of Concept. In IFMBE Proceedings; Springer: Cham, Switzerland, 2021; Volume 80, pp. 143–150.

- Liu, L.; Cui, J.; Niu, J.; Duan, N.; Yu, X.; Li, Q.; Yeh, S.-C.; Zheng, L.-R. Design of Mirror Therapy System Base on Multi-Channel Surface-Electromyography Signal Pattern Recognition and Mobile Augmented Reality. Electronics 2020, 9, 2142.

- Nasri, N.; Orts-Escolano, S.; Cazorla, M. An sEMG-Controlled 3D Game for Rehabilitation Therapies: Real-Time Time Hand Gesture Recognition Using Deep Learning Techniques. Sensors 2020, 20, 6451.

- Quinayás, C.; Barrera, F.; Ruiz, A.; Delis, A. Virtual Hand Training Platform Controlled Through Online Recognition of Motion Intention. In Lecture Notes in Computer Science (including subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics); Springer: Cham, Switzerland, 2019; Volume 11896, pp. 761–768.

- Ma, L.; Zhao, X.; Li, Z.; Zhao, M.; Xu, Z. A sEMG-based Hand Function Rehabilitation System for Stroke Patients. In Proceedings of the 2018 3rd International Conference on Advanced Robotics and Mechatronics (ICARM), Singapore, 18–20 July 2018; pp. 497–502.

- Woodward, R.B.; Hargrove, L.J. Adapting myoelectric control in real-time using a virtual environment. J. Neuroeng. Rehabil. 2019, 16, 11.

- Nissler, C.; Nowak, M.; Connan, M.; Büttner, S.; Vogel, J.; Kossyk, I.; Márton, Z.-C.; Castellini, C. VITA—an everyday virtual reality setup for prosthetics and upper-limb rehabilitation. J. Neural Eng. 2019, 16, 026039.

- Lydakis, A.; Meng, Y.; Munroe, C.; Wu, Y.N.; Begum, M. A learning-based agent for home neurorehabilitation. In Proceedings of the IEEE International Conference on Rehabilitation Robotics, London, UK, 17–20 July 2017; pp. 1233–1238.

- Lukyanenko, P.; Dewald, H.A.; Lambrecht, J.; Kirsch, R.F.; Tyler, D.J.; Williams, M.R. Stable, simultaneous and proportional 4-DoF prosthetic hand control via synergy-inspired linear interpolation: A case series. J. Neuroeng. Rehabil. 2021, 18, 50.

- Raz, G.; Gurevitch, G.; Vaknin, T.; Aazamy, A.; Gefen, I.; Grunstein, S.; Azouri, G.; Goldway, N. Electroencephalographic evidence for the involvement of mirror-neuron and error-monitoring related processes in virtual body ownership. NeuroImage 2020, 207, 116351.

- Kisiel-Sajewicz, K.; Marusiak, J.; Rojas-Martínez, M.; Janecki, D.; Chomiak, S.; Mencel, J.; Mañanas, M.; Jaskólski, A.; Jaskólska, A. High-density surface electromyography maps after computer-aided training in individual with congenital transverse deficiency: A case study. BMC Musculoskelet. Disord. 2020, 21, 682.

- Mazzola, S.; Prado, A.; Agrawal, S.K. An upper limb mirror therapy environment with hand tracking in virtual reality. In Proceedings of the 2020 8th IEEE RAS/EMBS International Conference for Biomedical Robotics and Biomechatronics (BioRob), New York, NY, USA, 29 November–1 December 2020; pp. 752–758.

- Summa, S.; Gori, R.; Castelli, E.; Petrarca, M. Development of a dynamic oriented rehabilitative integrated system. In Proceedings of the Annual International Conference of the IEEE Engineering in Medicine and Biology Society, EMBS, Berlin, Germany, 23–27 July 2019; pp. 5245–5250.

- Cardoso, V.F.; Pomer-Escher, A.; Longo, B.B.; Loterio, F.A.; Nascimento, S.S.G.; Laiseca, M.A.R.; Delisle-Rodriguez, D.; Frizera-Neto, A.; Bastos-Filho, T. Neurorehabilitation platform based on EEG, sEMG and virtual reality using robotic monocycle. In IFMBE Proceedings; Springer: Singapore; Volume 70, pp. 315–321.

- Braza, D.W.; Martin, J.N.Y. Upper Limb Amputations. In Essentials of Physical Medicine and Rehabilitation: Musculoskeletal Disorders, Pain, and Rehabilitation; Elsevier: Amsterdam, The Netherlands; pp. 651–657.

- Alshehri, F.M.; Ahmed, S.A.; Ullah, S.; Ghazal, H.; Nawaz, S.; Alzahrani, A.S. The Patterns of Acquired Upper and Lower Extremity Amputation at a Tertiary Centre in Saudi Arabia. Cureus 2022, 14, 4.

- Bank, P.J.; Dobbe, L.R.; Meskers, C.G.; De Groot, J.H.; De Vlugt, E. Manipulation of visual information affects control strategy during a visuomotor tracking task. Behav. Brain Res. 2017, 329, 205–214.

- Lai, J.; Zhao, Y.; Liao, Y.; Hou, W.; Chen, Y.; Zhang, Y.; Li, G.; Wu, X. Design of a multi-degree-of-freedom virtual hand bench for myoelectrical prosthesis. In Proceedings of the 2017 2nd International Conference on Advanced Robotics and Mechatronics (ICARM), Hefei/Tai’an, China, 27–31 August 2017; pp. 345–350.

- Pizzolato, S.; Tagliapietra, L.; Cognolato, M.; Reggiani, M.; Müller, H.; Atzori, M. Comparison of six electromyography acquisition setups on hand movement classification tasks. PLoS ONE 2017, 12, e0186132.

- Covaciu, F.; Pisla, A.; Iordan, A.-E. Development of a Virtual Reality Simulator for an Intelligent Robotic System Used in Ankle Rehabilitation. Sensors 2021, 21, 1537.

- Castellini, C. Design Principles of a Light, Wearable Upper Limb Interface for Prosthetics and Teleoperation. In Wearable Robotics; Elsevier: Amsterdam, The Netherlands, 2020; pp. 377–391.

- Heerschop, A.; van der Sluis, C.K.; Otten, E.; Bongers, R.M. Performance among different types of myocontrolled tasks is not related. Hum. Mov. Sci. 2020, 70, 102592.

- Ida, H.; Mohapatra, S.; Aruin, A.S. Perceptual distortion in virtual reality and its impact on dynamic postural control. Gait Posture 2021, 92, 123–128.

- Yassin, M.M.; Saber, A.M.; Saad, M.N.; Said, A.M.; Khalifa, A.M. Developing a Low-cost, smart, handheld electromyography biofeedback system for telerehabilitation with Clinical Evaluation. Med. Nov. Technol. Devices 2021, 10, 100056.

- Galido, E.; Esplanada, M.C.; Estacion, C.J.; Migriño, J.P.; Rapisora, J.K.; Salita, J.; Amado, T.; Jorda, R.; Tolentino, L.K. EMG Speed-Controlled Rehabilitation Treadmill With Physiological Data Acquisition System Using BITalino Kit. In Proceedings of the 2018 IEEE 10th International Conference on Humanoid, Nanotechnology, Information Technology, Communication and Control, Environment and Management (HNICEM), Baguio City, Philippines, 29 November–2 December 2018; pp. 1–5.

- Osumi, M.; Inomata, K.; Inoue, Y.; Otake, Y.; Morioka, S.; Sumitani, M. Characteristics of Phantom Limb Pain Alleviated with Virtual Reality Rehabilitation. In Pain Medicine; Oxford University Press: Oxford, UK, 2019; Volume 20, pp. 1038–1046.

- Sousa, M.; Vieira, J.; Medeiros, D.; Arsénio, A.; Jorge, J. SleeveAR: Augmented reality for rehabilitation using realtime feedback. In Proceedings of the International Conference on Intelligent User Interfaces, Proceedings IUI, Sonoma, CA, USA, 7–10 March 2016; pp. 175–185.

- Maier, M.; Ballester, B.R.; Verschure, P.F.M.J. Principles of Neurorehabilitation After Stroke Based on Motor Learning and Brain Plasticity Mechanisms. Front. Syst. Neurosci. 2019, 13, 74.