MAN-EDoS: A Multihead Attention Network for the Detection of Economic Denial of Sustainability Attacks

Abstract

1. Introduction

1.1. Problem Statement

1.2. Contribution

- An effective EDoS detection scheme is proposed, which applies the attention technique. Attention in DL can be interpreted as a vector of attention score weights. To predict or comprehend one element, such as a pixel in an image or a word in a sentence, the attention vector is used to determine how strongly that element is correlated with the other elements in the sequence, and the sum of their values weighted by the attention vector is taken as the approximation of the target.

- In the present context, these are the relative scores of one feature to the other features in a network packet, and of one packet to the other packets in a network flow. These scores help the model retain the history of the input sequence. Furthermore, unlike most statistical techniques, which depend on a feature selection threshold, this scheme computes the score of each observed feature against the other features to measure how strongly they relate to one another.

- The attention score is applied in a model similar to the encoder–decoder network introduced in the transformer model [13,14]. Instead of using both modules as the transformer does, the proposed model includes only one encoder block, which processes the inputs in parallel to shorten the training time while still achieving accuracy comparable to that of sequence models such as LSTM schemes.

- Moreover, the constructed attention model can address unsupervised problems by predicting attack traffic from the attention score, which allows zero-day attacks to be classified.

- In addition, the MAN mechanism shortens the processing time. The shorter testing time reduces the detection time of the system, and the shorter training time allows the model to be fine-tuned promptly so that it adapts to changes in the attacks.

- Common types of EDoS attacks in the cloud environment were investigated by adjusting the attack rate to be lower than that in popular flooding attacks in the cloud and network environments.
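The weighted-sum view of attention described in the first contribution can be illustrated with a minimal NumPy sketch of scaled dot-product self-attention as defined in the transformer [13]. The function names, the toy input, and the use of the raw input as query, key, and value (with no learned projections) are assumptions made for illustration; this is not the authors' implementation.

```python
import numpy as np

def softmax(x, axis=-1):
    """Numerically stable softmax along the given axis."""
    shifted = x - x.max(axis=axis, keepdims=True)
    exp = np.exp(shifted)
    return exp / exp.sum(axis=axis, keepdims=True)

def attention(query, key, value):
    """Scaled dot-product attention.

    Returns the weighted sum of the rows of `value` and the attention weights.
    """
    d_k = query.shape[-1]
    scores = query @ key.T / np.sqrt(d_k)  # similarity of each unit to every other unit
    weights = softmax(scores, axis=-1)     # each row is an attention vector summing to 1
    return weights @ value, weights

# Toy "flow" of 4 packets with 3 features each (values are made up).
rng = np.random.default_rng(0)
packets = rng.normal(size=(4, 3))
output, weights = attention(packets, packets, packets)
print(weights.shape, output.shape)
```

Each row of `weights` scores one packet against every packet in the flow, and the matching row of `output` is the sum of the packet values weighted by those scores.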

2. Related Works

3. Background Knowledge

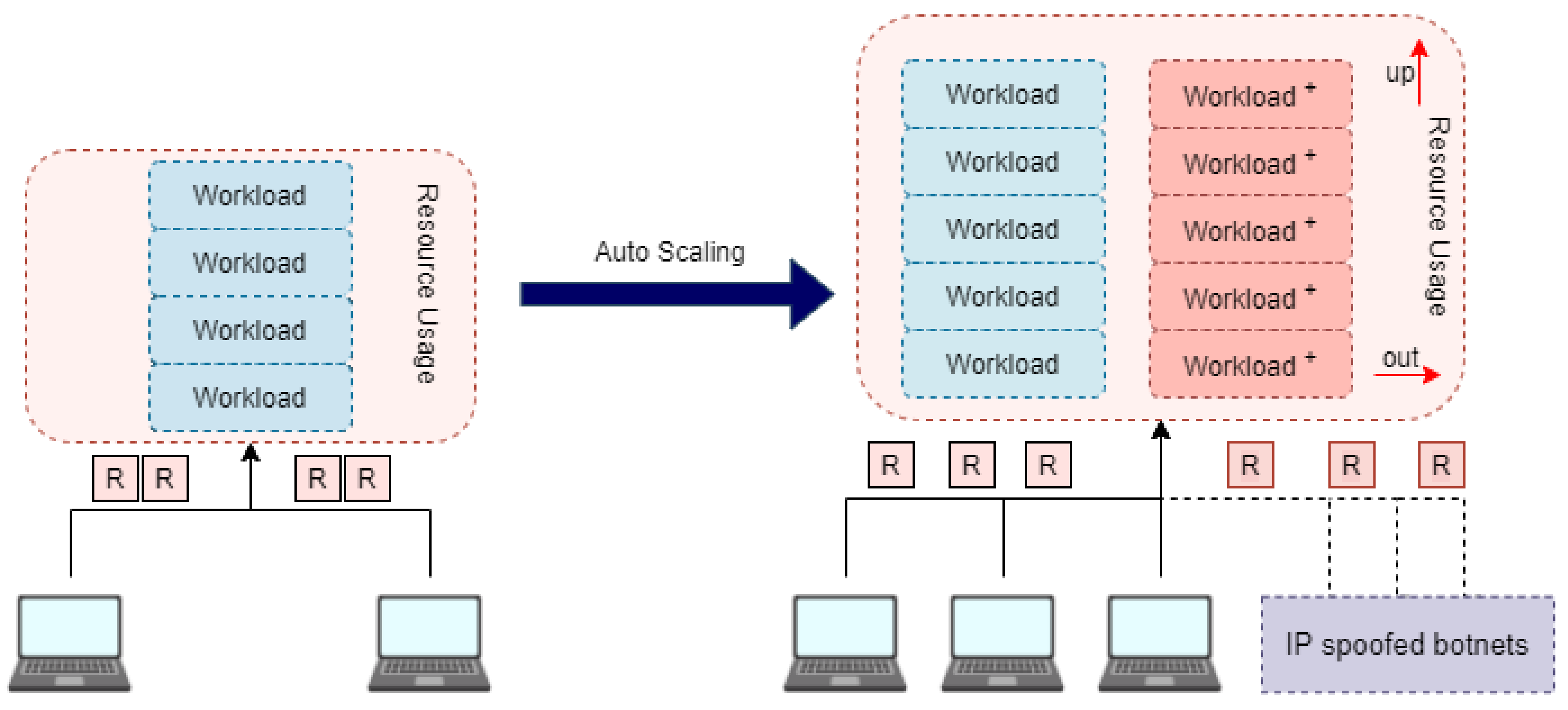

3.1. EDoS Attack on Network

3.1.1. TCP SYN Flooding Attack

3.1.2. UDP Flooding Attack

3.1.3. ICMP Flooding Attack

- The attacker sends many ICMP request packets to the targeted server using multiple botnets.

- The victim server then answers each request by sending an ICMP echo-response packet back to the botnet’s IP address. The damage to the network traffic grows as the number of requests made to the targeted server increases.

3.2. Attention Technique

4. Proposed Scheme and Experiment

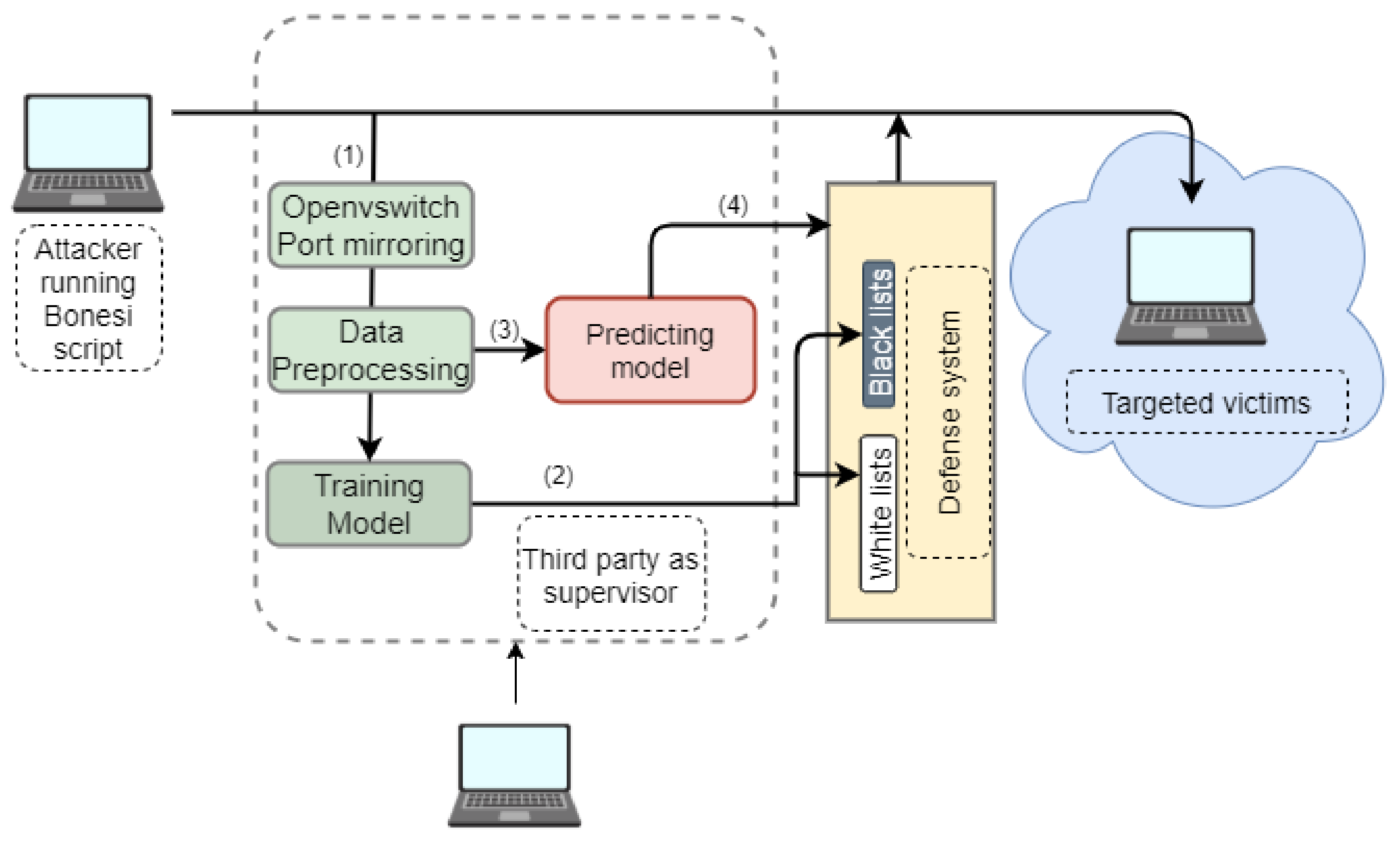

4.1. Testbed Scheme for Network Communication

Algorithm 1. Workflow of testbed for EDoS detection and mitigation

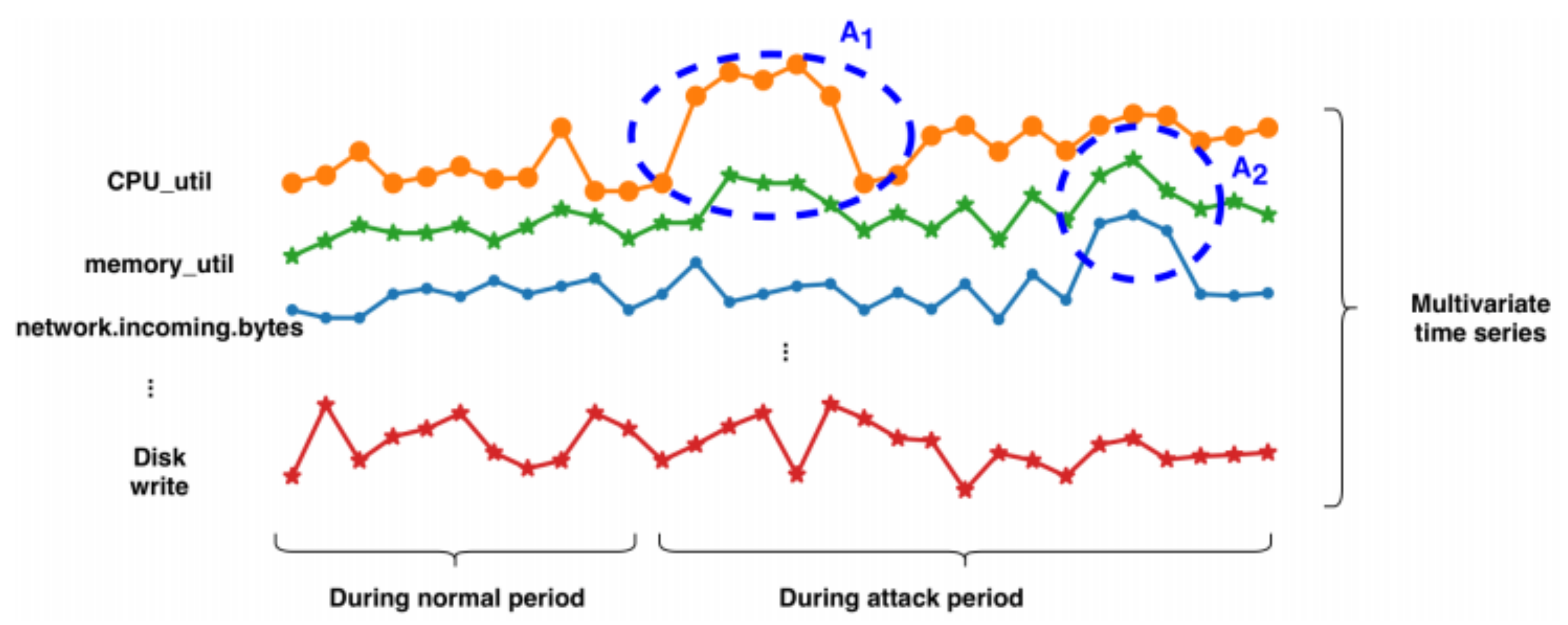

4.2. EDoS Attack Performance

4.3. Preprocessing and Model Work Flow

4.3.1. Data Preparation

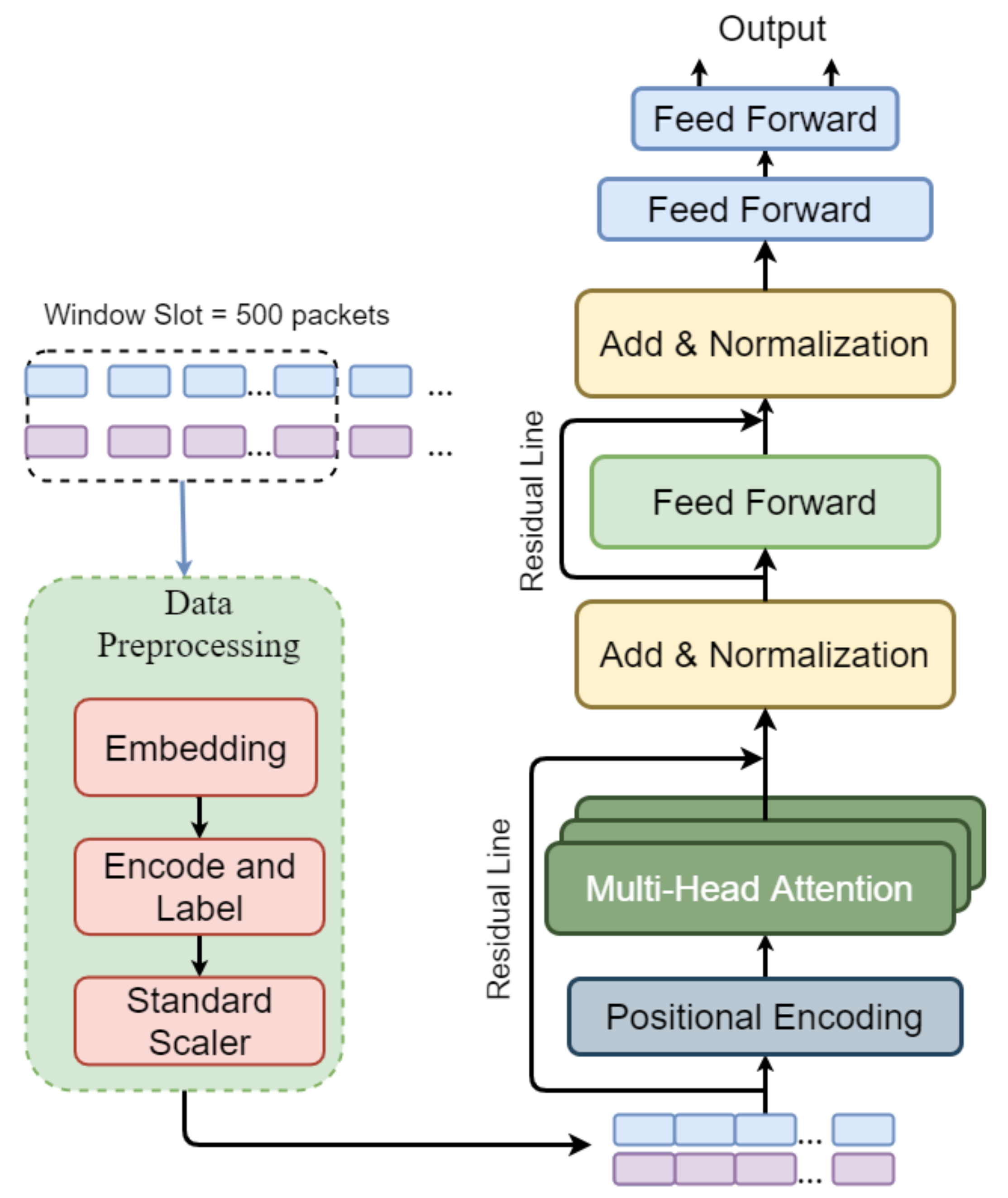

- Data capture: This module runs on basic network traffic. The packets generated by the BoNeSi attackers are captured with the Wireshark tool, which can split the traffic into flows by the number of packets per flow and by the time per flow. A previous study [21] used a sequence length of 250 packets per flow. To overcome the loss of information and the vanishing memory of LSTM, a longer window slot of 500 packets per flow sequence is proposed. For a flow that does not contain enough packets of a protocol, the proposed method fills up the sequence window slot with fake packets that contain zero values.

- Data preprocessing: The embedding module transforms the categorical features into numerical form using the one-hot encoding technique. Encoding and labeling are used to label the flows generated from the window slot. The Standard Scaler normalizes the data, which is important for the model's computation and shortens the training and prediction phases. The formula can be expressed as:

  $$x' = \frac{x - x_{\min}}{x_{\max} - x_{\min}}$$

  where $x$ is the value being standardized, and $x_{\min}$ and $x_{\max}$ are the minimum and maximum values of each feature in the dataset.

- Data description: For the training and testing phases, two datasets are used: one generated from the training scheme and the UNSW-NB15 dataset [31,32]. The UNSW-NB15 dataset contains pcap files from which the packets of each flow can be extracted by timestamp. This dataset represents actual situations in a real network and overcomes the shortcomings of KDDCUP’99 [33]. Moreover, to evaluate the model prediction results, the NSL-KDD dataset and the CICIDS dataset introduced by the Canadian Institute for Cybersecurity are used with the same features and behaviors [33,34]. They have gradually become benchmark datasets in the field of network security. Table 2 shows the features of the packets that are generated from the system and from the testing UNSW-NB15 dataset.
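The preparation steps above (zero-padding a flow to the window slot, one-hot encoding, and scaling by the per-feature minimum and maximum) can be sketched as follows. This is a minimal NumPy illustration under stated assumptions: the helper names `pad_flow`, `one_hot`, and `min_max_scale`, the toy packet values, and the choice to scale before padding are all hypothetical and are not taken from the authors' code.

```python
import numpy as np

SEQ_LEN = 500  # packets per flow window slot, as stated in the text

def pad_flow(packets, seq_len=SEQ_LEN):
    """Truncate or zero-pad a (n_packets, n_features) array to seq_len rows."""
    packets = np.asarray(packets, dtype=float)[:seq_len]
    padding = np.zeros((seq_len - len(packets), packets.shape[1]))
    return np.vstack([packets, padding])

def one_hot(labels, categories):
    """One-hot encode categorical labels against a fixed category list."""
    index = {c: i for i, c in enumerate(categories)}
    encoded = np.zeros((len(labels), len(categories)))
    for row, label in enumerate(labels):
        encoded[row, index[label]] = 1.0
    return encoded

def min_max_scale(features):
    """Scale each column to [0, 1]; constant columns map to 0."""
    features = np.asarray(features, dtype=float)
    lo, hi = features.min(axis=0), features.max(axis=0)
    span = np.where(hi > lo, hi - lo, 1.0)  # avoid division by zero
    return (features - lo) / span

# Three made-up packets: protocol type and packet length in bytes.
proto = one_hot(["tcp", "udp", "icmp"], ["tcp", "udp", "icmp"])
scaled = min_max_scale([[60], [1500], [780]])
flow = pad_flow(np.hstack([proto, scaled]))
print(flow.shape)  # (500, 4)
```

Rows 3 to 499 of `flow` are the zero-valued filler packets; in practice the scaling statistics would be computed on the training data only and reused at prediction time.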

4.3.2. Model Architecture

- Positional Encoding: To make use of the order of the input sequence without recurrence or convolution, the positional encoding of each position in the window slot is added to the input sequence:

  $$PE_{(pos,\,2i)} = \sin\left(\frac{pos}{10000^{2i/d_{model}}}\right), \qquad PE_{(pos,\,2i+1)} = \cos\left(\frac{pos}{10000^{2i/d_{model}}}\right)$$

  The parameter $pos$ is the position, $i$ is the dimension, and $d_{model}$ is the embedding dimension. Each dimension of the positional encoding corresponds to a sinusoid. The wavelengths form a geometric progression from $2\pi$ to $10000 \cdot 2\pi$. Adding these values to the embeddings provides meaningful distances between the embedded vectors once they are projected into the $Q$ and $K$ vectors and during dot-product attention.

- Multihead Attention: enables the model to jointly attend to information from different positions of the input sequence. The residual connection, introduced in ResNet [35], prevents the loss of information through the network layers and improves the propagation of useful gradient information.

- Add and Normalization: adds the input information to the output of the attention layers. The normalization layer normalizes the activations of the previous layer for each given example in a batch independently:

  $$\mathrm{LayerNorm}\left(x + \mathrm{MultiHead}(x)\right)$$

  where $x$ is the input sequence in the window slot, which the residual connection adds to the output of the multihead layers.

- Feed-Forward Network (FFN): processes the output of the previous layers, computing the feature maps with fully connected feed-forward layers and applying a Sigmoid activation function.
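The four components above can be combined into a single encoder block. The following NumPy sketch uses the hyperparameters reported for the model (8 attention heads, sequence length 500, embedding and feed-forward dimension 64) with random, untrained weights. The function names, the parameter dictionary, the placement of the Sigmoid between the two feed-forward layers, the second add-and-normalize step after the FFN (taken from the standard transformer encoder [13]), and the omission of dropout and learned normalization parameters are assumptions for illustration rather than the authors' implementation.

```python
import numpy as np

def positional_encoding(seq_len, d_model):
    """Sinusoidal encoding: sin on even dimensions, cos on odd dimensions."""
    pos = np.arange(seq_len)[:, None]
    i = np.arange(d_model // 2)[None, :]
    angles = pos / np.power(10000.0, 2 * i / d_model)
    pe = np.zeros((seq_len, d_model))
    pe[:, 0::2] = np.sin(angles)
    pe[:, 1::2] = np.cos(angles)
    return pe

def softmax(x):
    e = np.exp(x - x.max(axis=-1, keepdims=True))
    return e / e.sum(axis=-1, keepdims=True)

def layer_norm(x, eps=1e-6):
    """Normalize each position over its feature dimension (no learned gain or bias)."""
    mean = x.mean(axis=-1, keepdims=True)
    std = x.std(axis=-1, keepdims=True)
    return (x - mean) / (std + eps)

def multihead_attention(x, p, n_heads):
    seq_len, d_model = x.shape
    d_head = d_model // n_heads

    def split(t):  # (seq, d_model) -> (heads, seq, d_head)
        return t.reshape(seq_len, n_heads, d_head).transpose(1, 0, 2)

    q, k, v = split(x @ p["w_q"]), split(x @ p["w_k"]), split(x @ p["w_v"])
    weights = softmax(q @ k.transpose(0, 2, 1) / np.sqrt(d_head))
    heads = weights @ v  # (heads, seq, d_head)
    concat = heads.transpose(1, 0, 2).reshape(seq_len, d_model)
    return concat @ p["w_o"]

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def encoder_block(x, p, n_heads=8):
    x = x + positional_encoding(*x.shape)                # inject order information
    x = layer_norm(x + multihead_attention(x, p, n_heads))  # add and normalize
    ffn = sigmoid(x @ p["w_1"] + p["b_1"]) @ p["w_2"] + p["b_2"]
    return layer_norm(x + ffn)                           # assumed second add and normalize

SEQ_LEN, D_MODEL = 500, 64
rng = np.random.default_rng(0)
params = {name: rng.normal(scale=0.1, size=(D_MODEL, D_MODEL))
          for name in ("w_q", "w_k", "w_v", "w_o", "w_1", "w_2")}
params["b_1"] = np.zeros(D_MODEL)
params["b_2"] = np.zeros(D_MODEL)

flow = rng.normal(size=(SEQ_LEN, D_MODEL))  # one embedded flow (made-up values)
encoded = encoder_block(flow, params)
print(encoded.shape)  # (500, 64)
```

Because no step depends on the output of a previous position, all 500 packets of the window slot are processed in one pass of matrix products, which is the source of the parallelism that a recurrent model lacks.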

Algorithm 2. Multihead attention network for EDoS attack scheme

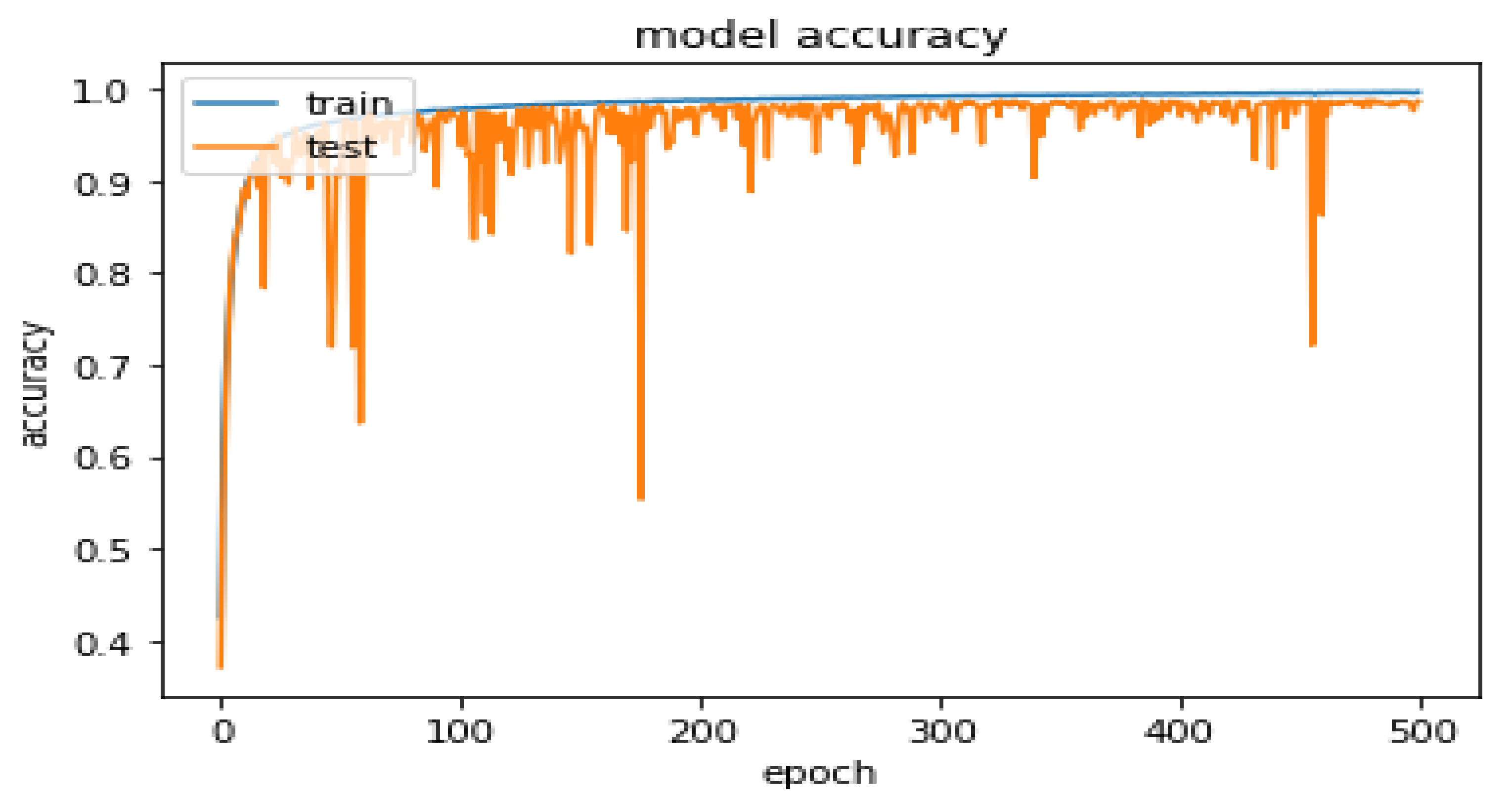

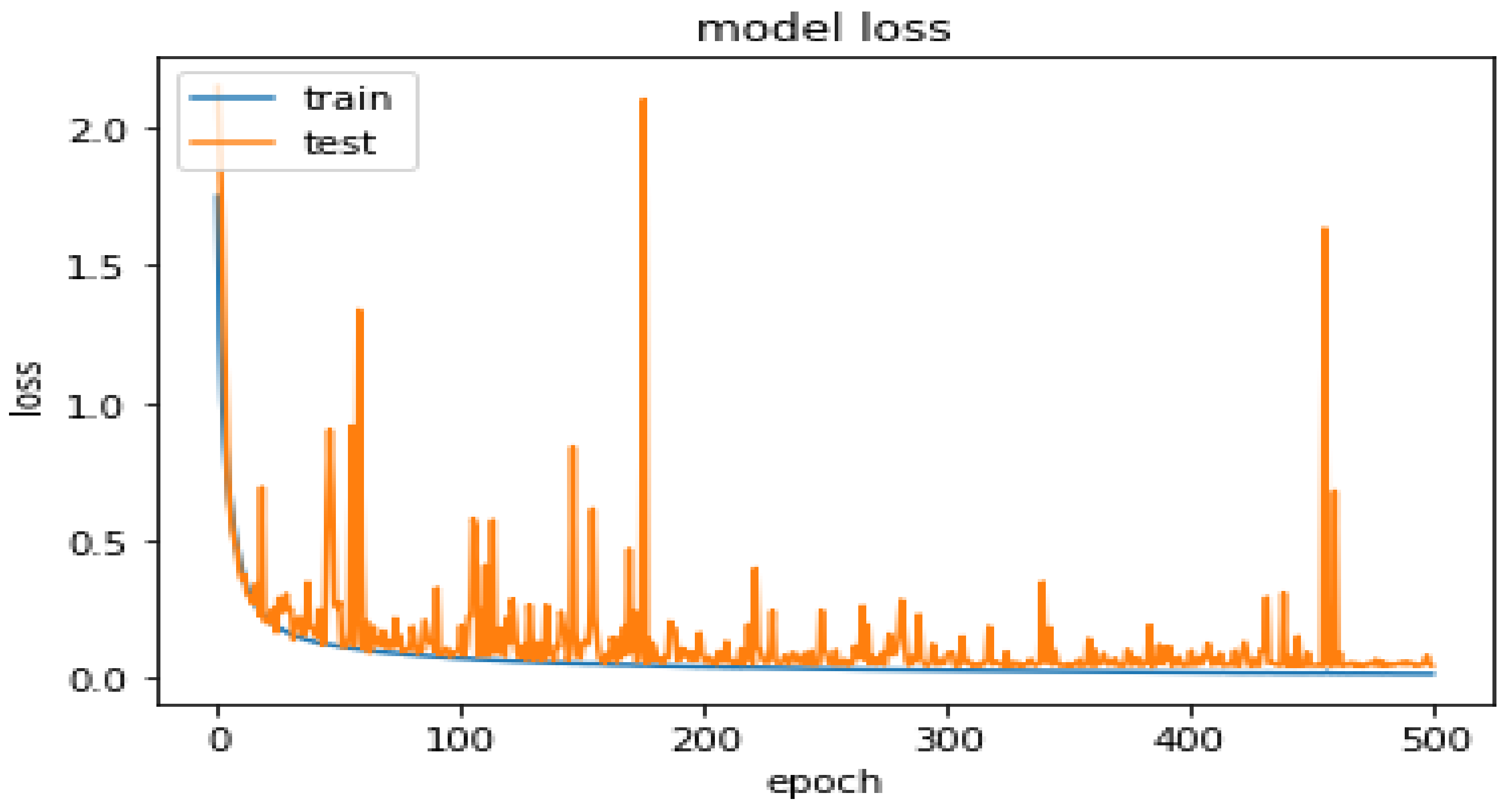

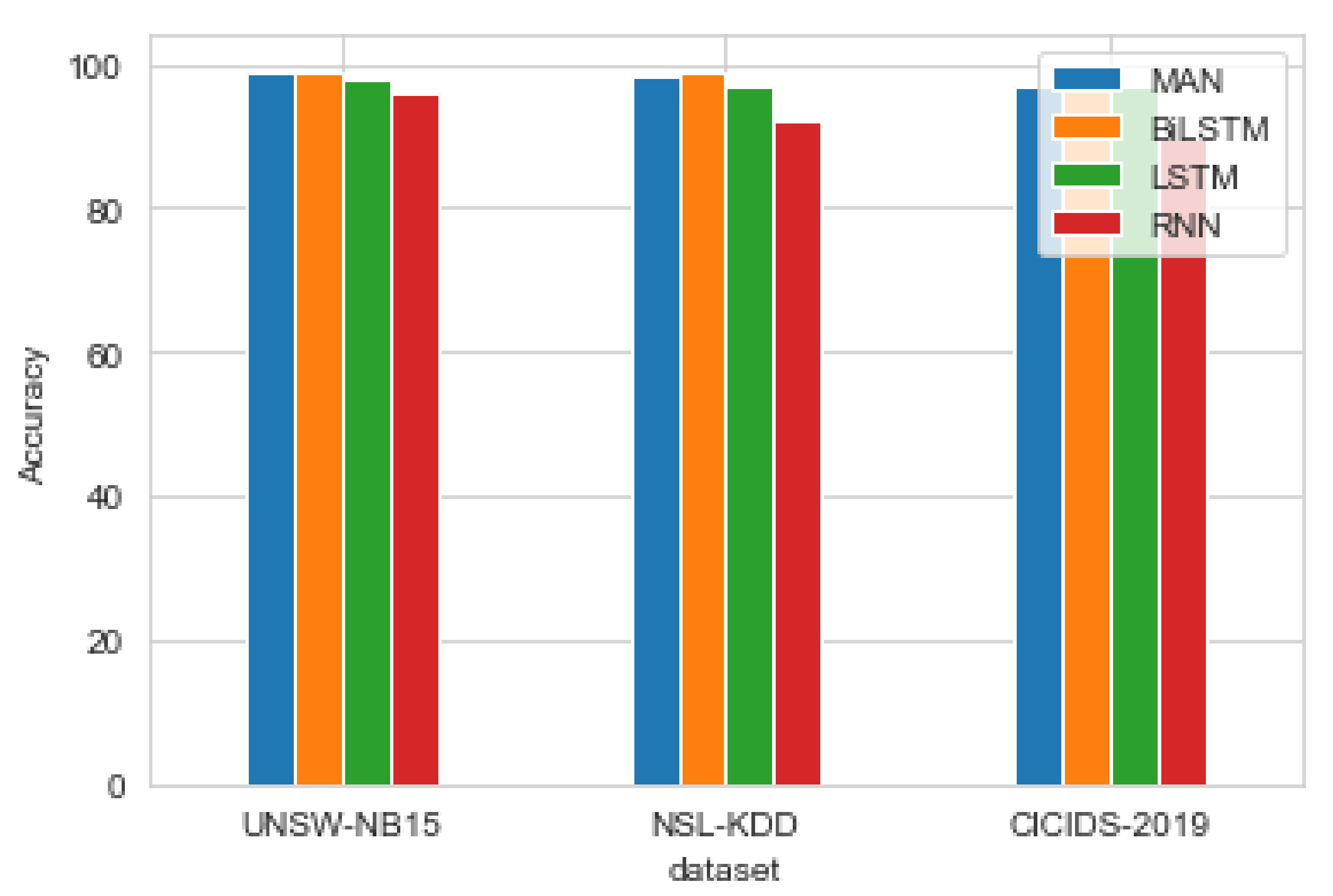

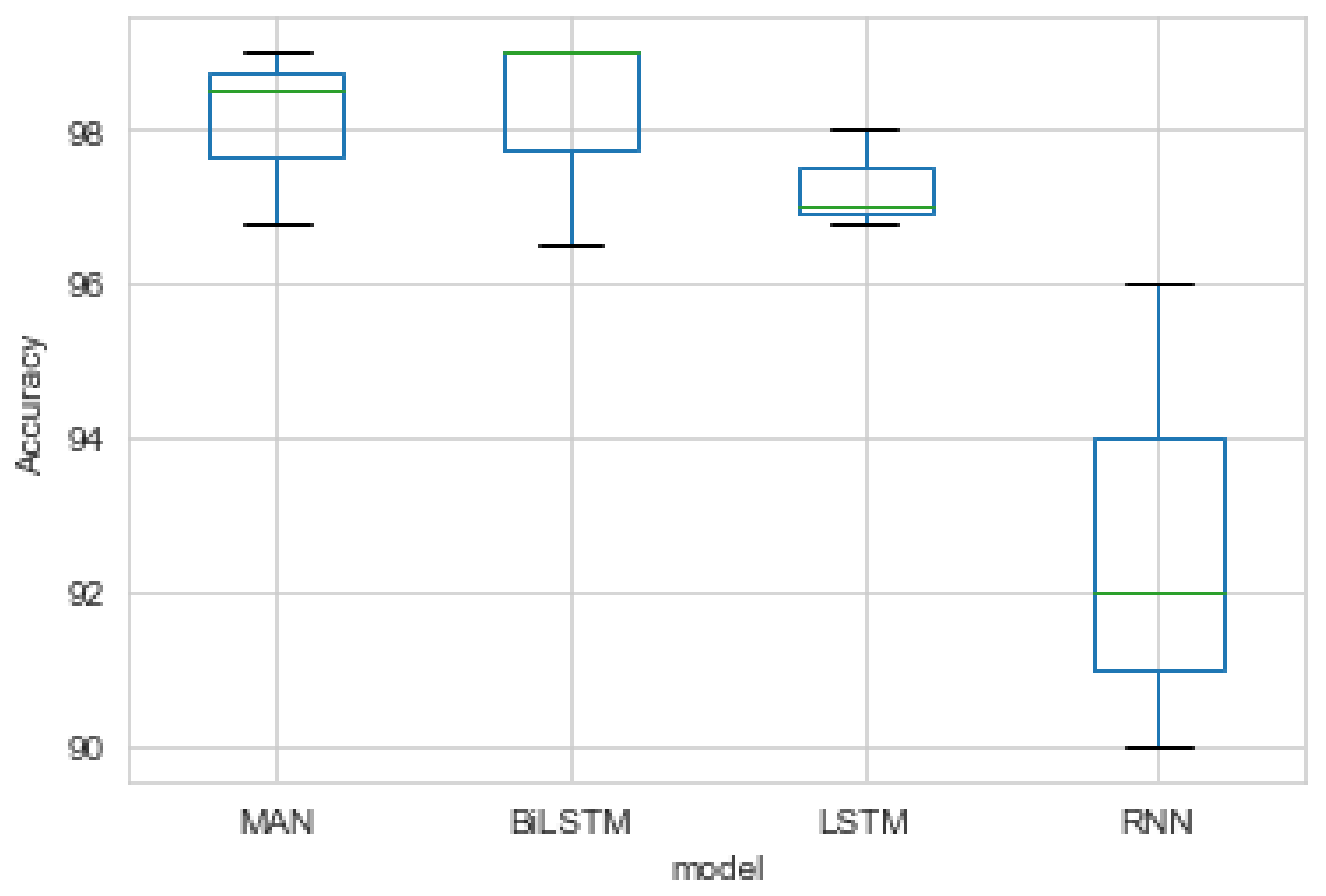

5. Results and Evaluation

5.1. Results

5.2. Evaluation with Recurrent Models

6. Conclusions and Future Works

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

1. Rambabu, M.; Gupta, S.; Singh, R.S. Data Mining in Cloud Computing: Survey. In Innovations in Computational Intelligence and Computer Vision; Advances in Intelligent Systems and Computing; Sharma, M.K., Dhaka, V.S., Perumal, T., Dey, N., Tavares, J.M.R.S., Eds.; Springer: Singapore, 2021; Volume 1189.

2. El Kafhali, S.; El Mir, I.; Hanini, M. Security Threats, Defense Mechanisms, Challenges, and Future Directions in Cloud Computing. Arch. Comput. Methods Eng. 2021, 1–24.

3. Kuyoro, S.O.; Ibikunle, F.; Awodele, O. Cloud Computing Security Issues and Challenges. Int. J. Comput. Netw. 2011, 3, 247–255.

4. Sabahi, F. Cloud computing security threats and responses. In Proceedings of the 2011 IEEE 3rd International Conference on Communication Software and Networks, Xi’an, China, 27–29 May 2011; pp. 245–249.

5. Liagkou, V.; Kavvadas, V.; Chronopoulos, S.K.; Tafiadis, D.; Christofilakis, V.; Peppas, K.P. Attack Detection for Healthcare Monitoring Systems Using Mechanical Learning in Virtual Private Networks over Optical Transport Layer Architecture. Computation 2019, 7, 24.

6. Singh, P.; Rehman, S.U.; Manickam, S. Comparative Analysis of State-of-the-Art EDoS Mitigation Techniques in Cloud Computing Environment. arXiv 2019, arXiv:1905.13447.

7. Zargar, S.T.; Joshi, J.; Tipper, D. A Survey of Defense Mechanisms Against Distributed Denial of Service (DDoS) Flooding Attacks. IEEE Commun. Surv. Tutor. 2013, 15, 2046–2069.

8. Shawahna, A.; Abu-Amara, M.; Mahmoud, A.S.H.; Osais, Y. EDoS-ADS: An Enhanced Mitigation Technique Against Economic Denial of Sustainability (EDoS) Attacks. IEEE Trans. Cloud Comput. 2020, 8, 790–804.

9. Ghanem, K.; Aparicio-Navarro, F.J.; Kyriakopoulos, K.G.; Lambotharan, S.; Chambers, J.A. Support Vector Machine for Network Intrusion and Cyber Attack Detection. In Proceedings of the 2017 Sensor Signal Processing for Defence Conference (SSPD), London, UK, 6–7 December 2017; pp. 1–5.

10. Phan, T.V.; Park, M. Efficient Distributed Denial-of-Service Attack Defense in SDN-Based Cloud. IEEE Access 2019, 7, 18701–18714.

11. Yin, C.; Zhu, Y.; Fei, J.; He, X. A Deep Learning Approach for Intrusion Detection Using Recurrent Neural Networks. IEEE Access 2017, 5, 21954–21961.

12. Liang, X.; Znati, T. A Long Short-Term Memory Enabled Framework for DDoS Detection. In Proceedings of the 2019 IEEE Global Communications Conference (GLOBECOM), Waikoloa, HI, USA, 9–13 December 2019; pp. 1–6.

13. Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, L.; Polosukhin, I.I. Attention Is All You Need. arXiv 2017, arXiv:1706.03762.

14. Dehghani, M.; Gouws, S.; Vinyals, O.; Uszkoreit, J.; Kaiser, L. Universal Transformers. arXiv 2019, arXiv:1807.03819.

15. Al-Haidari, F.; Salah, K.; Sqalli, M.; Buhari, S.M. Performance Modeling and Analysis of the EDoS-Shield Mitigation. Arab. J. Sci. Eng. 2017, 42, 793–804.

16. Khor, S.H.; Nakao, A. Spow On-Demand Cloud-based EDDoS Mitigation Mechanism. In Proceedings of the 5th Workshop on Hot Topics in System Dependability, Lisbon, Portugal, 29 June 2009; pp. 1–6.

17. Masood, M.; Anwar, Z.; Raza, S.A.; Hur, M.A. EDoS Armor: A cost effective economic denial of sustainability attack mitigation framework for e-commerce applications in cloud environments. In Proceedings of the INMIC, Lahore, Pakistan, 19–20 December 2013; pp. 37–42.

18. Chowdhury, F.Z.; Idris, M.Y.I.; Kiah, L.M.; Manazir Ahsan, A.M. EDoS eye: A game theoretic approach to mitigate economic denial of sustainability attack in cloud computing. In Proceedings of the 2017 IEEE 8th Control and System Graduate Research Colloquium (ICSGRC), Shah Alam, Malaysia, 4–5 August 2017; pp. 164–169.

19. Shaaban, A.R.; Abd-Elwanis, E.; Hussein, M. DDoS attack detection and classification via Convolutional Neural Network (CNN). In Proceedings of the 2019 Ninth International Conference on Intelligent Computing and Information Systems (ICICIS), Cairo, Egypt, 8–10 December 2019; pp. 233–238.

20. Li, Y.; Lu, Y. LSTM-BA: DDoS Detection Approach Combining LSTM and Bayes. In Proceedings of the 2019 Seventh International Conference on Advanced Cloud and Big Data (CBD), Suzhou, China, 21–22 September 2019; pp. 180–185.

21. Dinh, P.T.; Park, M. Dynamic Economic-Denial-of-Sustainability (EDoS) Detection in SDN-based Cloud. In Proceedings of the 2020 Fifth International Conference on Fog and Mobile Edge Computing (FMEC), Paris, France, 20–23 April 2020; pp. 62–69.

22. Roy, B.; Cheung, H. A Deep Learning Approach for Intrusion Detection in Internet of Things using Bi-Directional Long Short-Term Memory Recurrent Neural Network. In Proceedings of the 2018 28th International Telecommunication Networks and Applications Conference (ITNAC), Sydney, Australia, 21–23 November 2018; pp. 1–6.

23. Dong, S.; Abbas, K.; Jain, R. A Survey on Distributed Denial of Service (DDoS) Attacks in SDN and Cloud Computing Environments. IEEE Access 2019, 7, 80813–80828.

24. Monge, M.A.S.; Vidal, J.M.; Perez, G.M. Detection of economic denial of sustainability (EDoS) threats in self-organizing networks. Comput. Commun. 2019, 145, 248–308.

25. Geetha, K.; Sreenath, N. SYN flooding attack - Identification and analysis. In Proceedings of the International Conference on Information Communication and Embedded Systems (ICICES2014), Chennai, India, 27–28 February 2014; pp. 1–7.

26. Boro, D.; Basumatary, H.; Goswami, T.; Bhattacharyya, D.K. UDP Flooding Attack Detection Using Information Metric Measure. In Proceedings of International Conference on ICT for Sustainable Development; Advances in Intelligent Systems and Computing; Satapathy, S., Joshi, A., Modi, N., Pathak, N., Eds.; Springer: Singapore, 2016; Volume 408.

27. Open vSwitch. Available online: https://www.openvswitch.org/ (accessed on 9 August 2016).

28. VirtualBox Oracle. Available online: https://www.virtualbox.org/ (accessed on 13 December 2012).

29. Sqalli, M.H.; Al-Haidari, F.; Salah, K. EDoS-Shield - A Two-Steps Mitigation Technique against EDoS Attacks in Cloud Computing. In Proceedings of the 2011 Fourth IEEE International Conference on Utility and Cloud Computing, Melbourne, Australia, 5–8 December 2011; pp. 49–56.

30. BoNeSi, The DDoS Botnet Simulator. 2015. Available online: https://github.com/Markus-Go/bonesi (accessed on 2 December 2018).

31. Moustafa, N.; Slay, J. UNSW-NB15: A comprehensive dataset for network intrusion detection systems (UNSW-NB15 network dataset). In Proceedings of the 2015 Military Communications and Information Systems Conference (MilCIS), Canberra, Australia, 10–12 November 2015; pp. 1–6.

32. Moustafa, N.; Slay, J. The evaluation of Net-work Anomaly Detection Systems: Statistical analysis of the UNSW-NB15 dataset and the comparison with the KDD99 dataset. Inf. Secur. J. Glob. Perspect. 2016, 25, 18–31.

33. Tavallaee, M.; Bagheri, E.; Lu, W.; Ghorbani, A. A Detailed Analysis of the KDD CUP 99 DataSet. In Proceedings of the 2009 IEEE Symposium on Computational Intelligence for Security and Defense Applications (CISDA), Ottawa, ON, Canada, 8–10 July 2009; pp. 1–6.

34. Sharafaldin, I.; Lashkari, A.H.; Ghorbani, A.A. Toward Generating a New Intrusion Detection Dataset and Intrusion Traffic Characterization. In Proceedings of the 4th International Conference on Information Systems Security and Privacy (ICISSP), Funchal, Portugal, 22–24 January 2018.

35. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. arXiv 2015, arXiv:1512.03385.

36. Tan, M.; Iacovazzi, A.; Cheung, N.M.; Elovici, Y. A Neural Attention Model for Real-Time Network Intrusion Detection. In Proceedings of the 2019 IEEE 44th Conference on Local Computer Networks (LCN), Osnabrueck, Germany, 14–17 October 2019; pp. 291–299.

37. Liu, C.; Liu, Y.; Yan, Y.; Wang, J. An Intrusion Detection Model with Hierarchical Attention Mechanism. IEEE Access 2020, 8, 67542–67554.

| EDoS Attack | Rate (pkts/s) | Botnets | Duration (s) |
|---|---|---|---|
| TCP SYN flooding | 2000 | 100 | 3600 |
| ICMP flooding | 3000 | - | 3600 |
| UDP flooding | 3000 | - | 3600 |

Table 2. Features of the packets generated from the system and from the testing UNSW-NB15 dataset.

| Features | Description |
|---|---|
| ip_src | Source IP address |
| ip_dst | Destination IP address |
| proto | Network protocol type |
| port_src | Source port |
| port_dst | Destination port |
| dur | Flow duration |
| sttl | Source time to live |
| dttl | Destination time to live |
| time_s | Time stamp packet captured |
| length | Packet length bytes |
| spkts | Source to destination packets count |
| dpkts | Destination to source packets count |
| sload | Source bits per second |
| dload | Destination bits per second |
| stcpb | Source TCP base |
| cpu_util | CPU utilization |
| memory_usage | Memory usage |
| label | Label attack |

| Hyperparameter | Value |
|---|---|
| Number of attention heads | 8 |
| Sequence length | 500 |
| Embed and Feed forward dimension | 64 |
| Dropout | 0.1 |
| Batch size | 512 |
| Epochs | 500 |
| Optimizer | SGD |

| Metrics | MAN | BiLSTM | LSTM | RNN |
|---|---|---|---|---|
| Training time (s/epoch) | 11 | 50 | 32 | 26 |
| Predicting time (s) | 2.40 | 3.12 | 2.77 | 2.56 |
| Accuracy | 0.98 | 0.99 | 0.98 | 0.95 |
| Precision | 0.989 | 1 | 0.981 | 0.95 |
| Recall | 0.983 | 0.967 | 0.98 | 0.958 |
| F1_score | 0.986 | 0.99 | 0.978 | 0.965 |
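As a reading aid for the comparison table, the short sketch below shows how the F1 score relates to precision and recall, using the MAN column as the worked case, and how the per-epoch training times translate into speed-up factors. The function and variable names are illustrative assumptions; the numbers are copied from the table.

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall."""
    return 2 * precision * recall / (precision + recall)

# MAN column: precision 0.989, recall 0.983.
print(round(f1_score(0.989, 0.983), 3))  # 0.986

# Training time per epoch in seconds, from the table.
train_time = {"MAN": 11, "BiLSTM": 50, "LSTM": 32, "RNN": 26}
speedups = {name: round(t / train_time["MAN"], 2)
            for name, t in train_time.items() if name != "MAN"}
print(speedups)  # {'BiLSTM': 4.55, 'LSTM': 2.91, 'RNN': 2.36}
```

On these figures, MAN trains roughly 2.4 to 4.5 times faster per epoch than the recurrent baselines.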

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Ta, V.Q.; Park, M. MAN-EDoS: A Multihead Attention Network for the Detection of Economic Denial of Sustainability Attacks. Electronics 2021, 10, 2500. https://doi.org/10.3390/electronics10202500
