Abstract

Face detection, which is an effortless task for humans, is complex to perform on machines. The recent proliferation of computational resources is paving the way for rapid advancement of face detection technology. Many astutely developed algorithms have been proposed to detect faces. However, little attention has been paid to making a comprehensive survey of the available algorithms. This paper aims at providing a fourfold discussion of face detection algorithms. First, we explore a wide variety of the available face detection algorithms in five steps: history, working procedure, advantages, limitations, and use in fields other than face detection. Secondly, we include a comparative evaluation of the different algorithms within each method. Thirdly, we provide detailed comparisons among all the algorithms to give an all-inclusive outlook. Lastly, we conclude this study with several promising research directions to pursue. Earlier survey papers on face detection algorithms are limited to just technical details and popularly used algorithms. In our study, however, we cover detailed technical explanations of face detection algorithms and various recent sub-branches of neural networks. We present detailed comparisons among the algorithms, both overall and within sub-branches. We provide the strengths and limitations of these algorithms and a novel literature survey that includes their use beyond face detection.

1. Introduction

Face detection is a computer vision problem that involves finding faces in images. It is also the initial step for many face-related technologies, for instance, face verification, face modeling, head pose tracking, gender and age recognition, facial expression recognition, and many more.

Face detection is a trifling task for humans, which we can perform naturally with almost no effort. However, the task is complicated to perform via machines and requires many computationally complex steps. Recent developments in computational technologies have accelerated research in face detection. As such, many algorithms and methods for detecting faces have been proposed. Even so, little attention has been given to producing a robust and up-to-date survey of these face detection methods.

There have been some survey works on face detection methods. Ismail et al. [1] conducted a survey on face detection techniques in 2009. In their survey, four issues for face detection (size and types of database, illumination tolerance, facial expression variations, and pose variations) were addressed, along with reviews of several face detection algorithms. The algorithms reviewed were limited to principal component analysis (PCA), linear discriminant analysis (LDA), skin color, wavelet, and artificial neural network (ANN) methods. However, no comparison between the face detection techniques was provided for a global understanding of the methods. Omaima [2] reviewed more recent face detection methods in 2014. Focusing on performance evaluation, the work presented a full comparison of several algorithms along with the databases involved. However, it was limited to ANN-based methods. Among all the surveys, those by Erik and Low [3] and Ashu et al. [4] were robust and well explained. Erik and Low described face detection methods and their later updates in considerable detail. Ashu et al. followed the path of Erik and Low, adding face detection databases and application programming interfaces (APIs) for face detection. However, both works miss recent efficient face detection methods, such as sub-branches of neural networks and statistical methods. Many recent research papers closely related to our work have also attempted to review face detection algorithms [5,6,7,8,9]. Our study, by contrast, provides a more thorough review with more technical detail than these reviews and is multi-dimensional, as shown in Table 1.

Table 1.

Comparison of our work with other similar survey papers.

In this survey, we present a structured classification of the related literature. The literature on face detection algorithms is very diverse; therefore, structuring the relevant works in a systematic way is not a trivial task. The following are some of the contributions of this paper:

- Different face detection algorithms are reviewed in five parts, including history, working principle, advantages, limitations, and use in fields other than face detection.

- Many face detection algorithms are reviewed, such as various statistical and neural network approaches, which were neglected in the earlier literature but have recently gained popularity because of advances in hardware.

- Systematic differences between the algorithms within each method are shown.

- A comprehensive comparison between all the methods is presented.

- A list of research challenges in face detection with further research directions to pursue is given.

This paper is directed to anyone who wants to learn about the different branches of face detection algorithms. There is no perfect face detection algorithm. However, the comparisons in this paper will help readers choose an algorithm according to their specific problems and challenges. The description of each algorithm will aid in gaining a clear understanding of that particular process. Additionally, the history, advantages, and limitations described for each algorithm will help in deciding which algorithm is best suited to the task at hand.

Face detection is one of the most popular computer vision problems and involves finding faces in digital images. In recent times, face detection techniques have advanced from conventional computer vision techniques toward more sophisticated machine learning (ML) approaches. The main task of face detection technology is to find the region or regions of an image that contain faces. The main challenges in face detection are occlusion, illumination, and complex backgrounds. A wide variety of algorithms have been proposed to combat these challenges. The available algorithms fall into two main categories: feature-based and image-based approaches. While feature-based approaches find features (image edges, corners, and other structures well localized in two dimensions), image-based approaches depend largely on scanning the image with windows or sub-frames.

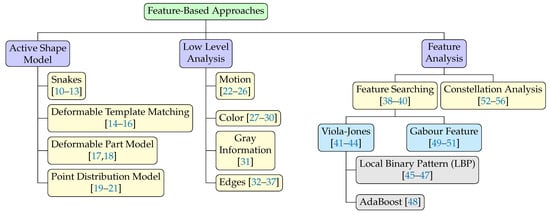

The rest of this paper is organized as follows: In Section 2, we briefly explain the feature-based approaches, as shown in Figure 1. Section 3 provides an overview of the image-based approaches. Section 4 provides a robust comparison of the face detection algorithms. Section 5 summarizes the research challenges in face detection and further research ideas to pursue. Finally, the conclusion is presented in Section 6.

Figure 1.

Feature-based approaches for face detection: These can be broadly classified into active shape model [10,11,12,13,14,15,16,17,18,19,20,21], low level analysis [22,23,24,25,26,27,28,29,30,31,32,33,34,35,36,37], and feature analysis [38,39,40,41,42,43,44,45,46,47,48,49,50,51,52,53,54,55,56]. Each of these can again be classified into several subcategories, as shown here.

2. Feature-Based Approaches

Feature-based approaches are further divided into three sub-fields, as shown in Figure 1. Active shape models deal with complex, non-rigid shapes by iteratively deforming to fit a given example. In low level analysis, segmentation is performed using pixel information and is typically more concerned with the individual components of a face. Feature analysis, on the other hand, organizes facial features into a global perspective, taking the facial geometry into account.

2.1. Active Shape Model (ASM)

ASM represents the actual physical, and thus higher level, appearance of features. When the system views an image and finds a region in close proximity to a facial feature, such as the nose or mouth, it links to that feature. The coordinates of those parts are taken as a map, from which a mask is generated. The mask can be changed manually: even after the system has decided on the shape, the user can adjust it. Training with a greater number of images yields a better map. ASMs can be classified into four groups: snakes, deformable template model (DTM), deformable part model (DPM), and point distribution model (PDM).

2.1.1. Snakes

Snakes, which are generic active contours, were first proposed by Kass et al. in 1987 [10]. Snakes are commonly used to locate head boundaries [11]. To achieve this, a snake is initialized approximately around the head region. Once released near the head, it gradually deforms to the shape of the head through the natural evolution of snakes, that is, by shrinking or expanding. An energy function captures the intuition of what makes a good segmentation; following [10], it can be written as

$$E_{\text{snake}} = E_{\text{internal}} + E_{\text{external}} \tag{1}$$

where $E_{\text{internal}}$ and $E_{\text{external}}$ are the internal and external energy functions, respectively. $E_{\text{internal}}$ depends on the shape of the curve, while $E_{\text{external}}$ depends on the image intensities (edges). The initialized snake iteratively evolves to minimize $E_{\text{snake}}$.

In the minimization of the energy function, internal energy enables the snake to shrink or expand, whereas external energy makes the curve fit nearby image edges in a state of equilibrium. Elastic energy [12] is commonly used as the internal energy, while the external energy relies on image features. The energy minimization process is computationally expensive; for faster convergence, fast iterative methods based on greedy algorithms were therefore employed in [13].
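The greedy iteration mentioned above can be illustrated with a short sketch. The code below is in the spirit of greedy snake algorithms but is not necessarily the method of [13]: each contour point moves to the position in its 3×3 neighborhood that minimizes a weighted sum of a continuity term, a curvature term, and an external edge energy. The function names, the weights `alpha`, `beta`, and `gamma`, and the unnormalized energy terms are illustrative assumptions; using the negative squared gradient magnitude as external energy is a common choice, not necessarily the one used in the cited works.

```python
import numpy as np


def edge_energy(image):
    """External energy: negative squared gradient magnitude, scaled to [-1, 0],
    so that strong edges have the lowest energy."""
    gy, gx = np.gradient(np.asarray(image, dtype=float))
    mag = gx ** 2 + gy ** 2
    return -mag / (mag.max() + 1e-12)


def greedy_snake(image, points, alpha=0.05, beta=0.05, gamma=1.0, iterations=100):
    """Greedy active contour on a closed curve of integer (row, col) points.

    Each point moves to the position in its 3x3 neighbourhood that minimises
    alpha * continuity + beta * curvature + gamma * external energy.
    """
    ext = edge_energy(image)
    h, w = ext.shape
    pts = np.array(points, dtype=int)
    n = len(pts)
    # (0, 0) first so that ties keep the point where it is.
    offsets = [(0, 0)] + [(dy, dx) for dy in (-1, 0, 1) for dx in (-1, 0, 1) if (dy, dx) != (0, 0)]
    for _ in range(iterations):
        # Mean spacing between consecutive points; the continuity term keeps points evenly spread.
        mean_d = np.mean(np.linalg.norm(pts - np.roll(pts, 1, axis=0), axis=1))
        moved = 0
        for i in range(n):
            prev_p, next_p = pts[i - 1], pts[(i + 1) % n]
            best, best_e = pts[i].copy(), np.inf
            for dy, dx in offsets:
                cand = pts[i] + (dy, dx)
                y, x = cand
                if not (0 <= y < h and 0 <= x < w):
                    continue
                e_cont = (mean_d - np.linalg.norm(cand - prev_p)) ** 2
                e_curv = float(np.sum((prev_p - 2 * cand + next_p) ** 2))  # discrete second derivative
                e = alpha * e_cont + beta * e_curv + gamma * ext[y, x]
                if e < best_e:
                    best, best_e = cand, e
            if not np.array_equal(best, pts[i]):
                pts[i] = best
                moved += 1
        if moved == 0:  # converged: no point changed position in this pass
            break
    return pts
```

For example, on a smoothly blurred bright disk, a contour initialized a few pixels outside the disk moves inward and settles near the disk boundary. Because each point moves at most one pixel per pass and only sees its immediate neighborhood, the contour must start reasonably close to the object.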

Snakes are autonomous and self-adapting in their search for a minimal energy state [57]. They also can be made sensitive to image scale by incorporating Gaussian smoothing in the image energy function [58]. Snakes are also relatively insensitive to noise and other ambiguities in the images because the integral operator used to find both the internal and external energy functions is an inherent noise filter. The snakes algorithm works efficiently in real-time scenarios [59]. Furthermore, snakes are easy to manipulate because the external image forces behave in an intuitive manner [60,61].

Snakes are generally capable of determining the boundaries of features, but they have several limitations [62]: the contours often become trapped on false image features, and they are not particularly suitable for extracting non-convex features [63]. Their accuracy is governed by the convergence criteria used in the energy minimization; higher accuracy requires tighter convergence criteria, which results in longer computation times. Snakes are also space consuming, and the Viterbi algorithm trades space for time, which means that a lot of processing time is required [64].

Mostafa et al. [65] used the snakes algorithm to extract buildings automatically from urban aerial images. Instead of the traditional way of computing the weight coefficients of the snake energy function, a user emphasis method was employed, and a combination of a genetic algorithm (GA) and snakes was used to calculate the parameters. This combination required fewer operators and improved the speed. However, detection accuracy was good only for single buildings, and the method had problems detecting building blocks. Fang et al. presented a snake model for tracking multiple objects [66]. The model can track more than one object by splitting and connecting contours; this topology-independent technique allows it to detect any number of objects without knowing their exact number in advance. Saito et al. also combined GA with snakes to detect eyeglasses in a human face [67], again using GA to find the snake parameters. The method had problems detecting asymmetric glasses.

2.1.2. Deformable Template Matching (DTM)

DTM is classified as an ASM because it actively deforms preset boundaries to fit a given face. Along with the face boundary, the extraction of other facial features, such as the eyes, mouth, eyebrows, nose, and ears, is a pivotal task in the face detection process. Yuille et al. [14] took the concept of snakes one step further in 1992 by integrating information about the eyes as a global feature for a better extraction process. The conventional template-based approach is convenient for rigid face shapes but has problems detecting faces with various shapes. DTM addresses this by adjusting to the different shapes of the face, which makes it particularly well suited to non-rigid face shapes.

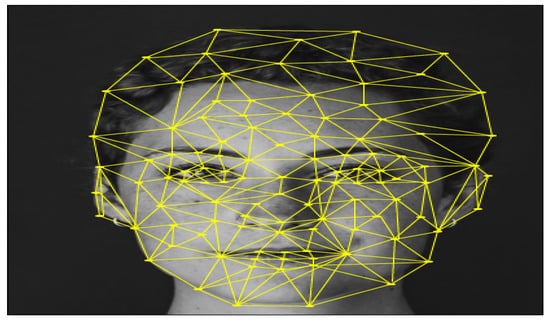

DTM works by forming deformable shapes of the face from predefined shapes [68]. The shapes can be made in two ways: (a) polygonal templates (PT) and (b) hierarchical templates (HT). In PT, as depicted in Figure 2, a face is formed from a number of triangles, each of which is deformed to warp the overall shape of the face [15]. HT, on the other hand, works by creating a tree structure, as described in Figure 3 [16].

Figure 2.

Polygonal template of human face. A face is made up of a number of triangles, each of which is warped to adjust the overall shape of the face [15].

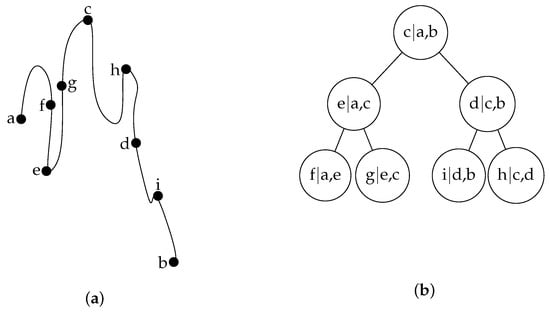

Figure 3.

Formation of binary shape tree in building HT: (a) The distance from ‘a’ to ‘b’ is marked with their middle points in the deformation process; (b) The resulting hierarchical tree of the deformation process. Each node represents a local curvature that can be used to fit locally to any given model by adding noise recursively. Hence, this deforms the initialization to the global shape of the given model [16].

Suppose that we want to find the structure of a curve from ‘a’ to ‘b’, as shown in Figure 3. A binary tree from ‘a’ to ‘b’ is built, starting by selecting the midpoint ‘c’. From ‘c’, the two halves of the curve are described recursively, and the other sub-nodes of the tree are made in the same way by finding midpoints. Every node in the tree thus describes a midpoint relative to its neighboring points, and sub-trees describe sub-curves, giving the local relative positions that capture the local curvature. By adding a little noise at every node, we can recursively reconstruct the local curvature to fit any given sample and thus fit the global shape. This deformation process minimizes, by steepest gradient descent, a combination of external and internal energies:

$$E = E_i + E_v + E_p + E_e + E_{\text{internal}} \tag{2}$$

where $E_i$, $E_v$, $E_p$, $E_e$, and $E_{\text{internal}}$ denote the energy due to image brightness, valleys, peaks, edges, and the internal energy, respectively.
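The midpoint encoding behind HT can be sketched in a few lines. This is a simplified illustration, not the implementation of [16]: it assumes the curve is given as an ordered array of 2D points from ‘a’ to ‘b’, stores at each tree node the offset of the midpoint ‘c’ from the chord midpoint of its two end points, and rebuilds the curve top-down. Optional Gaussian noise on the offsets perturbs the local curvature. The names `build_tree` and `reconstruct` are hypothetical.

```python
import numpy as np


def build_tree(curve, lo=0, hi=None):
    """Recursively encode curve[lo..hi] as a binary tree of midpoints.

    Each node stores the midpoint's offset from the midpoint of the chord
    between its two end points, i.e. a purely local relative position.
    """
    curve = np.asarray(curve, dtype=float)
    if hi is None:
        hi = len(curve) - 1
    if hi - lo < 2:
        return None  # no interior point left to encode
    mid = (lo + hi) // 2
    offset = curve[mid] - (curve[lo] + curve[hi]) / 2.0
    return {"index": mid, "offset": offset,
            "left": build_tree(curve, lo, mid),
            "right": build_tree(curve, mid, hi)}


def reconstruct(tree, a, b, n_points, noise=0.0, rng=None):
    """Rebuild a curve from its end points 'a', 'b' and the stored node offsets.

    With noise > 0, Gaussian noise is added to every offset, deforming the
    local curvature while the end points stay fixed.
    """
    if rng is None:
        rng = np.random.default_rng(0)
    out = np.full((n_points, 2), np.nan)
    out[0], out[-1] = a, b

    def fill(node, lo, hi):
        if node is None:
            return
        offset = node["offset"]
        if noise > 0:
            offset = offset + rng.normal(0.0, noise, size=2)
        out[node["index"]] = (out[lo] + out[hi]) / 2.0 + offset
        fill(node["left"], lo, node["index"])
        fill(node["right"], node["index"], hi)

    fill(tree, 0, n_points - 1)
    return out
```

With zero noise the curve is recovered exactly; with noise, the end points stay fixed while the interior deforms node by node, mirroring the recursive local deformation of HT.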

DTM combines local information with global information and thus ensures better extraction. Furthermore, it accommodates any type of shape in the given data [14] and can be employed in real time [69]. However, the weights of the energy terms are difficult to determine, and the sequential execution of the minimization process requires excessive processing time. Moreover, DTM is sensitive to the initial position; for instance, the midpoint ‘c’ must be initialized in HT.

Kluge and Lakshmanan utilized DTM in lane detection [70]. Using DTM allows the likelihood of image shape (LOIS) method to detect lanes without thresholding or edge/non-edge classification; the detection process depends wholly on intensity gradient information. However, the process was limited to a small dataset. Jolly et al. applied DTM to vehicle segmentation [71] and presented a novel likelihood probability function in which the inside and outside of the template are evaluated using directional edge-based terms. The process does not handle common vehicle types such as trucks, buses, and trailers. Moni and Ali combined DTM with GA for object detection [72]. This combination solves the problem of optimal placement of objects in DTM. A fitness term was introduced to measure how well a random deformation fits the target shape without excessive deformation of the object. However, the fitness function was found to be harder to calculate for objects with various rotations, translations, scales, or deformations.

2.1.3. Deformable Part Model (DPM)

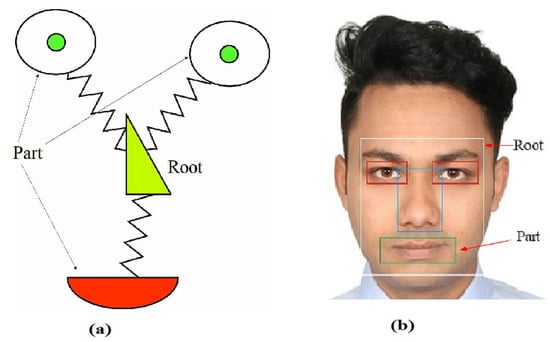

DPM uses the pictorial structure for face detection, which was first proposed by Fischler and Elschlager [73] in 1973. DPM is commonly employed to detect human faces [17,74] as well as faces in comics [18]. DPM is a training-based model. In DPM, a face mask is formed by modeling discrete parts (eyes, nose, etc.) individually, and each part is rigid. A set of geometric constraints is imposed between these parts (typically describing the distance between the eyes and nose, etc.), which can be imagined as springs, as shown in Figure 4. The intuition is that the object parts change in appearance approximately linearly in some feature space, and the springs between the parts constrain their locations to be consistent with the observed deformations. To fit the given data, the model is moved over an image and stretched in different ways, searching for a placement that does not put too much strain on the springs while explaining the image data underneath the model.
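The spring analogy can be made concrete with a small scoring function. The sketch below follows the common DPM formulation in which each part contributes its best appearance response minus a quadratic deformation (spring) cost relative to an anchor position. The brute-force search over all placements, the single `spring_weight`, and the function name are simplifying assumptions for illustration; practical DPM implementations use learned per-part deformation parameters and distance transforms for speed.

```python
import numpy as np


def dpm_score(root_score, part_maps, anchors, spring_weight=0.1):
    """Score one root placement of a pictorial-structure (DPM-style) model.

    root_score    -- appearance score of the rigid root filter at this placement
    part_maps     -- list of 2D arrays, each a part filter's response over its search area
    anchors       -- list of (row, col) ideal positions of each part (spring rest positions)
    spring_weight -- cost per squared pixel of displacement from the anchor
    """
    total = float(root_score)
    placements = []
    for resp, (ay, ax) in zip(part_maps, anchors):
        ys, xs = np.indices(resp.shape)
        deform = spring_weight * ((ys - ay) ** 2 + (xs - ax) ** 2)  # spring "pressure"
        scores = resp - deform
        best = np.unravel_index(np.argmax(scores), scores.shape)
        total += float(scores[best])
        placements.append(tuple(int(v) for v in best))
    return total, placements
```

A part whose strongest response lies close to its anchor moves there, while a distant response is ignored because the spring cost outweighs it.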

Figure 4.

Pictorial structures of a face: (a) The face mask where the part and root filters, which are rigid, are connected with geometric constraints imagined as springs [73]; (b) the pictorial structure projected onto a real human face along with a clear indication of part and root filters [17].

The pictorial structure can be divided into two parts: part filters and a root filter, as shown in Figure 4. Part filters change depending on the articulation of the face. More precisely, the parts themselves do not change; rather, the distances between the parts adapt to the given image.

DPM detects faces of various shapes well and works efficiently in real-time environments [75]. Additionally, it easily detects faces in various poses and can handle variations caused by different viewpoints and illumination [76]. However, DPM suffers from a speed bottleneck [77] and has difficulty extending to new object or face categories.

Yang and Ramanan employed DPM for object detection and pose estimation [78]. The method provides a general framework for two things: modeling co-occurrence relations between a mixture of parts, and capturing classic spatial relations that link the positions of the parts. However, the method has detection problems in images with more orientations because of its limited number of mixture components. Yan et al. proposed a multi-pedestrian detection method using DPM [79], which improves pedestrian detection in crowded environments with heavy occlusion. To handle occlusion, Yan et al. used a two-layer representation. In the first layer, DPM was employed to represent the part and global appearance of the pedestrians. This approach had problems with partially visible pedestrians, so the second layer was introduced by adding subclass weights for the body. This mixture model handles various occlusions well. In an updated paper, Yan et al. [80] addressed the problem of multi-resolution imaging in pedestrian detection with DPM. This updated model produced false positives around vehicles, which were reduced by constructing a context model based on the pedestrian–vehicle relationship.

2.1.4. Point Distribution Model (PDM)

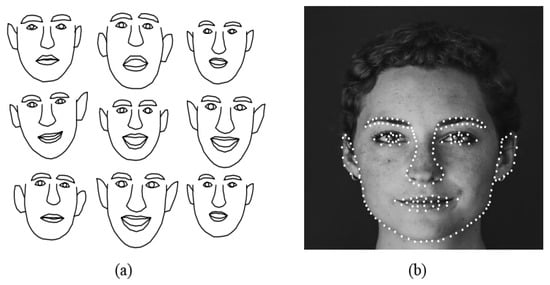

PDM is a shape description technique in which the shape of a face is described by points. PDM was first introduced by Cootes et al. [19] in 1992, while the first face PDM was devised by Lanitis et al. [20]. PDM relies on landmark points, which are annotated points placed on a given shape in the training images. The shape of a face in PDM is formed by placing landmark points on the face shapes in the training image set. The model is generally built as a global face shape that includes the eyes, ears, nose, and other facial elements, as shown in Figure 5.

Figure 5.

Global face shapes: (a) typical training global face shapes consisting of facial features, such as eyes, mouth, nose, eyebrows, and ears [20]; (b) model points projected onto training image with a face which produces the global face shapes [21].

In the training stage, a number of training samples are taken, and each image holds a number of points that build a shape for that sample. The shapes are then rigidly aligned, and their mean and covariance matrix are calculated. When fitting a PDM to a face, the mean shape is positioned near the face. A search strategy, named the gray-scale search strategy, is then used to deform the shape to the given face. During this process, the training set controls the deformation according to the variation modeled within it.
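The training and shape-generation steps can be sketched with NumPy. This is a minimal sketch under stated assumptions: alignment is simplified to removing translation and scale (full Procrustes alignment would also remove rotation), the modes of variation are the leading eigenvectors of the covariance matrix, and new shapes are generated as x = x̄ + Pb with each coefficient b_i clipped to ±3√λ_i, a limit commonly used with PDMs. The gray-scale search itself is not shown, and all function names are hypothetical.

```python
import numpy as np


def normalise(shape):
    """Centre a (k, 2) landmark shape and scale it to unit norm.

    Simplified alignment: rotation is not removed (full Procrustes would do that).
    """
    centred = np.asarray(shape, dtype=float) - np.mean(shape, axis=0)
    return centred / np.linalg.norm(centred)


def train_pdm(shapes, n_modes=2):
    """Build a point distribution model: mean shape plus principal modes of variation."""
    X = np.array([normalise(s).ravel() for s in shapes])  # (n_samples, 2k)
    mean = X.mean(axis=0)
    cov = np.cov(X, rowvar=False)
    eigvals, eigvecs = np.linalg.eigh(cov)  # eigh returns ascending eigenvalues
    order = np.argsort(eigvals)[::-1][:n_modes]
    return mean, eigvecs[:, order], eigvals[order]


def generate(mean, modes, eigvals, b):
    """x = mean + P b, with each b_i clipped to +/- 3 sqrt(lambda_i) to stay plausible."""
    limit = 3.0 * np.sqrt(np.maximum(eigvals, 0.0))
    b = np.clip(np.asarray(b, dtype=float), -limit, limit)
    return (mean + modes @ b).reshape(-1, 2)
```

Clipping the coefficients is what lets the training set "control" the deformation: shapes far outside the observed variation cannot be produced.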

PDM reduces the computation time for searching face features because it models the features while building the global face [81]. Additionally, occlusion of a face feature is less problematic, because the compact global face model lets the other facial information compensate for the occluded area [21]. PDM can be fitted to numerous face shapes and provides a compact representation of a face. However, building the training set by marking the landmarks of the face and facial features is tedious and, in many cases, error prone. Furthermore, the control point movements are restricted to straight lines, so the line of action is linear.

Edwards et al. [82] used PDM for human recognition, tracking, and detection. Variations such as pose, lighting conditions, and expressions were handled adequately. To avoid the need for a large dataset, decoupled dynamic variation models for each class were proposed, and the initial approximate decoupling was allowed to be updated during a sequence. PDM is also sometimes used for searching three-dimensional (3D) volume data. Comparisons among the different ASMs are presented in Table 2.

Table 2.

Comparison of different ASM.

2.2. Low Level Analysis (LLA)

LLA extracts the low-level descriptions that are generally available in any image. LLA is concerned neither with the type of object nor with the perspective of the viewer. An image can contain a large number of independent descriptors, such as edges and color information. For instance, in an image containing a face, the LLA descriptors would indicate where the edges of the face are, the color variations of the face and the image, and so on. Because the descriptors are associated with the image as a whole, they apply everywhere in the image, not just to the face structure. LLA can be classified into four subcategories: motion, color, gray information, and edges.

2.2.1. Motion

A sequence of consecutive image frames or a video is the primary requirement for motion-based face detection. Moving targets and objects provide valuable information that can be used to detect faces. The two leading ways to detect visual motion are moving image contours and frame variance analysis. In frame variance analysis, the moving foreground is identified against any type of background, and moving parts that contain a face are discerned by thresholding the accumulated frame difference [22,23]. Along with the face region, face features can also be extracted in this way [24,25]. Sometimes, the reference position of the eye pair is taken into account while measuring the frame difference [25]. Using moving contours yields better results than frame variance analysis [26]; McKenna et al. [26] applied a spatio-temporal Gaussian filter to detect moving face boundaries.
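Frame variance analysis can be sketched as follows: absolute differences between consecutive grayscale frames are accumulated and thresholded, and the bounding box of the moving pixels gives a candidate region that a later stage would still have to verify as a face. The threshold value and function names are illustrative assumptions, not values from [22,23].

```python
import numpy as np


def motion_mask(frames, threshold=25):
    """Accumulate absolute differences between consecutive grayscale frames
    (shape: n_frames x H x W) and threshold the result into a binary mask."""
    frames = np.asarray(frames, dtype=float)
    acc = np.zeros(frames.shape[1:])
    for prev, cur in zip(frames[:-1], frames[1:]):
        acc += np.abs(cur - prev)
    return acc > threshold


def bounding_box(mask):
    """Return (top, left, bottom, right) of the moving pixels, or None if nothing moved."""
    ys, xs = np.nonzero(mask)
    if ys.size == 0:
        return None
    return int(ys.min()), int(xs.min()), int(ys.max()), int(xs.max())
```

Note that a uniformly bright object only produces differences at its leading and trailing edges, which is one reason contour-based variants can localize moving boundaries more precisely.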

A more advanced type of motion analysis is optical flow analysis. Face detection requires taking short-range, subtle motions into account, and optical flow analysis relies on accurately estimating the apparent brightness velocity. Face motion is detected first, and this information is then used to distinguish the face [83]. Lee et al. [83] modified the algorithm introduced by Schunck [84] and proposed a line clustering algorithm in which the moving regions of a face are obtained by thresholding the image velocity.
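As an illustration of estimating apparent brightness velocity, the sketch below swaps in a single-window Lucas-Kanade least-squares estimate; it is not Schunck's algorithm or the line clustering method of Lee et al. [83]. It solves the brightness-constancy constraint I_x u + I_y v + I_t = 0 over all pixels of a window and then thresholds the speed, echoing the idea of thresholding image velocity. The speed threshold and function names are hypothetical.

```python
import numpy as np


def lucas_kanade_window(prev, cur):
    """Estimate a single velocity (u, v) for a whole window.

    Solves Ix*u + Iy*v + It = 0 over all pixels in the least-squares sense,
    where u is the column (x) velocity and v the row (y) velocity, in pixels per frame.
    """
    prev = np.asarray(prev, dtype=float)
    cur = np.asarray(cur, dtype=float)
    iy, ix = np.gradient((prev + cur) / 2.0)  # spatial derivatives along rows and columns
    it = cur - prev                           # temporal derivative between the two frames
    A = np.stack([ix.ravel(), iy.ravel()], axis=1)
    (u, v), *_ = np.linalg.lstsq(A, -it.ravel(), rcond=None)
    return float(u), float(v)


def is_moving(prev, cur, speed_threshold=0.2):
    """Threshold the estimated speed to decide whether the window contains motion."""
    u, v = lucas_kanade_window(prev, cur)
    return bool(np.hypot(u, v) > speed_threshold)
```

Because the constraint is linearized, this estimate is only reliable for small, sub-pixel to few-pixel motions, which matches the short-range motions that matter for faces.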

Motion analysis provides robust and precise tracking [25,85]. Moreover, it works on a reduced search space, as it focuses largely on movement, and it performs very well in real-time environments [86]. However, the system cannot detect the eyes if the major axis is not perpendicular to the line connecting the eye centers [22]. Additionally, beards may lead to false results.

2.2.2. Color Information

Human skin colors form a distinctive cluster in color space, which paves the way for faster face detection, mainly because color can be processed quickly. Skin color–based face detection was used by Kovac et al. in 2003 [27], Lik et al. in 2010 [28], Ban et al. in 2013 [29], Hewa et al. in 2015 [30], and many others.

Several color models are used in face detection. The most significant ones are the red, green, blue (RGB) model; the hue, saturation, intensity (HSI) model; and the luminance, in-phase, quadrature (YIQ) model. In the RGB model, every color is formed by combining the basic red, green, and blue colors, so each color has specific red, green, and blue values. To detect a face structure, the pixel values with the maximum likelihood of corresponding to a face are determined. The HSI model performs better than the other color models, giving the color clusters of facial features a larger variance. This allows HSI to be used to detect facial features such as the lips, eyes, and eyebrows. Lastly, the YIQ model works by converting RGB colors into the YIQ representation. The conversion accentuates the difference between the face and the background, which allows faces to be detected in natural environments.
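The RGB-to-YIQ conversion and a simple explicit skin rule can be sketched as below. The YIQ matrix uses the standard NTSC coefficients; the RGB rule is the uniform-daylight skin rule commonly attributed to the work of Kovac et al. [27], reproduced here as an illustrative assumption rather than a recommended detector, since such fixed thresholds are sensitive to lighting and camera differences. Function names are hypothetical.

```python
import numpy as np

# Standard NTSC RGB -> YIQ coefficients.
YIQ_MATRIX = np.array([
    [0.299, 0.587, 0.114],    # Y: luminance
    [0.596, -0.274, -0.322],  # I: in-phase chrominance
    [0.211, -0.523, 0.312],   # Q: quadrature chrominance
])


def rgb_to_yiq(rgb):
    """Convert an (..., 3) RGB array to YIQ."""
    return np.asarray(rgb, dtype=float) @ YIQ_MATRIX.T


def skin_mask_rgb(rgb):
    """Explicit RGB skin rule for uniform daylight on (..., 3) arrays of 0-255 values."""
    rgb = np.asarray(rgb, dtype=int)
    r, g, b = rgb[..., 0], rgb[..., 1], rgb[..., 2]
    spread = rgb.max(axis=-1) - rgb.min(axis=-1)
    return ((r > 95) & (g > 40) & (b > 20) & (spread > 15)
            & (np.abs(r - g) > 15) & (r > g) & (r > b))
```

A neutral gray maps to zero I and Q, so any chrominance signal in YIQ comes purely from color, which is what makes the representation useful for separating skin from background.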

Color processing is much faster than the processing of other facial features. Additionally, color orientation is invariant under certain lighting conditions. Nonetheless, color information is sensitive to luminance changes, and different cameras produce significantly different color values. For faces viewed from the side, the algorithm yields low accuracy [87].

Dong et al. [88] applied color processing to color tattoo segmentation. A skin color model was implemented in the LAB (L: lightness, A: the red/green coordinate, and B: the yellow/blue coordinate) color space. The main goal was to reduce isolated noise, which makes the color tattoo area smooth. However, the model cannot handle variations in lighting conditions. Chang and Sun proposed two novel skin color–based models for detecting hands [89]. The first model incorporates Cr information, which is more representative and less noisy than the information used by other methods. The second is built directly from certain regions on the invariant surface. This method efficiently classifies actual skin color placed on a whiteboard, but struggles to classify the different skin colors found around the world. Tan et al. [90] presented a skin color–based gesture segmentation model. The model detects the boundary points of the skin area, uses the least squares method to fit an ellipse to these border points, and finally computes an elliptical model of the skin color distribution. However, this model produces a high false acceptance rate. Huang et al. [91] proposed a skin color–based eye detection method. The method performs color conversion as its first step, which allows it to handle variable lighting conditions well. However, the approach only works at a predefined pixel size. Cosatto and Graf [92] proposed a skin color–based approach to produce an almost photo-realistic talking head animation system. Each frame was analyzed using two different algorithms: color segmentation followed by texture segmentation. In the color segmentation step, the hue range is split into background colors and hair or skin colors, with the ranges defined by manual sampling. The same features are extracted from the resulting blobs after thresholding and connected-component analysis. A combination of these texture and color models is used to locate the mouth, eyes, eyebrows, and other features. Yoo and Oh [93] presented a face segmentation method using skin color information. The model tracks human faces using a chromatic histogram and the histogram backprojection algorithm. However, the method fails to adapt to faces when the camera zooms in and out.

2.2.3. Gray Information

In grayscale images, each pixel represents only an amount of light; in other words, every pixel contains only intensity information, which is described as gray information. There are only two basic colors, black and white, with many shades of gray in between [31]. Generally, the face shape, edges, and features are darker than their surrounding regions. This contrast can be used to delineate faces and facial parts from the image background or noise.
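The observation that facial features are darker than their surroundings can be turned into a simple candidate detector. In this sketch, which is an illustrative assumption rather than a method from [31], pixels darker than the mean of their k×k neighborhood by a fixed margin are marked; the neighborhood mean is computed with an integral image, and `k` and `margin` are hypothetical parameters.

```python
import numpy as np


def to_gray(rgb):
    """Luminance-weighted grayscale using ITU-R BT.601 weights."""
    return np.asarray(rgb, dtype=float) @ np.array([0.299, 0.587, 0.114])


def local_mean(gray, k=7):
    """Mean over a k x k window (k odd) via an integral image; borders are edge-padded."""
    pad = k // 2
    p = np.pad(np.asarray(gray, dtype=float), pad, mode="edge")
    # s[i, j] = sum of p[:i, :j] (leading zero row and column added).
    s = np.pad(p.cumsum(axis=0).cumsum(axis=1), ((1, 0), (1, 0)))
    h, w = gray.shape
    total = s[k:k + h, k:k + w] - s[:h, k:k + w] - s[k:k + h, :w] + s[:h, :w]
    return total / (k * k)


def dark_regions(gray, k=7, margin=30.0):
    """Mark pixels noticeably darker than their neighbourhood (candidate eyes, brows, mouth)."""
    return gray < local_mean(gray, k) - margin
```

Comparing each pixel with its local mean, rather than with a global threshold, keeps the test meaningful when overall brightness varies across the image.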

Gray information processing is two-dimensional (2D), with one intensity value per pixel, whereas color information processing is 3D. It is therefore computationally less complex and requires less processing time. However, gray information processing is less efficient, and its signal-to-noise ratio is relatively poor.

Caoa and Huan [94] implemented gray information processing in multi-target tracking. Based on prior information, a gray level likelihood function is built and then introduced into the generalized labeled multi-Bernoulli (GLMB) algorithm. This multi-target tracking system works well in cluttered environments. Wang et al. [95] proposed a self-adaptive image enhancement algorithm based on gray scale power transformation, which solves the problem of excessive darkness or brightness in gray scale images. The main advantages of the method are sharpness improvement, adaptive brightness adjustment, and self-adaptive selection of the transformation coefficient. Liu and Ying [96] employed gray information processing in digital watermarking, using the spread spectrum principle to improve the robustness of the watermark. As a result, the model shows good robustness to arbitrary noise attacks, cutting, and Joint Photographic Experts Group (JPEG) compression. Bukhari et al. [97] presented a novel approach, based on gray information processing, for extracting curled text lines without the need for post-processing. A major challenge in text line extraction is binarization noise, and the method works well in mitigating its effect. Patel and Parmar [98] implemented gray information processing in image retrieval; their model adds color to grayscale images, and the resulting images achieve a matching pixel colorization accuracy of 92%.

2.2.4. Edge

Edge representation was one of the earliest techniques in computer vision. A sharp change in image brightness is considered an edge. Sakai et al. [32] implemented edge information in face detection in 1972. Analyzing line drawings to detect face features, and eventually the full face, was employed effectively by Sakai et al. Based on the works of Sakai et al., Craw et al. [33] developed a human head outline detection method which used a hierarchical framework. More recent applications of edge-based face detection can be found in [34,35,36,37].

The edges detected in an image are labeled and then matched against a predefined model for accurate detection. Many different filters and operators are used to detect edges in an image.

- Sobel Operator: The Sobel operator is the most commonly used operator [99,100,101]. It computes an approximation of the gradient of the image intensity function.

- Marr–Hildreth edge operator: The Marr–Hildreth edge operator [102] convolves the image with the Laplacian of Gaussian function. Zero crossings are then detected in the filtered result to obtain the edges.

- Steerable filter: The steerable filter [103] operates in three steps: edge detection, filter orientation for edge detection, and tracking of neighboring edges.
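The Sobel operator listed above can be sketched in plain Python. This is a minimal illustration, assuming a grayscale image stored as a list of lists and hypothetical function names (`sobel_magnitude`, `edge_map`); border pixels are simply left at zero instead of being padded, and a practical system would use an optimized library routine.

```python
import math
from typing import List

Image = List[List[float]]

def sobel_magnitude(img: Image) -> Image:
    """Approximate gradient magnitude of a grayscale image with the 3x3 Sobel kernels.

    Border pixels are left at 0 because the kernel does not fit there."""
    kx = [[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]]   # derivative along x (responds to vertical edges)
    ky = [[-1, -2, -1], [0, 0, 0], [1, 2, 1]]   # derivative along y (responds to horizontal edges)
    h, w = len(img), len(img[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            gx = gy = 0.0
            for dy in range(3):
                for dx in range(3):
                    p = img[y + dy - 1][x + dx - 1]
                    gx += kx[dy][dx] * p
                    gy += ky[dy][dx] * p
            out[y][x] = math.hypot(gx, gy)      # sqrt(gx^2 + gy^2)
    return out

def edge_map(img: Image, threshold: float) -> List[List[int]]:
    """Binary edge map: 1 where the gradient magnitude reaches the threshold."""
    return [[1 if m >= threshold else 0 for m in row] for row in sobel_magnitude(img)]

# Usage: a vertical step edge between dark (0) and bright (10) columns.
step = [[0, 0, 10, 10] for _ in range(4)]
print(edge_map(step, 20)[1])  # [0, 1, 1, 0]
```

Thresholding the magnitude is the simplest way to turn gradients into edges; the Marr–Hildreth operator instead looks for zero crossings of the Laplacian of Gaussian response.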

In an edge-based face detection system, a face can be detected with a minimal amount of scanning [104], and the system is relatively robust and cost effective. However, edge-based face detection is not suitable for noisy images, because it does not examine edges at all scales.

Chen et al. [105] applied edge detection to laminated wood edge cutting. Edges were detected with a Canny operator, and defects were detected using a pattern recognition method. However, the proposed model requires the position of the wood to be adjusted. Zhang and Zhao used an edge detection system for automatic video object segmentation [106]. The framework finds the moving edge of the current frame by taking into account the background edge map and the moving edge of the preceding frame. When the background is moving, however, the model produces poor segmentation. Liu and Tang employed the artificial bee colony (ABC) algorithm to search for globally optimized edge points [107]; the search for neighboring edge points is then improved on the basis of these globally optimized points. Fan et al. extended the SUSAN operator to detect edges in moving target detection [108]. After the edges are detected, the frame difference is computed to detect the moving target. The technique effectively overcomes the overlap and empty hole problems of a single detection algorithm. However, the method relies heavily on the selection of the gray threshold, binarization threshold and geometry threshold. Yousef et al. [109] employed edge detection in building conscious machines. The framework is a bio-inspired model that uses a linear summation of the decisions made by previous kernels. This summation yields much better edge detection quality at the price of a high computational cost. Table 3 lists some similarities and differences between different LLA.

Table 3.

Comparison of different LLA.

2.3. Feature Analysis (FA)

FA is based on the premise that the human face has features that can serve as detectors, that is, individual characteristics that can be located on the face. LLA sometimes detects noise (background objects) as faces; this can be resolved by analyzing high level features. Geometrical face analysis has been used extensively to recover the actual face structure, which low level analysis obtains only ambiguously. Geometric shape information can be applied in two ways: positioning facial features according to their relative positions, and using flexible face structures.

2.3.1. Feature Searching

Feature searching techniques follow a conventional strategy: the most notable facial feature is searched for first, and less notable features are then found by reference to it. In the surveyed literature, the eye pair, the face axis, the facial outline and the body below the head are among the features used as references.

Face Outline: One of the best examples of feature searching is locating a face structure by referencing the outline of the face. This algorithm, presented by De Silva et al. [38], starts by searching for the forehead of a face [33]. Once the forehead is found, it searches for the eye pair, which appears as a sudden, large variation in intensity [32]. The forehead and the eye pair are then taken as reference points, and the other, less notable facial features are searched for relative to them.

The algorithm presented by De Silva et al. was reported to have an accuracy of 82% for facial images having < faces on a plain background. It can detect faces of various races. However, the facial outline–based algorithm cannot detect faces wearing glasses or faces whose forehead is covered by hair.

Eye Reference: Jeng et al. [39] proposed taking the eye pair directly as the reference point when searching for faces in images. The algorithm first searches for possible eye pair locations in the input images, which are pre-processed binary images. Its next step is similar to that of the outline-based algorithm: it searches for other facial features, such as the mouth and nose, relative to the position of the eye pair. The facial features have distinct functions weighted by their density.

This algorithm showed an 86% detection rate. However, the image dataset had to be assembled under controlled imaging conditions [39]. Moreover, the images in the dataset had to have cluttered backgrounds for detection.

Eye Movement: Taking into account the eye movements of the normal human visual system, Herpers et al. [40] proposed GAZE. The GAZE algorithm first detects the most prominent feature in the image, which is eye movement. A rough representation is constructed using a multi-orientation Gaussian filter, and its saliency map is used to locate the most important feature, the one with maximum saliency. Next, the saliency of the extracted area is suppressed, and the next candidate area is enhanced for the following iteration. The remaining facial features are detected in later iterations.
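The iterative select-and-suppress loop described above can be illustrated with a toy sketch. It is not Herpers et al.'s GAZE implementation: it assumes a saliency map is already available (the multi-orientation Gaussian filtering that would produce it is not shown), uses a hypothetical function name `attend`, and models suppressing an attended area simply as setting a square neighborhood to minus infinity; the enhancement of the next candidate area is not modeled.

```python
from typing import List, Tuple

def attend(saliency: List[List[float]], n_fixations: int, radius: int) -> List[Tuple[int, int]]:
    """Return (row, col) positions attended to, in order of decreasing saliency.

    After each fixation, a (2*radius+1)-wide square around it is suppressed
    so that the next iteration moves on to the next candidate region."""
    sal = [row[:] for row in saliency]  # work on a copy; the caller's map is untouched
    h, w = len(sal), len(sal[0])
    fixations = []
    for _ in range(n_fixations):
        # Location with the maximum remaining saliency.
        y, x = max(((r, c) for r in range(h) for c in range(w)),
                   key=lambda rc: sal[rc[0]][rc[1]])
        fixations.append((y, x))
        # Suppress the attended neighborhood (clipped at the image border).
        for r in range(max(0, y - radius), min(h, y + radius + 1)):
            for c in range(max(0, x - radius), min(w, x + radius + 1)):
                sal[r][c] = float("-inf")
    return fixations

# Usage: two salient blobs; the stronger one is attended first.
toy_map = [[0, 0, 0, 0, 0],
           [0, 9, 0, 0, 0],
           [0, 0, 0, 0, 0],
           [0, 0, 0, 7, 0],
           [0, 0, 0, 0, 0]]
print(attend(toy_map, 2, 1))  # [(1, 1), (3, 3)]
```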

With only the first three iterations, Herpers et al. [40] were able to detect moving eyes with 98% accuracy. Different orientations and moderate illumination changes have no effect on the detection rate. As the performance results in [40] show, tilted faces and variations in face size do not affect accuracy, and the algorithm is independent of any measurements of facial features.

Feature searching can further be classified into two categories: the Viola–Jones algorithm and local binary pattern (LBP).

Viola–Jones Algorithm

Viola and Jones proposed an object detection framework in 2001 [41,42]. Although the framework can detect a diverse class of objects, its main purpose was to solve the problem of face detection, which it did quickly and with high detection accuracy. The algorithm addressed the main obstacles to real-time face detection, such as slowness and computational complexity.

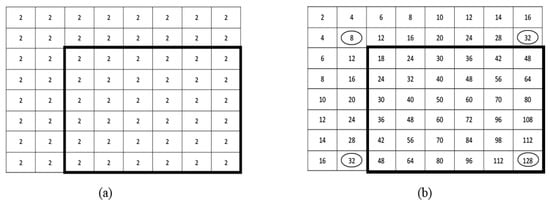

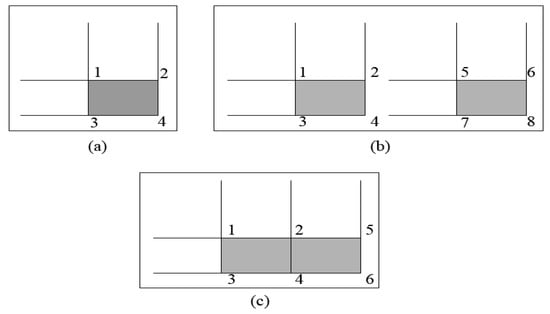

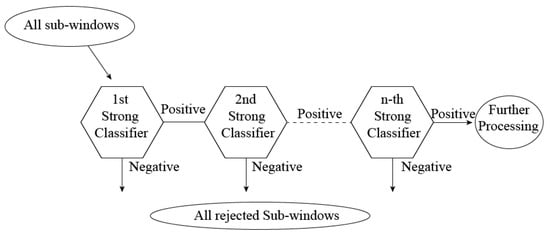

The Viola–Jones algorithm works in two stages: training and detection. In the detection stage, the image is first converted to grayscale. The algorithm then finds the face in the grayscale image using a box search over the whole image, and afterwards locates it in the color image. To search for the face in the grayscale image, Haar-like features are used [43]. All human faces share similar features, and Haar-like features exploit this similarity through three types of Haar features: edge, line and four-rectangle features. With their help, a value is calculated for each feature of the face. These values are computed very quickly from an integral image, as shown in Figure 6. The integral image is what makes the model fast, because it reduces the computational cost by reducing the number of array references, as shown in Figure 7. Next, a boosting algorithm, AdaBoost, is employed to select a small number of prominent features from a large set, which makes detection efficient. A simplified version was described by Viola and Jones in 2003 [44]. Finally, a cascaded classifier is used to quickly reject non-face windows in which the prominent facial features selected by boosting are absent, as shown in Figure 8.

Figure 6.

Example of integral image: (a) an input image expressed with pixel values. Using the conventional method, the pixels in the rectangle sum to 72, which requires all 36 array references; (b) integral image of the input image. Using this integral image, the sum of the pixels in a rectangle with top-left pixel $(x_1, y_1)$ and bottom-right pixel $(x_2, y_2)$ is $ii(x_2, y_2) - ii(x_1 - 1, y_2) - ii(x_2, y_1 - 1) + ii(x_1 - 1, y_1 - 1)$, where the four referenced positions are shown with circles. The result is the same as the real value, 72, but uses only 4 array references instead of 36 [41].

Figure 7.

Integral image array reference: (a) the sum within the dark shaded region is computed from the integral image values at its four corners (four array references); (b) the sums within two separate dark shaded regions are each computed from their own four corners (eight array references); (c) two adjacent dark shaded regions share two corner values, so their sums need only six array references [41].
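The integral image trick in Figures 6 and 7, together with a two-rectangle Haar-like feature, can be sketched as follows. This is an illustrative sketch with hypothetical function names; it pads the integral image with a leading row and column of zeros (a common convenience, not necessarily Viola and Jones's exact indexing), so every rectangle sum needs exactly four array references.

```python
from typing import List

def integral_image(img: List[List[int]]) -> List[List[int]]:
    """ii[y][x] = sum of img[r][c] for all r < y and c < x.

    The leading row and column of zeros removes boundary checks."""
    h, w = len(img), len(img[0])
    ii = [[0] * (w + 1) for _ in range(h + 1)]
    for y in range(h):
        row_sum = 0
        for x in range(w):
            row_sum += img[y][x]                       # running sum of the current row
            ii[y + 1][x + 1] = ii[y][x + 1] + row_sum  # plus everything above it
    return ii

def rect_sum(ii: List[List[int]], x: int, y: int, w: int, h: int) -> int:
    """Sum of the w-by-h rectangle whose top-left pixel is (x, y): four array references."""
    return ii[y + h][x + w] - ii[y][x + w] - ii[y + h][x] + ii[y][x]

def haar_two_rect(ii: List[List[int]], x: int, y: int, w: int, h: int) -> int:
    """Two-rectangle (edge) Haar-like feature: left half minus right half (w must be even)."""
    half = w // 2
    return rect_sum(ii, x, y, half, h) - rect_sum(ii, x + half, y, half, h)

# Usage: a dark-to-bright vertical edge gives a strongly negative feature value.
edge = [[0, 0, 9, 9], [0, 0, 9, 9]]
print(haar_two_rect(integral_image(edge), 0, 0, 4, 2))  # -36
```

Once the integral image is built in one pass, each feature costs a constant number of lookups regardless of its size, which is why many thousands of features can be evaluated per window.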

Figure 8.

Schematic diagram of the detection cascade [41]. Strong classifiers can be different facial features, such as the mouth, eyes, etc. An image without a human mouth or other strong classifiers is surely not a human face. Hence, the window is rejected, which makes the process faster. On the other hand, if all the strong classifiers are present in an image, it is classified as a face [42].
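The early-rejection logic of the cascade in Figure 8 can be sketched as below. In this toy version, which is an assumption made for illustration, each stage is a list of (weak classifier, weight) pairs with a stage threshold, and a window is represented by a hypothetical list of precomputed feature responses; in the real Viola–Jones detector, the stages are trained with AdaBoost on Haar-like features.

```python
from typing import Callable, List, Sequence, Tuple

WeakClassifier = Callable[[Sequence[float]], int]          # returns 0 or 1
Stage = Tuple[List[Tuple[WeakClassifier, float]], float]   # (weighted weak classifiers, threshold)

def cascade_classify(window: Sequence[float], stages: List[Stage]) -> Tuple[bool, int]:
    """Run the stages in order; return (is_face, number_of_stages_evaluated)."""
    for i, (weak_list, threshold) in enumerate(stages, start=1):
        score = sum(alpha * h(window) for h, alpha in weak_list)
        if score < threshold:
            return False, i          # rejected: the remaining stages are never evaluated
    return True, len(stages)         # passed every stage: classified as a face

# Usage with two hypothetical stages: a cheap one-feature stage, then a two-feature stage.
stages = [
    ([(lambda w: 1 if w[0] > 0.5 else 0, 1.0)], 0.5),
    ([(lambda w: 1 if w[1] > 0.5 else 0, 0.7),
      (lambda w: 1 if w[2] > 0.5 else 0, 0.3)], 0.6),
]
print(cascade_classify([0.1, 0.9, 0.9], stages))  # (False, 1): rejected by the first stage
```

Because most windows in an image contain no face, the majority are discarded after one or two cheap stages, which is where the speed of the cascade comes from.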

The Viola–Jones algorithm has high detection accuracy; at the time of release, it was reported to be 95%. A recent study by Jamal et al. [110] reported 97.88% face detection accuracy. The algorithm is the most widely used for face detection because of its short computation time [111], and it is notably successful owing to its low false positive rate. At the time of release, it was about 15 times faster than existing algorithms. However, the algorithm can only detect frontal faces, and it has a very long training time. Training with a limited number of classifiers can produce far less accurate results; a number of sub-windows was provided with more attention [112].

Rahman and Zayed applied the Viola–Jones algorithm to ground penetrating radar (GPR) profiles of bridge decks [113], where it detects hyperbolic regions in GPR scans. However, the framework does not explore other clustering approaches that would suit this purpose. Huang et al. [114] proposed an improved Viola–Jones algorithm to detect faces on Microsoft HoloLens. Compared with the FACE API, local detection is 4 times faster and detection via the network 20 times faster, but it can handle a rotation of . Winarno et al. [115] built a face counter to count the faces in an image using the Viola–Jones algorithm; the model has poor accuracy on images with low light intensity. Kirana et al. [116] applied the Viola–Jones algorithm to emotion recognition in learning environments, such as schools. One drawback of the model is that it works only on forward-facing faces. Kirana et al. [117] extended this emotion recognition model [116] to Fisherface-type images. Feature extraction was performed using PCA and linear discriminant analysis and then combined with Viola–Jones for detection. Nonetheless, this combined framework is 15 times slower than the original Viola–Jones algorithm. Additionally, Hasan et al. [118] implemented the Viola–Jones algorithm for drowsiness detection, which is a main problem in the brain–computer interface paradigm. The method is based on eye detection, and drowsiness is decided from the eye state (open or closed). Saleque et al. [119] employed the Viola–Jones algorithm to detect Bengali license plates on motor vehicles. The framework detects single license plates with 100% accuracy, but its accuracy drops when detecting multiple license plates.

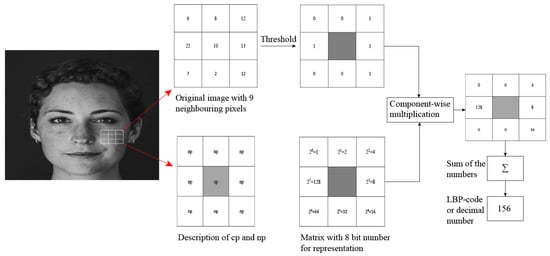

Local Binary Pattern (LBP)

LBP was originally proposed for monochrome still images and is based on texture analysis. The texture analysis model was first proposed in 1990 [45], and LBP was first described by Ojala et al. in 1994 [46]. LBP works robustly as a texture descriptor, and its performance was found to improve significantly when combined with the histogram of oriented gradients (HOG) [47]. A myriad of LBP variants have been reported, for instance, spatial temporal LBP (STLBP), LBP, center symmetric local binary patterns (CS-LBP), spatial color binary patterns (SCBP), opponent color local binary pattern (OC-LBP), double local binary pattern (DLBP), uniform local binary pattern (ULBP), local SVD binary pattern (LSVD-BP), etc. [120].

LBP examines nine pixels of an image at a time, that is, a 3 × 3 matrix, and focuses on the central pixel. It compares the central pixel (cp) with each neighboring pixel (np) and assigns 0 to the corresponding neighbor if np < cp and 1 if np ≥ cp. It then turns the eight binary values into a single byte, which corresponds to an LBP code or decimal number. This is done by multiplying the binary matrix component-wise with a matrix of eight bit weights (powers of two) and summing the results, as shown in Figure 9. This decimal number is used in the training process. We are mainly interested in edges: in the image, a transition from 1 to 0 or vice versa indicates a change in brightness, and these changes are the edge descriptors. When we look at a whole image, we look for changes in pixel values or brightness, and the edges are obtained.

Figure 9.

The process of calculating the LBP code. Every neighboring pixel is thresholded against the central pixel (np < cp gives 0, np ≥ cp gives 1) to produce the binary comparison matrix [45]. The binary matrix is then multiplied component-wise with the eight bit weight matrix [46] and summed to generate the LBP code, or decimal number representation [121].
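The LBP code computation of Figure 9 can be sketched as follows. The sketch assumes the common convention that a neighbor equal to the center contributes a 1, visits the eight neighbors clockwise from the top-left corner (the bit ordering is a convention that varies between implementations), and uses hypothetical function names; the 256-bin histogram is the kind of descriptor typically passed to a classifier.

```python
from typing import List

# Clockwise neighbor order starting at the top-left corner; neighbor k has weight 2**k.
NEIGHBORS = [(0, 0), (0, 1), (0, 2), (1, 2), (2, 2), (2, 1), (2, 0), (1, 0)]

def lbp_code(patch: List[List[int]]) -> int:
    """LBP code of a 3x3 patch: threshold neighbors against the center, weight by powers of two."""
    cp = patch[1][1]
    code = 0
    for k, (r, c) in enumerate(NEIGHBORS):
        if patch[r][c] >= cp:        # np >= cp -> bit 1; np < cp -> bit 0
            code += 2 ** k
    return code

def lbp_image(img: List[List[int]]) -> List[List[int]]:
    """LBP codes of every interior pixel of a grayscale image."""
    h, w = len(img), len(img[0])
    return [[lbp_code([row[x - 1:x + 2] for row in img[y - 1:y + 2]])
             for x in range(1, w - 1)]
            for y in range(1, h - 1)]

def lbp_histogram(img: List[List[int]]) -> List[int]:
    """256-bin histogram of LBP codes, usable as a texture descriptor."""
    hist = [0] * 256
    for row in lbp_image(img):
        for code in row:
            hist[code] += 1
    return hist

# Usage: brightening the whole patch leaves the code unchanged.
patch = [[6, 5, 2], [7, 6, 1], [9, 8, 7]]
print(lbp_code(patch), lbp_code([[v + 50 for v in row] for row in patch]))  # 241 241
```

The usage line illustrates why LBP tolerates uniform illumination changes: adding a constant shifts the center and its neighbors together, so every comparison, and hence the code, stays the same.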

LBP is tolerant of monotonic illumination changes because it only compares neighboring pixels with the central pixel: such a change shifts all of them in the same direction, so the results of the comparisons, and hence the LBP code, remain the same [122]. LBP is popular mostly for its computational simplicity and fast performance. It can detect moving objects by background subtraction, and it has high discriminating power with a low false detection rate [123]. The algorithm yields the same detection accuracy on offline images and in real-time operation [124]. However, LBP is not invariant to rotation and can have high computational complexity. It uses only the sign of pixel differences and ignores their magnitude, and it is not sensitive to minor adjustments in the image.

Rahim et al. developed a face recognition system using LBP for feature extraction [125]. The model achieves 100% accuracy but does not work in real time. Yeon [126] implemented a face detection framework using LBP features, which performed faster than the Haar-like feature extraction method. Priya et al. [121] exploited LBP's ability to describe micro-patterns to solve the problem of identical twin detection. LBP was also used for surface defect detection by Liu and Xue [127]. Their model, called gradient LBP, is an improvement of the original LBP. It uses image sub-blocks to reduce the dimensionality of the LBP data matrix and then exploits the discontinuity of pixels in the local area to determine the defect area. However, this method is affected by noise when applied to the image as a whole. Varghese et al. presented an extended version of LBP, named modified LBP (MOD-LBP), and applied it to level identification in brain MR images [128]. Comparing it with the original LBP using histogram-based features, Varghese et al. found MOD-LBP to be two times better. Moreover, LBP was used for pose estimation by Solai and Raajan [129], whose model divides the estimation into five classes: front, left, right, up and down. The pose is determined from the pitch, yaw and roll angles of the face image. Nurzynska and Smolka applied LBP to smile veracity recognition [130]. The model efficiently distinguished posed smiles from spontaneous expressions; the feature vector was calculated with uniform LBP, and a support vector machine (SVM) was used as the classifier. Zhang et al. [131] proposed a fusion approach combining histogram of oriented gradients and uniform LBP features on blocks to recognize hand gestures. In the fusion, the histogram of oriented gradients features describe the hand shape, while the LBP features represent the hand texture.
However, the fusion yields poor results for complex backgrounds, and it is slow. Furthermore, LBP was applied to script identification in handwritten documents by Rajput and Ummapure [132]. Handwritten scripts in English, Hindi, Kannada, Malayalam, Telugu and Urdu were considered, with nearest neighbor and SVM as classifiers. LBP was used to extract features from image blocks. The defined block size was pixels. However, the model did not recognize word level images.

AdaBoost

Adaptive Boosting, or AdaBoost for short, was the first practical boosting algorithm; Freund and Schapire introduced it in 1996 [48]. AdaBoost mainly targets classification and regression problems, and its main objective is to adapt to hard-to-classify samples. It works by combining weak classifiers into a strong classifier, changing the weights of the training instances as it goes. To be exact, the algorithm puts more weight on hard-to-classify instances and less on instances that are already classified correctly. This is how it develops a better-performing classifier.

AdaBoost achieves a high degree of precision and has been successful in many fields, including image processing. The algorithm can attain nearly equivalent classification results with only small adjustments. Because it combines weak classifiers, a wide variety of weak classifiers can be used to build one strong classifier [133]. However, AdaBoost requires an enormous amount of training time [134]. It can also be sensitive to noisy background images, and it currently does not support null rejection.
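The instance re-weighting described above can be sketched with decision stumps (single-feature threshold rules) as the weak classifiers. This is a minimal discrete AdaBoost for labels in {-1, +1}, written with hypothetical function names for illustration; it is not the specific variant used by Viola and Jones or by the works cited in this section.

```python
import math
from typing import List, Tuple

Stump = Tuple[float, int, float, int]   # (alpha, feature index, threshold, polarity)

def stump_predict(x: List[float], j: int, t: float, polarity: int) -> int:
    return polarity if x[j] >= t else -polarity

def train_stump(X, y, w):
    """Exhaustively pick the (feature, threshold, polarity) with the lowest weighted error."""
    best = None
    for j in range(len(X[0])):
        for t in sorted(set(x[j] for x in X)):
            for polarity in (1, -1):
                err = sum(wi for xi, yi, wi in zip(X, y, w)
                          if stump_predict(xi, j, t, polarity) != yi)
                if best is None or err < best[0]:
                    best = (err, j, t, polarity)
    return best

def adaboost(X, y, n_rounds: int) -> List[Stump]:
    n = len(X)
    w = [1.0 / n] * n                        # start with uniform instance weights
    ensemble: List[Stump] = []
    for _ in range(n_rounds):
        err, j, t, pol = train_stump(X, y, w)
        if err >= 0.5:
            break                            # weak learner no better than chance
        err = max(err, 1e-10)                # avoid division by zero for a perfect stump
        alpha = 0.5 * math.log((1 - err) / err)
        ensemble.append((alpha, j, t, pol))
        # Misclassified instances gain weight, correctly classified ones lose weight.
        w = [wi * math.exp(-alpha * yi * stump_predict(xi, j, t, pol))
             for xi, yi, wi in zip(X, y, w)]
        total = sum(w)
        w = [wi / total for wi in w]
    return ensemble

def predict(ensemble: List[Stump], x: List[float]) -> int:
    score = sum(alpha * stump_predict(x, j, t, pol) for alpha, j, t, pol in ensemble)
    return 1 if score >= 0 else -1

# Usage: the negative class sits in the middle, so no single stump separates it.
X = [[1], [2], [3], [4], [5], [6]]
y = [1, 1, -1, -1, 1, 1]
model = adaboost(X, y, 3)
print([predict(model, x) for x in X])  # [1, 1, -1, -1, 1, 1]
```

On this toy data a single stump misclassifies two points, while three boosted stumps classify all six correctly, which illustrates how weak classifiers combine into a strong one.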

Peng et al. [135] proposed two extended versions of an AdaBoost-based fault diagnosis system. One version, gentle AdaBoost, was employed in fault diagnosis for the first time by Peng et al. Gentle AdaBoost was used for binary classification, and another version, AdaBoost multi-class Hamming trees (AdaBoost.MH), was used for multi-class classification. However, neither extended version can deal with imbalanced data. Aleem et al. [136] used AdaBoost for software bug count prediction. Yadahalli and Nighot applied AdaBoost to intrusion detection [137], with the main goal of reducing false alarms and improving the detection rate. Bin and Lianwen proposed a two-stage boosting-based scheme together with a conventional distance-based classification algorithm [138] to recognize similar handwritten Chinese characters. The model was compared with AdaBoost, which outperformed it. Selvathi and Selvaraj proposed an automatic method for segmenting and classifying brain tumor tissue in magnetic resonance imaging (MRI) [139], combining random forest with a modified AdaBoost. Curvelet and wavelet transforms were employed to extract the texture of tumor tissue. However, this framework is limited to detecting brain tumors. Lu et al. [140] proposed an improved ensemble algorithm, AdaBoost–GA, a combination of AdaBoost and a genetic algorithm (GA), for classifying cancer gene expression data. The model was designed to improve the diversity of the base classifiers and the integration process. In AdaBoost–GA, Lu et al. introduced a decision group to improve the diversity of the classifiers, but the dimension of the decision group was not increased to obtain the highest accuracy.

Gabor Feature

The Gabor filter, named after Dennis Gabor, is widely used for edge detection [49]. It is a linear filter used for texture analysis of images, and Gabor features are constructed by applying Gabor filters to images [50]. Images have smooth regions interrupted by abrupt changes in contrast, called edges. These edges usually contain the most prominent information in an image and hence can indicate the presence of a face. The Fourier transform is prominent in analyzing changes, but it is not efficient at dealing with abrupt changes, because it represents signals with sine waves that oscillate over infinite time and space. Image analysis, however, needs something that can be localized in finite time and space. This is why the Gabor wavelet, a rapidly decaying oscillation with zero mean, is used [55]. To detect edges, the wavelet is placed at a random position in the image and searched over it; if no edges are detected, the wavelet is moved to a different random position [51].
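A Gabor kernel, a Gaussian envelope multiplied by a sinusoidal carrier, can be built directly from its definition. The sketch below assumes the commonly used real-valued parameterization (orientation `theta`, wavelength `lambd`, envelope width `sigma`, aspect ratio `gamma`, phase `psi`; the function names are our own), subtracts the kernel mean to enforce the zero-mean property mentioned above, and evaluates the filter response at a single pixel rather than performing the random-position search described in the text.

```python
import math
from typing import List

Kernel = List[List[float]]

def gabor_kernel(size: int, sigma: float, theta: float, lambd: float,
                 psi: float = 0.0, gamma: float = 1.0) -> Kernel:
    """Real part of a 2-D Gabor filter (size must be odd), shifted to zero mean."""
    half = size // 2
    kernel = []
    for y in range(-half, half + 1):
        row = []
        for x in range(-half, half + 1):
            # Rotate coordinates so the carrier oscillates along direction theta.
            xr = x * math.cos(theta) + y * math.sin(theta)
            yr = -x * math.sin(theta) + y * math.cos(theta)
            envelope = math.exp(-(xr ** 2 + (gamma * yr) ** 2) / (2 * sigma ** 2))
            carrier = math.cos(2 * math.pi * xr / lambd + psi)
            row.append(envelope * carrier)
        kernel.append(row)
    mean = sum(map(sum, kernel)) / (size * size)
    return [[v - mean for v in row] for row in kernel]   # zero mean: no response to flat regions

def gabor_response(img: List[List[float]], kernel: Kernel, cy: int, cx: int) -> float:
    """Correlate the kernel with the image patch centered at (cy, cx)."""
    half = len(kernel) // 2
    return sum(kernel[dy + half][dx + half] * img[cy + dy][cx + dx]
               for dy in range(-half, half + 1) for dx in range(-half, half + 1))

# Usage: stripes varying along x respond strongly to theta = 0 and weakly to theta = pi/2.
stripes = [[math.cos(2 * math.pi * x / 4) for x in range(9)] for _ in range(9)]
r0 = gabor_response(stripes, gabor_kernel(9, 2.0, 0.0, 4.0), 4, 4)
r90 = gabor_response(stripes, gabor_kernel(9, 2.0, math.pi / 2, 4.0), 4, 4)
print(round(r0, 2), round(r90, 2))
```

A bank of such kernels at several orientations and wavelengths yields the multi-scale, multi-orientation Gabor features referred to in the next paragraph.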

Using Gabor features, impressive face detection results were reported by the dynamic link architecture (DLA) [141], elastic bunch graph matching (EBGM) [142], the Gabor Fisher classifier (GFC) [143] and the AdaBoosted Gabor Fisher classifier (AGFC) [144]. Gabor feature analysis works well with magnitudes [145], and a large amount of information can be gathered from local image regions [146,147]. Gabor feature analysis is found to be invariant to rotation, illumination and scale [148,149,150,151]. However, it suffers from time complexity and image quality issues.

Li et al. [152] employed Gabor feature analysis for gait analysis. Human gait was divided into seven components, and two types of gait recognition were performed: one based on the entire gait outline and another based on certain combinations of components. Two applications were proposed on the basis of this analysis: human identification and gender recognition. However, the model cannot extract the dynamic properties of walking sequences. Zhang et al. [153] proposed an improved version of Gabor feature analysis, the local Gabor binary pattern histogram sequence (LGBPHS), for face representation and recognition. Because the approach is non-statistical, the model does not need any training. LGBPHS consists of many histograms corresponding to different face components at various orientations and scales. However, the framework does not handle pose and occlusion variations well. Cheng et al. [154] implemented Gabor feature analysis for facial expression recognition, based on Gabor wavelet phase features. Conventional Gabor transformation uses the Gabor amplitude as the feature; however, the amplitude changes only slightly with spatial location, whereas the phase changes quickly with position. The presented model therefore uses the rich texture characteristics in the phase information to recognize facial expressions. Priyadharshini et al. [155] compared Gabor and Log Gabor filters in vehicle recognition and showed that Log Gabor performs better. Yakun Zhang et al. [156] addressed parameter adjustment of the Gabor filter, with an application to finger vein detection; their model is called the adaptive learning Gabor filter. They also proposed a new texture recognition method that combines gradient descent with the convolution processing of the Gabor filter. However, the soft features available in finger veins were ignored in the process.
Han and Zhang [157] applied Gabor feature analysis to fabric defect detection. A genetic algorithm (GA) was used jointly to determine the optimal parameters of the Gabor filter from a defect-free fabric image. The model yields positive results on defects of various shapes, sizes and types. Rahman et al. applied Gabor feature analysis to detecting the pectoral muscle boundary [158]. The model tunes the Gabor filter to the direction of the muscle boundary in the region of interest (ROI) containing the pectoral muscle and then calculates the magnitude and phase responses. These responses, together with edge connection and region merging, are then used for detection.

2.3.2. Constellation Analysis

A constellation is a cluster of similar things. In constellation analysis, a group of facial features is formed to search for a face in an image [52,53]. Because the model is not rigid, it can detect faces in images with noisy backgrounds. Most of the algorithms reviewed above fail to detect faces in images with complex backgrounds; face constellation models, by using facial features, solve this problem easily.

Numerous face constellations have been proposed. We discuss three of them: statistical shape theory by Burl et al. [54], the probabilistic shape model by Yow and Cipolla [56], and graph matching. Statistical shape theory has a success rate of 84% and operates smoothly when features are missing. It properly handles problems arising from rotation, scale and translation to a certain extent; however, a significant rotation of the subject's head causes severe detection problems. The probabilistic shape model, on the other hand, reduces the detection of invalid features in noisy images and achieves 92% accuracy. It handles minor variations in viewpoint, scale and orientation, and eye glasses and missing features cause no problems. Lastly, graph matching can perform face detection in an automatic system with higher detection accuracy.

Constellation analysis has been effectively used in telecommunication [159], diagnostic monitoring [160] and in autonomous satellite monitoring [161]. The similarities and differences among different FA are epitomized in Table 4.

Table 4.

Comparison of different FA.

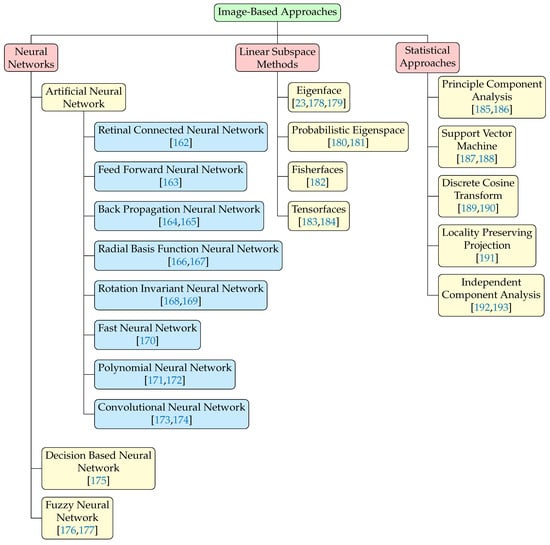

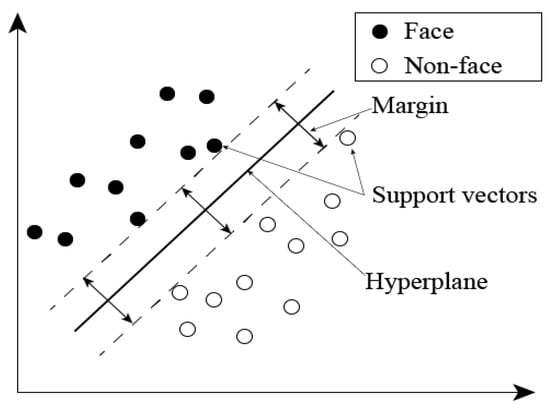

3. Image-Based Approaches

Detecting faces against more cluttered backgrounds paved the way for most image-based approaches. Most image-based face detection techniques use window-based scanning: a window is moved across the image pixel by pixel, and each window is classified as face or non-face. Methods in this category typically differ in the scanning window, step size, number of iterations and sub-sampling rate used to produce a more efficient approach. Image-based approaches are the most recent techniques in face detection and are classified into three major fields: neural networks, linear subspace methods and statistical approaches, as shown in Figure 10.
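Window-based scanning can be sketched as a generator over candidate windows. The sketch below is one common way to do it, assuming square windows, a hypothetical base size of 24 pixels, and a window that is enlarged by a scale factor on each pass instead of sub-sampling the image; `classify` stands for any hypothetical face/non-face classifier.

```python
from typing import Callable, Iterator, List, Tuple

Window = Tuple[int, int, int]  # (x, y, size) of a square window

def sliding_windows(img_w: int, img_h: int, win: int = 24, step: int = 4,
                    scale: float = 1.25) -> Iterator[Window]:
    """Yield square windows covering the image at increasing scales."""
    size = float(win)
    while size <= min(img_w, img_h):
        s = int(round(size))
        st = max(1, int(round(step * size / win)))   # step size grows with the window
        for y in range(0, img_h - s + 1, st):
            for x in range(0, img_w - s + 1, st):
                yield (x, y, s)
        size *= scale                                # next, coarser scale

def scan(img_w: int, img_h: int, classify: Callable[[Window], bool], **kwargs) -> List[Window]:
    """Keep the windows that the (hypothetical) classifier labels as faces."""
    return [w for w in sliding_windows(img_w, img_h, **kwargs) if classify(w)]

# Usage: a 32x24 image admits three 24-pixel windows at a step of 4.
print(list(sliding_windows(32, 24)))  # [(0, 0, 24), (4, 0, 24), (8, 0, 24)]
```

The step size, scale factor and base window size are exactly the knobs that, as noted above, distinguish one image-based method from another; smaller steps and scale factors find more faces at a higher computational cost.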

Figure 10.

Image-based approaches for face detection. This can be broadly classified into neural networks [162,163,164,165,166,167,168,169,170,171,172,173,174,175,176,177], linear subspace methods [23,178,179,180,181,182,183,184], and statistical approaches [185,186,187,188,189,190,191,192,193]. Each of these again can be classified into several subcategories as shown here.

3.1. Neural Network

Neural network algorithms are inspired by the human brain’s biological neural network. Neural networks take in data and train themselves to recognize the pattern (for face detection the face pattern). Then, the networks predict the output for a new set of similar faces. Neural networks can be subdivided into artificial neural network (ANN), decision-based neural network (DBNN) and fuzzy neural network (FNN).

3.1.1. Artificial Neural Network (ANN)

Like the biological brain, an ANN is based on a collection of connected nodes, called artificial neurons. Because ANNs learn patterns from data, they produce better results as more data become available. Many ANNs exist; those most commonly used in face detection are described below.

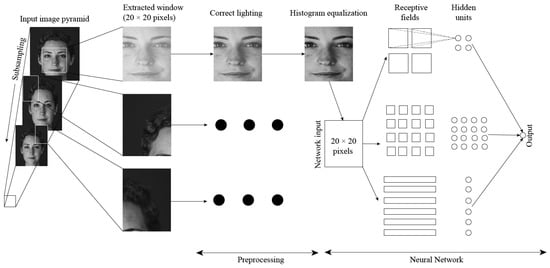

Retinal Connected Neural Network (RCNN)

A neural network based on the retinal connections of the human eye was proposed by Rowley, Baluja and Kanade in 1998 [162] and named the retinal connected neural network (RCNN). RCNN takes a small window of the main image and analyzes whether it contains a face, as shown in Figure 11. It applies a neural network–based filter to the image, and an arbitrator merges the outputs into a single node. The input image is searched exhaustively, using windows at different scales to look for face content. With the help of the arbitrator, the output node eliminates overlapping detections and combines the face features gathered from filtering.

Figure 11.

Schematic diagram of RCNN for face detection [162]. The original image is sub-sampled into small image windows. All the extracted windows undergo illumination correction and histogram equalization, and the resulting images serve as the network input. The network classifies each window as face or non-face.

RCNN can handle a wide variety of images with different poses and rotations. Depending on the arbitration heuristics or thresholds used, the method can be made more or less conservative. The algorithm reports an acceptable number of false positives. However, the procedure is complex to implement and can only detect frontal faces looking at the camera.

Feed Forward Neural Network (FFNN)

The feed forward neural network (FFNN), also known as the multi-layer perceptron, is considered the simplest form of ANN. It grew out of the perceptron, developed by Frank Rosenblatt in 1958 [163] as a model of how the brain stores and organizes information. Information, which for images is the facial feature information, moves from the input nodes to the output nodes through the hidden layers. During training, the hidden layers assign weights to the face features, as shown in Figure 12. In the detection stage, these weights are used to produce a result for a given image. FFNN can handle large tasks, but its accuracy is high only on the training samples.

Figure 12.

Schematic diagram of a three-layer FFNN for face detection [195]. Image data are fed into the network, a random weight is initialized for every face feature, and the weights are then adjusted to recognize faces.
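The one-way flow of information through a three-layer FFNN (Figure 12) can be written as a forward pass. This sketch assumes sigmoid activations, randomly initialized weights and hypothetical function names; it shows only how an input vector, for example a flattened image window, is mapped to an output score, not how the weights are trained.

```python
import math
import random
from typing import List, Tuple

Layer = Tuple[List[List[float]], List[float]]  # (weights: one row per neuron, biases)

def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))

def init_layer(n_in: int, n_out: int, rng: random.Random) -> Layer:
    """Random weights in [-1, 1] and zero biases."""
    weights = [[rng.uniform(-1.0, 1.0) for _ in range(n_in)] for _ in range(n_out)]
    return weights, [0.0] * n_out

def layer_forward(x: List[float], layer: Layer) -> List[float]:
    """Each neuron: weighted sum of its inputs plus bias, squashed by the sigmoid."""
    weights, biases = layer
    return [sigmoid(sum(w * xi for w, xi in zip(row, x)) + b)
            for row, b in zip(weights, biases)]

def ffnn_forward(x: List[float], hidden: Layer, output: Layer) -> List[float]:
    """Input -> hidden -> output; there are no feedback connections."""
    return layer_forward(layer_forward(x, hidden), output)

# Usage: four input features, three hidden neurons, one output score in (0, 1).
rng = random.Random(0)
hidden, output = init_layer(4, 3, rng), init_layer(3, 1, rng)
print(ffnn_forward([0.2, 0.8, 0.5, 0.1], hidden, output))
```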

Ertugrul et al. employed FFNN to estimate the short-term power load of a small house [194]. The proposed model is a randomized FFNN, and a small grid dataset was used for evaluation and validation. However, overall accuracy dropped because of the bias in the output layer. Chaturvedi et al. [195] applied FFNN and the Izhikevich neuron model to handwritten pattern recognition of digits and special characters. Comparing the two, they showed that by adjusting synaptic weights and threshold values, the input patterns can achieve the same firing rate. Additionally, FFNN was applied to image denoising by Saikia and Sarma [196]. Their method combines FFNN with a multi-level discrete cosine transform and handles speckle noise, a kind of multiplicative noise in images. Mikaeil et al. applied FFNN to low latency traffic estimation [197]; the framework shows significant improvements in utilization, upstream delay and packet loss. Dhanaseely et al. [164] applied FFNN and the cascade neural network (CASNN) to face recognition, using PCA for feature extraction and the Olivetti Research Lab (ORL) database. Comparing the two models after recognition, they found CASNN to be better in this scenario.

Back Propagation Neural Network (BPNN)

The origin of BPNN is disputed. Nevertheless, Steinbuch et al. proposed a learning matrix in 1963 [164], which is one of the earliest works related to BPNN [198]. A modern version was developed by Seppo Linnainmaa in 1975 [199]; this method is also known as reverse-mode automatic differentiation (RMAD). BPNN gained wide attention after Rumelhart, Hinton and Williams published their paper in 1986 [165].

BPNN implements a scheme called "learning by example". It propagates the error backward from the output toward the input and uses it to adjust the weights of the hidden layer so that the output becomes more accurate. In the training stage, a number of face features are used as input, a weight is assigned to every feature, and the network output is compared with the expected output to obtain the error. If the error is high, the weights are adjusted in the direction that reduces it, and the comparison is repeated. In this way, the weights that yield minimal error are obtained and employed in the detection stage for new images. Faces in a new image are then evaluated using these learned weights.
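The forward pass, backward error propagation and weight adjustment described above can be sketched as follows. This is a minimal example, assuming one sigmoid hidden layer, a squared-error loss and plain per-sample gradient descent; `train_bpnn`, its defaults and the toy data in the tests are hypothetical and stand in for real face features.

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def forward(w_h, w_o, x):
    """Input -> hidden -> output; the last weight of each row is a bias."""
    xb = list(x) + [1.0]
    h = [sigmoid(sum(w * v for w, v in zip(row, xb))) for row in w_h]
    hb = h + [1.0]
    y = sigmoid(sum(w * v for w, v in zip(w_o, hb)))
    return xb, h, hb, y


def train_bpnn(samples, n_hidden=3, lr=0.5, epochs=2000, seed=0):
    """Train with backpropagation; samples are (features, target in {0, 1})."""
    rng = random.Random(seed)
    n_in = len(samples[0][0])
    # Random initial weights (plus bias) for hidden and output units.
    w_h = [[rng.uniform(-1, 1) for _ in range(n_in + 1)] for _ in range(n_hidden)]
    w_o = [rng.uniform(-1, 1) for _ in range(n_hidden + 1)]
    history = []  # mean squared error per epoch
    for _ in range(epochs):
        total = 0.0
        for x, t in samples:
            xb, h, hb, y = forward(w_h, w_o, x)
            total += (y - t) ** 2
            # Output error term for the loss 0.5 * (y - t)**2 through the sigmoid.
            delta_o = (y - t) * y * (1 - y)
            # Send the error back to each hidden unit (uses w_o before the update).
            delta_h = [delta_o * w_o[j] * h[j] * (1 - h[j]) for j in range(n_hidden)]
            # Gradient-descent updates: move each weight against its gradient.
            for j in range(n_hidden + 1):
                w_o[j] -= lr * delta_o * hb[j]
            for j in range(n_hidden):
                for i in range(n_in + 1):
                    w_h[j][i] -= lr * delta_h[j] * xb[i]
        history.append(total / len(samples))
    return w_h, w_o, history


def predict(w_h, w_o, x):
    """Detection stage: reuse the learned weights on a new input."""
    return forward(w_h, w_o, x)[3]
```

Because each update only follows the local gradient, training can settle in a local minimum, which is the limitation noted in the next paragraph.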

BPNN is fast, easy to program and simple to implement. The algorithm does not need any special specification of the features of the function to be learned. BPNN is also flexible and requires no prior knowledge about the network. Furthermore, the algorithm has no input parameters except the number of inputs. However, the major disadvantage of BPNN is that it can get stuck in local minima.

Chanda et al. [200] applied BPNN to plant disease identification and classification. To counter overfitting and local optima, the framework uses BPNN to obtain the weight coefficients and particle swarm optimization (PSO) for optimization. The model implements five pre-processing steps, including resizing, contrast enhancement, green pixel masking and color model transformation. Finally, image segmentation is performed to classify the diseased portions of the plant. However, the model has difficulty choosing the initial parameter values of PSO. Yu et al. [201] applied BPNN to tooth decay diagnosis. The model takes X-ray images of the patient's teeth as input, and normalized autocorrelation coefficients are used to classify decayed and normal teeth. BPNN was also used for data pattern recognition by Dilruba et al. [202]. The model aims to find the match ratio of training patterns to testing patterns. Two kinds of match were considered: an exact match and an almost similar pattern. Li et al. used BPNN to build a ship equipment fault grade assessment model [203]. Three types of BPNN were considered: the gradient descent backpropagation algorithm, the momentum gradient descent backpropagation algorithm and the Levenberg–Marquardt backpropagation algorithm. A genetic algorithm (GA) is employed to determine the initial weight values of the neural network. A comparison of the three BPNN variants shows that Levenberg–Marquardt backpropagation outperforms the other two. Furthermore, Jaiganesh et al. employed BPNN in the analysis of an intrusion detection system [204]. The framework analyzes user behavior and classifies it as normal or attack. However, the model yields poor attack detection accuracy.

Radial Basis Function Neural Network (RBFNN)

The radial basis function neural network (RBFNN) was presented by Broomhead and Lowe in 1988 [166,205]. RBFNN is structurally similar to BPNN and comprises input, hidden and output layers. However, RBFNN is strictly limited to a single hidden layer, called the feature vector. For mapping (neuron activation), RBFNN uses the Gaussian potential function.
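The single Gaussian hidden layer described above can be sketched as below. This assumes the common RBFNN form in which each hidden unit measures the distance from the input to its centre and the output layer is a linear weighted sum; the centres, widths and weights in the tests are hypothetical.

```python
import math


def gaussian(x, center, width):
    """Gaussian radial basis: exp(-||x - c||^2 / (2 * width^2))."""
    dist_sq = sum((a - b) ** 2 for a, b in zip(x, center))
    return math.exp(-dist_sq / (2.0 * width ** 2))


def rbfnn_output(x, centers, widths, weights, bias=0.0):
    """One hidden layer of Gaussian units followed by a linear output unit."""
    # Each hidden unit responds most strongly when x is close to its centre.
    hidden = [gaussian(x, c, s) for c, s in zip(centers, widths)]
    return sum(w * h for w, h in zip(weights, hidden)) + bias
```

An input lying on a centre activates that unit fully (value 1), while distant centres contribute almost nothing, which is why the number of hidden units matters so much.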

Computations in RBFNN are relatively easy [167]. The network can be trained in two stages. It has the best approximation property [206]. It is easy to design and shows strong tolerance to input noise, online learning ability and good generalization [207]. Additionally, RBFNN has a flexible control system. However, too few neurons in the hidden layer cause the system to fail [208], while too many neurons can cause overlapping.

Karayiannis and Xiong implemented an extended version of RBFNN, named cosine RBFNN, to identify uncertainty in data classification [209]. The model was built by extending the concepts behind the design and training of quantum neural networks (QNN), which can detect uncertainty in data classification by themselves. This approach yields a learning algorithm suited to the cosine RBFNN. In data mining, RBFNN was employed by Zhou et al. [210]. A two-stage learning technique was used to speed up the learning process, and an error correlation algorithm was proposed to increase the output accuracy of the RBFNN. Static and dynamic hidden layer architectures were suggested to build a better hidden layer structure. Venkateswarlu et al. [211] applied RBFNN to speech recognition. The framework is suited to recognizing isolated words, and word recognition was performed in a speaker-dependent mode. Compared with multilayer perceptron neural networks (MLP), it improves efficiency significantly. Guangying and Yue employed RBFNN in the study of electrocardiographs [212] and introduced a new algorithm for constructing the RBFNN. The proposed model generalizes well on the given input and shows great efficiency in electrocardiogram (ECG) feature extraction. However, the model considers only two basic cardiac actions, whereas cardiac activity is far more complex.

Rotation Invariant Neural Network (RINN)

Rowley, Baluja and Kanade proposed the rotation invariant neural network (RINN) in 1997 [168]. Conventional algorithms are restricted to detecting frontal faces only, while RINN can detect faces at any angle of rotation. The RINN system consists of multiple networks. First, a router network processes each input window to estimate its orientation. The window is then rotated to an upright orientation and passed to one or more detector networks, which search it for a face.
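The router-then-detector pipeline described above can be sketched as follows. This is only an illustration: `router` and `detector` are hypothetical callables standing in for the two trained networks, and nearest-neighbour rotation about the window centre is an assumption rather than the interpolation used in the original work.

```python
import math


def rotate(window, degrees, fill=0):
    """Rotate a pixel window about its centre using nearest-neighbour lookup.

    Inverse mapping: every output pixel fetches its source pixel.
    For a square window, rotate(rotate(w, a), -a) returns w when a is a
    multiple of 90 degrees.
    """
    h, w = len(window), len(window[0])
    cy, cx = (h - 1) / 2.0, (w - 1) / 2.0
    t = math.radians(degrees)
    cos_t, sin_t = math.cos(t), math.sin(t)
    out = [[fill] * w for _ in range(h)]
    for r in range(h):
        for c in range(w):
            dy, dx = r - cy, c - cx
            sx = round(cos_t * dx + sin_t * dy + cx)
            sy = round(-sin_t * dx + cos_t * dy + cy)
            if 0 <= sy < h and 0 <= sx < w:
                out[r][c] = window[sy][sx]
    return out


def rinn_detect(window, router, detector):
    """Router estimates the window's rotation; the window is derotated, then classified."""
    angle = router(window)            # hypothetical router network
    upright = rotate(window, -angle)  # undo the estimated rotation
    return detector(upright)          # hypothetical upright-face detector
```

Splitting the task this way lets the detector be trained on upright faces only, since the router absorbs the rotation.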

RINN can handle an image at any degree of rotation and displays high classification performance [169]. Even so, RINN can learn only a limited number of features and performs well only with small training sets.

RINN has been applied to coin recognition [213] and to estimating the rotation angle of any object in an image [214]. It has also been used in pattern recognition [215].

Fast Neural Network (FNN)

The fast neural network (FNN), which reduces the computation time of the neural network, was first presented by Hazem El-Bakry et al. in 2002 [170]. FNN is very fast in computing and detecting human faces in an image plane. FNN works by dividing an image into sub-images, each of which is then searched for one or more faces using the fast ANN (FNN). High face detection speed was reported for FNN.
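The division of an image into sub-images that are each searched for a face can be sketched as a plain sliding-window scan. Here `classifier` is a hypothetical stand-in for the trained network, and the window size and stride are illustrative parameters, not values from [170].

```python
def sub_images(image, size, stride):
    """Yield (row, col, patch) for every size x size sub-image, stepping by stride."""
    h, w = len(image), len(image[0])
    for r in range(0, h - size + 1, stride):
        for c in range(0, w - size + 1, stride):
            patch = [row[c:c + size] for row in image[r:r + size]]
            yield r, c, patch


def scan(image, size, stride, classifier):
    """Return the top-left corners of sub-images the classifier labels as faces."""
    return [(r, c) for r, c, p in sub_images(image, size, stride) if classifier(p)]
```

Evaluating the network separately on every overlapping sub-image is what makes naive scanning slow, and it is the cost that FNN sets out to reduce.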

FNN reduces the number of computation steps needed to detect a face [170]. In FNN, the problem of sub-image centering and normalization in the Fourier space is solved. FNN is a high-speed neural network that uses parallel processing. Yet, FNN is reported to be computationally expensive. Besides face detection, FNN can be applied to object detection [170].
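The speed-up from working in Fourier space rests on the correlation theorem: the dot products of a neuron's weights with every sub-image equal one inverse transform of X times conj(W). The sketch below illustrates that identity in 1-D for brevity (the 2-D case applies the same identity with a 2-D transform). It assumes real-valued weights and uses a naive O(N^2) DFT only to stay dependency-free; a practical system would use an FFT, and this is not the exact formulation of [170].

```python
import cmath


def dft(x):
    n = len(x)
    return [sum(x[k] * cmath.exp(-2j * cmath.pi * m * k / n) for k in range(n))
            for m in range(n)]


def idft(X):
    n = len(X)
    return [sum(X[m] * cmath.exp(2j * cmath.pi * m * k / n) for m in range(n)) / n
            for k in range(n)]


def direct_responses(signal, weights):
    """Dot product of the weights with every window of the signal (valid positions)."""
    m = len(weights)
    return [sum(w * signal[k + i] for i, w in enumerate(weights))
            for k in range(len(signal) - m + 1)]


def fourier_responses(signal, weights):
    """Same responses via the correlation theorem: IDFT(X * conj(W))."""
    n, m = len(signal), len(weights)
    padded = list(weights) + [0.0] * (n - m)  # zero-pad weights to signal length
    X, W = dft(signal), dft(padded)
    corr = idft([a * b.conjugate() for a, b in zip(X, W)])
    # Only the first n - m + 1 lags correspond to windows that do not wrap around.
    return [v.real for v in corr[: n - m + 1]]
```

Both functions return the same responses up to floating-point error; the gain comes from computing all window responses with a few transforms instead of one dot product per window.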

Polynomial Neural Network (PNN)

Huang et al. presented the polynomial neural network (PNN)–based face detection technique in 2003 [171]. PNN was originally proposed by Ivakhnenko in 1971 [172,216] and is also known as the group method of data handling (GMDH). A GMDH neuron has two inputs and one output, which is a quadratic combination of the two inputs.
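A GMDH neuron of the kind described above can be sketched as the quadratic y = a0 + a1·x1 + a2·x2 + a3·x1·x2 + a4·x1² + a5·x2², with its six coefficients fitted by least squares. This is a minimal sketch assuming that standard quadratic form and a normal-equations fit solved by Gaussian elimination; the helper names are hypothetical and the tests use synthetic data.

```python
def features(x1, x2):
    """Quadratic terms of a two-input GMDH neuron."""
    return [1.0, x1, x2, x1 * x2, x1 ** 2, x2 ** 2]


def gmdh_neuron(x1, x2, a):
    """Output of the neuron for coefficients a = (a0, ..., a5)."""
    return sum(c * f for c, f in zip(a, features(x1, x2)))


def fit_gmdh_neuron(data):
    """Least-squares fit of the six coefficients from (x1, x2, y) triples."""
    rows = [features(x1, x2) for x1, x2, _ in data]
    ys = [y for _, _, y in data]
    n = 6
    # Augmented normal equations [A^T A | A^T y].
    m = [[sum(r[i] * r[j] for r in rows) for j in range(n)]
         + [sum(r[i] * y for r, y in zip(rows, ys))] for i in range(n)]
    # Gaussian elimination with partial pivoting.
    for col in range(n):
        pivot = max(range(col, n), key=lambda k: abs(m[k][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for k in range(col, n + 1):
                m[r][k] -= f * m[col][k]
    # Back substitution.
    a = [0.0] * n
    for i in range(n - 1, -1, -1):
        a[i] = (m[i][n] - sum(m[i][k] * a[k] for k in range(i + 1, n))) / m[i][i]
    return a
```

In a full GMDH network, many such neurons are fitted on pairs of inputs and the best ones are kept to feed the next layer.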

To detect a face, a frame that slides over the image is introduced, and the detector labels the frames that contain a face. The test image is examined at multiple scales to handle faces of various sizes; this amounts to re-scaling the input image so that faces fit a standard frame. Overlapping detections caused by the re-scaling and by multiple faces in the detection region are resolved by arbitration. Lighting is corrected with an optimal (minimum-error) plane, and the pixel intensities are then used to compose a feature vector of 368 measurements. The PNN classifier, which has a single output, labels each window as face or non-face. PCA is used to reduce the complexity and improve efficiency.
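The plane-based lighting correction mentioned above can be sketched as fitting intensity ≈ a·x + b·y + c to a window by least squares and subtracting the fitted plane. The exact formulation used by Huang et al. is not given here, so this is an assumption. On a full rectangular grid with centred coordinates the normal equations decouple, which gives closed forms for a, b and c.

```python
def correct_lighting(window):
    """Subtract the least-squares plane a*x + b*y + c from a pixel window.

    Assumes the window has at least 2 rows and 2 columns. With centred
    coordinates, the sums of x', y' and x'*y' over the grid are all zero,
    so each coefficient can be computed independently.
    """
    h, w = len(window), len(window[0])
    cy, cx = (h - 1) / 2.0, (w - 1) / 2.0
    c = sum(sum(row) for row in window) / (h * w)       # mean intensity
    sxx = sum((x - cx) ** 2 for x in range(w)) * h
    syy = sum((y - cy) ** 2 for y in range(h)) * w
    a = sum((x - cx) * window[y][x] for y in range(h) for x in range(w)) / sxx
    b = sum((y - cy) * window[y][x] for y in range(h) for x in range(w)) / syy
    # Residual: what remains after removing the linear illumination gradient.
    return [[window[y][x] - (a * (x - cx) + b * (y - cy) + c) for x in range(w)]
            for y in range(h)]
```

A window whose brightness is a pure linear gradient becomes all zeros, while local structure such as eyes or a mouth survives in the residual.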

PNN can handle images with a cluttered background. Additionally, the algorithm is reported to have a high detection rate and a low false positive rate in images with both simple and complex backgrounds. However, it is reported to suffer from overlapping detections in the output caused by re-scaling, and it can have difficulty detecting faces in an image that contains a large number of faces.

Gardner applied PNN to signal processing against a chaotic background [217]. The model generates a global prediction of the chaotic background, which is then subtracted to improve the signal. Ridge PNN (RPNN), an extended version of PNN, is a non-linear prediction model developed by Ghazali et al. [218] to forecast future patterns of financial time series. In the same paper, the model was also extended to the dynamic ridge polynomial neural network (DRPNN), which is almost identical to the feed forward RPNN except for an added feedback connection. In addition, PNN was applied to gesture learning and recognition by Zhiqi [219]. The activation function is a Chebyshev polynomial, and the weights of the neural network are obtained directly using the pseudo-inverse. This procedure reduces training time and improves precision and generalization. Furthermore, PNN was employed in the modeling of switched reluctance motors by Vejian et al. [220]. The model mathematically simulates the flux linkage and torque characteristics of switched reluctance motors. Its most appealing aspect is that it is self-adaptive and does not depend on a priori mathematical models.

Convolutional Neural Network (CNN)

There is much debate over who first presented the convolutional neural network (CNN). Despite this, most literature reviews and studies refer to papers by LeCun et al. published between 1988 and 1998 [173,221]. CNN has been used in many research works on face detection. Early contributions include the works of Lawrence in 1996 [222] and 1997 [223] and of Matsugu in 2003 [174].

CNN has a structure similar to FFNN. However, its hidden layers include convolutional layers alongside other layers, and these convolutions give CNN its name. In a convolutional layer, the input is convolved with a set of filters defined for that layer, and the result is passed to the next layer. In summary, the training stage trains the network, and the best weights (filters) are saved for detection. The network is trained with the usual backpropagation gradient descent procedure [222]. In the detection stage, the filters are scanned over the image to find patterns such as edges, shapes or colors.
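The filter scan described above can be sketched as a single convolutional layer with no padding and stride 1, followed by a ReLU. As in most CNN libraries, the sketch computes cross-correlation (the kernel is not flipped). The edge filter in the tests is a hand-picked illustration; in a trained CNN the filter values would be learned.

```python
def conv2d_valid(image, kernel):
    """Slide the kernel over the image (no padding, stride 1), as in a CNN layer.

    Computes cross-correlation, the operation CNN layers actually apply.
    """
    kh, kw = len(kernel), len(kernel[0])
    h, w = len(image), len(image[0])
    return [[sum(kernel[i][j] * image[r + i][c + j]
                 for i in range(kh) for j in range(kw))
             for c in range(w - kw + 1)]
            for r in range(h - kh + 1)]


def relu(feature_map):
    """Element-wise non-linearity commonly applied after a convolution."""
    return [[max(0, v) for v in row] for row in feature_map]
```

A filter such as `[[-1, 1]]` responds only where intensity rises from left to right, so its feature map marks vertical edges.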

For a completely new task, CNN is a very good feature extractor. Additionally, CNN shows very high computational efficiency and high accuracy. However, CNN requires a large dataset for proper training, and it is reported to be slow and to have a high computational cost.

Besides face detection, CNN was also employed by Xiao and Keung to improve bug localization [224]. Bug reports and source files were analyzed character by character rather than word by word. A character-level CNN was used to extract features, and its output was fed into a recurrent neural network (RNN) encoder–decoder. However, no fine-tuning was performed on the model. Mahajan et al. applied CNN to predict faults in gas chromatographs [225]. The model predicts a fault from abnormalities in the pattern of the gas chromatogram. Shoulder top, negative peak and good peak faults were all successfully identified. However, the dataset available for training the model was inadequate. Hu and Lee proposed a new time transition layer that models variable temporal convolution kernel depths, using an improved 3D CNN [226]. The model was further improved by adding an extended DenseNet architecture with 3D filters and pooling kernels. It also added a cost-effective method of transferring pre-trained data from a 2D CNN to a randomly initialized 3D CNN for appropriate weight initialization. Shen and Wang employed CNN for node identification in wireless networks [227]. The dimension of every node was reduced using PCA, and local features were extracted using a two-layer CNN. Stochastic gradient descent was used to optimize the model, and a softmax model produced the decision output. The model performs poorly on larger-scale networks. Shalini et al. conducted sentiment analysis of Indian languages using CNN [228]. The dataset contained Telugu and Bengali text, classified as positive, negative or neutral. The model was implemented using just one hidden layer. However, it had low cross-validation accuracy for Telugu data.

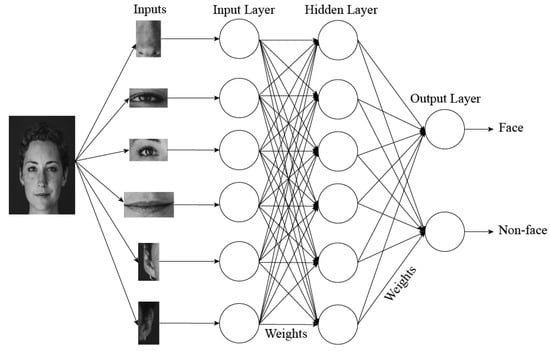

3.1.2. Decision-Based Neural Network (DBNN)

Kung et al. presented a well-known face detection algorithm based on the decision-based neural network (DBNN) in 1995 [175]. DBNN uses a static process for still images and a temporal strategy for video. In the training stage, the face pattern was aligned so that the eye plane is horizontal and the distance between the eyes is constant. A 16 × 16 pixel Sobel edge map was built from images containing either a face or a non-face and was then used as the input to the DBNN. Sub-images were processed to find face shapes, and each face pattern found was then located in the corresponding sub-image of the main frame. Across the whole image, only the sub-images found to contain a face are reported as face locations.
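The Sobel edge map used as DBNN input can be sketched as follows. This assumes the edge strength is the gradient magnitude sqrt(gx² + gy²) of the two standard 3 × 3 Sobel kernels, with border pixels dropped. Resizing to the 16 × 16 input size is not shown, and the exact preprocessing of Kung et al. may differ.

```python
import math

SOBEL_X = [[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]]    # horizontal gradient
SOBEL_Y = [[-1, -2, -1], [0, 0, 0], [1, 2, 1]]    # vertical gradient


def sobel_edge_map(image):
    """Gradient magnitude at every interior pixel (border pixels are dropped)."""
    h, w = len(image), len(image[0])
    out = []
    for r in range(1, h - 1):
        row = []
        for c in range(1, w - 1):
            gx = sum(SOBEL_X[i][j] * image[r + i - 1][c + j - 1]
                     for i in range(3) for j in range(3))
            gy = sum(SOBEL_Y[i][j] * image[r + i - 1][c + j - 1]
                     for i in range(3) for j in range(3))
            row.append(math.hypot(gx, gy))
        out.append(row)
    return out
```

Flat regions map to zero and strong intensity changes map to large values, so the map keeps face outlines while discarding absolute brightness.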

DBNN is very effective in terms of computational performance and time [229]. The hierarchical structure of DBNN provides a better understanding of structural richness. Furthermore, DBNN is reported to have high recognition accuracy and very high processing speed (less than 0.2 seconds). However, the detection rate is high only when the facial orientation is between −15 and 15 degrees.