Abstract

System identification problems are always challenging to address in applications that involve long impulse responses, especially in the framework of multichannel systems. In this context, the main goal of this review paper is to promote some recent developments that exploit decomposition-based approaches to multiple-input/single-output (MISO) system identification problems, which can be efficiently solved as combinations of low-dimension solutions. The basic idea is to reformulate such a high-dimension problem in the framework of bilinear forms, and to then take advantage of the Kronecker product decomposition and low-rank approximation of the spatiotemporal impulse response of the system. The validity of this approach is addressed in terms of the celebrated Wiener filter, by developing an iterative version with improved performance features (related to the accuracy and robustness of the solution). Simulation results support the main theoretical findings and indicate the appealing performance of these developments.

1. Introduction

Solving a system identification problem represents a key step in many important real-world applications [1,2]. In general, such a problem can be formulated in terms of estimating or modeling the parameters of an unknown system when a set of data is available, which is usually related to the input and output of the system. Depending on the particularities of the problem or application, we can deal with different types of systems, according to their numbers of inputs and outputs. The simplest formulation is the well-known single-input/single-output (SISO) system. Furthermore, some applications involve more elaborate structures, such as multiple-input/single-output (MISO) and multiple-input/multiple-output (MIMO) systems.

Linearity is an important feature of a system, since it can significantly simplify the overall identification problem. Even though many real-world systems exhibit nonlinear behavior, it is desirable to reformulate the problem so that it can be handled, at least to some extent, with a linear approach. In this context, a useful topic is related to bilinear forms, which have been addressed in the literature in different ways and contexts [3,4,5,6,7,8,9,10,11,12,13,14,15,16,17,18,19,20,21,22]; most often they are related to approximating nonlinear systems.

In general, a bilinear model can approximate a large class of nonlinear systems via a finite sum of the Volterra series expansion between the inputs and outputs of the system. Therefore, in this context, bilinear systems behave similarly (to some extent) to linear models, which further simplifies the analysis, as outlined before. Due to this simplicity, bilinear systems have been used in a wide range of applications, such as digital filter synthesis [7], prediction problems [8], channel equalization [9], echo cancellation [10], chaotic communications [16], neural networks [20], and active noise control [21]. Nevertheless, in all these frameworks, the bilinear term is defined with respect to the data, i.e., in terms of an input-output relation.

In this study, we focus on a different approach by defining the bilinear term with respect to the impulse responses of a spatiotemporal model, in the context of MISO systems. Several similar frameworks can be found in the literature, in the context of particular applications, such as channel equalization [13], nonlinear acoustic echo cancellation [15], and target detection [18]. However, most of these works were not associated with or analyzed in conjunction with bilinear forms. Usually, they were referred to as joint adaptation processes or cascaded systems, which are similar to the Hammerstein model [23].

More recently, an iterative Wiener filter for such bilinear forms was developed in the framework of a MISO system identification problem [24]. As compared to the conventional Wiener filter, the iterative version can obtain good accuracy even when only a small amount of information is available for the estimation of the statistics. Following the Wiener benchmark, another category of solutions relies on adaptive filtering [25,26]. Several adaptive filters tailored for the identification of bilinear forms have also been developed, following the main categories of algorithms. For example, the least-mean-square (LMS) and normalized LMS (NLMS) versions can be found in [27,28]. In addition, several recursive least-squares (RLS) algorithms for bilinear forms were developed in [29]. Moreover, a Kalman filter tailored for the identification of bilinear forms was proposed in [30].

In the previously mentioned approaches, the spatiotemporal impulse response of the MISO system is considered perfectly separable, and its components are combined using the Kronecker product. The identification of such linearly separable systems can be efficiently exploited in the frameworks of different applications, such as source separation [31,32], array beamforming [33,34], and object recognition [35,36]. In these contexts, the basic solution relies on the decomposition and modeling techniques of rank-1 tensors [37,38,39,40,41,42]. Nevertheless, it is highly useful to exploit the decomposition-based approach for the identification of more general forms of impulse responses.

Several recent works have followed this idea by exploiting the nearest Kronecker product decomposition and low-rank approximations [43,44,45,46,47]. In this context, the basic concept is to reformulate a high-dimension system identification problem as a combination of low-dimension solutions, thereby gaining in terms of both performance and complexity. Due to its features, this approach can be used in different practical applications (e.g., [48,49,50,51,52,53,54,55]), among which we can mention acoustic feedback cancellation, adaptive beamforming, speech dereverberation, multichannel linear prediction, and nonlinear system identification.

A unified study on the efficient identification of linear and bilinear systems exploiting the decomposition-based approach is provided in this review paper. First, in Section 2, we present different system models, in the context of linear and bilinear forms. Then, in Section 3, we show how these models are related, thereby outlining the equivalence among the systems. The ideas behind the decomposition-based approach together with the optimal low-rank approximation are presented in Section 4. Since the Wiener filter represents a benchmark tool for the system identification problems, we illustrate its behavior in Section 5, wherein we also introduce an iterative version with improved performance features. Simulation results are provided in Section 6, in order to support the main theoretical findings. Finally, several conclusions and perspectives of this study are outlined in Section 7.

2. Different Input-Output Linear/Bilinear System Models

In this study, we assume that the input, output, and noise signals are real-valued and have zero means. The most popular input-output system is the so-called SISO system given by

where denotes the desired (or reference) signal at discrete-time index k, is the system’s temporal impulse response of length L, and the superscript denotes the transpose operator. The vector

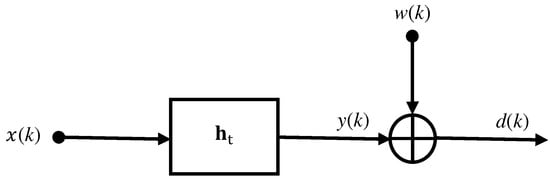

contains the most recent L samples of the input signal, ; is the additive noise; and is the linear form in . A typical assumption that can be made is that and are uncorrelated (or even independent, which is not really required if we only handle second-order statistics). We refer to (1) as the linear SISO (LSISO) system. Its general block diagram is provided in Figure 1.

Figure 1.

A general block diagram of the LSISO system from (1).

Without loss of generality, let us assume that , with . A shorter version of the input signal vector, , may be written as

As a result, we can express (2) as

from which we deduce the matrix of size :

In other terms, we have

where denotes vectorization, i.e., the operation of converting a matrix into a vector. It may also be convenient to use the inverse of the vectorization operator [40], i.e., , which is equivalent to (6). Therefore, the most straightforward bilinear system that follows from the previous development results as

where and are the first and second temporal impulse responses, of lengths and , respectively; and is now bilinear in and . We call (7) the bilinear SISO (BSISO1) system. The equivalency between the LSISO and BSISO1 systems is explained and detailed in Section 3, together with the connections among different models that are discussed in the current section.

An obvious generalization of (7) is

where and are the first and second sets of the system temporal impulse responses of lengths and , respectively. We refer to (8) as the BSISO2 system. Expression (8) can be rewritten as

where

and

is a block-diagonal matrix with diagonal blocks. We can see that is bilinear in and .

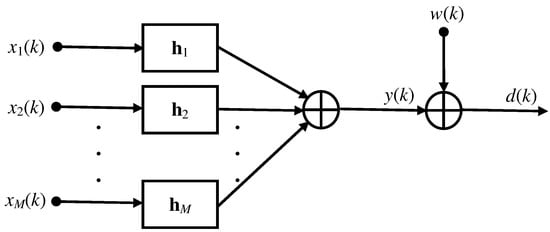

An important extension of the LSISO system in (1) is the so-called linear MISO (LMISO) system:

where M denotes the number of system inputs (or channels), are the M channel impulse responses of length L, and the vector

contains the most recent L samples of the mth () input signal, . The general block diagram of the LMISO system is provided in Figure 2. Equation (13) can be rewritten as

where

Figure 2.

A general block diagram of the LMISO system from (13).

Clearly, is linear in . Of course, the particular case of corresponds to the LSISO system.
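
The relation between the per-channel form and the stacked form of the LMISO output can be checked numerically. The following is a minimal sketch, assuming that the stacked vectors are plain concatenations of the M channel vectors; the names `h_list`, `x_list`, `h_glob`, and `x_glob` and the sizes are hypothetical and chosen only for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)
M, L = 4, 16  # illustrative sizes, not taken from the paper

# One impulse response and one input vector (at a single time index) per channel.
h_list = [rng.standard_normal(L) for _ in range(M)]
x_list = [rng.standard_normal(L) for _ in range(M)]

# Per-channel form: sum of M linear forms.
y_sum = sum(h @ x for h, x in zip(h_list, x_list))

# Stacked form: a single inner product between concatenated vectors of length M*L.
h_glob = np.concatenate(h_list)
x_glob = np.concatenate(x_list)
y_glob = h_glob @ x_glob
```

Both values agree up to rounding, which is why the multichannel problem can be treated as a single linear form of length ML.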

As in the single-channel case, let but with . We can decompose , similarly to (4), as

where

Then, we concatenate the M input signals as

where

is a vector of length . Consequently, the LMISO system in (13) or (15) can be expressed in an equivalent manner as

where (of length ) represents the spatiotemporal impulse response of the system, with the same coefficients as , resulting through simple permutations, according to the inputs.

The first bilinear MISO (BMISO1) system can be derived from the LSISO system in (1), according to (15) [24]:

where (of length L) represents the temporal impulse response of the system, , (of length M) represents the spatial impulse response of the system, and is the bilinear form in and . For , (23) is equivalent to the LSISO system in (1); this also means that the bilinear structure is lost in the single-channel particular case.

Now, from (20), we can build the matrix of size :

Then, our second bilinear MISO (BMISO2) system is derived according to (22). We get

where (of length ) is the spatiotemporal impulse response of the system, (of length ) is the system temporal impulse response, and is the bilinear form in and . For , we obtain exactly the BSISO1 system in (7).

Our third and last bilinear MISO (BMISO3) system is just an obvious generalization of (25), i.e.,

where (of length ) is the set of spatiotemporal impulse responses of the system, and (of length ) is the set of temporal impulse responses of the system. For , we get the BSISO2 in (8). Relation (26) can be rewritten as

where

and

is a block-diagonal matrix with diagonal blocks, while is bilinear in and .

3. Equivalence among Systems

In this section, we show how the different linear and bilinear systems are related. Let us start with the BSISO1 system in (7). Its bilinear term can be rewritten as

where denotes the trace of a square matrix and ⊗ is the Kronecker product [56]. With (31) in mind, comparing the BSISO1 system with the LSISO system in (1), we can clearly observe that the two systems are identical if . Therefore, in general, we can say that BSISO1 is a particular case of LSISO. In other words, BSISO1 is also an LSISO with some structure of its temporal impulse response.
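
The trace and Kronecker product identities behind this equivalence are easy to verify numerically. The sketch below assumes an input matrix of size L1 × L2, a vectorization that stacks columns, and the ordering h = h2 ⊗ h1; the variable names and sizes are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(1)
L1, L2 = 8, 4  # illustrative lengths of the two short impulse responses

h1 = rng.standard_normal(L1)
h2 = rng.standard_normal(L2)
X = rng.standard_normal((L1, L2))  # input samples arranged as a matrix

# Bilinear form in h1 and h2.
y_bilinear = h1 @ X @ h2

# Same quantity written with the trace: tr((h1 h2^T)^T X).
y_trace = np.trace(np.outer(h2, h1) @ X)

# Same quantity as a linear form: (h2 kron h1)^T vec(X), with column-stacking vec.
x = X.flatten(order="F")
h = np.kron(h2, h1)
y_linear = h @ x
```

The three numbers coincide, so a bilinear system of this type is a linear system whose impulse response of length L1·L2 is constrained to be a Kronecker product.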

Now, let us focus on the BSISO2 system in (8). Another way to express its bilinear term is

where

is a matrix of size of rank equal to in general. At the same time, the temporal impulse response of the LSISO system can be decomposed as

where are impulse responses of length each. Next, we can rewrite the linear term of the LSISO system as

where . It can be easily seen by comparing (32) and (35) that the LSISO and BSISO2 systems are equivalent.
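
A short numerical check of this equivalence, under the same assumptions as before (column-stacking vectorization, input matrix of size L1 × L2): a sum of P bilinear terms equals a linear form whose impulse response, once reshaped as a matrix, has rank at most P. The names `H1`, `H2`, and `H` are hypothetical containers for the two sets of impulse responses and for their product.

```python
import numpy as np

rng = np.random.default_rng(2)
L1, L2, P = 8, 4, 2  # illustrative sizes

H1 = rng.standard_normal((L1, P))  # columns: first set of impulse responses
H2 = rng.standard_normal((L2, P))  # columns: second set of impulse responses
X = rng.standard_normal((L1, L2))

# Sum of P bilinear terms.
y_bilinear = sum(H1[:, p] @ X @ H2[:, p] for p in range(P))

# Equivalent linear form: h = vec(H1 H2^T) = sum_p kron(h2_p, h1_p).
H = H1 @ H2.T
h = H.flatten(order="F")
y_linear = h @ X.flatten(order="F")

h_kron = sum(np.kron(H2[:, p], H1[:, p]) for p in range(P))
rank_H = np.linalg.matrix_rank(H)
```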

In the same way, we can write the bilinear term of the BMISO1 system in (23) as

Then, by comparing the previous expression with the bilinear form of the LMISO system in (15), we can see that the two are the same if . In general, BMISO1 is a particular case of LMISO.

The bilinear term of the second bilinear MISO system, i.e., BMISO2 in (25), can also be expressed as

Again, we can conclude that the BMISO2 system is a particular case of the LMISO system in (22), where .

Finally, the bilinear form of the BMISO3 system in (26) may be written as

where

is a matrix of size of rank equal to in general. At the same time, the spatiotemporal impulse response of the LMISO system in (22) can be decomposed as

where are impulse responses of length each. Next, we can rewrite the linear term of the LMISO system as

where . It can be easily seen by comparing (38) and (41) that the LMISO and BMISO3 systems are equivalent.

4. Best Approximation

The main objective in this study is to identify the LMISO system in (13) (or, equivalently, in (15) or (22)). The LSISO system is just a particular case and has been studied before. We can achieve this goal based on what is already known about bilinear forms and how they are best approximated.

Let be a real-valued vector of length L. The 2-norm or Euclidean norm of this vector is defined as

Let be a real-valued rectangular matrix of size . The Frobenius norm and the 2-norm of this matrix are, respectively,

and

Now, we can consider the impulse response of the BMISO3 system in (26); i.e., the matrix of size with (see Equation (38)). As mentioned before, this system is equivalent to the LMISO system defined by relation (22). The matrix can be factorized through the singular value decomposition (SVD):

where , of size , and , of size , are orthogonal matrices and is an rectangular diagonal matrix having on the main diagonal nonnegative real numbers. The columns of and are known as the left-singular and right-singular vectors, respectively, of , whereas the elements on the diagonal of are called singular values of with .

Based on (39) and (45), we deduce that

with , where , are the first columns of , and are the columns of . It may be easily checked that and . In addition, since (see (41)), the global impulse response can be decomposed as

However, in practical scenarios, the matrix is never really of full rank, because of the reflections and/or sparseness in the system [57,58,59,60]. Let and let us define the following matrix:

Now, the objective is to verify whether can be well approximated by . In the positive scenario, the LMISO system can be written as

where

denotes the correlated noise (considered negligible), with . Consequently, the goal becomes to identify the new matrix instead of . This new idea may have a few advantages, as is explained in the following.

Next, we state a theorem given in [61,62], which helps to prove that can be well approximated by . Let and let be the set of matrices of rank equal to . Then, the solution to the minimization problem

is given by (49). Furthermore, we have

and

Consequently, as long as the normalized misalignment,

remains very small, it is sufficient in practice to estimate the impulse responses and for .
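
The truncation described above can be sketched in a few lines. The code below is an illustration under stated assumptions rather than the authors' implementation: the impulse response is reshaped column-wise into an L1 × L2 matrix, each singular value is split as a square root between the two short impulse responses (one possible convention), and the misalignment is expressed in dB as 20·log10 of the norm ratio. The test impulse response is synthetic.

```python
import numpy as np

def nkp_truncate(h, L1, L2, P):
    """Rank-P approximation of h (length L1*L2) as sum_p kron(h2_p, h1_p)."""
    H = h.reshape(L1, L2, order="F")          # inverse of column-stacking vec
    U, s, Vt = np.linalg.svd(H, full_matrices=False)
    H1 = U[:, :P] * np.sqrt(s[:P])            # columns h1_p = sqrt(sigma_p) * u_p
    H2 = Vt[:P].T * np.sqrt(s[:P])            # columns h2_p = sqrt(sigma_p) * v_p
    h_P = sum(np.kron(H2[:, p], H1[:, p]) for p in range(P))
    return h_P, H1, H2, s

def misalignment_db(h, h_hat):
    """Normalized misalignment in dB (assumed definition)."""
    return 20 * np.log10(np.linalg.norm(h - h_hat) / np.linalg.norm(h))

# Synthetic, exponentially decaying impulse response (illustrative only).
rng = np.random.default_rng(3)
L1, L2 = 8, 8
h = rng.standard_normal(L1 * L2) * np.exp(-0.05 * np.arange(L1 * L2))

for P in (1, 2, 4):
    h_P, _, _, s = nkp_truncate(h, L1, L2, P)
    print(P, round(misalignment_db(h, h_P), 2))
```

By the low-rank approximation theorem, the Euclidean error of the truncated impulse response equals the square root of the sum of the squared discarded singular values, so inspecting the singular values is enough to choose P.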

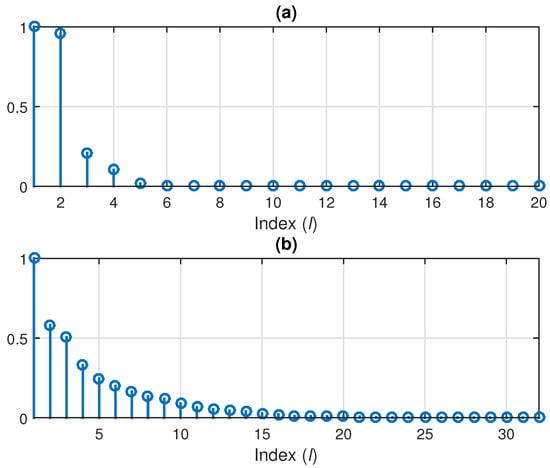

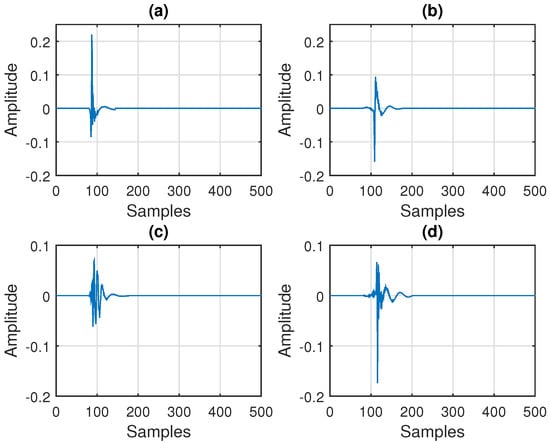

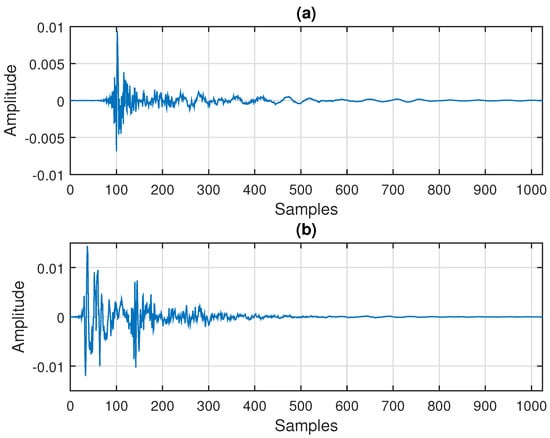

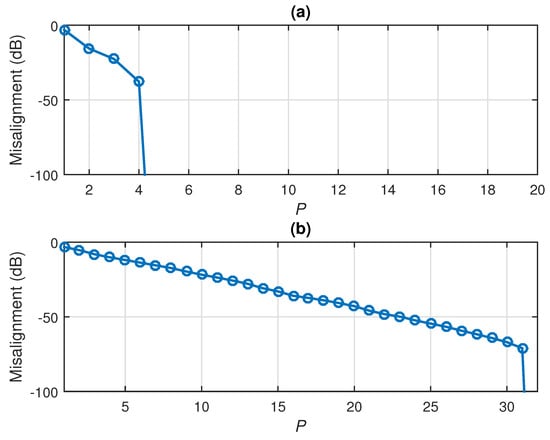

In order to show the validity of this approach, let us consider two scenarios that will also be detailed in the simulations provided in Section 6. In the first scenario, we consider impulse responses from the G168 Recommendation [63], which are network echo paths of length , as depicted in Figure 3. In this case, the decomposition was performed using and . In the second scenario, we used two acoustic impulse responses (i.e., ), each one having coefficients, as depicted in Figure 4. Here, we set for the decomposition. In both cases, we evaluate the normalized misalignment from (55) and the evolution of the singular values () of the matrix . As we can see in Figure 5, the normalized misalignment decreased with the value of P. This was much more apparent in the first scenario (corresponding to Figure 3), where the rank of resulted in , as shown in Figure 5a. Consequently, a good approximation was obtained for . In case of the acoustic impulse responses (i.e., the scenario from Figure 4), the resulting matrix was closer to being full rank, so that a larger value of P was required to obtain a good approximation. Nevertheless, as we can notice in Figure 5b, a value of P significantly lower as compared to led to reasonable attenuation of the misalignment (e.g., around dB). This behavior is also supported in Figure 6, where we can notice the decreasing trend of the singular values (which are normalized to the maximum value).

Figure 3.

Impulse responses used in the first set of simulations from Section 6 (according to the G168 Recommendation [63]), with and : (a) the first network echo path from [63], (b) the second network echo path from [63], (c) the fifth network echo path from [63], and (d) the sixth network echo path from [63].

Figure 4.

Impulse responses used in the second set of simulations from Section 6, with and : (a) right acoustic echo path and (b) left acoustic echo path.

5. Identification with the Wiener Filter

The identification of the LMISO system in (22) involves finding a real-valued filter, , of length , which estimates the system . The error signal can be defined as

where . The optimization criterion used to find the optimal filter is the mean-squared error (MSE):

where is the mathematical expectation, represents the cross-correlation vector between and , and denotes the covariance matrix of . After minimizing , the celebrated (multichannel) Wiener filter is obtained:

Since the covariance matrix in the expression above is of size , a large number of data samples (more than ) is needed in order to obtain a reliable solution.
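
As a concrete reference point, the conventional Wiener solution with sample statistics can be sketched as follows. This is a minimal single-channel illustration, assuming a white Gaussian input, time-averaged estimates of the covariance matrix and cross-correlation vector, and hypothetical helper names (`input_matrix`, `wiener_filter`); the sizes and the 30 dB SNR are arbitrary.

```python
import numpy as np

def input_matrix(x, L):
    """Row k holds [x(k), x(k-1), ..., x(k-L+1)], with zeros before k = 0."""
    N = len(x)
    xp = np.concatenate([np.zeros(L - 1), x])
    return np.stack([xp[L - 1 - i : L - 1 - i + N] for i in range(L)], axis=1)

def wiener_filter(X, d):
    """Solve R h = p using sample estimates of R (covariance) and p (cross-correlation)."""
    N = X.shape[0]
    R = X.T @ X / N
    p = X.T @ d / N
    return np.linalg.solve(R, p)

rng = np.random.default_rng(4)
L, N = 8, 5000                      # illustrative filter length and data size
h = rng.standard_normal(L)          # unknown system (synthetic)
x = rng.standard_normal(N)          # white Gaussian input
X = input_matrix(x, L)
y = X @ h
w = rng.standard_normal(N) * np.sqrt(np.mean(y**2) / 10 ** (30 / 10))  # about 30 dB SNR
d = y + w

h_w = wiener_filter(X, d)
mis_db = 20 * np.log10(np.linalg.norm(h - h_w) / np.linalg.norm(h))
```

Because the linear system solved here has the full length of the impulse response, the quality of the estimated covariance matrix (and hence the number of samples N) directly limits the accuracy when the impulse response is long.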

An alternative approach to identifying the LMISO system in (22) and estimating as in the conventional case is to identify the LMISO system in (50) and estimate . In the rest of this paper, the subscripts and are dropped in order to simplify the notation, and in this way becomes .

Next, we assume that . Consequently, can be decomposed as

where the impulse responses and have lengths and , respectively. Therefore, the filter may also be decomposed as

where the filters and have lengths and , respectively. With the relations

and

where and are the identity matrices of sizes and , respectively, (60) may be rewritten as

where

are matrices of sizes and , respectively. As a result, we may express the error signal defined in (56) in two distinct ways:

and

where

Continuing with this formulation, we can write the MSE criterion as

where

It can be noticed that the matrices and have sizes and , respectively, which can be much smaller than the size of , which is . Additionally, at least data samples are required for the estimation of the statistics in the MSE from (67) or (68), whereas in order to estimate the statistics in the conventional MSE from (57), we need at least data samples. When is fixed, we can express (67) as

and when is fixed, we can write (68) as

This represents a bilinear optimization strategy [64].

In order to obtain the optimal filters, an iterative algorithm similar to those presented in [24,43] can be derived. At iteration 0, we may take

where . Then, we may form and

By substituting the quantities above into the MSE from (69), we get at iteration 1:

which can be minimized with respect to , thereby yielding

Next, using , we can construct and

Consequently, the MSE from (70) is

The minimization of the previous expression with respect to gives

By iterating further, we obtain at iteration n

where , , , and are constructed similarly to , , , and , respectively. In the end, the Wiener filter at iteration n results as

The multichannel iterative Wiener filter is summarized in Table 1. For (i.e., single-channel case), the problem is reduced to a regular SISO scenario, and the algorithm becomes equivalent to the version developed in [43]. Additionally, if the system is perfectly separable/decomposable, we can obtain the optimal solution for . In this case, the iterative Wiener filter for bilinear forms (proposed in [24]) is obtained.

Table 1.

The multichannel iterative Wiener filter.
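
The alternating structure of the iterative Wiener filter can be sketched as below. This is an illustration under several assumptions, not a transcription of Table 1: the global impulse response is modeled as a sum of P Kronecker products kron(h2_p, h1_p), the initialization uses unit vectors for the second set of filters, and a small regularization `delta` is added before each solve. The function name and all parameter names are hypothetical. In the usage lines, the exact statistics of a unit-variance white input are used; in practice they would be replaced by sample estimates.

```python
import numpy as np

def iterative_wiener(R, p, L1, L2, P, n_iter=10, delta=1e-8):
    """Alternate between two small Wiener problems for h ~ sum_p kron(h2_p, h1_p)."""
    I1, I2 = np.eye(L1), np.eye(L2)
    # Assumed initialization: the h2_p are the first P unit vectors (linearly independent).
    H2 = np.eye(L2)[:, :P].copy()
    for _ in range(n_iter):
        # h2 fixed: h = A2 @ h1_stacked, with A2 = [kron(h2_1, I1), ..., kron(h2_P, I1)].
        A2 = np.hstack([np.kron(H2[:, [q]], I1) for q in range(P)])
        R1 = A2.T @ R @ A2 + delta * np.eye(P * L1)   # size P*L1 x P*L1
        h1 = np.linalg.solve(R1, A2.T @ p)
        H1 = h1.reshape(P, L1).T                      # columns h1_p
        # h1 fixed: h = A1 @ h2_stacked, with A1 = [kron(I2, h1_1), ..., kron(I2, h1_P)].
        A1 = np.hstack([np.kron(I2, H1[:, [q]]) for q in range(P)])
        R2 = A1.T @ R @ A1 + delta * np.eye(P * L2)   # size P*L2 x P*L2
        h2 = np.linalg.solve(R2, A1.T @ p)
        H2 = h2.reshape(P, L2).T                      # columns h2_p
    h_hat = sum(np.kron(H2[:, q], H1[:, q]) for q in range(P))
    return h_hat, H1, H2

# Usage on a synthetic system that is exactly a sum of P Kronecker products.
rng = np.random.default_rng(5)
L1, L2, P = 8, 4, 2
h_true = sum(np.kron(rng.standard_normal(L2), rng.standard_normal(L1)) for _ in range(P))
R = np.eye(L1 * L2)   # exact covariance of a unit-variance white input
p = R @ h_true        # exact cross-correlation vector in the noiseless case
h_hat, H1, H2 = iterative_wiener(R, p, L1, L2, P)
mis_db = 20 * np.log10(np.linalg.norm(h_true - h_hat) / np.linalg.norm(h_true))
```

Each iteration solves two systems of sizes P·L1 and P·L2 instead of one system of size L1·L2, which is the source of the reduced data requirement discussed above.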

6. Simulation Results

In this section, we evaluate the performance of the conventional and iterative Wiener filters in two different scenarios. The first one is dedicated to the case of independent input signals, . The second scenario is more challenging, since it considers the case when the input signals are coming from the same source and they are linearly related. In both cases, the performance measure used to evaluate the overall behavior is the normalized misalignment (in dB), which is related to the spatiotemporal impulse response of the system, . In this framework, the solution provided by the conventional Wiener filter is given in (58), so that the performance measure is evaluated as

Similarly, for the iterative Wiener filter from (77), the performance measure results in

Both the conventional and iterative Wiener filters rely on the estimation of the statistics, i.e., the covariance matrix and the cross-correlation vector . Considering that N data samples are available, these estimates result in

Clearly, the value of N influences the quality of these estimates. Nevertheless, in practice, only a small amount of data could be available, which makes the identification process more challenging. In this case, the advantages of the iterative Wiener filter (which operates with smaller data structures) become more apparent, as will be supported in the following analysis.

The additive noise may also affect the accuracy of the Wiener solution. In relation to (13) or (22), the signal-to-noise ratio (SNR) can be defined as

where and are the variances of the output signal and noise, respectively. In practice, the Wiener solution is satisfactory with reasonable levels of the SNR, but it is not with small values of the SNR. In our experiments, different values of the SNR were used, in order to illustrate this behavior.
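
For simulations, the additive noise is typically scaled so that a target SNR is met. A minimal sketch, assuming zero-mean signals (so that mean squares estimate the variances) and hypothetical function names:

```python
import numpy as np

def add_noise_at_snr(y, snr_db, rng):
    """Return (d, w): noisy signal d = y + w, with w scaled to the requested SNR in dB."""
    w = rng.standard_normal(len(y))
    # Scale the noise so that mean(y^2) / mean(w^2) equals 10^(snr_db / 10).
    w *= np.sqrt(np.mean(y**2) / (np.mean(w**2) * 10 ** (snr_db / 10)))
    return y + w, w

def measured_snr_db(y, w):
    """Empirical SNR in dB for zero-mean signals."""
    return 10 * np.log10(np.mean(y**2) / np.mean(w**2))

rng = np.random.default_rng(6)
y = rng.standard_normal(1000)          # stand-in for the noise-free system output
d, w = add_noise_at_snr(y, 10.0, rng)
```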

All the experiments were performed using MATLAB R2018b on an Asus GL552VX device (Windows 10 OS), having an Intel Core i7-6700HQ CPU @ 2.60 GHz, with four cores, eight logical processors, and 16 GB of RAM. In the first set of experiments, we considered the case of M independent input signals, which were generated as AR(1) processes. These were obtained by filtering white Gaussian noise through an AR(1) model with a pole at 0.9. Of course, other input signals can be used instead of AR(1) processes. The most common assumptions are: (i) the input signal is wide-sense stationary, (ii) all of the signals (i.e., , , and ) have zero means, and (iii) usually, the noise is not correlated with the input signal .
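
An AR(1) input of this kind can be generated as follows. This is a Python sketch (the experiments themselves were run in MATLAB); the function name, the burn-in length used to discard the transient, and the sample count are assumptions.

```python
import numpy as np

def ar1_process(n, pole, rng, burn_in=500):
    """Filter white Gaussian noise through x(k) = pole * x(k-1) + v(k)."""
    v = rng.standard_normal(n + burn_in)
    x = np.zeros(n + burn_in)
    for k in range(1, n + burn_in):
        x[k] = pole * x[k - 1] + v[k]
    return x[burn_in:]  # drop the initial transient

rng = np.random.default_rng(7)
x = ar1_process(20000, 0.9, rng)

# The lag-1 correlation coefficient of an AR(1) process equals its pole.
rho1 = np.mean(x[1:] * x[:-1]) / np.mean(x**2)
```

With a pole at 0.9 the input is strongly correlated, which makes the covariance matrix ill-conditioned and the identification harder than with a white input.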

In our scenario, the number of channels was and their impulse responses were chosen from the G168 Recommendation [63]. They were network echo paths of length , as depicted in Figure 3.

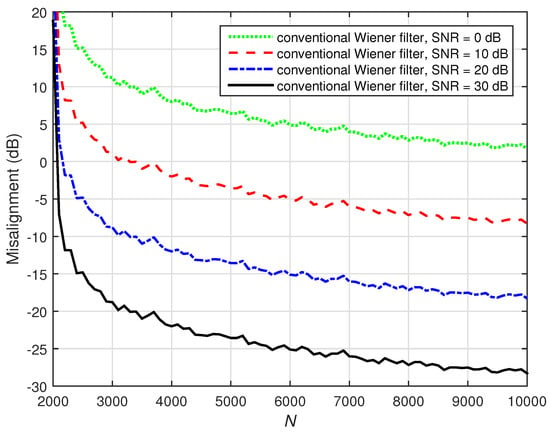

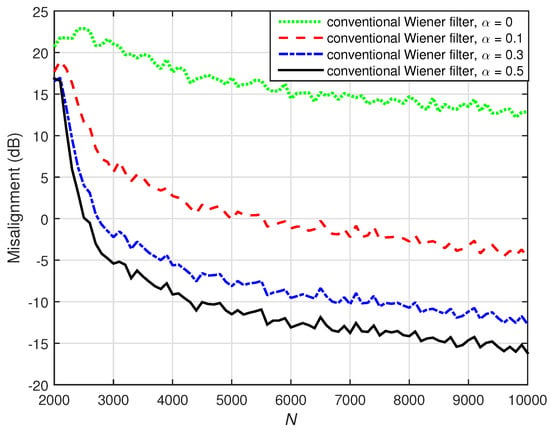

As mentioned before, the performance of the Wiener solution is influenced by the value of N and the level of the SNR. This is supported in Figure 7, where the performance of the conventional Wiener filter from (58) is illustrated for different values of N (from 2000 to 10,000 available data samples) and SNR levels (from 0 dB to 30 dB). As we can notice, a larger value of N (i.e., ) is required to obtain reasonable attenuation of the misalignment. Additionally, as expected, a more accurate solution was obtained with higher SNRs.

Figure 7.

Performance of the conventional Wiener filter for different values of N (number of available data samples to estimate the statistics) and SNRs. The input signals were independent AR(1) processes, , and .

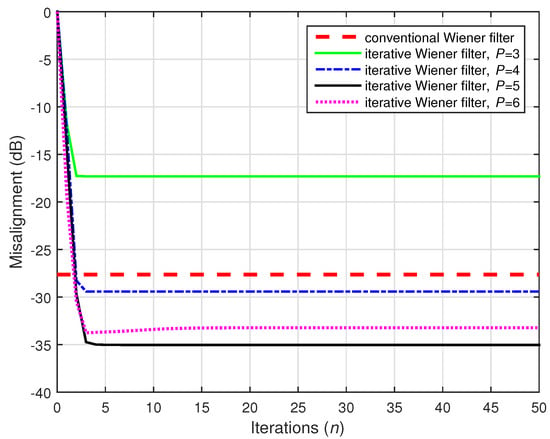

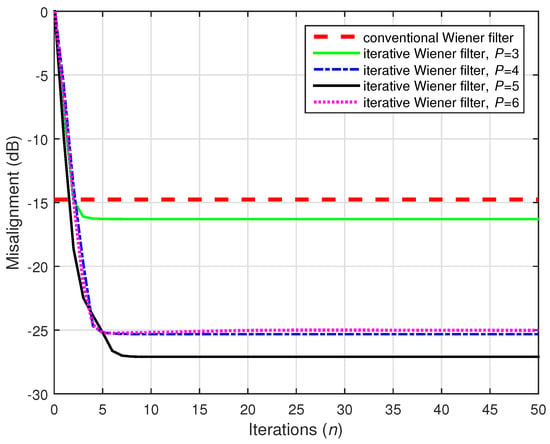

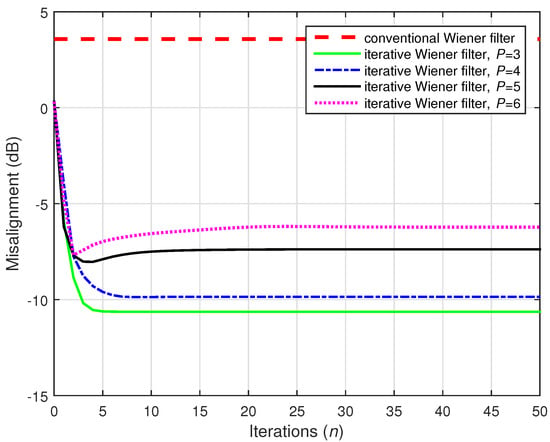

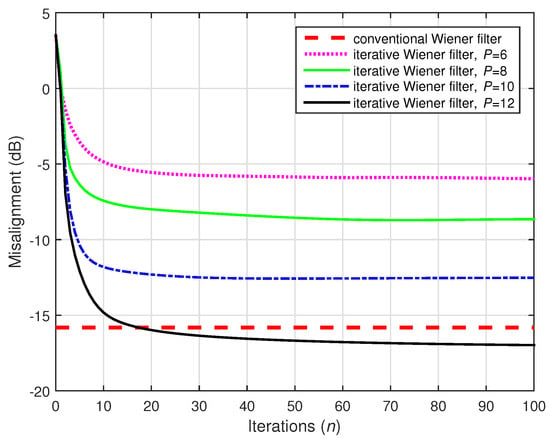

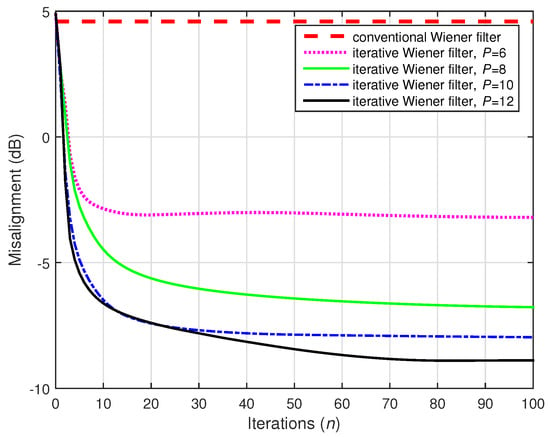

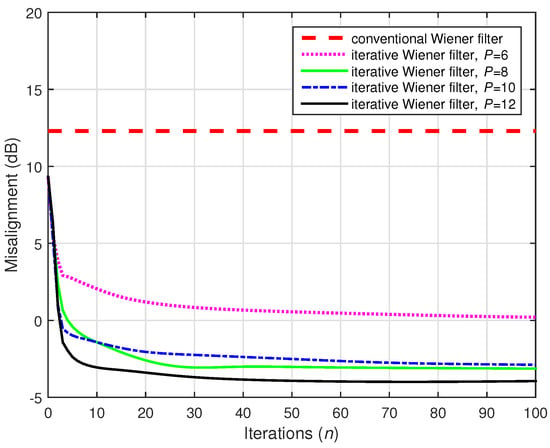

In this context, let us first compare the performance of the conventional and iterative Wiener filters in “favorable” conditions, using a large amount of data to estimate the statistics (i.e., 10,000), in a high SNR environment (i.e., dB). The iterative Wiener filter from (77) uses , , and different values of the decomposition parameter P (from 3 to 6). These values are much lower than , which represents an important advantage, as discussed in Section 5. We should also note that for the scenario considered in this first set of experiments (using the setup from Figure 3), the rank of the matrix (or ) was equal to . As we can notice in Figure 8, the iterative Wiener filter was able to outperform the conventional Wiener filter for most of the values of P. Even the case of led to reasonable attenuation of the misalignment. Moreover, all these iterative solutions were obtained in only a few iterations.

Figure 8.

Performances of the conventional and iterative Wiener filters, using 10,000. The input signals were independent AR(1) processes, , , and dB.

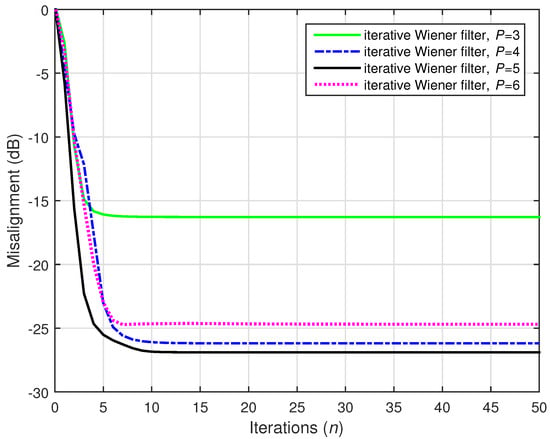

The advantages of the iterative Wiener filter became more apparent when less data were available to estimate the statistics. The previous simulation was repeated, but using (Figure 9). According to the results shown in Figure 7, the performance of the conventional Wiener filter was affected in this case (even for dB); its misalignment level was close to dB. This result is also confirmed in Figure 9. Most importantly, the iterative Wiener filter outperformed the conventional solution for all the values of P, thereby being more robust in this case due to the low-dimensional data structures used in its development.

Figure 9.

Performances of the conventional and iterative Wiener filters, using . The input signals were independent AR(1) processes, , , and dB.

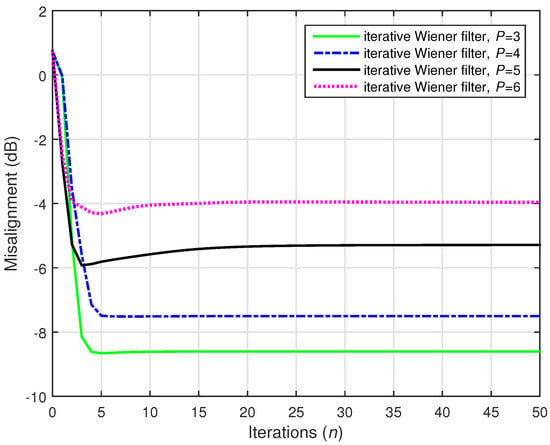

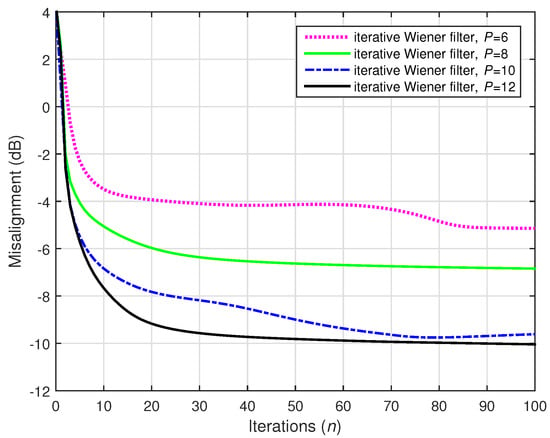

For the scenario considered in this first set of experiments, the length of the spatiotemporal impulse response was . Therefore, the case represents a limit in terms of the available amount of data. As we can notice in Figure 7, the conventional Wiener filter could not cope with this limit. On the other hand, the iterative Wiener filter was still able to obtain good performances, for , as supported in Figure 10.

Figure 10.

Performance of the iterative Wiener filter with different values of P, using . The input signals were independent AR(1) processes, , , and dB.

Furthermore, using is a significant challenge in terms of system identification, since apparently we deal with an "incomplete" scenario, when trying to estimate coefficients using less data. Clearly, the conventional Wiener filter cannot be used in this case. However, the iterative Wiener filter reformulates the original system identification problem (of size ) as a combination of low-dimension solutions of size and , with . Hence, it could overcome this limit of . This is supported in Figure 11: only data samples were available for the estimation of the statistics. Even in this challenging case, the iterative Wiener filter was able to provide a good attenuation of the misalignment, in a relatively small number of iterations. This represents an important feature and a significant advantage when dealing with small amounts of data. In other words, the iterative Wiener filter exploiting the decomposition-based approach can be used to solve system identification problems with highly incomplete information, which is a condition imposed in many important applications.

Figure 11.

Performance of the iterative Wiener filter with different values of P, using . The input signals were independent AR(1) processes, , , and dB.

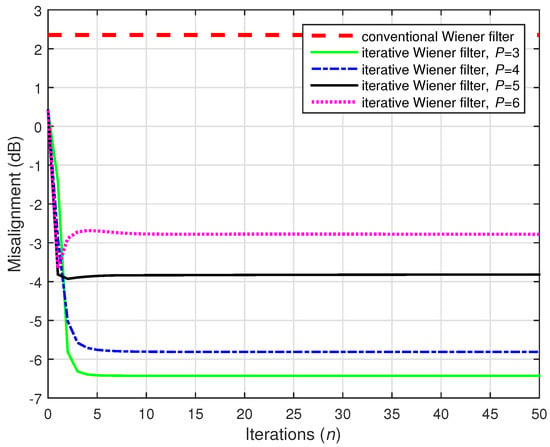

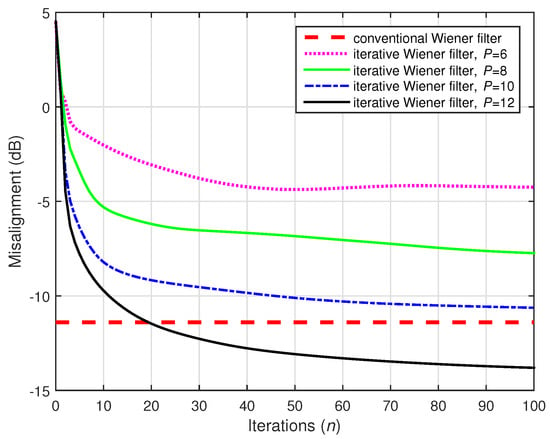

The SNR is also a critical factor in system identification problems. As shown in Figure 7, the performance of the conventional Wiener filter is highly influenced by the SNR level. In the following simulations from this first set of experiments, we considered a more challenging environment, by setting dB. In this case, even for a large amount of data (i.e., 10,000), the conventional Wiener filter was outperformed by the iterative version, as supported in Figure 12.

Figure 12.

Performances of the conventional and iterative Wiener filters, using 10,000. The input signals were independent AR(1) processes, , , and dB.

The gain becomes more apparent when fewer data are available to estimate the statistics. Such a case is considered in Figure 13, where dB and . As we can see, the conventional Wiener filter could not provide an accurate solution (as also indicated in Figure 7), whereas its iterative counterpart still attenuated the misalignment to an acceptable degree.

Figure 13.

Performances of the conventional and iterative Wiener filters, using . The input signals were independent AR(1) processes, , , and dB.

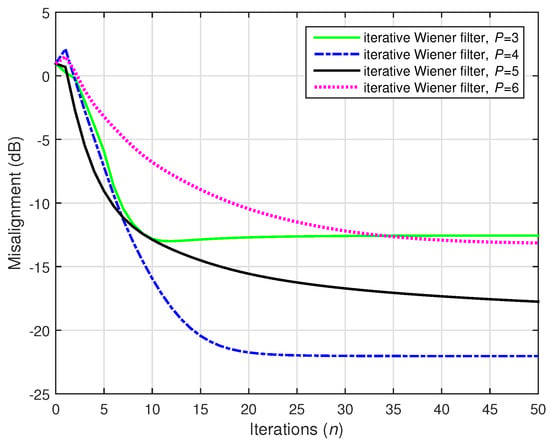

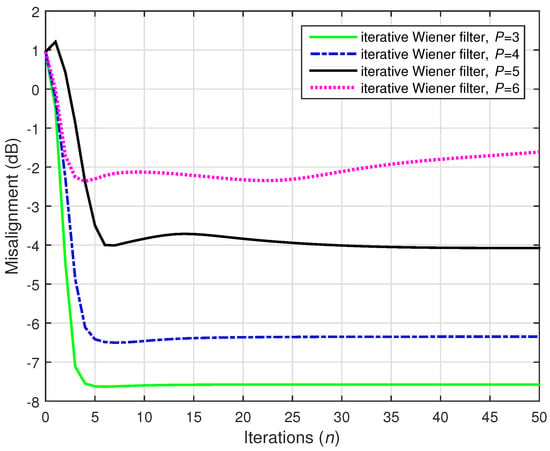

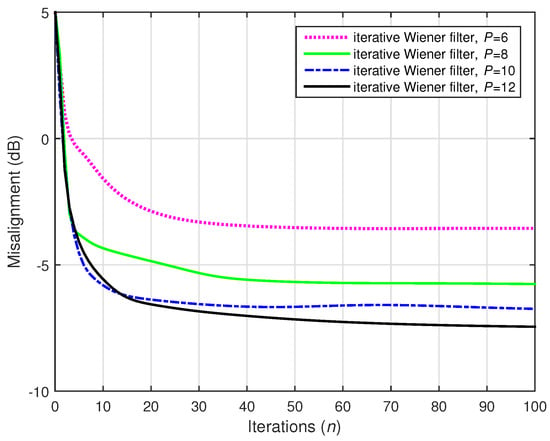

As previously explained (related to Figure 10 and Figure 11), using represents a critical scenario for the conventional Wiener filter. In this context, a lower SNR level made the situation even more challenging. Nevertheless, the iterative Wiener filter is reasonably robust even in these adverse conditions. This behavior is supported in Figure 14 and Figure 15, where and 1500, respectively. The same noisy conditions were considered, with dB. As we can see in these figures, the best behavior was obtained for , which is significantly lower than .

Figure 14.

Performance of the iterative Wiener filter with different values of P, using . The input signals were independent AR(1) processes, , , and dB.

Figure 15.

Performance of the iterative Wiener filter with different values of P, using . The input signals were independent AR(1) processes, , , and dB.

Finally, the last simulation of the first set of experiments was performed in "extreme" SNR conditions, using dB. As we already know from Figure 7, the conventional Wiener filter cannot provide an accurate solution in this case, regardless of the value of N. In Figure 16, 10,000, and dB. As expected, the conventional Wiener filter failed to provide an accurate estimate. On the other hand, the iterative Wiener filter was able to reach a much lower misalignment level, using . Therefore, it is much more robust in noisy conditions, which are frequent in practice.

Figure 16.

Performances of the conventional and iterative Wiener filters, using 10,000. The input signals were independent AR(1) processes, , , and dB.

The second set of experiments was performed in a more challenging situation that arises in the context of stereophonic acoustic echo cancellation (SAEC) [65,66,67,68]. There were two acoustic echo paths to identify (for each microphone); i.e., . Consequently, the reference (or microphone) signal resulted in

where and correspond to the loudspeaker-to-microphone acoustic impulse responses (right and left, respectively), and and comprise the loudspeaker signal samples (right and left, respectively).

At first glance, from a system identification perspective, we need to identify the global impulse response . Nevertheless, in an SAEC scenario, the difficulties are manifold. One of the main challenges is the so-called nonuniqueness problem [66,67], which comes from the fact that the loudspeaker (input) signals are linearly related. This issue can be addressed by manipulating the signals transmitted to the receiving room, e.g., using a preprocessor on the loudspeaker signals to make them less coherent, without significantly affecting the stereo perception and the signal quality. A simple but efficient nonlinear method uses positive and negative half-wave rectifiers on each channel, respectively [67]. In this case, the nonlinearly transformed signals become

where is a parameter used to control the amount of nonlinearity; the recommended interval for this parameter is [67]. This distortion parameter is provided a priori. Clearly, the distortion must be introduced in such a way that the quality of the signals and the stereo effect are not degraded. Experiments reported in [67] (and also in many subsequent works) show that stereo perception is not affected even with an as large as 0.5. Additionally, the audible distortion is small because of psychoacoustic masking effects [69].
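As an illustration of this preprocessing step, the following Python sketch applies a positive half-wave rectifier to one channel and a negative one to the other, and then compares the conditioning of the stacked input covariance matrix with and without the distortion. It assumes the commonly used form x + alpha (x ± |x|)/2 for the rectifiers; the names `half_wave_preprocess`, `stacked_regressors`, and `covariance_condition`, the value `alpha=0.5`, and the short random "transmission room" filters are hypothetical choices made for this example and are not the settings of the experiments reported here.

```python
import numpy as np

def half_wave_preprocess(x1, x2, alpha):
    """Assumed form: positive half-wave on channel 1, negative on channel 2."""
    x1 = np.asarray(x1, dtype=float)
    x2 = np.asarray(x2, dtype=float)
    y1 = x1 + alpha * (x1 + np.abs(x1)) / 2.0   # positive samples scaled by (1 + alpha)
    y2 = x2 + alpha * (x2 - np.abs(x2)) / 2.0   # negative samples scaled by (1 + alpha)
    return y1, y2

def stacked_regressors(x1, x2, L):
    """Rows are [x1(n), ..., x1(n-L+1), x2(n), ..., x2(n-L+1)], zeros before n = 0."""
    def taps(x):
        xp = np.concatenate([np.zeros(L - 1), x])
        return np.stack([xp[L - 1 - k : L - 1 - k + len(x)] for k in range(L)], axis=1)
    return np.hstack([taps(x1), taps(x2)])

def covariance_condition(x1, x2, L):
    """Condition number of the sample covariance matrix of the stacked inputs."""
    X = stacked_regressors(x1, x2, L)
    R = X.T @ X / X.shape[0]
    return np.linalg.cond(R)

rng = np.random.default_rng(0)
N, L = 4000, 8
s = rng.standard_normal(N)        # common source signal (white Gaussian noise)
g1 = rng.standard_normal(4)       # hypothetical transmission-room responses
g2 = rng.standard_normal(4)
x1 = np.convolve(s, g1)[:N]       # both loudspeaker signals are filtered
x2 = np.convolve(s, g2)[:N]       # versions of the same source

cond_plain = covariance_condition(x1, x2, L)
y1, y2 = half_wave_preprocess(x1, x2, alpha=0.5)
cond_pre = covariance_condition(y1, y2, L)
print("condition number without preprocessing:", cond_plain)
print("condition number with preprocessing:   ", cond_pre)
```

In this toy setup the two unprocessed channels are exactly linearly related, so the covariance matrix is numerically singular; the rectifiers break this relation, which is the mechanism the preprocessing relies on.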

However, we should note that other methods can be used to address the nonuniqueness problem; e.g., see [70,71] and the references therein. An analysis of their influences on the overall performance of the decomposition-based approach is beyond the scope of this paper.

In our simulations, the source signal (in the transmission room) was white Gaussian noise. The acoustic impulse responses in the transmission room had 2048 coefficients. The acoustic impulse responses in the receiving room had coefficients, as depicted in Figure 4. The background noise (in the receiving room) was white and Gaussian, with dB.

The influence of the preprocessing technique from (84) and (85) on the loudspeaker signals can be seen in Figure 17, where the performance of the conventional Wiener filter is evaluated for different values of N (i.e., the available amount of data used to estimate the statistics) and using different values of , which acted as a distortion parameter. First, we can notice that the performance was clearly improved when preprocessing the input signals using positive and negative half-wave rectifiers with larger values of . Additionally, even with preprocessing, a large amount of data (i.e., ) is required for the conventional Wiener filter, in order to obtain reasonable misalignment attenuation.
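The role of N can be sketched in a few lines of Python. The example below is a single-channel toy setup, not the SAEC configuration of Figure 17: it estimates the covariance matrix and the cross-correlation vector from N samples, solves the resulting normal equations, and evaluates the normalized misalignment in dB, assuming the usual definition 20 log10(||h - h_est|| / ||h||). The function names, the filter length, the SNR, and the random impulse response are illustrative assumptions.

```python
import numpy as np

def regressor_matrix(x, L):
    """Rows are [x(n), x(n-1), ..., x(n-L+1)], with zeros before n = 0."""
    xp = np.concatenate([np.zeros(L - 1), x])
    return np.stack([xp[L - 1 - k : L - 1 - k + len(x)] for k in range(L)], axis=1)

def wiener_from_data(X, d):
    """Conventional Wiener solution using statistics estimated from the data."""
    N = X.shape[0]
    R = X.T @ X / N          # sample covariance matrix of the input
    p = X.T @ d / N          # sample cross-correlation vector
    return np.linalg.solve(R, p)

def misalignment_db(h_true, h_est):
    """Normalized misalignment in dB (assumed standard definition)."""
    return 20.0 * np.log10(np.linalg.norm(h_true - h_est) / np.linalg.norm(h_true))

def run_trial(N, L=16, snr_db=30.0, seed=0):
    """Identify a random length-L system from N samples of white Gaussian input."""
    rng = np.random.default_rng(seed)
    h = rng.standard_normal(L)
    x = rng.standard_normal(N)
    X = regressor_matrix(x, L)
    y = X @ h
    noise_std = np.sqrt(np.mean(y ** 2) / 10.0 ** (snr_db / 10.0))
    d = y + noise_std * rng.standard_normal(N)
    return misalignment_db(h, wiener_from_data(X, d))

for N in (50, 500, 5000):
    print(N, round(run_trial(N), 1))
```

With more data the estimated statistics approach the true ones, so the misalignment decreases; the experiments in this section probe the opposite regime, where N is small relative to the filter length.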

Figure 17.

Performance of the conventional Wiener filter for different values of N (number of available data samples to estimate the statistics) and different values of (the distortion parameter). The source signal (white Gaussian noise) was preprocessed with positive and negative half-wave rectifiers. The numbers of channels were (stereophonic scenario), , and dB.

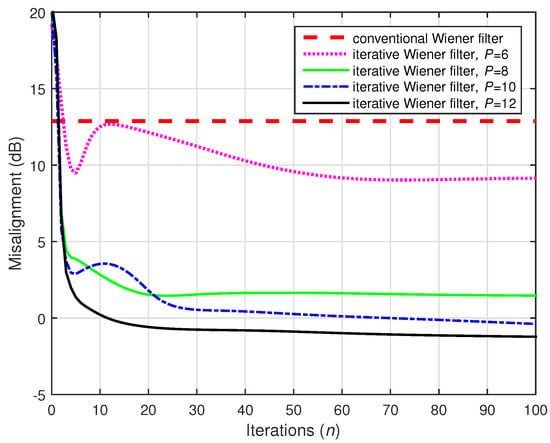

In the following simulations, the identification problem was addressed using the conventional and iterative Wiener filters. The length of the global impulse response was , so that we set . Due to the nature of acoustic impulse responses, the matrix (or ) was closer to full rank, so a higher value of P was required. In this context, the iterative Wiener filter involved in the experiments used , and 12. Nevertheless, these values are lower than .

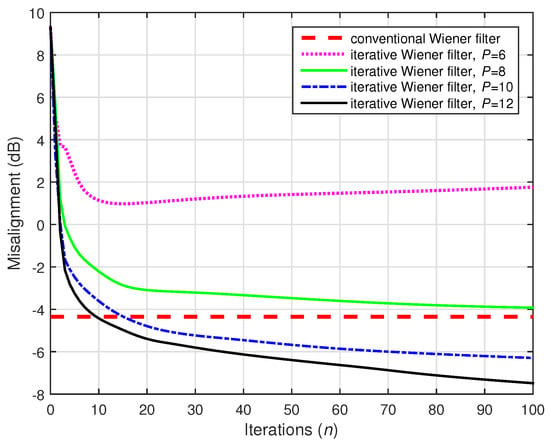

For the results shown in Figure 18, 10,000 data samples were used to estimate the statistics, and the input signals were preprocessed using positive and negative half-wave rectifiers, with . These represent favorable conditions for the identification, so the conventional Wiener filter had good accuracy (as also indicated in Figure 17). The performance of the iterative Wiener filter was improved for a larger value of P and even outperformed the conventional filter in the case of .

Figure 18.

Performances of the conventional and iterative Wiener filters, using 10,000. The source signal (white Gaussian noise) was preprocessed with positive and negative half-wave rectifiers, using . The numbers of channels were (stereophonic scenario), , and dB.

Reducing the value of the distortion parameter affected the performance of both the conventional and the iterative Wiener filters, as shown in Figure 19, where 10,000 and . However, the iterative Wiener filter is more robust to this modification, since for it outperformed the conventional benchmark, and for it reached a misalignment level close to the conventional Wiener solution.

Figure 19.

Performances of the conventional and iterative Wiener filters, using 10,000. The source signal (white Gaussian noise) was preprocessed with positive and negative half-wave rectifiers, using . The numbers of channels were (stereophonic scenario), , and dB.

Next, for the simulations shown in Figure 20 and Figure 21, fewer data samples were used to estimate the statistics, i.e., . The other conditions were the same as in Figure 18 and Figure 19, respectively. As we can notice, the conventional Wiener filter could not obtain an accurate solution in these cases, regardless of the value of the distortion parameter. On the other hand, the iterative Wiener filter was still able to achieve reasonable results (for ), thereby far outperforming the conventional solution. The difference was even more apparent for a lower value of , as supported in Figure 21.

Figure 20.

Performances of the conventional and iterative Wiener filters, using . The source signal (white Gaussian noise) was preprocessed with positive and negative half-wave rectifiers, using . The numbers of channels were (stereophonic scenario), , and dB.

Figure 21.

Performances of the conventional and iterative Wiener filters, using . The source signal (white Gaussian noise) was preprocessed with positive and negative half-wave rectifiers, using . The numbers of channels were (stereophonic scenario), , and dB.

A challenging case was considered in the following two simulations, where we set (while ). Two values of the distortion parameter were considered, i.e., and , and the results are depicted in Figure 22 and Figure 23, respectively. The conventional Wiener filter was not included in these experiments, since it could not provide an accurate solution (as indicated in Figure 17). As we can notice in both cases, despite the adverse conditions, the iterative Wiener filter was still able to attenuate the misalignment to a reasonable extent and provide a robust solution, for a value of P smaller than .

Figure 22.

Performance of the iterative Wiener filter with different values of P, using . The source signal (white Gaussian noise) was preprocessed with positive and negative half-wave rectifiers, using . The numbers of channels were (stereophonic scenario), , and dB.

Figure 23.

Performance of the iterative Wiener filter with different values of P, using . The source signal (white Gaussian noise) was preprocessed with positive and negative half-wave rectifiers, using . The numbers of channels were (stereophonic scenario), , and dB.

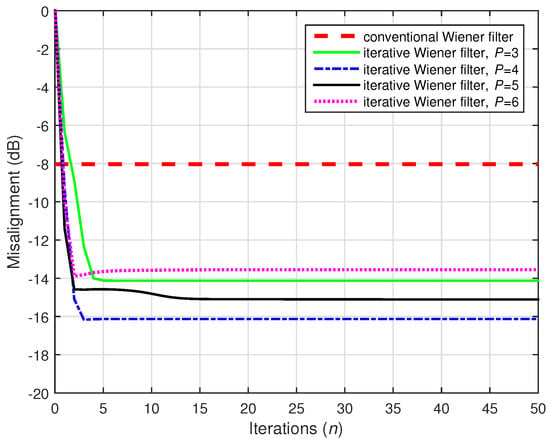

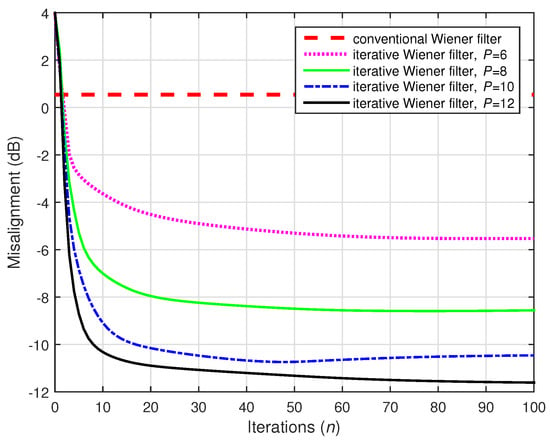

As shown in Figure 17, the conventional Wiener filter could not obtain an accurate solution for small values of the distortion parameter (i.e., closer to 0). For example, when using , its misalignment could not reach dB even with a large amount of data (i.e., 10,000); and for less data (e.g., ), it provided a far from accurate solution. These cases are considered in Figure 24 and Figure 25, using ; 10,000 and 2500, respectively. For N = 10,000 (Figure 24), the iterative Wiener filter outperformed the conventional solution for and 12. The decomposition using reached the misalignment level provided by the conventional Wiener filter. However, the differences (in favor of the iterative version, for all the values of P) were significant when , as supported in Figure 25.

Figure 24.

Performances of the conventional and iterative Wiener filters, using 10,000. The source signal (white Gaussian noise) was preprocessed with positive and negative half-wave rectifiers, using . The numbers of channels were (stereophonic scenario), , and dB.

Figure 25.

Performances of the conventional and iterative Wiener filters, using . The source signal (white Gaussian noise) was preprocessed with positive and negative half-wave rectifiers, using . The numbers of channels were (stereophonic scenario), , and dB.

Finally, for the results shown in Figure 26, 10,000 data samples were used to estimate the statistics, but the input signals were not preprocessed (i.e., ). Even if , the conventional Wiener filter could not obtain an accurate solution in this case. On the other hand, the iterative Wiener filter with was able to outperform the conventional Wiener solution, even in this extremely difficult scenario. In other words, the influence of the nonuniqueness problem is less significant for the iterative Wiener filter. This is probably due to the fact that the matrices within the iterative Wiener filter are smaller than the full matrix of size within the conventional Wiener filter.

Figure 26.

Performances of the conventional and iterative Wiener filters, using 10,000. The source signal (white Gaussian noise) was not preprocessed (no distortion, i.e., ). The numbers of channels were (stereophonic scenario), , and dB.

Summarizing, the main feature of the multichannel iterative Wiener filter is that it operates with smaller data structures, i.e., of size and , with P values far smaller than . On the other hand, the conventional Wiener filter addresses a system identification problem of size . In our experiments, we tried to cover a wide range of scenarios, in order to properly assess the performance of the multichannel iterative Wiener filter, as compared to its conventional counterpart. Consequently, we used different values of N (the available data to estimate the statistics), different noise levels (SNR), and different values of the decomposition parameter P. Moreover, in the SAEC scenario, we also used different values of the distortion parameter . As an overall conclusion, the iterative version significantly outperformed the conventional Wiener filter, especially in difficult conditions and environments, e.g., when using small amounts of data or low SNRs. These scenarios are of great importance in practice, as in real-world applications, only small amounts of data may be available (to estimate the statistics) or the filters may need to operate in noisy environments.

7. Conclusions and Perspectives

In this review paper, we have addressed the system identification problem from an efficient decomposition-based perspective. The contributions are threefold. First, we have shown how the main categories of linear SISO and MISO systems can be interpreted and related in a unified framework, taking advantage of the bilinear form representation. Second, we have demonstrated that the resulting spatiotemporal impulse response of the MISO system can be efficiently identified using the nearest Kronecker product decomposition, followed by low-rank approximations. Third, we have developed an iterative Wiener filter based on these techniques which outperforms the conventional Wiener filter in terms of accuracy and robustness of the solution. The main feature of the overall approach consists of an efficient (re)formulation of a high-dimension system identification problem (e.g., identification of a long spatiotemporal impulse response) as a combination of low-dimension solutions, which result from the optimization of shorter component filters.
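The decomposition idea summarized above can be sketched numerically. The Python example below reshapes an impulse response of length L1·L2 into an L2 × L1 matrix, truncates its singular value decomposition to rank P, and rebuilds the response as a sum of P Kronecker products of short filters. This is a minimal sketch of the nearest Kronecker product idea (in the sense of Van Loan [56]) applied to a known impulse response, not the iterative Wiener filter itself; the function names, the ordering of the Kronecker factors, and the toy decaying response are assumptions made for this example.

```python
import numpy as np

def nkp_decompose(h, L1, L2, P):
    """Rank-P Kronecker decomposition: h ~ sum_p kron(h2[:, p], h1[:, p])."""
    H = np.asarray(h, dtype=float).reshape(L2, L1)   # row k is the k-th length-L1 segment
    U, s, Vt = np.linalg.svd(H, full_matrices=False)
    scale = np.sqrt(s[:P])                           # split each singular value evenly
    h2 = U[:, :P] * scale                            # shape (L2, P)
    h1 = Vt[:P, :].T * scale                         # shape (L1, P)
    return h1, h2

def nkp_reconstruct(h1, h2):
    """Rebuild the long impulse response from the short component filters."""
    L1, P = h1.shape
    L2 = h2.shape[0]
    h = np.zeros(L1 * L2)
    for p in range(P):
        h += np.kron(h2[:, p], h1[:, p])
    return h

rng = np.random.default_rng(1)
L1, L2 = 8, 8
n = np.arange(L1 * L2)
h = rng.standard_normal(L1 * L2) * np.exp(-0.08 * n)   # toy decaying response

for P in (1, 2, 4, 8):
    h1, h2 = nkp_decompose(h, L1, L2, P)
    err = np.linalg.norm(h - nkp_reconstruct(h1, h2)) / np.linalg.norm(h)
    # columns: P, number of coefficients in the short filters, full length, relative error
    print(P, P * (L1 + L2), L1 * L2, err)
```

The short filters hold P(L1 + L2) coefficients instead of L1·L2, so the saving is meaningful only when P is well below min(L1, L2), which is consistent with the choice of P discussed in the experiments.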

In this study, we have illustrated the performance of the decomposition-based approach only in terms of the Wiener filter, which represents a benchmark tool for system identification problems. Simulation results have indicated that the iterative version is able to outperform the conventional Wiener filter, especially when a small amount of data is available for the estimation of the statistics. This represents an important performance feature, taking into account that in many real-world applications we deal with incomplete information related to the inputs/outputs of the system.

In perspective, improved solutions based on adaptive filtering should also be developed, which could further extend the applicability of the decomposition-based approach. For example, several preliminary results toward this goal can be found in [44,45]. Furthermore, a rigorous convergence analysis of these algorithms could reveal the influence of the decomposition parameters, which could be further exploited in order to improve the overall behavior. In addition, finding a practical method to determine the optimal value of the decomposition parameter P represents one of our main tasks for future work. Nevertheless, this is not a straightforward task, since the decomposition parameter depends on the nature of the system to be identified (which is unknown in practice). However, we can take advantage of some a priori knowledge of the system. For example, in the case of network echo paths (which are usually very sparse), the value of P is much smaller than . For acoustic impulse responses, the value of P should be increased, but it must still be considerably lower than . A preliminary study on the influence of the decomposition parameter can be found in [43].

Author Contributions

Conceptualization, J.B.; methodology, C.P.; validation, L.-M.D.; formal analysis, S.C. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by a grant of the Romanian Ministry of Research and Innovation, CNCS—UEFISCDI, project number PN-III-P1-1.1-PD-2019-0340, within PNCDI III.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Ljung, L. System Identification: Theory for the User, 2nd ed.; Prentice-Hall: Upper Saddle River, NJ, USA, 1999.

- Benesty, J.; Huang, Y. (Eds.) Adaptive Signal Processing–Applications to Real-World Problems; Springer: Berlin, Germany, 2003.

- Mohler, R.R.; Kolodziej, W.J. An overview of bilinear system theory and applications. IEEE Trans. Syst. Man Cybern. 1980, 10, 683–688.

- Halawani, T.U.; Mohler, R.R.; Kolodziej, W.J. A two-step bilinear filtering approximation. IEEE Trans. Acoust. Speech Signal Process. 1984, 32, 344–352.

- Inagaki, M.; Mochizuki, H. Bilinear system identification by Volterra kernels estimation. IEEE Trans. Autom. Control 1984, 29, 746–749.

- Baik, H.K.; Mathews, V.J. Adaptive lattice bilinear filters. IEEE Trans. Signal Process. 1993, 41, 2033–2046.

- Forssén, U. Adaptive bilinear digital filters. IEEE Trans. Circuits Syst. II Analog. Digit. Signal Process. 1993, 40, 729–735.

- Ma, G.-K.; Lee, J.; Mathews, V.J. A RLS bilinear filter for channel equalization. In Proceedings of the IEEE International Conference on Acoustics, Speech and Signal Processing, Adelaide, SA, Australia, 19–22 April 1994; pp. III-257–III-260.

- Lee, J.; Mathews, V.J. Adaptive bilinear predictors. In Proceedings of the IEEE International Conference on Acoustics, Speech and Signal Processing, Adelaide, SA, Australia, 19–22 April 1994; pp. III-489–III-492.

- Hu, R.; Hassan, H.M. Echo cancellation in high speed data transmission systems using adaptive layered bilinear filters. IEEE Trans. Commun. 1994, 42, 655–663.

- Bose, T.; Chen, M.-Q. Conjugate gradient method in adaptive bilinear filtering. IEEE Trans. Signal Process. 1995, 43, 1503–1508.

- Lee, J.; Mathews, V.J. Output-error LMS bilinear filters with stability monitoring. In Proceedings of the 1995 International Conference on Acoustics, Speech, and Signal Processing, Detroit, MI, USA, 9–12 May 1995; pp. 965–968.

- Gesbert, D.; Duhamel, P. Robust blind joint data/channel estimation based on bilinear optimization. In Proceedings of the 8th Workshop on Statistical Signal and Array Processing, Corfu, Greece, 24–26 June 1996; pp. 168–171.

- Stenger, A.; Kellermann, W.; Rabenstein, R. Adaptation of acoustic echo cancellers incorporating a memoryless nonlinearity. In Proceedings of the 8th Workshop on Statistical Signal and Array Processing, Corfu, Greece, 24–26 June 1996.

- Stenger, A.; Kellermann, W. Adaptation of a memoryless preprocessor for nonlinear acoustic echo cancelling. Signal Process. 2000, 80, 1747–1760.

- Zhu, Z.; Leung, H. Adaptive identification of nonlinear systems with application to chaotic communications. IEEE Trans. Circuits Syst. I Fundam. Theory Appl. 2000, 47, 1072–1080.

- Kuo, S.M.; Wu, H.-T. Nonlinear adaptive bilinear filters for active noise control systems. IEEE Trans. Circuits Syst. I Regul. Pap. 2005, 52, 617–624.

- Abrahamsson, R.; Kay, S.M.; Stoica, P. Estimation of the parameters of a bilinear model with applications to submarine detection and system identification. Digit. Signal Process. 2007, 17, 756–773.

- Lopes dos Santos, P.; Ramos, J.A.; Martins de Carvalho, J.L. Identification of bilinear systems with white noise inputs: An iterative deterministic-stochastic subspace approach. IEEE Trans. Control. Syst. Technol. 2009, 17, 1145–1153.

- Zhao, H.; Zeng, X.; He, Z. Low-complexity nonlinear adaptive filter based on a pipelined bilinear recurrent neural network. IEEE Trans. Neural Netw. 2011, 22, 1494–1507.

- Tan, L.; Jiang, J. Nonlinear active noise control using diagonal-channel LMS and RLS bilinear filters. In Proceedings of the 2014 IEEE 57th International Midwest Symposium on Circuits and Systems (MWSCAS), College Station, TX, USA, 3–6 August 2014; pp. 789–792.

- Huang, Y.; Skoglund, J.; Luebs, A. Practically efficient nonlinear acoustic echo cancellers using cascaded block RLS and FLMS adaptive filters. In Proceedings of the 2017 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), New Orleans, LA, USA, 5–9 March 2017; pp. 596–600.

- Bai, E.-W.; Li, D. Convergence of the iterative Hammerstein system identification algorithm. IEEE Trans. Autom. Control 2004, 49, 1929–1940.

- Benesty, J.; Paleologu, C.; Ciochina, S. On the identification of bilinear forms with the Wiener filter. IEEE Signal Process. Lett. 2017, 24, 653–657.

- Haykin, S. Adaptive Filter Theory, 4th ed.; Prentice-Hall: Upper Saddle River, NJ, USA, 2002.

- Diniz, P.S.R. Adaptive Filtering: Algorithms and Practical Implementation, 4th ed.; Springer: New York, NY, USA, 2013.

- Rupp, M.; Schwarz, S. A tensor LMS algorithm. In Proceedings of the 2015 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), South Brisbane, QLD, Australia, 19–24 April 2015; pp. 3347–3351.

- Paleologu, C.; Benesty, J.; Ciochină, S. Adaptive filtering for the identification of bilinear forms. Digit. Signal Process. 2018, 75, 153–167.

- Elisei-Iliescu, C.; Stanciu, C.; Paleologu, C.; Benesty, J.; Anghel, C.; Ciochină, S. Efficient recursive least-squares algorithms for the identification of bilinear forms. Digit. Signal Process. 2018, 83, 280–296.

- Dogariu, L.; Paleologu, C.; Ciochină, S.; Benesty, J.; Piantanida, P. Identification of bilinear forms with the Kalman filter. In Proceedings of the 2018 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Calgary, AB, Canada, 15–20 April 2018; pp. 4134–4138.

- Cichocki, A.; Zdunek, R.; Pan, A.H.; Amari, S. Nonnegative Matrix and Tensor Factorizations: Applications to Exploratory Multiway Data Analysis and Blind Source Separation; Wiley: Chichester, UK, 2009.

- Boussé, M.; Debals, O.; de Lathauwer, L. A tensor-based method for large-scale blind source separation using segmentation. IEEE Trans. Signal Process. 2017, 65, 346–358.

- Benesty, J.; Cohen, I.; Chen, J. Array Processing–Kronecker Product Beamforming; Springer: Cham, Switzerland, 2019.

- Ribeiro, L.N.; de Almeida, A.L.F.; Mota, J.C.M. Separable linearly constrained minimum variance beamformers. Signal Process. 2019, 158, 15–25.

- Vasilescu, M.A.O.; Kim, E. Compositional hierarchical tensor factorization: Representing hierarchical intrinsic and extrinsic causal factors. In Proceedings of the ACM SIGKDD Conference on Knowledge Discovery and Data Mining (KDD), Anchorage, AK, USA, 4–8 August 2019.

- Vasilescu, M.A.O.; Kim, E.; Zeng, X.S. CausalX: Causal eXplanations and block multilinear factor analysis. In Proceedings of the 2020 25th International Conference on Pattern Recognition (ICPR), Milan, Italy, 10–15 January 2021.

- Vervliet, N.; Debals, O.; Sorber, L.; de Lathauwer, L. Breaking the curse of dimensionality using decompositions of incomplete tensors: Tensor-based scientific computing in big data analysis. IEEE Signal Process. Mag. 2014, 31, 71–79.

- Cichocki, A.; Mandic, D.; de Lathauwer, L.; Zhou, G.; Zhao, Q.; Caiafa, C.; Phan, A.H. Tensor decompositions for signal processing applications: From two-way to multiway component analysis. IEEE Signal Process. Mag. 2015, 32, 145–163.

- Sidiropoulos, N.; de Lathauwer, L.; Fu, X.; Huang, K.; Papalexakis, E.; Faloutsos, C. Tensor decomposition for signal processing and machine learning. IEEE Trans. Signal Process. 2017, 65, 3551–3582.

- da Costa, M.N.; Favier, G.; Romano, J.M.T. Tensor modelling of MIMO communication systems with performance analysis and Kronecker receivers. Signal Process. 2018, 145, 304–316.

- Dogariu, L.-M.; Stanciu, C.L.; Elisei-Iliescu, C.; Paleologu, C.; Benesty, J.; Ciochină, S. Tensor-based adaptive filtering algorithms. Symmetry 2021, 13, 481.

- Dogariu, L.-M.; Paleologu, C.; Benesty, J.; Stanciu, C.L.; Oprea, C.C.; Ciochină, S. A Kalman filter for multilinear forms and its connection with tensorial adaptive filters. Sensors 2021, 21, 3555.

- Paleologu, C.; Benesty, J.; Ciochină, S. Linear system identification based on a Kronecker product decomposition. IEEE/ACM Trans. Audio Speech Lang. Process. 2018, 26, 1793–1808.

- Elisei-Iliescu, C.; Paleologu, C.; Benesty, J.; Stanciu, C.; Anghel, C.; Ciochină, S. Recursive least-squares algorithms for the identification of low-rank systems. IEEE/ACM Trans. Audio Speech Lang. Process. 2019, 27, 903–918.

- Dogariu, L.-M.; Paleologu, C.; Benesty, J.; Ciochină, S. An efficient Kalman filter for the identification of low-rank systems. Signal Process. 2020, 166, 107239.

- Benesty, J.; Paleologu, C.; Oprea, C.C.; Ciochină, S. An iterative multichannel Wiener filter based on a Kronecker product decomposition. In Proceedings of the 2020 28th European Signal Processing Conference (EUSIPCO), Amsterdam, The Netherlands, 18–21 January 2021; pp. 211–215.

- Elisei-Iliescu, C.; Paleologu, C.; Benesty, J.; Stanciu, C.; Anghel, C.; Ciochină, S. A multichannel recursive least-squares algorithm based on a Kronecker product decomposition. In Proceedings of the 2020 43rd International Conference on Telecommunications and Signal Processing (TSP), Milan, Italy, 7–9 July 2020; pp. 14–18.

- Cohen, I.; Benesty, J.; Chen, J. Differential Kronecker product beamforming. IEEE/ACM Trans. Audio Speech Lang. Process. 2019, 27, 892–902.

- Bhattacharjee, S.S.; Kumar, K.; George, N.V. Nearest Kronecker product decomposition based generalized maximum correntropy and generalized hyperbolic secant robust adaptive filters. IEEE Signal Process. Lett. 2020, 27, 1525–1529.

- Bhattacharjee, S.S.; George, N.V. Nonlinear system identification using exact and approximate improved adaptive exponential functional link networks. IEEE Trans. Circuits Syst. II Express Briefs 2020, 67, 3542–3546.

- Bhattacharjee, S.S.; George, N.V. Fast and efficient acoustic feedback cancellation based on low rank approximation. Signal Process. 2021, 182, 107984.

- Bhattacharjee, S.S.; George, N.V. Nearest Kronecker product decomposition based linear-in-the-parameters nonlinear filters. IEEE/ACM Trans. Audio Speech Lang. Process. 2021. accepted for publication.

- Yang, W.; Huang, G.; Chen, J.; Benesty, J.; Cohen, I.; Kellermann, W. Robust dereverberation with Kronecker product based multichannel linear prediction. IEEE Signal Process. Lett. 2021, 28, 101–105.

- Kuhn, E.V.; Pitz, C.A.; Matsuo, M.V.; Bakri, K.J.; Seara, R.; Benesty, J. A Kronecker product CLMS algorithm for adaptive beamforming. Digit. Signal Process. 2021, 111, 102968.

- He, H.; Chen, J.; Benesty, J.; Yu, Y. Robust recursive least M-estimate adaptive filter for the identification of low-rank acoustic systems. In Proceedings of the ICASSP 2021-2021 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Toronto, ON, Canada, 6–11 June 2021; pp. 940–944.

- Van Loan, C.F. The ubiquitous Kronecker product. J. Comput. Appl. Math. 2000, 123, 85–100.

- Gay, S.L.; Benesty, J. (Eds.) Acoustic Signal Processing for Telecommunication; Kluwer Academic Publisher: Boston, MA, USA, 2000.

- Benesty, J.; Gänsler, T.; Morgan, D.R.; Sondhi, M.M.; Gay, S.L. Advances in Network and Acoustic Echo Cancellation; Springer: Berlin, Germany, 2001.

- Paleologu, C.; Benesty, J.; Ciochină, S. Sparse Adaptive Filters for Echo Cancellation; Morgan & Claypool Publishers: Williston, VT, USA, 2010.

- Liu, J.; Grant, S.L. Proportionate adaptive filtering for block-sparse system identification. IEEE/ACM Trans. Audio Speech Lang. Process. 2016, 24, 623–630.

- Golub, G.H.; van Loan, C.F. Matrix Computations, 3rd ed.; The Johns Hopkins University Press: Baltimore, MD, USA, 1996.

- Gander, W.; Gander, M.J.; Kwok, F. Scientific Computing–An Introduction Using Maple and MATLAB; Springer: Berlin, Germany, 2014.

- Digital Network Echo Cancellers; ITU-T Recommendations G.168; ITU: Geneva, Switzerland, 2002.

- Bertsekas, D.P. Nonlinear Programming, 2nd ed.; Athena Scientific: Belmont, MA, USA, 1999.

- Sondhi, M.M.; Morgan, D.R. Acoustic echo cancellation for stereophonic teleconferencing. In Proceedings of the IEEE Workshop on Applications of Signal Processing to Audio and Acoustics (WASPAA), New Paltz, NY, USA, 20–23 October 1991.

- Sondhi, M.M.; Morgan, D.R.; Hall, J.L. Stereophonic acoustic echo cancellation–An overview of the fundamental problem. IEEE Signal Process. Lett. 1995, 2, 148–151.

- Benesty, J.; Morgan, D.R.; Sondhi, M.M. A better understanding and an improved solution to the specific problems of stereophonic acoustic echo cancellation. IEEE Trans. Speech Audio Process. 1998, 6, 156–165.

- Benesty, J.; Paleologu, C.; Gänsler, T.; Ciochină, S. A Perspective on Stereophonic Acoustic Echo Cancellation; Springer: Berlin, Germany, 2011.

- Moore, B.C.J. An Introduction to the Psychology of Hearing; Academic Press: London, UK, 1989.

- Romoli, L.; Cecchi, S.; Peretti, P.; Piazza, F. A mixed decorrelation approach for stereo acoustic echo cancellation based on the estimation of the fundamental frequency. IEEE Trans. Audio Speech Lang. Process. 2012, 20, 690–698.

- Schneider, M.; Kellermann, W. Multichannel acoustic echo cancellation in the wave domain with increased robustness to nonuniqueness. IEEE/ACM Trans. Audio Speech Lang. Process. 2016, 24, 518–529.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).