Federated Multi-Stage Attention Neural Network for Multi-Label Electricity Scene Classification

Abstract

1. Introduction

- (1) Explicit modeling of label correlation strengths. The paper proposes an approach that explicitly captures varying label correlation strengths in federated multi-label classification, which is crucial for multi-label electricity scene classification. Unlike existing frameworks, the proposed method differentiates between strong and weak correlations (e.g., “no safety helmet ↔ no work clothes” vs. “no safety helmet ↔ smoking”) by constructing masked label correlation strength graphs. This preserves critical dependencies that might otherwise be diluted and thereby improves model performance.

- (2) Multi-stage aggregation to mitigate over-averaging. The paper proposes a multi-stage aggregation process in FMMAN to address the over-averaging caused by conventional single-stage global aggregation. By clustering client models based on parameter similarities and refining them over multiple stages, the model retains region-specific features and adapts to local variations in data and label distributions.
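As a rough illustration of the first contribution, the sketch below estimates pairwise label correlation strengths from co-occurrence counts and masks out the weak edges. The conditional-probability estimator, the threshold `tau`, the function name `masked_correlation_graph`, and the toy samples are all assumptions made for illustration; the construction used in FMMAN (Section 3.2.2) may differ.

```python
def masked_correlation_graph(label_rows, tau=0.5):
    """Return (strength, mask) for a list of binary multi-label vectors.

    strength[i][j] estimates P(label j present | label i present).
    mask[i][j] is 1 where strength[i][j] >= tau (a "strong" edge), else 0.
    The estimator and the threshold are illustrative assumptions.
    """
    num_labels = len(label_rows[0])
    count = [0] * num_labels
    co = [[0] * num_labels for _ in range(num_labels)]
    for row in label_rows:
        active = [k for k, v in enumerate(row) if v]
        for i in active:
            count[i] += 1
            for j in active:
                if i != j:
                    co[i][j] += 1
    strength = [[co[i][j] / count[i] if count[i] else 0.0
                 for j in range(num_labels)] for i in range(num_labels)]
    mask = [[1 if i != j and strength[i][j] >= tau else 0
             for j in range(num_labels)] for i in range(num_labels)]
    return strength, mask


# Toy data (hypothetical); columns: no_helmet, no_work_clothes, smoking.
samples = [
    [1, 1, 0],
    [1, 1, 0],
    [1, 1, 1],
    [1, 0, 0],
    [0, 0, 1],
    [0, 0, 1],
]
strength, mask = masked_correlation_graph(samples, tau=0.5)
print(mask)
```

On this toy data the helmet and work-clothes labels keep their edge in both directions, while every edge involving smoking falls below the threshold and is masked out.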

2. Related Works

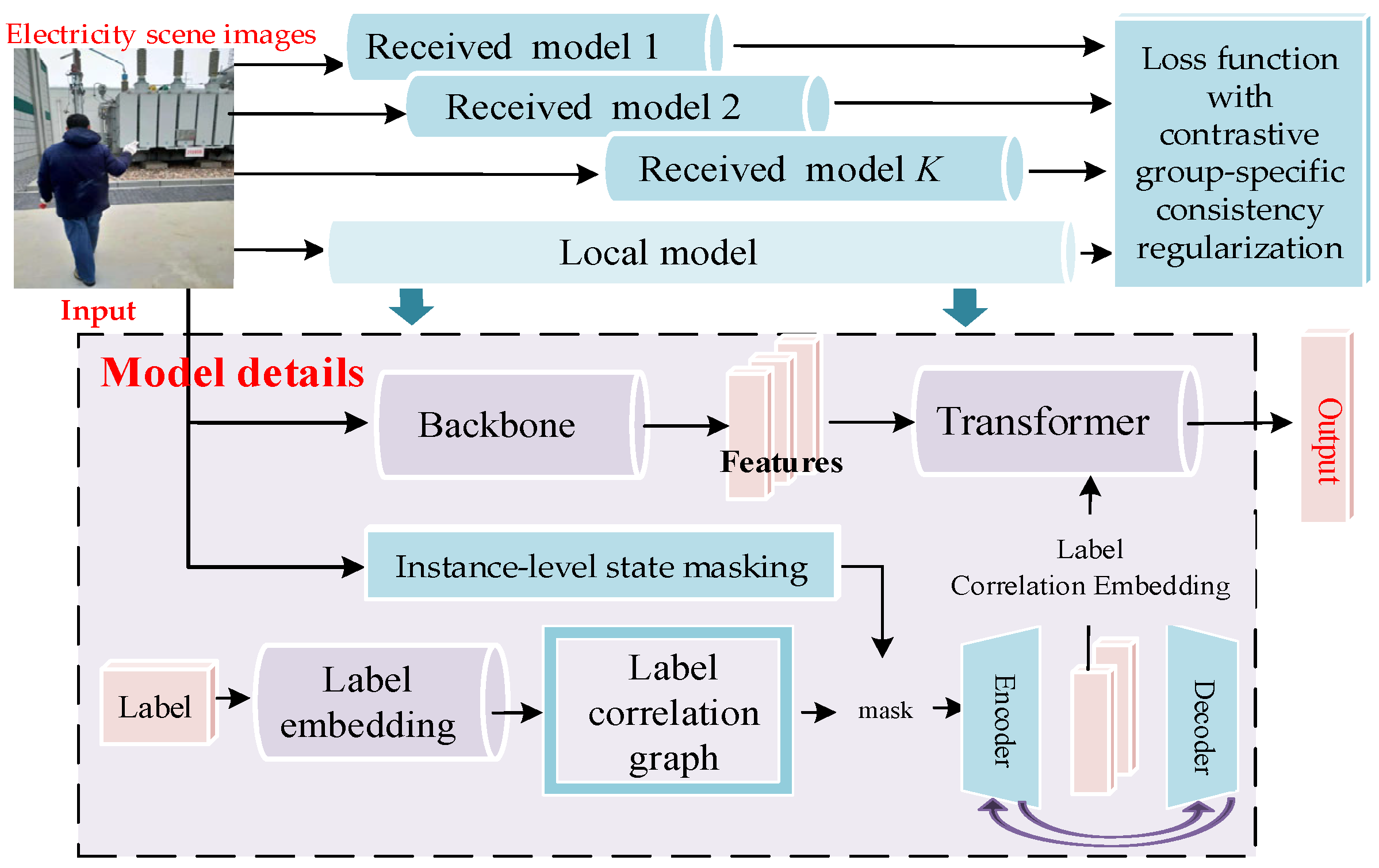

3. Proposed Model

3.1. Federated Learning Problem Description

3.2. The Details of FMMAN on Learning Label Correlation Strengths on the Clients

3.2.1. A State Embedding Module

3.2.2. The Label Embedding Module for Learning the Strength of Label Correlations

3.2.3. Contrastive Group-Specific Consistency Regularization for Alleviating the Over-Averaging

3.3. Multi-Stage Cluster-Driven Global Aggregation with Consistency-Aware Mechanism on Server

- (1) After initialization, each client dynamically constructs label correlation strength graphs and applies contrastive group-specific consistency regularization based on the K received models, following the methods in Section 3.2, to capture both local correlation patterns and global consistency. All N clients then send their local models to the server.

- (2) On the server side, the N received client models are clustered into K clusters (groups) based on their significant parameters.

- (3) These K global models are redistributed to all N clients, where each client trains them locally (using the same client-side learning method) based on Formula (12). This training produces a refined local model that captures different local correlations and data features, and the refined model is then returned to the server.

- (4) Finally, the server aggregates all N models into a unified global model, completing one full interaction cycle. Specifically, the server first calculates the loss value of each client model it receives. Each of the N models is then weighted in the aggregation by the proportion of its loss in the total loss of the N models. This aggregation process is expressed as follows:
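Read literally, the weighting in step (4) gives client i the weight loss_i / (sum of all N losses). The sketch below illustrates the server side of one cycle on flattened parameter vectors: clustering into K groups as in step (2), then loss-weighted aggregation as in step (4). Several parts are assumptions: the text does not say which clustering algorithm is used or how "significant parameters" are selected, so plain k-means on the full vectors stands in for it; the local retraining of step (3) is omitted; and all function names and toy values are hypothetical.

```python
def kmeans(vectors, k, iters=10):
    """Plain k-means on parameter vectors (an assumed clustering rule).

    Deterministic: the first k vectors seed the centroids.
    Returns one cluster index per input vector.
    """
    centroids = [list(v) for v in vectors[:k]]
    assign = [0] * len(vectors)
    for _ in range(iters):
        for n, v in enumerate(vectors):
            dists = [sum((a - b) ** 2 for a, b in zip(v, c)) for c in centroids]
            assign[n] = dists.index(min(dists))
        for c in range(k):
            members = [v for v, a in zip(vectors, assign) if a == c]
            if members:  # keep the old centroid if a cluster is empty
                centroids[c] = [sum(col) / len(members) for col in zip(*members)]
    return assign


def cluster_models(vectors, assign, k):
    """Average the client vectors inside each non-empty cluster."""
    groups = []
    for c in range(k):
        members = [v for v, a in zip(vectors, assign) if a == c]
        if members:
            groups.append([sum(col) / len(members) for col in zip(*members)])
    return groups


def loss_weighted_aggregate(vectors, losses):
    """Combine N vectors with weights loss_i / sum(losses), as step (4) states."""
    total = sum(losses)
    weights = [loss / total for loss in losses]
    dim = len(vectors[0])
    merged = [sum(w * v[d] for w, v in zip(weights, vectors)) for d in range(dim)]
    return merged, weights


# Toy run with N = 4 clients and K = 2 groups (hypothetical values).
clients = [[0.0, 0.0], [10.0, 10.0], [0.2, 0.0], [10.0, 9.8]]
assign = kmeans(clients, k=2)
group_models = cluster_models(clients, assign, k=2)

# In the full procedure the vectors aggregated here would be the refined
# models returned after step (3); the toy vectors are reused for brevity.
losses = [1.0, 3.0, 2.0, 2.0]
merged, weights = loss_weighted_aggregate(clients, losses)
print(assign, weights)
```

Note that under this literal reading a client with a larger loss receives a larger weight in the final aggregation.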

4. Experiments

4.1. Datasets and Parameters Setting

4.2. Comparison of Experiments

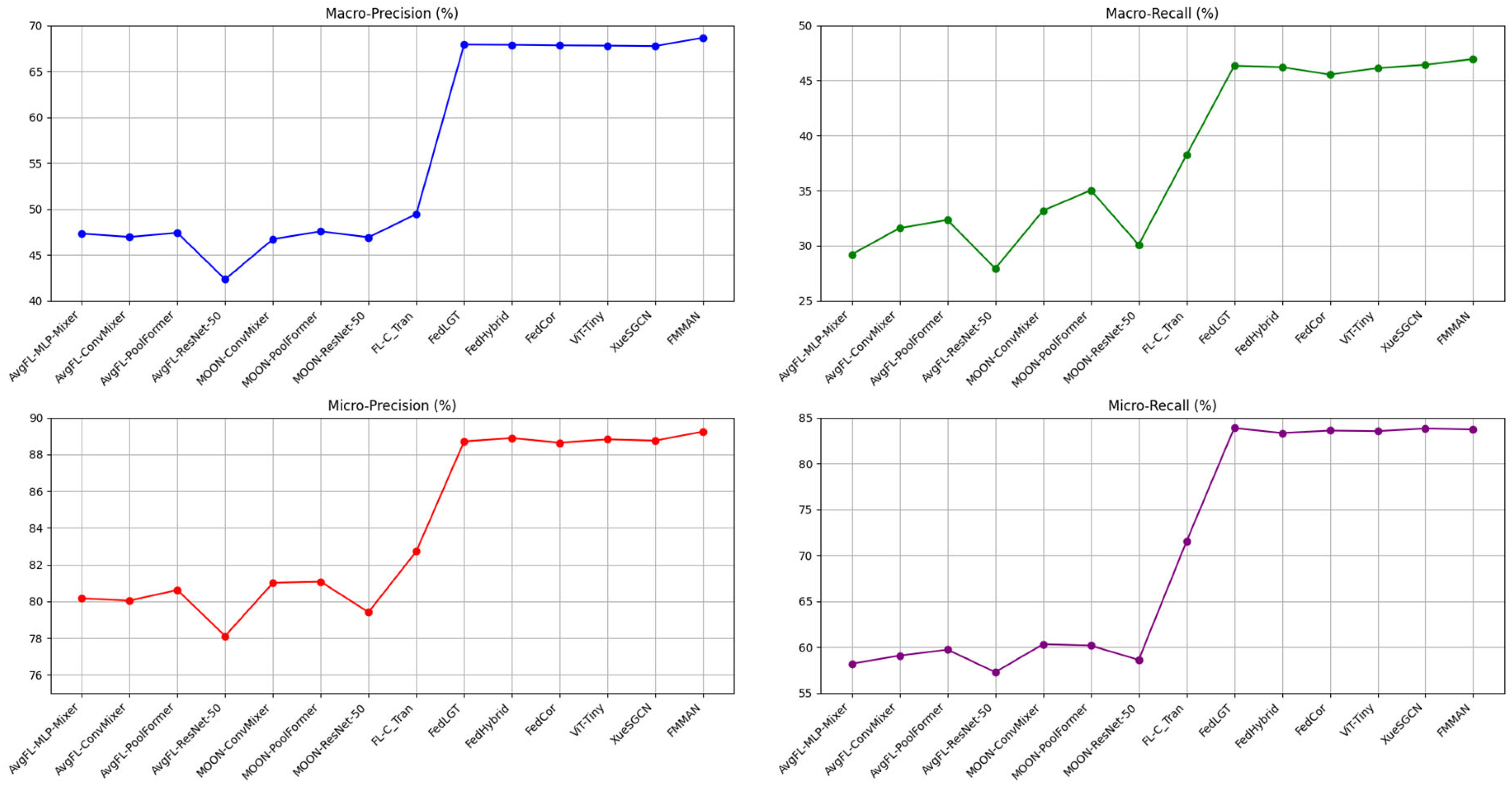

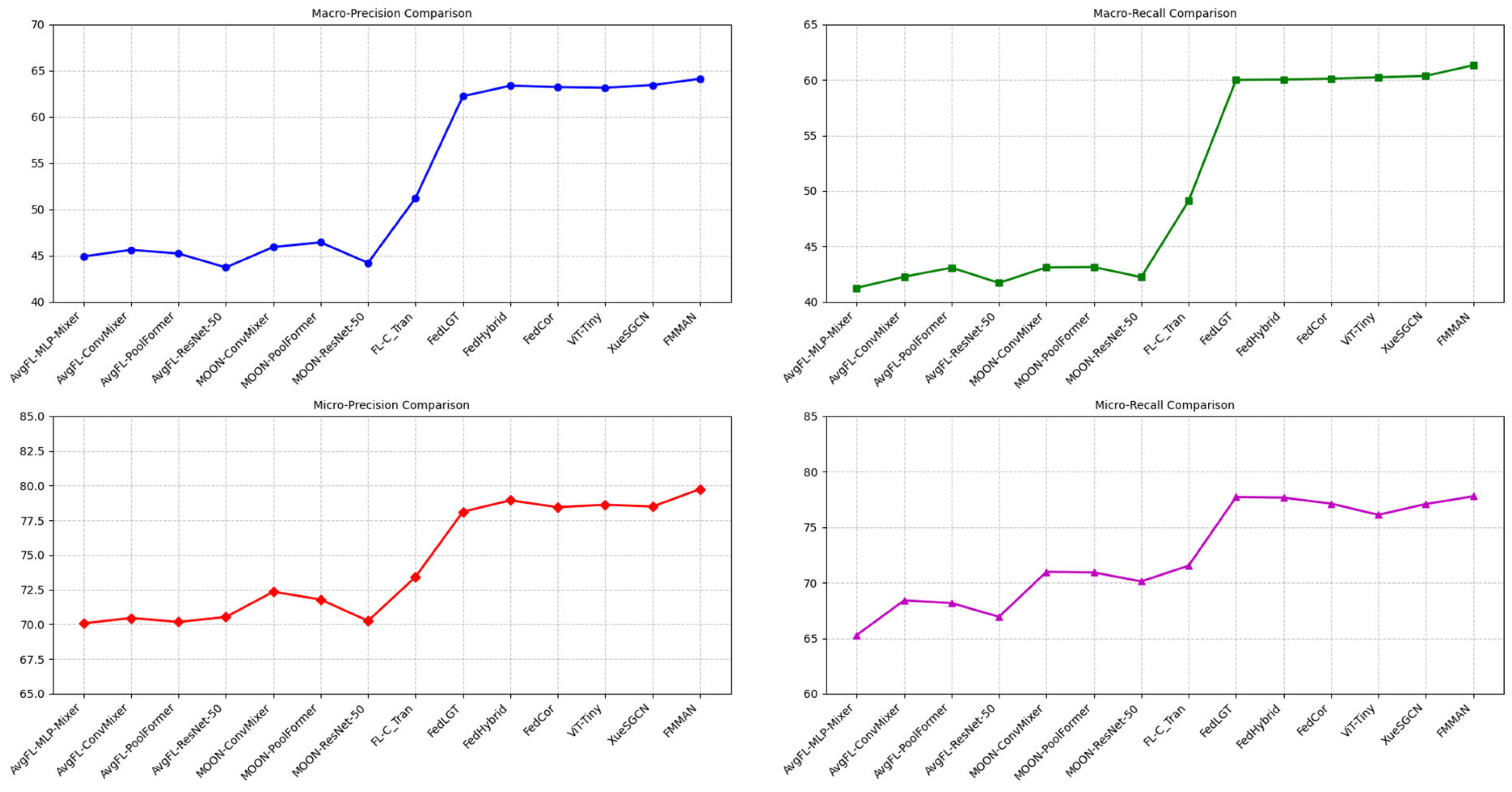

- AvgFL-MLP-Mixer: An AvgFL model using MLP-Mixer in clients for training. MLP-Mixer [22] was introduced as an alternative to CNNs and Vision Transformers (ViT) for computer vision, with the aim of reducing computation time.

- AvgFL-ConvMixer: An AvgFL model using ConvMixer in clients for training. The ConvMixer architecture builds upon the MLP-Mixer by including channel and token mixing mechanisms to process channel and spatial features.

- AvgFL-PoolFormer [2]: An AvgFL model using PoolFormer in clients for training. The PoolFormer architecture replaces the attention-based token mixer with a simple average pooling operation.

- AvgFL-ResNet-50: An AvgFL model using ResNet-50 in clients for training.

- FL-C_Tran [5]: An FL model using C_Tran in clients for training.

- FedCor [23]: Correlation-Based Active Client Selection Strategy for Heterogeneous Federated Learning.

- MOON-ConvMixer: A MOON FL model using ConvMixer in clients for training.

- MOON-PoolFormer [2]: A MOON FL model using PoolFormer in clients for training.

- MOON-ResNet-50: A MOON FL model using ResNet-50 in clients for training.

- FedLGT [7]: FedLGT serves as a customized model update technique that exploits the label correlations at each client.

- FedHybrid [8]: FedHybrid introduces a proximal term that limits gradient updates, helping to improve convergence in the presence of label distribution skew.

- ViT-Tiny [15]: An FL model using ViT-Tiny, a transformer variant with 5.7 million parameters. Its final feature representation has a dimension of 192.

- XueSGCN [16]: Scene-based Graph Convolutional Networks for Federated Multi-Label Classification.
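To make the PoolFormer baseline's token mixer concrete, the following minimal sketch applies average pooling over a single-channel 2D grid of token values. The 3×3 window, stride 1, exclusion of padded positions from the mean, and the subtraction of the input (commonly done because the surrounding block already carries a residual connection) are assumptions about the usual PoolFormer setup, not details stated in this paper. The function name is hypothetical.

```python
def pooling_token_mixer(grid, pool_size=3):
    """Average-pool each position over its pool_size x pool_size neighbourhood
    (stride 1, out-of-range positions excluded from the mean), then subtract
    the input value at that position.
    """
    h, w = len(grid), len(grid[0])
    r = pool_size // 2
    out = [[0.0] * w for _ in range(h)]
    for i in range(h):
        for j in range(w):
            vals = [grid[a][b]
                    for a in range(max(0, i - r), min(h, i + r + 1))
                    for b in range(max(0, j - r), min(w, j + r + 1))]
            out[i][j] = sum(vals) / len(vals) - grid[i][j]
    return out


# Toy 3x3 token grid (hypothetical values).
tokens = [
    [1.0, 2.0, 3.0],
    [4.0, 5.0, 6.0],
    [7.0, 8.0, 9.0],
]
mixed = pooling_token_mixer(tokens)
print(mixed[0][0], mixed[1][1], mixed[2][2])
```

The mixer has no learnable parameters, which is what distinguishes it from an attention-based token mixer.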

4.2.1. Comparison of Experiments on FLAIR Dataset and FL-PASCAL VOC

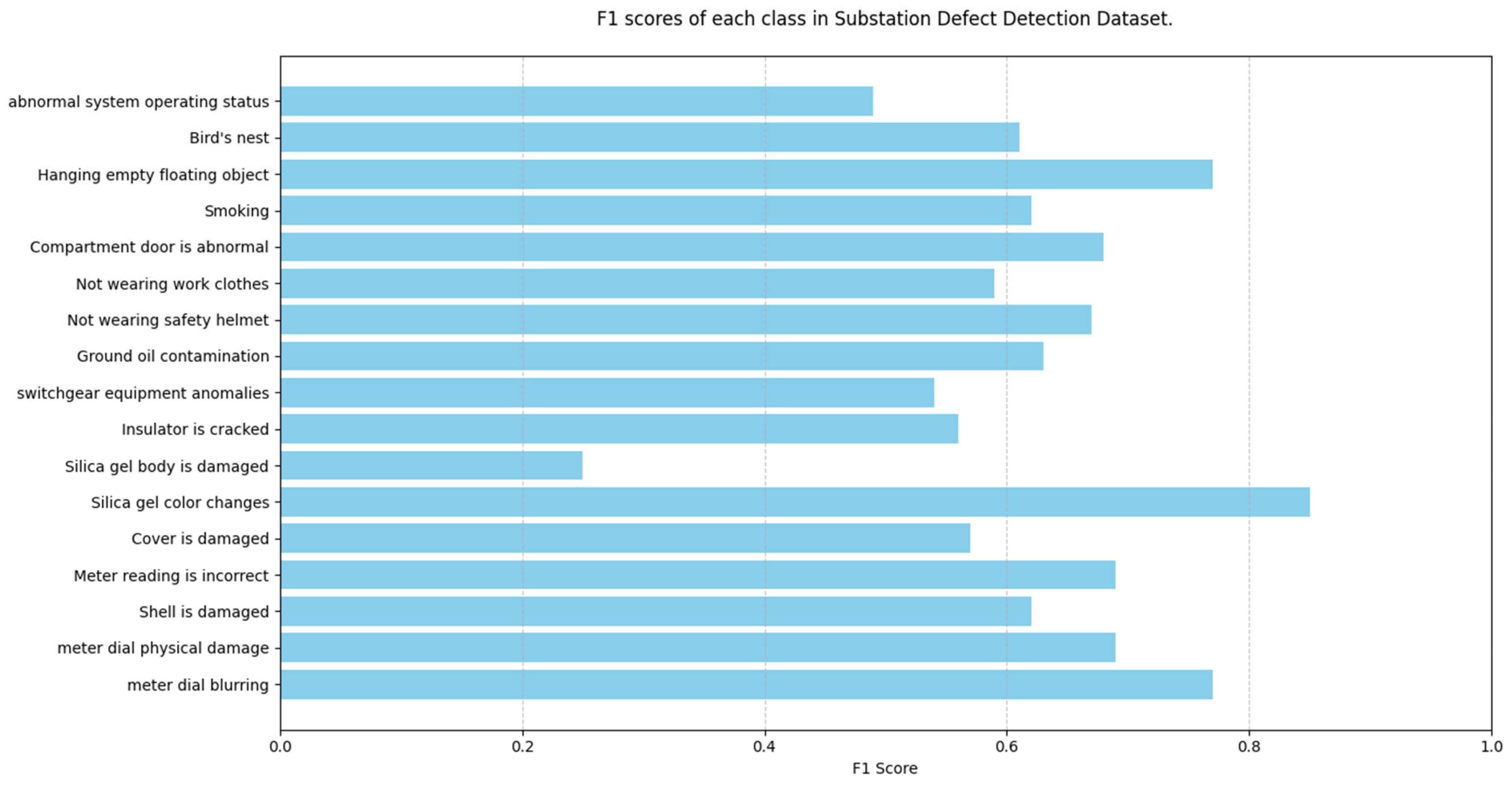

4.2.2. Comparison of Experiments on Substation Defect Detection Dataset

4.2.3. Comparison of Experiments on Substation Control Cabinet Condition Monitoring Dataset

4.3. Discussion

4.4. Ablation Experiments

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- McMahan, B.; Moore, E.; Ramage, D.; Hampson, S.; y Arcas, B.A. Communication-efficient learning of deep networks from decentralized data. In Proceedings of the 20th International Conference on Artificial Intelligence and Statistics, Fort Lauderdale, FL, USA, 20–22 April 2017; pp. 1273–1282.

- Büyüktaş, B.; Weitzel, K.; Völkers, S.; Zailskas, F.; Demir, B. Transformer-based Federated Learning for Multi-Label Remote Sensing Image Classification. arXiv 2024, arXiv:2405.15405.

- Zhang, M.L.; Zhou, Z.H. A review on multi-label learning algorithms. IEEE Trans. Knowl. Data Eng. 2013, 26, 1819–1837.

- Zhang, J.; Wei, T.; Zhang, M.L. Label-specific time-frequency energy-based neural network for instrument recognition. IEEE Trans. Cybern. 2024, 54, 7080–7093.

- Hang, J.Y.; Zhang, M.L. Collaborative learning of label semantics and deep label-specific features for multi-label classification. IEEE Trans. Pattern Anal. Mach. Intell. 2021, 44, 9860–9871.

- Lanchantin, J.; Wang, T.; Ordonez, V.; Qi, Y. General multi-label image classification with transformers. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, TN, USA, 20–25 June 2021; pp. 16478–16488.

- Liu, I.J.; Lin, C.S.; Yang, F.E.; Wang, Y.C.F. Language-Guided Transformer for Federated Multi-Label Classification. AAAI Conf. Artif. Intell. 2024, 38, 13882–13890.

- Niu, X.; Wei, E. FedHybrid: A hybrid federated optimization method for heterogeneous clients. IEEE Trans. Signal Process. 2023, 71, 150–163.

- Huang, X.; Li, P.; Li, X. Stochastic controlled averaging for federated learning with communication compression. arXiv 2024, arXiv:2308.08165.

- Gao, L.; Fu, H.; Li, L.; Chen, Y.; Xu, M.; Xu, C.Z. FedDC: Federated learning with non-iid data via local drift decoupling and correction. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), New Orleans, LA, USA, 18–24 June 2022; pp. 10112–10121.

- Li, J.; Zhang, C.; Zhou, J.T.; Fu, H.; Xia, S.; Hu, Q. Deep-LIFT: Deep label-specific feature learning for image annotation. IEEE Trans. Cybern. 2021, 52, 7732–7741.

- Yu, Z.B.; Zhang, M.L. Multi-label classification with label-specific feature generation: A wrapped approach. IEEE Trans. Pattern Anal. Mach. Intell. 2022, 44, 5199–5210.

- Jia, B.B.; Zhang, M.L. Multi-dimensional multi-label classification: Towards encompassing heterogeneous label spaces and multi-label annotations. Pattern Recognit. 2023, 138, 109357.

- Guan, H.; Yap, P.T.; Bozoki, A.; Liu, M. Federated learning for medical image analysis: A survey. Pattern Recognit. 2024, 151, 110424.

- Ben Youssef, B.; Alhmidi, L.; Bazi, Y.; Zuair, M. Federated Learning Approach for Remote Sensing Scene Classification. Remote Sens. 2024, 16, 2194.

- Xue, S.; Luo, W.; Luo, Y.; Yin, Z.; Gu, J. Scene-based Graph Convolutional Networks for Federated Multi-Label Classification. In Proceedings of the 2024 International Joint Conference on Neural Networks (IJCNN), Yokohama, Japan, 30 June–5 July 2024; pp. 1–6.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 26 June–1 July 2016.

- Radford, A. Learning transferable visual models from natural language supervision. In Proceedings of the 38th International Conference on Machine Learning, Virtual, 18–24 July 2021.

- Song, C.; Granqvist, F.; Talwar, K. FLAIR: Federated Learning Annotated Image Repository. arXiv 2022, arXiv:2207.08869.

- Hoiem, D.; Divvala, S.K.; Hays, J.H. Pascal VOC 2008 challenge. World Lit. Today 2009, 24, 1–4.

- Li, Q.; He, B.; Song, D. Model-contrastive federated learning. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, TN, USA, 20–25 June 2021; pp. 10713–10722.

- Tolstikhin, I.O.; Houlsby, N.; Kolesnikov, A.; Beyer, L.; Zhai, X.; Unterthiner, T.; Yung, J.; Steiner, A.; Keysers, D.; Uszkoreit, J.; et al. MLP-Mixer: An all-MLP architecture for vision. Adv. Neural Inf. Process. Syst. 2021, 34, 24261–24272.

- Tang, M.; Ning, X.; Wang, Y.; Sun, J.; Wang, Y.; Li, H.; Chen, Y. FedCor: Correlation-based active client selection strategy for heterogeneous federated learning. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), New Orleans, LA, USA, 18–24 June 2022; pp. 10102–10111.

| Model | Macro-AP | Macro-F1 | Micro-AP | Micro-F1 |
|---|---|---|---|---|
| AvgFL-MLP-Mixer | 40.91% | 36.09% | 77.33% | 67.44% |
| AvgFL-ConvMixer | 41.46% | 37.31% | 78.71% | 67.98% |
| AvgFL-PoolFormer | 42.62% | 38.22% | 79.16% | 68.63% |
| AvgFL-ResNet-50 | 40.13% | 33.51% | 77.12% | 66.09% |
| MOON-ConvMixer | 41.91% | 38.71% | 79.20% | 69.15% |
| MOON-PoolFormer | 42.90% | 40.03% | 79.59% | 69.08% |
| MOON-ResNet-50 | 43.32% | 36.53% | 77.34% | 67.45% |
| FL-C_Tran | 56.00% | 43.02% | 88.15% | 76.71% |
| FedLGT | 60.60% | 54.94% | 88.72% | 86.23% |
| FedHybrid | 61.03% | 54.93% | 88.43% | 86.17% |
| FedCor | 61.33% | 54.07% | 87.62% | 86.10% |
| ViT-Tiny | 60.72% | 53.87% | 87.67% | 86.10% |
| XueSGCN | 61.13% | 54.98% | 87.99% | 86.22% |
| FMMAN | 62.15% | 55.58% | 88.94% | 86.71% |

| Model | Macro-AP | Macro-F1 | Micro-AP | Micro-F1 |
|---|---|---|---|---|
| AvgFL-ConvMixer | 86.93% | 80.19% | 91.41% | 84.21% |
| AvgFL-PoolFormer | 86.94% | 81.34% | 91.62% | 85.24% |
| AvgFL-ResNet-50 | 87.77% | 82.53% | 91.74% | 85.26% |
| MOON-ConvMixer | 86.77% | 81.03% | 91.43% | 84.33% |
| MOON-PoolFormer | 87.85% | 81.28% | 91.71% | 86.10% |
| MOON-ResNet-50 | 88.39% | 81.32% | 91.45% | 84.51% |
| FL-C_Tran | 89.01% | 84.13% | 91.72% | 87.46% |
| FedLGT | 89.72% | 84.25% | 91.79% | 88.43% |
| FedCor | 89.73% | 84.87% | 93.02% | 87.77% |
| ViT-Tiny | 90.02% | 85.37% | 93.13% | 88.18% |
| XueSGCN | 89.11% | 85.02% | 92.79% | 88.25% |
| FMMAN | 91.66% | 86.95% | 94.37% | 89.70% |

| Model | Macro-AP | Macro-F1 | Micro-AP | Micro-F1 |
|---|---|---|---|---|
| AvgFL-MLP-Mixer | 50.02% | 43.01% | 70.90% | 67.60% |
| AvgFL-ConvMixer | 50.05% | 43.18% | 71.01% | 69.41% |
| AvgFL-PoolFormer | 50.33% | 44.02% | 71.50% | 69.16% |
| AvgFL-ResNet-50 | 49.19% | 42.26% | 70.08% | 68.69% |
| MOON-ConvMixer | 51.19% | 44.12% | 72.94% | 71.66% |
| MOON-PoolFormer | 50.27% | 44.21% | 72.32% | 71.36% |
| MOON-ResNet-50 | 49.28% | 43.07% | 70.22% | 70.19% |
| FL-C_Tran | 58.47% | 50.02% | 73.23% | 72.47% |
| FedLGT | 65.23% | 60.98% | 79.72% | 77.92% |
| FedHybrid | 65.44% | 61.08% | 79.44% | 78.02% |
| FedCor | 65.23% | 61.56% | 79.58% | 77.78% |
| ViT-Tiny | 66.04% | 61.72% | 79.23% | 77.34% |
| XueSGCN | 66.13% | 61.88% | 79.67% | 77.79% |
| FMMAN | 67.14% | 62.33% | 80.26% | 78.76% |

| Model | Macro-AP | Macro-F1 | Micro-AP | Micro-F1 |
|---|---|---|---|---|
| AvgFL-MLP-Mixer | 62.76% | 59.04% | 70.17% | 69.93% |
| AvgFL-ConvMixer | 63.34% | 60.23% | 70.39% | 71.32% |
| AvgFL-PoolFormer | 63.88% | 60.43% | 71.72% | 71.28% |
| AvgFL-ResNet-50 | 63.27% | 60.09% | 70.93% | 70.77% |
| MOON-ConvMixer | 64.06% | 60.34% | 72.66% | 71.31% |
| MOON-PoolFormer | 63.25% | 60.37% | 71.74% | 71.42% |
| MOON-ResNet-50 | 63.73% | 60.21% | 70.78% | 70.29% |
| FL-C_Tran | 66.97% | 61.09% | 73.99% | 72.67% |
| FedLGT | 67.14% | 63.36% | 74.37% | 72.71% |
| FedCor | 67.39% | 63.36% | 74.78% | 72.70% |
| ViT-Tiny | 67.44% | 63.72% | 74.28% | 73.06% |
| XueSGCN | 67.68% | 63.77% | 74.94% | 72.98% |
| FMMAN | 68.33% | 64.37% | 75.81% | 73.73% |

| Model | Macro-AP | Macro-F1 | Micro-AP | Micro-F1 |
|---|---|---|---|---|
| FMMAN_without_graph | 66.87% | 61.19% | 79.93% | 78.41% |
| FMMAN_without_mask | 65.41% | 60.66% | 79.72% | 78.40% |
| FedLGT | 65.23% | 60.98% | 79.72% | 77.92% |
| FedLGT-MS | 65.73% | 61.25% | 79.83% | 78.32% |
| FMMAN_without_contrastive | 67.04% | 61.97% | 79.76% | 78.53% |
| FMMAN | 67.14% | 62.33% | 80.26% | 78.76% |

| Model | Macro-AP | Macro-F1 | Micro-AP | Micro-F1 |
|---|---|---|---|---|
| FedLGT | 65.23% | 60.98% | 79.72% | 77.92% |
| FMMAN_without | 66.55% | 61.26% | 79.77% | 70.08% |
| FMMAN | 67.14% | 62.33% | 80.26% | 78.76% |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Zhong, L.; Jiang, X.; Xu, J.; Zheng, K.; Wu, M.; Gao, L.; Ma, C.; Zhu, D.; Ai, Y. Federated Multi-Stage Attention Neural Network for Multi-Label Electricity Scene Classification. J. Low Power Electron. Appl. 2025, 15, 46. https://doi.org/10.3390/jlpea15030046
