Abstract

Wireless sensor systems powered by batteries are widely used in a variety of applications. For applications with space limitations, the systems are made smaller, which limits battery energy capacity and memory storage size. A multi-exit neural network helps overcome these limitations by filtering out data without objects of interest, thereby avoiding computation of the entire neural network. This paper proposes implementing a multi-exit convolutional neural network on the ESP32-CAM embedded platform as an image-sensing system with an energy constraint. The multi-exit design saves energy by 42.7% compared with the single-exit condition. A simulation result, based on an exemplary natural outdoor light profile and measured energy consumption of the proposed system, shows that the system can sustain its operation with a 3.2 kJ (275 mAh @ 3.2 V) battery while sacrificing accuracy by only 2.7%.

1. Introduction

Dramatic advances in computation require an increasing amount of data to analyze. Sensors have become essential devices for collecting data from the physical world. Electronic sensing systems have been utilized in a variety of applications, including biomedical observation, civil engineering monitoring, and energy resource detection [1,2,3,4,5,6,7,8]. Sensors have shrunk to fit into a greater number of applications, and they employ batteries to power themselves without an external power connection for easier placement [9,10,11,12,13,14,15,16,17]. As an example, three AAA-sized batteries with an energy capacity of 2400 mAh can continuously power a wireless sensor for 2.4 months [17]. Three approaches are commonly used to maximize system lifetime for a given battery capacity. First, a sensing system uses a duty-cycled operation between active and sleep modes [14,16]. This reduces the total energy consumption, or equivalently the average power consumption, by keeping the system in a low-power sleep mode for long periods during which it does not need to be fully operational. Second, low-power circuits are developed for both active and sleep modes [18,19,20,21,22,23,24,25]. Lastly, the system includes an energy harvester to recharge the connected battery using environmental energy [17]. However, the harvested power is typically lower than the power consumption in active mode.

Images are among the most popular types of data for analyzing a target. Low-power wireless image systems have been studied to operate for an extended time at a given battery capacity [25]. As with other data (e.g., acceleration), the system faces a trade-off between sampling frequency and power consumption/data storage size. A lower sampling frequency reduces power consumption and data storage size but increases the chance of missing important data. To reduce data storage size even at a high sampling rate, image-based sensors have recently employed machine learning algorithms to evaluate whether images include objects of interest [26], so that only the useful images are stored in memory. For image recognition, a convolutional neural network (CNN) is widely used [27]. It generates a classification label by convolving image data with trained weights through multiple layers. The label indicates the type of object in the original image. However, a CNN requires heavy computation (millions of multiply–accumulate (MAC) operations [27]) and thus high power consumption, which is critical for a battery-powered image system with energy harvesting.

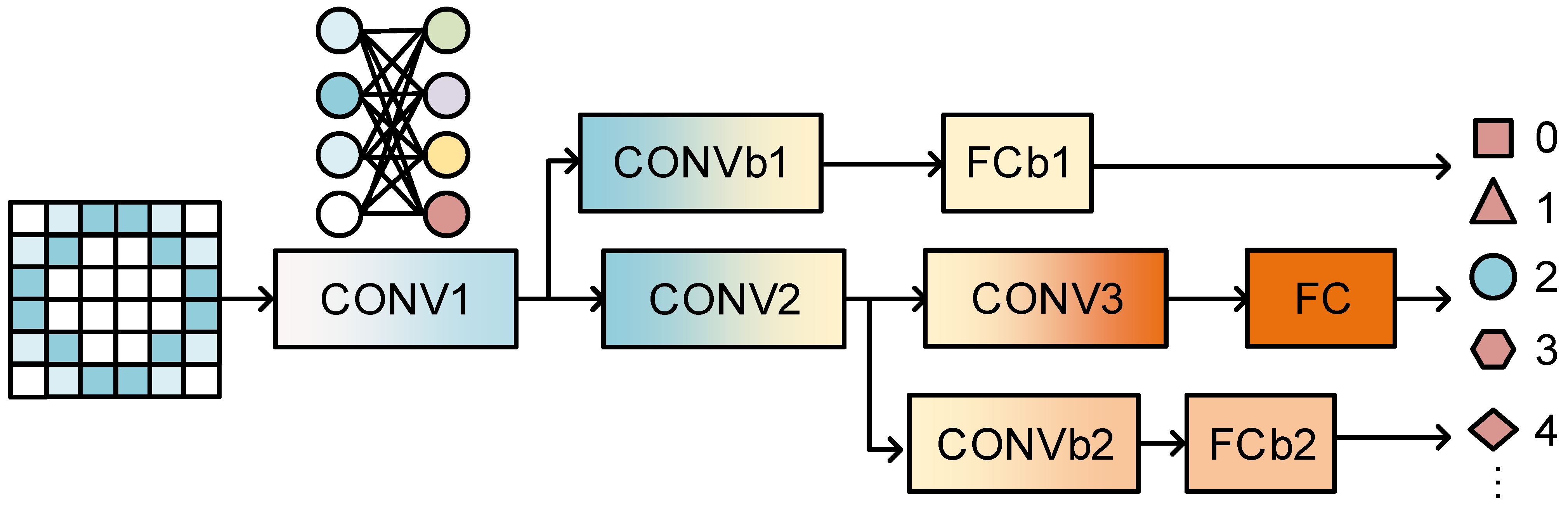

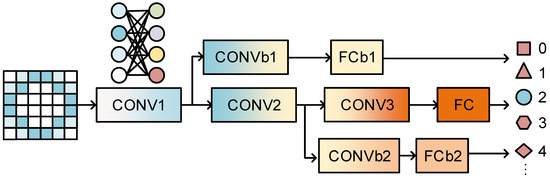

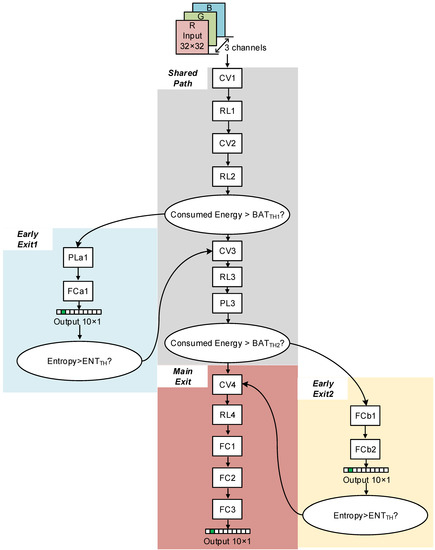

There has been continuous effort to reduce energy consumption in neural network computation. To overcome energy shortage in intermittent computing systems with energy harvesting, software compression and hardware acceleration have been proposed for embedded systems [28]. However, this approach is not suitable for tasks that require instant outcomes, since it completes one inference over multiple energy cycles. More recently, multi-exit CNNs were proposed [27,29], as shown in Figure 1. A multi-exit CNN has multiple paths that generate labels from an image. Each path has a different depth and thus a different time cost. A shorter path provides lower accuracy, but it uses the entropy of an inference result to check its confidence. This approach has been applied to millimeter-scale systems with energy harvesting and a µAh-level battery [30], but the inference takes up to 4.1 min. Zeng et al. [31] apply a multi-exit CNN to the industrial internet of things to satisfy various timing requirements for real-time processing, but power reduction was not a primary consideration.

Figure 1.

Example of a multi-exit CNN.

In this paper, we propose an image-based inferencing system operating on a commercial ESP32-CAM microcontroller. Periodically, the system takes an image with a connected camera, runs a three-exit CNN on the image, and obtains a label. The three exits achieve accuracies of 60.5%, 70% and 76% on the CIFAR-10 dataset. The system detects the battery energy level with its built-in ADC at each branch and selects the path accordingly. At the end of the two early exits, it calculates the entropy to decide whether a deeper path is necessary. The system reduces energy consumption by 42.54% at a 240 MHz clock frequency. A simulation, based on an exemplary natural outdoor light profile [17] and the measured energy consumption of the proposed system, achieves an accuracy of 72.1% with an entropy threshold of 1.9 and a battery energy threshold of 1500 J. It requires a battery energy of 3.2 kJ, which can be supported by a battery with a size of 43 mm × 14 mm × 14 mm [32].

The rest of this article is arranged as follows. Section 2 introduces a target system. Section 3 proposes the CNN architecture, and Section 4 shows the hardware platform. Section 5 and Section 6 show measurement results and evaluation results for long-term use, respectively. Finally, Section 7 concludes this paper.

2. Target System

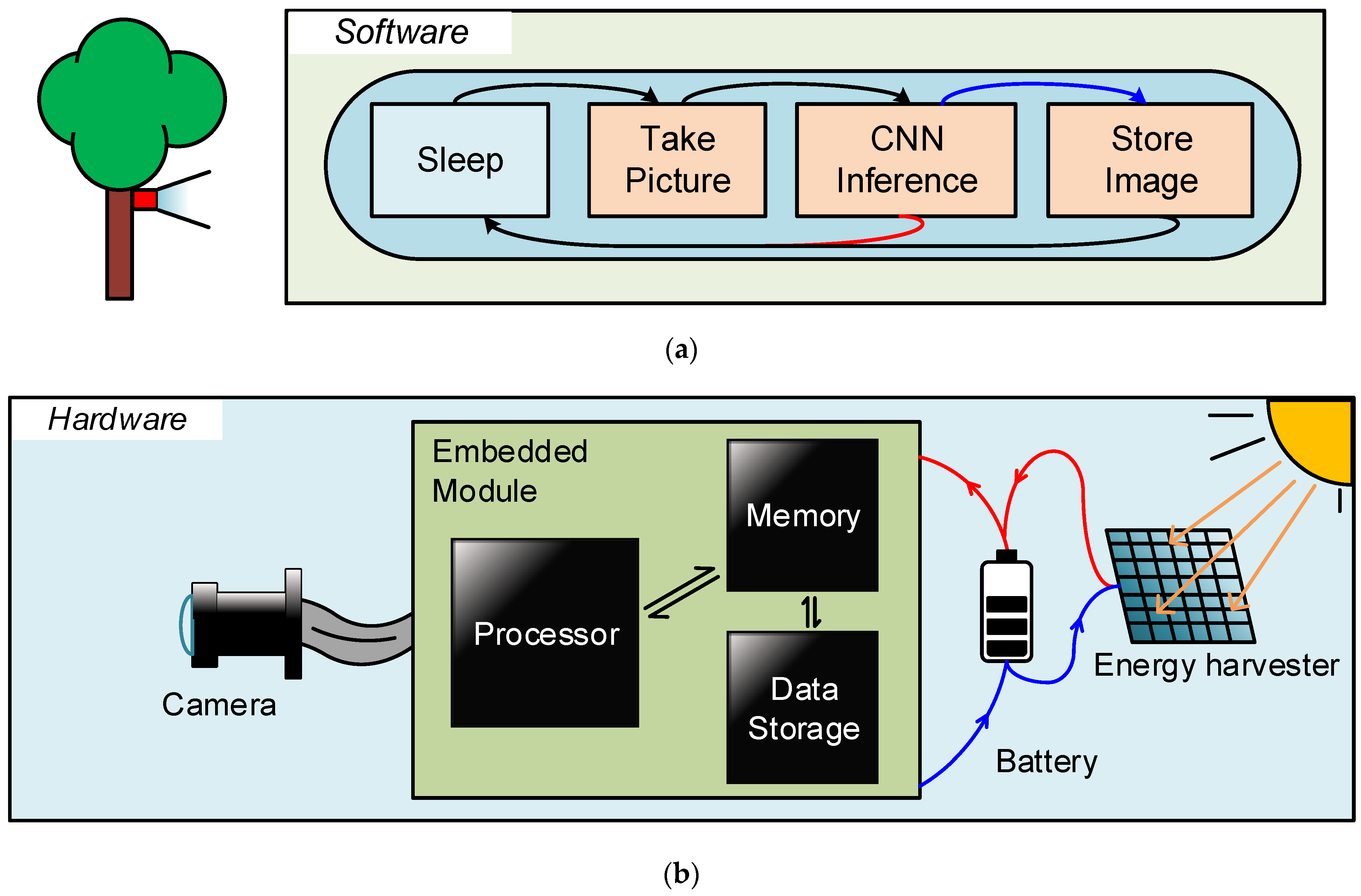

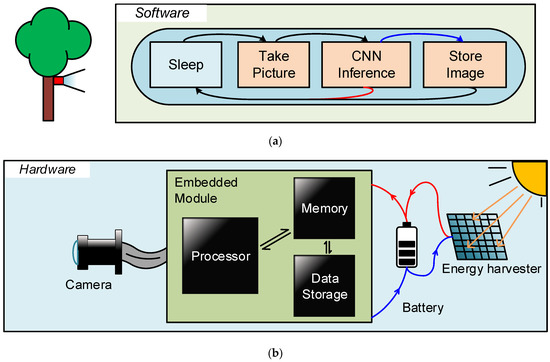

We target an image-based system that can be attached to a static location (e.g., a tree) and monitor objects. Figure 2 shows the software and hardware diagram of the target system. To detect specific objects (e.g., wild animals), the system alternates between active and sleep modes, as shown in Figure 2a. Once a timer switches the system from sleep to active mode, the system takes an image using a camera and categorizes its contents with a multi-exit CNN. If the captured image contains an object of interest, the system stores the picture in data storage. Otherwise, it goes back to sleep mode without storing the image. Figure 2b shows the hardware structure of the targeted system. A microprocessor controls the mode transition between active and sleep modes, image capture and processing, and data transfer. Data storage such as an SD card stores the selected images and the coefficients of the CNN. The entire system is powered by a battery. An energy harvester (e.g., a solar panel) recharges the battery to extend system lifetime.
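The operation flow of Figure 2a can be sketched as a single wake-up routine. This is only an illustrative sketch: `capture_image`, `classify`, `store_image` and `labels_of_interest` are hypothetical placeholders, not names from the authors' firmware.

```python
from typing import Callable, Optional, Sequence


def wake_cycle(
    capture_image: Callable[[], bytes],
    classify: Callable[[bytes], Optional[str]],
    store_image: Callable[[bytes, str], None],
    labels_of_interest: Sequence[str],
) -> bool:
    """Run one active period and return True if the image was stored.

    All callables are hypothetical stand-ins for the camera driver,
    the multi-exit CNN and the SD-card writer.
    """
    image = capture_image()          # take a picture after the timer wake-up
    label = classify(image)          # multi-exit CNN inference
    if label is not None and label in labels_of_interest:
        store_image(image, label)    # keep only images with a target object
        return True
    return False                     # otherwise return to sleep without storing
```

After the routine returns, the caller would put the system back into sleep mode until the next timer event.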

Figure 2.

Diagram of software and hardware for the targeted system: (a) software operation flow, and (b) hardware structure.

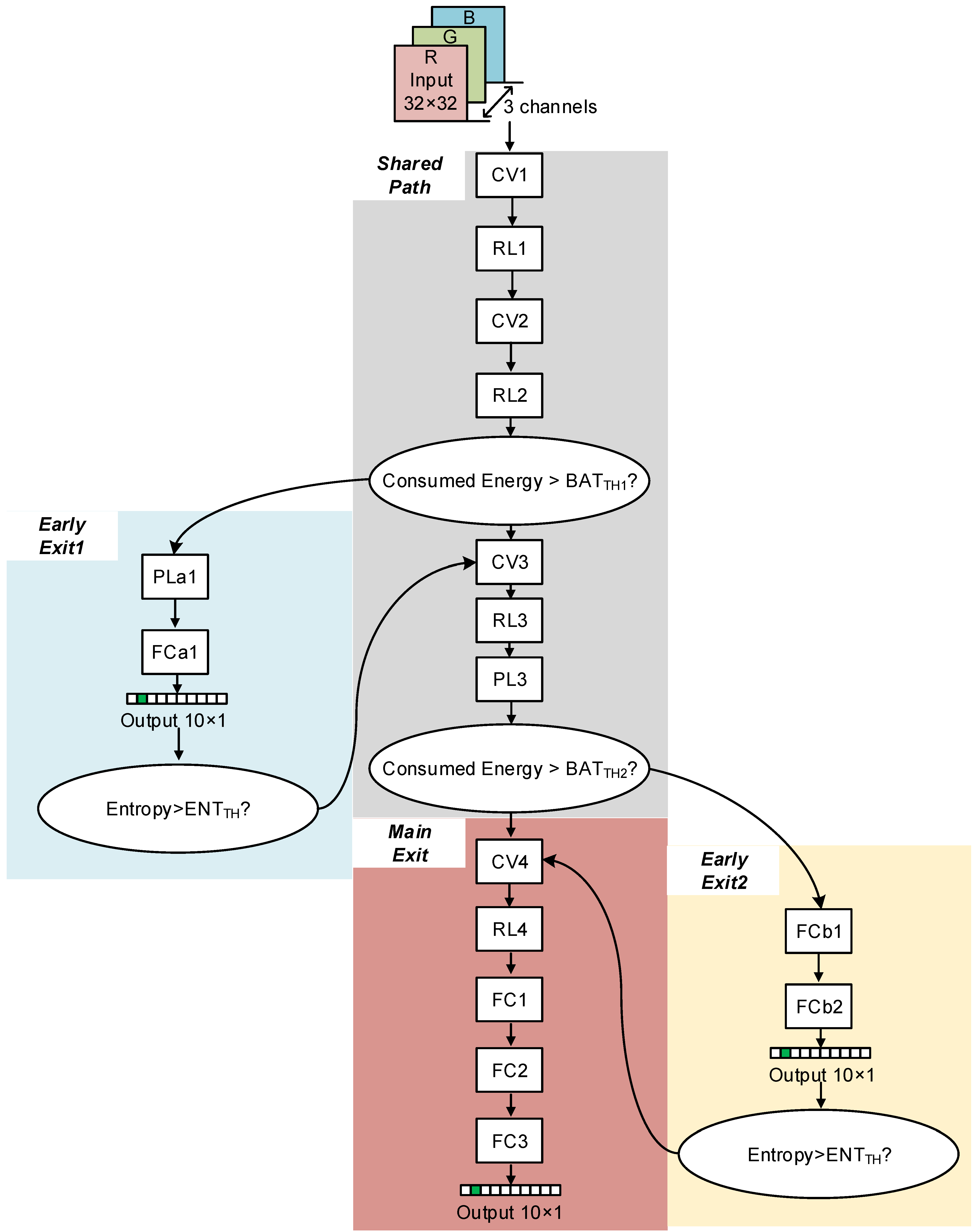

3. Proposed Multi-Exit CNN

Figure 3 shows the proposed multi-exit CNN. We added more layers to the model of [27] and modified some of its parameters for higher accuracy. It processes images with 3 input channels (red, green, and blue). Each channel has a resolution of 32 × 32 and an 8-bit color depth, which is identical to the CIFAR-10 dataset. Each convolution layer (CVx) convolves the input feature map with several filters and generates the output feature map. A ReLU function (RLx) activates the output feature map by replacing negative values with 0. Some ReLU layers are followed by max pooling (PLx). At the end of each exit, fully connected layers (FCx) generate the 10 final outputs. The CNN has 3 exits, marked as early exit1, early exit2 and main exit. At the two branching points (after RL2 and after PL3), the system measures the battery voltage and determines the consumed battery energy. If the consumed energy is higher than a threshold (BATTHx), the narrower path is selected (PLa1 or PCb1). The model is trained with the 3 exits together at equal importance, minimizing the average loss of the 3 exits. At the output of each of the two early exits, the system checks the entropy of the outputs as a confidence level. If the entropy is larger than a threshold value (ENTTH), the result is more likely to be unreliable, and the CNN returns the processing to a deeper path (CV3 or CV4). The entropy is calculated as:
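As a hedged illustration of the two decisions described above, the sketch below assumes the standard Shannon entropy over the 10 normalized exit outputs, H(y) = −Σ y_c log y_c. The base-2 logarithm is an assumption, chosen because ENTTH values up to 3.1 are discussed later and a 10-class entropy in base 2 peaks near 3.32. The function names are hypothetical.

```python
import math
from typing import Sequence


def entropy(probs: Sequence[float], base: float = 2.0) -> float:
    """Shannon entropy of a probability vector; zero entries contribute 0.

    The logarithm base is an assumption, not stated in the text.
    """
    return -sum(p * math.log(p, base) for p in probs if p > 0.0)


def accept_early_exit(probs: Sequence[float], ent_th: float) -> bool:
    """Accept the early-exit label when the entropy does not exceed ENTTH."""
    return entropy(probs) <= ent_th


def use_narrow_path(consumed_energy_j: float, bat_th_j: float) -> bool:
    """Select the narrower path when consumed energy exceeds BATTHx."""
    return consumed_energy_j > bat_th_j
```

A uniform output over 10 classes gives the maximum entropy (no confidence), while a one-hot output gives zero entropy (full confidence).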

Figure 3.

Proposed multi-exit CNN diagram.

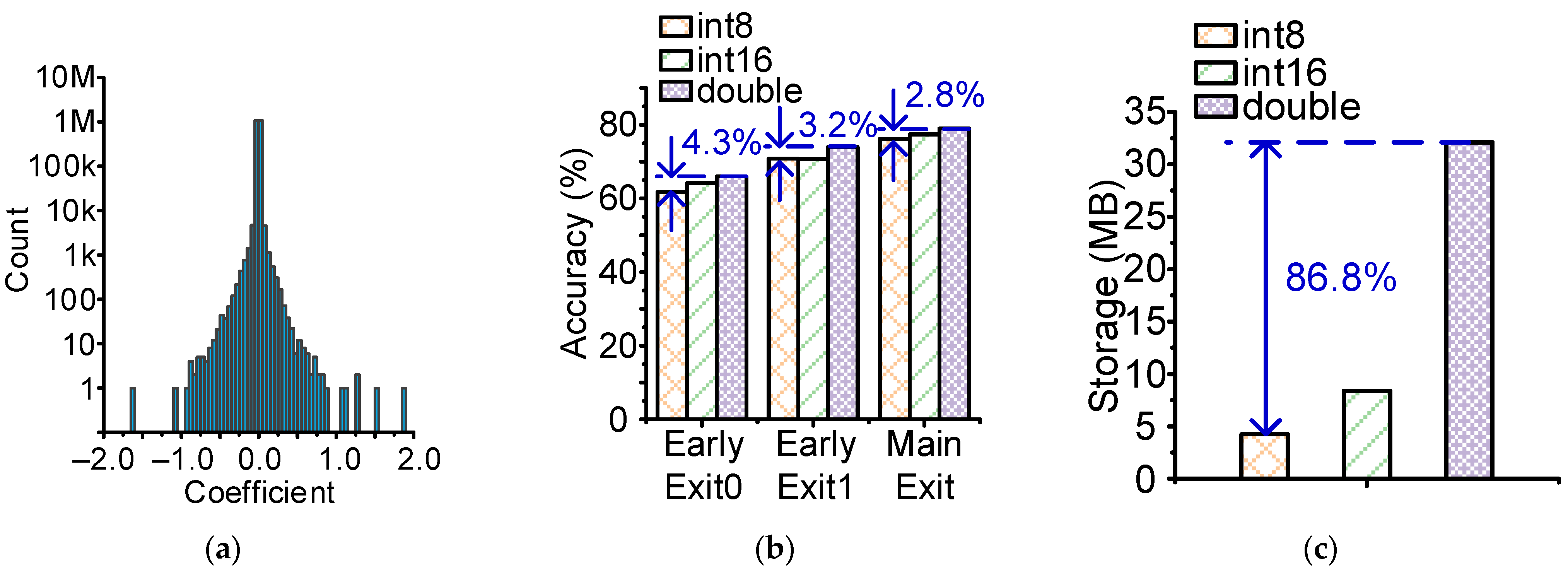

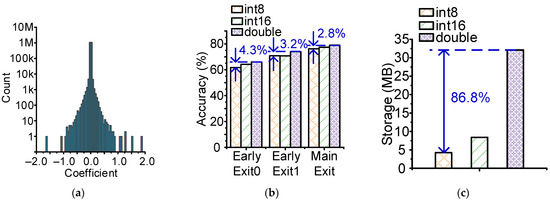

For energy efficiency, the system employs fixed-point data and coefficients. Figure 4a shows the distribution of the coefficients. The coefficients are mainly distributed between −1.0 and 1.0, implying that the fractional part is more important than the integer part. Figure 4b,c shows the accuracy and the coefficient storage size, respectively, for 8-bit, 16-bit and double-type data. Compared with the double-type data, using the 8-bit integer format reduces the storage size by 86.8% at the cost of an accuracy drop of 4.3%.
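To illustrate how coefficients concentrated in [−1.0, 1.0] can be stored in 8 bits, the sketch below assumes a signed fixed-point format with 7 fractional bits. The bit allocation is an assumption for illustration; the text does not state the format used on the ESP32-CAM.

```python
def quantize_q7(x: float, frac_bits: int = 7) -> int:
    """Map a real coefficient to a signed 8-bit fixed-point integer.

    frac_bits = 7 is an assumed split (1 sign bit, 7 fractional bits).
    Values outside the representable range are saturated.
    """
    q = round(x * (1 << frac_bits))
    return max(-128, min(127, q))


def dequantize_q7(q: int, frac_bits: int = 7) -> float:
    """Recover the approximate real value from the stored integer."""
    return q / (1 << frac_bits)
```

With this split, the quantization step is 1/128, so the rounding error for in-range coefficients is at most 1/256.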

Figure 4.

Simulation results of the proposed CNN: (a) coefficient distribution, (b) simulated accuracy, and (c) necessary memory size.

4. Hardware Implementation

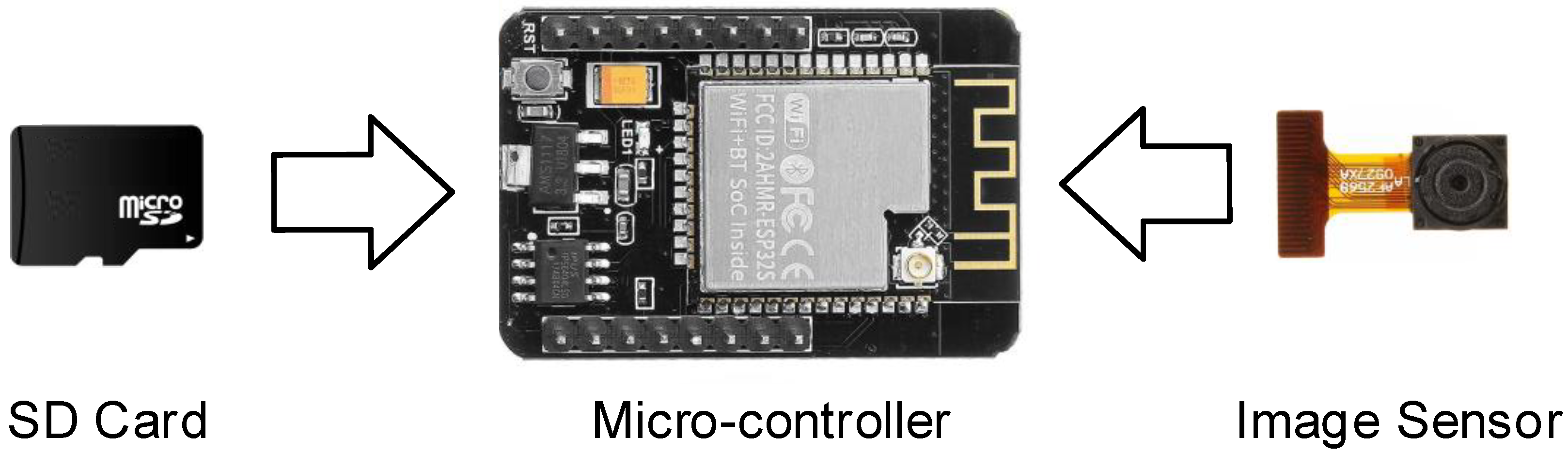

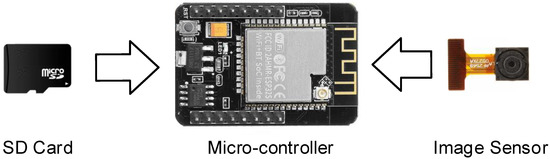

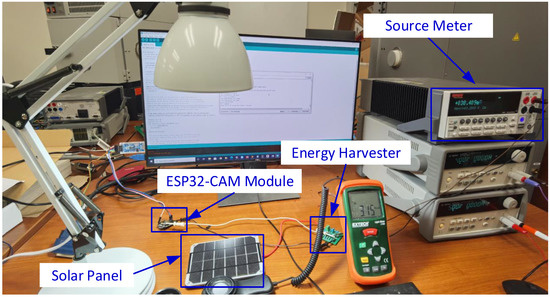

Figure 5 shows the implemented hardware for the proposed system, consisting of an ESP32-CAM module, an OV2640 camera module (1632 × 1232 resolution), and an SD card (≥128 MB). Table 1 details the ESP32-CAM module [33]. The processor of the ESP32-CAM module operates at a clock frequency of up to 240 MHz. At the maximum frequency, the entire operation including image capture and CNN inference is processed in 35.7 s. The module takes 5 or 3.3 V as a supply voltage.

Figure 5.

Hardware of the proposed system.

Table 1.

Specification of the ESP32-CAM module.

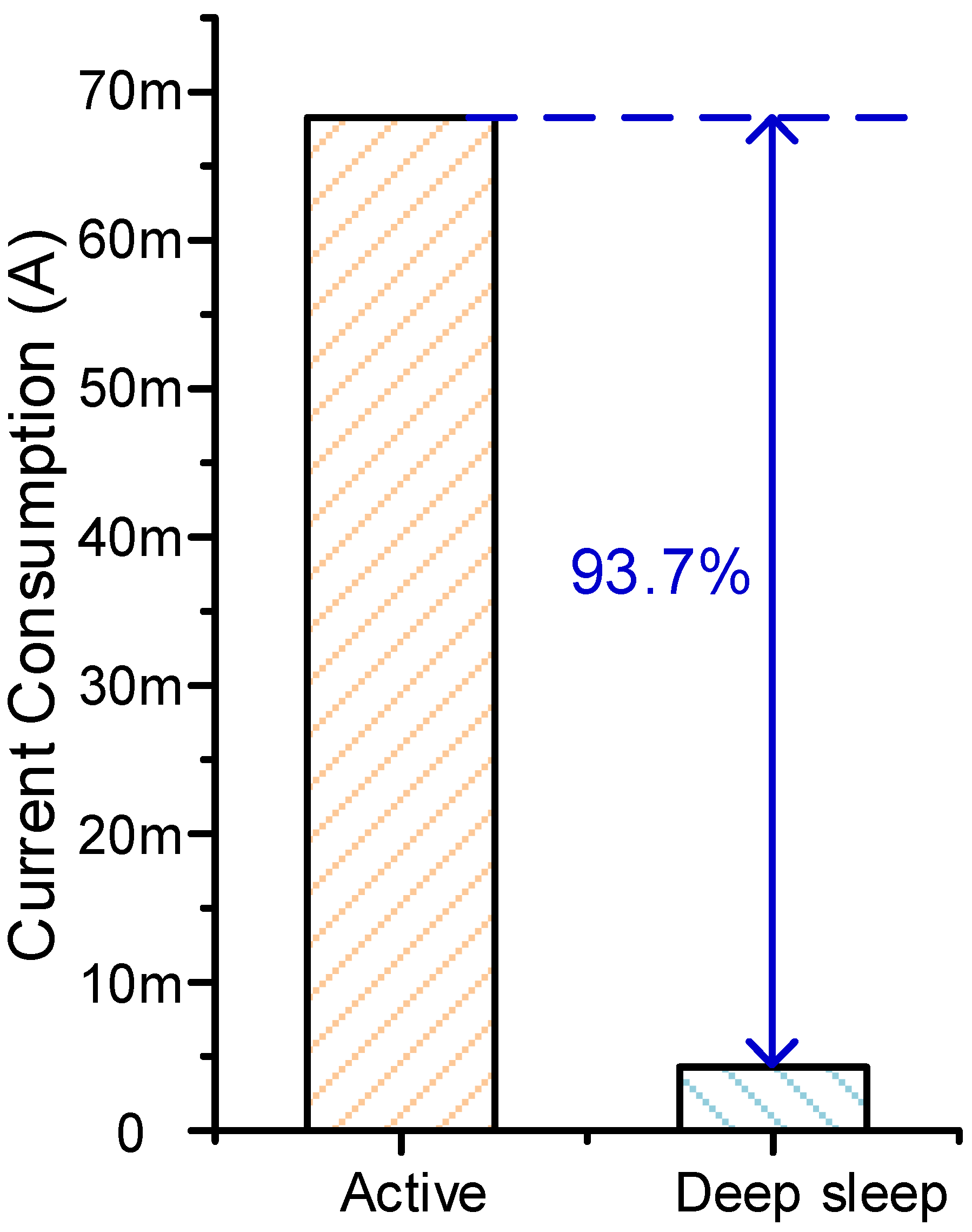

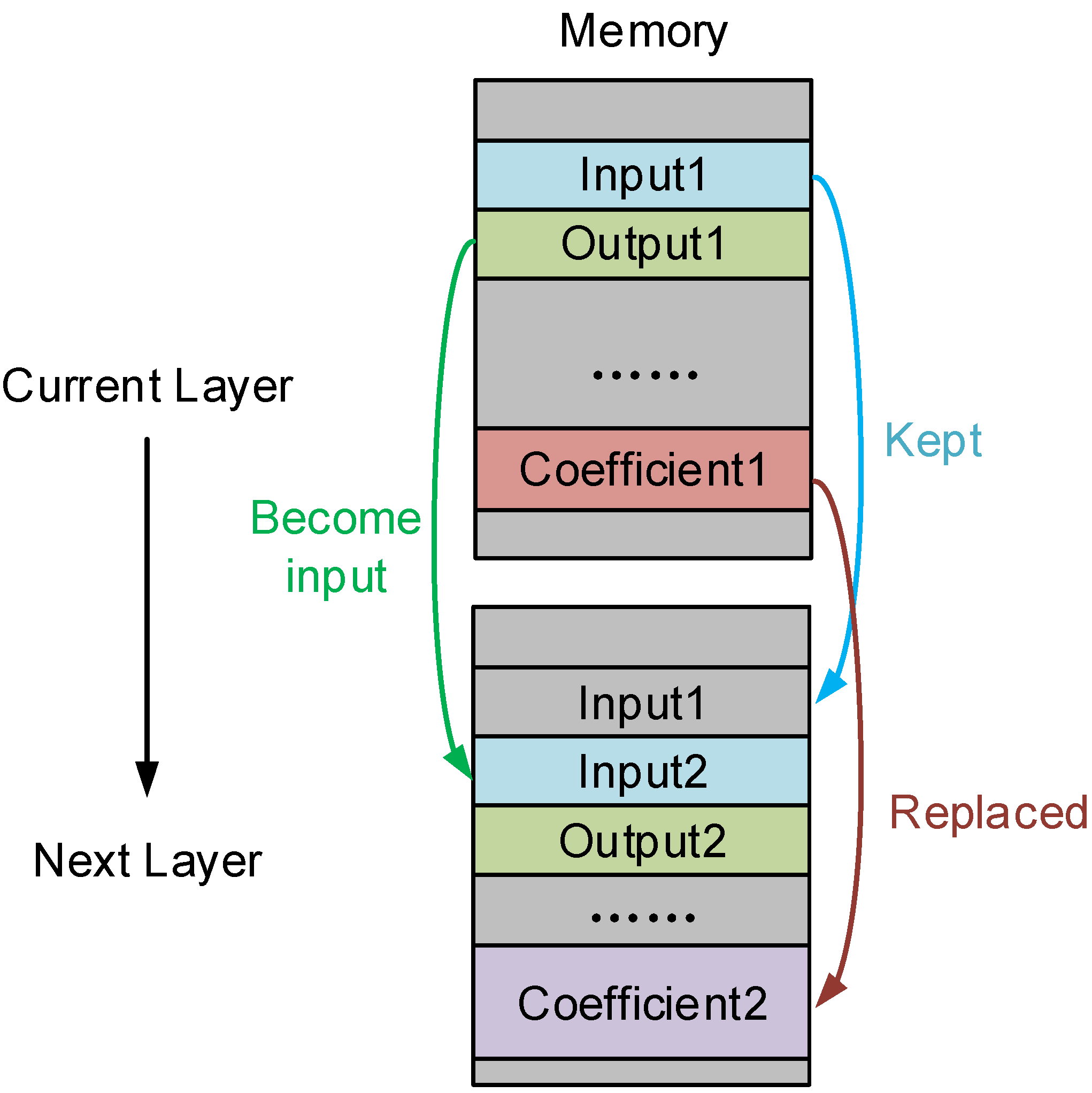

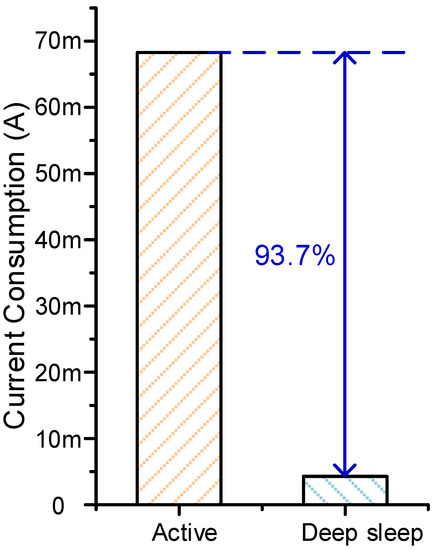

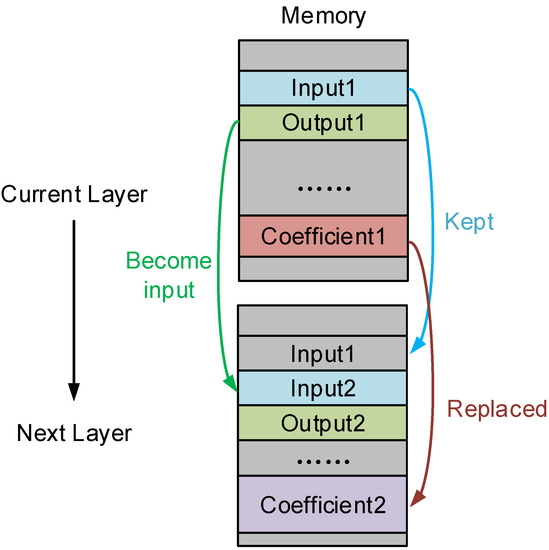

Figure 6 shows the current consumption in active and sleep modes. The sleep mode reduces power consumption by 93.7% compared with the active mode. Figure 7 depicts the data flow between external storage and SRAM. After each layer is processed, the coefficients of that layer are replaced by those of the next layer. All the inputs and outputs of the layers are kept in memory. Thus, the CNN processing can roll back to the layer before a branching point when the entropy is larger than the threshold.
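The data movement described above can be sketched as follows. This is a simplified model under stated assumptions: `load_coeffs` is a hypothetical stand-in for reading one layer's coefficients from the SD card, and layer outputs are cached in a dictionary so that a deeper path can resume from the branching point without recomputing shared layers.

```python
from typing import Callable, Dict, List, Sequence

# A layer maps (input activations, coefficients) to output activations.
Layer = Callable[[List[float], List[float]], List[float]]


def run_path(
    path: Sequence[str],
    layers: Dict[str, Layer],
    load_coeffs: Callable[[str], List[float]],
    activations: Dict[str, List[float]],
    start_input: str,
) -> List[float]:
    """Run the layers named in `path`, caching every layer output.

    Only one layer's coefficients are held at a time; cached outputs
    let a later call reuse the shared layers after a rollback.
    """
    x = activations[start_input]
    for name in path:
        if name not in activations:
            coeffs = load_coeffs(name)              # replaces previous coefficients
            activations[name] = layers[name](x, coeffs)
        x = activations[name]
    return x
```

Calling `run_path` first with an early-exit path and then with a deeper path that shares its first layers loads the shared coefficients only once.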

Figure 6.

Current consumption in active and sleep modes.

Figure 7.

Data movement in memory.

5. Measurement Results

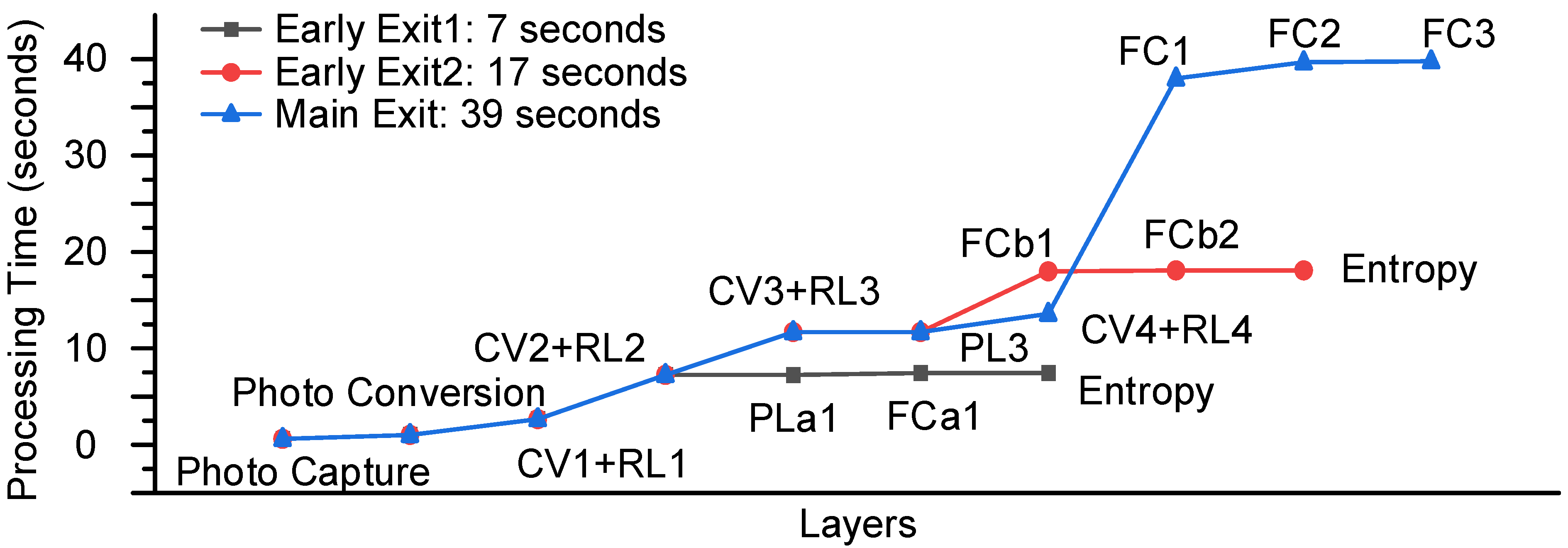

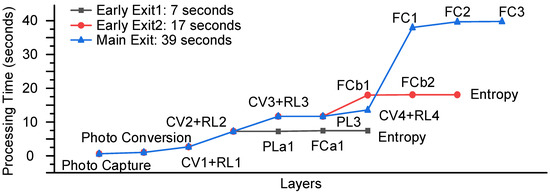

Figure 8 shows the measured processing time for the 3 paths. At a clock frequency of 240 MHz, an image can be processed within 36 s on the longest path (main exit). Early exit1 and early exit2 take only 6 and 16 s to complete, reducing the active-mode time by 83% and 55%, respectively. The convolutional and fully connected layers dominate the processing time due to the iterative MAC operations. The first fully connected layer for the main exit (FC1) multiplies vectors with 2560 dimensions and adds them up, taking 22 s and dominating the processing time.

Figure 8.

Measured processing time of each layer for different exits.

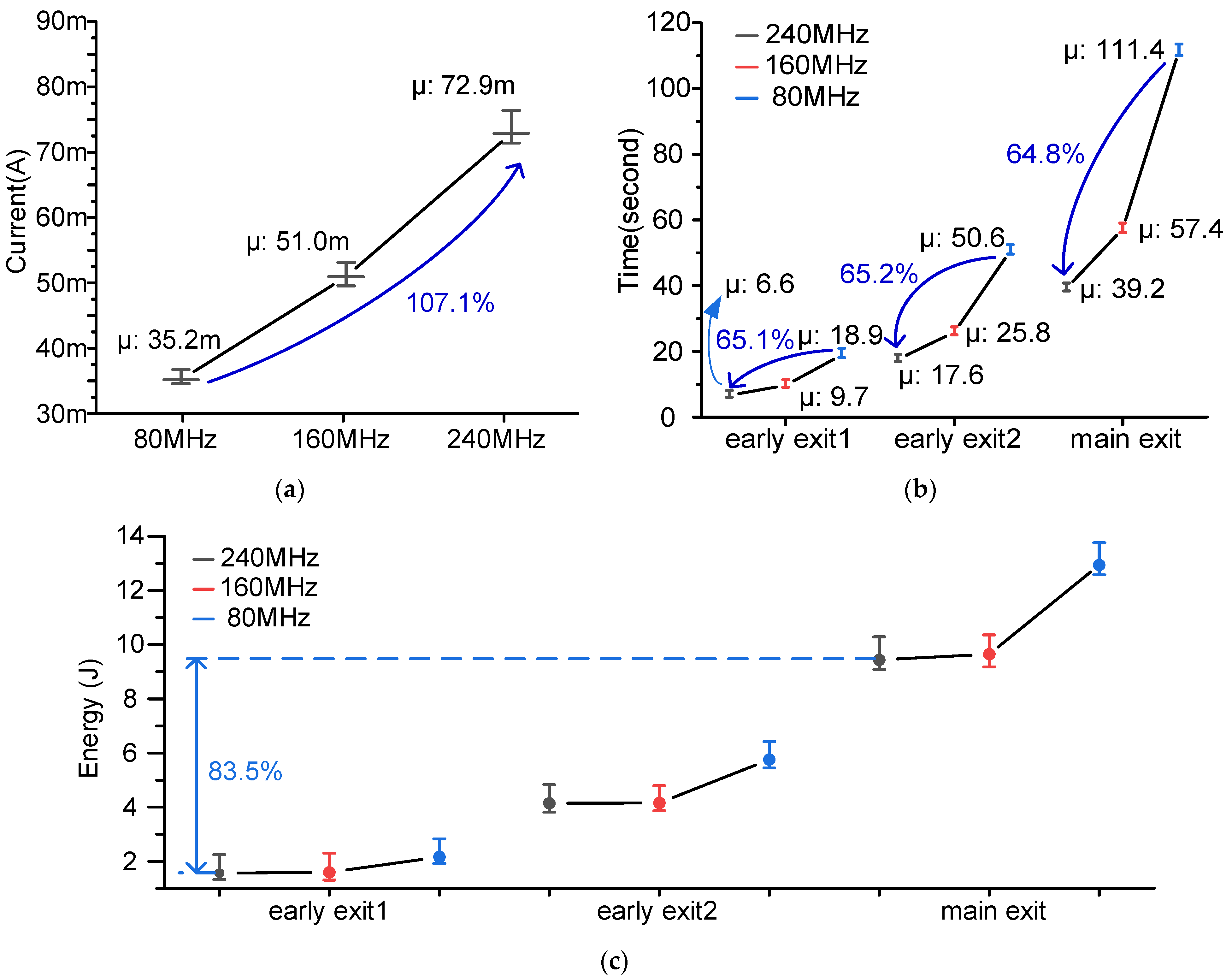

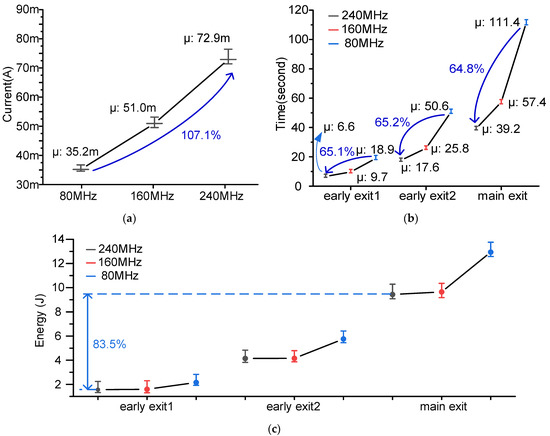

Figure 9 shows the measured results of the total inference at different clock frequencies and exits; the maximum, minimum and average values from 20 samples are shown. In Figure 9a, the average current consumption increases from 35.2 to 72.9 mA as the frequency increases from 80 to 240 MHz. In Figure 9b, the processing time decreases roughly linearly with higher clock frequency. Compared with 80 MHz, the clock frequency of 240 MHz reduces the processing time by around 65%. Figure 9c shows the energy consumption for each exit at different frequencies. Compared with the main exit, early exit1 saves energy by 83.5%, and early exit2 saves energy by 56.3%. The highest frequency (240 MHz) yields the lowest energy consumption (1.3, 3.5 and 8.2 J) for all 3 exits. This means that the energy reduction from the shorter processing time at a higher frequency is larger than the energy increase due to the higher power consumption; this results from the portion of the power consumption that does not depend on clock frequency.
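The last observation can be checked with a back-of-envelope model. The sketch below assumes that the current is linear in frequency, I(f) = I_static + s·f, and that the processing time is exactly proportional to 1/f; both are simplifying assumptions, and the fitted static current is an estimate from the two reported averages, not a measured value.

```python
from typing import Tuple


def fit_linear_current(f1: float, i1: float, f2: float, i2: float) -> Tuple[float, float]:
    """Fit I(f) = i_static + slope * f through two (frequency, current) points."""
    slope = (i2 - i1) / (f2 - f1)
    return i1 - slope * f1, slope


def relative_energy(freq_mhz: float, i_static_ma: float, slope: float) -> float:
    """Charge per inference up to a constant factor: I(f) * t(f) with t ~ 1/f."""
    return (i_static_ma + slope * freq_mhz) / freq_mhz


# Reported averages: 35.2 mA at 80 MHz and 72.9 mA at 240 MHz.
i_static, slope = fit_linear_current(80.0, 35.2, 240.0, 72.9)
```

Under this model the relative energy equals s + I_static/f, so any nonzero frequency-independent current makes the highest frequency the most energy-efficient, which agrees with the trend in Figure 9c.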

Figure 9.

Measured CNN inference at different clock frequencies and processing paths: (a) current consumption across frequencies, (b) time across frequencies and paths, and (c) energy consumption across frequencies and paths.

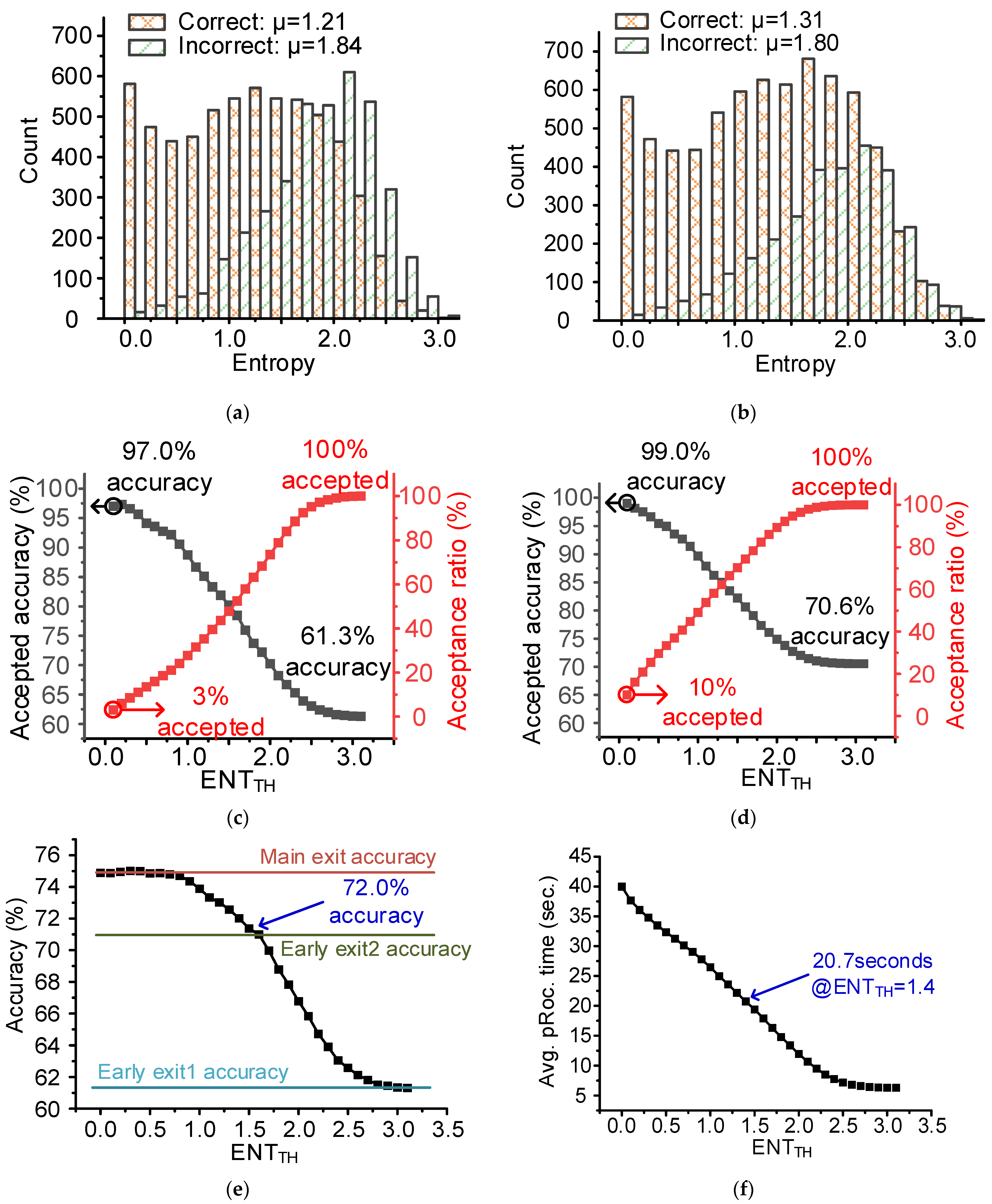

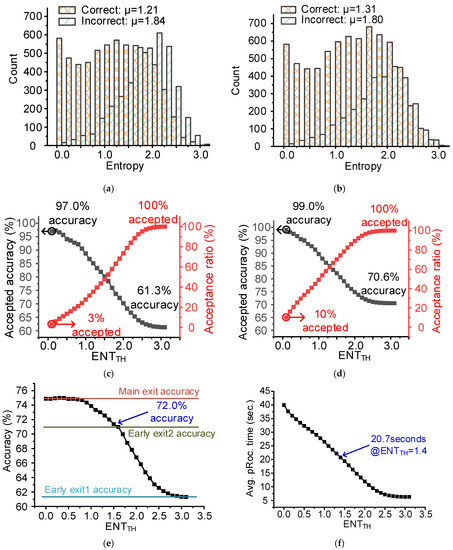

Figure 10 shows the measured CNN inference with 10,000 images from the CIFAR-10 dataset. Figure 10a,b shows the entropy distribution from early exit1 and early exit2, respectively. In both figures, the average entropy of the correct inferences is lower than that of the incorrect inferences, which supports the proposed confidence check at the output of the earlier exits. The proposed system treats lower entropy as higher confidence in the inference result at an exit. Figure 10c,d shows the number of accepted results with different ENTTH and the accuracy among them. Note that the accuracy does not include the results of samples discarded by the entropy check. At the starting value of ENTTH = 0.1, only 3% (10%) of the results are accepted with an accuracy of 97% (99%) at early exit1 (early exit2). As ENTTH increases, more results are accepted at the early exit, resulting in lower accuracy. When ENTTH is higher than 3.1, all results are accepted, and the accuracy becomes equal to the accuracy of a single path (early exit1 or early exit2). Figure 10e shows the overall accuracy using all 3 exits across ENTTH. Note that inference results are obtained for all the samples, which is different from Figure 10c. As ENTTH increases, the overall accuracy decreases from the main-exit-only accuracy to the early-exit1-only accuracy. For example, the accuracy is 72% at ENTTH = 1.4. Figure 10f shows that the average processing time is 20.7 s at ENTTH = 1.4. Compared to a single-exit CNN with only the main exit, the proposed multi-exit CNN system reduces the processing time by 42.5% and thus saves energy consumption by the same amount, at the cost of an accuracy loss of 2.9%.
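The 42.5% figure follows from the two processing times quoted in this section (36 s for the main exit and an average of 20.7 s at ENTTH = 1.4). A minimal check, with `relative_saving` as a hypothetical helper name:

```python
def relative_saving(baseline: float, value: float) -> float:
    """Fractional reduction of `value` with respect to `baseline`."""
    return 1.0 - value / baseline


# Main-exit time (36 s) versus the multi-exit average (20.7 s).
time_saving = relative_saving(36.0, 20.7)
```

Because the comparison is made at a fixed clock frequency, and therefore at a roughly constant active-mode power, the same fraction applies to the energy per inference.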

Figure 10.

Measured CNN inference with 10,000 images from the CIFAR-10 dataset: (a) entropy distribution for early exit1, (b) entropy distribution for early exit2, (c) acceptance ratio and accepted accuracy across ENTTH for early exit1, (d) acceptance ratio and accepted accuracy across ENTTH for early exit2, (e) overall accuracy over 3 exits across ENTTH, and (f) average processing time for each image across ENTTH.

6. Simulation Results for Long Term Operation

To evaluate the energy saving of the proposed multi-exit CNN system for a target system, we perform simulations using MATLAB, based on an exemplary natural outdoor light profile [17], measurement results of an energy harvester [17] and the proposed system. The light profile is obtained from 5 HOBO MX2202 light sensors in the Beechwood Farms Nature Reserve of Audubon Society of Western Pennsylvania in Allegheny County, Pennsylvania.

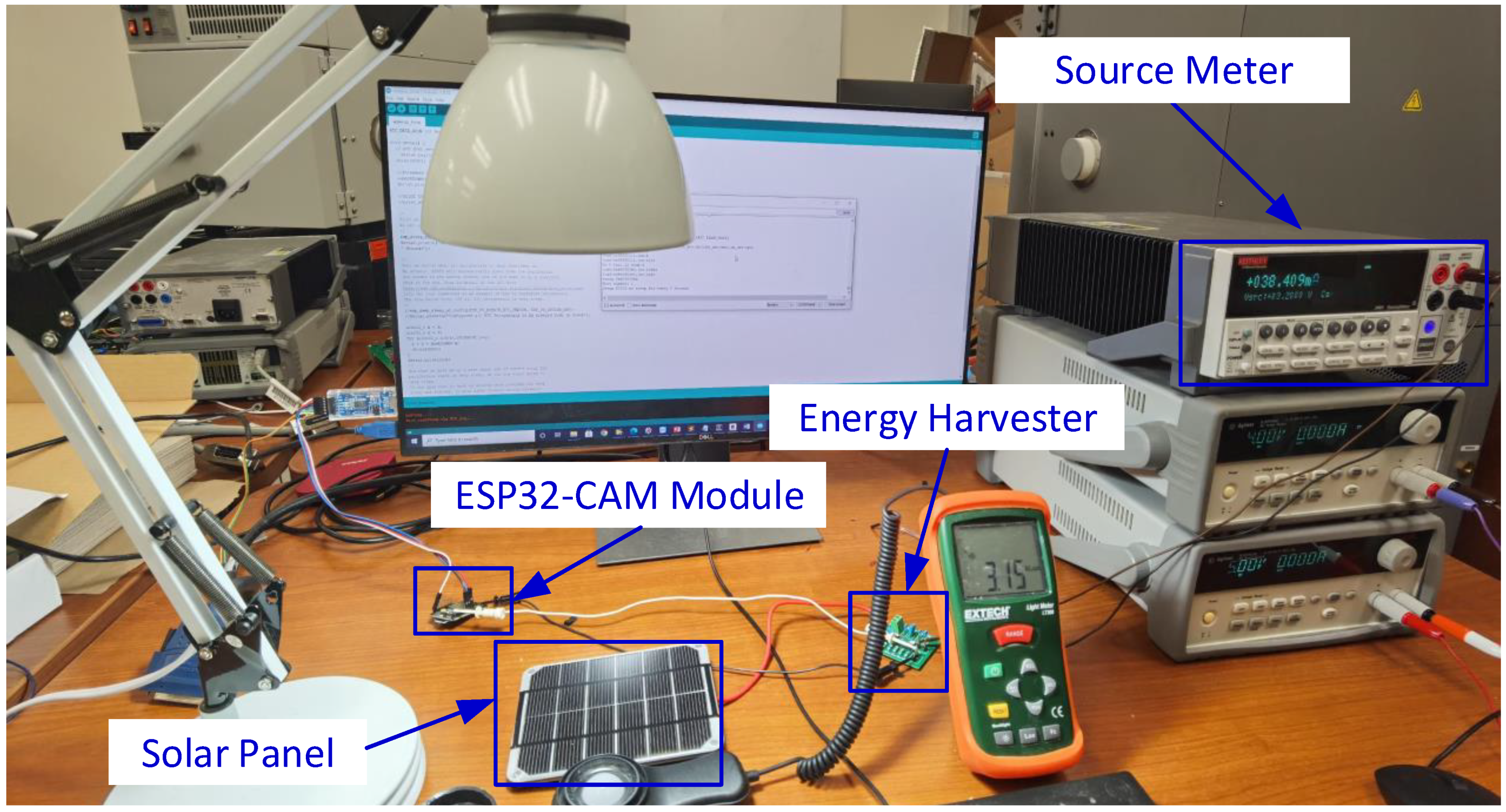

The charging power of the energy harvester is measured as shown in Figure 11. The energy harvesting system includes a solar panel (Adafruit 200) and an energy harvesting chip with power management (TI BQ25570). The output of the energy harvester is connected to the ESP32 module as a power supply. A Keithley 2401 source measurement unit measures the harvested power. The simulation emulates a scenario where the proposed system wakes up every 3 min, performs a CNN inference operation, and then enters sleep mode again. To suppress the sleep-mode power consumption and achieve sustainable system operation, we include models of a low-power timer (TI TPL5111) and a switch (ZVN2110A). In sleep mode, the timer consumes 35 nA while counting for a fixed period, and the leakage current of the switch is only 30 pA. When the timer reaches a threshold, the switch is turned on, and the system enters the active mode. Thus, the system current consumption in sleep mode is taken as 35 nA, mainly due to the low-power timer.
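The energy bookkeeping of such a simulation can be sketched as below. This is not the authors' MATLAB model: the 3.3 V supply used to convert the 35 nA sleep current to power, the full-battery starting point, and the neglect of the active time within each 3 min period are assumptions made for illustration.

```python
from typing import Iterable, List


def simulate_battery(
    harvest_power_w: Iterable[float],
    inference_energy_j: Iterable[float],
    capacity_j: float,
    period_s: float = 180.0,
    sleep_power_w: float = 35e-9 * 3.3,  # 35 nA at an assumed 3.3 V supply
) -> List[float]:
    """Return the stored energy after each wake-up period.

    One harvested-power sample and one inference energy are consumed per
    period. A negative value in the trace indicates a power outage.
    """
    stored = capacity_j                        # assume a full battery at the start
    trace: List[float] = []
    for p_harvest, e_inference in zip(harvest_power_w, inference_energy_j):
        stored += p_harvest * period_s         # energy harvested in this period
        stored -= sleep_power_w * period_s     # sleep-mode drain
        stored -= e_inference                  # one CNN inference per wake-up
        stored = min(stored, capacity_j)       # the battery cannot exceed its capacity
        trace.append(stored)
    return trace
```

In this model, the battery capacity required to avoid an outage is the largest depth of discharge, `capacity_j - min(trace)`, evaluated with a capacity large enough that the trace never goes negative.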

Figure 11.

Testing setup for the proposed system.

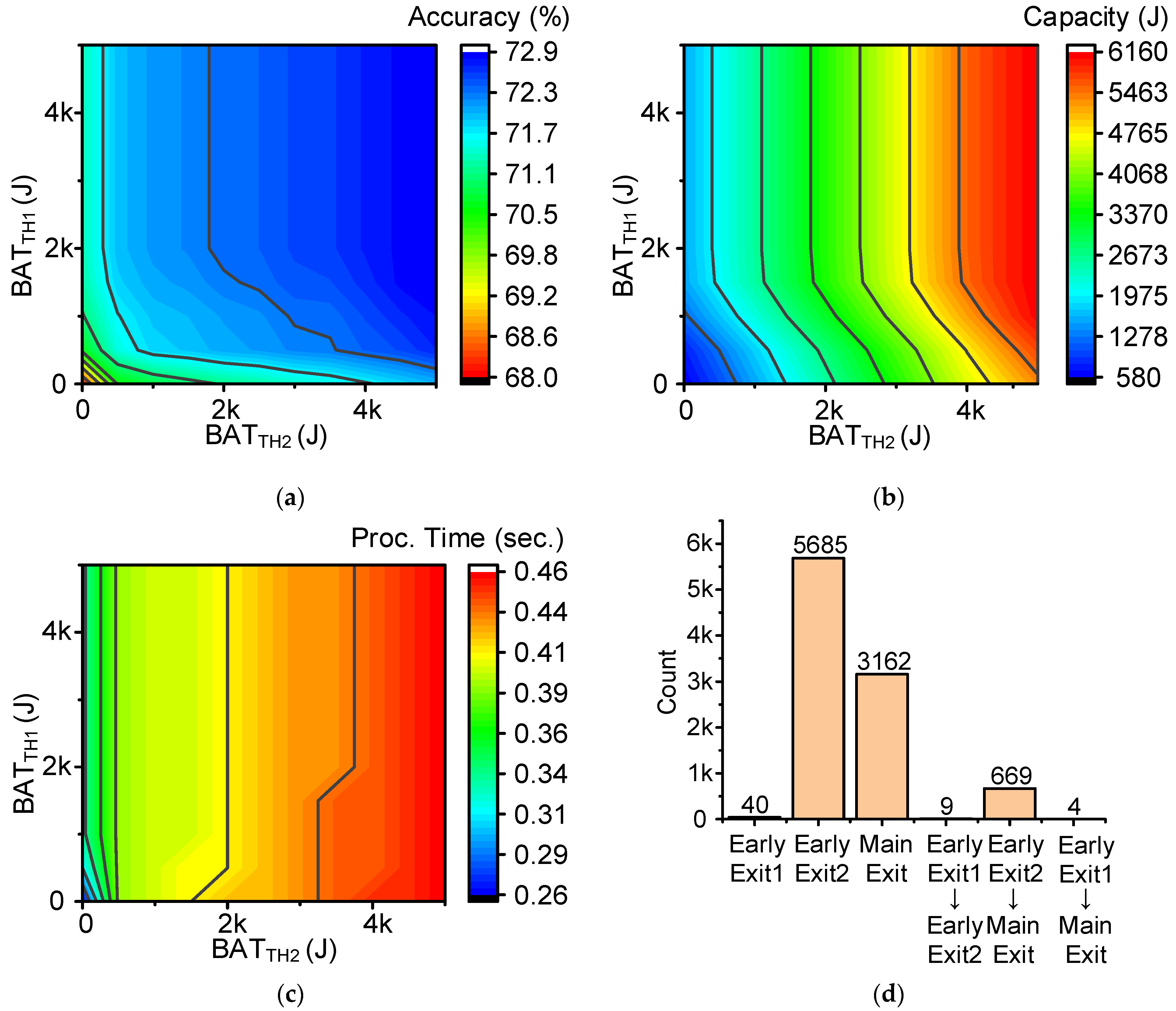

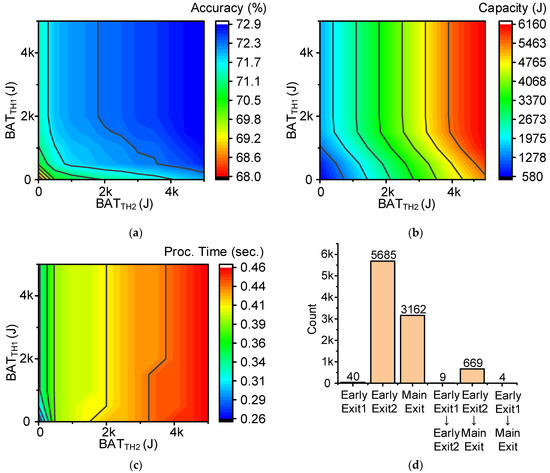

Figure 12 shows the simulated long-term operation. Figure 12a shows the accuracy across BATTH1 and BATTH2 when ENTTH is set to 2.0. As a wider margin is given to the energy budget (higher BATTHx), the main exit is selected more frequently, resulting in higher accuracy. At BATTH1 = 1500 J and BATTH2 = 1500 J, the accuracy is 72.11%, which is 2.66% lower than that of the main-exit-only approach (74.77%). Figure 12b shows the battery capacity required to avoid a power outage. Figure 12c shows the average processing time per wakeup. At BATTH1 = 1500 J and BATTH2 = 1500 J, the system requires a battery capacity of 3050 J, and the average processing time is 0.4 min. This can be implemented with a rechargeable battery (43 mm × 14 mm × 14 mm) [32]. Figure 12d shows the distribution of the selected path for each inference at BATTH1 = 1500 J and BATTH2 = 1500 J. Among the 9569 inferences, 59.8% are processed by the two early exits to save energy without skipping any inference. Of the early exit1 results, 17% exceed the entropy threshold and are processed again with early exit2. Of the early exit2 results, 10.2% are re-processed by the main exit.

Figure 12.

Simulated long-term operation across BATTH1 and BATTH2: (a) accuracy, (b) capacity, (c) processing time, and (d) distribution of the selected paths.

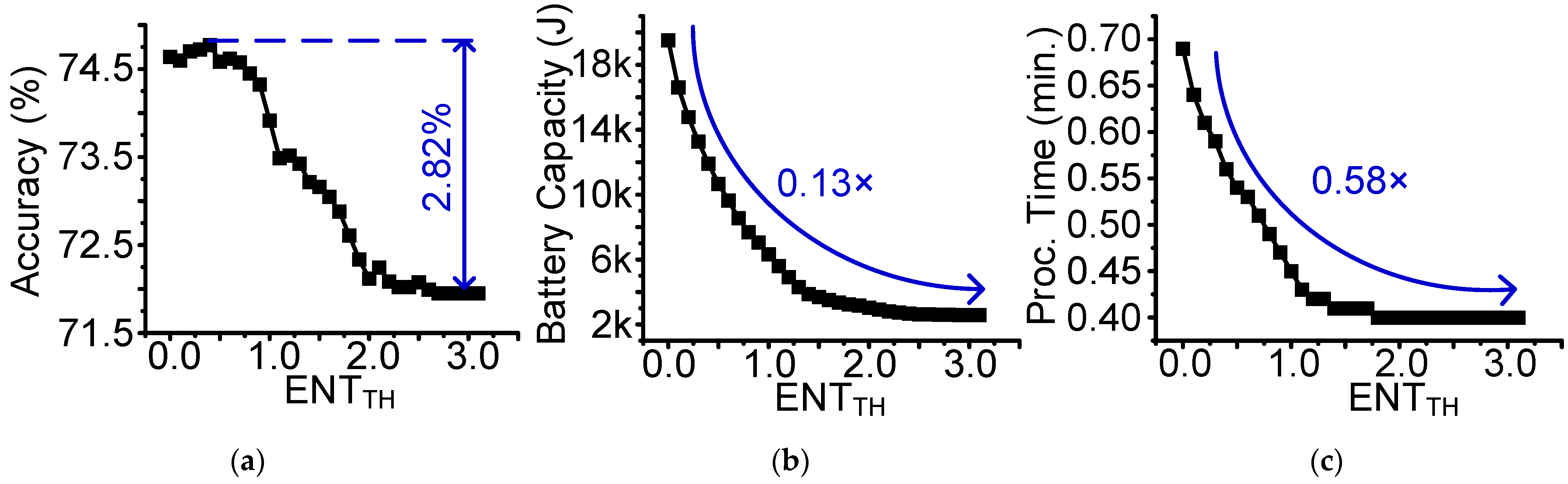

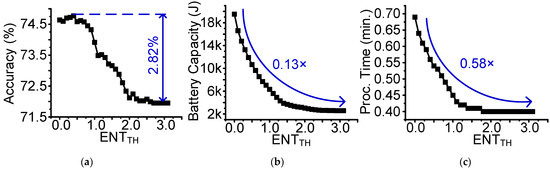

Figure 13a shows the accuracy across ENTTH from 0.1 to 3.1 at BATTH1 = 1500 J and BATTH2 = 1500 J. The accuracy decreases from 74.77% to 71.95% as more early exits are used. At ENTTH = 1.9, the required battery capacity decreases from 19.5 to 3.2 kJ owing to the reduced re-calculation, as shown in Figure 13b. In Figure 13c, the average processing time also decreases from 0.69 to 0.40 min.

Figure 13.

Simulated long-term operation across ENTTH: (a) accuracy, (b) battery capacity, and (c) processing time.

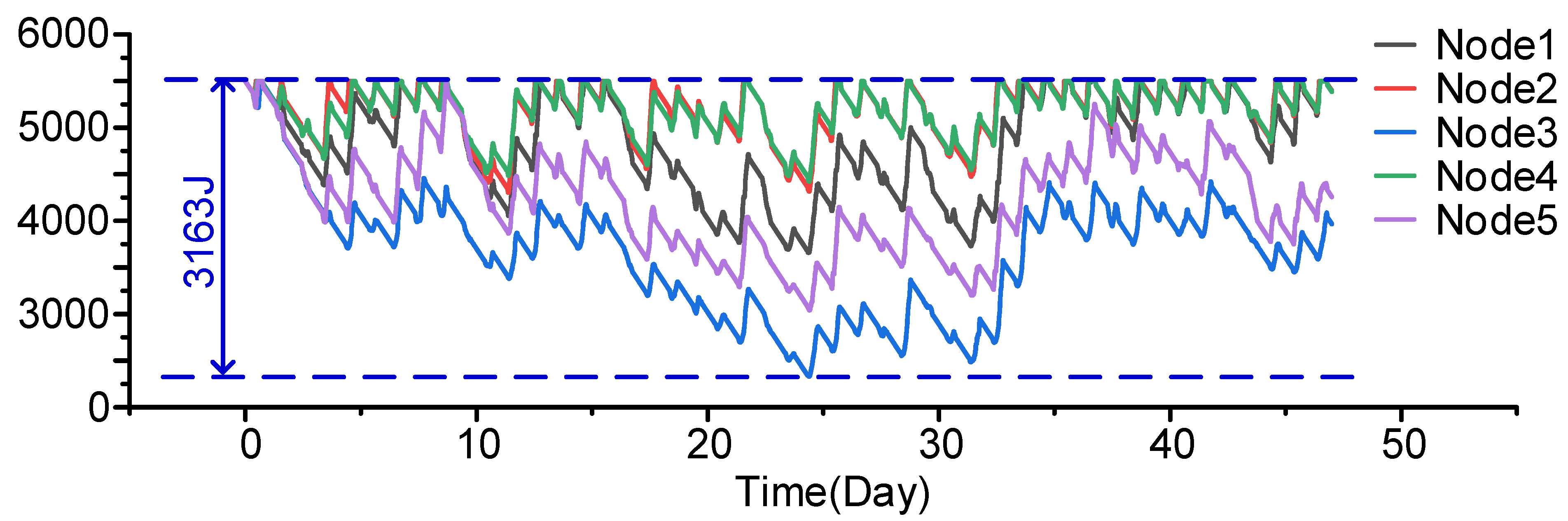

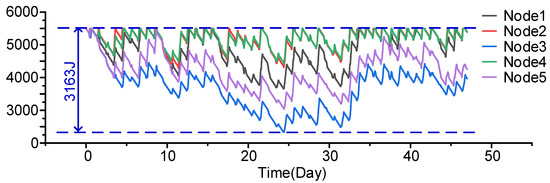

Figure 14 shows the battery energy over time. With the energy harvester, the system can regain the consumed energy in a duty-cycled operation and achieve energy autonomy. The worst case consumes 3163 J. The operation of the system can be sustained by a rechargeable battery with an energy capacity as high as 4.6 kJ [32], which demonstrates the feasibility of such systems.
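The battery figures quoted in this paper can be cross-checked by converting a capacity in mAh at a nominal voltage to joules. The helper name `battery_energy_j` is hypothetical; the inputs are the 275 mAh @ 3.2 V requirement stated in the abstract and the 400 mAh, 3.2 V rating in the title of reference [32].

```python
def battery_energy_j(capacity_mah: float, voltage_v: float) -> float:
    """Convert battery capacity (mAh) at a nominal voltage (V) to energy (J)."""
    return capacity_mah / 1000.0 * 3600.0 * voltage_v


required_j = battery_energy_j(275.0, 3.2)   # requirement stated in the abstract
available_j = battery_energy_j(400.0, 3.2)  # rating of the cell in reference [32]
```

The first value corresponds to the roughly 3.2 kJ requirement, and the second to the roughly 4.6 kJ capacity of the referenced cell, which exceeds the 3163 J worst case.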

Figure 14.

Available battery energy across time.

7. Conclusions

This paper demonstrates the feasibility of implementing a CNN in a battery-powered sensing system. By using multiple exits with different depths, the proposed system analyzes captured images with 42.5% less time and energy at the cost of a 2.9% accuracy drop, compared with a conventional, single-exit CNN. Simulation results, based on an exemplary natural outdoor light profile and measured energy consumption of the proposed system, show that the system can sustain its operation with a 3.2 kJ (275 mAh @ 3.2 V) battery while sacrificing accuracy by only 2.7%.

Author Contributions

Conceptualization, Y.L. and I.L.; methodology, Y.G., Y.L., M.S., J.T.T., Y.W., J.H. and I.L.; validation, Y.G. and Y.L.; data curation, Y.G. and Y.L.; writing—original draft preparation, Y.L.; writing—review and editing, Y.G., Y.L., J.T.T., J.H. and I.L.; visualization, Y.L.; supervision, I.L.; project administration, I.L. All authors have read and agreed to the published version of the manuscript.

Funding

This research was supported in part by NSF CNS-2007274.

Data Availability Statement

The data presented in this study are openly available in FigShare at https://doi.org/10.6084/m9.figshare.15057825.v1 (accessed on 20 August 2021).

Acknowledgments

The authors are grateful to the Audubon Society of Western Pennsylvania (ASWP), Pittsburgh, PA, USA, for their support.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Lorincz, K.; Kuris, B.; Ayer, S.M.; Patel, S.; Bonato, P.; Welsh, M. Wearable Wireless Sensor Network to Assess Clinical Status in Patients with Neurological Disorders. In Proceedings of the 2007 6th International Conference on Information Processing in Sensor Networks, New York, NY, USA, 25–27 April 2007; pp. 563–564.

- Thiyagarajan, K.; Rajini, G.K.; Maji, D. Cost-effective, Disposable, Flexible and Printable MWCNT-based Wearable Sensor for Human Body Temperature Monitoring. IEEE Sens. J. 2021.

- Ling, T.Y.; Wah, L.H.; McBride, J.W.; Chong, H.M.H.; Pu, S.H. Nanocrystalline Graphite Humidity Sensors for Wearable Breath Monitoring Applications. In Proceedings of the 2019 IEEE International Conference on Sensors and Nanotechnology, Penang, Malaysia, 24–25 July 2019; pp. 1–4.

- Hester, T.; Hughes, R.; Sherrill, D.M.; Knorr, B.; Akay, M.; Stein, J.; Bonato, P. Using wearable sensors to measure motor abilities following stroke. In Proceedings of the International Workshop on Wearable and Implantable Body Sensor Networks (BSN’06), Cambridge, MA, USA, 3–5 April 2006; pp. 4–8.

- Alavi, A.H.; Hasni, H.; Jiao, P.; Aono, K.; Lajnef, N.; Chakrabartty, S. Self-charging and self-monitoring smart civil infrastructure systems: Current practice and future trends. In Proceedings Volume 10970, Sensors and Smart Structures Technologies for Civil, Mechanical, and Aerospace Systems 2019; SPIE-International Society for Optics and Photonics: Bellingham, WA, USA, 2019.

- Grosse, C.U.; Krüger, M. Wireless Acoustic Emission Sensor Networks for Structural Health Monitoring in Civil Engineering, 2006. Available online: http://citeseerx.ist.psu.edu/viewdoc/download?doi=10.1.1.159.3947&rep=rep1&type=pdf (accessed on 2 September 2021).

- Ong, J.B.; You, Z.; Mills-Beale, J.; Tan, E.L.; Pereles, B.D.; Ong, K.G. A Wireless, Passive Embedded Sensor for Real-Time Monitoring of Water Content in Civil Engineering Materials. IEEE Sens. J. 2008, 8, 2053–2058.

- Shi, Z.; Chen, Y.; Yu, M.; Zhou, S.; Al-Khanferi, N. Development and Field Evaluation of a Distributed Microchip Downhole Measurement System. Paper presented at the SPE Digital Energy Conference and Exhibition, The Woodlands, TX, USA, 3–5 March 2015.

- Iyer, V.; Najafi, A.; James, J.; Fuller, S.; Gollakota, S. Wireless steerable vision for live insects and insect-scale robots. Sci. Robot. 2020, 5, eabb0839.

- Iyer, V.; Nandakumar, R.; Wang, A.; Fuller, S.B.; Gollakota, S. Living IoT: A Flying Wireless Platform on Live Insects. In Proceedings of the 25th Annual International Conference on Mobile Computing and Networking (MobiCom’19), New York, NY, USA, 21–25 October 2019.

- Nazari, M.H.; Mujeeb-U-Rahman, M.; Scherer, A. An implantable continuous glucose monitoring microsystem in 0.18µm CMOS. In Proceedings of the 2014 Symposium on VLSI Circuits Digest of Technical Papers, Honolulu, HI, USA, 10–13 June 2014; pp. 1–2.

- Bhamra, H.; Tsai, J.; Huang, Y.; Yuan, Q.; Shah, J.V.; Irazoqui, P. A Subcubic Millimeter Wireless Implantable Intraocular Pressure Monitor Microsystem. IEEE Trans. Biomed. Circuits Syst. 2017, 11, 1204–1215.

- Mercier, P.P.; Lysaght, A.C.; Bandyopadhyay, S.; Chandrakasan, A.P.; Stankovic, K.M. Energy extraction from the biologic battery in the inner ear. Nat. Biotechnol. 2012, 30, 1240–1243.

- Jeong, S.; Kim, Y.; Kim, G.; Blaauw, D. A Pressure Sensing System with ±0.75 mmHg (3σ) Inaccuracy for Battery-Powered Low Power IoT Applications. In Proceedings of the 2020 IEEE Symposium on VLSI Circuits, Honolulu, HI, USA, 16–19 June 2020; pp. 1–2.

- Cho, M.; Oh, S.; Shi, Z.; Lim, J.; Kim, Y.; Jeong, S. 17.2 A 142 nW Voice and Acoustic Activity Detection Chip for mm-Scale Sensor Nodes Using Time-Interleaved Mixer-Based Frequency Scanning. In Proceedings of the 2019 IEEE International Solid-State Circuits Conference—(ISSCC), San Francisco, CA, USA, 17–21 February 2019; pp. 278–280.

- Oh, S.; Shi, Y.; Kim, G.; Kim, Y.; Kang, T.; Jeong, S. A 2.5 nJ duty-cycled bridge-to-digital converter integrated in a 13mm3 pressure-sensing system. In Proceedings of the 2018 IEEE International Solid-State Circuits Conference—(ISSCC), San Francisco, CA, USA, 11–15 February 2018; pp. 328–330.

- Li, Y.; Hamed, E.A.; Zhang, X.; Luna, D.; Lin, J.-S.; Liang, X.; Lee, I. Feasibility of Harvesting Solar Energy for Self-Powered Environmental Wireless Sensor Nodes. Electronics 2020, 9, 2058.

- Wang, J.; An, H.; Zhang, Q.; Kim, H.S.; Blaauw, D.; Sylvester, D. A 40-nm Ultra-Low Leakage Voltage-Stacked SRAM for Intelligent IoT Sensors. IEEE Solid-State Circuits Lett. 2020, 4, 14–17.

- Chuo, L.X.; Feng, Z.; Kim, Y.; Chiotellis, N.; Yasuda, M.; Miyoshi, S. Millimeter-Scale Node-to-Node Radio Using a Carrier Frequency-Interlocking IF Receiver for a Fully Integrated 4 × 4 × 4 mm3 Wireless Sensor Node. IEEE Solid-State Circuits Lett. 2019, 55, 1128–1138.

- Xu, L.; Jang, T.; Lim, J.; Choo, K.; Blaauw, D.; Sylvester, D. 3.3 A 0.51 nW 32 kHz Crystal Oscillator Achieving 2ppb Allan Deviation Floor Using High-Energy-to-Noise-Ratio Pulse Injection. In Proceedings of the 2020 IEEE International Solid-State Circuits Conference—(ISSCC), San Francisco, CA, USA, 16–20 February 2020; pp. 62–64.

- Lee, J.; Saligane, M.; Blaauw, D.; Sylvester, D. A 0.3-V to 1.8–3.3-V Leakage-Biased Synchronous Level Converter for ULP SoCs. IEEE Solid-State Circuits Lett. 2020, 3, 130–133.

- Lee, J.; Zhang, Y.; Dong, Q.; Lim, W.; Saligane, M.; Kim, Y. A Self-Tuning IoT Processor Using Leakage-Ratio Measurement for Energy-Optimal Operation. IEEE J. Solid-State Circuits 2020, 55, 87–97.

- Oh, S.; Cho, M.; Shi, Z.; Lim, J.; Kim, Y.; Jeong, S. An Acoustic Signal Processing Chip with 142-nW Voice Activity Detection Using Mixer-Based Sequential Frequency Scanning and Neural Network Classification. IEEE J. Solid-State Circuits 2019, 54, 3005–3016.

- Jeong, S.; Chen, Y.; Jang, T.; Tsai JM, L.; Blaauw, D.; Kim, H.S.; Sylvester, D. Always-On 12-nW Acoustic Sensing and Object Recognition Microsystem for Unattended Ground Sensor Nodes. IEEE J. Solid-State Circuits 2018, 53, 261–274.

- Choo, K.D.; Xu, L.; Kim, Y.; Seol, J.H.; Wu, X.; Sylvester, D.; Blaauw, D. 5.2 Energy-Efficient Low-Noise CMOS Image Sensor with Capacitor Array-Assisted Charge-Injection SAR ADC for Motion-Triggered Low-Power IoT Applications. In Proceedings of the 2019 IEEE International Solid-State Circuits Conference—(ISSCC), San Francisco, CA, USA, 17–21 February 2019; pp. 96–98.

- An, H.; Schiferl, S.; Venkatesan, S.; Wesley, T.; Zhang, Q.; Wang, J. An Ultra-Low-Power Image Signal Processor for Hierarchical Image Recognition with Deep Neural Networks. IEEE J. Solid-State Circuits 2020, 56, 1071–1081.

- Wu, Y.; Wang, Z.; Jia, Z.; Shi, Y.; Hu, J. Intermittent Inference with Nonuniformly Compressed Multi-Exit Neural Network for Energy Harvesting Powered Devices. In Proceedings of the ACM/IEEE Design Automation Conference, San Francisco, CA, USA, 20–24 July 2020.

- Gobieski, G.; Lucia, B.; Beckmann, N. Intelligence beyond the Edge: Inference on Intermittent Embedded Systems. In Proceedings of the International Conference on Architectural Support for Programming Languages and Operating Systems, New York, NY, USA, 13–17 April 2019.

- Teerapittayanon, S.; McDanel, B.; Kung, H.T. BranchyNet: Fast Inference via Early Exiting from Deep Neural Networks. In Proceedings of the International Conference on Pattern Recognition, Cancun, Mexico, 4–8 December 2016.

- Li, Y.; Wu, Y.; Zhang, X.; Hamed, E.; Hu, J.; Lee, I. Developing a Miniature Energy-Harvesting-Powered Edge Device with Multi-Exit Neural Network. In Proceedings of the IEEE International Symposium on Circuits and Systems, Daegu, Korea, 22–28 May 2021.

- Zeng, L.; Li, E.; Zhou, Z.; Xu, C. Boomerang: On-Demand Cooperative Deep Neural Network Inference for Edge Intelligence on the Industrial Internet of Things. IEEE Netw. 2019, 33, 96–103.

- GEILIENERGY GLE IFR 14430 400MAH 3.2V 1.28WH. Available online: https://www.glybattery.com/gle-ifr-14430-400mah-3-point-2v-1-point-28wh.html (accessed on 20 August 2021).

- ESP32-CAM Development Board. Available online: https://media.digikey.com/pdf/Data%20Sheets/DFRobot%20PDFs/DFR0602_Web.pdf (accessed on 20 August 2021).

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).