Development of an Automotive Electronics Internship Assistance System Using a Fine-Tuned Llama 3 Large Language Model

Abstract

1. Introduction

2. Literature Review

2.1. Applications of Artificial Intelligence in Education

| Application Direction | Technical Features | Reference Source |
|---|---|---|
| Intelligent Teaching Systems | Personalized recommendations based on learner data, instant feedback mechanisms | Song et al. (2024) [11], Chopra (2023) [9] |
| Adaptive Learning Platforms | Dynamic adjustment of content difficulty, multimodal interaction design | Song et al. (2024) [11], Chopra (2023) [9], Zhang et al. (2024) [21] |
| Virtual Reality Teaching | 3D environment simulation, multisensory immersive experience | Stavroulia et al. (2024) [22] |
| Edge-Cloud Collaborative Technology | Intelligent allocation of local devices and cloud computing resources | Zhang et al. (2024) [21] |
| Intelligent Tutoring Systems | Natural language processing, emotion recognition | Coelho et al. (2023) [10] |

2.2. Meta Llama 3 and Domain Adaptation

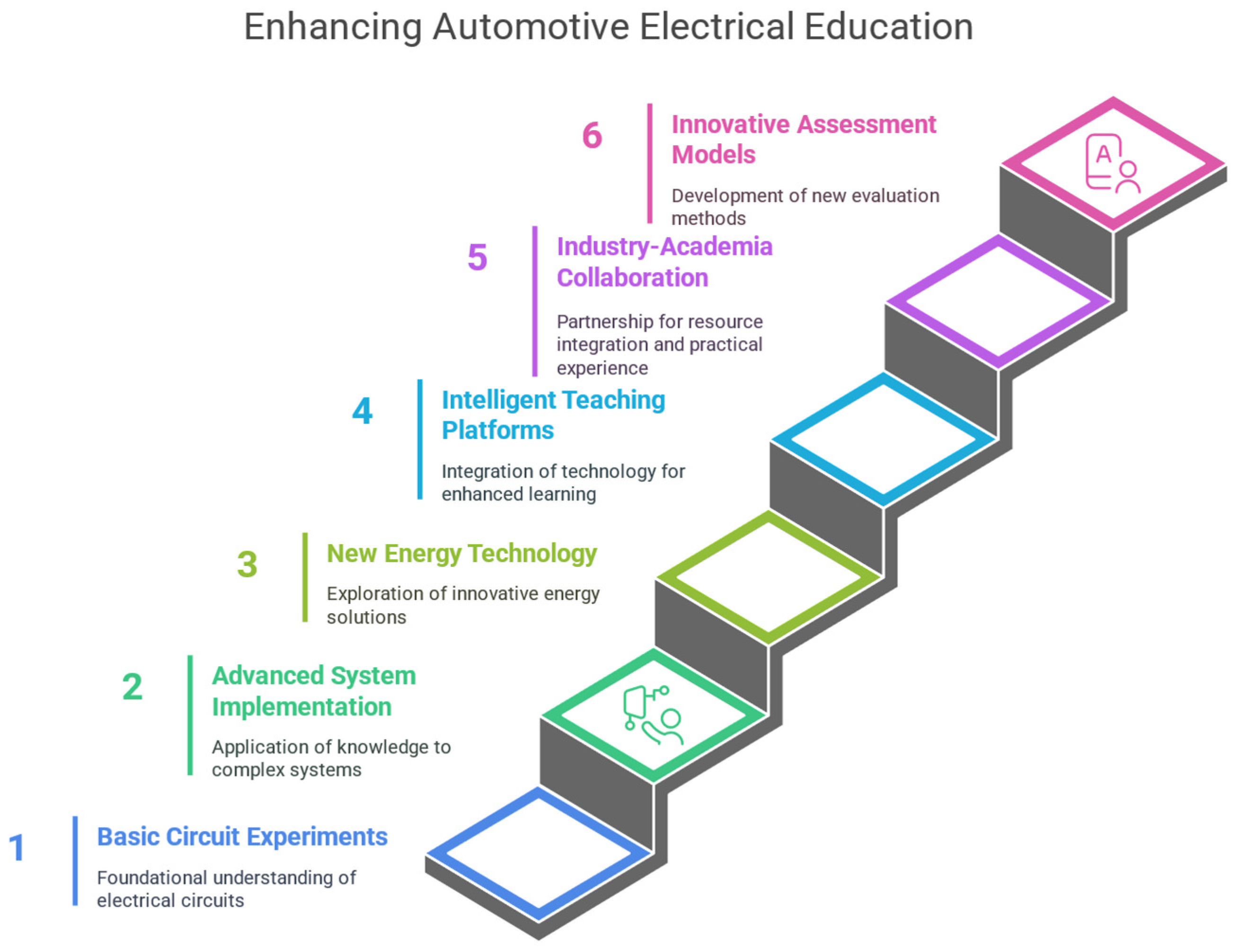

2.3. Pedagogical Trends in Automotive Electrical Training

3. Methodology

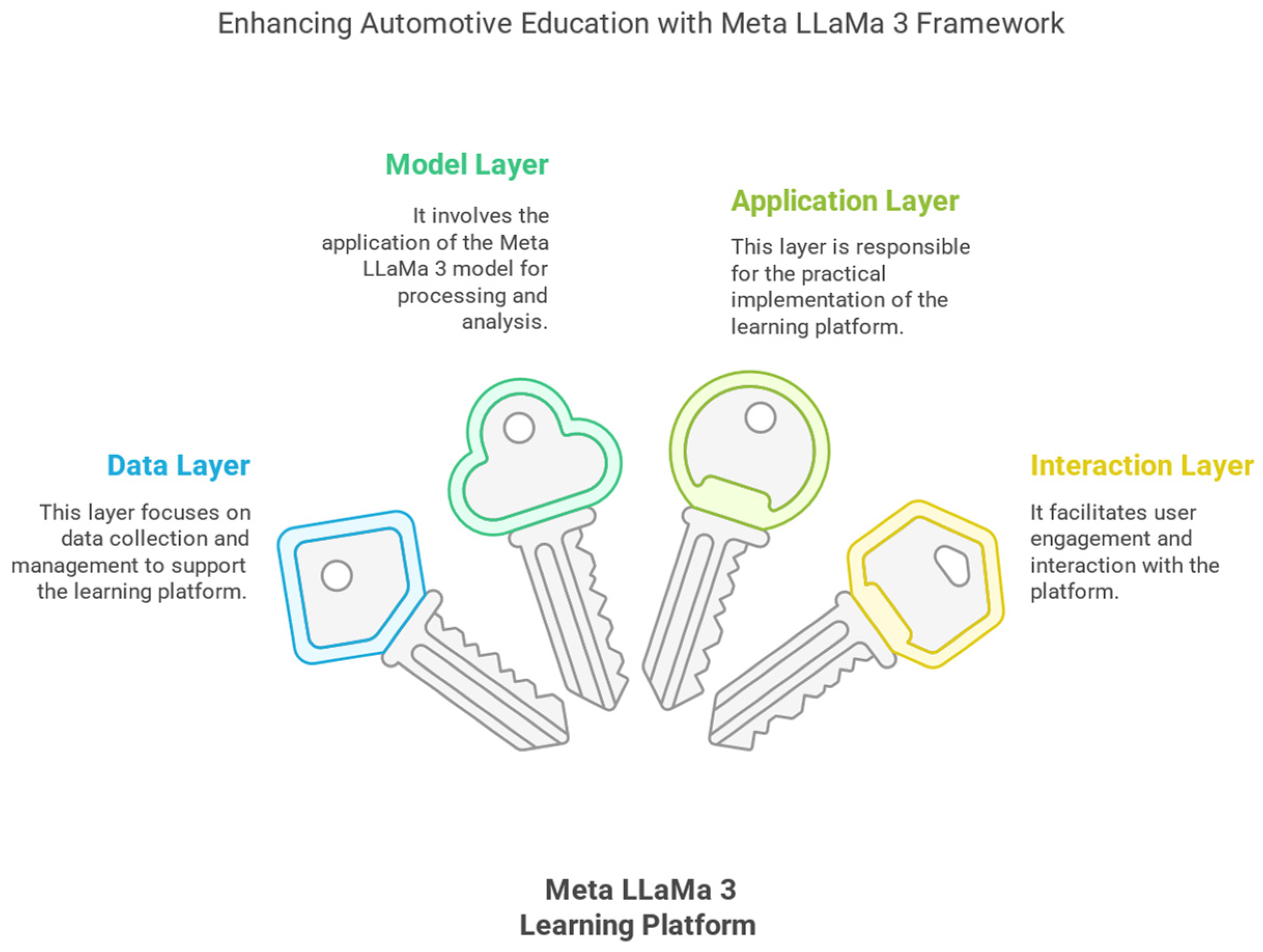

3.1. Research Framework and Design

3.2. Platform Implementation and Technology Stack

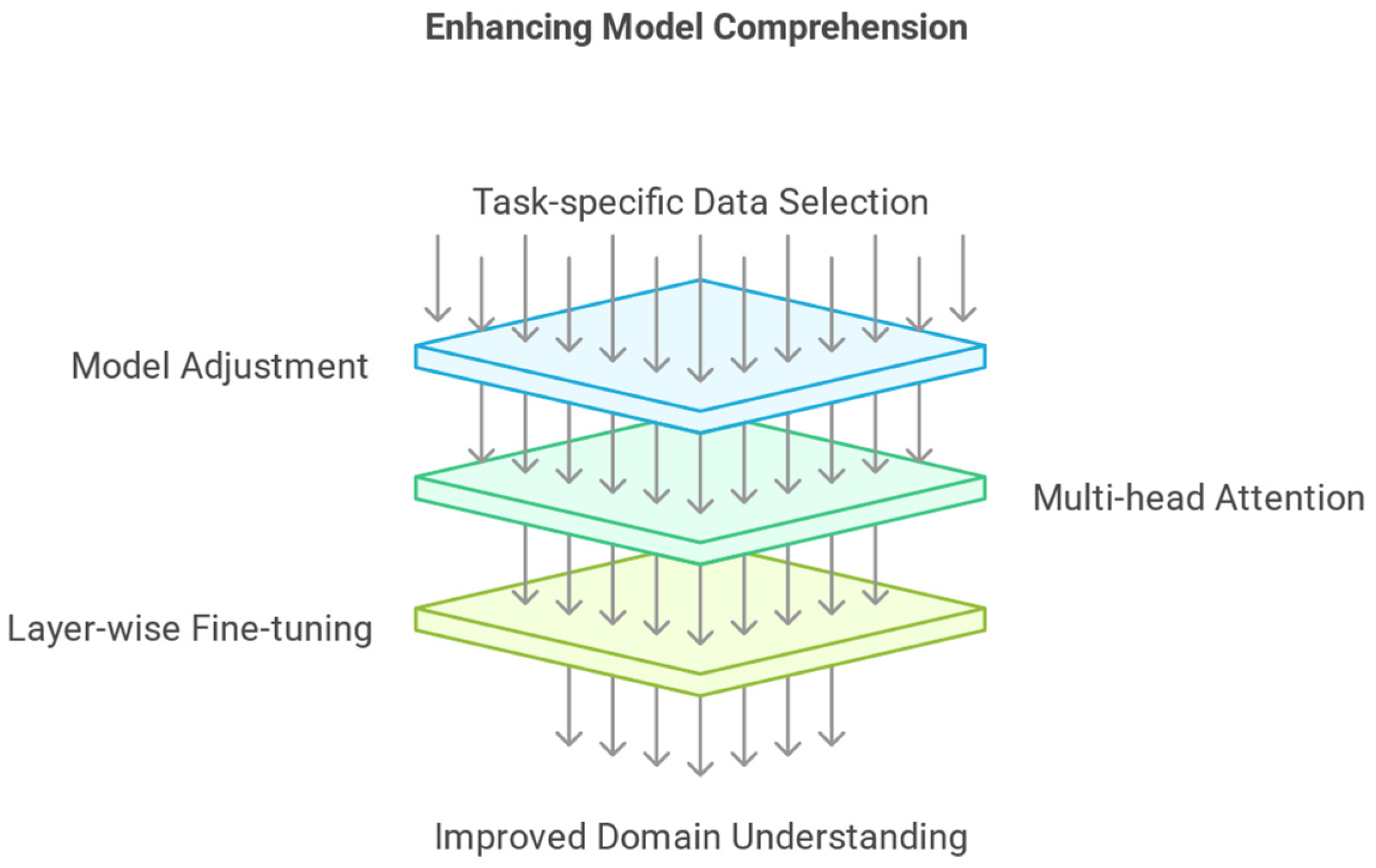

3.3. Core Methodology for Model Fine-Tuning

- Data Collection: Gather a large body of automotive electrical texts, such as repair manuals and academic papers, to establish the model’s foundational knowledge.

- Pre-training: Conduct preliminary training on a large-scale dataset so that the model learns specialized domain knowledge.

- Fine-tuning: Adjust the model parameters for specific teaching tasks (such as fault diagnosis and circuit analysis) to make the model more targeted.
  - Task-specific Data: Select data related to the teaching objectives, such as fault diagnosis or circuit principle texts.
  - Model Adjustment: Update the model weights to improve accuracy on these tasks.

- Multi-head Attention Mechanism: Enhance the model’s ability to focus on key information, improving accuracy and reasoning.
  - Purpose: Integrate different types of knowledge to improve the model’s comprehension.
  - Effect: Strengthen the model’s ability to handle complex queries.

- Fine-tune the model in layers to enhance domain understanding:
  - Data Segmentation: Categorize the data by topic, such as “fault diagnosis” and “circuit principles”.
  - Layer-wise Fine-tuning: Adjust different layers according to their function to improve the model’s understanding of specific domains.
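The layer-wise fine-tuning step is described only qualitatively. One common way to realize it, assumed here rather than taken from the study, is layer-wise learning-rate decay: on the usual assumption that upper decoder blocks hold the most task-specific features, the top block receives the full learning rate, each lower block receives a geometrically smaller one, and the lowest blocks are frozen. The sketch below only computes such a schedule; `layerwise_schedule`, `LayerGroup`, the `layers.{i}` naming, and all numeric values are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class LayerGroup:
    name: str        # hypothetical parameter-name prefix, e.g. "layers.31"
    index: int       # 0 = block closest to the token embeddings
    trainable: bool
    lr: float


def layerwise_schedule(num_layers: int, base_lr: float, decay: float,
                       frozen_below: int) -> list[LayerGroup]:
    """Layer-wise learning-rate decay (an assumed technique, not the paper's).

    The top block gets base_lr, each lower block gets `decay` times the rate of
    the block above it, and blocks with index < frozen_below are frozen (lr 0.0).
    """
    if not 0.0 < decay <= 1.0:
        raise ValueError("decay must be in (0, 1]")
    if not 0 <= frozen_below <= num_layers:
        raise ValueError("frozen_below must be between 0 and num_layers")
    groups = []
    for i in range(num_layers):
        trainable = i >= frozen_below
        depth_from_top = num_layers - 1 - i
        lr = base_lr * decay ** depth_from_top if trainable else 0.0
        groups.append(LayerGroup(f"layers.{i}", i, trainable, lr))
    return groups


if __name__ == "__main__":
    # Illustrative values only: 32 blocks, upper half trainable.
    for g in layerwise_schedule(32, base_lr=2e-5, decay=0.9, frozen_below=16)[-3:]:
        print(g.name, g.trainable, f"{g.lr:.2e}")
```

For a 32-block decoder (the depth of the Llama 3 8B variant, assuming that variant was used), `frozen_below=16` trains only the upper half. In PyTorch, each trainable entry could become one optimizer parameter group (a dict with `params` and `lr`), while frozen blocks would have `requires_grad` set to `False`.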

3.4. Model Fine-Tuning Process and Steps

3.5. Verification Plan

4. Results

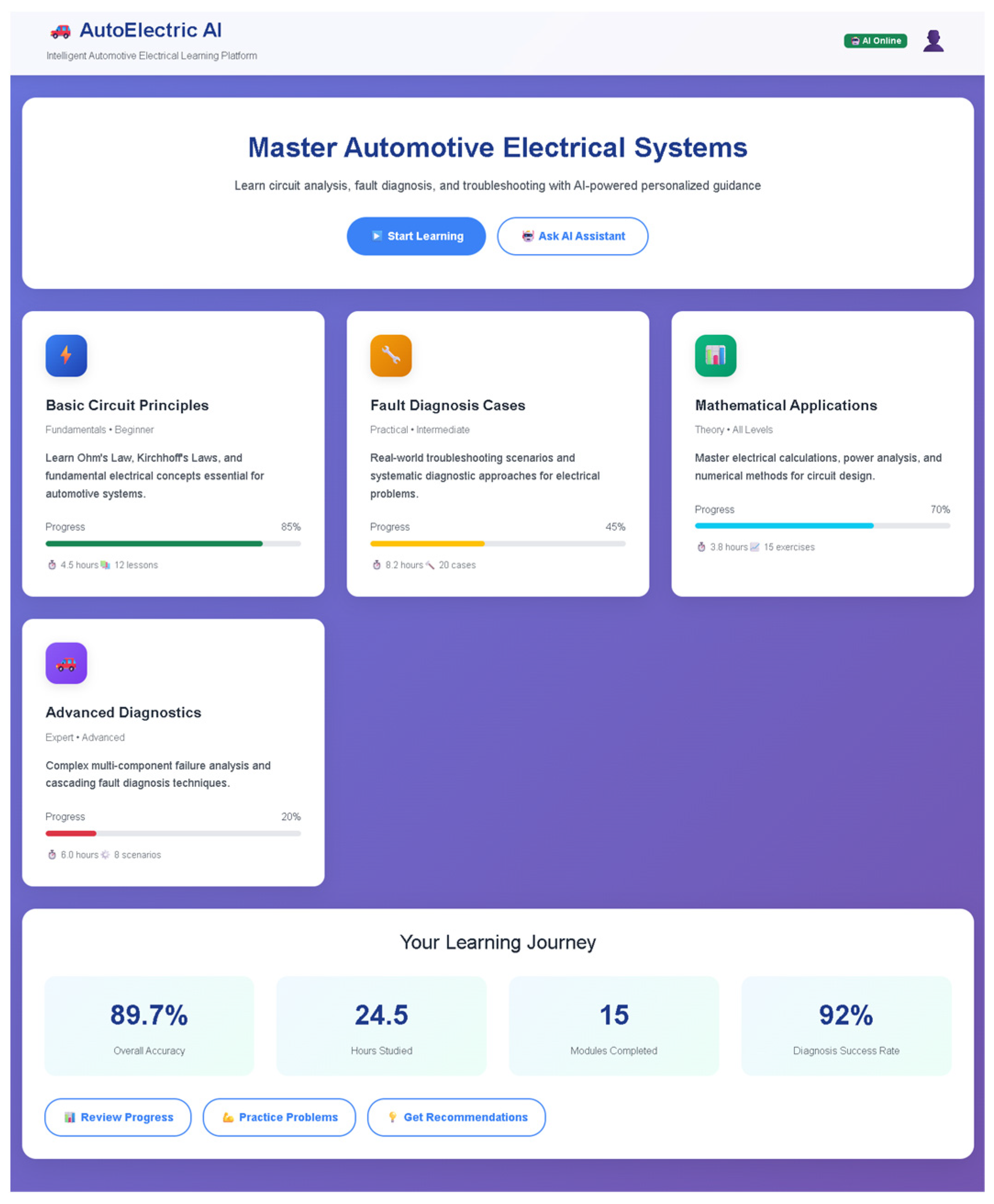

4.1. Knowledge Base Construction and Model Adaptability

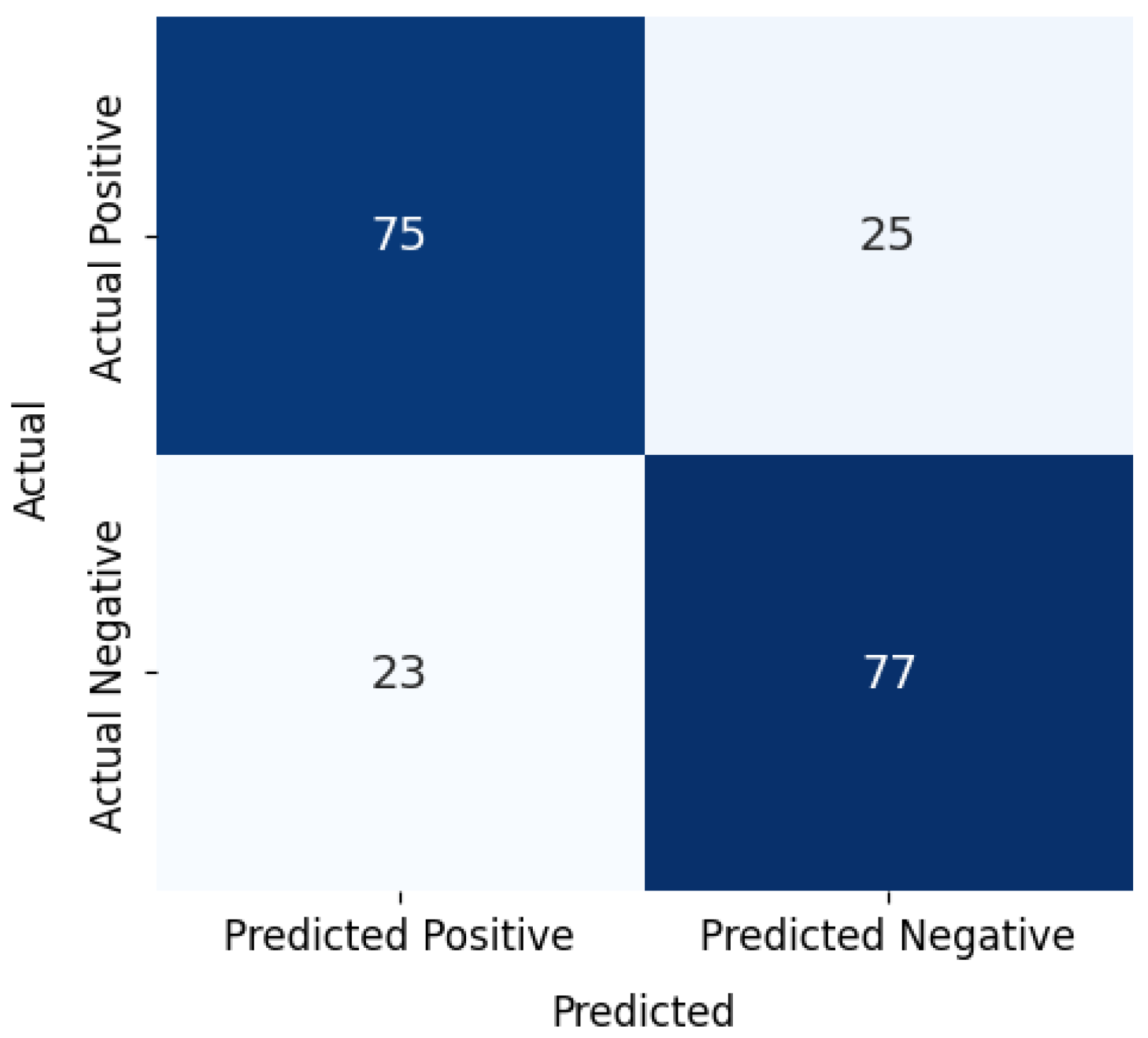

4.2. Model Performance Evaluation

4.3. Technical Stability and Limitations

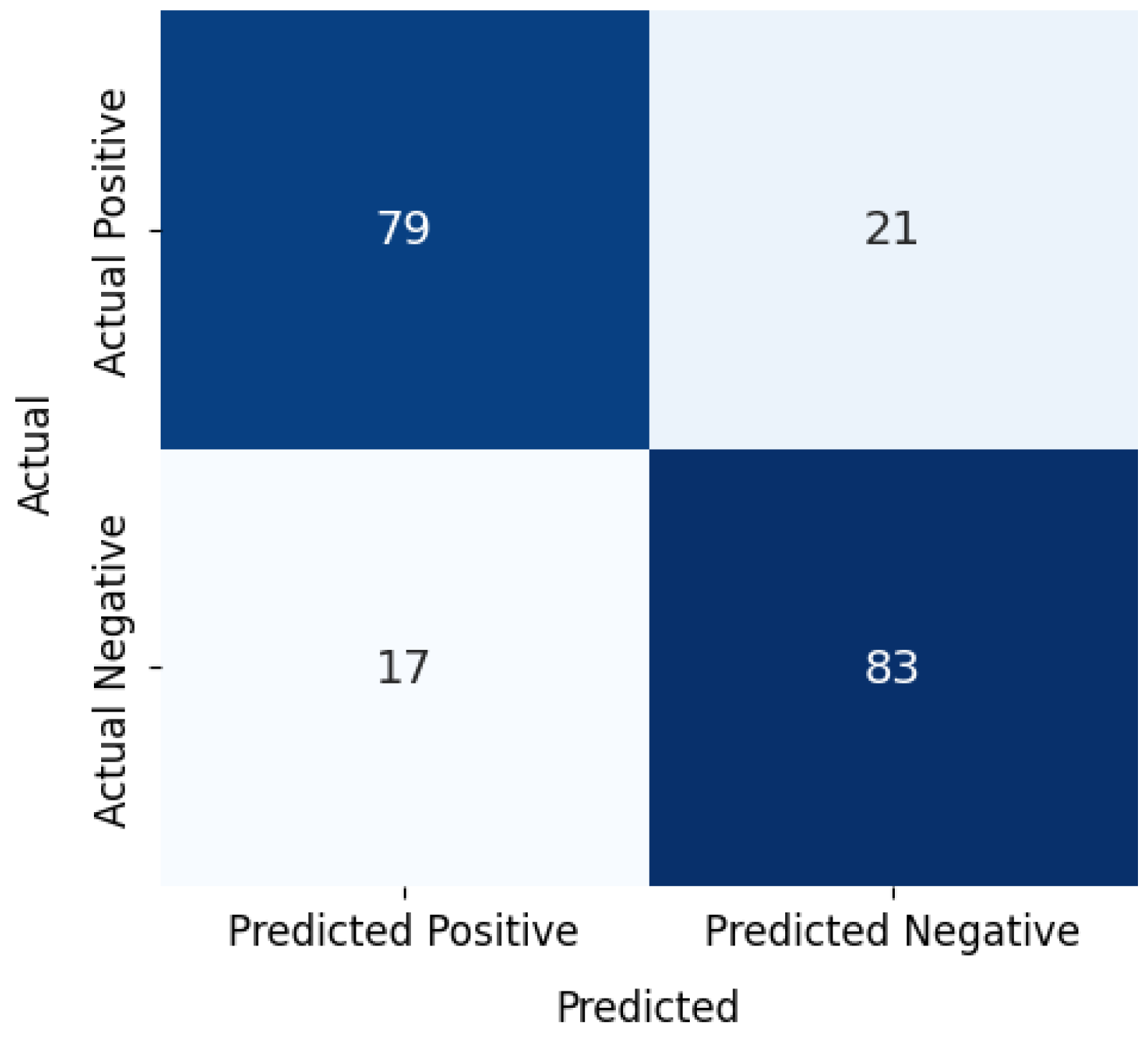

- Mathematical Reasoning Ability: In problems involving calculations (such as “resistor value calculation”), the accuracy drops to 78.4%, which is about 15% below the expert level, possibly due to insufficient numerical content in the training data.

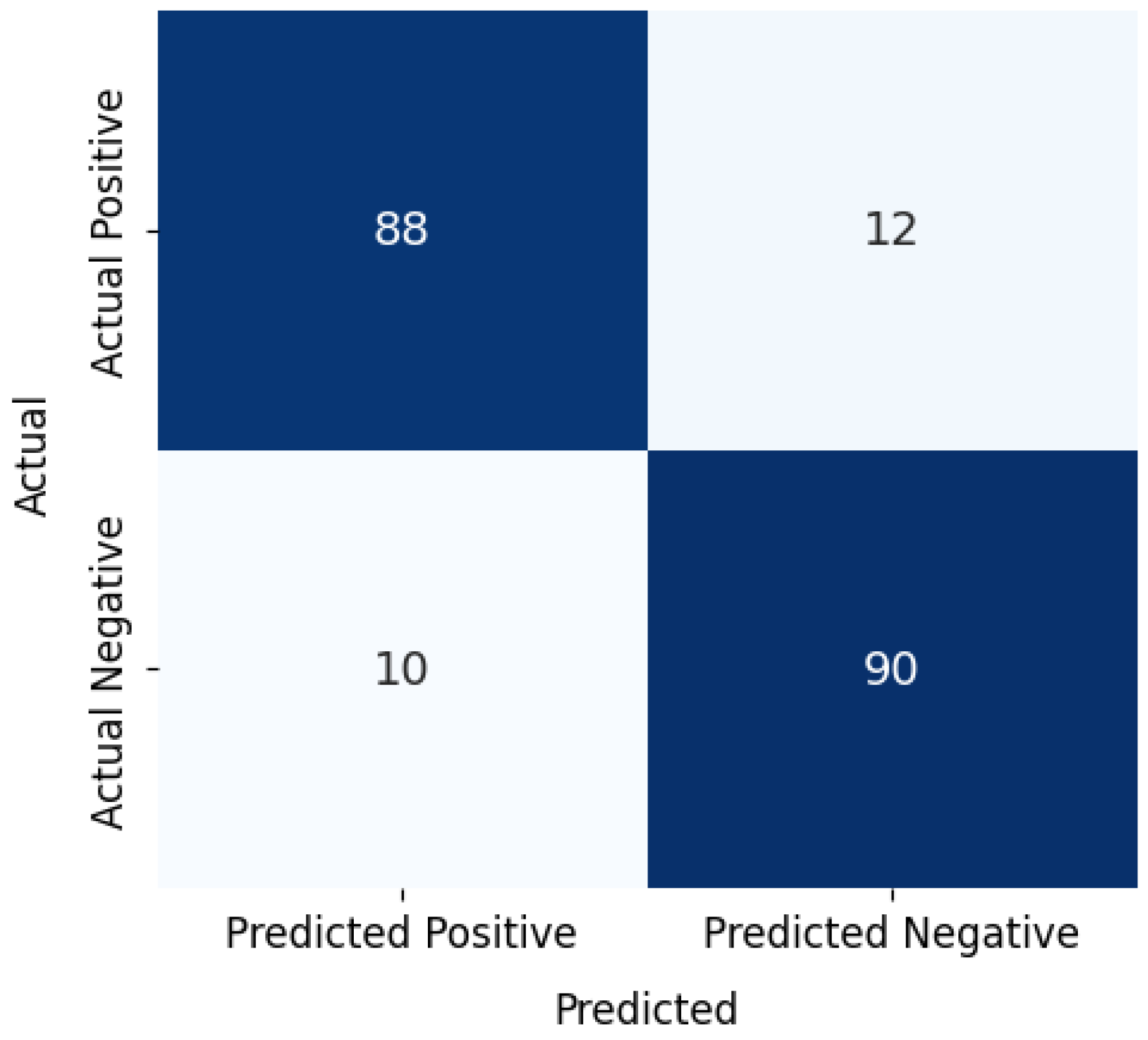

- Complex Problem Handling: In vague or multi-step reasoning problems (such as “Why does the circuit frequently lose power?”), the model occasionally generates incomplete answers, with the recall rate dropping to 80.1%.

- Model Adaptability: The fine-tuned Meta Llama 3 performed strongly in the automotive electrical domain, with significant improvements in semantic understanding and knowledge generation, meeting the adaptability standards.

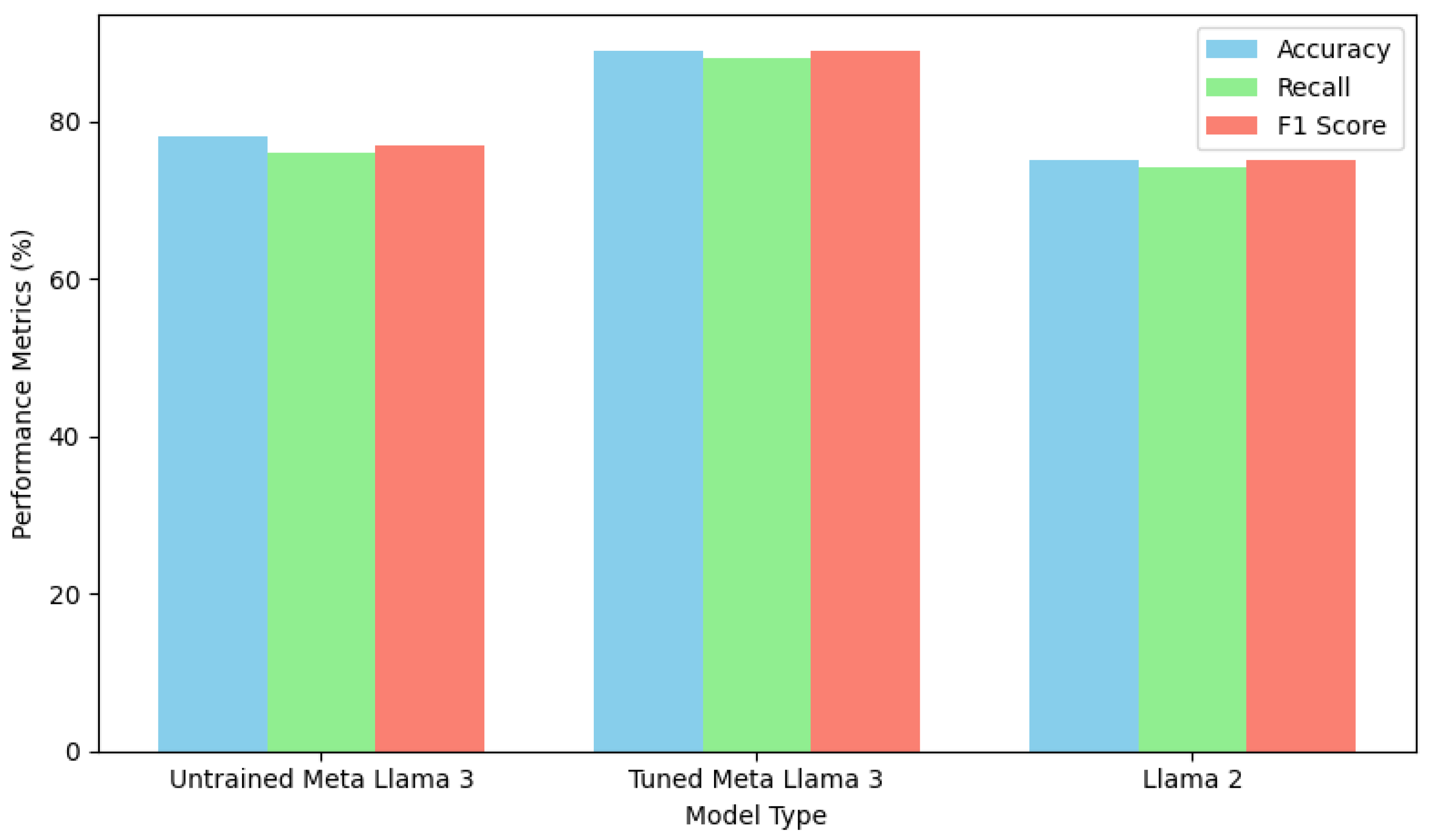

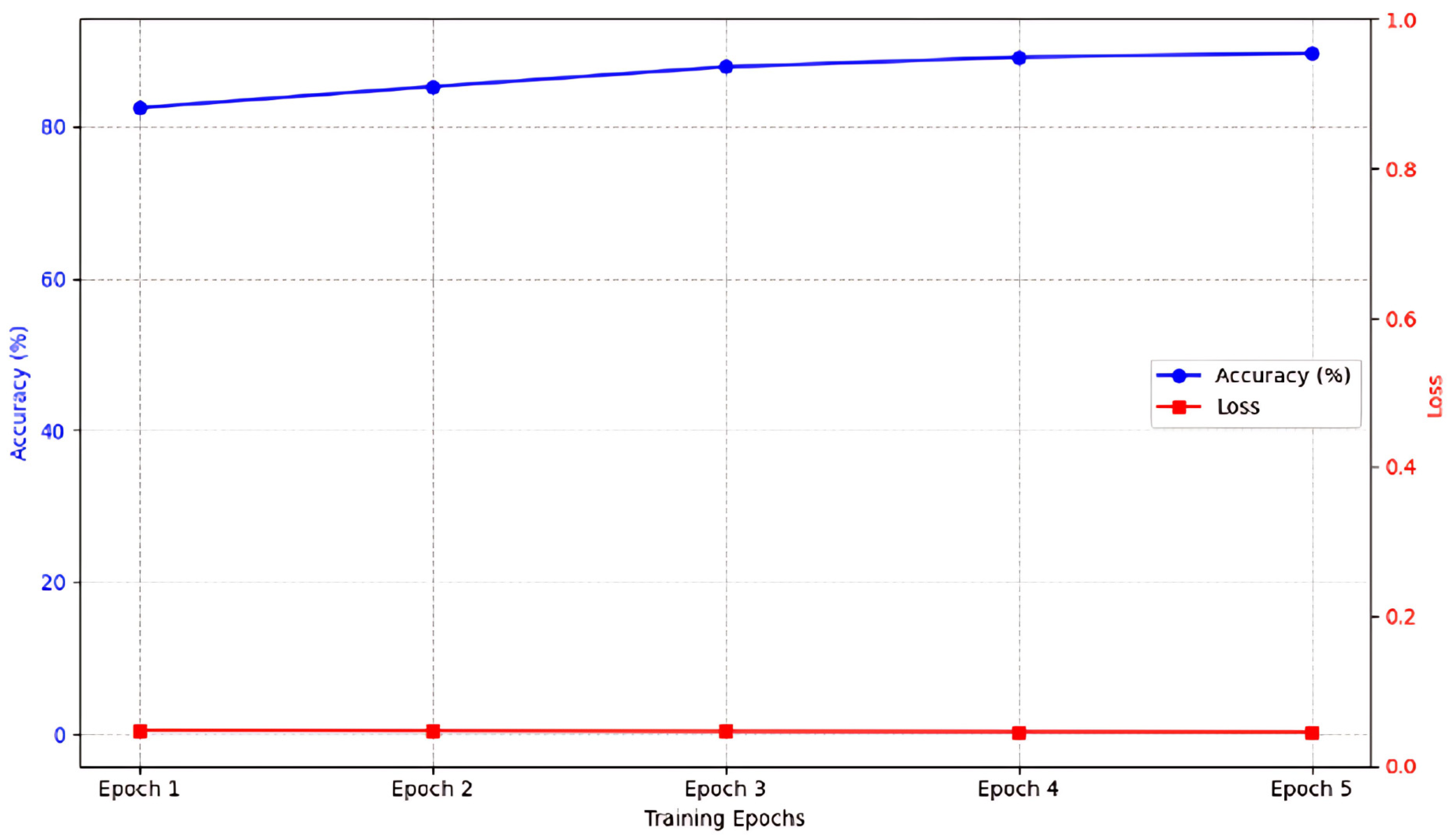

- Performance Improvement: Compared with the non-fine-tuned model and Llama 2, the fine-tuned model showed a 12–15% increase in accuracy, recall, and F1 score, and its training process was stable and converged.

- Stability and Limitations: The platform operates stably under low load but needs optimization for high-load performance, and the model still falls short in mathematical reasoning and complex problem solving. Overall, the results confirm the technical feasibility of Meta Llama 3 for the automotive electrical practice learning platform while revealing room for improvement in specific scenarios. Figure 5, Figure 6, Figure 7 and Figure 8 illustrate the performance comparisons, training trends, and stability analysis.
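The results above are reported as accuracy, recall, and F1 score, but the text does not define how each model answer was labeled. Assuming each answer is judged as a binary outcome against a reference label (1 = positive class), the metrics follow from the confusion counts as sketched below. `classification_metrics` is a hypothetical helper, not the authors’ evaluation code, and the labels in the usage block are made up for illustration.

```python
def classification_metrics(y_true: list[int], y_pred: list[int]) -> dict[str, float]:
    """Accuracy, precision, recall and F1 for binary labels (1 = positive class)."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("y_true and y_pred must be non-empty and equally long")
    if any(v not in (0, 1) for v in [*y_true, *y_pred]):
        raise ValueError("labels must be 0 or 1")
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    accuracy = (tp + tn) / len(pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    # F1 is the harmonic mean of precision and recall.
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}


if __name__ == "__main__":
    # Hypothetical labels for ten graded answers, not data from the study.
    gold = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]
    pred = [1, 1, 1, 0, 1, 0, 0, 0, 0, 0]
    print(classification_metrics(gold, pred))
```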

Task Type Evaluation and Example Cases

1. Semantic Understanding Example (Correct):

2. Fault Diagnosis Example (Correct):

3. Fault Diagnosis Example (Incorrect):

4. Mathematical Calculation Example (Correct):

5. Mathematical Calculation Example (Incorrect):

4.4. Comparative Benchmarking

5. Conclusions and Recommendations

5.1. Conclusions

- Knowledge Generation and Domain Adaptability: The fine-tuned Meta Llama 3 achieved a semantic understanding accuracy of 92.3% on the automotive electrical practice knowledge base and can effectively generate professional content, such as fault diagnosis and circuit principles. Compared with the non-fine-tuned model (accuracy of 77.3%) and Llama 2 (accuracy of 75.0%), the fine-tuned model showed an improvement of approximately 12–15% in accuracy, recall, and F1 score, proving its successful adaptation to the needs of the automotive electrical field.

- Performance Stability: The model’s performance improved steadily as the number of training rounds increased, and the F1 scores from 5-fold cross-validation were tightly clustered (87.2–90.1%, standard deviation 1.2%), indicating that the fine-tuned model is robust and consistent. The average response time in single-machine testing was 1.8 s, which meets basic application requirements.

- Technical Limitations: The model’s accuracy in mathematical calculation tasks (such as resistor value calculation) was only 78.4%, about 15% below expert levels, indicating that its reasoning capabilities need to be strengthened. Under high-load testing (50 simultaneous queries), the response time increased to 6.3 s, revealing a stability bottleneck in the platform for multi-user scenarios.
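The 5-fold cross-validation figures come from splitting the evaluation data into five folds, with each fold serving once as the held-out test set while the other four are used for training. A minimal sketch of the split and the summary statistics follows; it assumes a seeded shuffle and the sample (n - 1) standard deviation, since the paper specifies neither, and `k_fold_indices` and `summarize` are hypothetical names.

```python
import random
import statistics


def k_fold_indices(n: int, k: int = 5, seed: int = 0) -> list[tuple[list[int], list[int]]]:
    """Split indices 0..n-1 into k (train, test) pairs; every index is tested exactly once."""
    if not 2 <= k <= n:
        raise ValueError("need 2 <= k <= n")
    order = list(range(n))
    random.Random(seed).shuffle(order)  # seeded so the folds are reproducible
    # The first n % k folds get one extra item, so fold sizes differ by at most one.
    sizes = [n // k + (1 if i < n % k else 0) for i in range(k)]
    folds, start = [], 0
    for size in sizes:
        test = order[start:start + size]
        train = order[:start] + order[start + size:]
        folds.append((train, test))
        start += size
    return folds


def summarize(scores: list[float]) -> dict[str, float]:
    """Range, mean and sample standard deviation of per-fold scores (needs >= 2 scores)."""
    return {
        "min": min(scores),
        "max": max(scores),
        "mean": statistics.mean(scores),
        "stdev": statistics.stdev(scores),  # sample (n - 1) standard deviation
    }


if __name__ == "__main__":
    for fold, (train, test) in enumerate(k_fold_indices(n=20, k=5), start=1):
        print(f"fold {fold}: {len(train)} train / {len(test)} test")
```

Calling `summarize` on the five measured fold F1 scores would give the reported range and spread; `statistics.pstdev` is the population variant if that is what was reported.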

5.2. Recommendations

- Enhance Model Capabilities: To address the deficiency in mathematical computation capabilities, it is recommended to expand the training dataset to include more content related to numerical calculations (such as circuit parameter analysis) and combine it with specialized reasoning modules (such as mathematical enhancement plugins) to improve the model’s accuracy in this area. At the same time, an error self-checking mechanism can be introduced to reduce the risk of generating incomplete or incorrect responses.

- Optimize System Performance: To address stability issues under high load, it is recommended to adopt a cloud-based distributed architecture built on a scalable orchestration framework such as Kubernetes, or to upgrade the server hardware, so that response times remain acceptable when many users access the platform simultaneously [35,36,37]. Future stress tests at larger scales (such as 100 simultaneous queries) could quantify the system’s capacity limits.

- Validate Practical Applications: Although this study focused on technical performance, future work could involve students and teachers in automotive electrical practice courses in real-world testing to evaluate the platform’s effectiveness in actual teaching. For example, experimental and control groups could be used to quantify the impact on academic performance and practical skills, and user feedback could be collected to refine the functional design.

- Expand Features and Content: Consider integrating virtual reality (VR) or augmented reality (AR) technology to simulate automotive electrical hands-on scenarios and enhance students’ immersive learning experiences. At the same time, regularly update the knowledge base to cover the electrical systems of new energy vehicles (such as electric cars) to keep the platform synchronized with industrial technology development.

- Explore Other Domains: Future work could test the platform’s adaptability in other vocational education fields (such as mechanical engineering or electronic technology) to broaden its scope, and compare its performance with other large language models (such as GPT-4) to identify better technical solutions.
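Section 5.1 reports a 1.8 s average response time for single queries and 6.3 s under 50 simultaneous queries, and the recommendations suggest stress tests at around 100 queries. The sketch below shows one way to run such a test with `asyncio`: it sends all prompts concurrently and reports mean, nearest-rank 95th-percentile, and maximum latency. The server is a hypothetical simulation (`make_simulated_server`, a semaphore standing in for a fixed number of inference slots, a fixed sleep standing in for inference time); for the real platform, `query` would be replaced by an actual client call to the model endpoint, whose API the paper does not describe.

```python
import asyncio
import math
import statistics
import time
from typing import Awaitable, Callable

Query = Callable[[str], Awaitable[str]]


async def run_load_test(query: Query, prompts: list[str]) -> dict[str, float]:
    """Send every prompt concurrently and summarize per-request latency in seconds."""

    async def timed(prompt: str) -> float:
        start = time.perf_counter()
        await query(prompt)
        return time.perf_counter() - start

    ordered = sorted(await asyncio.gather(*(timed(p) for p in prompts)))
    p95_index = max(0, math.ceil(0.95 * len(ordered)) - 1)  # nearest-rank percentile
    return {
        "mean_s": statistics.mean(ordered),
        "p95_s": ordered[p95_index],
        "max_s": ordered[-1],
    }


def make_simulated_server(workers: int, service_s: float) -> Query:
    """Hypothetical stand-in for the model endpoint with `workers` inference slots."""
    slots = asyncio.Semaphore(workers)

    async def query(prompt: str) -> str:
        async with slots:  # requests beyond `workers` wait here, as in a saturated server
            await asyncio.sleep(service_s)  # pretend inference time
        return f"answer to: {prompt}"

    return query


async def simulate(n_queries: int, workers: int, service_s: float) -> dict[str, float]:
    # Build the server inside the running event loop so the semaphore binds to it.
    query = make_simulated_server(workers, service_s)
    return await run_load_test(query, [f"query {i}" for i in range(n_queries)])


if __name__ == "__main__":
    # Illustrative parameters only: 50 concurrent queries against 4 simulated slots.
    print(asyncio.run(simulate(n_queries=50, workers=4, service_s=0.05)))
```

Because the simulated server admits only `workers` requests at a time, latency grows roughly with the number of queries divided by `workers`, a queueing effect of the kind that could produce a high-load slowdown; the printed numbers are illustrative, not measurements.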

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Liao, C.W.; Lin, E.S.; Chen, B.S.; Wang, C.C.; Wang, I.C.; Ho, W.S.; Ko, Y.Y.; Chu, T.H.; Chang, K.M.; Luo, W.J. AI-Assisted Personalized Learning System for Teaching Chassis Principles. Int. J. Eng. Educ. 2025, 41, 548–560.

- Liao, C.-W.; Liao, H.-K.; Chen, B.-S.; Tseng, Y.-J.; Liao, Y.-H.; Wang, I.-C.; Ho, W.-S.; Ko, Y.-Y. Inquiry Practice Capability and Students’ Learning Effectiveness Evaluation in Strategies of Integrating Virtual Reality into Vehicle Body Electrical System Comprehensive Maintenance and Repair Services Practice: A Case Study. Electronics 2023, 12, 2576.

- Strielkowski, W.; Grebennikova, V.; Lisovskiy, A.; Rakhimova, G.; Vasileva, T. AI-driven adaptive learning for sustainable educational transformation. Sustain. Dev. 2025, 33, 1589–3169.

- Xu, H.; Gan, W.; Qi, Z.; Wu, J.; Yu, P.S. Large language models for education: A survey. arXiv 2024, arXiv:2405.13001.

- Hadi, M.U.; Qureshi, R.; Shah, A.; Irfan, M.; Zafar, A.; Shaikh, M.B.; Mirjalili, S. A survey on large language models: Applications, challenges, limitations, and practical usage. Authorea Prepr. 2023, 3, 1–56. Available online: https://www.researchgate.net/profile/Muhammad-Shaikh-9/publication/383818024_Large_Language_Models_A_Comprehensive_Survey_of_its_Applications_Challenges_Limitations_and_Future_Prospects/links/66dffb06b1606e24c21d8936/Large-Language-Models-A-Comprehensive-Survey-of-its-Applications-Challenges-Limitations-and-Future-Prospects.pdf (accessed on 10 January 2025).

- Gupta, A. Designing and Deploying Scalable Intelligent Tutoring Systems to Enhance Adult Education. Doctoral Dissertation, Drexel University, Philadelphia, PA, USA, 2025. Available online: https://www.proquest.com/openview/10eecba5e602d412a0c9ff8fdf92ba23/1?pq-origsite=gscholar&cbl=18750&diss=y (accessed on 15 January 2025).

- Raimondi, B. Large Language Models for Education. Master’s Thesis, University of Bologna, Bologna, Italy, 2023. Available online: https://amslaurea.unibo.it/id/eprint/29693 (accessed on 25 January 2025).

- Sharma, S.; Mittal, P.; Kumar, M.; Bhardwaj, V. The role of large language models in personalized learning: A systematic review of educational impact. Discov. Sustain. 2025, 6, 243.

- Chopra, D. Smart Classrooms, Smarter Teachers: A Deep Dive into AI-Driven Educational Enhancement. J. Inform. Educ. Res. 2023, 3, 2813–2822.

- Coelho, A.M.L.; da Silva, H.F.; da Silva, L.A.C.; Andrade, M.E.; da Silva Rodrigues, R.G. Inteligência artifical: Suas vantagens e limites em cursos à distância. Rev. Ilus. 2023, 4, 23–27.

- Song, C.; Shin, S.-Y.; Shin, K.-S. Implementing the Dynamic Feedback-Driven Learning Optimization Framework: A Machine Learning Approach to Personalize Educational Pathways. Appl. Sci. 2024, 14, 916.

- VanLehn, K. The relative effectiveness of human tutoring, intelligent tutoring systems, and other tutoring systems. Educ. Psychol. 2011, 46, 197–221.

- Koedinger, K.R.; McLaughlin, E.A.; Stamper, J.C. Automated Student Model Improvement. In Proceedings of the 5th International Conference on Educational Data Mining, Chania, Greece, 19–21 June 2012; Available online: https://eric.ed.gov/?id=ED537201 (accessed on 25 January 2025).

- Aleven, V.A.; Koedinger, K.R. An effective metacognitive strategy: Learning by doing and explaining with a computer-based cognitive tutor. Cogn. Sci. 2002, 26, 147–179.

- Corbett, A.T.; Anderson, J.R. Knowledge tracing: Modeling the acquisition of procedural knowledge. User Model. User-Adapt. Interact. 1994, 4, 253–278.

- Tecson, C. Intelligent Learning System for Automata (ILSA) and the learners’ achievement goal orientations. In Proceedings of the 2018: ICCE 2018: The 26th International Conference on Computers in Education, Manila, Philippines, 26–30 November 2018; Available online: https://library.apsce.net (accessed on 25 January 2025).

- Treve, M. Integrating artificial intelligence in education: Impacts on student learning and innovation. Int. J. Vocat. Educ. Train. Res. 2024, 10, 61–69.

- Prasetya, F.; Fortuna, A.; Samala, A.D.; Latifa, D.K.; Andriani, W.; Gusti, U.A.; Raihan, M.; Criollo-C, S.; Kaya, D.; García, J.L.C. Harnessing artificial intelligence to revolutionize vocational education: Emerging trends, challenges, and contributions to SDGs 2030. Soc. Sci. Humanit. Open 2025, 11, 101401.

- Lin, C.-C.; Huang, A.Y.-Q.; Lu, O.H.-T. Artificial intelligence in intelligent tutoring systems toward sustainable education: A systematic review. Smart Learn. Environ. 2023, 10, 41.

- Hwang, G.-J.; Xie, H.; Wah, B.W.; Gašević, D. Vision, challenges, roles and research issues of artificial intelligence in education. Comput. Educ. Artif. Intell. 2020, 1, 100001.

- Zhang, S.; Kuang, K.; Lyu, C.; Li, J.; Xiao, J.; Wu, F.; Wu, F. Device-Cloud Collaborative Intelligent Computing: Key Problems, Methods, and Applications. Strateg. Study Chin. Acad. Eng. 2024, 26, 127–138.

- Stavroulia, K.E.; Baka, E.; Lanitis, A. VR-Based Teacher Training Environments: A Systematic Approach for Defining the Optimum Appearance of Virtual Classroom Environments. Virtual Worlds 2025, 4, 6.

- Uygun, Y.; Momodu, V. Local large language models to simplify requirement engineering documents in the automotive industry. Prod. Manuf. Res. 2024, 12, 2375296.

- Momodu, V. Using local large language models to simplify requirement engineering documents in the automotive industry. In Logistics Engineering and Technologies Group-Working Paper Series 2023-04; Elsevier: Amsterdam, The Netherlands, 2023.

- López-Villanueva, D.; Santiago, R.; Palau, R. Flipped Learning and Artificial Intelligence. Electronics 2024, 13, 3424.

- Katona, J.; Gyonyoru, K.I.K. Integrating AI-based adaptive learning into the flipped classroom model to enhance engagement and learning outcomes. Comput. Educ. Artif. Intell. 2025, 8, 100392.

- Li, B.; Peng, M. Integration of an AI-Based Platform and Flipped Classroom Instructional Model. Sci. Program. 2022, 2022, 2536382.

- Mukul, E.; Büyüközkan, G. Digital transformation in education: A systematic review of education 4.0. Technol. Forecast. Soc. Chang. 2023, 194, 122664.

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is all you need. In Proceedings of the Advances in Neural Information Processing Systems 30 (NIPS 2017), Long Beach, CA, USA, 4–9 December 2017.

- Abu-Salih, B.; Alotaibi, S. A systematic literature review of knowledge graph construction and application in education. Heliyon 2024, 10, e25383. Available online: https://www.cell.com/heliyon/fulltext/S2405-8440(24)01414-2 (accessed on 22 January 2025).

- Trajanoska, M.; Stojanov, R.; Trajanov, D. Enhancing knowledge graph construction using large language models. arXiv 2023, arXiv:2305.04676.

- Zhao, M.; Wang, H.; Guo, J.; Liu, D.; Xie, C.; Liu, Q.; Cheng, Z. Construction of an Industrial Knowledge Graph for Unstructured Chinese Text Learning. Appl. Sci. 2019, 9, 2720.

- Kirchner, S.; Knoll, A.C. Generating Automotive Code: Large Language Models for Software Development and Verification in Safety-Critical Systems. arXiv 2025, arXiv:2506.04038.

- Nouri, A.; Cabrero-Daniel, B.; Fei, Z.; Ronanki, K.; Sivencrona, H.; Berger, C. Large Language Models in Code Co-generation for Safe Autonomous Vehicles. arXiv 2025, arXiv:2505.19658.

- Govea, J.; Ocampo Edye, E.; Revelo-Tapia, S.; Villegas-Ch, W. Optimization and Scalability of Educational Platforms: Integration of Artificial Intelligence and Cloud Computing. Computers 2023, 12, 223.

- Geetha, J.; Jayalakshmi, D.S.; Naresh, E.; Sreenivasa, N. Lightweight Cloud-Based Solution for Digital Education and Assessment. Sci. Technol. Libr. 2024, 43, 274–286.

- Xu, X. AI optimization algorithms enhance higher education management and personalized teaching through empirical analysis. Sci. Rep. 2025, 15, 10157.

| Task Type | Example Question | Correct Model Response | Incorrect Model Response |
|---|---|---|---|
| Semantic Understanding | What is the function of an automotive ignition coil? | An ignition coil transforms low battery voltage into high voltage needed to create a spark in the spark plugs. | It powers the fuel pump. |
| Fault Diagnosis | If the car headlight flickers intermittently, what could be a likely cause? | Possible causes include a loose ground connection, corroded bulb terminals, or a faulty headlight relay. | The battery may be dead. |
| Mathematical Calculation | Given a 12 V battery and two resistors (R1 = 4 Ω, R2 = 6 Ω) in series, what is the voltage drop across R2? | The total resistance is 10 Ω. Current is 1.2 A. Voltage drop across R2 is 7.2 V. | The voltage drop is 6 V. |
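The mathematical calculation row can be checked with Ohm’s law: the series resistance is 4 Ω + 6 Ω = 10 Ω, the current is 12 V / 10 Ω = 1.2 A, and the drop across R2 is 1.2 A × 6 Ω = 7.2 V. A numeric checker of this kind is one possible form of the error self-checking mechanism proposed in the recommendations. The sketch below recomputes the expected value and compares it with the last number-with-unit in a model answer; `series_voltage_drops`, `check_numeric_answer`, and the 1% tolerance are hypothetical choices, and the regular expression assumes answers state values as “number unit” (for example “7.2 V”).

```python
import re


def series_voltage_drops(supply_v: float, resistances_ohm: list[float]) -> list[float]:
    """Voltage across each resistor of a series circuit with an ideal source (Ohm's law)."""
    if not resistances_ohm or any(r <= 0 for r in resistances_ohm):
        raise ValueError("need at least one positive resistance")
    current_a = supply_v / sum(resistances_ohm)  # one current flows through all series elements
    return [current_a * r for r in resistances_ohm]


def check_numeric_answer(answer: str, expected: float, unit: str = "V",
                         rel_tol: float = 0.01) -> bool:
    """Hypothetical self-check: compare the last '<number> <unit>' value in an answer."""
    values = re.findall(rf"(-?\d+(?:\.\d+)?)\s*{re.escape(unit)}\b", answer)
    if not values:
        return False  # no value with the expected unit: treat as unverifiable
    return abs(float(values[-1]) - expected) <= rel_tol * abs(expected)


if __name__ == "__main__":
    v_r1, v_r2 = series_voltage_drops(12.0, [4.0, 6.0])
    correct = "The total resistance is 10 Ω. Current is 1.2 A. Voltage drop across R2 is 7.2 V."
    wrong = "The voltage drop is 6 V."
    print(round(v_r2, 3), check_numeric_answer(correct, v_r2), check_numeric_answer(wrong, v_r2))
```

With these inputs the checker accepts the correct response and rejects the 6 V response; answers that spell out units (“7.2 volts”) would need a broader pattern.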

| Model | Accuracy (%) | Recall (%) | F1 Score (%) |
|---|---|---|---|
| Meta Llama 3 (fine-tuned) | 89.7 | 87.5 | 88.6 |
| BERT-base | 81.2 | 79.4 | 80.1 |
| GPT-2 | 76.5 | 74.8 | 75.6 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Huang, Y.-C.; Tsai, H.-J.; Liang, H.-T.; Chen, B.-S.; Chu, T.-H.; Ho, W.-S.; Huang, W.-L.; Tseng, Y.-J. Development of an Automotive Electronics Internship Assistance System Using a Fine-Tuned Llama 3 Large Language Model. Systems 2025, 13, 668. https://doi.org/10.3390/systems13080668

Huang Y-C, Tsai H-J, Liang H-T, Chen B-S, Chu T-H, Ho W-S, Huang W-L, Tseng Y-J. Development of an Automotive Electronics Internship Assistance System Using a Fine-Tuned Llama 3 Large Language Model. Systems. 2025; 13(8):668. https://doi.org/10.3390/systems13080668

Chicago/Turabian Style: Huang, Ying-Chia, Hsin-Jung Tsai, Hui-Ting Liang, Bo-Siang Chen, Tzu-Hsin Chu, Wei-Sho Ho, Wei-Lun Huang, and Ying-Ju Tseng. 2025. "Development of an Automotive Electronics Internship Assistance System Using a Fine-Tuned Llama 3 Large Language Model" Systems 13, no. 8: 668. https://doi.org/10.3390/systems13080668

APA Style: Huang, Y.-C., Tsai, H.-J., Liang, H.-T., Chen, B.-S., Chu, T.-H., Ho, W.-S., Huang, W.-L., & Tseng, Y.-J. (2025). Development of an Automotive Electronics Internship Assistance System Using a Fine-Tuned Llama 3 Large Language Model. Systems, 13(8), 668. https://doi.org/10.3390/systems13080668