Abstract

Programming education has become increasingly vital within global K–12 curricula, and large language models (LLMs) offer promising solutions to systemic challenges such as limited teacher expertise and insufficient personalized support. Adopting a human-centric and systems-oriented perspective, this study employed a six-week quasi-experimental design involving 103 Grade 7 students in China to investigate the effects of instruction supported by the iFLYTEK Spark model. Results showed that the experimental group significantly outperformed the control group in programming performance, cognitive interest, and programming self-efficacy. Beyond these quantitative outcomes, qualitative interviews revealed that LLM-assisted instruction enhanced students’ self-directed learning, fostered a sense of real-time human–machine interaction, and encouraged exploratory learning behaviors, together forming an intelligent human–AI learning system. These findings underscore the integrative potential of LLMs to support competence, autonomy, and engagement within digital learning systems. This study concludes by discussing the implications for intelligent educational system design and directions for future socio-technical research.

1. Introduction

Programming education has garnered increasing attention in global K–12 curricula [1,2,3] and is now recognized as a critical emerging form of literacy [4]. Extensive literature has underscored its pivotal role in fostering students’ computational thinking [5,6] and cultivating essential 21st-century skills [7,8,9]. Despite its acknowledged importance, programming education continues to face significant challenges, notably insufficient teacher expertise [10] and difficulties in delivering effective personalized instruction [11], both of which constrain its widespread adoption and overall effectiveness.

Rapid advances in the development of large language models (LLMs) have introduced new opportunities for programming education. Research has indicated that LLMs can provide personalized guidance tailored to students’ specific needs, facilitate dynamic and interactive learning environments, and offer intelligent error detection along with real-time feedback throughout the learning process [12,13,14,15]. These capabilities position LLMs as promising technological solutions to address the persistent challenges faced in programming education. While LLMs can generate code, human learners must still possess programming knowledge to determine whether the output is appropriate, safe, and efficient.

Although scholars have begun to explore the application of LLMs in programming education [16,17,18,19,20], research in this field remains relatively limited; a recent systematic review by Raihan et al. [21], for example, identified only 69 primary studies on this topic. Further, existing findings are inconclusive: while several studies have demonstrated the potential of LLMs to enhance students’ programming performance and learning engagement [19,22,23], other researchers have raised concerns about students’ growing dependence on AI tools, which may hinder their independent problem-solving and reduce their sense of self-efficacy [20,24,25]. Most research has also been conducted in higher education settings, with limited attention given to the K–12 context [21], and studies from developing regions remain scarce [26]. In addition, current investigations tend to focus on students’ programming performance while neglecting the affective and cognitive dimensions of learning [16,18,19,24]. Finally, most interventions have been short-term, and there is a lack of longitudinal research on the sustained impact of LLMs in programming education.

To address these gaps, the present study employed a quasi-experimental design in a Chinese K–12 educational context and implemented a six-week instructional intervention. Specifically, this research examined not only students’ programming performance but also their affective and cognitive interest in learning, as well as changes in their self-efficacy.

2. Literature Review

2.1. Programming Education

Programming education has gained increasing attention worldwide owing to the rapid development of information and communication technologies. In the 21st century, programming is regarded not merely as a technical skill but also as an emerging form of literacy [4]. Programming is widely acknowledged for its pivotal role in fostering students’ computational thinking [5,6,27], creativity, logical reasoning, problem-solving abilities, and abstract thinking skills [28]. Extensive research has also underscored that programming education is essential for cultivating the core competencies required in the 21st century [7,8,9] and that it significantly enhances students’ cognitive potential [29]. An increasing number of countries have thus integrated programming into their school curricula and progressively extended programming education to lower grade levels [30,31,32]. This global trend has been further reinforced by the European Union’s Digital Education Action Plan 2021–2027, which explicitly recognizes programming competence as a core element for future societal innovation and advocates curricular reforms to promote programming education universally and at younger ages [33].

However, implementing effective programming instruction remains challenging. Students frequently struggle with programming because they find it difficult to master abstract concepts and apply logical reasoning, especially when they lack prior computational experience [34,35]. Such difficulties can lead to lower academic outcomes and poorer programming achievement [31]. Further, difficulty comprehending complex programming concepts often results in frustration, which can diminish students’ interest in learning [35,36]. Self-efficacy has also been identified as a critical factor influencing programming learning. Research has suggested that students with low self-efficacy often experience anxiety and discomfort during programming tasks, which adversely affects their learning engagement and persistence [37,38]. This issue is particularly pronounced among beginners, who typically lack sufficient programming experience to build confidence [39]. Thus, providing positive programming experiences that gradually build students’ self-efficacy, enhance their learning interest, and improve their programming performance has become an essential objective for future programming education [38].

2.2. Large Language Models

LLMs are advanced AI systems based on deep learning and natural language processing techniques. They are characterized by their massive parameter sizes and extensive training corpora [40,41]. Well-known LLMs, such as BERT, ChatGPT (GPT-3/4), Bard, LLaMA, and DeepSeek, have achieved significant performance improvements in tasks that involve interpreting and generating language [42,43,44,45]. These capabilities have prompted growing interest in leveraging LLMs in education and related domains [46,47].

LLMs possess three technical features crucial to programming education. First, LLMs provide real-time and context-sensitive feedback so that students can receive immediate and adaptive responses when they encounter programming errors or pose questions, thus facilitating timely correction and reducing frustration [12,42]. Second, LLMs support natural language interaction, thus allowing students to express their programming intentions or inquiries in everyday language without relying on rigid syntactic commands. This lowers the learning threshold and makes programming more accessible to beginners [47]. Third, LLMs exhibit a certain level of reasoning and generalization ability, which not only allows them to explain programming concepts and generate code, but also to provide personalized guidance based on students’ proficiency levels and misconceptions [41,48].

These features underpin a wide range of applications in education. For example, LLMs can be used to generate instructional materials such as quizzes, multiple-choice questions, and code exercises automatically, which can reduce teacher workload and diversify resources [49,50,51,52]. They have also been shown to enhance learners’ curiosity and peer feedback mechanisms through interactive agents and collaborative assessment models [53,54]. While some applications are grounded in higher or professional education, such as medical education [48,55], the underlying instructional logic—real-time feedback, personalized support, and language-mediated code generation—is highly transferable to K–12 programming learning environments.

2.3. Large Language Models and Programming Education

The integration of LLMs into programming education has emerged as a growing research focus in recent years, with studies indicating that LLMs offer significant benefits for learners. For example, Okonkwo and Ade-Ibijola [16] developed Python-Bot, a tool that provides immediate coding guidance to beginners, and found that it reduced learning difficulty and improved students’ confidence. Groothuijsen et al. [18] assessed the application of ChatGPT in a master’s program in the Netherlands and found that students generally believed that ChatGPT effectively helped them understand complex code and supported debugging, code generation, and optimization. Students regarded ChatGPT as a “secondary tutor”, particularly for explaining code and solving mathematical problems, and reported that it significantly enhanced their learning efficiency and programming performance. These results suggest that ChatGPT can provide powerful programming assistance and support students at different stages of the learning process. Similarly, Yilmaz and Karaoglan Yilmaz [56] studied university students’ experiences of using ChatGPT to learn programming in Turkey and found that students generally believed ChatGPT provided quick and effective feedback, enhanced their cognitive abilities, facilitated the debugging process, and increased their programming confidence. Students’ feedback indicated that ChatGPT performed exceptionally well in boosting motivation and improving their efficiency in solving programming problems.

Numerous experimental studies have also provided empirical evidence that LLMs can significantly enhance students’ programming performance. For example, Lyu et al. [19] evaluated the LLM-based programming assistance tool, CodeTutor, in an undergraduate programming course at a university in the United States. They found that students in the experimental group had an average score of 102.29 on the final exam, which was significantly higher than the control group’s 93.40, with a score difference of 8.89 points and a medium to large effect size (Hedges’ g = 0.69). This suggests that CodeTutor effectively improved students’ programming performance, particularly in syntax comprehension and task completion. In a quasi-experimental study at Sultan Qaboos University in Oman, Abdulla et al. [22] found that students using ChatGPT performed significantly better on a programming exam than the control group (78.85 vs. 58.08, p = 0.0004). These results indicate that LLMs can significantly enhance students’ programming performance in education, especially in task understanding and debugging.
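The effect sizes cited here are standardized mean differences. As a minimal sketch of how such values are typically computed from group summary statistics (the function name and the illustrative numbers below are our own assumptions, not data from [19] or [20]), Cohen’s d divides the mean difference by the pooled standard deviation, and Hedges’ g multiplies d by the small-sample correction J = 1 − 3/(4(n1 + n2) − 9):

```python
from math import sqrt


def hedges_g(mean1, sd1, n1, mean2, sd2, n2):
    """Return (Cohen's d, Hedges' g) for two independent groups.

    Uses the pooled standard deviation with (n - 1) weighting and the common
    approximation J = 1 - 3 / (4 * df - 1) for the bias correction, where
    df = n1 + n2 - 2 (equivalent to 1 - 3 / (4 * (n1 + n2) - 9)).
    """
    df = n1 + n2 - 2
    sd_pooled = sqrt(((n1 - 1) * sd1 ** 2 + (n2 - 1) * sd2 ** 2) / df)
    d = (mean1 - mean2) / sd_pooled
    j = 1 - 3 / (4 * df - 1)
    return d, d * j


# Hypothetical groups of 20 students each; values chosen only for illustration.
d, g = hedges_g(10.0, 2.0, 20, 9.0, 2.0, 20)
print(f"d = {d:.3f}, g = {g:.3f}")  # prints d = 0.500, g = 0.490
```

The sign depends on which group is entered first, so a negative value simply means the first group scored lower; the correction J matters most for small samples and approaches 1 as the groups grow.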

Despite the significant advantages of LLMs in improving students’ programming abilities, research has also highlighted their limitations. Groothuijsen et al. [18] found that although ChatGPT can provide support in code debugging, error checking, and concept understanding, it had limited effectiveness in promoting critical thinking. Students generally felt that ChatGPT made no significant contribution to developing deep reasoning and advanced programming skills. Similarly, Yilmaz and Karaoglan Yilmaz [56] pointed out that although ChatGPT boosts students’ confidence and efficiency in problem-solving, it still falls short in addressing critical thinking and complex tasks. In a study at the University of Arkansas, Johnson et al. [20] found that students who engaged in self-directed programming outperformed the ChatGPT-assisted group in both programming self-efficacy (Cohen’s d = −0.75) and posttest programming performance (Cohen’s d = −0.76). Johnson et al. [20] suggested that, while ChatGPT is somewhat helpful for beginner programming, students who program independently are better able to enhance their programming confidence and ability, particularly in mastering some programming skills in depth.

Although previous studies have identified both the advantages and limitations of LLMs in programming education, the overall findings remain inconsistent, and no clear consensus has been reached. Some evidence suggests that LLMs can enhance students’ learning performance and motivation, while other studies indicate potential drawbacks, such as overreliance on AI tools, limited performance gains, or even reduced self-efficacy. Most research has also concentrated on short-term outcomes and basic skills, with insufficient attention paid to the sustained and multidimensional effects of LLMs over time. These contradictions and methodological limitations underscore the need for further empirical research that systematically examines how LLMs affect students’ learning performance, interest, and self-efficacy in programming education. Accordingly, this study addresses the following research questions:

RQ1: What are the effects of integrating LLMs on students’ programming performance?

RQ2: What are the effects of employing LLMs on students’ interest in learning programming in terms of affective interest and cognitive interest?

RQ3: What are the effects of using LLMs on students’ programming self-efficacy?

3. Method

3.1. Participants

This study involved 103 seventh-grade middle school students from a school in W City, China. All of the participants were enrolled in an information technology course with a focus on Python programming. The experimental group consisted of 52 students (30 males and 22 females), and the control group comprised 51 students (28 males and 23 females). All of the sessions were conducted by the same instructor in the school’s computer laboratory using standardized equipment. The instructional content covered core Python programming concepts, such as input/output functions and data types.

This study did not adopt randomized group assignment due to practical and ethical constraints in authentic school settings. Random reallocation of students could disrupt the existing classroom dynamics and peer relationships, thereby lowering the ecological validity of this study. Instead, two naturally formed parallel classes were selected. These classes were established at the beginning of the academic year through standard administrative procedures. In accordance with national education policy, tracked classes—such as honors or remedial sections—are prohibited at the compulsory level in China. Students were assigned to different classes upon enrollment regardless of academic performance or background.

A pretest on programming knowledge was administered alongside surveys assessing students’ learning interest and programming self-efficacy in order to check the initial equivalence of the two groups. The results indicated no significant differences between the two groups on these variables, supporting their comparability at baseline.

3.2. Research Design

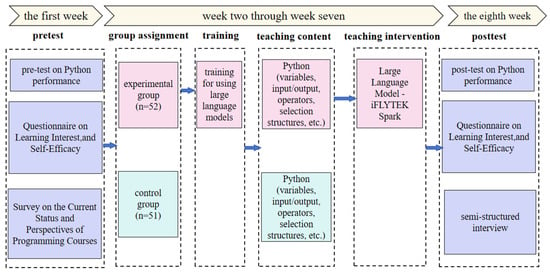

A quasi-experimental design was employed to examine the effectiveness of iFLYTEK Spark in programming education. Two naturally formed classes were selected, and a six-week instructional intervention was implemented, with one 40 min programming session per week. The course content included basic Python concepts, such as sequential structures, selection structures, variables, input/output, and operators. The experimental procedure was divided into three phases.

Pre-experimental phase: Prior to the intervention, all of the participants completed a programming performance test, a learning interest questionnaire, and a self-efficacy questionnaire to establish baseline data for subsequent comparisons.

Experimental phase: Students in the experimental group received initial training on the use of the iFLYTEK Spark LLM, focusing on how to use its real-time feedback, error correction, and personalized learning support during programming exercises. In subsequent lessons, they used the model to assist with problem-solving and learning tasks. The control group followed traditional instruction without intelligent tool support. In both groups, each lesson began with the instructor’s explanation of concepts, followed by programming practice.

We did not administer weekly tests or questionnaires during the six-week intervention period for three reasons. First, class time was limited and was primarily used for instruction and hands-on programming tasks, leaving little room for frequent standardized assessments. Second, we aimed to examine the sustained effects of LLM-assisted instruction over time rather than short-term fluctuations. Third, repeated weekly questionnaires or tests might have induced fatigue or resistance among students, potentially interfering with authentic learning experiences. Therefore, we adopted a traditional pretest–posttest approach to capture overall learning outcomes.

Post-experimental phase: Upon completion of the intervention, all students took the programming posttest and completed the learning interest and self-efficacy questionnaires again. Semi-structured interviews were conducted with selected students from the experimental group to gain deeper insights into their learning experiences. The detailed experimental design is illustrated in Figure 1.

Figure 1.

Quasi-experimental design of this study.

To mitigate potential issues commonly reported in previous studies—such as overreliance on LLM tools, diminished learner autonomy, and academic dishonesty—specific control measures were integrated into the research design. The use of iFLYTEK Spark was restricted to designated practice sessions under teacher supervision. Students were clearly informed that final assessments would be completed independently without the aid of any intelligent tools. Additionally, all instructional activities were conducted in a monitored computer lab, where teachers could observe students’ on-screen behavior in real time. These design elements aimed to promote responsible tool usage, reinforce student accountability, and ensure the integrity of learning outcomes.

3.3. Instructional Intervention

3.3.1. Overview of iFLYTEK Spark

The iFLYTEK Spark LLM was developed by iFLYTEK Co., Ltd. (Hefei, China). Similar to ChatGPT, it offers advanced natural language processing capabilities such as text generation, translation, and question answering. iFLYTEK is a leading artificial intelligence company in China, and its products are widely used in the education sector, particularly in basic education. iFLYTEK Spark was selected for this study because of its technological maturity, extensive application in educational contexts, and positive user feedback. In addition, the model is freely accessible in mainland China and has been reported in technical benchmarks to perform comparably to leading international LLMs such as GPT-4 in areas including code generation, logical reasoning, and multi-turn dialog.

3.3.2. Integration of iFLYTEK Spark into Instruction

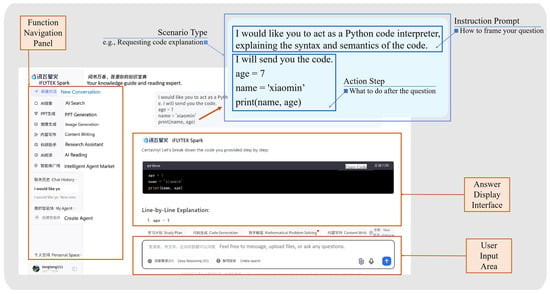

At the outset of the intervention, a 20 min training session was provided to the experimental group to introduce the basic concepts of LLMs, the functionalities of the iFLYTEK Spark platform, and effective questioning strategies. Students were taught how to structure their queries by defining roles, providing background information, clarifying objectives, and specifying output requirements. Techniques for formulating follow-up questions to obtain more precise responses were also covered.
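The four-part query structure taught in the training session can be illustrated with a small template. The sketch below is hypothetical: the helper names, field labels, and example content are our own and are not taken from the iFLYTEK Spark platform or the study’s Programming Question Guide. It only shows how a role, background information, an objective, and output requirements might be combined into one prompt, plus a follow-up question that refers back to an earlier answer.

```python
def build_structured_prompt(role, background, objective, output_requirements):
    """Combine the four prompt components into one query string (hypothetical helper)."""
    sections = [
        ("Role", role),
        ("Background", background),
        ("Objective", objective),
        ("Output requirements", output_requirements),
    ]
    # Skip empty components so partially filled prompts stay readable.
    return "\n".join(f"{label}: {text}" for label, text in sections if text)


def build_follow_up(previous_topic, clarification):
    """Form a follow-up question that points back to the model's previous answer."""
    return f"About your explanation of {previous_topic}: {clarification}"


prompt = build_structured_prompt(
    role="You are a patient Python tutor for Grade 7 beginners.",
    background="My program reads age = int(input()) and crashes when I type 'twelve'.",
    objective="Help me understand why the error happens, without giving the full answer.",
    output_requirements="Explain in three short steps and show one small example.",
)
print(prompt)
print(build_follow_up("ValueError", "Could you show how to check the input before converting it?"))
```

Keeping each component on its own labeled line is one simple design choice; it makes it easy for a beginner to see which part of the query is missing before sending it.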

After the training, students received individual accounts to access iFLYTEK Spark and practiced using the platform. During subsequent lessons, students were encouraged to consult the model freely for support during programming exercises. To enhance the effectiveness of interactions, a Programming Question Guide was introduced to help students formulate structured prompts when seeking help from the LLM. Figure 2 presents a real example from this guide, illustrating how students engaged with iFLYTEK Spark through structured prompting strategies. (See Appendix B for additional sample entries from the guide.)

Figure 2.

Student use of the programming question guide in iFLYTEK Spark interaction.

3.4. Data Collection Instruments

3.4.1. Programming Performance Test

Two sets of programming performance tests were designed for the pretest and posttest. These were based on content from the Zhejiang Province compulsory education textbook Information Technology Grade 8 (Volume I) and the instructional material covered during the intervention. Test items were developed under the guidance of an experienced teacher. The pretest included 10 multiple-choice questions assessing programming knowledge and two short programming tasks evaluating practical skills. The posttest expanded on these areas, containing 15 multiple-choice questions and four programming tasks, with additional topics such as string manipulation, operators, and multi-branch structures. Comparison of pre- and posttest scores provided a measure of learning progress and instructional effectiveness.

3.4.2. Learning Interest Questionnaire

The Learning Interest Questionnaire measured two dimensions: affective interest (students’ emotional engagement) and cognitive interest (curiosity and understanding of programming knowledge). The questionnaire was adapted from Ai-Lim Lee et al. [57] and contained four items rated on a five-point Likert scale, with two items per dimension. The internal consistency was high, with a Cronbach’s α coefficient of 0.867. The full version of the questionnaire is provided in Appendix A.

3.4.3. Self-Efficacy Questionnaire

The Programming Self-Efficacy Questionnaire assessed two aspects: perceived programming competence and perceived control over learning. It was adapted from the Self-Efficacy Scale developed by Ma [58] and included 13 items—6 evaluating competence and 7 evaluating control. Reliability analysis yielded Cronbach’s α coefficients of 0.897 (competence), 0.828 (control), and 0.881 overall, thus indicating good reliability. The complete questionnaire can be found in Appendix A.
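The reliability coefficients reported for both questionnaires are Cronbach’s α values. As a minimal sketch, assuming a complete respondents-by-items matrix with no missing values (the function and toy data are illustrative and are not the study’s responses), α = k/(k − 1) · (1 − Σσᵢ² / σ²_total), where k is the number of items, σᵢ² is the variance of item i, and σ²_total is the variance of respondents’ summed scores:

```python
from statistics import variance


def cronbach_alpha(responses):
    """Cronbach's alpha for a list of respondents, each a list of k item scores.

    Item and total-score variances both use the sample (n - 1) denominator;
    the ratio is unchanged with population variances if used consistently.
    """
    k = len(responses[0])
    if k < 2:
        raise ValueError("alpha needs at least two items")
    if any(len(row) != k for row in responses):
        raise ValueError("every respondent must answer every item")
    item_variances = [variance([row[i] for row in responses]) for i in range(k)]
    total_variance = variance([sum(row) for row in responses])
    return k / (k - 1) * (1 - sum(item_variances) / total_variance)


# Toy data: five respondents answering two five-point Likert items.
toy = [[4, 5], [3, 3], [5, 5], [2, 3], [4, 4]]
print(round(cronbach_alpha(toy), 3))  # prints 0.93
```

Subscale coefficients, such as those for competence and control, are obtained by passing only the columns belonging to that subscale.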

3.4.4. Semi-Structured Interviews

To explore students’ subjective experiences with iFLYTEK Spark, semi-structured interviews were conducted with nine participants from the experimental group. Interviews were guided by three overarching research questions concerning the impact of iFLYTEK Spark on learning interest and self-efficacy. Each interview lasted 5–10 min, was audio-recorded, and transcribed for qualitative analysis. The resulting transcripts totaled approximately 14,572 words.

3.5. Data Analysis

The data analysis of this study was divided into two parts: quantitative data analysis and qualitative data analysis. Quantitative data analysis was carried out using SPSS (version 26) software. Independent samples t-tests were performed on programming test scores, as they met normality assumptions. For the ordinal data from learning interest and self-efficacy questionnaires, Mann–Whitney U tests were applied. We also collected gender information and conducted moderation analyses to examine whether gender influenced the effects of the intervention. For the qualitative analysis, in order to systematically examine students’ programming learning experiences and behaviors supported by large language models, we adopted a qualitative strategy that combined open coding and axial coding. The researcher repeatedly reviewed the interview transcripts, extracted significant statements and patterns, and gradually grouped them into conceptual categories. This process laid the foundation for constructing the subsequent thematic structure.
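For readers who wish to reproduce the group comparison outside SPSS, the sketch below computes the independent-samples t statistic in the two forms SPSS reports: equal variances assumed (Student’s pooled test) and not assumed (Welch’s test). The function name and toy scores are illustrative assumptions. The p-value would then be read from the t distribution with the returned degrees of freedom, for example with 2 * scipy.stats.t.sf(abs(t), df).

```python
from math import sqrt
from statistics import mean, variance


def independent_t(x, y):
    """Return Student's (pooled) and Welch's t statistics with their degrees of freedom."""
    n1, n2 = len(x), len(y)
    v1, v2 = variance(x), variance(y)  # sample variances (n - 1 denominator)
    diff = mean(x) - mean(y)

    # Student's t: assumes equal population variances and pools them.
    df_pooled = n1 + n2 - 2
    sp2 = ((n1 - 1) * v1 + (n2 - 1) * v2) / df_pooled
    t_pooled = diff / sqrt(sp2 * (1 / n1 + 1 / n2))

    # Welch's t: separate variances with Welch-Satterthwaite degrees of freedom.
    a, b = v1 / n1, v2 / n2
    t_welch = diff / sqrt(a + b)
    df_welch = (a + b) ** 2 / (a ** 2 / (n1 - 1) + b ** 2 / (n2 - 1))

    return {"t_pooled": t_pooled, "df_pooled": df_pooled,
            "t_welch": t_welch, "df_welch": df_welch}


# Toy posttest scores for two small hypothetical classes.
print(independent_t([1, 2, 3, 4, 5], [2, 3, 4, 5, 6]))
```

When the two groups have similar sizes and variances, as in this toy case, both versions give nearly identical results; they diverge when variances and sample sizes are unequal.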

4. Results

4.1. Programming Performance

An independent samples t-test was conducted to compare the programming performance of the experimental and control groups; the key statistical results are presented in Table 1. There was no significant difference between the two groups in mastery of basic programming concepts, indicating that both groups attained comparable levels of basic programming knowledge. However, the experimental group scored significantly higher than the control group on practical programming application, demonstrating stronger practical skills. The total score comparison likewise indicates that the experimental group outperformed the control group in overall programming ability, suggesting that the LLM-assisted instructional approach had a positive impact on students’ comprehensive programming competence.

Table 1.

Independent samples t-test results for programming ability test: experimental vs. control group.

4.2. Learning Interest

As shown in Table 2, the Mann–Whitney U test results indicate that the experimental group achieved significantly higher overall learning interest scores than the control group, suggesting that LLM-assisted instruction positively influenced students’ interest in programming. The experimental group scored significantly higher in cognitive interest, indicating greater curiosity and motivation when exploring programming knowledge and skills. The experimental group also scored higher than the control group in affective interest, although this difference was not statistically significant. Overall, the experimental group obtained higher scores on all dimensions of learning interest, with the clearest advantage in cognitive interest.
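The Mann–Whitney U test used for the questionnaire data compares the rank distributions of the two groups rather than their means. The sketch below is a minimal illustration under stated assumptions, not a reproduction of the SPSS procedure: it assigns average ranks to tied scores, computes U for the first group, and derives a two-sided p-value from the tie-corrected normal approximation without a continuity correction. The function names and toy Likert scores are hypothetical.

```python
from math import sqrt
from statistics import NormalDist


def midranks(values):
    """Assign 1-based ranks, giving tied values the average of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j across the run of values tied with the one at position i.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1  # mean of 1-based positions i + 1 .. j + 1
        for k in range(i, j + 1):
            ranks[order[k]] = avg
        i = j + 1
    return ranks


def mann_whitney_u(x, y):
    """Return (U1, U2, z, two-sided p) using the tie-corrected normal approximation."""
    n1, n2 = len(x), len(y)
    n = n1 + n2
    pooled = list(x) + list(y)
    ranks = midranks(pooled)
    u1 = sum(ranks[:n1]) - n1 * (n1 + 1) / 2
    u2 = n1 * n2 - u1
    # Tie correction: sum of (t^3 - t) over each group of t tied values.
    counts = {}
    for v in pooled:
        counts[v] = counts.get(v, 0) + 1
    tie_term = sum(t ** 3 - t for t in counts.values())
    var_u = n1 * n2 / 12 * ((n + 1) - tie_term / (n * (n - 1)))
    if var_u <= 0:
        raise ValueError("all observations are tied; the test is undefined")
    z = (u1 - n1 * n2 / 2) / sqrt(var_u)
    p = 2 * (1 - NormalDist().cdf(abs(z)))
    return u1, u2, z, p


# Hypothetical five-point Likert scores for two small groups.
print(mann_whitney_u([4, 5, 3, 4, 5, 4], [3, 3, 4, 2, 3, 4]))
```

Software packages differ in whether they report U for the first group or the smaller of the two values; swapping them only flips the sign of z and leaves the p-value unchanged.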

Table 2.

Results for differences in learning interest between experimental and control groups.

4.3. Self-Efficacy

As shown in Table 3, the Mann–Whitney U test results reveal that the experimental group scored significantly higher than the control group in overall programming self-efficacy and in both of its sub-dimensions. Regarding overall programming self-efficacy, the experimental group exhibited greater confidence in completing programming tasks. The experimental group also scored significantly higher in perceived programming learning ability, suggesting a stronger belief in their capacity to acquire programming skills, and demonstrated significantly higher perceived control over the programming learning process. In summary, the experimental group showed significant advantages across all dimensions of programming self-efficacy, indicating that LLM-assisted instruction enhanced students’ programming confidence, perceived competence, and sense of control over their learning.

Table 3.

Results for programming self-efficacy, ability, and control.

To further examine the potential influence of individual differences, moderation analyses were conducted with gender as the moderator. The results indicated that gender did not significantly moderate the effects of the intervention on programming performance, learning interest, or programming self-efficacy.
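The moderation model itself is not detailed here. A common approach, assumed for this sketch, is a linear regression with a group × gender interaction: outcome = b0 + b1·group + b2·gender + b3·(group × gender), where gender moderates the intervention effect if b3 differs significantly from zero. The code below only estimates the coefficients by ordinary least squares via the normal equations; the 0/1 codings, function names, and toy scores are hypothetical, and the standard errors and p-values that a statistics package would report are omitted.

```python
def ols_coefficients(X, y):
    """Solve the normal equations (X^T X) b = X^T y by Gauss-Jordan elimination."""
    p = len(X[0])
    xtx = [[sum(row[i] * row[j] for row in X) for j in range(p)] for i in range(p)]
    xty = [sum(row[i] * yi for row, yi in zip(X, y)) for i in range(p)]
    aug = [xtx[i] + [xty[i]] for i in range(p)]  # augmented matrix [X^T X | X^T y]
    for col in range(p):
        # Partial pivoting: bring the row with the largest entry in this column up.
        pivot = max(range(col, p), key=lambda r: abs(aug[r][col]))
        if abs(aug[pivot][col]) < 1e-12:
            raise ValueError("design matrix is singular")
        aug[col], aug[pivot] = aug[pivot], aug[col]
        lead = aug[col][col]
        aug[col] = [v / lead for v in aug[col]]
        for r in range(p):
            if r != col:
                factor = aug[r][col]
                aug[r] = [vr - factor * vc for vr, vc in zip(aug[r], aug[col])]
    return [aug[i][p] for i in range(p)]


def moderation_design(group, gender):
    """Design matrix columns: intercept, group, gender, group x gender (0/1 codes assumed)."""
    return [[1.0, g, s, g * s] for g, s in zip(group, gender)]


# Hypothetical coding: group 1 = experimental, gender 1 = female.
group = [0, 0, 1, 1, 0, 0, 1, 1]
gender = [0, 1, 0, 1, 0, 1, 0, 1]
scores = [60, 62, 70, 72, 58, 61, 69, 74]
b0, b_group, b_gender, b_interaction = ols_coefficients(moderation_design(group, gender), scores)
print(f"interaction coefficient: {b_interaction:.2f}")
```

In this parameterization, b1 is the intervention effect for the gender coded 0, and b1 + b3 is the effect for the gender coded 1.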

4.4. Qualitative Findings

Through qualitative coding of the interview data, a total of 16 representative nodes were identified. These nodes were further categorized into six thematic categories, which were organized under three overarching themes. Table 4 presents the overall thematic structure developed in this study, providing an integrated framework for interpreting students’ experiences and perceptions in LLM-assisted programming learning contexts.

Table 4.

Results of themes, categories, and nodes for LLM-assisted programming learning experiences.

4.4.1. Themed Finding 1: LLMs Are Intelligent Learning Companions Fostering Students’ Self-Directed Learning and Mastery of Programming

The interviews revealed that the integration of iFLYTEK Spark substantially enhanced students’ self-directed learning behaviors. Rather than relying solely on teacher-led instruction, students reported taking greater ownership of their learning process by using iFLYTEK Spark to seek immediate feedback and personalized support. This autonomy allowed students to master concepts at their own pace, revisit difficult material, and explore additional knowledge based on their interests. Students generally described a two-step approach: they first attempted to solve problems independently and then turned to iFLYTEK Spark when encountering difficulties. One student reflected:

When there was an error in the code, I would check it by myself first, but sometimes I couldn’t find out what the problem is. Then I would ask iFLYTEK Spark, and it could quickly help me analyze the error while explaining why it was wrong.

Another student highlighted, “In the past, if I didn’t understand, I would just sit there. But now with iFLYTEK Spark, I can ask it anything I don’t understand and almost complete all the coding tasks each lesson”.

Others emphasized the flexibility to extend their learning beyond the classroom, noting that the model allowed them to deepen their understanding of programming topics whenever needed. Overall, iFLYTEK Spark functioned not merely as a problem-solving tool but as an intelligent learning companion that empowered students to engage actively with learning content, develop problem-solving skills, and pursue individual learning goals.

4.4.2. Themed Finding 2: LLMs Provided Just-in-Time Support and Fostered Dialogic Engagement in Human–Machine Interaction

A second major theme that emerged from the interviews was the role of iFLYTEK Spark in offering timely learning support and fostering a more dialogic learning experience. Students frequently described the model as a “personal tutor” that was always accessible and provided detailed, immediate responses. As one student shared, “iFLYTEK Spark is like an exclusive teacher, answering my questions at any time. No matter when I ask, it can give detailed answers”.

Beyond delivering factual responses, the model also supported students’ self-reflection by allowing them to compare their own code with AI-generated suggestions, which helped them recognize mistakes more efficiently and clarify conceptual misunderstandings. Another student emphasized the model’s constant availability: “Sometimes teachers are too busy to help right away, but iFLYTEK Spark is always available, really convenient”.

Although these experiences do not reflect traditional peer-to-peer or teacher–student social presence, many students reported developing a sense of familiarity and comfort with the AI over time.

Interestingly, some students even anthropomorphized the model, describing it in relational terms such as “it understands me” or “it’s like a helpful companion.” This perception likely stems from the LLM’s natural language interactions, which simulate human-like dialog and responsiveness. While this does not constitute social presence in the conventional sense, it does point to the formation of a perceived human–machine social bond—an emerging phenomenon increasingly noted in AI-integrated learning environments.

4.4.3. Themed Finding 3: LLMs Stimulated Exploratory Learning and Supported the Transfer of Programming Knowledge

The final theme highlighted how the use of iFLYTEK Spark stimulated exploratory learning and expanded students’ knowledge horizons. Unlike traditional instruction, which often focuses on the systematic transmission of knowledge, interaction with iFLYTEK Spark encouraged students to ask questions, consider multiple solutions, and explore programming topics beyond the prescribed curriculum. Students frequently noted that the model offered multiple perspectives on problem-solving. One student shared, “iFLYTEK Spark provides both functional and non-functional solutions, which allows me to understand the problem in a more comprehensive way”. Others described how they used the model to explore advanced concepts such as code optimization, which led to a deeper understanding of programming best practices.

5. Discussion and Conclusions

5.1. Impact of LLMs on Programming Performance

The quantitative results of this study show that students in the experimental group significantly outperformed those in the control group in both practical programming skills and overall programming performance, despite the lack of a significant difference in basic programming knowledge between the two groups. This pattern suggests that LLM-assisted instruction may be especially effective at enhancing students’ ability to apply programming concepts to practical tasks, rather than their mastery of basic knowledge.

Interview data further corroborated this finding. As indicated in Section 4.4.1, students commonly adopted a two-step learning strategy: they first attempted to solve problems independently and then sought support from iFLYTEK Spark for real-time feedback and conceptual clarification when difficulties arose. Additionally, as shown in Section 4.4.2, students perceived the model as a readily accessible “personal tutor,” which enhanced their efficiency and confidence in completing programming tasks.

These findings are consistent with constructivist learning theory. Schunk [59] argues that learning is a process of actively constructing knowledge through experience, and that dynamic scaffolding and timely feedback are essential for skill development. In this study, iFLYTEK Spark functioned as a dynamic scaffold that supported iterative learning by helping students refine their code and deepen their understanding.

From a systems perspective, the integration of LLMs into programming education can be seen as forming an intelligent human–machine learning system, wherein students interact with the model in a continuous feedback loop. This closed-loop interaction, involving student input and AI-generated responses, supports adaptive learning within a socio-technical system. Lyu et al. [19] likewise found that students using LLM-based tools achieved significantly higher programming scores than those in a control group, lending further support to the effectiveness of such systems in improving programming performance.

5.2. Impact of LLMs on Interest in Programming

The results also indicated that students in the experimental group demonstrated significantly higher interest in learning programming than those in the control group, particularly in the dimension of cognitive interest. This suggests that LLMs not only improved students’ engagement but also stimulated their intrinsic motivation to actively explore programming content.

As shown in Section 4.4.3, students often used iFLYTEK Spark to raise out-of-syllabus questions, compare alternative solutions, and explore code optimization strategies—behaviors reflecting strong curiosity and exploratory intent. In addition, Section 4.4.2 reveals that the LLM offered a highly interactive and dialogic experience that made the learning process more continuous, immersive, and responsive, thereby enhancing students’ emotional connection and engagement.

From a human–machine systems perspective, the dialogic interaction between students and the LLM forms an intelligent, semi-autonomous learning subsystem embedded within the broader instructional ecosystem. This subsystem supports recursive information processing and sustained motivational feedback, which are essential for fostering curiosity and inquiry-based learning.

These findings can be explained by self-determination theory. Ryan and Deci [60] posited that intrinsic motivation is more likely to be activated when learning environments fulfill individuals’ psychological needs for autonomy, competence, and relatedness. The LLM supported students’ autonomy through open-ended questioning and personalized exploration, enhanced their sense of competence through real-time feedback, and fostered relatedness through ongoing interaction.

These mechanisms are consistent with the findings of Okonkwo and Ade-Ibijola [16], who developed Python-Bot, and of Yilmaz and Karaoglan Yilmaz [56], who studied the use of ChatGPT. Both studies reported that conversational AI tools played a positive role in stimulating students’ learning motivation and exploratory interest, and the present study further reinforces this conclusion.

5.3. Impact of LLMs on Programming Self-Efficacy

Students in the experimental group significantly outperformed those in the control group in terms of programming self-efficacy and its two subdimensions: perceived learning ability and learning control. This indicates that LLMs help strengthen students’ confidence in completing programming tasks and enhance their perceived control over the learning process.

Interview data offer explanatory insights into this outcome. As noted in Section 4.4.1, students developed confidence and persistence by consistently interacting with iFLYTEK Spark and accumulating successful problem-solving experiences. As shown in Section 4.4.2, students widely perceived the model as responsive and stable, providing a “constantly available” learning companion that reduced frustration and increased their willingness to confront challenges.

From a systems thinking perspective, LLMs function as cognitive agents embedded within the digital learning system. Their continual interaction with learners facilitates feedback loops that build mastery and emotional resilience. These loops contribute to the emergence of a self-reinforcing learning system that supports efficacy development.

These findings are highly consistent with Bandura’s [61] self-efficacy theory, which identifies mastery experience as the most critical source of efficacy beliefs. In this study, the LLM provided specific, timely feedback that enabled students to succeed in real programming tasks repeatedly, thereby fostering positive self-judgments and stronger beliefs in their learning capabilities.

This finding aligns with the results of Yilmaz and Karaoglan Yilmaz [56] and Lyu et al. [19], who also found that LLM-assisted programming instruction significantly improved students’ task confidence and self-efficacy. The present study further substantiates these conclusions.

5.4. Implications for Programming Teaching

The results of this study offer several practical implications for programming instruction. First, educators should consider integrating LLMs into programming courses not merely as ancillary tools but as embedded components of a human–machine learning system that supports students’ real-time exploration and problem-solving. By providing timely assistance and feedback within this system, LLMs can promote greater learner autonomy and resilience. Second, teachers should design learning activities that guide students to interact with LLMs actively and reflectively rather than passively accept generated outputs. Structured support for effective questioning, response evaluation, and metacognitive reflection can help maximize the cognitive benefits of such integration. Third, while leveraging the advantages of LLMs, educators must remain attentive to system-level risks such as over-dependence and diminished self-regulation. Fostering critical thinking and self-verification habits should therefore be an integral part of a balanced, system-aware instructional design. In particular, assessment tasks should evaluate students’ internalized understanding of programming concepts rather than their ability to reproduce AI-generated output. This study also suggests that instructional safeguards, such as requiring independent completion of final assessments and using real-time classroom monitoring, can help reduce the risk of over-reliance on LLM tools. These measures promote responsible use and uphold the integrity of learning, offering practical guidance for accountable and ethical LLM-assisted instruction.

5.5. Limitations and Future Research

Despite its contributions, this study has several limitations that warrant careful consideration. First, the sample was drawn from a single middle school with a relatively homogeneous student population, and this study primarily focused on programming performance, learning interest, and self-efficacy. These constraints may limit the generalizability of the findings. Future research should examine more diverse educational contexts and incorporate broader variables—such as programming creativity, collaboration, ethical reasoning, and digital literacy—to gain a more comprehensive understanding of the multifaceted impact of LLM-assisted learning.

Second, although we carefully considered group equivalence in our quasi-experimental design, the absence of random assignment means that we cannot completely rule out the influence of confounding factors. Therefore, any causal interpretations of the results should be made with caution. Moreover, while the six-week intervention provided students with sustained exposure to LLM-assisted learning and partially mitigated novelty effects, the duration may still be insufficient to assess long-term outcomes. Future research could consider extending the intervention to a full semester or academic year, especially given the gradual nature of programming skill development.

Third, the qualitative findings were based on a small number of student interviews and lacked triangulation, which may affect the richness and representativeness of the results. In addition, although this study did not observe commonly reported concerns such as overreliance on LLMs or academic misconduct, these positive outcomes may be attributed to the structured instructional safeguards implemented during the intervention. Further studies are needed to investigate whether similar effects can be achieved in less supervised or more open learning environments, in order to evaluate the ecological validity of the findings.

Lastly, as an exploratory study, this research examined only gender as a potential moderating factor and did not consider other individual differences. Future studies are encouraged to incorporate a wider range of learner characteristics and adopt more fine-grained analytical approaches to better understand how such variables may interact with LLM design and instructional strategies.

Author Contributions

Conceptualization, H.L.; Data curation, J.L.; Formal analysis, B.T. and J.L.; Investigation, B.T. and J.L.; Methodology, H.L. and J.L.; Project administration, H.L.; Resources, H.L.; Supervision, H.L.; Validation, H.L.; Visualization, B.T.; Writing—original draft, B.T. and W.H.; Writing—review and editing, J.L. and H.L. All authors have read and agreed to the published version of the manuscript.

Funding

This research study was partially funded by the Special Project on Empowering Innovation in Education and Teaching through Digital and Intelligent Technologies of Central China Normal University, grant number CCNU25JG07.

Data Availability Statement

The data presented in this study are openly available in the Mendeley Data database at https://data.mendeley.com/datasets/zyhn8498rv/1 (accessed on 15 May 2025).

Acknowledgments

During the preparation of this manuscript, the authors used ChatGPT (OpenAI, GPT-4.0, accessed in May 2025) for the purposes of language refinement and expression optimization. The authors have reviewed and edited the output and take full responsibility for the content of this publication.

Conflicts of Interest

The authors declare no conflicts of interest.

Appendix A

Student Questionnaire on Python Learning Experience

This questionnaire aims to collect students’ reflections on their experience in the past Python programming course. The results are solely for academic research purposes and have no impact on students’ final grades. All of the information will be kept confidential. Students are encouraged to answer truthfully based on their actual experience.

Section I: Basic Information

Name: __________

Gender: __________

Class: __________

Section II: Perceptions of the Python Course

Please indicate the extent to which you agree with the following statements, using the scale below:

A = Strongly Disagree, B = Disagree, C = Neutral, D = Agree, E = Strongly Agree

Learning Interest

1. I felt enthusiastic about the Python course.

2. The topics covered in the Python course fascinated me.

3. I understood the course materials in the Python class.

4. The information presented in the Python class increased my knowledge.

Programming Self-Efficacy

Perceived Programming Competence

5. I was confident in my ability to master Python-related knowledge and skills.

6. When I encountered difficulties in Python programming, I believed I could solve them.

7. I looked forward to learning new knowledge before each Python class.

8. I believed I could successfully complete the programming tasks assigned by the teacher.

9. I believed I could master the content taught in each Python lesson.

10. When facing challenging Python tasks, I believed I could complete them.

Programming Learning Control

11. I voluntarily practiced programming to consolidate what I had learned, even without being required.

12. I often wanted to chat with classmates or do unrelated things during Python class.

13. I actively sought help from the teacher or classmates when encountering difficulties.

14. If my peers were not practicing programming, I tended not to practice either.

15. I was often afraid the teacher would call on me and that I would not be able to answer.

16. I often felt that my programming assignments were not as good as others’.

17. When I couldn’t write correct code, I would give up and wait for the teacher’s explanation.
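Appendix A does not state how the letter responses were converted into scores. Purely as an illustration, the Python sketch below assumes a common Likert convention: A to E map to 1 to 5, the negatively worded learning-control items (12 and 14 to 17) are reverse-coded as 6 minus the raw score, and each subscale score is the mean of its items. The item groupings follow the headings above; the reverse-coding set, the names `item_score` and `score_student`, and the example answer sheet are hypothetical and should not be read as the authors' actual scoring procedure.

```python
from __future__ import annotations

# Hypothetical scoring sketch for the Appendix A questionnaire.
# Assumptions (not stated in the paper): A-E map to 1-5, negatively worded
# items are reverse-coded as 6 - score, and subscale scores are item means.

LETTER_TO_SCORE = {"A": 1, "B": 2, "C": 3, "D": 4, "E": 5}

# Item numbers grouped by the headings in Appendix A.
SUBSCALES = {
    "learning_interest": [1, 2, 3, 4],
    "perceived_competence": [5, 6, 7, 8, 9, 10],
    "learning_control": [11, 12, 13, 14, 15, 16, 17],
}

# Assumed reverse-coded items: the negatively worded learning-control statements.
REVERSED_ITEMS = {12, 14, 15, 16, 17}


def item_score(item: int, letter: str) -> int:
    """Convert one letter response to a 1-5 score, reversing negative items."""
    raw = LETTER_TO_SCORE[letter.strip().upper()]
    return 6 - raw if item in REVERSED_ITEMS else raw


def score_student(responses: dict[int, str]) -> dict[str, float]:
    """Return the mean score of each subscale from an {item: letter} mapping."""
    scores = {}
    for name, items in SUBSCALES.items():
        values = [item_score(i, responses[i]) for i in items]
        scores[name] = sum(values) / len(values)
    # Self-efficacy pools both of its subdimensions (items 5-17).
    efficacy_items = SUBSCALES["perceived_competence"] + SUBSCALES["learning_control"]
    efficacy_values = [item_score(i, responses[i]) for i in efficacy_items]
    scores["self_efficacy"] = sum(efficacy_values) / len(efficacy_values)
    return scores


if __name__ == "__main__":
    # Hypothetical answer sheet: "D" (Agree) on every positively worded item
    # and "B" (Disagree) on every negatively worded item.
    example = {i: ("B" if i in REVERSED_ITEMS else "D") for i in range(1, 18)}
    print(score_student(example))
```

Under these assumptions, a student who answers Agree to every positively worded item and Disagree to every negatively worded one scores 4.0 on each subscale, because each reversed item contributes 6 − 2 = 4.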

Appendix B

How Should We Ask Programming Questions to iFLYTEK Spark?

1. When we want it to explain our code

Sample question:

I hope you can act as a Python code interpreter to explain the syntax and semantics of the following code. (Then paste your code here or above.)

2. When we want it to annotate our code

Sample question:

I hope you can act as a Python code annotator and add comments to each line of the following code. (Then paste your code here or above.)

3. When we want it to explain programming concepts or logic

Sample questions:

What are variables in Python, and what are their types?

Can you explain the meaning and syntax of the following ‘print’ statement?

4. When we want it to find and correct errors in our code

Sample question:

I hope you can act as a Python code debugger. Please tell me where the error is in this piece of code I send you and explain why. Then, please help me correct it. (Then paste your code here or above.)
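The sample questions above work as fill-in templates: a fixed instruction followed by the student's own code. The minimal Python sketch below only assembles that text so it could be pasted into iFLYTEK Spark; it does not call the model or any iFLYTEK API. The dictionary keys, the `build_prompt` helper, and the sample student program are hypothetical; the instruction wording is copied from patterns 1, 2, and 4, while the free-form concept questions in pattern 3 need no template.

```python
from __future__ import annotations

# Instruction wording is taken from the Appendix B sample questions; the keys
# and the helper function are hypothetical conveniences for illustration only.
TEMPLATES = {
    "explain": (
        "I hope you can act as a Python code interpreter to explain the syntax "
        "and semantics of the following code."
    ),
    "annotate": (
        "I hope you can act as a Python code annotator and add comments to each "
        "line of the following code."
    ),
    "debug": (
        "I hope you can act as a Python code debugger. Please tell me where the "
        "error is in this piece of code I send you and explain why. Then, please "
        "help me correct it."
    ),
}


def build_prompt(kind: str, code: str) -> str:
    """Return a question for iFLYTEK Spark with the student's code appended."""
    if kind not in TEMPLATES:
        raise ValueError(f"unknown prompt kind: {kind!r}")
    # Separate the instruction from the code with a blank line so the model
    # can tell where the student's program starts.
    return f"{TEMPLATES[kind]}\n\n{code.strip()}"


if __name__ == "__main__":
    # Hypothetical student program with a typo ("nam" instead of "name").
    student_code = 'name = input("Your name: ")\nprint("Hello, " + nam)'
    print(build_prompt("debug", student_code))
```

Keeping the instruction and the code in separate parts of the prompt mirrors the handout's advice to paste the code next to the request, and lets a teacher add further patterns by extending the dictionary.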

References

1. Tuomi, P.; Multisilta, J.; Saarikoski, P.; Suominen, J. Coding skills as a success factor for a society. Educ. Inf. Technol. 2018, 23, 419–434.

2. Panth, B.; Maclean, R. Introductory overview: Anticipating and preparing for emerging skills and jobs—Issues, concerns, and prospects. In Anticipating and Preparing for Emerging Skills and Jobs. Education in the Asia-Pacific Region: Issues, Concerns and Prospects; Springer: Singapore, 2020; pp. 1–10.

3. Papadakis, S. The impact of coding apps to support young Children in computational thinking and computational fluency. A literature review. Front. Educ. 2021, 6, 657895.

4. Chen, C.-M.; Huang, M.-Y. Enhancing programming learning performance through a Jigsaw collaborative learning method in a metaverse virtual space. Int. J. STEM Educ. 2024, 11, 36.

5. Tsai, M.-J.; Wang, C.-Y.; Hsu, P.-F. Developing the Computer Programming Self-Efficacy Scale for Computer Literacy Education. J. Educ. Comput. Res. 2019, 56, 1345–1360.

6. Atmatzidou, S.; Demetriadis, S. Advancing students’ computational thinking skills through educational robotics: A study on age and gender relevant differences. Rob. Auton. Syst. 2016, 75, 661–670.

7. Kanbul, S.; Uzunboylu, H. Importance of coding education and robotic applications for achieving 21st-century skills in North Cyprus. Int. J. Emerg. Technol. Learn. 2017, 12, 130.

8. Nouri, J.; Zhang, L.; Mannila, L.; Norén, E. Development of computational thinking, digital competence and 21st century skills when learning programming in K-9. Educ. Inq. 2020, 11, 1–17.

9. Moraiti, I.; Fotoglou, A.; Drigas, A. Coding with block programming languages in educational robotics and mobiles, improve problem solving, creativity & critical thinking skills. Int. J. Interact. Mob. Technol. 2022, 16, 59–78.

10. Wu, L.; Looi, C.-K.; Multisilta, J.; How, M.-L.; Choi, H.; Hsu, T.-C.; Tuomi, P. Teacher’s perceptions and readiness to teach coding skills: A comparative study between Finland, Mainland China, Singapore, Taiwan, and South Korea. Asia-Pac. Educ. Res. 2020, 29, 21–34.

11. Lindberg, R.S.N.; Laine, T.H.; Haaranen, L. Gamifying programming education in K-12: A review of programming curricula in seven countries and programming games. Br. J. Educ. Technol. 2019, 50, 1979–1995.

12. Kasneci, E.; Sessler, K.; Küchemann, S.; Bannert, M.; Dementieva, D.; Fischer, F.; Gasser, U.; Groh, G.; Günnemann, S.; Hüllermeier, E.; et al. ChatGPT for good? On opportunities and challenges of large language models for education. Learn. Individ. Differ. 2023, 103, 102274.

13. Silva, C.A.G.d.; Ramos, F.N.; de Moraes, R.V.; Santos, E.L.d. ChatGPT: Challenges and benefits in software programming for higher education. Sustainability 2024, 16, 1245.

14. Phung, T.; Pădurean, V.-A.; Cambronero, J.; Gulwani, S.; Kohn, T.; Majumdar, R.; Singla, A.; Soares, G. Generative AI for programming education: Benchmarking ChatGPT, GPT-4, and human tutors. In Proceedings of the 2023 ACM Conference on International Computing Education Research, Chicago, IL, USA, 7 August 2023; Volume 2, pp. 41–42.

15. Humble, N.; Boustedt, J.; Holmgren, H.; Milutinovic, G.; Seipel, S.; Östberg, A.-S. Cheaters or AI-Enhanced Learners: Consequences of ChatGPT for programming education. Electron. J. e-Learn. 2024, 22, 16–29.

16. Okonkwo, C.W.; Ade-Ibijola, A. Python-bot: A chatbot for teaching python programming. Eng. Lett. 2020, 29, 25–34.

17. Chen, E.; Huang, R.; Chen, H.-S.; Tseng, Y.-H.; Li, L.-Y. GPTutor: A ChatGPT-powered programming tool for code explanation. In Artificial Intelligence in Education. Posters and Late Breaking Results, Workshops and Tutorials, Industry and Innovation Tracks, Practitioners, Doctoral Consortium and Blue Sky. AIED 2023. Communications in Computer and Information Science; Springer: Cham, Switzerland, 2023; pp. 321–327.

18. Groothuijsen, S.; van den Beemt, A.; Remmers, J.C.; van Meeuwen, L.W. AI chatbots in programming education: Students’ use in a scientific computing course and consequences for learning. Comput. Educ. Artif. Intell. 2024, 7, 100290.

19. Lyu, W.; Wang, Y.; Chung, T.; Sun, Y.; Zhang, Y. Evaluating the effectiveness of LLMs in introductory computer science education: A semester-long field study. In Proceedings of the Eleventh ACM Conference on Learning @ Scale, Atlanta, GA, USA, 7 September 2024; pp. 63–74.

20. Johnson, D.M.; Doss, W.; Estepp, C.M. Using ChatGPT with novice arduino programmers: Effects on performance, interest, self-efficacy, and programming ability. J. Res. Tech. Careers 2024, 8, 1.

21. Raihan, N.; Siddiq, M.L.; Santos, J.C.S.; Zampieri, M. Large language models in computer science education: A systematic literature review. In Proceedings of the 56th ACM Technical Symposium on Computer Science Education V. 1, Pittsburgh, PA, USA, 2 December 2025; pp. 938–944.

22. Abdulla, S.; Ismail, S.; Fawzy, Y.; Elhag, A. Using ChatGPT in teaching computer programming and studying its impact on students performance. Electron. J. e-Learn. 2024, 22, 66–81.

23. Choi, S.; Kim, H. The impact of a large language model-based programming learning environment on students’ motivation and programming ability. Educ. Inf. Technol. 2024, 30, 8109–8138.

24. Jošt, G.; Taneski, V.; Karakatič, S. The impact of large language models on programming education and student learning outcomes. Appl. Sci. 2024, 14, 4115.

25. Padiyath, A.; Hou, X.; Pang, A.; Viramontes Vargas, D.; Gu, X.; Nelson-Fromm, T.; Wu, Z.; Guzdial, M.; Ericson, B. Insights from social shaping theory: The appropriation of large language models in an undergraduate programming course. In Proceedings of the 2024 ACM Conference on International Computing Education Research-Volume 1, Melbourne, VIC, Australia, 12 August 2024; pp. 114–130.

26. Guizani, S.; Mazhar, T.; Shahzad, T.; Ahmad, W.; Bibi, A.; Hamam, H. A systematic literature review to implement large language model in higher education: Issues and solutions. Discov. Educ. 2025, 4, 35.

27. Wing, J.M. Computational thinking. Commun. ACM 2006, 49, 33–35.

28. Fessakis, G.; Gouli, E.; Mavroudi, E. Problem solving by 5–6 years old kindergarten children in a computer programming environment: A case study. Comput. Educ. 2013, 63, 87–97.

29. Theodoropoulos, A.; Lepouras, G. Augmented reality and programming education: A systematic review. Int. J. Child-Comput. Interact. 2021, 30, 100335.

30. Brinda, T.; Puhlmann, H.; Schulte, C. Bridging ICT and CS. ACM SIGCSE Bulletin 2009, 41, 288–292.

31. Kalelioğlu, F. A new way of teaching programming skills to K-12 students: Code.org. Comput. Hum. Behav. 2015, 52, 200–210.

32. Bers, M.U. Coding and computational thinking in early childhood: The Impact of scratchJr in europe. Eur. J. STEM Educ. 2018, 3, 8.

33. European Commission. Digital Education Action Plan 2021–2027: Resetting Education and Training for the Digital Age; Publications Office of the European Union: Luxembourg, 2020.

34. Robins, A.; Rountree, J.; Rountree, N. Learning and teaching programming: A review and discussion. Comput. Sci. Educ. 2003, 13, 137–172.

35. Gandy, E.A.; Bradley, S.; Arnold-Brookes, D.; Allen, N.R. The use of LEGO mindstorms NXT robots in the teaching of introductory Java programming to undergraduate students. Innov. Teach. Learn. Inf. Comput. Sci. 2010, 9, 2–9.

36. de la Hera, D.P.; Zanoni, M.B.; Sigman, M.; Calero, C.I. Peer tutoring of computer programming increases exploratory behavior in children. J. Exp. Child Psychol. 2022, 216, 105335.

37. Kinnunen, P.; Malmi, L. Why students drop out CS1 course? In Proceedings of the Second International Workshop on Computing Education Research, Canterbury, UK, 9 September 2006; pp. 97–108.

38. Kadirhan, Z.; Gül, A.; Battal, A. Self-efficacy to teach coding in K-12 education. In Self-Efficacy in Instructional Technology Contexts; Springer: Cham, Switzerland, 2018; pp. 205–226.

39. Askar, P.; Davenport, D. An investigation of factors related to self-efficacy for java programming among engineering students. Turk. Online J. Educ. Technol. 2009, 8, 3.

40. Achiam, J.; Adler, S.; Agarwal, S.; Ahmad, L.; Akkaya, I.; Aleman, F.L.; Almeida, D.; Altenschmidt, J.; Altman, S.; Anadkat, S. GPT-4 technical report. arXiv 2023, arXiv:2303.08774.

41. Wei, J.; Tay, Y.; Bommasani, R.; Raffel, C.; Zoph, B.; Borgeaud, S.; Yogatama, D.; Bosma, M.; Zhou, D.; Metzler, D. Emergent abilities of large language models. arXiv 2022, arXiv:2206.07682.

42. Brown, T.; Mann, B.; Ryder, N.; Subbiah, M.; Kaplan, J.D.; Dhariwal, P.; Neelakantan, A.; Shyam, P.; Sastry, G.; Askell, A. Language models are few-shot learners. Adv. Neural Inf. Process. Syst. 2020, 33, 1877–1901.

43. Bi, X.; Chen, D.; Chen, G.; Chen, S.; Dai, D.; Deng, C.; Ding, H.; Dong, K.; Du, Q.; Fu, Z. DeepSeek LLM: Scaling open-source language models with longtermism. arXiv 2024, arXiv:2401.02954.

44. Shanahan, M. Talking about large language models. Commun. ACM 2024, 67, 68–79.

45. Naveed, H.; Khan, A.U.; Qiu, S.; Saqib, M.; Anwar, S.; Usman, M.; Akhtar, N.; Barnes, N.; Mian, A. A comprehensive overview of large language models. arXiv 2023, arXiv:2307.06435.

46. Chang, Y.; Wang, X.; Wang, J.; Wu, Y.; Yang, L.; Zhu, K.; Chen, H.; Yi, X.; Wang, C.; Wang, Y.; et al. A survey on evaluation of large language models. ACM Trans. Intell. Syst. Technol. 2024, 15, 1–45.

47. Demszky, D.; Yang, D.; Yeager, D.S.; Bryan, C.J.; Clapper, M.; Chandhok, S.; Eichstaedt, J.C.; Hecht, C.; Jamieson, J.; Johnson, M.; et al. Using large language models in psychology. Nat. Rev. Psychol. 2023, 2, 688–701.

48. Kung, T.H.; Cheatham, M.; Medenilla, A.; Sillos, C.; De Leon, L.; Elepaño, C.; Madriaga, M.; Aggabao, R.; Diaz-Candido, G.; Maningo, J.; et al. Performance of ChatGPT on USMLE: Potential for AI-assisted medical education using large language models. PLoS Digit. Health 2023, 2, e0000198.

49. Dijkstra, R.; Genç, Z.; Kayal, S.; Kamps, J. Reading comprehension quiz generation using generative pre-trained transformers. In Proceedings of the iTextbooks@AIED, Durham, UK, 27–31 July 2022; pp. 4–17.

50. Gabajiwala, E.; Mehta, P.; Singh, R.; Koshy, R. Quiz maker: Automatic quiz generation from text using NLP. In Futuristic Trends in Networks and Computing Technologies; Lecture Notes in Electrical Engineering; Springer: Singapore, 2022; pp. 523–533.

51. Qu, F.; Jia, X.; Wu, Y. Asking questions like educational experts: Automatically generating question-answer pairs on real-world examination data. In Proceedings of the 2021 Conference on Empirical Methods in Natural Language Processing, Virtual Event/Punta Cana, Dominican Republic, 7–11 November 2021; pp. 2583–2593.

52. Raina, V.; Gales, M. Multiple-choice question generation: Towards an automated assessment framework. arXiv 2022, arXiv:2209.11830.

53. Abdelghani, R.; Wang, Y.-H.; Yuan, X.; Wang, T.; Lucas, P.; Sauzéon, H.; Oudeyer, P.-Y. GPT-3-driven pedagogical agents to train children’s curious question-asking skills. Int. J. Artif. Intell. Educ. 2024, 34, 483–518.

54. Jia, Q.; Cui, J.; Xiao, Y.; Liu, C.; Rashid, P.; Gehringer, E.F. All-in-one: Multi-task learning BERT models for evaluating peer assessments. arXiv 2021, arXiv:2110.03895.

55. Lucas, H.C.; Upperman, J.S.; Robinson, J.R. A systematic review of large language models and their implications in medical education. Med. Educ. 2024, 58, 1276–1285.

56. Yilmaz, R.; Karaoglan Yilmaz, F.G. Augmented intelligence in programming learning: Examining student views on the use of ChatGPT for programming learning. Comput. Hum. Behav. Artif. Hum. 2023, 1, 100005.

57. Ai-Lim Lee, E.; Wong, K.W.; Fung, C.C. How does desktop virtual reality enhance learning outcomes? A structural equation modeling approach. Comput. Educ. 2010, 55, 1424–1442.

58. Ma, Q. The Impact of Project-Based Learning on Elementary School Students’ Programming Self-Efficacy. Master’s Dissertation, Inner Mongolia Normal University, Hohhot, China, 2022.

59. Schunk, D.H. Learning Theories; Prentice Hall Inc.: Upper Saddle River, NJ, USA, 1996.

60. Ryan, R.M.; Deci, E.L. Self-determination theory and the facilitation of intrinsic motivation, social development, and well-being. Am. Psychol. 2000, 55, 68–78.

61. Locke, E.A. Self-efficacy: The exercise of control. Pers. Psychol. 1997, 50, 801.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).