Harnessing Generative Artificial Intelligence to Construct Multimodal Resources for Chinese Character Learning

Abstract

1. Introduction

2. Literature Review

2.1. Multimedia Learning Methods in Language Learning

2.2. Generative Artificial Intelligence and Foundation Models

2.3. The Present Study

3. Methods

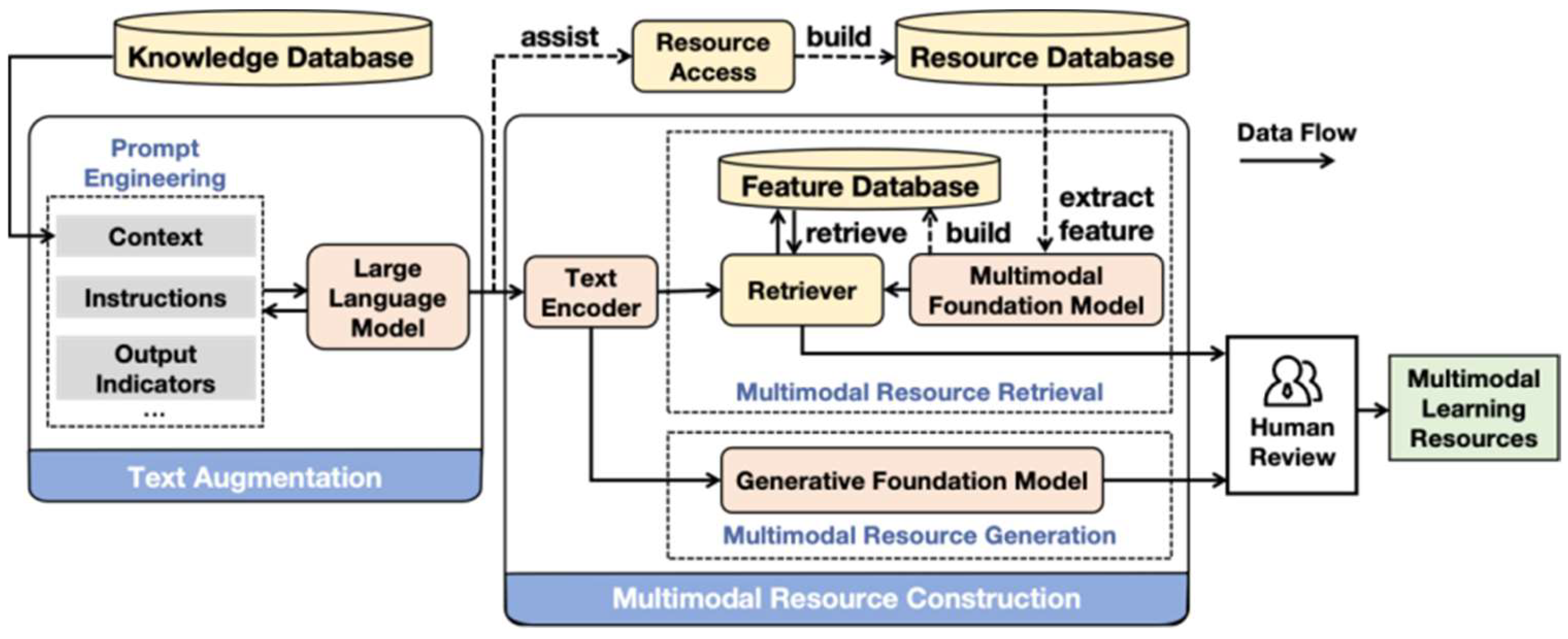

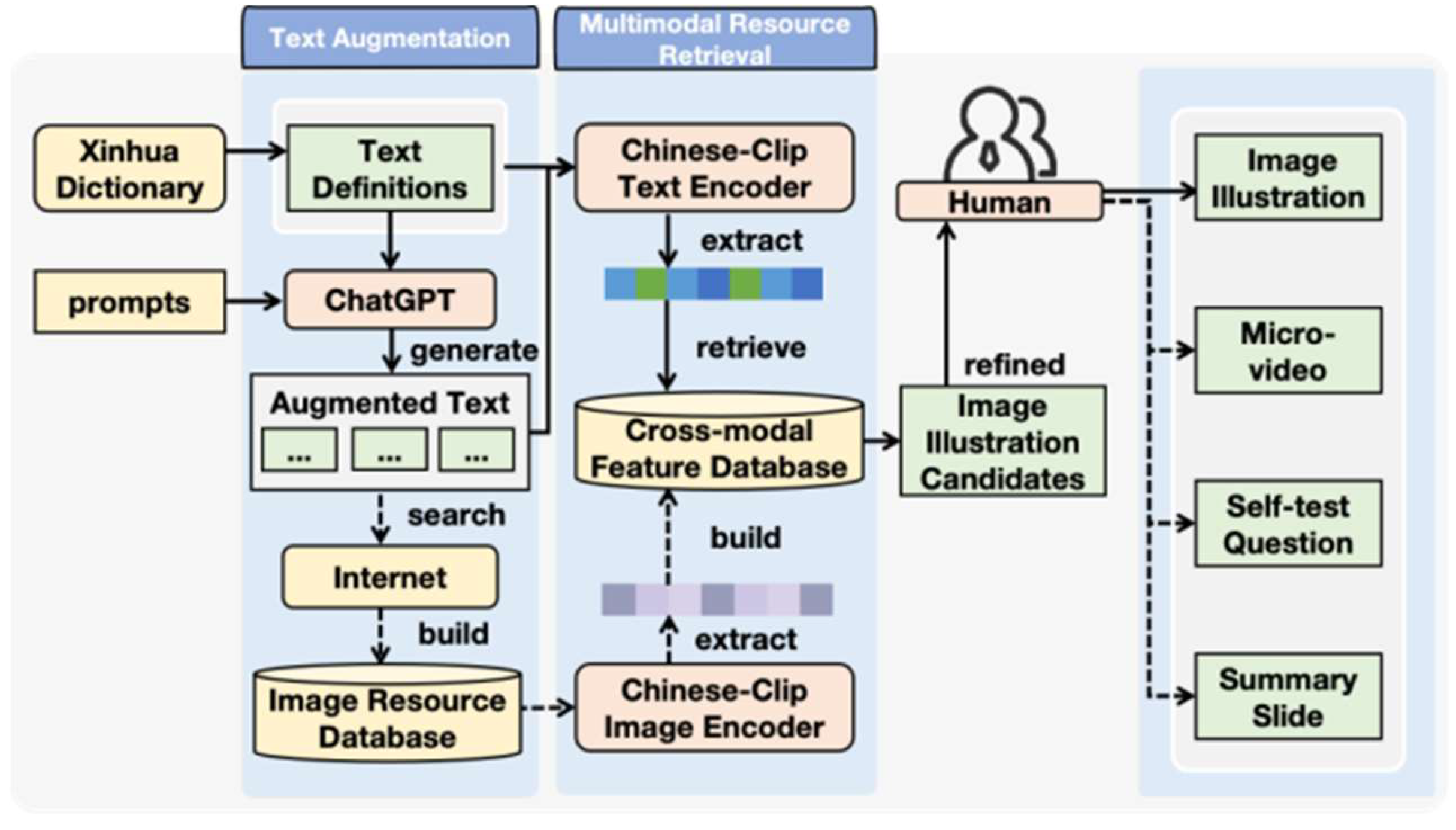

3.1. Multimodal Learning Resource Construction Framework

3.2. Implementation of Multimodal Learning Resource Construction Framework

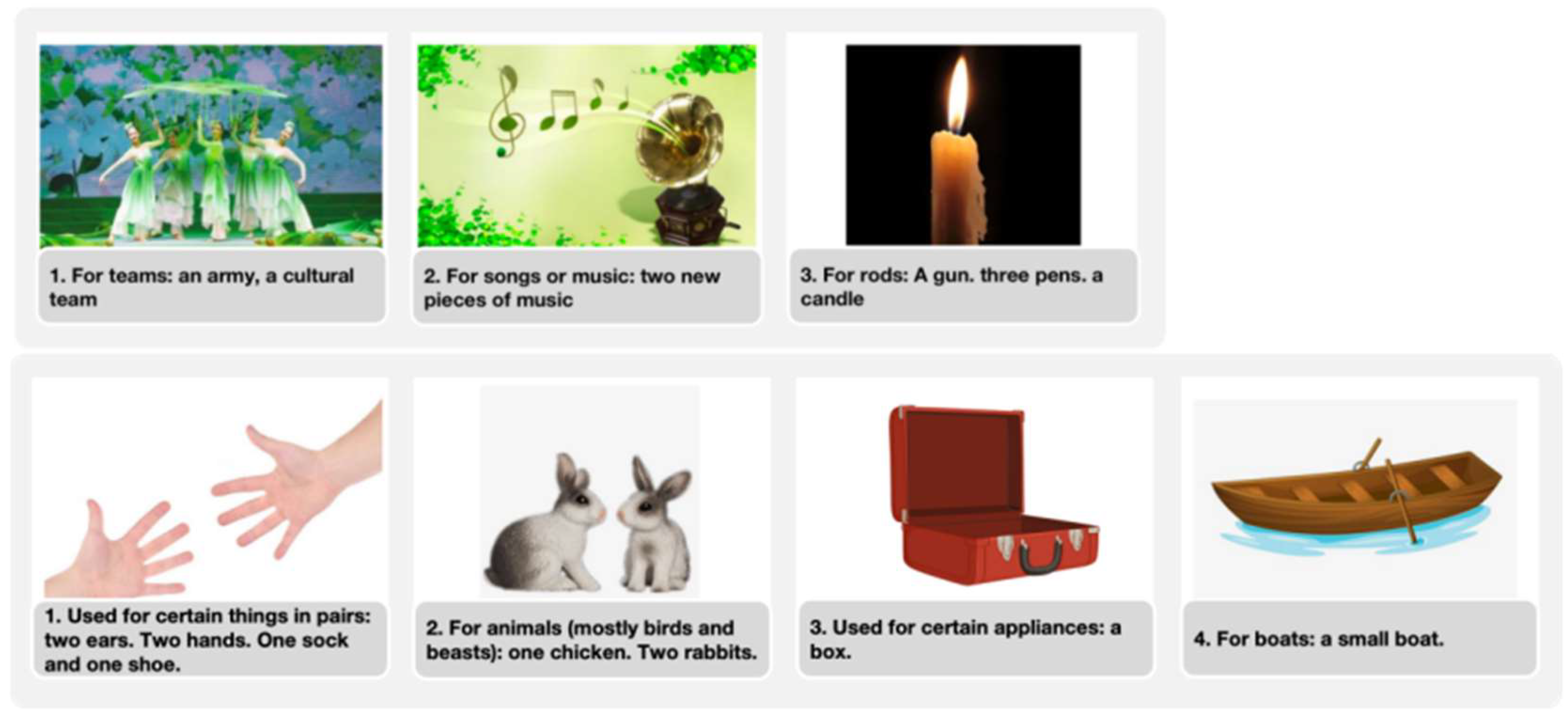

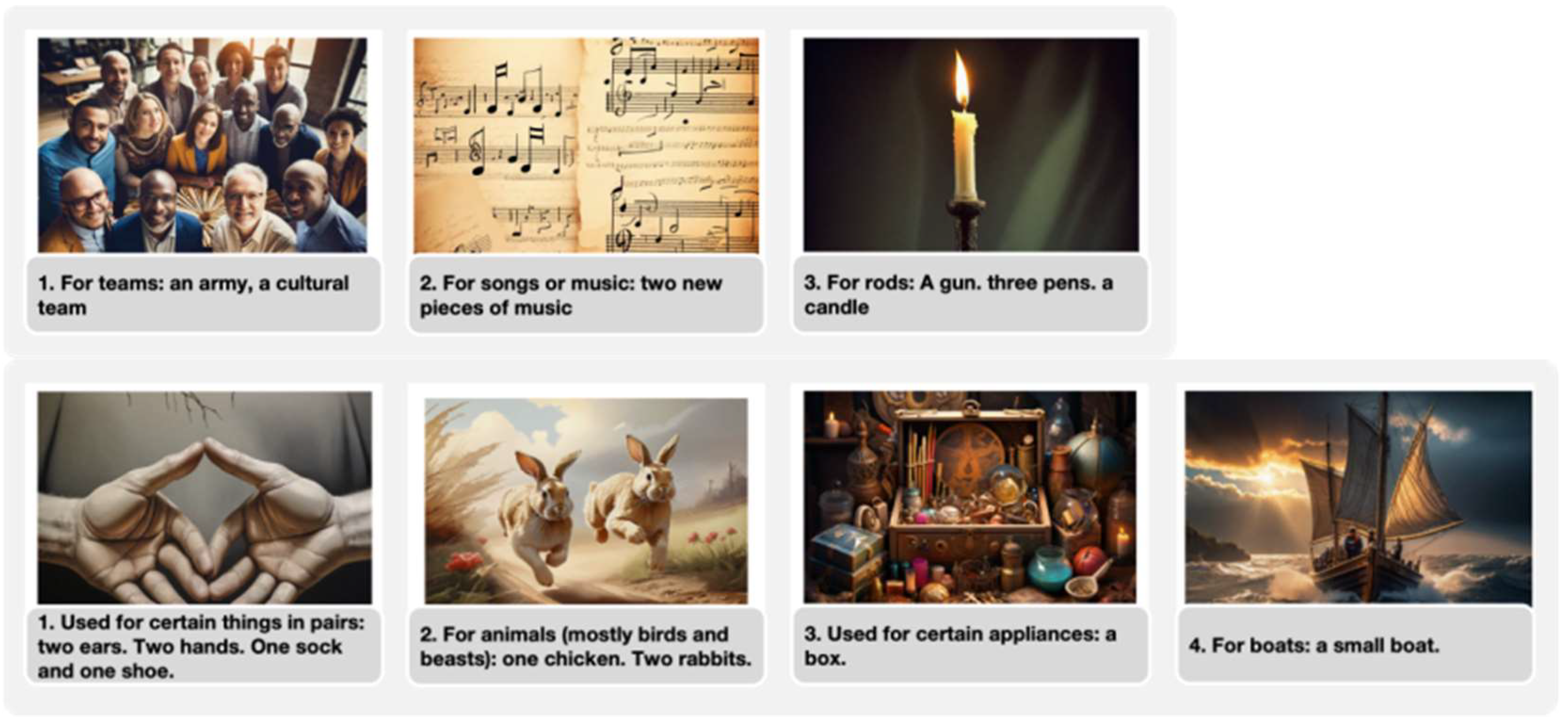

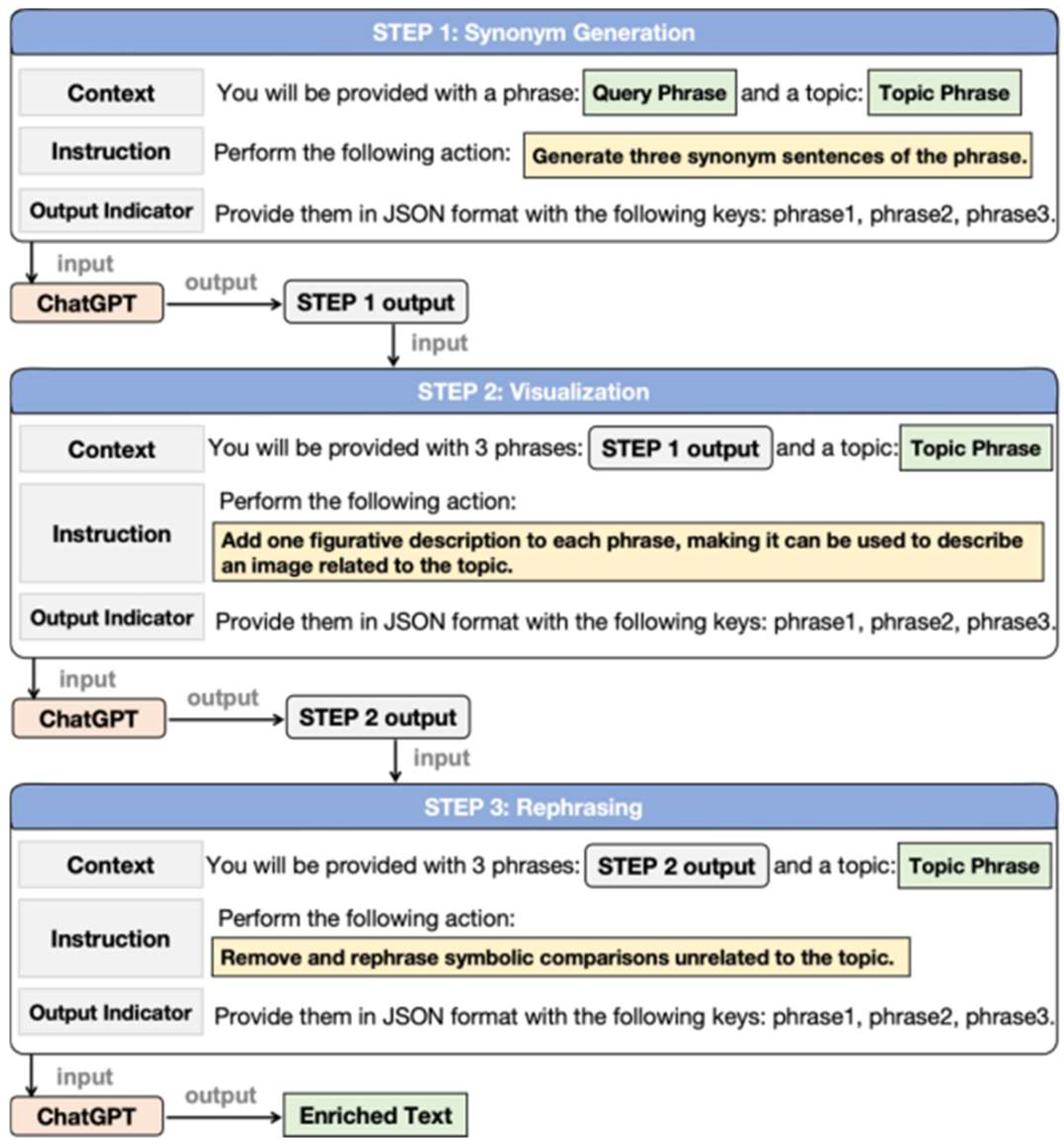

3.2.1. Image Illustration Construction Using Foundation Models

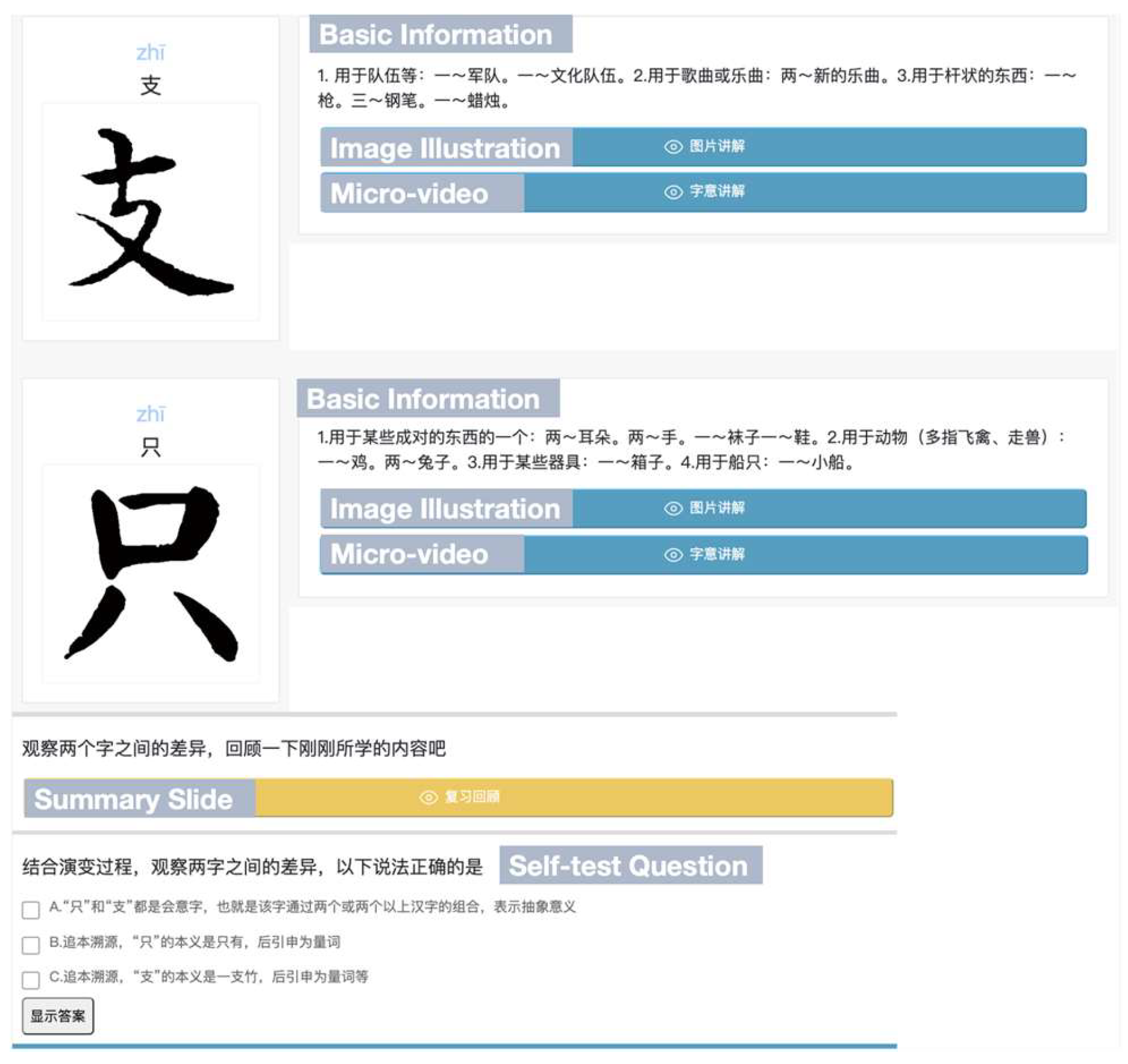

3.2.2. Multimodal Learning Resources Design

- (1)

- Image illustrations serve as visual mnemonic corresponding to the Chinese character’s text definition. Each image is tailored to effectively illustrate each meaning of the character, which can either depict sample words or convey the meaning description.

- (2)

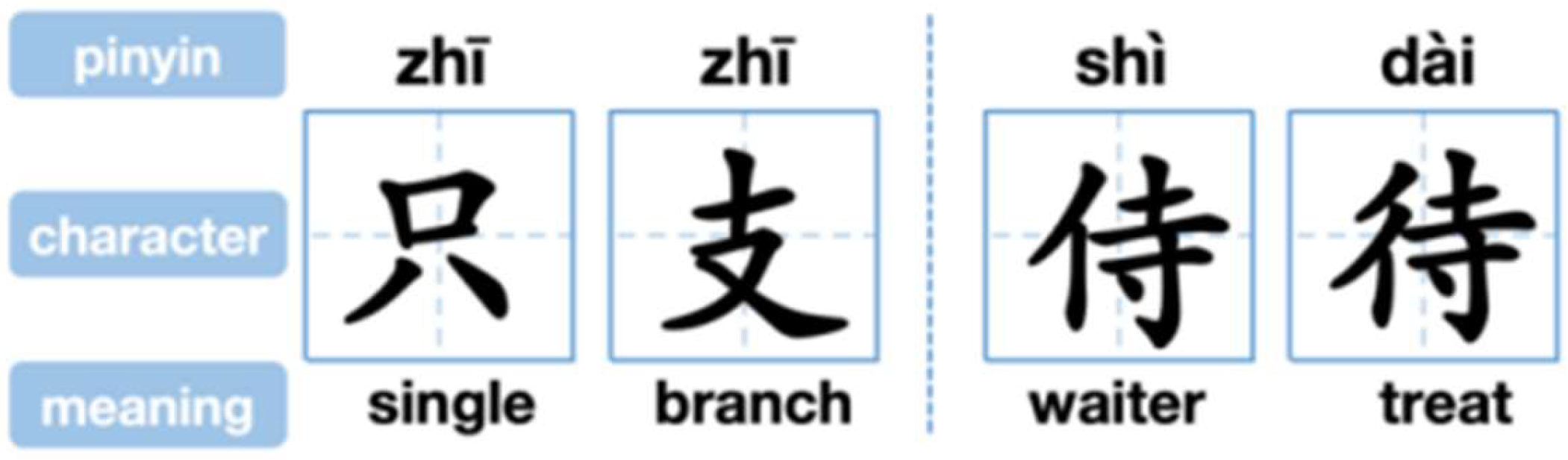

- Summary slides are created to review the key points mentioned in the other resources, presenting similar Chinese characters in relational clusters, including their pinyin, meanings and sample words, pictorial representations, current and ancient forms, as well as the evolvement between them.

- (3)

- Micro-videos mainly analyze Chinese characters from the evolvement perspective, introducing formation knowledge, as shown in Figure 7. Four short sections are designed to explain how to write the character, how the character’s form evolves in calligraphy, how the character is created and conveys meanings, and how to use the character in words.

- (4)

- Self-test questions are presented in a multiple-choice format assessing learners’ grasp of knowledge covered in the micro-videos. Learners have the option to request answers and receive immediate feedback after making their choices.

- (5)

- Basic information mainly contains pinyin and multiple definitions of Chinese characters. Pinyin represents the pronunciation of the Chinese character using the official romanization system. Each definition consists of a meaning description and sample words that demonstrate the character’s usage in words.
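The five resource types above share a per-character structure. The following is a minimal Python sketch of how such records might be modeled, assuming one record per character; the class and field names (`CharacterEntry`, `Definition`, `SelfTestQuestion`, `illustration`, `micro_video`, `summary_slide`) are hypothetical and are not taken from the authors' system. The modality mapping mirrors the modality-to-resource table, and the similar-character pair 己 (jǐ, "oneself") and 已 (yǐ, "already") is used purely for illustration.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from enum import Enum


class Modality(Enum):
    IMAGE = "image"
    VIDEO = "video"
    TEXT = "text"


# Resource-to-modality mapping, following the modality-to-resource table
RESOURCE_MODALITY = {
    "image illustration": Modality.IMAGE,
    "summary slide": Modality.IMAGE,
    "micro-video": Modality.VIDEO,
    "self-test question": Modality.TEXT,
    "basic information": Modality.TEXT,
}


@dataclass
class Definition:
    meaning: str                      # meaning description
    sample_words: list[str]           # words showing the character in use
    illustration: str | None = None   # hypothetical: image file for this meaning


@dataclass
class SelfTestQuestion:
    prompt: str
    options: list[str]
    answer_index: int

    def answer(self) -> str:
        """Return the correct option when a learner requests the answer."""
        return self.options[self.answer_index]

    def check(self, choice: int) -> tuple[bool, str]:
        """Immediate feedback: (is the choice correct?, correct option)."""
        return choice == self.answer_index, self.answer()


@dataclass
class CharacterEntry:
    character: str
    pinyin: str                       # pinyin with tone mark
    definitions: list[Definition]     # one entry per meaning
    micro_video: str | None = None    # hypothetical: URL of the four-section video
    questions: list[SelfTestQuestion] = field(default_factory=list)


def summary_slide(cluster: list[CharacterEntry]) -> list[tuple[str, str, str]]:
    """Build one row per character for a slide comparing similar characters."""
    rows = []
    for entry in cluster:
        meanings = "; ".join(
            f"{d.meaning}: {', '.join(d.sample_words)}" for d in entry.definitions
        )
        rows.append((entry.character, entry.pinyin, meanings))
    return rows


ji = CharacterEntry("己", "jǐ", [Definition("oneself", ["自己"])])
yi = CharacterEntry(
    "已", "yǐ", [Definition("already", ["已经"])],
    questions=[SelfTestQuestion("Which character means 'already'?", ["己", "已"], 1)],
)
print(summary_slide([ji, yi]))
# [('己', 'jǐ', 'oneself: 自己'), ('已', 'yǐ', 'already: 已经')]
print(yi.questions[0].check(0))  # (False, '已')
```

Keeping each meaning as its own `Definition` lets an image illustration be attached per meaning, which matches the one-image-per-meaning design described in item (1).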

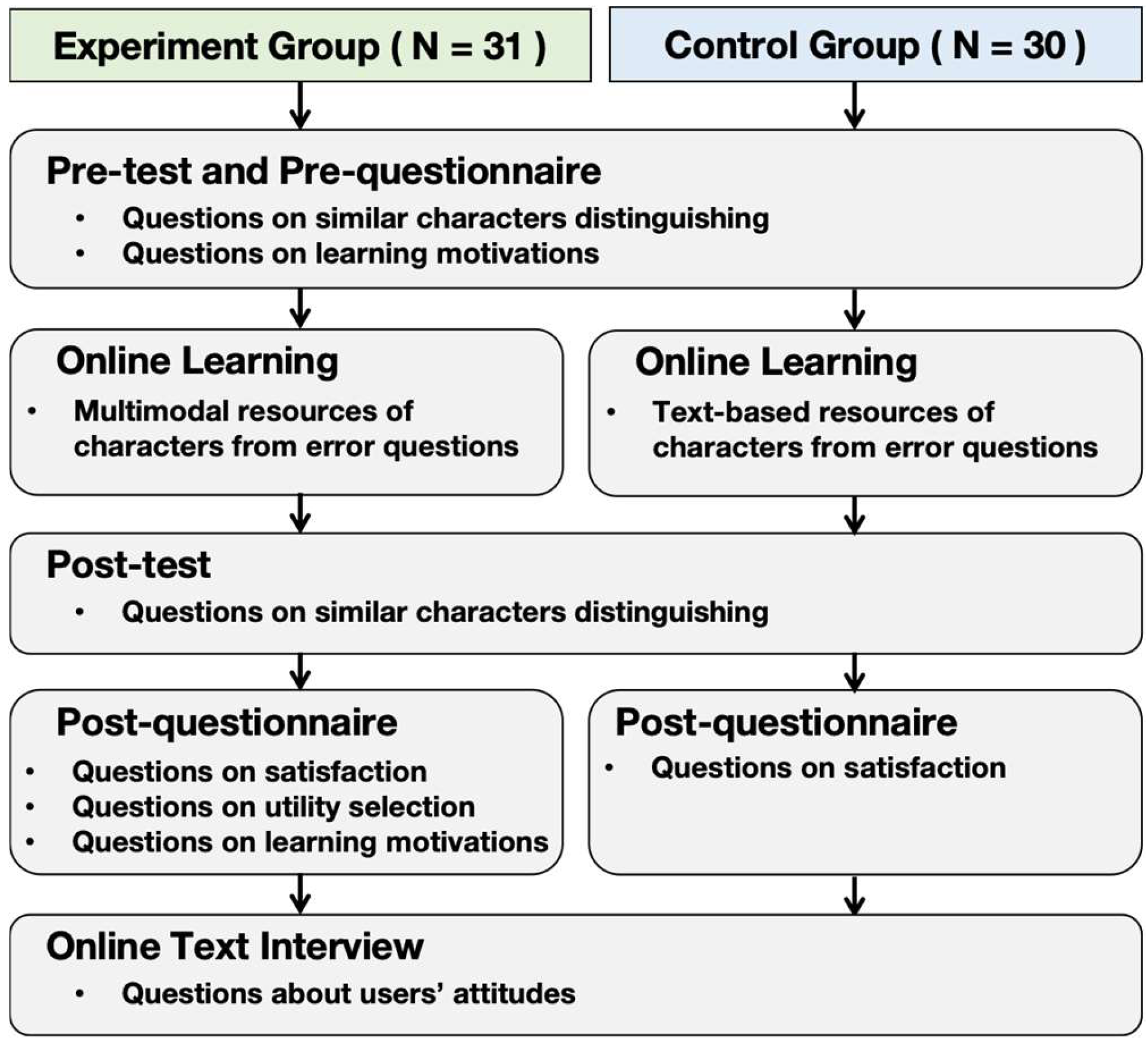

4. Experiment

4.1. Participants

4.2. Measuring Tools

4.3. Procedure

5. Results

5.1. Demographic Characteristics of the Participants

5.2. Learning Performance

5.3. Learning Motivation

5.4. Satisfaction and Attitude

6. Discussion

6.1. Efficient Framework Design for Constructing Multimodal Learning Resources

6.2. Effectiveness of Multimodal Resources in Distinguishing Similar Chinese Characters

6.3. Effective Feature Designs in Multimodal Resources for Distinguishing Similar Chinese Characters

7. Conclusions, Limitation and Future Research

Author Contributions

Funding

Institutional Review Board Statement

Data Availability Statement

Conflicts of Interest

References


| Modality | Multimodal Resources |
|---|---|
| Image | 1. Image illustration; 2. Summary slide |
| Video | 3. Micro-video |
| Text | 4. Self-test question; 5. Basic information |

| Characteristics | All (N = 61) | Experimental Group (N = 31) | Control Group (N = 30) | Statistic | df / Z | p |
|---|---|---|---|---|---|---|
|  | n (%) | n (%) | n (%) | χ² | df | p |
| Gender |  |  |  | 0.759 | 1 | 0.384 |
| Male | 13 (21.3%) | 8 (25.8%) | 5 (16.7%) |  |  |  |
| Female | 48 (78.7%) | 23 (74.2%) | 25 (83.3%) |  |  |  |
| Self-assessed proficiency in Chinese |  |  |  | 2.908 | 3 | 0.406 |
| Excellent (A) | 13 (21.3%) | 7 (22.6%) | 6 (20%) |  |  |  |
| Good (B) | 35 (57.3%) | 18 (58.1%) | 17 (56.7%) |  |  |  |
| Fair (C) | 11 (18.0%) | 4 (12.9%) | 7 (23.3%) |  |  |  |
| Pass (D) | 2 (3.3%) | 2 (6.5%) | 0 (0%) |  |  |  |
| Fail (F) | 0 (0%) | 0 (0%) | 0 (0%) |  |  |  |
| Level of Education |  |  |  | 3.823 | 3 | 0.281 |
| Secondary Education | 36 (59.0%) | 17 (54.8%) | 19 (63.3%) |  |  |  |
| Bachelor’s Degree | 22 (36.1%) | 13 (41.9%) | 9 (30.0%) |  |  |  |
| Master’s Degree | 2 (3.3%) | 0 (0%) | 2 (6.7%) |  |  |  |
| Doctoral Degree | 1 (1.6%) | 1 (3.2%) | 0 (0%) |  |  |  |
|  | Mean (SD) | Mean (SD) | Mean (SD) | U | Z | p |
| Age | 22.1 (2.47) | 21.7 (2.604) | 22.5 (2.307) | 356.5 | −1.582 | 0.114 |
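The χ² values in the demographic table can be reproduced from the reported counts. The sketch below assumes Pearson's chi-square test of independence without a continuity correction (an assumption, though it reproduces the reported statistics) and omits the all-zero Fail (F) row, whose expected counts are zero. The function names `pearson_chi2` and `chi2_p_value_odd_df` are hypothetical; the p-value helper uses the exact closed form of the chi-square upper tail for odd degrees of freedom, which covers the df = 1 and df = 3 tests here.

```python
import math


def pearson_chi2(table):
    """Pearson chi-square statistic (no continuity correction) and its df.

    `table` is a list of rows of observed counts. Rows whose counts are all
    zero (such as the Fail category) must be removed first, because their
    expected counts would be zero.
    """
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    n = sum(row_totals)
    stat = 0.0
    for r, row in enumerate(table):
        for c, observed in enumerate(row):
            expected = row_totals[r] * col_totals[c] / n
            stat += (observed - expected) ** 2 / expected
    df = (len(table) - 1) * (len(col_totals) - 1)
    return stat, df


def chi2_p_value_odd_df(stat, df):
    """Upper-tail chi-square probability, exact for odd df.

    Q = erfc(sqrt(x/2)) + 2*phi(z) * sum_{k=1}^{(df-1)/2} z^(2k-1) / (1*3*...*(2k-1)),
    where z = sqrt(x) and phi is the standard normal density.
    """
    if df < 1 or df % 2 == 0:
        raise ValueError("this closed form only covers odd degrees of freedom")
    z = math.sqrt(stat)
    density = math.exp(-stat / 2) / math.sqrt(2 * math.pi)  # phi(z)
    series, term = 0.0, z
    for k in range(1, (df - 1) // 2 + 1):
        series += term
        term *= stat / (2 * k + 1)  # next term: z^(2k+1) / (1*3*...*(2k+1))
    return math.erfc(z / math.sqrt(2)) + 2 * density * series


# Counts from the demographic table: rows = categories, columns = (experimental, control)
gender = [[8, 5], [23, 25]]
proficiency = [[7, 6], [18, 17], [4, 7], [2, 0]]  # Fail (F) row omitted: all zeros
education = [[17, 19], [13, 9], [0, 2], [1, 0]]

if __name__ == "__main__":
    for name, counts in [("Gender", gender), ("Proficiency", proficiency),
                         ("Education", education)]:
        stat, df = pearson_chi2(counts)
        p = chi2_p_value_odd_df(stat, df)
        print(f"{name}: chi2 = {stat:.3f}, df = {df}, p = {p:.3f}")
```

Run as a script, it yields χ² = 0.759, 2.908 and 3.823, with p-values that agree with the table to within rounding. For arbitrary degrees of freedom, a library routine such as SciPy's `scipy.stats.chi2_contingency` with `correction=False` is the usual choice.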

| Character Category | Question N | Group | N | Mean | SD | Adjusted Mean | Adjusted SD | F | p Value |
|---|---|---|---|---|---|---|---|---|---|
| Simple Character | 2 | Experimental | 31 | 7.32 | 1.037 | 7.437 | 0.168 | 5.799* | 0.019 |
|  |  | Control | 30 | 6.97 | 0.801 | 6.848 | 0.171 |  |  |
| Compound Character | 8 | Experimental | 31 | 29.39 | 2.186 | 29.396 | 0.294 | 3.294 | 0.075 |
|  |  | Control | 30 | 30.17 | 1.440 | 30.157 | 0.299 |  |  |

Note: * p < 0.05.
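The adjusted means above come from an analysis of covariance (ANCOVA). The covariate is not named in this section; assuming it is a single pre-test score, the standard adjusted mean for group $j$ and the degrees of freedom of the group F test are given below, where $\bar{X}_j$ and $\bar{X}$ are the group and grand covariate means, $b_W$ is the pooled within-group slope of the outcome on the covariate, $k = 2$ is the number of groups and $c = 1$ the number of covariates:

$$
\bar{Y}'_j = \bar{Y}_j - b_W\left(\bar{X}_j - \bar{X}\right), \qquad
F \sim F\left(k - 1,\; N - k - c\right) = F\left(1,\; 61 - 2 - 1\right) = F(1,\; 58)
$$

Under these assumptions, F = 5.799 on (1, 58) degrees of freedom is consistent with the reported p = 0.019, and F = 3.294 with p = 0.075.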

| Character Category | Question N | Test | Group | N | Mean | SD | t | p Value |
|---|---|---|---|---|---|---|---|---|
| Homophones | 8 | Pre-test | Experimental | 31 | 28.677 | 2.116 | −1.372 | 0.175 |
|  |  |  | Control | 30 | 29.300 | 1.242 |  |  |
|  |  | Post-test | Experimental | 31 | 29.741 | 2.228 | 0.547 | 0.586 |
|  |  |  | Control | 30 | 29.467 | 1.565 |  |  |
| Non-homophones | 2 | Pre-test | Experimental | 31 | 7.290 | 1.113 | −0.486 | 0.628 |
|  |  |  | Control | 30 | 7.433 | 1.146 |  |  |
|  |  | Post-test | Experimental | 31 | 6.968 | 1.448 | −2.439* | 0.019 |
|  |  |  | Control | 30 | 7.667 | 0.596 |  |  |

Note: * p < 0.05.

| Variables | Questionnaire | N | Mean | SD | t | p Value |
|---|---|---|---|---|---|---|
| Image Illustration | Pre-questionnaire | 31 | 4.088 | 0.862 | −0.444 | 0.661 |
|  | Post-questionnaire | 31 | 4.129 | 0.713 |  |  |
| Character Evolution and Calligraphy (Micro-video) | Pre-questionnaire | 31 | 4.074 | 0.856 | −2.698* | 0.011 |
|  | Post-questionnaire | 31 | 4.290 | 0.752 |  |  |

Note: * p < 0.05.
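The pre- and post-questionnaire ratings above come from the same 31 learners, which suggests a paired-samples t-test (an assumption; the test is not named in this section). Writing $d_i$ for learner $i$'s pre-minus-post difference, the statistic and its degrees of freedom are:

$$
t = \frac{\bar{d}}{s_d / \sqrt{n}}, \qquad
\bar{d} = \frac{1}{n}\sum_{i=1}^{n} d_i, \qquad
s_d = \sqrt{\frac{1}{n-1}\sum_{i=1}^{n}\left(d_i - \bar{d}\right)^2}, \qquad
\mathrm{df} = n - 1 = 30
$$

Because $d_i$ is taken as pre minus post, a negative $t$ reflects a higher post-questionnaire mean (for example, 4.290 versus 4.074 for the micro-video item). The individual differences are not reported, so $s_d$ cannot be recovered from the two SD columns alone.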

| Modality | Multimodal Resources | Reason Themes | Frequency |
|---|---|---|---|
| Image | Image illustration | 1. Aiding memorization | 3 |
|  |  | 2. Efficient for comparing differences between similar characters | 2 |
|  |  | 3. Stimulating interest | 2 |
|  |  | 4. Helping to understand the meanings of Chinese characters | 1 |
|  | Summary slide | 1. Containing concise and intuitive visual comparisons between similar characters | 5 |
|  |  | 2. Allowing review of new learning | 1 |
| Video | Micro-video | 1. Helping to understand the evolution and meanings of Chinese characters | 4 |
|  |  | 2. Good correlation between videos, which helps distinguish similar characters | 3 |
|  |  | 3. Stimulating interest | 3 |
|  |  | 4. Aiding memorization | 2 |
|  |  | 5. Helping to accumulate knowledge | 1 |
| Text | Self-test question | 1. Allowing testing of new learning | 1 |
|  |  | 2. Helping to distinguish differences in the meanings of Chinese characters | 1 |
|  | Basic information | 1. Intuitive | 1 |
| Total |  |  | 30 |


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Yu, Jinglei; Song, Jiachen; Lu, Yu. Harnessing Generative Artificial Intelligence to Construct Multimodal Resources for Chinese Character Learning. Systems 2025, 13(8), 692. https://doi.org/10.3390/systems13080692