How Perceived Value Drives Usage Intention of AI Digital Human Advisors in Digital Finance

Abstract

1. Introduction

2. Literature Review and Hypothesis Development

2.1. AI Digital Human Advisors in Financial Services

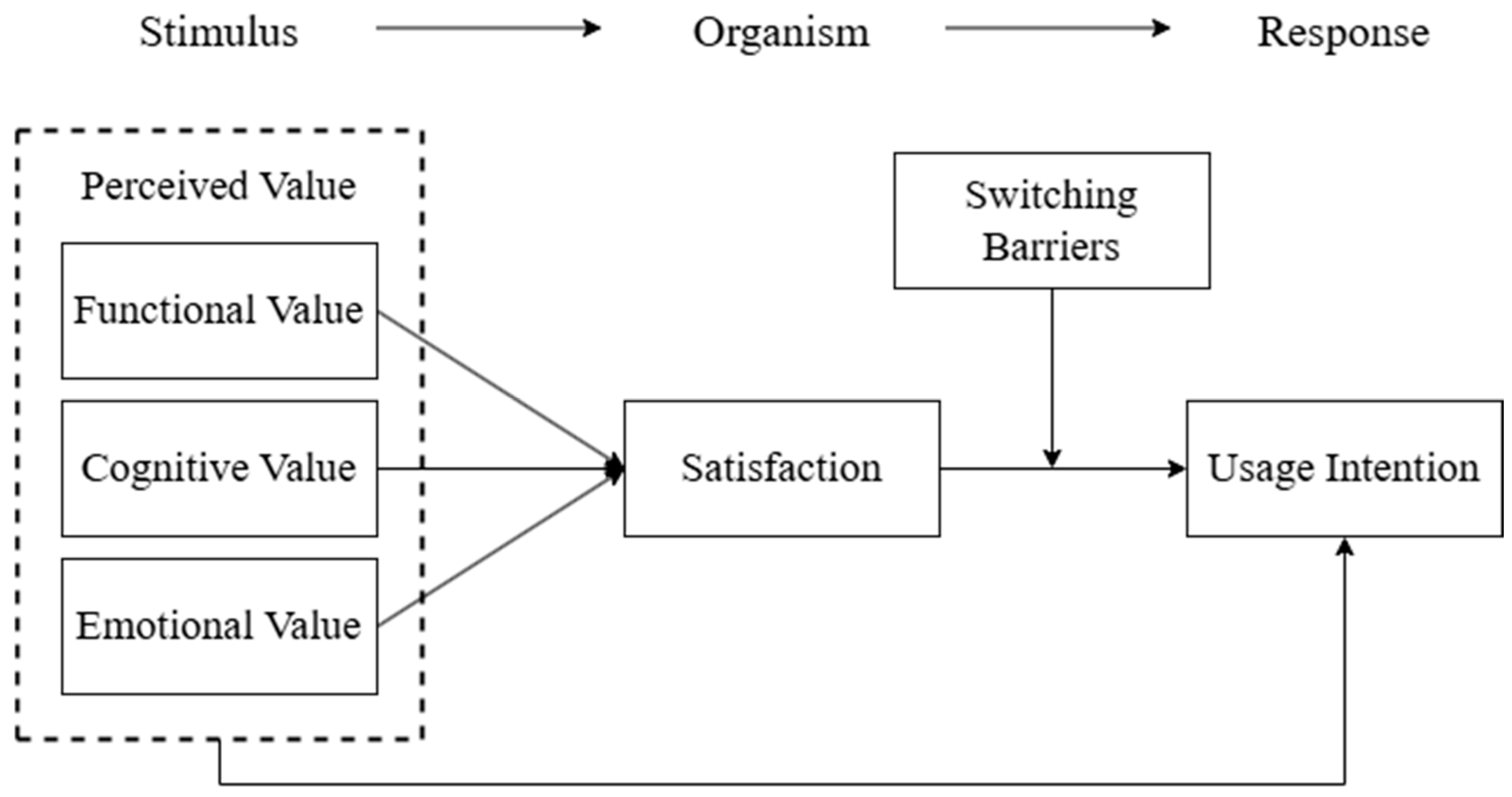

2.2. Perceived Value and Its Impact on Satisfaction

2.3. Usage Intention in Intelligent Financial Services

2.4. Satisfaction and Its Relationship with Usage Intention

2.5. Mediating Role of Satisfaction Between Perceived Value and Usage Intention

2.6. The Moderating Role of Switching Barriers Between Satisfaction and Usage Intention

2.7. Integration of Switching Barriers into the S–O–R Framework

3. Methods and Data

3.1. Survey Design and Data Collection

3.2. Scale Development and Pretest Validation

3.3. Formal Survey Sample Profile and Construct Overview

3.4. Distributional Features and Group Differences in Core Constructs

4. Results

4.1. Inter-Construct Correlations and Theoretical Alignment

4.2. Confirmatory Factor Analysis (CFA) and Model Fit

4.3. Structural Equation Modeling (SEM)

4.4. Direct Effects

4.5. Mediation Analysis

4.6. Moderation Analysis

5. Discussion

5.1. Theoretical Implications

5.2. Practical Implications

5.3. Limitations and Future Research Directions

6. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A

| Construct | Code | Measurement Item | Source |
|---|---|---|---|
| Functional Value | FV1 | The AI digital human advisor provides comprehensive and complete financial information. | [7,64] |
| | FV2 | The AI digital human advisor enhances the quality of service. | |
| | FV4 | The interactive interface of AI digital human advisors provides good visual design and usability. | |
| | FV5 | I believe AI digital human advisors represent an innovative form of financial service. | |
| Cognitive Value | CV1 | AI digital human advisors help me better understand financial products or services. | [8] |
| | CV2 | AI digital human advisors provide more objective information. | |
| | CV3 | AI digital human advisors provide more accurate information. | |
| | CV4 | AI digital human advisors offer a new way to learn about financial products and services. | |
| Emotional Value | EV1 | Using AI digital human advisors makes me feel relaxed and happy. | [9,65] |
| | EV2 | Watching AI digital human advisors explain financial products is very interesting. | |
| | EV4 | The personification of AI digital consultants does not make me uncomfortable. | |
| | EV5 | The AI digital human advisor captured my attention. | |
| Satisfaction | DS1 | I am satisfied with my experience using AI digital human advisors. | [26,58] |
| | DS3 | AI digital human advisors meet my financial consultation needs well. | |
| | DS4 | Overall, I am satisfied with the services provided by AI digital human advisors. | |
| | DS5 | I would be happy to continue relying on AI digital human advisors for financial services. | |
| Switching Barriers (Switching Cost/Alternative Attractiveness/Habit Strength) | LB1 | Adapting to AI digital human advisors requires considerable time and effort. | [46,51] |
| | LB2 | Using AI digital human advisors feels complicated and hard to operate. | |
| | LB3 | Switching to AI digital human advisors causes me to lose part of my previous service habits. | |
| | LB4 | If I had to replace human advisors, I would not consider AI digital human advisors a better option. | |
| | LB6 | I believe AI digital human advisors can hardly fully replace human advisors. | |
| | LB7 | I have become accustomed to using human customer service or human financial advisors. | |
| | LB8 | I prefer communicating with traditional financial advisors rather than switching to AI. | |
| | LB9 | While using the AI digital human advisor for financial management, I felt that my financial habits have changed. | |
| Usage Intention | WW1 | I am willing to continue using AI digital human advisors in the future. | [33,66] |
| | WW2 | I will actively follow AI digital human advisor services on major financial platforms and use them more frequently. | |
| | WW3 | I am willing to speak positively about AI digital human advisor services to friends and family. | |
| | WW4 | I would recommend AI digital human advisors to others. | |
| | WW5 | I am likely to explore more financial services provided by AI digital human advisors in the future. | |
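Appendix A lists the items retained for each construct. The paper does not spell out how item responses were combined into the construct scores in the descriptive statistics. The sketch below assumes one common approach. It averages each respondent's 1–7 Likert responses, treating no item as reverse-keyed, and checks internal consistency with Cronbach's alpha. Only the item codes come from Appendix A; the respondents are hypothetical.

```python
from statistics import fmean, variance

# Item codes for Functional Value as listed in Appendix A.
FUNCTIONAL_VALUE_ITEMS = ["FV1", "FV2", "FV4", "FV5"]


def composite_score(responses: dict[str, int], items: list[str]) -> float:
    """Mean of one respondent's 1-7 Likert answers (assumed scoring rule)."""
    for code in items:
        if not 1 <= responses[code] <= 7:
            raise ValueError(f"{code} is outside the 1-7 scale")
    return fmean(responses[code] for code in items)


def cronbach_alpha(rows: list[dict[str, int]], items: list[str]) -> float:
    """alpha = k/(k-1) * (1 - sum of item variances / variance of summed scores)."""
    k = len(items)
    item_vars = [variance([r[c] for r in rows]) for c in items]
    total_var = variance([sum(r[c] for c in items) for r in rows])
    return k / (k - 1) * (1 - sum(item_vars) / total_var)


# Three hypothetical respondents; the real sample has N = 524.
sample = [
    {"FV1": 5, "FV2": 6, "FV4": 5, "FV5": 6},
    {"FV1": 3, "FV2": 3, "FV4": 4, "FV5": 3},
    {"FV1": 7, "FV2": 6, "FV4": 7, "FV5": 7},
]
print([composite_score(r, FUNCTIONAL_VALUE_ITEMS) for r in sample])
print(round(cronbach_alpha(sample, FUNCTIONAL_VALUE_ITEMS), 3))
```

Mean scoring keeps construct scores on the original 1–7 metric. This makes them directly comparable with the Min and Max columns that follow.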

| Variable | Min | Max | M ± SD | Skewness | Excess Kurtosis (Kurtosis − 3) |
|---|---|---|---|---|---|
| Functional Value | 1.25 | 7.00 | 4.584 ± 1.271 | −0.389 | −0.574 |
| Cognitive Value | 1.25 | 7.00 | 4.786 ± 1.109 | −0.533 | 0.432 |
| Emotional Value | 1.00 | 7.00 | 4.669 ± 1.301 | −0.430 | −0.081 |
| Satisfaction | 1.00 | 7.00 | 5.070 ± 1.268 | −0.790 | 0.130 |
| Switching Barriers | 1.50 | 7.00 | 5.011 ± 1.054 | −0.826 | 0.823 |
| Usage Intention | 1.00 | 7.00 | 4.827 ± 1.292 | −0.588 | 0.009 |
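In the descriptive statistics, the kurtosis column reports excess kurtosis, which equals 0 for a normal distribution. The paper does not say which estimator produced the skewness and kurtosis values. The sketch below assumes the bias-adjusted G1 and G2 estimators, which SPSS reports and Excel's SKEW and KURT functions compute. The input scores are hypothetical.

```python
from math import sqrt


def _central_moments(xs: list[float]) -> tuple[float, float, float]:
    """Return the 2nd, 3rd, and 4th central moments (divisor n)."""
    n = len(xs)
    mean = sum(xs) / n
    dev = [x - mean for x in xs]
    m2 = sum(d ** 2 for d in dev) / n
    m3 = sum(d ** 3 for d in dev) / n
    m4 = sum(d ** 4 for d in dev) / n
    return m2, m3, m4


def sample_skewness(xs: list[float]) -> float:
    """Bias-adjusted skewness G1 (requires n >= 3)."""
    n = len(xs)
    m2, m3, _ = _central_moments(xs)
    g1 = m3 / m2 ** 1.5
    return sqrt(n * (n - 1)) / (n - 2) * g1


def sample_excess_kurtosis(xs: list[float]) -> float:
    """Bias-adjusted excess kurtosis G2 (0 for a normal distribution; requires n >= 4)."""
    n = len(xs)
    m2, _, m4 = _central_moments(xs)
    g2 = m4 / m2 ** 2 - 3
    return ((n + 1) * g2 + 6) * (n - 1) / ((n - 2) * (n - 3))


# Hypothetical construct scores on the 1-7 metric.
scores = [1.25, 3.5, 4.0, 4.75, 5.0, 5.5, 6.25, 7.0]
print(round(sample_skewness(scores), 3), round(sample_excess_kurtosis(scores), 3))
```

Negative skewness, as in every row of the table, means the scores bunch toward the upper end of the scale with a longer tail of low ratings.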

| Variable | M ± SD (Male, N = 226) | M ± SD (Female, N = 298) | t | p |
|---|---|---|---|---|
| Functional Value | 4.50 ± 1.29 | 4.65 ± 1.26 | −1.293 | 0.197 |
| Cognitive Value | 4.63 ± 1.09 | 4.90 ± 1.11 | −2.798 | 0.005 |
| Emotional Value | 4.52 ± 1.41 | 4.78 ± 1.20 | −2.228 | 0.026 |
| Satisfaction | 4.99 ± 1.31 | 5.13 ± 1.23 | −1.312 | 0.190 |
| Switching Barriers | 5.05 ± 1.08 | 4.98 ± 1.04 | 0.711 | 0.477 |
| Usage Intention | 4.67 ± 1.35 | 4.95 ± 1.23 | −2.500 | 0.013 |
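The gender comparison reports group means, standard deviations, and sizes, so its t statistics can be approximately re-derived from those summaries. The paper does not say whether the pooled-variance (Student) or Welch version was used. This sketch assumes the pooled version. It approximates the two-sided p-value with the standard normal distribution, which is adequate at df = 522 but is an assumption rather than the authors' stated procedure.

```python
from math import erfc, sqrt


def pooled_t_from_summary(m1, s1, n1, m2, s2, n2):
    """Student's independent-samples t with pooled variance; returns (t, df)."""
    df = n1 + n2 - 2
    pooled_var = ((n1 - 1) * s1 ** 2 + (n2 - 1) * s2 ** 2) / df
    se = sqrt(pooled_var * (1 / n1 + 1 / n2))
    return (m1 - m2) / se, df


def two_sided_p_normal(t):
    """Large-sample approximation: for df in the hundreds, t is close to N(0, 1)."""
    return erfc(abs(t) / sqrt(2))


# Cognitive Value: male vs. female, taken from the table above.
t, df = pooled_t_from_summary(4.63, 1.09, 226, 4.90, 1.11, 298)
print(f"t({df}) = {t:.3f}, p ~= {two_sided_p_normal(t):.3f}")
```

With the rounded Cognitive Value inputs, the sketch gives t ≈ −2.78 and p ≈ 0.005. These are close to the reported −2.798 and 0.005. A small gap is expected because the tabulated means and SDs are rounded to two decimals.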

| Variable | M ± SD (20–29 Years, N = 126) | M ± SD (30–39 Years, N = 182) | M ± SD (40–49 Years, N = 103) | M ± SD (50–59 Years, N = 99) | M ± SD (≥60 Years, N = 14) | F | p |
|---|---|---|---|---|---|---|---|
| Functional Value | 4.42 ± 1.23 | 4.69 ± 1.26 | 4.65 ± 1.26 | 4.50 ± 1.33 | 4.79 ± 1.48 | 1.088 | 0.362 |
| Cognitive Value | 4.59 ± 1.06 | 4.82 ± 1.07 | 4.99 ± 1.00 | 4.71 ± 1.29 | 5.21 ± 1.12 | 2.617 | 0.034 |
| Emotional Value | 4.61 ± 1.29 | 4.70 ± 1.30 | 4.78 ± 1.29 | 4.54 ± 1.31 | 4.80 ± 1.47 | 0.578 | 0.679 |
| Satisfaction | 4.92 ± 1.25 | 5.07 ± 1.26 | 5.24 ± 1.16 | 5.11 ± 1.35 | 4.91 ± 1.72 | 0.960 | 0.429 |
| Switching Barriers | 4.99 ± 0.84 | 4.91 ± 1.12 | 4.97 ± 1.10 | 5.20 ± 1.12 | 5.50 ± 0.93 | 2.009 | 0.092 |
| Usage Intention | 4.78 ± 1.27 | 4.87 ± 1.34 | 5.06 ± 1.14 | 4.63 ± 1.31 | 4.27 ± 1.47 | 2.204 | 0.067 |
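The age and education comparisons are one-way ANOVAs, and their F statistics can also be rebuilt from each group's N, mean, and SD. The between-group sum of squares is Σ n_g(M_g − M)². The within-group sum of squares is Σ (n_g − 1)·SD_g². F is the ratio of their mean squares. Below is a minimal sketch that uses the Cognitive Value row of the age table; it is a reconstruction from summaries, not the authors' analysis script.

```python
def one_way_anova_from_summary(groups):
    """groups: list of (n, mean, sd). Returns (F, df_between, df_within)."""
    n_total = sum(n for n, _, _ in groups)
    grand_mean = sum(n * m for n, m, _ in groups) / n_total
    ss_between = sum(n * (m - grand_mean) ** 2 for n, m, _ in groups)
    ss_within = sum((n - 1) * sd ** 2 for n, _, sd in groups)
    df_between = len(groups) - 1
    df_within = n_total - len(groups)
    f = (ss_between / df_between) / (ss_within / df_within)
    return f, df_between, df_within


# Cognitive Value by age group, from the table above: (N, M, SD).
cognitive_by_age = [
    (126, 4.59, 1.06),
    (182, 4.82, 1.07),
    (103, 4.99, 1.00),
    (99, 4.71, 1.29),
    (14, 5.21, 1.12),
]
f, df1, df2 = one_way_anova_from_summary(cognitive_by_age)
print(f"F({df1}, {df2}) = {f:.3f}")
```

This gives F(4, 519) ≈ 2.56, near the reported 2.617. The inputs are rounded to two decimals, so an exact match is not expected.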

| Variable | M ± SD (High School or Below, N = 15) | M ± SD (Associate Degree, N = 63) | M ± SD (Bachelor’s Degree, N = 354) | M ± SD (Master’s Degree and Above, N = 92) | F | p |
|---|---|---|---|---|---|---|
| Functional Value | 4.97 ± 1.11 | 4.69 ± 1.28 | 4.56 ± 1.28 | 4.54 ± 1.27 | 0.671 | 0.570 |
| Cognitive Value | 4.48 ± 1.03 | 4.87 ± 1.21 | 4.80 ± 1.05 | 4.72 ± 1.26 | 0.641 | 0.589 |
| Emotional Value | 5.10 ± 1.28 | 4.69 ± 1.27 | 4.69 ± 1.29 | 4.49 ± 1.35 | 1.205 | 0.307 |
| Satisfaction | 5.53 ± 0.65 | 4.98 ± 1.30 | 5.07 ± 1.29 | 5.07 ± 1.23 | 0.782 | 0.504 |
| Switching Barriers | 4.77 ± 1.25 | 5.08 ± 1.15 | 5.00 ± 1.04 | 5.04 ± 1.00 | 0.392 | 0.759 |
| Usage Intention | 5.00 ± 1.25 | 4.84 ± 1.15 | 4.83 ± 1.34 | 4.79 ± 1.20 | 0.115 | 0.951 |

References

1. Belanche, D.; Casaló Ariño, L.; Flavián, C.; Schepers, J. Service robot implementation: A theoretical framework and research agenda. Serv. Ind. J. 2020, 40, 203–225.

2. Mehrabian, A.; Russell, J.A. An Approach to Environmental Psychology; The MIT Press: Cambridge, MA, USA, 1974.

3. Jones, M.A.; Mothersbaugh, D.L.; Beatty, S.E. Why customers stay: Measuring the underlying dimensions of services switching costs and managing their differential strategic outcomes. J. Bus. Res. 2002, 55, 441–450.

4. Zhu, H.; Vigren, O.; Söderberg, I.-L. Implementing artificial intelligence empowered financial advisory services: A literature review and critical research agenda. J. Bus. Res. 2024, 174, 114494.

5. Khanna, P.; Jha, S. AI-Based Digital Product ‘Robo-Advisory’ for Financial Investors. Vis. J. Bus. Perspect. 2024.

6. Zaman, T.; Ahmed, S.; Lee, J. Engaging roboadvisors in financial advisory services: The role of perceived value for user adoption. Inf. Dev. 2023, 39, 145–161.

7. Sánchez, J.; Callarisa, L.; Rodríguez, R.M.; Moliner, M.A. Perceived value of the purchase of a tourism product. Tour. Manag. 2006, 27, 394–409.

8. Sheth, J.N.; Newman, B.I.; Gross, B.L. Why we buy what we buy: A theory of consumption values. J. Bus. Res. 1991, 22, 159–170.

9. Sweeney, J.C.; Soutar, G.N. Consumer perceived value: The development of a multiple item scale. J. Retail. 2001, 77, 203–220.

10. Feine, J.; Gnewuch, U.; Morana, S.; Maedche, A. A Taxonomy of Social Cues for Conversational Agents. Int. J. Hum.-Comput. Stud. 2019, 132, 138–161.

11. Gnewuch, U.; Morana, S.; Maedche, A. Towards Designing Cooperative and Social Conversational Agents for Customer Service. In Proceedings of the 38th International Conference on Information Systems (ICIS 2017), Seoul, Republic of Korea, December 2017.

12. Byambaa, O.; Yondon, C.; Rentsen, E.; Darkhijav, B.; Rahman, M. An empirical examination of the adoption of artificial intelligence in banking services: The case of Mongolia. Future Bus. J. 2025, 11, 76.

13. Dimitriadis, K.A.; Koursaros, D.; Savva, C.S. Exploring the dynamic nexus of traditional and digital assets in inflationary times: The role of safe havens, tech stocks, and cryptocurrencies. Econ. Model. 2025, 151, 107195.

14. Zeithaml, V. Consumer Perceptions of Price, Quality and Value: A Means-End Model and Synthesis of Evidence. J. Mark. 1988, 52, 2–22.

15. Monroe, K.B. Pricing: Making Profitable Decisions, 3rd ed.; McGraw-Hill: New York, NY, USA, 2003.

16. Woodruff, R.B. Customer value: The next source for competitive advantage. J. Acad. Mark. Sci. 1997, 25, 139–153.

17. Kim, H.; Park, S. Machines think, but do we? Embracing AI in flat organizations. Inf. Dev. 2024, 40, 25–42.

18. Lee, J.; Xiong, Y. Investigating the factors influencing the adoption and use of artificial agents in higher education. Inf. Dev. 2024, 40, 87–102.

19. Costa, R.; Cruz, M.; Gonçalves, R.; Dias, Á.; da Silva, R.V.; Pereira, L. Artificial intelligence and its adoption in financial services. Int. J. Serv. Oper. Inf. 2022, 12, 70.

20. Ali, O.; Murray, P.A.; Al-Ahmad, A.; Jeon, I.; Dwivedi, Y.K. Comprehending the theoretical knowledge and practice around AI-enabled innovations in the finance sector. J. Innov. Knowl. 2025, 10, 100762.

21. Kanaparthi, V. AI-based Personalization and Trust in Digital Finance. arXiv 2024, arXiv:2401.15700.

22. Oliver, R.L. A Cognitive Model of the Antecedents and Consequences of Satisfaction Decisions. J. Mark. Res. 1980, 17, 460–469.

23. Cronin, J.J.; Brady, M.K.; Hult, G.T.M. Assessing the effects of quality, value, and customer satisfaction on consumer behavioral intentions in service environments. J. Retail. 2000, 76, 193–218.

24. Hu, H.-H.; Kandampully, J.; Juwaheer, T.D. Relationships and impacts of service quality, perceived value, customer satisfaction, and image: An empirical study. Serv. Ind. J. 2009, 29, 111–125.

25. Lee, C.-K.; Yoon, Y.-S.; Lee, S.-K. Investigating the relationships among perceived value, satisfaction, and recommendations: The case of the Korean DMZ. Tour. Manag. 2007, 28, 204–214.

26. Spreng, R.A.; MacKenzie, S.B.; Olshavsky, R.W. A Reexamination of the Determinants of Consumer Satisfaction. J. Mark. 1996, 60, 15–32.

27. Jacoby, J. Stimulus-Organism-Response Reconsidered: An Evolutionary Step in Modeling (Consumer) Behavior. J. Consum. Psychol. 2002, 12, 51–57.

28. Ajzen, I. From Intentions to Actions: A Theory of Planned Behavior. In Action Control: From Cognition to Behavior; Kuhl, J., Beckmann, J., Eds.; Springer: Berlin/Heidelberg, Germany, 1985; pp. 11–39.

29. Venkatesh, V.; Thong, J.; Xu, X. Consumer Acceptance and Use of Information Technology: Extending the Unified Theory of Acceptance and Use of Technology. MIS Q. 2012, 36, 157–178.

30. Sokolova, K.; Perez, C. You follow fitness influencers on YouTube. But do you actually exercise? How parasocial relationships, and watching fitness influencers, relate to intentions to exercise. J. Retail. Consum. Serv. 2021, 58, 102276.

31. Chen, C.-C.; Lin, Y.-C. What drives live-stream usage intention? The perspectives of flow, entertainment, social interaction, and endorsement. Telemat. Inform. 2018, 35, 293–303.

32. Wang, Z.; Yuan, R.; Li, B.; Kumar, V.; Kumar, A. An Empirical Study of AI Financial Advisor Adoption Through Technology Vulnerabilities in the Financial Context. J. Prod. Innov. Manag. 2025, 10, 12795.

33. Salisbury, W.; Pearson, R.; Pearson, A.; Miller, D. Perceived security and World Wide Web purchase intention. Ind. Manag. Data Syst. 2001, 101, 165–177.

34. Cardozo, R.N. An Experimental Study of Customer Effort, Expectation, and Satisfaction. J. Mark. Res. 1965, 2, 244–249.

35. Zeithaml, V.A.; Berry, L.L.; Parasuraman, A. The behavioral consequences of service quality. J. Mark. 1996, 60, 31–46.

36. Kim, D.; Ferrin, D.; Rao, R. Trust and Satisfaction, Two Stepping Stones for Successful E-Commerce Relationships: A Longitudinal Exploration. Inf. Syst. Res. 2009, 20, 237–257.

37. Chen, S.-C.; Liu, M.-L.; Lin, C.-P. Integrating Technology Readiness into the Expectation-Confirmation Model: An Empirical Study of Mobile Services. Cyberpsychol. Behav. Soc. Netw. 2013, 16, 604–612.

38. Barjaktarovic Rakocevic, S.; Rakic, N.; Rakocevic, R. An Interplay Between Digital Banking Services, Perceived Risks, Customers’ Expectations, and Customers’ Satisfaction. Risks 2025, 13, 39.

39. Shin, D. The effects of explainability and causability on perception, trust, and acceptance: Implications for explainable AI. Int. J. Hum.-Comput. Stud. 2021, 146, 102551.

40. Wirtz, J.; Patterson, P.G.; Kunz, W.H.; Gruber, T.; Lu, V.N.; Paluch, S.; Martins, A. Brave new world: Service robots in the frontline. J. Serv. Manag. 2018, 29, 907–931.

41. Cao, J.; Meng, T. Ethics and governance of artificial intelligence in digital China: Evidence from online survey and social media data. Chin. J. Sociol. 2025, 11, 58–89.

42. Huynh, M.-T.; Aichner, T. In generative artificial intelligence we trust: Unpacking determinants and outcomes for cognitive trust. AI Soc. 2025.

43. Anderson, E.W.; Fornell, C.; Lehmann, D.R. Customer Satisfaction, Market Share, and Profitability: Findings from Sweden. J. Mark. 1994, 58, 53–66.

44. Yum, K.; Kim, J. The Influence of Perceived Value, Customer Satisfaction, and Trust on Loyalty in Entertainment Platforms. Appl. Sci. 2024, 14, 5763.

45. Fornell, C. A National Customer Satisfaction Barometer: The Swedish Experience. J. Mark. 1992, 56, 6–21.

46. Jones, M.A.; Mothersbaugh, D.L.; Beatty, S.E. Switching barriers and repurchase intentions in services. J. Retail. 2000, 76, 259–274.

47. Ouyang, L.; Mak, J. Examining how switching barriers moderate the link between customer satisfaction and repurchase intention in the health and fitness club sector. Asia Pac. J. Mark. Logist. 2025.

48. Lee, M.; Lee, J.; Feick, L. The impact of switching barriers on the customer satisfaction–loyalty link: Mobile phone service in France. J. Serv. Mark. 2001, 15, 35–48.

49. Verplanken, B.; Aarts, H. Habit, Attitude, and Planned Behaviour: Is Habit an Empty Construct or an Interesting Case of Automaticity? Eur. Rev. Soc. Psychol. 2011, 10, 101–134.

50. Evanschitzky, H.; Stan, V.; Nagengast, L. Strengthening the satisfaction loyalty link: The role of relational switching costs. Mark. Lett. 2022, 33, 293–310.

51. Chuang, Y.-F. Pull-and-suck effects in Taiwan mobile phone subscribers switching intentions. Telecommun. Policy 2011, 35, 128–140.

52. Kim, J.; Lennon, S. Effects of reputation and website quality on online consumers’ emotion, perceived risk and purchase intention: Based on the stimulus-organism-response model. J. Res. Interact. Mark. 2013, 7, 33–56.

53. Albarq, A. Effect of Web atmospherics and satisfaction on purchase behavior: Stimulus–organism–response model. Future Bus. J. 2021, 7, 62.

54. iiMedia Research. White Paper on the Development of China’s Virtual Digital Human Industry in 2024; iiMedia Research Group: Guangzhou, China, 2024.

55. Hair, J.F.; Black, W.C.; Babin, B.J.; Anderson, R.E. Multivariate Data Analysis, 8th ed.; Cengage Learning: Andover, MA, USA, 2021.

56. Hu, L.T.; Bentler, P.M. Cutoff criteria for fit indexes in covariance structure analysis: Conventional criteria versus new alternatives. Struct. Equ. Model. A Multidiscip. J. 1999, 6, 1–55.

57. Fornell, C.; Larcker, D.F. Evaluating structural equation models with unobservable variables and measurement error. J. Mark. Res. 1981, 18, 39–50.

58. Bhattacherjee, A. Understanding Information Systems Continuance: An Expectation-Confirmation Model. MIS Q. 2001, 25, 351–370.

59. Chen, Z.; Dubinsky, A.J. A conceptual model of perceived customer value in e-commerce: A preliminary investigation. Psychol. Mark. 2003, 20, 323–347.

60. Lin, C.-P.; Bhattacherjee, A. Extending technology usage models to interactive hedonic technologies: A theoretical model and empirical test. Inf. Syst. J. 2010, 20, 163–181.

61. Whang, J.B.; Song, J.H.; Choi, B.; Lee, J.-H. The effect of Augmented Reality on purchase intention of beauty products: The roles of consumers’ control. J. Bus. Res. 2021, 133, 275–284.

62. Nass, C.; Moon, Y. Machines and Mindlessness: Social Responses to Computers. J. Soc. Issues 2000, 56, 81–103.

63. Ho, C.-C.; MacDorman, K.F. Revisiting the uncanny valley theory: Developing and validating an alternative to the Godspeed indices. Comput. Hum. Behav. 2010, 26, 1508–1518.

64. Parasuraman, A.P.; Zeithaml, V.; Malhotra, A. E-S-Qual: A Multiple-Item Scale for Assessing Electronic Service Quality. J. Serv. Res. 2005, 7, 213–233.

65. Davis, F.D.; Bagozzi, R.P.; Warshaw, P.R. Extrinsic and intrinsic motivation to use computers in the workplace. J. Appl. Soc. Psychol. 1992, 22, 1111–1132.

66. Xu, J.; Benbasat, I.; Cenfetelli, R.T. Integrating Service Quality with System and Information Quality: An Empirical Test in the E-Service Context. MIS Q. 2013, 37, 777–794.

| Characteristic | Options | Frequency | Percentage (%) |
|---|---|---|---|
| Gender | Male | 226 | 43.13 |
| | Female | 298 | 56.87 |
| Age | 20–29 years | 126 | 24.05 |
| | 30–39 years | 182 | 34.73 |
| | 40–49 years | 103 | 19.66 |
| | 50–59 years | 99 | 18.89 |
| | 60 years and above | 14 | 2.67 |
| Highest Education Level | High School or below | 15 | 2.86 |
| | Associate Degree | 63 | 12.02 |
| | Bachelor’s Degree | 354 | 67.56 |
| | Master’s Degree and above | 92 | 17.56 |
| Monthly Income | 1500 yuan and below | 72 | 13.74 |
| | 1501–3000 yuan | 169 | 32.25 |
| | 3001–5000 yuan | 193 | 36.83 |
| | 5001–10,000 yuan | 79 | 15.08 |
| | Over 10,000 yuan | 11 | 2.10 |
| Monthly Frequency of Using Financial Apps | Almost Never | 40 | 7.63 |
| | 5–10 times | 136 | 25.95 |
| | 11–15 times | 77 | 14.69 |
| | 16–20 times | 61 | 11.64 |
| | 21–25 times | 41 | 7.82 |
| | 26–30 times | 76 | 14.50 |
| | Daily use | 93 | 17.75 |
| Occupation | Corporate Employee | 339 | 64.69 |
| | Individual/Independent | 136 | 25.95 |
| | Student | 44 | 8.40 |
| | Other | 5 | 0.95 |
| City of Residence | First-tier City | 102 | 19.47 |
| | New First-tier City | 149 | 28.44 |
| | Second-tier City | 176 | 33.59 |
| | Other Cities | 97 | 18.51 |
| Total | | 524 | 100.00 |

| Dimension | Item | Factor Loading | AVE | CR |
|---|---|---|---|---|
| Functional Value | FV1 | 0.735 | 0.58 | 0.84 |
| | FV2 | 0.684 | | |
| | FV3 | 0.819 | | |
| | FV4 | 0.789 | | |
| Cognitive Value | CV1 | 0.633 | 0.56 | 0.83 |
| | CV2 | 0.812 | | |
| | CV3 | 0.714 | | |
| | CV4 | 0.809 | | |
| Emotional Value | EV1 | 0.768 | 0.61 | 0.86 |
| | EV2 | 0.779 | | |
| | EV3 | 0.788 | | |
| | EV4 | 0.799 | | |
| Satisfaction | DS1 | 0.849 | 0.64 | 0.88 |
| | DS2 | 0.803 | | |
| | DS3 | 0.785 | | |
| | DS4 | 0.770 | | |
| Switching Barriers | LB1 | 0.762 | 0.60 | 0.85 |
| | LB2 | 0.844 | | |
| | LB3 | 0.817 | | |
| | LB4 | 0.832 | | |
| | LB5 | 0.817 | | |
| | LB6 | 0.760 | | |
| | LB7 | 0.774 | | |
| | LB8 | 0.780 | | |
| Usage Intention | WW1 | 0.749 | 0.54 | 0.85 |
| | WW2 | 0.755 | | |
| | WW3 | 0.713 | | |
| | WW4 | 0.744 | | |
| | WW5 | 0.704 | | |
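The AVE and CR columns follow from the standardized loadings via the formulas popularized by Fornell and Larcker. AVE is the mean squared loading. CR is (Σλ)² / ((Σλ)² + Σ(1 − λ²)), assuming uncorrelated measurement errors. Commonly used benchmarks are AVE ≥ 0.50 and CR ≥ 0.70. The sketch below recomputes the Functional Value entries from the loadings in the table.

```python
def ave(loadings):
    """Average variance extracted: mean of squared standardized loadings."""
    return sum(lam ** 2 for lam in loadings) / len(loadings)


def composite_reliability(loadings):
    """CR = (sum lam)^2 / ((sum lam)^2 + sum(1 - lam^2)), errors assumed uncorrelated."""
    s = sum(loadings)
    error_variance = sum(1 - lam ** 2 for lam in loadings)
    return s ** 2 / (s ** 2 + error_variance)


functional_value = [0.735, 0.684, 0.819, 0.789]  # FV loadings from the table above
print(f"AVE = {ave(functional_value):.2f}, CR = {composite_reliability(functional_value):.2f}")
```

It prints AVE = 0.58 and CR = 0.84, matching the table. The same two functions apply unchanged to any row group of the table.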

| Path Relationship | Effect Type | Standardized Effect Value | 95% CI | Effect Proportion |
|---|---|---|---|---|
| Cognitive Value → Satisfaction → Usage Intention | Direct Effect | 0.132 | [−0.028, 0.292] | - |
| | Indirect Effect | 0.073 | [0.010, 0.136] | 35.6% |
| | Total Effect | 0.205 | [0.043, 0.367] | - |
| Emotional Value → Satisfaction → Usage Intention | Direct Effect | 0.345 | [0.187, 0.503] | 81.4% |
| | Indirect Effect | 0.079 | [0.018, 0.140] | 18.6% |
| | Total Effect | 0.424 | [0.268, 0.580] | - |
| Functional Value → Satisfaction → Usage Intention | Direct Effect | 0.025 | [−0.108, 0.158] | - |
| | Indirect Effect | 0.010 | [−0.014, 0.034] | - |
| | Total Effect | 0.035 | [−0.100, 0.170] | - |
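In the mediation table, the total effect equals the direct effect plus the indirect effect. The "Effect Proportion" column gives each component's share of that total. A proportion is only interpretable when both components share a sign and the indirect effect is distinguishable from zero. The table leaves it blank for Functional Value, whose indirect-effect CI [−0.014, 0.034] spans zero. The check below uses the tabulated point estimates.

```python
def decompose(direct, indirect):
    """Return (total effect, share of the total carried by the indirect path)."""
    total = direct + indirect
    if total == 0:
        raise ValueError("proportion mediated is undefined when the total effect is 0")
    return total, indirect / total


for path, direct, indirect in [
    ("Cognitive Value", 0.132, 0.073),
    ("Emotional Value", 0.345, 0.079),
]:
    total, share = decompose(direct, indirect)
    print(f"{path}: total = {total:.3f}, indirect share = {share:.1%}")
```

This reproduces the reported totals and the 35.6% (cognitive value) and 18.6% (emotional value) indirect shares.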

| Model | Variable | β | p |
|---|---|---|---|
| Model 1: Satisfaction (M) | Functional Value (X1) | 0.051 | 0.352 |
| | Cognitive Value (X2) | 0.300 *** | < 0.001 |
| | Emotional Value (X3) | 0.419 *** | < 0.001 |
| | R² | 0.408 | |
| Model 2: Usage Intention (Y) | Functional Value (X1) | 0.023 | 0.715 |
| | Cognitive Value (X2) | 0.100 | 0.097 |
| | Emotional Value (X3) | 0.338 *** | < 0.001 |
| | Satisfaction (M) | 0.184 ** | 0.005 |
| | Switching Barriers (W) | −0.152 * | 0.045 |
| | M × W (Interaction Term) | −0.307 *** | < 0.001 |
| | R² | 0.367 | |

Note: * p < 0.05; ** p < 0.01; *** p < 0.001.
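Model 2 includes the satisfaction × switching-barriers product term, so the slope of usage intention on satisfaction depends on the moderator: slope(w) = β_M + β_MW·w. An indirect effect through satisfaction correspondingly becomes a·(β_M + β_MW·w), where a is the path from a value dimension to satisfaction. The sketch assumes W is standardized (mean 0, SD 1), so that w = ±1 marks the low and high levels. The paper does not state its centering or its exact low/high values. The bootstrapped conditional indirect effects in the following table come from the authors' own estimation and need not equal these products of standardized point estimates.

```python
def simple_slope(b_m, b_mw, w):
    """Slope of Y on M at moderator value w, for Y = ... + b_m*M + b_w*W + b_mw*M*W."""
    return b_m + b_mw * w


def conditional_indirect(a, b_m, b_mw, w):
    """Indirect effect X -> M -> Y when W moderates only the M -> Y path."""
    return a * simple_slope(b_m, b_mw, w)


B_M, B_MW = 0.184, -0.307  # Model 2: Satisfaction (M) and M x W coefficients
for label, w in [("low (-1 SD)", -1.0), ("mean", 0.0), ("high (+1 SD)", 1.0)]:
    print(f"{label}: slope of usage intention on satisfaction = {simple_slope(B_M, B_MW, w):.3f}")
```

Under the standardization assumption, the satisfaction slope is 0.491 at low switching barriers, 0.184 at the mean, and −0.123 at high switching barriers. This pattern matches the direction of the negative moderation stated in H11.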

| Mediated Path | Effect | Boot SE | Boot CI Lower Bound | Boot CI Upper Bound | p |
|---|---|---|---|---|---|
| Low Switching Barriers Level | | | | | |
| Cognitive Value → Satisfaction → Usage Intention | 0.224 | 0.050 | 0.126 | 0.322 | 0.001 |
| Emotional Value → Satisfaction → Usage Intention | 0.242 | 0.061 | 0.122 | 0.362 | < 0.001 |
| Functional Value → Satisfaction → Usage Intention | 0.031 | 0.034 | −0.036 | 0.098 | 0.363 |
| High Switching Barriers Level | | | | | |
| Cognitive Value → Satisfaction → Usage Intention | −0.030 | 0.036 | −0.100 | 0.040 | 0.415 |
| Emotional Value → Satisfaction → Usage Intention | −0.033 | 0.039 | −0.109 | 0.043 | 0.411 |
| Functional Value → Satisfaction → Usage Intention | −0.004 | 0.021 | −0.045 | 0.037 | 0.615 |
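The "Boot SE" and "Boot CI" columns come from bootstrap resampling. Neither the number of resamples nor the interval type (percentile or bias-corrected) is reported. The sketch below is therefore a plain percentile bootstrap for an unmoderated indirect effect a·b. It runs on synthetic data with a known true indirect effect of 0.2, not on the study data. A conditional version would multiply a by the moderator-specific slope of Y on M inside the same loop.

```python
import random
from statistics import fmean, stdev


def _cov(u, v):
    mu, mv = fmean(u), fmean(v)
    return sum((a - mu) * (b - mv) for a, b in zip(u, v)) / (len(u) - 1)


def indirect_effect(x, m, y):
    """a*b, with a from OLS of M on X and b from OLS of Y on X and M."""
    vx, vm = _cov(x, x), _cov(m, m)
    cxm, cxy, cmy = _cov(x, m), _cov(x, y), _cov(m, y)
    a = cxm / vx
    b = (cmy * vx - cxy * cxm) / (vx * vm - cxm ** 2)  # slope of M controlling for X
    return a * b


def percentile_bootstrap(x, m, y, n_boot=1000, level=0.95, seed=42):
    """Resample respondents with replacement; return (Boot SE, CI lower, CI upper)."""
    rng = random.Random(seed)
    n = len(x)
    draws = []
    for _ in range(n_boot):
        idx = [rng.randrange(n) for _ in range(n)]
        draws.append(indirect_effect([x[i] for i in idx], [m[i] for i in idx], [y[i] for i in idx]))
    draws.sort()
    tail = (1 - level) / 2
    lower = draws[round(tail * n_boot)]
    upper = draws[round((1 - tail) * n_boot) - 1]
    return stdev(draws), lower, upper


# Synthetic (hypothetical) data: true a = 0.5, b = 0.4, so the true indirect effect is 0.2.
gen = random.Random(7)
x = [gen.gauss(0, 1) for _ in range(300)]
m = [0.5 * xi + gen.gauss(0, 1) for xi in x]
y = [0.4 * mi + 0.1 * xi + gen.gauss(0, 1) for xi, mi in zip(x, m)]
est = indirect_effect(x, m, y)
se, lo, hi = percentile_bootstrap(x, m, y)
print(f"indirect = {est:.3f}, Boot SE = {se:.3f}, 95% CI [{lo:.3f}, {hi:.3f}]")
```

As in the table, an indirect effect is treated as significant when its bootstrap interval excludes zero. The high-switching-barriers rows fail this test, while the cognitive and emotional rows at low switching barriers pass it.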

| No. | Research Hypothesis | Test Results |
|---|---|---|
| H1 | The functional value of AI digital human advisors positively influences user satisfaction. | Not Supported |
| H2 | The cognitive value of AI digital human advisors positively influences user satisfaction. | Supported |
| H3 | The emotional value of AI digital human advisors positively influences user satisfaction. | Supported |
| H4 | The functional value of AI digital human advisors directly and positively influences users’ usage intention. | Not Supported |
| H5 | The cognitive value of AI digital human advisors directly and positively influences users’ usage intention. | Not Supported |
| H6 | The emotional value of AI digital human advisors directly and positively influences users’ usage intention. | Supported |
| H7 | User satisfaction with AI digital human advisors positively influences their usage intention. | Supported |
| H8 | Satisfaction mediates the relationship between functional value and users’ usage intention. | Not Supported |
| H9 | Satisfaction mediates the relationship between cognitive value and users’ usage intention. | Supported |
| H10 | Satisfaction mediates the relationship between emotional value and users’ usage intention. | Supported |
| H11 | Switching barriers negatively moderate the relationship between user satisfaction and usage intention. | Supported |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Tang, Y.; Son, H. How Perceived Value Drives Usage Intention of AI Digital Human Advisors in Digital Finance. Systems 2025, 13, 973. https://doi.org/10.3390/systems13110973

Tang Y, Son H. How Perceived Value Drives Usage Intention of AI Digital Human Advisors in Digital Finance. Systems. 2025; 13(11):973. https://doi.org/10.3390/systems13110973

Chicago/Turabian Style: Tang, Yishu, and Hosung Son. 2025. "How Perceived Value Drives Usage Intention of AI Digital Human Advisors in Digital Finance" Systems 13, no. 11: 973. https://doi.org/10.3390/systems13110973

APA Style: Tang, Y., & Son, H. (2025). How Perceived Value Drives Usage Intention of AI Digital Human Advisors in Digital Finance. Systems, 13(11), 973. https://doi.org/10.3390/systems13110973