Abstract

Over the last two years, with the rapid development of artificial intelligence, Large Language Models (LLMs) have attracted significant attention from the academic sector, making their application in higher education attractive for students, managers, faculty, and stakeholders. We conducted a Systematic Literature Review on the adoption of LLMs in the higher education system to address persistent issues and promote critical thinking, teamwork, and problem-solving skills. Following the PRISMA 2020 protocol, a systematic search was conducted in the Web of Science Core Collection for studies published between 2023 and 2024. After screening the 203 records retrieved, we included 22 articles for further analysis. The findings show that LLMs can transform traditional teaching through active learning, align curricula with real-world demands, provide personalized feedback in large classes, and enhance assessment practices focused on applied problem-solving. Their effects are transversal, influencing multiple dimensions of higher education systems. Consequently, LLMs have the potential to improve educational equity, strengthen workforce readiness, and foster innovation across disciplines and institutions. This systematic review is registered in PROSPERO (2025 CRD420251165731).

1. Introduction

Higher education is essential for every country. Universities and colleges create and share knowledge, helping students become skilled workers, responsible citizens, and individuals who can think critically [,]. In many countries, completing university can transform a person’s life and help them secure better job opportunities. It is also essential to consider that the quality of higher education has a direct impact on national innovation and productivity.

However, many students are not developing the necessary skills for life and work [,], including problem-solving, teamwork, and creative thinking. This is a complex problem, and several factors may explain why many higher education systems fail to develop these skills in students [,]. Among the reasons, we highlight the following [,,]:

- Traditional teaching methods focus on memorization, instead of developing critical thinking, teamwork, and creative problem-solving through hands-on activities or active participation [,].

- Curricula do not align with the skills required in real-life situations because many courses are primarily theoretical, lacking practical experience []. As a result, students have fewer opportunities to work on projects, internships, or group tasks, making it difficult for them to apply what they learn in professional settings.

- Large classes hinder the teaching and learning process []. In this scenario, teachers and students face various challenges that impact student development in the short and long term.

- There is an absence or delay in efficient and effective feedback from teachers [].

- Traditional assessment methods, which require memorizing information, do not promote problem-solving, creativity, or practical skills, neglecting the skills students need for work and life [].

Therefore, some universities have tried new methods based on Information and Communication Technologies (ICTs), such as e-learning [], gamification [], mobile technology [], and virtual reality [], to encourage active student participation inside and outside the classroom.

Given this promising situation, international organizations, governments, universities, and experts recommend using ICTs to enhance access to education and improve learning quality. ICTs are expected to help (a) overcome limitations of traditional teaching with interactive activities and student-centered learning; (b) offer personalized feedback; (c) align the curriculum with real-world skills; and (d) enable flexible and meaningful assessment methods. Additionally, ICTs are anticipated to strengthen educational systems, improve university governance, and support administrative processes.

Building on these technologies, Artificial Intelligence (AI) promises to drive educational innovation, offering new ways to enhance teaching, learning, and institutional management [,]. Within this field, LLMs represent one of the most transformative technologies, with the potential to reshape higher education systems by addressing persistent problems, such as traditional teaching methods focused on memorization, curricula misaligned with real-world needs, delayed feedback, and limited opportunities for skill development in large classes. By resolving these problems, universities can strengthen critical thinking, collaboration, and problem-solving skills, preparing future professionals to solve complex problems with creativity and adaptability.

However, despite increasing interest in their use, a clear gap remains in the literature regarding their adoption in higher education and their potential to address ongoing challenges while developing skills for life and work. To address this gap, this systematic literature review analyzes and synthesizes current evidence on how LLMs are being adopted in higher education and how their use can help solve persistent systemic problems within higher education systems. Specifically, this review evaluates the extent to which LLMs can (a) promote critical thinking, collaboration, and problem-solving skills; (b) bridge the gap between theoretical curricula and real-world applications; (c) provide scalable, personalized feedback in large courses; and (d) support the development of competency-based assessments. By integrating findings from diverse institutional contexts, this review aims to offer evidence on how LLMs can help modernize the higher education system to prepare students for innovation, employability, and life. The research findings can contribute to understanding the role of LLMs in higher education, benefiting institutions, researchers, stakeholders, students, and educators. The research questions (RQs) for this study are as follows:

RQ1: How can LLMs foster critical thinking, collaborative skills, and problem-solving in higher education?

RQ2: How can LLMs reduce the gap between theoretical curricula and real-world skills?

RQ3: How can LLMs deliver personalized and practical feedback to students in large courses?

RQ4: How can LLMs support the development of assessments?

This document is organized as follows. In Section 2, we present the main concepts related to this study. In Section 3, we describe previous works. In Section 4, we explain the methodology employed in this systematic literature review. In Section 5, we present the main findings of this study by answering the research questions. In Section 6, we discuss the findings. In Section 7, we present the conclusions.

2. Background

2.1. Developing Skills in Higher Education

People can acquire skills such as creativity, critical thinking, collaboration, and communication through formal studies, professional experience, or everyday life []. However, higher education has the responsibility to help students develop these skills for personal growth, effective job performance, and progress toward their career goals [,]. This goal can be achieved when the Institutional Educational Project places the student at the center and provides the right conditions for learning and skill development []. These conditions include adequate infrastructure, appropriate equipment, and manageable class sizes. In addition, changing the pedagogical model is essential, not only through institutional regulations but also through teachers’ active adoption of innovative teaching methods [,].

Despite these efforts, higher education systems worldwide still face a persistent skills gap [,,]. Employers increasingly report that graduates possess formal qualifications but often lack the essential soft skills, such as teamwork, communication, and problem-solving, necessary to perform effectively in real-world settings []. This disconnect reflects a deeper structural issue. While universities focus on technical and cognitive skills, they often overlook interpersonal and social abilities that drive collaboration, adaptability, and innovation []. In the current information technology (IT) era, organizations require professionals who can work in teams, communicate clearly, and respond creatively to complex challenges [].

The soft skills gap limits employability and weakens the link between higher education and labor market needs []. While technical skills remain essential, today’s global economy increasingly rewards graduates who combine disciplinary expertise with interpersonal and reflective capabilities. Therefore, higher education systems must transform their structures and teaching methods to close this gap. Universities need to develop curricula that strike a balance between technical knowledge and soft skills, as well as critical thinking. This shift requires transitioning from traditional, memorization-focused teaching to student-centered learning, where problem-solving, creativity, and collaboration are central to the educational process.

2.2. The Gap of Critical Thinking, Problem-Solving, and Teamwork Skills in Higher Education

There is a persistent gap between the skills students acquire in higher education and those required by today’s competitive and rapidly changing workforce []. Skills, such as critical thinking, problem-solving, and teamwork, refer to competencies that are not tied to a specific technical task but are essential for collaboration and effective performance in any professional context [,,]. These abilities complement technical expertise and determine how well individuals interact, solve problems, and lead within organizations.

Despite their recognized importance for employability, leadership, and career advancement, higher education systems still fail to develop these skills effectively. Traditional curricula emphasize theoretical knowledge and discipline-specific content, often neglecting experiential learning and teamwork-based assessment. As a result, graduates may excel academically but lack the interpersonal and reflective abilities required in modern workplaces [].

The integration of active teaching methods, such as simulations, team-based projects, and technology-assisted learning, encourages critical thinking, problem-solving, and teamwork skills []. However, their adoption is uneven across institutions. This systemic issue underscores the urgent need for higher education to redesign its teaching and assessment practices to prioritize these skills as fundamental outcomes rather than secondary goals.

2.3. Critical Thinking, Problem-Solving, and Teamwork as Key Skills for Life

Critical thinking is one of the most important skills for learning throughout life and adapting to change. In today’s job market, it helps people find employment, generate new ideas, and contribute to society []. Employers value workers who can analyze problems, make informed decisions, and handle uncertain situations, not just those with technical knowledge. Moreover, critical thinking involves making inferences, evaluating information, and drawing logical conclusions []. These skills enable professionals to understand situations and act effectively in the workplace. However, many students and workers still need to strengthen their flexibility and perseverance, which are crucial for overcoming challenges and taking responsibility for their choices. Additionally, critical thinking fosters innovation and economic growth []. It helps professionals anticipate future needs, discover innovative solutions, and make informed decisions. Therefore, employers now expect graduates to combine knowledge with thinking, communication, and teamwork skills. These abilities are essential for securing desirable jobs, engaging in innovative problem-solving, and actively participating in community life.

Consequently, critical thinking involves the ability to understand concepts, reason logically, apply strategies, analyze situations, make informed decisions, and combine ideas to solve problems effectively []. It serves as the foundation for effective problem-solving, enabling individuals to evaluate information, make informed judgments, and consider potential solutions before taking action.

Problem-solving, in turn, requires high-level thinking. It involves analyzing a situation, evaluating different options, and reflecting on the results to make the best decision []. When students face tasks or challenges without clear solutions, they use problem-solving to explore, test, and find appropriate ways to achieve their goals. University students therefore need to develop strong critical thinking and problem-solving skills. Employers now expect graduates who can deal with uncertainty, handle complex situations, and make informed decisions []. However, many employers report that graduates still lack these abilities. These competencies are essential not only for employability but also for innovation and civic life. Individuals who can think critically and solve problems effectively are better prepared to adapt to change, contribute to social progress, and support sustainable economic growth [].

At the same time, teamwork complements and strengthens these abilities. Effective problem-solving often requires collaboration, where individuals share diverse perspectives, challenge ideas, and build on each other’s reasoning []. Critical thinking becomes more meaningful when practiced in a team setting, as members must evaluate evidence, negotiate viewpoints, and make collective decisions. Teamwork also fosters creativity and innovation by combining different areas of expertise and ways of thinking []. When students work together, they learn to communicate clearly, coordinate efforts, and respect different opinions.

These experiences prepare students to participate effectively in professional environments, where cooperation and collective intelligence are key to success []. Moreover, teamwork encourages social responsibility and civic engagement. Through collaboration, learners develop empathy, tolerance, and the ability to work toward common goals, skills that strengthen not only workplaces but also communities. In this way, teamwork, critical thinking, and problem-solving form a connected set of competencies that higher education must cultivate to prepare graduates for the demands of a complex and changing world.

2.4. Persistent Challenges in the Higher Education Systems

One of the most persistent challenges in higher education worldwide is the prevalence of large classes [,,]. Although no specific number defines a large class, and the threshold varies by higher education system, a large class typically exceeds 40 students. These are usually undergraduate foundational courses designed to prepare students conceptually and practically for advanced academic work.

However, large classes pose not only a numerical challenge but also a deeper issue of ensuring equality and quality in learning opportunities for all students []. Teaching in such settings can be demanding, even for experienced faculty members. Teachers frequently encounter challenges in maintaining student engagement, offering personalized support, and providing timely feedback []. For new faculty members, the experience can be especially overwhelming.

Another persistent challenge in higher education is the lack of or delay in feedback to students [,,,]. Feedback is vital for guiding learning, reinforcing progress, and maintaining motivation. When students do not receive timely responses about their performance, their engagement and persistence tend to decline []. Studies show that groups that received delayed or no feedback achieved only moderate success, despite putting in similar effort. This indicates that the absence of immediate feedback can significantly reduce students’ motivation to complete tasks or improve performance [,].

In contrast, students who received efficient and effective feedback showed notable gains in achievement, effort, and persistence. Effective feedback helps students identify errors, reflect on their learning process, and make necessary adjustments. At the systemic level, the challenge lies in providing effective feedback in large, diverse classrooms, where instructors often face heavy workloads. This issue highlights the need for innovative teaching tools and intelligent tutoring systems that deliver real-time, personalized feedback to students.

2.5. Technology in Supporting the Teaching and Learning Process

Technology-mediated learning has proven to be a practical approach to supporting education when used thoughtfully []. Researchers have found that technology tools such as online learning platforms and digital feedback systems help students improve their understanding and performance []. One key reason for this success is that learners can receive immediate feedback and access learning materials at their own pace [,]. Instead of relying only on classroom lectures, students can review materials multiple times, attempt practice questions, and reflect on their mistakes [].

Another important advantage is that digital tools help teachers better understand how students are learning []. For example, teachers can identify which topics are more difficult and provide additional explanations or adjust lessons accordingly. This kind of support makes learning more flexible, personalized, and student-centered [].

However, the effectiveness of technology depends on how it is used. Studies show that technology works best when it supports good teaching practices, rather than replaces them. When teachers guide students in using digital tools and design meaningful learning activities, students become more motivated and take responsibility for their own learning [].

Despite these benefits, traditional educational technologies still face several limitations []. In response, tools such as AI and LLMs can provide real-time feedback, personalize learning experiences, and offer deeper insights into students’ progress []. These tools have the potential to move higher education beyond the limits of traditional technology and make teaching and learning more responsive, inclusive, and effective.

2.6. Artificial Intelligence in Higher Education

AI refers to computer systems capable of emulating human intelligence, such as reasoning, problem-solving, and language processing []. The use of AI in higher education is being studied, assessed, and formalized in many institutions worldwide [,,]. AI can create personalized learning experiences [], support teaching activities [], or assist the learning process by correcting errors and providing immediate feedback to students []. These capabilities enable students to learn more effectively, allowing teachers to focus on higher-value tasks, such as mentoring and analysis.

Beyond the classroom, AI can improve university decision-making by analyzing large datasets to identify patterns []. Due to these capabilities, many people around the world believe this disruptive technology can help close gaps in higher education. Therefore, expectations about the benefits of AI in higher education are high.

Furthermore, AI can reduce the time required for routine tasks, enabling faculty to focus more on teaching, research, and innovative problem-solving [,,]. As data volumes grow, AI provides a practical way to turn information into actionable insights. As a result, AI presents higher education systems with an opportunity to modernize decision-making, enhance teaching quality, and improve institutional efficiency. Its successful adoption depends not only on the technology itself but also on the institution’s preparedness to adopt it responsibly and strategically.

2.6.1. Generative AI

Generative AI (GenAI) is a branch of AI that creates new content, such as text, images, music, or video, by identifying and reproducing patterns from large datasets []. In higher education, GenAI offers several advantages [,]. For example, GenAI can support teachers in preparing lecture materials or exercises, thereby reducing the time spent on repetitive tasks. Additionally, GenAI can provide personalized explanations and feedback to students inside and outside the classroom []. GenAI thus combines efficiency with adaptability, complementing both traditional teaching and student-centered learning experiences.

2.6.2. LLMs

Within GenAI, LLMs are the most accessible form of AI technology. LLMs are deep learning systems, often containing billions of parameters, trained on enormous collections of text []. The strength of LLMs lies in their ability to understand and generate natural language in a coherent and context-aware way, enabling them to perform tasks such as summarization, translation, text completion, and question answering at previously unimaginable levels [].

LLMs are valuable in higher education because they simplify complex tasks such as running simulations, debugging code, and testing ideas. This helps students save time, gain confidence, and approach problem-solving creatively. In [], LLMs supported psychology students as thinking partners, helping them strengthen critical thinking and reflect more deeply. Moreover, LLMs guided math students to explore problems more independently, encouraging active participation []. Finally, LLMs served as tutors, providing feedback and explanations in simple language, thereby promoting student confidence [].

2.7. Role of Assessment

Assessment plays a central role in the educational system because it provides evidence of the knowledge and skills students have acquired, helping them succeed academically, professionally, and personally. Beyond evaluating outcomes, assessments should align with learning goals [], incorporate formative and summative elements [], and prioritize real-world problems reflected in authentic tasks that foster critical thinking, creativity, and teamwork []. When aligned with learning outcomes, assessments remain purposeful and help students prepare for the demands of the workplace.

Formative assessment enhances learning by providing feedback to teachers and students, enabling them to modify their teaching and learning activities accordingly []. This process encourages students to reflect on their reasoning, identify gaps, and engage in peer feedback, thereby enhancing their skills in analysis, self-criticism, and collaborative problem-solving. In contrast, summative assessment aims to summarize what students know, understand, and can do. When summative assessments focus only on memorization, they restrict learning; when designed around performance, application, and reflection, they support the development of higher-order thinking skills [].

In modern societies characterized by constant technological and economic change, assessments must foster creativity, collaboration, and problem-solving skills; otherwise, they will fail to fulfill their educational mission [,]. Institutions must thus design assessment strategies that foster a belief in every student’s potential to succeed, promoting resilience, confidence, and intellectual curiosity. In this way, assessment becomes not only a measure of achievement but also a mechanism for empowerment, cultivating citizens who are capable of critical thinking, ethical decision-making, and innovative contributions to society.

3. Related Works

In [], the authors examined the use of LLMs in higher education. The results reported that LLMs improved independent learning by 18%, collaborative learning by 19%, and interactive learning by 15%. Students were able to practice critical and creative thinking, work on problem-solving, and take more control of their studies. At the same time, teachers moved away from just giving lectures and instead acted more like guides and mentors. Still, the authors pointed out concerns such as ethics, privacy, accuracy, and unequal access to technology. They concluded that LLMs can improve education in meaningful ways, but only if schools handle these risks with strong rules and supervision.

In [], the authors investigated the application of Conversational Artificial Intelligence (CAI) in the field of software engineering. The authors found that CAI can act as a tutor or assistant, providing technical guidance and feedback to students. Consequently, CAI reinforces concepts and gives beginners greater confidence. The authors concluded that CAI can improve learning and practice; however, further research and refinement of methods are still required.

In [], the authors reviewed 41 studies about ChatGPT (GPT-3 and GPT-4) in education. They found that most uses were in higher education, primarily in the health sciences, STEM fields, and language learning. ChatGPT helped students reason, solve problems, prepare for clinical work, and improve language skills. It also encouraged feedback and independent thinking. Still, the authors noted issues such as plagiarism, unequal access, and lack of teacher preparation. They stressed that benefits depend on the context and on teacher guidance.

In [], the authors conducted a systematic literature review to examine the role of LLMs in higher education, identifying positive and negative results. On the positive side, the authors found that LLMs can assist students with content generation and provide instant feedback, thereby enhancing engagement, autonomy, and learning efficiency. On the negative side, the authors identified several critical concerns associated with LLMs, including the reliability and accuracy of generated outputs, risks of plagiarism, over-reliance on automated systems, and the need to safeguard academic integrity, which can impact students’ correct learning. The authors suggest that the effectiveness of LLMs depends largely on institutional policies and the development of digital literacy among students and teachers.

In [], the authors investigated the use of LLMs in higher education. The authors found that LLMs enhance academic and digital skills through activities such as writing, programming, and problem-solving. The study also noted that LLMs increase student motivation and engagement, offering real-time feedback and new ways to practice independent and active learning. However, the authors identified several challenges, including plagiarism, privacy issues, and the risk of students becoming overly dependent on technology. The authors noted that the impact of LLMs depends on how they are integrated into the classroom, the policies that govern their use, and the preparation of both students and teachers.

In [], the authors studied the use of LLMs in higher education, finding the following benefits: (a) instant feedback, which lets students identify their mistakes faster; (b) personalized guidance, which gives students guidelines for completing a particular activity; and (c) soft-skill development, which helps students build or strengthen skills such as critical thinking, creativity, and problem-solving. Furthermore, the authors found that LLMs can promote the independent development of each student. However, the authors also identified challenges, including plagiarism, over-reliance on technology, and ethical issues related to privacy and data use.

4. Methodology

The systematic literature review was conducted in accordance with the PRISMA 2020 guidelines [,] to select studies for inclusion. A completed PRISMA checklist is provided as Supplementary Material to ensure transparency and reproducibility. A systematic review registration statement was created in PROSPERO (2025 CRD420251165731).

4.1. Research Questions

We conducted this literature review by addressing the following research questions (RQs).

- RQ1: How can LLMs foster critical thinking, collaborative skills, and problem-solving in higher education?

The purpose of this question is to examine whether LLMs can foster active learning. Additionally, this question examines how strengthening critical skills at scale may impact broader education systems and help prepare a workforce capable of innovation and adaptability.

- RQ2: How can LLMs reduce the gap between theoretical curricula and real-world skills?

This question explores how LLMs can bridge the gap between theory and practice by creating real-world experiences and providing students with more opportunities to apply their knowledge in practical settings. In addition, the answer analyzes how such alignment between education and workplace needs can impact workforce readiness, economic competitiveness, and national innovation capacity.

- RQ3: How can LLMs deliver personalized and practical feedback to students in large courses?

This question investigates the potential of LLMs as scalable tools for individualized learning support. At a systemic level, this involves examining how scalable feedback mechanisms can reshape educational equity, reduce disparities in large enrollment settings, and enhance the efficiency of higher education systems.

- RQ4: How can LLMs support the development of assessments?

This question analyzes the potential of LLMs to modernize assessment practices, shifting from rote memorization to authentic evaluations that capture higher-order competencies. Furthermore, it addresses how to rethink assessment culture.

4.2. Search Strings

The sole source for the search process was the Web of Science Core Collection. The decision to rely on Web of Science alone was based on its consistent metadata, reliability, and international acceptance.

Then, selecting keywords was a crucial step in the process. We selected only three keywords extracted from previous studies that mark the core of this literature review. The keywords are: LLM, Large Language Model, and Higher Education. From the keywords, we created two search strings: (A) “LLM” and “Higher Education”, and (B) “Large Language Model” and “Higher Education”.

Each search string includes the main component of this review, which refers to the technology being evaluated. In this case, we adopted the more general name of the technology, rather than specific solutions like ChatGPT, to maintain consistency in the information. The other component limited the area, level, or scenario where the study was carried out. In this case, we used the educational level instead of specific programs, such as chemistry, to include different perspectives, experiences, and results.

The initial dataset consisted of 203 documents, with 115 identified through search string A and 88 through search string B. Duplicate documents were then removed, leaving 165 records.
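The merge-and-deduplicate step can be sketched as follows. This is a minimal illustration under stated assumptions, not the procedure actually used in this review: the record fields (`doi`, `title`), the helper names, the sample records, and the matching rule (DOI when present, otherwise a normalized title) are all hypothetical.

```python
def normalize_title(title: str) -> str:
    """Lowercase a title and keep only letters and digits for loose matching."""
    return "".join(ch for ch in title.lower() if ch.isalnum())


def deduplicate(records: list[dict]) -> list[dict]:
    """Keep the first occurrence of each record, keyed by DOI or normalized title."""
    seen: set[str] = set()
    unique: list[dict] = []
    for record in records:
        doi = (record.get("doi") or "").strip().lower()
        # Assumption: a DOI identifies a record; fall back to the title otherwise.
        key = doi if doi else normalize_title(record["title"])
        if key not in seen:
            seen.add(key)
            unique.append(record)
    return unique


# Hypothetical sample records standing in for the exports of strings A and B.
results_a = [
    {"doi": "10.0000/example.1", "title": "LLMs in Higher Education"},
    {"doi": "", "title": "Feedback at Scale with LLMs"},
]
results_b = [
    {"doi": "10.0000/EXAMPLE.1", "title": "LLMs in higher education"},
    {"doi": "", "title": "Feedback at scale, with LLMs"},
    {"doi": "10.0000/example.2", "title": "Assessment and Large Language Models"},
]

merged = deduplicate(results_a + results_b)
print(len(merged))
```

Keying on a normalized title as a fallback tolerates differences in case and punctuation between exports, at the cost of possibly merging distinct papers that share a title.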

4.3. Inclusion and Exclusion Criteria

In this subsection, we explain the inclusion and exclusion criteria used to select the articles most relevant to the primary purpose of this literature review. The initial number of records in this stage was 165.

The inclusion criteria (IC) are:

- IC1: Publication Year: The final publication date is no later than December 2024.

- IC2: Publication Range: The document was published between January 2023 and December 2024.

- IC3: Document Type: The document is classified as a peer-reviewed journal article.

- IC4: Language: The document is written in English.

The exclusion criteria (EC) are:

- EC1: Duplication: The document is a duplicate of another entry.

- EC2: Document Type: The document is categorized as a preview, book chapter, or conference proceeding.

- EC3: Language: The document is written in a language other than English.

- EC4: Publication Status: The document is in early access.

After applying the inclusion and exclusion filters, 117 documents were excluded, leaving 48 papers for retrieval.
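The criteria above can be expressed as a single filter over record metadata. The sketch below is illustrative only: the `Record` fields, the category labels, and the sample records are hypothetical, and it assumes that publication dates are compared at year granularity. EC1 (duplication) is assumed to have been handled before this step.

```python
from dataclasses import dataclass


@dataclass
class Record:
    year: int
    doc_type: str       # hypothetical labels, e.g. "article", "book chapter"
    language: str
    early_access: bool


def passes_filters(record: Record) -> bool:
    """Return True when a record meets IC1-IC4 and triggers none of EC2-EC4."""
    in_range = 2023 <= record.year <= 2024        # IC1 and IC2
    is_article = record.doc_type == "article"     # IC3, which also covers EC2
    is_english = record.language == "English"     # IC4, which also covers EC3
    not_early = not record.early_access           # EC4
    return in_range and is_article and is_english and not_early


# Hypothetical sample records; only the first satisfies every criterion.
records = [
    Record(2023, "article", "English", False),
    Record(2022, "article", "English", False),
    Record(2024, "proceedings paper", "English", False),
    Record(2024, "article", "Spanish", False),
    Record(2024, "article", "English", True),
]

retained = [r for r in records if passes_filters(r)]
print(len(retained))
```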

4.4. Study Selection

After downloading the filtered results of the initial search, we reviewed 48 documents to assess their eligibility for inclusion in this review. The screening process followed a structured and transparent approach based on five inclusion criteria (C1–C5). Each criterion was designed to ensure that each selected study provided meaningful evidence to address the four research questions (RQ1–RQ4) regarding the educational use and systemic implications of LLMs in higher education.

- C1: Explicit teaching-learning focus. Papers that focused exclusively on administrative, policy, or technological aspects without a clear pedagogical dimension were excluded.

- C2: Direct use of LLMs with students. Studies were selected only if students directly used LLMs during learning activities, tasks, or assessments.

- C3: Application field is pedagogy/education or subject-specific learning. To ensure disciplinary diversity while maintaining educational relevance, studies were required to focus either on general pedagogy or on subject-specific applications in higher education.

- C4: Practical or empirical use that enhances learning outcomes. Only studies presenting practical implementations, experiments, or empirical analyses were included.

- C5: Provides data, case studies, experiments, or structured evaluation. To ensure methodological rigor, studies had to present structured evidence such as data analysis, experiments, or detailed case studies.

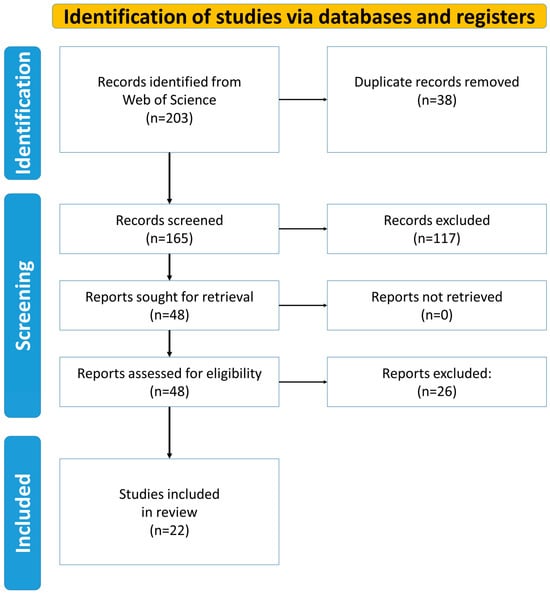

In Appendix A, we present the 48 documents assessed for eligibility in this review. Using these criteria, each document was carefully reviewed, and only those that met the conditions were retained for analysis. The final selection comprised 22 papers: 10 identified with search string A and 12 with search string B. Figure 1 shows the study selection process, based on the PRISMA flow diagram.

Figure 1.

PRISMA flow diagram of the document selection process.
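
The counts reported for each stage can be cross-checked with simple arithmetic. The sketch below uses only figures stated in the text; the number of duplicates removed and the number of papers excluded at the eligibility stage are derived here and are not reported explicitly.

```python
# Counts stated in the text for each stage of the selection process.
identified = {"A": 115, "B": 88}     # records per search string
after_dedup = 165                    # records after duplicate removal
excluded_by_filters = 117            # removed by inclusion/exclusion criteria
included = {"A": 10, "B": 12}        # papers in the final selection

total_identified = sum(identified.values())
duplicates_removed = total_identified - after_dedup      # derived, not stated
assessed = after_dedup - excluded_by_filters
total_included = sum(included.values())
excluded_at_eligibility = assessed - total_included      # derived, not stated

print(f"identified:              {total_identified}")
print(f"duplicates removed:      {duplicates_removed}")
print(f"assessed for eligibility: {assessed}")
print(f"excluded at eligibility: {excluded_at_eligibility}")
print(f"included:                {total_included}")
```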

5. Findings

In this section, we present the results of this study. Appendix B shows the relevance of each document in relation to the four research questions (RQ1–RQ4). This organization helps to identify which sources offer the most comprehensive insights for the study and which provide more targeted perspectives.

5.1. Geographic Distribution of Documents Assessed for Eligibility

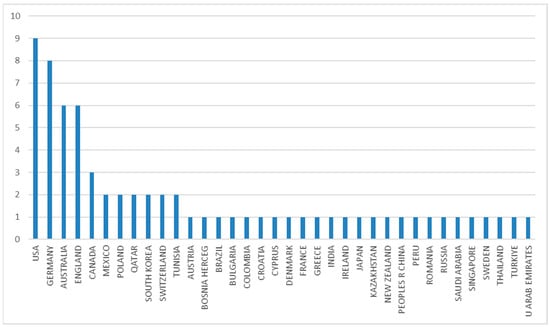

The 48 documents assessed for eligibility represented a wide range of countries, including contributions from 35 countries across North America, Europe, Asia, and Oceania. The United States led with the highest number of publications (n = 9), reflecting its strong research involvement in applying LLMs in higher education. Germany (n = 8), Australia (n = 6), and England (n = 6) followed as other significant contributors; together with the United States, they accounted for over half of all eligible studies. A second group of countries, including Canada (n = 3), Mexico (n = 2), Poland (n = 2), Qatar (n = 2), South Korea (n = 2), Switzerland (n = 2), and Tunisia (n = 2), showed moderate participation, indicating a growing global interest in LLM-related educational research. The remaining countries, spread across regions such as Latin America, Asia, the Middle East, and Europe, each contributed one study. Figure 2 displays the distribution of the 48 documents by country.

Figure 2.

Distribution of studies by country.

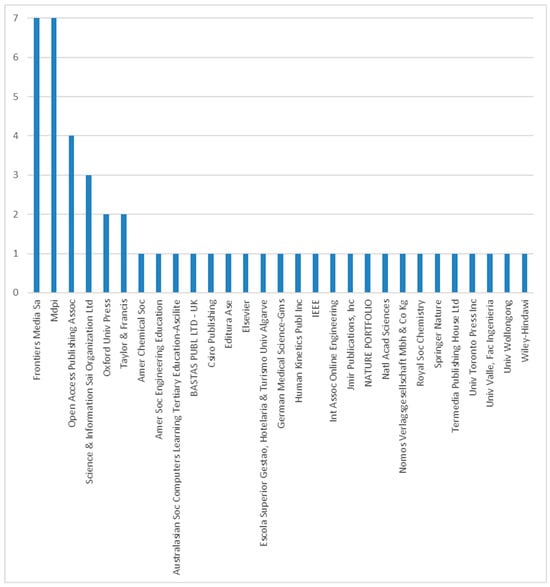

5.2. Publisher Distribution of Documents Assessed for Eligibility

The analysis showed that research on LLMs in higher education is mainly concentrated among a few leading publishers, especially those promoting open-access sharing. Frontiers Media SA and MDPI each published seven of the analyzed documents, together accounting for nearly one-third of the total output. This underscores the strong presence of interdisciplinary and open-access platforms in advancing discussions on AI applications in education. The Open Access Publishing Association ranked third with four publications, followed by Science & Information SAI Organization Ltd. with three, and Oxford University Press with two. Together, these five publishers accounted for nearly half of the reviewed documents (23 of 48), indicating that the topic is becoming increasingly prominent in journals focused on educational technology, innovation, and digital transformation. Figure 3 shows the distribution of the 48 documents by publisher.

Figure 3.

Distribution of studies by publisher.
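
As a quick check, the publisher shares can be recomputed from the counts named in the paragraph above. The sketch uses only those five counts and the total of 48 documents; the helper name `share` is illustrative.

```python
# Publisher counts as stated in the text (48 documents assessed in total).
publisher_counts = {
    "Frontiers Media SA": 7,
    "MDPI": 7,
    "Open Access Publishing Association": 4,
    "Science & Information SAI Organization Ltd.": 3,
    "Oxford University Press": 2,
}
TOTAL_DOCUMENTS = 48


def share(n: int, total: int = TOTAL_DOCUMENTS) -> float:
    """Percentage of the total, rounded to one decimal place."""
    return round(100 * n / total, 1)


top_two = share(publisher_counts["Frontiers Media SA"] + publisher_counts["MDPI"])
top_five = share(sum(publisher_counts.values()))

print(f"Frontiers + MDPI: {top_two}%")
print(f"Five named publishers: {top_five}%")
```

With these counts, the two largest publishers account for 14 of 48 documents and the five named publishers for 23 of 48.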

5.3. Descriptive Analysis of Relevant Articles

Table 1 shows information about the 22 articles selected for this study. The articles are distributed across numerous journals, including Frontiers in Education and Frontiers in Artificial Intelligence, with two articles each, together representing 18.2% of the total. Two articles were also published in the journal Sustainability, demonstrating the growing interest in the ethical and sustainable use of LLMs in higher education.

Table 1.

22 documents included in this review.

When examining publication years, most articles are recent, reflecting the novelty and rapidly growing attention to LLMs. Out of the 22 articles, nine were published in 2023, including works in the Journal of Chemical Education, Australasian Journal of Educational Technology, Tourism & Management Studies, International Journal of Sports Physiology and Performance, International Journal of Engineering Pedagogy, Trends in Higher Education, Journal of University Teaching and Learning Practice, and Biology of Sport.

The majority, however, were published in 2024 (n = 13), showing a rapid increase in academic production on the subject. These include studies in Contemporary Educational Technology, Frontiers in Education, Frontiers in Artificial Intelligence, JMIR Medical Education, Sustainability, Proceedings of the National Academy of Sciences (PNAS), NPJ Digital Medicine, Studies in Higher Education, International Journal of Advanced Computer Science and Applications, and Human Behavior and Emerging Technologies.

This distribution of publications shows two significant trends: (1) the growth in the number of articles coincides with the global adoption of ChatGPT and similar tools; and (2) output rose further in 2024, suggesting that LLMs are now a central research topic in education, computer science, health sciences, and social sciences.

5.3.1. Publishers and Research Categories

We analyzed the 22 documents included in this review by examining their distribution across publishing houses and research areas (see Table 2). The analysis of publishing houses reveals that a significant proportion of the studies were published in open-access journals, particularly Frontiers Media SA (n = 4), MDPI (n = 3), and the Open Access Publishing Association (n = 2). This trend reflects the growing tendency to disseminate research on LLMs through outlets that prioritize accessibility and broad readership.

Table 2.

A list of publishers and research areas of each document included in the study.

When examining research areas, we found that Education and Educational Research accounted for the largest share of documents (n = 10), indicating a strong academic interest in the role of LLMs within higher education. The second most frequent area is Computer Science (n = 4), illustrating a technical focus on developing, implementing, and assessing the advantages and challenges of LLMs.

These findings indicate that, although pedagogical implications take precedence in the research agenda, technical perspectives also influence the scholarly discussion on LLMs. Overall, the distribution of publishers and research areas shows that research on LLMs and higher education is not confined to a single disciplinary perspective or publishing tradition. Instead, it spans interdisciplinary, high-impact, and open-access outlets, emphasizing both the global importance of the topic and its relevance across fields like education, computer science, health sciences, and social sciences.

5.3.2. Keywords Co-Occurrence Analysis

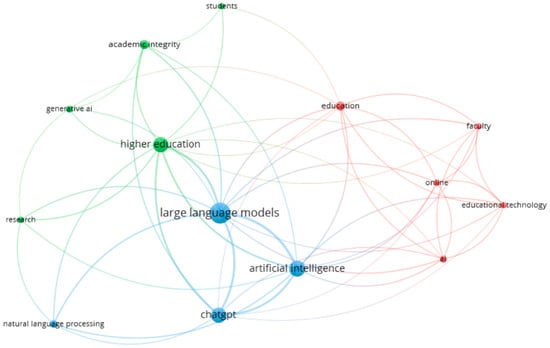

We performed a keyword co-occurrence analysis using VOSviewer (v1.6.19) [] to examine research trends on the use of LLMs in higher education. This method enabled us to visualize how frequently specific keywords co-occur across the corpus and to group them into thematic clusters []. This approach offered a high-level view of the field’s conceptual structure. Figure 4 depicts the following clusters.

Figure 4.

Network visualization of keyword co-occurrence analysis using VOSviewer. Note: (a) Red indicates themes related to AI integration in educational settings, (b) Green represents topics concerning academic integrity and student-centered issues, and (c) Blue reflects technical concepts related to LLMs.

The first cluster highlights how AI is being integrated into higher education learning environments. The cluster includes terms such as AI, education, educational technology, faculty, and online. The focus is often on the changing role of faculty and how educational technology can be integrated into classroom practices to enhance accessibility, scalability, and student engagement.

The second cluster was defined by keywords such as academic integrity, generative AI, higher education, research, and students. This group addresses the debates surrounding the impact of LLMs on student learning, assessment, and research ethics. Articles in this cluster frequently explore questions of plagiarism, trust, and responsible use, as well as the challenges institutions face in maintaining academic integrity while adopting generative AI in coursework and research activities.

The final cluster centered on terms like artificial intelligence, ChatGPT, large language models, and natural language processing. Its focus lies in understanding the capabilities and limitations of LLMs in educational and professional contexts.

Although some generic terms with high occurrence values (e.g., AI, education) naturally appeared, they served as bridges between clusters rather than stand-alone themes. This co-occurrence reveals that the impact of LLM research in higher education focuses on the following areas: pedagogy, technology integration, student perceptions, professional applications, and academic integrity. These topics reinforce the connection between the use of LLMs and the development of key skills for the personal and professional growth of university students.
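
The counting step that underlies a keyword co-occurrence map can be sketched in a few lines. This is not VOSviewer's implementation, which also normalizes link strengths and runs its own clustering; the function name `cooccurrence` and the three keyword lists are invented for illustration and do not come from the reviewed corpus.

```python
from collections import Counter
from itertools import combinations


def cooccurrence(keyword_lists):
    """Count how many documents contain each unordered pair of keywords."""
    pairs = Counter()
    for keywords in keyword_lists:
        # Normalize case and drop repeats so each pair counts once per document.
        unique = sorted({k.strip().lower() for k in keywords})
        pairs.update(combinations(unique, 2))
    return pairs


# Invented author-keyword lists for three hypothetical documents.
docs = [
    ["ChatGPT", "higher education", "academic integrity"],
    ["ChatGPT", "large language models", "higher education"],
    ["academic integrity", "generative AI", "higher education"],
]

counts = cooccurrence(docs)
for (first, second), n in counts.most_common():
    print(f"{first} / {second}: {n}")
```

Pairs that occur together in many documents form the strong links in the network, while a term such as "higher education" that pairs with keywords from several groups behaves like the bridging terms described above.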

5.4. RQ1: How Can LLMs Foster Critical Thinking, Collaborative Skills, and Problem-Solving in Higher Education?

The studies examined demonstrate that LLMs can actively assist in developing these skills through repeated interaction, reflection, and collaboration. One key educational benefit identified is that LLMs often produce incomplete or incorrect responses, prompting students to question, verify, and improve the information provided [S9, S11]. This process fosters a cycle of validation and correction, enhancing critical thinking and mental independence [S19, S21]. Students learn to evaluate the accuracy, consistency, and trustworthiness of content generated by LLMs [S16, S21], while effectively framing prompts improves their understanding and analytical skills [S5, S8]. Through these cycles, students engage in comparison, synthesis, and assessment—core elements of critical thinking [S11, S12, S22].

LLMs also act as catalysts for collaboration by aiding in idea generation, organization, and peer feedback. They can simulate evaluative processes such as rubric-based assessments or expert reviews, providing students with structured input that accelerates iterative improvement [S13, S14, S22]. Additionally, by democratizing access to knowledge and reducing participation barriers, LLMs promote more equitable opportunities for collaborative learning, especially in diverse and large-class settings [S1, S17].

In terms of problem-solving, LLMs help students explore alternative solutions, incorporate interdisciplinary perspectives, and simulate authentic scenarios that mirror real-world dilemmas [S3, S14, S15]. LLMs enable students to emulate technical or social reasoning within safe environments and approach complex problems from multiple perspectives [S3, S14, S15, S19]. Furthermore, LLMs can imitate roles of peers or experts from various fields, enriching teamwork and boosting cognitive activities [S13, S22].

From a systemic standpoint, integrating LLMs into higher education can address longstanding weaknesses in traditional systems. By embedding LLMs into curricula and assessment activities, institutions can foster deeper learning that emphasizes reasoning over memorization. This transformation not only enhances graduate employability but also supports institutional adaptability and national competitiveness. Ultimately, fostering critical thinking, collaboration, and problem-solving through LLMs should be viewed as part of a broader educational reform effort—one that aligns pedagogical innovation with systemic change, preparing students to succeed professionally and contribute meaningfully to civic and economic development.

5.5. RQ2: How Can LLMs Reduce the Gap Between Theoretical Curricula and Real-World Skills?

The evidence reviewed shows that LLMs can significantly bridge the gap between theory and practice by simulating real-world situations, supporting hands-on learning, and encouraging autonomous skill development. One of their strongest features is the ability to create simulated scenarios, cases, and problem contexts that are hard to reproduce in traditional classrooms [S2, S7]. These simulations enable students to apply theoretical concepts safely and effectively in various situations [S10]. For example, in health sciences, learners can develop and assess innovation projects that include ethical dilemmas or professional standards used by actual review committees [S3, S14, S15].

In STEM programs, LLMs assist students in testing, debugging, and improving technical setups or prototypes during project-based or inquiry-driven activities [S3, S18, S21]. When mistakes happen, the models can provide alternative solutions instantly, allowing continuous iteration and refinement [S1, S4, S22]. This quick feedback accelerates learning cycles and enhances problem-solving efficiency. LLMs also strengthen written communication and reasoning skills, as students must craft clear prompts and articulate precise arguments to obtain coherent, relevant responses [S18, S19, S22].

Another important finding involves promoting self-management and independent learning, skills that are increasingly valued in today’s workplace. By allowing students to access materials anytime and anywhere through connected devices, LLMs support time management, responsibility, and independence.

At the institutional and systemic levels, LLMs represent a disruptive innovation capable of transforming higher education’s alignment with workforce demands. They enable a curricular shift from theory-focused instruction to experiential, skills-oriented learning that reflects professional realities [S6, S16]. Faculty and administrators are increasingly incorporating LLMs into interdisciplinary projects that address real-world challenges, thereby promoting collaboration across disciplines [S13, S14]. Additionally, LLMs are changing assessment methods by moving beyond rote memorization to performance-based evaluations that measure applied knowledge and practical skills [S11, S20].

The findings suggest that as educational systems worldwide adapt to these new technologies, LLMs could play a vital role in closing the ongoing skills gap between academia and the labor market. However, their impact will mainly depend on how effectively faculty, managers, and policymakers adopt and incorporate these tools into teaching and institutional strategies. Successful implementation requires not only technological infrastructure but also organizational readiness, ethical guidance, and professional development to ensure that AI-enhanced curricula prepare graduates for a rapidly changing world [S19, S22].

5.6. RQ3: How Can LLMs Deliver Personalized and Practical Feedback to Students in Large Courses?

The studies analyzed in this review suggest that LLMs can substantially improve feedback practices by offering scalable, personalized, and interactive responses that are not bound by time or place. Evidence shows that LLMs can respond to student questions almost instantly, thus shortening the delay and reducing dependency on teachers’ availability [S1, S11, S17]. This efficiency enables students to clarify doubts in real time, minimizing frustration and fostering continuous engagement [S2, S10]. Unlike traditional systems, LLMs can be accessed from anywhere at any time, so students can take ownership of their learning by identifying errors, understanding underlying reasoning, and refining their approaches independently [S5, S6, S18].

LLMs also enhance self-regulated learning by enabling students to generate practice tests or self-assessment exercises, allowing them to monitor their progress and strengthen their metacognitive skills [S3, S11, S20]. In writing-intensive disciplines, LLMs provide iterative feedback on academic texts, suggesting improvements in structure, argumentation, and clarity [S19, S22]. These repeated interactions encourage students to refine prompts and reasoning strategies, promoting deeper analytical thinking and critical reflection [S3, S5].

From the faculty’s perspective, the reviewed studies emphasize that LLMs can significantly reduce teaching workload by automating feedback on routine tasks such as drafts, quizzes, or structured assignments. This enables teachers to focus more on complex, high-level tasks that need human expertise and disciplinary judgment [S17, S22]. Furthermore, LLMs can support instructors in course design by reviewing assessment items, suggesting improvements, and ensuring they align with learning objectives [S3, S19].

The findings also reveal that feedback supported by LLMs extends beyond one-way correction; it fosters collaboration and iterative learning. Feedback becomes a social process where students share ideas, critique one another’s outputs, and co-construct understanding through project- and problem-based learning activities []. LLMs can serve as active partners in this process, offering diverse perspectives that enrich group discussions and collective problem-solving [S1, S7, S13].

On a systemic level, LLMs’ ability to provide scalable, personalized feedback can help higher education institutions balance quality and capacity. As universities face increasing enrollment and more diverse student populations, incorporating LLMs into feedback processes enables tailored learning paths without compromising instructional fairness. The evidence shows that LLM-supported feedback enhances not only individual learning outcomes but also institutional efficiency, supporting education systems in delivering more adaptive, inclusive, and high-quality learning experiences across large student cohorts.

5.7. RQ4: How Can LLMs Support the Development of Assessments?

The studies reviewed show that LLMs can significantly influence how assessments are designed and implemented, emphasizing skills over mere memorization. Specifically, LLMs can help educators develop criteria and rubrics that align with disciplinary learning outcomes and professional standards [S16, S22]. Their generative abilities enable them to produce a wide variety of assessment items, including open-ended, multiple-choice, and reasoning questions tailored to specific course objectives and contexts [S1, S3, S4, S21].

Furthermore, evidence suggests that LLMs support scenario-based and problem-focused learning, shifting the emphasis in assessment from factual recall to complex cognitive tasks such as analysis, creativity, and critical thinking [A9, S8, S11, S13, S19]. By simulating authentic work-related or ethical situations, LLMs allow students to showcase applied skills and decision-making in realistic settings, particularly valuable in fields such as engineering, health care, and business [S3, S12, S14, S15].

These findings imply that LLMs can aid the move toward competency-based assessments by providing flexible, scalable tools that foster multidisciplinary collaboration, ethical reasoning, and reflection. Additionally, by offering immediate feedback and adaptive testing, LLMs can enhance formative assessment practices and support ongoing learning improvements.

On a broader scale, integrating LLMs into assessment design may shift higher education’s assessment culture from knowledge reproduction to applied understanding. When aligned with institutional and national education policies, these tools can help ensure that graduates possess 21st-century skills such as problem-solving, teamwork, and creativity [S11, S17, S21]. In this way, LLMs can boost workforce readiness, institutional innovation, and social resilience, highlighting the systemic role of higher education as a key driver of national competitiveness and societal advancement.

6. Discussion

Persistent challenges continue to affect higher education systems globally. Traditional teaching methods still prioritize memorization over active learning; many curricula remain disconnected from real-world needs; large classes limit individualized instruction; feedback is often delayed; and assessment methods focus more on recall than application. These systemic issues hinder the development of essential competencies such as critical thinking, teamwork, and problem-solving, which are vital for life, work, and national development. Findings from this review provide evidence that LLMs can help mitigate these persistent problems while informing educational reform at both institutional and policy levels.

First, LLMs can enhance critical thinking, teamwork, and creativity, competencies that are essential for employability and for fostering innovation. The reviewed studies show that interacting with LLMs, by verifying, refining, and challenging their responses, encourages students to question assumptions, validate information, and think more analytically. Moreover, when used collaboratively, LLMs enable students to negotiate meaning, exchange interdisciplinary perspectives, and generate novel solutions. These skills, when developed at scale, contribute directly to a nation’s capacity for innovation and competitiveness, linking classroom learning to broader economic and social progress.

Second, the evidence confirms that LLMs bridge the gap between theory and practice by allowing students to simulate real-world situations. In medicine, for instance, LLMs can create clinical or ethical scenarios that promote decision-making without risk to patients, while in STEM fields, they can help test and refine prototypes without costly infrastructure. Such applications suggest that educational policymakers and administrators could strategically use LLMs to redesign curricula, thereby ensuring stronger alignment between academic programs and labor-market needs.

Third, LLMs can address the persistent challenge of limited feedback in large classes. Studies indicate that LLMs can provide timely, personalized, and formative feedback, helping students identify mistakes, track progress, and stay motivated. This scalability has significant policy implications: institutions can adopt tutoring systems based on AI to promote equitable learning experiences, especially in resource-constrained contexts or high-enrollment programs.

Fourth, the findings highlight that LLMs encourage assessment reform. By supporting reflective, scenario-based, and challenge-oriented evaluations, LLMs shift focus from memory-based testing to competency-based assessment. Policymakers and faculty developers can leverage these capabilities to modernize evaluation frameworks and foster higher-order thinking and creativity.

Finally, LLMs represent more than instructional tools; they are potential catalysts for systemic improvement. When integrated into decision-making, curriculum design, and assessment policies, LLMs can help reshape higher education systems to prepare citizens for sustainable national development. This transformation can promote social equity, workforce readiness, and institutional innovation, contributing to a more adaptive and future-oriented education ecosystem.

7. Conclusions

This systematic literature review analyzed 22 studies indexed in the Web of Science Core Collection to explore the adoption of LLMs in higher education. Our goal was to understand how LLMs can support higher education systems in overcoming ongoing challenges that hinder the development of critical thinking, problem-solving, and teamwork among students. The evidence indicates that LLMs can help address these challenges in four ways.

First, LLMs help move beyond traditional teaching models centered on memorization by promoting active, hands-on learning through projects and simulation activities. Second, LLMs support the practical application of theoretical knowledge by simulating professional scenarios and ethical dilemmas, aligning academic curricula with real-world workplace situations. Third, the ability of LLMs to deliver scalable, timely, and personalized feedback enhances meaningful learning interactions, especially in large classes where individual attention is limited. Finally, LLMs enable dynamic assessments, leading to more comprehensive evaluations of student performance.

At the systemic level, these benefits underscore the importance of establishing institutional frameworks that promote ethical, equitable, and sustainable adoption of LLMs. University leaders and policymakers must create policies that incorporate AI literacy into curricula, prepare faculty for responsible use, and protect academic integrity.

Future research should gather stronger empirical evidence on how LLMs influence teaching and learning outcomes across disciplines and institutional contexts, and evaluate their broader impact on higher education systems and workforce readiness.

Limitations

Due to the nature of this literature review, our work has the following limitations: (a) the search was based on the Web of Science Core Collection without considering other databases; (b) the inclusion criteria covered only publications in scientific journals, without considering other types of documents; (c) the documents included in this review do not focus on the challenges posed by the adoption of LLMs in the teaching and learning process, such as ethical concerns or privacy; and (d) the literature review included documents from 2023 and 2024, which represent the first two years of the emergence of this technology.

Supplementary Materials

The following supporting information can be downloaded at: https://www.mdpi.com/article/10.3390/systems13111013/s1. The sample for the bibliometric and content analysis was selected in accordance with the principles recommended for Systematic Literature Reviews—PRISMA [].

Author Contributions

Conceptualization, R.M.-P., L.J.M. and V.G.F.; methodology, R.M.-P., V.G.F., V.F., A.G.P. and H.T.-C.; investigation, V.G.F., A.O.-B., R.O., J.C.R.P. and R.A.F.; resources, A.G.P., R.M.-P., L.J.M. and V.G.F.; writing—original draft preparation, R.M.-P., A.O.-B., R.O., J.C.R.P. and R.A.F.; writing—review and editing, V.G.F., V.F., H.T.-C. and L.J.M.; project administration, R.M.-P., V.G.F. and L.J.M.; funding acquisition, R.M.-P., V.F. and H.T.-C. All authors have read and agreed to the published version of the manuscript.

Funding

This research was partially funded by the UNIVERSIDAD CATOLICA DEL NORTE through the Concurso Fondo de Desarrollo de Proyectos Docentes de Pregrado (FDPD 2023), under grant number DGPRE.N°176/2023 and Fondo de Apoyo para Publicaciones Open Access 2025, under grant number VRIDT-UCN_10201251.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Acknowledgments

During the preparation of this study, the authors used VOSviewer (v1.6.19) to visually explore bibliometric maps and networks based on the co-occurrence of keywords, and Grammarly Pro (v1.2.189.1739) to improve the quality of writing.

Conflicts of Interest

The authors declare no conflicts of interest.

Appendix A

Table A1.

48 Documents assessed for eligibility.

| ID | C1 | C2 | C3 | C4 | C5 | Relevance to Theme |

|---|---|---|---|---|---|---|

| A1 | Yes | Yes | Business management | Custom-trained chatbot as teaching companion | Qualitative (experts) and quantitative (student survey) | High |

| A2 | Yes | No | Mathematics | Automated e-assessment generation | 240 items reviewed by 3 experts | Medium |

| A3 | Yes | Partial | Chemistry | Highlights strategies but not directly tested with students | No | Medium |

| A4 | Yes | Yes | General high education | Practical experiment comparing LLM vs. educator | Structured 2 × 2 design, path analysis | High |

| A5 | Yes | Yes | Software Engineering | Applied workshops with code generation and AI tools | Student feedback and outcome analysis | High |

| A6 | No | No | Not directly pedagogy (policy/ethics lens) | Theoretical analysis only | No | Low |

| A7 | Yes | Partial | Education | Conceptual framework, but designed to enhance learning | No | Medium |

| A8 | Yes | No | STEM | Systematic evaluation of LLM answering real course material | Dataset of 50 courses, GPT-3.5 and GPT-4 performance | Medium–High |

| A9 | Yes | No | General higher education | Descriptive | No | Medium |

| A10 | Yes | No | General education | Conceptual, system design focus | No | Medium |

| A11 | Yes | Partial | Tourism education | Practical testing of LLM | Structured evaluation against critical thinking standards | High |

| A12 | No | No | General higher education | Regression analyses of test scores vs. mindset | 68 students, structured study | Low |

| A13 | Yes | Partial | General higher education literacy | Primarily theoretical discussion | No | Medium |

| A14 | Yes | Yes | Computer Science | Enhances readability | Readability metrics and student work analysis | High |

| A15 | No | No | General higher education | Descriptive analysis | 25 articles reviewed | Low |

| A16 | Partial | No | Data engineering | Enhancement is technical, not pedagogical | Case study analysis | Medium |

| A17 | Partial | No | Computer science | Focus is classification of code origin, not learning enhancement | Supervised ML accuracy tests | Medium-Low |

| A18 | Yes | No | General higher education | Conceptual and strategic | No | Medium |

| A19 | Yes | No | General higher education | Theoretical | Case studies | Medium-Low |

| A20 | No | No | Chemistry | Research acceleration | Symposium summaries | Low |

| A21 | Yes | Yes | General higher education | Perceptions of skills | Strong quantitative data (large survey) | High |

| A22 | Yes | No | General higher education | Theoretical and ethical | Literature review only | Medium-High |

| A23 | Yes | Yes | Dentistry | Knowledge exam results and student feedback | Strong mixed methods data | High |

| A24 | Yes | Yes | Computer science | Empirical case study | Case study | High |

| B1 | Partial | No | Economics | Improves grading Reliability | Statistical analysis | Medium |

| B2 | Yes | No | General higher education | AI for assessment design, instructional text | Case study | High |

| B3 | Yes | Yes | General higher education | Virtual tutor | 207 students, completion and satisfaction data | High |

| B4 | Yes | Yes | Biomedical and Health Informatics | Practical testing of LLM in knowledge assessment | 139 students vs. multiple LLMs | High |

| B5 | Yes | No | General higher education | Formative feedback and autonomy | Case study in leadership research | Medium-High |

| B6 | Yes | Yes | Economics | Risk of LLMs | Survey-based | Medium |

| B7 | Yes | Yes | General higher education | Identifies adoption drivers and barriers to LLM use | 772 students, SEM analysis | High |

| B8 | No | No | No | Conceptual | No | Low |

| B9 | Yes | Yes | Chemistry | Activity to improve confidence and critical analysis | Student-reported outcomes | High |

| B10 | Partial | No | No | Conceptual | No | Low |

| B11 | Yes | No | Pedagogy | Conceptual | No | Medium-Low |

| B12 | Partial | No | General higher education | Support faculty, but indirect for student learning | Experimental analysis | Medium |

| B13 | Yes | No | General higher education | Conceptual | No | Medium |

| B14 | Yes | No | General higher education | Demonstrates potential to empower and guide students | Exploratory evaluation of generated answers | Medium |

| B15 | No | No | No | Highlights risks or errors | Applied to published papers | Low |

| B16 | Yes | Yes | General higher education | Models an applied pedagogy for creativity | Example with Poe’s The Black Cat | High |

| B17 | Yes | Yes | General higher education | Shows both positive and negative learning/social effects | Structural equation modeling data | High |

| B18 | Yes | No | General higher education | Conceptual framework | No | Medium-Low |

| B19 | Yes | Yes | Computer science | Improves formative feedback and assessment | Tested with real assignments | High |

| B20 | Yes | Yes | General higher education | Explores behavioral intention and usage for learning | Survey and sentiment analysis, gender-based | High |

| B21 | Yes | Yes | STEM | Enhanced ownership of learning | Sentiment and thematic analysis of student feedback | High |

| B22 | Yes | No | General higher education | Shows strategies for learning | Survey and text mining of faculty responses | Medium |

| B23 | Yes | Yes | General higher education | Shows benefits for brainstorming, structuring, and revising | Analysis | High |

| B24 | Yes | No | General higher education | Analysis of critical discourse | Text analysis of paraphrasing websites | Medium-Low |

Appendix B

Table A2.

Mapping documents to research questions.

| Id | RQ1 | RQ2 | RQ3 | RQ4 |
|---|---|---|---|---|
| S1 | Yes | Yes | Yes | Yes |
| S2 | Yes | Yes | | |
| S3 | Yes | Yes | Yes | Yes |
| S4 | Yes | Yes | | |
| S5 | Yes | Yes | Yes | |
| S6 | Yes | Yes | | |
| S7 | Yes | Yes | | |
| S8 | Yes | Yes | | |
| S9 | Yes | Yes | | |
| S10 | Yes | Yes | | |
| S11 | Yes | Yes | Yes | Yes |
| S12 | Yes | Yes | | |
| S13 | Yes | Yes | Yes | Yes |
| S14 | Yes | Yes | Yes | |
| S15 | Yes | Yes | Yes | |
| S16 | Yes | Yes | Yes | |
| S17 | Yes | Yes | Yes | |
| S18 | Yes | Yes | | |
| S19 | Yes | Yes | Yes | Yes |
| S20 | Yes | Yes | | |
| S21 | Yes | Yes | Yes | |
| S22 | Yes | Yes | Yes | Yes |

References

- Jones, P.; Miller, C.; Pickernell, D.; Packham, G. The Role of Education, Training and Skills Development in Social Inclusion: The University of the Heads of the Valley Case Study. Educ. Train. 2011, 53, 638–651.

- Humphreys, P.; Greenan, K.; McIlveen, H. Developing Work-Based Transferable Skills in a University Environment. J. Eur. Ind. Train. 1997, 21, 63–69.

- Goulart, V.G.; Liboni, L.B.; Cezarino, L.O. Balancing Skills in the Digital Transformation Era: The Future of Jobs and the Role of Higher Education. Ind. High. Educ. 2022, 36, 118–127.

- Succi, C.; Canovi, M. Soft Skills to Enhance Graduate Employability: Comparing Students and Employers’ Perceptions. Stud. High. Educ. 2020, 45, 1834–1847.

- Kosslyn, S.M.; Nelson, B. Building the Intentional University: Minerva and the Future of Higher Education; MIT Press: Cambridge, MA, USA, 2017.

- Halabieh, H.; Hawkins, S.; Bernstein, A.E.; Lewkowict, S.; Unaldi Kamel, B.; Fleming, L.; Levitin, D. The Future of Higher Education: Identifying Current Educational Problems and Proposed Solutions. Educ. Sci. 2022, 12, 888.

- Fatkullina, F.; Morozkina, E.; Suleimanova, A. Modern Higher Education: Problems and Perspectives. Procedia-Soc. Behav. Sci. 2015, 214, 571–577.

- Avdeeva, T.I.; Kulik, A.D.; Kosarevaa, L.A.; Zhilkina, T.A.; Belogurov, A.Y. Problems and Prospects of Higher Education System Development in Modern Society. Eur. Res. Stud. J. 2017, 20, 112–124.

- Koedinger, K.R.; Kim, J.; Jia, J.Z.; McLaughlin, E.A.; Bier, N.L. Learning Is Not a Spectator Sport: Doing Is Better than Watching for Learning from a MOOC. In Proceedings of the Second (2015) ACM Conference on Learning @ Scale, Vancouver, BC, Canada, 14–18 March 2015.

- Hornsby, D.; Osman, R. Massification in Higher Education: Large Classes and Student Learning. High. Educ. 2014, 67, 711–719.

- Winstone, N.E.; Boud, D. The Need to Disentangle Assessment and Feedback in Higher Education. Stud. High. Educ. 2022, 47, 656–667.

- Mayer, R.E.; Stull, A.; DeLeeuw, K.; Almeroth, K.; Bimber, B.; Chun, D.; Bulger, M.; Campbell, J.; Knight, A.; Zhang, H. Clickers in College Classrooms: Fostering Learning with Questioning Methods in Large Lecture Classes. Contemp. Educ. Psychol. 2009, 34, 51–57.

- Wu, I.-L.; Hsieh, P.-J.; Wu, S.-M. Developing Effective E-Learning Environments through e-Learning Use Mediating Technology Affordance and Constructivist Learning Aspects for Performance Impacts: Moderator of Learner Involvement. Internet High. Educ. 2022, 55, 100871.

- Oliveira, R.P.; Souza, C.G.d.; Reis, A.d.C.; Souza, W.M.d. Gamification in E-Learning and Sustainability: A Theoretical Framework. Sustainability 2021, 13, 11945.

- Bernacki, M.L.; Greene, J.A.; Crompton, H. Mobile Technology, Learning, and Achievement: Advances in Understanding and Measuring the Role of Mobile Technology in Education. Contemp. Educ. Psychol. 2020, 60, 101827.

- Marougkas, A.; Troussas, C.; Krouska, A.; Sgouropoulou, C. Virtual Reality in Education: A Review of Learning Theories, Approaches and Methodologies for the Last Decade. Electronics 2023, 12, 2832.

- Diab Idris, M.; Feng, X.; Dyo, V. Revolutionizing Higher Education: Unleashing the Potential of Large Language Models for Strategic Transformation. IEEE Access 2024, 12, 67738–67757.

- Vrontis, D.; Chaudhuri, R.; Chatterjee, S. Role of ChatGPT and Skilled Workers for Business Sustainability: Leadership Motivation as the Moderator. Sustainability 2023, 15, 12196.

- Hurrell, S.A. Rethinking the Soft Skills Deficit Blame Game: Employers, Skills Withdrawal and the Reporting of Soft Skills Gaps. Hum. Relat. 2016, 69, 605–628.

- Richa, S.; Paul, J.; Tewari, V. The Soft Skills Gap: A Bottleneck in the Talent Supply in Emerging Economies. Int. J. Hum. Resour. Manag. 2021, 33, 2630–2661.

- Morin, J.; Willox, S. Closing the Soft Skills Gap: A Case in Leveraging Technology and the “Flipped” Classroom with a Programmatic Approach to Soft Skill Development in Business Education. Transform. Dialogues Teach. Learn. J. 2022, 15, 82–97.

- Indrašienė, V.; Jegelevičienė, V.; Merfeldaitė, O.; Penkauskienė, D.; Pivorienė, J.; Railienė, A.; Sadauskas, J. Value of Critical Thinking in the Labour Market: Variations in Employers’ and Employees’ Views. Soc. Sci. 2023, 12, 221.

- Rodzalan, S.A.; Saat, M.M. The perception of critical thinking and problem solving skill among Malaysian undergraduate students. Procedia-Soc. Behav. Sci. 2015, 172, 725–732.

- Rocha, H.J.B. Exploring the Effects of Feedback on the Problem-Solving Process of Novice Programmers. In Proceedings of the Simpósio Brasileiro de Informática na Educação (SBIE), Online, 4 November 2024; pp. 474–485.

- McClough, A.C.; Rogelberg, S.G. Selection in Teams: An Exploration of the Teamwork Knowledge, Skills, and Ability Test. Int. J. Sel. Assess. 2003, 11, 56–66.

- Smith, K.; Maynard, N.; Berry, A.; Stephenson, T.; Spiteri, T.; Corrigan, D.; Mansfield, J.; Ellerton, P.; Smith, T. Principles of Problem-Based Learning (PBL) in STEM Education: Using Expert Wisdom and Research to Frame Educational Practice. Educ. Sci. 2022, 12, 728.

- Chua, B.L.; Tan, O.S.; Liu, W.C. Journey into the Problem-Solving Process: Cognitive Functions in a PBL Environment. Innov. Educ. Teach. Int. 2016, 53, 191–202.

- Askari, G.; Asghri, N.; Gordji, M.E.; Asgari, H.; Filipe, J.A.; Azar, A. The Impact of Teamwork on an Organization’s Performance: A Cooperative Game’s Approach. Mathematics 2020, 8, 1804.

- Maringe, F.; Sing, N. Teaching Large Classes in an Increasingly Internationalising Higher Education Environment: Pedagogical, Quality and Equity Issues. High. Educ. 2014, 67, 761–782.

- Mulryan-Kyne, C. Teaching Large Classes at College and University Level: Challenges and Opportunities. Teach. High. Educ. 2010, 15, 175–185.

- Japhet, E.L. Teaching Large Classes in Higher Education: Challenges and Strategies. Educ. Rev. 2022, 6, 251–262.

- Fakhri, M.M.; Ahmar, A.S.; Rosidah, R.; Fadhilatunisa, D.; Tabash, M. Barriers to Effective Learning: Examining the Influence of Delayed Feedback on Student Engagement and Problem Solving Skills in Ubiquitous Learning Programming. J. Appl. Sci. Eng. Technol. Educ. 2024, 6, 69–79.

- Rahmandad, H.; Repenning, N.; Sterman, J. Effects of Feedback Delay on Learning. Syst. Dyn. Rev. 2009, 25, 309–338.

- Hu, P.J.-H.; Hui, W. Examining the Role of Learning Engagement in Technology-Mediated Learning and Its Effects on Learning Effectiveness and Satisfaction. Decis. Support Syst. 2012, 53, 782–792.

- Antonietti, C.; Schmitz, M.-L.; Consoli, T.; Cattaneo, A.; Gonon, P.; Petko, D. Development and Validation of the ICAP Technology Scale to Measure How Teachers Integrate Technology into Learning Activities. Comput. Educ. 2023, 192, 104648.

- Chang, H.-Y.; Wang, C.-Y.; Lee, M.-H.; Wu, H.-K.; Liang, J.-C.; Lee, S.W.-Y.; Chiou, G.-L.; Lo, H.-C.; Lin, J.-W.; Hsu, C.-Y.; et al. A Review of Features of Technology-Supported Learning Environments Based on Participants’ Perceptions. Comput. Hum. Behav. 2015, 53, 223–237.

- Vogel, D.; Klassen, J. Technology-Supported Learning: Status, Issues and Trends. J. Comput. Assist. Learn. 2001, 17, 104–114.

- Dumitru, D.; Halpern, D.F. Critical Thinking: Creating Job-Proof Skills for the Future of Work. J. Intell. 2023, 11, 194.

- Fetzer, J.H. What Is Artificial Intelligence? In Artificial Intelligence: Its Scope and Limits; Fetzer, J.H., Ed.; Springer: Dordrecht, The Netherlands, 1990; pp. 3–27. ISBN 978-94-009-1900-6.

- Talan, T. Artificial Intelligence in Education: A Bibliometric Study. Int. J. Res. Educ. Sci. 2021, 7, 822–837.

- Crompton, H.; Burke, D. Artificial Intelligence in Higher Education: The State of the Field. Int. J. Educ. Technol. High. Educ. 2023, 20, 22.

- Zhai, X.; Chu, X.; Chai, C.S.; Jong, M.S.Y.; Istenic, A.; Spector, M.; Liu, J.-B.; Yuan, J.; Li, Y. A Review of Artificial Intelligence (AI) in Education from 2010 to 2020. Complexity 2021, 2021, 8812542.

- Floridi, L.; Chiriatti, M. GPT-3: Its Nature, Scope, Limits, and Consequences. Minds Mach. 2020, 30, 681–694.

- Wolf, L.; Farrelly, T.; Farrell, O.; Concannon, F. Reflections on a Collective Creative Experiment with GenAI: Exploring the Boundaries of What is Possible. Ir. J. Technol. Enhanc. Learn. 2023, 7, 1–7.

- Law, L. Application of Generative Artificial Intelligence (GenAI) in Language Teaching and Learning: A Scoping Literature Review. Comput. Educ. Open 2024, 6, 100174.

- Lee, S.; Choe, H.; Zou, D.; Jeon, J. Generative AI (GenAI) in the Language Classroom: A Systematic Review. Interact. Learn. Environ. 2025, 1–25.

- Yildirim, I.; Paul, L.A. From Task Structures to World Models: What Do LLMs Know? Trends Cogn. Sci. 2024, 28, 404–415.

- Choe, S.K.; Ahn, H.; Bae, J.; Zhao, K.; Kang, M.; Chung, Y.; Pratapa, A.; Neiswanger, W.; Strubell, E.; Mitamura, T.; et al. What Is Your Data Worth to GPT? LLM-Scale Data Valuation with Influence Functions. arXiv 2024, arXiv:2405.13954.

- Machin, M.A.; Machin, T.M.; Gasson, N. Comparing ChatGPT With Experts’ Responses to Scenarios that Assess Psychological Literacy. Psychol. Learn. Teach. 2024, 23, 265–280.

- Matzakos, N.; Moundridou, M. Exploring Large Language Models Integration in Higher Education: A Case Study in a Mathematics Laboratory for Civil Engineering Students. Comput. Appl. Eng. Educ. 2025, 33, e70049.

- Haindl, P.; Weinberger, G. Students’ Experiences of Using ChatGPT in an Undergraduate Programming Course. IEEE Access 2024, 12, 43519–43529.

- Kaspi, S.; Venkatraman, S. Data-Driven Decision-Making (DDDM) for Higher Education Assessments: A Case Study. Systems 2023, 11, 306.

- Black, P.; Wiliam, D. Assessment and Classroom Learning. Assess. Educ. Princ. Policy Pract. 1998, 5, 7–74.

- Rao, N.J.; Banerjee, S. Classroom Assessment in Higher Education. High. Educ. Future 2023, 10, 11–30.

- Lacey, M.M.; Smith, D.P. Teaching and Assessment of the Future Today: Higher Education and AI. Microbiol. Aust. 2023, 44, 124–126.

- Peláez-Sánchez, I.C.; Velarde-Camaqui, D.; Glasserman-Morales, L.D. The Impact of Large Language Models on Higher Education: Exploring the Connection between AI and Education 4.0. Front. Educ. 2024, 9, 1392091.