Low-Cost AI-Enabled Optoelectronic Wearable for Gait and Breathing Monitoring: Design, Validation, and Applications

Abstract

1. Introduction

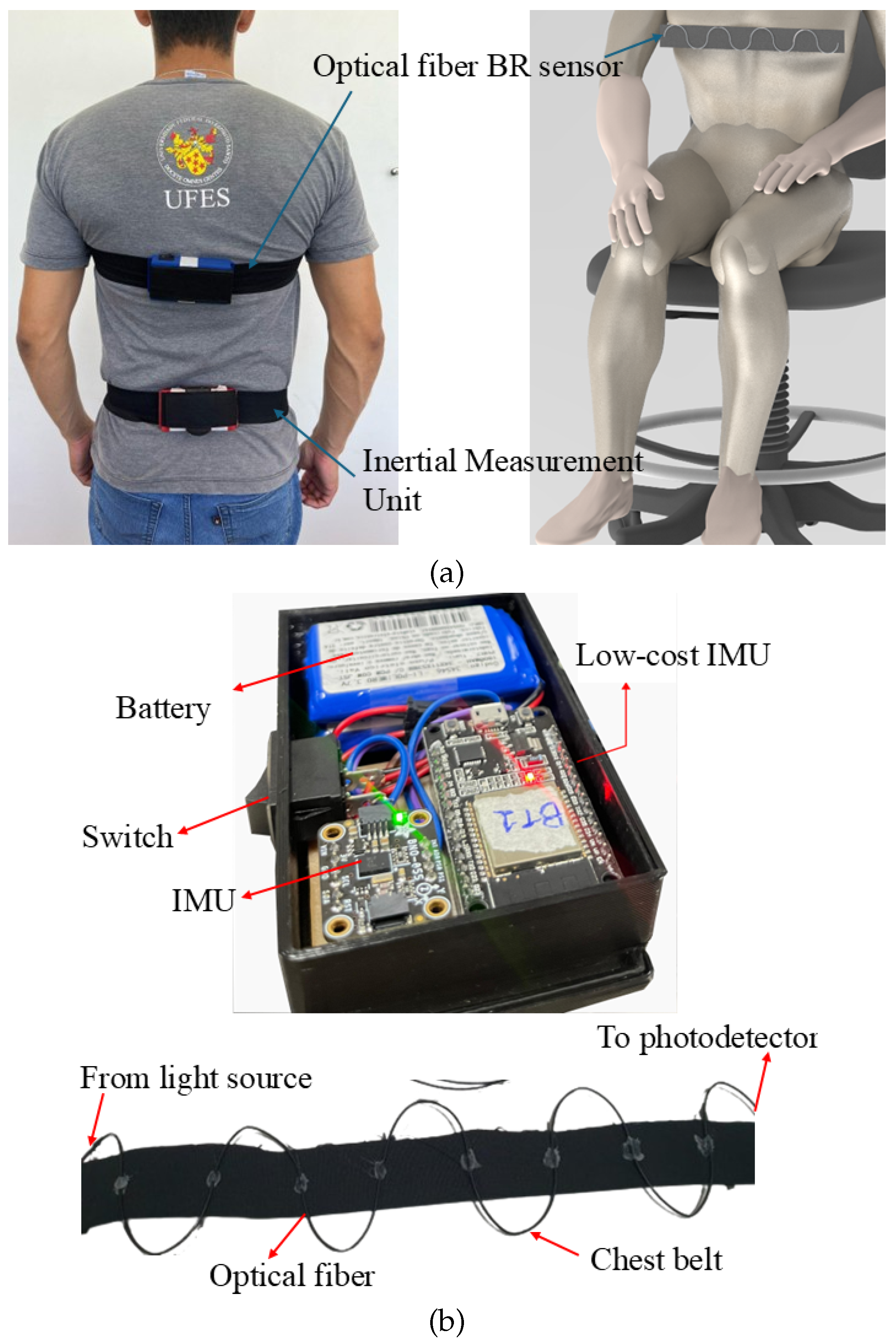

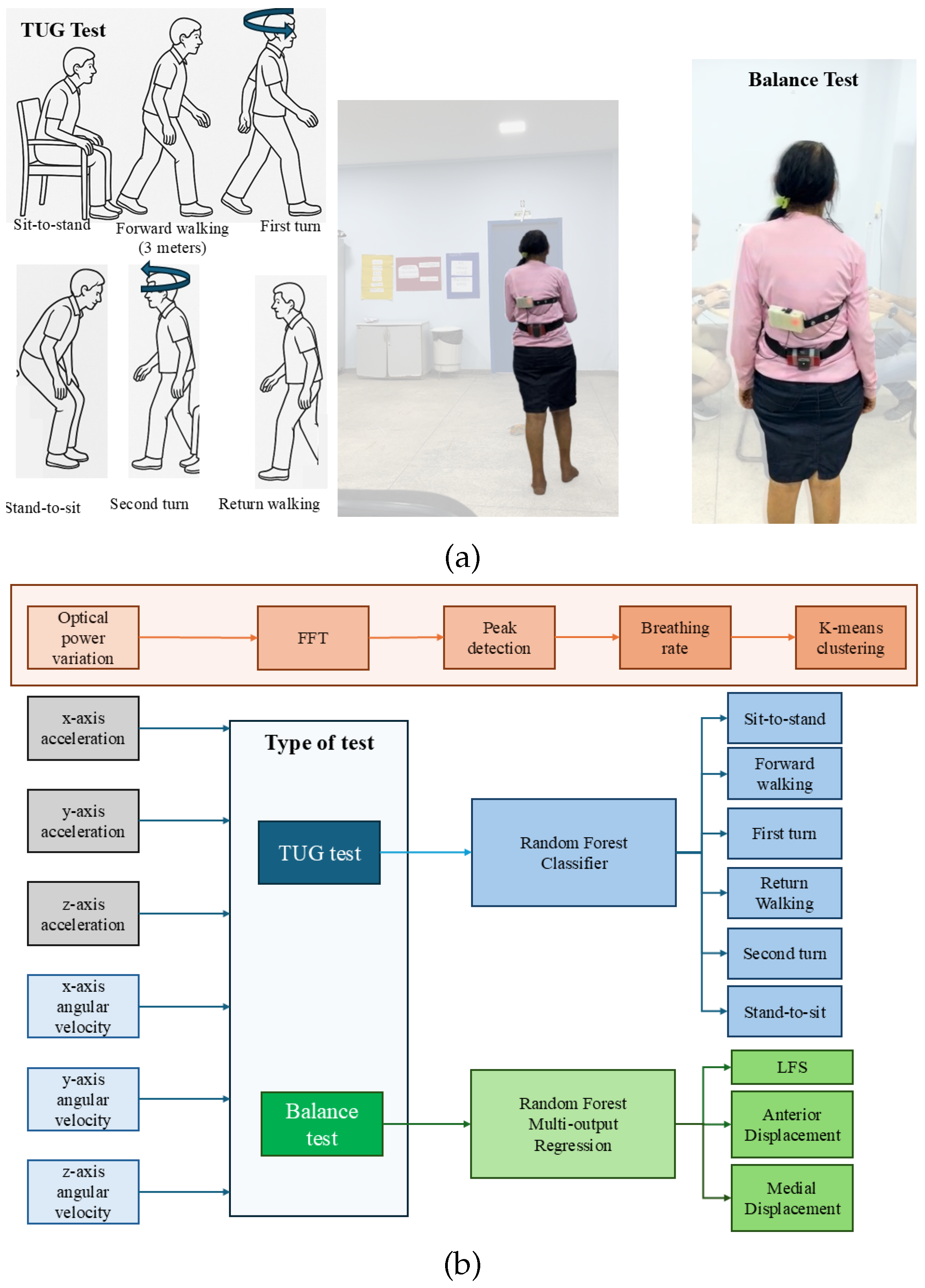

2. Materials and Methods

3. Results and Discussion

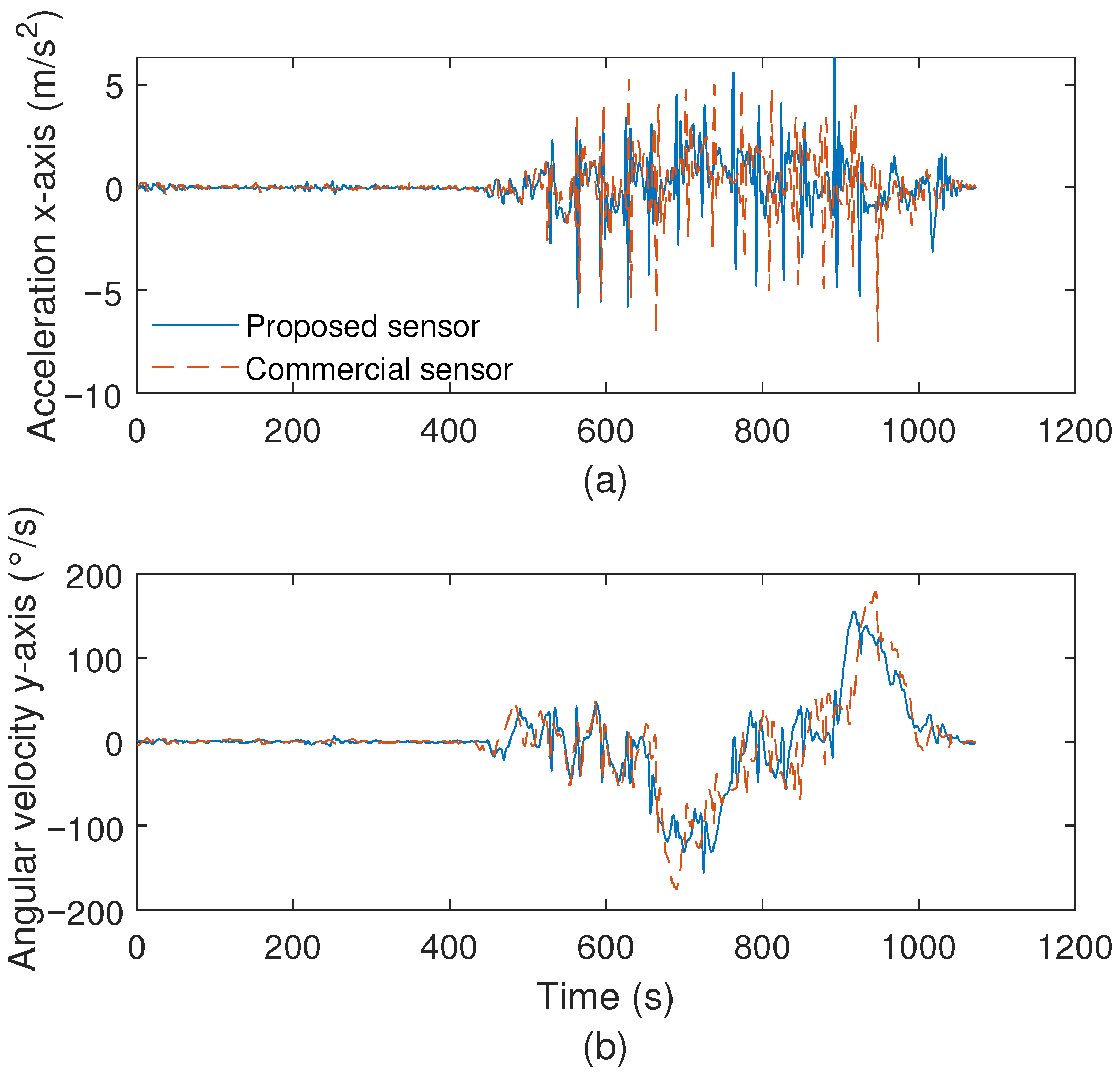

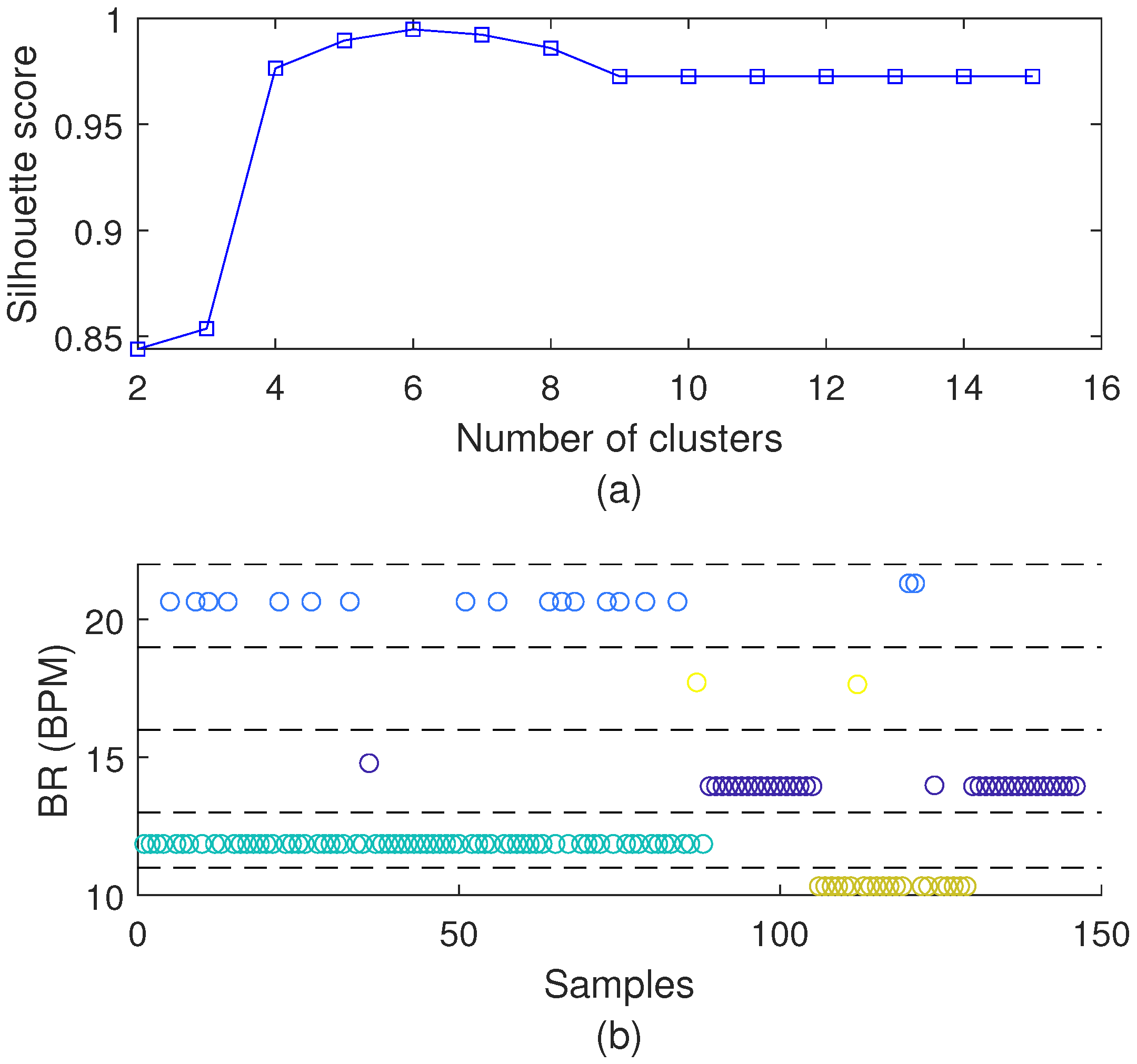

3.1. Optoelectronic Sensor System Characterization

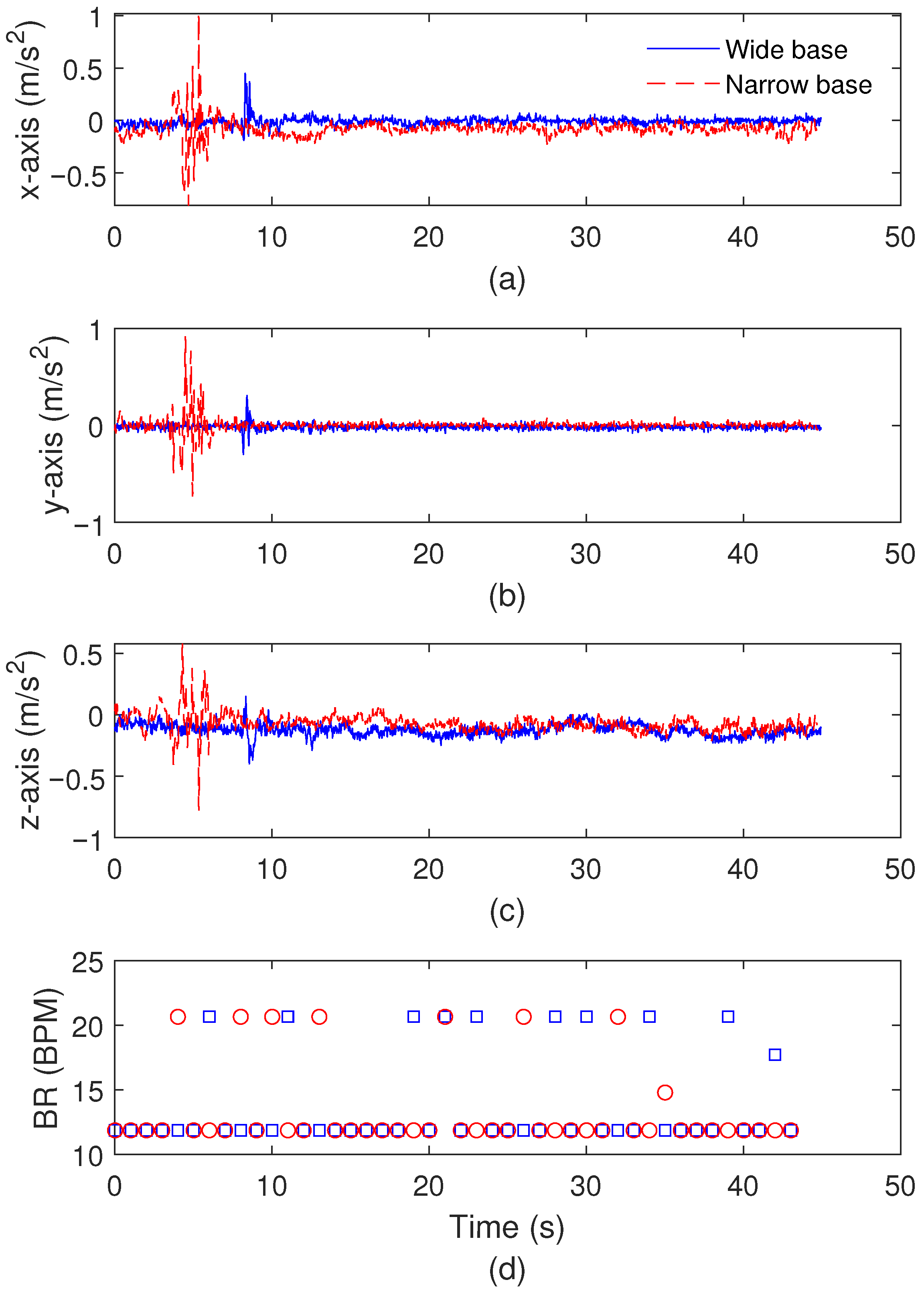

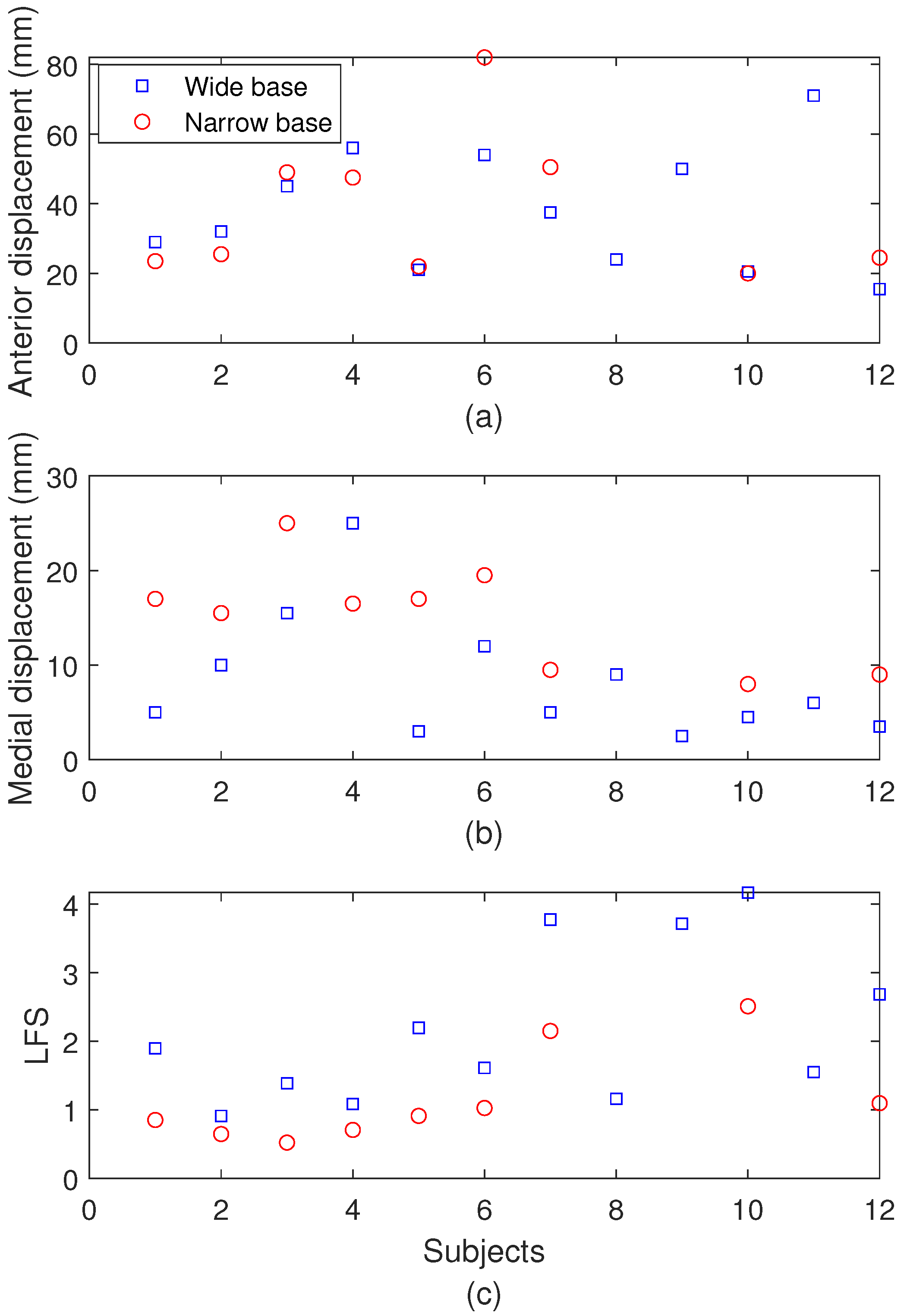

3.2. Balance Test Results

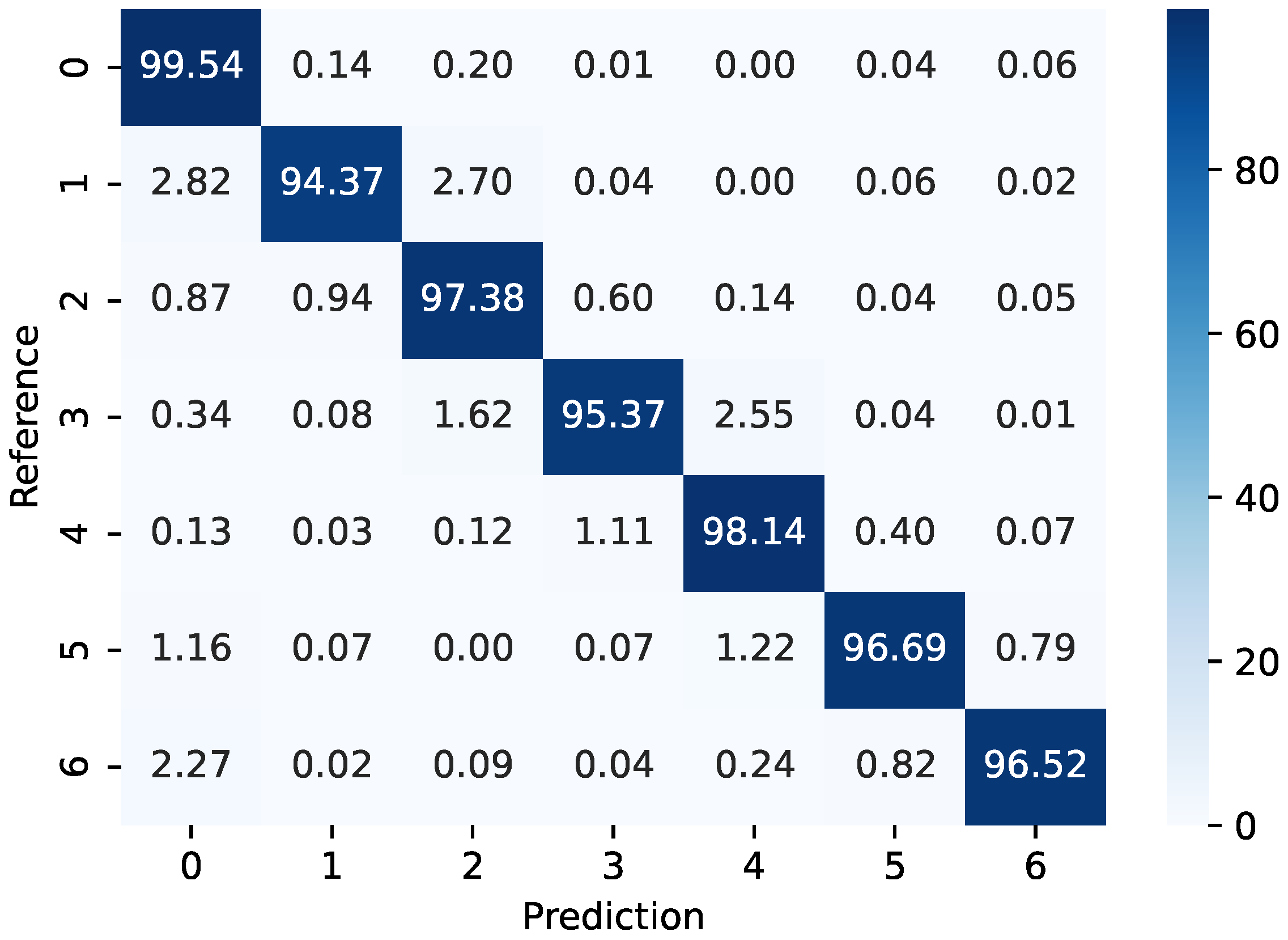

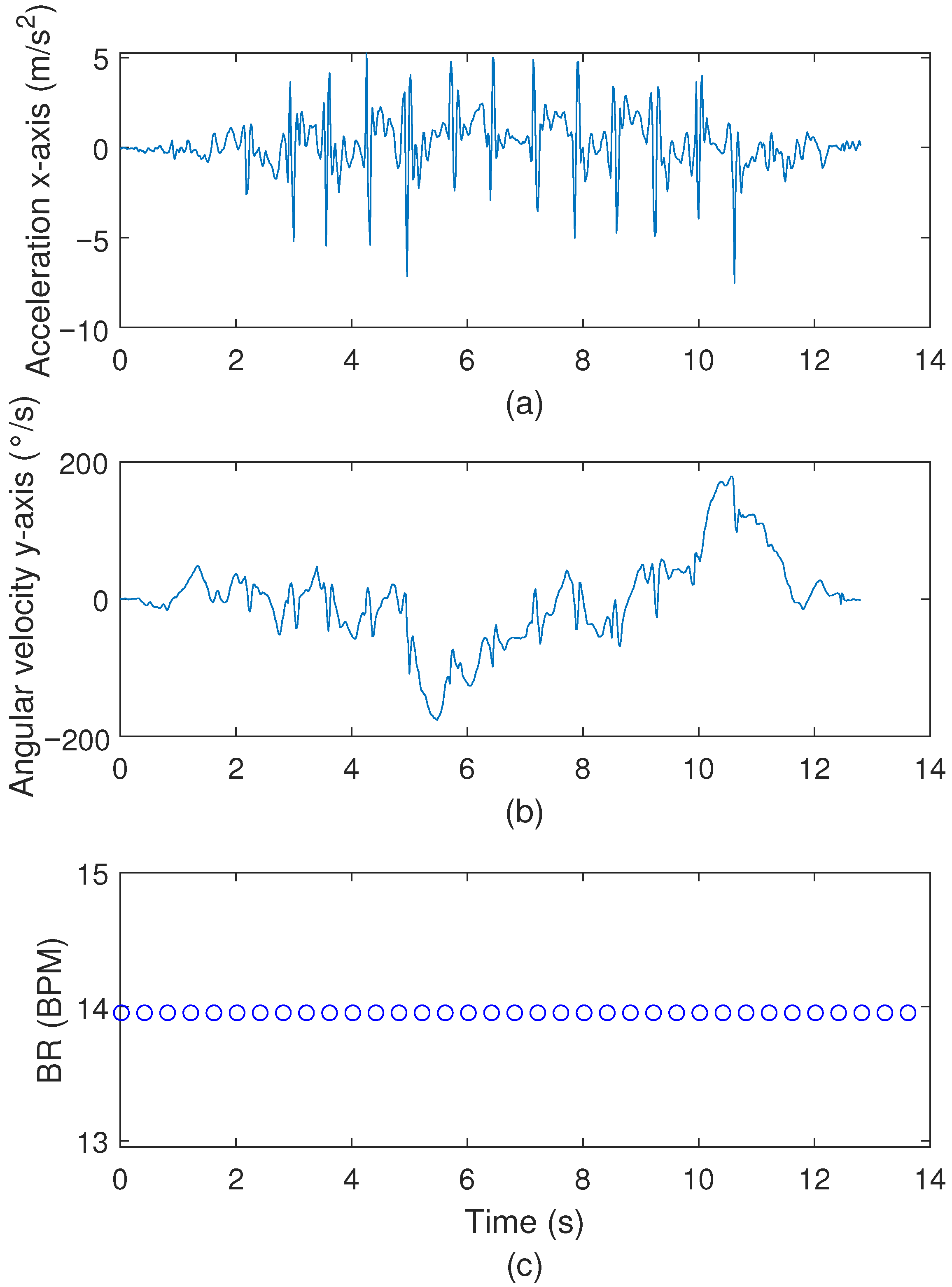

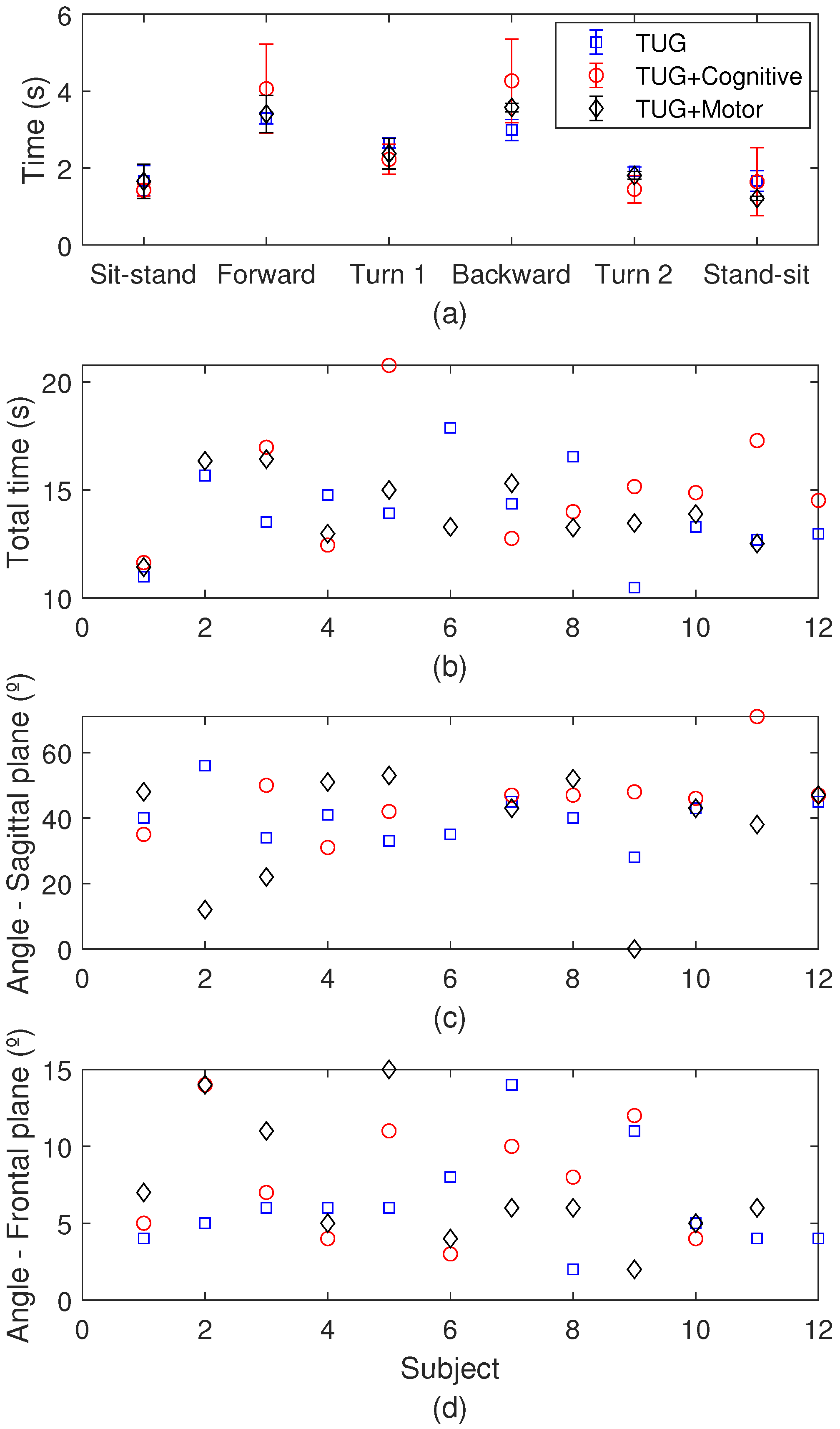

3.3. TUG Test Results

3.4. Discussion and Analysis

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Subject | Gender | Age (years) | Clinical Conditions | Mobility |
|---|---|---|---|---|
| 1 | Male | 70 | Hypertension, diabetes mellitus | Normal |
| 2 | Male | 61 | Hypertension, hearing impairment | Normal |
| 3 | Female | 67 | Epilepsy, asthma | Normal |
| 4 | Female | 65 | Hypertension, diabetes mellitus | Normal |
| 5 | Female | 57 | Hypertension | Normal |
| 6 | Male | 75 | Hypertension, diabetes mellitus | Normal |
| 7 | Female | 70 | Hypertension, diabetes mellitus | Normal |
| 8 | Female | 71 | Hypertension, diabetes mellitus | Normal |
| 9 | Female | 53 | Hypertension, diabetes mellitus | Normal |
| 10 | Female | 67 | Diabetes mellitus | Normal |
| 11 | Female | 50 | Diabetes mellitus | Normal |
| 12 | Female | 57 | Hypertension | Normal |

| Parameter | Axis | Error | Standard Deviation |
|---|---|---|---|
| Accelerometer | x-axis | 0.060 m/s² | 0.323 m/s² |
| Accelerometer | y-axis | 0.010 m/s² | 0.352 m/s² |
| Accelerometer | z-axis | 0.042 m/s² | 0.356 m/s² |
| Angular velocity | x-axis | 0.25 °/s | 7.13 °/s |
| Angular velocity | y-axis | 0.24 °/s | 8.18 °/s |
| Angular velocity | z-axis | 0.33 °/s | 4.48 °/s |
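The table does not spell out how the per-axis Error and Standard Deviation were obtained. The following is a minimal sketch of one plausible computation, not the authors' method. It assumes that the wearable's inertial readings are compared against some reference instrument (hypothetical here), that both recordings are already time-aligned at a common sampling rate, that "Error" is the mean of the wearable-minus-reference difference (a bias estimate), and that "Standard Deviation" is the sample standard deviation of that same difference. The function name `per_axis_error_stats` and the sample readings are illustrative only.

```python
from statistics import mean, stdev

AXES = ("x", "y", "z")


def per_axis_error_stats(wearable, reference):
    """Return {axis: (mean_error, std)} for paired three-axis samples.

    Assumptions (not stated in the article): both sequences hold (x, y, z)
    tuples that are already time-aligned and sampled at the same rate;
    "Error" is the mean of the wearable-minus-reference difference (bias),
    and "Standard Deviation" is the sample standard deviation of that
    same difference.
    """
    if len(wearable) != len(reference):
        raise ValueError("wearable and reference must have the same length")
    if len(wearable) < 2:
        raise ValueError("need at least two paired samples for a standard deviation")
    stats = {}
    for i, axis in enumerate(AXES):
        # Per-sample difference on this axis between the two instruments.
        diff = [w[i] - r[i] for w, r in zip(wearable, reference)]
        stats[axis] = (mean(diff), stdev(diff))
    return stats


# Hypothetical usage with made-up accelerometer readings (m/s^2), not study data.
wearable = [(0.10, 9.80, 0.00), (0.00, 9.90, 0.20), (-0.10, 9.70, 0.10)]
reference = [(0.05, 9.81, 0.02), (0.02, 9.82, 0.15), (-0.08, 9.79, 0.08)]
for axis, (err, sd) in per_axis_error_stats(wearable, reference).items():
    print(f"{axis}-axis: error {err:.3f}, SD {sd:.3f}")
```

Under these assumptions, a small Error paired with a much larger Standard Deviation, as in every row of the table, would indicate little systematic bias but noticeable sample-to-sample scatter.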

| Test | Parameter | Mean Value |
|---|---|---|
| Wide base | Anterior displacement | 33 mm |
| Wide base | Medial displacement | 6 mm |
| Wide base | LFS | 1.68 |
| Narrow base | Anterior displacement | 20 mm |
| Narrow base | Medial displacement | 16 mm |
| Narrow base | LFS | 0.92 |
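Treating the anterior and medial means as orthogonal components gives a quick way to compare the two stances. The sketch below combines them into a planar magnitude and a direction measured from the anterior axis. This derived quantity is not reported in the article, and the function names are hypothetical. Note that the resultant of two mean components is not, in general, equal to the mean of per-trial resultants, so these figures are only a rough summary of the tabulated means.

```python
import math

# Mean displacement components from the balance-test table, in mm:
# (anterior, medial).
BALANCE_MM = {
    "Wide base": (33.0, 6.0),
    "Narrow base": (20.0, 16.0),
}


def resultant_displacement(anterior_mm: float, medial_mm: float) -> float:
    """Magnitude of the planar vector formed by two orthogonal components (mm)."""
    return math.hypot(anterior_mm, medial_mm)


def sway_direction_deg(anterior_mm: float, medial_mm: float) -> float:
    """Angle of that vector from the anterior axis, in degrees."""
    return math.degrees(math.atan2(medial_mm, anterior_mm))


for stance, (ap, ml) in BALANCE_MM.items():
    print(f"{stance}: {resultant_displacement(ap, ml):.1f} mm "
          f"at {sway_direction_deg(ap, ml):.1f} deg from anterior")
```

This prints about 33.5 mm at 10.3 deg for the wide base and 25.6 mm at 38.7 deg for the narrow base. In other words, the narrower stance shifts the tabulated mean displacement noticeably toward the medial direction.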

| Test | Total Time (s) | Sagittal Angle (°) | Frontal Angle (°) |
|---|---|---|---|
| TUG | 10.96 | 35 | 5 |
| TUG+Motor | 12.01 | 47 | 8 |
| TUG+Cognitive | 11.63 | 35 | 5 |
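A common way to compare the dual-task TUG variants with the single-task baseline is the dual-task cost: the percentage increase in completion time relative to the plain TUG. The article does not report this metric. The sketch below applies it to the total times in the table. The constant `TUG_TIMES` and the function `dual_task_cost` are illustrative names.

```python
# Total TUG completion times from the table, in seconds.
TUG_TIMES = {"TUG": 10.96, "TUG+Motor": 12.01, "TUG+Cognitive": 11.63}


def dual_task_cost(single_s: float, dual_s: float) -> float:
    """Percentage slowdown of a dual-task trial relative to the single task."""
    if single_s <= 0:
        raise ValueError("single-task time must be positive")
    return 100.0 * (dual_s - single_s) / single_s


for condition in ("TUG+Motor", "TUG+Cognitive"):
    cost = dual_task_cost(TUG_TIMES["TUG"], TUG_TIMES[condition])
    print(f"{condition}: dual-task cost {cost:.1f}%")
```

This prints a cost of 9.6% for TUG+Motor and 6.1% for TUG+Cognitive. The motor condition, which also shows the largest sagittal and frontal angles, therefore slows the test more than the cognitive condition does.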

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Morau, S.; Macedo, L.; Morais, E.; Menegardo, R.; Nedoma, J.; Martinek, R.; Leal-Junior, A. Low-Cost AI-Enabled Optoelectronic Wearable for Gait and Breathing Monitoring: Design, Validation, and Applications. Biosensors 2025, 15, 612. https://doi.org/10.3390/bios15090612
