Abstract

The combination of different imaging modalities into single imaging platforms has a strong potential in biomedical sciences as it permits the analysis of complementary properties of the target sample. Here, we report on an extremely simple, cost-effective, and compact microscope platform for achieving simultaneous fluorescence and quantitative phase imaging modes with the capability of working in a single snapshot. It is based on the use of a single illumination wavelength to both excite the sample’s fluorescence and provide coherent illumination for phase imaging. After passing the microscope layout, the two imaging paths are separated using a bandpass filter, and the two imaging modes are simultaneously obtained using two digital cameras. We first present calibration and analysis of both fluorescence and phase imaging modalities working independently and, later on, experimental validation for the proposed common-path dual-mode imaging platform considering static (resolution test targets, fluorescent micro-beads, and water-suspended lab-made cultures) as well as dynamic (flowing fluorescent beads, human sperm cells, and live specimens from lab-made cultures) samples.

1. Introduction

Multimodal imaging deals with the mixing of different imaging modalities in a single imaging platform in order to gain deeper knowledge about a specific sample [1]. Some examples of such integration of imaging modalities can be found, just as examples, in biology [2], medicine [3], and ophthalmology [4], as well as in combination with deep learning approaches [5,6]. In the frame of this multimodal strategy, optical microscopy is one of the most appealing fields from a multimodal imaging perspective where combination of non-linear [7,8,9], linear [10,11,12,13], or a mixing [14,15,16] of techniques allows parallel and complementary information of a specific sample or event to improve imaging and understanding of specific biological processes.

In particular, strong efforts have been provided in the last two decades for combining fluorescence imaging (FI) with quantitative phase imaging (QPI) [17,18,19,20,21,22,23,24,25,26,27,28,29]. This dual-mode imaging modality combination is probably the simplest one capable of working in a single exposure while maximizing the obtained information. Whereas QPI retrieves morphological information and cell dynamics coming from the topography and/or spatial refractive index distribution in the specimen [30], FI can reveal functional features for studying sub-cellular molecular events of live cells by using fluorophore labeling with high specificity [31]. For this reason, the combination of FI with QPI opens up new possibilities to extract more information about biological specimens and processes.

However, the integration of FI and QPI into a single imaging platform has some drawbacks. In some cases, such a combination is implemented using a single digital camera for recording the images [17,18,23,25,26]. Thus, one needs to switch between the two illumination modes and their associated optical paths, meaning that there is no possibility for single snapshot imaging and the recordings must be done sequentially in time, preventing the imaging of some biological events that occur at very short time scales. This disadvantage can be circumvented using dynamic range multiplexing where both images are recorded in a single snapshot at the expense of reducing the dynamic range of the detector [20,24]. Nevertheless, the preferred solution implies the use of two different synchronized digital cameras (one for QPI and the other for FI modes) where a dichroic mirror separates both imaging paths [12,20,21,22,27,28,29].

Another drawback comes from the fact that both imaging modes utilize different geometries (FI is usually implemented in reflection, whereas QPI is implemented in transmission configurations) [17,19,22,23,24,26,27,28,29]. As a result, the whole imaging platform becomes bulky, including some additional optical components (dichroic mirrors, selective filters, etc.) that increase the system’s price and weight. This can be a limiting issue as compact and cost-effective systems could be pursued depending on the application, such as, for instance, the study of a specimen inside an incubator or in the field-setting as well as the adaptation of the instrument to resource-limited labs. This second drawback can be partially reduced by introducing the fluorescence excitation beam using an oblique angle geometry in transmission with an illumination angle higher than the one defined by the numerical aperture (NA) of the used objective lens [25]. This way the fluorescence excitation beam will not pass through the imaging path, and some optical components can be spared and the system is reduced in size.

In this manuscript, we propose a simple, compact, and cost-effective imaging platform for the integration of FI and QPI into a common-path system with the capability of working in a single snapshot (both imaging modes simultaneously in parallel). The key point is to use a single illumination light with a double function: to excite fluorescence in the sample and to provide holographic imaging. Thus, the system is minimized in terms of optical components, improving compactness, weight, and pricing. After the tube lens, a regular dichroic mirror separates both imaging modes, and two digital cameras allow simultaneous recordings of the same specimen under test. FI is obtained in the whole field of view (FOV) provided by the microscope lens and limited by the used fluorophore, whereas QPI comes from an in-line configuration similar to the configuration on phase from defocused images [25,26] and phase imaging under the Gabor regime [32]. Experimental results are included considering different types of samples, that is, static (resolution test targets, fluorescent beads, and lab-made cultures in water suspension) and dynamic (flowing fluorescent beads, human sperm cells, and live specimens from lab-made cultures) samples. Summarizing, the proposed dual-mode imaging platform provides an optimized configuration for FI/QPI integration that minimizes size, weight, and price (thus improving compactness, portability, and affordability) and that can be easily configured by selecting some specific components (coherent illumination, fluorophore and dichroic mirror) depending on the target application. In this manuscript, Section 2 provides the system’s description, preparation of samples, and different details concerning the imaging procedure; Section 3 presents calibration of QPI and FI modes as well as experimental support and proof of principle validation of the integrated platform. Finally, Section 4 discusses and concludes the paper.

2. Materials and Methods

2.1. Experimental Setup and Numeric Propagation

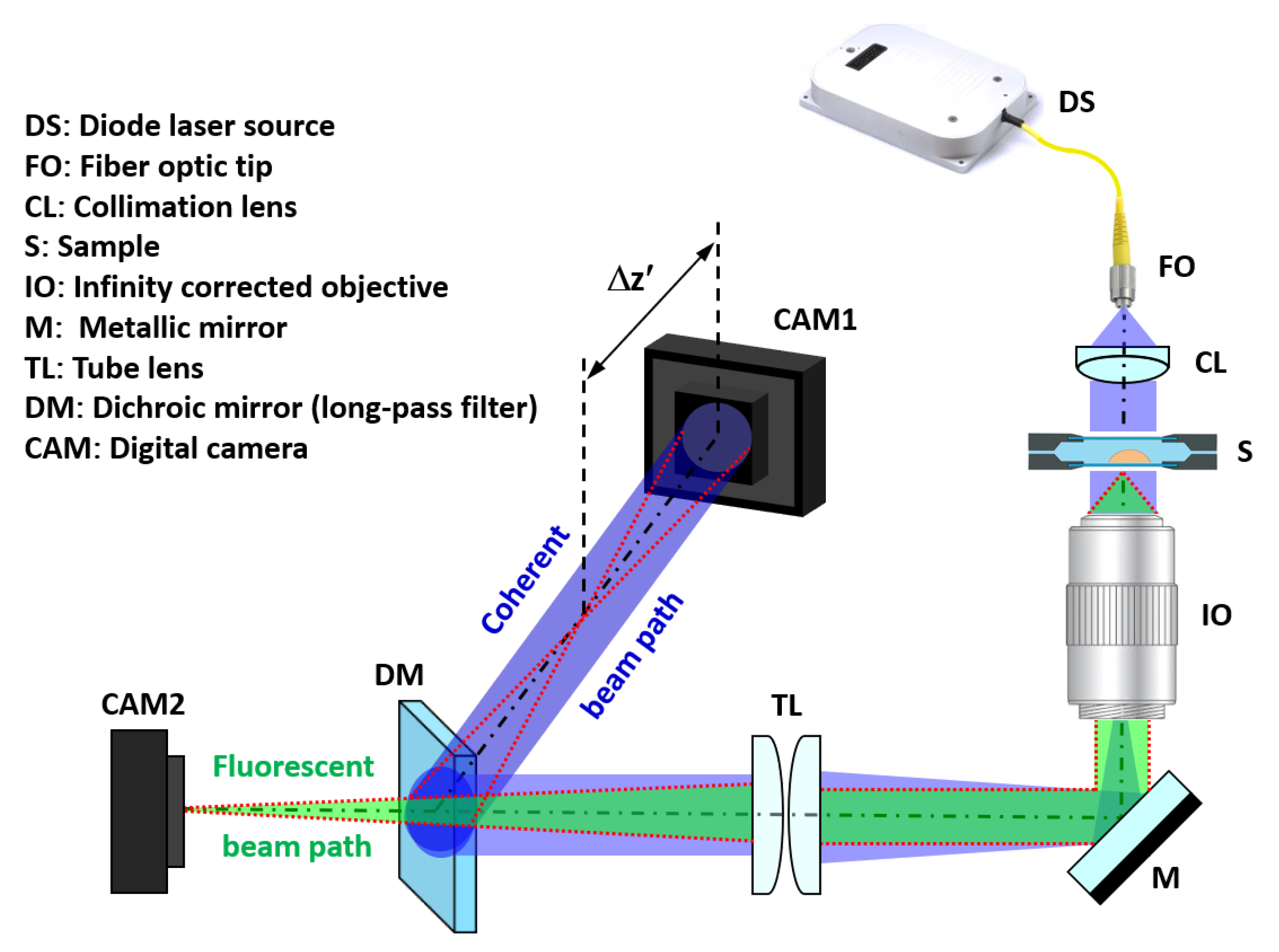

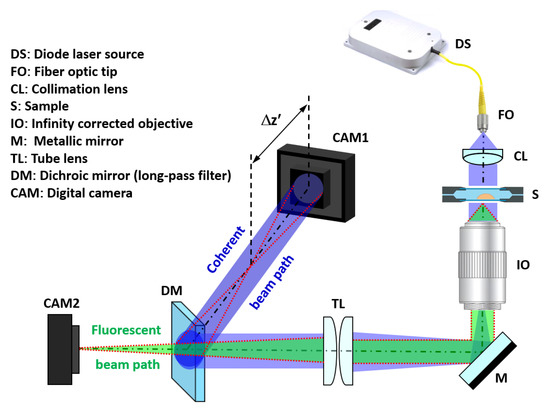

The proposed setup must allow the simultaneous capture of the incoherent light emitted by fluorescence and the coherent in-line Gabor hologram of the sample. To achieve this goal, we put forward a simple system (Figure 1) composed of a Mitutoyo long working distance objective (10X 0.28NA) with coherent illumination coming from a fiber coupled diode laser. Specifically, we have used blue laser light (450 nm) of the multiwavelength illumination source from Blue Sky Research (SpectraTec 4 STEC4 405/450/532/635 nm). Before illuminating the sample, light is collimated using a collimation lens (QIOptics G052010000, achromat visible, 40 mm focal length) module that is mounted along a vertical axis to allow horizontal sample positioning. The sample is held by a translation stage that allows the vertical displacement of the object by an adjustment knob. Light is deflected by a mirror (Thorlabs BB111-E02, broadband dielectric mirror) after passing the microscope objective to a horizontal plane where the rest of the system lies. After being reflected, both fluorescent and coherent beam paths are focused using a tube lens system (Thorlabs TTL180-A, 180 mm focal length) and separated with a long pass filter dichroic mirror (Thorlabs DMLP490, Longpass Dichroic Mirror, 490 nm Cut-On). Thus, hologram and direct fluorescent imaging of the sample can be simultaneously captured using two analogous digital cameras (Basler acA1300, 1280x1024 px, 4.6 µm pizel size) connected to the visualization software. To easily understand the optical layout, the coherent beam path has been included in 3D perspective at Figure 1 to clearly identify its positioning along the horizontal plane.

Figure 1.

Optical arrangement of the proposed system. The blue beam represents the coherent laser light, whereas the green one is the fluorescent emission. The red dashed line represents the ray tracing of the image at the output plane where the camera for FI (CAM2) is placed and from where is generated according to the optimal defocus distance necessary for a proper phase reconstruction.

Another key aspect of the assembly is that, in order to induce a Gabor hologram recording scheme, the camera (CAM1) must be axially displaced from the image plane. This defocus distance is not arbitrary and has to be calibrated in order to obtain good results (discussed in Section 3.1.1). Once the hologram is recorded, it is digitally propagated by the use of the convolution method applied to the diffraction Rayleigh–Sommerfeld integral [33]. Here, the diffraction integral is numerically computed using three digital Fourier transformations as

where is the numerically propagated complex amplitude wave field at the object plane, FT is the numerical Fourier transform operation (implemented by fast Fourier transform (FFT) algorithm), is the amplitude at the recording plane coming from the recorded intensity distribution (in-line Gabor hologram), is the impulse response of free space propagation, are the spatial coordinates, and d is the propagation distance. Equation (1) can be simplified by defining the Fourier transformation of the impulse response as . Thus, the calculation of the propagated wave field is simplified to

2.2. Calibration Targets and Imaging of the Samples

For the calibration of both imaging modes (FI and QPI), we have also used amplitude and phase USAF test targets. In particular, in phase imaging calibration we used different USAF-style phase resolution tests of the quantitative phase target from Benchmark Technologies (www.benchmarktech.com/quantitativephasemicroscop), having nominal heights of 50 µm, 100 µm, and 150 µm. In the case of the FI calibration, an USAF positive resolution test target (amplitude test target) from Edmund Optics was used. In order to produce the fluorescence emission, a drop of fluorescein was deposited on top of it and subsequently covered with a coverslip.

Imaging of the samples after positioning CAM1 at the optimal defocus distance consists of focusing the FI mode in CAM2 by adjusting the vertical adjustment knob of the micrometric translation stage. In this way, the hologram will be captured by CAM1 having the optimal defocus for a proper phase reconstruction. Regarding the process of acquisition, as the light emitted during the fluorescence process is much dimmer than the one coming from the coherent imaging path, it will need a long exposure time to get enough intensity images. Specifically, all the images corresponding to the FI mode (CAM2) have been acquired during 100 ms integration time, whereas the holographic ones (CAM1) are obtained using a much shorter integration time (around 0.1 ms but dependent on the laser intensity). However, to synchronize both imaging modes (same snapshots at same time) while not producing huge amounts of data in the holographic recordings, the acquisition frame rate has been fixed to the more restrictive one, that is, 10 fps coming from CAM2.

2.3. Preparation of Fluorescence Micro-Beads and Living Samples

Micro-beads are an excellent sample for calibration of both cameras due to the simplicity of working with them and their well-defined shape and fluorescence properties. We used a 10-micron diameter fluorescent microsphere suspension (Thermo scientific G1000) with maximum excitation/emission wavelengths of 468/508 nm, respectively, so they can be excited with the 450 nm laser light and will emit above 490 nm, which is the cutoff wavelength of the used bandpass filter. These beads are distributed in a liquid solution, but the density is too high for phase reconstruction using Gabor holography (which requires a weak diffracting object). Hence, we prepared a wide range of samples, adding different quantities of distilled water in order to obtain a proper bead density. Subsequently, these samples were introduced by pipetting and sealed in 20 µm thickness counting chambers in order to prevent aqueous medium evaporation.

Human sperm cells and water suspended microorganisms were used as living samples for testing our proposed imaging platform. In particular, lab-made cultures were grown at room temperature in a container filled with water and some plant residues. To produce fluorescent emission, a general eye drop solution fluorophore for ocular use (minims fluorescein sodium 2% provided by BauschLomb) were added in every test. However, because the used fluorophore usually works in combination with tears, it was diluted in a water solution of 1:10 before being spread on the samples. Next, biosamples were dropped into an object holder by pipetting 10 to 15 mL from the Eppendorf where samples were prepared (diluted or directly extracted from the main culture solution) and were sprinkled with one load of the water solution with fluorescein. In some cases, the samples were prepared in conventional microscope slides, so they were covered with a coverslip before being placed in the sample stage.

3. Results

3.1. Phase Imaging Calibration

3.1.1. Defocus Distance

As we noted previously, one important step to achieve high quality phase images is to select an appropriate defocus distance (). Because precisely varying the position of the camera in a microscope embodiment is a complicated task, we decided instead to vertically shift the object by a certain amount . Note that both distances can be related through the square of the objective magnification (M) as . However, obtaining the precise defocus distance experimentally is not a trivial procedure because it depends on the specific objective characteristics (beam divergence and distances hard to measure with accuracy). Hence, we performed the following analysis using the 150 nm—thickness USAF-style phase resolution test: we varied z in the range 300 µm z < 1800 µm using steps of 50 µm and numerically computed the best in-focus image for each distance. A sweep was previously done to qualitatively check which is the best resolution zone. The experimental methodology for the phase target consisted of placing the target in the microscope stage and focusing on the sample plane by vertically moving the test using the coarse/fine adjustment knob of the micrometric translation stage. This defined the regular imaging condition in the microscope layout and would be our starting point () from which defocus distances were produced using the vertical micrometric adjustment of the microscope’s sample stage. Note that we did not consider negative defocus distances due to worse performance in phase reconstruction [32].

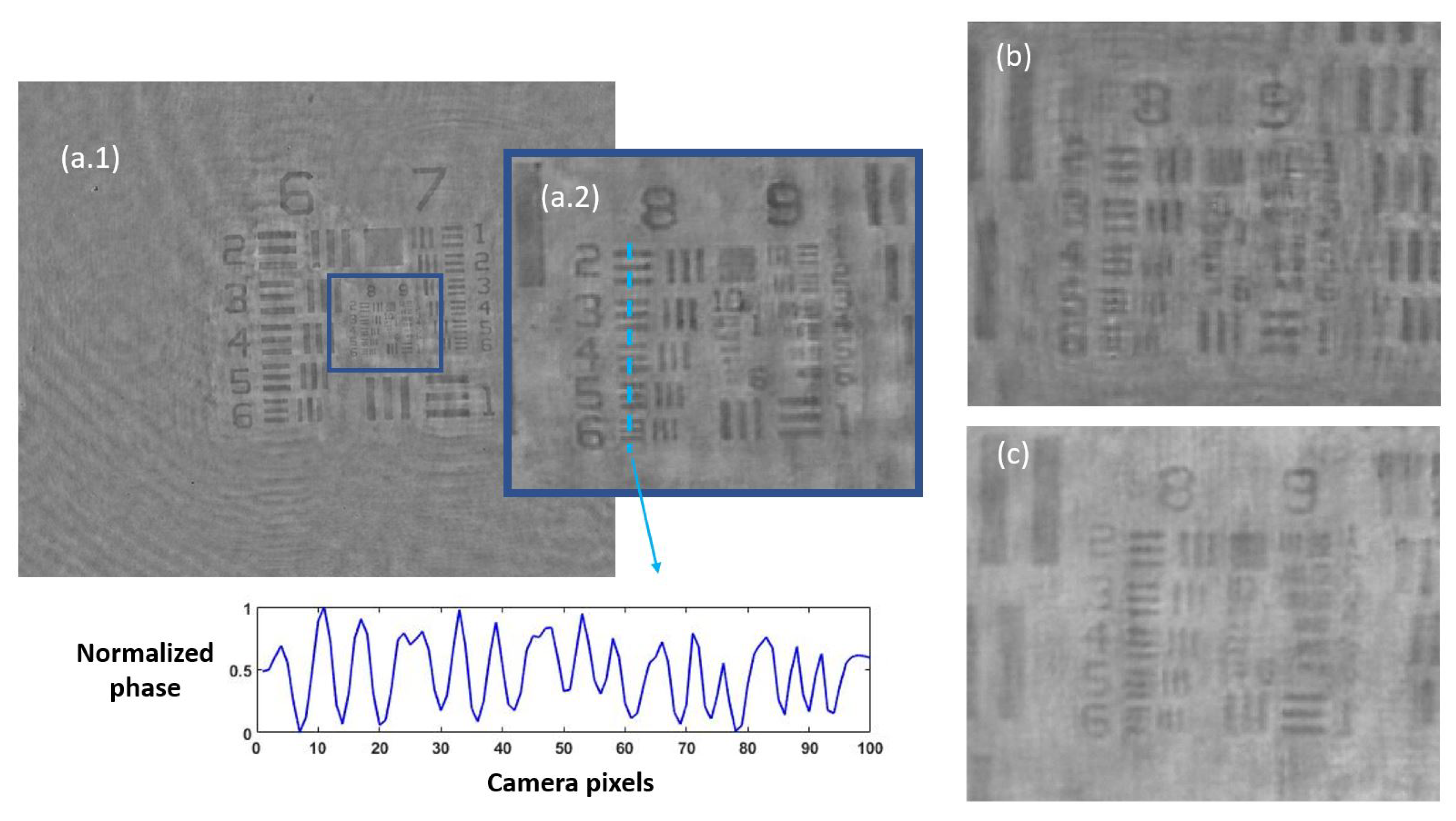

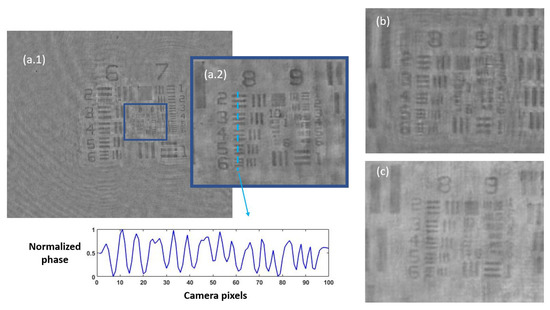

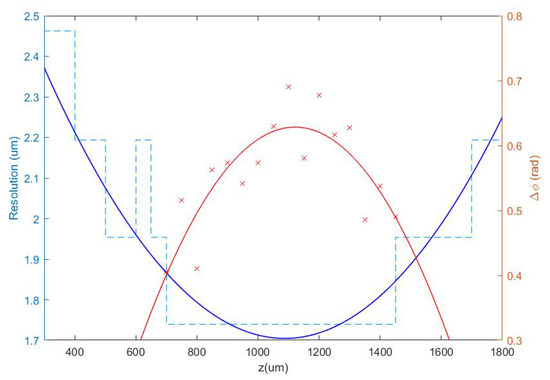

Once the set of different reconstructed phase USAF target images from each defocus distance was obtained, we could easily get the effective resolution as the last resolved element for each in-focus image. Equation (2) represents how to numerically propagate the recorded hologram to focus the sample plane. We implemented this algorithm in MATLAB software by varying the propagation distance (d in Equation (2)) and checking the visual quality of the retrieved image. For this demonstration, we did not include any technique (iterative algorithm for twin image mitigation, averaging for noise reduction, etc.) for improving the reconstructions. Moreover, the sample was simply focused by visual criterion looking at the reconstructed images, although some automation can be implemented in the reconstruction process. Thus, three cases are exemplified in Figure 2 for best defocus distance (around 1100 µm), 300 µm, and 1800 µm. Figure 3 shows the results of this analysis by plotting resolution vs. defocus distance. We can see an optimal range for between 700 and 1450 µm where the resolution was maximal (group 9, element 2 defining a resolution limit of 1.74 µm). If we were above or below this defocus range, resolution started to get worse (group 9 was not resolved), as we can see in Figure 2b,c.

Figure 2.

Phase reconstructed images of the USAF test for three different defocus distances: (a.1) presents the obtained image from best defocus distance, whereas (a.2) shows a noise-filtered magnification of the central area with the finest details of the test. The blue dashed line represents the zone where the contrast parameter was computed, showing the horizontally averaged and normalized phase value across the group at the bottom picture. In addition, (b,c) present magnifications of the central area of the USAF test for a defocus distance of 300 µm and 1800 µm, respectively, showing worse reconstructed images.

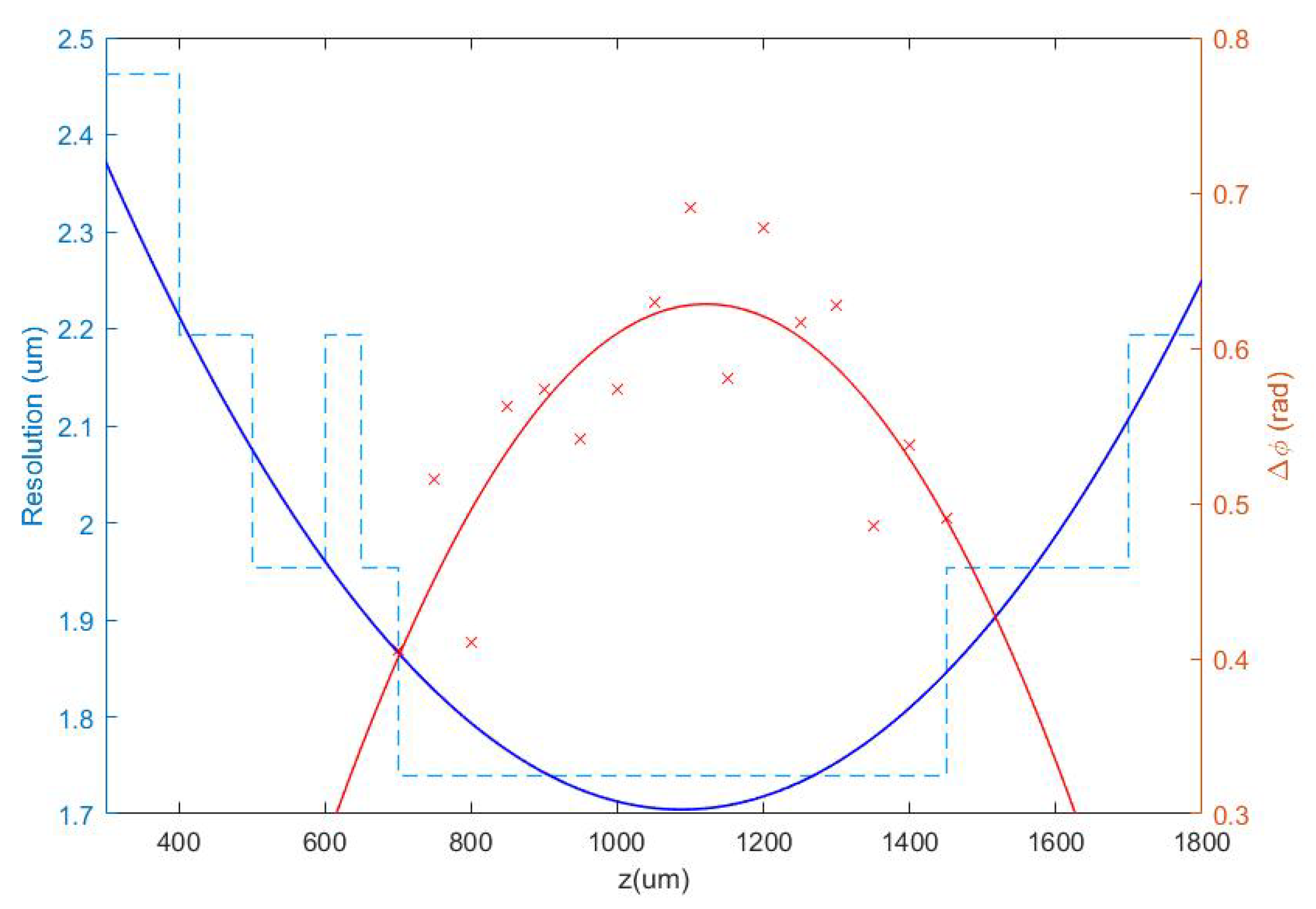

Figure 3.

Representation of the resolution obtained from the analysis of the phase test images for different defocus distances in the range 300 µm z < 1800 µm (blue dashed line), as well as a contrast parameter calculated in the range where the resolution limit is achieved (red crosses). Continuous lines show quadratic polynomial fits to the respective data.

At this point, we chose a defocus distance around 1100 µm in the center of the flat region of best resolution limit (minimum value of the blue plot in Figure 3). Notice that there is some tolerance around this value from which we will not lose resolution due to small mismatches in the defocus distance of the camera. In order to obtain a more quantitative result and to support the best defocus distance driven by the resolution criterion analysis, it is possible to include another parameter in the picture: the phase amplitude value defined as . Thus defined, is a kind of contrast value coming from the phase step produced by the test target, which is supposed to be maximum at the best defocus distance. We have computed it for group 8 of the best reconstructed in-focus images for each defocus distance. Through the red plot in Figure 3, we can see the computed contrast values depending on the defocus distances. Despite the fact that the dispersion of points was quite large, they showed a behavior similar to the resolution criterion analysis, being maximum around the same defocus value. For this reason, we chose 1100 µm as the proper defocus distance for holographic phase reconstruction in this setup, which resulted in a camera shift of = 11 cm at the image space.

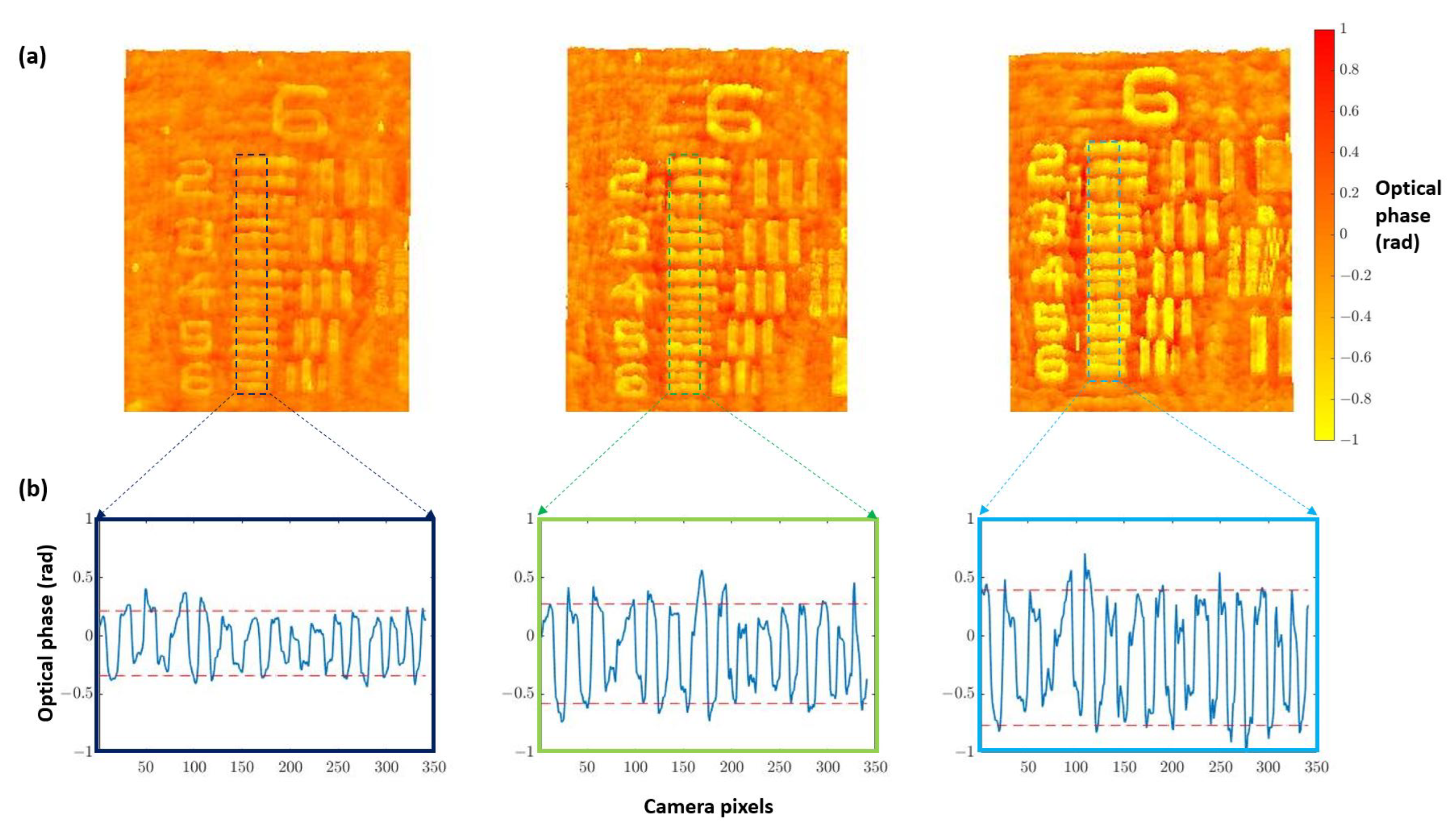

3.1.2. Quantitative Validation

Once the best defocus distance was selected, quantitative validation with experimental results was undertaken. For this purpose, we used the three different heights noted for the USAF phase target to perform quantitative phase imaging (QPI) analysis. Figure 4a shows how the increase in the thickness of the elements suppose an increase in the optical phase. Furthermore, computing the phase step () between elements and the background (Figure 4b) allows for obtaining the height of the phase target () through the following equation:

where is the illumination wavelength (450 nm in our case), is the refractive index of the phase target material (Corning Eagle XG glass, according to https://refractiveindex.info/ accessed on 4 December 2022), and is the refractive index of the air (in this particular case).

Figure 4.

Height analysis performed on group 6 of three different thicknesses (50, 100, and 150 nm nominal heights) for the USAF phase target. (a) Images of the retrieved phase distributions by numerical propagation of the different test targets. (b) Phase profile computed along the dashed marked regions corresponding with elements 2 to 6. Phase step values increase from left image (50 nm) to the right one (150 nm), and they are retrieved as the gap between red dashed lines at (b) that represents the average of the maximum and minimum peaks of the retrieved optical phase.

Results of this analysis are shown in Table 1, where the height of the phase target obtained from Equation (3) is compared with the value provided by the manufacturer (not the nominal one but the measured one). We can see a good agreement for the two thicker values (100 and 150 nm heights) where the expected measured value is inside the error provided by the presented quantitative phase characterization. However, the measured QPI value is higher in comparison with the one reported by the manufacturer for the lowest target height value (50 nm). We think this is due to the noise coming from the in-line geometry due to the presence of twin image disturbance and coherent artifacts that are present when implementing a Gabor in-line configuration. All these drawbacks are also responsible for the deviation between real and measured values in the other two characterized heights. However, and despite all the shortcomings provided by the Gabor layout, we still think it is advantageous because it defines the most simple holographic layout possible as well as retrieves QPI values quite close to the real ones.

Table 1.

Comparison between the thickness of the elements () obtained from the phase step analysis () and the values (nominal and measured ones) provided by the manufacturer.

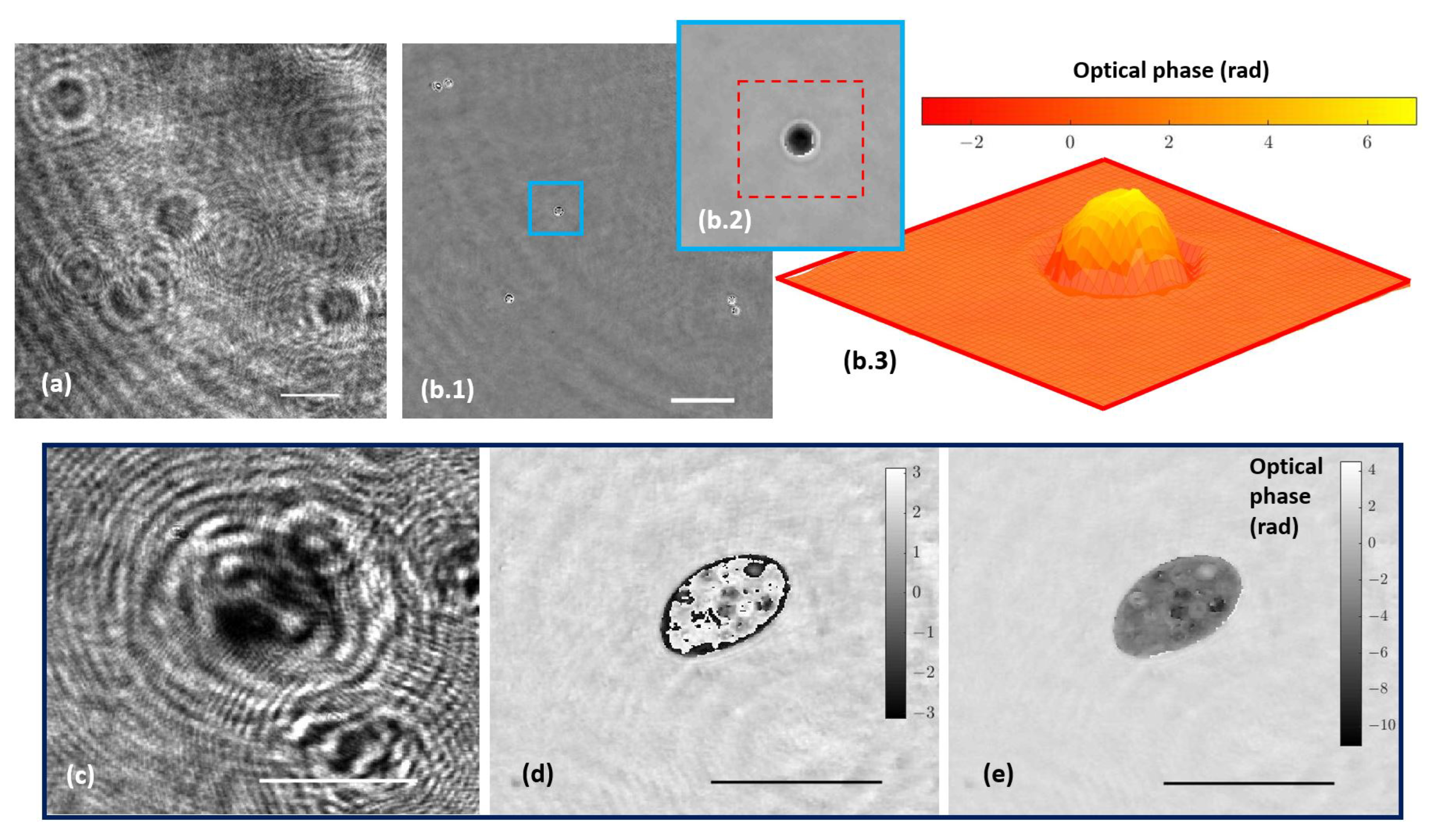

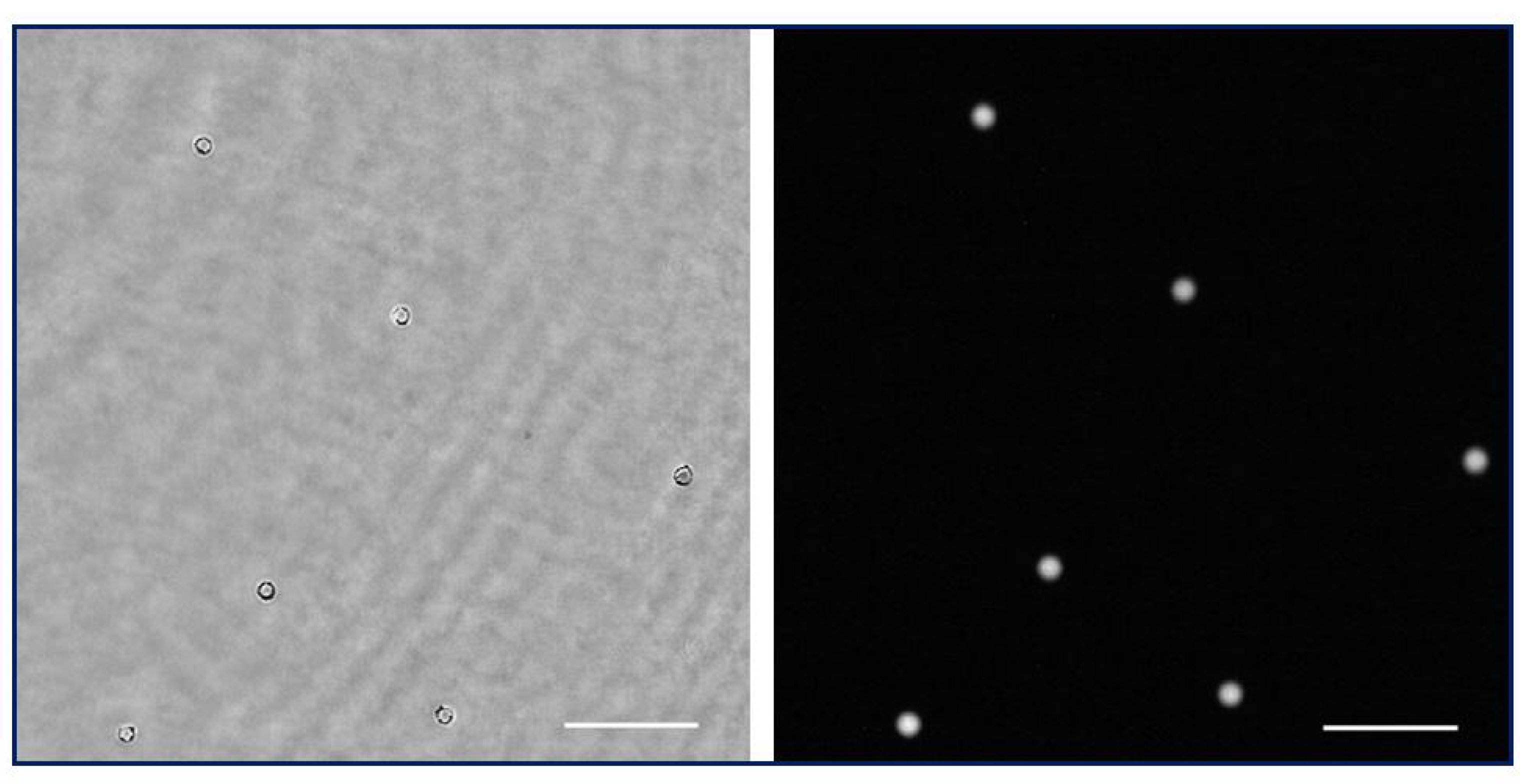

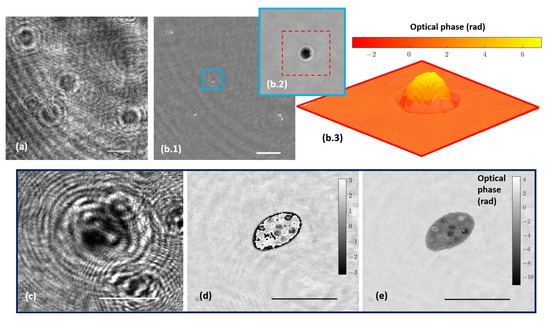

The holographic architecture was extended to more complex samples. Specifically, 10 µm diameter beads as well as water suspended microorganisms were selected to validate QPI coming from the proposed holographic layout. These samples were processed according to Section 2.3. Figure 5a shows the recorded hologram of a low-density beads sample, and Figure 5b includes the retrieved phase distribution by numerically propagating to the in-focus beads plane.

Figure 5.

Holograms and phase distributions of different samples. (a) Hologram of a low-density water suspended beads sample. (b.1) Phase distribution retrieved by numerical propagation using the defocus distance obtained in calibration; (b.2,b.3) show a magnification of a single bead after applying an unwrapping procedure and being represented in two and three dimensions, respectively. (c–e) Representative frames corresponding, respectively, to movies of the hologram, the reconstructed wrapped, and unwrapped phase sequences of a water suspended microorganism (Visualizations 1, 2, and 3 in the Supplementary Materials). Scale bars represent a length of 50 µm.

Because the bead diameter is enough to produce a phase step greater than 2, wrapping effects appear in the reconstructed phase images. Although it is possible to unwrap the phase in order to perform QPI, as Figure 5(b.2,b.3) depict, phase reconstructed from static microbeads shows defects around the bead’s edge. The main reasons for this problem are the low NA of the objective used and the high refractive index of the beads, with the result that only a small cone of light is captured by the objective lens, thus physically preventing an accurate reconstruction. Hence, only a spherical cap of the bead can be reconstructed and the retrieved phase information becomes noisy with lack of information towards the bead’s edge. This effect is less aggressive than in the case of air suspended beads (higher refractive index step) but still enough to cause the beads to appear surrounded by artifacts. On the other hand, Figure 5c–e present a frame of the movies corresponding with the hologram (Visualization 1 in the Supplementary Materials) and the reconstructed wrapped (Visualization 2 in the Supplementary Materials) and unwrapped phase (Visualization 3 in the Supplementary Materials) distributions, respectively, of a living organism in free movement in the aqueous medium where phase imaging allows us to observe some structures inside the organism.

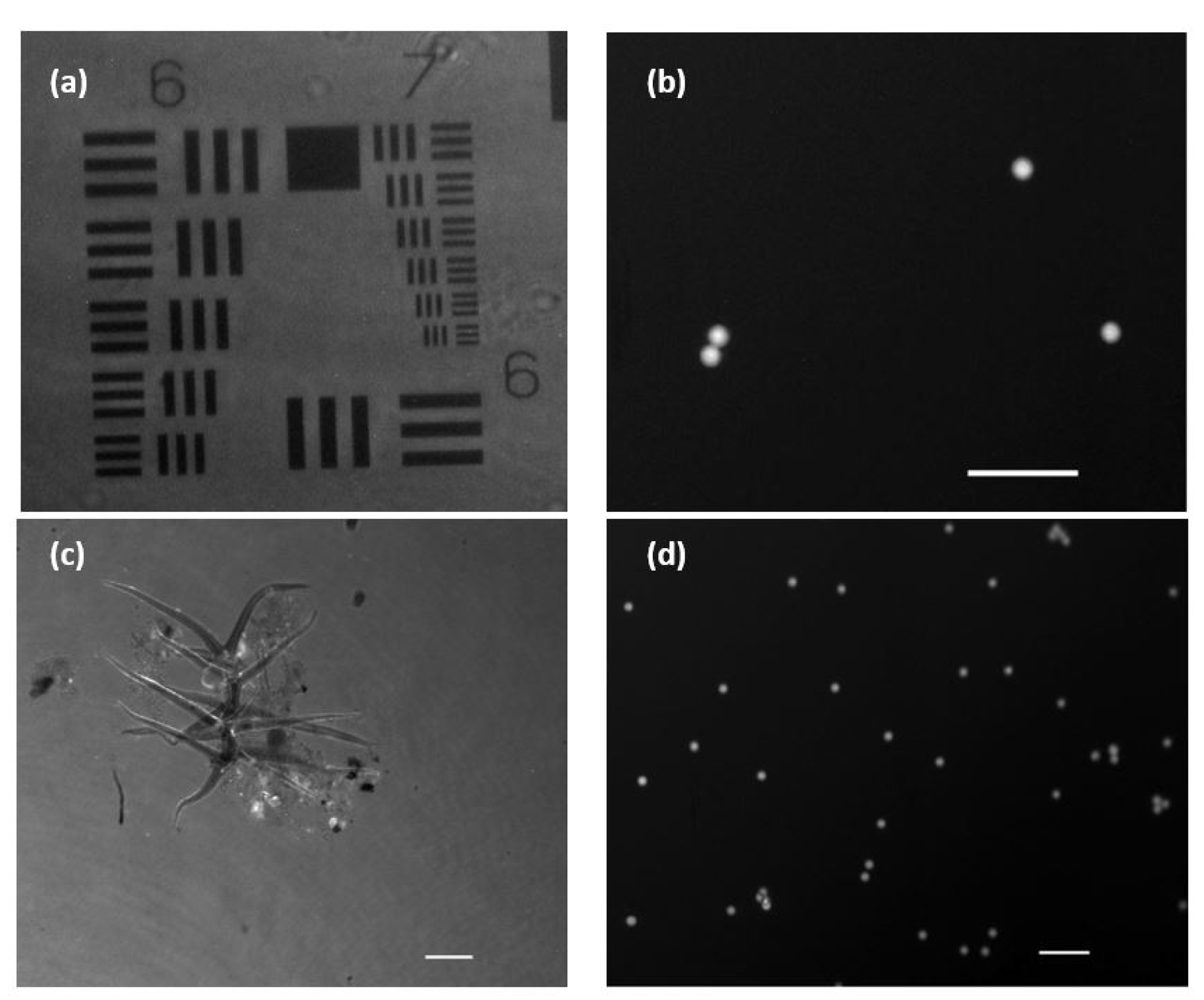

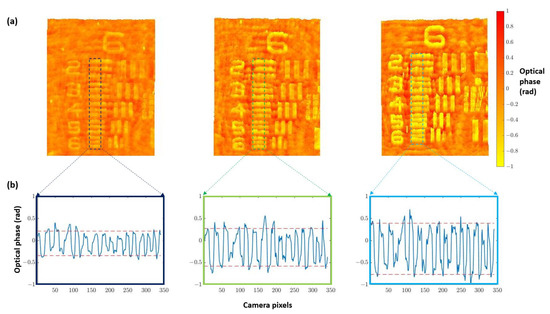

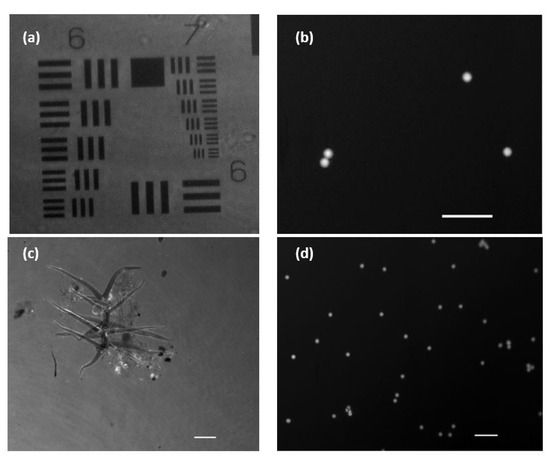

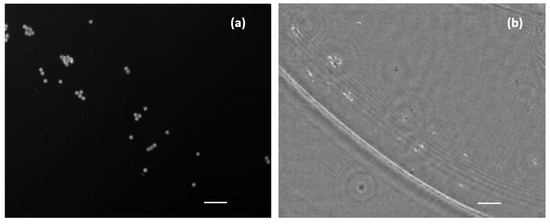

3.2. Fluorescence Imaging Calibration

In order to validate the performance of the FI mode, we used the two different types of fluorophores previously noted: fluorescent beads and eye drop solution. Figure 6a shows the stained USAF positive resolution test target. This way, the image is observed in reverse contrast, as it is the background itself that is emitting the fluorescent light, in contrast to what happens in Figure 6b where the fluorescent beads are the emitters themselves. Figure 6c shows a microorganism moving in the aqueous medium developed under lab conditions (Visualization 4 in the Supplementary Materials). The brighter areas are due to the differential fluorescein adherence to the different structures in the biosample (we can see some more brilliant parts corresponding with fluorescein accumulus at those parts).

Figure 6.

Images and visualizations of fluorescent samples acquired with an exposure time of 100 ms. (a) USAF amplitude test with added fluorescein. (b) Low density sample of fluorescent micro-beads (10 µm in diameter). (c) Stain environment with fluorescein showing a microorganism in free movement (Visualization 4 in the Supplementary Materials). (d) Mid-density sample of fluorescent micro-beads flowing in a aqueous medium (Visualization 5 in the Supplementary Materials). White scale bars are 50 µm length.

In Figure 6d, we can see the movement of water suspended fluorescence beads flowing into the counting chamber (Visualization 5 in the Supplementary Materials). Both movies show that the proposed frame rate (discussed in Section 2.2) is enough to track the sample’s movement (at least considering the movement speeds of the objects here considered) as well as the total suppression of the coherent illumination that is not introducing veil effects. In addition, we computed some quality imaging ratios to evaluate the performance of the FI mode. Thus, signal-to-noise ratio (SNR) and signal-to-background ratio (SBR) are calculated from Figure 6b. This figure contains four fluorescent beads over a dark background, and it is a nice example for computing those parameters in our system. We have applied the following definitions to SNR and SBR [34]:

SNR = (mean signal − mean background)/(STD background), and

SBR = mean signal / mean background.

Using those definitions, we obtained the following results: SNR = 9.2 ± 0.9 and SBR = 2.95 ± 0.15 where the standard deviation error has also been included for every metric. All of them are good results as the SNR value approaches 10 (the signal is one order of magnitude higher than the noise), whereas the SBR is higher than 2 (value from which the sample can be clearly differentiated from the background).

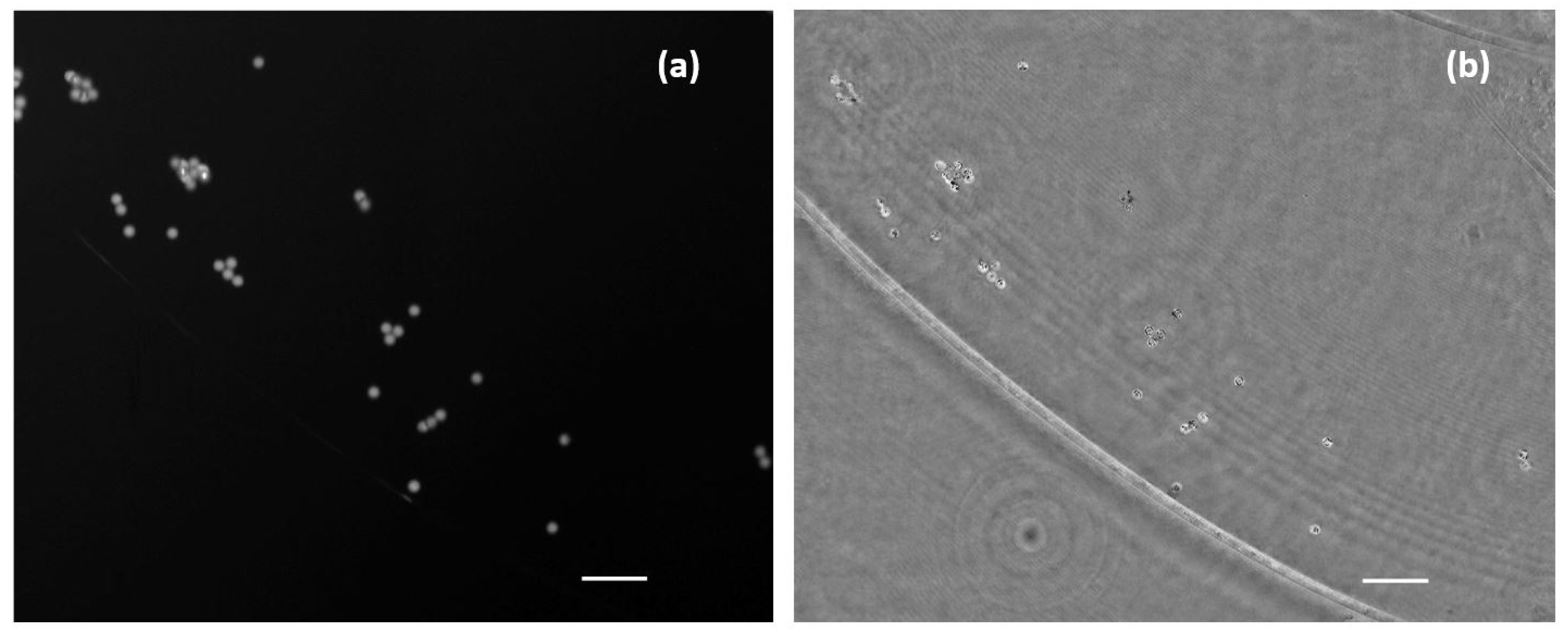

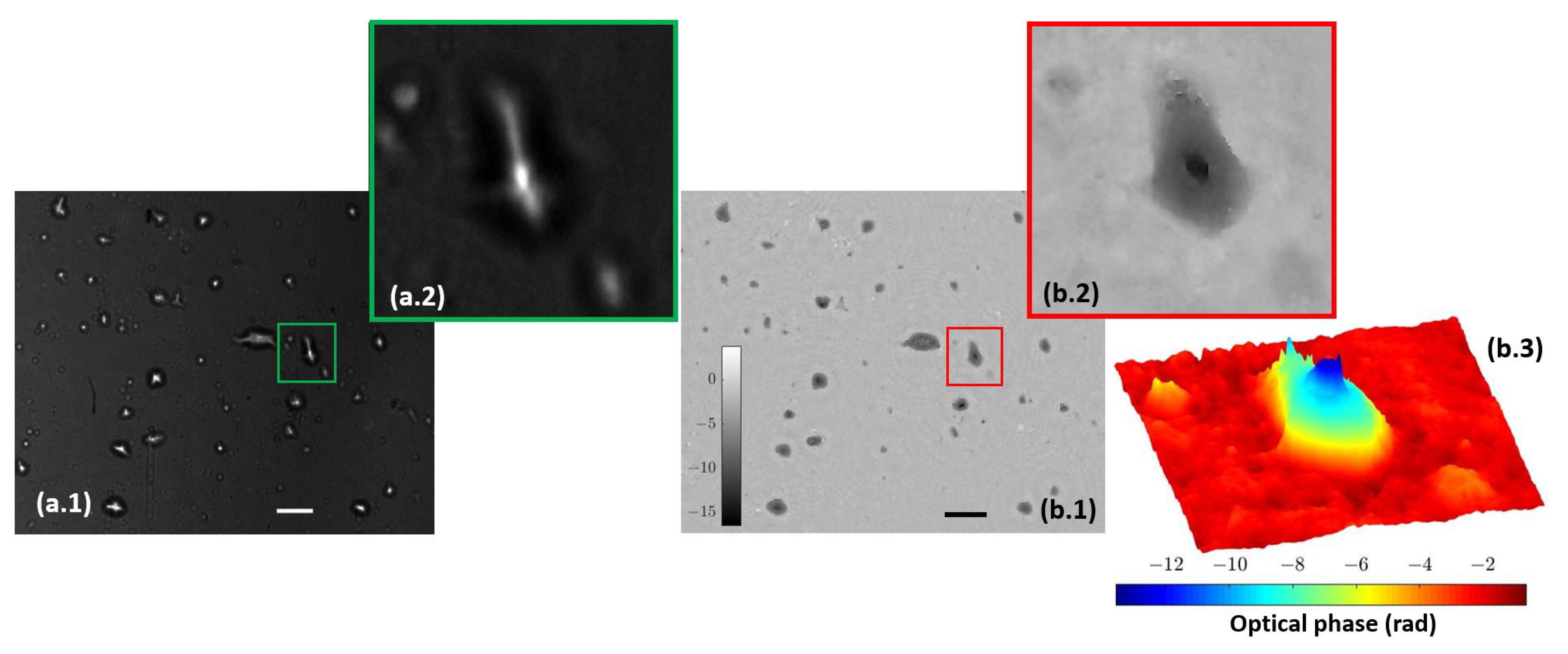

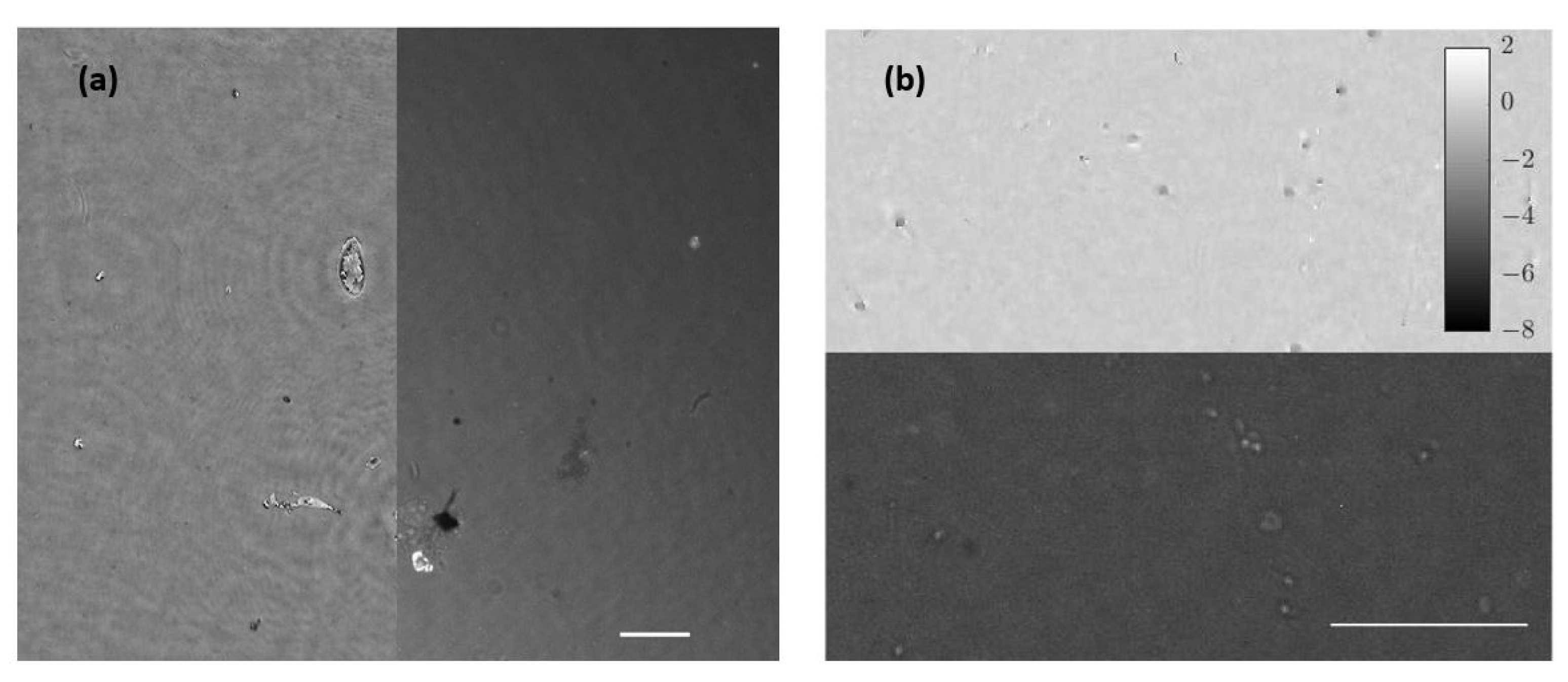

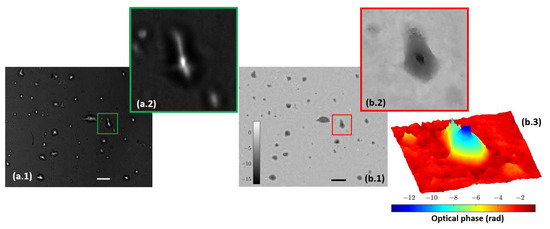

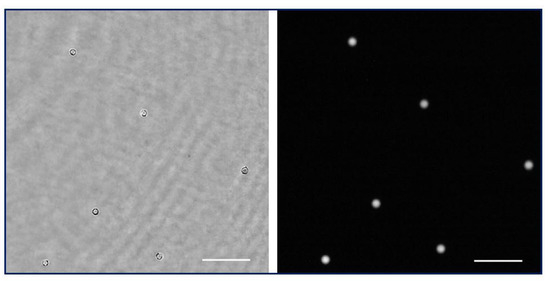

3.3. Combined Station

After independent calibration and validation of our two introduced imaging modalities (QPI and FI), this section presents the results provided by the combined imaging platform where simultaneous dual mode imaging is achieved. As a first case, we use static samples (micro beads inside the counting chamber filled with water and lab-made culture in water suspension). Figure 7 includes the simultaneous reconstructions coming from the two experimental imaging modes when considering the micro spheres. FI shows a perfect contrast visualization mode (bright beads with dark background) where only light from the beads is collected. QPI shows additional information of the sample because we can observe the liquid edge (something fully missed in the FI mode), meaning that the micro bead solution is not completely covering the full FOV. The difference of the information provided by fluorescence and holography is also evident in Figure 8, where the case of the lab-made culture in water suspension is presented. Here, the fluorescein adheres or accumulates to the area of the cell nuclei while phase imaging shows the entire cell spatial distribution. As in the previous case, FI is bright against a dark background, whereas QPI is complementary (clear background with dark cells) to it.

Figure 7.

Simultaneous images of a mid-density water suspended fluorescent beads sample. (a) Direct image from fluorescent emission. (b) Phase distribution retrieved after numerical propagation to the in-focus plane. Scale bars are 50 µm length.

Figure 8.

Lab-made cultures cells stained with fluorescein. (a.1) Full field image of the fluorescent arm; (a.2) includes magnification of a single cell. (b.1) Full field image of the phase distribution; (b.2,b.3) depict the magnification of the same single cell in two and three dimensions, respectively. Scale bars are 50 µm length.

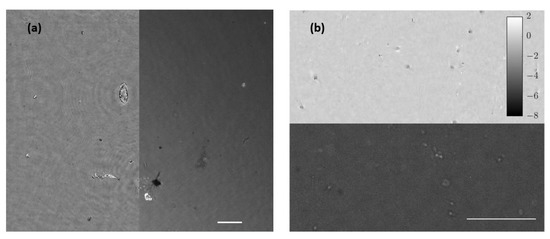

As a second case, living/moving samples are considered under the proposed visualization platform. As previously noted, movies were acquired with a frame rate of 10 fps considering the case of beads, lab-made cultures, and human sperm cells. Results are presented showing fluorescence and holography imaging as separated images (Figure 9) for flowing beads or combining both in the same visualization frame where half FOV is for FI and the other half for QPI (Figure 10) for living cells. Note, in this latter case, some microorganisms and sperm cells are able to cross the separation line between the two imaging modes, allowing visualization with the two imaging modalities inside the same movie. Although the lab-made culture has a number of microorganisms large enough to be observed and perfectly resolved, the resolution of the system is limited to resolve the sperm cells because the spermatozoa tail width is below 1 µm and exceeds the resolution limit; thus, the retrieved phase distribution only clearly shows the head of sperm cells in free movement inside the counting chamber.

Figure 9.

Simultaneous visualization of the phase retrieved by numeric propagation and fluorescence direct image of a low density fluorescent beads sample (Visualization 6 in the Supplementary Materials). Scale bar is 50 µm length.

Figure 10.

Movies of biological samples with the simultaneously combined image of holography and fluorescence. (a) Visualization of water suspended microorganism (Visualization 7 in the Supplementary Materials) where left half FOV shows the retrieved phase distribution and right half FOV includes the fluorescence direct image. (b) Spermatozoa in free movement (Visualization 8 in the Supplementary Materials) where bottom half FOV shows florescence and the upper half FOV includes phase distribution. White bars represent a length of 50 µm.

4. Discussion and Conclusions

In this manuscript we have proposed, calibrated, and validated an extremely simple and cost-effective imaging platform integrating FI and QPI while using a single illumination light for both imaging modalities. The system has been optimized by the calibration of the optimum defocus distance, which allows us to obtain the best retrieved phase distribution from the in-line holographic recording layout. Although calibration was performed for a specific imaging system configuration, it is quite simple to apply similar procedures to variations of the system presented in this manuscript according to the target application. Thus, for instance, it is possible to select a different excitation wavelength depending on the fluorochrome to be used and to select the proper bandpass filter to separate both imaging modalities or to use a higher magnification and NA imaging lens to see smaller details in the samples. Whatever the case, we think the proposed concept defines a cost-effective and compact (it minimizes the number of optical elements) station that can be configurable depending on the type of samples to be imaged, fluorophores to be added, and imaging requirements of the target application.

Worthy of note is the fact that the proposed concept can be perfectly implemented in regular/commercial bright field microscopes because all the needed modifications are implemented prior to the sample plane (inclusion of a coherent illumination source) and after the tube lens system (separation and digital recording of both imaging paths). Thus, it is possible to think of the external insertion of coherent illumination and a compact add-on module to be coupled at the exit port of the microscope embodiment for providing both simultaneous recordings. Because upgrading a regular microscope with other imaging modalities different from the ones provided by the microscope is currently a very appealing research field [32], this possibility will be explored in future work.

Regarding the layout, the introduction of the defocus distance (11 cm in our case) could be a size problem, making the whole system a bit bulky. However, on one hand, it is the simplest way to achieve holographic imaging and, on the other hand, it is possible to reduce such defocus distance by including additional optics at the expense of increasing the price of the imaging platform. In addition, from the retrieved quantitative phase images, we can observe some oscillations in the optical phase profile that comes from coherent noise and the influence of the twin image caused by the in-line Gabor holography scheme. These problems can lead to less accurate results, but there are some solutions that can be implemented. Coherent noise can be strongly mitigated by the use of partial coherent illumination [35], so that there is not so much interference between the waves that are reflected back and forth. Furthermore, to increase the quality of the retrieved phase distributions and minimize the twin image effect, there are some different phase recovery algorithms that raise, for example, the use of several wavelengths during holographic reconstruction [36]. Whatever the case, we have computed the STD values at clear background areas in some of the holographic reconstructions as a metric related with the phase accuracy in the proposed holographic layout. The STD results are as follows: 0.14 rads (Figure 2(a.1)), 0.13/0.17/0.18 rads (Figure 4a—left to right), 0.13 rads (Figure 5(b.1)), and 0.19 rads (Figure 5e), yielding in a averaged STD value of 0.16 rads considering all images. This is a nice value considering the Gabor restriction defined in the holographic architecture. For instance, 0.16 rads are equal to 20 nm when considering height variation in the system (see Table 1) and, although holography can be much more accurate in general, this is a good compromise between accuracy and technique complexity from a experimental point of view.

Note that our analysis considers thin samples. In the case of having volume information or thick samples, two constraints are raised. First, thick/volume samples must still satisfy Gabor’s condition, as our holographic reconstruction is based on a weak diffraction assumption. For this reason, the density of the sample cannot be too high and occlusions (objects or particles projecting their own shadows to each other) should not happen in order to preserve a reasonable Gabor behavior in holography. Second, thick/volume samples will provide more than a single sample section with useful information. Because the optimum defocus distance here derived is for a single plane (let us say the central plane of the thick sample), sections before and after that central plane will not be under optimum defocus distance recording conditions. Fortunately, the proposed system is not too sensitive to this fact because there is a range of defocus distances that seems to apply pretty well in holographic reconstruction ( 700 < z < 1450 µm according to Figure 3), so the presented approach tolerates this fact. Nevertheless, this is only a constraint to be considered in the holographic imaging mode, because fluorescent imaging does not allow for a 3D image over a thick/volume sample and only provides an image of a single image plane section (using a single recording). Therefore, for the limitation introduced by having dual mode imaging of the same section, the proposed approach is perfectly defined.

Moreover, because we are defining different imaging recording conditions on both imaging modes, the magnification provided by each mode is slightly different. FI provides a magnification according to the used infinity corrected microscope, which is close to a 10 × factor as we are using a tube lens with 180 mm focal length. However, QPI defines a Gabor in-line hologram which, in addition, introduces an intrinsic magnification factor as the geometrical projection of the object (in our case, the image provided by our microscope layout) over the camera plane. This geometric magnification () depends on the ratio between the distance from the illumination point source to the digital camera (d) and the distance between the illumination point source to the object (z) in the form of = d/z. In our case, the effective point source position comes from the image of the fiber optic end through the whole system and it is theoretically located at infinity because the tube lens is aimed to provide collimated illumination at the image space (blue beam at Figure 1). Under these conditions, = 1. However, this position can be slightly altered for experimental reasons, so that will be slightly different from 1× because of the definition of = 11 cm. This is the reason why the FOVs provided by both imaging modalities are not exactly the same, as can be appreciated from Figure 8(a.1,b.1).

In the case of the fluorescent imaging mode, we have used a fluorochrome type (fluorescein) that is commonly used in ophthalmology but not in biology or biomedicine. Because of this, maybe the results are not significant from a biological point of view but, in our view, they are good enough to show fluorescent effect and its simultaneous combination with holography in a single imaging platform. A specific fluorophore will have a higher adhesion to the cells structures, showing more significant information as usually happens in biology. However, there aresome fluorophores with potential use in our presented imaging platform concept because excitation and emission are distant enough to be separated with a long-pass filter, thus the proposed concept prevails. Here, the use of a generic fluorophore has the main objective of showing that it is possible to capture the simultaneous image of FI and QPI modes using only one illumination light.

Finally, it is important to highlight that, despite the fact that we proposed a dual mode station mixing holography and fluorescence in optical microscopy (both imaging techniques working simultaneously), the user can decide to work with only one of them. In that case, the imaging platform will act as a regular microscope updated with coherence sensing capabilities, that is, as a holographic microscope restricted to a Gabor domain. In that sense, in-line holography allows QPI at different sample sections. Therefore, as proposed, the imaging platform is capable of simultaneously working with full 3D holographic reconstructions and single plane fluorescent imaging. However, we have in mind the development of novel methodologies in future work involving 3D fluorescent imaging aided by holographic information. Because fluorescence is an incoherent imaging technique, 3D reconstructions for thick samples usually need axial scanning of the input volume. This fact penalizes the temporal resolution of the method, preventing the analysis of fast/dynamic events. We think that holography can help fluorescence to achieve digital 3D reconstructions from a single image plane recording. With this manuscript, we are presenting the first steps towards this direction by introducing the common station for achieving simultaneous fluorescent and holographic imaging in a compact and cost-effective way.

In summary, we have reported on an experimental dual mode imaging platform with simultaneous FI and QPI analysis of static and living samples. The key concept is the use of a single illumination wavelength for providing both the excitation light in FI and the coherent beam for QPI. This characteristic confers the system with some advantages concerning compactness, simplicity, and pricing of the complete imaging platform. For a wide variety of samples, we have provided, first, calibration and analysis of the independent imaging modes and, after that, validation of FI and QPI while working simultaneously in parallel (single shot imaging capability).

Supplementary Materials

The following supporting information can be downloaded at: https://www.mdpi.com/article/10.3390/bios13020253/s1, Visualization 1; Visualization 2; Visualization 3; Visualization 4; Visualization 5; Visualization 6; Visualization 7; Visualization 8.

Author Contributions

All coauthors had contributed, with similar participation, to the following manuscript parts: conceptualization, methodology, numerical processing, validation, data analysis, and data curation. Additionally: writing—original draft preparation, D.A. and V.M.; writing—review and editing, V.M. and J.G.; resources, J.G. and V.M.; supervision, J.G. and V.M.; project administration, J.G. and V.M.; funding acquisition, J.G. and V.M. All authors have read and agreed to the published version of the manuscript.

Funding

Grant PID2020-120056GB-C21 funded by MCIN/AEI/10.13039/501100011033.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Acknowledgments

D.A. acknowledges an internal scholarship at the University of Valencia (Ayudas para la colabroación en la investigación, Curso 21-22, Expediente Num. 1879967) to do this work.

Conflicts of Interest

The authors declare no conflict of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| FI | Fluorescence imaging |

| QPI | Quantitative phase imaging |

| NA | Numerical aperture |

| FOV | Field of view |

| USAF | United State Air Force |

| CAM | Camera |

References

- Vogler, N.; Heuke, S.; Bocklitz, T.W.; Schmitt, M.; Popp, J. Multimodal imaging spectroscopy of tissue. Annu. Rev. Anal. Chem. 2015, 8, 359–387. [Google Scholar] [CrossRef] [PubMed]

- Jena, B.P.; Taatjes, D.J. NanoCellBiology: Multimodal Imaging in Biology and Medicine, 1st ed.; Pan Stanford Publishing: Singapore, 2014. [Google Scholar]

- Zhou, Q.; Chen, Z. Multimodality Imaging: For Intravascular Application, 1st ed.; Springer: New York, NY, USA, 2020. [Google Scholar]

- Sen, H.N.; Read, R.W. Multimodal Imaging in Uveitis, 1st ed.; Springer: New York, NY, USA, 2018. [Google Scholar]

- Suresh, A.; Udendran, R.; Vimal, S. Deep Neural Networks for Multimodal Imaging and Biomedical Applications, 1st ed.; IGI Global: Pensilvania, PA, USA, 2020. [Google Scholar]

- Baz, A.E.; Suri, J.S. Big Data in Multimodal Medical Imaging, 1st ed.; Chapman & Hall: London, UK, 2020. [Google Scholar]

- Mazumder, N.; Balla, N.K.; Zhuo, G.-Y.; Kistenev, Y.V.; Kumar, R.; Kao, F.-J.; Brasselet, S.; Nikolaev, V.V.; Krivova, N.A. Label-Free Non-linear Multimodal Optical Microscopy—Basics, Development, and Applications. Front. Phys. 2019, 7, 170. [Google Scholar] [CrossRef]

- Yu, Z.G.; Spandana, K.U.; Sindhoora, K.M.; Kistenev, Y.V.; Kao, F.-J.; Nikolaev, V.V.; Zuhayri, H.; Krivova, A.N.; Mazumber, N. Label-free multimodal nonlinear optical microscopy for biomedical applications. J. Appl. Phys. 2021, 129, 214901. [Google Scholar]

- Peres, C.; Nardin, C.; Yang, G.; Mammano, F. Commercially derived versatile optical architecture for two-photon STED, wavelength mixing and label-free microscopy. Biomed. Opt. Express 2022, 13, 1410–1429. [Google Scholar] [CrossRef] [PubMed]

- Rodríguez, A.D.; Clemente, P.; Tajahuerce, E.; Lancis, J. Dual-mode optical microscope based on single-pixel imaging. Opt. Lasers. Eng. 2016, 82, 87–94. [Google Scholar] [CrossRef]

- Picazo-Bueno, J.A.; Cojoc, D.; Iseppon, F.; Torre, V.; Micó, V. Single-shot, dual-mode, water-immersion microscopy platform for biological applications. Appl. Opt. 2018, 57, A242–A249. [Google Scholar] [CrossRef]

- Yeh, L.-H.; Chowdhury, S.; Repina, N.A.; Waller, L. Speckle-structured illumination for 3D phase and fluorescence computational microscopy. Opt. Express. 2019, 10, 3635–3653. [Google Scholar] [CrossRef]

- Lee, Y.; Kim, B.; Choi, S. On-Chip Cell Staining and Counting Platform for the Rapid Detection of Blood Cells in Cerebrospinal Fluid. Sensors 2018, 18, 1124. [Google Scholar] [CrossRef]

- Pavillon, N.; Fujita, K.; Smith, N.I. Multimodal label-free microscopy. J. Innov. Opt. Health Sci. 2014, 7, 1330009. [Google Scholar] [CrossRef]

- Krafft, C.; Schmitt, M.; Schie, I.W.; Cialla, D.-M.; Matthaus, C.; Bocklitz, T. Label-Free Molecular Imaging of Biological Cells and Tissues by Linear and Nonlinear Raman Spectroscopic Approaches. Angew. Chem. Int. Ed. 2017, 56, 4392–4430. [Google Scholar] [CrossRef]

- Ryu, J.; Kang, U.; Song, J.W.; Kim, J.; Kim, J.M.; Yoo, H.; Gweon, B. Multimodal microscopy for the simultaneous visualization of five different imaging modalities using a single light source. Biomed. Opt. Express 2021, 12, 5452–5469. [Google Scholar] [CrossRef] [PubMed]

- Park, Y.; Popescu, G.; Badizadegan, K.; Dasari, R.R.; Feld, M.S. Diffraction phase and fluorescence microscopy. Opt. Express 2006, 14, 8263–8268. [Google Scholar] [CrossRef] [PubMed]

- Yelleswarapu, C.S.; Tipping, M.; Kothapalli, S.R.; Veraksa, A.; Rao, D.V.G.L.N. Common-path multimodal optical microscopy. Opt. Lett. 2009, 34, 1243–1245. [Google Scholar] [CrossRef]

- Quan, X.; Nitta, K.; Matoba, O.; Xia, P.; Awatsuji, Y. Phase and fluorescence imaging by combination of digital holographic microscopy and fluorescence microscopy. Opt. Lett. 2015, 22, 349–353. [Google Scholar] [CrossRef]

- Chowdhury, S.; Eldridge, W.J.; Wax, A.; Izatt, J.A. Spatial frequency-domain multiplexed microscopy for simultaneous, single-camera, one-shot, fluorescent, and quantitative-phase imaging. Opt. Lett. 2015, 40, 4839–4842. [Google Scholar] [CrossRef] [PubMed]

- Chowdhury, S.; Eldridge, W.J.; Wax, A.; Izatt, J.A. Structured illumination multimodal 3D-resolved quantitative phase and fluorescence sub-diffraction microscopy. Biomed. Opt. Express 2017, 8, 2496–2518. [Google Scholar] [CrossRef]

- Shen, Z.; He, Y.; Zhang, G.; He, Q.; Li, D.; Ji, Y. Dual-wavelength digital holographic phase and fluorescence microscopy for an optical thickness encoded suspension array. Opt. Lett. 2018, 43, 739–742. [Google Scholar] [CrossRef]

- Dubey, V.; Ahmad, A.; Singh, R.; Wolfson, D.L.; Basnet, P.; Acharya, G.; Mehta, D.S.; Ahluwalia, B.S. Multi-modal chip-based fluorescence and quantitative phase microscopy for studying inflammation in macrophages. Opt. Express 2018, 26, 19864–19876. [Google Scholar] [CrossRef]

- Nygate, Y.N.; Singh, G.; Barnea, I.; Shaked, N.T. Simultaneous off-axis multiplexed holography and regular fluorescence microscopy of biological cells. Opt. Lett. 2018, 43, 2587–2590. [Google Scholar] [CrossRef]

- Kernier, I.D.; Cherif, A.A.; Rongeat, N.; Cioni, O.; Morales, S.; Savatier, J.; Monneret, S.; Blandin, P. Large field-of-view phase and fluorescence mesoscope with microscopic resolution. J. Biomed. Opt. 2019, 24, 036501. [Google Scholar] [CrossRef]

- Mandula, O.; Kleman, J.P.; Lacroix, F.; Allier, C.; Fiole, D.; Hervé, L.; Blandin, P.; Kraemer, D.C.; Morales, S. Phase and fluorescence imaging with a surprisingly simple microscope based on chromatic aberration. Opt. Express 2020, 28, 2079–2090. [Google Scholar] [CrossRef] [PubMed]

- Kumar, M.; Quan, X.; Awatsuji, Y.; Tamada, Y.; Matoba, O. Digital holographic multimodal cross-sectional fluorescence and quantitative phase imaging system. Sci. Rep. 2020, 10, 7580. [Google Scholar] [CrossRef] [PubMed]

- Wen, K.; Gao, Z.; Fang, X.; Liu, M.; Zheng, J.; Ma, M.; Zalevsky, Z.; Gao, P. Structured illumination microscopy with partially coherent illumination for phase and fluorescent imaging. Opt. Express 2021, 29, 33679–33693. [Google Scholar] [CrossRef] [PubMed]

- Quan, X.; Kumar, M.; Rajput, S.K.; Tamada, Y.; Awatsuji, Y.; Matoba, O. Multimodal microscopy: Fast acquisition of quantitative phase and fluorescence imaging in 3D space. IEEE J. Sel. Topics Quantum. Electron. 2021, 27, 6800911. [Google Scholar] [CrossRef]

- Liu, P.Y.; Chin, L.K.; Ser, W.; Chen, H.F.; Hsieh, C.M.; Lee, C.H.; Sung, K.B.; Ayi, T.C.; Yap, P.H.; Liedberg, B.; et al. Cell refractive index for cell biology and disease diagnosis: Past, present and future. Lab Chip 2016, 16, 634–644. [Google Scholar] [CrossRef]

- Lichtman, J.W.; Conchello, J.-A. Fluorescence microscopy. Nat. Methods 2005, 2, 910–919. [Google Scholar] [CrossRef]

- Micó, V.; Trindade, T.; Picazo-Bueno, J.A. Phase imaging microscopy under the Gabor regime in a minimally modified regular bright-field microscope. Opt. Express 2021, 29, 42738–42750. [Google Scholar] [CrossRef]

- Trusiak, M.; Cywińska, M.; Micó, V.; Picazo-Bueno, J.Á.; Zuo, C.; Zdańkowski, P.; Patorski, K. Variational Hilbert quantitative phase imaging. Sci. Rep. 2020, 10, 13955. [Google Scholar] [CrossRef]

- Zhai, J.; Shi, R.; Kong, L. Improving signal-to-background ratio by orders of magnitude in high-speed volumetric imaging in vivo by robust Fourier light field microscopy. Photon. Res. 2022, 10, 1255–1263. [Google Scholar] [CrossRef]

- Perucho, B.; Micó, V. Wavefront holoscopy: Application of digital in-line holography for the inspection of engraved marks in progressive addition lenses. J. Biomed. Opt. 2014, 19, 016017. [Google Scholar] [CrossRef]

- Sanz, M.; Picazo-Bueno, J.A.; García, J.; Micó, V. Dual mode holographic microscopy imaging platform. Lab Chip 2018, 18, 1105–1112. [Google Scholar] [CrossRef] [PubMed]

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).