Abstract

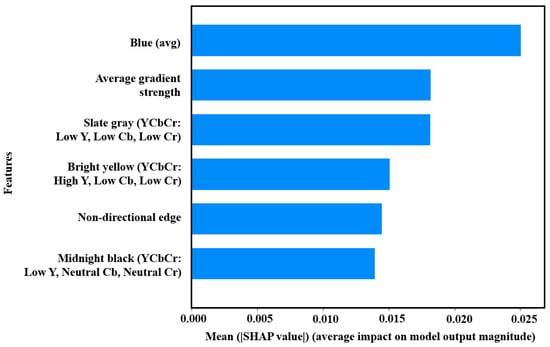

Solar power generation is rapidly emerging within renewable energy due to its cost-effectiveness and ease of deployment. However, improper inspection and maintenance lead to significant damage from unnoticed solar hotspots. Even with inspections, factors like shadows, dust, and shading cause localized heat, mimicking hotspot behavior. This study emphasizes interpretability and efficiency, identifying key predictive features through feature-level and What-if Analysis. It evaluates model training and inference times to assess effectiveness in resource-limited environments, aiming to balance accuracy, generalization, and efficiency. Using Unmanned Aerial Vehicle (UAV)-acquired thermal images from five datasets, the study compares five Machine Learning (ML) models and five Deep Learning (DL) models. Explainable AI (XAI) techniques guide the analysis, with a particular focus on MPEG (Moving Picture Experts Group)-7 features for hotspot discrimination, supported by statistical validation. Medium Gaussian SVM achieved the best trade-off, with 99.3% accuracy and 18 s inference time. Feature analysis revealed blue chrominance as a strong early indicator of hotspots. Statistical validation across datasets confirmed the discriminative strength of MPEG-7 features. This study revisits the assumption that DL models are inherently superior, presenting an interpretable alternative for hotspot detection and highlighting the potential impact of domain mismatch. Model-level insight shows that both absolute and relative temperature variations are important in solar panel inspections. The relative decrease in “blueness” provides a crucial early indication of faults, especially in low-contrast thermal images where distinguishing normal warm areas from actual hotspots is difficult. Feature-level insight highlights how subtle changes in color composition, particularly reductions in blue components, serve as early indicators of developing anomalies.

1. Introduction

The United Nations Sustainable Development Goals (SDGs), especially the seventh goal, emphasize the significance of affordable, reliable, sustainable, and modern energy for all. In this context, solar energy is attracting attention due to its environmental benefits and economic potential. The key driver for this renewable energy technology is the solar cell, which converts sunlight to electricity. However, the widespread adoption of solar energy presents challenges, such as the occurrence of hotspots. Hotspots are localized areas on solar panels that experience significantly higher temperatures than the surrounding areas, leading to a power loss of 25% [1] and potential fire damage. Figure 1 illustrates the thermal images of healthy and defective PV panels with hotspots. Addressing hotspot faults is critical to ensure the long-term performance and reliability of solar power systems. Neglecting these issues results in premature degradation, power output losses, and even safety hazards, ultimately impacting the economic viability and environmental sustainability of solar energy. Industry reports show a rapid rise in the Asia Pacific solar power market, which is expected to be worth USD 178.24 billion by 2034 [2]. Furthermore, PV incentive policies, such as the Sunshine Program, the PV Roadmap toward 2030, and initiatives focused on advancing next-generation solar cells, were overseen by the Ministry of Education, Culture, Sports, Science, and Technology (MEXT) and actively promoted by the Japan Science and Technology Agency (JST) [3]. The prevailing view is that solar-powered electricity generation is coming to dominate the world energy market. If hotspots are not identified at an early stage, internal and external factors will increase their severity, the panel efficiency will drop, and the panel will be damaged. If this persists further, it could result in a fire hazard, which is a serious warning sign for the safety of the system.

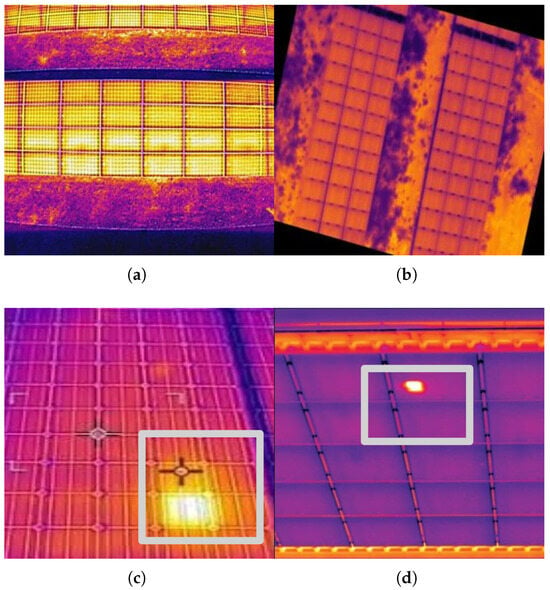

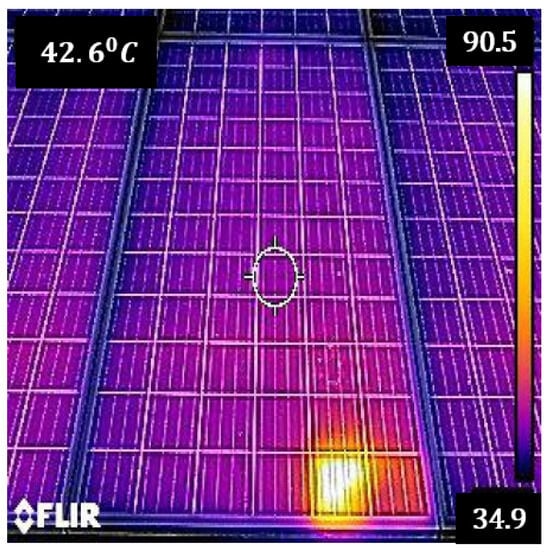

Figure 1.

Thermal images: (a,b) healthy PV panels, (c,d) defective PV panels with hotspots highlighted [4].

Despite recent advances in thermal image analysis, several critical research gaps remain unaddressed. One major limitation is the lack of computational efficiency analysis, as most existing studies emphasize classification accuracy alone. Training and inference times are rarely reported, although they are essential for deployment in resource-constrained environments such as Unmanned Aerial Vehicles (UAVs) [5,6]. Another significant limitation is that traditional Machine Learning (ML) approaches using handcrafted features, such as histograms of oriented gradients and texture, lack interpretability mechanisms and provide no explanation for the predictions of the model. A further critical challenge lies in the high hardware cost and limited scalability of sensor-dependent methods, which, despite achieving high accuracy, rely on additional electrical measurements, thus increasing system complexity and limiting large-scale aerial inspections. Domain mismatch in deep models is another significant limitation. Deep Learning (DL) models based on transfer learning from convolutional neural networks pre-trained on RGB ImageNet (i.e., natural images composed of red, green, and blue color channels, representing how standard cameras capture visual scenes) often perform poorly when applied to IR thermal imagery, with models like VGG-16 achieving around 68% accuracy. However, no domain-adaptation strategies have been explored to address this issue. Lastly, there is no unified benchmarking framework that jointly evaluates classification performance, computational cost, and interpretability using explainable artificial intelligence tools such as SHAP (SHapley Additive exPlanations) and What-if Analysis. These limitations highlight the need for a comprehensive approach to thermal image classification that considers real-world constraints.

This research contributes to the literature in four key areas.

- The first is to evaluate 34 ML vs. 5 leading DL models. The study adopts a global approach, covering 34 ML models for 31 feature combinations. The feature extraction is carried out by the Automatic Content Extraction (ACE) media tool in ML models for five different datasets containing thermal images of solar panels with hotspots. The existing literature has predominantly focused on DL models and electrical parameter measurement of solar panel power generation.

- The second objective is to assess Explainable AI (XAI) interpretability via SHAP and What-if Analysis. The study employs a novel methodology that combines SHAP and What-if Analysis [7,8] to enhance the interpretability of ML models, as part of the XAI [9] framework, in the context of UAV-based photovoltaic (PV) hotspot detection using thermal imagery. This approach examines the linkage between the performance and computational complexity of both ML and DL models for this application. It highlights that feature-extraction-based ML models outperform DL models. In particular, transfer-learning-based CNNs trained on the ImageNet database lack effective generalization for domain-specific tasks like infrared-based solar panel hotspot detection [10,11].

- The third is to evaluate the classification performance and computational efficiency of the top five ML and DL models. The study provides comprehensive insights by plotting and summarizing accuracy and time-scale graphs based on five datasets. The analysis includes the top five high-performing ML models, which are Binary GLM Logistic Regression, Quadratic Support Vector Machine, Medium Gaussian Support Vector Machine, RUSBoosted Trees, and Support Vector Machine Kernel. In addition, five DL models are considered: ResNet-50, ResNet-101, VGG-16, MobileNetV3Small, and EfficientNetB0. Further, the computational efficiency is analyzed, with an emphasis on training and inference times, to determine the feasibility of deployment in resource-constrained environments.

- The final objective is to synthesize the findings and recommend the best-performing ML model suitable for UAV deployment. The research identifies optimal models that balance predictive accuracy, generalization across datasets, and computational resource requirements, thus supporting practical and scalable real-world deployment.

Roadmap of the study.

To support the four research objectives, the manuscript has been carefully structured to ensure logical flow, clarity, and coherence. It begins with a focused literature review, covering thermal image-based feature extraction, ML and DL-based approaches for solar PV hotspot detection, and the limitations of existing methods. This is followed by a section on the significance of the study, which highlights key research gaps. The methodology presents a dual-approach framework: (1) traditional ML pipelines incorporating MPEG (Moving Picture Experts Group)-7 feature extraction and benchmarking of 34 models, and (2) end-to-end DL modeling using five state-of-the-art architectures, both complemented by XAI techniques such as SHAP and What-if Analysis. The results section presents detailed analyses of classification accuracy and computational efficiency (training and testing time) across five datasets, followed by a comparative discussion. The discussion provides local and global model interpretability using XAI methods and offers a comparative evaluation between ML and DL models, including statistical validation. A dedicated section explores the trade-offs between accuracy and resource utilization, reinforcing the practical implications of the findings. Finally, the paper concludes with recommendations for UAV-based deployment, highlighting optimal model choices and future research directions. This structured layout cohesively integrates the diverse components of the study, enhancing readability and guiding the reader clearly through each phase of the investigation.

2. Literature Review

This section provides a focused thematic review of recent methodological works including both classical ML and modern DL approaches applied to UAV-based solar PV hotspot detection. Rather than a generic review, it critically evaluates how these studies perform with respect to model accuracy, feature extraction, and operational constraints like computational efficiency and interpretability.

While many studies achieve high performance on standard metrics such as Accuracy, Precision, Recall, and F1-score, they frequently overlook essential deployment-focused indicators such as training time, inference time, and cross-dataset generalization. This gap limits the practical viability of their methods for real-world, resource-constrained PV monitoring systems.
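As an illustration of reporting conventional and deployment-focused indicators side by side, the following is a minimal sketch in plain Python. The function names (`classification_metrics`, `timed`, `toy_predict`), the threshold classifier, and the sample values are hypothetical placeholders introduced here for illustration; they are not the models or data of the reviewed studies.

```python
import time


def classification_metrics(y_true, y_pred):
    """Accuracy, precision, recall and F1 for binary labels (1 = hotspot)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    accuracy = (tp + tn) / len(pairs)
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    return {"accuracy": accuracy, "precision": precision,
            "recall": recall, "f1": f1}


def timed(fn, *args):
    """Run fn(*args) and return (result, elapsed wall-clock seconds)."""
    start = time.perf_counter()
    result = fn(*args)
    return result, time.perf_counter() - start


def toy_predict(features, threshold=0.5):
    """Hypothetical stand-in for a trained classifier: threshold one feature."""
    return [1 if x > threshold else 0 for x in features]


# Illustrative values only, not taken from any dataset in the paper.
features = [0.9, 0.8, 0.4, 0.2, 0.7, 0.1]
y_true = [1, 1, 1, 0, 0, 0]

y_pred, inference_seconds = timed(toy_predict, features)
report = classification_metrics(y_true, y_pred)
report["inference_seconds"] = inference_seconds
print(report)
```

The same `timed` wrapper can be placed around a training call, so that training time, inference time, and the four standard metrics end up in one record per model and dataset.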

To highlight the novelty and relevance of our proposed work, we organize this review into four focused sub-sections: a study on thermal image-based feature extraction techniques, a review of classical ML-based approaches, a discussion of DL-based techniques, and a concluding sub-section evaluating the significance of these methods in the context of UAV-assisted solar PV hotspot detection. Each sub-section identifies key limitations in previous studies that this work aims to address, especially regarding interpretability, computational efficiency, and deployment readiness.

2.1. Study on Thermal Image-Based Feature Extraction for Solar PV Hotspot Detection

Although the study [12] investigates the Histogram of Oriented Gradient (HOG) and texture features and reports an accuracy of 73.8%, the performance can potentially be improved by incorporating additional features. While high-dimensional feature vectors are often considered challenging, in the domain-specific context of thermal image-based hotspot detection, they can be particularly beneficial. The rich, detailed features help capture subtle thermal variations, indicating that combining more feature types could further enhance model performance in this specialized application.

However, the studies [13,14] further reveal that, although accuracies are analyzed on the basis of feature extraction, neither an evaluation of computational complexity nor an explicit interpretation of the predictions is provided. Both are essential research outputs for subsequent deployment in UAV-assisted solar PV hotspot detection.

2.2. Investigation of ML-Based Techniques for Solar PV Hotspot Detection

The study [15], which employed ML, achieved high accuracies; however, the electrical parameters were measured with sensors. To elaborate, the study [15] examined four classifier families, Decision Tree (DT), Support Vector Machine (SVM), K-Nearest Neighbor (KNN), and Discriminant Classifier (DC), covering 15 classification algorithms under three different setups. Setup 1 utilized only the maximum power point as input data, while setup 2 incorporated two parameters, and . Setup 3 expanded the input dataset to include three parameters, , , and . Each classifier was trained and evaluated across these setups to assess the impact of different input parameter combinations on classification performance, where DT achieved the lowest accuracy at 84% and DC reached the highest accuracy at 98%. To provide further insight, ref. [14] deployed SVM and the Feed-forward Back-Propagation Neural Network with 99% and 87% average accuracy, respectively, which required six input parameters, namely percentage power loss, open circuit voltage, short circuit current, irradiance, temperature, and impedance, for microcrack and hotspot classification in solar PV.

However, the accuracy and reliability of such sensor-based approaches are heavily dependent on the precision of the measuring instruments. Scaling these methods for large solar farms demands a substantial number of additional sensors, which significantly increases costs related to hardware, installation, maintenance, compatibility, and the need for skilled personnel. While these methods may achieve high accuracy, they are often limited by their intrusive nature and lack of scalability. In contrast, the approach presented in this study addresses these limitations by utilizing thermal imaging, a non-intrusive and non-contact technique that enables remote and real-time fault detection [16]. Furthermore, by integrating UAV-based deployment, the proposed method offers improved scalability and mobility.

2.3. Analysis of DL-Based Techniques for Solar PV Hotspot Detection

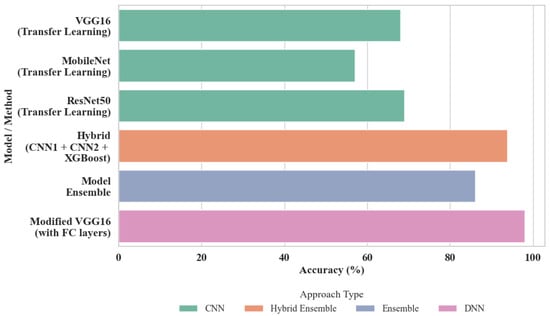

The research [17] investigates pre-trained models designed and trained on the ImageNet [18,19] dataset and finds that they yield lower accuracies when the transfer learning [20] technique is applied to an infrared (IR) image dataset. The reason is that the pre-trained models were trained on RGB (red–green–blue) images, whose physical characteristics differ greatly from those of thermal images. It should be noted, however, that only the parameters of the two added fully connected (FC) layers were updated, since the parameters of the pre-trained models were frozen while training on the IR image dataset. This is reflected in the accuracy comparison of different transfer learning models: VGG-16 achieves 68%, MobileNet reaches 57%, and ResNet-50 attains 69%.

To increase the accuracy, various techniques have been followed, such as hybrid methods [21] and ensembles that combine different models and measurement of electrical parameters [22]. A hybrid scheme integrating three embedded learning models has been proposed for solar hotspot classification. The first model, CNN (Convolutional Neural Network) Model 1, processes images using an improved gamma correction function for preprocessing. The second, CNN Model 2, utilizes IR temperature data from PV modules with a threshold function for preprocessing. The third, XGBoost Model 3, replaces CNN with the eXtreme Gradient Boosting (XGBoost) algorithm, leveraging selected temperature statistics. This hybrid approach achieves 93.8% accuracy in hotspot detection. An ensemble [17] achieves an accuracy of 86%. In addition, Deep Neural Networks (DNNs) using the VGG-16 architecture with modified fully connected layers have been applied for thermal image classification, specifically for detecting hotspots and hot sub-strings [23]. Using the transfer learning technique, this approach achieves a classification accuracy of 98% [24]. The study [25] uses an image dataset with ResNet-50 and reports an 85.37% harmonic mean of Precision and Recall. That work performs two tasks: ResNet-50 serves as the transfer learning model for classifying the type of fault affecting the panel, while Faster R-CNN identifies the region of interest of the faulty panel with a 67% mean average Precision. The edge image features of hotspots were extracted based on the Residual Neural Network (RNN), which is a DL architecture, for image segmentation by Mask R-CNN with 61.64% Precision [26]. In the study [22], a dataset of irradiance, temperature differences, and relative humidity, together with thermal images, was used to classify whether a hotspot exists, while hotspot detection was carried out by random forest. However, these studies do not investigate computational complexity.

As illustrated in Figure 2, previous studies have predominantly focused on reporting accuracy [27,28,29] alone, while neglecting other critical performance indicators such as inference time, training time, and generalization ability across datasets. This limited scope restricts the depth of comparative analysis and impedes a comprehensive evaluation of model robustness and efficiency. The present study addresses this shortcoming by highlighting the importance of multi-metric evaluation and advocating for a more holistic framework in future research to enable meaningful and reliable comparisons.

Figure 2.

Quantitative analysis of model accuracy.

Although the aforementioned DL models demonstrate commendable performance across specific tasks [30,31,32], they often present significant limitations [33] in terms of computational complexity, storage overhead, and interpretability. Many existing studies rely on large, resource-intensive architectures that are unsuitable for real-time UAV deployment scenarios, where lightweight, fast, and energy-efficient models are essential [13,34]. Furthermore, the “black-box” nature of DNNs obscures the underlying decision-making process, reducing transparency and making it difficult to validate or interpret model outputs [35,36]. These gaps underscore the urgent need for robust and interpretable solutions tailored to the domain of solar hotspot detection. In this context, our study addresses these challenges by evaluating both traditional ML and DL models not only based on accuracy but also on training and testing time, generalizability across diverse datasets, and computational efficiency. This multi-metric framework enhances model selection for practical UAV-based applications and promotes explainability, an increasingly critical requirement for responsible AI deployment in energy and sustainability domains.

2.4. Review of Hotspot Detection Techniques in PV Systems

To ensure coherence with the review-based nature of this work, we summarize key review articles related to hotspot detection in PV systems. Ref. [37] presented a comprehensive review of existing hotspot mitigation strategies, comparing them in terms of cost, power loss, and temperature reduction. They also proposed a novel series-resistor-based circuit-level strategy, laying a foundation for practical implementations. The study [38] explored vision-based monitoring systems for PV fault detection, highlighting the role of image processing and AI techniques in identifying anomalies like hotspots, and discussed the scalability and limitations of such systems in large-scale solar farms. Additionally, ref. [39] focused on models for predicting PV cracks and hotspots, emphasizing the role of physical parameters such as microcrack orientation and propagation and underscoring the complexity in accurate long-term performance modeling. These review articles provide a broad understanding of both detection and mitigation efforts in PV systems. However, a gap remains in terms of real-time, hardware-implementable mitigation solutions. Our study addresses this gap by proposing an area-based evaluation framework. This positions our work as a bridge between theoretical mitigation concepts and potential real-time, UAV-deployable solutions.

2.5. Significance of the Study

In summary, although certain DL models offer considerably high accuracy in hotspot detection and classification, their complexity and lack of interpretability are notable drawbacks [33]. Classical ML models provide a complementary advantage by being computationally efficient and easier to understand, especially when combined with informative features. For real-world PV monitoring systems, a balanced approach that incorporates accuracy, efficiency, and explainability is critical for building robust and scalable solutions [14,15].

While prior studies have extensively reported conventional performance metrics such as Accuracy, Precision, Recall, and F1-score, they often lack a combined evaluation of these alongside deployment-critical metrics such as training time, inference time, and generalization ability across datasets. This study aims to fill that gap by adopting a comprehensive performance assessment strategy. In particular, the models were evaluated not only for their predictive performance but also for their efficiency and cross-dataset generalizability, which are vital for UAV-based PV monitoring applications where resource constraints and variability in input data are common.

Moreover, to our knowledge, no previous work has combined MPEG-7-based feature extraction with XAI techniques such as SHAP and a What-if Analysis framework in the context of UAV-assisted PV hotspot detection. By integrating these tools with traditional ML models, this study contributes a novel methodology that not only delivers high performance but also promotes interpretability, low computational complexity, and robust generalization. This positions the work as a meaningful contribution toward practical, explainable, and scalable fault detection in the solar energy domain.
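To make the two interpretability ideas concrete, the sketch below computes exact Shapley values (the quantity that SHAP approximates) by enumerating feature coalitions and performs a single what-if perturbation. It is a toy illustration under stated assumptions: the linear `toy_model`, its weights, the three feature names, and the baseline are hypothetical and are not the trained models or MPEG-7 descriptors of this study. Exact enumeration is shown only because it is easy to verify for a few features; it is not the algorithm used by the SHAP library.

```python
from itertools import combinations
from math import factorial


def shapley_values(model, x, baseline):
    """Exact Shapley attributions of model(x) relative to model(baseline)."""
    n = len(x)

    def value(coalition):
        # Features in the coalition keep their observed value;
        # all others are replaced by the baseline value.
        z = [x[i] if i in coalition else baseline[i] for i in range(n)]
        return model(z)

    phi = [0.0] * n
    for i in range(n):
        others = [j for j in range(n) if j != i]
        for size in range(n):
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                phi[i] += weight * (value(s | {i}) - value(s))
    return phi


def what_if(model, x, index, new_value):
    """Change one feature and return the resulting shift in model output."""
    modified = list(x)
    modified[index] = new_value
    return model(modified) - model(x)


def toy_model(z):
    """Hypothetical hotspot score: less blue chrominance raises the score."""
    blue, red, texture = z
    return -2.0 * blue + 1.0 * red + 0.5 * texture


x = [0.2, 0.8, 0.4]         # illustrative feature values for one image
baseline = [0.5, 0.5, 0.5]  # illustrative reference point

phi = shapley_values(toy_model, x, baseline)
delta = what_if(toy_model, x, 0, 0.1)  # lower the blue feature further
print(phi, delta)
```

For this linear toy model the attributions reduce to weight times deviation from the baseline, and they sum to the difference between the model output at `x` and at the baseline, which is the additivity property that makes Shapley-based explanations easy to audit.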

3. Methodology

3.1. Background and Approach

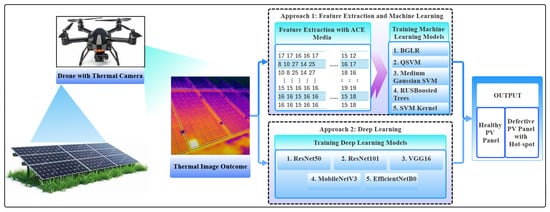

This study focuses on integrating image processing on UAVs for hotspot detection in solar farms.

The aim is to perform an in-depth comparison of performance, resource utilization, and efficiency between two approaches: approach 1, feature-extraction-based ML models, and approach 2, transfer-learning-based Convolutional Neural Network (CNN) DL models. This section begins with an overview of the methodological framework, followed by the presentation of approach 1 and approach 2 in detail.

Approach 1 will discuss in detail the use of ML models based on procedures for extracting valuable image features and benchmarks for identifying top-performing ML algorithms. Subsequently, approach 2 will move towards DL approaches based on selected DL models with end-to-end neural network training without extracting features.

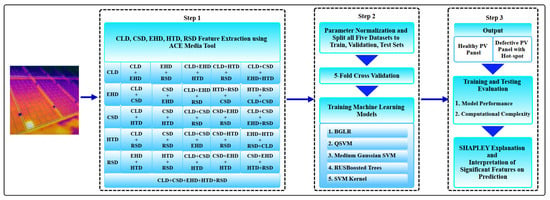

Figure 3 provides an overview of the study, and each component is discussed in detail in the subsequent sections.

Figure 3.

Proposed workflow on thermal UAV imaging and ML-based hotspot detection on solar panels along with the comparison of the DL-based approach.

- (1) Assess Selection of Solar Panel

According to Figure 3, images of monocrystalline solar panels were captured by drone. Monocrystalline panels are widely installed at present because their energy conversion efficiency, in the 18–22% range, is higher than that of polycrystalline panels, in the 15–18% range. This type is also more sensitive to hotspots: because monocrystalline cells are often tightly packed for maximum efficiency, hotspots cause more severe local heating and degradation. Hence, even a small hotspot quickly affects many cells. Furthermore, this panel type is more expensive, so even a single hotspot causes greater financial losses if not detected early.

Monocrystalline solar panels from manufacturers such as SunPower (SunPower Corporation, San Jose, CA, USA), offering 400 W; LG (LG Electronics Incorporated, Seoul, Republic of Korea), with 380 W; Canadian Solar (Canadian Solar Incorporated, Guelph, ON, Canada), with 370 W; JA Solar (JA Solar Technology Company Limited, Shanghai, China), with 370 W; and Trina Solar (Trina Solar Limited, Changzhou, China) provide high efficiency and compact sizes and are widely used in both residential and commercial applications.

- (2) Thermal Data Acquisition using UAV and Dataset Preparation

Solar panels installed in large-scale solar farms are inspected using thermal cameras mounted on UAVs [40,41,42]. For small-scale solar installations, handheld cameras would suffice, but for large-scale solar farms this is not practical. Therefore, it is efficient and effective to use thermal cameras mounted on UAVs [43,44,45], i.e., drones [46], so that all the panels can be monitored in a timely manner. The thermal imaging system used a DJI Matrice 300 RTK drone [47]. It is capable of up to 55 min of flight time, supports a maximum payload of 2.7 kg, and provides centimeter-level positioning accuracy through corrections from the Real-time Kinematic Global Navigation Satellite System (RTK GNSS). RTK GNSS is a highly accurate satellite-based positioning technology that improves the precision of standard GPS by using correction data from a nearby reference station. Thermal data acquisition was performed using the DJI Zenmuse H20T camera [48]. It offers a resolution of 640 × 512 pixels, a spectral range of 8–14 μm, a Noise Equivalent Temperature Difference (NETD) of less than 0.05 °C, and radiometric imaging capabilities, enabling temperature measurements within a range of −20 °C to 500 °C.

Despite the native resolution of the thermal camera used, the datasets employed in this study included resized images with varying resolutions, specifically, 640 × 640 for Datasets 1, 2, and 3; 336 × 256 for Dataset 4; and 480 × 480 for Dataset 5. This design choice was intentional to introduce resolution-level diversity and evaluate model performance under varying image conditions. Such variability simulates real-world scenarios, where image resolutions may differ due to sensor types, preprocessing methods, or deployment constraints. It also contributes to enhancing the robustness and generalization capability of the trained models. Subsequently, images were resized to resolution-specific input dimensions required by DL models using bilinear interpolation, while for ML models, feature-level scaling was applied to ensure compatibility and consistent performance.
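The bilinear interpolation step mentioned above can be pictured with the following minimal pure-Python sketch. It assumes a single-channel image stored as a list of rows and uses a corner-aligned coordinate mapping; image libraries differ in this convention (many use pixel centers), so the exact output values of a real resizing routine may differ. The function name `bilinear_resize` and the 2 × 2 sample tile are illustrative only.

```python
def bilinear_resize(image, out_h, out_w):
    """Resize a 2-D list of pixel values to (out_h, out_w) bilinearly."""
    in_h, in_w = len(image), len(image[0])
    out = []
    for r in range(out_h):
        # Map the output row to a fractional source row (corner-aligned).
        src_r = r * (in_h - 1) / (out_h - 1) if out_h > 1 else 0.0
        r0 = int(src_r)
        r1 = min(r0 + 1, in_h - 1)
        fr = src_r - r0
        row = []
        for c in range(out_w):
            src_c = c * (in_w - 1) / (out_w - 1) if out_w > 1 else 0.0
            c0 = int(src_c)
            c1 = min(c0 + 1, in_w - 1)
            fc = src_c - c0
            # Interpolate along columns on the two neighbouring rows,
            # then interpolate between those two rows.
            top = image[r0][c0] * (1 - fc) + image[r0][c1] * fc
            bottom = image[r1][c0] * (1 - fc) + image[r1][c1] * fc
            row.append(top * (1 - fr) + bottom * fr)
        out.append(row)
    return out


# Illustrative 2 x 2 tile of temperature-like values.
tile = [[0.0, 10.0],
        [20.0, 30.0]]
resized = bilinear_resize(tile, 3, 3)
for line in resized:
    print(line)
```

Each output pixel is a weighted average of its four nearest source pixels, which is why bilinear resizing smooths sharp thermal edges slightly compared with nearest-neighbour sampling.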

For this research, five datasets containing images of solar panels with and without hotspots were used. The solar PVPs (Photovoltaic Panels) with and without hotspots are classified into two groups, namely class 1 (positive) and class 0 (negative), respectively. Whether a panel is healthy or defective is determined using ML and DL models. The models are then evaluated on the training and testing datasets in terms of performance and computational complexity.

- (3) Categorization of Selected Five Datasets

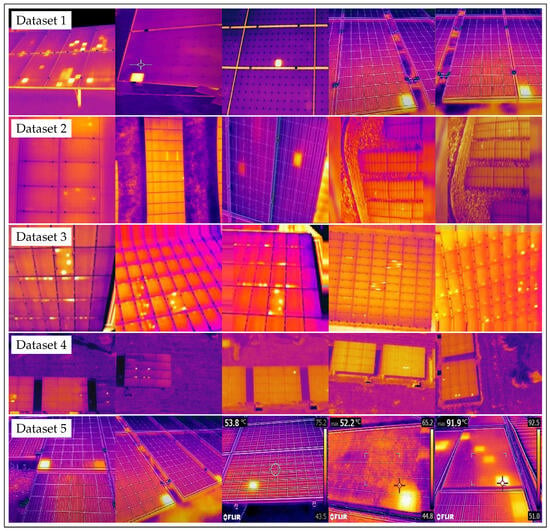

These images vary in capture angle; panel layout; camera position, i.e., the height between the panel and the camera; image quality and resolution; and external environmental conditions such as irradiance level. Figure 4 illustrates five sample images from each of the five datasets.

Figure 4.

Visual samples from dataset 1 to 5 [4].

As shown in Figure 4, row 1 presents images from dataset 1, primarily featuring close-range thermal captures of solar panels. The panels appear large and sharply defined and occupy a significant portion of each frame. This close proximity enhances the visibility of fine details such as hotspots, edges, and surface variations, making the dataset visually rich and ideal for localized inspection and defect identification. Dataset 2, shown in row 2, includes images captured from a higher altitude using drones. The panels appear smaller, and each image tends to cover a wider area, often including multiple solar arrays within one frame. This wider perspective is valuable for large-scale inspections. The individual defects may be less distinguishable due to the reduced resolution at the module level. As for images in row 3, dataset 3 presents images that strike a balance between coverage and detail. These images show medium-range captures, where panels are sufficiently close to observe hotspots while still covering multiple modules in one shot. The dataset is well-suited for applications that require both defect detection and the broader context of the panel arrangement. Dataset 4, as illustrated in row 4, consists of clear and well-composed thermal images captured from an aerial perspective. The solar panels are distinctly visible, often framed with surrounding environmental features such as terrain or vegetation. The clean layout effectively highlights panel structures, supporting accurate visual interpretation and reliable model training. Finally, dataset 5 in row 5 presents thermal images that offer a balanced combination of close-up and broader contextual views of solar panels. The dataset includes diverse scenarios, in which some images focus on individual modules while others capture multiple panels within the same frame. This variety provides a comprehensive perspective on how thermal anomalies appear at different scales and conditions. 
The presence of distinct hotspot patterns across different backgrounds and lighting situations makes this dataset especially useful for training models that require generalization across variable environments. Its diversity also supports the development of diagnostic tools aimed at both localized defect detection and broader system-level performance assessment.

Table 1 and Table 2 define how images have been allocated for different datasets used for classification, along with a summary of dataset partitions and image-quality assessment for solar panel hotspot detection. The images for class 1 (‘with hotspots’) and class 0 (‘no-hotspots’) are equally distributed, with their total counts provided in Table 1. The trained model was evaluated for overfitting and underfitting using ROC (Receiver Operating Characteristic) curves, ensuring it was neither over-trained nor under-trained.
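One common way to read ROC curves for over- and underfitting is to compare the area under the curve (AUC) on training and held-out data. The sketch below computes AUC through its rank interpretation, i.e., the probability that a randomly chosen hotspot image receives a higher score than a randomly chosen healthy one. The scores and labels are invented for illustration, and the comparison of the two AUC values is an assumed reading of the procedure rather than the exact protocol of this study.

```python
def roc_auc(y_true, scores):
    """Area under the ROC curve via pairwise ranking of positives and negatives."""
    pos = [s for t, s in zip(y_true, scores) if t == 1]
    neg = [s for t, s in zip(y_true, scores) if t == 0]
    if not pos or not neg:
        raise ValueError("both classes are required to compute AUC")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0   # positive correctly ranked above negative
            elif p == n:
                wins += 0.5   # ties count as half
    return wins / (len(pos) * len(neg))


# Illustrative classifier scores (higher = more likely hotspot).
train_labels = [1, 1, 1, 0, 0, 0]
train_scores = [0.95, 0.90, 0.85, 0.20, 0.10, 0.05]
test_labels = [1, 1, 1, 0, 0, 0]
test_scores = [0.90, 0.60, 0.40, 0.50, 0.20, 0.10]

train_auc = roc_auc(train_labels, train_scores)
test_auc = roc_auc(test_labels, test_scores)
gap = train_auc - test_auc
print(train_auc, test_auc, gap)
```

A large positive gap between training and test AUC points toward overfitting, whereas low AUC on both sets points toward underfitting; similar values that are both high suggest neither problem.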

Table 1.

Summary of dataset partitions and image-quality assessment for solar panel hotspot detection. Note: BRISQUE, NIQE and PIQE are reported separately for Class 1 (hotspot) and Class 0 (no-hotspot).

Table 2.

Image allocation and core quality metrics for each dataset.

The presence of a hotspot in a solar PV panel represents a localized anomaly rather than a global structural change. Silhouette Score, Separation Ratio, BRISQUE (Blind/Referenceless Image Spatial Quality Evaluator), NIQE (Natural Image Quality Evaluator), PIQE (Perception-based Image Quality Evaluator), FSIM (Feature Similarity Index Measure), SSIM (Structural Similarity Index Measure), PSNR (Peak Signal-to-Noise Ratio), and MSE (Mean Squared Error) are commonly used dataset and image evaluation metrics.

- Evaluation of Datasets using Non Reference-based Image Quality Metrics

To evaluate the quality and discriminative capacity of the image datasets used in this study, quantitative metrics, including the Silhouette Score, Separation Ratio, BRISQUE, NIQE, and PIQE, are reported in Table 1. Since the Silhouette Score primarily reflects global similarity within and between clusters, a relatively low Silhouette Score of around 0.2 remains acceptable in this context. This is because the two classes, panels with and without hotspots, differ mainly in localized features such as color intensity or texture. Additionally, a Separation Ratio exceeding 0.6 indicates that although the classes exhibit distinguishable features, they inherently share common base characteristics, as both represent the same type of object, a solar panel. Therefore, complete separation in feature space is not expected.
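As an illustration of why a low Silhouette Score is expected when classes overlap in feature space, the following minimal sketch computes the mean silhouette coefficient with Euclidean distance. It is not the implementation used in the study; the function name and the toy points are assumptions made for illustration only.

```python
import math

def silhouette_score(points, labels):
    """Mean silhouette coefficient over all samples (Euclidean distance)."""
    clusters = {}
    for p, lab in zip(points, labels):
        clusters.setdefault(lab, []).append(p)
    if len(clusters) < 2:
        raise ValueError("need at least two clusters")

    scores = []
    for p, lab in zip(points, labels):
        own = clusters[lab]
        if len(own) == 1:
            scores.append(0.0)  # usual convention for singleton clusters
            continue
        # a: mean distance to the other members of the same cluster
        a = sum(math.dist(p, q) for q in own) / (len(own) - 1)
        # b: smallest mean distance to the members of any other cluster
        b = min(
            sum(math.dist(p, q) for q in members) / len(members)
            for other, members in clusters.items() if other != lab
        )
        denom = max(a, b)
        scores.append((b - a) / denom if denom > 0 else 0.0)
    return sum(scores) / len(scores)

# Toy data (hypothetical): two well-separated groups vs. two interleaved groups.
separated = silhouette_score([(0, 0), (0, 1), (10, 0), (10, 1)], [0, 0, 1, 1])
interleaved = silhouette_score([(0, 0), (1, 0), (0.5, 0), (1.5, 0)], [0, 0, 1, 1])
print(separated > interleaved)  # True
```

Classes that share most of their structure, as hotspot and no-hotspot panels do, behave more like the interleaved case than the separated one.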

In addition to clustering metrics, three non-reference image quality assessment techniques, BRISQUE, NIQE, and PIQE, were utilized. These metrics estimate image quality and give quantitative scores without requiring a reference image, making them suitable for evaluating real-world, variably acquired datasets. BRISQUE assesses spatial natural scene statistics to detect distortions, NIQE estimates naturalness using statistical models trained on high-quality images, and PIQE estimates perceptual quality by identifying visually degraded regions.

These metrics were included to assess the integrity and variability of the five distinct datasets used in the study. Their application is crucial for understanding the generalization capability of ML and DL models trained for hotspot detection. By quantifying dataset quality and inter-class separability, these evaluations support the development of models that are robust across different environmental conditions and data sources, thereby enhancing the reliability of thermal image-based hotspot detection in photovoltaic systems.

- Evaluation of Datasets using Reference-based Image Quality Metrics

In addition to non-reference image quality metrics, this study also incorporates reference-based evaluation methods, including FSIM, SSIM, PSNR, and MSE, as in Table 2. FSIM assesses how well critical image features such as edges and textures are preserved, offering a perceptually relevant evaluation of structural fidelity. SSIM compares images based on luminance, contrast, and structural information, reflecting human visual perception of image similarity. PSNR quantifies image quality by measuring the ratio between maximum signal power and noise, while MSE calculates the average squared difference between corresponding pixels in original and test images. These metrics are essential in hotspot detection, where subtle thermal variations must be preserved for accurate anomaly localization. High PSNR values indicate clearer images with minimal distortion, which is critical for effective model training and reliable prediction. PSNR is particularly valuable for identifying datasets with superior visual and structural integrity, especially when variations in sensor resolution, sensitivity, and acquisition protocols affect image quality.
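The relationship between MSE and PSNR described above can be sketched as follows. This is a minimal illustration for 8-bit grayscale images stored as nested lists; the function names and the peak value of 255 are assumptions, not details taken from the study.

```python
import math

def mse(reference, test):
    """Mean squared error between two equally sized grayscale images."""
    total, count = 0.0, 0
    for ref_row, test_row in zip(reference, test):
        for r, t in zip(ref_row, test_row):
            total += (r - t) ** 2
            count += 1
    return total / count

def psnr(reference, test, max_value=255.0):
    """Peak signal-to-noise ratio in dB; infinite for identical images."""
    err = mse(reference, test)
    if err == 0:
        return math.inf
    return 10.0 * math.log10(max_value ** 2 / err)

# Hypothetical 2x2 example: a single pixel differs by 10 grey levels.
reference = [[0, 0], [0, 0]]
distorted = [[0, 0], [0, 10]]
print(mse(reference, distorted))  # 25.0
```

Because PSNR is a logarithmic function of MSE, a lower MSE always gives a higher PSNR for a fixed peak value.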

These reference-based metrics are presented to complement the no-reference assessments and provide a comprehensive evaluation of the five different datasets used in this study. While no-reference metrics such as BRISQUE, NIQE, and PIQE are suitable for evaluating real-world images where ground truth references are unavailable, reference-based metrics offer more precise assessments when clean or ideal versions of the images are available for comparison. By integrating both approaches, the study ensures robust dataset characterization from both perceptual and signal fidelity perspectives. The inclusion of these metrics is critical for understanding how image quality and fidelity vary across datasets, which directly influences the generalization capability of ML and DL models. High variability or distortion in datasets can lead to overfitting or poor model transferability. Therefore, evaluating both perceptual and quantitative image quality helps in selecting datasets that improve model robustness and cross-domain performance in hotspot detection from solar thermal imagery.

Each dataset was divided using a standard training–testing split of 90% to 10%. Columns two and three of Table 1 and Table 2 list how the images of each dataset were allocated for classification.

Dataset 2 preserves more visual details such as rich textures, shadows, and edge variations, as well as diverse capture angles, especially under changing drone heights and lighting. It has excellent class separation and consistently high image quality while retaining fine-grained and structural variations. Dataset 1 has the lowest Silhouette Score and Separation Ratio, which allows for quick model convergence. Dataset 3 offers strong image quality: its FSIM and SSIM values, reported as 0.7048, are relatively high, suggesting that important features like textures and edges are well preserved compared to other datasets. It also has high class separation, which benefits image detail extraction by providing clear, well-defined features that are easier for the model to detect. Dataset 4 provides clear visual features with solid image quality, making training efficient but slightly less complex due to less variability in the capture conditions. Dataset 5 has significant variability in capture conditions, resulting in faster model convergence, but the image quality and class clustering are less stable.

Following dataset collection, two distinct approaches were employed for model evaluation and analysis: feature-extraction-based traditional ML and end-to-end DL using direct input images, which will be broadly discussed in Section 3.2 and Section 3.3, respectively.

3.2. Approach 1: Feature Extraction Based Traditional ML

3.2.1. Method for Extracting Image Features and Modeling Pipeline

The first step in this approach was to extract image features. The MPEG-7 standard is formally referred to as ISO 15938 [49]. The MPEG-7 specifications include a standardized set of descriptors and description schemes for audio, visual, and multimedia content, together with a formal language. The main visual descriptors cover color, texture, shape, and motion, and they rely on supporting elements such as structure, viewpoint, localization, and temporal information. The standardized descriptors include color descriptors (color space, color quantization, dominant color, scalable color, color layout, and color structure), texture descriptors (homogeneous texture, texture browsing, and edge histogram), and shape descriptors (region shape, contour shape, and 3D shape) [22]. In this research, the Color Layout Descriptor (CLD), Color Structure Descriptor (CSD), Edge Histogram Descriptor (EHD), Homogeneous Texture Descriptor (HTD), and Region Shape Descriptor (RSD) are used for feature extraction.

The features of the images were extracted by the ACE (Automatic Content Extraction) Media Tool. It represents an automatic feature extraction approach, as opposed to manual, human-defined feature engineering, where features such as edges, color histograms, and textures are explicitly designed and extracted by a person. ACE is designed to extract structured information from unstructured multimedia data. It uses computer vision and ML techniques to identify and extract meaningful features without requiring manual intervention. Color, texture, and shape features were extracted by this tool. For the color descriptors, color layout and color structure features were extracted, accounting for 12 and 33 parameters, respectively. For the texture descriptors, edge histogram and homogeneous texture features included 80 and 62 parameters, respectively. For the region shape, 35 parameters were considered.

These five descriptors yielded 31 unique feature combinations ($2^5 - 1 = 31$). Based on the number of distinct features each descriptor contains, a total of 222 parameters were generated and evaluated in this study, as illustrated in Figure 5.

Figure 5.

Model-selection pipeline for hotspot detection in Photovoltaic Panels, encompassing thermal image processing, feature extraction using the ACE-tool, model validation, hotspot classification, and post hoc interpretability using SHAP for model explainability.

The “Feature Descriptors” subsection below discusses the feature descriptors in detail. The selected MPEG-7 descriptors, CLD, CSD, EHD, HTD and RSD, provide a well-rounded representation of image content. The color layout and color structure capture both global and local color patterns. The homogeneous texture describes surface textures, while the edge histogram highlights the distribution of edges and structural details. The region shape captures object outlines and shapes. Together, these features cover color, texture, edge, and shape information, making them well suited to accurate image classification and visual content analysis.

- Feature Descriptors

In accordance with the MPEG-7 standard, the YCbCr (Y prime: luminance, chrominance blue, and chrominance red) color space is primarily used for feature extraction due to its ability to separate luminance (Y) from chrominance components (Cb and Cr). This separation is particularly advantageous for image compression, retrieval, and processing, as it reduces redundancy and supports efficient storage. Compared to the RGB (Red, Green and Blue) color space, which combines brightness and color information in each channel, YCbCr offers a more effective framework for image analysis, especially for detecting intensity-based anomalies such as hotspots in solar images.
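To make the luminance and chrominance separation concrete, the sketch below converts a single 8-bit RGB pixel to YCbCr. The coefficients are the widely used full-range ITU-R BT.601 (JPEG/JFIF) weights; the text does not state which YCbCr variant the extraction tool applies, so this choice is an assumption.

```python
def rgb_to_ycbcr(r, g, b):
    """Convert one 8-bit RGB pixel to full-range YCbCr (BT.601 weights, assumed)."""
    y = 0.299 * r + 0.587 * g + 0.114 * b
    cb = 128.0 - 0.168736 * r - 0.331264 * g + 0.5 * b
    cr = 128.0 + 0.5 * r - 0.418688 * g - 0.081312 * b
    return y, cb, cr

# A blue-dominant pixel has high Cb; a red-dominant pixel has low Cb.
_, cb_blue, _ = rgb_to_ycbcr(0, 0, 255)
_, cb_red, _ = rgb_to_ycbcr(255, 0, 0)
print(cb_blue > cb_red)  # True
```

In a thermal color map where cooler regions are rendered in blue and warmer regions in red, a warming region therefore shows up as a drop in the Cb channel even when the luminance Y changes little.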

The CLD in MPEG-7 captures spatial color distribution by using DC coefficients, which represent the average color, and AC coefficients, which represent spatial variation. This enables a compact and effective image representation for retrieval and classification. The CSD uses 32 histogram bins and a quadrant indicator (33 parameters total) to represent localized color presence in a quantized YCbCr space, with values ranging from 0 (absent) to 255 (dominant). EHD represents spatial edge frequencies using 80 bins: five edge types (vertical, horizontal, 45°, 135°, and non-directional) are computed across 16 image sub-blocks, highlighting local edge distribution. HTD captures texture via 62 parameters, representing average intensity, intensity variation, energy, and energy deviation to characterize texture patterns for classification and similarity detection. For the RSD, the ART (Angular Radial Transform) describes image shape using 35 coefficients that are invariant to scale, rotation, and translation, representing the contribution of frequency components to overall shape features.
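The 80-bin layout of the EHD (16 sub-images times five edge types) can be sketched in simplified form as follows. This is not the ACE tool's implementation: each image block is reduced to a single 2 × 2 pixel neighbourhood, the threshold value is an assumed default, and quantization of the bin values is omitted. The 2 × 2 filter coefficients follow the commonly cited MPEG-7 edge filters.

```python
import math

SQRT2 = math.sqrt(2.0)
# 2x2 edge filters as (top-left, top-right, bottom-left, bottom-right)
EDGE_FILTERS = [
    ("vertical",        (1.0, -1.0, 1.0, -1.0)),
    ("horizontal",      (1.0, 1.0, -1.0, -1.0)),
    ("diagonal_45",     (SQRT2, 0.0, 0.0, -SQRT2)),
    ("diagonal_135",    (0.0, SQRT2, -SQRT2, 0.0)),
    ("non_directional", (2.0, -2.0, -2.0, 2.0)),
]

def edge_histogram(image, grid=4, threshold=11.0):
    """Return grid*grid*5 normalized edge counts for a grayscale image (nested lists)."""
    height, width = len(image), len(image[0])
    sub_h, sub_w = height // grid, width // grid
    histogram = []
    for gy in range(grid):
        for gx in range(grid):
            counts = [0] * len(EDGE_FILTERS)
            blocks = 0
            # Walk non-overlapping 2x2 pixel blocks inside this sub-image.
            for y in range(gy * sub_h, (gy + 1) * sub_h - 1, 2):
                for x in range(gx * sub_w, (gx + 1) * sub_w - 1, 2):
                    pixels = (image[y][x], image[y][x + 1],
                              image[y + 1][x], image[y + 1][x + 1])
                    strengths = [
                        abs(sum(p * c for p, c in zip(pixels, coeffs)))
                        for _, coeffs in EDGE_FILTERS
                    ]
                    best = max(range(len(strengths)), key=strengths.__getitem__)
                    if strengths[best] > threshold:
                        counts[best] += 1  # block is assigned its dominant edge type
                    blocks += 1
            histogram.extend(c / blocks if blocks else 0.0 for c in counts)
    return histogram

# Hypothetical 16x16 test pattern of alternating dark and bright columns.
stripes = [[0, 255] * 8 for _ in range(16)]
print(len(edge_histogram(stripes)))  # 80
```

Each group of five consecutive values describes one sub-image, so the descriptor records where in the frame each edge orientation occurs rather than only how often.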

Once the features were extracted, the feature vectors were combined to obtain 31 different combinations, as described in Section 3.2.1 above. Equations (1)–(5) give the feature vectors for CLD, CSD, EHD, HTD, and RSD, respectively.

- Color Layout Descriptor (CLD):

$$\mathbf{F}_{\mathrm{CLD}} = [\,Y_1, \ldots, Y_m,\; Cb_1, \ldots, Cb_n,\; Cr_1, \ldots, Cr_n\,] \tag{1}$$

where $Y_i$ are Discrete Cosine Transform (DCT) coefficients from the luminance channel and $Cb_i$ and $Cr_i$ are DCT coefficients from the chrominance channels, giving 12 parameters in total. This descriptor gives a compact summary of where colors appear in the image.

- Color Structure Descriptor (CSD):

$$\mathbf{F}_{\mathrm{CSD}} = [\,h_1, h_2, \ldots, h_{32},\; q\,] \tag{2}$$

where $h_i$ represents the histogram bin values corresponding to local color structures ($i = 1, \ldots, 32$) and $q$ denotes an additional global color quantization value. Thus, the CSD feature vector consists of 33 parameters in total. This shows how often different colors appear in small regions across the image.

- Edge Histogram Descriptor (EHD):

$$\mathbf{F}_{\mathrm{EHD}} = [\,g_1, g_2, \ldots, g_{80}\,] \tag{3}$$

where $g_j$ represents the local edge histogram values for vertical, horizontal, 45°, 135°, and non-directional edges across subdivided image blocks. This captures the texture and structure of the image based on edge patterns.

- Homogeneous Texture Descriptor (HTD):

$$\mathbf{F}_{\mathrm{HTD}} = [\,f_{DC}, f_{SD},\; e_1, \ldots, e_{30},\; d_1, \ldots, d_{30}\,] \tag{4}$$

where $e_k$ values are energy features, $d_k$ values are deviation features across frequency bands, $f_{SD}$ denotes mean energy deviation, and $f_{DC}$ denotes energy mean, giving 62 parameters in total. This tells us how rough or smooth different areas of the image are.

- Region Shape Descriptor (RSD):

$$\mathbf{F}_{\mathrm{RSD}} = [\,r_1, r_2, \ldots, r_{35}\,] \tag{5}$$

where $r_k$ values are shape-related features, such as Fourier coefficients or moment invariants, used to describe the shape characteristics. This helps understand the shapes of major objects in the image.

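All parameters can be represented in matrix form as Equation (6) for the combination of all five descriptors.

- Combined feature vector

$$\mathbf{F} = [\,\mathbf{F}_{\mathrm{CLD}},\; \mathbf{F}_{\mathrm{CSD}},\; \mathbf{F}_{\mathrm{EHD}},\; \mathbf{F}_{\mathrm{HTD}},\; \mathbf{F}_{\mathrm{RSD}}\,] \in \mathbb{R}^{222} \tag{6}$$

where each block is the feature vector of the corresponding descriptor. The resulting combined feature vector has 222 parameters (12 + 33 + 80 + 62 + 35).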
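The number of descriptor combinations and the length of each combined feature vector can be checked by enumeration. The sketch below is illustrative only; the dictionary and function names are hypothetical, and the CSD is taken as 33 parameters (32 histogram bins plus one quantization value).

```python
from itertools import combinations

# Parameter counts per descriptor (CSD: 32 bins + 1 quantization value).
DESCRIPTOR_SIZES = {"CLD": 12, "CSD": 33, "EHD": 80, "HTD": 62, "RSD": 35}

def descriptor_combinations(sizes):
    """Return every non-empty subset of descriptors with its feature-vector length."""
    names = list(sizes)
    result = []
    for k in range(1, len(names) + 1):
        for subset in combinations(names, k):
            result.append((subset, sum(sizes[n] for n in subset)))
    return result

combos = descriptor_combinations(DESCRIPTOR_SIZES)
print(len(combos))  # 31
print(combos[-1])   # (('CLD', 'CSD', 'EHD', 'HTD', 'RSD'), 222)
```

The last entry is the combination of all five descriptors, whose length matches the 222 parameters of the combined feature vector.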

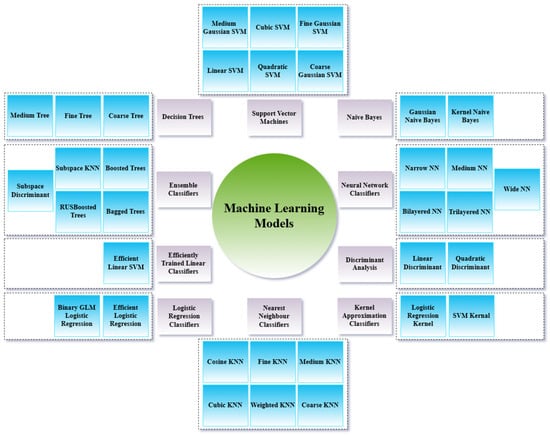

For all combinations, five-fold cross-validation was performed to evaluate the validation accuracy for 34 ML models. Finally, testing was conducted to predict the results of the trained classification model. The evaluated ML models are illustrated in Figure 6.

Figure 6.

ML classifiers grouped by algorithm families.

3.2.2. Benchmarking and Selection of Best-Performing ML Algorithms

In this research, five MPEG-7 visual descriptors were employed for feature extraction: CLD, CSD, EHD, HTD, and RSD. Using the initial version of ACE Media Tool [50], 31 combinations of these features were generated and tested across 34 different ML models, as shown in Figure 6 above. These 34 models were selected to cover a broad spectrum of classification algorithms available in MATLAB R2024a, enabling a fair comparison across linear, nonlinear, ensemble, and instance-based methods suitable for feature-rich image data. From this extensive evaluation, the top five models were identified based on their accuracy and computational efficiency across the five datasets. The models that outperformed others in both accuracy and training and inference time included Binary GLM Logistic Regression (BGLR), Quadratic Support Vector Machine (QSVM), Medium Gaussian Support Vector Machine (Medium Gaussian SVM), RUSBoosted Trees, and Support Vector Machine Kernel (SVM Kernel). All these models achieved testing accuracies exceeding 95%. Among them, the Medium Gaussian SVM demonstrated the best overall performance. Its use of the radial basis function (RBF) kernel allows it to capture complex, nonlinear patterns in high-dimensional feature space, which is a key advantage when dealing with textured and shape-based descriptors. Additionally, the Gaussian SVM offers strong regularization and boundary smoothness control, making it more robust to the noise and overlapping class regions often present in thermal image data [51]. Notably, the feature combination comprising all five descriptors, CLD, CSD, EHD, HTD, and RSD, yielded the highest accuracy, affirming the effectiveness of comprehensive MPEG-7-based feature extraction for classification tasks. This combination has been widely used, consistently demonstrating high accuracy across various image classification applications [52].

3.2.3. Hyperparameter Configuration of Selected ML Models

Table 3 presents key hyperparameters for the five top-performing ML models. Hyperparameters are crucial for controlling the behavior of ML models. They directly influence model performance, training efficiency, and generalization ability. For example, the kernel function in SVM models defines the decision boundary, while the box constraint (C) controls the trade-off between margin size and misclassification. In tree-based models like RUSBoosted Trees, hyperparameters such as the number of learners and tree splits help manage model complexity and prevent overfitting. Regularization strength in logistic regression ensures the model avoids excessive fitting to the training data, promoting better generalization. Tuning these hyperparameters helps achieve optimal model performance on unseen data.

Table 3.

Hyperparameters used by ML models.

In the MATLAB Classification Learner app, the SVM kernel determines how input data is transformed into a higher-dimensional space to enable better class separation. The default kernel is the RBF, known for handling complex, non-linear patterns effectively. Key parameters include the box constraint (C), set to 1 by default, which balances margin width and classification error, and the kernel scale, also commonly set to 1, providing a moderate spread of the Gaussian function. These settings collectively offer a balanced model that generalizes well without overfitting.
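The role of the kernel scale can be illustrated with a short sketch of the Gaussian (RBF) kernel. It assumes the convention documented for MATLAB's SVM functions, in which predictors are divided by the kernel scale before the kernel exp(−‖x − z‖²) is evaluated; the Python function name is hypothetical, and the snippet is not the code used in the study.

```python
import math

def gaussian_kernel(x, z, kernel_scale=1.0):
    """Gaussian (RBF) kernel evaluated on predictors divided by kernel_scale."""
    squared_distance = sum(((a - b) / kernel_scale) ** 2 for a, b in zip(x, z))
    return math.exp(-squared_distance)

# A larger kernel scale makes distant points look more similar (smoother boundary).
narrow = gaussian_kernel([0.0, 0.0], [1.0, 1.0], kernel_scale=1.0)
wide = gaussian_kernel([0.0, 0.0], [1.0, 1.0], kernel_scale=4.0)
print(wide > narrow)  # True
```

Together with the box constraint C, which penalizes misclassified training points, the kernel scale controls how closely the decision boundary follows individual samples.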

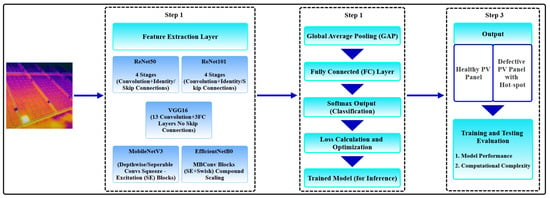

3.3. Approach 2: End-to-End DL

ResNet-50 (Residual Network-50) [53,54], ResNet-101 (Residual Network-101) [55], VGG-16 (Visual Geometry Group-16) [56], MobileNetV3Small [57], and EfficientNetB0 [58] are Convolutional Neural Network (CNN) architectures [59,60,61], a class of DL models; the DL-based approach is illustrated in Figure 7. In general, these models are designed for image processing tasks like classification, object detection, and segmentation [62,63]. They differ in design, number of layers, optimizations, inference speed, memory usage, and number of parameters. DL models are computationally expensive because of their large number of parameters, the complexity of optimization, and the need for substantial memory and processing power during training and inference.

Figure 7.

Functional block diagram for the DL-based approach.

These models were trained on an NVIDIA T4 GPU (Graphics Processing Unit) [64], which is preferred over a CPU (Central Processing Unit) for training and inference. The parallel processing power of GPUs enables them to handle the massive number of operations required in DL efficiently, leading to faster training and inference. The T4 belongs to NVIDIA’s Turing architecture family and has specialized Tensor cores designed to accelerate DL operations, especially matrix multiplications.

Five-fold cross-validation is a robust method to evaluate model performance. The dataset is divided into five equal subsets. The model trains on four subsets and is validated on the remaining one, cycling through all five combinations so that every data point is used for both training and validation. This helps detect overfitting, gives a more reliable estimate of model accuracy, and supports fair comparison across different classifiers.
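The fold rotation described above can be sketched as an index generator. This is a minimal illustration rather than the procedure used in the study; the function name, the shuffling seed, and the sample count in the usage lines are assumptions.

```python
import random

def k_fold_indices(n_samples, k=5, seed=0):
    """Yield (train_indices, validation_indices) for each of k folds."""
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)
    folds = [indices[i::k] for i in range(k)]  # k folds of near-equal size
    for i in range(k):
        validation = folds[i]
        train = [idx for j, fold in enumerate(folds) if j != i for idx in fold]
        yield train, validation

# Hypothetical dataset of 23 samples: every sample is validated exactly once.
splits = list(k_fold_indices(23, k=5))
print(len(splits))  # 5
```

The reported cross-validation accuracy is then the average of the accuracies obtained on the five validation folds.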

3.3.1. Selection Criteria of DL Models and Configuration of Hyperparameters for Selected Models

The five Deep Learning models, VGG-16, ResNet-50, ResNet-101, EfficientNetB0, and MobileNetV3Small, were selected to represent a diverse range of architectural styles, computational complexities, and deployment possibilities for UAV-based applications. VGG-16 serves as a well-established baseline with simple stacked convolutional layers, though it requires high memory and has a relatively slow inference speed. ResNet-50 and ResNet-101 incorporate residual connections to enhance feature learning in deeper networks [54], with ResNet-50 offering moderate speed and memory usage, while ResNet-101 is more computationally intensive. EfficientNetB0 was chosen for its compound scaling of depth, width, and resolution, providing a favorable trade-off between accuracy and inference efficiency [65]. MobileNetV3Small, the lightest model among those considered, is optimized for low-latency and low-memory environments, making it well suited to edge devices and real-time UAV operations [66,67,68]. Other architectures such as Inception and DenseNet were excluded from this study due to their higher memory requirements and marginal performance benefits compared to the increased deployment complexity. Thus, the selected DL models offer a representative spectrum of capabilities suitable for evaluating performance under constrained computational environments. The final goal of this model selection was to assess the suitability of these architectures for the specific application of solar hotspot detection using UAV imagery.

A summary of the parameters, input sizes, and optimizers used for the DL models is provided in Table 4. The “Model” column lists the names of the pre-trained CNNs employed. “Total Params” indicates the complete number of parameters within each model, encompassing both trainable and non-trainable components. “Trainable Params” refers to the subset of parameters that are updated during the training process via backpropagation. Non-trainable parameters are fixed during training and typically originate from frozen layers in transfer learning scenarios. The “Input size” column specifies the dimensionality of the input image required by each model, which in all cases is standardized to 224 × 224 × 3, corresponding to the width, height, and color channels (i.e., RGB), respectively. Finally, the “Optimizer Used” column denotes the optimization algorithm employed during model training; the Adam optimizer was selected for its adaptive learning rate and computational efficiency [69].

Table 4.

Comparison of DL model architectures.

- Hyperparameter Tuning and Validation

The hyperparameters considered for tuning across the DL models include the learning rate, which is varied within the range of and , enabling optimization flexibility. The batch size is explored between 16 and 128, balancing training speed and model performance. The number of epochs is set between 10 and 100, with training terminated upon reaching the minimum validation loss. The dropout rate is adjusted within the range of 0.2 to 0.5, acting as a regularization technique to prevent overfitting by randomly deactivating neurons during training. Finally, the number of trainable layers is determined based on the model architecture, ensuring effective learning while controlling complexity. Each of these hyperparameters is validated based on its impact on the performance of the model on the validation set, with the objective of achieving the optimal trade-off between accuracy and generalization.

The selection of tuning ranges was guided by both prior literature and empirical observations from preliminary experiments. A random search strategy was employed to explore the hyperparameter space efficiently, and the final optimal values were chosen based on the minimum validation loss for each respective model. These choices reflect a balance between training efficiency, generalization, and stability across all five DL architectures presented in Table 4, and they enhance the reproducibility of the tuning process.
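A random search over the stated ranges can be sketched as follows. The batch-size, epoch, and dropout ranges come from the text; the learning-rate bounds must be supplied by the caller, and the values in the usage lines are placeholders, not the study's settings. Restricting batch sizes to powers of two, sampling the learning rate log-uniformly, and the toy `evaluate` function are further assumptions.

```python
import math
import random

def sample_configuration(rng, lr_min, lr_max):
    """Draw one hyperparameter configuration from the tuning ranges."""
    return {
        # Learning rate: log-uniform between caller-supplied bounds (assumed scheme).
        "learning_rate": 10 ** rng.uniform(math.log10(lr_min), math.log10(lr_max)),
        "batch_size": rng.choice([16, 32, 64, 128]),  # powers of two are an assumption
        "epochs": rng.randint(10, 100),
        "dropout": rng.uniform(0.2, 0.5),
    }

def random_search(evaluate, n_trials, lr_min, lr_max, seed=0):
    """Return the (config, validation_loss) pair with the lowest validation loss."""
    rng = random.Random(seed)
    best = None
    for _ in range(n_trials):
        config = sample_configuration(rng, lr_min, lr_max)
        loss = evaluate(config)
        if best is None or loss < best[1]:
            best = (config, loss)
    return best

# Placeholder objective and learning-rate bounds, for illustration only.
toy_loss = lambda config: abs(config["dropout"] - 0.3)
best_config, best_loss = random_search(toy_loss, n_trials=50, lr_min=1e-5, lr_max=1e-2)
```

In practice `evaluate` would train a model with the sampled configuration and return its minimum validation loss, which is the selection criterion described above.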

3.3.2. End-to-End Training Pipeline Without Explicit Feature Extraction

In this approach, the entire model, from input data to output prediction, is trained in one unified process, as opposed to separately training individual components like feature extraction and classification. No manual intervention is involved: the model learns to extract features automatically from the raw solar panel images. In end-to-end learning, the raw input images are fed directly into the model, which automatically learns relevant features at different levels of abstraction. An ROC curve was used to ensure that these models were not over-trained or under-trained. These DL models are designed to learn hierarchical representations of the input data: they first detect basic features like edges, then recognize patterns like textures or shapes, and finally recognize complex objects. However, these models need large amounts of data to learn effectively; without sufficient data, a model may not generalize well. Training large DL models also requires substantial computational resources, such as high-performance GPUs and memory. Finally, end-to-end models, especially Deep Neural Networks, are difficult to interpret because it is hard to identify exactly how the model makes its decisions.

Overall, the study established a structured approach to comprehensively evaluate the performance and computational complexity of ML and DL models for UAV application in solar industry fault identification. Beginning with a high-level block diagram, the workflow was designed to prioritize interpretability, highlighting the most influential factors in model predictions. The analysis then delved into specifics: traditional ML techniques (feature engineering and comparative algorithm analysis) contrasted with DL architectures (automated feature learning and end-to-end training). This dual-path comparison focuses on identifying optimal models for real-world UAV deployment.

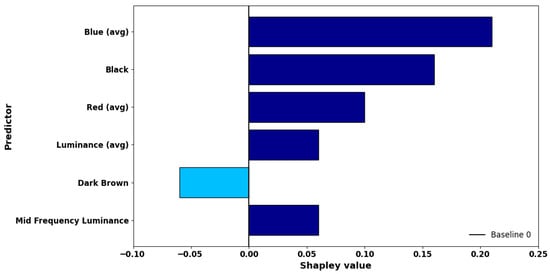

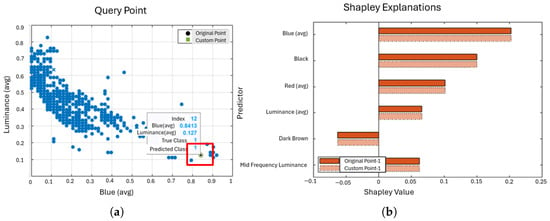

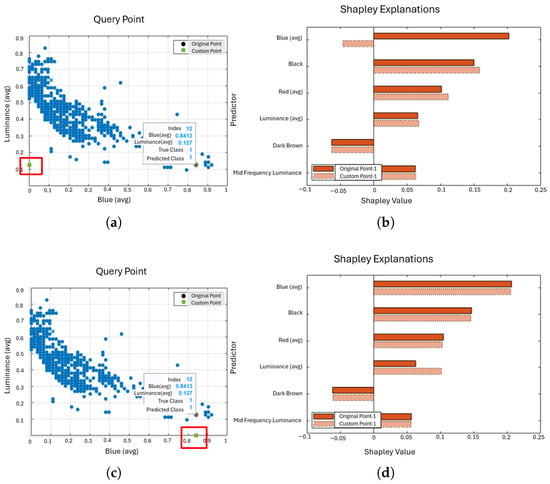

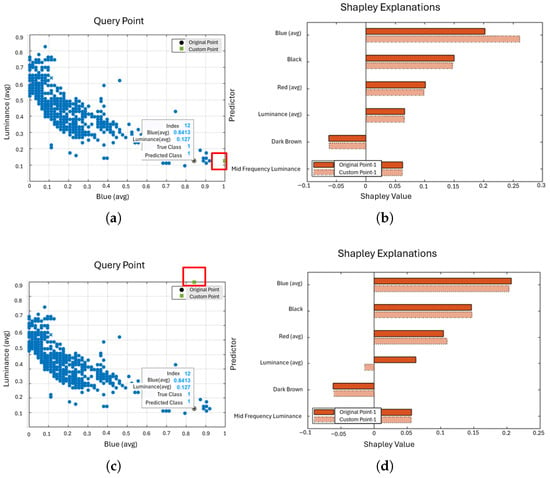

3.4. XAI and What-If Analysis

To address the inherent opacity in DL models, this study integrates XAI techniques, specifically SHAP, to interpret model decisions. SHAP is a unified framework based on cooperative game theory that assigns each feature an importance value for a particular prediction. For the Medium Gaussian SVM model, SHAP values were computed to highlight which image features contributed most strongly to the classification outcome.
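The game-theoretic idea behind SHAP can be illustrated by computing exact Shapley values for a small toy model. This sketch enumerates all feature subsets, which is only feasible for a handful of features; SHAP implementations rely on approximations for models with many inputs, such as the 222-parameter feature vector used here. The toy model, the baseline, and the function name are assumptions made for illustration.

```python
from itertools import combinations
from math import factorial

def shapley_values(predict, instance, baseline):
    """Exact Shapley values of `predict` at `instance` relative to `baseline`."""
    n = len(instance)

    def value(subset):
        # Features in `subset` take the instance value, the rest the baseline value.
        mixed = [instance[i] if i in subset else baseline[i] for i in range(n)]
        return predict(mixed)

    phi = [0.0] * n
    for i in range(n):
        others = [j for j in range(n) if j != i]
        for size in range(n):
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                with_i = value(set(subset) | {i})
                without_i = value(set(subset))
                phi[i] += weight * (with_i - without_i)
    return phi

# Toy model (hypothetical): two additive terms plus one interaction term.
toy_model = lambda x: 2 * x[0] + 3 * x[1] + x[0] * x[2]
phi = shapley_values(toy_model, instance=[1, 1, 1], baseline=[0, 0, 0])
```

The attributions sum to the difference between the prediction for the instance and the prediction for the baseline, and the interaction term is shared equally between the two features involved.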

In addition to static SHAP visualizations, a What-if Analysis was performed. This approach involves controlled perturbation of input feature values to simulate hypothetical changes and observe the resulting model prediction shifts. While the term “What-if Analysis” is often used in causal inference or business analytics, in this context, it refers to feature-level sensitivity testing conducted using SHAP’s dependence and force plots. These plots reveal how slight modifications in key features affect model behavior, enhancing the understanding of model robustness and decision boundaries.

What-if Analysis was performed using MATLAB’s built-in tools for varying parameters and simulating model behavior. This methodology enables both global (summary-based) and local (instance-level) explanations, making it applicable to deployment scenarios where model transparency is crucial, such as solar hotspot classification.
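The perturbation step of a What-if Analysis can be sketched as a one-feature sweep. The study used MATLAB's tools for this; the Python function below and the toy scoring model, in which the hotspot score rises as a blue-chrominance feature falls, are hypothetical stand-ins and not the trained classifier.

```python
import math

def what_if_sweep(predict, instance, feature_index, values):
    """Return (value, prediction) pairs as one feature varies and the rest stay fixed."""
    results = []
    for v in values:
        perturbed = list(instance)
        perturbed[feature_index] = v
        results.append((v, predict(perturbed)))
    return results

# Hypothetical model: score increases as feature 0 (a Cb-like value) drops below 128.
toy_score = lambda f: 1.0 / (1.0 + math.exp(-0.05 * (128.0 - f[0])))

sweep = what_if_sweep(toy_score, instance=[128.0, 40.0], feature_index=0,
                      values=[140.0, 128.0, 110.0])
for value, score in sweep:
    print(value, round(score, 3))
```

Plotting such a sweep for each influential feature shows how far a feature must move before the predicted class changes.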

4. Results

This section assesses traditional ML and DL models based on predictive accuracy and computational efficiency. Performance metrics include training/testing accuracy, Precision, Recall, F1-score, and computational time (seconds), supported by comparative visualizations. All Accuracy, F1-score, Precision, and Recall values are presented as percentages (%) in the figures. Precision evaluates correctness of positive predictions, while Recall measures defect detection capability. The F1-score balances both, which is especially useful for imbalanced data. The computational time is another significant factor that has been evaluated throughout the research.
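For reference, the four metrics can be computed from confusion-matrix counts as sketched below, with the hotspot class treated as the positive class. The function name and the counts in the usage lines are hypothetical and are not results from this study.

```python
def classification_metrics(tp, fp, fn, tn):
    """Accuracy, precision, recall and F1-score from confusion-matrix counts."""
    accuracy = (tp + tn) / (tp + fp + fn + tn)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}

# Hypothetical counts: 90 true positives, 10 false positives,
# 30 false negatives, 70 true negatives.
metrics = classification_metrics(tp=90, fp=10, fn=30, tn=70)
print(metrics["accuracy"])  # 0.8
```

Multiplying each value by 100 gives the percentages shown in the figures.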

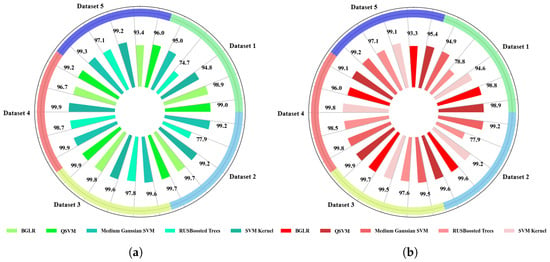

4.1. Comparison of Model Accuracies

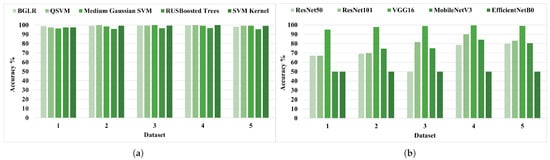

Section 4.1.1 and Section 4.1.2 present performance evaluation metrics for the ML and DL models, respectively. Figure 8, Figure 9, Figure 10 and Figure 11 provide training and testing results for ML models, while Figure 12, Figure 13, Figure 14 and Figure 15 present those for DL models.

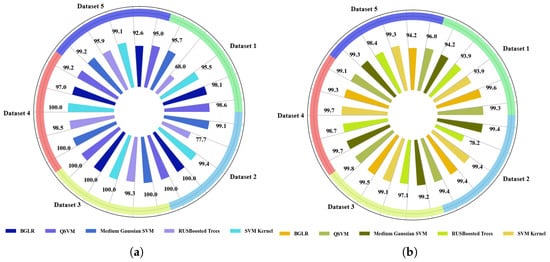

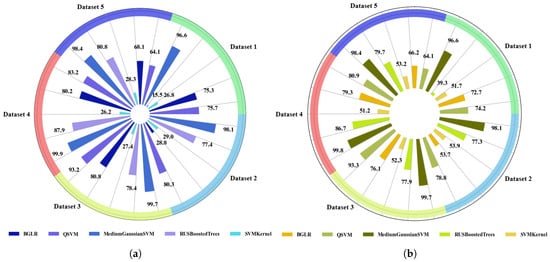

Figure 8.

Performance evaluation metrics for ML models—training: (a) Accuracy. (b) F1-score.

Figure 9.

Performance evaluation metrics for ML models—training: (a) Precision. (b) Recall.

Figure 10.

Performance evaluation metrics for ML models—testing: (a) Accuracy. (b) F1-score.

Figure 11.

Performance evaluation metrics for ML models—testing: (a) Precision. (b) Recall.

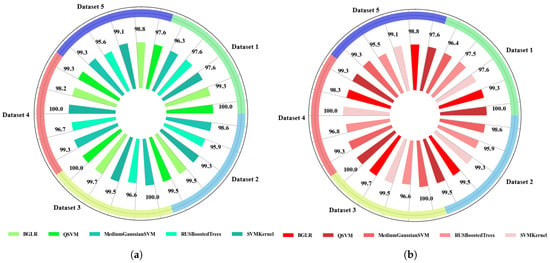

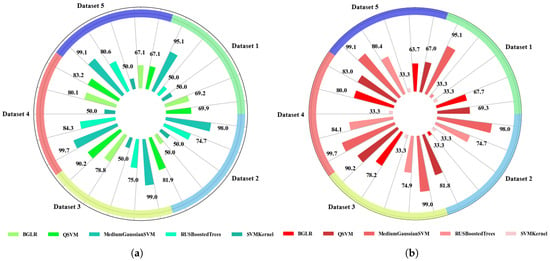

Figure 12.

Performance evaluation metrics for DL models—training: (a) Accuracy. (b) F1-score.

Figure 13.

Performance evaluation metrics for DL models—training: (a) Precision. (b) Recall.

Figure 14.

Performance evaluation metrics for DL models—testing: (a) Accuracy. (b) F1-score.

Figure 15.

Performance evaluation metrics for DL models—testing: (a) Precision. (b) Recall.

4.1.1. Accuracy Analysis in Traditional ML Models with Extracted Features

Of the 31 feature combinations built from CLD, CSD, EHD, HTD and RSD, the combination of all five descriptors (222 parameters in total) gave the highest five-fold cross-validation accuracy across the 34 ML models trained. Among those models, the top five high-performing models, namely BGLR, QSVM, Medium Gaussian SVM, RUSBoosted Trees, and SVM Kernel, were selected and are illustrated by comparative charts. The model performance was analyzed in terms of the training and validation accuracy and the testing accuracy of the five ML models, as shown in Figure 8, Figure 9, Figure 10 and Figure 11.

It is observable that 92% of observations fell within the range of 93.4–99.9%, while only 8% were recorded in the range of 74–80%, indicating a strong tendency toward higher accuracy values, as shown in Figure 8a. F1-score, Precision and Recall are illustrated in Figure 8b, Figure 9a, and Figure 9b, respectively. These metrics follow a similar trajectory, with Precision reaching 100% in certain datasets such as dataset 3 and dataset 4.

All observations fell above 95%, and the Support Vector Machines achieved 100% testing accuracy, according to Figure 10a. The F1-score, Precision, and Recall, illustrated in Figure 10b, Figure 11a and Figure 11b, respectively, exhibit analogous trends, attaining 100% mostly for the Support Vector Machines.

4.1.2. Accuracy Analysis of End-to-End DL

The performance of the model was evaluated based on the training and validation and the testing accuracies of five DL models, as presented in Figure 12, Figure 13, Figure 14 and Figure 15. The model received raw thermal imagery as input.

The analysis revealed that more than 50% of observations fell within the range of 40–80%, while only VGG-16 achieved higher accuracy, according to the data in Figure 12a. The minimum F1-score, 0.222, was recorded for MobileNetV3Small on dataset 1 (Figure 12b), with a corresponding minimum Precision and Recall of 0.155 and 0.393, as illustrated in Figure 13a and Figure 13b, respectively. The resulting performance metrics diverged widely across datasets depending on the DL model used.

It was observed that 56% of observations fell within the range of 50–80%; ResNet-50, ResNet-101, VGG-16 and MobileNetV3Small all exceeded 80% only for dataset 5, as shown in Figure 14a. ResNet-50 maintained a moderate F1-score, Precision, and Recall, as shown in Figure 14b, Figure 15a, and Figure 15b, respectively. Dataset 1 and dataset 2 each had a low F1-score and Precision of 0.333 and 0.250, respectively. The minimum F1-score, 0.333, was recorded by EfficientNetB0. The implemented DL models exhibited significant performance variability across datasets, as measured by key evaluation metrics.

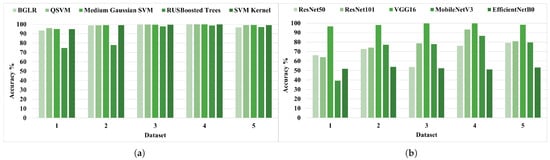

- Accuracy Plots of Training and Testing ML and DL Models

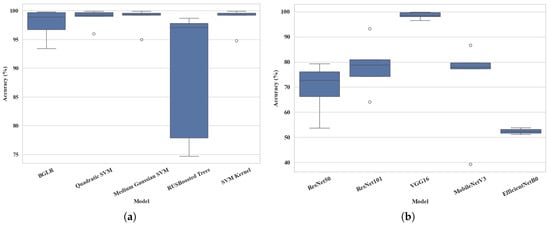

Figure 16 and Figure 17 illustrate a comparative analysis of five ML and five DL models, evaluated under different customized parameter configurations based on accuracies.

Figure 16.

Comparison of accuracies for five ML and five DL models under varying custom parameter settings: (a) ML training and validation accuracies; (b) DL training and validation accuracies.

Figure 17.

Comparison of accuracies for five ML and five DL models under varying custom parameter settings at testing phase: (a) ML testing accuracies, and (b) DL testing accuracies.

According to Figure 16a, ML models show consistently high accuracy in training and validation, suggesting that the models are learning effectively. DL models show more fluctuation in accuracy than ML models: some perform quite well, but many others lag behind, as shown in Figure 16b. Noticeable discrepancies were found between training and validation accuracies, particularly in datasets 1 to 3. ResNet-50 and ResNet-101 achieve high training accuracy but suffer significant drops in validation performance, indicating potential overfitting. In contrast, MobileNetV3Small and EfficientNetB0 demonstrate a better balance, suggesting improved generalization for smaller architectures. The observed variation may stem from the differing capacities of the models to generalize across the datasets.

ML models maintain high accuracy in the testing phase, as illustrated in Figure 17a. This consistency reinforces that the ML models are likely generalizing well and are suitable for deployment in UAV-based solar panel hotspot classification. The observed similarity between training and testing accuracies across all five ML models indicates an absence of overfitting and reflects a strong generalization capability. This consistent performance across multiple datasets suggests that the ML models are not only effective in learning from the training data but also robust and stable when evaluated on unseen data. The ability to maintain accuracy under varying configurations demonstrates their reliability and makes them suitable for practical deployment in scenarios where data diversity and quality fluctuate. According to Figure 17b, the DL models show variability in testing performance. Although some models generalize well, others show performance drops, a variation that may be attributed to differences in how well they capture relevant features from the input images.

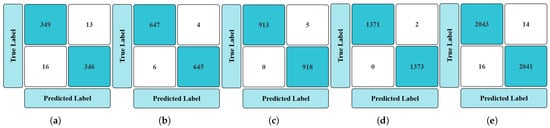

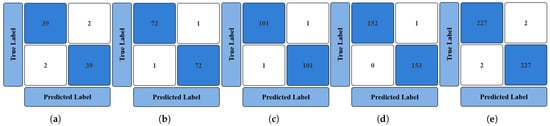

Figure 18 and Figure 19 represent the training phase Confusion Matrices (CMs) of ML models and DL models, respectively, across five datasets. Section 3.1 details the image distribution across the five datasets through Table 1. The numbers of training images in the five datasets are 724, 1302, 1836, 2746 and 4114.

Figure 18.

Confusion Matrices of ML models (SVM) for five datasets in the training phase: (a–e) CM of dataset 1 to dataset 5 in order.

Figure 19.

Confusion Matrices of DL models (VGG16) for five datasets in the training phase: (a–e) CM of dataset 1 to dataset 5 in order.

Figure 18 and Figure 19 illustrate the best-performing scenarios of ML and DL, respectively, and show that ML outperforms DL. For example, in the CM of Medium Gaussian SVM on dataset 5, 2043 images were classified as solar hotspot images, whereas the DL model classified 2024. Overall accuracies are lower for many DL models than for ML models across the datasets. This comparison is elaborated further in Section 4.2, where the computational efficiency of the models is analyzed, highlighting that DL models not only perform less consistently but also require significantly more training time than their ML counterparts.

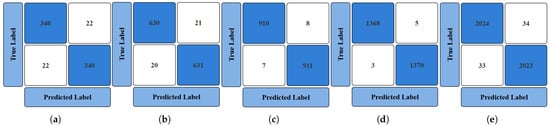

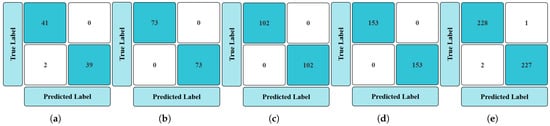

Figure 20 and Figure 21 represent the testing phase CMs of ML models and DL models across five datasets, respectively. The numbers of testing images in the five datasets are 82, 146, 204, 306 and 458.

Figure 20.

Confusion Matrices of ML models (SVM) for five datasets in the testing phase: (a–e) CM of dataset 1 to dataset 5 in order.

Figure 21.

Confusion Matrices of DL models (VGG16) for five datasets in the testing phase: (a–e) CM of dataset 1 to dataset 5 in order.
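The image counts reported for the training and testing phases imply a roughly constant hold-out proportion. The sketch below works through that arithmetic under two assumptions: the counts are listed in dataset order (dataset 1 to dataset 5), and the fraction is taken against training plus testing images only. The helper name `holdout_fraction` is illustrative and not part of the study.

```python
# Image counts as reported in the text, assumed to be in dataset order 1..5.
train_counts = [724, 1302, 1836, 2746, 4114]
test_counts = [82, 146, 204, 306, 458]


def holdout_fraction(n_train: int, n_test: int) -> float:
    """Share of testing images relative to training plus testing images."""
    return n_test / (n_train + n_test)


fractions = [holdout_fraction(a, b) for a, b in zip(train_counts, test_counts)]

for index, fraction in enumerate(fractions, start=1):
    print(f"dataset {index}: test share = {fraction:.3f}")
```

Under these assumptions, every dataset reserves close to 10% of its images for testing, so differences in testing performance between datasets are unlikely to stem from unequal split ratios.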

Based on the Confusion Matrices, the ML model performs better than the DL model. ML achieved perfect or near-perfect class-wise accuracy in several cases, for example, 153/153 and 228/229, indicating stronger class-wise prediction. In contrast, the DL model shows slightly more misclassifications, such as 2 False Negatives against 39 True Positives. For instance, the ML model correctly predicted 227 out of 229 samples in one class, while the DL model misclassified 2. These results indicate that ML consistently maintains higher Precision and Recall across all tested classes.
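The link between confusion-matrix counts and the reported metrics can be made concrete with a short sketch. It uses the standard definitions of Precision, Recall and F1-score; the True Positive and False Negative counts (39 and 2) come from the text, whereas the False Positive count is a hypothetical value added only so that Precision and F1-score can be computed.

```python
def precision(tp: int, fp: int) -> float:
    """Fraction of predicted positives that are correct."""
    return tp / (tp + fp) if (tp + fp) else 0.0


def recall(tp: int, fn: int) -> float:
    """Fraction of actual positives that are detected."""
    return tp / (tp + fn) if (tp + fn) else 0.0


def f1_score(p: float, r: float) -> float:
    """Harmonic mean of precision and recall."""
    return 2 * p * r / (p + r) if (p + r) else 0.0


tp, fn = 39, 2  # counts quoted in the text for the DL model
fp = 1          # hypothetical: the text does not report this count

r = recall(tp, fn)
p = precision(tp, fp)
f1 = f1_score(p, r)
print(f"recall={r:.4f} precision={p:.4f} f1={f1:.4f}")
```

With 2 False Negatives against 39 True Positives, Recall is 39/41, or about 0.951, which shows how even a few missed hotspots lower the class-wise score on a small testing set.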

4.2. Resource Utilization Analysis: Computational Efficiency in Terms of Training and Inference Time

To make a well-informed choice of hardware and model, it is essential to consider the training and inference times. DL is rapidly becoming a go-to tool due to its versatility across domains and continuous advancements. Despite its widespread use, it remains essential to estimate accurately the time required to train a DL network for a given problem. Therefore, comparing the time-based computational efficiency of ML and DL models remains a key consideration for targeted applications such as hotspot detection in solar panels.
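Training and inference times of the kind discussed here are typically obtained by wrapping the fitting and prediction calls with a monotonic clock. The sketch below illustrates one way to do this; it is not the measurement code of this study, and `train_stub` and `predict_stub` are hypothetical stand-ins for a real model's training and prediction routines.

```python
import time


def timed(fn, *args, **kwargs):
    """Run fn once and return (result, elapsed_seconds) using a monotonic clock."""
    start = time.perf_counter()
    result = fn(*args, **kwargs)
    elapsed = time.perf_counter() - start
    return result, elapsed


def train_stub(samples):
    """Hypothetical 'training': learn a threshold as the mean of the samples."""
    return sum(samples) / len(samples)


def predict_stub(threshold, samples):
    """Hypothetical 'inference': flag samples above the learned threshold."""
    return [value > threshold for value in samples]


data = [0.1, 0.4, 0.35, 0.8, 0.9]  # illustrative values only

model, train_seconds = timed(train_stub, data)
predictions, test_seconds = timed(predict_stub, model, data)

print(f"training: {train_seconds:.6f} s, inference: {test_seconds:.6f} s")
```

Measuring both phases with the same helper keeps the comparison between models consistent, although absolute values still depend on the hardware used.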

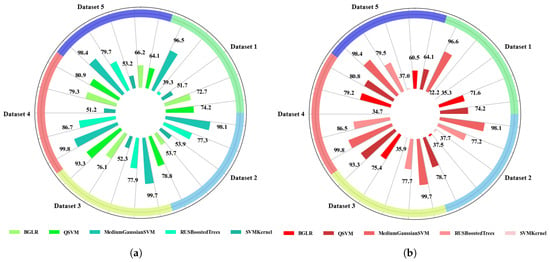

4.2.1. Resource Utilization Analysis of ML Models

Table 5 and Table 6 list the training and testing times, respectively, of the five top-performing ML models. All times are in seconds.

Table 5.

Training time (in seconds) of ML models across five datasets.

Table 6.

Testing time (in seconds) of ML models across five datasets.

SVM Kernel and BGLR exhibit the highest training times, particularly on larger datasets, due to their complexity. For dataset 5, which has the largest number of testing images, the lowest testing time was achieved by Medium Gaussian SVM at 18.810 s. For the smallest dataset, although all five models have comparatively higher testing times (based on image quality), Medium Gaussian SVM again records the minimum time of 16.290 s. Therefore, Medium Gaussian SVM is a good candidate when computational efficiency is the priority. Overall, 96% of the training times were below 150.000 s.

The testing time also increases with dataset size, but at a much lower rate compared to training time. RUSBoosted Trees and BGLR tend to have higher testing times, especially on the larger datasets. Medium Gaussian SVM and QSVM show relatively fast inference times, making them suitable for real-time applications. Overall, all models exhibit reasonable testing times, staying below 25.000 s even on the largest dataset.
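The slow growth of testing time with dataset size can be illustrated by converting the reported totals into a per-image latency. The sketch below assumes that each reported testing time covers the whole testing set and that the smallest dataset is dataset 1 with 82 testing images; the helper name `per_image_ms` is illustrative.

```python
def per_image_ms(total_seconds: float, n_images: int) -> float:
    """Average latency per image in milliseconds."""
    return 1000.0 * total_seconds / n_images


# Medium Gaussian SVM testing times reported in the text.
smallest = per_image_ms(16.290, 82)   # assumed: dataset 1, 82 testing images
largest = per_image_ms(18.810, 458)   # dataset 5, 458 testing images

print(f"smallest dataset: {smallest:.2f} ms per image")
print(f"largest dataset:  {largest:.2f} ms per image")
```

Under these assumptions, the average cost falls from roughly 199 ms to roughly 41 ms per image as the testing set grows, which suggests that a sizeable part of the measured testing time is fixed overhead rather than per-image computation.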

On the whole, the ML models demonstrate a favorable training–inference balance.

4.2.2. Resource Utilization Analysis of the DL Models

Table 7 and Table 8 list the training and testing times of the DL models, in seconds.

Table 7.

Training time (in seconds) for DL models across five datasets.

Table 8.

Testing time (in seconds) for the DL models across the five datasets.

Among all the datasets, dataset 2 forces the model to learn fine spatial features that persist across varying drone perspectives, which results in longer training and testing times compared to the other datasets. These high-detail images require deeper feature extraction, thus increasing both the computational load per image and the overall training and testing time.

Among the results in Table 7, EfficientNetB0 shows faster training times overall. Within the Residual Network family, ResNet-101 has a higher training time than ResNet-50. MobileNetV3Small attains the highest training time of 6003.6 s.

Among the results shown in Table 8, ResNet-50's testing time increased from dataset 2 to dataset 3 but dropped from 85.2 s to 51.6 s in dataset 4. ResNet-101 shows a similar pattern at dataset 4, dropping from 36.6 s to 4.2 s. VGG-16 shows the same behavior at dataset 5 but not at dataset 4. MobileNetV3Small has the highest training time. Overall, the testing times of the DL models are higher than those of the ML models.

- Time Plots of Training and Testing ML and DL Models

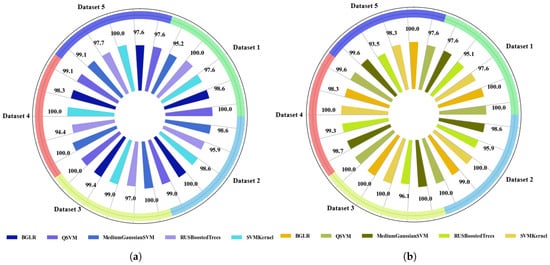

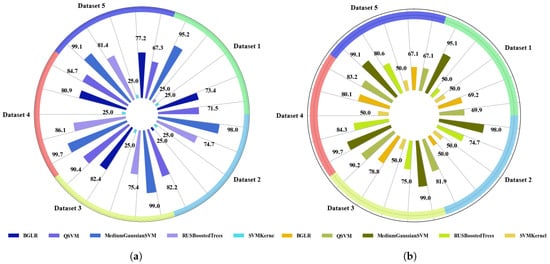

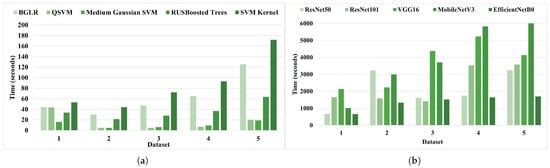

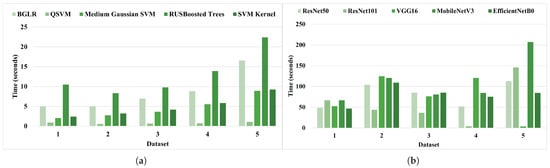

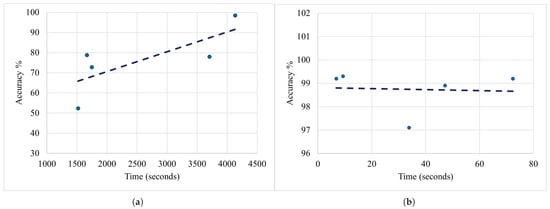

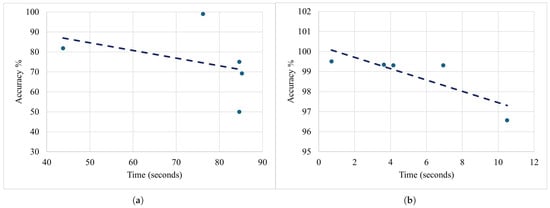

Figure 22 and Figure 23 present a comparative analysis of five ML and five DL models, assessed under various custom parameter settings with respect to training and testing time.

Figure 22.

Comparison of time for five ML and five DL models under varying custom parameter settings: (a) ML training time, (b) DL training time.

Figure 23.

Comparison of time for five ML and five DL models under varying custom parameter settings: (a) ML testing time and (b) DL testing time.

According to Figure 22a, ML models show very low training time across all datasets, mostly under 200 s. This highlights the computational efficiency of ML models. Their lightweight nature makes them suitable for applications where training time is a constraint, such as real-time or edge computing scenarios.

As illustrated by Figure 22b, DL models require significantly more time, reaching up to approximately 6000 s in certain scenarios. DL models are computationally intensive due to their complex architectures and iterative optimization processes. The inclusion of training overhead like model compilation and loading further increases the total time. This is a key consideration in deployment scenarios where resources or time are limited.

ML models demonstrate low latency during testing, with most below 25 s, according to the chart in Figure 23a. This fast inference time reinforces the feasibility of ML models for real-time decision-making tasks or embedded systems.

DL models show moderate testing times that are nevertheless significantly higher than those of the ML models, with some exceeding 200 s, as shown in Figure 23b. While the inference time remains within tolerable margins for many applications, the extra delay could be problematic in systems where a prompt response is essential.

4.3. Computational Efficiency Analysis of ML and DL Models

Although numerous studies on thermal-image feature extraction focus primarily on classification accuracy, they often neglect computational efficiency, which is an equally critical aspect for real-world deployment. This omission is particularly significant for Unmanned Aerial Vehicle (UAV)-based applications, where onboard systems operate under strict constraints on memory, processing power, and energy availability. In such environments, models must be not only accurate but also lightweight and fast enough to support real-time inference. To bridge this gap, the present study provides a detailed computational-efficiency analysis of five top-performing ML and five DL models across multiple datasets, with a specific focus on their suitability for UAV deployment.

Table 5, Table 6, Table 7 and Table 8, presented in Section 4.2, summarize the training and testing times for each model type, with times measured in seconds across the different datasets. These models were selected for their strong performance in thermal image classification tasks.

- ML Models

Table 5 provides the training time for each of the five ML models across the datasets, while Table 6 details the testing times. The training time increases with the dataset size, as expected. Models like SVM Kernel and BGLR exhibit significantly higher training times due to their inherent complexity. However, Medium Gaussian SVM consistently shows lower training times than the others, remaining under 20 s across all the datasets. This makes it a strong candidate when computational efficiency is critical, particularly for UAV-based systems.

In terms of testing times, the analysis in Table 6 reveals that RUSBoosted Trees and BGLR exhibit the highest testing times, especially on datasets with a high number of images. On the other hand, Medium Gaussian SVM and QSVM provide faster inference times, staying below 25 s even on the largest dataset. This combination of low testing time and moderate training time makes them strong candidates for real-time applications and resource-constrained environments, such as those encountered in UAV-based monitoring.

- DL Models

In contrast, DL models, such as ResNet-50, VGG-16, and MobileNetV3Small, demonstrate significantly higher computational demands, as detailed in Table 7 and Table 8 for training and testing times, respectively. Models like VGG-16 and MobileNetV3Small require training times on the order of thousands of seconds across the datasets, making them less practical for real-time deployment on UAVs, where resource constraints are critical. For instance, MobileNetV3Small requires 1016.4 s for training on dataset 1 and reaches 6003.6 s on dataset 5, clearly indicating its high computational overhead.
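The MobileNetV3Small figures quoted above can be related to dataset size with a simple scaling check. The sketch below compares the growth in training time with the growth in the number of training images, assuming that the reported counts of 724 and 4114 training images correspond to dataset 1 and dataset 5, respectively; the variable names are illustrative.

```python
# MobileNetV3Small training times reported in the text (seconds).
time_dataset1 = 1016.4
time_dataset5 = 6003.6

# Training image counts, assumed to correspond to dataset 1 and dataset 5.
images_dataset1 = 724
images_dataset5 = 4114

time_ratio = time_dataset5 / time_dataset1
image_ratio = images_dataset5 / images_dataset1

print(f"training time grows by a factor of {time_ratio:.3f}")
print(f"training images grow by a factor of {image_ratio:.3f}")
```

Under this assumption, training time grows by a factor of about 5.9 while the number of images grows by a factor of about 5.7, so the cost scales approximately linearly with dataset size and the overhead is set mainly by the per-image cost of the network.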

In terms of inference times, ResNet-50 and ResNet-101 exhibit increased testing times compared to the ML models, often exceeding 100 s. While EfficientNetB0 and MobileNetV3Small show slightly better inference times, the DL models still tend to be slower, with testing times as high as 207 s for MobileNetV3Small on dataset 5.

- Implications for UAV Deployment

The detailed analysis clearly illustrates the trade-off between model accuracy and computational efficiency, especially in the context of UAV deployment. ML models, particularly Medium Gaussian SVM, offer a significant advantage in resource-constrained UAV settings, where computational power and processing time are limited. These models not only achieve high accuracy but also ensure low training and inference times, making them ideal for real-time analysis in UAV applications.

On the other hand, while DL models offer state-of-the-art accuracy, their high training and inference times make them less suitable for scenarios where resource availability and real-time decision-making are critical. UAVs equipped with limited processing power may face challenges in deploying these models effectively, particularly when processing large datasets in a timely manner.

In conclusion, the analysis of the computational efficiency of both ML and DL models underscores the need to prioritize efficiency alongside accuracy when selecting models for UAV-based thermal image monitoring, especially in resource-constrained environments. Medium Gaussian SVM emerges as a favorable choice due to its low computational demand and fast inference times, making it highly suitable for deployment in real-time applications. By carefully considering both accuracy and computational efficiency, optimal performance can be achieved for UAV-based hotspot detection systems.
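The selection principle stated above, accuracy subject to a limit on inference time, can be sketched as a small filter-then-rank routine. This is an illustration rather than the selection procedure of the study: the `Candidate` class, the `pick_model` function and the 25 s budget are hypothetical, the pairing of the 99.3% accuracy with the 18.810 s testing time is an assumption, and the accuracy assigned to MobileNetV3Small is a placeholder.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class Candidate:
    name: str
    accuracy: float      # fraction in [0, 1]
    inference_s: float   # testing time in seconds


def pick_model(candidates: List[Candidate], max_inference_s: float) -> Optional[Candidate]:
    """Return the most accurate candidate within the time budget, or None."""
    feasible = [c for c in candidates if c.inference_s <= max_inference_s]
    if not feasible:
        return None
    # Prefer higher accuracy; break ties with the lower inference time.
    return max(feasible, key=lambda c: (c.accuracy, -c.inference_s))


candidates = [
    # Accuracy and time quoted in the paper; pairing them is an assumption.
    Candidate("Medium Gaussian SVM", 0.993, 18.810),
    # 207 s is quoted in the text; the accuracy is a hypothetical placeholder.
    Candidate("MobileNetV3Small", 0.90, 207.0),
]

choice = pick_model(candidates, max_inference_s=25.0)  # hypothetical budget
print(choice.name if choice else "no feasible model")
```

Treating the time budget as a hard constraint reflects the UAV setting, where a model that exceeds the available processing window cannot be used regardless of its accuracy.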

4.4. Understanding the Constraints of DL Performance

DL models, while powerful, exhibit noticeable variability in both training and testing performance across datasets. Unlike traditional ML models, which consistently generalize well with minimal performance drop, DL models such as ResNet-50 and ResNet-101 often show signs of overfitting, achieving high training accuracy but experiencing a decline in test set performance, especially in datasets 1 to 3. This discrepancy suggests that deeper models have difficulty generalizing when exposed to diverse data. In contrast, lighter architectures like MobileNetV3Small and EfficientNetB0 perform more consistently, indicating that smaller models offer better generalization under changing data characteristics. This variation arises not only from model complexity but also from differences in feature extraction capabilities across datasets.