Abstract

The You Only Look Once (YOLO) object detection model has been widely applied to electric power operation violation identification because it balances detection accuracy and inference speed. However, it still faces three challenges:

1. Its ability to detect irregularly shaped objects is insufficient.
2. Objects with low contrast against the background are easily missed.
3. It is difficult to improve detection accuracy while maintaining computational efficiency.

To address these challenges, this paper proposes a novel real-time object detection model, EAP-YOLO, which introduces three key innovations.

- For the first challenge, we propose an edge perception cross-stage partial fusion with two convolutions (EPC2f) module. It combines edge convolutions with depthwise separable convolutions and enhances the feature representation of irregularly shaped objects with only a slight increase in parameters.
- For the second challenge, we propose an adaptive combination of local and global features (ACLGF) module. It improves the discriminative ability of features while maintaining computational efficiency: local and global features are extracted by 1D convolutions and adaptively combined using learnable weights.
- For the third challenge, we propose a parameter sharing of multi-scale detection heads (PS-MDH) scheme. It reduces the number of parameters and improves the interaction between detection heads of different scales.

An ablation study on the Ali Tianchi competition dataset validates the effectiveness of each innovation and of their combination. On this dataset, EAP-YOLO achieves an mAP@0.5 of 93.4% and an mAP@0.5–0.95 of 70.3%. It outperforms 12 other object detection models while satisfying the real-time requirement.

1. Introduction

With the rapid development of the electricity industry and the continuous advancement of grid construction, the workload of grid workers has become increasingly heavy [1]. Ensuring the safe operation of the power supply system is crucial during the electricity production process. However, many workers lack safety awareness, fail to wear safety harnesses during high-altitude work, or work without supervisors present, which poses a potential threat to their safety. Therefore, strengthening safety supervision of electricity operations is of great significance for the sustainable development of the electricity industry [2]. Currently, most violations in power work are still identified through on-site or remote manual supervision, which not only wastes human resources but also increases corporate costs. To address this, the introduction of artificial intelligence technology to achieve intelligent electric power operation violation identification (EPOVI) can help enterprises reduce costs and improve efficiency.

Object detection technology, as an important research direction in the field of computer vision [3,4,5,6,7,8,9,10,11,12,13], has made significant advancements in recent years and has been widely applied in fields such as intelligent surveillance and industrial detection. With the progress of technology, the application of object detection in power operation safety management has also gained increasing attention. This technology can be used to identify key objects in work scenarios, such as supervisors, ground workers, aerial workers, safety belts, etc., to determine whether violations occur. For example, if an aerial worker is detected but no safety belt is detected, it can be concluded that a violation has occurred. To address this task, researchers have proposed a series of algorithm improvements and iterations. Liu et al. [14] proposed a power worker identification method based on You Only Look Once (YOLO), which uses the ArcFace loss function for bounding box coordinate regression and optimizes the setting of prior boxes using a dimensional clustering method to enhance detection stability. Kang and Li [15] improved YOLOv4 to address the issue of detecting whether power operation personnel are wearing safety helmets. They applied the mosaic data augmentation strategy to expand the training dataset and incorporated the k-means clustering algorithm to recalculate prior boxes, improving adaptability to small targets. Yan et al. [16] proposed a violation detection system for remote substation construction management, integrating artificial intelligence-based object detection technology. Their approach enhanced YOLOv5 by introducing data augmentation techniques such as target segmentation and background fusion, along with local erasure strategies to improve model generalization. Xie et al. [17] optimized the feature extraction module of YOLOv7 to meet the demand for detecting the hook status of safety harnesses. 
They introduced a decoupled head structure to separate the classification and regression tasks and adjusted the loss function to reduce interference between them. In industrial and commercial applications, the YOLO series [18,19,20,21,22,23,24,25] has become the mainstream family of object detection models. Since its initial release, the series has evolved to YOLOv11, and different versions excel in different scenarios. YOLOv5 and YOLOv8 are the most widely applied. In power production violation recognition, accuracy is paramount because of its implications for personal safety, yet real-time performance must also be maintained. This study therefore selects YOLOv8 as the baseline model and further optimizes it. Table 3 in Section 4.6 compares detection accuracy across YOLO series models.

Since its release, YOLOv8 has demonstrated significant application value in violation recognition, and researchers have proposed a series of optimization methods to improve its performance.

- Cai et al. [26] optimized the YOLOv8 algorithm for power construction scenarios in three ways. They incorporated a channel attention mechanism in the backbone network to enhance low-level feature filtering. They added the convolutional block attention module (CBAM) in the head network to improve high-level feature filtering. Finally, they replaced the original complete intersection over union (CIoU) loss of YOLOv8 with the efficient intersection over union (EIoU) loss to enhance the network’s ability to express violation features.
- Jiao et al. [27] developed a helmet detection model. They leveraged transfer learning for object annotation and training on the YOLOv8s model, and introduced matching criteria and a time-window strategy to reduce false positives and false negatives.
- Jiang et al. [28] improved YOLOv8 for high-altitude safety belt detection. They integrated the BiFormer attention mechanism to enhance feature extraction and replaced the original upsampling layer with a lightweight upsampling operator. They also introduced a slim-neck structure in the neck, added auxiliary detection heads in the head layer, and replaced the original loss function with intersection over union with minimum points distance (MPDIoU) to optimize bounding box prediction.

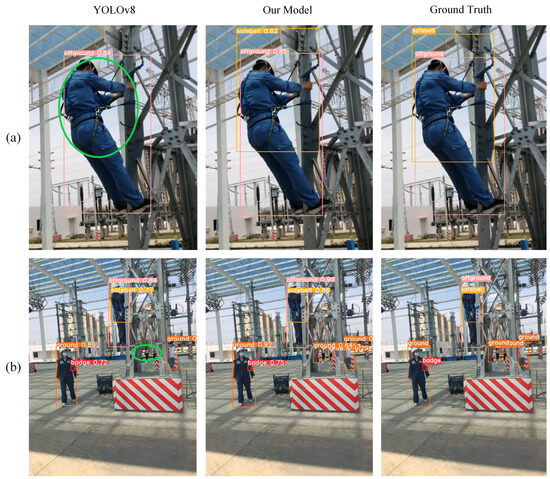

Although YOLOv8 has achieved promising detection results in various application scenarios, it still faces challenges in accurately detecting key targets in complex power operation environments without increasing computational costs. Firstly, YOLOv8 has limited feature representation capability for irregularly shaped objects, leading to missed detections. As shown in the green ellipse in Figure 1a, YOLOv8 fails to detect an irregularly shaped safety harness. Secondly, when the contrast between the target and the background is low, the model’s extracted features lack sufficient discriminability, resulting in missed detections. As illustrated in the green ellipse in Figure 1b, YOLOv8 fails to detect three distant ground workers because they are not sufficiently distinguishable from the background. Lastly, most existing improvements to YOLOv8 enhance detection accuracy at the cost of increased parameter size or computational complexity, significantly compromising its efficiency. This trade-off negatively impacts its applicability in real-time monitoring scenarios.

Figure 1.

Two typical examples comparing YOLOv8 and our model. The images are taken from the Ali Tianchi competition dataset [29], on which YOLOv8 was fine-tuned. Green ellipses highlight missed detections by YOLOv8. (a) Our model detects a safety harness missed by YOLOv8. (b) Our model detects ground workers missed by YOLOv8.

To address the aforementioned challenges, this paper proposes a novel real-time object detection model based on the YOLOv8 framework for EPOVI, denoted as EAP-YOLO, with the following key contributions:

- (1) To address the challenge of detecting irregularly shaped objects, an edge perception cross-stage partial fusion with two convolutions (EPC2f) module is proposed. The EPC2f module replaces the bottleneck of the cross-stage partial fusion with two convolutions (C2f) module with an edge_bottleneck. This enhances the extraction of object contour features and thereby improves the model’s ability to detect irregularly shaped objects.
- (2) To address detection when the contrast between the target and the background is insufficient, we propose an adaptive combination of local and global features (ACLGF) module. The ACLGF module adaptively fuses local and global features with a learnable weight. This significantly enhances the discriminative ability of the features and enables the model to detect targets even when their contrast with the background is low.
- (3) To improve detection accuracy without increasing the number of model parameters, a parameter sharing of multi-scale detection heads (PS-MDH) scheme is proposed. Parameter sharing reduces the number of parameters and the computational cost of the detection head. It also enables information interaction between detection heads of different scales, which improves the model’s detection capability for multi-scale targets.
- (4) Comparative experiments on the dataset provided by the Alibaba Tianchi competition validate the EAP-YOLO model against 12 mainstream object detection models. They show that EAP-YOLO improves detection accuracy while reducing parameter size and computational cost.

The remainder of this paper is organized as follows. Section 2 introduces the baseline model of EAP-YOLO. Section 3 details the proposed method, in particular its three key innovations: the ACLGF module, the EPC2f module, and the PS-MDH scheme. Section 4 presents and analyzes the experimental results on the Alibaba Tianchi dataset. Finally, Section 5 summarizes the contributions and limitations of our method and outlines future work.

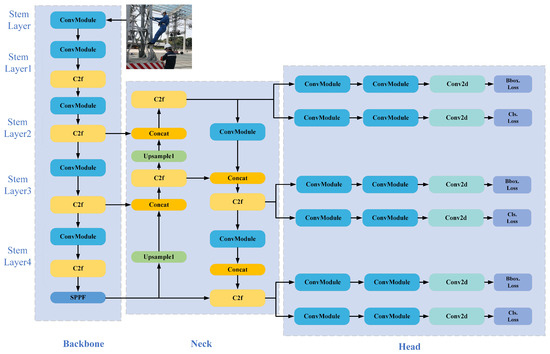

2. Baseline Model

As shown in Figure 2, the YOLOv8 model consists of three main components: the backbone, the neck, and the detection head.

The backbone adopts the cross-stage partial network (CSPNet) [30] design. It extracts multi-level features from the input image while reducing computational cost and maintaining efficient information flow. A spatial pyramid pooling-fast (SPPF) module also aggregates multi-scale context through successive pooling operations at low computational cost.

The neck fuses features from different levels using the path aggregation feature pyramid network (PAFPN) [31] structure. PAFPN adds a bottom-up path on top of the top-down pathway of the traditional feature pyramid network (FPN) [32]. High-level semantic features therefore propagate to lower levels, and low-level localization features propagate to higher levels, so multi-scale information reaches the detection head efficiently.

The detection head has four notable properties:

- It is anchor-free. It does not depend on predefined anchor boxes, which improves generalization and reduces computational complexity.
- It is decoupled. Classification and regression are handled separately, which optimizes feature learning for each task.
- It uses the task-aligned assigner [33] for target assignment, which improves the allocation of positive and negative samples.
- It supervises bounding box regression with the distribution focal loss together with the CIoU loss to optimize localization accuracy.

Figure 2.

YOLOv8 network structure.

3. Proposed Method

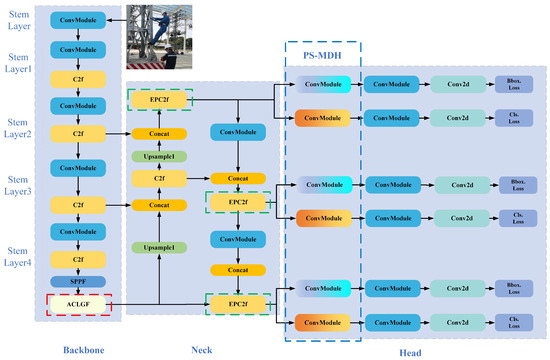

3.1. Overview

Figure 3 shows the overall structure of the proposed EAP-YOLO detection model. The parts enclosed by the red, green, and blue dashed boxes correspond to the three innovations of this paper.

- The ACLGF module extracts local and global features through a two-branch structure and fuses them adaptively with learnable weights, which substantially improves the discriminative power of the features. It is placed at the last layer of the backbone, where the feature maps have the lowest spatial resolution, so it improves feature discriminability at minimal computational cost.
- The EPC2f module introduces edge convolution (EdgeConv) to strengthen the model’s representation of object contours. The EPC2f modules sit in the neck, adjacent to the detection heads of each scale, so this improved contour representation benefits objects of different scales.
- The PS-MDH scheme reduces the number of model parameters and the computational cost. It also improves the detection of multi-scale targets through information interaction between the detection heads of different scales.

The three innovations are detailed below.

Figure 3.

Architecture of our EAP-YOLO model. The red, green, and blue dashed lines indicate the innovations of EAP-YOLO, where ACLGF, EPC2f, and PS-MDH denote the adaptive combination of local and global features module, edge perception C2f module, and parameter sharing of multi-scale detection heads, respectively.

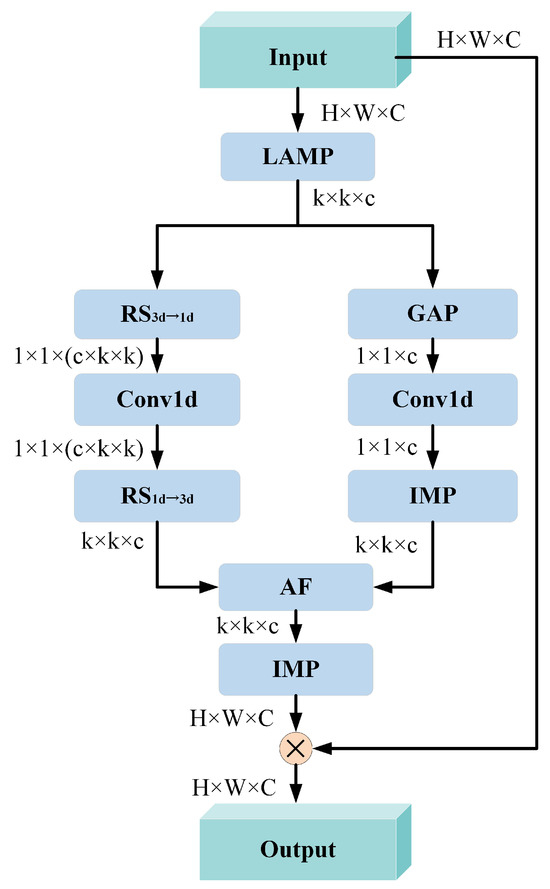

3.2. ACLGF Module

Figure 4 shows the network structure of the ACLGF module. First, local adaptive mean pooling (LAMP) [34] is applied to the input feature map to produce a pooled feature map of fixed spatial size k × k. This process is expressed by the following equation:

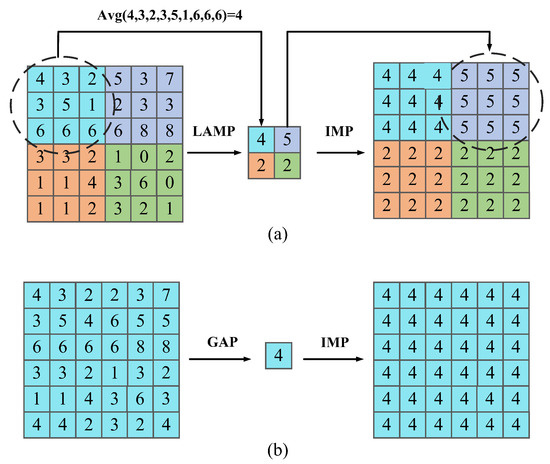

Here, the input feature map has height H, width W, and C channels. The extracted pooled features have a fixed size of k × k × C, with k = 5 by default. LAMP denotes the local adaptive mean pooling operation, whose principle is illustrated in Figure 5a. Because LAMP always yields a fixed-size k × k representation, the ACLGF module is compatible with input features of different sizes.
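A small pure-Python sketch on a single-channel map illustrates the LAMP and IMP operations in Figure 5a. The window boundaries follow the convention used by common adaptive pooling layers, such as PyTorch's `AdaptiveAvgPool2d`. This is an assumption: the text does not say how windows are placed when H or W is not divisible by k. The helper names `lamp`, `imp`, and `ceil_div` are hypothetical.

```python
def ceil_div(a, b):
    """Integer ceiling division for non-negative integers."""
    return -(-a // b)


def lamp(x, k):
    """Local adaptive mean pooling of an H x W map (list of lists) to a fixed k x k grid.

    Window i along an axis of length n covers [floor(i*n/k), ceil((i+1)*n/k)),
    so every window is non-empty for any n >= 1.
    """
    h, w = len(x), len(x[0])
    out = []
    for i in range(k):
        r0, r1 = i * h // k, ceil_div((i + 1) * h, k)
        row = []
        for j in range(k):
            c0, c1 = j * w // k, ceil_div((j + 1) * w, k)
            window = [x[r][c] for r in range(r0, r1) for c in range(c0, c1)]
            row.append(sum(window) / len(window))
        out.append(row)
    return out


def imp(pooled, h, w):
    """Inverse mean pooling: copy each pooled mean back over its window of an H x W map.

    Pixel (r, c) takes the value of window (r*k//h, c*k//w), which always contains it.
    """
    k = len(pooled)
    return [[pooled[r * k // h][c * k // w] for c in range(w)] for r in range(h)]


# Usage: a 4 x 4 map made of four constant 2 x 2 blocks.
x = [[1, 1, 2, 2], [1, 1, 2, 2], [3, 3, 4, 4], [3, 3, 4, 4]]
pooled = lamp(x, 2)           # [[1.0, 2.0], [3.0, 4.0]]
restored = imp(pooled, 4, 4)  # equals x, since each block is constant
```

The output size depends only on k, not on H or W. This is the property that makes the module size-agnostic.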

Figure 4.

Architecture of the ACLGF module. The input features have size H × W × C. The LAMP, reshaping, one-dimensional convolution, GAP, IMP, and AF operations are defined in Equations (1)–(5). The GAP, LAMP, and IMP operations are also illustrated in Figure 5.

Figure 5.

Illustration of GAP, LAMP, and IMP. (a) Illustration of LAMP and IMP, where LAMP outputs a feature map with fixed size k × k (k is set to 2 in this figure). (b) Illustration of GAP and IMP.

The left branch in Figure 4 is the local feature extraction branch. It processes the pooled features in three steps:

1. Reshape the features from a three-dimensional tensor into a one-dimensional vector.
2. Apply a one-dimensional convolution.
3. Reshape the result back into a three-dimensional tensor, which forms the output of the local branch.

The convolution is one-dimensional and operates on the flattened features. Converting between one- and three-dimensional representations therefore substantially reduces the number of parameters of the ACLGF module.
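Here is a minimal sketch of the local branch. It assumes the C × k × k pooled tensor is flattened in channel-major order and processed by a single-channel 1D convolution with zero padding. The flattening order, kernel size, and padding are assumptions not stated in the text, and all function names are hypothetical.

```python
def flatten(t):
    """Reshape a C x k x k nested list into a 1D list (channel-major order)."""
    return [v for channel in t for row in channel for v in row]


def unflatten(v, c, k):
    """Inverse of flatten: rebuild a C x k x k nested list from a 1D list."""
    return [[v[(ch * k + r) * k:(ch * k + r + 1) * k] for r in range(k)] for ch in range(c)]


def conv1d_same(v, weights):
    """Single-channel 1D cross-correlation (as in deep-learning conv layers) with zero
    padding, so the output has the same length as the input; odd kernel size assumed."""
    pad = len(weights) // 2
    padded = [0.0] * pad + list(v) + [0.0] * pad
    return [sum(w * padded[i + j] for j, w in enumerate(weights)) for i in range(len(v))]


def local_branch(pooled, weights):
    """3D -> 1D reshape, 1D convolution, 1D -> 3D reshape."""
    c, k = len(pooled), len(pooled[0])
    return unflatten(conv1d_same(flatten(pooled), weights), c, k)


# Usage with k = 2, C = 2 and a hypothetical kernel of size 3.
pooled = [[[1.0, 2.0], [3.0, 4.0]], [[5.0, 6.0], [7.0, 8.0]]]
smoothed = local_branch(pooled, [0.25, 0.5, 0.25])
```

The parameter saving is easy to see here. The 1D kernel holds only 3 weights, whereas a 3 × 3 convolution mapping C channels to C channels holds 9C² weights (36,864 for C = 64).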

The right branch in Figure 4 is the global feature extraction branch. It processes the pooled features in three steps:

1. Global average pooling (GAP) compresses the pooled features into a one-dimensional global feature vector.
2. A one-dimensional convolution is applied to this vector.
3. Inverse mean pooling (IMP) restores the feature size before GAP, yielding the output of the global branch.

As shown in Figure 5, IMP copies each mean value back into its corresponding pooling window. Like the local branch, this design keeps the number of parameters of the ACLGF module small.

Once the local and global features are obtained, they are merged by adaptive fusion (AF). In AF, a learnable weighting coefficient dynamically adjusts the fusion ratio between the local and global features to produce the fused features. Because this coefficient is learned during training, the fusion ratio is determined automatically from the data rather than set manually as a hyperparameter.
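The global branch can be sketched in the same pure-Python style. This sketch makes two assumptions. First, the 1D convolution slides over the vector of per-channel means produced by GAP. Second, IMP copies each channel's value back over its whole k × k window. The convolution axis and the helper names are illustrative only.

```python
def gap(t):
    """Global average pooling: one mean per channel of a C x k x k nested list."""
    return [sum(sum(row) for row in ch) / (len(ch) * len(ch[0])) for ch in t]


def conv1d_same(v, weights):
    """Single-channel 1D cross-correlation with zero padding (odd kernel size assumed)."""
    pad = len(weights) // 2
    padded = [0.0] * pad + list(v) + [0.0] * pad
    return [sum(w * padded[i + j] for j, w in enumerate(weights)) for i in range(len(v))]


def imp_global(vec, k):
    """Inverse mean pooling after GAP: the pooling window is the whole k x k map,
    so each channel's value is copied to every position of that channel."""
    return [[[v] * k for _ in range(k)] for v in vec]


def global_branch(pooled, weights):
    """GAP -> 1D convolution across the channel vector -> IMP back to C x k x k."""
    k = len(pooled[0])
    return imp_global(conv1d_same(gap(pooled), weights), k)


pooled = [[[1.0, 3.0], [5.0, 7.0]], [[2.0, 2.0], [2.0, 2.0]]]
g = global_branch(pooled, [0.0, 1.0, 0.0])  # identity kernel keeps the means 4.0 and 2.0
```

GAP reduces each channel to a single number, so the cost of the 1D convolution in this branch grows with C but does not depend on k.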

Finally, IMP restores the fused features to the size of the input, and a shortcut connection adds the input to the result. The output feature map therefore has the same dimensions as the input feature map. Figure 5a illustrates the principle of the IMP operation used here.
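Here is a single-channel sketch of the adaptive fusion and the final IMP-plus-shortcut step. The text does not give the exact form of AF. This sketch assumes a convex combination whose ratio is the sigmoid of one learnable scalar `w`, which keeps the ratio in (0, 1). The function names are hypothetical.

```python
import math


def adaptive_fusion(local, glob, w):
    """Hypothetical AF: alpha = sigmoid(w) keeps the ratio in (0, 1), and the
    output is alpha * local + (1 - alpha) * global, element by element."""
    alpha = 1.0 / (1.0 + math.exp(-w))
    return [[alpha * lv + (1.0 - alpha) * gv for lv, gv in zip(lr, gr)]
            for lr, gr in zip(local, glob)]


def imp(pooled, h, w):
    """Copy each k x k value back over its window of an H x W map."""
    k = len(pooled)
    return [[pooled[r * k // h][c * k // w] for c in range(w)] for r in range(h)]


def aclgf_output(x, local, glob, w):
    """Fuse the k x k branch outputs, restore them to H x W with IMP,
    and add the input through the shortcut connection."""
    h, width = len(x), len(x[0])
    restored = imp(adaptive_fusion(local, glob, w), h, width)
    return [[xi + ri for xi, ri in zip(xr, rr)] for xr, rr in zip(x, restored)]


x = [[0.0] * 4 for _ in range(4)]
local = [[2.0, 2.0], [2.0, 2.0]]
glob = [[0.0, 0.0], [0.0, 0.0]]
y = aclgf_output(x, local, glob, 0.0)  # w = 0 gives alpha = 0.5, so every entry is 1.0
```

During training, `w` would receive gradients like any other weight, so the ratio between local and global features is learned rather than hand-tuned.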

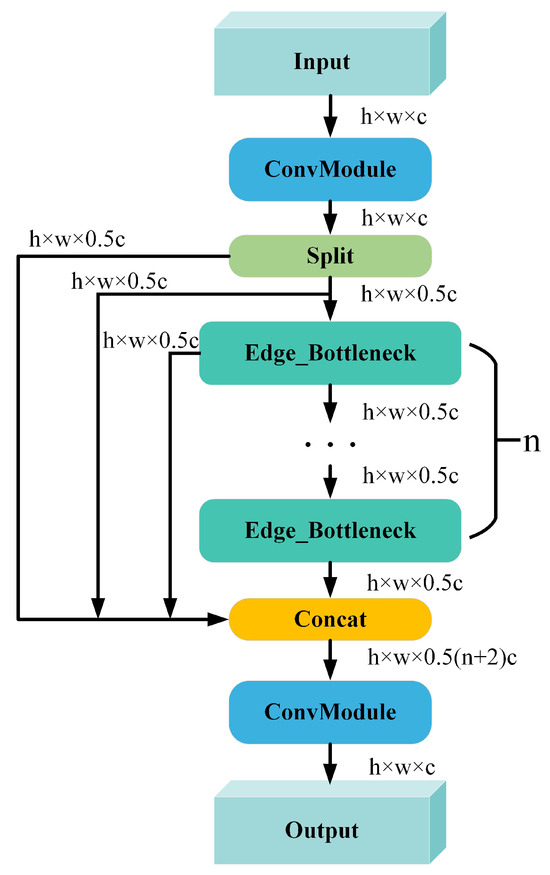

3.3. EPC2f Module

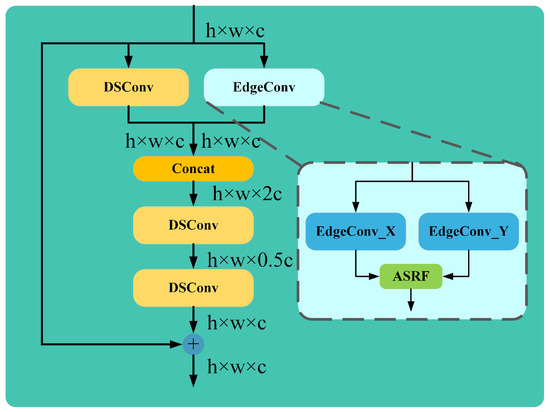

As shown in Figure 6, EPC2f differs from the C2f module of YOLOv8 in that the original bottleneck is replaced by an edge_bottleneck, whose structure is shown in Figure 7. First, EdgeConv extracts edge features. Its implementation is as follows.

The input feature map of edge_bottleneck is convolved with the horizontal template mx and the vertical template my, which yields the horizontal and vertical edge feature maps, respectively. The two maps are then combined by arithmetic square root fusion (ASRF), defined in Equation (8): corresponding elements of the two maps are squared and summed, and the square root of the sum gives the edge feature map output by EdgeConv.
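The EdgeConv and ASRF steps can be sketched on a single-channel map. The text names the templates mx and my but does not give their values, so this sketch uses Sobel kernels purely as a stand-in. Zero padding keeps the output the same size as the input.

```python
import math

# Stand-in templates (Sobel); the actual mx and my values are not given in the text.
MX = [[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]]
MY = [[-1, -2, -1], [0, 0, 0], [1, 2, 1]]


def conv2d_same(img, kernel):
    """3 x 3 cross-correlation with zero padding; output has the same H x W as the input."""
    h, w = len(img), len(img[0])
    out = [[0.0] * w for _ in range(h)]
    for r in range(h):
        for c in range(w):
            acc = 0.0
            for i in range(3):
                for j in range(3):
                    rr, cc = r + i - 1, c + j - 1
                    if 0 <= rr < h and 0 <= cc < w:
                        acc += kernel[i][j] * img[rr][cc]
            out[r][c] = acc
    return out


def edge_conv(img):
    """Horizontal and vertical edge maps fused by ASRF: sqrt(gx^2 + gy^2) element-wise."""
    gx = conv2d_same(img, MX)
    gy = conv2d_same(img, MY)
    return [[math.sqrt(a * a + b * b) for a, b in zip(rx, ry)] for rx, ry in zip(gx, gy)]


# A vertical step edge: two dark columns followed by three bright ones.
img = [[0, 0, 1, 1, 1] for _ in range(5)]
edges = edge_conv(img)  # strong response next to the step, zero in flat interior regions
```

ASRF produces a magnitude that is independent of the edge's sign and orientation. This is why it suits contours of arbitrary shape.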

Figure 6.

Architecture of EPC2f Module.

Figure 7.

Architecture of Edge_Bottleneck.

After the edge feature maps are obtained, the remaining operations of edge_bottleneck are performed with several depthwise separable convolutions (DSConv) [35].

These operations involve the following elements:

- three DSConv layers (the first, second, and third);
- a concatenation (splicing) operation along the channel dimension, together with the resulting concatenated feature maps;
- the feature maps output by the second and third DSConv layers;
- the final output of edge_bottleneck, which has the same size as the input feature map I.

This process follows the design of the bottleneck structure in YOLOv8. Combining DSConv with the bottleneck structure significantly reduces the number of parameters and the computational cost of the model.
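A simple weight count shows where the DSConv savings come from. The sketch compares a standard k × k convolution with a depthwise k × k convolution followed by a 1 × 1 pointwise convolution, ignoring biases and BatchNorm. The channel numbers and the 40 × 40 map size are illustrative choices, not the layer sizes used in EAP-YOLO.

```python
def conv_params(k, c_in, c_out):
    """Weights of a standard k x k convolution (bias and BatchNorm ignored)."""
    return k * k * c_in * c_out


def dsconv_params(k, c_in, c_out):
    """Depthwise k x k convolution (one filter per input channel)
    followed by a 1 x 1 pointwise convolution (bias and BatchNorm ignored)."""
    return k * k * c_in + c_in * c_out


def macs(num_weights, h, w):
    """Multiply-accumulates of a stride-1, same-padded convolution:
    every weight is used once per output position."""
    return num_weights * h * w


# Illustrative sizes only: 3 x 3 kernels, 64 -> 64 channels, 40 x 40 feature map.
std = conv_params(3, 64, 64)     # 36864
ds = dsconv_params(3, 64, 64)    # 576 + 4096 = 4672
ratio = ds / std                 # equals 1/64 + 1/9, about 0.127
std_macs, ds_macs = macs(std, 40, 40), macs(ds, 40, 40)
```

In general, the ratio equals 1/C_out + 1/k². For 3 × 3 kernels, a DSConv layer therefore needs roughly an eighth of the weights and operations of a standard convolution. FLOPs are often reported as about twice the MAC count.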

3.4. PS-MDH Scheme

Conventional detectors usually design independent detection heads for targets of different scales, which significantly increases the number of parameters and the computational burden of the model. To address this problem, this paper proposes a parameter-sharing scheme for multi-scale detection heads. As shown in Figure 3, only the parameters of the first convolutional module are shared among the classification heads of the three scales, and likewise among the regression heads. This design reduces the number of parameters and strengthens information interaction between the heads of different scales, while the unshared layers preserve a degree of independence for each head.
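Counting parameters illustrates the effect of sharing only the first convolution of each head. The sketch rests on four assumptions:

- there are three scales;
- each classification or regression head consists of two 3 × 3 convolutions;
- every scale has the same channel width (64, an arbitrary choice), so one set of weights can be applied to all of them;
- YOLOv8's final 1 × 1 prediction layers can be omitted.

`ConvModule` is a hypothetical stand-in that only records a weight count. It is not a real framework class.

```python
class ConvModule:
    """Hypothetical stand-in for a k x k conv layer; records only its weight count
    (bias and BatchNorm parameters ignored)."""

    def __init__(self, k, c_in, c_out):
        self.num_weights = k * k * c_in * c_out


def build_heads(num_scales=3, channels=64, share_first=True):
    """Build classification/regression heads for each scale.

    With share_first=True, the first conv of every classification head is the same
    object, and likewise for the regression heads; second convs stay scale-specific.
    """
    shared_cls = ConvModule(3, channels, channels) if share_first else None
    shared_reg = ConvModule(3, channels, channels) if share_first else None
    heads = []
    for _ in range(num_scales):
        cls_first = shared_cls if share_first else ConvModule(3, channels, channels)
        reg_first = shared_reg if share_first else ConvModule(3, channels, channels)
        heads.append({
            "cls": [cls_first, ConvModule(3, channels, channels)],
            "reg": [reg_first, ConvModule(3, channels, channels)],
        })
    return heads


def count_params(heads):
    """Count each module once, so shared modules are not double-counted."""
    unique = {}
    for head in heads:
        for branch in head.values():
            for module in branch:
                unique[id(module)] = module.num_weights
    return sum(unique.values())


independent = count_params(build_heads(share_first=False))  # 12 convs x 36864
shared = count_params(build_heads(share_first=True))        # 8 convs x 36864
```

Under these assumptions, sharing removes 4 of the 12 head convolutions (147,456 weights). Because the same weights now process features from every scale, their gradients combine information from all three scales. This is one way to read the cross-scale interaction the scheme aims for.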

4. Experimental Results and Analysis

4.1. Dataset

The dataset used in this paper for EPOVI comes from the Alibaba Tianchi Guangdong power grid intelligent on-site operation challenge [29]. It contains four object categories: supervisor armbands, high-altitude workers, ground workers, and safety harnesses. The dataset consists of 2352 images whose sizes range from 1968 × 4144 to 3472 × 4624 pixels. The annotations were converted to the standard YOLO format, and the dataset was randomly divided into training (70%), validation (10%), and test (20%) subsets. Table 1 lists the label categories.
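A random 70/10/20 split of the 2352 images can be sketched as follows. The text states only the ratios, so the rounding rule, the fixed seed, and the function name are assumptions. In this sketch, the train and validation counts are rounded down and the test set takes the remainder.

```python
import random


def split_dataset(items, ratios=(0.7, 0.1, 0.2), seed=0):
    """Shuffle items with a fixed seed and split them into train/val/test.

    The train and val sizes are rounded down; the test set receives the remainder,
    so every item lands in exactly one subset.
    """
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)
    n_train = int(len(shuffled) * ratios[0])
    n_val = int(len(shuffled) * ratios[1])
    return (shuffled[:n_train],
            shuffled[n_train:n_train + n_val],
            shuffled[n_train + n_val:])


train, val, test = split_dataset(range(2352))  # 1646 / 235 / 471 images
```

Fixing the seed makes the split reproducible across the compared models, which matters for the fairness claim in Section 4.6.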

Table 1.

Target category and description in the Alibaba Tianchi competition dataset.

4.2. Implementation Details

All input images are resized to 640 × 640, and the model is trained for 150 epochs with a batch size of 16. The optimizer is stochastic gradient descent (SGD) with an initial learning rate of 0.01, momentum of 0.937, and weight decay of 0.0005. The IoU threshold for non-maximum suppression (NMS) is set to 0.7, consistent with the settings of the baseline YOLOv8n model. To train YOLOv8 on the Ali Tianchi competition dataset, we change the number of classes from the default 80 to 4, while retaining the original training strategy and loss functions. The value of k in Equation (1) is set to 5.

The experimental environment is as follows:

- Operating system: Ubuntu 22.04
- Software: Python 3.8, PyTorch 1.12.1, and CUDA 12.4
- CPU: Intel(R) Xeon(R) E5-2650 v4 @ 2.20 GHz
- GPU: NVIDIA TITAN RTX with 24 GB of VRAM
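The settings above can be written out with the training argument names of the Ultralytics YOLOv8 package (`epochs`, `imgsz`, `batch`, `optimizer`, `lr0`, `momentum`, `weight_decay`, `iou`). The text does not state which training code was used, so this mapping is an assumption. The dataset file name `tianchi.yaml` in the comment is hypothetical.

```python
# Section 4.2 hyperparameters, keyed by Ultralytics-style training argument names
# (an assumption about the toolchain; the text does not name it).
TRAIN_ARGS = {
    "epochs": 150,
    "imgsz": 640,
    "batch": 16,
    "optimizer": "SGD",
    "lr0": 0.01,            # initial learning rate
    "momentum": 0.937,
    "weight_decay": 0.0005,
    "iou": 0.7,             # NMS IoU threshold used during validation
}

# Four classes instead of YOLOv8's default 80.
CLASS_NAMES = ["supervisor armband", "high-altitude worker", "ground worker", "safety harness"]

# With Ultralytics installed, training could then look like:
#   from ultralytics import YOLO
#   YOLO("yolov8n.yaml").train(data="tianchi.yaml", **TRAIN_ARGS)
# where the hypothetical "tianchi.yaml" lists the four class names and the split paths.
```
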

4.3. Evaluation Metrics

The model performance is quantitatively evaluated using several key metrics: mAP@0.5(%), mAP@0.5–0.95(%), parameter count (Params), floating-point operations (FLOPs), and frames per second (FPS). The mean average precision (mAP) is calculated through the following equations:

$$P = \frac{TP}{TP + FP}, \qquad R = \frac{TP}{TP + FN}$$

$$AP = \int_{0}^{1} P(R)\,\mathrm{d}R, \qquad \mathrm{mAP} = \frac{1}{N}\sum_{i=1}^{N} AP_i$$

In these equations:

- TP, FP, and FN denote the numbers of true positives, false positives, and false negatives, respectively.
- P and R denote the precision and recall of the detection results.
- The average precision (AP) is the area under the precision-recall (P-R) curve of a single category.
- The mean average precision (mAP) is the mean of AP over the N categories.

mAP@0.5 and mAP@0.5–0.95 are then defined as

$$\mathrm{mAP}@0.5 = \frac{1}{N}\sum_{i=1}^{N} AP_i^{0.5}, \qquad \mathrm{mAP}@0.5\text{–}0.95 = \frac{1}{10}\sum_{t \in \{0.50,\,0.55,\,\ldots,\,0.95\}} \frac{1}{N}\sum_{i=1}^{N} AP_i^{t}$$

Here, $AP_i^{0.5}$ denotes the AP of the ith category at an IoU threshold of 0.5: a predicted box counts as a true positive when its IoU with a ground-truth box is at least 0.5. $AP_i^{t}$ is defined analogously for threshold t, which ranges from 0.5 to 0.95 in steps of 0.05.
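The definitions above can be turned into a small reference implementation. The sketch uses simplified greedy matching of predictions to ground-truth boxes and all-point interpolation of the precision-recall curve. Evaluation toolkits differ in these details; COCO-style evaluation, for example, samples 101 recall points. The text does not state which variant produced the reported numbers, and all function names here are hypothetical.

```python
def iou(a, b):
    """IoU of two boxes given as (x1, y1, x2, y2)."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def match(preds, gts, thr):
    """Simplified greedy matching: each prediction, by descending score, takes the
    unmatched ground-truth box with the highest IoU if that IoU >= thr."""
    used = [False] * len(gts)
    flags = []
    for score, box in sorted(preds, key=lambda p: p[0], reverse=True):
        best_iou, best_j = 0.0, -1
        for j, gt in enumerate(gts):
            v = iou(box, gt)
            if not used[j] and v > best_iou:
                best_iou, best_j = v, j
        if best_j >= 0 and best_iou >= thr:
            used[best_j] = True
            flags.append((score, True))
        else:
            flags.append((score, False))
    return flags


def average_precision(flags, num_gt):
    """Area under the P-R curve with all-point interpolation."""
    if num_gt == 0:
        return 0.0
    tp = fp = 0
    recalls, precisions = [], []
    for _, is_tp in sorted(flags, key=lambda f: f[0], reverse=True):
        if is_tp:
            tp += 1
        else:
            fp += 1
        recalls.append(tp / num_gt)
        precisions.append(tp / (tp + fp))
    mrec = [0.0] + recalls + [1.0]
    mpre = [1.0] + precisions + [0.0]
    for i in range(len(mpre) - 2, -1, -1):  # precision envelope: non-increasing in recall
        mpre[i] = max(mpre[i], mpre[i + 1])
    return sum((mrec[i] - mrec[i - 1]) * mpre[i] for i in range(1, len(mrec)))


def mean_ap(data, thr):
    """data maps class name -> (predictions [(score, box)], ground-truth boxes)."""
    aps = [average_precision(match(p, g, thr), len(g)) for p, g in data.values()]
    return sum(aps) / len(aps)


def map_50_95(data):
    """Mean of mAP over the ten IoU thresholds 0.50, 0.55, ..., 0.95."""
    thresholds = [0.5 + 0.05 * i for i in range(10)]
    return sum(mean_ap(data, t) for t in thresholds) / len(thresholds)


# One class, one ground-truth box, one prediction with IoU 0.72.
data = {"safety harness": ([(0.9, (0, 0, 10, 7.2))], [(0, 0, 10, 10)])}
```

In this example, the prediction is a true positive at thresholds 0.50 to 0.70 and a false positive from 0.75 upward. mAP@0.5 is therefore 1.0 while mAP@0.5–0.95 is 0.5, which shows why the second metric rewards tight localization.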

The three efficiency metrics are defined as follows:

- Params denotes the number of parameters in the model and measures model size.
- FLOPs denotes the number of floating-point operations required for one forward pass and measures computational cost.
- FPS indicates the number of image frames processed per second and measures inference speed.

Figure 9 visualizes Table 3 in Section 4.6.
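FPS is usually measured by timing repeated inference after a few warm-up runs. The sketch below shows one such protocol. The text does not specify whether pre-processing and NMS are included, or which warm-up count is used, so these details are assumptions. `infer` stands for any per-frame inference callable.

```python
import time


def measure_fps(infer, inputs, warmup=10):
    """Frames per second of `infer` over `inputs`, after `warmup` untimed calls.

    For GPU inference, the device should be synchronized before each clock read
    (e.g. torch.cuda.synchronize() in PyTorch); that step is omitted here.
    """
    for x in inputs[:warmup]:
        infer(x)
    start = time.perf_counter()
    for x in inputs:
        infer(x)
    elapsed = time.perf_counter() - start
    return len(inputs) / elapsed if elapsed > 0 else float("inf")


# Usage with a dummy "model" that takes about 1 ms per frame.
fps = measure_fps(lambda frame: time.sleep(0.001), list(range(20)), warmup=2)
```

Without the warm-up and, on GPUs, without synchronization, the first calls and asynchronous kernel launches can make FPS look lower or higher than it really is.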

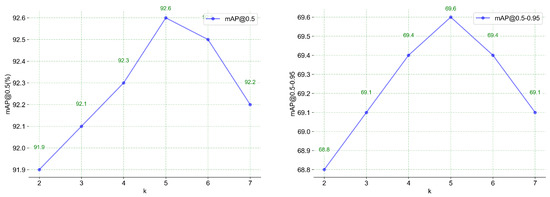

4.4. Parameter Analysis

This section analyzes the effect of the parameter k defined in Equation (1). As shown in Figure 8, our method achieves its highest mAP@0.5 and mAP@0.5–0.95 on the Alibaba Tianchi dataset when k = 5, which is therefore used as the default setting.

Figure 8.

Quantitative parameter analysis of k on the Alibaba Tianchi dataset.

4.5. Ablation Study

Ablation experiments were conducted with YOLOv8n as the baseline to verify the effectiveness of the three innovations proposed in this paper: the EPC2f module, the ACLGF module, and the PS-MDH scheme. Each variant modifies the baseline as marked in Figure 3:

- YOLOv8+ACLGF: the ACLGF module is added after SPPF, and its output replaces the output of SPPF (red dashed box).
- YOLOv8+EPC2f: the original C2f modules are replaced with EPC2f (green dashed boxes).
- YOLOv8+PS-MDH: the parameters of the three blue ConvModules are shared with each other, as are those of the three orange ConvModules (blue dashed box).

The results are shown in Table 2.

Table 2.

Ablation study of our EAP-YOLO model.

Introducing each innovation alone improves accuracy:

- The EPC2f module increases mAP@0.5 and mAP@0.5–0.95 by 1.3 and 0.4 percentage points, respectively.
- The ACLGF module yields increases of 1.2 and 0.5 percentage points.
- The PS-MDH scheme yields increases of 0.4 and 0.2 percentage points.

When EPC2f and ACLGF are introduced together, Params and FLOPs increase slightly and FPS decreases slightly. When the PS-MDH scheme is introduced, however, Params and FLOPs decrease significantly and FPS increases notably.

With EPC2f, ACLGF, and PS-MDH combined, mAP@0.5 and mAP@0.5–0.95 reach 93.4% and 70.3%, respectively, which are 2.0 and 1.2 percentage points higher than the baseline. Params and FLOPs fall to 2.9 M and 7.8 G, respectively, 0.2 M and 0.3 G lower than the baseline. FPS rises to 86.6, 2.7 higher than the baseline. These results show that the three innovations improve accuracy while also slightly reducing the number of parameters and the computational load.

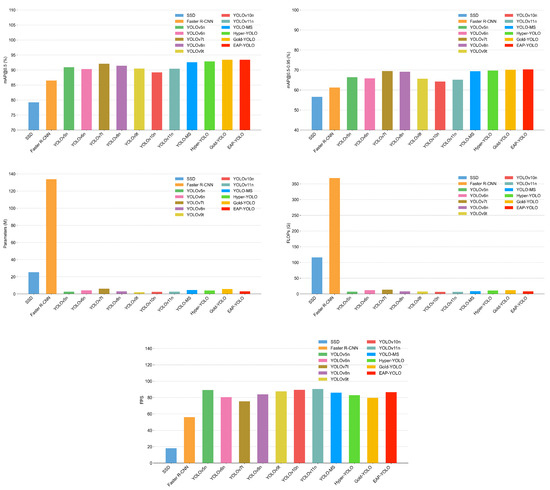

4.6. Quantitative Comparison with Other YOLO Models

To further validate the advantages of EAP-YOLO for power violation detection, we conducted a comprehensive quantitative comparison with 12 other object detection models. To ensure fairness, all experiments were run in the same hardware and software environment, and all models were trained on the training set of the Alibaba Tianchi competition dataset. Table 3 presents the results, and Figure 9 visualizes them for readability.

EAP-YOLO achieves an mAP@0.5 of 93.4% and an mAP@0.5–0.95 of 70.3%, higher than any of the 12 other models. On the three efficiency metrics, it falls between two groups of models:

- Its Params and FLOPs, 2.9 M and 7.8 G, are lower than those of SSD, Faster R-CNN, YOLOv6n, YOLOv7t, YOLOv8n, YOLO-MS, Gold-YOLO, and Hyper-YOLO, but higher than those of YOLOv5n, YOLOv9t, YOLOv10n, and YOLOv11n.
- Its FPS of 86.6 is higher than that of the first group of models but lower than that of YOLOv5n, YOLOv9t, YOLOv10n, and YOLOv11n.

Of these three metrics, FPS is the one enterprises care about most, because the detector must keep pace with the frame rate of the surveillance cameras. As shown in Table 3, the FPS of EAP-YOLO is close to that of YOLOv5n, YOLOv9t, YOLOv10n, and YOLOv11n. Moreover, commonly used surveillance cameras, such as those from Hikvision and Dahua, typically run at no more than 30 frames per second, so EAP-YOLO fully meets the real-time requirements of enterprises.
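The real-time margin can be checked with a little arithmetic. This check assumes that the 86.6 FPS measured on the TITAN RTX carries over to the deployment hardware and that frames are processed one at a time, without batching. The function name is hypothetical.

```python
def realtime_budget(model_fps, camera_fps=30.0):
    """Return the model's per-frame latency (ms), the camera's frame interval (ms), and
    how many camera streams one model instance could keep up with, frame by frame."""
    latency_ms = 1000.0 / model_fps
    frame_interval_ms = 1000.0 / camera_fps
    streams = int(model_fps // camera_fps)
    return latency_ms, frame_interval_ms, streams


latency, interval, streams = realtime_budget(86.6)  # about 11.5 ms vs 33.3 ms, 2 streams
```

At about 11.5 ms per frame against a 33.3 ms frame interval, a single model instance has roughly a threefold margin for one 30-FPS camera. Under the stated assumptions, it could also serve two such cameras.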

Table 3.

Quantitative comparison between our EAP-YOLO and 12 other object detection models.

Figure 9.

Visualized version of Table 3.

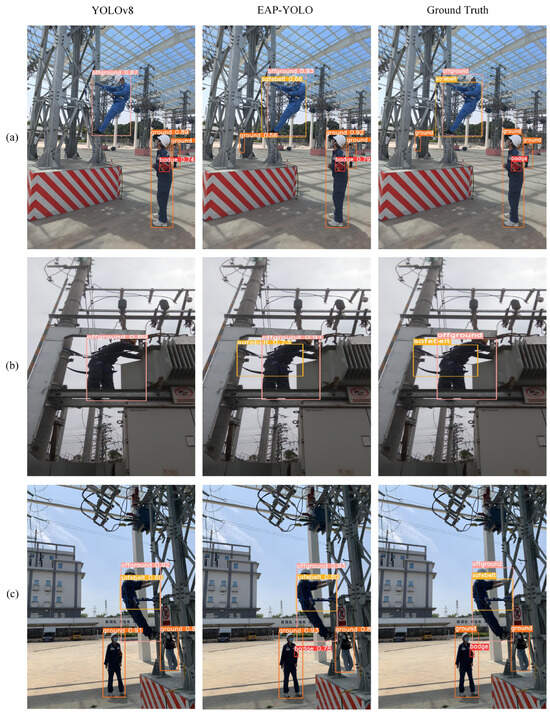

4.7. Subjective Evaluation

To visually assess EAP-YOLO on power operation violation detection, we compared three representative results from YOLOv8 and EAP-YOLO:

- Figure 10a: the contrast between the supervisor armband and the background is low. YOLOv8 failed to detect the armband, whereas EAP-YOLO detected it, illustrating how the ACLGF module enhances feature discriminability.
- Figure 10b: YOLOv8 failed to detect an irregularly shaped safety harness, whereas EAP-YOLO detected it correctly, illustrating how the EPC2f module enhances feature representation for irregularly shaped targets.
- Figure 10c: the distant ground workers were hard to distinguish from the background, and the high-altitude workers were wearing irregularly shaped safety harnesses. YOLOv8 missed both kinds of targets, whereas EAP-YOLO detected them, illustrating the contributions of both the ACLGF and EPC2f modules.

Figure 10.

Visual comparison of detection results between our EAP-YOLO and YOLOv8. (a–c) are selected from the Alibaba Tianchi dataset.

5. Conclusions

This paper proposes the EAP-YOLO model to address three challenges in EPOVI: irregular target contours, insufficient contrast between targets and background, and the trade-off between detection accuracy and computational cost. The main contributions are as follows:

- An EPC2f module integrates edge convolution with depthwise separable convolution to enhance the model’s ability to extract features from irregularly contoured targets.
- An ACLGF module adaptively fuses local and global features through a learnable weighting mechanism, improving the model’s ability to distinguish targets in complex backgrounds.
- A PS-MDH scheme significantly reduces the parameter count of the detection heads while enabling cross-scale feature interaction, thereby enhancing the detection of multi-scale targets.

Ablation experiments on the Alibaba Tianchi dataset validate the effectiveness of the EPC2f module, the ACLGF module, and the PS-MDH scheme. Comparative experiments with 12 other object detection models show that the proposed model achieves the highest detection accuracy while meeting real-time requirements.

As shown in Table 3, EAP-YOLO achieves the highest mAP@0.5 and mAP@0.5–0.95 among the 13 object detection models. However, it is less efficient than lightweight models such as YOLOv10n and YOLOv11n in terms of Params, GFLOPs, and FPS. The main reason is that EAP-YOLO is built on YOLOv8n, which, as Table 3 shows, is clearly less efficient than YOLOv10n and YOLOv11n on these three metrics, and our modifications improve them only slightly. To improve EAP-YOLO on these metrics, future work will adopt other lightweight object detection models, such as YOLOv12 [42], as the baseline.

Our experiments are currently limited to the Alibaba Tianchi dataset, and several situations have not been considered. Examples include extreme lighting conditions, highly cluttered scenes, and rare cases that may be under-represented in that dataset. To improve the robustness and generalization ability of EAP-YOLO, future work will train and test it on more diverse datasets, such as the Site Object Detection dataset [43].

Author Contributions

Conceptualization, X.Q.; formal analysis, X.Q.; funding acquisition, W.W.; methodology, X.Q. and L.L.; project administration, W.D.; resources, W.W.; software, L.L., Y.L. and L.Z.; supervision, W.W.; validation, W.W. and Z.C.; writing—original draft, L.L.; writing—review and editing, X.Q. and W.D. All authors have read and agreed to the published version of the manuscript.

Funding

This work is supported by the Natural Science Foundation of Henan (Grant No. 252300421063), the Research Project of Henan Province Universities (Grant No. 24ZX005), the National Natural Science Foundation of China (Grant No. 62076223), and the Third Batch of Science and Technology Projects for Production Frontline of State Grid Jiangsu Electric Power Co., Ltd. in 2023 (Grant No. DL-2023Z-198).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The original contributions presented in this study are included in the article. Further inquiries can be directed to the corresponding authors.

Conflicts of Interest

The authors declare no conflicts of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
|---|---|
| YOLO | You Only Look Once |
| EPC2f | Edge perception cross-stage partial fusion with two convolutions |
| EPOVI | Electric power operation violation identification |
| CBAM | Convolutional block attention module |
| CIoU | Complete intersection over union |
| EIoU | Efficient intersection over union |
| MPDIoU | Intersection over union with minimum points distance |
| C2f | Cross-stage partial fusion with two convolutions |
| ACLGF | Adaptive combination of local and global features |
| PS-MDH | Parameter sharing of multi-scale detection heads |
| CSPNet | Cross-stage partial network |
| SPPF | Spatial pyramid pooling-fast |
| PAFPN | Path aggregation feature pyramid network |
| FPN | Feature pyramid network |
| DSConv | Depthwise separable convolution |
| SGD | Stochastic gradient descent |
| NMS | Non-maximum suppression |
| Params | Parameter count |
| FLOPs | Floating-point operations |
| FPS | Frames per second |
| mAP | Mean average precision |

References

- Wang, F.; Liu, H.; Liu, L.; Liu, C.; Tan, C.; Zhou, H. A Digital Advocacy and Leadership Mechanism that Empowers the Construction of Digital-Intelligent Strong Power Grid. Adv. Eng. Technol. Res. 2024, 10, 249.

- Yi, M.; An, Z.; Liu, J.; Yu, S.; Huang, W.; Peng, Z. Recognition Method of Abnormal Behavior in Electric Power Violation Monitoring Video Based on Computer Vision. In International Conference on Multimedia Technology and Enhanced Learning; Springer: Berlin/Heidelberg, Germany, 2023; pp. 168–182.

- Girshick, R.; Donahue, J.; Darrell, T.; Malik, J. Rich feature hierarchies for accurate object detection and semantic segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 580–587.

- Qian, X.; Wang, C.; Wang, W.; Yao, X.; Cheng, G. Complete and Invariant Instance Classifier Refinement for Weakly Supervised Object Detection in Remote Sensing Images. IEEE Trans. Geosci. Remote Sens. 2024, 62, 5627713.

- Girshick, R. Fast R-CNN. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015; pp. 1440–1448.

- Qian, X.; Wu, B.; Cheng, G.; Yao, X.; Wang, W.; Han, J. Building a Bridge of Bounding Box Regression Between Oriented and Horizontal Object Detection in Remote Sensing Images. IEEE Trans. Geosci. Remote Sens. 2023, 61, 5605209.

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards real-time object detection with region proposal networks. IEEE Trans. Pattern Anal. Mach. Intell. 2016, 39, 1137–1149.

- Qian, X.; Huo, Y.; Cheng, G.; Yao, X.; Wang, W. Mining High-quality Pseudoinstance Soft Labels for Weakly Supervised Object Detection in Remote Sensing Images. IEEE Trans. Geosci. Remote Sens. 2023, 61, 5607615.

- Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.Y.; Berg, A.C. SSD: Single shot multibox detector. In Computer Vision–ECCV 2016: Proceedings of the 14th European Conference, Amsterdam, The Netherlands, 11–14 October 2016, Proceedings, Part I 14; Springer International Publishing: Berlin/Heidelberg, Germany, 2016; pp. 21–37.

- Qian, X.; Zeng, Y.; Wang, W.; Zhang, Q. Co-saliency Detection Guided by Group Weakly Supervised Learning. IEEE Trans. Multimed. 2023, 25, 1810–1818.

- He, K.; Gkioxari, G.; Dollár, P.; Girshick, R. Mask R-CNN. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 2961–2969.

- Xie, X.; Cheng, G.; Wang, J.; Yao, X.; Han, J. Oriented R-CNN for object detection. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 10–17 October 2021; pp. 3520–3529.

- Qian, X.; Li, C.; Wang, W.; Yao, X.; Cheng, G. Semantic Segmentation Guided Pseudo Label Mining and Instance Re-Detection for Weakly Supervised Object Detection in Remote Sensing Images. Int. J. Appl. Earth Obs. Geoinf. 2023, 119, 103301.

- Liu, Q.; Hao, F.; Zhou, Q.; Dai, X.; Chen, Z.; Wang, Z. An effective electricity worker identification approach based on Yolov3-Arcface. Heliyon 2024, 10, e26184.

- Kang, F.; Li, J. Research on the Detection Method of Electric Power Workers not Wearing Helmets based on YOLO Algorithm. In Proceedings of the 2023 9th International Conference on Computing and Artificial Intelligence, Tianjin, China, 17–20 March 2023; pp. 66–71.

- Yan, K.; Li, Q.; Li, H.; Wang, H.; Fang, Y.; Xing, L.; Yang, Y.; Bai, H.; Zhou, C. Deep learning-based substation remote construction management and AI automatic violation detection system. IET Gener. Transm. Distrib. 2022, 16, 1714–1726.

- Xie, X.; Chang, Z.; Lan, Z.; Chen, M.; Zhang, X. Improved YOLOv7 Electric Work Safety Belt Hook Suspension State Recognition Algorithm Based on Decoupled Head. Electronics 2024, 13, 4017.

- Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You only look once: Unified, real-time object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 779–788.

- Redmon, J.; Farhadi, A. YOLO9000: Better, faster, stronger. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 7263–7271.

- Redmon, J.; Farhadi, A. YOLOv3: An incremental improvement. arXiv 2018, arXiv:1804.02767.

- Bochkovskiy, A.; Wang, C.Y.; Liao, H.Y.M. YOLOv4: Optimal speed and accuracy of object detection. arXiv 2020, arXiv:2004.10934.

- Li, C.; Li, L.; Jiang, H.; Weng, K.; Geng, Y.; Li, L.; Ke, Z.; Li, Q.; Cheng, M.; Nie, W.; et al. YOLOv6: A single-stage object detection framework for industrial applications. arXiv 2022, arXiv:2209.02976.

- Wang, C.Y.; Bochkovskiy, A.; Liao, H.Y.M. YOLOv7: Trainable bag-of-freebies sets new state-of-the-art for real-time object detectors. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 7464–7475.

- Wang, C.Y.; Yeh, I.H.; Liao, H.Y.M. YOLOv9: Learning what you want to learn using programmable gradient information. In European Conference on Computer Vision; Springer Nature: Cham, Switzerland, 2024; pp. 1–21.

- Wang, A.; Chen, H.; Liu, L.; Chen, K.; Lin, Z.; Han, J.; Ding, G. YOLOv10: Real-time end-to-end object detection. arXiv 2024, arXiv:2405.14458.

- Cai, C.; Nie, J.; Zhang, Z.; Zhang, X.; Tang, P.; He, Z. Violation Recognition of Power Construction Operations Based on Improved YOLOv8 Algorithm. In Annual Conference of China Electrotechnical Society; Springer Nature: Singapore, 2024; pp. 517–526.

- Jiao, X.; Li, C.; Zhang, X.; Fan, J.; Cai, Z.; Zhou, Z.; Wang, Y. Detection Method for Safety Helmet Wearing on Construction Sites Based on UAV Images and YOLOv8. Buildings 2025, 15, 354.

- Jiang, T.; Li, Z.; Zhao, J.; An, C.; Tan, H.; Wang, C. An improved safety belt detection algorithm for high-altitude work based on YOLOv8. Electronics 2024, 13, 850.

- Tianchi Platform, Guangdong Power Information Technology Co., Ltd. Guangdong Power Grid Smart Field Operation Challenge, Track 3: High-Altitude Operation and Safety Belt Wearing Dataset. Dataset. 2021. Available online: https://tianchi.aliyun.com/specials/promotion/gzgrid (accessed on 1 July 2021).

- Wang, C.Y.; Liao, H.Y.M.; Wu, Y.H.; Chen, P.Y.; Hsieh, J.W.; Yeh, I.H. CSPNet: A new backbone that can enhance learning capability of CNN. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops, Seattle, WA, USA, 14–19 June 2020; pp. 390–391.

- Liu, S.; Qi, L.; Qin, H.; Shi, J.; Jia, J. Path aggregation network for instance segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 8759–8768.

- Lin, T.Y.; Dollár, P.; Girshick, R.; He, K.; Hariharan, B.; Belongie, S. Feature pyramid networks for object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 2117–2125.

- Feng, C.; Zhong, Y.; Gao, Y.; Scott, M.R.; Huang, W. TOOD: Task-aligned one-stage object detection. In Proceedings of the 2021 IEEE/CVF International Conference on Computer Vision (ICCV), Montreal, QC, Canada, 10–17 October 2021; pp. 3490–3499.

- Stergiou, A.; Poppe, R. AdaPool: Exponential adaptive pooling for information-retaining downsampling. IEEE Trans. Image Process. 2022, 32, 251–266.

- Chollet, F. Xception: Deep learning with depthwise separable convolutions. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 1251–1258.

- Ultralytics. YOLOv5 (v7.0). 2022. Available online: https://github.com/ultralytics/yolov5 (accessed on 22 November 2022).

- Jocher, G. YOLOv8. 2023. Available online: https://github.com/ultralytics/ultralytics (accessed on 10 January 2023).

- Khanam, R.; Hussain, M. YOLOv11: An overview of the key architectural enhancements. arXiv 2024, arXiv:2410.17725.

- Chen, Y.; Yuan, X.; Wang, J.; Wu, R.; Li, X.; Hou, Q.; Cheng, M.-M. YOLO-MS: Rethinking multi-scale representation learning for real-time object detection. IEEE Trans. Pattern Anal. Mach. Intell. 2025, 47, 4240–4252.

- Feng, Y.; Huang, J.; Du, S.; Ying, S.; Yong, J.; Li, Y.; Ding, G.; Ji, R.; Gao, Y. Hyper-YOLO: When visual object detection meets hypergraph computation. IEEE Trans. Pattern Anal. Mach. Intell. 2024, 47, 2388–2401.

- Wang, C.; He, W.; Nie, Y.; Guo, J.; Liu, C.; Han, K.; Wang, Y. Gold-YOLO: Efficient object detector via gather-and-distribute mechanism. Adv. Neural Inf. Process. Syst. 2023, 36, 51094–51112.

- Tian, Y.; Ye, Q.; Doermann, D. YOLOv12: Attention-centric real-time object detectors. arXiv 2025, arXiv:2502.12524.

- Duan, R.; Deng, H.; Tian, M.; Deng, Y.; Lin, J. SODA: A large-scale open site object detection dataset for deep learning in construction. Autom. Constr. 2022, 142, 104499.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).