Abstract

With the continuous growth of maritime activities and the shipping trade, the application of maritime target detection in remote sensing images has become increasingly important. However, existing detection methods face numerous challenges, such as small target localization, recognition of targets with large aspect ratios, and high computational demands. In this paper, we propose an improved target detection model, named YOLOv5-ASC, to address the challenges in maritime target detection. The proposed YOLOv5-ASC integrates three core components: an Attention-based Receptive Field Enhancement Module (ARFEM), an optimized SIoU loss function, and a Deformable Convolution Module (C3DCN). These components work together to enhance the model’s performance in detecting complex maritime targets by improving its ability to capture multi-scale features, optimize the localization process, and adapt to the large aspect ratios typical of maritime objects. Experimental results show that, compared to the original YOLOv5 model, YOLOv5-ASC achieves a 4.36 percentage point increase in mAP@0.5 and a 9.87 percentage point improvement in precision, while maintaining computational complexity within a reasonable range. The proposed method not only achieves significant performance improvements on the ShipRSImageNet dataset but also demonstrates strong potential for application in complex maritime remote sensing scenarios.

1. Introduction

With the increasing frequency of maritime trade and ship activity, the volume of maritime video and image data has grown rapidly. However, traditional ways of obtaining maritime images, such as cameras installed in ports or on sailboats, suffer from limited information, fixed viewpoints, and unstable image quality [1,2]. These limitations make it difficult for traditional methods to meet the demands of increasingly complex maritime scene analysis. In contrast, maritime images acquired using remote sensing technology have significant advantages: they provide a wider perspective and richer detail, and they offer higher stability and flexibility [3,4,5,6]. In recent years, with the continuous progress of technology, the field of maritime remote sensing target detection has received wide attention and developed rapidly.

Maritime images obtained through remote sensing can be divided into two categories: ocean images, which contain only ship targets against an ocean background, and coastal images, which contain ships together with coastal buildings against more complex backgrounds. The distinction matters because coastal areas contain more buildings, port facilities, and other man-made structures, which increases scene complexity. Coastal images represent real-world scenarios in which ships appear alongside other targets (such as docks or cranes), and together the two categories cover most of the environments in which ships are encountered in practice. Coastal images can thus be seen as an extension of ship-target images and a subset of maritime images, offering more application scenarios for future maritime target detection.

Target recognition algorithms can generally be divided into two categories: traditional image processing and pattern recognition algorithms, and neural network-based algorithms. Traditional algorithms rely on classical machine learning, image processing, and pattern recognition techniques, typically performing target recognition through manually designed features or heuristic methods. Neural network-based algorithms, on the other hand, include both shallow and deep architectures. Shallow models, such as multilayer perceptrons (MLPs) and support vector machines (SVMs; strictly a kernel method rather than a neural network), use relatively simple structures for pattern recognition, but their recognition capabilities often fall short of practical requirements. Deep neural networks offer higher performance, simplified design processes, and better adaptability to complex scenarios. Deep learning detectors can be further divided into two categories: two-stage algorithms (e.g., Fast R-CNN [7] and Faster R-CNN [8]) and single-stage algorithms (e.g., YOLO [9,10,11,12,13,14] and SSD [15]). Two-stage algorithms usually achieve higher recognition accuracy, but their high computational requirements and slower running speed make them better suited to scenarios with sufficient computational resources. Single-stage algorithms are faster and require fewer computational resources at the cost of slightly lower accuracy, which makes them better suited to scenarios with strict real-time requirements.

Existing maritime remote sensing detection methods (e.g., Related-YOLO [16] and YOLOv8: Ship Detection in Foggy Weather [17]) have achieved significant performance improvements, but still face many challenges. For example, accurate localization of small targets remains difficult because targets are densely distributed and each covers only a few pixels, which limits feature extraction. For targets with large aspect ratios, such as elongated ships, the mismatch between the ground truth region and the square receptive field in RoI Align leads to detection errors. In addition, traditional models based on basic convolutional operations are limited in their ability to cope with these problems.

The intended application of the proposed method primarily focuses on satellite target detection, particularly for maritime remote sensing tasks. In these scenarios, due to the harsh space environment, the onboard computer (SOC) on satellites faces significant hardware and computational resource constraints, typically relying on embedded devices such as FPGA development boards whose processing power is far inferior to that of ground-based systems. Therefore, algorithms designed for satellite platforms must be both lightweight and efficient. Although advanced architectures like YOLOv8 and other state-of-the-art frameworks offer performance improvements, they require higher computational resources, making them less suitable for resource-constrained platforms. In contrast, YOLOv5 strikes an excellent balance between detection accuracy and computational efficiency, with superior portability and deployment flexibility, enabling faster inference and more reliable detection on embedded or edge devices. Consequently, in satellite-based maritime remote sensing scenarios, choosing YOLOv5 over YOLOv8 is a wise decision, as it achieves an optimal trade-off between performance and resource requirements.

Building on the strengths of YOLOv5, this paper addresses the specific challenges of maritime target detection by introducing several targeted enhancements. These include:

- Attention-based Receptive Field Enhancement Module: This paper introduces an original module that combines feature attention mechanisms with receptive field enhancement. The module uses different dilation rates to extract features with varying scales and dependencies, and further strengthens the extracted information using an attention mechanism [18]. Compared to simple receptive field enhancement, this structure better improves the model’s ability to extract multi-scale information, thereby significantly enhancing the model’s classification accuracy.

- Optimized model for maritime remote sensing scenarios: The proposed YOLOv5-ASC model integrates an attention-based receptive field enhancement module (ARFEM), deformable convolutions (C3DCN), and the SIoU loss function to optimize feature extraction for maritime remote sensing. The attention mechanism enhances the model’s ability to capture multi-scale information, while deformable convolutions adaptively handle the large aspect ratios of ship targets, thereby improving detection performance. The SIoU (SCYLLA-IoU) loss function enhances the model’s accuracy in locating targets by better handling the geometric relationship between predicted and ground truth boxes, especially in cases of extreme aspect ratios or target overlaps. This optimization addresses challenges specific to maritime remote sensing, such as limitations in computational and transmission capabilities, as well as the complexity of detecting both ships and port-related objects within the same scene.

In this paper, an improved model of YOLOv5 using Attention Mechanism and Multi-Scale Feature Fusion is proposed, aiming to overcome the challenges faced by the original model in maritime remote sensing scenarios, such as large aspect ratio targets and targets in complex backgrounds. Furthermore, we evaluate the proposed model on ShipRSImageNet and demonstrate that it can improve the detection accuracy of remotely sensed maritime targets. Finally, the proposed model also demonstrates its effectiveness on additional maritime-related datasets.

2. Related Work

Maritime remote sensing target detection applies remote sensing imagery to the detection of maritime targets. Many detection algorithms have been proposed for this scenario, but the task still faces many challenges.

Ma et al. [16] proposed a new model called Related-YOLO to address ship rotation and fine-grained characteristics. Although the model includes a detection head specifically designed for small targets, it does not fully utilize shallow feature information. Liang et al. [17] proposed an improved YOLOv8 model for detecting nearshore ships under fog interference, but the model did not take into account the specific characteristics of ship targets and maritime scenes. Abhinaba H. et al. [19] designed a self-attention-based residual network for ship detection and classification, but their approach only implements a basic network design and does not address the unique challenges of maritime remote sensing images, such as aspect ratios that are large compared to those in other remote sensing images. Xiong et al. [20] proposed an explainable attention network for fine-grained ship image classification to explore transparent and interpretable prediction processes. However, their study does not address the frequent need to detect numerous small targets in maritime remote sensing images. Zalukhu et al. [21] employed an extended YOLOv5x6 network to identify ships in the large-scale ShipRSImageNet dataset, but the network requires 209.8 GFLOPs of computation, making it unsuitable for resource-constrained maritime detection platforms. Guo et al. [22] proposed a detection algorithm based on shape-aware feature learning, which considers the large aspect ratio challenge in fine-grained ship detection. However, their method does not fully account for the identification of numerous small targets in complex backgrounds and fails to effectively utilize multi-scale information. Chenguang and Guifa [23] explored the application of an improved YOLOv5s model in remote sensing image target detection, applying a structurally optimized version of the model to assess regional poverty. Although their study improved detection performance in a specific domain, it primarily focused on land-based scenes and did not address the specific challenges of maritime remote sensing, such as dense small targets, complex sea clutter, and large aspect ratio variations of ships.

In conclusion, while existing maritime remote sensing object detection methods have demonstrated certain performance enhancements, several deficiencies remain. The first is the localization accuracy for large numbers of small targets: small targets frequently cluster, which blurs their boundaries, and each one covers only a few pixels, which makes it difficult to capture clear texture features. The second is the detection of targets with large aspect ratios. Ships in satellite images often have elongated shapes, which creates a discrepancy between the ground-truth regions and the square receptive fields employed in RoI Align operations and, in turn, degrades detection performance [22]; conventional models built on basic convolution likewise have square receptive fields [24]. The third is that small target categories are easily confused: the fine-grained classification of the dataset may result in intra-class differences exceeding inter-class differences, thereby increasing the difficulty of recognition. The fourth is the difficulty of controlling model size. While models with more parameters typically perform better, excessive parameters limit a model’s applicability, because the demand for remote sensing target recognition often arises on platforms with limited computational power. Although satellite remote sensing image recognition can be performed without edge computing, the recognition workload is then much larger, so the need to limit the computational load, which is often overlooked in maritime remote sensing target recognition, remains.

In order to address the aforementioned challenges, this paper proposes an improved model. This model incorporates an Attention-based Receptive Field Enhancement Module (ARFEM), the purpose of which is to enhance the localization of small objects, a Deformable Convolutional Network (C3DCN) to adapt to targets with large aspect ratios, and an optimized SIoU loss function to enhance bounding box regression accuracy. The model achieves a good balance between accuracy and computational efficiency, significantly improving detection performance and demonstrating potential for applications in resource-constrained platforms and complex scenarios.

3. Proposed Network

3.1. The Structure of the Network

The proposed model, named YOLOv5-ASC, is an enhanced version of the YOLOv5 model specifically optimized for maritime remote sensing target detection. The proposed YOLOv5-ASC model integrates Attention-based Receptive Field Enhancement (ARFEM), Deformable convolutions (C3DCN), and the SIoU loss function to optimize feature extraction for maritime remote sensing. The decision to base this model on YOLOv5 is made for several key reasons. First, YOLOv5 is an optimized model that offers high detection accuracy while maintaining low computational overhead, making it ideal for real-time applications. Second, this work builds upon previous research by further optimizing YOLOv5 to better address the specific challenges encountered in remote sensing maritime target detection. Finally, while newer models such as YOLOv8 offer enhanced features, YOLOv5 is preferred due to its broader support across hardware platforms, particularly on embedded and edge computing devices, making it especially suitable for resource-constrained platforms used in maritime remote sensing scenarios.

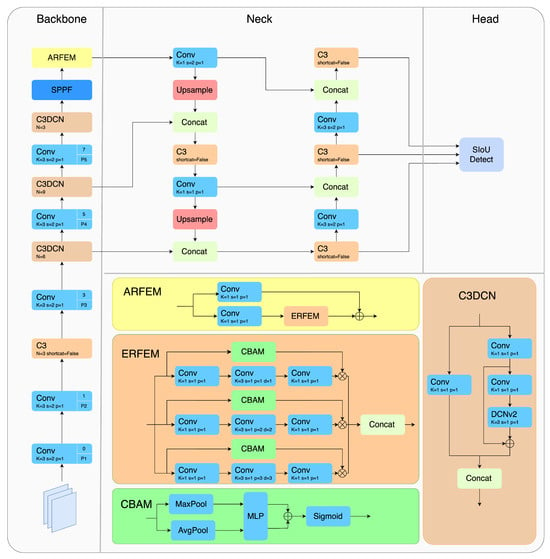

This paper aims to improve the performance of YOLOv5 [9] on small objects in maritime remote sensing images. As shown in Figure 1, compared to the original algorithm, the proposed YOLOv5-ASC model integrates attention-based receptive field enhancement (ARFEM), deformable convolutions (C3DCN), and the SIoU loss function to optimize feature extraction for maritime remote sensing. The attention mechanism enhances the model’s ability to capture multi-scale information, while deformable convolutions can adaptively handle the large aspect ratio of ship targets, thereby improving detection performance. The SIoU (SCYLLA-IoU) loss function improves the model’s accuracy in localizing targets by better handling the geometric relationship between the predicted boxes and ground truth boxes, particularly in cases of extreme aspect ratios or target overlap. This optimization addresses challenges specific to maritime remote sensing, such as limitations in computational and transmission capacities, as well as the complexity of detecting both ships and port-related objects in the same scene. The model also retains other original YOLOv5 modules, such as SPPF (Spatial Pyramid Pooling Fast), which is designed to capture multi-scale feature information through pooling operations at different scales.

Figure 1.

The overall structure of YOLOv5-ASC.

3.2. Deformable Convolution

In convolutional neural networks (CNNs), features are typically extracted through convolution operations. However, the limitation of standard convolution lies in its ability to extract only fixed geometric shapes, making it difficult to accurately capture irregular shapes. In maritime vessel target recognition, targets often exhibit significant aspect ratio deformations. These deformations can lead to missed detections or false positives, reducing accuracy and the mean Average Precision (mAP). This occurs because standard convolution uses fixed-sized kernels, resulting in uniform receptive fields that cannot effectively handle complex target shapes.

Particularly for long and narrow objects like ships with large aspect ratios, fixed convolution kernels are unable to adapt to the shape variations, causing important feature information to be ignored. Additionally, in maritime environments, vessel targets not only have large aspect ratios but are also affected by factors such as pose variations and surface reflections, which exacerbate this issue.

To address these challenges, the proposed model utilizes Deformable Convolution V2, which significantly improves the original C3 module. By introducing Deformable Convolution, the model adapts the sampling method to better accommodate target deformations, thus enhancing detection performance. Since its introduction in 2017 with version v1 [25], the Deformable Convolution algorithm has undergone multiple improvements, with version v2 [26] released in 2018. The proposed model incorporates this improved version of Deformable Convolution, further boosting detection effectiveness.

Deformable convolution adds learned horizontal and vertical offsets to the regular sampling grid of a standard convolution, so that the sampling points shift away from their original positions. Deformable Convolution v2 additionally learns a modulation factor for each sampling point.

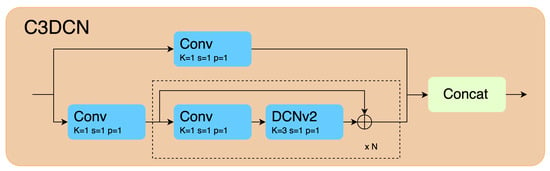

Deformable convolutions are introduced into the model to form the C3DCN module, which replaces the bottleneck layers of the original C3 module. This design makes it possible to control the number of deformable convolution layers, as shown in Figure 2 (the same C3DCN as in Figure 1). The dashed box and “×N” in the figure indicate that the contents within the dashed box are repeated N times.

Figure 2.

Introduction of deformable convolutions for the C3DCN module.

The process of standard convolution can usually be divided into two parts: sampling over the sampling region and assigning weights to the sampled data. The sampling region $R$ is determined by the dilation factor and the size of the convolution kernel. For any point $p_0$ (representing one pixel) on the output feature map $y$, Formula (1) can be obtained, where $p_n$ is an instance in the sampling region $R$, $w$ is the kernel weight, and $x$ is the input feature map.

$$y(p_0) = \sum_{p_n \in R} w(p_n) \cdot x(p_0 + p_n) \tag{1}$$

In deformable convolution, compared to standard convolution, the offset $\Delta p_n$ is introduced, along with a modulation factor $\Delta m_n$, to limit the convolution’s receptive field and avoid interference from regions irrelevant to the target. The modulation factor is typically learned jointly from the input feature map through an additional convolutional layer. Its values are usually in the range [0, 1], representing the “importance” or “reliability” of each sampling point. Under this formulation, the convolution result is

$$y(p_0) = \sum_{p_n \in R} w(p_n) \cdot x(p_0 + p_n + \Delta p_n) \cdot \Delta m_n \tag{2}$$

However, since the offsets are mostly fractional and cannot correspond one-to-one with the actual data points on the feature map, bilinear interpolation $G$ is required. In this case, the expression for $x(p)$, with $p = p_0 + p_n + \Delta p_n$, can be written as Equation (3), where $m$ represents the spatial coordinate of the input feature $x$ involved in the computation.

$$x(p) = \sum_{m} G(m, p) \cdot x(m) \tag{3}$$
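The sampling procedure described above can be illustrated with a minimal pure-Python sketch. It assumes a single-channel feature map stored as a list of rows, a 3×3 kernel, and zero contribution from positions outside the map; the function names `bilinear_sample` and `deformable_conv_at` are hypothetical, and the sketch is not the C3DCN implementation used in the model.

```python
import math


def bilinear_sample(feat, y, x):
    """Sample a 2D feature map (list of rows) at a fractional position (y, x).

    Uses the bilinear kernel G(m, p) = g(m_y, p_y) * g(m_x, p_x) with
    g(a, b) = max(0, 1 - |a - b|). Positions outside the map contribute zero
    (an assumption of this sketch).
    """
    height, width = len(feat), len(feat[0])
    y0, x0 = math.floor(y), math.floor(x)
    value = 0.0
    for my in (y0, y0 + 1):
        for mx in (x0, x0 + 1):
            if 0 <= my < height and 0 <= mx < width:
                g = max(0.0, 1 - abs(my - y)) * max(0.0, 1 - abs(mx - x))
                value += g * feat[my][mx]
    return value


def deformable_conv_at(feat, weights, p0, offsets, masks):
    """Modulated deformable 3x3 convolution evaluated at one output point p0.

    weights: 3x3 kernel; offsets: nine (dy, dx) pairs, one per sampling point;
    masks: nine modulation factors in [0, 1].
    """
    grid = [(dy, dx) for dy in (-1, 0, 1) for dx in (-1, 0, 1)]  # region R
    out = 0.0
    for k, (dy, dx) in enumerate(grid):
        off_y, off_x = offsets[k]
        sample = bilinear_sample(feat, p0[0] + dy + off_y, p0[1] + dx + off_x)
        out += weights[dy + 1][dx + 1] * sample * masks[k]
    return out


# A 5x5 map whose value grows linearly with the column index.
feat = [[float(r * 5 + c) for c in range(5)] for r in range(5)]
ones = [[1.0] * 3 for _ in range(3)]
no_shift = deformable_conv_at(feat, ones, (2, 2), [(0.0, 0.0)] * 9, [1.0] * 9)
half_shift = deformable_conv_at(feat, ones, (2, 2), [(0.0, 0.5)] * 9, [1.0] * 9)
```

With zero offsets and unit modulation the result reduces to a standard convolution, while shifting every sampling point by half a pixel changes the output even though the kernel weights are unchanged.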

3.3. ARFEM

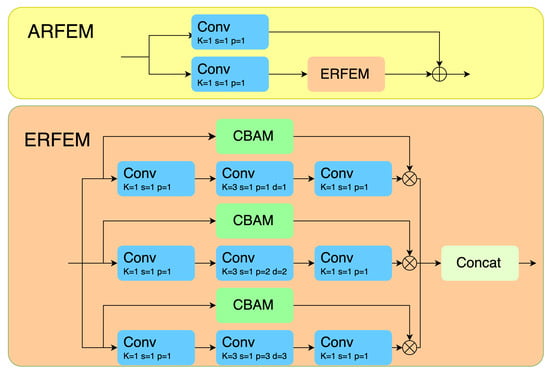

The attention-based receptive field enhancement module (ARFEM) combines the strengths of receptive field expansion and feature attention mechanisms to improve the model’s ability to extract multi-scale information. In traditional receptive field enhancement modules, although dilated convolutions can expand the receptive field and improve multi-scale feature capture, they still fall short of fully focusing on the most critical regions of the features. To address this issue, ARFEM integrates the Convolutional Block Attention Module (CBAM [27]) into the receptive field enhancement process, enabling the model to assign greater weight to specific regions and preserve more important information. The CBAM module is a lightweight attention mechanism module that aims to improve the feature expression ability of convolutional neural networks by paying attention to both channel and spatial information.

Specifically, ARFEM captures features at different scales through multiple dilated convolution branches, each of which adopts a different dilation rate (e.g., 1, 2, 3) to accommodate different ranges of dependencies, as shown in Figure 3. Meanwhile, the built-in attention mechanism dynamically adjusts the weight of each position according to its importance in the feature map, allowing the model to focus on critical regions and suppress the influence of irrelevant regions. In this way, ARFEM is able to effectively extract multi-scale features and optimize the use of receptive fields.
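To make the effect of the dilation rates concrete, the short sketch below computes the effective kernel extent of a dilated convolution and the padding that keeps the output the same size as the input. The 3×3 kernel size is an assumption for illustration, since the kernel size of the ARFEM branches is not stated here, and the helper names are hypothetical.

```python
def effective_kernel_size(kernel_size, dilation):
    """Spatial extent covered by a dilated kernel: k + (k - 1) * (d - 1)."""
    return kernel_size + (kernel_size - 1) * (dilation - 1)


def same_padding(kernel_size, dilation):
    """Padding that preserves spatial size for stride 1 and an odd kernel."""
    return dilation * (kernel_size - 1) // 2


# Assumed 3x3 kernels with the dilation rates mentioned in the text.
branches = {d: (effective_kernel_size(3, d), same_padding(3, d)) for d in (1, 2, 3)}
for dilation, (extent, padding) in branches.items():
    print(f"dilation={dilation}: covers {extent}x{extent}, padding={padding}")
```

Under this assumption the three branches cover 3×3, 5×5, and 7×7 neighborhoods with the same number of weights, which is how the module gathers context at several scales without adding parameters per branch.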

In addition, ARFEM further improves the feature representation by fusing the features extracted from each dilated convolutional branch in a weighted aggregation layer. The attention mechanism assigns a different weight to each branch, which balances the fusion of multi-scale information and improves the model’s adaptability and classification accuracy in maritime remote sensing and other environments. Overall, the attention-based receptive field enhancement module significantly improves the model’s ability to capture multi-scale targets by fusing receptive field enhancement with the feature attention mechanism, and exhibits higher robustness and performance in tasks such as target detection [18].

Figure 3.

Attention-based Receptive Field Enhancement Module (ARFEM) structure (which is the same as the ARFEM in Figure 1).

3.4. SIoU

Prior to the introduction of the SIoU loss function, loss functions did not consider the directional relationship between the ground truth and predicted boxes, only the distance, overlap area, and aspect ratio between them. The SIoU loss function takes the vector angle between the two boxes into account and redefines the loss accordingly [28]. It significantly increases the training speed and improves the accuracy while keeping the predicted boxes from wandering randomly during regression. The SIoU loss function consists of the following parts: distance and angle cost, shape cost, and IoU loss. It is worth noting that the newly introduced angle cost is included in the distance cost, as the formulas in this section show.

The purpose of introducing an angle cost is to reduce the number of free variables associated with distance. Basically, the model will first select either the X-axis or the Y-axis (choosing the closer axis) for prediction based on the distance to the target, and then continue to adjust the prediction along the relevant axis. To achieve this, the convergence process first tries to minimize the angle $\alpha$ when $\alpha \le \frac{\pi}{4}$, or $\beta = \frac{\pi}{2} - \alpha$ if $\alpha > \frac{\pi}{4}$. $\alpha$ is the angle between the line connecting the centers of the predicted box and the ground truth box and the horizontal direction. $\beta$ is the angle between the connecting line and the vertical direction. This approach ensures that the optimization process is more targeted and efficient, focusing on the most relevant angular changes.

The angle cost is computed using the following formula:

$$\Lambda = 1 - 2\sin^2\left(\arcsin(x) - \frac{\pi}{4}\right)$$

where

$$x = \frac{c_h}{\sigma} = \sin(\alpha)$$

$$\sigma = \sqrt{\left(b_{c_x}^{gt} - b_{c_x}\right)^2 + \left(b_{c_y}^{gt} - b_{c_y}\right)^2}$$

$$c_h = \max\left(b_{c_y}^{gt}, b_{c_y}\right) - \min\left(b_{c_y}^{gt}, b_{c_y}\right)$$

$b$ is the predicted box, which refers to the bounding box generated by the model to localize the object of interest. It is typically derived by regressing offsets from a predefined anchor or prior box. $b^{gt}$ is the ground truth box, which represents the actual location and size of the object manually annotated in the dataset. $(b_{c_x}, b_{c_y})$ and $(b_{c_x}^{gt}, b_{c_y}^{gt})$ are the horizontal and vertical coordinates of the centers of the predicted box and the ground truth box, and $c_h$ and $c_w$ are the vertical and horizontal distances between the centers of the predicted box and the ground truth box.

The distance cost is redefined to include the angle cost:

$$\Delta = \sum_{t=x,y} \left(1 - e^{-\gamma \rho_t}\right)$$

where

$$\rho_x = \left(\frac{b_{c_x}^{gt} - b_{c_x}}{c_w}\right)^2, \quad \rho_y = \left(\frac{b_{c_y}^{gt} - b_{c_y}}{c_h}\right)^2, \quad \gamma = 2 - \Lambda$$

Note that in [28] the $c_w$ and $c_h$ appearing in $\rho_x$ and $\rho_y$ denote the width and height of the smallest rectangle enclosing both boxes, not the center distances used in the angle cost.

The shape cost is defined as

$$\Omega = \sum_{t=w,h} \left(1 - e^{-\omega_t}\right)^{\theta}$$

and the relevant parameters at this time need to satisfy Equation (13),

$$\omega_w = \frac{\left|w - w^{gt}\right|}{\max\left(w, w^{gt}\right)}, \quad \omega_h = \frac{\left|h - h^{gt}\right|}{\max\left(h, h^{gt}\right)} \tag{13}$$

The values $w$ and $h$ represent the width and height of the predicted box, respectively, while $w^{gt}$ and $h^{gt}$ represent the width and height of the ground truth box. When the value of $\theta$ in the formula is set to 1, it will immediately optimize the shape, thereby compromising the free movement of the shape. According to the related experiments of the original author [28], the value of $\theta$ ranges from 2 to 6, and setting it to 4 has a better effect.

At this time, the definition formula of the box loss function is shown as Equation (14):

$$L_{box} = 1 - IoU + \frac{\Delta + \Omega}{2} \tag{14}$$

where $IoU$ is

$$IoU = \frac{\left|B \cap B^{GT}\right|}{\left|B \cup B^{GT}\right|} \tag{15}$$

where $B$ represents the predicted bounding box and $B^{GT}$ represents the ground truth bounding box. The intersection is the area of overlap between the two boxes, while the union is the total area covered by both boxes combined. The above losses give the final SIoU loss function Formula (16) as shown below:

$$L = W_{box} L_{box} + W_{cls} L_{cls} + W_{obj} L_{obj} \tag{16}$$

- $L$: This is the final SIoU loss function, which combines the bounding box loss, classification loss, and objectness loss to optimize the model during training.
- $W_{box}$, $W_{cls}$, $W_{obj}$: These are the gain factors for the respective loss components. Each loss component (bounding box loss, classification loss, and objectness loss) is scaled by these factors to control its influence on the total loss.
- $L_{box}$: This is the loss related to the predicted bounding box, which measures how well the predicted box matches the ground truth. It includes the distance, angle, and shape costs. This loss term is newly introduced in the SIoU function.
- $L_{cls}$: This is the classification loss, which measures how accurately the model classifies objects into the correct categories. This loss term is included in the original model to evaluate whether each predicted box is classified correctly.
- $L_{obj}$: This is the objectness loss, which measures how accurately the model predicts the presence of an object within a given box. This is another loss term that is included in the original model to assess whether an object is present in the box.
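The box loss described in this section can be sketched in a few lines of pure Python. The sketch assumes boxes given as (center x, center y, width, height), a shape exponent of 4, and, following the formulation in [28], the width and height of the smallest enclosing rectangle as the normalizers in the distance cost. The names `angle_cost` and `siou_box_loss` are hypothetical, and this is an illustration rather than the training code used in the experiments.

```python
import math


def angle_cost(dx, dy, eps=1e-9):
    """Angle cost from the center offset (dx, dy): 1 - 2*sin^2(arcsin(x) - pi/4)."""
    sigma = math.hypot(dx, dy) + eps          # distance between the two centers
    x = min(1.0, abs(dy) / sigma)             # sin(alpha), clamped for safety
    return 1 - 2 * math.sin(math.asin(x) - math.pi / 4) ** 2


def siou_box_loss(pred, gt, theta=4.0, eps=1e-9):
    """Box loss 1 - IoU + (distance cost + shape cost) / 2 for two boxes."""
    px, py, pw, ph = pred
    gx, gy, gw, gh = gt
    p_x1, p_x2, p_y1, p_y2 = px - pw / 2, px + pw / 2, py - ph / 2, py + ph / 2
    g_x1, g_x2, g_y1, g_y2 = gx - gw / 2, gx + gw / 2, gy - gh / 2, gy + gh / 2

    # IoU: overlap area divided by union area.
    inter_w = max(0.0, min(p_x2, g_x2) - max(p_x1, g_x1))
    inter_h = max(0.0, min(p_y2, g_y2) - max(p_y1, g_y1))
    inter = inter_w * inter_h
    iou = inter / (pw * ph + gw * gh - inter + eps)

    # Distance cost, with the angle cost entering through gamma = 2 - Lambda.
    dx, dy = gx - px, gy - py
    gamma = 2 - angle_cost(dx, dy, eps)
    enclose_w = max(p_x2, g_x2) - min(p_x1, g_x1)   # assumed normalizer c_w
    enclose_h = max(p_y2, g_y2) - min(p_y1, g_y1)   # assumed normalizer c_h
    rho_x = (dx / (enclose_w + eps)) ** 2
    rho_y = (dy / (enclose_h + eps)) ** 2
    distance = (1 - math.exp(-gamma * rho_x)) + (1 - math.exp(-gamma * rho_y))

    # Shape cost: relative width and height mismatch raised to theta.
    omega_w = abs(pw - gw) / max(pw, gw)
    omega_h = abs(ph - gh) / max(ph, gh)
    shape = (1 - math.exp(-omega_w)) ** theta + (1 - math.exp(-omega_h)) ** theta

    return 1 - iou + (distance + shape) / 2


perfect = siou_box_loss((5, 5, 10, 4), (5, 5, 10, 4))
shifted = siou_box_loss((5, 5, 10, 4), (7, 5, 10, 4))
disjoint = siou_box_loss((0, 0, 2, 2), (10, 0, 2, 2))
```

A perfectly matching box gives a loss close to zero, a shifted box gives a larger loss, and boxes that do not overlap still receive a useful gradient signal through the distance term, since the loss then exceeds 1.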

4. Experimental Results and Analysis

4.1. Dataset and Experimental Environment

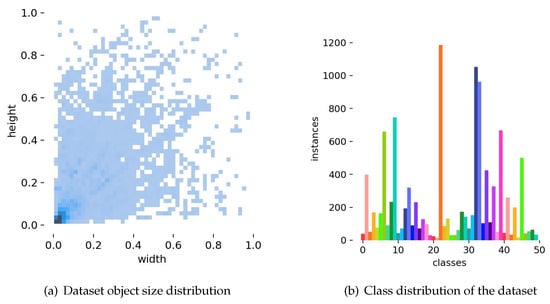

The ShipRSImageNet dataset is a maritime satellite remote sensing dataset proposed by Zhang et al. [4]. It comprises over 3425 images featuring 17,543 ship instances across 50 classes. The images have been annotated by experts with both horizontal and oriented bounding boxes. The dataset is split into 2198 images for training, 545 images for validation, and 677 images for testing.

This dataset focuses on overhead views of ships either anchored or in motion, including images of vessels across various types, locations, and weather conditions. It contains a large number of high-density small objects. Due to the fine-grained categorization of ship types, some classes in the dataset have very subtle inter-class differences, which makes the dataset particularly challenging for detection models. Moreover, this also results in a significant class imbalance, with certain categories—such as “Other Ships” and “Other Warships”—having considerably more samples than others. The figure below shows the class distribution of the dataset.

As illustrated in Figure 4a, the objects in the dataset are predominantly small, typically spanning only a few tens of pixels. Figure 4b highlights the class imbalance in the dataset, with some categories containing a disproportionate number of objects. This imbalance, together with the prevalence of small objects, challenges the algorithm’s ability to accurately detect and recognize small targets.

Figure 4.

ShipRSImageNet dataset analysis.

The experiments are carried out on the Ubuntu 20.04 system, utilizing an RTX 4090 GPU for model training and inference. The PyTorch version is 2.4.1. The experimental settings include an automatically determined batch size, an image size of 640, a total of 300 epochs, and the default SGD optimizer. The training process is initiated from scratch without relying on pre-trained weights.

4.2. Experiment Results

4.2.1. ARFEM

This section evaluates the performance of the C3 module enhanced by Attention-based Receptive Field Enhancement. The unmodified YOLOv5s model is used as the baseline. The results in Table 1 show that YOLOv5s-ARFEM improves on YOLOv5s by 2.618 percentage points in mAP@0.5, 3.276 in precision, and 2.249 in recall. The improvement in detection accuracy is mainly attributed to the dilated convolutions in the ARFEM module, which capture multi-scale information and dependencies by using features from different receptive fields. These results indicate that the ARFEM module enhances the model’s ability to extract features of, and detect, small maritime objects.

Table 1.

Comparison of receptive field enhancement models.

4.2.2. SIoU

The improvement brought by the SIoU loss function over the original loss function is evaluated using a baseline model that incorporates a novel feature fusion structure and a small object detection layer. The experimental results in Table 2 indicate that the YOLOv5s-SIoU model achieves an increase of 0.305 percentage points in mAP@0.5 compared to the baseline. Precision improves by 0.997, and recall improves by 1.126. These results demonstrate that incorporating the vector angle into the loss function enhances detection accuracy.

Table 2.

Comparison of SIoU loss function.

4.2.3. C3DCN

This section evaluates the impact of the deformable convolution module on the model’s detection accuracy. The baseline model is YOLOv5s, and the improved model, YOLOv5s-C3DCN, is obtained by replacing the original C3 module with the deformable convolution module. The results are shown in Table 3.

Table 3.

Comparison of deformable convolution.

Table 3 shows that YOLOv5s-C3DCN improves mAP@0.5 by 0.364 and precision by 1.879 compared to the baseline model. Recall decreases slightly, but the overall performance is enhanced.

4.3. Experimental Results of YOLOv5-ASC

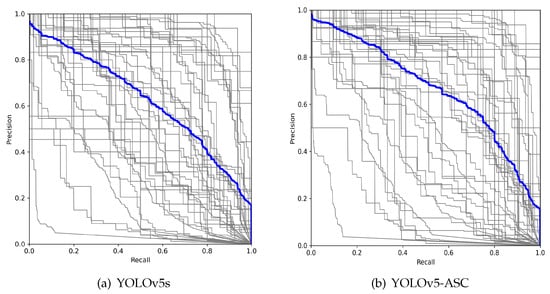

To validate the performance of the improved model, it was compared with YOLOv5s. Figure 5a shows the Precision-Recall (PR) curve of YOLOv5s on the ShipRSImageNet V1 dataset, and Figure 5b shows that of the YOLOv5-ASC model. (The blue line represents the PR curve aggregated over all categories.) It can be observed that the detection performance of the model has generally improved across different categories.
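For readers unfamiliar with how a PR curve is reduced to a single number, the sketch below computes average precision as the area under the curve and averages it over classes to obtain mAP. It assumes all-point interpolation with a monotone precision envelope; the interpolation scheme used in the experiments is not stated here, and the function names and sample values are illustrative only. For mAP@0.5, a detection counts as correct when its IoU with a ground truth box is at least 0.5.

```python
def average_precision(recalls, precisions):
    """Area under a PR curve; recalls must be sorted in ascending order."""
    r = [0.0] + list(recalls) + [1.0]
    p = [1.0] + list(precisions) + [0.0]
    # Replace each precision by the maximum precision at any higher recall.
    for i in range(len(p) - 2, -1, -1):
        p[i] = max(p[i], p[i + 1])
    # Sum rectangle areas between consecutive recall values.
    return sum((r[i + 1] - r[i]) * p[i + 1] for i in range(len(r) - 1))


def mean_average_precision(per_class_ap):
    """mAP is the unweighted mean of the per-class AP values."""
    return sum(per_class_ap.values()) / len(per_class_ap)


# Illustrative curves for two hypothetical classes (not results from the paper).
ap_a = average_precision([0.5, 1.0], [1.0, 1.0])
ap_b = average_precision([0.5, 1.0], [1.0, 0.5])
m_ap = mean_average_precision({"class_a": ap_a, "class_b": ap_b})
```

Because mAP is an unweighted mean over classes, rare categories influence the score as much as frequent ones, which is relevant for a dataset with pronounced class imbalance.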

Figure 5.

PR-curve for YOLOv5s and YOLOv5-ASC.

4.4. Analysis of Detection Performance

To assess the efficacy of the YOLOv5-ASC model in real-world application environments, this section examines the model’s performance in specific contexts. A variety of challenging scenarios were selected for testing, including scenarios with small targets that are easily confused and scenarios with cluttered backgrounds. As shown in the figures below, these scenarios cover targets that lie close together and are difficult to distinguish, scenes with complex and variable backgrounds, blurred scenes with low image clarity, scenes containing targets with large aspect ratios, and scenes with small targets in complex environments.

The label and score displayed on each detection box give the detected category and its confidence. The confidence score indicates how confident the model is that a target of that category exists within the box; it is the product of the objectness probability and the class probability.
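The product described above can be illustrated with a short sketch. The probabilities and category names below are hypothetical values chosen for illustration, and `detection_confidence` is not a function from the authors' code.

```python
from typing import Dict, Tuple


def detection_confidence(objectness: float, class_probs: Dict[str, float]) -> Tuple[str, float]:
    """Return the displayed label and score for one detection box.

    The score is objectness probability times the probability of the
    most likely class, as described in the text.
    """
    label = max(class_probs, key=class_probs.get)
    return label, objectness * class_probs[label]


# Hypothetical outputs for a single box.
label, score = detection_confidence(
    objectness=0.9,
    class_probs={"Dock": 0.8, "Motorboat": 0.15, "Submarine": 0.05},
)
print(f"{label} {score:.2f}")
```

A box can therefore receive a low displayed score either because the model doubts that any target is present or because it is unsure which category the target belongs to.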

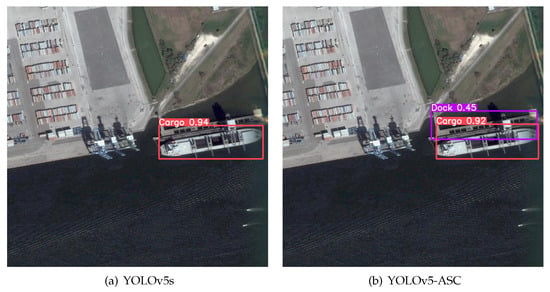

In this scene, the dock and the ship overlap, so the boundary between them is unclear, which makes target recognition difficult. The original model (Figure 6a) fails to differentiate between the dock and the ship. In contrast, the proposed model handles this case and clearly differentiates between them, as shown in Figure 6b.

Figure 6.

Comparison of YOLOv5s and YOLOv5-ASC in the presence of poorly defined boundaries.

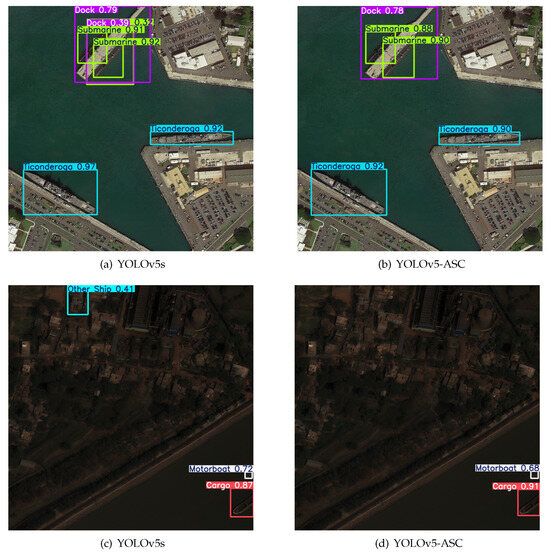

In maritime remote sensing scenarios, image blurring is frequent and may be caused by weather, air quality, light angle, camera resolution, and other factors. The proposed model has an advantage in recognizing blurred images: in Figure 7a, the YOLOv5s model detects only one submarine, while the proposed model recognizes all three submarines (Figure 7b).

Figure 7.

Comparison between YOLOv5s and YOLOv5-ASC in the case of blurred scenes.

Maritime remote sensing images of ships in a harbor test the model's ability to recognize complex scenes. In this scenario, because of the complex shape of the dock, the original model reports two docks (Figure 8a), whereas the proposed model recognizes the dock without confusion (Figure 8b). The surrounding context can also lead to misrecognition: as shown in Figure 8c, the original model recognizes the buildings on the shore as ships, whereas the proposed model is not confused by the buildings (Figure 8d).

Figure 8.

Comparison of YOLOv5s and YOLOv5-ASC in complex background situations.

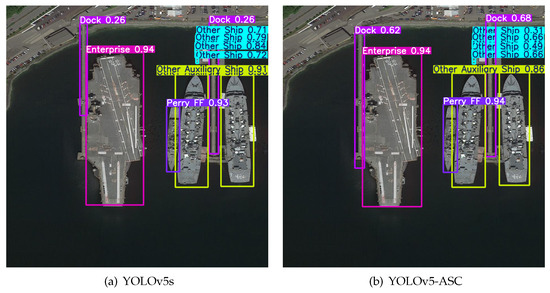

A marina is a special recognition target in maritime remote sensing imagery: it has a large aspect ratio and often appears side by side with boats that also have large aspect ratios, which makes recognition very challenging. As Figure 9a shows, the original model identifies the marina correctly, but its bounding box is wrong and covers only part of the marina. As Figure 9b shows, the proposed model meets this challenge: it both identifies the class correctly and places the box correctly.

Figure 9.

Comparison between YOLOv5s and YOLOv5-ASC at high aspect ratios.
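The partial box in Figure 9a matters for the metrics as well as for visual quality. Under the IoU criterion behind mAP@0.5, a detection is counted as correct only if its IoU with the ground-truth box reaches the 0.5 threshold, so a box covering less than half of an elongated target fails even when the class is right. The sketch below uses hypothetical pixel coordinates, not values measured from the figure.

```python
from typing import Tuple

Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max)


def iou(a: Box, b: Box) -> float:
    """Intersection over Union of two axis-aligned boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


# Hypothetical elongated target with a 10:1 aspect ratio.
marina = (0.0, 0.0, 100.0, 10.0)
partial_box = (0.0, 0.0, 40.0, 10.0)  # covers only part of the target
full_box = (2.0, 0.0, 98.0, 10.0)     # covers nearly all of it

for name, box in [("partial", partial_box), ("full", full_box)]:
    value = iou(marina, box)
    print(f"{name}: IoU = {value:.2f}, counts at IoU 0.5: {value >= 0.5}")
```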

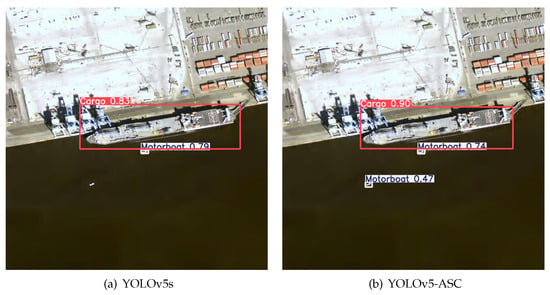

Large differences in target size are also frequent in ship remote sensing scenes. As seen in Figure 10, the original model does not accurately recognize small targets: the motorboat is missed in Figure 10a, whereas this problem does not occur in Figure 10b, the result of the improved model.

Figure 10.

Comparison of YOLOv5s and YOLOv5-ASC in small target scenarios.

4.5. Ablation Studies

To evaluate the proposed enhancements, we conducted a series of ablation experiments with the YOLOv5s model on the ShipRSImageNet V1 dataset. The results are presented in Table 4. First, the ARFEM structure was incorporated into the original model to enlarge the receptive field and make better use of multi-scale information. Next, the original loss function was replaced with the SIoU loss function. Finally, the convolution module was replaced with a deformable convolution module. Each experiment changed only the module or modules under test, with no additional modifications.

Table 4.

Comparison of ablation studies.

Table 4 presents the impact of different module combinations on the performance of the YOLOv5s model. The results show that incorporating the ARFEM module alone significantly improves both precision and recall. Specifically, mAP@0.5 increases from 61.8% to 64.564%, and mAP@0.5:0.95 improves by approximately 2.6 percentage points, demonstrating the effectiveness of ARFEM in enhancing receptive fields and multi-scale feature fusion. Similarly, adding either the SIoU or C3DCN module also yields noticeable performance gains. The mAP@0.5:0.95 increases to 48.10% and 49.03%, respectively, indicating improved localization accuracy.

The combination of multiple modules yields better results. For example, integrating ARFEM and SIoU increases the mAP@0.5 to 65.455% and the mAP@0.5:0.95 to 51.63%. The combination of SIoU and C3DCN further improves the mAP@0.5:0.95 to 53.021%. Ultimately, the full integration of ARFEM, SIoU, and C3DCN—forming the proposed YOLOv5-ASC model—achieves the best overall performance, with a mAP@0.5 of 66.214% and a mAP@0.5:0.95 of 53.846%, while maintaining a reasonable computational cost of 30.5 GFLOPs. These results validate the effectiveness of the proposed modules in enhancing detection accuracy and robustness without introducing excessive computational overhead.
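Because the ablation results are stated as absolute values in percent, it helps to separate gains in percentage points from relative gains. The sketch below recomputes both from the mAP@0.5 values quoted in the two paragraphs above. The baseline of 61.8% is used as quoted and appears to be rounded, so the full-model gain computed here differs slightly from the 4.36 percentage points reported elsewhere in the paper. The helper name `gains` is hypothetical.

```python
from typing import Tuple

BASELINE_MAP50 = 61.8  # YOLOv5s mAP@0.5 in percent, as quoted in the text (likely rounded)

# mAP@0.5 values in percent, as quoted in the text.
reported_map50 = {
    "ARFEM": 64.564,
    "ARFEM + SIoU": 65.455,
    "ARFEM + SIoU + C3DCN (YOLOv5-ASC)": 66.214,
}


def gains(baseline: float, value: float) -> Tuple[float, float]:
    """Return (gain in percentage points, relative gain in percent)."""
    points = value - baseline
    relative = 100.0 * points / baseline
    return points, relative


for name, value in reported_map50.items():
    points, relative = gains(BASELINE_MAP50, value)
    print(f"{name}: +{points:.3f} points, +{relative:.2f}% relative")
```

The two numbers answer different questions, so a gain should always be labeled as one or the other.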

4.6. Comparison of Different Methods

For the comparison, we selected several models: YOLOv5s, YOLOv5s-DCS, SSD-VGG16, YOLOv8s, YOLOv5n, and YOLOv5s-BSD. YOLOv5s is the base model for the proposed YOLOv5-ASC, so this comparison directly shows the performance gains from our modifications. YOLOv5n is a smaller version in the YOLOv5 series, and including it helps assess the impact of model size on performance. YOLOv8 is a more recent version in the YOLO series that adopts more advanced structural designs; comparing our model with YOLOv8s lets us verify its competitiveness among current mainstream detectors. YOLOv5s-DCS is optimized for maritime target detection in general (non-remote-sensing) scenes, so comparing against it evaluates performance in similar scenarios. YOLOv5s-BSD is optimized for remote sensing maritime target detection and uses the same dataset as our study, which makes the comparison direct. SSD-VGG16, the SSD (Single Shot MultiBox Detector) model with a VGG16 backbone, is a classic target detection algorithm; although it is not part of the YOLO series, its performance provides a useful reference. Together, these comparisons give a comprehensive evaluation of the YOLOv5-ASC model.

As shown in Table 5, the proposed YOLOv5-ASC model demonstrates certain advantages compared to its baseline model. It maintains high accuracy and mAP while also offering advantages in terms of parameters and model size.

Table 5.

Comparison of different methods.

Compared to the original YOLOv5s model, the proposed model improves on all detection metrics: mAP@0.5 increases by 4.36 percentage points and precision by 9.87 percentage points. Compared to our previously proposed YOLOv5-BSD algorithm, the new model also shows significant improvements in both performance and model parameters. It requires only half the computational resources of YOLOv5-BSD while achieving a 1.91% increase in mAP@0.5 and a 7.4% improvement in accuracy.

Compared to the newer YOLOv8s model, the proposed model is also competitive, outperforming it in accuracy and mAP by 7.71 and 0.97 percentage points, respectively, with comparable parameter sizes. However, the proposed model is weaker over the broader mAP range (mAP@0.5:0.95) and has a recall 3.17 percentage points lower than that of YOLOv8s. This may be because the model overlooks a significant number of blurred targets, which points to a direction for further optimization.

Time cost is a critical factor in maritime remote sensing applications, and the proposed model has a reasonable time cost. First, compared to the original model, its computational cost is higher, with GFLOPs rising by 13.9 because of the more complex model structure. Second, compared to YOLOv5-BSD, a model with a similar detection focus, the proposed model achieves higher accuracy while requiring only 30.5 GFLOPs, which is just 88% of the computational cost of YOLOv5-BSD. Finally, compared to the newer YOLOv8s model, the proposed model is at a disadvantage in computational demand, requiring an additional 1.9 GFLOPs.
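The cost figures in this paragraph are given as differences and ratios relative to the 30.5 GFLOPs of YOLOv5-ASC. As a quick check of what they imply, the sketch below derives the costs of the other models from those statements. The derived values follow from the arithmetic only and are not read from Table 5; the variable names are hypothetical.

```python
ASC_GFLOPS = 30.5  # YOLOv5-ASC, as stated in the text

# Stated relations: +13.9 GFLOPs over YOLOv5s, +1.9 GFLOPs over YOLOv8s,
# and 88% of the cost of YOLOv5-BSD.
implied_gflops = {
    "YOLOv5s": ASC_GFLOPS - 13.9,
    "YOLOv8s": ASC_GFLOPS - 1.9,
    "YOLOv5-BSD": ASC_GFLOPS / 0.88,
}

for model, cost in implied_gflops.items():
    ratio = ASC_GFLOPS / cost
    print(f"{model}: about {cost:.1f} GFLOPs implied; YOLOv5-ASC costs {ratio:.2f}x as much")
```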

The proposed method shows a performance advantage not only on the ShipRSImageNet dataset but also on other maritime datasets; the experimental results are shown in Table 6. The Singapore Maritime Dataset (SMD), a common maritime dataset, focuses on video and image data for both coastal and offshore scenarios. iVision MRSSD is a ship remote sensing dataset acquired using synthetic aperture radar (SAR), which is closer to the target scenario of the proposed model. On these two datasets, the proposed model improves mAP@0.5 over the original model by 1.2% and 1.8%, respectively.

Table 6.

Comparison of performance on more maritime datasets.

Table 7 presents the model's detection performance for small, medium-sized, and large objects. Compared to the original model, the proposed model improves to varying degrees across object sizes. For small objects, it shows an overall improvement of approximately 2%, which is modest compared to the other two categories. For medium-sized objects, it achieves the largest improvement, with precision increasing by 8.73%. Large objects also improve notably, with precision increasing by 2.17%.

Table 7.

Comparison of target detection for different sizes.

In summary, the feature network proposed in this paper combines receptive field enhancement and deformable convolution, improving the ability to detect high-aspect-ratio targets and a large number of small objects. However, while it demonstrates improvements over the original model in detecting small targets, the overall performance remains suboptimal. Additionally, in scenarios with numerous targets, although the recognition accuracy has increased, there are still many undetected targets. These challenges highlight the direction for our future research.

5. Conclusions

To address the shortage of maritime remote sensing object detectors and the false positives and missed detections of existing systems, this study introduces the YOLOv5-ASC detection framework, building upon the previous model. The framework incorporates a receptive field enhancement module, which captures multi-scale information through branches with different dilation rates, and adopts the SIoU loss function to improve detection accuracy. Deformable convolution is also integrated so that the receptive field adapts better to the high aspect ratios of maritime targets, enhancing recognition capability and detection precision. Validation on the ShipRSImageNet dataset shows that, compared to the original YOLOv5s model, the proposed model achieves a 4.36 percentage point improvement in mAP@0.5, a 5.86 percentage point improvement in mAP@0.5:0.95, a 9.87 percentage point increase in precision, and a 1.76 percentage point increase in recall. However, YOLOv5-ASC still faces significant challenges in detecting small objects and in scenarios with a large number of targets, highlighting directions for future research.

Author Contributions

T.L.: Conceptualization, methodology, writing—original draft, software; X.J.: Formal analysis, writing—review and editing; T.S.: Methodology, supervision, validation, funding acquisition, writing—review; Q.C.: Visualization, software, data curation, investigation. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by Shanghai Pujiang Program: 22PJD028.

Data Availability Statement

All data included in this study are available from the corresponding author upon request.

Acknowledgments

I would like to thank my supervisors for their guidance, care, and assistance with all aspects of the thesis. We thank the Innovation Academy for Microsatellites of the Chinese Academy of Sciences for providing computing support.

Conflicts of Interest

The authors declare no conflicts of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
| --- | --- |
| RFEM | Receptive Field Enhancement Module |
| ERFEM | Enhanced Receptive Field Enhancement Module |
| ARFEM | Attention-based Receptive Field Enhancement Module |
| DCN | Deformable Convolution Module |
| DCNv2 | Deformable Convolution Module v2 |
| SIoU | SCYLLA-IoU |
| CBAM | Convolutional Block Attention Module |
| SPPF | Spatial Pyramid Pooling Fast |
| CNN | Convolutional Neural Networks |
| IoU | Intersection over Union |
| YOLO | You Only Look Once |
| mAP | mean Average Precision |
| TP | true positive |
| FP | false positive |
| FN | false negative |

References

- Wang, Q.; Wang, J.; Wang, X.; Wu, L.; Feng, K.; Wang, G. A YOLOv7-Based Method for Ship Detection in Videos of Drones. J. Mar. Sci. Eng. 2024, 12, 1180.

- Wang, W.; Zhang, X.; Sun, W.; Huang, M. A Novel Method of Ship Detection under Cloud Interference for Optical Remote Sensing Images. Remote Sens. 2022, 14, 3731.

- Mishra, S.; Shalu, P.; Soman, D.; Singh, I. Advanced Ship Detection System Using Yolo V7. In Proceedings of the 2023 IEEE 7th Conference on Information and Communication Technology (CICT), Jabalpur, India, 15–17 December 2023; pp. 1–6.

- Zhang, Z.; Zhang, L.; Wang, Y.; Feng, P.; He, R. ShipRSImageNet: A Large-Scale Fine-Grained Dataset for Ship Detection in High-Resolution Optical Remote Sensing Images. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2021, 14, 8458–8472.

- Zhuang, Y.; Li, L.; Chen, H. Small Sample Set Inshore Ship Detection From VHR Optical Remote Sensing Images Based on Structured Sparse Representation. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2020, 13, 2145–2160.

- Qin, P.; Cai, Y.; Liu, J.; Fan, P.; Sun, M. Multilayer Feature Extraction Network for Military Ship Detection from High-Resolution Optical Remote Sensing Images. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2021, 14, 11058–11069.

- Girshick, R. Fast R-CNN. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Santiago, Chile, 7–13 December 2015.

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 1137–1149.

- Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You Only Look Once: Unified, Real-Time Object Detection. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 26 June–1 July 2016; pp. 779–788.

- Wang, C.Y.; Bochkovskiy, A.; Liao, H.Y.M. YOLOv7: Trainable Bag-of-Freebies Sets New State-of-the-Art for Real-Time Object Detectors. In Proceedings of the 2023 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Vancouver, BC, Canada, 17–24 June 2023; pp. 7464–7475.

- Redmon, J.; Farhadi, A. YOLOv3: An Incremental Improvement. arXiv 2018, arXiv:1804.02767.

- Redmon, J.; Farhadi, A. YOLO9000: Better, Faster, Stronger. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 6517–6525.

- Li, C.; Li, L.; Jiang, H.; Weng, K.; Geng, Y.; Li, L.; Ke, Z.; Li, Q.; Cheng, M.; Nie, W.; et al. YOLOv6: A Single-Stage Object Detection Framework for Industrial Applications. arXiv 2022, arXiv:2209.02976.

- Bochkovskiy, A.; Wang, C.Y.; Liao, H.Y.M. YOLOv4: Optimal Speed and Accuracy of Object Detection. arXiv 2020, arXiv:2004.10934.

- Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.Y.; Berg, A.C. SSD: Single Shot MultiBox Detector. In Proceedings of the Computer Vision – ECCV, Amsterdam, The Netherlands, 8–10 and 15–16 October 2016; Leibe, B., Matas, J., Sebe, N., Welling, M., Eds.; Springer: Cham, Switzerland, 2016; pp. 21–37.

- Ma, S.; Wang, W.; Pan, Z.; Hu, Y.; Zhou, G.; Wang, Q. A Recognition Model Incorporating Geometric Relationships of Ship Components. Remote Sens. 2023, 16, 130.

- Liang, S.; Liu, X.; Yang, Z.; Liu, M.; Yin, Y. Offshore Ship Detection in Foggy Weather Based on Improved YOLOv8. J. Mar. Sci. Eng. 2024, 12, 1641.

- Yu, Z.; Huang, H.; Chen, W.; Su, Y.; Liu, Y.; Wang, X. YOLO-FaceV2: A Scale and Occlusion Aware Face Detector. arXiv 2022.

- Abhinaba, H.; Jidesh, P. Detection and Classification of Ships Using a Self-Attention Residual Network. In Proceedings of the 2022 IEEE 6th Conference on Information and Communication Technology (CICT), Gwalior, India, 18–20 November 2022; pp. 1–6.

- Xiong, W.; Xiong, Z.; Cui, Y. An Explainable Attention Network for Fine-Grained Ship Classification Using Remote-Sensing Images. IEEE Trans. Geosci. Remote Sens. 2022, 60, 1–14.

- Van Ricardo Zalukhu, B.; Wijayanto, A.W.; Habibie, M.I. Marine Vessels Detection on Very High-Resolution Remote Sensing Optical Satellites Using Object-Based Deep Learning. In Proceedings of the 2022 IEEE International Conference on Communication, Networks and Satellite (COMNETSAT), Solo, Indonesia, 3–5 November 2022; pp. 149–154.

- Guo, B.; Zhang, R.; Guo, H.; Yang, W.; Yu, H.; Zhang, P.; Zou, T. Fine-Grained Ship Detection in High-Resolution Satellite Images With Shape-Aware Feature Learning. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2023, 16, 1914–1926.

- Chenguang, Z.; Guifa, T. Application of Improved YOLO V5s Model for Regional Poverty Assessment Using Remote Sensing Image Target Detection. Photogramm. Eng. Remote Sens. 2023, 89, 499–513.

- He, K.; Gkioxari, G.; Dollar, P.; Girshick, R. Mask R-CNN. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 2961–2969.

- Dai, J.; Qi, H.; Xiong, Y.; Li, Y.; Zhang, G.; Hu, H.; Wei, Y. Deformable Convolutional Networks. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 764–773.

- Zhu, X.; Hu, H.; Lin, S.; Dai, J. Deformable ConvNets v2: More Deformable, Better Results. arXiv 2018, arXiv:1811.11168.

- Woo, S.; Park, J.; Lee, J.Y.; Kweon, I.S. CBAM: Convolutional Block Attention Module. arXiv 2018, arXiv:1807.06521.

- Gevorgyan, Z. SIoU Loss: More Powerful Learning for Bounding Box Regression. arXiv 2022, arXiv:2205.12740.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).