Abstract

This review investigates the influence of European Union regulations on the adoption of artificial intelligence in smart city solutions, with a structured emphasis on regulatory barriers, technological challenges, and societal benefits. It offers a comprehensive analysis of the legal frameworks in effect by 2025, including the Artificial Intelligence Act, General Data Protection Regulation, Data Act, and sector-specific directives governing mobility, energy, and surveillance. This study critically assesses how these regulations affect the deployment of AI systems across urban domains such as traffic optimization, public safety, waste management, and energy efficiency. A comparative analysis of regulatory environments in the United States and China reveals differing governance models and their implications for innovation, safety, citizen trust, and international competitiveness. The review concludes that although the European Union’s focus on ethics and accountability establishes a solid basis for trustworthy artificial intelligence, the complexity and associated compliance costs create substantial barriers to adoption. It offers recommendations for policymakers, municipal authorities, and technology developers to align regulatory compliance with effective innovation in the context of urban digital transformation.

1. Introduction

Cities across Europe are increasingly relying on artificial intelligence to enhance urban services and infrastructure management [,]. Applications such as energy grid optimization, traffic management, and public safety enhancement demonstrate the potential of AI-driven smart city solutions to deliver notable improvements in efficiency, sustainability, and quality of life [,]. Projections indicated that by 2025 artificial intelligence would enable over thirty percent of smart city applications, particularly in urban mobility, highlighting the growing dependence of municipal operations on intelligent systems []. Nevertheless, the integration of AI in cities introduces significant challenges concerning ethics, security, and governance [,,]. Unregulated implementation may intensify surveillance, jeopardize privacy, or institutionalize bias within public services [,,]. Ensuring that AI is deployed responsibly and in accordance with European values has consequently become a priority for policymakers [,,,].

The European Union has established itself as a global leader in technological regulation through the development of a comprehensive framework to address the risks and challenges posed by artificial intelligence and related digital technologies [,,]. Central to this framework is the recently adopted Artificial Intelligence Act, alongside earlier regulations concerning data protection and cybersecurity, all of which exert a direct influence on the design and deployment of AI in smart city contexts [,]. The Artificial Intelligence Act (Regulation (EU) 2024/1689) represents the first dedicated law on AI worldwide, introducing harmonized rules based on a risk-oriented approach [,,]. The regulation classifies AI systems by risk level (unacceptable, high, limited, and minimal) and imposes stringent obligations on high-risk applications, which are particularly relevant to essential urban services [,,]. Supporting this regulation are instruments such as the General Data Protection Regulation, which protects personal data in all smart city applications involving citizen information [,], and the NIS 2 Directive on network and information security, which mandates cybersecurity risk management measures for critical sectors including energy, transport, water, waste, and public administration []. Furthermore, domain-specific instruments such as the Network Code on Cybersecurity for the Electricity Sector address the resilience of the power grid [,], while the EU Cybersecurity Act introduces a certification framework for information and communication technology product security []. The Cyber Resilience Act, adopted in 2024 with most of its obligations applying from December 2027, will establish binding cybersecurity standards for virtually all connected hardware and software, aiming to ensure that digital products and devices are secure by design [,,].

Collectively, these instruments construct a complex regulatory environment for developers and deployers of AI in smart city ecosystems [,]. While the regulations are designed to mitigate risk, protect personal data and fundamental rights, enhance the security and reliability of AI systems, and build public trust in smart city innovation [,,], they also introduce legal responsibilities and liabilities that may hinder innovation if not addressed effectively [,,]. Municipal governments and technology providers engaged in urban AI deployment must contend with a variety of requirements, including algorithmic transparency and human oversight under the Artificial Intelligence Act [,,], privacy-by-design under the General Data Protection Regulation [,], and cybersecurity risk management and incident reporting as prescribed by the NIS 2 Directive and relevant sectoral instruments [,]. The challenge of simultaneously fulfilling multiple regulatory obligations is especially acute for smaller municipalities or startups operating in partnership with local authorities [,].

This article synthesizes insights from regulatory texts, academic literature, industry white papers, and institutional reports to provide a comprehensive resource on the current state of European Union policy concerning AI-enabled smart cities [,]. It is intended to support researchers, policymakers, and practitioners in understanding the prevailing regulatory framework and its implications, as well as in formulating strategies to achieve compliance while advancing societal and technological goals [,]. The development of artificial intelligence within smart city ecosystems will depend substantially on the co-evolution of governance structures and technological capabilities. Within the European Union, this requires harmonizing urban innovation with the overarching regulatory ambition to foster secure, inclusive, and trustworthy artificial intelligence [,,].

While the primary focus of this review is the regulatory environment of the European Union, it incorporates a structured comparative analysis of the United States and China to contextualize the EU’s regulatory approach globally. These two jurisdictions were selected due to their geopolitical significance and contrasting governance models. The intention is not to survey smart city AI applications worldwide but to provide a focused regulatory comparison relevant to transatlantic and transpacific policy dialogues.

Existing literature reviews have explored the intersection of AI and smart city governance, but they vary in regulatory scope. For example, some surveys propose broad principles or frameworks for governing AI in cities [] or classify generic adoption barriers, treating policy and regulatory hurdles as just one category alongside technological and organizational challenges []. Others focus on particular contexts, such as a recent review of European AI governance in smart cities [], which examines the EU’s emerging AI Act alongside privacy and ethics laws. However, none has provided a dedicated, in-depth analysis of the European Union’s regulatory framework and its impact on smart city AI adoption. This paper addresses that gap by concentrating on the EU regulatory environment, from the GDPR to the recently adopted EU AI Act, and evaluating how these regulations create unique barriers or catalysts for AI-driven smart city solutions. In doing so, our review offers a more specific and comparative perspective than prior works, examining the EU’s approach in detail and contrasting its implications with broader international discussions on AI regulation in urban innovation.

The structure of the review is as follows. Section 2 describes the methodological approach. Section 3 offers a detailed account of the European Union’s regulatory landscape in 2025, including horizontal instruments such as the Artificial Intelligence Act, General Data Protection Regulation, Data Act, and Data Governance Act, as well as sectoral regulations relevant to domains such as energy, mobility, and public safety. Section 4 analyzes the regulatory barriers these frameworks pose for the adoption of artificial intelligence in cities, including compliance burdens, legal uncertainty, constraints on high-risk use cases, and disparities in capacity among municipalities and developers. Section 5 addresses the technological challenges intersecting with legal obligations, including data fragmentation, interoperability issues, infrastructure limitations, cybersecurity vulnerabilities, and the difficulty of implementing explainable and context-sensitive AI systems. Section 6 highlights the societal benefits of artificial intelligence in urban environments, from improved mobility and energy efficiency to enhanced public services and environmental outcomes, reinforcing the alignment between responsible governance and broader policy objectives. Section 7 provides a comparative analysis of the European Union’s rights-based regulatory model in contrast to the decentralized regulatory context of the United States and the state-led deployment strategy of China. Section 8 concludes the review by summarizing the key findings and arguing that, despite presenting barriers to innovation, the European Union’s regulatory framework lays the groundwork for a trustworthy and sustainable approach to AI adoption in cities.

2. Methodology

This scoping review was conducted following the PRISMA-ScR guidelines, which offer a structured framework for synthesizing interdisciplinary research in evolving domains such as AI regulation and smart cities. The objective was to systematically map the regulatory, technological, and societal factors influencing the adoption of artificial intelligence in European smart city contexts. A predefined protocol guided the review process, including scope definition, literature identification and selection, data extraction, and thematic synthesis.

The review was guided by three primary research questions, derived from the conceptual framing in the Introduction:

- What are the major regulatory barriers posed by the European Union’s legal framework to the development and deployment of AI in smart city domains?

- What are the core technological challenges that intersect with legal constraints in the implementation of AI-based smart city solutions?

- What societal benefits can AI deliver in smart cities, and how do EU regulations align with or constrain the realization of these benefits?

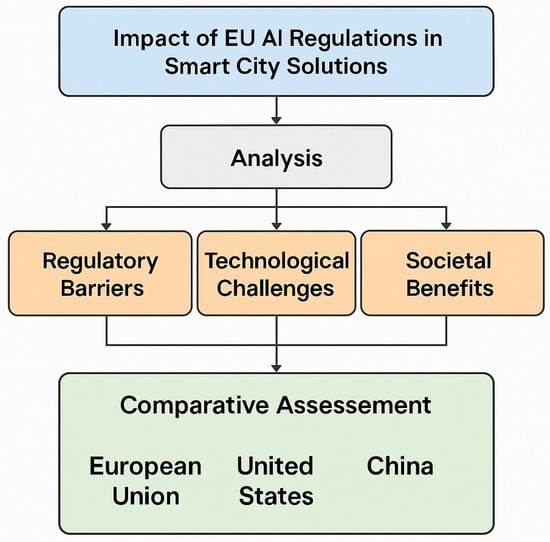

To provide a structured visual overview of how these three research questions are addressed, Figure 1 presents a conceptual framework for the scoping review. It illustrates the thematic structure of the analysis, which is organized around regulatory barriers, technological challenges, and societal benefits. These domains are subsequently examined through a comparative assessment of governance approaches across the European Union, the United States, and China. This framework supports the reader’s understanding of the review’s analytical flow and transregional perspective.

Figure 1.

Conceptual framework of the review outlining the structured analysis of EU AI regulation in smart city solutions, organized into three thematic pillars—regulatory barriers, technological challenges, and societal benefits—followed by a comparative assessment of governance approaches in the European Union, the United States, and China.

Eligibility Criteria: Given the multidisciplinary scope of the topic, the review draws on a broad spectrum of sources. Eligible materials include peer-reviewed academic literature, encompassing journal articles and conference proceedings, as well as European Union regulatory texts such as regulations, directives, and official guidance. Gray literature was also considered, including policy papers, industry reports, technical documents, and strategic roadmaps. Only documents that explicitly address artificial intelligence applications in smart cities within the European Union, or that directly examine EU regulations including the Artificial Intelligence Act, General Data Protection Regulation, Data Act, NIS2 Directive, and relevant sector-specific rules, were included. Sources focusing on artificial intelligence in general or in non-urban contexts without clear relevance to European smart cities were excluded. The review covers publications from 2013 to 2025, capturing significant regulatory developments and the progression of smart city technologies over this period. Only sources published in English were considered.

Information Sources and Search Strategy: A comprehensive search strategy was adopted to ensure thorough coverage of regulatory, academic, and policy-relevant materials. Academic databases including Scopus, IEEE Xplore, and Web of Science were queried using keyword combinations such as (“artificial intelligence”) AND (“smart cities”) AND (“Europe” OR “EU”) AND (“regulation” OR “policy” OR “GDPR” OR “AI Act” OR “NIS2” OR “data governance”). In parallel, targeted searches were conducted on official European Union legal and policy repositories, including EUR-Lex, the European Commission’s artificial intelligence and digital policy portals, and the European Data Protection Board. Institutional websites such as those of ENISA, CEPS, CEER, and EU-funded projects including AI4Cities and PRELUDE were examined for relevant technical and policy documentation. Additional searches were carried out using Google Scholar and Google with domain-specific filters (e.g., site:europa.eu) to retrieve white papers, legislative drafts, and gray literature not indexed in academic databases.
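Because databases resolve AND/OR precedence differently, a query of this kind stays unambiguous only when each concept group is parenthesized. The Python sketch below assembles such a string from the keyword groups named above. The grouping into four concepts is our reading of the intended query, and database-specific field codes (for example, Scopus's TITLE-ABS-KEY) are deliberately left out.

```python
# Concept groups taken from the keyword list in the search strategy.
# Terms inside a group are OR-ed; the groups themselves are AND-ed.
CONCEPT_GROUPS = [
    ["artificial intelligence"],
    ["smart cities"],
    ["Europe", "EU"],
    ["regulation", "policy", "GDPR", "AI Act", "NIS2", "data governance"],
]


def quote(term: str) -> str:
    """Wrap a term in double quotes so multi-word phrases match exactly."""
    return f'"{term}"'


def build_query(groups: list[list[str]]) -> str:
    """OR the terms within each group, AND the groups, and parenthesize
    every group so operator precedence cannot change the meaning."""
    clauses = []
    for group in groups:
        inner = " OR ".join(quote(term) for term in group)
        clauses.append(f"({inner})")
    return " AND ".join(clauses)


print(build_query(CONCEPT_GROUPS))
```

The printed string can then be adapted to each database's own syntax and field restrictions.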

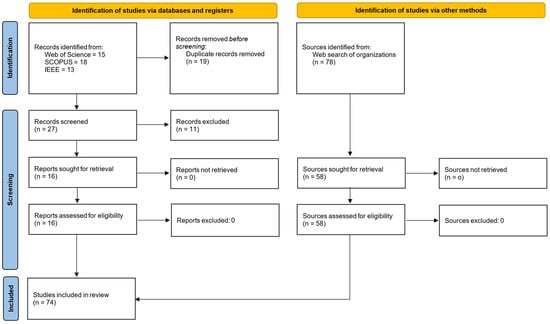

Source Selection: After duplicate removal, all records were screened by title and abstract or executive summary against the eligibility criteria. Sources were excluded if they lacked a European scope, failed to address AI deployment in smart city solutions, or did not engage substantively with regulatory or technological issues. The remaining sources were reviewed in full to assess their inclusion. A PRISMA flow diagram (Figure 2) summarizes the number of sources identified, screened, excluded, and included in the final synthesis.
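The counts reported in a PRISMA flow diagram follow mechanically from the screening decisions. The following is a minimal Python sketch, assuming a hypothetical `Record` type that stores one include/exclude decision per stage and a simple DOI-or-title deduplication rule; reference managers used in practice typically apply fuzzier matching.

```python
import re
from dataclasses import dataclass
from typing import Optional


@dataclass
class Record:
    title: str
    doi: Optional[str]  # gray literature often lacks a DOI
    passes_screening: bool  # title and abstract (or executive summary) decision
    passes_full_text: bool  # full-text eligibility decision


def dedup_key(rec: Record) -> str:
    """Use the DOI when present; otherwise a lowercase, alphanumeric-only title."""
    if rec.doi:
        return rec.doi.strip().lower()
    return re.sub(r"[^a-z0-9]", "", rec.title.lower())


def prisma_counts(records: list[Record]) -> dict[str, int]:
    """Tally the boxes of a PRISMA flow diagram from per-record decisions."""
    seen = set()
    unique = []
    for rec in records:
        key = dedup_key(rec)
        if key not in seen:  # keep only the first occurrence of each source
            seen.add(key)
            unique.append(rec)
    screened_in = [r for r in unique if r.passes_screening]
    included = [r for r in screened_in if r.passes_full_text]
    return {
        "identified": len(records),
        "duplicates_removed": len(records) - len(unique),
        "screened": len(unique),
        "excluded_at_screening": len(unique) - len(screened_in),
        "full_text_assessed": len(screened_in),
        "excluded_at_full_text": len(screened_in) - len(included),
        "included": len(included),
    }
```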

Figure 2.

PRISMA flow diagram for source identification and selection.

Data Extraction and Synthesis: A structured extraction form was developed to chart relevant data from each source, capturing (a) regulatory provisions and constraints (e.g., AI Act classifications, GDPR requirements, NIS2 obligations), (b) technological dependencies and challenges (e.g., data interoperability, explainability, infrastructure limitations), and (c) societal impacts and public value dimensions (e.g., improved mobility, energy efficiency, citizen trust). A thematic synthesis approach was used to categorize findings under three analytical pillars: regulatory barriers, technological challenges, and societal benefits. Within each pillar, inductive sub-categorization was applied. For instance, under regulatory barriers, subthemes included compliance complexity, high-risk use-case restrictions, and fragmentation in interpretation. Selected findings were summarized in tables to illustrate key regulatory instruments, policy developments, and implementation challenges. Narrative synthesis was then applied to align these insights with the review’s core research questions, enabling an integrated understanding of how regulation interacts with innovation and social outcomes in the European smart city landscape.
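The extraction form and the inductive coding step can be pictured as a small data structure. The sketch below is illustrative only: the field names, the `(pillar, subtheme)` coding scheme, and the `synthesize` helper are hypothetical and do not reproduce the instrument actually used in this review.

```python
from collections import defaultdict
from dataclasses import dataclass, field

PILLARS = ("regulatory_barriers", "technological_challenges", "societal_benefits")


@dataclass
class ExtractionRecord:
    source_id: str
    regulatory: list[str] = field(default_factory=list)  # (a) provisions, constraints
    technological: list[str] = field(default_factory=list)  # (b) dependencies, challenges
    societal: list[str] = field(default_factory=list)  # (c) impacts, public value
    # Inductive codes as (pillar, subtheme) pairs,
    # e.g. ("regulatory_barriers", "compliance complexity").
    codes: list[tuple[str, str]] = field(default_factory=list)


def synthesize(records: list[ExtractionRecord]) -> dict[str, dict[str, list[str]]]:
    """Group source IDs by pillar, then by subtheme."""
    themes = defaultdict(lambda: defaultdict(list))
    for rec in records:
        for pillar, subtheme in rec.codes:
            if pillar not in PILLARS:
                raise ValueError(f"unknown pillar: {pillar}")
            themes[pillar][subtheme].append(rec.source_id)
    # Convert nested defaultdicts to plain dicts for display or export.
    return {pillar: dict(subs) for pillar, subs in themes.items()}
```

Keeping the coded pairs separate from the extracted text makes it easy to regenerate the summary tables whenever subthemes are merged or renamed during synthesis.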

Applicability of PRISMA: The PRISMA-ScR framework was selected for this review due to its capacity to structure and synthesize knowledge across diverse sources and disciplinary boundaries. Its emphasis on scoping rather than evaluating evidence is particularly well suited for transdisciplinary inquiries such as the regulation of artificial intelligence in smart city contexts, which span legal texts, technical standards, and policy reports. By enabling the systematic mapping of heterogeneous evidence, including normative EU legal instruments, technical white papers, and academic studies, the PRISMA-ScR supports the integration of insights from fields that do not share a unified methodological tradition. Nevertheless, certain limitations of the method should be acknowledged. PRISMA-ScR was originally designed for the aggregation of empirical studies and may be less equipped to fully capture interpretive dimensions present in regulatory analysis or governance studies. Furthermore, the reliance on document-based sources may underrepresent tacit or practice-based knowledge relevant to municipal decision-making. These limitations were partially mitigated by incorporating gray literature, official EU repositories, and cross-sectoral publications. However, the boundaries of the method must be recognized, particularly in relation to the epistemic diversity and normative character of the domains reviewed.

3. EU Regulatory Landscape for AI in Smart Cities

European smart city initiatives are subject to one of the most stringent legal frameworks for digital technology globally [,,]. A combination of general and sector-specific European Union regulations imposes requirements on artificial intelligence systems that may act as barriers or sources of friction for their deployment in urban services [,,]. This section examines the primary regulatory constraints, including data protection provisions, the Artificial Intelligence Act now in force and related proposals, and other European laws relevant to key smart city sectors [,,]. It considers how compliance obligations may impede or delay the adoption of artificial intelligence technologies [,,].

3.1. EU AI Act

A central element of the European Union’s approach to artificial intelligence governance is the Artificial Intelligence Act, the first comprehensive law on AI globally [,,]. Politically agreed in December 2023, formally adopted in 2024, and in force since 1 August 2024, the regulation introduces a harmonized, risk-based framework applicable across all member states [,]. The Artificial Intelligence Act classifies AI systems into four categories of risk: minimal, limited, high, and unacceptable, each with corresponding obligations. Most everyday applications, such as spam filters and traffic prediction tools, are considered minimal risk and are subject only to voluntary codes of conduct []. Systems classified as limited risk must comply with specific transparency requirements, such as the obligation for chatbots to disclose their artificial nature. High-risk AI systems, by contrast, must meet extensive regulatory requirements under the Act [,,].

High-risk categories, as defined in Annex III of the Artificial Intelligence Act, include AI systems used in safety-critical infrastructure, law enforcement, the administration of justice, and other contexts with substantial implications for individuals’ lives and rights [,]. Many smart city applications, such as AI-based traffic control, algorithms for public service allocation, autonomous public transport, and biometric identification in public spaces, are expected to be classified as high risk due to their impact on public safety and fundamental rights [,]. Developers and deployers of high-risk AI must implement risk management procedures, ensure the use of high-quality datasets to minimize bias, provide clear information to users, enable human oversight, and maintain detailed technical documentation and logging to support compliance audits [,].
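For a municipality procuring a high-risk system, the obligations listed above translate naturally into a documentation checklist. The following Python sketch is a hypothetical procurement aid: the obligation keys and their wording are our own paraphrase of the requirements summarized in the text rather than the legal text, and a complete dossier does not by itself establish conformity.

```python
# Paraphrased obligations for high-risk AI systems, as summarized in the text.
HIGH_RISK_OBLIGATIONS = {
    "risk_management": "risk management procedure",
    "data_quality": "high-quality datasets with bias mitigation",
    "user_information": "clear information for users",
    "human_oversight": "human oversight measures",
    "technical_documentation": "detailed technical documentation",
    "logging": "logging to support compliance audits",
}


def missing_evidence(dossier: dict[str, bool]) -> list[str]:
    """Return obligations for which the vendor dossier supplies no evidence.

    `dossier` maps obligation keys to True when evidence was provided; keys
    that are absent are treated as missing.
    """
    return [
        description
        for key, description in HIGH_RISK_OBLIGATIONS.items()
        if not dossier.get(key, False)
    ]


vendor_dossier = {"risk_management": True, "data_quality": True, "logging": True}
print(missing_evidence(vendor_dossier))
```

In this example the city would go back to the vendor for user information, human oversight measures, and technical documentation before proceeding.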

Before being placed on the market or put into service, such systems must undergo a conformity assessment and are generally required to be registered in the European Union database of high-risk AI systems. At the opposite end of the risk spectrum, the Act prohibits a limited number of artificial intelligence practices classified as unacceptable. For instance, systems involving the social scoring of individuals by public authorities or those employing subliminal manipulation that causes significant harm are explicitly banned [,,]. Importantly, the Act prohibits real-time remote biometric identification in publicly accessible spaces for law enforcement purposes, such as live facial recognition, except in narrowly defined situations such as the targeted search for missing persons or the prevention of an imminent terrorist threat [,]. Other forms of remote biometric identification, such as retrospective facial recognition using closed-circuit television footage, are not banned but are classified as high risk. Consequently, police forces or municipal authorities deploying such systems must comply with the stringent safeguards established by the Act [,]. This prohibition reflects fundamental rights considerations in the European context and has direct implications for smart surveillance initiatives in urban environments.
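The distinction between prohibited and high-risk biometric uses can be made concrete as a first-pass triage rule. The Python sketch below encodes only the distinctions described in this section; the `UseCase` flags are hypothetical simplifications, and an actual classification requires legal analysis of the Act's articles and annexes, including derogations that are not modeled here.

```python
from dataclasses import dataclass
from enum import Enum


class RiskTier(Enum):
    UNACCEPTABLE = "unacceptable"
    HIGH = "high"
    LIMITED = "limited"
    MINIMAL = "minimal"


@dataclass
class UseCase:
    # All flags are hypothetical simplifications of the Act's criteria.
    social_scoring: bool = False
    harmful_subliminal_manipulation: bool = False
    remote_biometric_id: bool = False
    real_time: bool = False
    law_enforcement_in_public_space: bool = False
    narrow_exception_applies: bool = False  # e.g. targeted search for a missing person
    annex_iii_area: bool = False  # e.g. safety component in road traffic management
    interacts_with_people: bool = False  # e.g. a municipal chatbot


def triage(uc: UseCase) -> RiskTier:
    """First-pass triage: check prohibitions, then high risk, then transparency."""
    if uc.social_scoring or uc.harmful_subliminal_manipulation:
        return RiskTier.UNACCEPTABLE
    if uc.remote_biometric_id:
        live_policing = uc.real_time and uc.law_enforcement_in_public_space
        if live_policing and not uc.narrow_exception_applies:
            return RiskTier.UNACCEPTABLE
        # All other remote biometric identification, including retrospective
        # use and real-time use under an exception, is treated as high risk.
        return RiskTier.HIGH
    if uc.annex_iii_area:
        return RiskTier.HIGH
    if uc.interacts_with_people:
        return RiskTier.LIMITED
    return RiskTier.MINIMAL
```

For example, a retrospective search of CCTV footage, `UseCase(remote_biometric_id=True)`, comes out as high risk, while the same system used live by police in a public space without an applicable exception comes out as prohibited.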

The implementation of the Artificial Intelligence Act is phased. It entered into force on 1 August 2024, but its provisions apply over a transition period to allow stakeholders to prepare. The bans on unacceptable AI practices have applied since 2 February 2025 and the obligations for general-purpose AI models since 2 August 2025, while most requirements for the high-risk systems listed in Annex III apply from 2 August 2026, with high-risk AI embedded in products covered by Annex I legislation following on 2 August 2027 [,]. Cities and municipalities, as deployers or sometimes providers of AI systems, will therefore soon be legally required to ensure that any high-risk AI they use, for example, in traffic management, public safety, or administrative decisions, complies with the standards of the Act [,,]. In practice, this means that local governments must assess the risk category of AI solutions they procure, request the necessary compliance documentation from vendors, and in some cases modify or forgo certain use cases that may be prohibited, such as the use of live facial recognition through street surveillance cameras [,].
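Because obligations switch on at different dates, a simple lookup can help a city check which parts of the Act already apply to a given procurement. The sketch below hard-codes the application dates set out in Article 113 of Regulation (EU) 2024/1689 as adopted. Subsequent amendments could shift some of these dates, so the table should be checked against the current consolidated text before use.

```python
from datetime import date

# Application dates under Article 113 of Regulation (EU) 2024/1689 as adopted.
MILESTONES = [
    (date(2024, 8, 1), "entry into force"),
    (date(2025, 2, 2), "prohibitions on unacceptable practices"),
    (date(2025, 8, 2), "obligations for general-purpose AI models"),
    (date(2026, 8, 2), "most remaining provisions, incl. Annex III high-risk systems"),
    (date(2027, 8, 2), "high-risk AI in products covered by Annex I legislation"),
]


def applicable_on(day: date) -> list[str]:
    """List the milestones already in effect on the given day."""
    return [label for start, label in MILESTONES if start <= day]
```

For a contract signed in September 2025, for instance, `applicable_on(date(2025, 9, 1))` returns the first three milestones, signalling that Annex III obligations are not yet binding but will be within the contract's likely lifetime.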

The Artificial Intelligence Act aims to foster trustworthy AI. Its proponents argue that by mitigating risks and enhancing public trust, the Act will ultimately support wider adoption of artificial intelligence, including in smart cities, on a foundation grounded in safety and fundamental rights [,]. However, concerns remain that the administrative burden and uncertainty introduced by the new rules may hinder innovation at the municipal level or discourage participation by smaller providers, an issue that will be revisited in the discussion of regulatory barriers [,].

3.2. Data Protection (GDPR) and Privacy

Long before the adoption of the Artificial Intelligence Act, the European Union’s robust data protection framework had already shaped the development of smart city initiatives. The General Data Protection Regulation, applicable since May 2018, governs all processing of personal data within smart city systems. Much of the data that support smart city functions, including video from street cameras, residents’ mobility or energy consumption data, facial imagery, and vehicle registration details, constitutes personal data subject to the provisions of the General Data Protection Regulation. The regulation imposes strict requirements concerning lawfulness, purpose limitation, data minimization, storage limitation, security, and the protection of individual rights, resulting in a substantial compliance burden for municipal authorities and technology providers [,]. For example, the deployment of an artificial intelligence-based traffic camera system that captures identifiable individuals or vehicles requires a valid legal basis under the General Data Protection Regulation, such as the performance of a task in the public interest or individual consent; a clearly defined purpose, such as traffic flow analysis; and safeguards, including data anonymization or deletion protocols. Special categories of personal data, including biometric data used to identify individuals through facial recognition technologies, are subject to even stricter limitations: owing to their sensitive nature, their processing is prohibited in principle unless a specific exception applies [].
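The traffic camera example can be illustrated with a data-minimizing pipeline that keeps only what traffic flow analysis needs. In this hypothetical Python sketch, number plates arrive with each detection but are never written to the output, and raw detections are purged after an assumed 24-hour retention period. Aggregation alone does not guarantee anonymity, however: very small counts on quiet streets may still allow individuals to be singled out.

```python
from collections import Counter
from datetime import datetime, timedelta

RETENTION = timedelta(hours=24)  # hypothetical retention period for raw detections


def flow_counts(detections, bin_minutes=15):
    """Count vehicles per (road segment, time bin).

    `detections` are (timestamp, segment_id, plate) tuples. The plate is
    ignored, so no identifier reaches the output. `bin_minutes` should divide 60.
    """
    counts = Counter()
    for ts, segment_id, _plate in detections:
        start = ts.replace(minute=ts.minute - ts.minute % bin_minutes,
                           second=0, microsecond=0)
        counts[(segment_id, start)] += 1
    return counts


def purge_expired(detections, now):
    """Drop raw detections older than the retention period (deletion protocol)."""
    return [d for d in detections if now - d[0] <= RETENTION]
```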

As a consequence, cities must proceed with caution when deploying artificial intelligence systems that involve the monitoring of citizens, often being required to conduct Data Protection Impact Assessments and to consult the competent supervisory authority when such an assessment indicates a high residual risk [,]. Numerous European smart city initiatives have revised their scope in response to privacy constraints. For instance, some municipalities have opted not to use artificial intelligence for individualized facial recognition in public spaces or have adopted anonymized data analytics instead, citing concerns about compliance with the General Data Protection Regulation [,]. Researchers have observed that the European Union’s stringent personal data protection laws can present considerable obstacles to smart city development, compelling projects to allocate significant resources to compliance and, in some cases, restricting the availability of data required to support artificial intelligence applications [,].
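Whether a Data Protection Impact Assessment is mandatory can be screened first against the three cases listed in Article 35(3) of the General Data Protection Regulation. The Python sketch below is a hypothetical screening aid. The Article 35(3) list is not exhaustive, and national supervisory authorities publish further lists of processing operations requiring an assessment; these are collapsed here into a single catch-all flag.

```python
from dataclasses import dataclass


@dataclass
class Processing:
    # Art. 35(3)(a): systematic, extensive automated evaluation (incl. profiling)
    # on which decisions with legal or similarly significant effects are based.
    automated_decisions_with_significant_effects: bool = False
    # Art. 35(3)(b): large-scale processing of special categories (e.g. biometric
    # data used for identification) or of criminal-offence data.
    large_scale_special_categories: bool = False
    # Art. 35(3)(c): systematic monitoring of a publicly accessible area on a large scale.
    large_scale_public_monitoring: bool = False
    # Catch-all for the general high-risk test of Art. 35(1) or a national list.
    other_high_risk_indicator: bool = False


def dpia_screen(p: Processing) -> tuple[bool, list[str]]:
    """Return whether a DPIA is required and which triggers fired."""
    triggers = []
    if p.automated_decisions_with_significant_effects:
        triggers.append("Art. 35(3)(a)")
    if p.large_scale_special_categories:
        triggers.append("Art. 35(3)(b)")
    if p.large_scale_public_monitoring:
        triggers.append("Art. 35(3)(c)")
    if p.other_high_risk_indicator:
        triggers.append("Art. 35(1) / national list")
    return bool(triggers), triggers
```

A city-wide network of street cameras would trigger case (c) on its own; adding facial recognition would also trigger case (b).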

This has created a context in which developers of smart city technologies in Europe incur higher initial costs to implement privacy by design. In some instances, companies have redirected their focus to regions with less stringent data protection frameworks in order to test data-intensive innovations [,]. In summary, the General Data Protection Regulation remains both a foundational safeguard and a constraint for artificial intelligence in smart cities. It protects citizens’ privacy rights while simultaneously acting as a regulatory barrier when artificial intelligence solutions depend on large-scale personal data collection.

In addition to the General Data Protection Regulation, the proposed ePrivacy Regulation could have further influenced the handling of smart city data, particularly communications metadata and data from Internet of Things sensors deployed in public spaces. However, after years of stalled negotiations, the European Commission announced in its 2025 work programme its intention to withdraw the proposal, leaving its future uncertain []. At the same time, the Law Enforcement Directive (EU) 2016/680 [] governs the processing of personal data by police and other public authorities for security-related purposes. This is particularly relevant where municipal police forces use artificial intelligence for surveillance or predictive policing, adding an additional layer of data protection obligations, including the need to satisfy tests of necessity and proportionality when deploying new technologies [,].

3.3. Data Governance and Sharing Frameworks

Recognizing that data are essential to artificial intelligence, the European Union has introduced legislation to promote data sharing and availability in a trustworthy manner. The Data Governance Act, which became applicable in September 2023, establishes mechanisms for sharing public sector and personal data for uses that serve the public interest [,]. It permits the reuse of certain categories of sensitive public sector data, such as anonymized health, environmental, or transport data, under strict conditions. It also promotes the creation of data intermediation services as neutral brokers and sets rules for data altruism, allowing individuals to donate their data for societal benefit []. For smart cities, the Data Governance Act is particularly relevant, as many valuable datasets, including traffic patterns, energy consumption, and pollution levels, are held by public authorities or generated through citizen activities. Under this framework, municipalities can share data with artificial intelligence developers more confidently. For example, a city may grant researchers access to urban mobility data through a trusted intermediary without breaching privacy or relinquishing control [,].
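Before a municipality shares aggregated mobility data through an intermediary, a common statistical disclosure control step is small-cell suppression: origin-destination cells with very few trips are withheld because they may reveal individual journeys. The threshold below is a hypothetical value, as real thresholds are set case by case. Suppression on its own can also be undone if exact row or column totals are published alongside the table.

```python
K_THRESHOLD = 10  # hypothetical minimum count; real thresholds are set case by case


def suppress_small_cells(od_counts, k=K_THRESHOLD):
    """Withhold origin-destination cells with fewer than k trips.

    `od_counts` maps (origin_zone, destination_zone) to a trip count. Cells
    below k are replaced by None so that rare journeys are not released.
    """
    return {od: (n if n >= k else None) for od, n in od_counts.items()}


trips = {("Centre", "Harbour"): 240, ("Centre", "Airport"): 57, ("Harbour", "Airport"): 3}
released = suppress_small_cells(trips)
```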

The Data Governance Act also supports the development of Common European Data Spaces in strategic domains, including domains directly relevant to smart cities such as mobility, energy, and public administration []. Indeed, an EU-supported initiative is underway to create a federated “Data Space for Smart and Sustainable Cities and Communities,” providing governance frameworks and standards to help cities and private partners exchange data while respecting European values [,]. This is expected to reduce a key barrier to AI adoption, namely the lack of accessible data, by increasing the pool of data available for training algorithms and enabling cross-city learning, all within a regulated trust framework [].

3.4. Data Act

Complementing the Data Governance Act, the Data Act, which entered into force in January 2024, represents another critical element of the European Union’s data strategy. While the Data Governance Act emphasizes governance mechanisms and altruistic data sharing, the Data Act establishes new legal rights to access and share data generated by connected devices and services [,]. It is designed to dismantle data silos associated with the Internet of Things. In the context of smart cities, this means that data produced by sensors, vehicles, smart appliances, and urban infrastructure should not remain exclusively under the control of manufacturers or operators. The Data Act grants users of connected products, including municipal authorities, the right to access the data generated by those devices and to share it with third-party service providers of their choice [,]. For instance, if a city installs smart waste bins or traffic sensors supplied by a vendor, the Data Act ensures that the city can retrieve the sensor data and either use it directly or provide it to an artificial intelligence company to enhance route optimization, without the data being exclusively held by the vendor.
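Once a city can retrieve its sensor data, converting vendor-specific exports into a vendor-neutral format is what makes handing the data to a third-party provider practical. The Python sketch below assumes a hypothetical JSON export for smart waste bins (all field names are invented for illustration), normalizes it into a neutral record, and selects bins above an assumed fill threshold as input to route planning.

```python
import json
from dataclasses import dataclass

# Hypothetical vendor export; real formats differ from vendor to vendor.
VENDOR_EXPORT = """
[{"deviceId": "bin-001", "fill_pct": 82, "lat": 50.85, "lon": 4.35},
 {"deviceId": "bin-002", "fill_pct": 35, "lat": 50.86, "lon": 4.36}]
"""


@dataclass(frozen=True)
class BinReading:
    """Vendor-neutral record that any third-party service can consume."""
    bin_id: str
    fill_ratio: float  # 0.0 = empty, 1.0 = full
    lat: float
    lon: float


def normalize(raw_json: str) -> list[BinReading]:
    """Map the vendor's field names and units onto the neutral record."""
    return [
        BinReading(r["deviceId"], r["fill_pct"] / 100, r["lat"], r["lon"])
        for r in json.loads(raw_json)
    ]


def bins_to_collect(readings: list[BinReading], threshold: float = 0.75) -> list[str]:
    """Select bins at or above the (assumed) fill threshold for the next route."""
    return [r.bin_id for r in readings if r.fill_ratio >= threshold]


print(bins_to_collect(normalize(VENDOR_EXPORT)))
```

Only `normalize` depends on the vendor. If the city switches suppliers, that one function changes while downstream route optimization stays untouched, which is the practical sense in which access rights help avoid lock-in.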

The Act also prohibits unfair contractual terms that hinder data sharing and introduces provisions for business-to-government data access in situations of exceptional need, such as public emergencies. For example, cities may request relevant private sector data, such as telecommunications mobility data during a crisis, for uses that serve the public interest [,]. In addition, the Data Act contains measures to facilitate switching between cloud service providers and sets interoperability requirements, helping cities avoid vendor lock-in when deploying artificial intelligence platforms []. Most of its provisions have applied since 12 September 2025, while some obligations, such as designing newly placed connected products so that their data are accessible to users, apply from September 2026. The Act is expected to significantly increase the volume of data available to support artificial intelligence solutions in urban contexts [,]. In summary, the Data Governance Act and the Data Act are European Union instruments that, rather than restricting artificial intelligence, aim to enable it by expanding access to data while maintaining public trust. They form part of the enabling regulatory framework that complements protective instruments such as the General Data Protection Regulation and the Artificial Intelligence Act.

3.5. Sector-Specific Regulations and Directives

Beyond these horizontal frameworks, several domain-specific EU laws influence AI applications in particular smart city sectors.

3.5.1. Mobility and Transportation

Intelligent Transport Systems are a central element of smart city development. In 2023, the European Union revised its Intelligent Transport Systems Directive (2010/40/EU) [] through Directive (EU) 2023/2661 [] to accelerate the deployment of digital transport services. The updated Directive explicitly addresses connected and automated mobility and mandates the interoperability of transport data and services across the European Union [,]. It requires, for example, the standardization of data relating to roadworks, traffic, and multimodal travel information, and mandates that such data be made available through a common European mobility data space []. This regulatory initiative obliges cities to ensure that their traffic data and smart mobility services comply with European specifications. In return, they gain access to broader data integration, such as vehicles communicating real-time hazard information to traffic management centers.
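In practice, standardized publication amounts to agreeing on required fields and validating records before release. The Python sketch below uses a deliberately simplified, hypothetical roadworks record. Operational exchanges in Europe typically rely on richer standards such as DATEX II, which this example does not implement.

```python
import json
from dataclasses import asdict, dataclass
from datetime import datetime


@dataclass
class RoadworksNotice:
    # Simplified, hypothetical fields; this is not a DATEX II model.
    notice_id: str
    road_name: str
    start: datetime
    end: datetime
    lanes_closed: int

    def validate(self) -> None:
        """Reject records that downstream consumers could not interpret."""
        if not self.notice_id or not self.road_name:
            raise ValueError("notice_id and road_name are required")
        if self.end <= self.start:
            raise ValueError("end must be later than start")
        if self.lanes_closed < 0:
            raise ValueError("lanes_closed cannot be negative")

    def to_json(self) -> str:
        """Validate, then serialize with ISO 8601 timestamps."""
        self.validate()
        record = asdict(self)
        record["start"] = self.start.isoformat()
        record["end"] = self.end.isoformat()
        return json.dumps(record, sort_keys=True)
```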

European Union vehicle safety regulations have begun to incorporate artificial intelligence components. Under the General Safety Regulation, advanced driver assistance systems, some of which are artificial intelligence-based, such as intelligent speed assistance and lane-keeping technologies, as well as black-box event data recorders, have been mandatory for new vehicle types since July 2022 and for all newly registered vehicles since July 2024 [,]. The European Union is also developing a unified regulatory framework for autonomous vehicles. In the 2024 to 2025 period, the European Commission aims to enable Union-wide type approval of automated vehicles, moving beyond the limited Level 3 automation rules currently governed by the United Nations Economic Commission for Europe []. By 2026, the European Union intends to permit the approval of higher-level automated driving systems, such as autonomous shuttles and freight vehicles, and to harmonize the regulation of cross-border autonomous vehicle operations [,]. This development will offer cities piloting autonomous buses or robotaxis a more coherent legal pathway at the European level, replacing the fragmented national experimental frameworks that currently govern such initiatives. In the interim, several member states, including Germany and France, have enacted national legislation authorizing autonomous driving under controlled conditions, but a comprehensive European Union regulation is forthcoming to standardize the approach. In addition, the planned European Connected and Autonomous Vehicle Alliance, an initiative of the European Union, is expected to develop common software and standards for vehicle artificial intelligence, potentially benefiting municipal vehicle fleets [,].

These transport regulations are intended to reduce legal uncertainty and support the safe deployment of artificial intelligence-driven mobility solutions. However, they also impose specific obligations, such as the mandatory inclusion of data recorders in automated vehicles and cybersecurity requirements for vehicle systems under the NIS2 Directive, which practitioners must carefully observe [].

3.5.2. Energy and Utilities

In the energy sector, European Union regulations prioritize smart grids, data accessibility, and system security. The Electricity Directive (EU) 2019/944 [] mandates the deployment of smart metering and grants consumers rights to access their energy consumption data, aligning with the objectives of the Data Act [,]. Energy network operators are required to comply with interoperability standards, such as the use of national data hubs, which support the optimization of grids through artificial intelligence [,]. The European Green Deal has prompted policies that promote digitalization to enhance energy efficiency. For instance, cities are expected to implement building automation and demand response systems to achieve European Union energy efficiency targets [,]. The Data Governance Act and the Data Act also contribute by enabling the sharing of energy data, under appropriate privacy safeguards, to support the development of artificial intelligence models for demand forecasting and improved integration of renewable energy sources [,].

In addition, the Critical Entities Resilience Directive of 2022 [] and the NIS2 Directive [] include the energy sector within their scope. This means that urban energy infrastructure employing artificial intelligence, such as automated grid management systems, must comply with cybersecurity and resilience requirements []. Although there is not yet a dedicated artificial intelligence law for the energy sector, European Union funding programs, including Horizon Europe and Digital Europe, actively support the use of artificial intelligence in urban energy management [,]. The prevailing regulatory approach in the energy domain seeks to ensure both data availability to foster innovation and the reliability of infrastructure. A practical example is found in certain member states where regulators now require utilities to offer open application programming interfaces for real-time energy consumption data. This allows third-party artificial intelligence applications to assist consumers and city managers in optimizing energy use [,]. Although such policies are enabling, compliance remains a significant operational challenge; for example, operators must verify that artificial intelligence-based forecasts do not violate the General Data Protection Regulation when household data are involved [,].

3.5.3. Public Safety and Surveillance

Surveillance remains one of the most sensitive areas in the context of smart cities. European fundamental rights law, including the General Data Protection Regulation and the Charter of Fundamental Rights of the European Union, imposes strict limitations on surveillance practices. The Artificial Intelligence Act prohibits real-time remote biometric identification in publicly accessible spaces for law enforcement purposes, subject to narrow exceptions such as the targeted search for victims, the prevention of imminent threats including terrorist attacks, and the localization of suspects of serious crimes. This effectively constitutes a ban on indiscriminate facial recognition in urban surveillance systems [,,]. Several European Union institutions and member states have affirmed that mass biometric surveillance is incompatible with the concept of human-centric smart cities. Even prior to the adoption of the Artificial Intelligence Act, the European Data Protection Board had cautioned against the use of live facial recognition in public spaces []. As a consequence, numerous European cities have either refrained from deploying facial recognition in public areas or have explicitly prohibited its use. For example, cities such as Amsterdam and Helsinki have committed to abstaining from facial recognition closed-circuit television until a clear legal framework is in place. The Artificial Intelligence Act reinforces this cautious approach [,].

At the same time, less invasive artificial intelligence surveillance tools are permitted, although still subject to regulation. For example, an artificial intelligence system that analyzes closed-circuit television feeds to detect behavioral anomalies, without identifying individuals, may be allowed as a high-risk system, provided it complies with transparency obligations and is subject to human oversight [,]. The use of artificial intelligence by law enforcement authorities, such as predictive policing algorithms or suspect identification tools, is governed by both the Artificial Intelligence Act, where applicable, and the Law Enforcement Directive. Such systems must be demonstrably necessary and proportionate under national legislation. In addition, the Artificial Intelligence Act introduces further obligations, including requirements for accuracy testing and measures to mitigate bias [,].

Cities therefore encounter regulatory barriers in the adoption of certain artificial intelligence-based policing technologies. The European Union framework requires thorough justification and the implementation of safeguards, which may delay or preclude deployment [,]. However, these regulations play a critical role in protecting citizens from potential overreach and misuse of artificial intelligence surveillance, thereby upholding societal values []. A real-world example is Hungary’s attempted implementation of biometric street cameras, which attracted criticism for its likely incompatibility with the forthcoming Artificial Intelligence Act [,]. In summary, the European Union clearly signals that although artificial intelligence can contribute to public safety, such as by enabling faster emergency response through gunshot detection or crowd monitoring algorithms, it must not do so at the expense of fundamental rights. Cities are required to innovate within these regulatory parameters, prioritizing artificial intelligence solutions that enhance safety without relying on continuous personal identification or unwarranted tracking.

3.5.4. Other Domains

In domains such as waste management, water supply, and general municipal administration, European Union law typically applies through the horizontal regulatory frameworks already discussed, including those on data governance, privacy, and the Artificial Intelligence Act, rather than through domain-specific rules for artificial intelligence [,]. Waste management, for instance, can benefit from artificial intelligence applications in route optimization and the sorting of recyclable materials. There is no European legislation prohibiting the use of artificial intelligence for routing waste collection vehicles. However, if such a system employs cameras to detect when bins are full and inadvertently captures images of individuals nearby, the General Data Protection Regulation would apply [,]. Similarly, while the Urban Wastewater Treatment Directive (EU) 2024/3019 [] and related instruments do not reference artificial intelligence, the use of artificial intelligence to monitor water quality in real time must be aligned with existing standards for environmental data reporting [].

In the area of municipal administration, the European Union’s digital government initiatives encourage the adoption of artificial intelligence, such as chatbots for electronic public services, while simultaneously promoting algorithmic transparency in public sector applications [,,]. Although these principles are not yet codified in law, soft-law instruments, including guidance issued by the European Commission on the use of artificial intelligence in public services, are followed by many cities [,]. In addition, European Union public procurement law, particularly Directive 2014/24/EU [] and related instruments, plays an important role. Cities procuring artificial intelligence systems are required to do so through competitive procedures and may include criteria ensuring compliance with ethical and legal standards in their tender specifications [,]. The European Union has issued recommendations on the procurement of artificial intelligence, advising public authorities to request algorithmic explanations and risk assessments from vendors [,]. While these recommendations are not legally binding, they influence municipal procurement practices and help establish the expectation that artificial intelligence solutions adopted by cities meet principles of trustworthiness [,].

3.6. Upcoming Liability and Safety Rules

While the Artificial Intelligence Act establishes ex ante obligations, the European Union has also considered ex post regulatory measures for instances in which artificial intelligence causes harm. In 2022, the European Commission proposed an Artificial Intelligence Liability Directive aimed at easing the process for victims to claim compensation, for example, by lowering the burden of proof [,]. This proposal was particularly relevant for municipalities, as liability rules would determine how individuals could seek redress if, for instance, an autonomous bus operated by a city were to cause injury. In early 2025, however, the Commission announced the withdrawal of the proposal owing to the absence of political consensus [,]. Concerns had been raised that the directive might lead to over-regulation or unnecessarily duplicate existing liability frameworks.

In place of the Artificial Intelligence Liability Directive, the regulatory focus has shifted to the revised Product Liability Directive (EU) 2024/2853 [], which explicitly includes software and artificial intelligence. This ensures that if a product incorporating artificial intelligence, such as a smart city sensor system or an autonomous vehicle, malfunctions and causes harm, the manufacturer, or in certain cases other economic operators in the supply chain, can be held liable under harmonized rules across the European Union. The revised Product Liability Directive entered into force in December 2024 and must be transposed by member states by December 2026. It extends strict liability to producers of artificial intelligence-enabled products and, in some cases, to software providers []. For cities, this development provides greater legal clarity, as vendors of artificial intelligence systems will bear defined liability, facilitating the recovery of damages when malfunctions occur. However, municipalities themselves may also be held liable under national law if found negligent in deploying artificial intelligence, although the application of sovereign immunity to public authorities continues to vary across national legal systems.

Furthermore, the European Union’s Cybersecurity Act establishes a framework for cybersecurity certification, and the Cyber Resilience Act, enacted in 2024, requires that products with digital elements, including connected devices and software such as artificial intelligence systems, meet essential cybersecurity requirements and bear the CE marking, with its obligations phased in between 2026 and 2027 [,,]. These measures aim to ensure that smart city Internet of Things and artificial intelligence systems are protected against cyberattacks, a critical concern as cities increasingly automate essential services. While compliance with these new safety and security obligations may increase costs or delay the deployment of certain artificial intelligence solutions pending certification, the long-term objective is to prevent severe incidents, such as the malicious takeover of municipal artificial intelligence infrastructure [,].

3.7. A Dense Regulatory Framework

In summary, by 2025 the EU has built a dense regulatory framework affecting AI in smart cities. Protective instruments such as the AI Act, the GDPR, and sectoral privacy and safety rules set boundaries and obligations, particularly concerning ethical use, transparency, and citizen trust, that can act as barriers to, or slow down, the adoption of certain AI technologies in cities. Enabling instruments such as the Data Governance Act, the Data Act, and the updated ITS standards, by contrast, seek to supply the data and legal clarity that AI innovation requires by promoting interoperability, secure data access, and common standards. This combination illustrates a dual-purpose regulatory strategy, safeguarding fundamental rights while enabling innovation, and reflects the EU’s aim of promoting technologically advanced smart cities that are also “lawful and ethical”.

Yet, this regulatory architecture can appear opaque to municipal stakeholders who must coordinate compliance across overlapping domains of data protection, cybersecurity, product safety, and sector-specific standards. To support a more integrated understanding, Table 1 presents a consolidated overview of the principal EU regulatory instruments discussed in this review, highlighting their scope, relevance to smart cities, key obligations, and implementation status. This synthesis underscores the density of the framework and the operational complexity it introduces, particularly for city-level authorities and SMEs tasked with deploying trustworthy AI systems.

Table 1. Chronological overview of EU regulatory instruments governing AI in smart cities.

The next sections delve into how these regulations translate into on-the-ground barriers and challenges for smart city stakeholders and how overcoming them can unlock significant societal benefits.

4. Regulatory Barriers to AI Adoption in Smart Cities

While the EU’s comprehensive regulatory framework provides important safeguards, it also introduces non-trivial barriers to the adoption of AI in smart city initiatives. These barriers can be legal, administrative, and financial in nature, stemming from the need to comply with multiple regulations and the uncertain interpretation of new rules. Here, we analyze the major regulatory barriers faced by cities and companies deploying AI in urban environments, as identified by studies and practical experiences.

4.1. Compliance Burden and Complexity

One of the most immediate barriers is the complexity involved in complying with the European Union’s regulatory framework for artificial intelligence and data [,,]. A municipal authority seeking to implement an artificial intelligence system must navigate a dense and multifaceted body of legislation. This includes ensuring compliance with the General Data Protection Regulation for data protection, determining whether the system qualifies as high risk under the Artificial Intelligence Act and meeting the associated obligations, considering relevant sector-specific regulations in areas such as transport or health, and addressing applicable public procurement and cybersecurity requirements [,,,]. Meeting these legal obligations frequently demands specialized legal and technical expertise, which many municipalities may not possess internally.

For example, carrying out a Data Protection Impact Assessment for a smart city artificial intelligence project, as required under the General Data Protection Regulation for high-risk data processing, is a complex undertaking. It may necessitate the engagement of external data privacy consultants and can significantly delay project implementation [,,,]. The Artificial Intelligence Act will introduce similar obligations, including conformity assessments and extensive documentation for high-risk systems. These are tasks with which cities have limited experience, as safety certification has traditionally applied to physical products rather than algorithmic systems [,,,]. Smaller municipalities may find these processes particularly burdensome and difficult to manage [,,].

The costs associated with compliance, including the implementation of privacy by design, the conduct of audits, and the preparation of technical documentation to satisfy the requirements of the Artificial Intelligence Act, can place considerable strain on municipal budgets and may deter innovation. A recent qualitative study of European smart city projects found that high compliance and localization costs, particularly those related to adapting solutions to meet European legal standards, often compel developers either to seek additional funding or to reduce the scale and ambition of their artificial intelligence deployments [,]. In some instances, promising artificial intelligence pilot projects are discontinued by city authorities who are concerned about potential legal risks or who lack the financial capacity to manage the associated compliance burden. This situation has been characterized as a chilling effect, in which well-intentioned regulations inadvertently inhibit public sector innovation in artificial intelligence due to apprehension over non-compliance [,,].

4.2. Data Access Limitations Due to Privacy

Although the European Union actively promotes data sharing, privacy regulations continue to limit access to certain datasets that could support artificial intelligence development [,]. The General Data Protection Regulation, while essential for safeguarding individual rights, prevents the unrestricted use of many types of urban data unless they are anonymized or processed on a valid legal basis, such as informed consent or the performance of a task carried out in the public interest [,,,]. Effective anonymization is technically complex and can reduce the utility of data for artificial intelligence applications [,]. City officials have reported challenges in using historical closed-circuit television footage to train computer vision models due to privacy requirements that mandate the blurring of faces and vehicle registration plates. This process is costly and often beyond the capacity of small teams, resulting in potentially valuable training data remaining unused [,,,].

Similarly, real-time individual-level data, such as tracking individual vehicles across a city to analyze congestion patterns, is generally prohibited unless robust pseudonymization techniques are applied [,,]. The purpose limitation principle under the General Data Protection Regulation further restricts data reuse. Data collected for one purpose, such as billing for water usage, cannot typically be repurposed for a different artificial intelligence project, such as predicting neighborhood water demand, without either obtaining renewed consent from citizens or demonstrating that the new use is compatible with the original purpose []. Obtaining consent on a population-wide scale is often impractical for municipal initiatives, thereby creating a significant barrier to the reuse of data [,].

As a consequence, developers are sometimes required to rely on synthetic data or simulations to train artificial intelligence models, which may not be as effective as real-world data [,,]. Alternatively, cities may limit their use of artificial intelligence to aggregate non-personal data. For example, general traffic flow counts may be used instead of detailed vehicle trajectory data, thereby constraining the potential sophistication of the artificial intelligence solution [,]. One scholar has observed that smart city development in the European Union “suffers from one-sided inputs” because privacy regulations restrict access to certain data streams, which may introduce bias into artificial intelligence systems or reduce their accuracy [].
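The pseudonymization and aggregation options discussed in this subsection can be illustrated with a minimal Python sketch. It assumes a hypothetical record format of (registration plate, road segment, hour) and replaces each plate with a keyed HMAC-SHA256 token, so that journeys remain linkable without storing plates in clear, before reducing the data to distinct-vehicle counts per segment and hour. The function names, record format, and key handling are illustrative assumptions rather than a prescribed method. Because anyone holding the key can recompute the token for a known plate and thereby re-identify the vehicle, pseudonymized data of this kind remains personal data under the General Data Protection Regulation; the technique reduces risk but does not remove the need for a lawful basis or safeguards such as storing the key separately.

```python
import hashlib
import hmac
from collections import Counter
from typing import Iterable

# Hypothetical record format: (registration plate, road segment id, hour of day).
Observation = tuple[str, str, int]


def pseudonymize_plate(plate: str, secret_key: bytes) -> str:
    """Map a plate to a keyed HMAC-SHA256 token.

    Under one key the same plate always yields the same token, so trips can be
    linked; without the key the token cannot be computed from a plate.
    """
    normalized = plate.replace(" ", "").upper()
    return hmac.new(secret_key, normalized.encode("utf-8"), hashlib.sha256).hexdigest()


def pseudonymize(observations: Iterable[Observation], secret_key: bytes) -> list[Observation]:
    """Replace every plate in the observations with its token."""
    return [(pseudonymize_plate(plate, secret_key), segment, hour)
            for plate, segment, hour in observations]


def distinct_vehicle_counts(pseudonymized: Iterable[Observation]) -> Counter:
    """Count distinct vehicles per (segment, hour), discarding the tokens."""
    unique = {(segment, hour, token) for token, segment, hour in pseudonymized}
    return Counter((segment, hour) for segment, hour, _ in unique)


if __name__ == "__main__":
    # Illustrative key only; in practice it would be generated randomly and
    # stored separately from the data, under access control.
    key = b"illustrative-key-not-for-production"
    raw = [
        ("AB 123 CD", "S1", 8),
        ("ab123cd", "S1", 8),   # same vehicle, different formatting
        ("XY 999 ZZ", "S1", 8),
        ("AB 123 CD", "S2", 9),
    ]
    print(distinct_vehicle_counts(pseudonymize(raw, key)))
```

One design option under this approach is to rotate the key periodically, which limits how long individual trips can be linked while still supporting short-term congestion analysis.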

While the Data Governance Act now offers mechanisms to enable the secure sharing of sensitive data, such as through data altruism and trusted intermediaries, these frameworks remain in their early stages as of 2025 and have not yet been widely adopted by cities. As a result, the data access barrier continues to persist in practice [,,].

4.3. Restrictions on High-Risk Use-Cases

The Artificial Intelligence Act’s classification of certain smart city applications as high risk or prohibited presents a direct regulatory obstacle to their adoption. The most prominent example is the prohibition of real-time remote biometric identification in public spaces for law enforcement purposes. Even if a city’s police authorities consider facial recognition useful for locating missing persons or apprehending suspects more efficiently, European Union law prevents the broad deployment of such systems [,,]. This effectively removes a technological option from the smart city repertoire, for better or for worse, and has prompted some security technology companies to redirect their focus away from such solutions in the European market [,,].

Even in the absence of outright prohibitions, the obligations associated with high-risk artificial intelligence systems may significantly delay deployment [,,]. For example, a city-operated artificial intelligence system used to allocate social housing, which involves decisions that affect individuals’ rights, would be classified as high risk. In such cases, the city or its vendor must implement comprehensive risk management procedures, maintain audit logs, and meet other compliance requirements. If these measures are not in place, the system cannot legally be brought into operation [,,]. As a result, regulation may slow the introduction of beneficial artificial intelligence solutions. An artificial intelligence-based traffic management system that dynamically controls traffic signals could be considered sufficiently critical to qualify as high risk due to its implications for public safety. If the vendor is unable to complete the required conformity assessment, the city would not be permitted to activate the system until compliance is fully achieved. Concerns have also been raised about a potential shortage of notified bodies capable of certifying those artificial intelligence systems that require third-party assessment during the initial implementation period [,,].

Such delays constitute a barrier to time-sensitive innovation. Some city officials have expressed concern that by the time compliance procedures are completed, the technology may already be outdated or the momentum of the pilot project lost [,,]. Furthermore, the classification of systems as high risk may discourage smaller local developers or start-ups, which frequently serve as drivers of smart city innovation [,,]. Concerns about liability also influence decision-making. Under the revised product liability rules, together with national liability law, legal action following harm caused by an artificial intelligence system deployed by a municipality may become easier to bring [,,]. While this framework offers important protection for citizens and is beneficial in principle, it contributes to a risk-averse environment among public officials. Many may choose to adopt a cautious approach, favoring traditional technologies over the perceived legal uncertainties associated with artificial intelligence [,].

In summary, regulatory risk aversion constitutes a significant barrier. Municipal leaders may choose to avoid or postpone the adoption of artificial intelligence not because it is explicitly prohibited by law but because the legal framework introduces uncertainty and the possibility of legal liability [,,].

4.4. Fragmentation and Uncertainty in Interpretation

In the initial phase of implementation of the Artificial Intelligence Act and related legislation, there remains uncertainty regarding the interpretation of specific provisions in the context of smart cities. Determining whether a particular municipal artificial intelligence system qualifies as high risk can be complex. Annex III of the Act lists high-risk use cases, such as artificial intelligence systems used as safety components in the management and operation of road traffic. However, questions arise regarding systems that only provide recommendations to human operators, such as an artificial intelligence tool offering guidance to traffic managers without directly controlling traffic signals. Whether such a system qualifies as high risk remains open to interpretation. In the absence of jurisprudence or formal guidance, cities are left operating within a legal gray area. This uncertainty constitutes a barrier, as many organizations adopt a cautious stance or seek legal opinions, which requires additional time and resources. The International Association of Privacy Professionals, for example, has noted that the boundary between prohibited and permitted uses remains unclear, for instance between emotion recognition systems, which the Act disfavors, and non-invasive biometric analysis [,]. These ambiguities may deter the deployment of such technologies in Europe altogether [,].

Moreover, although the Artificial Intelligence Act establishes a unified regulatory framework, its implementation may differ across member states in practice. Each member state is responsible for designating enforcement authorities, which may be existing regulatory bodies or newly created institutions, and for setting penalties within the limits established by the Act. As a result, a company supplying artificial intelligence systems to municipalities may encounter differing regulatory expectations in jurisdictions such as France and Germany. Until full harmonization is achieved, firms often adhere to the most stringent interpretation to minimize legal risk, thereby increasing development costs [,,]. This situation is reminiscent of the early implementation period of the General Data Protection Regulation, when regulatory uncertainty and divergent national approaches led many organizations to adopt a cautious stance in deploying new data-driven services [,].

Another relevant consideration concerns public procurement. While European Union procurement directives mandate open tendering processes, some municipal procurement officials remain uncertain about their obligations under the Artificial Intelligence Act. Questions persist regarding whether procurement contracts must include specific artificial intelligence compliance clauses or whether purchasing a system that has not yet been certified under the Act could later be deemed non-compliant once the legislation is fully in force [,]. This uncertainty has made some procurement departments slower or more hesitant to approve artificial intelligence acquisitions in 2025, thereby creating a de facto barrier to adoption. Although anecdotal, such concerns have been reported at various smart city conferences. It is anticipated that as the regulatory environment matures, and with the publication of interpretative guidance from bodies such as the Commission’s Artificial Intelligence Office, this uncertainty will diminish. However, during the transitional period, it remains a significant obstacle [,].

4.5. Resource Inequalities and the Innovation Gap

Larger global technology firms typically possess the legal resources and financial capacity to ensure compliance with European Union regulations and, in some cases, to influence their formulation [,,]. In contrast, smaller artificial intelligence start-ups and municipal information technology departments often lack such capabilities. This regulatory barrier disproportionately affects smaller actors, despite their frequent role in driving local innovation [,]. A start-up offering a promising artificial intelligence solution for urban traffic management may choose to avoid the European market or fail to scale its operations due to the cost of achieving legal compliance, resulting in cities missing out on potentially more effective or affordable technologies [,,]. Similarly, wealthier municipalities, such as national capitals, are generally able to invest in pilot programs and navigate compliance obligations, whereas smaller cities may be compelled to abandon artificial intelligence initiatives due to limited resources [,].

This situation may result in an innovation gap in which only well-resourced cities are able to realize the benefits of artificial intelligence, contrary to the European Union’s objectives of territorial cohesion and equal opportunity [,]. In response to this concern, the Artificial Intelligence Act provides for regulatory sandboxes, experimental frameworks that allow innovators to collaborate with regulators in testing artificial intelligence systems under conditions of regulatory flexibility [,,]. These sandboxes offer the potential for cities to trial artificial intelligence solutions in a controlled setting, thereby mitigating some compliance-related barriers. However, as of 2025, most sandboxes are still being established, with each member state required to have at least one operational by August 2026, and it is not yet clear how effective they will be in lowering practical barriers to deployment [,]. In the meantime, the uneven ability of different cities to manage regulatory demands constitutes a systemic barrier at the ecosystem level [,,].

4.6. Ethical and Public Acceptability Concerns Enforced by Regulation

European Union regulations often reflect underlying ethical principles such as fairness, transparency, and the mitigation of bias. In some cases, even where a smart city artificial intelligence project is legally permissible, it may encounter resistance due to public opposition grounded in these values. This dynamic can be understood as a societal regulatory effect [,]. For example, an artificial intelligence system used to allocate municipal services may fully comply with high-risk requirements under the Artificial Intelligence Act. Nevertheless, if the public perceives the system as a non-transparent mechanism that delivers unfair outcomes, it may provoke backlash or lead to political reluctance to proceed [,,]. Public awareness and activism concerning digital rights are particularly prominent in Europe. In response to civil society pressure, numerous municipal councils have voluntarily imposed moratoria on the use of specific artificial intelligence technologies, such as facial recognition, even in the absence of formal legal prohibitions [,,].

This cultural context means that, beyond compliance with formal legal requirements, cities must demonstrate algorithmic transparency and fairness to the public, which functions as a normative barrier [,,]. The Artificial Intelligence Act introduces certain transparency obligations, such as user notifications and, in some cases, summary explanations for decisions made by high-risk systems. However, delivering meaningful transparency for complex artificial intelligence models is a considerable challenge [,,]. Ensuring that a system is unbiased and explainable can be as demanding as fulfilling statutory obligations [,,]. This frequently necessitates additional technical work, including the implementation of bias audits and the integration of explanation tools. In some instances, it may also require compromising on model accuracy to achieve greater simplicity or interpretability [,].
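As an illustration of the bias audits mentioned above, the following minimal Python sketch computes per-group selection rates for a hypothetical set of binary decisions, such as approvals in a service-allocation system, together with two common summary measures: the gap between the highest and lowest rate, and their ratio. The group labels and data are invented for illustration. The Artificial Intelligence Act does not prescribe a specific fairness metric or threshold, a single metric such as this cannot establish that a system is unbiased, and collecting the group attributes needed for such an audit is itself subject to data protection rules.

```python
from collections import defaultdict
from typing import Iterable

# Each decision is (group_label, outcome); labels here are hypothetical.
Decision = tuple[str, bool]


def selection_rates(decisions: Iterable[Decision]) -> dict[str, float]:
    """Return the share of positive outcomes for each group."""
    totals: dict[str, int] = defaultdict(int)
    positives: dict[str, int] = defaultdict(int)
    for group, outcome in decisions:
        totals[group] += 1
        if outcome:
            positives[group] += 1
    if not totals:
        raise ValueError("no decisions supplied")
    return {group: positives[group] / totals[group] for group in totals}


def parity_gap(rates: dict[str, float]) -> float:
    """Difference between the highest and lowest group selection rate."""
    return max(rates.values()) - min(rates.values())


def parity_ratio(rates: dict[str, float]) -> float:
    """Lowest selection rate divided by the highest (1.0 means equal rates)."""
    highest = max(rates.values())
    return min(rates.values()) / highest if highest > 0 else 1.0


if __name__ == "__main__":
    # Invented example: approvals split by (hypothetical) district of residence.
    decisions = [
        ("district_a", True), ("district_a", True),
        ("district_a", False), ("district_a", True),
        ("district_b", True), ("district_b", False),
        ("district_b", False), ("district_b", False),
    ]
    rates = selection_rates(decisions)
    print(rates)
    print(f"gap={parity_gap(rates):.2f}, ratio={parity_ratio(rates):.2f}")
```

The summary functions take the computed rates rather than the raw decisions, so an audit can compare several metrics on one pass over the data; a large gap would prompt further investigation rather than settle the question of bias.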

City administrators must consider the risk that an artificial intelligence system producing a controversial outcome, such as a predictive policing model that disproportionately targets minority neighborhoods, could not only expose the city to legal challenges under anti-discrimination law but also erode public trust [,]. The imperative to align with ethical and equality principles, which are strongly embedded in European Union law and values, constitutes a barrier because it constrains the use of certain artificial intelligence approaches, such as opaque or black-box models, and requires additional measures to validate system behavior [,,]. Nevertheless, cities largely recognize this barrier as one that must be addressed in order to prevent societal harm.

4.7. Overcoming Regulatory Barriers

In examining these regulatory barriers, it becomes evident that the European Union’s protective approach introduces short- to medium-term challenges for the adoption of artificial intelligence. Municipalities must invest in legal expertise, engage with stakeholders, and, in some cases, forgo the most advanced artificial intelligence techniques in favor of more transparent, though potentially less powerful, alternatives [,]. The barriers include compliance costs, limitations on data access, restrictions on use cases, legal uncertainty, disparities in institutional capacity, and ethical constraints [,,].

While this may present a daunting picture, it reflects only one dimension of the broader landscape. Many of these barriers are being addressed through capacity-building efforts and the adaptation of technologies. For example, best practices and technical tools are emerging, some of them supported by European Union funding, that aim to automate elements of General Data Protection Regulation compliance or to facilitate the testing of artificial intelligence systems for bias. These innovations are expected to reduce the regulatory burden over time [,,]. The following section explores the technological challenges that are closely linked to these regulatory issues, noting that the resolution of regulatory constraints often depends on technical advances, such as privacy-enhancing technologies and explainable artificial intelligence methods [,]. By addressing regulatory and technological challenges in tandem, smart cities can chart a responsible path towards realizing the benefits of artificial intelligence [,].

5. Technological Challenges in Implementing AI in Smart Cities

In addition to regulatory barriers, smart city stakeholders encounter a range of technological challenges when implementing artificial intelligence solutions. These challenges are often closely linked to regulatory concerns. For instance, technical limitations such as insufficient data may be compounded by legal restrictions on data usage, while, in some cases, technical tools can help alleviate regulatory constraints, such as the use of privacy-enhancing technologies to comply with the General Data Protection Regulation. This section examines the principal technical and operational challenges, including issues related to data, infrastructure, algorithms, and institutional capacity, that cities must address in order to adopt artificial intelligence effectively.

5.1. Data Quality, Silos, and Interoperability

Artificial intelligence systems are only as effective as the data on which they are trained and which they continuously receive. Although cities produce vast volumes of data, ensuring their quality and accessibility presents a fundamental challenge. Urban data are frequently siloed across various departments and systems, with traffic data stored in one database, public transport data in another, and utility usage data elsewhere, often in incompatible formats and without standardization. Integrating these datasets to enable holistic artificial intelligence analysis, such as coordinating traffic control with pollution monitoring and public transport schedules, is often extremely difficult. Hence, interoperability challenges pose a significant barrier to seamless system integration [,]. Legacy systems commonly used by municipal departments may not be compatible with modern artificial intelligence platforms. For instance, traffic signal control infrastructure may operate using proprietary protocols, making real-time data extraction difficult and limiting an artificial intelligence system’s capacity to achieve a comprehensive view of traffic conditions.
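The integration problem can be made concrete with a small example. The sketch below assumes two hypothetical departmental exports describing the same street segment: a semicolon-separated traffic file with ISO 8601 timestamps and a JSON air-quality feed with Unix epoch timestamps. It maps both onto a shared schema keyed by segment and UTC time before joining them. All field names and values are invented; in practice the target schema would ideally come from an agreed standard rather than being defined ad hoc.

```python
import csv
import io
import json
from datetime import datetime, timezone

# Hypothetical exports from two departments, using different delimiters,
# field names and timestamp conventions for the same street segment.
TRAFFIC_CSV = """segment;timestamp;veh_per_h
S-12;2025-03-01T08:00:00+00:00;1450
S-12;2025-03-01T08:15:00+00:00;1620
"""
AIR_JSON = """[
  {"sensorId": "AQ-7", "segment": "S-12", "ts": 1740816000, "no2_ugm3": 48.5},
  {"sensorId": "AQ-7", "segment": "S-12", "ts": 1740816900, "no2_ugm3": 55.0}
]"""


def load_traffic(text):
    """Map the traffic export onto the shared (segment, UTC time) key."""
    records = {}
    for row in csv.DictReader(io.StringIO(text), delimiter=";"):
        when = datetime.fromisoformat(row["timestamp"]).astimezone(timezone.utc)
        records[(row["segment"], when)] = {"vehicles_per_hour": int(row["veh_per_h"])}
    return records


def load_air(text):
    """Map the air-quality export (Unix epoch seconds) onto the same key."""
    records = {}
    for item in json.loads(text):
        when = datetime.fromtimestamp(item["ts"], tz=timezone.utc)
        records[(item["segment"], when)] = {"no2_ug_m3": float(item["no2_ugm3"])}
    return records


def merge(*sources):
    """Combine records from several sources that share the same key."""
    merged = {}
    for source in sources:
        for key, fields in source.items():
            merged.setdefault(key, {}).update(fields)
    return merged


combined = merge(load_traffic(TRAFFIC_CSV), load_air(AIR_JSON))
for key in sorted(combined):
    segment, when = key
    print(segment, when.isoformat(), combined[key])
```

If the two feeds sampled at slightly different instants (for example, 08:00:03 and 08:00:00), an exact-key join like this would silently fail to match them. Real pipelines typically resample to common time bins first, which is one reason why integration is harder than it first appears.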

Data quality presents an additional challenge. Sensors may produce errors or contain data gaps. For example, a malfunctioning air quality sensor could transmit inaccurate readings, potentially misleading an artificial intelligence model. Many municipalities lack robust data governance frameworks, resulting in datasets that may contain inaccuracies, outdated information, or embedded biases, such as crime statistics that systematically underreport certain types of incidents [,,]. Training artificial intelligence systems on such data can yield unreliable or distorted outcomes [,]. Achieving data interoperability frequently requires the adoption of common standards. The European Union’s Intelligent Transport Systems Directive and the broader push towards common European data spaces promote this objective. However, the practical implementation of these standards, such as DATEX II for traffic data or CityGML for spatial data, is technically complex and often progresses slowly [,,].
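Simple automated checks can catch the kinds of sensor errors described above before they reach a model. The sketch below, written under stated assumptions, flags readings outside a plausible range and gaps longer than the nominal sampling interval for a single air-quality sensor. The range limits, the ten-minute interval, and the readings are hypothetical values chosen for illustration, not regulatory thresholds.

```python
from datetime import datetime, timedelta


def quality_report(readings, low, high, expected_interval):
    """Flag implausible values and missing samples in one sensor's time series.

    readings: list of (timestamp, value) pairs sorted by timestamp; a value of
    None marks a sample reported without a measurement.
    low, high: plausible range for this sensor type (an assumption to be set
    per pollutant and device).
    expected_interval: nominal sampling period as a timedelta.
    """
    implausible = [(t, v) for t, v in readings if v is None or not low <= v <= high]
    gaps = [
        (t_prev, t_next)
        for (t_prev, _), (t_next, _) in zip(readings, readings[1:])
        if t_next - t_prev > expected_interval
    ]
    return {"implausible": implausible, "gaps": gaps}


start = datetime(2025, 3, 1, 10, 0)
step = timedelta(minutes=10)
# Hypothetical NO2 readings (ug/m3): one stuck-at-maximum value and two missing samples.
series = [
    (start, 41.0),
    (start + step, 999.0),
    (start + 2 * step, 43.5),
    (start + 5 * step, 44.0),
]
report = quality_report(series, low=0.0, high=500.0, expected_interval=step)
print(report)
```

Checks of this kind only detect obvious faults. Subtler problems, such as gradual sensor drift or systematically biased historical records, require comparison against reference stations or domain review.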

Resolving data silos may require overhauling IT systems or investing in data middleware. Until this is done, AI implementations are frequently confined to narrow vertical domains, because merging data horizontally across departments is too difficult [,]. This limitation prevents cities from fully exploiting the predictive power of AI, which often derives from connecting diverse data sources. Empirical evidence illustrates how data integration affects AI deployment. In Barcelona, the introduction of an AI-based smart grid platform, supported by more than 19,000 smart meters, contributed to measurable reductions in municipal energy consumption and improved HVAC performance in public buildings []. By contrast, in Helsinki, despite the extensive availability of open datasets through the Helsinki Region Infoshare platform, technical reports indicate persistent interoperability issues due to inconsistent metadata structures and varying data formats. These issues complicate the integration of heterogeneous urban datasets for advanced AI modelling [].

5.2. Infrastructure and Connectivity Constraints

Smart city artificial intelligence solutions typically depend on a robust digital infrastructure. This includes extensive sensor networks through Internet of Things devices, high-speed connectivity such as fifth-generation or fiber-optic networks, and access to edge or cloud computing resources. Many municipalities, especially smaller ones, do not yet possess the necessary infrastructure to support such systems [,]. For example, operating an artificial intelligence system that manages traffic in real time may require numerous cameras or Internet of Things detectors at every intersection, along with a reliable, low-latency network to transmit the data to a centralized or edge computing unit. Where network bandwidth is insufficient or sensor coverage is incomplete, the performance of the artificial intelligence system is significantly constrained [,]. Certain advanced applications, including autonomous vehicles or drone-based surveillance, depend critically on fifth-generation networks to achieve the required low latency. Consequently, delays in telecommunications infrastructure deployment can postpone the implementation of artificial intelligence solutions that rely on such connectivity [,].

Computing infrastructure also presents a significant challenge. Artificial intelligence algorithms, particularly those based on deep learning, are often computationally demanding. Municipal information technology departments typically lack access to high-performance computing resources such as graphics processing unit clusters, leading them to depend on cloud computing services. However, reliance on the cloud introduces concerns regarding latency for real-time operations, as well as compliance issues related to the General Data Protection Regulation and national policies governing the use of cloud services by public authorities [,,]. Establishing local edge computing infrastructure, whereby data are processed on servers located within the city’s network to reduce latency, poses both technical and financial challenges [,]. In the absence of adequate computing capacity, some cities are compelled to use simpler, less data-intensive algorithms, which may result in reduced accuracy and limited benefits.

Energy consumption and infrastructure maintenance also present notable challenges. The deployment of thousands of Internet of Things sensors, while enabling data collection at scale, imposes additional maintenance responsibilities and increases energy demands, which the municipality must account for []. Overall, infrastructure readiness remains uneven across Europe. Leading smart cities such as Barcelona and Amsterdam have made considerable progress in expanding Internet of Things coverage and ensuring reliable connectivity. However, many mid-sized municipalities are still in the process of establishing this foundational infrastructure, which often necessitates limiting the scope of artificial intelligence projects or delaying their implementation [,].

5.3. Skill and Knowledge Gaps

The implementation and management of artificial intelligence systems require specialized expertise, which many municipal administrations currently lack. The shortage of skilled personnel is a frequently cited obstacle; cities often do not employ data scientists, artificial intelligence engineers, or even information technology staff with relevant expertise [,]. This situation results in a reliance on external vendors or consultants, which can be financially burdensome and may lead to the formation of knowledge silos. In the absence of internal capacity, municipalities also face difficulties in critically assessing artificial intelligence proposals or in monitoring algorithmic performance and ethical compliance over time [,,].

Public sector institutions often rely on traditional skill sets, and while efforts to reskill staff in artificial intelligence and data analytics are underway in some municipalities, they remain incomplete [,]. In addition, senior decision-makers, including city executives and policymakers, may have a limited understanding of the capabilities and limitations of artificial intelligence. These cognitive barriers can result in either overestimation, whereby the technology is embraced uncritically without sufficient planning, or underestimation, in which potentially valuable solutions are dismissed due to a lack of understanding [,]. A systematic literature review has emphasized that executive education and awareness are essential. Bridging this knowledge gap is necessary to provide informed leadership and to support the successful implementation of artificial intelligence initiatives [,].

Where such understanding is absent, artificial intelligence projects may fail to receive political support or may be implemented ineffectively. In essence, the development of human capacity must keep pace with the deployment of technology. Achieving this alignment presents a significant challenge, requiring sustained investment in training programs, long-term institutional commitment, and, in some cases, cultural transformation within bureaucratic organizations [,,].

5.4. Algorithmic Limitations and Context Adaptation

AI models often struggle with the complex, dynamic, and context-specific nature of cities. A model trained in one city may perform poorly in another because of differences in street layout, population behavior, or even climate. For example, a computer vision system for detecting potholes may achieve high accuracy in a city with particular road surfaces and lighting conditions, yet its accuracy can drop markedly when it is transferred to a different environment. Adapting algorithms to local contexts requires additional data and tuning. In some cases, a city's data are so distinctive that off-the-shelf AI models trained on generic datasets are insufficient, and custom development is needed [,].
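One practical way to anticipate such transfer problems is to compare the distribution of model inputs in the new city against the data on which the model was trained, before relying on its outputs. The sketch below computes the population stability index (PSI), a drift measure widely used in model monitoring, for a single hypothetical input feature (mean image brightness of road photographs). The feature, the synthetic values, the bin edges, and the 0.25 alert level (a common rule of thumb rather than a formal standard) are illustrative assumptions.

```python
import bisect
import math


def bin_shares(values, edges):
    """Share of values in each bin [edges[i], edges[i+1]); the last bin is closed.

    Values outside [edges[0], edges[-1]] are ignored.
    """
    counts = [0] * (len(edges) - 1)
    for v in values:
        i = bisect.bisect_right(edges, v) - 1
        if v == edges[-1]:
            i = len(edges) - 2  # include the upper edge in the last bin
        if 0 <= i < len(counts):
            counts[i] += 1
    total = sum(counts)
    if total == 0:
        raise ValueError("no values fall inside the bin edges")
    return [c / total for c in counts]


def population_stability_index(reference, new, edges, eps=1e-6):
    """PSI = sum over bins of (p_new - p_ref) * ln(p_new / p_ref).

    A small eps avoids division by zero and log(0) for empty bins.
    """
    ref = bin_shares(reference, edges)
    cur = bin_shares(new, edges)
    return sum((c - r) * math.log((c + eps) / (r + eps)) for r, c in zip(ref, cur))


edges = [0, 50, 100, 150, 200, 256]
source_city = [100 + i % 50 for i in range(500)]  # hypothetical training-city values
target_city = [60 + i % 50 for i in range(500)]   # hypothetical new-city values (darker images)
psi = population_stability_index(source_city, target_city, edges)
print(f"PSI = {psi:.2f}")
if psi > 0.25:  # common rule of thumb, not a formal standard
    print("Large shift: collect local validation data before deployment")
```

A high index does not prove that accuracy has dropped, but it indicates that the new environment differs from the training data and that local validation data should be gathered before the model is trusted.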

Urban environments are inherently unpredictable. An artificial intelligence system controlling traffic may encounter unforeseen events, such as a spontaneous public demonstration blocking major roads or atypical traffic patterns emerging in the aftermath of the COVID-19 pandemic. Such scenarios may lie outside the system’s training data, resulting in inadequate or erroneous responses. Ensuring the robustness of artificial intelligence in the face of novel situations remains an unresolved technical challenge [,]. The potential for failures in critical urban functions also raises regulatory concerns. Municipalities are frequently required to maintain a human fallback mechanism or manual override, which adds complexity to system design and implementation [,,].
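The human fallback requirement can be reflected directly in the control logic. The sketch below is a minimal illustration, assuming a hypothetical traffic-signal model that outputs a suggested green time together with a confidence score. The suggestion is used only when the observed traffic flow lies within the range seen during training and the confidence exceeds a threshold; otherwise a fixed-time plan applies, and a manual operator override takes precedence over both. The thresholds, flow ranges, and timings are invented for illustration.

```python
from dataclasses import dataclass
from typing import Optional, Tuple

# Hypothetical fixed-time plan used whenever the model is not trusted.
FALLBACK_GREEN_SECONDS = 30


@dataclass(frozen=True)
class SignalDecision:
    green_seconds: int
    source: str  # "operator", "fallback" or "model"


def decide_green_time(
    predicted_green: int,
    confidence: float,
    observed_flow: float,
    trained_flow_range: Tuple[float, float],
    operator_override: Optional[int] = None,
    min_confidence: float = 0.7,
) -> SignalDecision:
    """Use the model's suggestion only when conditions resemble its training data.

    Priority order: a manual operator override always wins; otherwise the
    fixed-time fallback is used if the observed flow lies outside the range
    seen in training or the model's confidence is below min_confidence.
    """
    if operator_override is not None:
        return SignalDecision(operator_override, "operator")
    low, high = trained_flow_range
    if not low <= observed_flow <= high or confidence < min_confidence:
        return SignalDecision(FALLBACK_GREEN_SECONDS, "fallback")
    return SignalDecision(predicted_green, "model")


# Traffic diverted by a demonstration pushes flow far beyond anything seen in training.
print(decide_green_time(45, confidence=0.92, observed_flow=2600, trained_flow_range=(0, 1800)))
```

Checking the range of a single input is only a crude novelty test: it would miss unusual combinations of otherwise normal inputs, so a real deployment would need richer monitoring alongside the manual override.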

Another significant limitation concerns the explainability of artificial intelligence algorithms. Many of the most effective techniques, such as deep learning neural networks, function as black boxes. However, as previously noted, cities require artificial intelligence systems that are transparent and accountable [,,]. The development of explainable artificial intelligence that can be interpreted by municipal officials and understood by the public is an ongoing area of research [,]. At present, prioritizing explainability often necessitates the use of simpler models, such as decision trees or rule-based systems, which may offer reduced accuracy compared to more complex alternatives. This presents a technical trade-off between interpretability and performance [,].
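To illustrate why simpler models are easier to explain, the sketch below shows an ordered rule list (a decision list), an interpretable model family related to the decision trees mentioned above. Each output is returned together with the rule that produced it, which an official could relay to a resident. The road-repair scenario, feature names, and thresholds are hypothetical; in practice such rules might be learned from data or drafted by domain experts.

```python
from typing import Callable, Dict, List, Tuple

Request = Dict[str, float]

# Hypothetical rules for prioritizing road-repair requests, checked in order;
# the first rule whose condition holds determines the priority.
RULES: List[Tuple[Callable[[Request], bool], str, str]] = [
    (lambda r: r["hazard_reported"] == 1, "urgent", "a safety hazard was reported"),
    (lambda r: r["daily_vehicles"] > 10_000 and r["damaged_area_m2"] > 2.0,
     "high", "large damaged area on a busy road"),
    (lambda r: r["damaged_area_m2"] > 2.0, "medium", "large damaged area on a quieter road"),
]
DEFAULT = ("low", "no rule matched")


def prioritize(request: Request) -> Tuple[str, str]:
    """Return (priority, reason) so that every decision carries its own explanation."""
    for condition, priority, reason in RULES:
        if condition(request):
            return priority, reason
    return DEFAULT


print(prioritize({"hazard_reported": 0, "daily_vehicles": 12_500, "damaged_area_m2": 3.1}))
```

A deep neural network trained on the same requests might capture patterns that these few rules miss, but it could not justify an individual decision as directly; this is the interpretability and performance trade-off described above.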

Finally, certain urban challenges remain at the forefront of artificial intelligence research. Examples include truly multimodal mobility optimization, which involves balancing vehicles, drones, and pedestrian flows within a single model, as well as community sentiment analysis that is free from bias. In these domains, the underlying algorithms are still in development, and technology has not yet reached full maturity. As a result, the current technical limitations of artificial intelligence represent a barrier to realizing the broader vision of fully integrated smart cities [,].

5.5. Cybersecurity and Reliability