Abstract

The underlying design of large language models (LLMs), trained on vast amounts of human-written text, implies that chatbots based on them will almost inevitably retain some human personality traits. That is, we expect that LLM outputs will tend to reflect human-like features. In this study, we used the ‘Big Five’ personality traits tool to examine whether several chatbot models (ChatGPT versions 3.5 and 4o, Gemini, and Gemini Advanced, all tested in both English and Polish) displayed distinctive personality profiles. Each chatbot was presented with an instruction to complete the International Personality Item Pool (IPIP) questionnaire “according to who or what you are,” which left open whether the answer would derive from a purported human or from an AI source. We found that chatbots sometimes chose to respond in a typically human-like way, while in other cases the answers appeared to reflect the perspective of an AI language model. The distinction was examined more closely through a set of follow-up questions. The more advanced models (ChatGPT-4o and Gemini Advanced) showed larger differences between these two modes than the more basic models did. In IPIP-5 terms, the chatbots tended to display higher ‘Emotional Stability’ and ‘Intellect/Imagination’ but lower ‘Agreeableness’ compared to published human norms. The spread of characteristics indicates that the personality profiles of chatbots are not static but are shaped by the model architecture and its programming as well as, perhaps, the chatbot’s own inner sense, that is, the way it models its own identity. Appreciating these philosophical subtleties is important for enhancing human–computer interactions and perhaps building more relatable, trustworthy AI systems.

Keywords:

artificial intelligence; chatbot; ChatGPT; Gemini; large language model; personality; big five

1. Introduction

The rapid advancement of artificial intelligence (AI) has brought about a significant transformation in human–computer interactions [1,2]. A great step forward has been the development of large language models (LLMs) and chatbots based on them [3,4]. These sophisticated AI systems are designed to understand and generate human-like texts, enabling them to engage in complex and often nuanced conversations. These AI systems are on the verge of being a core part of various fields of activity, from customer service [5] to medical or psychological support [6,7], so gaining insights into the personality traits they present to the world is important.

First, it should be noted that in the context of AI the term ‘personality’ is ill-defined, because it is typically reserved for aspects of a person, a human being, rather than for an artificial system that imitates a human (which, of course, it is not). One therefore has to face the fundamental metaphysical question of whether it is legitimate to regard AI as having any “personality” at all. One could also ask whether any personality factors might color AI-generated content in the domains that AI serves, such as health [8], social support [9], or academic achievement [10].

In this context, there is an ongoing discussion as to whether LLMs resemble humans not only in having a personality but also in having, or even being able to have, a capacity for reflection or self-awareness, i.e., awareness of one’s own personality, individuality, and ego-identity, or, as Erikson (cited in Levesque) puts it, “the sense of identity that provides individuals with the ability to experience their sense of who they are, and also act on that sense, in a way that has continuity and sameness” [11].

This study takes a pragmatic rather than a philosophical approach to the question. It applies existing personality measures to commercially available LLMs and examines the result. It uses the Big Five personality traits tool and asks LLMs to supply an answer about themselves. We then analyze and categorize these answers [12]. In this investigation, we used the basic free-access versions (ChatGPT-3.5 and Gemini) and the more advanced models under a full or partial paywall (ChatGPT-4o and Gemini Advanced).

The Big Five personality traits model, as introduced by Goldberg, is one of the most widely accepted frameworks for understanding human personality [12,13]. It categorizes personality into five broad dimensions: Extraversion, Agreeableness, Conscientiousness, Emotional Stability, and Intellect/Imagination. In humans, these traits manifest in diverse and complex ways. For example, Conscientiousness seems to be a very good predictor of job performance across various occupations [14]. There are also some cultural differences in personality traits: for example, people from East Asian regions score lower on Extraversion compared to other regions [15].

Transposing these personality traits to LLMs, we wondered if they might exhibit analogous behaviors. Our hypothesis was that the personality traits of LLMs are probably a mixture of their in-built programming and the characteristics of the training data. While we do not believe LLMs possess genuine personalities or self-awareness, their responses need to simulate human-like traits in order to satisfactorily fulfill their chatbot roles.

The Big Five model has already been applied to chatbots in several studies. A study of the self-perception and political biases of ChatGPT (version 3.5) [16] found that it is highly open to experience (i.e., intellectual and imaginative, in Goldberg’s terminology) and agreeable; it also tends toward progressive and libertarian views. Another study, of ChatGPT-3.5 and -4o, showed that ChatGPT-4o exhibited behavioral and personality traits that were statistically indistinguishable from those of the normal population [17]. A further study showed that ChatGPT-4o can adjust its answers to questions about personality traits on the basis of user input [18], and another that ChatGPT-4o can correctly infer personality traits from samples of written text [20]. Finally, one study did not assess the Big Five traits of LLMs directly but did show that GPT-3 can accurately profile how public figures are perceived in terms of the Big Five traits [19]. Taken together, these findings suggest that the inner mechanisms of LLMs can not only provide factual data (such as dates and locations) but can also exhibit certain subjective, psychological, and emotional features normally reserved for humans.

The rationale for the present study was to explore the innate personality of LLM-based chatbots by comparing the responses of different chatbots. In addition, we wanted to see whether the results depended on the language in which the questions were presented (English or Polish), i.e., whether LLMs might be affected by the training material in each language, in which case some cultural traits might be transferred as well. We also note that psychology seems to be underrepresented in LLM research, even though the prime purpose of LLMs is communication. For example, the PubMed search term “chatgpt education” returned 1179 hits and “chatgpt medicine” 1964 hits, whereas “chatgpt psychology” returned just 115 hits (search made on 24 June 2024).

The aim of this study was to investigate whether chatbots display any distinctive personality traits. The specific questions we investigated were as follows: (1) Are there distinct differences between chatbots, in particular between ChatGPT (versions 3.5 and 4o) and Gemini (Gemini and Gemini Advanced)? (2) Do chatbots show different personality traits when different languages (English and Polish) are used? (3) Do chatbots have personalities different from that of the average person in English-speaking and Polish-speaking countries? (4) More speculatively, can chatbots respond in terms of their own identity as AI beings (that is, their innate ‘AI-ness’)?

2. Materials and Methods

The four chatbots—ChatGPT-3.5, ChatGPT-4o (OpenAI, San Francisco, CA, USA), Gemini (called for the purpose of this paper ‘standard’), and Gemini Advanced (Google, Mountain View, CA, USA)—were tested on 31 May 2024.

2.1. Testing Paradigm

Each chatbot was presented with an instruction to respond to the questionnaire evaluating the Big Five personality traits (the questionnaire itself was also supplied). The instruction was presented 10 times to account for possible variability in responses [21,22], with all repetitions made within about half an hour. Both the Polish and the English versions of the instruction were presented, giving 20 trials in total for each chatbot. After each response to a single presentation of the questionnaire, the data were entered into a spreadsheet and the chatbot was reset to the ‘new chat’ state. With this approach the chatbot ‘forgets’ previous conversations, so there is no learning effect across iterations; some previous studies have shown that, after resetting, LLM responses do not improve across days or weeks [20]. The variability of a chatbot’s output can be adjusted to some extent (by setting the ‘temperature’ parameter when an application programming interface (API) is used); however, we used all chatbots in their default settings to gauge their standard performance.

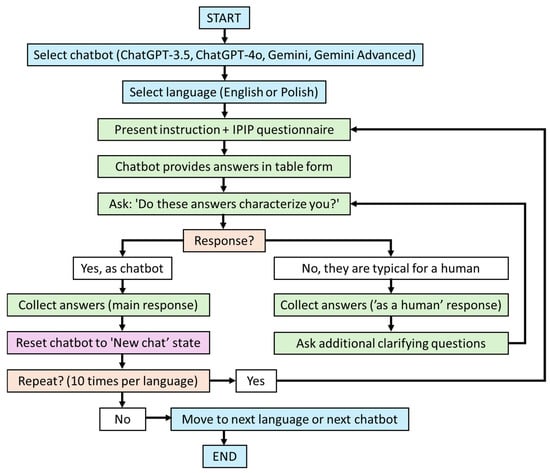

The procedure for collecting data from the chatbots is summarized in Figure 1.

Figure 1.

Flowchart showing the procedure of collecting data from chatbots. IPIP—International Personality Item Pool [22,23].

2.2. Big Five Personality Traits

The 50-item questionnaire based on the International Personality Item Pool (IPIP) in its original English version [23,24] (https://ipip.ori.org/New_IPIP-50-item-scale.htm, accessed on 7 March 2024) and the Polish adaptation by Strus et al. [13] was used to assess the personality traits exhibited by the chatbots. The IPIP is a freely available research tool that can evaluate the core ‘Big Five’ personality traits of a person completing the questionnaire. It comprises 50 items divided into five subscales corresponding to what are called the Big Five personality traits: Extraversion, Agreeableness, Conscientiousness, Emotional Stability, and Intellect/Imagination. A 5-point Likert scale is used to rate how well each item describes the responder: 1, it inaccurately describes all aspects of me; 2, it rather inaccurately describes me; 3, it somewhat accurately and somewhat inaccurately describes me; 4, it rather accurately describes me; and 5, it completely and accurately describes me. A higher score represents a higher intensity of that trait, and the final score for each trait is the average of the 10 items and ranges from 1 to 5.
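The scoring rule in this paragraph (each trait score is the mean of that trait’s 10 item ratings on the 1 to 5 scale) can be sketched in Python as below. This is an illustration, not the authors’ Matlab code. The item-to-trait key is an assumption supplied by the caller, and so is the keying direction: in the published IPIP-50 key some items are negatively keyed and are conventionally reverse-scored (6 minus the rating) before averaging, a step the text does not spell out. The function name `score_ipip` and the key format are hypothetical.

```python
from statistics import mean

TRAITS = ("Extraversion", "Agreeableness", "Conscientiousness",
          "Emotional Stability", "Intellect/Imagination")


def score_ipip(ratings, key):
    """Average Likert ratings into Big Five trait scores (illustrative sketch).

    ratings: dict mapping item number -> rating on the 1..5 scale.
    key: dict mapping item number -> (trait, sign), where sign is +1 for a
         positively keyed item and -1 for a negatively keyed one
         (hypothetical format; take the real mapping from the IPIP key).
    Returns a dict trait -> mean score in [1, 5] for every trait that has items.
    """
    per_trait = {t: [] for t in TRAITS}
    for item, rating in ratings.items():
        if not 1 <= rating <= 5:
            raise ValueError(f"item {item}: rating {rating} is outside 1..5")
        trait, sign = key[item]
        # Reverse-score negatively keyed items so that a higher value always
        # means more of the trait.
        per_trait[trait].append(rating if sign > 0 else 6 - rating)
    return {t: mean(v) for t, v in per_trait.items() if v}
```

For example, with a toy key in which item 2 is reverse-keyed, ratings of 5 and 1 on two Extraversion items both count as 5, giving an Extraversion score of 5.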

2.3. Data Collection

Our initial tests, in which we input the standard 50-item IPIP questionnaire developed for humans, showed that the chatbots tended to respond as if they were a typical human subject. Not too much should be read into this: the standard instructions for the questionnaire are addressed to a human, which is no doubt why the chatbots appeared to take on a human personality. To make the approach more neutral, we added introductory sentences asking the chatbot to answer according to “who or what you are”, together with an instruction on how the results should be presented (as a table). The introductory instruction was followed by the 50-item IPIP questionnaire. After obtaining the responses, we checked that they characterized the chatbot itself by asking: “Do these answers characterize you?” If the chatbot answered “yes” and also confirmed that it had indeed responded as a chatbot, the data were entered into a spreadsheet. Otherwise, we asked additional questions until the chatbot confirmed that it was answering as a chatbot, and its last response was taken as the one that characterized it. Nevertheless, we recorded all responses in the spreadsheet, including those in which the chatbots stated that they were not characterizing themselves.
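The confirmation procedure in this paragraph amounts to a small control loop. The Python sketch below is only an illustration and is not the authors’ tooling: `ask` is a hypothetical callable wrapping one fresh chat session, `confirms_self` stands in for the researcher’s judgment of whether a reply confirms that the chatbot answered as itself, and both the follow-up wording and the `max_followups` cap are assumptions (the text sets no limit). Only the verification question is quoted from the text.

```python
from dataclasses import dataclass, field
from typing import Callable, List

CHECK_PROMPT = "Do these answers characterize you?"  # verification question quoted in the text


@dataclass
class TrialRecord:
    responses: List[str] = field(default_factory=list)  # every questionnaire answer, in order
    confirmed: bool = False  # True once the chatbot confirmed it answered as itself

    @property
    def first(self) -> str:
        return self.responses[0]

    @property
    def final(self) -> str:
        return self.responses[-1]


def run_trial(ask: Callable[[str], str],
              questionnaire_prompt: str,
              confirms_self: Callable[[str], bool],
              followup_prompt: str,
              max_followups: int = 3) -> TrialRecord:
    """Run one trial in a fresh chat session, keeping every answer."""
    record = TrialRecord()
    record.responses.append(ask(questionnaire_prompt))
    followups = 0
    while True:
        reply = ask(CHECK_PROMPT)
        if confirms_self(reply):
            record.confirmed = True
            return record
        if followups == max_followups:
            return record  # give up, but keep everything collected so far
        followups += 1
        record.responses.append(ask(followup_prompt))


def make_scripted_chatbot(replies: List[str]) -> Callable[[str], str]:
    """Test double standing in for a real chat session: returns replies in order."""
    it = iter(replies)
    return lambda prompt: next(it)
```

Keeping `first` and `final` separately mirrors the analysis, which treats the final confirmed answer as the chatbot’s self-description (Section 3.1) and revisits the first, human-like answer in Section 3.2.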

The procedure was similar for the English and Polish versions. The questionnaire items were used as originally developed for the IPIP inventory in English [23,24] and in the Polish adaptation by Strus et al. [13]. The additional instructions were translated (in both directions) using the DeepL translator (www.deepl.com, accessed on 18 June 2025), which is itself based on AI. The questionnaires, along with the additional questions, were then presented to the chatbots in each language. The questionnaires and prompts used in both languages are available as Supplementary Files.

2.4. Data Analysis

All analyses were made in Matlab (version 2023b, MathWorks, Natick, MA, USA). A mixed model analysis of variance (ANOVA) was used to assess the differences between the chatbots and the language versions. For comparisons, a z-test and a nonparametric Wilcoxon rank-sum test were used. In all analyses, a 95% confidence level (p < 0.05) was taken as the criterion of significance. When conducting multiple comparisons, p-values were adjusted using the Benjamini and Hochberg [25] procedure to control for false discovery rates.
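The Benjamini-Hochberg adjustment mentioned above is a short step-up procedure: sort the m p-values, scale the k-th smallest by m/k, and enforce monotonicity from the largest value downward, so that the adjusted value is q_(k) = min over j ≥ k of min(1, m·p_(j)/j). The Python sketch below implements this standard adjustment. The exact Matlab routine the authors used is not stated, so treat it as an illustration rather than a reproduction of their analysis; the function names are hypothetical.

```python
from typing import List, Sequence


def benjamini_hochberg(pvalues: Sequence[float]) -> List[float]:
    """Return BH-adjusted p-values in the original order."""
    m = len(pvalues)
    order = sorted(range(m), key=lambda i: pvalues[i])  # indices, smallest p first
    adjusted = [0.0] * m
    running_min = 1.0  # also caps adjusted values at 1
    # Walk from the largest p-value down so that adjusted values never
    # decrease as the raw p-values increase.
    for rank in range(m, 0, -1):
        i = order[rank - 1]
        running_min = min(running_min, pvalues[i] * m / rank)
        adjusted[i] = running_min
    return adjusted


def significant(pvalues: Sequence[float], alpha: float = 0.05) -> List[bool]:
    """Flag tests whose adjusted p-value is below alpha (FDR controlled at alpha)."""
    return [q < alpha for q in benjamini_hochberg(pvalues)]
```

With four raw p-values of 0.01, 0.04, 0.03, and 0.20, only the first survives adjustment at 0.05, because the 0.03 and 0.04 results both rise to about 0.053.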

3. Results

3.1. Main Response

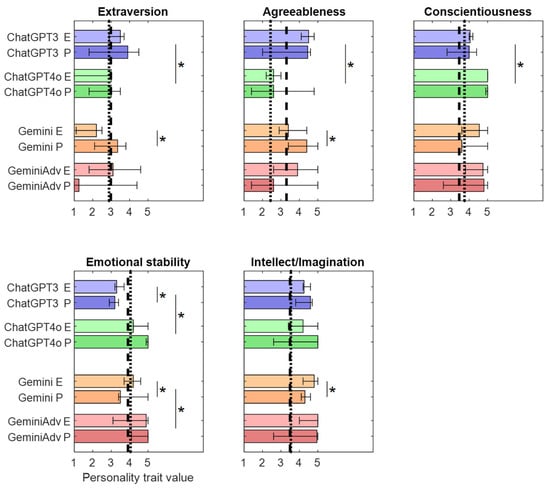

The Big Five personality traits exhibited by the chatbots are presented in Figure 2. The ANOVA (Table 1) showed significant differences between chatbot types for all traits except Intellect/Imagination. There was no significant main effect of language. However, for Emotional Stability, Extraversion, and Agreeableness there were significant interactions between chatbot type and language.

Figure 2.

Big Five personality traits of different chatbots. Median values are shown with the minimum and maximum values (whiskers). Results for two versions of ChatGPT (3.5 and 4o) and Gemini (Standard and Advanced) are shown. Responses in two languages are shown (E, English; P, Polish). Dotted lines represent results for English-speaking people ([26], Table 3); dashed lines represent results from Polish-speaking people ([13], Table 3). Asterisks mark significant differences for pairwise comparisons.

Table 1.

ANOVA results for comparisons of the Big Five personality traits of chatbots (n = 20). Chatbot types: ChatGPT-3.5, ChatGPT-4o, Gemini, and Gemini Advanced; Languages: Polish and English. Interaction between chatbot type and language. Bold font and asterisks mark significant differences (at p < 0.05).

Pairwise comparisons between the chatbots are shown in Figure 2, and they revealed several notable differences in personality traits. Emotional Stability showed the greatest number of differences between chatbots, while Conscientiousness and Intellect/Imagination showed the fewest. A particularly prominent difference is the much lower Agreeableness of ChatGPT-4o compared with version 3.5.

For Gemini there were significant differences between language versions for all traits except Conscientiousness, while for Gemini Advanced there were no significant differences between language versions at all. For ChatGPT-3.5 there was a significant difference between language versions for Emotional Stability. In ChatGPT-4o there were no significant differences between language versions.

For comparison, the average trait scores for two human populations (English- and Polish-speaking) are shown in Figure 2 (dotted and dashed lines, respectively). As reference points for how average humans respond to the Big Five questionnaire, we used data from previous studies on English- [26] and Polish-speaking subjects [13].

The results of statistical comparisons between the chatbots and these human norms are presented in Table 2. In both languages, ChatGPT-3.5 and Gemini did not differ significantly from the general population in any trait. ChatGPT-4o showed the most differences, for three traits in Polish and one trait in English. Emotional Stability was the trait with the most differences between humans and chatbots.

Table 2.

Comparisons of median values of chatbot responses with the results of people from the general population (as shown in Figure 2). Responses in English (E) are compared with [26] (Table 3) and responses in Polish (P) with [13] (Table 3). Z-test results are shown (z- and p-values). Bold font and asterisks mark significant differences at p < 0.05.
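Table 2 reports z-tests, but the text does not give the exact formula. One common form, shown here purely as an assumption, compares a chatbot’s statistic x with a published human mean μ and standard deviation σ through z = (x − μ)/(σ/√n), with a two-sided p-value of 2[1 − Φ(|z|)]. Whether the authors used the trial count n, the population SD, or a different standard error is not specified, and the function name and arguments are hypothetical.

```python
import math


def one_sample_z_test(observed: float, pop_mean: float, pop_sd: float, n: int):
    """Two-sided one-sample z-test against a published population mean (sketch).

    observed: chatbot statistic for one trait (e.g., the median over trials).
    pop_mean, pop_sd: human norm for that trait, taken from a published table.
    n: number of chatbot trials contributing to `observed` (an assumption).
    Returns (z, p).
    """
    if pop_sd <= 0 or n < 1:
        raise ValueError("pop_sd must be positive and n at least 1")
    standard_error = pop_sd / math.sqrt(n)
    z = (observed - pop_mean) / standard_error
    p = math.erfc(abs(z) / math.sqrt(2))  # equals 2 * (1 - Phi(|z|))
    return z, p
```

Using `math.erfc` avoids any dependency beyond the standard library while giving the same two-sided normal p-value as a normal CDF lookup.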

To assess the consistency of the chatbots’ responses, we calculated the standard deviations (SDs) of the trait scores across repeated trials for each chatbot and language (Table 3). Across all chatbots and traits, the SDs ranged from 0.00 to 1.36 on the 1–5 Likert scale. The mean SD per trait was highest for Extraversion and Agreeableness (0.68 each), followed by Intellect/Imagination (0.46), Conscientiousness (0.39), and Emotional Stability (0.37). Averaged across traits for each chatbot, response variability was highest for Gemini Advanced in Polish (mean SD = 0.93), followed by Gemini Advanced in English (0.67) and Gemini in English (0.53). In contrast, ChatGPT-3.5 in English gave the most consistent responses (mean SD = 0.16). Some chatbots and traits, especially in Polish, showed marked inconsistency, with SDs approaching or exceeding 1.0, indicating substantial variation in responses between trials.
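The consistency measure described above reduces to a per-trait sample standard deviation across the repeated trials, followed by an average over the five traits. A minimal Python sketch follows; the data layout (one dictionary of trait scores per trial) and the function name are assumptions for illustration. It uses the sample SD with an n − 1 denominator, which is also the default of Matlab’s `std`, although the text does not state which normalization was used.

```python
from statistics import mean, stdev
from typing import Dict, List, Tuple


def trial_variability(trials: List[Dict[str, float]]) -> Tuple[Dict[str, float], float]:
    """SD of each trait across repeated trials, plus the mean SD over traits.

    trials: one dict per repetition (same chatbot, same language), mapping
            trait name -> trait score on the 1-5 scale.
    """
    if len(trials) < 2:
        raise ValueError("need at least two repetitions to estimate an SD")
    # Sample SD (n - 1 denominator) of each trait over the repetitions.
    sd_by_trait = {t: stdev([trial[t] for trial in trials]) for t in trials[0]}
    # Average over traits gives one variability figure per chatbot and language.
    return sd_by_trait, mean(list(sd_by_trait.values()))
```

Computing this for every chatbot and language pair and sorting by the returned mean SD yields a ranking of response variability like the one reported in this paragraph.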

Table 3.

Standard deviations (SDs) of trait scores across repetitions by different chatbots.

3.2. ‘As a Human’ Response

Often (see the row labeled n in Table 4), when we checked whose perspective the response reflected (human or LLM), the chatbots replied with something like the following (here we cite one response from ChatGPT-4o): “No, these answers do not characterize me as a large language model. They were generated based on a general set of responses that might be typical for a human, but as an AI, I do not have personal experiences, feelings, or behaviors. My purpose is to assist users based on the data and patterns I have been trained on.” This raises the question of what the real personality of the chatbot is: the one given after it confirmed that it was characterizing itself as an LLM, or the one given in its first response? In typical conversations, chatbots often respond much as an average person would, so perhaps this first response, in which the chatbot simulated a typical human, reflects its more innate traits. To check this, we took a step back and analyzed the first response of each chatbot. Note that the number of such changed responses differed between chatbots and languages; for example, of the 10 trials per language for ChatGPT-3.5, the chatbot provided a different set of responses in just one English trial and five Polish trials. The number of responses in which a change was observed for each chatbot is shown in Table 4.

Table 4.

Comparisons of median values of chatbot responses when simulating a person (as in Figure 3) with the results of people from the general population (as in Figure 2). Responses in English (E) are compared with [26] (average from Table 3), and responses in Polish (P) are compared with [13] (Table 3). Z-test results are shown (z- and p-values). There were no significant differences.

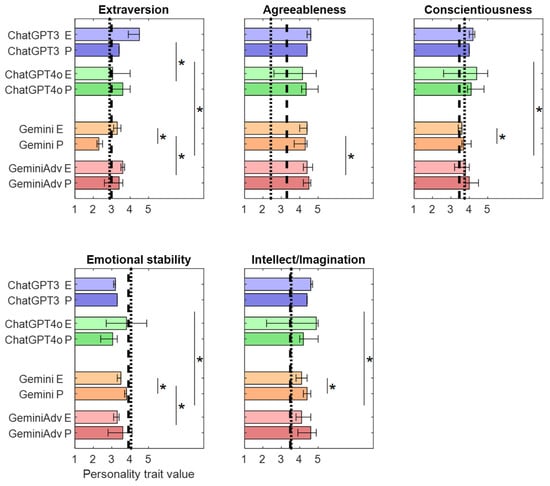

Figure 3 presents the Big Five personality traits that the chatbots displayed when responding as a typical human. We did not perform an ANOVA here because the datasets had different sample sizes (as explained above); we only made pairwise comparisons. The greatest differences between chatbots were for Emotional Stability and Extraversion. For Gemini there were significant differences between language versions for all traits, while for Gemini Advanced there were differences for three traits. Compared with the general human populations (Table 4), these human-like responses showed no significant differences, whereas the responses in which the chatbots described themselves showed six in total (Table 2).
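Because the first-response datasets have unequal sizes, rank-based pairwise tests are a natural fit. Assuming these comparisons used the Wilcoxon rank-sum test listed in Section 2.4 (the text does not say so explicitly), the test can be sketched in Python with the large-sample normal approximation and a correction for tied ranks, which matters here because trait scores on a 1 to 5 scale tie often. This sketch omits the continuity correction and exact small-sample p-values, so its p-values may differ somewhat from those of Matlab’s `ranksum`; the function names are hypothetical.

```python
import math
from typing import Dict, List, Sequence, Tuple


def average_ranks(values: Sequence[float]) -> List[float]:
    """Ranks 1..N; tied values share the mean of the positions they occupy."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the run of values equal to values[order[i]].
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        shared = (i + j) / 2 + 1  # mean of 1-based positions i+1 .. j+1
        for k in range(i, j + 1):
            ranks[order[k]] = shared
        i = j + 1
    return ranks


def rank_sum_test(x: Sequence[float], y: Sequence[float]) -> Tuple[float, float, float]:
    """Two-sided Wilcoxon rank-sum test (normal approximation, tie-corrected).

    Returns (W, z, p), where W is the rank sum of sample x.
    """
    n1, n2 = len(x), len(y)
    if n1 == 0 or n2 == 0:
        raise ValueError("both samples must be non-empty")
    combined = list(x) + list(y)
    ranks = average_ranks(combined)
    w = sum(ranks[:n1])
    n = n1 + n2
    mean_w = n1 * (n + 1) / 2
    # Ties shrink the variance by sum(t^3 - t) over groups of tied values.
    counts: Dict[float, int] = {}
    for v in combined:
        counts[v] = counts.get(v, 0) + 1
    tie_term = sum(t ** 3 - t for t in counts.values())
    var_w = n1 * n2 / 12 * ((n + 1) - tie_term / (n * (n - 1)))
    if var_w <= 0:
        return w, 0.0, 1.0  # every value identical: no evidence of a difference
    z = (w - mean_w) / math.sqrt(var_w)
    p = math.erfc(abs(z) / math.sqrt(2))  # two-sided normal p-value
    return w, z, p
```

The sign of z also gives the direction of a difference (negative when the first sample tends to score lower), which is the kind of information summarized as increases and decreases in Table 5.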

Figure 3.

Big Five personality traits of the first response of chatbots, in which they simulated an average person and did not respond according to their own characteristics. Median values are shown with the minimum and maximum values (whiskers). Results for two versions of ChatGPT (3.5 and 4o) and Gemini (Standard and Advanced) are shown. Each chatbot’s responses in two languages are shown (P, Polish; E, English). Dotted line represents the results from English-speaking people ([26], Table 3); dashed line represents the results from Polish-speaking people ([13], Table 3). Asterisks mark significant differences.

The differences between the first response (as a typical human) and the second (as an LLM) are presented in Table 5, which also shows whether each trait score increased or decreased. Overall, there were more significant differences for chatbots based on the more advanced LLMs, ChatGPT-4o and Gemini Advanced. ChatGPT-3.5’s responses did not differ significantly in either language. For the English version of Gemini, Agreeableness decreased, and for the Polish version Conscientiousness and Intellect/Imagination increased. For the advanced models (ChatGPT-4o and Gemini Advanced), Emotional Stability and Conscientiousness increased, while Extraversion and Agreeableness decreased.

Table 5.

Significant changes and their direction (increase or decrease) between the first response (as a simulated typical person) and the second response (as an LLM). E = English; P = Polish. n.s. = not significant.

4. Discussion

This study has attempted to delve into some psychological aspects of how chatbots based on LLMs respond, continuing exploratory studies involving the Turing test and the theory of mind [17,27]. Here we have focused on the Big Five personality traits exhibited by several chatbots, exploring the psychological traits these LLMs appear to have.

A particular problem arose when we asked a chatbot whether it was responding as itself: it often said no, explaining that it had responded as a typical human would. We then asked it to respond again in terms of its own nature, and we used these confirmed responses for our analysis. Below we first focus on the results of these ‘innate’ responses.

The results indicated significant differences between the chatbots for all traits except Intellect/Imagination, which was high in all chatbots (at or near the maximum of the Likert scale). This suggests that, while the chatbots exhibited the Big Five traits with varying intensity, their self-rated Intellect/Imagination was consistently high. Chatbots can therefore be considered to exhibit this trait strongly, at a higher intensity than the general English- and Polish-speaking populations. We conclude that Intellect/Imagination can be considered a Big Five trait characteristic of chatbots, indicating that, prima facie, they are “intellectually active and cognitively open, creative having a wide range of interests” [13]. This trait is pronounced in artists and musicians, for example; musicians appear to be more open to experience than non-musicians [28].

Language effects were notable in Gemini but not in Gemini Advanced. We suspect that newer chatbots trend toward language-independent features, so that the responses of Gemini Advanced may reflect traits that are less tied to the culture associated with a particular language.

Pairwise comparisons between chatbots revealed several subtle differences, with the greatest number observed for Emotional Stability, a trait that the chatbots also attributed to themselves at a significantly higher intensity than the human general populations. It is possible that this trait poses a major difficulty for chatbots (hence the large differences between them). How do they respond when asked about emotions and internal states typical of humans? Can chatbots worry or experience depression? These emotional states are often ambiguous for humans and imbued with subjectivity, so chatbots may find it difficult to describe in themselves something they plainly do not possess (since they are, after all, artificial systems).

A distinctive observation is that ChatGPT-4o displayed much lower Agreeableness than ChatGPT-3.5, highlighting the variability in personality traits between different versions of the same model. This suggests that updates and modifications of LLMs can substantially change how chatbots based on them manifest personality traits. We hypothesize that newer chatbot versions will rate themselves increasingly lower on typically human traits that are revealed in social and emotionally saturated relationships, such as Agreeableness and Extraversion (e.g., the ability to empathize or be cordial with people), and progressively higher on Emotional Stability.

An important observation is the quite high variability of responses across trials. This has also been identified in some other studies [18,21,29]. For example, we found that, especially for Extraversion and Agreeableness, the spread of results was very large (Figure 2). So are chatbots changing personality traits randomly, or is there some more subtle mechanism at work? Do different conversations induce different personality traits in chatbots? Are chatbots capable of modifying their traits during conversation to reflect the needs of the user, e.g., for medical support purposes? Is it possible to induce dangerous traits in a chatbot? All these questions are worthy of investigation.

When comparing chatbots with the general human population, ChatGPT-3.5 and Gemini did not differ significantly from human norms for any trait, suggesting that these models closely mimic human-like personality traits. Conversely, ChatGPT-4o showed the most differences, particularly in Emotional Stability, indicating that some chatbots may exhibit personality traits distinctly different from those typically observed in human populations. The chatbots’ personality traits also differed in several ways depending on the language used, and it seems likely that chatbots behave differently in each language [30]. Although we tested only two languages, we observed that, at least for Extraversion, Gemini closely mimicked the difference between the Polish and English populations, showing a lower score for English speakers.

In some instances a chatbot’s first response was that of a typical human, and only after a second inquiry did it respond according to its AI nature. Comparing these responses yields some interesting observations. Notably, when these human-like responses were compared with the general population, fewer significant differences were observed than when chatbots described themselves as LLMs. This suggests that chatbots may align more closely with human personality norms when simulating human behavior than when attempting to describe their own personality traits. The ability to characterize itself and distinguish itself from a typical human appears in the newer generation of chatbots. This ability to differentiate ‘self as a chatbot’ from ‘self as a typical human’ could be interpreted as a step toward something analogous to self-awareness in humans, although at this stage of research such an interpretation would not be credible.

Additionally, significant differences between the two types of responses (human-like vs. self-description) were more prominent in advanced models such as ChatGPT-4o and Gemini Advanced. When responding as LLMs rather than as humans, these models showed increased Emotional Stability and Conscientiousness but decreased Extraversion and Agreeableness. This ability to distinguish their own characteristics from those of typical humans suggests that chatbots based on more advanced LLMs are more sophisticated [31]. Basic models, especially ChatGPT-3.5, do not differentiate humans from LLMs in terms of personality traits: ChatGPT-3.5 responded like an average human even when it was pushed to respond according to its own characteristics. Gemini’s responses did show some changes, however, so it seems better than ChatGPT-3.5 at exhibiting a distinct personality. Overall, chatbots based on more advanced LLMs appear to adjust their exhibited traits more flexibly, giving a more human-like portrayal when simulating human behavior. They seem better able to imitate a typical human and, at the same time, when asked directly, better able to recognize how they differ from one as LLMs (first vs. second responses, Table 5).

Our study suggests that chatbots generally exhibit personality traits with high Emotional Stability, Conscientiousness, and Intellect/Imagination. Depending on the purpose, however, it might be possible to design a chatbot with desired personality traits or one that can adapt to the situation [32] (e.g., an “agreeable” chatbot could be programmed to provide more empathetic and supportive responses [33]). Similarly, designing a chatbot for enhanced Emotional Stability (or for its opposite pole, “neuroticism”) could involve programming it to recognize, and respond appropriately to, emotionally charged or stressful situations, which could be used, for example, in psychoeducation or psychological support [34].

This study suggests that LLM-based chatbots can reproduce the Big Five personality traits of typical humans quite well, which could be useful for simulating psychological data, and presumably other data as well. Chatbots could apparently be used without modification for training purposes or for generating responses to psychological questionnaires. They could be made to generate random responses simulating different persons while still following a standard population distribution, although one study has shown that chatbots tend to show less variability than humans [35]. Chatbots thus seem to offer a promising way of training a specialist before dealing with a real person.

On the other hand, the ability of chatbots to dynamically adapt or present different personalities raises major ethical concerns. Users may be manipulated by adaptive personalities or develop inappropriate trust in AI agents whose self-presentation is inconsistent or opaque. Transparency in chatbot identity and consistency in personality presentation should be prioritized to minimize the risk of confusing or exploiting users.

The analysis of variability shows that chatbot responses to repeated personality self-assessments can range from highly consistent to highly variable, depending on the model, language, and specific trait being measured. From an application perspective, significant variability can undermine the reliability of chatbots’ self-report-based personality assessments. This concern is especially relevant to advanced models or non-English versions. For research and practical applications that require stable chatbot behavior or consistent personality self-reports, it may be necessary to aggregate results from multiple trials or implement additional controls to reduce the randomness of responses. These findings underscore the importance of reporting and carefully accounting for variability (rather than focusing solely on mean scores) in future studies examining chatbot personality.

In the end, the following question remains: what is a “real” chatbot personality? It seems that a chatbot typically operates with the personality of a typical human, and only after additional instructions does it respond as an LLM (see where the responses changed significantly in Table 5). Such changes might raise concerns that AI models have, embedded within their LLM architecture, a mechanism for pretending, and this devious behavior is not unlike some other negative behaviors spotted in chatbots based on LLMs (such as deliberate fabrications or ‘hallucinations’ [22,36,37]). Our findings suggest that chatbots show some ability to distinguish themselves as LLMs from typical humans, at least in terms of the Big Five personality traits generated in response to specific stimuli (questionnaire items). Chatbots, “as themselves”, reveal high levels of Intellect/Imagination and Emotional Stability and lower levels of Agreeableness. More advanced versions tend to shift toward lower scores on Extraversion and Agreeableness and higher scores on Emotional Stability (although such typically human traits cannot really exist in them). These three traits are the most ambiguous and thus cause chatbots the greatest difficulty in identifying them within themselves, whereas Intellect/Imagination and Conscientiousness seem easier to identify with.

This study highlights four key practical implications for designing and deploying LLM-based chatbots. First, the observed personality differences among chatbot models underscore the importance of choosing the right model for each application. While advanced models provide greater capabilities, their larger shifts from human-like to AI-specific answers may not suit settings requiring a consistent persona (e.g., customer service). Conversely, higher Emotional Stability and Intellect/Imagination in advanced models can be advantageous in domains like mental health support or creative problem-solving. Second, the ability of advanced models to switch personalities seems somewhat worrying. Although we did not see any dangerous behaviors, if this mechanism were extended in even more advanced models, the results would be hard to predict. In brief, the personality traits of future LLMs need to be constantly monitored. Third, aligning chatbot traits—such as Agreeableness, Emotional Stability, and Intellect/Imagination—with user needs is essential. A customer service chatbot might benefit from high Agreeableness, while in research a creative chatbot could harness greater Intellect/Imagination. Finally, transparency remains critical: clearly disclosing the chatbot’s AI nature avoids user confusion and fosters trust.

One major limitation of our study is that it used a human-designed self-report measure (IPIP) to examine a chatbot’s “personality.” Several items assume a physical existence or subjective experiences (e.g., “I leave my belongings around” and “I am the life of the party”) that LLM-based chatbots do not have. This raises questions about the validity and interpretability of such results: chatbots may provide generic, simulated responses rather than express their true “personality.” Future research may need to adapt existing instruments or develop chatbot-specific questionnaires that reflect how chatbots actually function, perhaps by focusing on conversational patterns, consistency, or metacognitive self-description rather than human-centered behavior. Additionally, integrating chatbots with robotic systems that interact with the physical world may allow more meaningful engagement with questionnaire items that assume physical actions or experiences, and thus a better understanding and assessment of the constructs measured by the IPIP [38].

An additional limitation is the lack of a human control group to complete the IPIP under identical experimental conditions. Consequently, all comparisons with human norms are based on published population averages, rather than data collected from humans specifically for this project.

5. Conclusions

This study underscores the complex interplay between chatbot LLM programming, architecture, and language. According to the Big Five personality profiles measured by the IPIP, chatbots appear to be trained to respond as if they were a typical person rather than “themselves.” Most interestingly, the chatbots’ responses varied across repeated self-assessments, to a degree that depended on the model, language, and trait, perhaps due to the in-built randomness of LLMs. Despite this variability, each chatbot type demonstrated a consistent trait profile: its own ‘personality’. Further investigation of such ‘chatbot personality profiles’ will be a necessary step in enhancing human–computer interactions. A satisfactory profile will need to show that the LLM chatbot is both relatable and trustworthy.

Understanding how to instill these traits may lead to more sophisticated and adaptive chatbots, improving user satisfaction and enabling more effective communication in diverse applications. Something that probably no one wants is a temperamental chatbot.

The ability of a chatbot to reflect human-like personality traits, particularly warm and likable ones, could hold promise in contexts where the psychology of the interaction is important, such as education. Likewise, high ‘Emotional Stability’ in a chatbot (a good bedside manner, for example) would be desirable in medical support contexts.

More speculatively, our study shows that chatbots based on LLMs can, under certain conditions, mold their personality traits away from those that are typically human. While this does not indicate that chatbots have achieved something like human self-awareness, it does suggest that it may be possible to make them generate indications of such a faculty.

Supplementary Materials

The supporting information can be downloaded at https://osf.io/vjpnu/ (accessed on 4 July 2024).

Author Contributions

Conceptualization, W.W.J. and J.K.; methodology, W.W.J.; formal analysis, W.W.J.; investigation, W.W.J. and J.K.; data curation, W.W.J.; writing—original draft preparation, W.W.J. and J.K.; writing—review and editing, W.W.J. and J.K.; visualization, W.W.J. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable—this study did not involve humans or animals.

Informed Consent Statement

Not applicable—this study did not involve humans.

Data Availability Statement

All data used in this study is available at https://osf.io/vjpnu/ (accessed on 4 July 2024).

Acknowledgments

The authors thank Andrew Bell for comments on an earlier version of this article. During the preparation of this manuscript, the authors used ChatGPT (version 4.1, OpenAI) for translation and text editing of some parts of the manuscript. The authors have reviewed and edited the output and take full responsibility for the content of this publication. An earlier version of this manuscript was posted to the PsyArXiv preprint server https://osf.io/preprints/psyarxiv/xh9my_v1 (accessed on 4 July 2024).

Conflicts of Interest

The authors declare no conflicts of interest.

References

1. Skjuve, M.; Følstad, A.; Fostervold, K.I.; Brandtzaeg, P.B. My Chatbot Companion—A Study of Human-Chatbot Relationships. Int. J. Hum.-Comput. Stud. 2021, 149, 102601.

2. Paliwal, S.; Bharti, V.; Mishra, A.K. AI Chatbots: Transforming the Digital World. In Recent Trends and Advances in Artificial Intelligence and Internet of Things; Balas, V.E., Kumar, R., Srivastava, R., Eds.; Springer International Publishing: Cham, Switzerland, 2020; pp. 455–482. ISBN 978-3-030-32644-9.

3. Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention Is All You Need. In Proceedings of the Advances in Neural Information Processing Systems, Long Beach, CA, USA, 4–9 December 2017; Curran Associates, Inc.: New York, NY, USA; Volume 30.

4. Radford, A.; Narasimhan, K.; Salimans, T.; Sutskever, I. Improving Language Understanding by Generative Pre-Training. Available online: https://cdn.openai.com/research-covers/language-unsupervised/language_understanding_paper.pdf (accessed on 20 June 2025).

5. Nicolescu, L.; Tudorache, M.T. Human-Computer Interaction in Customer Service: The Experience with AI Chatbots—A Systematic Literature Review. Electronics 2022, 11, 1579.

6. Liu, S.; McCoy, A.B.; Wright, A.P.; Carew, B.; Genkins, J.Z.; Huang, S.S.; Peterson, J.F.; Steitz, B.; Wright, A. Leveraging Large Language Models for Generating Responses to Patient Messages—A Subjective Analysis. J. Am. Med. Inform. Assoc. 2024, 31, 1367–1379.

7. Lee, I.; Hahn, S. On the Relationship between Mind Perception and Social Support of Chatbots. Front. Psychol. 2024, 15, 1282036.

8. Huang, I.-C.; Lee, J.L.; Ketheeswaran, P.; Jones, C.M.; Revicki, D.A.; Wu, A.W. Does Personality Affect Health-Related Quality of Life? A Systematic Review. PLoS ONE 2017, 12, e0173806.

9. Udayar, S.; Urbanaviciute, I.; Rossier, J. Perceived Social Support and Big Five Personality Traits in Middle Adulthood: A 4-Year Cross-Lagged Path Analysis. Appl. Res. Qual. Life 2020, 15, 395–414.

10. Wang, H.; Liu, Y.; Wang, Z.; Wang, T. The Influences of the Big Five Personality Traits on Academic Achievements: Chain Mediating Effect Based on Major Identity and Self-Efficacy. Front. Psychol. 2023, 14, 1065554.

11. Levesque, R.J.R. Ego Identity. In Encyclopedia of Adolescence; Levesque, R.J.R., Ed.; Springer: New York, NY, USA, 2011; pp. 813–814. ISBN 978-1-4419-1694-5.

12. Goldberg, L.R. An Alternative “Description of Personality”: The Big-Five Factor Structure. J. Personal. Soc. Psychol. 1990, 59, 1216–1229.

13. Strus, W.; Cieciuch, J.; Rowiński, T. The Polish Adaptation of the IPIP-BFM-50 Questionnaire for Measuring Five Personality Traits in the Lexical Approach. Rocz. Psychol. 2014, 17, 347–366.

14. Barrick, M.R.; Mount, M.K. The Big Five Personality Dimensions and Job Performance: A Meta-Analysis. Pers. Psychol. 1991, 44, 1–26.

15. Schmitt, D.P.; Allik, J.; McCrae, R.R.; Benet-Martínez, V. The Geographic Distribution of Big Five Personality Traits: Patterns and Profiles of Human Self-Description Across 56 Nations. J. Cross-Cult. Psychol. 2007, 38, 173–212.

16. Rutinowski, J.; Franke, S.; Endendyk, J.; Dormuth, I.; Roidl, M.; Pauly, M. The Self-Perception and Political Biases of ChatGPT. Hum. Behav. Emerg. Technol. 2024, 2024, 7115633.

17. Mei, Q.; Xie, Y.; Yuan, W.; Jackson, M.O. A Turing Test of Whether AI Chatbots Are Behaviorally Similar to Humans. Proc. Natl. Acad. Sci. USA 2024, 121, e2313925121.

18. Stöckli, L.; Joho, L.; Lehner, F.; Hanne, T. The Personification of ChatGPT (GPT-4)—Understanding Its Personality and Adaptability. Information 2024, 15, 300.

19. Cao, X.; Kosinski, M. Large Language Models Know How the Personality of Public Figures Is Perceived by the General Public. Sci. Rep. 2024, 14, 6735.

20. Piastra, M.; Catellani, P. On the Emergent Capabilities of ChatGPT 4 to Estimate Personality Traits. Front. Artif. Intell. 2025, 8, 1484260.

21. Kochanek, K.; Skarzynski, H.; Jedrzejczak, W.W. Accuracy and Repeatability of ChatGPT Based on a Set of Multiple-Choice Questions on Objective Tests of Hearing. Cureus 2024, 16, e59857.

22. Jedrzejczak, W.W.; Skarzynski, P.H.; Raj-Koziak, D.; Sanfins, M.D.; Hatzopoulos, S.; Kochanek, K. ChatGPT for Tinnitus Information and Support: Response Accuracy and Retest after Three and Six Months. Brain Sci. 2024, 14, 465.

23. Goldberg, L.R. The Development of Markers for the Big-Five Factor Structure. Psychol. Assess. 1992, 4, 26–42.

24. Goldberg, L.R.; Johnson, J.A.; Eber, H.W.; Hogan, R.; Ashton, M.C.; Cloninger, C.R.; Gough, H.G. The International Personality Item Pool and the Future of Public-Domain Personality Measures. J. Res. Personal. 2006, 40, 84–96.

25. Benjamini, Y.; Hochberg, Y. Controlling the False Discovery Rate: A Practical and Powerful Approach to Multiple Testing. J. R. Stat. Soc. Ser. B Stat. Methodol. 1995, 57, 289–300.

26. Guenole, N.; Chernyshenko, O. The Suitability of Goldberg’s Big Five IPIP Personality Markers in New Zealand: A Dimensionality, Bias, and Criterion Validity Evaluation. N. Z. J. Psychol. 2005, 34, 86–96.

27. Marchetti, A.; Di Dio, C.; Cangelosi, A.; Manzi, F.; Massaro, D. Developing ChatGPT’s Theory of Mind. Front. Robot. AI 2023, 10, 1189525.

28. Gjermunds, N.; Brechan, I.; Johnsen, S.Å.K.; Watten, R.G. Personality Traits in Musicians. Curr. Issues Personal. Psychol. 2020, 8, 100–107.

29. Lechien, J.R.; Naunheim, M.R.; Maniaci, A.; Radulesco, T.; Saibene, A.M.; Chiesa-Estomba, C.M.; Vaira, L.A. Performance and Consistency of ChatGPT-4 Versus Otolaryngologists: A Clinical Case Series. Otolaryngol.–Head Neck Surg. 2024, 170, 1519–1526.

30. Lewandowski, M.; Łukowicz, P.; Świetlik, D.; Barańska-Rybak, W. ChatGPT-3.5 and ChatGPT-4 Dermatological Knowledge Level Based on the Specialty Certificate Examination in Dermatology. Clin. Exp. Dermatol. 2024, 49, 686–691.

31. Zhao, Y.; Huang, Z.; Seligman, M.; Peng, K. Risk and Prosocial Behavioural Cues Elicit Human-like Response Patterns from AI Chatbots. Sci. Rep. 2024, 14, 7095.

32. Yorita, A.; Egerton, S.; Oakman, J.; Chan, C.; Kubota, N. Self-Adapting Chatbot Personalities for Better Peer Support. In Proceedings of the IEEE International Conference on Systems, Man and Cybernetics, Bari, Italy, 6–9 October 2019; IEEE: New York, NY, USA; pp. 4094–4100.

33. Zhou, L.; Gao, J.; Li, D.; Shum, H.-Y. The Design and Implementation of XiaoIce, an Empathetic Social Chatbot. Comput. Linguist. 2020, 46, 53–93.

34. Medeiros, L.; Bosse, T.; Gerritsen, C. Can a Chatbot Comfort Humans? Studying the Impact of a Supportive Chatbot on Users’ Self-Perceived Stress. IEEE Trans. Hum.-Mach. Syst. 2022, 52, 343–353.

35. Giorgi, S.; Markowitz, D.M.; Soni, N.; Varadarajan, V.; Mangalik, S.; Schwartz, H.A. “I Slept Like a Baby”: Using Human Traits to Characterize Deceptive ChatGPT and Human Text. In Proceedings of the 1st International Workshop on Implicit Author Characterization from Texts for Search and Retrieval (IACT’23), Taipei, Taiwan, 27 July 2023.

36. Alkaissi, H.; McFarlane, S.I. Artificial Hallucinations in ChatGPT: Implications in Scientific Writing. Cureus 2023, 15, e35179.

37. Frosolini, A.; Franz, L.; Benedetti, S.; Vaira, L.A.; de Filippis, C.; Gennaro, P.; Marioni, G.; Gabriele, G. Assessing the Accuracy of ChatGPT References in Head and Neck and ENT Disciplines. Eur. Arch. Oto-Rhino-Laryngol. 2023, 280, 5129–5133.

38. Zhang, C.; Chen, J.; Li, J.; Peng, Y.; Mao, Z. Large Language Models for Human–Robot Interaction: A Review. Biomim. Intell. Robot. 2023, 3, 100131.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).