Abstract

To address challenges such as large-scale variations, high density of small targets, and the large number of parameters in deep learning-based target detection models, which limit their deployment on UAV platforms with fixed performance and limited computational resources, a lightweight UAV target detection algorithm, YOLO-LSM, is proposed. First, to mitigate the loss of small target information, an Efficient Small Target Detection Layer (ESTDL) is developed, alongside structural improvements to the baseline model to reduce parameters. Second, a Multiscale Lightweight Convolution (MLConv) is designed, and a lightweight feature extraction module, MLCSP, is constructed to enhance the extraction of detailed information. Focaler inner IoU is incorporated to improve bounding box matching and localization, thereby accelerating model convergence. Finally, a novel feature fusion network, DFSPP, is proposed to enhance accuracy by optimizing the selection and adjustment of target scale ranges. Validations on the VisDrone2019 and Tiny Person datasets demonstrate that compared to the benchmark network, the YOLO-LSM achieves a mAP0.5 improvement of 6.9 and 3.5 percentage points, respectively, with a parameter count of 1.9 M, representing a reduction of approximately 72%. Different from previous work on medical detection, this study tailors YOLO-LSM for UAV-based small object detection by introducing targeted improvements in feature extraction, detection heads, and loss functions, achieving better adaptation to aerial scenarios.

1. Introduction

As a crucial branch of target detection, UAV image target detection plays an important role in various fields, including resource exploration, environmental monitoring, urban management, and national defense security [1,2,3,4]. UAV images present complex backgrounds, large target size spans, and numerous small, densely distributed targets, which can easily result in missed or false detections, posing significant challenges to detection accuracy. Traditional target detection methods rely on feature engineering and shallow learning models, which handle simple scenes effectively but struggle to meet higher demands for accuracy and robustness when faced with complex UAV target data. With the progress of deep learning, target detection algorithms have steadily improved and fall into two families. The first is based on candidate regions and includes R-CNN [5], Fast R-CNN [6], and Faster R-CNN [7]. The second is based on regression and includes the YOLO series [8,9,10,11,12], SSD [13], CenterNet [14], and other improved algorithms [15]. However, deep learning-based target detection models have a large number of parameters, making them difficult to deploy on edge devices such as unmanned aerial vehicles (UAVs). Therefore, it is necessary to explore an effective strategy to overcome these difficulties.

For UAV datasets with a large number of densely distributed small targets, Zhang et al. [16] proposed an efficient UAV image target detection network, CFANet, based on cross-layer feature aggregation. Min et al. [17] proposed YOLO-DCTI, a UAV small target detection algorithm based on YOLOv7 and enhanced by context transformation. Li et al. [18] proposed an adaptive UAV target detection algorithm, UA-YOLOv5s, based on YOLOv5s, which addressed the loss of shallow information of small targets and greatly improved the efficiency of UAV small target detection. Li et al. [19] proposed a perceptual GAN model for small target detection: by reducing the representation differences between targets of different sizes, the representation of small targets is lifted to a “super-resolution” representation with features similar to those of large targets. Ma et al. [20] improved YOLOv8s with the SPD convolution module and proposed the SP-YOLOv8s model, which improved the detection accuracy of small targets at the cost of significantly increased computational complexity.

To address the issues of large model parameters and high computational complexity, Xie et al. [21] proposed a lightweight detection model, CSPPartial-YOLO, and introduced the Partial Hybrid Dilated Convolution (PHDC) module, which combines hybrid dilated convolution and partial convolution to increase the receptive field at a lower computational cost. Qin et al. [22] introduced the universal inverted bottleneck (UIB) based on the classic components of MobileNet, optimized the neural architecture search (NAS) recipe, and proposed the MobileNetV4 lightweight neural network. Han et al. [23] proposed the Ghost module, which generates additional feature maps through inexpensive operations, making full use of the correlation and redundancy between feature maps and greatly reducing the amount of computation. Cai et al. [24] proposed FalconNet, a new lightweight CNN model, to solve the redundancy problem caused by inconsistent model architecture. Compared with existing lightweight CNNs, it achieves higher accuracy with fewer parameters and FLOPs. Hu et al. [25] proposed a lightweight and efficient detection model, EL-YOLO, for low-end GPU aerial image detection scenarios, which achieves a lightweight design while offering strong generalization and robustness for small target detection in aerial images.

Recently, a method named LSM-YOLO [26] was proposed for medical object detection tasks. However, the YOLO-LSM proposed in this study is independently developed for UAV small target detection and is fundamentally different in terms of application domains, network structures, and optimization objectives. To address the unique challenges of UAV aerial imagery, such as complex backgrounds and densely distributed small objects, we propose an entirely new lightweight UAV target detection framework based on shallow and multiscale information learning, also named YOLO-LSM. The proposed YOLO-LSM incorporates several novel components, including the Efficient Small Target Detection Layer (ESTDL), the Multiscale Lightweight Convolution (MLConv) module, the Focaler Inner IoU loss function, and the DFSPP feature fusion network. These innovations make the proposed YOLO-LSM more suitable for UAV target detection tasks and clearly distinguish it from the previously mentioned work.

In view of the limited computing resources of the existing UAV mobile platform, balancing lightweight design with detection accuracy in aerial target detection algorithms remains a challenge. Current methods often reduce model complexity at the cost of detection performance. Therefore, how to minimize computational overhead while improving small target detection accuracy remains a pressing issue in UAV target detection. This paper proposes an improved lightweight target detection method based on the YOLOv5s network. By optimizing the feature extraction module and introducing an efficient computing structure, this method reduces the number of parameters while enhancing detection accuracy, particularly for small targets, making it more suitable for UAV platforms with limited computational resources.

2. Proposed Method

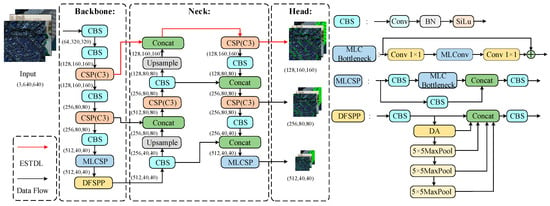

Due to the large variation in the scale of objects in UAV images, the dense distribution of small targets, and the large number of parameters of the target detection model based on deep learning, it is difficult to balance the detection accuracy and model deployment. This paper proposes a lightweight target detection and measurement algorithm for UAV aerial photography based on shallow information learning, YOLO-LSM. As shown in Figure 1, the improved network structure is composed of input, a backbone network, a neck network, and a head network.

Figure 1.

Network structure of YOLO-LSM. Here, (C, H, W) describes the dimensions of the output feature map from the previous layer. Specifically, C represents the number of channels, while H and W represent the spatial height and width, respectively. For example, (128, 160, 160) corresponds to a feature map with 128 channels and a size of 160 × 160 pixels.

- To enhance the extraction of small target features, a new Efficient Small Target Detection Layer (ESTDL) is added to the neck network. When the input is 640 × 640, the detection layer can predict on the 160 × 160 feature map to enhance the perception ability of the model to small targets.

- A Multiscale Lightweight Convolution (MLConv) is designed and applied to the feature extraction module. The resulting feature extraction module, MLCSP, addresses the insufficient extraction of original feature information, improves the accuracy of small target detection, and reduces the number of parameters and the computational burden of the model.

- The adopted Focaler inner IoU (Intersection over Union) loss function uses the scaling factor of the inner IoU to control the size of the auxiliary frame to accelerate the convergence of the calculated loss. At the same time, by combining with the Focaler IoU to focus on different regression samples, it can improve the detection performance of the model.

- To enhance the model’s perception of features at different scales, we introduced the Deformable Attention (DA) mechanism to improve the model’s ability to understand details, and designed the Deformable Attention Fast-Spatial Pyramid Pooling (DFSPP) module combined with the Spatial Pyramid Pooling-Fast (SPPF) module to reduce the rates of false detection and missed detection and enhance the robustness of the model.

2.1. Efficient Small Target Detection Layer

After feature extraction and fusion by the neck network, the information is forwarded to the head network for unmanned aerial vehicle (UAV) target detection. In the original YOLOv5s model, three detection layers—80 × 80 × 256 (P3), 40 × 40 × 512 (P4), and 20 × 20 × 1024 (P5)—are used to detect small, medium, and large targets, respectively. Taking UAV images with an input size of 640 × 640 as the training benchmark, the datasets often contain a large number of tiny targets. However, the 80 × 80 × 256 (P3) layer struggles to accurately localize these targets and fails to fully capture their fine details, leading to the loss of crucial shallow-level information. On the other hand, although the 20 × 20 × 1024 (P5) layer benefits from richer semantic information, its low spatial resolution becomes a bottleneck for tasks requiring precise localization, while also increasing model parameters and inference latency, ultimately degrading detection accuracy and robustness.

To overcome these challenges, we introduce an Efficient Small Target Detection Layer (ESTDL) into the neck network to enhance the recognition of small objects. Compared to the original 80 × 80 × 256 (P3) layer, ESTDL features a smaller receptive field and higher spatial resolution, enabling better capture of shallow-level details. Specifically designed for UAV target detection, this module significantly improves the model’s ability to detect tiny objects under real-world conditions.

In contrast to the traditional P3 layer in YOLOv5s and recent lightweight detection models, the proposed ESTDL introduces a high-resolution detection branch with a smaller receptive field, effectively preserving shallow-level spatial details crucial for tiny object detection. Additionally, unlike Transformer-based models that rely on computationally expensive global attention mechanisms, ESTDL enhances local feature details in a lightweight manner, making it more suitable for real-time UAV applications under resource-constrained conditions.
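The relationship between input size, stride, and detection grid can be made concrete with a short calculation. The sketch below assumes the usual YOLO convention that levels P2 to P5 correspond to strides 4, 8, 16, and 32; the function names are illustrative and not taken from the paper.

```python
def detection_grid_sizes(input_size=640, strides=(4, 8, 16, 32)):
    """Map each pyramid level (P2, P3, ...) to the side length of its grid.

    Assumption: level P2 has stride 4 and each following level doubles it.
    """
    sizes = {}
    for level, stride in enumerate(strides, start=2):
        if input_size % stride != 0:
            raise ValueError(f"input size {input_size} is not divisible by stride {stride}")
        sizes[f"P{level}"] = input_size // stride
    return sizes


def cells_spanned(target_px, stride):
    """Number of grid cells (per axis) that a target of target_px pixels covers."""
    return target_px / stride


grids = detection_grid_sizes()
for name, side in grids.items():
    print(f"{name}: {side} x {side} grid")

# A 16-pixel-wide target spans 4 cells at stride 4 but only 2 cells at stride 8.
print(cells_spanned(16, 4), cells_spanned(16, 8))
```

Under these assumptions, the 160 × 160 map used by ESTDL corresponds to stride 4, so a tiny target covers twice as many cells per axis as on the 80 × 80 P3 map.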

2.2. Multiscale Lightweight Feature Extraction Module

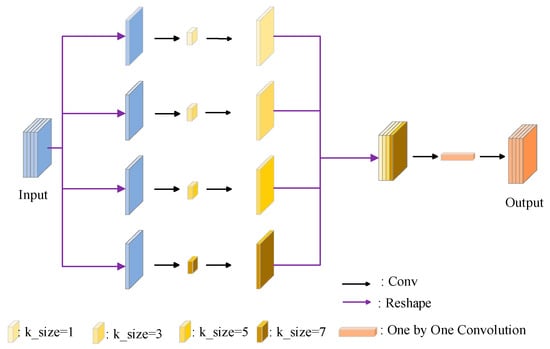

Standard convolution typically applies fixed-size kernels to convolve the image, resulting in feature maps with uniform receptive fields across layers. However, in UAV target detection, this uniformity makes it challenging to represent multiscale target information within a single feature map, limiting the ability to capture spatial and channel information. Additionally, standard convolution incurs high computational complexity and memory consumption, making deployment on edge devices difficult. Therefore, a Multiscale Lightweight Convolution (MLConv) is designed to address these issues.

As shown in Figure 2, since convolution kernels of different sizes capture feature details at multiple scales simultaneously, the input feature map is first grouped and rearranged based on the number of convolution kernel groups to ensure effective multiscale processing. Each channel group is then processed using convolution operations with its corresponding kernel size, thereby extracting multiscale feature information at this layer. In this design, the convolution kernel group is set to [1, 3, 5, 7]. Subsequently, the obtained multiscale convolutional features are stacked along a new dimension to integrate multiscale information. Finally, a 1 × 1 convolution is applied to fuse the extracted multiscale features, producing the final output feature map.
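To illustrate why splitting channels across kernel sizes can stay lightweight, the following sketch counts convolution weights. It assumes that each of the four channel groups maps C/4 input channels to C/4 output channels with its own kernel size and that the 1 × 1 fusion maps C channels to C channels without bias; these details are assumptions for illustration, not the authors' stated configuration.

```python
def conv_params(c_in, c_out, kernel, bias=False):
    """Weight count of a dense 2D convolution with a square kernel."""
    return c_in * c_out * kernel * kernel + (c_out if bias else 0)


def mlconv_params(channels, kernel_sizes=(1, 3, 5, 7)):
    """Weight count of a grouped multiscale convolution plus 1x1 fusion.

    Assumption: channels are split evenly, one group per kernel size.
    """
    groups = len(kernel_sizes)
    if channels % groups != 0:
        raise ValueError("channels must be divisible by the number of kernel sizes")
    per_group = channels // groups
    branches = sum(conv_params(per_group, per_group, k) for k in kernel_sizes)
    fusion = conv_params(channels, channels, 1)
    return branches + fusion


channels = 128
standard_3x3 = conv_params(channels, channels, 3)
standard_7x7 = conv_params(channels, channels, 7)
multiscale = mlconv_params(channels)

print(f"standard 3x3: {standard_3x3}")
print(f"standard 7x7: {standard_7x7}")
print(f"multiscale [1, 3, 5, 7] + 1x1 fusion: {multiscale}")
```

With 128 channels, the grouped layout uses fewer weights than a single dense 3 × 3 convolution while still including 5 × 5 and 7 × 7 receptive fields.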

Figure 2.

Multiscale Lightweight Convolutional MLConv structure diagram.

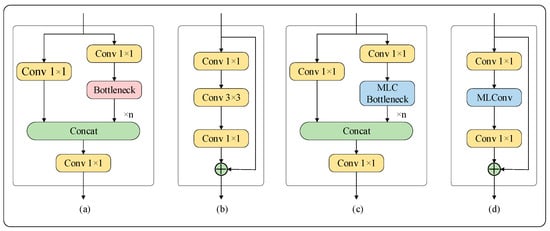

MLCSP is a novel feature extraction module that reconstructs the original CSP bottleneck by integrating MLConv, forming a new multiscale feature extraction structure. As illustrated in Figure 3, MLCSP applies convolutional kernels of different sizes at the same feature map level, enabling the model to capture features across multiple spatial scales. This design not only expands the receptive field but also enriches feature representations, effectively mitigating the loss of important information. Consequently, it enhances the model’s perception and expression capabilities. Furthermore, by removing the original 20 × 20 × 1024 (P5) detection layer from the backbone, MLCSP significantly reduces model complexity, making it more suitable for deployment on UAV platforms with limited computational resources.

Figure 3.

MLCSP structure diagram of feature extraction module. (a): CSP; (b): Bottleneck; (c): MLCSP; (d): MLC Bottleneck.

Unlike the traditional CSP bottleneck structures in YOLOv5s and other lightweight models such as YOLOv8s and YOLOv10s, the MLCSP module enhances multiscale feature extraction by introducing multiple convolution kernels with different receptive fields at the same feature level. This design not only improves the model’s ability to capture tiny object features but also ensures minimal computational overhead. In contrast to Transformer-based detectors, which heavily rely on global attention mechanisms that introduce substantial inference latency, MLCSP achieves efficient local feature aggregation in a lightweight manner, making it ideal for real-time UAV applications with limited computational resources.

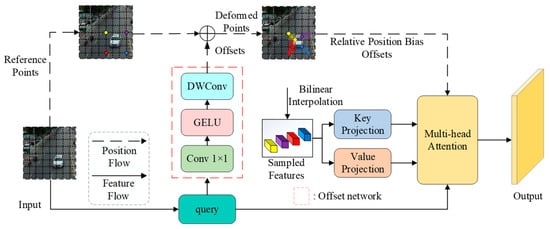

2.3. Deformable Attention Fast-Spatial Pyramid Pooling

The SPPF (Spatial Pyramid Pooling-Fast) structure in YOLOv5 is a feature fusion module that enhances the model’s capability to detect multiscale objects by applying Spatial Pyramid Pooling (SPP) to feature maps of different scales, thereby capturing richer contextual information. However, when dealing with a wide range of object scales, particularly in UAV target detection datasets, SPPF may misclassify background information as targets due to its limited adaptability to object geometric variations, leading to false detections. To address this issue, a Deformable Attention Fast-Spatial Pyramid Pooling (DFSPP) structure, as illustrated in Figure 1, is proposed. DFSPP optimizes the selection and adjustment of feature scale ranges, facilitating more effective multiscale feature fusion and reducing false detections.

Compared to traditional feature fusion modules like SPPF in YOLOv5 and other lightweight models, DFSPP incorporates a Deformable Attention mechanism that dynamically adapts to variations in object scale and geometry. While SPPF applies static pooling across multiple scales, DFSPP adjusts feature scale ranges based on the specific input, improving multiscale context aggregation and reducing background misclassification. This dynamic adjustment enhances detection precision, particularly for densely distributed small objects. Unlike Transformer-based models that rely on global attention for context aggregation, DFSPP uses a lightweight local attention mechanism that improves detection accuracy while maintaining computational efficiency.
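The static multiscale pooling of SPPF can be illustrated in one dimension. In YOLOv5, SPPF chains stride-1 max-pooling layers with the same kernel size, which yields the same result as pooling with progressively larger windows. The sketch below demonstrates only this equivalence on a toy signal; it does not reproduce DFSPP, and the helper name is illustrative.

```python
def max_pool_1d(values, kernel):
    """Stride-1 max pooling with 'same' output length.

    Positions outside the signal are ignored, which matches padding with -inf.
    """
    half = kernel // 2
    n = len(values)
    return [max(values[max(0, i - half): min(n, i + half + 1)]) for i in range(n)]


signal = [3, 1, 4, 1, 5, 9, 2, 6, 5, 3, 5, 8, 9, 7, 9, 3]

once = max_pool_1d(signal, 5)    # window of 5
twice = max_pool_1d(once, 5)     # equivalent to a window of 9
thrice = max_pool_1d(twice, 5)   # equivalent to a window of 13

print(twice == max_pool_1d(signal, 9))
print(thrice == max_pool_1d(signal, 13))
```

Because the pooling windows are fixed regardless of the input, this stage cannot adapt to object geometry, which is the limitation that the deformable attention in DFSPP is meant to address.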

Figure 4 illustrates the schematic diagram of Deformable Attention (DA). Guided by key regions in the feature map, it effectively focuses on the essential information. These focus areas are determined by multiple sets of deformable sampling points learned from the query through the offset network. A bilinear interpolation method is employed to sample features from the feature map, which are then fed into the key and value projections for deformation processing. Finally, standard multi-head attention is applied to aggregate the sampled query features with the deformed key and value representations. This mechanism adaptively adjusts the attention distribution based on the shape and structure of the input data, enabling the model to capture complex patterns and structures more effectively. Consequently, it enhances the fusion of UAV target features, improving both perception and representation capabilities.
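The bilinear sampling step can be sketched as follows. The example samples a single-channel feature map at a reference point shifted by a fractional offset; the offset value is a made-up stand-in for what the offset network would predict, and the function name is illustrative.

```python
import math


def bilinear_sample(feature, x, y):
    """Sample a 2D feature map (list of rows) at fractional column x and row y."""
    height, width = len(feature), len(feature[0])
    # Clamp the sampling location to the valid area of the map.
    x = min(max(x, 0.0), width - 1.0)
    y = min(max(y, 0.0), height - 1.0)
    x0, y0 = int(math.floor(x)), int(math.floor(y))
    x1, y1 = min(x0 + 1, width - 1), min(y0 + 1, height - 1)
    wx, wy = x - x0, y - y0
    # Interpolate along x on the two neighbouring rows, then along y.
    top = (1 - wx) * feature[y0][x0] + wx * feature[y0][x1]
    bottom = (1 - wx) * feature[y1][x0] + wx * feature[y1][x1]
    return (1 - wy) * top + wy * bottom


feature = [
    [0.0, 1.0, 2.0],
    [3.0, 4.0, 5.0],
    [6.0, 7.0, 8.0],
]
reference = (1.0, 1.0)   # (x, y) reference point on the grid
offset = (0.5, -0.25)    # hypothetical learned offset, not from the paper

sampled = bilinear_sample(feature, reference[0] + offset[0], reference[1] + offset[1])
print(sampled)
```

Because the sampling location is a continuous function of the offset, gradients can flow back to the offset network, which is what allows the sampling points to be learned.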

Figure 4.

Schematic diagram of Deformable Attention.

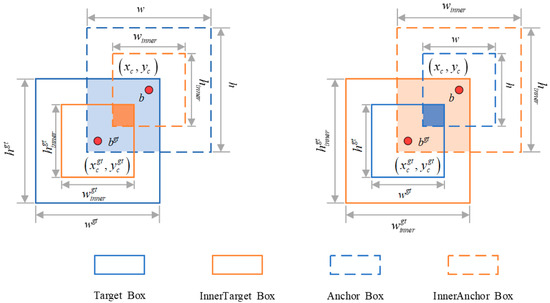

2.4. Focaler Inner Intersection over Union

Bounding box regression is a crucial component of object detection tasks, with commonly used losses including IoU (Intersection over Union), GIoU (Generalized IoU), CIoU (Complete IoU), and SIoU (SCYLLA-IoU). CIoU, as used in YOLOv5, primarily optimizes bounding boxes based on the distance between the center points of the predicted and ground truth boxes, as well as their aspect ratios. However, because UAV targets are small and densely packed, these metrics are not sufficiently sensitive to them. When the offset of the predicted box is minor, the model fails to consider the regression direction between the predicted and actual values, leading to ineffective optimization and slow convergence. To address this issue, this paper proposes the Focaler inner IoU loss function, which measures the degree of overlap between two bounding boxes by incorporating the internal overlapping area of the targets. This approach provides a more precise quantification of the spatial relationship between the predicted and ground truth boxes. By leveraging auxiliary bounding boxes of different scales to compute the loss, the proposed method effectively accelerates the bounding box regression process.

In comparison to Transformer-based models that struggle with small target localization due to their reliance on global attention, Focaler inner IoU Loss focuses on optimizing local feature extraction. It directly enhances the bounding box regression for small targets, improving both efficiency and localization accuracy without the overhead of global attention. As illustrated in Figure 5, the Focaler inner IoU loss function emphasizes the intersection region, enabling the model to better localize targets by focusing on the internal overlap within the bounding boxes. A linear interval mapping strategy is applied to refine regression samples, enhancing the effectiveness of bounding box regression. This ultimately improves the model’s accuracy and robustness. Algorithm 1 illustrates the pseudocode of the Focaler inner IoU algorithm. The detailed calculation process of the Focaler inner IoU loss function is as follows:

| Algorithm 1. Pseudocode of Focaler inner IoU |
| --- |
| Input: Map, Anchor box1 (1,4) and box2 (n,4), format is (x, y, w, h), and optional parameters: ratio, u, d |
| Output: Focaler inner IoU, processed boxes |
| 1: Compute intersection (inter) |
| 2: Compute union (with ratio adjustment, default ratio = 0.7) |
| 3: Compute |
| 4: Reconstruct the IoU with the linear interval mapping method (d = 0, u = 0.95) |
| 5: Return Focaler inner IoU and processed boxes |
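The steps of Algorithm 1 can be sketched in plain Python for a single pair of boxes. This is a minimal illustration that assumes the auxiliary boxes share the centres of the original boxes and scale width and height by `ratio`, and that the linear interval mapping clips the result to [0, 1]; the function name and the `eps` term are illustrative additions, not the authors' implementation.

```python
def focaler_inner_iou(box1, box2, ratio=0.7, d=0.0, u=0.95, eps=1e-7):
    """Focaler inner IoU of two boxes given as (x, y, w, h) with (x, y) the centre."""
    x1, y1, w1, h1 = box1
    x2, y2, w2, h2 = box2

    # Auxiliary boxes: same centres, sides scaled by ratio.
    l1, r1 = x1 - w1 * ratio / 2, x1 + w1 * ratio / 2
    t1, b1 = y1 - h1 * ratio / 2, y1 + h1 * ratio / 2
    l2, r2 = x2 - w2 * ratio / 2, x2 + w2 * ratio / 2
    t2, b2 = y2 - h2 * ratio / 2, y2 + h2 * ratio / 2

    # Step 1: intersection of the auxiliary boxes (zero if they do not overlap).
    inter_w = max(0.0, min(r1, r2) - max(l1, l2))
    inter_h = max(0.0, min(b1, b2) - max(t1, t2))
    inter = inter_w * inter_h

    # Step 2: union of the auxiliary boxes; eps avoids division by zero.
    union = w1 * h1 * ratio ** 2 + w2 * h2 * ratio ** 2 - inter + eps

    # Step 3: inner IoU.
    inner_iou = inter / union

    # Step 4: linear interval mapping onto [0, 1] using the interval [d, u].
    mapped = (inner_iou - d) / (u - d)
    return min(1.0, max(0.0, mapped))


anchor = (0.0, 0.0, 2.0, 2.0)
candidates = [(0.0, 0.0, 2.0, 2.0), (1.0, 0.0, 2.0, 2.0), (10.0, 10.0, 2.0, 2.0)]
scores = [focaler_inner_iou(anchor, box) for box in candidates]
losses = [1.0 - s for s in scores]
print(scores, losses)
```

A ratio below 1 shrinks the auxiliary boxes, so the score drops faster as boxes drift apart, while the interval [d, u] decides which range of IoU values receives a gradient.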

Figure 5.

Schematic diagram of Focaler inner IoU structure.

Calculate the IoU metric based on the center coordinates, width, and height of the bounding boxes:

where the width and height of the GT frame are denoted by w^gt and h^gt, respectively, and the width and height of the anchor frame are denoted by w and h; ratio is the scale factor used to adjust the size of the auxiliary frame, and its value usually lies in [0.5, 1.5]. (x^gt, y^gt) represents the center point of the GT frame and the auxiliary GT frame, and (x, y) represents the center point of the anchor frame and the auxiliary anchor frame. The linear interval mapping method is then used to reconstruct the IoU loss so as to improve bounding box regression.
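As a sketch, and assuming the paper follows the published Inner-IoU formulation, the quantities defined above combine into Equations (1) to (7) as follows. The boundary symbols b_l, b_r, b_t, and b_b (left, right, top, and bottom edges of the auxiliary frames) are notation introduced here for illustration.

```latex
\begin{align}
b_l^{gt} &= x^{gt} - \frac{w^{gt}\cdot \mathrm{ratio}}{2}, &
b_r^{gt} &= x^{gt} + \frac{w^{gt}\cdot \mathrm{ratio}}{2} \tag{1}\\
b_t^{gt} &= y^{gt} - \frac{h^{gt}\cdot \mathrm{ratio}}{2}, &
b_b^{gt} &= y^{gt} + \frac{h^{gt}\cdot \mathrm{ratio}}{2} \tag{2}\\
b_l &= x - \frac{w\cdot \mathrm{ratio}}{2}, &
b_r &= x + \frac{w\cdot \mathrm{ratio}}{2} \tag{3}\\
b_t &= y - \frac{h\cdot \mathrm{ratio}}{2}, &
b_b &= y + \frac{h\cdot \mathrm{ratio}}{2} \tag{4}\\
\mathrm{inter} &= \bigl(\min(b_r^{gt}, b_r) - \max(b_l^{gt}, b_l)\bigr)
                  \bigl(\min(b_b^{gt}, b_b) - \max(b_t^{gt}, b_t)\bigr) \tag{5}\\
\mathrm{union} &= w^{gt} h^{gt}\cdot \mathrm{ratio}^{2} + w h\cdot \mathrm{ratio}^{2} - \mathrm{inter} \tag{6}\\
IoU^{inner} &= \frac{\mathrm{inter}}{\mathrm{union}} \tag{7}
\end{align}
```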

In Equation (8), IoU^focaler denotes the reconstructed Focaler inner IoU computed on the auxiliary frames, with [d, u] ⊆ [0, 1]. By adjusting the values of d and u, the loss can focus on different regression samples. The loss function is defined in Equation (9):
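As a sketch of the linear interval mapping, assuming it follows the published Focaler-IoU definition applied to the inner IoU, Equations (8) and (9) may take the form below. The exact loss used by the authors may contain additional terms, so Equation (9) in particular should be read as one plausible form.

```latex
\begin{equation}
IoU^{focaler} =
\begin{cases}
0, & IoU^{inner} < d \\[4pt]
\dfrac{IoU^{inner} - d}{u - d}, & d \le IoU^{inner} \le u \\[8pt]
1, & IoU^{inner} > u
\end{cases}
\tag{8}
\end{equation}

\begin{equation}
L_{Focaler\text{-}inner\text{-}IoU} = 1 - IoU^{focaler} \tag{9}
\end{equation}
```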

3. Simulation and Validation

To validate the effectiveness of the proposed algorithm, we conducted simulations using two publicly available UAV datasets, VisDrone2019 [27] and Tiny Person [28] (https://www.cvmart.net/dataSets/detail?tabType=1&currentPage=7&pageSize=12&id=36, accessed on 9 April 2025), under the same evaluation conditions.

3.1. Datasets and Evaluation Environment Setup

The VisDrone2019 dataset consists of 8599 images (6471 for training, 548 for validation, and 1580 for testing) covering 10 categories. The images were collected with different types of UAV platforms across different scenes, weather, and lighting conditions. Each image contains an average of 53 very small object instances, making the dataset highly dense.

Tiny Person is a dataset for detecting small objects in UAV images, consisting of 1576 images (629 for training, 136 for validation, and 811 for testing). It includes two categories: earth_person and sea_person, forming a dense small target dataset.

The operating environment is Windows 11, and the graphics processing unit is an RTX 4060 Ti. For both datasets, the batch size is set to 16, with a total of 300 training epochs. Mosaic augmentation is applied with a probability of 1.0, and the early stopping patience is 100. The input image size is 640 × 640 for both datasets. Additionally, the Adam optimizer is used for VisDrone2019 with an initial learning rate of 0.001, while the SGD optimizer is used for Tiny Person with an initial learning rate of 0.01. Key hyperparameter settings and data augmentation configurations are shown in Table 1.

Table 1.

Hyperparameter and data augmentation configurations.

3.2. Evaluation Metrics

In this work, to evaluate the network’s performance in recognizing small UAV targets, we adopted the PASCAL VOC [29] evaluation metrics, including Recall (R), Precision (P), Mean Average Precision (mAP), and F1-score. Alongside accuracy, we also considered computational cost, measuring each model by its number of parameters, computational complexity (GFLOPs), and model size. Precision and Recall are calculated using Equations (10) and (11), where TP refers to true positives, FP to false positives, and FN to false negatives:
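With TP, FP, and FN as defined above, the standard definitions of Precision and Recall, which Equations (10) and (11) presumably follow, are:

```latex
\begin{equation}
P = \frac{TP}{TP + FP} \tag{10}
\end{equation}

\begin{equation}
R = \frac{TP}{TP + FN} \tag{11}
\end{equation}
```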

mAP (Mean Average Precision) is the mean of the average precision (AP) over all categories. Generally, the larger the mAP, the better. Its calculation is shown in Equation (12):
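In the usual formulation, which Equation (12) presumably follows, the AP of a category is the area under its precision-recall curve and mAP averages AP over the N categories. The symbols N and AP_i are notation assumed here for illustration.

```latex
\begin{equation}
AP = \int_{0}^{1} P(R)\,\mathrm{d}R, \qquad
mAP = \frac{1}{N}\sum_{i=1}^{N} AP_i \tag{12}
\end{equation}
```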

Generally speaking, Recall is negatively correlated with Precision. Because both indicators measure how well true positives are identified, their harmonic mean is used for an objective evaluation and is defined as the F1-score, that is, F1 = 2 × P × R / (P + R).
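The harmonic-mean definition can be checked against the values reported for YOLO-LSM on Tiny Person (Precision 30.4%, Recall 46.4%). The sketch below is illustrative; the function names and the toy counts in the second example are not from the paper.

```python
def f1_from_pr(precision, recall):
    """F1-score as the harmonic mean of precision and recall."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


def precision_recall_f1(tp, fp, fn):
    """Precision, recall, and F1 from raw detection counts."""
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    return precision, recall, f1_from_pr(precision, recall)


# Values reported for YOLO-LSM on Tiny Person: P = 30.4%, R = 46.4%.
f1_tiny_person = f1_from_pr(0.304, 0.464)
print(f"F1 = {f1_tiny_person * 100:.1f}%")

# Toy counts (made up): 8 true positives, 2 false positives, 2 false negatives.
p, r, f1 = precision_recall_f1(tp=8, fp=2, fn=2)
print(p, r, f1)
```

The computed value rounds to 36.7%, which agrees with the F1-score reported for YOLO-LSM on Tiny Person.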

3.3. Comparison Analysis

To further assess the robustness of this method, a quantitative comparison with current mainstream target detection algorithms and other improved UAV target detection algorithms was conducted under the same evaluation conditions on two publicly available UAV datasets, VisDrone2019 and Tiny Person. The comparison models include YOLOv5s, YOLOX-s [30], YOLOLite-G [31], YOLOX-Tiny [30], YOLOv7-Tiny [32], YOLOv8s [33], YOLOv9t [9], YOLOv10s [8], YOLOv11s [12], SSD [13], RetinaNet [34], CenterNet [14], Faster R-CNN [7], URS-YOLOv5s [35], EL-YOLO [36], MCF-YOLOv5s [37], BD-YOLOv8s [38], SP-YOLOv8s [20], AIOD-YOLO [15], and UA-YOLOv5s [18].

Table 2 shows the detection performance of the above methods on the VisDrone2019 dataset. The mAP0.5 of the proposed model, YOLO-LSM, is 41.6%, an improvement of 6.9, 1.4, 2.0, 14.2, 3.5, 4.4, 0.9, 7.2, 1.9, and 1.0 percentage points over YOLOv5s, YOLOX-s, YOLOv5m, YOLOLite-G, YOLOX-Tiny, YOLOv7-Tiny, YOLOv8s, YOLOv9t, YOLOv10s, and YOLOv11s, respectively. YOLO-LSM reaches an F1-score of 45.5% with 1.97 M parameters, outperforming the other advanced algorithms. Compared to the baseline model, YOLOv5s, the computational cost decreases by 2.2 GFLOPs.

Table 2.

Comparison results of different models on VisDrone2019.

On the VisDrone2019 dataset, the mAP0.5 of YOLO-LSM is 31, 16.1, 12.6, and 5.8 percentage points higher than that of SSD, RetinaNet, CenterNet, and Faster R-CNN, respectively. Compared with other improved UAV target detection algorithms, namely EL-YOLO, MCF-YOLOv5s, URS-YOLOv5s, and UA-YOLOv5s, the proposed lightweight detection model YOLO-LSM performs better in mAP0.5, F1-score, parameters, and GFLOPs.

Moreover, we compared YOLO-LSM with Transformer-based improved algorithms, including LIS-DETR [39], FNI-DETR [40], and YOLO-HV [41]. Among these methods, YOLO-LSM achieves the highest mAP0.5 (41.6%) and F1-score (45.5%) while having the fewest parameters (1.97 M) and the lowest computational complexity (13.8 GFLOPs). Compared to LIS-DETR, YOLO-LSM improves mAP0.5 by 1.5 percentage points with approximately 7.7 times fewer parameters and 5.5 times fewer FLOPs. Furthermore, compared to FNI-DETR and YOLO-HV, YOLO-LSM achieves better detection accuracy with significantly fewer parameters and lower computational cost, demonstrating the effectiveness and efficiency of the proposed design.

Table 3 presents the results of a paired t-test comparing the mAP0.5 of various models against the YOLOv5s baseline on the VisDrone2019 dataset. All alternative models, including YOLOv7-Tiny, YOLOv8s, YOLOv11s, and the proposed YOLO-LSM, demonstrate statistically significant improvements over the baseline, with p-values far below the 0.05 threshold. Notably, YOLO-LSM achieves the most significant improvement, with a p-value of 3 × 10⁻⁴⁷, indicating a highly reliable performance gain. These results confirm the effectiveness of architectural enhancements in improving detection accuracy.
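For readers unfamiliar with the test, a paired t-statistic is computed from the per-sample differences between two models evaluated on the same units. The sketch below shows the calculation only; the text does not state what the pairing unit is, and the four score pairs are made-up toy values, not results from the paper.

```python
import math


def paired_t_statistic(baseline, candidate):
    """Return (t, degrees of freedom) for paired samples of equal length."""
    if len(baseline) != len(candidate) or len(baseline) < 2:
        raise ValueError("need two samples of equal length with at least 2 pairs")
    diffs = [c - b for b, c in zip(baseline, candidate)]
    n = len(diffs)
    mean = sum(diffs) / n
    # Unbiased sample variance of the differences.
    variance = sum((x - mean) ** 2 for x in diffs) / (n - 1)
    if variance == 0:
        raise ValueError("all differences are identical; t is undefined")
    return mean / math.sqrt(variance / n), n - 1


# Toy scores (hypothetical), e.g. one value per evaluation unit for each model.
baseline_scores = [0.30, 0.35, 0.40, 0.32]
candidate_scores = [0.36, 0.40, 0.47, 0.40]

t_value, dof = paired_t_statistic(baseline_scores, candidate_scores)
print(f"t = {t_value:.2f}, degrees of freedom = {dof}")
```

The p-value is then obtained from the Student t distribution with the returned degrees of freedom; a large positive t with many pairs leads to very small p-values such as those in Table 3.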

Table 3.

Significance test of different models relative to the baseline architecture on VisDrone2019.

As shown in Table 4, on the Tiny Person dataset the proposed YOLO-LSM model achieves a mAP0.5 of 29.2%, which is higher than that of YOLOv5s, YOLOX-Tiny, YOLOX-s, YOLOLite-G, YOLOv7-Tiny, YOLOv8s, YOLOv9t, YOLOv10s, YOLOv11s, UA-YOLOv5s, BD-YOLOv8s, and AIOD-YOLO by 3.5, 8.5, 8.0, 6.3, 4.8, 2.8, 7.7, 4.2, 1.8, 7.6, 10.5, and 1.9 percentage points, respectively. The Recall of the proposed YOLO-LSM model is 46.4%, the Precision is 30.4%, and the F1-score is 36.7%, which also surpass other advanced methods. Meanwhile, the number of parameters is significantly reduced. Although SP-YOLOv8s performs better in mAP0.5 (34.5%), its computational complexity is 6.3 times higher than that of YOLO-LSM, a cost that cannot be ignored. In conclusion, the comparison results further demonstrate the superiority and robustness of YOLO-LSM.

Table 4.

Comparison results of different models on Tiny Person.

These results highlight the practical advantages of YOLO-LSM in real-world UAV scenarios. In particular, the model is well-suited for applications involving low-resolution inputs, dense object distributions, and resource-constrained platforms, such as UAV onboard deployment. The superior balance between accuracy and efficiency makes YOLO-LSM an effective solution for real-time small object detection tasks where both computational cost and detection robustness are critical.

3.4. Performance Comparison of Each Module

To evaluate the efficiency and performance in small target detection under the same conditions, based on the YOLOv5s benchmark model, the control variable method was used to carry out comparative tests on each module on the VisDrone2019 dataset.

1. Efficient Small Target Detection Layer

To validate the advantage of the ESTDL module in improving detection accuracy while maintaining lightweight performance, we compared the performance of different modules in terms of F1-score, mAP0.5, Parameter(M), and computational complexity (GFLOPs). The evaluation results are shown in Table 5. ESTDL performs excellently with a mAP0.5 of 40.1% and the F1-score of 43.9%, closely approaching P234 (40.8%, 44.7%) and P2345 (40.7%, 44.8%). However, its parameter count is only 2.04 M, significantly lower than P234 (5.39 M) and P2345 (7.18 M), demonstrating a remarkable lightweight advantage. Meanwhile, ESTDL’s computational complexity is 14.1 GFLOPs, lower than P234 (17.2 GFLOPs) and P2345 (18.7 GFLOPs), while still maintaining high detection accuracy. These results highlight its efficiency and excellent computational cost-effectiveness.

Table 5.

Performance comparison of different detection layers.

2. Multiscale Lightweight Feature Extraction Module

To validate the effectiveness of MLCSP for small target detection, we replaced the MLConv in the MLCSP module with different lightweight convolution modules, namely PConv [42], GhostConv [23], and SCConv [43], and also compared the result with MobileNetV4. The performance results are shown in Table 6.

Table 6.

Performance comparison of different lightweight models.

Comparisons show that MLCSP achieves the best F1-score (40.3%) and mAP0.5 (35.2%), improvements of 0.8 and 0.5 percentage points over the C3 structure, while maintaining a lower parameter count (6.45 M) and computational cost (14.9 GFLOPs), showing better feature extraction ability.

In addition, although the MobileNetV4 structure has the fewest parameters (5.45 M) and the lowest computational cost (8.4 GFLOPs), its detection performance is significantly reduced (F1-score 32.2%, mAP0.5 26.1%), indicating that excessive lightweighting may sacrifice feature extraction ability. The PConv and GhostConv structures reduce the number of parameters while keeping detection performance close to that of C3, whereas SCConv performs relatively poorly (mAP0.5 33.1%).

To sum up, MLCSP takes into account both computational efficiency and detection accuracy and has better feature extraction ability in small target detection tasks, providing an effective solution for lightweight target detection.

3. Deformable Attention Fast-Spatial Pyramid Pooling

To evaluate the effectiveness of the proposed DFSPP module for small object detection, we conducted comparisons by replacing the DA in DFSPP with other commonly used mechanisms, including CA, SE, CBAM, MLCA, EMA, and ECA, under the same evaluation conditions.

Traditional SPPF conducts multiscale feature fusion using pooling operations, but it lacks explicit modeling of spatial dependencies and channel-wise interactions. The integration of attention mechanisms enhances feature representation, optimizes feature selection, and thereby improves detection accuracy and generalization, especially for small targets. As shown in Table 7, all SPPF variants (SPPF_CA, SPPF_SE, SPPF_CBAM, etc.) show noticeable improvements in F1-score and mAP0.5, demonstrating the positive impact of attention mechanisms on detection performance.

Table 7.

Performance comparison of different attention mechanisms.

Among all variants, DFSPP achieves the best performance, with F1-score and mAP0.5 improvements of 1.1 and 0.6 percentage points, respectively, over the original SPPF. Compared with the other attention mechanisms, DFSPP consistently achieves higher detection accuracy while maintaining a reasonable number of parameters and FLOPs. For example, its mAP0.5 is 0.1 and 0.2 percentage points higher than that of SPPF_EMA and SPPF_ECA, respectively, further validating its advantages in small target detection scenarios.

While the performance gain of DFSPP might appear modest in isolation, such cumulative improvements are highly valuable in real-time UAV applications, where even slight accuracy enhancements can significantly impact robustness under complex environmental conditions. Furthermore, the added complexity introduced by DFSPP is marginal and remains well within the constraints of edge deployment. Overall, DFSPP strikes an effective balance between performance and computational efficiency, offering a practical solution for real-time, small object detection on resource-constrained platforms.
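For reference, the pooling stage of the SPPF baseline discussed above can be illustrated with a minimal sketch. It assumes the usual SPPF layout (three chained 5 × 5, stride-1 max pools whose outputs are concatenated with the input) and omits the 1 × 1 convolutions and the attention branch that distinguish DFSPP; the function names and the toy feature map are hypothetical. The sketch shows why chained small pools give multiscale context: two and three chained 5 × 5 pools cover the same receptive fields as single 9 × 9 and 13 × 13 pools.

```python
from typing import List

Grid = List[List[float]]


def max_pool_same(x: Grid, k: int) -> Grid:
    """Stride-1 max pooling with a k x k window; border cells only see valid neighbours."""
    r = k // 2
    h, w = len(x), len(x[0])
    return [
        [
            max(
                x[a][b]
                for a in range(max(0, i - r), min(h, i + r + 1))
                for b in range(max(0, j - r), min(w, j + r + 1))
            )
            for j in range(w)
        ]
        for i in range(h)
    ]


def sppf_branches(x: Grid, k: int = 5) -> List[Grid]:
    """Return the four maps that an SPPF-style block concatenates along the channel axis."""
    y1 = max_pool_same(x, k)
    y2 = max_pool_same(y1, k)
    y3 = max_pool_same(y2, k)
    return [x, y1, y2, y3]


# Toy single-channel feature map (hypothetical values, not taken from the paper).
feature = [[float((3 * i + 7 * j) % 11) for j in range(12)] for i in range(12)]
branches = sppf_branches(feature)

# Chained 5x5 pools match single pools with larger windows.
print(branches[2] == max_pool_same(feature, 9))
print(branches[3] == max_pool_same(feature, 13))
```

Because the pooling itself has no learnable weights, any spatial or channel selectivity has to come from an added module, which is the role the attention variants in Table 7 play.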

- 4. Focaler inner Intersection over Union

To further assess the Focaler inner IoU loss, we conducted comparisons with different loss functions, and the results are shown in Table 8.

Table 8.

Performance comparison of different loss functions.

The introduction of different IoU variant loss functions affects object detection accuracy to varying degrees. Among them, Focaler inner IoU achieved the best mAP0.5 (36.0%), an improvement of 1.3, 0.8, 1.6, 1.2, 0.3, and 0.6 percentage points over CIoU, SIoU, GIoU, DIoU, inner IoU, and Focaler IoU, respectively, indicating its advantages in optimizing bounding box regression. Additionally, both inner IoU and Focaler IoU showed improvements in Recall and mAP0.5, further validating the effectiveness of the focused auxiliary box loss function in enhancing object detection capability.

From the perspective of false negatives and false positives, CIoU achieved the highest Precision (47.4%), but its Recall was only 34.9%, indicating that its object box regression strategy is stricter, which may lead to more false negatives. In contrast, Focaler inner IoU achieved the best Recall rate (36.2%), which is 1.3% higher than that of CIoU. While reducing false negatives, it maintained Precision at 46.7%, ensuring detection accuracy. Furthermore, both SIoU and Focaler IoU improved in Recall, reaching 35.9% and further reducing the risk of false negatives. Overall, Focaler inner IoU strikes a good balance between Precision and Recall, achieving the highest mAP0.5 (36.0%), which verifies its effectiveness in object detection tasks.

Although the performance gain brought by Focaler inner IoU appears moderate in isolation, it introduces only minimal additional computational cost. In real-time UAV scenarios, where edge devices demand both high accuracy and low latency, such lightweight yet effective enhancements can be particularly valuable. Therefore, Focaler inner IoU achieves a favorable trade-off between detection performance and implementation complexity, making it a practical choice for deployment in resource-constrained environments.
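The two ingredients compared above can be sketched in a few lines. This is a minimal illustration, not the exact loss used in this paper: it assumes that the inner IoU is computed on auxiliary boxes that keep each box center but scale width and height by a factor `ratio`, and that the Focaler step linearly remaps the IoU from an interval [d, u] to [0, 1]. The way the two are combined here (Focaler remapping applied to the inner IoU) and the default values `ratio=0.75`, `d=0.0`, and `u=0.95` are assumptions for illustration only.

```python
from typing import Tuple

Box = Tuple[float, float, float, float]  # (center_x, center_y, width, height)


def inner_iou(pred: Box, gt: Box, ratio: float = 0.75) -> float:
    """IoU of auxiliary boxes that share each center but have sides scaled by `ratio`."""

    def corners(box: Box) -> Tuple[float, float, float, float]:
        cx, cy, w, h = box
        half_w, half_h = w * ratio / 2.0, h * ratio / 2.0
        return cx - half_w, cy - half_h, cx + half_w, cy + half_h

    px1, py1, px2, py2 = corners(pred)
    gx1, gy1, gx2, gy2 = corners(gt)
    inter_w = max(0.0, min(px2, gx2) - max(px1, gx1))
    inter_h = max(0.0, min(py2, gy2) - max(py1, gy1))
    inter = inter_w * inter_h
    union = (px2 - px1) * (py2 - py1) + (gx2 - gx1) * (gy2 - gy1) - inter
    return inter / union if union > 0 else 0.0


def focaler(iou: float, d: float = 0.0, u: float = 0.95) -> float:
    """Linearly remap IoU from [d, u] to [0, 1], clipping values outside the interval."""
    if iou <= d:
        return 0.0
    if iou >= u:
        return 1.0
    return (iou - d) / (u - d)


def focaler_inner_iou_loss(pred: Box, gt: Box, ratio: float = 0.75,
                           d: float = 0.0, u: float = 0.95) -> float:
    """Assumed combination: 1 minus the Focaler-remapped inner IoU."""
    return 1.0 - focaler(inner_iou(pred, gt, ratio), d, u)


# Hypothetical boxes: the prediction is shifted one pixel to the left of the target.
pred_box, gt_box = (10.0, 10.0, 4.0, 4.0), (11.0, 10.0, 4.0, 4.0)
print(inner_iou(pred_box, gt_box, ratio=1.0))   # plain IoU of the two boxes
print(inner_iou(pred_box, gt_box, ratio=0.5))   # smaller auxiliary boxes penalize the shift more
print(focaler_inner_iou_loss(pred_box, gt_box))
```

With `ratio` below 1, the same one-pixel offset produces a lower overlap score, which is one way to see why a smaller auxiliary box yields a stronger regression signal for nearly aligned, small targets.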

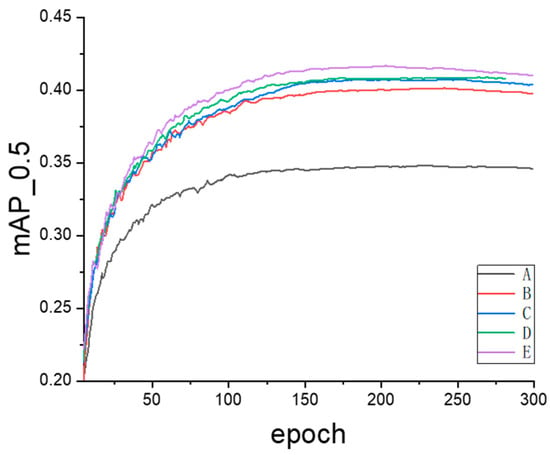

3.5. Ablation Study

To evaluate the contributions of ESTDL, MLCSP, Focaler inner IoU, and DFSPP, ablation studies were conducted on the VisDrone2019 dataset to assess the detection performance of the different modules under the same evaluation conditions. YOLOv5s was selected as the baseline algorithm, and the settings were kept consistent across all experiments. The results after training for 300 epochs are presented in Table 9.

Table 9.

Ablation study based on VisDrone2019.

- Baseline Setup: The test results of YOLOv5s are selected as the benchmark. Since the proposed YOLO-LSM is built upon YOLOv5s by incorporating ESTDL, MLCSP, Focaler inner IoU, and DFSPP, it becomes more convenient to quantitatively assess the impact of the improved modules. According to Table 9, the Mean Average Precision (mAP0.5) is 34.7%, Precision is 47.4%, Recall is 34.9%, and the number of parameters is 7.04 million.

- Effect of Adding ESTDL: By enhancing the YOLOv5 architecture and designing the Efficient Small Target Detection Layer ESTDL, the model parameters are reduced to 2.04 million, with a Precision increase of 1.3%, a Recall increase of 5.0%, and a mAP0.5 increase of 5.4%. This indicates that the ESTDL module can fully capture the details of the tiny targets, improving the model’s detection accuracy and facilitating deployment on UAV devices.

- Effect of Adding ESTDL and MLCSP: With the parameters reduced to 1.86 million, mAP0.5 increases by 0.6%. The MLCSP module utilizes convolutional kernels of various sizes, enabling the capture of feature information at different scales, thereby enhancing the extraction of multiscale information while reducing the model’s parameters.

- Effect of Adding ESTDL, MLCSP, and the Focaler Inner IoU: The proposed Focaler inner IoU improves mAP0.5 to 40.9% without altering the number of parameters. It also accounts for the impact of internal overlap areas between targets and the distribution of targets in bounding box regression, accelerating the model’s convergence speed.

- Effects of YOLO-LSM: Relative to the baseline, mAP0.5 increased from 34.7% to 41.6%, Precision increased from 47.4% to 50.6%, Recall increased from 34.9% to 41.0%, and the number of model parameters decreased from 7.04 M to 1.97 M. The additional gain from DFSPP is attributed to the Deformable Attention mechanism, which helps the model better integrate features of different scales and improves its ability to capture details. The ablation studies confirm the effectiveness of the improved method.
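The headline figures of the ablation can be checked with simple arithmetic. The short sketch below uses only the baseline and final values quoted in the list above; the helper names are illustrative. It also makes the distinction explicit between a relative reduction (parameters, in percent) and an absolute gain (metrics, in percentage points).

```python
def relative_reduction(before: float, after: float) -> float:
    """Relative decrease in percent, e.g. for parameter counts."""
    return (before - after) / before * 100.0


def point_gain(before: float, after: float) -> float:
    """Absolute difference between two percentages, in percentage points."""
    return after - before


# Values quoted in the ablation discussion (YOLOv5s baseline vs. YOLO-LSM).
param_cut = relative_reduction(7.04, 1.97)
map_gain = point_gain(34.7, 41.6)
precision_gain = point_gain(47.4, 50.6)
recall_gain = point_gain(34.9, 41.0)

print(f"parameter reduction: {param_cut:.1f}%")        # 72.0%
print(f"mAP0.5 gain: {map_gain:.1f} points")           # 6.9 points
print(f"Precision gain: {precision_gain:.1f} points")  # 3.2 points
print(f"Recall gain: {recall_gain:.1f} points")        # 6.1 points
```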

As shown in Table 10, we performed paired t-tests between each ablation variant and the baseline model based on per-image mAP0.5 scores. All variants demonstrate statistically significant improvements over the baseline, with p-values far below the standard 0.05 threshold. Notably, the ESTDL module yielded the most substantial improvement (p = 9 × 10⁻²⁵), underscoring its strong contribution to performance. These results provide quantitative evidence for the effectiveness of each module in enhancing object detection accuracy.

Table 10.

Significance test of ablation variants relative to the baseline architecture.
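The paired test described above compares two models on the same images, so the statistic is computed on per-image differences. A minimal sketch of that computation is given below, using only the Python standard library; the function name and the six per-image scores are hypothetical and are not data from this study. The p-value would then be read from Student's t distribution with n − 1 degrees of freedom, for example with a routine such as `scipy.stats.ttest_rel`.

```python
import math
from statistics import mean, stdev
from typing import Sequence, Tuple


def paired_t_statistic(variant: Sequence[float],
                       baseline: Sequence[float]) -> Tuple[float, int]:
    """Return the paired t statistic and degrees of freedom for matched samples."""
    if len(variant) != len(baseline) or len(variant) < 2:
        raise ValueError("need two equally sized samples with at least two pairs")
    diffs = [v - b for v, b in zip(variant, baseline)]
    sd = stdev(diffs)  # sample standard deviation of the differences
    if sd == 0:
        raise ValueError("all differences are identical; t is undefined")
    t_value = mean(diffs) / (sd / math.sqrt(len(diffs)))
    return t_value, len(diffs) - 1


# Hypothetical per-image scores for a baseline and one ablation variant.
baseline_scores = [0.30, 0.42, 0.25, 0.51, 0.38, 0.47]
variant_scores = [0.36, 0.45, 0.33, 0.55, 0.41, 0.54]

t_value, dof = paired_t_statistic(variant_scores, baseline_scores)
print(f"t = {t_value:.2f} with {dof} degrees of freedom")
```

A positive t indicates that the variant scores higher on average; with thousands of test images, even small but consistent per-image gains produce very small p-values.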

The comparison curves of the ablation study on the VisDrone2019 dataset are shown in Figure 6. Curve A represents the baseline model YOLOv5s; curve B shows the introduction of the Efficient Small Target Detection Layer (ESTDL) into the baseline; curve C represents the addition of the Multiscale Lightweight Feature Extraction Module (MLCSP) to configuration B; curve D shows the incorporation of Focaler inner IoU into configuration C; and curve E represents the proposed improved model YOLO-LSM. The addition of Focaler inner IoU not only increases the average detection accuracy (mAP0.5) but also improves the model’s convergence speed, further validating the performance of Focaler inner IoU for this detection task.

Figure 6.

Accuracy comparison of ablation studies.

3.6. Deployment Experiment

To further validate the effectiveness of YOLO-LSM, different algorithms are deployed on the Jetson Nano Super development board, and comparative tests are carried out under the same experimental conditions.

The experimental results are shown in Table 11. YOLO-LSM achieves a mAP0.5 of 41.6%, a 6.9 percentage point improvement over YOLOv5s. Although its inference time is slightly higher than that of the other YOLO variants, YOLO-LSM maintains high detection accuracy on edge devices while keeping an acceptable frame rate (FPS).

Table 11.

Real-time performance comparison of models on edge devices.

In terms of energy consumption, YOLO-LSM demonstrates inference power comparable to that of YOLOv11s (2.2 W) and achieves an energy per frame of 0.089 J/frame, which is significantly lower than that of YOLOv8s (0.109 J/frame) and YOLOv10s (0.099 J/frame). This indicates that YOLO-LSM is more energy-efficient and better suited for real-time UAV applications where power constraints are critical.
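The relation between power, throughput, and energy per frame can be made explicit with a small sketch. It assumes that energy per frame is the average inference power divided by the frame rate, which is the usual definition but is not stated explicitly here; the latency value in the example is hypothetical and is not taken from Table 11.

```python
def fps_from_latency(latency_ms: float) -> float:
    """Frames per second implied by a per-frame inference latency in milliseconds."""
    if latency_ms <= 0:
        raise ValueError("latency must be positive")
    return 1000.0 / latency_ms


def energy_per_frame(power_w: float, fps: float) -> float:
    """Energy in joules per frame, assuming average power (W) divided by throughput (FPS)."""
    if fps <= 0:
        raise ValueError("fps must be positive")
    return power_w / fps


# Hypothetical operating point: 2.2 W average power and 40 ms per frame.
fps = fps_from_latency(40.0)
joules = energy_per_frame(2.2, fps)
print(f"{fps:.1f} FPS, {joules:.3f} J/frame")
```

Under this definition, two models that draw similar power differ in energy per frame only through their frame rate, so a slower model at the same wattage costs proportionally more energy per processed image.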

These results collectively demonstrate the model’s effectiveness, efficiency, and feasibility for real-world deployment on resource-limited platforms.

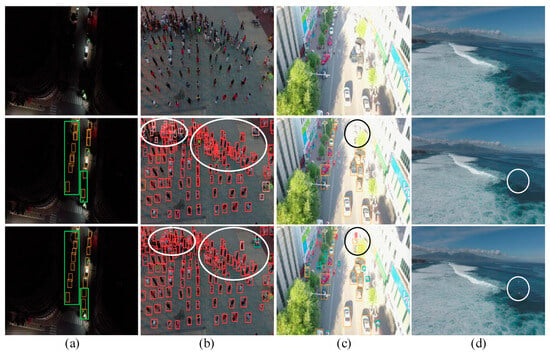

3.7. Visual Analysis

To validate the robustness and generalization of YOLO-LSM in practical UAV surveillance scenarios, we conducted additional qualitative comparisons with YOLOv5s under four challenging real-world conditions, as illustrated in Figure 7: (a) dark environments with extremely low contrast, (b) dense object distribution, (c) strong-light environments with overexposure, and (d) low-resolution input images. In Figure 7a, under the dark environment, YOLOv5s (middle row) misses several targets, while YOLO-LSM (bottom row) successfully detects small objects, as highlighted by the green bounding boxes. This demonstrates the effectiveness of our model under low-light conditions. In Figure 7b, under the dense object scenario, YOLO-LSM (bottom row) detects overlapping targets better than YOLOv5s (middle row), as highlighted by the white elliptical region. In Figure 7c, under strong-light conditions, YOLO-LSM performs similarly to YOLOv5s, indicating that the generalization ability of YOLO-LSM in overexposed environments is relatively limited. In Figure 7d, under extremely low-resolution conditions, YOLO-LSM (bottom row) detects targets that YOLOv5s (middle row) misses, demonstrating the strong robustness of YOLO-LSM in low-resolution scenarios.

Figure 7.

Qualitative comparisons between YOLOv5s and YOLO-LSM in four representative real-world UAV scenarios: (a) ultra-low contrast (dark environment), (b) dense object distributions, (c) high-light exposure, and (d) low-resolution input.

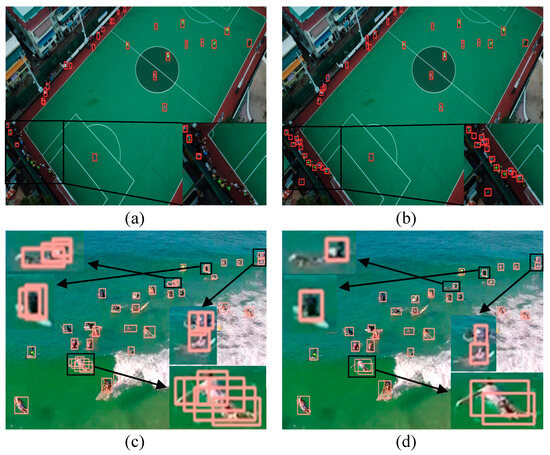

As shown in Figure 8, in the low-illumination scenes of the VisDrone2019 dataset, where features are blurry and boundaries are unclear, YOLO-LSM (Figure 8b) successfully detected targets missed by YOLOv5s (Figure 8a). The Efficient Small Target Detection Layer (ESTDL) proposed in this model detects small targets on the 160 × 160 feature map, reducing the missed detection rate for small targets. In the detection of tiny targets in Tiny Person, YOLOv5s (Figure 8c) produced false positives (black box areas), whereas YOLO-LSM (Figure 8d) locates the targets precisely, further validating the effectiveness of the algorithm. The key factor is the Focaler inner IoU loss function, which combines the focus of inner IoU on the core area of the bounding box with the focus of Focaler IoU on overlapping samples; this helps the model to better locate and extract features of dense, small targets, significantly reducing the false detection rate.

Figure 8.

Comparisons of detection results between YOLOv5s and YOLO-LSM. (a) Detection results of YOLOv5s in a low-contrast background. (b) Detection results of YOLO-LSM in a low-contrast background. (c) Detection results of YOLOv5s on dense and tiny targets. (d) Detection results of YOLO-LSM on dense and tiny targets.
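The 160 × 160 feature map mentioned above follows from the downsampling stride of the detection layer. The sketch below assumes a 640 × 640 network input (the common YOLOv5 default, not confirmed in this section) and a stride of 4 for the small-target layer, alongside the standard strides of 8, 16, and 32; the 12-pixel object width is a hypothetical example.

```python
from typing import Dict, Sequence


def feature_map_sizes(input_size: int, strides: Sequence[int]) -> Dict[int, int]:
    """Side length of the square feature map produced at each downsampling stride."""
    for stride in strides:
        if input_size % stride != 0:
            raise ValueError(f"input size {input_size} is not divisible by stride {stride}")
    return {stride: input_size // stride for stride in strides}


def cells_spanned(object_px: float, stride: int) -> float:
    """Approximate number of grid cells covered by an object of the given width."""
    return object_px / stride


# Assumed 640 x 640 input; stride 4 corresponds to the 160 x 160 map.
sizes = feature_map_sizes(640, [4, 8, 16, 32])
print(sizes)

# A hypothetical 12-pixel-wide target on the finest and coarsest maps.
print(cells_spanned(12, 4), cells_spanned(12, 32))
```

A target only a dozen pixels wide still spans several cells at stride 4 but falls within a fraction of one cell at stride 32, which is consistent with the lower miss rate reported for the shallow detection layer.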

Overall, based on the above results, YOLO-LSM is best suited to low-resolution scenarios, environments with densely distributed small objects, and situations with limited onboard computational resources.

4. Conclusions

In this paper, YOLO-LSM, a lightweight UAV target detection algorithm based on shallow and information learning, is proposed for UAV aerial small target detection tasks. The goal is to improve small target detection accuracy while reducing model complexity. Specifically, the Efficient Small Target Detection Layer (ESTDL) is introduced to improve shallow feature capture, along with improvements to the model’s backbone that reduce parameters. In the feature extraction stage, the Multiscale Lightweight Convolution (MLConv) is used to form a new bottleneck structure (MLCSP) that further improves feature representation with fewer parameters. To enhance bounding box regression, a Focaler inner IoU loss function is proposed to accelerate convergence and improve localization accuracy. Furthermore, a Deformable Attention Fast Spatial Pyramid Pooling (DFSPP) structure is designed to enhance multiscale feature fusion. The experimental results show that, compared with other methods, the YOLO-LSM detection model achieves better small target detection performance in complex backgrounds while reducing model size. Specifically, compared to the original YOLOv5s, YOLO-LSM reduces parameters by 72% and improves detection accuracy (mAP0.5) by 6.9 percentage points (VisDrone2019) and 3.5 percentage points (Tiny Person), demonstrating a good balance between accuracy and efficiency.

However, despite these improvements, YOLO-LSM still exhibits certain limitations. First, the inference speed remains a challenge under real-time deployment on resource-constrained UAV platforms. Additionally, the detection performance under strong lighting conditions is not yet optimal, which may affect robustness in high-illumination environments. In future work, we plan to further optimize the model structure, improve inference speed, and enhance robustness under varying lighting conditions to broaden the practical applicability of the method.

Author Contributions

Conceptualization, C.W., J.W., Y.G. and L.M.; methodology, C.W.; software, C.W., C.C. and F.X.; validation, C.W., J.W., Y.G. and L.M.; formal analysis, C.W.; investigation, C.W., C.C. and F.X.; resources, C.W. and J.W.; data curation, C.W.; writing—original draft preparation, C.W., J.W., L.M. and Y.G.; writing—review and editing, C.C. and F.X.; visualization, C.W.; supervision, C.W.; project administration, C.C. and F.X.; funding acquisition, C.C. and F.X. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the National Natural Science Foundation of China, grant number 62171361, and Xi’an Key Laboratory of Intelligent Detection and Perception, grant number 201805061ZD12CG45.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Please contact the author for data requests.

Conflicts of Interest

The authors declare no conflicts of interest.

References

1. Wang, Z.; Yang, S.; Qin, H.; Liu, Y.; Ding, J. CCW-YOLO: A Modified YOLOv5s Network for Pedestrian Detection in Complex Traffic Scenes. Information 2024, 15, 762.

2. Žigulić, N.; Glučina, M.; Lorencin, I.; Matika, D. Military Decision-Making Process Enhanced by Image Detection. Information 2024, 15, 11.

3. Vu, V.Q.; Tran, M.-Q.; Amer, M.; Khatiwada, M.; Ghoneim, S.S.M.; Elsisi, M. A Practical Hybrid IoT Architecture with Deep Learning Technique for Healthcare and Security Applications. Information 2023, 14, 379.

4. Saradopoulos, I.; Potamitis, I.; Rigakis, I.; Konstantaras, A.; Barbounakis, I.S. Image Augmentation Using Both Background Extraction and the SAHI Approach in the Context of Vision-Based Insect Localization and Counting. Information 2025, 16, 10.

5. Girshick, R.B.; Donahue, J.; Darrell, T.; Malik, J. Rich feature hierarchies for accurate object detection and semantic segmentation. In Proceedings of the 2014 IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 580–587.

6. Girshick, R.B. Fast R-CNN. In Proceedings of the 2015 IEEE International Conference on Computer Vision (ICCV), Santiago, Chile, 7–13 December 2015; pp. 1440–1448.

7. Ren, S.; He, K.; Girshick, R.B.; Sun, J. Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks. IEEE Trans. Pattern Anal. Mach. Intell. 2015, 39, 1137–1149.

8. Wang, A.; Chen, H.; Liu, L.; Chen, K.; Lin, Z.; Han, J.; Ding, G. YOLOv10: Real-Time End-to-End Object Detection. arXiv 2024, arXiv:2405.14458.

9. Wang, C.Y.; Yeh, I.H.; Liao, H.P. YOLOv9: Learning What You Want to Learn Using Programmable Gradient Information. arXiv 2024, arXiv:2402.13616.

10. Li, C.; Li, L.; Jiang, H.; Weng, K.; Geng, Y.; Li, L.; Ke, Z.; Li, Q.; Cheng, M.; Nie, W.; et al. YOLOv6: A Single-Stage Object Detection Framework for Industrial Applications. arXiv 2022, arXiv:2209.02976.

11. Redmon, J.; Divvala, S.K.; Girshick, R.B.; Farhadi, A. You Only Look Once: Unified, Real-Time Object Detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 7–12 June 2015; pp. 779–788.

12. Khanam, R.; Hussain, M. YOLOv11: An Overview of the Key Architectural Enhancements. arXiv 2024, arXiv:2410.17725.

13. Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.-Y.; Berg, A.C. SSD: Single Shot MultiBox Detector. In Proceedings of the Computer Vision–European Conference on Computer Vision, Amsterdam, The Netherlands, 11–14 October 2016; Springer: Cham, Switzerland, 2016; pp. 21–37.

14. Duan, K.; Bai, S.; Xie, L.; Qi, H.; Huang, Q.; Tian, Q. CenterNet: Keypoint Triplets for Object Detection. In Proceedings of the IEEE/CVF International Conference on Computer Vision Workshop (ICCVW), Seoul, Republic of Korea, 27 October–2 November 2019; pp. 6568–6577.

15. Yan, P.; Liu, Y.; Lyu, L.; Xu, X.; Song, B.; Wang, F. AIOD-YOLO: An algorithm for object detection in low-altitude aerial images. J. Electron. Imaging 2024, 33, 013023.

16. Zhang, Y.; Wu, C.; Guo, W.; Zhang, T.; Li, W. CFANet: Efficient Detection of UAV Image Based on Cross-Layer Feature Aggregation. IEEE Trans. Geosci. Remote Sens. 2023, 61, 1–11.

17. Min, L.; Fan, Z.; Lv, Q.; Reda, M.; Shen, L.; Wang, B. YOLO-DCTI: Small Object Detection in Remote Sensing Base on Contextual Transformer Enhancement. Remote Sens. 2023, 15, 3970.

18. Li, J.; Zhang, Y.; Liu, H.; Guo, J.; Liu, L.; Gu, J.; Deng, L.; Li, S. A novel small object detection algorithm for UAVs based on YOLOv5. Phys. Scr. 2024, 99, 036001.

19. Li, J.; Liang, X.; Wei, Y.; Xu, T.; Feng, J.; Yan, S. Perceptual Generative Adversarial Networks for Small Object Detection. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 1951–1959.

20. Ma, M.; Pang, H. SP-YOLOv8s: An Improved YOLOv8s Model for Remote Sensing Image Tiny Object Detection. Appl. Sci. 2023, 13, 8161.

21. Xie, S.; Zhou, M.; Wang, C.; Huang, S. CSPPartial-YOLO: A Lightweight YOLO-Based Method for Typical Objects Detection in Remote Sensing Images. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2024, 17, 388–399.

22. Qin, D.; Leichner, C.; Delakis, M.; Fornoni, M.; Luo, S.; Yang, F.; Wang, W.; Banbury, C.R.; Ye, C.; Akin, B.; et al. MobileNetV4: Universal Models for the Mobile Ecosystem. In Proceedings of the European Conference on Computer Vision, Milan, Italy, 29 September–4 October 2024; pp. 78–96.

23. Han, K.; Wang, Y.; Tian, Q.; Guo, J.; Xu, C.; Xu, C. GhostNet: More Features From Cheap Operations. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020; pp. 1577–1586.

24. Cai, Z.; Shen, Q.J.A. FalconNet: Factorization for the Light-weight ConvNets. arXiv 2023, arXiv:2306.06365.

25. Hu, M.; Li, Z.; Yu, J.; Wan, X.; Tan, H.; Lin, Z. Efficient-Lightweight YOLO: Improving Small Object Detection in YOLO for Aerial Images. Sensors 2023, 23, 6423.

26. Yu, Z.; Guan, Q.; Yang, J.; Yang, Z.; Zhou, Q.; Chen, Y.; Chen, F. LSM-YOLO: A Compact and Effective ROI Detector for Medical Detection. arXiv 2024, arXiv:2408.14087.

27. Zhu, P.; Du, D.; Wen, L.; Bian, X.; Ling, H.; Hu, Q.; Peng, T.; Zheng, J.; Wang, X.; Zhang, Y.; et al. VisDrone-DET2019: The Vision Meets Drone Object Detection in Image Challenge Results. In Proceedings of the IEEE/CVF International Conference on Computer Vision Workshop (ICCVW), Seoul, Republic of Korea, 27–28 October 2019; pp. 213–226.

28. Yu, X.; Gong, Y.; Jiang, N.; Ye, Q.; Han, Z. Scale Match for Tiny Person Detection. In Proceedings of the 2020 IEEE Winter Conference on Applications of Computer Vision, Snowmass, CO, USA, 1–5 March 2020; pp. 1246–1254.

29. Everingham, M.; Van Gool, L.; Williams, C.K.I.; Winn, J.; Zisserman, A. The Pascal Visual Object Classes (VOC) Challenge. Int. J. Comput. Vis. 2010, 88, 303–338.

30. Zhang, R.; Shao, Z.; Huang, X.; Wang, J.; Li, D. Object Detection in UAV Images via Global Density Fused Convolutional Network. Remote Sens. 2020, 12, 3140.

31. Dang, C.; Wang, Z.; He, Y.; Wang, L.; Cai, Y.; Shi, H.; Jiang, J. The Accelerated Inference of a Novel Optimized YOLOv5-LITE on Low-Power Devices for Railway Track Damage Detection. IEEE Access 2023, 11, 134846–134865.

32. Wang, C.-Y.; Bochkovskiy, A.; Liao, H.-Y.M. YOLOv7: Trainable Bag-of-Freebies Sets New State-of-the-Art for Real-Time Object Detectors. In Proceedings of the 2023 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Vancouver, BC, Canada, 17–24 June 2023; pp. 7464–7475.

33. Varghese, R.; M, S. YOLOv8: A Novel Object Detection Algorithm with Enhanced Performance and Robustness. In Proceedings of the 2024 International Conference on Advances in Data Engineering and Intelligent Computing Systems (ADICS), Chennai, India, 18–19 April 2024; pp. 1–6.

34. Lin, T.-Y.; Goyal, P.; Girshick, R.B.; He, K.; Dollár, P. Focal Loss for Dense Object Detection. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 42, 318–327.

35. Bi, L.; Deng, L.; Lou, H.; Zhang, H.; Lin, S.; Liu, X.; Wan, D.; Dong, J.; Liu, H. URS-YOLOv5s: Object detection algorithm for UAV remote sensing images. Phys. Scr. 2024, 99, 086005.

36. Xue, C.; Xia, Y.; Wu, M.; Chen, Z.; Cheng, F.; Yun, L. EL-YOLO: An efficient and lightweight low-altitude aerial objects detector for onboard applications. Expert Syst. Appl. 2024, 256, 124848.

37. Gao, S.; Gao, M.; Wei, Z. MCF-YOLOv5: A Small Target Detection Algorithm Based on Multi-Scale Feature Fusion Improved YOLOv5. Information 2024, 15, 285.

38. Lou, H.; Liu, X.; Bi, L.; Liu, H.; Guo, J. BD-YOLO: Detection algorithm for high-resolution remote sensing images. Phys. Scr. 2024, 99, 066003.

39. Chen, Y.; Jin, Y.; Wei, Y.; Hu, W.; Zhang, Z.; Wang, C.; Jiao, X. LIS-DETR: Small target detection transformer for autonomous driving based on learned inverted residual cascaded group. J. Electron. Imaging 2025, 34, 013003.

40. Han, Z.; Jia, D.; Zhang, L.; Li, J.; Cheng, P. FNI-DETR: Real-time DETR with far and near feature interaction for small object detection. Eng. Res. Express 2025, 7, 015204.

41. Xu, S.; Zhang, M.; Chen, J.; Zhong, Y. YOLO-HyperVision: A vision transformer backbone-based enhancement of YOLOv5 for detection of dynamic traffic information. Egypt. Inform. J. 2024, 27, 100523.

42. Chen, J.; Kao, S.-h.; He, H.; Zhuo, W.; Wen, S.; Lee, C.-H.; Chan, S.-H.G. Run, Don’t Walk: Chasing Higher FLOPS for Faster Neural Networks. In Proceedings of the IEEE/CVF Conference on Computer Vision Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 12021–12031.

43. Li, J.; Wen, Y.; He, L. SCConv: Spatial and Channel Reconstruction Convolution for Feature Redundancy. In Proceedings of the IEEE/CVF Conference on Computer Vision Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 6153–6162.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).