Abstract

Large language models (LLMs) have rapidly advanced natural language processing, showcasing remarkable effectiveness as automated annotators across various applications. Despite their potential to significantly reduce annotation costs and expedite workflows, annotations produced solely by LLMs can suffer from inaccuracies and inherent biases, highlighting the necessity of maintaining human oversight. In this article, we present a synergistic human–LLM collaboration approach for data annotation enhancement (SynCode). This framework is designed explicitly to facilitate collaboration between humans and LLMs for annotating complex, code-centric datasets such as Stack Overflow. The proposed approach involves an integrated pipeline that initially employs TF-IDF analysis for quick identification of relevant textual elements. Subsequently, we leverage advanced transformer-based models, specifically NLP Transformer and UniXcoder, to capture nuanced semantic contexts and code structures, generating more accurate preliminary annotations. Human annotators then engage in iterative refinement, validating and adjusting annotations to enhance accuracy and mitigate biases introduced during automated labeling. To operationalize this workflow, we developed the SynCode prototype, featuring an interactive graphical interface that supports real-time collaborative annotation between humans and LLMs and lets annotators iteratively refine and validate automated suggestions. Our integrated human–LLM collaborative methodology demonstrates considerable promise in achieving high-quality, reliable annotations, particularly for domain-specific and technically demanding datasets, thereby enhancing downstream tasks in software engineering and natural language processing.

1. Introduction

Large language models (LLMs) [1,2,3,4,5] have significantly transformed the landscape of natural language processing (NLP) [6], demonstrating exceptional capabilities in generating contextually relevant, coherent, and fluent text across a broad spectrum of applications. This remarkable advancement has been driven by the sophisticated design and substantial computational power underlying contemporary transformer architectures. Initially, the primary applications of LLMs focused on traditional NLP tasks, such as text generation, summarization, and question-answering systems. However, recent developments have expanded their utility into the specialized domain of software engineering, particularly in the context of code representation learning. Here, their ability to understand and manipulate complex semantic structures has significantly improved performance across diverse software engineering tasks, including automated code completion, bug detection, code summarization, and the generation of documentation [7,8,9,10,11].

In parallel with their expanding capabilities in code comprehension, LLMs have emerged as highly effective automated annotators. This capability derives from their intrinsic ability to accurately interpret linguistic context and semantics, enabling them to generate reliable annotations rapidly. Such annotations substantially streamline the data preparation phase, significantly reducing the time and financial costs traditionally associated with manual data labeling. Moreover, by facilitating the rapid creation of large-scale annotated datasets, LLMs enhance the robustness and efficacy of training pipelines for machine learning models, further extending their impact on both academic research and practical applications [12].

Despite these substantial benefits, reliance on fully automated LLM-based annotation presents several notable challenges. Empirical research reveals considerable variability and inconsistency in annotation quality across different tasks, datasets, and domains [13]. Specifically, issues such as inherent biases, inaccuracies resulting from systematic over- or under-prediction of specific labels, and the limited ability of models to generalize effectively to specialized, emerging, or niche domains significantly hinder their effectiveness. For instance, in tasks involving nuanced categorization, such as hate speech detection or bot detection, the precision and context-awareness provided by human judgment are indispensable. These challenges underscore the critical importance of continued human oversight to ensure annotations are accurate, reliable, unbiased, and contextually appropriate.

To address these significant challenges, we propose synergistic human–LLM collaboration for data annotation enhancement (SynCode), an innovative annotation framework specifically designed to integrate the complementary strengths of human annotators and automated LLM annotation. SynCode explicitly targets the annotation of technically intricate, code-centric datasets typified by large-scale online knowledge-sharing platforms like Stack Overflow. Within such environments, accurately connecting concise question titles, which succinctly describe specific programming problems, to relevant and effective code snippets within answers is a complex yet critical task. A simple illustrative title is “How do I merge two dictionaries in Python (https://www.python.org/)?”; real-world questions are often much more complex and varied in structure. Typically, the direct and explicit semantic linkage between questions and optimal solutions is absent, complicating automated retrieval and annotation processes.

We address these challenges through a structured, multi-level annotation pipeline designed to incrementally refine annotation quality and ensure optimal accuracy. Initially, SynCode employs a fast and efficient TF-IDF-based lexical analysis (AnnoTF) for preliminary identification of relevant textual and code elements using keyword frequency. This serves as an effective, computationally inexpensive filter, rapidly narrowing down large corpora to manageable subsets. Subsequently, the framework leverages advanced transformer-based NLP models (AnnoNL) capable of encoding textual information into high-dimensional semantic embeddings. These embeddings provide the framework with nuanced understanding far beyond simple keyword matching, effectively capturing subtle contextual and semantic relationships between question titles and potential solutions.

To further deepen the semantic annotation process, SynCode incorporates UniXcoder (AnnoCode), an advanced large language model specifically trained on vast repositories of programming language data and associated natural language descriptions. UniXcoder’s unique strengths lie in its ability to interpret complex syntactic structures in programming languages alongside natural language semantics, ensuring a high degree of semantic alignment and contextual accuracy between the questions posed and the associated code snippets identified.

In a practical, real-world scenario, SynCode can be utilized by software development teams and educational institutions to efficiently annotate and categorize code snippets within extensive knowledge-sharing platforms like Stack Overflow. For instance, software developers encountering a common programming problem could rapidly identify highly relevant, accurately annotated code snippets that effectively solve their issues. Similarly, instructors in programming courses can leverage SynCode to curate accurately annotated code examples tailored to specific programming concepts or assignments. This facilitates improved learning outcomes by providing students with clearly labeled, relevant examples, thereby enhancing their understanding of coding best practices, syntax, and common programming patterns. Additionally, instructors can create targeted datasets for assessments and projects, significantly enriching students’ hands-on coding experiences and practical knowledge.

Complementing this methodological core, SynCode addresses practical challenges posed by large-scale collaborative annotation processes. Recognizing the diverse working conditions and requirements of annotators, we have developed multiple intuitive and flexible user interfaces. These include a web-based prototype for convenient, browser-based interaction, an iOS-compatible mobile application optimized for annotators who require mobility and flexibility, and a standalone desktop application that supports offline annotation tasks, thereby increasing accessibility and efficiency. Thus, our framework effectively combines the precision, contextual sensitivity, and oversight provided by human annotators with the efficiency, scalability, and semantic sophistication offered by LLMs. This synergistic human–LLM collaboration significantly improves annotation reliability, accuracy, and efficiency, especially for domain-specific and technically demanding annotation scenarios. Consequently, SynCode offers substantial potential for enhancing downstream tasks in software engineering and NLP, thereby facilitating more robust, accurate, and reliable model training and application outcomes. The contributions of our work are highlighted as follows:

- We present a structured annotation pipeline combining lexical filtering, semantic analysis using advanced transformer models, and iterative human validation.

- Our approach supports multi-platform collaboration, providing user-friendly interfaces across desktop, mobile, and web applications. This infrastructure includes robust synchronization mechanisms, ensuring real-time, seamless collaborative annotation while effectively handling potential conflicts and concurrent edits.

- Our framework strategically applies both lexical and semantic relevance checks, significantly reducing irrelevant or minimally relevant data entries. The detailed case study clearly demonstrates how SynCode effectively isolates high-quality, contextually relevant Stack Overflow post-code pairs, improving both the annotation efficiency and the overall dataset quality for subsequent machine learning applications.

This paper is an extended version of our paper published in SERA 2025 [14], with additional implementation details, further experiments, and expanded discussion of the evaluation. We briefly outline the structure of the remainder of this paper. Section 2 reviews recent advancements in LLM-based annotation, human–AI collaboration, and hybrid annotation frameworks, establishing the context and motivation for our approach. Section 3 introduces our framework, detailing its multi-stage annotation pipeline that integrates lexical filtering, semantic modeling, and iterative human refinement. Section 4 presents a comprehensive case study of our approach, highlighting its system architecture, synchronization strategies, and collaborative annotation workflow across multiple platforms. Section 5 discusses potential threats to the validity of our findings, such as dataset limitations, model dependencies, and annotator subjectivity, along with proposed mitigation strategies. Section 6 outlines avenues for future work, including model fine-tuning, usability enhancements, and broader domain adaptation. Finally, Section 7 concludes with a summary of our contributions and their implications for scalable, human-in-the-loop data annotation.

2. Related Work

The recent proliferation of LLMs has dramatically influenced various domains within machine learning and natural language processing. Their exceptional capabilities in generating fluent, contextually relevant content have opened new opportunities for data annotation and decision-making tasks. Despite these advancements, the practical adoption of LLMs faces significant challenges, primarily related to reliability, transparency, and interpretability. Understanding these limitations is crucial for developing effective collaborative frameworks that leverage both human and AI strengths to enhance overall system performance.

2.1. Reliability and Limitations of LLMs

LLMs have exhibited notable proficiency in producing clear and coherent explanations supporting assigned data labels [15,16], frequently surpassing human-generated rationales in perceived quality and coherence [17]. Beyond simply providing justifications for existing labels, self-rationalizing models extend their utility by simultaneously generating both new predictions and associated explanatory narratives. Recent research [18,19,20,21] has extensively explored the self-explanatory capabilities of LLMs across diverse tasks such as classification and question-answering. However, significant challenges persist regarding the fidelity and authenticity of these model-generated explanations. Studies [22] indicate that explanations provided through chain-of-thought methods can inadvertently be influenced by contextual biases, raising concerns about their reliability in faithfully reflecting the models’ decision-making processes. Our approach addresses these limitations by integrating human annotators into the LLM explanation workflow, enabling iterative refinement and validation of the generated rationales. This human–LLM collaborative process significantly enhances the accuracy, reliability, and interpretability of model-generated explanations, effectively mitigating biases and ensuring alignment with the underlying decision-making mechanisms.

2.2. Human–LLM Collaboration

Recent advancements in LLMs, including prominent examples such as OpenAI’s GPT series [23,24], Google’s PaLM [25], and Meta’s LLaMA [26], have significantly expanded their capabilities across diverse natural language processing tasks. These breakthroughs have spurred interest in leveraging LLMs for data annotation tasks, traditionally performed by human annotators. Approaches have emerged where LLMs either augment human-generated annotations [12,27] or replace them entirely to expedite data labeling processes [13,28]. However, despite their impressive abilities, LLMs exhibit notable limitations in accuracy [29], especially in specialized domains or nuanced tasks. Issues persist, such as the inability to consistently comprehend complex domain-specific knowledge or accurately interpret context-sensitive content [13]. Moreover, annotations produced solely by LLMs can lack transparency, potentially embedding biases and inaccuracies that undermine the trustworthiness and ethical integrity of annotated datasets [29,30]. Acknowledging these challenges, our approach presents a systematic framework designed explicitly for effective collaboration between human annotators and LLMs. Rather than replacing human annotation efforts, our approach strategically integrates human oversight and iterative refinement directly into the annotation pipeline. This collaborative synergy allows LLMs to rapidly generate preliminary annotations and explanatory rationales, which human annotators subsequently review and refine, thereby significantly enhancing annotation accuracy and interpretability.

2.3. Human–LLM Collaboration for Data Annotation

Rapid progress in machine learning (ML) critically depends on high-quality annotated datasets, yet the manual labeling process remains costly and labor-intensive. To mitigate this, combining automated annotation with targeted human oversight is increasingly recognized as an efficient strategy. In such hybrid annotation processes, initial labels are generated automatically by ML systems and subsequently reviewed and corrected by human annotators [31]. Accurate initial annotations have been shown to significantly reduce manual annotation effort, enhancing both productivity and labeling consistency [32,33,34]. However, the effectiveness of automated annotation hinges on the quality of the initial labeling: inaccuracies at this stage can negate efficiency gains and degrade dataset quality [35,36]. Thus, determining which data instances require human oversight becomes crucial. To achieve optimal annotation efficiency, active learning strategies [37] have emerged as a powerful approach, selectively identifying the most informative data points for human review, thereby optimizing resource allocation and annotation accuracy.

Our proposed method, SynCode, advances this hybrid annotation paradigm by integrating active learning with a synergistic collaboration between human annotators and LLMs. Unlike conventional approaches, SynCode incorporates iterative human–LLM interactions, enabling continuous refinement of initial annotations and explanations generated by the LLM. Through this collaborative feedback loop, SynCode not only improves annotation accuracy and reduces biases but also provides a clear rationale behind labeling decisions, enhancing the interpretability and reliability of annotations. Annotation tools traditionally vary widely across domains, including text classification [34,38], image processing [39], clinical data [36,40,41], and geospatial applications [42]. SynCode extends this versatility by providing user-friendly, cross-platform annotation interfaces, facilitating seamless human–LLM interaction across diverse tasks. By bridging advanced computational capabilities with human expertise, SynCode represents a significant advancement in annotation practices, offering both practical efficiency and methodological rigor in modern ML workflows.

2.4. Factors Influencing Human–AI Collaborative Performance

In human–AI collaborative systems, the effective integration of AI-driven decisions significantly influences overall task performance and decision accuracy. Prior research highlights several critical factors that determine how effectively human users interact with AI-generated recommendations. Among these, the transparency and interpretability of AI model predictions emerge as essential elements influencing human trust and adoption rates [43,44,45]. A primary factor is the accuracy of AI predictions [46,47]. When users perceive or directly observe higher model accuracy, they demonstrate increased reliance on AI recommendations, leading to improved outcomes [45,48,49]. Furthermore, clearly communicating model uncertainty or confidence levels enhances users’ trust, allowing them to make informed judgments about whether to accept or override AI suggestions. This transparency reduces instances of over-reliance or mistrust, balancing effective collaboration between humans and AI systems. Building upon these insights, our framework incorporates transparency and iterative human feedback directly into its annotation process. By integrating explicit model confidence indicators and clear, context-rich rationales generated by LLMs, SynCode helps annotators make more informed decisions regarding AI-generated labels. Additionally, SynCode supports iterative interaction, allowing annotators to continuously refine and improve AI suggestions based on real-time human feedback. This structured interaction ensures sustained improvement in annotation accuracy, consistency, and interpretability, directly addressing the challenges highlighted in existing studies. Through this deliberate and iterative approach, SynCode not only leverages proven factors such as transparency and model accuracy but also enhances the collaborative synergy between humans and AI. 
The result is a robust annotation ecosystem characterized by high reliability, reduced biases, and greater human confidence in AI-supported tasks.

3. Approach

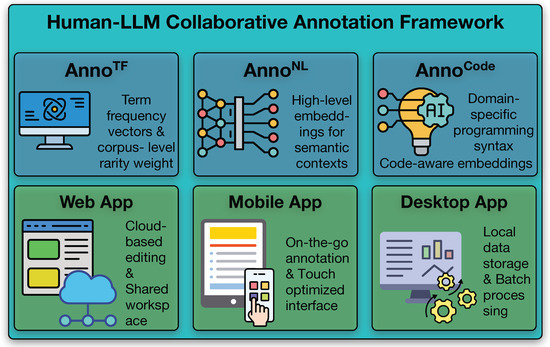

In Figure 1, we outline our framework integrating AnnoTF, AnnoNL, AnnoCode, and human verification for high-quality annotations. A Google Cloud-based synchronization system enables seamless cross-device collaboration.

Figure 1. Overview of the human–LLM collaborative annotation framework. This diagram showcases three primary annotation engines, employing distinct approaches to evaluate the alignment between question titles and code snippets. AnnoTF leverages token frequency analysis with corpus-wide rarity adjustments, AnnoNL applies high-level semantic representations, and AnnoCode utilizes embeddings tailored for understanding code. The framework supports collaborative annotation across multiple platforms.

3.1. Data Annotation via NLP Transformer

Traditional BERT-based similarity methods require encoding sentence pairs jointly, leading to quadratic computational complexity when performing large-scale comparisons. This inefficiency makes them impractical for large-scale annotation tasks, where millions of comparisons may be needed. In contrast, Sentence-BERT (SBERT) [50] encodes each sentence independently, generating fixed-size vector representations. For a collection of $n$ sentences, this architecture reduces the number of transformer forward passes from $O(n^2)$ to $O(n)$, making it well-suited for large-scale semantic similarity tasks, such as data annotation for Stack Overflow.
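To make the gap concrete, the following minimal sketch counts transformer forward passes under two simplifying assumptions of ours: the joint (cross-encoder) approach scores every unordered pair in a collection of n sentences, while the independent (bi-encoder) approach encodes each sentence once and compares cached vectors afterwards. The function names are hypothetical.

```python
def cross_encoder_passes(n: int) -> int:
    """Forward passes when every unordered sentence pair is encoded jointly."""
    return n * (n - 1) // 2


def bi_encoder_passes(n: int) -> int:
    """Forward passes when each sentence is encoded once and its vector reused."""
    return n


if __name__ == "__main__":
    for n in (100, 10_000):
        print(n, cross_encoder_passes(n), bi_encoder_passes(n))
```

For 10,000 sentences this is roughly 50 million joint passes versus 10,000 independent encodings. The remaining vector comparisons are still pairwise, but each is a cheap dot product rather than a full model inference.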

Building on this efficiency, AnnoNL leverages sentence transformers, specifically all-MiniLM-L6-v2, to compute the semantic similarity between question titles ($q$) and code snippets ($c$) in Stack Overflow datasets. The all-MiniLM-L6-v2 model is based on Microsoft’s MiniLM (minimally distilled language model) and has been fine-tuned on a diverse dataset of over one billion sentence pairs using a self-supervised contrastive learning objective. It consists of six transformer layers, making it computationally efficient while maintaining strong semantic representations. By adopting SBERT’s Siamese network architecture, AnnoNL enables direct cosine similarity computation between independently encoded embeddings. Unlike conventional BERT models, which require pairwise encoding and introduce significant computational overhead, our method removes the need for exhaustive joint encoding of every pair, enabling real-time annotation that is both scalable and efficient.
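The encode-independently-then-compare pattern can be sketched as follows. This is an illustration rather than the AnnoNL implementation: `rank_snippets` and `toy_encode` are hypothetical names, and the letter-count encoder is only a stand-in so the example runs without downloading a model.

```python
import math
from typing import Callable, Sequence

Vector = Sequence[float]


def cosine(u: Vector, v: Vector) -> float:
    """Cosine similarity; returns 0.0 if either vector has zero norm."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0.0 or norm_v == 0.0:
        return 0.0
    return dot / (norm_u * norm_v)


def rank_snippets(title: str, snippets: Sequence[str],
                  encode: Callable[[str], Vector]) -> list[tuple[float, str]]:
    """Encode the title and each snippet independently, then sort by similarity."""
    title_vec = encode(title)
    scored = [(cosine(title_vec, encode(s)), s) for s in snippets]
    return sorted(scored, key=lambda pair: pair[0], reverse=True)


def toy_encode(text: str) -> list[float]:
    """Stand-in encoder (letter counts); NOT a semantic model."""
    counts = [0.0] * 26
    for ch in text.lower():
        if "a" <= ch <= "z":
            counts[ord(ch) - ord("a")] += 1.0
    return counts
```

In a real setting, `encode` would presumably wrap a sentence-transformer, for instance `SentenceTransformer("all-MiniLM-L6-v2").encode` from the `sentence_transformers` package. Because each text is encoded only once, snippet embeddings can be cached and reused across many titles.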

While TF-IDF is simpler and faster to apply, making it an ideal initial filter for large-scale annotation pipelines, it relies on a bag-of-words (BoW) model that does not consider word order or semantic nuances, and it also suffers from the curse of dimensionality due to its large, vocabulary-dependent feature space. By contrast, SBERT provides a context-aware representation of sentences, mapping them into a dense, fixed-size embedding space that preserves semantic relationships while reducing dimensionality. Consequently, after using TF-IDF to quickly isolate relevant entries from a potentially massive corpus, we apply SBERT to capture deeper semantic alignments. This two-tiered approach balances efficiency and quality, making it especially suitable for annotating large-scale Stack Overflow datasets.
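The lexical first tier can be illustrated with a small, dependency-free TF-IDF filter. It is a sketch under our own assumptions and not the AnnoTF implementation: the tokenizer, the smoothed IDF formula (the same smoothing scikit-learn applies by default), the `threshold` parameter, and all function names are illustrative choices.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lowercase and keep runs of letters, digits, and underscores."""
    return re.findall(r"[a-z0-9_]+", text.lower())


def tfidf_vectors(docs: list[str]) -> list[dict[str, float]]:
    """Sparse TF-IDF vectors with smoothed IDF: log((1 + N) / (1 + df)) + 1."""
    tokenized = [tokenize(d) for d in docs]
    n_docs = len(tokenized)
    doc_freq = Counter(term for toks in tokenized for term in set(toks))
    vectors = []
    for toks in tokenized:
        counts = Counter(toks)
        vectors.append({
            term: count * (math.log((1 + n_docs) / (1 + doc_freq[term])) + 1.0)
            for term, count in counts.items()
        })
    return vectors


def sparse_cosine(u: dict[str, float], v: dict[str, float]) -> float:
    """Cosine similarity between two sparse vectors stored as dicts."""
    dot = sum(w * v[t] for t, w in u.items() if t in v)
    norm_u = math.sqrt(sum(w * w for w in u.values()))
    norm_v = math.sqrt(sum(w * w for w in v.values()))
    return dot / (norm_u * norm_v) if norm_u and norm_v else 0.0


def lexical_filter(title: str, snippets: list[str], threshold: float) -> list[int]:
    """Indices of snippets whose TF-IDF cosine with the title meets the threshold."""
    vectors = tfidf_vectors([title] + snippets)
    title_vec, snippet_vecs = vectors[0], vectors[1:]
    return [i for i, vec in enumerate(snippet_vecs)
            if sparse_cosine(title_vec, vec) >= threshold]
```

Only the indices returned by `lexical_filter` would then be passed to the more expensive embedding-based stage. Note that a snippet sharing no tokens with the title scores zero here regardless of meaning, which is exactly the bag-of-words limitation the semantic tier is meant to offset.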

3.2. Data Annotation via UniXcoder

AnnoCode builds upon UniXcoder [51], a transformer-based model pre-trained to effectively support code understanding and generation tasks. Unlike traditional transformer models such as BERT/RoBERTa [1], UniXcoder integrates multi-modal data, including abstract syntax trees (AST) and code comments, into its training process to enhance semantic and structural code comprehension. To achieve this, UniXcoder introduces multiple specialized objectives: (1) masked language modeling, where tokens are randomly hidden, prompting the model to recover their original form based on context; (2) unidirectional language modeling, optimized for autoregressive scenarios like code completion by predicting tokens sequentially; (3) a denoising objective, aimed at reconstructing code sequences from partially corrupted spans, facilitating generation tasks. Additionally, UniXcoder incorporates multi-modal contrastive learning to enhance the semantic understanding of code snippets and cross-modal generation to align representations across different programming languages by generating descriptive code comments.

UniXcoder is pre-trained jointly on millions of NL-PL pairs and unimodal code examples sourced from code repositories (CodeSearchNet [52]). Through this diverse pre-training corpus, UniXcoder learns semantic-aware representations that bridge natural language and programming constructs. Empirical evaluations have highlighted UniXcoder’s effectiveness across multiple code-centric tasks [51]. The model generates representations via multiple transformer layers, producing contextual token-level embeddings capturing both local syntax and global semantic relationships. For obtaining sequence-level representations, UniXcoder aggregates token embeddings, typically using mean pooling, to create compact semantic vectors suitable for downstream classification, retrieval, or similarity-based tasks. This strategy facilitates effective semantic understanding across programming and natural language contexts.
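Mean pooling, mentioned above as a typical way to obtain a sequence-level vector, can be sketched in a few lines. The attention-mask convention (1 for real tokens, 0 for padding) is an assumption borrowed from common transformer tooling, and `mean_pool` is a hypothetical helper, not part of UniXcoder.

```python
from typing import Sequence


def mean_pool(token_embeddings: Sequence[Sequence[float]],
              attention_mask: Sequence[int]) -> list[float]:
    """Average token vectors, skipping positions whose mask is 0 (padding)."""
    if len(token_embeddings) != len(attention_mask):
        raise ValueError("one mask entry is required per token embedding")
    kept = [vec for vec, keep in zip(token_embeddings, attention_mask) if keep]
    if not kept:
        raise ValueError("at least one unmasked token is required")
    dim = len(kept[0])
    return [sum(vec[i] for vec in kept) / len(kept) for i in range(dim)]
```

For example, pooling the token vectors `[1.0, 2.0]` and `[3.0, 4.0]` while masking out a third padding vector yields `[2.0, 3.0]`.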

In AnnoCode, the pretrained UniXcoder model from Hugging Face (the microsoft/unixcoder-base checkpoint) is employed for similarity scoring between question titles ($q$) and code snippets ($c$) on Stack Overflow.

Specifically, for tokenization, both the text input ($q$) and the code snippet ($c$) are split into subwords, following the BPE/WordPiece-based tokenizer used by UniXcoder.

For encoding, UniXcoder processes $\tilde{q}$ (the tokenized title) and $\tilde{c}$ (the tokenized snippet) separately, each yielding a single sequence-level embedding (the [CLS]-based vector) that captures the content’s overall meaning.

Let $\mathbf{e}_q$ and $\mathbf{e}_c$ denote the final [CLS]-driven vectors. For cosine similarity, the closeness of the two embeddings is measured by the following:

$$\operatorname{sim}(q, c) = \cos(\mathbf{e}_q, \mathbf{e}_c) = \frac{\mathbf{e}_q \cdot \mathbf{e}_c}{\lVert \mathbf{e}_q \rVert \, \lVert \mathbf{e}_c \rVert}$$

This score lies in $[-1, 1]$ but often falls in $[0, 1]$ for semantically related text/code pairs. Higher values suggest greater alignment between $q$ and $c$.
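As a worked illustration of the cosine score, consider two made-up three-dimensional vectors. Real UniXcoder embeddings have far more dimensions, and the symbols $\mathbf{e}_q$ and $\mathbf{e}_c$ are notation assumed here for the title and snippet embeddings.

```latex
\begin{aligned}
\mathbf{e}_q &= (1, 2, 2), \qquad \mathbf{e}_c = (2, 1, 2) \\
\mathbf{e}_q \cdot \mathbf{e}_c &= 1 \cdot 2 + 2 \cdot 1 + 2 \cdot 2 = 8 \\
\lVert \mathbf{e}_q \rVert &= \sqrt{1 + 4 + 4} = 3, \qquad
\lVert \mathbf{e}_c \rVert = \sqrt{4 + 1 + 4} = 3 \\
\cos(\mathbf{e}_q, \mathbf{e}_c) &= \frac{8}{3 \cdot 3} = \frac{8}{9} \approx 0.889
\end{aligned}
```

A value this close to 1 would indicate strong alignment. Orthogonal vectors would score 0, and vectors pointing in opposite directions would score -1.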

Since UniXcoder was pre-trained on large-scale pairs of natural language (NL) and programming language (PL), it naturally aligns these two modalities in a shared embedding space, enabling SynCode to generate robust similarity scores [51]. In its current design, SynCode relies on UniXcoder’s out-of-the-box embeddings without introducing additional domain adaptation or structural modeling. Going forward, we plan to apply continued pre-training or domain-adaptive fine-tuning if SynCode must handle specialized domains such as library-specific APIs or security-critical coding styles. Since transformer-based code embedding models like UniXcoder consider code as a linear sequence of tokens (or a flattened AST), they fall short of representing deeper structural code semantics. We are inspired by GraphCodeBERT [7], which demonstrates the value of incorporating data-flow relations and the inherent structure of code. To address the limitations of current embeddings, we plan to enhance UniXcoder by integrating both AST-based representations and structural relationships derived from control and data flow analysis. This integration will enable the model to gain explicit knowledge of relationships, such as execution order and variable dependencies, that are often only implicit or entirely missed in traditional sequence-based representations.

A central component of our research is a multi-step, human–LLM collaborative workflow designed specifically to annotate Stack Overflow datasets. Initially, we leverage SBERT to quickly capture semantic similarities at the sentence level, filtering out irrelevant or less promising question–snippet pairs based on general semantic relevance. In the subsequent step, we apply UniXcoder, a transformer model specialized in programming language comprehension, to generate code-aware embeddings. These embeddings more precisely evaluate the semantic alignment between Stack Overflow question titles and the corresponding code snippets. Finally, human annotators validate and refine the automated labels, ensuring correctness, mitigating model biases, and further improving dataset reliability. By integrating sentence-level semantics (via AnnoNL), fine-grained code-specific insights (via AnnoCode), and expert human judgment, our approach produces consistently high-quality annotated datasets, thus enhancing the effectiveness and accuracy of LLMs for downstream, code-focused applications.
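The staged workflow can be sketched as a cascade in which each pair must pass the sentence-level stage before the code-aware stage is run, and survivors are queued for human validation. The thresholds `nl_min` and `code_min`, the status strings, and the scorer callables are all hypothetical; the paper does not specify cut-off values.

```python
from dataclasses import dataclass
from typing import Callable

Scorer = Callable[[str, str], float]


@dataclass
class Candidate:
    title: str
    snippet: str
    nl_score: float = 0.0
    code_score: float = 0.0
    status: str = "pending"  # becomes "rejected" or "needs_review"


def run_cascade(pairs: list[tuple[str, str]], nl_scorer: Scorer,
                code_scorer: Scorer, nl_min: float,
                code_min: float) -> list[Candidate]:
    """Score pairs stage by stage; survivors are queued for human review."""
    results = []
    for title, snippet in pairs:
        cand = Candidate(title, snippet)
        cand.nl_score = nl_scorer(title, snippet)
        if cand.nl_score < nl_min:
            cand.status = "rejected"  # dropped by the sentence-level stage
        else:
            # The costlier code-aware scorer only runs on surviving pairs.
            cand.code_score = code_scorer(title, snippet)
            cand.status = ("needs_review" if cand.code_score >= code_min
                           else "rejected")
        results.append(cand)
    return results
```

Keeping both scores on each candidate lets a human reviewer see why a pair was surfaced, and the automated label remains provisional until an annotator confirms or corrects it.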

4. Case Study

To demonstrate the practical viability and effectiveness of the proposed framework, we present a detailed case study grounded in real-world implementation. This case study focuses on the architectural foundations and collaborative workflow that underpin SynCode, highlighting the technical design choices, synchronization mechanisms, and cross-platform coordination strategies that enable scalable, reliable, and context-aware annotation in complex software engineering environments.

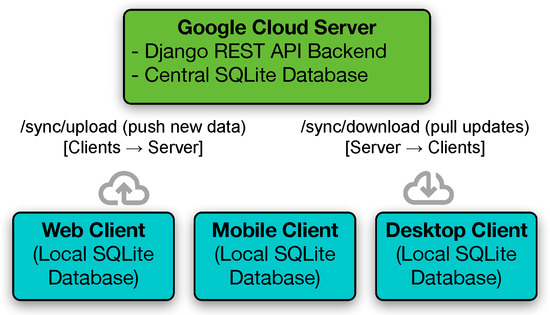

4.1. System Architecture and Configuration

Figure 2 presents the multi-platform annotation architecture employed by SynCode, contextualized through the lens of the model–view–controller (MVC) architectural pattern. In this framework, the client applications, including desktop, mobile, and web-based interfaces, serve as the View layer, which is responsible for presenting the user interface and capturing annotation inputs. Each client operates with an independent local database, supporting responsive interaction and enabling offline annotation functionality. The controller is embodied by the system’s synchronization logic, which manages the coordination and transfer of data between the clients and the backend. When Internet connectivity is restored, this controller process synchronizes updates by transmitting locally modified data to the centralized backend. The model corresponds to the centralized repository hosted on Google Cloud and managed through the Django REST framework. It stores the canonical version of the annotation data, ensuring consistency, persistence, and integrity across all platforms. This clear separation of concerns promotes scalability, maintainability, and streamlined collaboration within the annotation ecosystem.
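The offline-capable client behavior described above can be sketched as a local store that tracks which records changed since the last synchronization. This is a minimal illustration, not the SynCode client: `LocalStore`, `sync`, and the `is_online` and `push` callables are hypothetical stand-ins for the local database, the connectivity check, and the backend request.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class LocalStore:
    """Per-client store: annotations plus the ids modified since the last sync."""
    records: dict[str, dict] = field(default_factory=dict)
    dirty: set[str] = field(default_factory=set)

    def annotate(self, record_id: str, label: str) -> None:
        """Save an annotation locally; works with or without connectivity."""
        self.records[record_id] = {"id": record_id, "label": label}
        self.dirty.add(record_id)


def sync(store: LocalStore, is_online: Callable[[], bool],
         push: Callable[[dict], bool]) -> int:
    """Push locally modified records when online; return how many were accepted."""
    if not is_online():
        return 0
    sent = 0
    for record_id in sorted(store.dirty):  # sorted() copies, so discard is safe
        if push(store.records[record_id]):
            store.dirty.discard(record_id)
            sent += 1
    return sent
```

Records whose push fails stay marked as dirty, so a later call to `sync` retries them without any extra bookkeeping.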

Figure 2. Cross-platform annotation architecture demonstrating the data synchronization workflow.

To address the possibility of conflicting updates from simultaneous user activity, SynCode employs a robust timestamp-based conflict resolution strategy. This mechanism guarantees that the most recent changes are preserved during synchronization, thereby maintaining a unified and authoritative dataset across all platforms. From an implementation standpoint, this approach acts as a non-blocking alternative to traditional concurrency control methods, such as critical sections or lock-based synchronization. Locking mechanisms, although reliable, can introduce significant latency, reduce scalability, and even lead to deadlocks when multiple clients attempt to access shared resources simultaneously. In contrast, SynCode avoids such issues by associating each annotation entry with a last-modified timestamp. During synchronization, conflicting updates are resolved deterministically by selecting the version with the most recent timestamp. This lightweight strategy not only simplifies conflict resolution logic but also ensures efficient, scalable operation in distributed environments, enabling annotators to collaborate in real time without risking bottlenecks or data contention.
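A last-modified-wins rule of this kind can be sketched as follows. The `Annotation` fields and the tie-breaking choice (keep the stored version when timestamps are equal) are our assumptions for illustration; the text only specifies that the most recent timestamp wins.

```python
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class Annotation:
    record_id: str
    label: str
    last_modified: datetime  # timezone-aware UTC timestamp


def resolve(current: Annotation, incoming: Annotation) -> Annotation:
    """Keep whichever version carries the later last-modified timestamp.

    Ties keep the stored version (an assumption) so repeated syncs are idempotent.
    """
    if incoming.record_id != current.record_id:
        raise ValueError("cannot merge annotations for different records")
    return incoming if incoming.last_modified > current.last_modified else current


def merge_into(server: dict[str, Annotation], updates: list[Annotation]) -> None:
    """Apply client updates to the server copy, resolving conflicts by timestamp."""
    for update in updates:
        stored = server.get(update.record_id)
        server[update.record_id] = (update if stored is None
                                    else resolve(stored, update))
```

Because the outcome depends only on the timestamps, updates can arrive in any order and still converge to the same state. The trade-off is that the scheme relies on reasonably synchronized client clocks and silently discards the older of two conflicting edits.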

The backend infrastructure of SynCode is organized according to a layered architectural model, offering a clear separation of concerns across the presentation, business logic, and data access layers. Developed using a modern web framework, the system benefits from ease of deployment, development flexibility, and strong support for iterative refinement. The data access layer currently utilizes the framework’s built-in SQLite database, which simplifies initial setup and supports lightweight data management suited to early-stage development. Integrated RESTful APIs, positioned within the business logic layer, enable smooth communication between backend services and diverse client-side applications, ensuring consistent operation across varied platforms. This layered approach enhances maintainability and scalability, while also positioning the system for future evolution. Specifically, the architecture allows for flexible expansion of the data access layer to incorporate more advanced storage solutions such as PostgreSQL, MySQL, or distributed NoSQL systems. These enhancements will enable higher data throughput, support for complex queries, and robustness for large-scale deployments, all without necessitating a redesign of the overall backend structure.

Annotation data within SynCode are structured to support collaborative workflows and efficient data exchange. The web framework’s serialization capabilities convert annotation records into JSON format, a deliberate design choice that ensures consistency, performance, and ease of integration. JSON is preferred over alternatives such as XML or YAML due to its lightweight syntax, native compatibility with modern web technologies, and minimal parsing overhead. In contrast to XML, which introduces verbose markup and structural complexity, JSON offers a more compact and readable format ideal for web-based interactions. Compared to YAML, which can be more error-prone due to indentation sensitivity and limited tooling support, JSON balances readability and efficiency. These characteristics make JSON especially well-suited for real-time client–server interactions in a collaborative annotation system. Standardized API endpoints are implemented through generic HTTP views, supporting intuitive and predictable GET and POST operations. Clearly defined URL structures simplify client–server interactions, empowering annotators to engage with the backend services effortlessly, regardless of their technical background.
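As an illustration of the JSON exchange format, the sketch below round-trips one annotation record using Python's standard `json` module. The field names and values are hypothetical and are not taken from SynCode's schema; the timestamp is stored as an ISO 8601 string because JSON has no native date type.

```python
import json
from datetime import datetime, timezone

# Hypothetical annotation record; the keys are illustrative only.
record = {
    "entry_id": 42,
    "title": "How do I reverse a list in Python?",
    "code": "items[::-1]",
    "semantic_score": 0.979,
    "last_modified": datetime(2025, 1, 1, 12, 0, tzinfo=timezone.utc).isoformat(),
}

payload = json.dumps(record, sort_keys=True)  # text sent over HTTP
restored = json.loads(payload)                # dictionary rebuilt by the client
restored_time = datetime.fromisoformat(restored["last_modified"])
```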

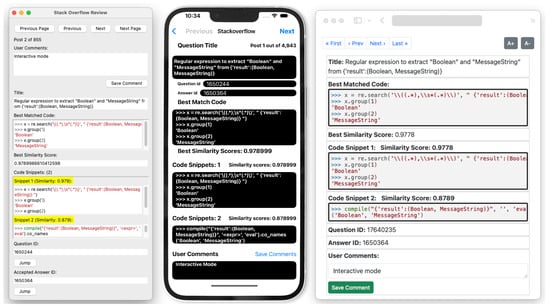

4.2. Multi-Platform Collaborative Annotation Workflow

SynCode was designed to facilitate seamless, real-time collaboration across multiple computing environments, including mobile devices, desktop computers, and web-based platforms. To achieve this integration, we implemented a centralized synchronization strategy, leveraging web framework service interfaces and a secure, cloud-hosted database system deployed on Google Cloud. This architecture ensures that any annotation made by an individual annotator is instantly reflected across all connected platforms, promoting synchronized updates, reducing delays, and ensuring consistent data availability for all team members involved in the annotation project. Figure 3 illustrates the multi-platform synchronization architecture used by SynCode. The framework supports annotation across mobile, desktop, and web platforms, ensuring effective real-time collaboration. The synchronization architecture is built around a centralized Google Cloud server running a Django REST API backend coupled with a secure, centrally managed database. This central server is responsible for receiving annotation updates submitted by annotators and distributing updated annotations back to all connected client applications, thus maintaining consistency and data coherence across platforms.

Figure 3.

Cross-platform annotation architecture demonstrating the data synchronization workflow. Due to limited space, only the desktop interface is shown; web and mobile clients offer analogous functionality.

Recognizing the importance of continuous productivity regardless of network availability, the client-side applications in SynCode independently maintain local database instances. This design choice supports robust offline annotation capabilities, allowing annotators to seamlessly continue their tasks even without internet access. Upon re-establishing connectivity, the system automatically initiates incremental synchronization, which specifically identifies and uploads only locally modified records rather than transferring the entire dataset. Concurrently, it retrieves any new or modified annotations from the central repository. This targeted, incremental updating not only optimizes bandwidth utilization but also ensures scalability and efficiency, which is particularly beneficial when large volumes of annotations are involved or when annotators work from remote or network-constrained environments.
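The incremental step can be sketched as follows, assuming each local record carries a last-modified time and the client remembers when it last synchronized. The `LocalStore` class and its methods are hypothetical names introduced for illustration; the actual client-side schema is not described in this paper.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class LocalStore:
    # entry_id -> (annotation text, last-modified time); a hypothetical layout.
    records: dict[int, tuple[str, datetime]] = field(default_factory=dict)
    last_sync: datetime = datetime.min.replace(tzinfo=timezone.utc)

    def edit(self, entry_id: int, text: str, when: datetime) -> None:
        self.records[entry_id] = (text, when)

    def pending_uploads(self) -> dict[int, tuple[str, datetime]]:
        """Select only the records modified since the last successful sync."""
        return {k: v for k, v in self.records.items() if v[1] > self.last_sync}

    def mark_synced(self, when: datetime) -> None:
        self.last_sync = when


def at_minute(minute: int) -> datetime:
    return datetime(2025, 1, 1, 12, minute, tzinfo=timezone.utc)


store = LocalStore()
store.edit(1, "first label", at_minute(0))
store.edit(2, "second label", at_minute(1))
first_batch = store.pending_uploads()   # both records are new
store.mark_synced(at_minute(2))
store.edit(2, "second label, revised", at_minute(3))
second_batch = store.pending_uploads()  # only the revised record
```

After the first synchronization, only the record edited afterwards is selected for upload, which is what keeps the transfer proportional to the amount of offline work rather than to the size of the dataset.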

A significant challenge in multi-platform collaborative annotation is handling concurrent edits, i.e., situations where multiple annotators modify the same data entry simultaneously. SynCode addresses this through a timestamp-based conflict resolution mechanism. Each annotation update is timestamped upon modification, and during synchronization, the system identifies conflicts by comparing these timestamps. The most recent annotation automatically takes precedence, effectively resolving discrepancies and ensuring consistent, high-integrity annotation data across platforms. This simple yet effective method minimizes conflicts, reduces the need for manual resolution, and promotes smooth collaboration among annotators.

To facilitate clear and efficient data communication between client applications and the centralized database, SynCode provides two clearly defined REST API endpoints. The endpoint /sync/upload/ is dedicated to the submission of newly created or modified local annotations from client applications to the central server. Conversely, the /sync/download/ endpoint serves client requests by delivering recent annotation updates stored centrally. These structured endpoints streamline interactions, allowing multiple annotators to concurrently contribute and retrieve updates without experiencing synchronization delays or encountering data inconsistencies.
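A client-side view of the two endpoints might look like the sketch below, which only constructs the HTTP requests and does not send them. The host name, the `annotations` payload key, and the `since` query parameter are assumptions made for illustration; only the two endpoint paths come from the description above.

```python
import json
from urllib.parse import urlencode
from urllib.request import Request

BASE_URL = "https://syncode.example.org"  # hypothetical host


def build_upload(records: list[dict]) -> Request:
    """POST locally created or modified annotations to the server."""
    body = json.dumps({"annotations": records}).encode("utf-8")  # assumed payload shape
    return Request(
        BASE_URL + "/sync/upload/",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def build_download(since_iso: str) -> Request:
    """GET annotations changed on the server after `since_iso` (assumed parameter)."""
    query = urlencode({"since": since_iso})
    return Request(BASE_URL + "/sync/download/?" + query, method="GET")


upload = build_upload([{"entry_id": 1, "text": "cleaned snippet"}])
download = build_download("2025-01-01T12:00:00+00:00")
```

Passing either object to `urllib.request.urlopen` would perform the call against a real deployment.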

An essential component of the SynCode framework is the synergy between automated processes and human annotation expertise. As illustrated in Figure 2, the system employs advanced transformer-based models to identify semantically relevant code snippets from Stack Overflow data, assigning precise semantic similarity scores (e.g., 0.979 and 0.879). However, despite the accuracy of these automated methods, the generated annotations sometimes include extraneous formatting artifacts or contextually irrelevant elements, especially in code derived from interactive Python environments, such as REPL sessions (characterized by prompts like >>>). Human annotators critically review these automatically generated annotations, removing extraneous symbols and improving clarity, correcting formatting issues, and verifying code accuracy and context suitability. These manual refinements significantly enhance the immediate quality, usability, and contextual accuracy of the dataset, making it readily suitable for practical applications and downstream tasks. Moreover, the insights gained through this hands-on annotation process inform the development of robust, automated, and rule-based enhancement mechanisms. As annotators continuously identify and address recurrent annotation artifacts and errors, they systematically document and formalize these corrective measures into detailed scripts and procedures. This systematic codification not only streamlines the correction process but also incrementally enhances the sophistication, accuracy, and reliability of automated annotation methods, thereby ensuring sustained improvements in future dataset annotations.
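One of the recurrent corrections mentioned above, removing REPL prompts, lends itself to a small rule-based script. The sketch below is an assumed example of such a rule, not one of the scripts produced by the annotators: it keeps only the input lines of an interactive Python transcript and drops the prompts and the echoed output.

```python
import re

# A primary (>>>) or continuation (...) prompt followed by a space or end of line.
_PROMPT = re.compile(r"^(>>>|\.\.\.)( |$)")


def strip_repl_prompts(snippet: str) -> str:
    """Keep only the input lines of a REPL transcript, without their prompts."""
    lines = snippet.splitlines()
    if not any(_PROMPT.match(line) for line in lines):
        return snippet  # not a REPL transcript; leave it untouched
    kept = [_PROMPT.sub("", line, count=1) for line in lines if _PROMPT.match(line)]
    return "\n".join(kept).rstrip()


raw = (
    ">>> nums = [3, 1, 2]\n"
    ">>> sorted(nums)\n"
    "[1, 2, 3]\n"
    ">>> for n in nums:\n"
    "...     print(n)\n"
    "...\n"
    "3\n1\n2"
)
cleaned = strip_repl_prompts(raw)
print(cleaned)
```

This is a heuristic: an output line that itself begins with `...` would be misread as input, which is one reason such rules still benefit from human review.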

The iterative nature of this refinement process closely mirrors principles found in agile development practices, particularly pair programming. In this context, human annotators effectively act as collaborative partners alongside large language models, engaging in a continuous dialogue to validate, critique, and improve annotation outputs. Just as in agile pair programming, where one developer writes code while the other reviews in real time, the human–LLM collaboration involves one ‘partner’ generating annotations and the other actively assessing and enhancing their quality. This dynamic exchange fosters greater contextual awareness, immediate feedback, and shared ownership of quality outcomes, ultimately leading to more robust and trustworthy datasets that are aligned with both human intuition and machine scalability.

This iterative collaboration model, integrating automated semantic annotation with human expert judgment and refinement, exemplifies an effective human-in-the-loop methodology. By combining computational speed and precision with human contextual intelligence, SynCode consistently delivers high-quality, reliable, and contextually accurate annotations, significantly benefiting downstream machine learning tasks and software engineering applications.

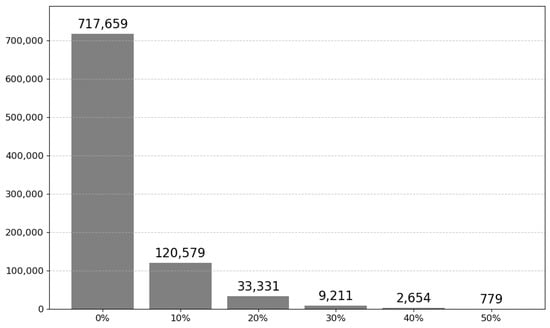

4.3. Lexical and Semantic Relevance in SynCode’s Annotation Pipeline

Figure 4 illustrates the distribution of Stack Overflow post counts across different lexical relevance thresholds, highlighting the effectiveness of evaluating relevance between Stack Overflow post titles and associated code snippets using a standard term weighting scheme, such as AnnoTF. Initially, the unfiltered dataset comprises over 717,000 Stack Overflow posts at the 0% relevance threshold, reflecting the entirety of available data without any lexical filtering. However, even modest relevance checking significantly reduces the number of retained posts.

Figure 4.

Stack Overflow post counts across lexical relevance thresholds. This bar chart illustrates how the number of Stack Overflow posts varies according to different lexical relevance thresholds, as measured by a standard term weighting scheme. The x-axis represents increasing threshold levels (from 0% to 50%) used to determine the minimum required relevance between a post title and its corresponding code snippet. The y-axis shows the number of posts that meet or exceed each threshold. The steep decline in post counts, dropping from over 717,000 at 0% to fewer than 1000 at 50%, demonstrates the effectiveness of lexical relevance evaluation in filtering out low-quality or loosely related title–code pairs.

For example, at the relatively mild 10% threshold, only about 120,000 posts remain, indicating that a substantial portion of the dataset contains minimally relevant or irrelevant title–code pairs. This sharp decrease highlights the significant presence of lower-quality data entries within large, unfiltered datasets. As the relevance criteria become more stringent, this downward trend continues markedly: around 33,000 posts persist at the 20% threshold, indicating a further reduction of less pertinent entries. Ultimately, at thresholds of 50% and above, fewer than 1000 Stack Overflow posts are preserved, underscoring the capability of stringent lexical checks to effectively isolate high-quality, contextually relevant title–code pairs. This progressive and selective filtering ensures a higher concentration of meaningful data, thus facilitating streamlined and accurate annotation processes in subsequent stages.
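To make the thresholding concrete, the sketch below scores title–code pairs with a plain TF-IDF cosine similarity and keeps those at or above a threshold. It is an assumed stand-in for the term weighting scheme used in the pipeline, whose exact tokenization and weighting are not specified here; the tokenizer, the smoothed IDF variant, and the two toy pairs are illustrative choices.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    # Lowercase alphabetic runs, so `my_list` yields "my" and "list".
    return re.findall(r"[a-z]+", text.lower())


def tfidf_vectors(docs: list[str]) -> list[dict[str, float]]:
    counts = [Counter(tokenize(doc)) for doc in docs]
    doc_freq = Counter(term for c in counts for term in c)
    n_docs = len(docs)
    # Smoothed inverse document frequency (one common variant).
    idf = {t: math.log((1 + n_docs) / (1 + df)) + 1.0 for t, df in doc_freq.items()}
    return [{t: tf * idf[t] for t, tf in c.items()} for c in counts]


def cosine(a: dict[str, float], b: dict[str, float]) -> float:
    dot = sum(w * b.get(t, 0.0) for t, w in a.items())
    norm_a = math.sqrt(sum(w * w for w in a.values()))
    norm_b = math.sqrt(sum(w * w for w in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def lexical_relevance(pairs: list[tuple[str, str]]) -> list[float]:
    """Score each (title, code) pair by the cosine of their TF-IDF vectors."""
    docs = [text for pair in pairs for text in pair]
    vecs = tfidf_vectors(docs)
    return [cosine(vecs[2 * i], vecs[2 * i + 1]) for i in range(len(pairs))]


pairs = [
    ("reverse a list in python", "reversed_list = my_list[::-1]"),
    ("parse json string", "import os\nos.getcwd()"),
]
scores = lexical_relevance(pairs)
retained = [pair for pair, score in zip(pairs, scores) if score >= 0.10]
```

In this toy corpus the first pair shares the term "list" and passes the 10% threshold, while the second pair shares no terms, scores zero, and is filtered out.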

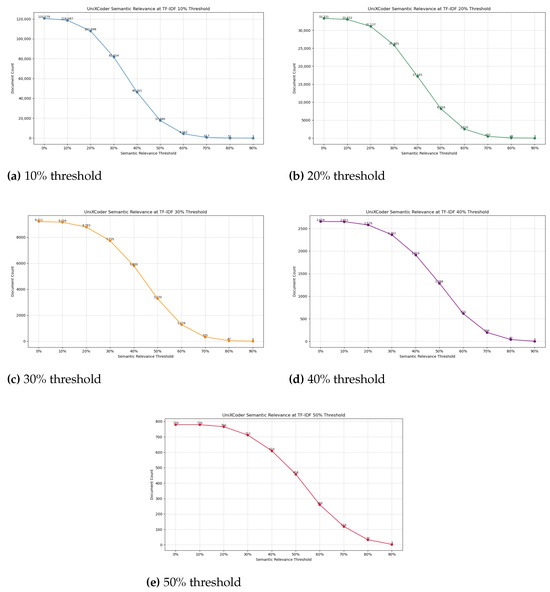

Expanding upon this initial relevance-checking framework, Figure 5 further illustrates SynCode’s comprehensive, integrated lexical-semantic annotation pipeline (AnnoTF and AnnoCode). Initially, Stack Overflow posts undergo systematic and rigorous lexical relevance assessment between titles and corresponding code snippets at various thresholds ranging incrementally from 10% to 50%. Each subfigure clearly delineates the progressively strict criteria applied to evaluating the title–code pairs, demonstrating the incremental narrowing of the dataset as increasingly rigorous thresholds are enforced. Following these initial lexical relevance checks, the remaining Stack Overflow posts undergo further semantic evaluations conducted by AnnoCode. This sophisticated transformer-based model systematically assigns semantic relevance scores, quantifying the degree of alignment between Stack Overflow question titles and the accompanying code snippets, based on deep, nuanced semantic contextual analysis.

Figure 5.

Semantic relevance between Stack Overflow titles and code snippets scored by AnnoCode after filtering with different term-weighting thresholds.

The consistent and pronounced reduction in the number of Stack Overflow posts observed across both lexical and semantic thresholds demonstrates SynCode’s strategic and balanced approach to annotation. By initially identifying and systematically removing broadly irrelevant or marginally related title–code pairs, SynCode substantially enhances the efficacy and efficiency of subsequent semantic analysis conducted by AnnoCode. For instance, under the relatively lenient 10% lexical threshold, the dataset size dramatically shrinks from over 120,000 entries at minimal semantic relevance to fewer than 700 highly relevant entries at higher semantic thresholds (above 70%). Conversely, at a significantly more rigorous 50% lexical threshold, fewer than 1000 Stack Overflow posts remain even at the lowest semantic relevance tier, thereby highlighting the heightened precision and selectivity achieved through this integrated filtering and evaluation strategy.

Importantly, SynCode strategically integrates automated relevance-checking processes with robust iterative human oversight, effectively addressing potential inaccuracies, oversights, and biases inherent in fully automated annotations. Human annotators leverage SynCode’s intuitive, collaborative interface to validate, adjust, and refine preliminary annotations generated by AnnoCode. Through this iterative, human-in-the-loop strategy, annotators can consistently ensure the accuracy, relevance, and contextual appropriateness of annotations, significantly enhancing the overall quality of the dataset. This human–computer collaboration ultimately supports more reliable and effective downstream applications in software engineering and natural language processing, ensuring annotations align precisely with practical user needs and domain-specific requirements.

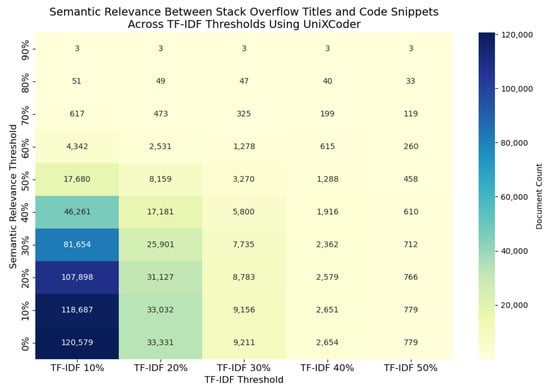

Figure 6 presents a detailed heatmap illustrating the relationship between semantic relevance scores (generated by AnnoCode) and lexical relevance thresholds (calculated via AnnoTF) for Stack Overflow post titles and associated code snippets. Each cell in the heatmap indicates the number of Stack Overflow documents retained at specific combinations of semantic (from 0% to 90%) and lexical (from 10% to 50%) thresholds. It is evident from the visualization that lower thresholds in both semantic and lexical dimensions result in larger volumes of retained documents. As lexical relevance criteria become more stringent, there is a sharp decrease in document count across all semantic thresholds, signifying that stringent lexical filtering significantly reduces dataset size. Additionally, the most substantial document count reductions occur at higher semantic relevance thresholds, underscoring AnnoCode’s effectiveness in identifying contextually and semantically relevant Stack Overflow title–code pairs. Thus, integrating both lexical and semantic filtering effectively concentrates annotation efforts on the most meaningful and relevant data entries, enhancing the precision and efficacy of the overall annotation process.

Figure 6.

Heatmap visualization depicting the number of Stack Overflow posts retained at varying semantic relevance thresholds (0% to 90%, as measured by UniXcoder) and lexical relevance thresholds (TF-IDF, 10% to 50%). Darker cells indicate a higher document count, illustrating how combined lexical and semantic filtering progressively narrows the dataset to include only highly relevant title–code snippet pairs.
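The counts behind such a heatmap can be computed by applying each pair of thresholds jointly, as in the following sketch. The function name and the five toy score pairs are hypothetical; they stand in for per-post lexical and semantic relevance scores and do not reproduce the reported data.

```python
def retention_grid(
    scores: list[tuple[float, float]],
    lexical_thresholds: list[float],
    semantic_thresholds: list[float],
) -> dict[tuple[float, float], int]:
    """Count posts whose (lexical, semantic) scores meet both thresholds."""
    return {
        (lex, sem): sum(1 for l, s in scores if l >= lex and s >= sem)
        for lex in lexical_thresholds
        for sem in semantic_thresholds
    }


# Toy (lexical, semantic) scores for five posts; illustrative values only.
toy_scores = [(0.05, 0.95), (0.15, 0.40), (0.25, 0.80), (0.55, 0.92), (0.60, 0.10)]
grid = retention_grid(toy_scores, [0.1, 0.2, 0.5], [0.0, 0.7, 0.9])

for lex in [0.1, 0.2, 0.5]:
    print(lex, [grid[(lex, sem)] for sem in [0.0, 0.7, 0.9]])
```

Because both conditions must hold, the count can only stay the same or fall as either threshold rises, which matches the narrowing pattern described for the heatmap.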

5. Threats to Validity

In this study, several factors may influence the validity and generalizability of our findings. To address these limitations, we propose mitigation strategies such as annotator calibration sessions, clearly defined annotation guidelines, controlled experimental trials, and validation through real-world deployments. Firstly, our dataset is sourced exclusively from Stack Overflow. While Stack Overflow is extensive and representative of many software development scenarios, it might not encompass the full diversity of programming languages, coding practices, or tasks prevalent in other software engineering contexts. Therefore, generalizing our results to other programming communities or platforms should be approached with caution.

Secondly, our approach relies significantly on transformer-based models, particularly NLP Transformer and UniXcoder. Although these models have demonstrated state-of-the-art performance in many scenarios, their efficacy could vary considerably with different or emerging programming paradigms, complex syntactic structures, or domain-specific terminology that might not have been thoroughly represented in their training data.

Thirdly, human annotator bias presents a potential threat. Despite our efforts to minimize errors and biases through iterative human oversight, annotators’ subjective judgments could unintentionally introduce inconsistencies or biases, especially in semantically nuanced or complex cases. Future studies could mitigate this risk by introducing standardized annotator calibration sessions and clearly defined annotation guidelines.

Finally, scalability and efficiency assessments were conducted in controlled experimental settings. Practical deployment might encounter additional challenges, such as inconsistent Internet connectivity, limited computational resources, or usability issues not fully captured in this study. These challenges can be mitigated through broader user studies and deployment trials conducted in diverse real-world environments.

To address these identified limitations and further strengthen our approach, future work will aim to extend and refine our annotation framework in real-world environments, as detailed below.

6. Future Work

Currently, SynCode employs pretrained models such as UniXcoder and sentence transformers. Future iterations will enhance annotation accuracy and adaptability by fine-tuning various LLMs specifically on domain-targeted datasets. We will evaluate model performance using established metrics such as BLEU, exact match (EM), and Accuracy@K (Acc@K). The Stack Overflow dataset was used to demonstrate the annotation workflow; reliance on it is not an inherent limitation of the system. In future iterations, we plan to broaden the scope of our evaluations beyond Stack Overflow. Specifically, we intend to perform continued pre-training and supervised fine-tuning on datasets derived not only from Stack Overflow but also from other software development communities and diverse technical domains. This process will involve systematically evaluating transformer-based architectures, including CodeT5, CodeBERT, GPT-based code models (e.g., CodeGPT), and advanced instruction-tuned LLMs like OpenAI’s GPT series. Through these evaluations, we aim to identify the most effective models that balance computational efficiency, annotation quality, and domain adaptability. Additionally, we will explore ensemble approaches, integrating predictions from multiple fine-tuned LLMs to enhance reliability through consensus-driven annotations. These advancements will enable SynCode to better handle the evolving complexities inherent in software engineering, dynamically adapting to emerging programming languages, frameworks, and coding conventions.
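For reference, two of the metrics named above, exact match and Accuracy@K, can be computed as in the sketch below. The whitespace normalization in `exact_match` and the toy predictions are assumptions made for illustration; an actual evaluation protocol may normalize outputs differently.

```python
def exact_match(predictions: list[str], references: list[str]) -> float:
    """Fraction of predictions identical to their reference (after stripping)."""
    hits = sum(p.strip() == r.strip() for p, r in zip(predictions, references))
    return hits / len(references)


def accuracy_at_k(ranked: list[list[str]], references: list[str], k: int) -> float:
    """Fraction of items whose reference appears among the top-k candidates."""
    hits = sum(ref in candidates[:k] for candidates, ref in zip(ranked, references))
    return hits / len(references)


# Toy data: three queries, each with two ranked candidate snippets.
references = ["a[::-1]", "len(x)", "sorted(x)"]
ranked = [
    ["a[::-1]", "reversed(a)"],
    ["x.size", "len(x)"],
    ["x.sort()", "min(x)"],
]
top1 = [candidates[0] for candidates in ranked]

em = exact_match(top1, references)
acc1 = accuracy_at_k(ranked, references, k=1)
acc2 = accuracy_at_k(ranked, references, k=2)
```

Here one of three top-ranked candidates matches its reference exactly, and a second reference is recovered once the top two candidates are considered.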

Further improving usability and user experience is another critical area of focus. We intend to conduct comprehensive user studies with software developers and data annotators to pinpoint usability challenges and develop targeted improvements.

7. Conclusions

In this paper, we introduced SynCode, an innovative collaborative annotation framework that seamlessly integrates human expertise with LLMs to significantly enhance data annotation quality, particularly within Stack Overflow datasets. By employing a comprehensive pipeline that integrates lexical filtering and semantic relevance evaluation, SynCode systematically isolates and identifies contextually meaningful and semantically coherent title–code snippet pairs. Moreover, SynCode incorporates iterative human validation and refinement, effectively addressing common challenges in automated annotation processes, such as biases, inaccuracies, and limited interpretability.

Our case study underscored SynCode’s practical utility and effectiveness in realistic scenarios, demonstrating significant improvements in annotation accuracy and overall dataset quality. Additionally, SynCode’s versatile, multi-platform collaborative annotation workflow supports annotators across various working environments. The framework provides a web-based interface for easy remote collaboration, a mobile application tailored for annotators requiring mobility and flexibility, and a desktop application with robust offline capabilities. This platform diversity ensures seamless, real-time interaction between annotators and LLMs, effectively accommodating different user preferences and situational needs, thereby promoting uninterrupted annotation progress and significantly enhancing efficiency and user satisfaction.

Despite acknowledged limitations, such as potential annotator biases and evaluation under controlled experimental conditions, SynCode’s prototype has demonstrated a flexible and adaptable architecture in initial evaluations, suggesting strong potential for broader practical deployment. Moving forward, our future work will focus on fine-tuning specialized language models, expanding the framework to additional programming domains, and improving usability through targeted user studies. These ongoing developments aim to refine and strengthen the synergy between human annotators and AI systems, effectively addressing the evolving complexities of software engineering tasks.

Author Contributions

Conceptualization, M.S.; Methodology, M.S.; Software, M.X., T.L., W.T. and M.S.; Validation, M.S.; Formal analysis, M.S.; Investigation, M.S.; Resources, M.S.; Data curation, S.M.; Writing—original draft, M.S.; Writing—review & editing, M.S.; Supervision, M.S.; Project administration, M.S.; Funding acquisition, M.S. All authors have read and agreed to the published version of the manuscript.

Funding

This work was partially supported by the Nebraska Collaboration Initiative (grant number NRI-47130).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The raw data supporting the conclusions of this article will be made available by the authors on request.

Acknowledgments

We sincerely thank Tammy Le, the first author, for her dedicated volunteering efforts as an undergraduate student in the software engineering course. We also thank Will Taylor for his valuable contributions as an undergraduate student participating in the Honors Program. Additionally, we thank the anonymous reviewers for their thorough and insightful comments on our paper.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Devlin, J. Bert: Pre-training of deep bidirectional transformers for language understanding. arXiv 2018, arXiv:1810.04805. [Google Scholar]

- Radford, A.; Wu, J.; Child, R.; Luan, D.; Amodei, D.; Sutskever, I. Language models are unsupervised multitask learners. OpenAI Blog 2019, 1, 9. [Google Scholar]

- Raffel, C.; Shazeer, N.; Roberts, A.; Lee, K.; Narang, S.; Matena, M.; Zhou, Y.; Li, W.; Liu, P.J. Exploring the limits of transfer learning with a unified text-to-text transformer. J. Mach. Learn. Res. 2020, 21, 1–67. [Google Scholar]

- Fan, A.; Gokkaya, B.; Harman, M.; Lyubarskiy, M.; Sengupta, S.; Yoo, S.; Zhang, J.M. Large language models for software engineering: Survey and open problems. arXiv 2023, arXiv:2310.03533. [Google Scholar]

- Zheng, Z.; Ning, K.; Wang, Y.; Zhang, J.; Zheng, D.; Ye, M.; Chen, J. A survey of large language models for code: Evolution, benchmarking, and future trends. arXiv 2023, arXiv:2311.10372. [Google Scholar]

- Chowdhary, K.; Chowdhary, K. Natural language processing. In Fundamentals of Artificial Intelligence; Springer: New Delhi, India, 2020; pp. 603–649. [Google Scholar]

- Guo, D.; Ren, S.; Lu, S.; Feng, Z.; Tang, D.; Liu, S.; Zhou, L.; Duan, N.; Svyatkovskiy, A.; Fu, S.; et al. Graphcodebert: Pre-training code representations with data flow. arXiv 2020, arXiv:2009.08366. [Google Scholar]

- Kanade, A.; Maniatis, P.; Balakrishnan, G.; Shi, K. Learning and evaluating contextual embedding of source code. In Proceedings of the International Conference on Machine Learning, PMLR, Virtual, 3–18 July 2020; pp. 5110–5121. [Google Scholar]

- Feng, Z.; Guo, D.; Tang, D.; Duan, N.; Feng, X.; Gong, M.; Shou, L.; Qin, B.; Liu, T.; Jiang, D.; et al. Codebert: A pre-trained model for programming and natural languages. arXiv 2020, arXiv:2002.08155. [Google Scholar]

- Xia, C.S.; Wei, Y.; Zhang, L. Practical program repair in the era of large pre-trained language models. arXiv 2022, arXiv:2210.14179. [Google Scholar]

- Niu, C.; Li, C.; Ng, V.; Ge, J.; Huang, L.; Luo, B. Spt-code: Sequence-to-sequence pre-training for learning source code representations. In Proceedings of the 44th International Conference on Software Engineering, Pittsburgh, PA, USA, 21–29 May 2022; pp. 2006–2018. [Google Scholar]

- He, X.; Lin, Z.; Gong, Y.; Jin, A.; Zhang, H.; Lin, C.; Jiao, J.; Yiu, S.M.; Duan, N.; Chen, W.; et al. Annollm: Making large language models to be better crowdsourced annotators. arXiv 2023, arXiv:2303.16854. [Google Scholar]

- Zhu, Y.; Zhang, P.; Haq, E.U.; Hui, P.; Tyson, G. Can chatgpt reproduce human-generated labels? a study of social computing tasks. arXiv 2023, arXiv:2304.10145. [Google Scholar]

- Le, T.; Taylor, W.; Maharjan, S.; Xia, M.; Song, M. SYNC: Synergistic Annotation Collaboration between Humans and LLMs for Enhanced Model Training. In Proceedings of the 23rd IEEE/ACIS International Conference on Software Engineering Research, Management and Applications (SERA), Las Vegas, NV, USA, 29–31 May 2025. [Google Scholar]

- Saha, S.; Hase, P.; Rajani, N.; Bansal, M. Are hard examples also harder to explain? A study with human and model-generated explanations. arXiv 2022, arXiv:2211.07517. [Google Scholar]

- Wang, P.; Chan, A.; Ilievski, F.; Chen, M.; Ren, X. Pinto: Faithful language reasoning using prompt-generated rationales. arXiv 2022, arXiv:2211.01562. [Google Scholar]

- Wiegreffe, S.; Hessel, J.; Swayamdipta, S.; Riedl, M.; Choi, Y. Reframing human-AI collaboration for generating free-text explanations. arXiv 2021, arXiv:2112.08674. [Google Scholar]

- Bhat, M.M.; Sordoni, A.; Mukherjee, S. Self-training with few-shot rationalization. In Proceedings of the 2021 Conference on Empirical Methods in Natural Language Processing, Virtual, 7–11 November 2021; pp. 10702–10712. [Google Scholar]

- Marasović, A.; Beltagy, I.; Downey, D.; Peters, M.E. Few-shot self-rationalization with natural language prompts. arXiv 2021, arXiv:2111.08284. [Google Scholar]

- Wang, P.; Wang, Z.; Li, Z.; Gao, Y.; Yin, B.; Ren, X. Scott: Self-consistent chain-of-thought distillation. arXiv 2023, arXiv:2305.01879. [Google Scholar]

- Wiegreffe, S.; Marasović, A.; Smith, N.A. Measuring association between labels and free-text rationales. arXiv 2020, arXiv:2010.12762. [Google Scholar]

- Turpin, M.; Michael, J.; Perez, E.; Bowman, S. Language models don’t always say what they think: Unfaithful explanations in chain-of-thought prompting. Adv. Neural Inf. Process. Syst. 2023, 36, 74952–74965. [Google Scholar]

- Brown, T.; Mann, B.; Ryder, N.; Subbiah, M.; Kaplan, J.D.; Dhariwal, P.; Neelakantan, A.; Shyam, P.; Sastry, G.; Askell, A.; et al. Language models are few-shot learners. Adv. Neural Inf. Process. Syst. 2020, 33, 1877–1901. [Google Scholar]

- Achiam, J.; Adler, S.; Agarwal, S.; Ahmad, L.; Akkaya, I.; Aleman, F.L.; Almeida, D.; Altenschmidt, J.; Altman, S.; Anadkat, S.; et al. Gpt-4 technical report. arXiv 2023, arXiv:2303.08774. [Google Scholar]

- Anil, R.; Dai, A.M.; Firat, O.; Johnson, M.; Lepikhin, D.; Passos, A.; Shakeri, S.; Taropa, E.; Bailey, P.; Chen, Z.; et al. Palm 2 technical report. arXiv 2023, arXiv:2305.10403. [Google Scholar]

- Touvron, H.; Lavril, T.; Izacard, G.; Martinet, X.; Lachaux, M.A.; Lacroix, T.; Rozière, B.; Goyal, N.; Hambro, E.; Azhar, F.; et al. Llama: Open and efficient foundation language models. arXiv 2023, arXiv:2302.13971. [Google Scholar]

- Wang, S.; Liu, Y.; Xu, Y.; Zhu, C.; Zeng, M. Want to reduce labeling cost? GPT-3 can help. arXiv 2021, arXiv:2108.13487. [Google Scholar]

- Gilardi, F.; Alizadeh, M.; Kubli, M. ChatGPT outperforms crowd workers for text-annotation tasks. Proc. Natl. Acad. Sci. USA 2023, 120, e2305016120. [Google Scholar] [CrossRef] [PubMed]

- Ziems, C.; Held, W.; Shaikh, O.; Chen, J.; Zhang, Z.; Yang, D. Can large language models transform computational social science? Comput. Linguist. 2024, 50, 237–291. [Google Scholar] [CrossRef]

- Wang, H.; Hee, M.S.; Awal, M.; Choo, K.; Lee, R.K.W. Evaluating GPT-3 Generated Explanations for Hateful Content Moderation. arXiv 2023, arXiv:2305.17680. [Google Scholar] [CrossRef]

- Skeppstedt, M. Annotating named entities in clinical text by combining pre-annotation and active learning. In Proceedings of the 51st Annual Meeting of the Association for Computational Linguistics Proceedings of the Student Research Workshop, Sofia, Bulgaria, 4–9 August 2013; pp. 74–80. [Google Scholar]

- Fort, K.; Sagot, B. Influence of pre-annotation on POS-tagged corpus development. In Proceedings of the fourth ACL Linguistic Annotation Workshop, Uppsala, Sweden, 15–16 July 2010; pp. 56–63. [Google Scholar]

- Lingren, T.; Deleger, L.; Molnar, K.; Zhai, H.; Meinzen-Derr, J.; Kaiser, M.; Stoutenborough, L.; Li, Q.; Solti, I. Evaluating the impact of pre-annotation on annotation speed and potential bias: Natural language processing gold standard development for clinical named entity recognition in clinical trial announcements. J. Am. Med. Inform. Assoc. 2014, 21, 406–413. [Google Scholar] [CrossRef]

- Mikulová, M.; Straka, M.; Štěpánek, J.; Štěpánková, B.; Hajič, J. Quality and efficiency of manual annotation: Pre-annotation bias. arXiv 2023, arXiv:2306.09307. [Google Scholar]

- Ogren, P.V.; Savova, G.K.; Chute, C.G. Constructing Evaluation Corpora for Automated Clinical Named Entity Recognition. In Proceedings of the LREC, Marrakech, Morocco, 26 May–1 June 2008; Volume 8, pp. 3143–3150. [Google Scholar]

- South, B.R.; Mowery, D.; Suo, Y.; Leng, J.; Ferrández, O.; Meystre, S.M.; Chapman, W.W. Evaluating the effects of machine pre-annotation and an interactive annotation interface on manual de-identification of clinical text. J. Biomed. Inform. 2014, 50, 162–172. [Google Scholar] [CrossRef]

- Skeppstedt, M.; Paradis, C.; Kerren, A. PAL, a tool for pre-annotation and active learning. J. Lang. Technol. Comput. Linguist. 2017, 31, 91–110. [Google Scholar] [CrossRef]

- Sujoy, S.; Krishna, A.; Goyal, P. Pre-annotation based approach for development of a Sanskrit named entity recognition dataset. In Proceedings of the Computational Sanskrit & Digital Humanities: Selected Papers Presented at the 18th World Sanskrit Conference, Canberra, Australia, 9–13 January 2023; pp. 59–70. [Google Scholar]

- Andriluka, M.; Uijlings, J.R.; Ferrari, V. Fluid annotation: A human-machine collaboration interface for full image annotation. In Proceedings of the 26th ACM international conference on Multimedia, Seoul, Republic of Korea, 22–26 October 2018; pp. 1957–1966. [Google Scholar]

- Hernandez, A.; Hochheiser, H.; Horn, J.; Crowley, R.; Boyce, R. Testing pre-annotation to help non-experts identify drug-drug interactions mentioned in drug product labeling. In Proceedings of the AAAI Conference on Human Computation and Crowdsourcing, Pittsburgh, PA, USA, 2–4 November 2014; Volume 2, pp. 14–15. [Google Scholar]

- Kuo, T.T.; Huh, J.; Kim, J.; El-Kareh, R.; Singh, S.; Feupe, S.F.; Kuri, V.; Lin, G.; Day, M.E.; Ohno-Machado, L.; et al. The impact of automatic pre-annotation in Clinical Note Data Element Extraction-the CLEAN Tool. arXiv 2018, arXiv:1808.03806. [Google Scholar]

- Ghosh, T.; Saha, R.K.; Jenamani, M.; Routray, A.; Singh, S.K.; Mondal, A. SeisLabel: An AI-Assisted Annotation Tool for Seismic Data Labeling. In Proceedings of the IGARSS 2023–2023 IEEE International Geoscience and Remote Sensing Symposium, Pasadena, CA, USA, 16–21 July 2023; pp. 5081–5084. [Google Scholar]

- Green, B.; Chen, Y. Disparate interactions: An algorithm-in-the-loop analysis of fairness in risk assessments. In Proceedings of the Conference on Fairness, Accountability, and Transparency, Atlanta, GA, USA, 29–31 January 2019; pp. 90–99. [Google Scholar]

- Kneusel, R.T.; Mozer, M.C. Improving human-machine cooperative visual search with soft highlighting. ACM Trans. Appl. Percept. 2017, 15, 1–21.

- Lai, V.; Tan, C. On human predictions with explanations and predictions of machine learning models: A case study on deception detection. In Proceedings of the Conference on Fairness, Accountability, and Transparency, Atlanta, GA, USA, 29–31 January 2019; pp. 29–38.

- Ma, S.; Lei, Y.; Wang, X.; Zheng, C.; Shi, C.; Yin, M.; Ma, X. Who should I trust: AI or myself? Leveraging human and AI correctness likelihood to promote appropriate trust in AI-assisted decision-making. In Proceedings of the 2023 CHI Conference on Human Factors in Computing Systems, Hamburg, Germany, 23–28 April 2023; pp. 1–19.

- Wang, X.; Lu, Z.; Yin, M. Will you accept the AI recommendation? Predicting human behavior in AI-assisted decision making. In Proceedings of the ACM Web Conference 2022, Lyon, France, 25–29 April 2022; pp. 1697–1708.

- Stites, M.C.; Nyre-Yu, M.; Moss, B.; Smutz, C.; Smith, M.R. Sage advice? The impacts of explanations for machine learning models on human decision-making in spam detection. In Proceedings of the International Conference on Human-Computer Interaction, Virtual Event, 24–29 July 2021; pp. 269–284.

- Yin, M.; Wortman Vaughan, J.; Wallach, H. Understanding the effect of accuracy on trust in machine learning models. In Proceedings of the 2019 CHI Conference on Human Factors in Computing Systems, Glasgow, UK, 4–9 May 2019; pp. 1–12.

- Reimers, N.; Gurevych, I. Sentence-BERT: Sentence Embeddings using Siamese BERT-Networks. In Proceedings of the 2019 Conference on Empirical Methods in Natural Language Processing, Hong Kong, China, 3–7 November 2019.

- Guo, D.; Lu, S.; Duan, N.; Wang, Y.; Zhou, M.; Yin, J. UniXcoder: Unified cross-modal pre-training for code representation. arXiv 2022, arXiv:2203.03850.

- Husain, H.; Wu, H.H.; Gazit, T.; Allamanis, M.; Brockschmidt, M. CodeSearchNet challenge: Evaluating the state of semantic code search. arXiv 2019, arXiv:1909.09436.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).