Amazon Web Service–Google Cross-Cloud Platform for Machine Learning-Based Satellite Image Detection

Abstract

1. Introduction

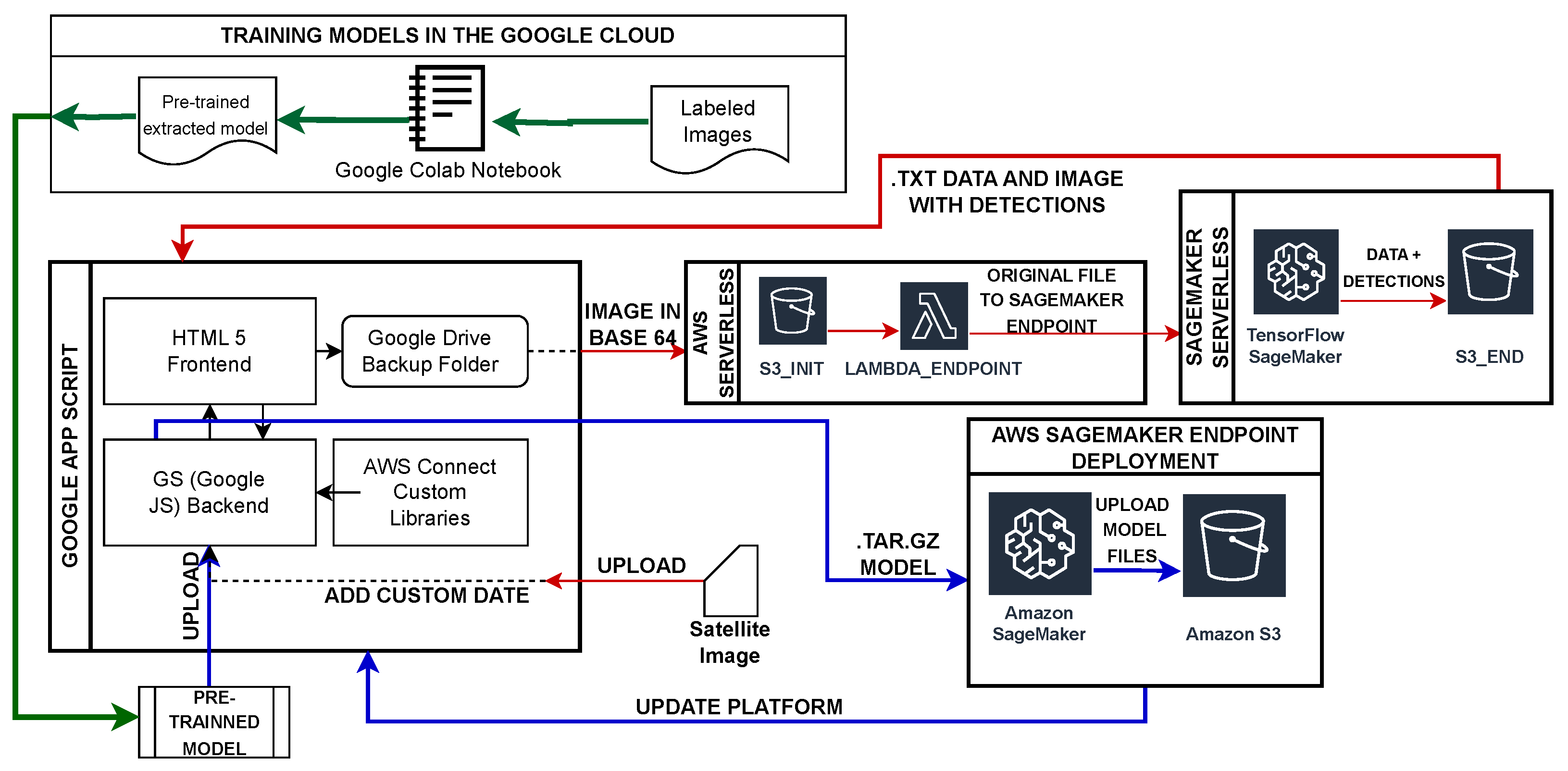

- We reduced costs and increased application efficiency by coordinating and integrating architectures and services in Google Apps Script. Amazon Web Services (AWS) was one of the providers whose services we integrated into the application.

- We integrated AWS Lambda and Amazon SageMaker into the application to process and analyze images in real time. Lambda handles image processing, and SageMaker runs the ML model that detects image elements.

- We created a replicable model for real-time image detection and standardized the application so that it can be reproduced on any type of web platform.

- We compared on-premise processing with different cloud applications and different multi-cloud servers. The benchmark values are presented in a table to evaluate the differences in performance of the image processing and ML stages.

2. Related Works

3. Model Development

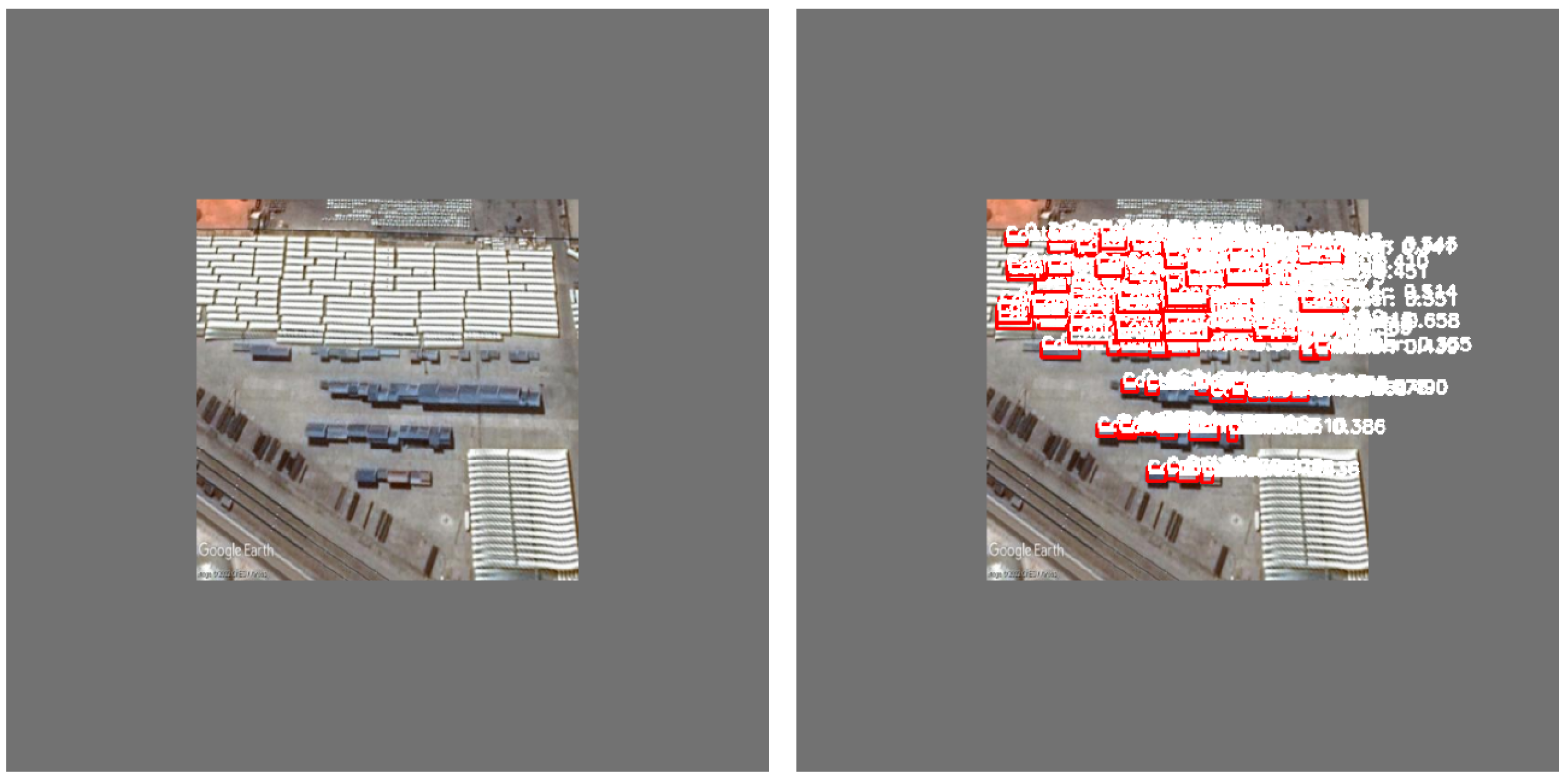

- Labeling images: We labeled 400 images to create a dataset that captures the details of our target objects. This dataset was the basis for training the model to accurately identify and locate shipping containers in different port settings. The images were organized in a Roboflow repository (https://roboflow.com/, accessed on 1 March 2023) and then used to train the model in Google Colab.

- Setting up the repository: We cloned the model repository into Google Colab so that the model could be trained there. The repository includes the scripts and files needed to train and assess the YOLOvX model (where X is the version number of the custom YOLO model, starting at 5). After cloning, we installed the required dependencies, in particular the Roboflow library.

- Coordination of SageMaker for conducting training: Without leaving Google Colab, we used tools that communicate with AWS services, in this case SageMaker, so that training could run more efficiently. The same tooling was later carried over to both the architecture and the final web platform.

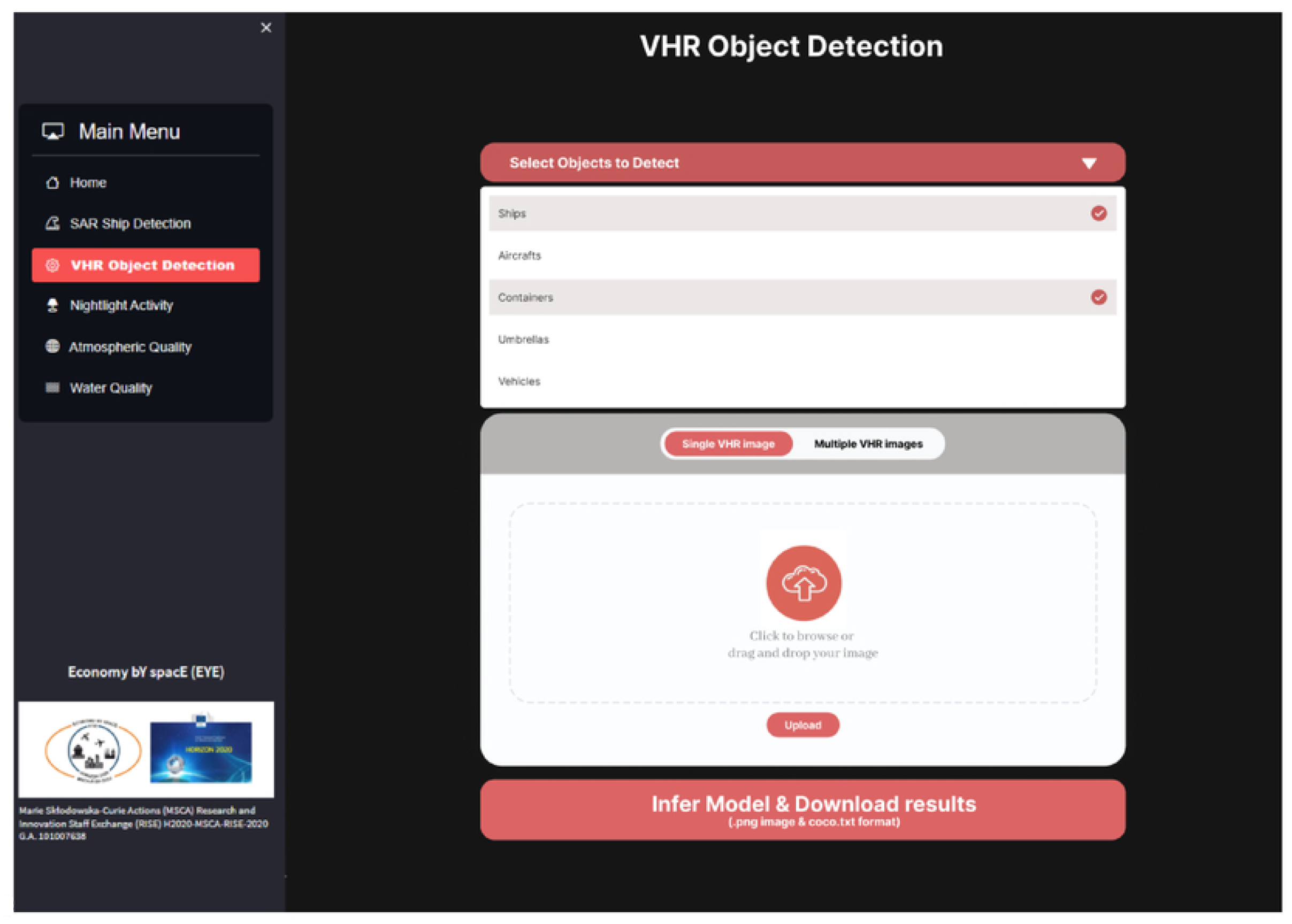

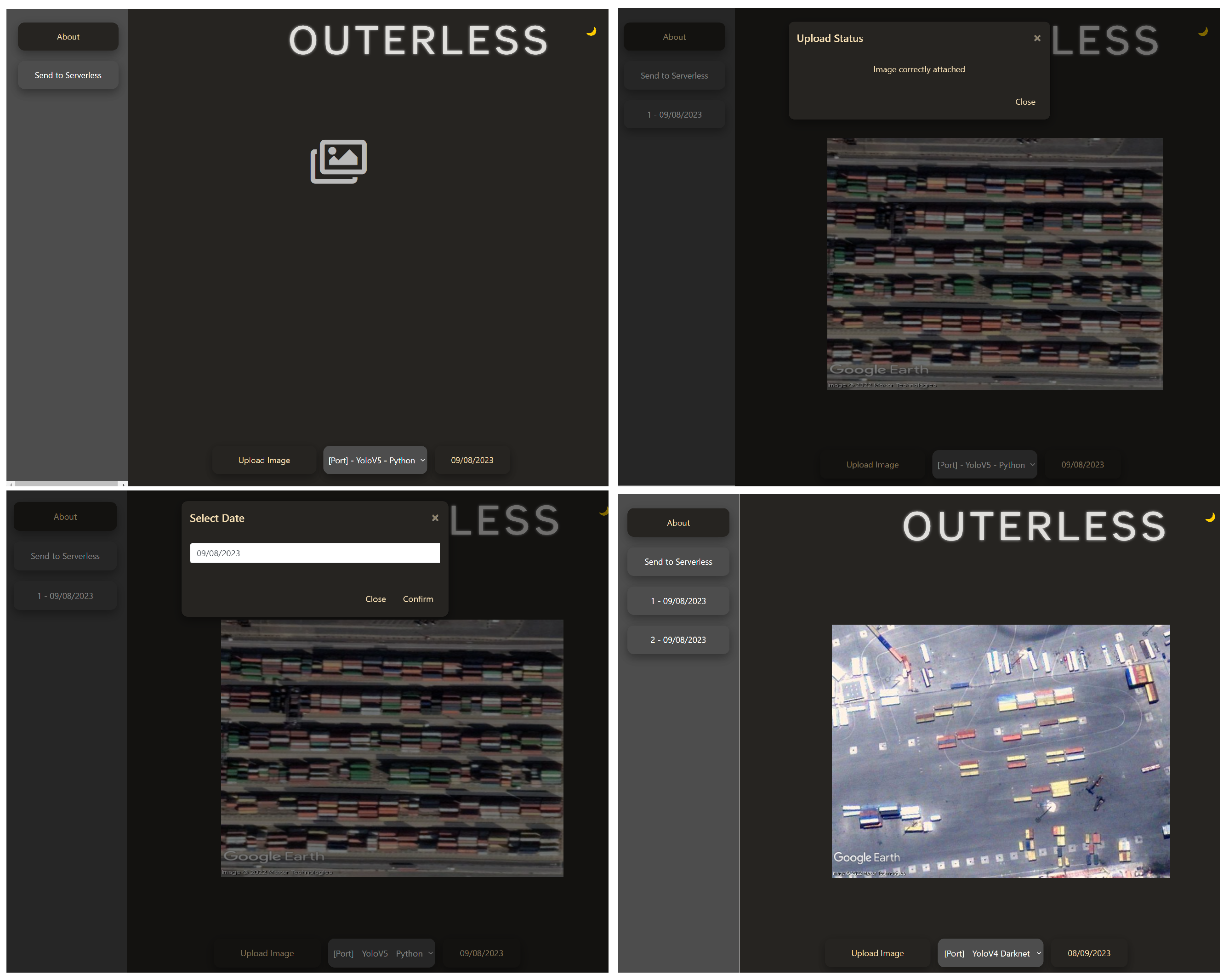

- Model training deployment: We executed the training script, specifying parameters such as image size, batch size, epoch count, and the configuration files for data and initial weights. This last step was integrated into the web platform: the user selects the type of item to detect and a calendar date, clicks a button, and the platform displays the detected elements as the final result.
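As an illustration of the training step described above, the following minimal sketch assembles a training command from the parameters the text names (image size, batch size, epoch count, data configuration, and initial weights). It assumes the command-line flags of the public YOLOv5 `train.py` script; the `TrainConfig` class, the `build_train_command` helper, and all default values are hypothetical and are not taken from the original work.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class TrainConfig:
    """Hypothetical container for the training parameters named in the text."""
    img_size: int = 640                    # assumed image size in pixels
    batch_size: int = 16                   # assumed batch size
    epochs: int = 100                      # assumed epoch count
    data_yaml: str = "data.yaml"           # dataset description, e.g. a Roboflow export
    cfg_yaml: str = "models/yolov5s.yaml"  # model configuration file
    weights: str = "yolov5s.pt"            # initial weights


def build_train_command(cfg: TrainConfig) -> List[str]:
    """Return the argument list for a YOLOv5-style train.py invocation."""
    return [
        "python", "train.py",
        "--img", str(cfg.img_size),
        "--batch", str(cfg.batch_size),
        "--epochs", str(cfg.epochs),
        "--data", cfg.data_yaml,
        "--cfg", cfg.cfg_yaml,
        "--weights", cfg.weights,
    ]


if __name__ == "__main__":
    # Print the command so it can be pasted into a Colab cell.
    print(" ".join(build_train_command(TrainConfig())))
```

Keeping the parameters in one structure makes it straightforward for a web front end to fill them in from user input before launching training. Other YOLO versions may use different flag names, so the list would need adjusting for them.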

4. Serverless Architecture

5. Application

- Web Interface Presentation: We employed JavaScript functions to create an HTML template that can be customized through parameters such as title and display mode. This provides a convenient and adjustable user interface.

- Image Upload to Google Drive: We created a function that simplifies uploading images to Google Drive. The function receives base64-encoded data and file names, decodes the data, creates blobs, and uploads the files to a specific Google Drive folder.

- Interaction with AWS S3: A dedicated function handles interactions with an S3 bucket on AWS. It iterates over the files in a Google Drive folder, converts JPEG images to base64 format, and securely uploads them to a designated S3 bucket.

- Calendar and Image Handling Scripts: These scripts control the calendar and image processing capabilities. They initialize and run the calendar component, manage the image upload feature, enable dynamic theme switching, and interact with AWS S3 for secure image uploads.

- Deployment of Custom Pre-Trained Model: We integrated a button within the web interface that triggers the deployment of a custom pre-trained model packaged as a .tar.gz file. This button initiates a script that uploads the model file to a designated storage solution, unpacks the contents, and sets up a new endpoint in Amazon SageMaker.

- Establishing the Web Interface: We selected Google Apps Script because it integrates directly with Google services and can also call external services such as AWS.

- Ensuring Secure Data Transfer and Authentication: The custom library begins the integration process by creating a tailored request to AWS S3 that takes into account the kind of operation and the type of content. It builds a URL specific to the task at hand so that data are transmitted precisely and securely. To maintain security, the library generates authorization headers that authenticate each request to AWS S3 using our AWS credentials combined with additional parameters. This process creates a unique signature for each request, protecting our operations in S3 storage.
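The request-signing step in the last item can be sketched as follows. This is a minimal illustration that assumes the library follows AWS Signature Version 4 for a single `PUT` upload to a virtual-hosted-style bucket URL; the text does not name the signing scheme, and the original library is written for Google Apps Script rather than Python. The function names, bucket name, and credentials are placeholders.

```python
import datetime
import hashlib
import hmac
import urllib.parse
from typing import Dict, Tuple


def _hmac_sha256(key: bytes, msg: str) -> bytes:
    return hmac.new(key, msg.encode("utf-8"), hashlib.sha256).digest()


def derive_signing_key(secret_key: str, date_stamp: str, region: str, service: str) -> bytes:
    """Derive the SigV4 signing key by chaining HMACs over date, region, and service."""
    k_date = _hmac_sha256(("AWS4" + secret_key).encode("utf-8"), date_stamp)
    k_region = _hmac_sha256(k_date, region)
    k_service = _hmac_sha256(k_region, service)
    return _hmac_sha256(k_service, "aws4_request")


def sign_s3_put(access_key: str, secret_key: str, region: str, bucket: str, key: str,
                payload: bytes, content_type: str,
                now: datetime.datetime) -> Tuple[str, Dict[str, str]]:
    """Return (url, headers) for a signed S3 PUT; `now` must be a UTC timestamp."""
    host = f"{bucket}.s3.{region}.amazonaws.com"   # assumed virtual-hosted-style endpoint
    path = "/" + urllib.parse.quote(key, safe="/-_.~")
    amz_date = now.strftime("%Y%m%dT%H%M%SZ")
    date_stamp = now.strftime("%Y%m%d")
    payload_hash = hashlib.sha256(payload).hexdigest()

    headers = {
        "content-type": content_type,
        "host": host,
        "x-amz-content-sha256": payload_hash,
        "x-amz-date": amz_date,
    }
    signed_headers = ";".join(sorted(headers))
    canonical_headers = "".join(f"{name}:{headers[name]}\n" for name in sorted(headers))
    canonical_request = "\n".join(
        ["PUT", path, "", canonical_headers, signed_headers, payload_hash]
    )  # the empty string is the (absent) query string

    scope = f"{date_stamp}/{region}/s3/aws4_request"
    string_to_sign = "\n".join([
        "AWS4-HMAC-SHA256",
        amz_date,
        scope,
        hashlib.sha256(canonical_request.encode("utf-8")).hexdigest(),
    ])
    signing_key = derive_signing_key(secret_key, date_stamp, region, "s3")
    signature = hmac.new(signing_key, string_to_sign.encode("utf-8"),
                         hashlib.sha256).hexdigest()

    headers["authorization"] = (
        f"AWS4-HMAC-SHA256 Credential={access_key}/{scope}, "
        f"SignedHeaders={signed_headers}, Signature={signature}"
    )
    return f"https://{host}{path}", headers
```

Because the payload hash, timestamp, and object key all enter the string that is signed, every request carries its own signature, which matches the per-request behavior described above. In practice the timestamp would come from `datetime.datetime.now(datetime.timezone.utc)`.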

6. Methodology

- The number of requests (n), billed at USD 0.2 per million requests.

- The average memory allocation (503 MB out of 1024 MB) multiplied by the execution duration (15 min).

- The cost per GB-second, valued at USD 0.00001667.

- The number of batches processed (n).

- The average processing time in seconds per batch (8 s).

- The hourly rate (USD 0.28) of the ml.m4.xlarge instance, converted to a per-second rate.
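The cost terms listed above can be combined as in the following minimal sketch. The way the terms are added together (a per-request charge plus a GB-second compute charge for Lambda, and billed seconds times a per-second rate for SageMaker) is an assumption based on the listed quantities, not a formula stated in the text; the function names are hypothetical, and the default values are the figures quoted in the list.

```python
LAMBDA_USD_PER_MILLION_REQUESTS = 0.2
LAMBDA_USD_PER_GB_SECOND = 0.00001667
SAGEMAKER_USD_PER_HOUR = 0.28  # ml.m4.xlarge hourly rate


def lambda_cost(n_requests: int, memory_mb: float = 503.0,
                duration_s: float = 15 * 60) -> float:
    """Estimated Lambda cost in USD: request charge plus compute charge.

    Assumes every request uses `memory_mb` for `duration_s` seconds.
    """
    request_cost = n_requests / 1_000_000 * LAMBDA_USD_PER_MILLION_REQUESTS
    gb_seconds = n_requests * (memory_mb / 1024) * duration_s
    return request_cost + gb_seconds * LAMBDA_USD_PER_GB_SECOND


def sagemaker_cost(n_batches: int, seconds_per_batch: float = 8.0) -> float:
    """Estimated SageMaker cost in USD: hourly rate converted to a per-second rate."""
    per_second_rate = SAGEMAKER_USD_PER_HOUR / 3600
    return n_batches * seconds_per_batch * per_second_rate


if __name__ == "__main__":
    # Example with hypothetical workload sizes.
    print(f"Lambda:    USD {lambda_cost(1000):.4f}")
    print(f"SageMaker: USD {sagemaker_cost(1000):.4f}")
```

Exposing memory, duration, and batch time as parameters makes it easy to check how sensitive the estimate is to each measured quantity.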

7. Results and Discussion

7.1. Counting Elements

7.2. Cost

8. Conclusions and Future Work

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Category | Ship Detection | Aircraft Detection | Container Detection |
|---|---|---|---|
| Input | Area of interest, dates | VHR Image | VHR Image |
| Output | Time series of detected ships | Detected objects in image, .txt file | Detected objects in image, .txt file |
| Environment | Python, Sentinelapi | Python, OBBDetection | PyTorch (Python) |
| Models | YOLOv5, Faster-RCNN | Faster-RCNN | YOLOv5-7 |
| Product | Number of ships | Number of aircraft | Number of containers |
| Satellite | Sentinel-1/2, VHR (Planet) | VHR (Planet) | VHR (Worldview-3, Planet) |
| Instrument | SAR-C/Sentinel-1, MSI | MSI | MSI |
| Spatial Resolution | 9 m × 9 m (S-1), 10 m × 10 m (S-2) | (0.8–1.5) m | (0.8–1.5) m, 10 m × 10 m (S-2) |
| Temporal Resolution | 3–5 days (S-1), 1–10 days (S-2) | On request | On request, 1–10 days (S-2) |
| File Size | 800 MB (S-1), 600–700 MB (S-2) | 100 MB | 600–700 MB (S-2), 100 MB |
| Database | Google Earth Engine, third-party | Creodias, Google Earth Engine | Creodias, Google Earth Engine |

| Object | Model | Accuracy |
|---|---|---|
| Aircraft | Faster-RCNN | 99% |
| Ship | Faster-RCNN | 96% |
| Containers | YOLOv5 | 86% |

| Service | Cost per 1000 Images (USD) |
|---|---|
| Application | 0.8056 |
| Lambda with S3 and EFS storage | 1.3456 |
| EC2 instance with S3 storage | 1.544 |
| Lambda without S3 storage | 0.1956 |
| Azure5 | 158 |
| Storm6 | 351 |
| DreamHost5 | 106 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Pacios, D.; Ignacio-Cerrato, S.; Vázquez-Poletti, J.L.; Moreno-Vozmediano, R.; Schetakis, N.; Stavrakakis, K.; Di Iorio, A.; Gomez-Sanz, J.J.; Vazquez, L. Amazon Web Service–Google Cross-Cloud Platform for Machine Learning-Based Satellite Image Detection. Information 2025, 16, 381. https://doi.org/10.3390/info16050381
