Artificial Intelligence-Based Prediction Model for Maritime Vessel Type Identification

Abstract

1. Introduction

2. Related Work

2.1. Classification Approaches Based on AIS Data

2.2. Classification Approaches Based on SAR Data

2.3. Classification Approaches Based on Optical Data

2.3.1. Approaches Based on Machine Learning

2.3.2. Approaches Based on Deep Learning

2.3.3. Approaches That Combine Machine Learning and Deep Learning

2.4. Concluding Remarks

3. Artificial Intelligence Background

3.1. Computer Vision Principles

3.2. Machine Learning and Deep Learning Principles

3.3. Data Mining Process

4. Proposed Model for Maritime Vessel Classification

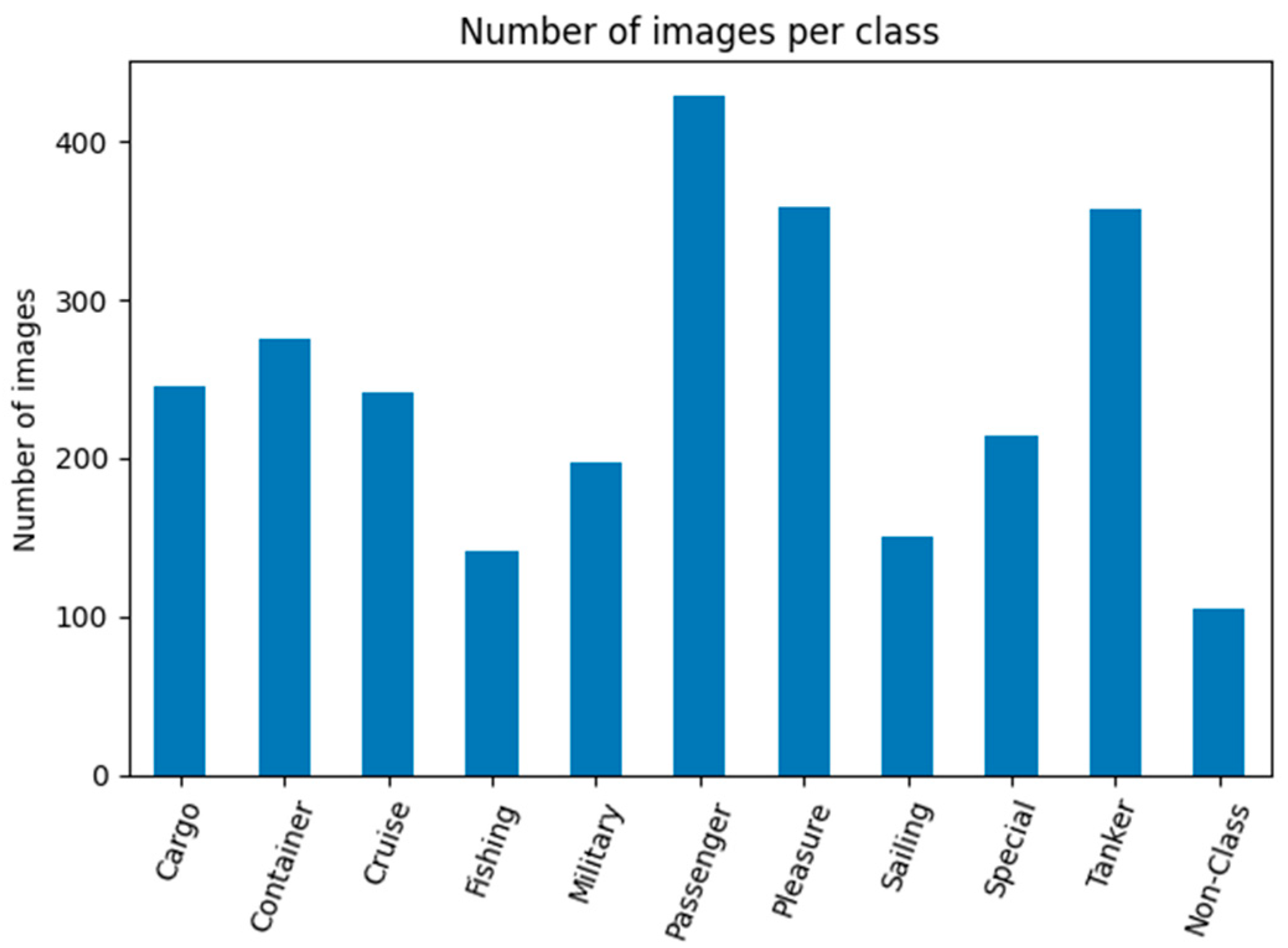

4.1. Dataset Preparation

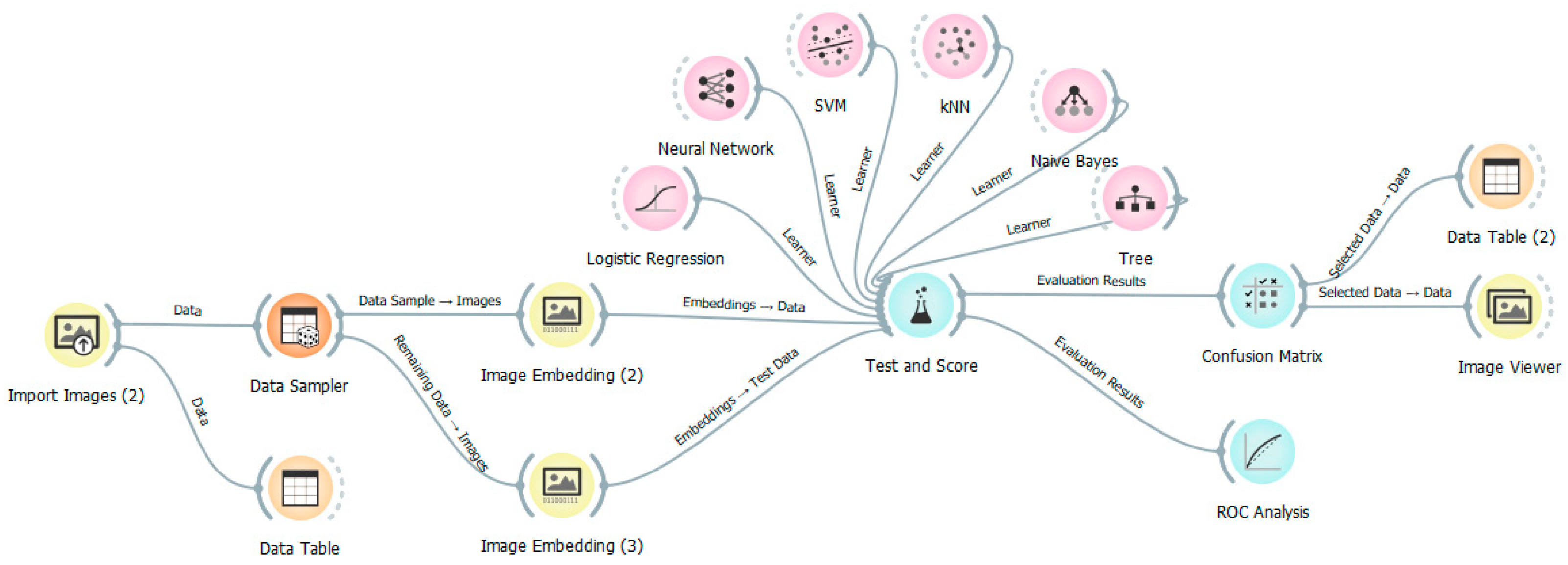

4.2. Model Design

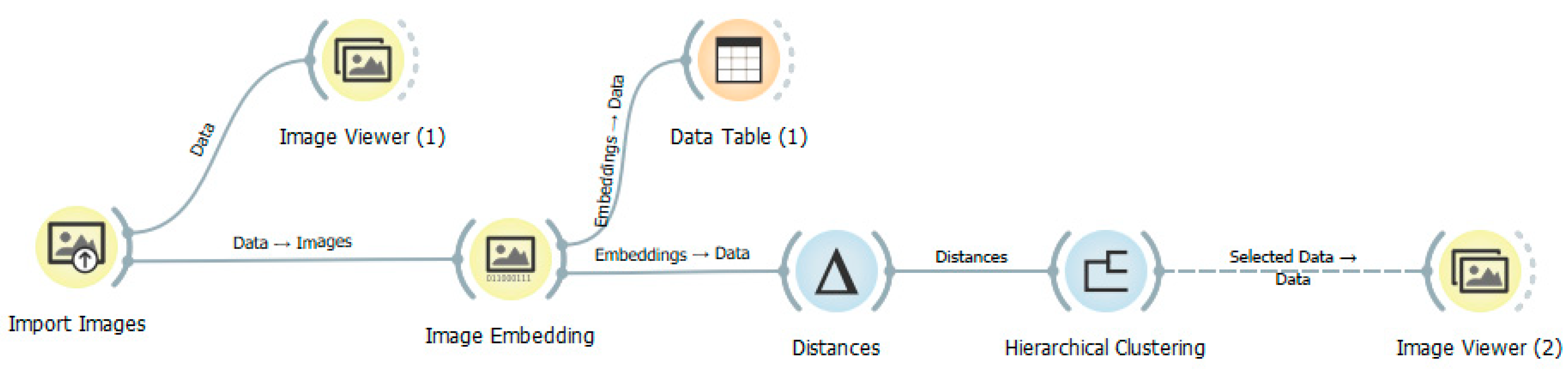

- Image embedding and clustering. In this stage of model development, the input images are transformed into vectors using several deep learning-based image embedding methods (e.g., Inception v3, SqueezeNet, and VGG-16). After these methods were compared, Inception v3 [85,86] was selected as the best-suited embedding for the problem at hand. Hierarchical clustering is then performed on the embedded images to evaluate how well the model separates instances into categories under a selected similarity measure and image embedding. After different distance measures were compared (e.g., Euclidean, Manhattan, and cosine distance), Euclidean distance was chosen as optimal.

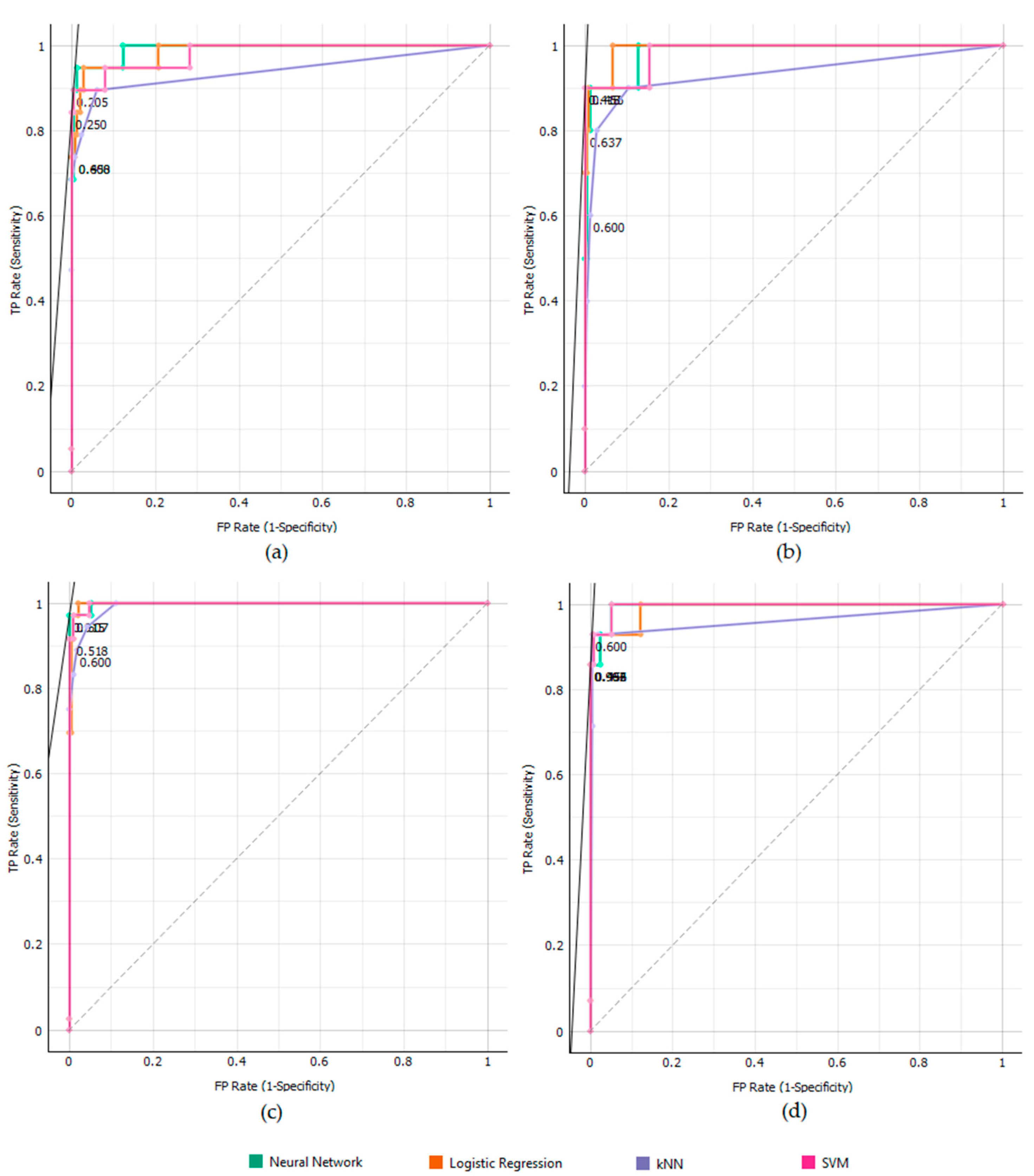

- Comparison of different machine learning algorithms. In this stage of model development, different machine learning algorithms are trained, tested, and evaluated to determine which is best suited to maritime vessel classification. We focused on well-known, traditional machine learning algorithms: logistic regression, a neural network, SVM, kNN, Naïve Bayes, and a decision tree.

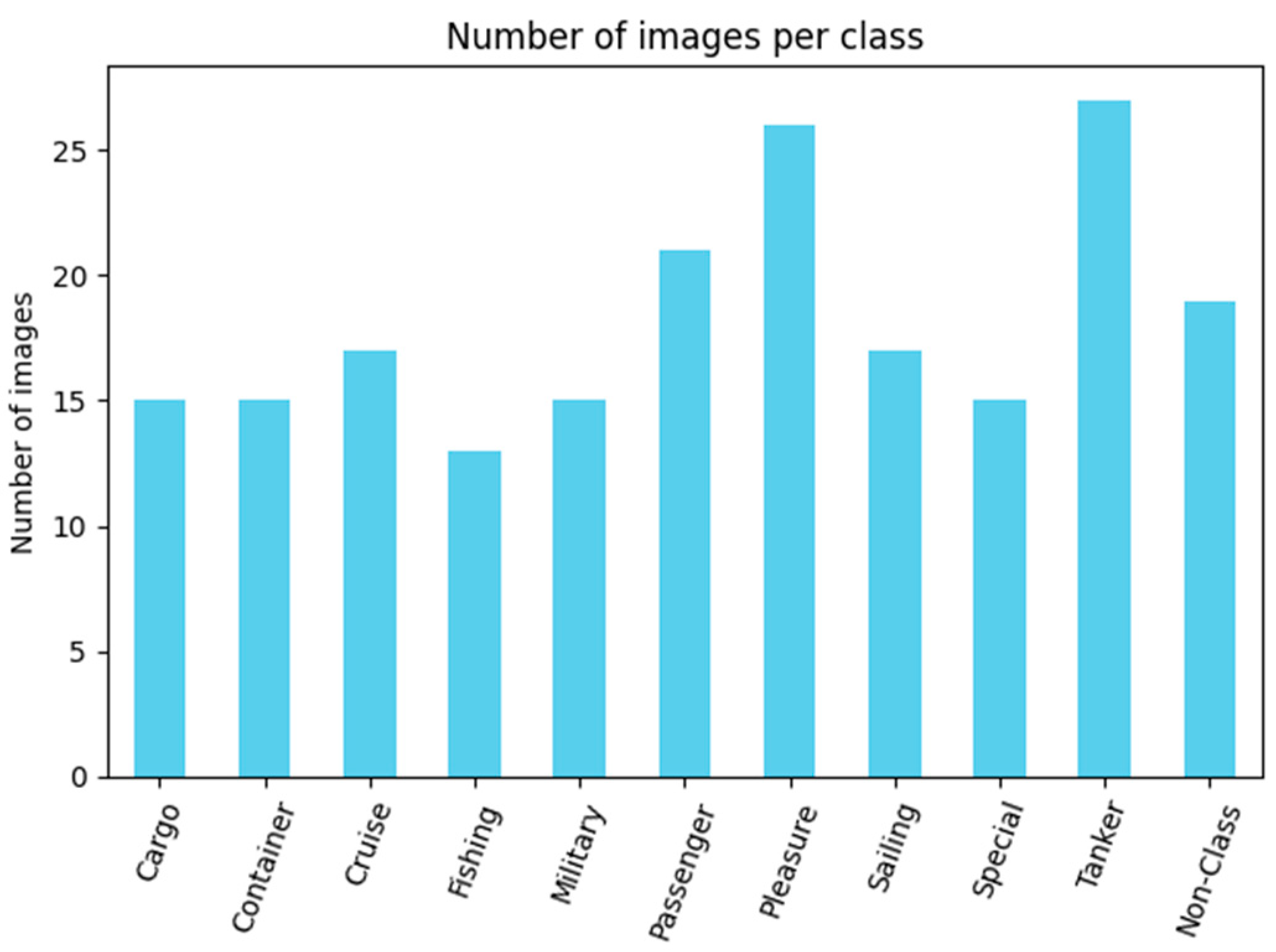

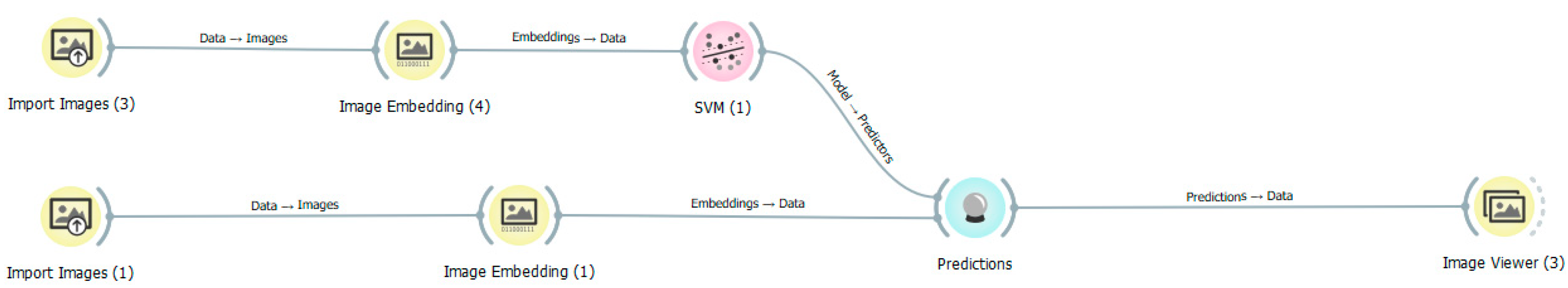

- Model evaluation. The best-performing machine learning algorithm, selected in the previous stage of model development, is evaluated on 200 previously unseen maritime images.

4.2.1. Image Embedding and Clustering
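
The embedding-and-clustering stage described in Section 4.2 can be illustrated with a small sketch. It assumes the image embeddings have already been computed (for Inception v3 these are typically 2048-dimensional vectors) and shows the three distance measures that were compared together with a naive bottom-up (agglomerative) hierarchical clustering. The function names are hypothetical, and average linkage is an assumption: the text does not state which linkage criterion was used.

```python
import math
from typing import Callable, List, Sequence

Vector = Sequence[float]


def euclidean(a: Vector, b: Vector) -> float:
    """Straight-line (L2) distance: the measure selected in the text."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def manhattan(a: Vector, b: Vector) -> float:
    """City-block (L1) distance: sum of absolute coordinate differences."""
    return sum(abs(x - y) for x, y in zip(a, b))


def cosine(a: Vector, b: Vector) -> float:
    """Cosine distance = 1 - cosine similarity (assumes non-zero vectors)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return 1.0 - dot / (norm_a * norm_b)


def average_linkage(
    embeddings: List[Vector],
    n_clusters: int,
    dist: Callable[[Vector, Vector], float] = euclidean,
) -> List[List[int]]:
    """Naive bottom-up hierarchical clustering.

    Assumption: average linkage (the text does not name the linkage
    criterion). Every image starts in its own cluster; the two clusters
    with the smallest mean pairwise distance are merged repeatedly
    until `n_clusters` remain. Clusters are returned as lists of indices.
    """
    clusters = [[i] for i in range(len(embeddings))]
    while len(clusters) > n_clusters:
        best = None  # (distance, i, j) of the closest cluster pair so far
        for i in range(len(clusters)):
            for j in range(i + 1, len(clusters)):
                total = sum(
                    dist(embeddings[p], embeddings[q])
                    for p in clusters[i]
                    for q in clusters[j]
                )
                d = total / (len(clusters[i]) * len(clusters[j]))
                if best is None or d < best[0]:
                    best = (d, i, j)
        _, i, j = best
        clusters[i] = clusters[i] + clusters[j]
        del clusters[j]  # j > i, so index i is still valid
    return clusters
```

Passing `dist=manhattan` or `dist=cosine` to `average_linkage` mirrors the distance-measure comparison described above. Because the loop recomputes every pairwise cluster distance at each merge, it is only suitable for toy inputs; for a dataset of 2715 images, a library routine such as SciPy's `scipy.cluster.hierarchy.linkage` (e.g., `method="average"`, `metric="euclidean"`) would be the practical choice.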

4.2.2. Comparison of Different Machine Learning Algorithms
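
As a reference point for the logistic regression and SVM settings listed below, their standard textbook forms are given here. This is a sketch under assumptions: only the binary case is shown (the text does not state how the eleven-class problem is decomposed), the RBF kernel width γ is left symbolic because it is not reported, and "strength of regularization set at 1" is read as the inverse-regularization constant C = 1 used by common machine learning libraries, where a larger C means weaker regularization.

$$
\min_{\mathbf{w},\,b}\;\frac{1}{2}\lVert\mathbf{w}\rVert_2^2\;+\;C\sum_{i=1}^{n}\log\!\Bigl(1+\exp\!\bigl(-y_i(\mathbf{w}^{\top}\mathbf{x}_i+b)\bigr)\Bigr),\qquad y_i\in\{-1,+1\}
$$

$$
K(\mathbf{x},\mathbf{x}')=\exp\!\bigl(-\gamma\,\lVert\mathbf{x}-\mathbf{x}'\rVert_2^2\bigr),\qquad \gamma>0
$$

Here $\mathbf{x}_i$ is the embedding vector of image $i$ and $y_i$ its label; the squared $\ell_2$ norm of $\mathbf{w}$ is the ridge (L2) penalty, and $K$ is the RBF kernel used by the SVM.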

- Logistic regression—L2 or ridge regularization was employed, and the strength of regularization was set at 1.

- Neural network—the hidden-layer size was set at 100 neurons, the ReLU (Rectified Linear Unit) activation function and the Adam optimizer were used, and the maximum number of iterations was set at 200.

- SVM—the cost C was set at 0, the kernel was set to RBF (Radial Basis Function), numerical tolerance was set at 0.0010, and the iteration limit was set at 100.

- kNN—the number of neighbors was set at 5, the Euclidean metric was used, and all neighbors were weighted uniformly.

- Naïve Bayes—no parameters were set during training.

- Decision tree—the minimum number of instances in a leaf was set at 2, subsets smaller than 5 were not split, binary trees were induced, the maximum tree depth was limited to 100, and node splitting stopped once the majority class in a node reached 95%.
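
The kNN configuration above (five neighbors, Euclidean metric, uniform weights) amounts to a plain majority vote among the five closest embeddings. The following is a minimal sketch, assuming embeddings are given as plain lists of floats; `knn_predict` and the toy data are hypothetical, and the tie-breaking rule is an assumption because the text does not specify one.

```python
import math
from collections import Counter
from typing import List, Sequence, Tuple

LabeledVector = Tuple[Sequence[float], str]


def knn_predict(train: List[LabeledVector], query: Sequence[float], k: int = 5) -> str:
    """Predict a vessel type by majority vote of the k nearest training images.

    - metric: Euclidean distance (math.dist)
    - weights: uniform, so each of the k neighbors casts exactly one vote
    - ties (assumption): Counter.most_common keeps first-encountered order,
      so the tied label whose member is closest to the query wins
    """
    # Sort training points by distance to the query and keep the k closest.
    nearest = sorted(train, key=lambda item: math.dist(item[0], query))[:k]
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0]


# Hypothetical toy "embeddings" in 2-D, labeled with two of the dataset's classes.
train_set = [
    ([0.0, 0.0], "Cargo"), ([0.0, 1.0], "Cargo"), ([1.0, 0.0], "Cargo"),
    ([5.0, 5.0], "Sailing"), ([5.0, 6.0], "Sailing"), ([6.0, 5.0], "Sailing"),
]
```

For a query at `[0.5, 0.5]`, the five nearest points are the three Cargo points and two Sailing points, so the vote returns `"Cargo"`. Uniform weighting keeps the rule simple; a distance-weighted vote would instead let closer neighbors count more.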

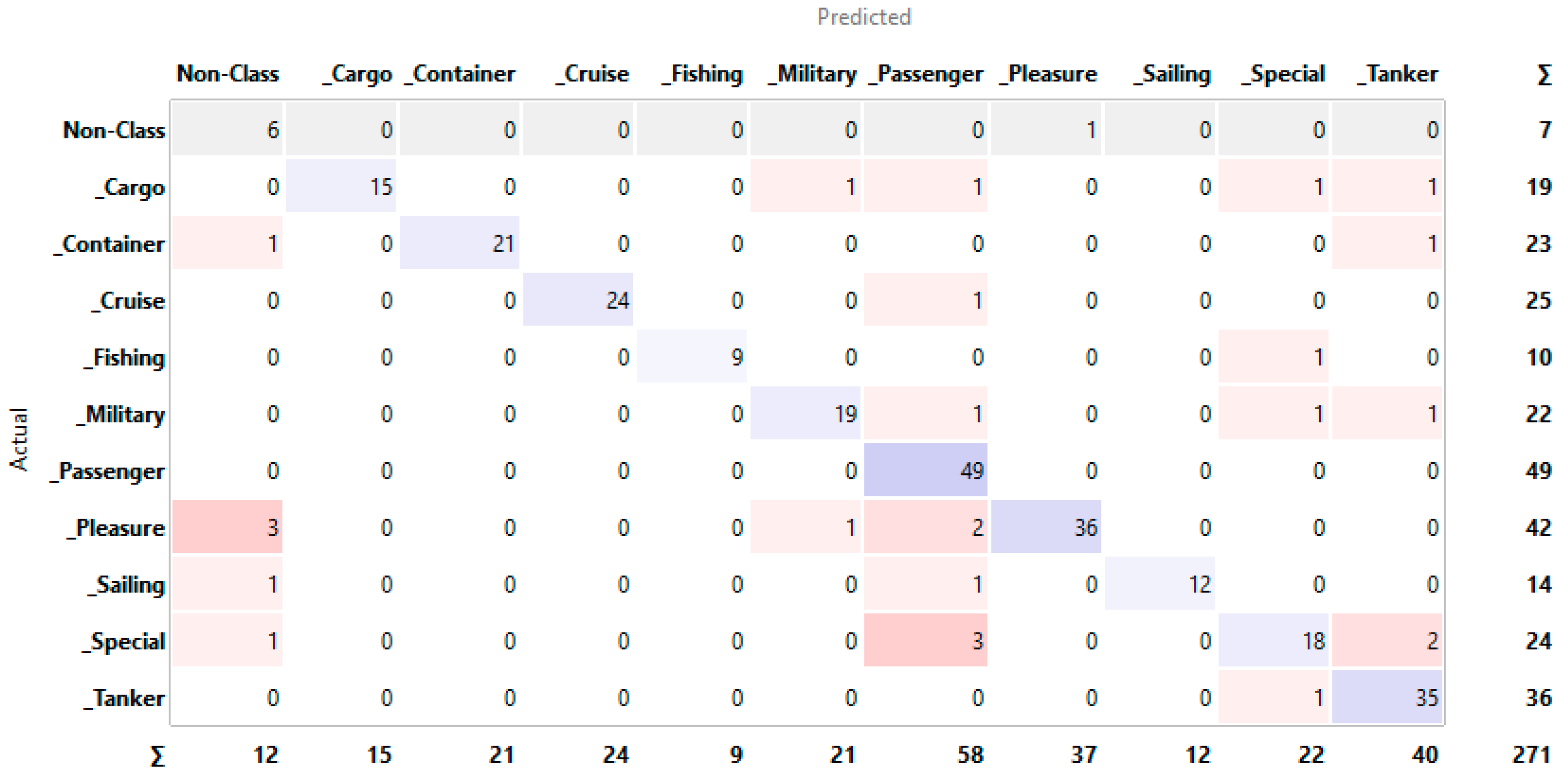
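
The decision tree settings listed above act as pre-pruning rules: they are checked at each node to decide whether the tree may grow further. The sketch below encodes them as two predicates. The helper names are hypothetical, and details such as counting depth from 0 at the root and treating the 95% threshold as inclusive are assumptions rather than facts taken from the text.

```python
from collections import Counter
from typing import Sequence


def should_split(
    labels: Sequence[str],
    depth: int,
    min_subset: int = 5,
    max_depth: int = 100,
    majority_threshold: float = 0.95,
) -> bool:
    """Return True if a node holding `labels` at `depth` may be split further.

    Assumptions: the root has depth 0, and the majority threshold is
    inclusive (a node that is exactly 95% one class is not split).
    """
    n = len(labels)
    if n < min_subset:  # subsets smaller than 5 are not split
        return False
    if depth >= max_depth:  # tree depth is limited to 100
        return False
    majority_count = Counter(labels).most_common(1)[0][1]
    if majority_count / n >= majority_threshold:  # node is already pure enough
        return False
    return True


def split_allowed(left: Sequence[str], right: Sequence[str], min_leaf: int = 2) -> bool:
    """Accept a binary split only if both children keep at least `min_leaf` instances."""
    return len(left) >= min_leaf and len(right) >= min_leaf
```

A tree builder would call `should_split` before searching for the best binary split and discard any candidate split for which `split_allowed` is false; when no candidate survives, the node becomes a leaf labeled with its majority class.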

4.2.3. Model Evaluation

5. Conclusions

- Dataset augmentation. By increasing the number of images in the dataset, a more robust foundation for model training can be established.

- Refinement of maritime vessel categories. Incorporating additional maritime vessel categories would help ensure that the dataset is not restricted to one specific geographic area, making it more versatile and suitable for a wider range of applications.

- Evaluation of additional machine learning algorithms. Testing additional algorithms on the newly constructed dataset could provide valuable insight into which classification method is best suited to the task at hand. The evaluation should consider not only classification accuracy but also processing speed, as real-time performance is essential for effective maritime vessel surveillance, especially in military settings.

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| 2D | two-dimensional |
| AI | artificial intelligence |
| AIS | automatic identification system |
| AUC | area under the curve |
| BoW | bag-of-words |
| CA | classification accuracy |
| CNN | convolutional neural network |
| CRISP-DM | Cross-Industry Standard Process for Data Mining |
| DM | data mining |
| EM | electromagnetic |
| HDR | High Dynamic Range |
| HPC | High-Performance Computing |
| IR | Infrared |
| kNN | k-Nearest Neighbors |
| LAWS | lethal autonomous weapons systems |
| MDMP | military decision-making process |
| MRI | Magnetic Resonance Imaging |
| NATO | North Atlantic Treaty Organization |
| NOAA | National Oceanic and Atmospheric Administration |
| OODA | Observe, Orient, Decide, Act |
| PPI | pixels per inch |
| RBF | Radial Basis Function |
| ReLU | Rectified Linear Unit |
| RGB | Red, Green, Blue |
| RGB-D | RGB-Depth |
| RMP | Recognized Maritime Picture |
| ROC | Receiver Operating Characteristic |
| SAR | synthetic-aperture radar |
| SIFT | Scale-Invariant Feature Transform |
| SURF | Speeded-Up Robust Features |
| SVM | support vector machine |
| UAV | unmanned aerial vehicle |

References

1. Scott-Samuel, N.E.; Baddeley, R.; Palmer, C.E.; Cuthill, I.C. Dazzle camouflage affects speed perception. PLoS ONE 2011, 6, e20233.
2. White, E. Technicities of deception: Dazzle camouflage, avant-gardes and sensory augmentation in the First World War. Mod. Cult. 2017, 12, 36–58.
3. de Reus, N.M.; Kerbusch, P.J.M.; Schadd, M.P.D.; de Vos, A. Geospatial Analysis for Machine Learning in Tactical Decision Support. STO-MP-MSG-184 2021. Available online: https://www.researchgate.net/publication/357768410_Geospatial_analysis_for_Machine_Learning_in_Tactical_Decision_Support (accessed on 28 December 2024).
4. Surdu, J.R.; Kittka, K. Deep Green: Commander’s tool for COA’s concept. Comput. Commun. Control. Technol. 2008, 34, 45–51.
5. Chen, C.; Kong, D.F.; Du, Z.J.; Li, R. Modeling and solution of COA development based on timed influence net and game theory. Appl. Math. Model. 2014, 38, 5269–5278.
6. Christie, E.H.; Ertan, A.; Adomaitis, L.; Klaus, M. Regulating lethal autonomous weapon systems: Exploring the challenges of explainability and traceability. AI Ethics 2024, 4, 229–245.
7. Righetti, L.; Pham, Q.C.; Madhavan, R.; Chatila, R. Lethal autonomous weapon systems [ethical, legal, and societal issues]. IEEE Robot. Autom. Mag. 2018, 25, 123–126.
8. Orange Data Mining. University of Ljubljana. Available online: https://orangedatamining.com/ (accessed on 28 December 2024).
9. Şengül, B.; Yılmaz, F.; Uğurlu, Ö. Safety–security analysis of maritime surveillance systems in critical marine areas. Sustainability 2023, 15, 16381.
10. Burmeister, H.C.; Constapel, M. Autonomous collision avoidance at sea: A survey. Front. Robot. AI 2021, 8, 739013.
11. Dunn, D.C.; Jablonicky, C.; Crespo, G.O.; McCauley, D.J.; Kroodsma, D.A.; Boerder, K.; Gjerde, K.M.; Halpin, P.N. Empowering high seas governance with satellite vessel tracking data. Fish Fish. 2018, 19, 729–739.
12. Gonçalves, L.; Damas, B. Automatic detection of rescue targets in maritime search and rescue missions using UAVs. In Proceedings of the International Conference on Unmanned Aircraft Systems (ICUAS), Dubrovnik, Croatia, 21–24 June 2022; pp. 1638–1643.
13. Shanthi, T.S.; Dheepanbalaji, L.; Priya, R.; Kumar, V.D.A.; Kumar, A.; Sindhu, P.; Kumar, A. Illegal fishing, anomalous vessel behavior detection through automatic identification system. Mater. Today Proc. 2022, 62, 4685–4690.
14. Emmens, T.; Amrit, C.; Abdi, A.; Ghosh, M. The promises and perils of Automatic Identification System data. Expert Syst. Appl. 2021, 178, 114975.
15. Jiang, Z.; Zhang, L.; Li, W. A machine vision method for the evaluation of ship-to-ship collision risk. Heliyon 2024, 10, e25105.
16. Huang, Z.; Hu, Q.; Mei, Q.; Yang, C.; Qu, Z. Identity recognition on waterways: A novel ship information tracking method based on multimodal data. J. Navig. 2021, 74, 1336–1352.
17. Zardoua, Y.; Sebbar, B.; Chbeine, M.; Astito, A.; Boulaala, M. Role and Integration of Image Processing Systems in Maritime Target Tracking. arXiv 2024.
18. Copple, R. The Fallacy of Unambiguous Warning. US Army War College. Available online: https://publications.armywarcollege.edu/News/Display/Article/3890315/the-fallacy-of-unambiguous-warning/ (accessed on 27 December 2024).
19. Xu, H.; Huang, L.; Chen, Y.; Zhu, J.; Zeng, H. Unsupervised military-civilian cross-domain vessel re-identification using improved momentum contrast learning. In Proceedings of the 2024 International Conference on New Trends in Computational Intelligence (NTCI), Qingdao, China, 18–20 October 2024; pp. 106–110.
20. Soori, M.; Jough, F.G.J.; Dastres, R.; Arezoo, B. AI-based decision support systems in industry 4.0, A review. J. Econ. Technol. 2024.
21. Državni Zavod za Statistiku Republike Hrvatske (Croatian Bureau of Statistics). Statistički Ljetopis Republike Hrvatske (Statistical Yearbook of the Republic of Croatia). 2018. Zagreb. ISSN 1333-3305. Available online: https://podaci.dzs.hr/media/wsdkedwa/sljh2018.pdf (accessed on 5 February 2025).
22. Kanjir, U.; Greidanus, H.; Oštir, K. Vessel detection and classification from spaceborne optical images: A literature survey. Remote Sens. Environ. 2018, 207, 1–26.
23. Lu, Y.; Ma, H.; Smart, E.; Vuksanovic, B.; Chiverton, J.; Prabhu, S.R.; Glaister, M.; Dunston, E.; Hancock, C. Fusion of camera-based vessel detection and AIS for maritime surveillance. In Proceedings of the 26th International Conference on Automation and Computing (ICAC), Portsmouth, UK, 2–4 September 2021; pp. 1–6.
24. International Maritime Organization (IMO). AIS Transponders. Available online: https://www.imo.org/en/OurWork/Safety/Pages/AIS.aspx (accessed on 13 January 2025).
25. McCauley, D.J.; Woods, P.; Sullivan, B.; Bergman, B.; Jablonicky, C.; Roan, A.; Hirshfield, M.; Boerder, K.; Worm, B. Ending hide and seek at sea. Science 2016, 351, 1148–1150.
26. Škrlec, Z.; Bićanić, Z.; Tadić, J. Specific requirements for automated identification system on NATO vessels. In Proceedings of the 5th International Maritime Science Conference, Solin, Croatia, 22–23 April 2013; pp. 50–58.
27. Huang, I.-L.; Lee, M.-C.; Nieh, C.-Y.; Huang, J.-C. Ship classification based on AIS data and machine learning methods. Electronics 2024, 13, 98.
28. Sheng, K.; Liu, Z.; Zhou, D.; He, A.; Feng, C. Research on ship classification based on trajectory features. J. Navig. 2018, 71, 100–116.
29. Baeg, S.; Hammond, T. Ship type classification based on the ship navigating trajectory and machine learning. In Proceedings of the ACM IUI Workshops, Sydney, Australia, 27–31 March 2023.
30. Yang, D.; Wu, L.; Wang, S.; Jia, H.; Li, K.X. How big data enriches maritime research—A critical review of automatic identification system (AIS) data applications. Transp. Rev. 2019, 39, 755–773.
31. Tsokas, A.; Rysz, M.; Pardalos, P.M.; Dipple, K. SAR data applications in earth observation: An overview. Expert Syst. Appl. 2022, 205, 117342.
32. Ma, M.; Chen, J.; Liu, W.; Yang, W. Ship classification and detection based on CNN using GF-3 SAR images. Remote Sens. 2018, 10, 2043.
33. Sannapu, A.R.; Nayak, P.; Charan, U.R.; Keshava, S.G.; Naidu, K.S. Classification of marine vessels using deep learning models based on SAR images. In Proceedings of the International Conference on Inventive Computation Technologies (ICICT), Online, 20–22 July 2022; pp. 123–129.
34. Wang, Y.; Wang, C.; Zhang, H. Ship classification in high-resolution SAR images using deep learning of small datasets. Sensors 2018, 18, 2929.
35. Broyles, C. (LTC). Dark Rifle Way of War Ep. 3—Outmaneuver the Enemy. Cascade Rifles (YouTube channel), 2022. Available online: https://www.youtube.com/watch?v=mO1M5-SmlZw (accessed on 4 July 2024).
36. Boyd, J.R. Patterns of Conflict. Presentation, 1986. Available online: https://www.coljohnboyd.com/static/documents/1986-12__Boyd_John_R__Patterns_of_Conflict__PPT-PDF.pdf (accessed on 4 July 2024).
37. Boyd, J.R. The Essence of Winning & Losing. Presentation, 1995. Available online: https://www.coljohnboyd.com/static/documents/1995-06-28__Boyd_John_R__The_Essence_of_Winning_and_Losing__PPT-PDF.pdf (accessed on 4 July 2024).
38. Boyd, J.R.; Hammond, G.T. A Discourse on Winning and Losing; Air University Press, 386 Maxwell Air Force Base: Montgomery, AL, USA, 2018; p. 400.
39. Abba, S.; Bizi, A.M.; Lee, J.-A.; Bakouri, S.; Crespo, M.L. Real-time object detection, tracking, and monitoring framework for security surveillance systems. Heliyon 2024, 10, e34922.
40. Teixeira, E.; Araujo, B.; Costa, V.; Mafra, S.; Figueiredo, F. Literature review on ship localization, classification, and detection methods based on optical sensors and neural networks. Sensors 2022, 22, 6879.
41. Máttyus, G. Near real-time automatic marine vessel detection on optical satellite images. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2013, XL-1/W1, 233–237.
42. Feineigle, P.A.; Morris, D.D.; Snyder, F.D. Ship recognition using optical imagery for harbor surveillance. In Proceedings of the Association for Unmanned Vehicle Systems International (AUVSI), Washington, DC, USA, June 2007; pp. 1–17.
43. Du, Q.; Zhang, Y.; Yang, X.; Liu, W. Ship target classification based on Hu invariant moments and ART for maritime video surveillance. In Proceedings of the 4th International Conference on Transportation Information and Safety (ICTIS), Banff, AB, Canada, 8–10 August 2017; IEEE: Piscataway, NJ, USA, 2017; pp. 414–419.
44. Lowe, D.G. Distinctive Image Features from Scale-Invariant Keypoints. Int. J. Comput. Vis. 2004, 60, 91–110.
45. Leclerc, M.; Tharmarasa, R.; Florea, M.C.; Boury-Brisset, A.-C.; Kirubarajan, T.; Duclos-Hindié, N. Ship classification using deep learning techniques for maritime target tracking. In Proceedings of the 21st International Conference on Information Fusion (FUSION), Cambridge, UK, 10–13 July 2018; pp. 737–744.
46. Mishra, N.K.; Kumar, A.; Choudhury, K. Deep convolutional neural network based ship images classification. Def. Sci. J. 2021, 71, 200–208.
47. Leonidas, L.A.; Jie, Y. Ship classification based on improved convolutional neural network architecture for intelligent transport systems. Information 2021, 12, 302.
48. Zhenzhen, L.; Baojun, Z.; Linbo, T.; Zhen, L.; Fan, F. Ship classification based on convolutional neural networks. J. Eng. 2019, 21, 7343–7346.
49. Gundogdu, E.; Solmaz, B.; Yücesoy, V.; Koç, A. Marvel: A large-scale image dataset for maritime vessels. In Computer Vision–ACCV 2016: 13th Asian Conference on Computer Vision, Revised Selected Papers, Part V 13; Springer: Cham, Switzerland, 2016; pp. 165–180.
50. Jain, A. Game of Deep Learning: Ship Datasets. Available online: https://www.kaggle.com/datasets/arpitjain007/game-of-deep-learning-ship-datasets (accessed on 5 February 2025).
51. Gallego, A.-J.; Pertusa, A.; Gil, P. Automatic ship classification from optical aerial images with convolutional neural networks. Remote Sens. 2018, 10, 511.
52. Liu, Y.; Cui, H.Y.; Kuang, Z.; Li, G.Q. Ship detection and classification on optical remote sensing images using deep learning. In ITM Web of Conferences, Proceedings of The 4th Annual International Conference on Information Technology and Applications (ITA 2017), Guangzhou, China, 26–28 May 2017; EDP Sciences: Les Ulis, France, 2017; Volume 12, p. 05012.
53. Polap, D.; Wlodarczyk-Sielicka, M. Classification of non-conventional ships using a neural Bag-of-Words mechanism. Sensors 2020, 20, 1608.
54. Bay, H.; Ess, A.; Tuytelaars, T.; Van Gool, L. Speeded-up robust features (SURF). Comput. Vis. Image Underst. 2008, 110, 346–359.
55. Syms, M.S.; Isenor, A.W.; Chivari, B.; DeBaie, A.; Hogue, A.; Glessing, B. Building a maritime picture in the era of big data: The development of the geospatial communication interface+. In Proceedings of the International Conference on Military Communication and Information Systems (ICMCIS), The Hague, The Netherlands, 4–5 May 2021; pp. 1–7.
56. Karna, H.; Mikulićić, N.; Škiljo, M. Towards Enhanced Recognized Maritime Picture. In Proceedings of the 7th International Conference on Smart and Sustainable Technologies (SpliTech), Bol, Croatia, 5–8 July 2022; pp. 1–4.
57. Chauvin, C.; Clostermann, J.-P.; Hoc, J.-M. Situation awareness and the decision-making process in a dynamic situation: Avoiding collisions at sea. J. Cogn. Eng. Decis. Mak. 2008, 2, 1–23.
58. Song, R.; Papadimitriou, E.; Negenborn, R.R.; Gelder, P.V. Integrating situation-aware knowledge maps and dynamic window approach for safe path planning by maritime autonomous surface ships. Ocean Eng. 2024, 311, 118882.
59. Paul, C.; Clarke, C.P.; Triezenberg, B.L.; Manheim, D.; Wilson, B. Requirements for Better C2 and Situational Awareness of the Information Environment; RAND Corporation: Santa Monica, CA, USA, 2018; Available online: https://www.rand.org/pubs/research_briefs/RB10032.html (accessed on 30 December 2024).
60. Matsuzaka, Y.; Yashiro, R. AI-based computer vision techniques and expert systems. AI 2023, 4, 289–302.
61. Dehghani, N.; Levin, M. Bio-inspired AI: Integrating biological complexity into artificial intelligence. arXiv 2024.
62. Malowany, D.; Guterman, H. Biologically inspired visual system architecture for object recognition in autonomous systems. Algorithms 2020, 13, 167.
63. Mahadevkar, S.V.; Khemani, B.; Patil, S.; Kotecha, K.; Vora, D.R.; Abraham, A.; Gabralla, L. A review on machine learning styles in computer vision—Techniques and future directions. IEEE Access 2022, 10, 107293–107329.
64. Aldoseri, A.; Al-Khalifa, K.N.; Hamouda, A.M. Re-thinking data strategy and integration for artificial intelligence: Concepts, opportunities, and challenges. Appl. Sci. 2023, 13, 7082.
65. Cernadas, E. Applications of computer vision, 2nd Edition. Electronics 2024, 13, 3779.
66. Svenmarck, P.; Luotsinen, L.; Nilsson, M.; Schubert, J. Possibilities and Challenges for Artificial Intelligence in Military Applications. NATO S&T Organization. 2018. STO-MP-IST-160, S1-5-1–S1-5-16. Available online: https://www.sto.nato.int/publications/STO%20Meeting%20Proceedings/STO-MP-IST-160/MP-IST-160-S1-5.pdf (accessed on 30 December 2024).
67. Žigulić, N.; Glučina, M.; Lorencin, I.; Matika, D. Military decision-making process enhanced by image detection. Information 2024, 15, 11.
68. Hwang, K.S.; Ma, J. Military camouflaged object detection with deep learning using dataset development and combination. J. Def. Model. Simul. Appl. Methodol. Technol. 2024.
69. Budiharto, W.; Andreas, V.; Suroso, J.S.; Gunawan, A.A.S.; Irwansyah, E. Development of tank-based military robot and object tracker. In Proceedings of the 2019 4th Asia-Pacific Conference on Intelligent Robot Systems (ACIRS), Nagoya, Japan, 13–15 July 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 221–224.
70. Budiharto, W.; Irwansyah, E.; Suroso, J.S.; Gunawan, A.A.S. Design of object tracking for military robot using PID controller and computer vision. ICIC Express Lett. 2020, 14, 289–294.
71. Petković, M.; Vujović, I.; Lušić, Z.; Šoda, J. Image dataset for neural network performance estimation with application to maritime ports. J. Mar. Sci. Eng. 2023, 11, 578.
72. Bradski, G. The OpenCV library. Dr. Dobb’s J. Softw. Tools 2000, 120, 122–125.
73. Imamagić, E.; Belavić, M. Croatian National Advanced Computing Resources Supek and Vrančić. In Proceedings of the Austrian-Slovenian HPC Meeting 2024—ASHPC24, Seeblickhotel Grundlsee, Austria, 10–13 June 2024.
74. Shah, K.; Shah, N.; Sawant, V.; Parolia, N. (Eds.) Practical Data Mining Techniques and Applications, 1st ed.; Auerbach Publications: Boca Raton, FL, USA, 2023.
75. Shu, X.; Ye, Y. Knowledge discovery: Methods from data mining and machine learning. Soc. Sci. Res. 2023, 110, 102817.
76. Wirth, R.; Hipp, J. CRISP-DM: Towards a standard process model for data mining. In Proceedings of the 4th International Conference on the Practical Applications of Knowledge Discovery and Data Mining, Manchester, UK, 11–13 April 2000; pp. 29–39.
77. Martínez-Plumed, F.; Contreras-Ochando, L.; Ferri, C.; Hernández-Orallo, J.; Kull, M.; Lachiche, N.; Ramírez-Quintana, M.J.; Flach, P. CRISP-DM twenty years later: From data mining processes to data science trajectories. IEEE Trans. Knowl. Data Eng. 2021, 33, 3048–3061.
78. Lundén, N.; Bekar, E.T.; Skoogh, A.; Bokrantz, J. Domain knowledge in CRISP-DM: An application case in manufacturing. IFAC-Pap. 2023, 56, 7603–7608.
79. Schröer, C.; Kruse, F.; Gómez, J.M. A systematic literature review on applying CRISP-DM process model. Procedia Comput. Sci. 2021, 181, 526–534.
80. Saltz, J.S.; Hotz, N. Identifying the most common frameworks data science teams use to structure and coordinate their projects. In Proceedings of the IEEE International Conference on Big Data (Big Data), Atlanta, GA, USA, 10–13 December 2020; pp. 2038–2042.
81. CRISP-DM Is Still the Most Popular Framework for Executing Data Science Projects. Data Science PM. 2024. Available online: https://www.datascience-pm.com/crisp-dm-still-most-popular/ (accessed on 30 December 2024).
82. AIS Ship Types. Available online: https://api.vtexplorer.com/docs/ref-aistypes.html (accessed on 15 November 2024).
83. AIS Vessel Type and Group Codes Used by The Marine Cadastre Project. Available online: https://coast.noaa.gov/data/marinecadastre/ais/VesselTypeCodes2018.pdf (accessed on 20 November 2024).
84. Hunter, J.D. Matplotlib: A 2D graphics environment. Comput. Sci. Eng. 2007, 9, 90–95.
85. Szegedy, C.; Vanhoucke, V.; Ioffe, S.; Shlens, J.; Wojna, Z. Rethinking the Inception architecture for computer vision. arXiv 2015.
86. Stanford Vision Lab, Stanford University, Princeton University. ImageNet. Available online: https://image-net.org/index.php (accessed on 20 November 2024).
87. Blanco-Mallo, E.; Morán-Fernández, L.; Remeseiro, B.; Bolón-Canedo, V. Do all roads lead to Rome? Studying distance measures in the context of machine learning. Pattern Recognit. 2023, 141, 109646.
88. Kartowisastro, I.H.; Latupapua, J. A Comparison of Adaptive Moment Estimation (Adam) and RMSProp Optimisation Techniques for Wildlife Animal Classification Using Convolutional Neural Networks. Rev. Intell. Artif. 2023, 37, 1023–1030.
89. Dogo, E.M.; Afolabi, O.J.; Nwulu, N.I.; Twala, B.; Aigbavboa, C.O. A comparative analysis of gradient descent-based optimization algorithms on convolutional neural networks. In Proceedings of the 2018 International Conference on Computational Techniques, Electronics and Mechanical Systems (CTEMS), Belagavi, India, 21–23 December 2018; IEEE: Piscataway, NJ, USA, 2018; pp. 92–99.
90. Majnik, M.; Bosnić, Z. ROC analysis of classifiers in machine learning: A survey. Intell. Data Anal. 2013, 17, 531–558.
91. Flach, P.A.; Hernández-Orallo, J.; Ferri, C. A coherent interpretation of AUC as a measure of aggregated classification performance. In Proceedings of the 28th International Conference on International Conference on Machine Learning (ICML’11), Bellevue, WA, USA, 28 June–2 July 2011; pp. 657–664.
92. Carrington, A.M.; Manuel, D.G.; Fieguth, P.W.; Ramsay, T.; Osmani, V.; Wernly, B.; Bennett, C.; Hawken, S.; Magwood, O.; Sheikh, Y.; et al. Deep ROC analysis and AUC as balanced average accuracy, for improved classifier selection, audit and explanation. IEEE Trans. Pattern Anal. Mach. Intell. 2023, 45, 329–341.
93. Luque, A.; Mazzoleni, M.A.; Carrasco, A.; Ferramosca, A. Visualizing classification results: Confusion star and confusion gear. IEEE Access 2022, 10, 1659–1677.
94. Perri, D.; Simonetti, M.; Gervasi, O. Synthetic data generation to speed-up the object recognition pipeline. Electronics 2022, 11, 2.
95. Huang, L.; Li, W.; Chen, C.; Zhang, F.; Lang, H. Multiple features learning for ship classification in optical imagery. Multimed. Tools Appl. 2018, 77, 13363–13389.
96. Sullivan, M.D.R.; Shah, M. Visual surveillance in maritime port facilities. SPIE Vis. Inf. Process. XVII 2008, 6978, 291–298.

| Type | Description | No. of Images |
|---|---|---|
| Cargo | Vessel designed to transport cargo, goods, and materials in bulk. | 245 |
| Container | Vessel that carries all its load in truck-size intermodal containers. | 276 |
| Cruise | Vessel designed to provide passengers with accommodation and entertainment. | 241 |
| Fishing | Vessel used to locate, catch, and preserve fish and other aquatic animals at sea. | 142 |
| Military | Naval vessel used to project power at sea or for naval warfare. | 197 |
| Passenger | Vessel specially designed to transport persons and their cargo. | 429 |
| Pleasure | Vessel designed for navigation and recreation while providing comfort. | 358 |
| Sailing | Vessel with sufficient sail area for navigation, used for cruising or racing. | 151 |
| Special | Self-propelled vessel serving diverse and often multiple functions (e.g., tugboat and dragger). | 214 |
| Tanker | Vessel designed to transport or store liquids or gases in bulk. | 357 |
| Non-Class | Non-vessel objects, floating or otherwise. | 105 |
| Total | | 2715 |

| Model | AUC | CA | F1-Score | Precision | Recall |
|---|---|---|---|---|---|
| SVM | 0.991 | 0.900 | 0.902 | 0.914 | 0.900 |
| Neural network | 0.992 | 0.904 | 0.904 | 0.907 | 0.904 |
| Logistic regression | 0.992 | 0.882 | 0.881 | 0.885 | 0.882 |
| kNN | 0.968 | 0.856 | 0.858 | 0.861 | 0.856 |
| Naïve Bayes | n/a | 0.779 | 0.790 | 0.821 | 0.779 |
| Decision tree | 0.769 | 0.609 | 0.619 | 0.641 | 0.609 |
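
In the table above, the Recall column equals the CA column for every model. This is what one would expect if precision, recall, and F1 are averaged over classes with weights proportional to class support (an assumption, since the averaging scheme is not stated): weighted recall is the sum over classes c of (n_c / n) · (TP_c / n_c), which simplifies to the sum of TP_c / n, i.e., exactly the classification accuracy. The sketch below computes CA and the support-weighted scores from true and predicted labels; `weighted_metrics` is a hypothetical helper, and AUC is omitted because it requires predicted class probabilities rather than hard labels.

```python
from collections import Counter
from typing import Dict, Sequence


def weighted_metrics(y_true: Sequence[str], y_pred: Sequence[str]) -> Dict[str, float]:
    """Classification accuracy plus support-weighted precision, recall, and F1."""
    n = len(y_true)
    support = Counter(y_true)      # n_c: number of true instances per class
    predicted = Counter(y_pred)    # number of predictions per class
    correct = Counter(t for t, p in zip(y_true, y_pred) if t == p)  # TP_c

    ca = sum(correct.values()) / n
    precision = recall = f1 = 0.0
    for cls, n_c in support.items():
        tp = correct[cls]
        p = tp / predicted[cls] if predicted[cls] else 0.0
        r = tp / n_c
        f = 2 * p * r / (p + r) if p + r else 0.0
        weight = n_c / n  # each class counts in proportion to its support
        precision += weight * p
        recall += weight * r  # sums to CA, since weight * r == tp / n
        f1 += weight * f
    return {"CA": ca, "Precision": precision, "Recall": recall, "F1": f1}
```

For five toy labels `["cargo", "cargo", "tanker", "tanker", "sailing"]` with one cargo image predicted as tanker, both CA and weighted recall come out as 0.8, while weighted precision and F1 differ.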

| Type | Input Set: Train | Input Set: Test | Test Set: Successful | Test Set: Unsuccessful | Success Rate [%] |
|---|---|---|---|---|---|
| Cargo | 226 | 19 | 10 | 5 | 66.67 |
| Container | 253 | 23 | 13 | 2 | 86.67 |
| Cruise | 216 | 25 | 16 | 1 | 94.12 |
| Fishing | 132 | 10 | 11 | 2 | 84.62 |
| Military | 175 | 22 | 13 | 2 | 86.67 |
| Passenger | 380 | 49 | 20 | 1 | 95.24 |
| Pleasure | 316 | 42 | 22 | 4 | 84.62 |
| Sailing | 137 | 14 | 17 | 0 | 100.00 |
| Special | 190 | 24 | 10 | 5 | 66.67 |
| Tanker | 321 | 36 | 25 | 2 | 92.59 |
| Non-Class | 98 | 7 | 16 | 3 | 84.21 |
| Total | 2444 | 271 | 173 | 27 | 86.50 |
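
The percentage column in the table above is the share of correctly classified images among the evaluated ones, i.e., Successful / (Successful + Unsuccessful) × 100. The short sketch below recomputes it from the counts in the table; the variable and function names are illustrative.

```python
# (successful, unsuccessful) counts per class, copied from the table above
results = {
    "Cargo": (10, 5), "Container": (13, 2), "Cruise": (16, 1),
    "Fishing": (11, 2), "Military": (13, 2), "Passenger": (20, 1),
    "Pleasure": (22, 4), "Sailing": (17, 0), "Special": (10, 5),
    "Tanker": (25, 2), "Non-Class": (16, 3),
}


def success_rate(ok: int, failed: int) -> float:
    """Share of correctly classified images, in percent, rounded to two decimals."""
    return round(100 * ok / (ok + failed), 2)


per_class = {cls: success_rate(*counts) for cls, counts in results.items()}

# The Total row pools all counts (a micro-average) rather than averaging the rates.
total_ok = sum(ok for ok, _ in results.values())
total_failed = sum(failed for _, failed in results.values())
overall = success_rate(total_ok, total_failed)
```

Rounded to two decimals, 16/17 gives 94.12 and 11/13 gives 84.62. The overall 86.50 comes from pooling the counts (173/200). Averaging the eleven per-class rates instead would give about 85.6%, because each class then counts equally: Cargo and Special (15 evaluated images each, 66.67%) would weigh as much as Tanker (27 images, 92.59%).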

| Model | Maritime Classes | Average Accuracy [%] |
|---|---|---|
| [95] | barges, container ships, cargo ships, and tankers | 94.63 |
| [95] | barges, container ships, cargo ships, and tankers | 86.87 |
| [95] | merchant ships, sailing ships, medium passenger ships, medium “other” ships, tugboats, and small boats | 85.07 |
| [96] | container ships, speedboats, tanker ships, tugboats, cruise ships, and fishing boats | 88.1 |
| [53] | cargo, military, tanker, yacht, and motorboat | 79.58 |
| Proposed model | cargo, container ships, cruise, fishing, military, passenger, pleasure, sailing, special, tanker, and non-class | 86.50 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Karna, H.; Braović, M.; Gudelj, A.; Buličić, K. Artificial Intelligence-Based Prediction Model for Maritime Vessel Type Identification. Information 2025, 16, 367. https://doi.org/10.3390/info16050367
