Abstract

The fourth industrial revolution, driven by Artificial Intelligence (AI) and Generative AI (GenAI), is rapidly transforming human life, with profound effects on education, employment, operational efficiency, social behavior, and lifestyle. While AI tools potentially offer unprecedented support in learning and problem-solving, their integration into education raises critical questions about cognitive development and long-term intellectual capacity. Drawing parallels to previous industrial revolutions that reshaped human biological systems, this paper explores how GenAI introduces a new level of abstraction that may relieve humans from routine cognitive tasks, potentially enhancing performance but also risking a cognitively sedentary condition. We position levels of abstraction as the central theoretical lens to explain when GenAI reallocates cognitive effort toward higher-order reasoning and when it induces passive reliance. We present a conceptual model of AI-augmented versus passive trajectories in cognitive development and illustrate its utility through a simulation-platform case study, which exposes concrete failure modes and the critical role of expert interventions. Rather than a hypothesis-testing empirical study, this paper offers a conceptual synthesis and concludes with mitigation strategies organized by abstraction layer, along with platform-centered implications for pedagogy, curriculum design, and assessment.

1. Introduction

Artificial Intelligence (AI) and Generative AI (GenAI) are rapidly transforming education, offering powerful tools for instruction, assessment, and knowledge work. Yet this transformation brings significant challenges, particularly around dependency and the possible erosion of independent thought. Over-reliance on AI may lead individuals to think algorithmically without deep understanding, resulting in diminished creativity, skill degradation, and reduced autonomy. In educational contexts, excessive reliance by both students and educators risks undermining critical thinking and problem-solving capabilities, which are essential for intellectual fulfillment and societal contribution.

GenAI, while capable of generating fluent content and assisting with routine reasoning, lacks consciousness, agency, emotional intelligence, and ethical awareness. It cannot grasp the subtleties of human relationships or the contextual depth required for holistic learning. Nevertheless, GenAI represents a major leap in cognitive support, capable of processing vast data, adapting to individual learning styles, and offering personalized feedback. This duality, i.e., enhancement versus dependency [1,2,3], raises a central question: how can we ensure that AI augments rather than diminishes human cognition?

To address this, we propose a levels-of-abstraction lens as the central theoretical framework. This lens helps explain when GenAI reallocates cognitive effort toward higher-order reasoning and when it induces passive reliance. As AI absorbs routine tasks such as drafting, scaffolding, and pattern completion, it lifts learners to higher abstraction layers, where they may lose touch with foundational cognitive operations such as decomposition, debugging, and recall. Neuroplasticity suggests that the brain adapts to the tasks it routinely performs; if AI takes over these lower-level functions, a “use it or lose it” effect may emerge, leading to cognitive atrophy. This phenomenon mirrors earlier industrial revolutions, which reshaped biological systems through reduced physical exertion and altered lifestyles. Today, the risk is not muscular atrophy but cognitive sedentism.

To operationalize this lens, we present a simulation-platform case study, where GenAI was used to assist in developing a virtualization platform for wireless sensor network systems. The case reveals concrete failure modes, such as incorrect socket configurations and non-functional callbacks, and shows how expert interventions at lower abstraction layers are necessary to convert AI-generated drafts into functional systems. This illustrates that active engagement across abstraction layers sustains cognitive development, while passive acceptance of AI outputs leads to recurring errors and diminished understanding.

This paper offers a conceptual synthesis rather than a hypothesis-testing empirical study. We conducted a targeted narrative review of recent literature across education technology, cognitive science, and human–computer interaction, and we present the case study as an explanatory vignette. The remainder of the paper develops the abstraction lens, analyzes cognitive impacts, presents the case study, and concludes with mitigation strategies organized by abstraction layer. Our goal is to support educational systems in evolving alongside AI, preserving foundational skills while embracing new cognitive tools.

2. Industrial Revolution and Human Development

Advancements in technology and modern conveniences have brought numerous benefits, but they have also introduced new challenges to our biological well-being [4]. This section presents selected examples of human biological development since the Stone Age, focusing on the health side effects of modern industrial revolutions. Although simplified, these examples reflect the paradox of improved lifestyles coinciding with biological retreat [5,6,7,8].

Lifestyle changes have led to the gradual weakening of limbs, bones, and muscles, resulting in reduced structural support and increased health challenges. For instance, the shift away from physically demanding tasks has contributed to weaker muscles and lower bone density. Desk jobs and prolonged sitting have led to poor posture and weakened core muscles, manifesting in back pain and spinal misalignment. The decline in grip strength over time has been attributed in part to reliance on tools, whereas manual labor and climbing historically developed stronger grip strength. Similarly, dietary changes, particularly the move toward softer, processed foods, have impacted jaw structure and chewing function, as tougher, fibrous foods once required greater masticatory effort [7].

Regular physical activity and diets rich in natural, unprocessed foods supported cardiovascular health in earlier populations. In contrast, modern diets high in processed foods have contributed to increased rates of hypertension and atherosclerosis [8]. The advent of agriculture and food cultivation introduced more starchy and processed foods, which, over time, may have contributed to a decrease in stomach size as well as to overeating and obesity. Sugary snacks and beverages have increased the prevalence of tooth decay and gum disease, which in turn can impair digestion by hindering chewing.

Antibiotic use, dietary shifts, and reduced exposure to diverse environments have led to a decline in microbiome diversity in modern populations. This reduction may compromise digestive function and increase the risk of inflammatory bowel diseases and metabolic disorders. Low-fiber diets and sedentary lifestyles can slow intestinal peristalsis, resulting in constipation and other digestive issues, conditions that contrast sharply with the efficient digestion promoted by physically demanding lifestyles. Excessive intake of processed foods and alcohol has also contributed to liver damage, including fatty liver disease and cirrhosis [6].

Modern sedentary lifestyles, marked by prolonged sitting and reduced physical exertion, have contributed to diminished lung capacity. Exposure to air pollution further damages lung tissue and impairs respiratory function, exacerbating conditions such as asthma and chronic obstructive pulmonary disease. Stress and poor posture affect breathing patterns, with chronic stress leading to shallow breathing and poor posture restricting diaphragm movement. The hygiene hypothesis suggests that reduced exposure to diverse microbial environments due to modern sanitation practices may be linked to increased allergic and respiratory conditions. Over time, these habits may weaken respiratory muscles, including the diaphragm and intercostal muscles [9].

These examples illustrate the biological consequences of reduced physical engagement, a pattern often described as the emergence of “Western diseases” [6]. As we now enter the fourth industrial revolution, driven by artificial intelligence and automation, a parallel concern arises: could our mental and cognitive systems be subject to a similar form of sedentary decline?

Just as physical inactivity leads to muscular and systemic atrophy, cognitive inactivity, particularly when routine mental tasks are delegated to AI, may result in diminished critical thinking, problem-solving, and memory retention. GenAI elevates problem-solving to a higher level of abstraction, relieving humans from granular analysis and synthesis. While this abstraction can enhance productivity, it also risks bypassing the foundational cognitive exercises that sustain intellectual resilience. The levels-of-abstraction lens helps explain this shift: when learners and professionals operate only at upper layers, i.e., consuming AI-generated outputs without engaging in lower-level reasoning, they may experience a form of cognitive deconditioning. Education and training must therefore evolve to counterbalance this trend, ensuring that learners remain active across all abstraction layers to preserve and strengthen mental faculties.

3. Levels-of-Abstraction Lens

Early experiences with AI tools reflect the emergence of a new level of abstraction that extends the scale of human interaction with problem domains beyond traditional cognitive limits. This abstraction aligns with the evolution of science and technology, enabling humans to operate at increasingly complex levels without engaging directly with foundational details.

The trajectory of electronic computation illustrates this shift. Since the 1940s, systems have evolved from discrete hardware components like vacuum tubes and transistors to integrated circuits, microprocessors, and now quantum computing. Each stage abstracted away lower-level design complexities, allowing users to interact with higher-level programmable systems. Software development followed a similar path: from machine code to structured programming, object-oriented paradigms, and now AI-driven development. These transitions elevated the primitives used in system analysis and synthesis, enabling us to work at scales that exceed our natural memory and imagination.

Humans have consistently adapted to these abstraction layers, learning to treat increasingly complex components as basic building blocks. However, this adaptation often comes at the cost of distancing ourselves from the foundational principles upon which these systems are built. The question arises: do we still need to understand earlier levels of abstraction? Or are we becoming specialized in navigating only the upper layers, relying on tools to fill in the gaps?

This concern is not hypothetical. Consider everyday situations such as performing basic arithmetic during a power outage or navigating without GPS. In both cases, reliance on technology has replaced foundational skills, namely mental arithmetic and spatial memory, that were once essential. Similarly, in education, the compression of curricula to accommodate advanced topics has sometimes led to gaps in prerequisite knowledge. For example, the integration of control systems theory into undergraduate engineering programs introduced conflicts between program duration and foundational readiness, making it difficult for students to fully grasp the material.

These examples highlight a broader tension: while abstraction enables efficiency and scalability, it can also obscure the cognitive processes that underpin understanding. In the context of GenAI, this tension becomes more pronounced. GenAI tools allow users to bypass lower-level reasoning, such as decomposition, debugging, and iterative synthesis, by offering high-level solutions. While this can accelerate productivity, it risks creating a cognitively sedentary condition, where foundational mental effort is no longer exercised.

The levels-of-abstraction lens helps us frame this risk. Just as previous industrial revolutions led to biological atrophy through reduced physical exertion, the fourth industrial revolution may lead to cognitive atrophy through reduced mental exertion. If learners engage only with upper abstraction layers, consuming AI-generated outputs without interrogating their structure or logic, they may lose the ability to reason through problems independently. Education systems must therefore evolve to ensure that learners remain active across all abstraction layers, preserving foundational skills while embracing the benefits of advanced tools.

This paper uses a simulation platform case to operationalize this lens. The case demonstrates how expert interventions at lower abstraction layers, such as correcting protocol-level errors and refining AI-generated code, are essential for converting non-functional AI outputs into working systems. It shows that sustained cognitive engagement across layers is not only possible but necessary for meaningful learning and robust problem-solving.

4. Perceived Effects on Human Mental Capabilities

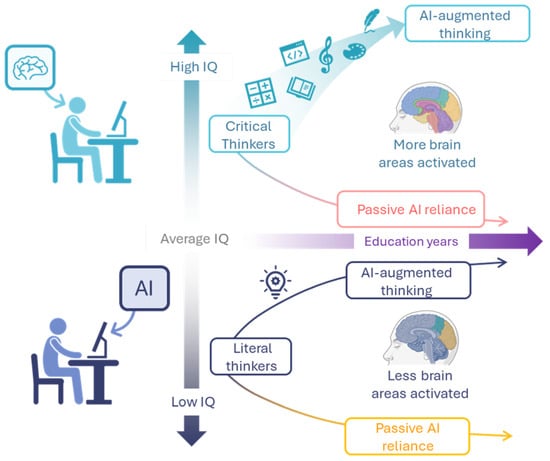

The integration of artificial intelligence into daily cognitive tasks has the potential to reshape human mental capabilities over time. While AI can serve as a powerful tool to enhance reasoning and creativity, its impact depends largely on how humans use it. Prolonged engagement with AI in an active, critical way, referred to as AI-augmented thinking, can stimulate higher-order cognitive processes and broader neural activation, thereby supporting intellectual growth. Conversely, habitual passive reliance on AI, where individuals delegate reasoning without meaningful engagement, may diminish cognitive effort and reduce brain activation, leading to potential declines in intellectual performance. As illustrated in Figure 1, the model posits that individuals with high cognitive ability may reinforce creative reasoning and even improve IQ through active AI use, while passive dependence poses a risk of cognitive decline. Similarly, those with average cognitive ability may experience substantial gains through AI-augmented thinking but face further reductions in IQ if they adopt a passive approach. These projected trajectories underscore the critical role of deliberate, interactive AI use in sustaining and enhancing human cognitive development.

Figure 1.

Long-term cognitive trajectories with AI. The model contrasts two paths: AI-augmented thinking, in which active, critical engagement with AI expands higher-order cognition, and passive reliance, in which routine delegation reduces practice of foundational primitives (recall, decomposition, debugging). Trajectories are shown for learners with higher and average baseline abilities. The model assumes neuroplastic adaptation to practiced tasks and posits that systematic engagement across abstraction layers sustains capacity. The case study included in this paper operationalizes this model: when users drop one layer (to protocols/interfaces), they correct AI proposals and remain on the augmented path; when they stay at upper layers and accept outputs, failure modes persist and complacency rises.

AI tools can influence human creativity both positively and negatively, depending on how they are used. Studies such as [10,11] suggest that automating routine tasks with AI can free up mental energy for higher-level creative work. However, excessive reliance on generative AI may discourage critical and original thinking, leading to a decline in problem-solving skills and creative exploration. Shackell and Williams [12,13] caution that using AI-generated templates or patterns may limit the pursuit of novel ideas, as creativity often thrives when individuals break away from established norms. When AI tools handle tasks effortlessly, individuals may become complacent and less willing to invest the effort required for creative breakthroughs. Effective use of AI requires understanding its limitations and adapting it to specific needs. Combining AI capabilities with human intuition and expertise can lead to truly original outcomes. Thus, AI may enhance or hinder creativity depending on the user’s level of engagement.

AI represents a natural progression in the evolution of problem-solving abstraction. Because this level now interfaces directly with everyday human activity, it has transformed how we live, work, and solve problems. Yet, alongside these conveniences lies a risk of cognitive atrophy, as noted in [14]. Over-trust in AI outcomes can lead to reduced questioning and diminished scrutiny. For example, the ease of using AI personal assistants to remember dates, phone numbers, and other information raises concerns about the decline of natural memory abilities. In cognitive psychology, memory is often likened to a muscle that requires regular exercise to remain strong. Depending on AI for memory tasks may lead to neglect of this vital mental function.

The decline in mental arithmetic skills has been observed for years, likely exacerbated by the widespread use of calculators. With AI tools now capable of performing complex calculations instantly, even basic arithmetic may seem cumbersome, reducing mathematical agility. Nosta and Stokel-Walker [15,16] highlight this trend, while Malik et al. [17] emphasize the importance of maintaining foundational cognitive skills in the face of increasing automation.

AI systems also impact critical thinking and problem-solving skills. While AI can analyze vast datasets and suggest solutions with speed and accuracy, this may lead to human complacency and reduced scrutiny. Many researchers argue that blind trust in machine judgment can weaken our habit of questioning and evaluating information [18,19,20]. As AI-generated solutions become more reliable, humans may defer to them without critical engagement, diminishing cognitive resilience.

The convenience of AI-powered platforms and search engines has made information instantly accessible. However, this ease may reduce opportunities for deep research, exploration, and serendipitous discovery, which are essential for cognitive development. Harkness and Xianghan [21,22] warn that as AI takes over specialized roles, humans may feel less incentive to acquire diverse skills, leading to cognitive stagnation.

AI’s personalization of user experiences, from data feeds to recommendations, can create echo chambers that limit exposure to diverse perspectives. Wang et al. [23] note that this can inhibit cognitive flexibility and growth. The digital age, amplified by AI, fosters instant gratification, which may reduce attention spans and the mental effort required to interpret and reflect on information. Moreover, many AI systems present outputs as categorical answers with little indication of uncertainty. If humans rely too heavily on such systems, they may lose the ability to think in shades of gray, weakening their capacity to handle ambiguity and complexity. Many researchers emphasize that intuitive judgment developed through experience and reflection is difficult to replicate algorithmically [24,25]. Over-reliance on data-driven AI solutions may dull this essential human faculty.

5. Impact on Human Cognitive Development

The ongoing evolution of AI tools is already disrupting nearly every sector [26], including education. This transformation has generated both enthusiastic optimism and thoughtful skepticism. Optimists argue that AI will enhance education by lowering costs, personalizing learning for maximum benefit, and expanding access globally. Pessimists, however, caution that AI may undermine core educational pillars, i.e., critical thinking, logical reasoning, and clear communication, if not used intentionally.

On the optimistic side, AI, particularly GenAI, is expected to improve learning efficiency by streamlining the acquisition of knowledge. Techniques such as adaptive learning and spaced repetition have been shown to enhance long-term memory retention and engagement [27,28]. AI systems can provide instant feedback and personalized content, helping students master concepts more quickly and maintain focus. By presenting complex scenarios and encouraging exploration, AI can also foster critical thinking and problem-solving skills [29].

AI holds promise for supporting diverse learning needs. It can accommodate various learning styles and assist students with disabilities or those requiring additional support. GenAI tools may inspire creativity by generating multiple solution paths and encouraging students to think divergently. Collaborative learning is also enhanced, as AI can connect students across geographies and facilitate teamwork. Additionally, AI can help manage cognitive load by breaking down complex information into digestible segments, reducing overload and burnout. As education becomes increasingly digital and AI-driven, students will need to understand the ethical dimensions of technology, fostering a generation of ethically aware digital citizens [30]. These benefits align with the levels-of-abstraction lens: when learners engage actively with AI across multiple cognitive layers, interpreting, critiquing, and refining AI outputs, they remain in the AI-augmented trajectory, where cognitive development is supported and extended.

However, there are also valid concerns. One is that the instant gratification offered by AI tools may reduce students’ attention spans and patience for deep, sustained thinking [31]. Easy access to information can discourage memorization and deep conceptual understanding, potentially weakening memory skills [30,32]. If students rely on AI for problem-solving, they may not develop the analytical skills needed to break down complex problems or evaluate multiple solution paths.

A major concern is the potential erosion of critical thinking. When AI provides ready-made answers, students may bypass the analytical processes required for deep reasoning. They might accept AI-generated solutions without questioning their validity or considering alternative perspectives, leading to diminished skepticism and weaker argumentation skills. Echo chambers created by algorithmic personalization can further limit exposure to diverse viewpoints, which are essential for cognitive flexibility [33].

Creativity may also be affected. If students depend on AI for content generation, they may not practice their creative skills sufficiently. The iterative process of creativity, i.e., exploring alternatives, refining ideas, and embracing uncertainty, could be replaced by streamlined, AI-driven outputs. This may lead to the homogenization of ideas and reduced originality. Overconfidence in AI-generated content could undermine students’ belief in their own creative capacities, and fewer opportunities for unforeseen discovery may result [19].

These risks reflect the passive reliance trajectory in the levels-of-abstraction model. When learners operate only at upper abstraction layers, i.e., consuming AI outputs without engaging in foundational reasoning, they risk cognitive stagnation. Education systems must therefore design learning experiences that encourage active engagement across abstraction layers, preserving foundational skills while leveraging AI’s strengths.

6. Simulation Platform Development Use Case

At Auckland University of Technology, within the Centre for Sensor Network & Smart Environment Lab of the School of Engineering, Computer and Mathematical Sciences, we undertook the development of a simulation and virtualization platform to support a Wireless Sensor Actuator Network paper, which is offered as an elective to Master of Engineering and senior Bachelor of Engineering students. To build the platform, we selected the open-source Contiki-NG Cooja simulator due to its widespread adoption and compatibility with a broad range of communication protocols and commercial wireless hardware, including sensors and embedded electronics. The development process involved assembling a generic virtual machine environment for student exercises and examples, alongside creating additional software resources tailored to the course content and targeted hardware. Today's embedded devices are capable enough to extend functionality in digital sensing, general intelligence, machine learning, networking, and communication, even on low-end wireless motes.

Virtualizing an integrated system with components requiring multiple functionalities, each implemented in different programming languages, can pose challenges for developers. AI may offer significant assistance in progressing toward workable solutions. However, how much reliance is appropriate? And what happens if either AI or human expertise lacks critical knowledge components? This is a key consideration, aligning with the concept illustrated in Figure 1. It could lead developers toward either passive reliance on AI or active critical thinking.

To illustrate this, we conducted an exercise to enhance the general intelligence of wireless motes by integrating existing software functionalities. From a curriculum perspective, this demonstrates the computation/communication trade-off in wireless sensor networks. In this exercise, we added a lightweight database (SQLite) to provide data management capabilities at the mote level. The developer was unsure how to connect SQLite in Python 3.8.10 to the mote software (written in C) and the related data file (.txt).

ChatGPT-5 was consulted. The question posed to the AI was: What is the best way to establish connectivity between SQLite in Python and both the mote model in C and the data file in .txt format? The AI proposed the following system structure:

- Sensor motes → [Coordinator]: UDP (temp, light)

- [Coordinator] → cooja_log_dynamic.txt: logs data to file

- cooja_log_dynamic.txt → [Python Parser] (Python tail) → BUFFER → SQLite DB

- [Coordinator Query Timer] → SQLite DB: TCP query; SQLite DB → [Coordinator Query Timer]: SQL results
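The parser, buffer, and SQLite stages of this proposal can be sketched in Python. This is a minimal sketch under stated assumptions, not the platform's code: the log-line format `node=<id> temp=<value> light=<value>`, the `readings` table, and the batch size are hypothetical, and lines are read from any iterable rather than by tailing `cooja_log_dynamic.txt` live.

```python
import re
import sqlite3
from typing import Iterable, List, Optional, Tuple

# Hypothetical coordinator log format, e.g. "node=4 temp=27 light=310".
LINE_RE = re.compile(r"node=(\d+)\s+temp=(-?\d+(?:\.\d+)?)\s+light=(\d+)")

Reading = Tuple[int, float, int]


def parse_line(line: str) -> Optional[Reading]:
    """Return (node, temp, light) for a matching log line, or None for other output."""
    match = LINE_RE.search(line)
    if match is None:
        return None
    return int(match.group(1)), float(match.group(2)), int(match.group(3))


def open_db(path: str = ":memory:") -> sqlite3.Connection:
    """Open (or create) the database holding parsed readings."""
    conn = sqlite3.connect(path)
    conn.execute(
        "CREATE TABLE IF NOT EXISTS readings (node INTEGER, temp REAL, light INTEGER)"
    )
    return conn


def ingest(lines: Iterable[str], conn: sqlite3.Connection, batch_size: int = 50) -> int:
    """Parse lines, buffer valid readings, and insert them into SQLite in batches."""
    buffer: List[Reading] = []
    stored = 0
    for line in lines:
        reading = parse_line(line)
        if reading is None:
            continue  # skip boot messages and other non-data output
        buffer.append(reading)
        if len(buffer) >= batch_size:
            conn.executemany("INSERT INTO readings VALUES (?, ?, ?)", buffer)
            stored += len(buffer)
            buffer.clear()
    if buffer:  # flush the partial batch at the end of input
        conn.executemany("INSERT INTO readings VALUES (?, ?, ?)", buffer)
        stored += len(buffer)
    conn.commit()
    return stored
```

In a live setup, `lines` could be a generator that follows the growing log file (the "Python tail" step); batching amortizes the cost of each SQLite commit. Note that this pipeline still says nothing about how the mote side is connected, which is exactly the gap discussed next.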

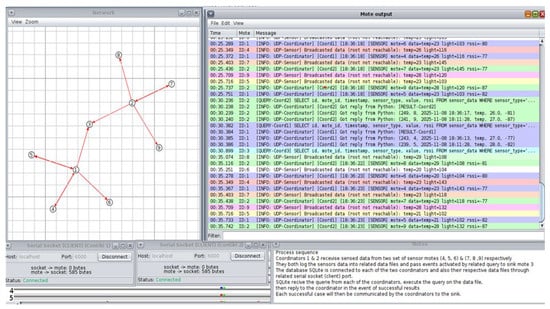

While the AI correctly interpreted the interaction flow, it lacked details on how to establish actual connectivity between the components. When the requirement was emphasized in a follow-up question, the AI suggested a socket bridge using the coordinator’s serial server port. This approach failed because that port is intended for Cooja to listen for incoming connections on a TCP port. The human developer identified the issue and instead used the coordinator’s client serial socket, which is designed for data exchange between applications. This solution worked.

Figure 2 illustrates this setup. A network of two coordinators (nodes 1 and 2) receives data from three wireless sensors each and forwards it to a sink (node 3). Each coordinator generates its own data text file from its associated sensors' readings. These files are processed with application-specific queries that identify events and pass them to the sink. SQLite is connected to each coordinator and its respective data file via the Serial Socket (Client) connections for Contiki 1 and Contiki 2, shown in the lower-left portion of the figure.
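With a Serial Socket (Client) connection, Cooja opens the TCP connection, so the Python program plays the listening server role. The following is a minimal sketch assuming the plugin is configured for 127.0.0.1:60001 and that the coordinator prints one `temp,light` reading per line; the address, the `QUERY` command, the reply format, the database file name, and the names `handle_line`, `MoteHandler`, and `run_server` are all hypothetical. The sketch also assumes that lines written back to the socket are relayed by the plugin to the mote's serial input.

```python
import socketserver
import sqlite3
from typing import Optional

HOST, PORT = "127.0.0.1", 60001  # hypothetical; must match the plugin settings in Cooja


def handle_line(line: str, conn: sqlite3.Connection) -> Optional[str]:
    """Store 'temp,light' readings; answer 'QUERY' with the latest one (hypothetical protocol)."""
    line = line.strip()
    if line == "QUERY":
        row = conn.execute(
            "SELECT temp, light FROM readings ORDER BY rowid DESC LIMIT 1"
        ).fetchone()
        return "NONE" if row is None else f"{row[0]},{row[1]}"
    parts = line.split(",")
    if len(parts) != 2:
        return None  # not a reading: boot messages, debug prints, etc.
    try:
        temp, light = float(parts[0]), int(parts[1])
    except ValueError:
        return None  # malformed reading; skip it rather than crash the bridge
    conn.execute("INSERT INTO readings (temp, light) VALUES (?, ?)", (temp, light))
    conn.commit()
    return None


class MoteHandler(socketserver.StreamRequestHandler):
    """Handles the TCP connection that Cooja's Serial Socket (Client) opens to this server."""

    def handle(self) -> None:
        conn = sqlite3.connect("mote_data.db")  # hypothetical database file
        conn.execute("CREATE TABLE IF NOT EXISTS readings (temp REAL, light INTEGER)")
        try:
            for raw in self.rfile:  # one line of mote serial output per iteration
                reply = handle_line(raw.decode("utf-8", errors="replace"), conn)
                if reply is not None:
                    # Assumed to be relayed by the plugin to the mote's serial input.
                    self.wfile.write((reply + "\n").encode("utf-8"))
        finally:
            conn.close()


def run_server(host: str = HOST, port: int = PORT) -> None:
    """Listen until interrupted; start this before connecting the plugin from Cooja."""
    with socketserver.TCPServer((host, port), MoteHandler) as server:
        server.serve_forever()
```

Keeping the protocol logic in `handle_line`, separate from the socket plumbing, lets it be checked without Cooja running; in practice one would call `run_server()` first and then connect the plugin from the simulation.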

Figure 2.

Contiki-NG Cooja simulation using SQLite queries to filter out a large volume of undesired data. Nodes 1 and 2 receive sensed data from nodes 4, 5, and 6 and from nodes 7, 8, and 9, respectively, and forward the data to node 3 when the temperature reading exceeds 25.
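The filtering rule in the Figure 2 caption maps naturally onto one SQL query per coordinator. The sketch below assumes a hypothetical `readings` table with `node` and `temp` columns and hard-codes the sensor-to-coordinator mapping from the caption; `events_to_forward` is an illustrative name, and only the rows it returns would be sent to the sink.

```python
import sqlite3
from typing import Dict, List, Tuple

# Sensor-to-coordinator mapping taken from the Figure 2 caption.
SENSORS_BY_COORDINATOR: Dict[int, Tuple[int, ...]] = {1: (4, 5, 6), 2: (7, 8, 9)}
TEMP_THRESHOLD = 25.0  # forward only readings strictly above this value


def events_to_forward(conn: sqlite3.Connection, coordinator: int) -> List[Tuple[int, float]]:
    """Return (sensor node, temp) rows that this coordinator should forward to the sink."""
    sensors = SENSORS_BY_COORDINATOR[coordinator]
    placeholders = ",".join("?" for _ in sensors)  # one bound parameter per sensor id
    query = (
        f"SELECT node, temp FROM readings "
        f"WHERE node IN ({placeholders}) AND temp > ? ORDER BY rowid"
    )
    return conn.execute(query, (*sensors, TEMP_THRESHOLD)).fetchall()
```

In this sketch the filtering happens at each coordinator, so only rows above the threshold would cross the radio link to node 3, which is the computation/communication trade-off the exercise is meant to expose.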

This exercise highlights the collaboration between AI and the human developer, in which human experience played a crucial role in achieving the goal. Although the AI's domain knowledge is still maturing, it significantly helped stimulate discussion and accelerate the solution. However, relying on AI alone would not have sufficed.

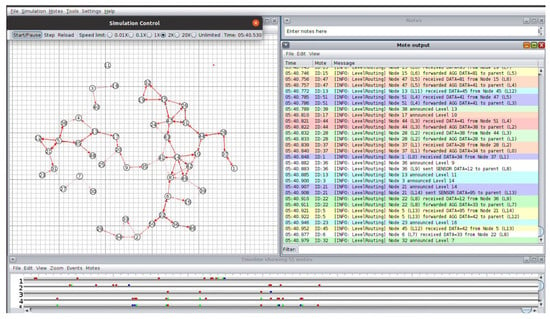

Another exercise involved integrating a level routing solution into the mote network. This task was relatively straightforward for the AI, and the solution was developed with minimal interaction. However, the code provided by ChatGPT contained several errors:

- Non-functional callback function.

- Message format mismatches.

- Sink not responding.

The human developer resolved these issues and made the software operational. Figure 3 illustrates this case.
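Message format mismatches typically arise when sender and receiver disagree on field order, width, or byte order. One way to guard against them, sketched here in Python under stated assumptions, is to declare the wire layout once and reject payloads of the wrong size. The beacon fields (`msg_type`, `level`, `sender_id`) and their widths are hypothetical; the C mote code would need a packed struct with the same field order, widths, and little-endian byte order.

```python
import struct
from typing import NamedTuple

# Hypothetical beacon layout, little-endian, no padding:
# uint8 msg_type, uint8 level, uint16 sender_id (4 bytes in total).
BEACON_FORMAT = struct.Struct("<BBH")


class Beacon(NamedTuple):
    msg_type: int
    level: int
    sender_id: int


def encode_beacon(beacon: Beacon) -> bytes:
    """Serialize a beacon using the single agreed layout."""
    return BEACON_FORMAT.pack(beacon.msg_type, beacon.level, beacon.sender_id)


def decode_beacon(payload: bytes) -> Beacon:
    """Decode a beacon, rejecting payloads whose size does not match the agreed layout."""
    if len(payload) != BEACON_FORMAT.size:
        raise ValueError(
            f"expected {BEACON_FORMAT.size} bytes, got {len(payload)}: format mismatch"
        )
    return Beacon(*BEACON_FORMAT.unpack(payload))
```

A size check cannot detect two layouts that happen to have the same length, so this is a cheap first guard rather than a substitute for reviewing both ends of the protocol.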

Figure 3.

Contiki-NG Cooja simulation of the level routing case. The algorithm starts with node 1 at level 0; all nodes with line of sight to node 1 are assigned level 1. Next, all not-yet-assigned nodes with line of sight to a level-1 node are assigned level 2. The process continues in this way until it reaches the node farthest from node 1.
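The level assignment in the Figure 3 caption is a breadth-first search from node 1 over the line-of-sight graph. Below is a minimal, centralized sketch, assuming the line-of-sight relation is given as a hypothetical adjacency mapping; on real motes the levels would be discovered in a distributed way, so this is not the exercise's mote code.

```python
from collections import deque
from typing import Dict, Iterable, Mapping


def assign_levels(line_of_sight: Mapping[int, Iterable[int]], root: int = 1) -> Dict[int, int]:
    """Breadth-first level assignment: root is level 0, its line-of-sight neighbours level 1, etc."""
    levels = {root: 0}
    frontier = deque([root])
    while frontier:
        node = frontier.popleft()
        for neighbour in line_of_sight.get(node, ()):
            if neighbour not in levels:  # first discovery is the shortest hop count
                levels[neighbour] = levels[node] + 1
                frontier.append(neighbour)
    return levels  # nodes unreachable from root are absent


# Example with a hypothetical five-node chain plus one isolated node (6).
topology = {1: [2, 3], 2: [1, 4], 3: [1, 4], 4: [2, 3, 5], 5: [4], 6: []}
example_levels = assign_levels(topology)
```

Because each node is fixed when first discovered, it receives its minimum hop count from node 1. A distributed variant in which each mote adopts one more than the lowest level it hears from neighbours' beacons should converge to the same assignment.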

AI proved beneficial in accelerating toolset development. However, we frequently encountered instances of “AI hallucination,” where the system produced incorrect results due to misinterpretation, limited understanding, or a lack of maturity. Examples include:

- Programs that failed to compile due to undefined or unused variables.

- Use of outdated functions from earlier Contiki/Cooja releases.

- Incorrect program logic resulting in unintended outcomes.
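Some of these failures can be caught mechanically before an expert review. Undefined variables already stop compilation, and enabling warnings such as GCC's `-Wall` (which includes `-Wunused-variable`) surfaces unused ones. Outdated API calls can be screened with a simple scan, sketched below in Python. The deny-list is illustrative and would have to be maintained against the Contiki-NG release in use; `broadcast_open` and `unicast_open` appear only as examples, since the Rime stack they belong to in classic Contiki is not part of Contiki-NG. The scan is a regular-expression heuristic, not a C parser, so block comments, strings, and macros can fool it.

```python
import re
from typing import Dict, List, Tuple

# Illustrative deny-list (identifier -> note); maintain it for the release in use.
DEPRECATED_CALLS: Dict[str, str] = {
    "broadcast_open": "Rime primitive from classic Contiki, not in Contiki-NG",
    "unicast_open": "Rime primitive from classic Contiki, not in Contiki-NG",
}

# An identifier immediately followed (modulo whitespace) by an opening parenthesis.
CALL_RE = re.compile(r"\b([A-Za-z_]\w*)\s*\(")


def find_deprecated_calls(source: str) -> List[Tuple[int, str, str]]:
    """Return (line number, identifier, note) for each call to a deny-listed function."""
    findings = []
    for lineno, line in enumerate(source.splitlines(), start=1):
        code = line.split("//", 1)[0]  # crude: ignore trailing line comments
        for match in CALL_RE.finditer(code):
            name = match.group(1)
            if name in DEPRECATED_CALLS:
                findings.append((lineno, name, DEPRECATED_CALLS[name]))
    return findings
```

Such a screen only narrows the search; judging whether flagged code is actually wrong, and spotting incorrect program logic, still requires the foundational knowledge discussed here.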

These issues underscore the importance of a solid foundational knowledge base for AI users to ensure functional outcomes. AI should be viewed as a new level of abstraction. Accordingly, educational approaches should shift their focus from micro-level details toward macro-level understanding, while retaining enough foundational knowledge to verify AI outputs.

As reliance on AI increases, are we steering humans toward cognitively sedentary behavior? How can we replace missing foundational exercises with more advanced, upper-level engagements? We must avoid diminishing our ability to critically assess AI outputs, as this could lead to cognitive complacency or even mental health concerns [34], some of which are already surfacing in several parts of the world.

7. Mitigation Strategies

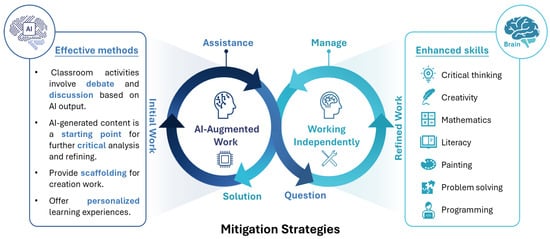

Several strategies can be employed to mitigate the potential negative impacts of AI on human mental capabilities, especially in education. Educators can foster environments where questioning AI output is encouraged and valued. Classroom activities involving debate and discussion help students practice argumentation and consider multiple viewpoints. AI-generated content should be treated as a starting point for exploration and critical analysis, not as definitive answers. Teaching digital literacy, including how AI works and its limitations, empowers students to use AI tools more critically. Assignments can be designed to challenge students to go beyond AI suggestions and develop unique contributions, ensuring that AI augments rather than replaces creativity.

To structure these strategies effectively, we propose organizing them across three levels of abstraction: Foundations, Translation, and Orchestration. Each of these levels requires distinct pedagogical approaches.

- Layer 1: Foundations

At this level, students engage with core cognitive skills, such as mental arithmetic, grammar, logic, and recall. These are building blocks of reasoning and expression. Educators should design tasks that require manual effort before AI assistance is permitted. For example, students might solve math problems without calculators or write short essays without AI support. This ensures fluency in foundational skills and prevents premature reliance on automation. The historical debate around pocket calculators offers a useful analogy: while calculators were feared to erode math skills, research showed that when used thoughtfully, they improved operational fluency and problem-solving [17]. Similarly, AI can enhance learning if foundational fluency is safeguarded.

- Layer 2: Translation

This layer involves converting between representations, such as specifications to code, equations to simulations, or ideas to structured arguments. AI can assist here by offering scaffolding, brainstorming, and real-time feedback. For example, AI writing tools can help language learners reduce anxiety and improve fluency [23]. However, students must be taught to critically evaluate and refine AI outputs. Assignments should require students to identify flaws, explain corrections, and reflect on the reasoning behind their edits. This maintains cognitive engagement and supports academic integrity. Banning AI tools is neither practical nor beneficial; instead, students should learn to use them responsibly, preparing for real-world applications.

- Layer 3: Orchestration

At the highest level, students use AI to coordinate complex tasks, e.g., designing systems, solving open-ended problems, or leading collaborative projects. Here, the emphasis shifts to strategic thinking, ethical reasoning, and creative synthesis. Educators can assign capstone projects in which students use AI for scaffolding but must defend their design choices, critique limitations, and demonstrate understanding of underlying principles. This promotes lateral thinking and ensures that students remain the “masters of the solution,” even when AI handles lower-level primitives.

To support these layers, we propose two pedagogical shift patterns:

- AI-first draft → Human revision: Students receive AI-generated content and are assessed on their ability to critique, revise, and improve it.

- Human-first plan → AI implementation: Students design a solution or outline, then use AI to implement it, followed by validation and reflection.

These patterns encourage active engagement and reinforce the abstraction lens: students must move between layers, not remain confined to the top.

As AI adoption becomes widespread, we are likely to pass through three stages of familiarization, adaptation, and development, each reflecting a transformation in cognition and behavior:

- Early Interaction, Training, and Familiarization Phase

In this initial stage, both humans and AI systems undergo training. AI systems are refined through human interaction and feedback, which can help reduce hallucinations. Humans stay mentally engaged by challenging AI outputs but may begin to lose interest in traditional knowledge. Educational institutions must therefore decide which foundational knowledge to preserve and how to keep learners cognitively active.

- Maturity and Effective Interaction Phase

At this stage, AI systems become mature enough for reliable, everyday use. If education systems have prepared learners well, learners will be equipped to operate safely at higher levels of abstraction. If not, confusion between old and new knowledge may reduce their adaptability and effectiveness.

- Further Development Phase

AI may evolve toward creativity and even consciousness, a frontier that is already being discussed in the literature [35]. Such a development would raise new ethical, pedagogical, and cognitive challenges requiring sustained attention.

Thus, mitigation strategies must be layered, intentional, and adaptive. They should preserve foundational skills, promote critical engagement, and prepare learners to navigate the evolving landscape of AI-enhanced cognition.

Figure 4 summarizes strategies for preserving or enhancing human cognitive capacity in the AI era.

Figure 4. Strategies to Mitigate Potential Risks of AI on Human Cognitive Capacity.

8. Conclusions

Artificial intelligence (AI) has a dual impact on education: it can erode foundational cognitive skills through passive reliance, or it can empower learners when used intentionally. What matters is not whether AI is present, but how it is integrated and whether it encourages critical engagement or replaces it. This paper introduced the levels-of-abstraction lens to explain how GenAI affects cognitive development. The simulation-platform case study illustrated that meaningful learning occurs when users engage across abstraction layers, especially when they drop down to correct AI-generated errors at the protocol and implementation levels. This active engagement sustains intellectual resilience and reinforces the value of foundational reasoning.

To guide responsible integration, we propose four design principles: embed abstraction awareness in curricula, require manual effort before automation, assess explanation quality alongside output, and maintain a ground-truth channel for verifying AI suggestions. Together, these principles aim to keep AI a cognitive exoskeleton rather than a crutch. As AI reshapes learning environments and labor markets, education must prioritize critical thinking, creativity, and ethical literacy. Empowerment lies in preparing students to navigate abstraction, challenge automation, and remain intellectually agile in an AI-enhanced world.

Author Contributions

Conceptualization, A.A. and A.A.-A.; methodology, A.A. and A.A.-A.; software, S.S.M.; validation, A.A., A.A.-A. and S.S.M.; formal analysis, A.A. and A.A.-A.; investigation, A.A., A.A.-A., F.X. and S.S.M.; resources, A.A., A.A.-A. and S.S.M.; writing—original draft preparation, A.A. and A.A.-A.; writing—review and editing, A.A. and A.A.-A.; visualization, F.X.; supervision, A.A. and A.A.-A.; project administration, A.A. and A.A.-A. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The original contributions presented in this study are included in the article. Further inquiries can be directed to the corresponding author.

Conflicts of Interest

The authors declare no conflicts of interest.

References

1. Pitt, J. ChatSh*t and Other Conversations (That We Should Be Having, But Mostly Are Not). IEEE Technol. Soc. Mag. 2023, 42, 7–13.

2. Liveley, G. AI Futures Literacy. IEEE Technol. Soc. Mag. 2022, 41, 90–93.

3. Sarkadi, Ş. Deceptive AI and Society. IEEE Technol. Soc. Mag. 2023, 42, 77–86.

4. Robbins, J. The Intelligence Factor: Technology and the Missing Link. IEEE Technol. Soc. Mag. 2022, 41, 82–93.

5. Crane-Kramer, G.; Buckberry, J. Changes in health with the rise of industry. Int. J. Paleopathol. 2023, 40, 99–102.

6. Broussard, J.; Devkota, S. The changing microbial landscape of Western society: Diet, dwellings and discordance. Mol. Metab. 2016, 5, 737–742.

7. Kralick, A.; Zemel, B. Evolutionary Perspectives on the Developing Skeleton and Implications for Lifelong Health. Front. Endocrinol. 2020, 11, 99.

8. Paffenbarger, R.; Blair, S.; Lee, I.-M. A history of physical activity, cardiovascular health, and longevity. Int. J. Epidemiol. 2001, 30, 1184–1192.

9. Wang, Y.; Xie, Y.; Chen, Y.; Ding, G.; Zhang, Y. Joint association of sedentary behavior and physical activity with pulmonary function. BMC Public Health 2024, 24, 604.

10. De Cremer, D.; Bianzino, N.M.; Falk, B. How Generative AI Could Disrupt Creative Work. Harv. Bus. Rev. 2023. Available online: https://hbr.org/2023/04/how-generative-ai-could-disrupt-creative-work (accessed on 3 September 2024).

11. Doshi, A.; Hauser, O. Generative Artificial Intelligence Enhances Creativity but Reduces the Diversity of Novel Content. Sci. Adv. 2024, 10, eadn5290.

12. Shackell, C. Will AI Kill Our Creativity? It Could–If We Don’t Start to Value and Protect the Traits That Make Us Human; The Conversation Media Group Ltd.: Carlton, Australia, 2023.

13. Williams, R. AI can make you more creative—But it has limits. MIT Technology Review, 12 July 2024.

14. Dergaa, I.; Ben Saad, H.; Glenn, J.M.; Amamou, B.; Ben Aissa, M.; Guelmami, N.; Fekih-Romdhane, F.; Chamari, K. From tools to threats: A reflection on the impact of artificial-intelligence chatbots on cognitive health. Front. Psychol. 2024, 15, 1259845.

15. Nosta, J. AI and the Erosion of Human Cognition. Psychology Today, 5 November 2023.

16. Stokel-Walker, C. Will we lose certain skills and knowledge if we rely on AI too much? Cybernews, 9 December 2023.

17. Malik, T.; Hughes, L.; Dwivedi, Y.; Dettmer, S. Exploring the Transformative Impact of Generative AI on Higher Education. In New Sustainable Horizons in Artificial Intelligence and Digital Solutions. I3E 2023; Janssen, M., Pinheiro, L., Matheus, R., Frankenberger, F., Dwivedi, Y.K., Pappas, I.O., Mäntymäki, M., Eds.; Lecture Notes in Computer Science; Springer: Cham, Switzerland, 2023; Volume 14316.

18. Zhai, C.; Wibowo, S.; Li, L. The effects of over-reliance on AI dialogue systems on students’ cognitive abilities: A systematic review. Smart Learn. Environ. 2024, 11, 28.

19. Chiu, T. The impact of generative AI (GenAI) on practices, policies and research direction in education: A case of ChatGPT and MidJourney. Interact. Learn. Environ. 2023, advance online publication.

20. Broom, D. AI: These are the biggest risks to businesses and how to manage them. World Economic Forum, 27 July 2023.

21. Interview with Timandra Harkness, AI Can’t Think Critically, but People Must. Economist Education, July 2024. Available online: https://education.economist.com/insights/interviews/ai-cant-think-critically-but-people-must (accessed on 3 September 2024).

22. O’Dea, X. Generative AI: Is it a paradigm shift for higher education? Stud. High. Educ. 2024, 49, 811–816.

23. Wang, S.; Wang, F.; Zhu, Z.; Wang, J.; Tran, T.; Du, Z. Artificial intelligence in education: A systematic literature review. Expert Syst. Appl. 2024, 252 Pt A, 124167.

24. Shine, I.; Whiting, K. These are the jobs most likely to be lost–and created–because of AI. World Economic Forum, 4 May 2023.

25. Mukherjee, S.; Senapati, D.; Mahajan, I. Toward Behavioral AI: Cognitive Factors Underlying the Public Psychology of Artificial Intelligence. In Applied Cognitive Science and Technology; Mukherjee, S., Dutt, V., Srinivasan, N., Eds.; Springer: Singapore, 2023.

26. Littman, M.L.; Ajunwa, I.; Berger, G.; Boutilier, C.; Currie, M.; Doshi-Velez, F.; Hadfield, G.; Horowitz, M.C.; Isbell, C.; Kitano, H.; et al. Gathering Strength, Gathering Storms: The One Hundred Year Study on Artificial Intelligence (AI100) 2021 Study Panel Report; Stanford University: Stanford, CA, USA, 2021.

27. Hsu, Y.; Ching, Y. Generative Artificial Intelligence in Education, Part Two: International Perspectives. TechTrends 2023, 67, 885–890.

28. Mao, J.; Chen, B.; Liu, J. Generative Artificial Intelligence in Education and Its Implications for Assessment. TechTrends 2024, 68, 58–66.

29. Chan, C.; Hu, W. Students’ voices on generative AI: Perceptions, benefits, and challenges in higher education. Int. J. Educ. Technol. High. Educ. 2023, 20, 43.

30. Chan, C.; Lee, K. The AI generation gap: Are Gen Z students more interested in adopting generative AI such as ChatGPT in teaching and learning than their Gen X and millennial generation teachers? Smart Learn. Environ. 2023, 10, 60.

31. Zhang, S.; Zhao, X.; Zhou, T.; Kim, J.H. Do you have AI dependency? The roles of academic self-efficacy, academic stress, and performance expectations on problematic AI usage behavior. Int. J. Educ. Technol. High. Educ. 2024, 21, 34.

32. Kim, J.; Yu, S.; Detrick, R.; Li, N. Exploring students’ perspectives on Generative AI-assisted academic writing. Educ. Inf. Technol. 2025, 30, 1265–1300.

33. Luo, J. A critical review of GenAI policies in higher education assessment: A call to reconsider the “originality” of students’ work. Assess. Eval. High. Educ. 2024, 49, 651–664.

34. Zimmerman, A.; Janhonen, J.; Beer, E. Human/AI relationships: Challenges, down-sides, and impacts on human/human relationships. AI Ethics 2023, 4, 1555–1567.

35. Maheshwari, A.K.; Frick, K.D.; Greenberg, I.; Colby, D.K. Creativity, Consciousness, and AI in Organizations; Springer Nature: Cham, Switzerland, 2025; pp. 135–154.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).