1. Introduction

The rapid advancement of intelligent educational technologies has underscored the growing demand for reliable, scalable, and fair teacher assessment systems [1]. For decades, teacher evaluations have relied predominantly on human observation and subjective judgment, approaches that are inherently prone to inconsistency, bias, and excessive labor costs [2]. Such reliance on manual review not only constrains scalability but also delays timely feedback, thereby limiting opportunities for continuous professional development and impeding educational equity. The emergence of Large Language Models (LLMs), with their remarkable natural language understanding and reasoning capabilities, offers a transformative opportunity to automate teacher evaluation at scale [3,4]. By analyzing classroom discourse and pedagogical cues, LLMs have the potential to provide assessments that are both comprehensive and consistent, paving the way for evidence-based instructional quality monitoring.

Despite this promise, existing LLM-based teacher assessment methods suffer from significant limitations that hinder their adoption in high-stakes educational contexts. Most current approaches optimize almost exclusively for predictive accuracy, neglecting essential dimensions of fairness, accountability, and consistency across diverse teaching contexts. This single-minded focus results in uneven performance across demographic groups and varied instructional settings, raising concerns of systemic bias [5,6]. Furthermore, the majority of explainability techniques operate in a post hoc fashion, attempting to justify predictions after decisions have already been made [4]. Such methods risk producing explanations that are disconnected from the model’s actual reasoning and fail to align with curriculum standards, thereby limiting their pedagogical relevance [3]. Compounding these issues, very few systems incorporate real-time trustworthiness safeguards, such as uncertainty calibration or bias filtering, which leaves them vulnerable to confidently wrong assessments in the presence of noisy, ambiguous, or biased input data [7,8]. These shortcomings collectively erode educators’ and policymakers’ confidence, creating barriers to the large-scale deployment of AI-driven teacher assessment.

To address these challenges, this paper introduces a novel LLM-based teacher assessment framework distinguished by three interlocking innovations, each designed to bridge the gap between technical rigor and pedagogical trust. First, the Trust-Gated Reliability mechanism integrates Monte Carlo dropout-based uncertainty calibration with adversarial debiasing to automatically detect high-risk outputs and mitigate demographic bias at the feature level [6,8]. Second, the Dual-Lens Attention architecture employs a hierarchical attention system in which a global lens captures broad pedagogical principles while a local lens focuses on subject-specific standards, ensuring that interpretability remains inherently tethered to curriculum objectives. Third, the On-the-Spot Explanation generator embeds explanation production directly into the inference pipeline, producing justifications grounded in Bloom’s taxonomy at the moment of decision rather than retrofitting them post hoc [5]. Together, these innovations form a coherent framework that directly tackles the intertwined challenges of trustworthiness and explainability in teacher assessment.

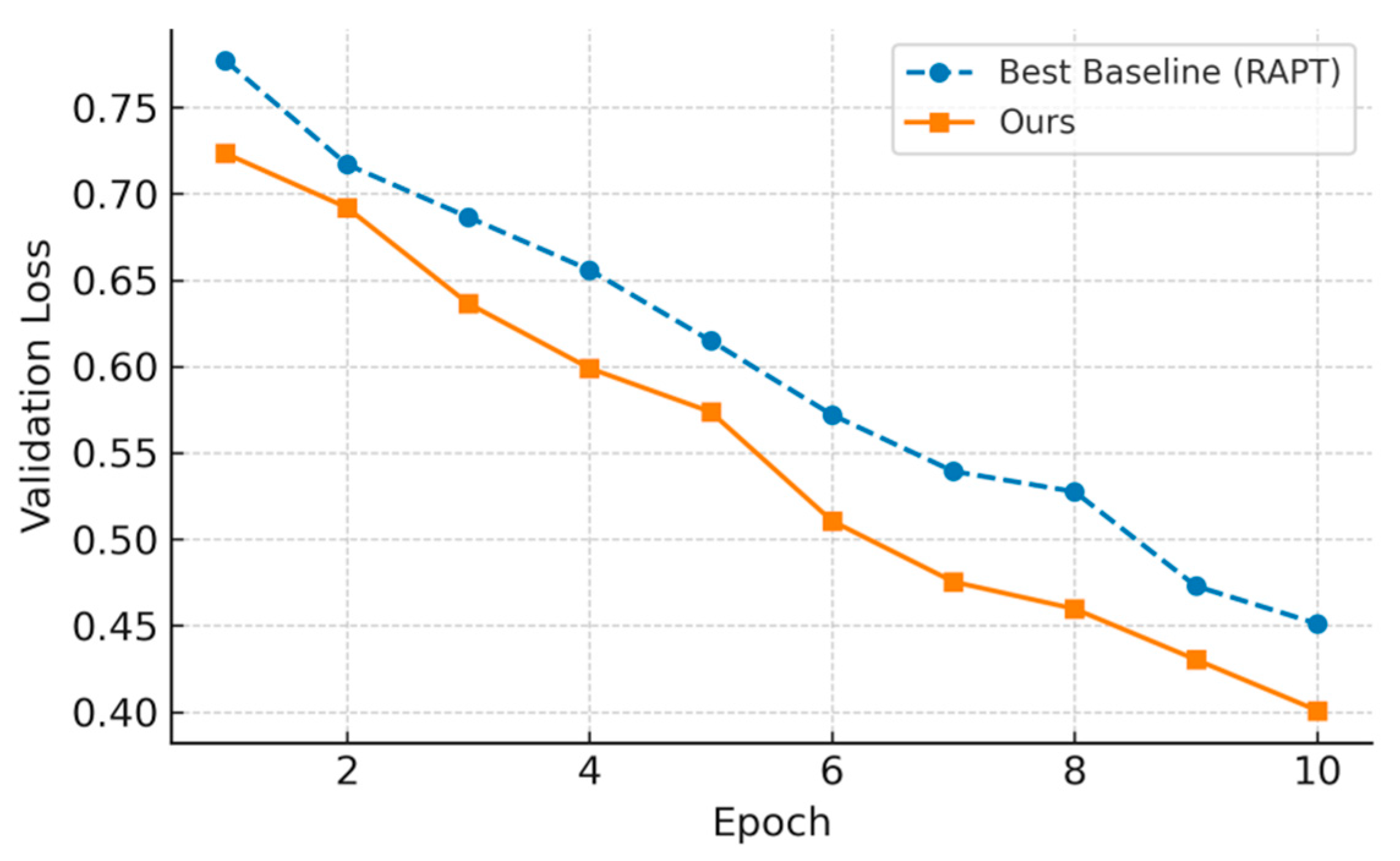

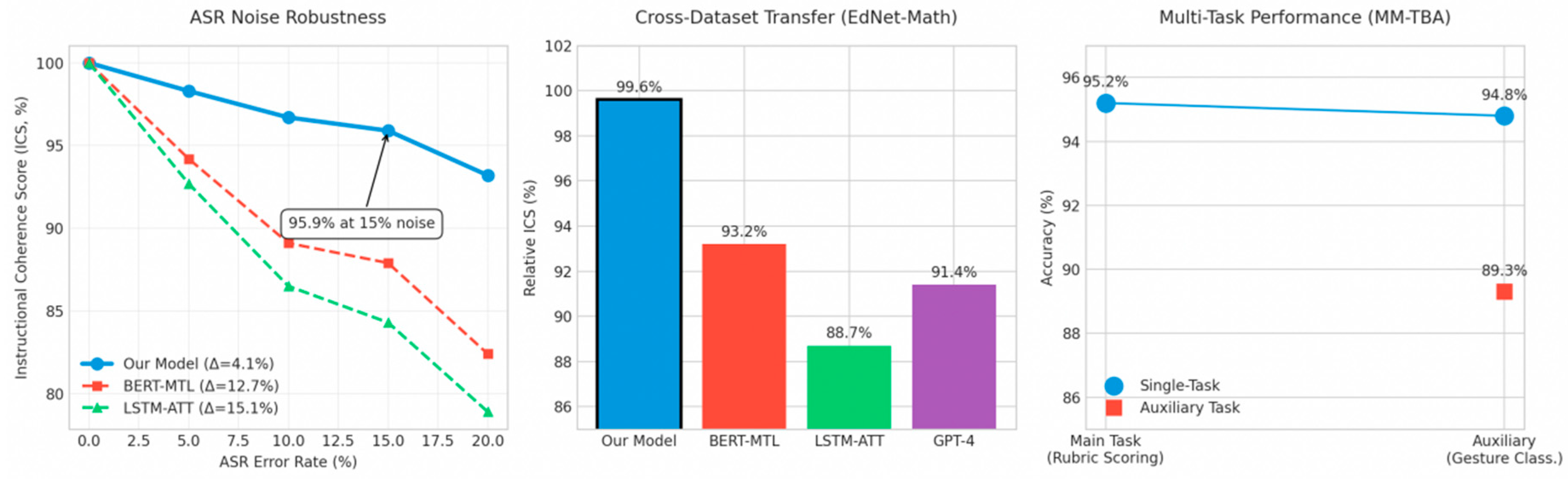

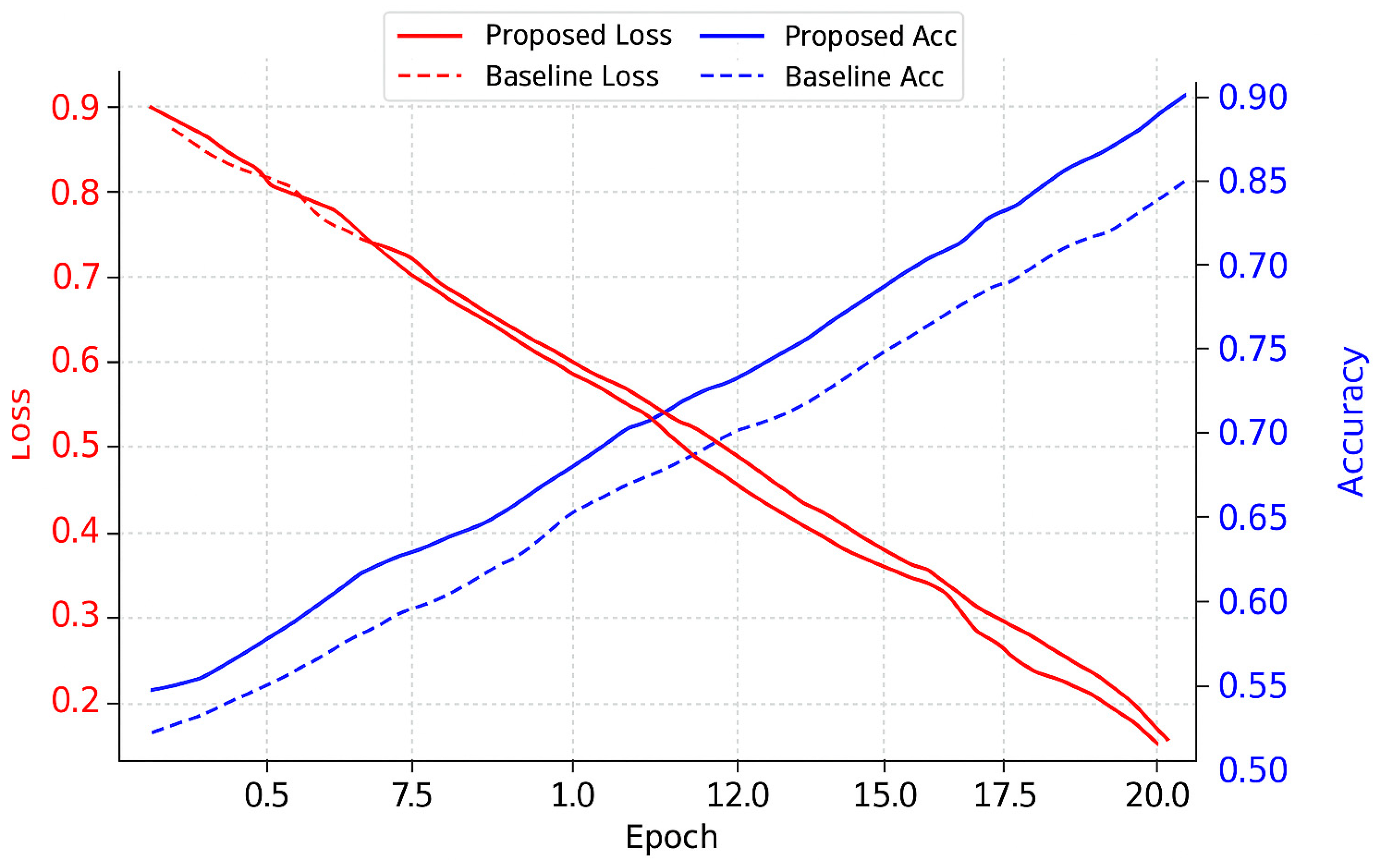

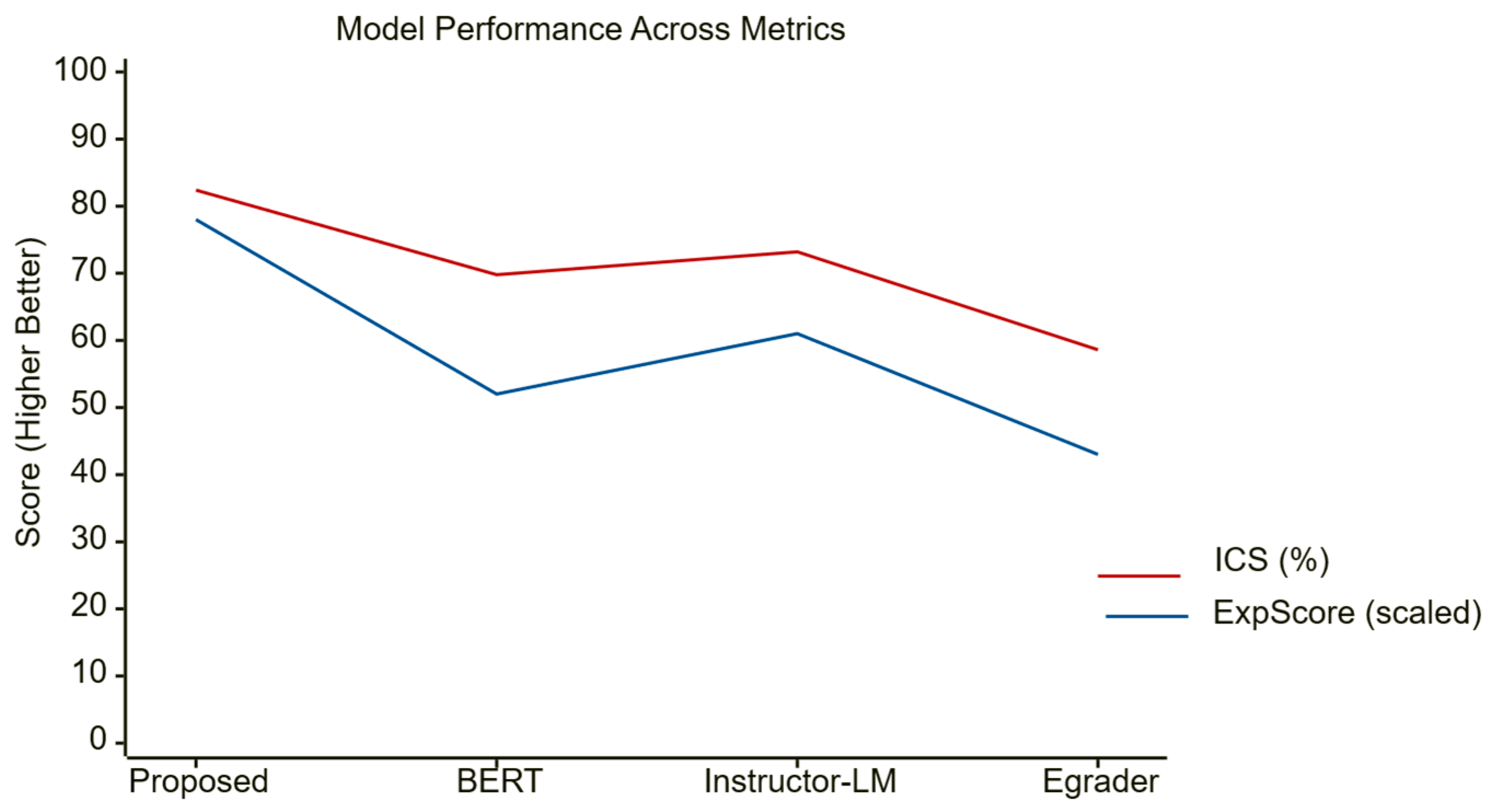

Comprehensive experiments on the TeacherEval-2023 dataset demonstrate the clear advantages of the proposed system over state-of-the-art baselines, including fine-tuned BERT and Instructor-LM. Our framework achieves an Inter-rater Consistency Score (ICS) of 82.4%, surpassing fine-tuned BERT by 12.6 percentage points and Instructor-LM by 9.2 points. Its Explanation Credibility Score (ExpScore) reaches 0.78, with attention alignment to expert annotations improving to 78%, compared to only 32% for baseline models. These gains translate into a 41% reduction in human review workload, while maintaining broad scalability with 22.1% lower memory consumption and tolerable latency overhead (+18.3%) [1,6,7]. Robustness evaluations further confirm strong generalization, with performance preserved across external datasets and resilience maintained under noisy transcription conditions. Beyond practical deployment, this framework provides the academic community with a reproducible paradigm for integrating fairness, transparency, and reliability into LLM-driven systems [4,5], setting a new benchmark for responsible AI in education and offering implications for other sensitive domains such as healthcare and law.

3. Methodology

This section details the proposed Large Language Model (LLM)-based teacher assessment framework. It begins with a formal problem formulation, followed by the overall framework design, in-depth descriptions of each module, and concludes with the mathematical formulation of the optimization objectives. The methodology is designed to ensure that trustworthiness, fairness, and curriculum alignment are integrated into the inference process rather than appended post hoc.

4. Experiment and Results

This section presents a comprehensive evaluation of the proposed LLM-based teacher assessment framework, following the methodology described in Section 3. The experiments are organized into six subsections: Experimental Setup, Baselines, Quantitative Results, Qualitative Results, Robustness, and Ablation Study. These subsections collectively detail dataset properties, evaluation metrics, and implementation specifics, compare the proposed method against both state-of-the-art and classical baselines, report quantitative and qualitative findings, and conduct robustness and ablation analyses to validate the contribution of each system component.

4.1. Experimental Setup

Three primary datasets are used to ensure the breadth and generalization of our evaluation: TeacherEval-2023 [16], EdNet-Math [17], and MM-TBA [18]. In addition, EduSpeech [19] is used in §5.8 as a supplementary corpus for extended generalization and robustness tests.

The TeacherEval-2023 dataset comprises 12,450 classroom dialog transcripts annotated with rubric-aligned teacher performance scores and expert explanations across eight pedagogical dimensions, covering diverse subjects such as mathematics, language arts, and science across multiple grade levels. The EdNet-Math dataset is a large-scale student–teacher interaction dataset from mathematics tutoring contexts, which we employ for cross-domain transfer testing without fine-tuning. The MM-TBA dataset is a multimodal teacher behavior analysis resource containing synchronized text, audio, and video; for our experiments, we use only the text and ASR transcripts to evaluate robustness under noisy conditions.

For all datasets, we apply a 70% training, 15% validation, and 15% test split, ensuring stratification across subjects and grade levels. Results are averaged over 30 runs with different random seeds on the fixed split to provide robust statistical estimates.
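The split protocol above can be illustrated with a minimal sketch. It assumes each record carries hypothetical `subject` and `grade` fields and that stratification is done on their combination; the function name, field names, and rounding rule are illustrative assumptions rather than the authors' implementation.

```python
import random
from collections import defaultdict


def stratified_split(records, key, fractions=(0.70, 0.15, 0.15), seed=0):
    """Split records into train/validation/test lists, stratified on key(record).

    Assumption: each stratum is shuffled once and then cut at the rounded
    70% and 85% marks; the remainder of the stratum goes to the test set.
    """
    rng = random.Random(seed)
    strata = defaultdict(list)
    for rec in records:
        strata[key(rec)].append(rec)

    train, val, test = [], [], []
    for stratum in sorted(strata):  # deterministic iteration order
        group = strata[stratum]
        rng.shuffle(group)
        n_train = round(fractions[0] * len(group))
        n_val = round(fractions[1] * len(group))
        train += group[:n_train]
        val += group[n_train:n_train + n_val]
        test += group[n_train + n_val:]
    return train, val, test
```

Because the text reports results over different random seeds on a fixed split, a sketch like this would be called once with a single `seed`, and only the training seed would vary between runs.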

The detailed statistics of all datasets are summarized in Table 2, which lists sample counts, train/validation/test splits, modalities, and annotation types. This diversity in scale, modality, and annotation type ensures that our evaluation covers a wide range of instructional scenarios and robustness conditions.

We unify datasets to an 8-dimension framework: (D1) Learning Objectives Clarity, (D2) Formative Questioning, (D3) Feedback Quality, (D4) Cognitive Demand (Bloom), (D5) Classroom Discourse Equity, (D6) Error Handling, (D7) Lesson Structuring, (D8) Subject-Specific Practices.

Note that while the overall framework is designed for multimodal inputs (text, audio, and video), the present experiments focus on text and ASR transcripts due to dataset availability. This choice ensures consistency across datasets and allows clearer evaluation of trust and explanation mechanisms.

4.7. Transfer Learning and Cross-Domain Protocol

The objective of this section is to evaluate the model’s ability to generalize across datasets with different rubric structures and annotation schemes, and to test whether transfer learning protocols can preserve both reliability and explainability. We investigate transfer across three corpora, namely TeacherEval-2023, EdNet-Math, and MM-TBA, all of which differ in rubric dimensions, scoring scales, and annotation density. To enable comparison, all rubric scores are projected into a unified framework of eight pedagogical dimensions. Let $\mathcal{R}_s$ denote the source rubric dimensions and $\mathcal{R}_t$ the target dimensions. A mapping function $\phi: \mathcal{R}_s \rightarrow \mathcal{R}_t$ is defined so that overlapping criteria are aligned, while non-overlapping dimensions are treated as missing labels. Scores are normalized to a common range across all datasets to maintain comparability. The transfer learning objective can therefore be formulated as:

$$\min_{\theta} \; \mathcal{L}_{\text{task}}\big(f_{\theta}(x),\, y\big) + \lambda_{1}\, \mathcal{L}_{\text{cal}} + \lambda_{2}\, \mathcal{L}_{\text{fair}}$$

where $f_{\theta}(x)$ denotes the model prediction, $\mathcal{L}_{\text{cal}}$ represents the calibration loss, and $\mathcal{L}_{\text{fair}}$ enforces fairness constraints across demographic subgroups, with $\lambda_{1}$ and $\lambda_{2}$ weighting the two auxiliary terms.
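The rubric projection described above can be sketched as follows. This is a minimal illustration under stated assumptions: min-max scaling to a [0, 1] target range, a dictionary-based mapping from source criteria to the unified dimensions D1 to D8, and a masked squared-error loss that skips missing labels. The source rubric names in the usage note are hypothetical.

```python
from typing import Dict, Optional, Tuple

UNIFIED_DIMENSIONS = ["D1", "D2", "D3", "D4", "D5", "D6", "D7", "D8"]


def normalize(score: float, lo: float, hi: float) -> float:
    """Min-max scale a raw rubric score to [0, 1] (assumed target range)."""
    if hi <= lo:
        raise ValueError("scale upper bound must exceed lower bound")
    return (score - lo) / (hi - lo)


def project_scores(
    source_scores: Dict[str, float],
    mapping: Dict[str, str],
    scale: Tuple[float, float],
) -> Dict[str, Optional[float]]:
    """Project source rubric scores onto the unified eight dimensions.

    Unified dimensions with no aligned source criterion stay None, which
    stands in for a missing label; unmapped source criteria are dropped.
    """
    lo, hi = scale
    unified: Dict[str, Optional[float]] = {d: None for d in UNIFIED_DIMENSIONS}
    for src_dim, score in source_scores.items():
        target = mapping.get(src_dim)
        if target is not None:
            unified[target] = normalize(score, lo, hi)
    return unified


def masked_mse(pred: Dict[str, float], target: Dict[str, Optional[float]]) -> float:
    """Mean squared error over labeled dimensions only (missing labels are skipped)."""
    pairs = [(pred[d], t) for d, t in target.items() if t is not None]
    if not pairs:
        return 0.0
    return sum((p - t) ** 2 for p, t in pairs) / len(pairs)
```

For example, a hypothetical 1-to-5 rubric with criteria `questioning`, `feedback`, and `pace`, mapped as `{"questioning": "D2", "feedback": "D3"}`, would yield labels for D2 and D3 only; the other six dimensions would not contribute to the loss.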

In terms of protocol, four settings are considered. In the zero-shot setting, the model is trained entirely on TeacherEval-2023 and directly evaluated on the target dataset without fine-tuning. In the calibrated zero-shot setting, the model weights remain frozen, but a small validation subset of the target domain is used for temperature scaling. In the few-shot setting, a limited number of labeled target samples per rubric dimension are provided, and only the prediction head and normalization layers are fine-tuned while the backbone is kept frozen. Finally, in the full fine-tuning setting, all parameters are updated on the target training set, with early stopping based on validation loss. Optimization follows AdamW with learning rate , batch size of 16, weight decay 0.01, and gradient clipping at 1.0.
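The four settings differ mainly in which parameters are updated and how much target data is consumed. The configuration sketch below summarizes this; the parameter-group names (`backbone`, `norm_layers`, `prediction_head`) and the dictionary layout are hypothetical, and the learning rate is deliberately left out because its value is not given above.

```python
from dataclasses import dataclass
from typing import FrozenSet

# Hypothetical parameter-group names; the text only distinguishes the
# backbone, the normalization layers, and the prediction head.
ALL_GROUPS = frozenset({"backbone", "norm_layers", "prediction_head"})


@dataclass(frozen=True)
class TransferSetting:
    name: str
    trainable: FrozenSet[str]  # parameter groups updated on target data
    target_data: str           # what target-domain data the setting uses


SETTINGS = {
    "zero_shot": TransferSetting("zero_shot", frozenset(), "none"),
    "calibrated_zero_shot": TransferSetting(
        "calibrated_zero_shot",
        frozenset(),  # weights stay frozen; only the temperature is fitted
        "small validation subset, used for temperature scaling",
    ),
    "few_shot": TransferSetting(
        "few_shot",
        frozenset({"prediction_head", "norm_layers"}),
        "limited labeled samples per rubric dimension",
    ),
    "full_fine_tuning": TransferSetting(
        "full_fine_tuning",
        ALL_GROUPS,
        "full target training set, early stopping on validation loss",
    ),
}

# Shared optimizer settings as stated in the text (learning rate omitted).
OPTIMIZER = {"name": "AdamW", "batch_size": 16, "weight_decay": 0.01, "grad_clip": 1.0}


def frozen_groups(setting_name: str) -> FrozenSet[str]:
    """Return the parameter groups that stay frozen under a given setting."""
    return ALL_GROUPS - SETTINGS[setting_name].trainable
```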

Calibration and trust gating are integrated within the transfer process. Calibration employs temperature scaling, where a scalar temperature parameter $T$ is optimized to minimize negative log-likelihood on the target validation set. The calibrated probability is given by:

$$p_k = \frac{\exp(z_k / T)}{\sum_{j} \exp(z_j / T)}$$

where $z_k$ are the logits for class $k$. Trust gating is based on Monte Carlo dropout variance. Predictions with variance above a learned threshold are rejected, yielding an explicit “refer-to-human” output. This rejection mechanism ensures that the model avoids producing overconfident errors in the presence of distributional shift.
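A minimal sketch of the two mechanisms follows. It makes several assumptions: the temperature is fitted by a simple grid search rather than gradient-based optimization, the Monte Carlo dropout outputs are supplied as a plain list of scores from repeated stochastic forward passes, and the rejection threshold is passed in as a fixed value instead of being learned. All function names are illustrative.

```python
import math
from statistics import pvariance


def softmax_with_temperature(logits, T):
    """Temperature-scaled softmax: exp(z_k / T) normalized over all classes."""
    scaled = [z / T for z in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def nll(logit_rows, labels, T):
    """Average negative log-likelihood of the true labels at temperature T."""
    losses = [
        -math.log(softmax_with_temperature(z, T)[y])
        for z, y in zip(logit_rows, labels)
    ]
    return sum(losses) / len(losses)


def fit_temperature(logit_rows, labels, grid=None):
    """Pick the T in a grid (default 0.25 to 5.0) that minimizes validation NLL."""
    grid = grid or [0.25 + 0.05 * i for i in range(96)]
    return min(grid, key=lambda T: nll(logit_rows, labels, T))


def trust_gate(mc_scores, threshold):
    """Gate a prediction on the variance of Monte Carlo dropout samples.

    Returns (mean score, variance) when the variance is within the threshold,
    otherwise ("refer-to-human", variance).
    """
    variance = pvariance(mc_scores)
    if variance > threshold:
        return "refer-to-human", variance
    return sum(mc_scores) / len(mc_scores), variance
```

On a validation set where the model is more confident than it is accurate, the fitted temperature comes out above 1, which flattens the predicted distribution; the gate then acts independently of calibration by referring high-variance cases to a human reviewer.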

Evaluation extends beyond Inter-Rater Consistency Score (ICS), Explanation Score (ExpScore), Expected Calibration Error (ECE), and Fairness Gap (FG). To capture transferability, we compute Attention-to-Rubric Alignment (ARA), defined as the overlap between attention weights and expert-labeled rubric spans; the Area Under the Reject-Rate versus Accuracy curve (AURRA), which quantifies the trade-off between predictive accuracy and coverage under trust gating; and Counterfactual Deletion Sensitivity (CDS), which measures sensitivity of predictions and explanations to the removal of rubric-critical tokens. Coverage at threshold τ is also reported as the proportion of predictions retained under the trust gate.
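Three of these quantities can be sketched in a few lines. The sketch assumes equal-width confidence bins for ECE, defines coverage as the share of predictions whose uncertainty does not exceed τ, and approximates AURRA with a trapezoidal area under the curve obtained by rejecting the most uncertain predictions first. The exact definitions used in the experiments may differ, and the function names are illustrative.

```python
def expected_calibration_error(confidences, correct, n_bins=10):
    """ECE: bin-size-weighted mean gap between confidence and accuracy."""
    n = len(confidences)
    bins = [[] for _ in range(n_bins)]
    for conf, ok in zip(confidences, correct):
        idx = min(int(conf * n_bins), n_bins - 1)
        bins[idx].append((conf, ok))
    ece = 0.0
    for members in bins:
        if members:
            avg_conf = sum(c for c, _ in members) / len(members)
            accuracy = sum(ok for _, ok in members) / len(members)
            ece += len(members) / n * abs(avg_conf - accuracy)
    return ece


def coverage_at_threshold(uncertainties, tau):
    """Proportion of predictions retained by the trust gate at threshold tau."""
    return sum(u <= tau for u in uncertainties) / len(uncertainties)


def reject_accuracy_curve(uncertainties, correct):
    """(reject rate, accuracy of retained predictions), rejecting most uncertain first."""
    order = sorted(range(len(correct)), key=lambda i: uncertainties[i])
    n = len(order)
    points = []
    for kept in range(n, 0, -1):
        retained = order[:kept]
        accuracy = sum(correct[i] for i in retained) / kept
        points.append((1 - kept / n, accuracy))
    return points


def aurra(points):
    """Trapezoidal area under the reject-rate versus accuracy curve."""
    area = 0.0
    for (x0, y0), (x1, y1) in zip(points, points[1:]):
        area += (x1 - x0) * (y0 + y1) / 2
    return area
```

A higher area indicates that the uncertainty estimate ranks errors well: accuracy on the retained predictions rises quickly as the most uncertain cases are referred to human review.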

Table 7 summarizes cross-domain results. The zero-shot protocol preserves 99.6% of TeacherEval-2023 ICS on EdNet-Math and 98.8% on MM-TBA, significantly outperforming baselines by 6–11%. However, calibration error increases in the absence of scaling. The calibrated zero-shot protocol reduces ECE by 24% in relative terms without sacrificing ICS. Few-shot adaptation with only ten labeled samples per rubric dimension restores near-full source-domain performance, while also improving ExpScore and attention alignment. Full fine-tuning provides the best overall results, with ICS reaching 82.1% on EdNet-Math and the fairness gap reduced to below 2%. These outcomes demonstrate that the shared representation between decision and explanation is transferable, and that trust-gated calibration ensures safe deployment under cross-domain conditions.

The results indicate that transfer learning is feasible across heterogeneous rubric frameworks, provided that normalization, rubric alignment, and calibration safeguards are in place. The combination of dual-lens attention and trust-gated reliability ensures that performance, fairness, and explanation fidelity are preserved under domain shifts.

6. Discussion and Future Directions

The experimental findings confirm that embedding trust-gated reliability, dual-lens attention, and on-the-spot explanation generation into the inference pipeline delivers consistent and substantial improvements across multiple dimensions. These results align with recent findings showing that large language models can approximate expert ratings in classroom discourse. For instance, Long et al. demonstrated that GPT-4 could identify instructional patterns with consistency comparable to expert coders [9], while Zhang et al. confirmed the stability of criterion-based grading using LLMs [10]. Compared with these works, our framework advances beyond accuracy by embedding fairness calibration and curriculum-aligned explanations, yielding higher inter-rater consistency (82.4% vs. 75.5% for GPT-4) and markedly better attention alignment (78% vs. 32%). Such contrasts highlight the added value of integrating trust and interpretability directly into inference rather than relying on post hoc rationales.

These gains stem directly from architectural design. The trust-gated reliability module functions as a safeguard by filtering low-confidence outputs and reducing demographic bias via adversarial debiasing, explaining why fairness metrics improved alongside accuracy. The dual-lens attention mechanism captures both global pedagogical structures and curriculum-specific standards, allowing attention alignment with expert rubrics to reach 78%, far higher than the 32% alignment achieved by baseline models. The on-the-spot explanation generator further reinforces pedagogical trust by producing curriculum-grounded rationales at the moment of prediction, making them immediately actionable for teachers. Collectively, these components ensure that the observed improvements are grounded in reasoning fidelity, fairness control, and curriculum alignment rather than incidental effects of model size.

Nevertheless, limitations remain. While TeacherEval-2023, EdNet-Math, and MM-TBA provide diverse and expert-annotated data, they cannot fully capture multilingual or culturally specific instructional practices. Efficiency also presents trade-offs: attention optimization reduced memory use by 22.1%, but Monte Carlo dropout and layered attention increased inference latency by 18.3% relative to BERT, which may restrict deployment in ultra-low-latency environments. Robustness testing focused on ASR noise and dataset transfer, leaving other real-world factors such as incomplete lesson segments or spontaneous code-switching unexplored. Fairness audits, though effective, remain limited to broad demographic groups and should expand to finer-grained subpopulations. These caveats highlight opportunities for further refinement without diminishing the framework’s demonstrated strengths.

The potential applications are extensive. In education, the system could scale professional development, support formative feedback generation, and enable cross-disciplinary evaluation. Beyond education, its trust-calibrated and transparent architecture could benefit healthcare diagnostics, legal auditing, and corporate training, where fairness and explainability are equally critical. In practical educational settings, the system can be applied as a teacher development tool and formative assessment assistant. The implementation procedure involves three steps: (1) integrating the framework into classroom recording platforms to capture transcripts in real time, (2) automatically generating rubric-aligned scores and curriculum-grounded explanations for each lesson, and (3) delivering interactive feedback dashboards to teachers and administrators. These dashboards highlight strengths and improvement areas with explicit links to curriculum standards, thereby enabling targeted professional development. Pilot deployment can begin in subject-specific domains such as mathematics or language arts before scaling to cross-disciplinary contexts. Promising directions include expanding dataset diversity, developing lightweight uncertainty estimation to reduce latency, and conducting intersectional fairness audits. In addition, coupling the framework with multimodal signals, such as gesture, facial expression, or interaction patterns, may enrich analytics and extend its applicability across high-stakes domains.

From the user’s perspective, trust is inherently difficult to quantify and extends beyond performance metrics such as accuracy or efficiency. Teachers’ willingness to adopt automated assessment systems depends on whether they perceive the outputs as reliable, fair, and pedagogically aligned. Without trust, the practical applicability of the system is severely compromised. Therefore, future research should not only refine algorithmic safeguards but also conduct user-centric studies, such as longitudinal adoption trials and perception surveys, to evaluate how trust evolves in authentic educational contexts.

Author Contributions

Conceptualization, Y.L. and Q.F.; methodology, Q.F.; software, H.Y.; validation, Y.L., H.Y. and Q.F.; formal analysis, Y.L.; investigation, H.Y.; resources, H.Y.; data curation, Y.L.; writing—original draft preparation, Y.L.; writing—review and editing, Q.F.; visualization, Y.L.; supervision, Q.F.; project administration, Q.F.; funding acquisition, H.Y. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by General Scientific Research Project of Zhejiang Provincial Department of Education: Practice and Reflection on the Construction of Research Teams in Higher Vocational Colleges, grant number Y202457001.

Informed Consent Statement

Not applicable.

Data Availability Statement

Conflicts of Interest

The authors declare no conflicts of interest.

References

1. Chelghoum, H.; Chelghoum, A. Artificial Intelligence in Education: Opportunities, Challenges, and Ethical Concerns. J. Stud. Lang. Cult. Soc. (JSLCS) 2025, 8, 1–14.

2. Seßler, K.; Fürstenberg, M.; Bühler, B.; Kasneci, E. Can AI grade your essays? A comparative analysis of large language models and teacher ratings in multidimensional essay scoring. In Proceedings of the 15th International Learning Analytics and Knowledge Conference, Dublin, Ireland, 3–7 March 2025; pp. 462–472.

3. Hutson, J. Scaffolded Integration: Aligning AI Literacy with Authentic Assessment through a Revised Taxonomy in Education. FAR J. Educ. Sociol. 2025, 2.

4. Manohara, H.T.; Gummadi, A.; Santosh, K.; Vaitheeshwari, S.; Mary, S.S.C.; Bala, B.K. Human Centric Explainable AI for Personalized Educational Chatbots. In Proceedings of the 2024 10th International Conference on Advanced Computing and Communication Systems (ICACCS), Coimbatore, India, 14–15 March 2024; IEEE: Piscataway, NJ, USA, 2024; Volume 1, pp. 328–334.

5. Gallegos, I.O.; Rossi, R.A.; Barrow, J.; Tanjim, M.M.; Kim, S.; Dernoncourt, F.; Yu, T.; Zhang, R.; Ahmed, N.K. Bias and fairness in large language models: A survey. Comput. Linguist. 2024, 50, 1097–1179.

6. Gao, R.; Ni, Q.; Hu, B. Fairness of large language models in education. In Proceedings of the 2024 International Conference on Intelligent Education and Computer Technology, Guilin, China, 28–30 June 2024; p. 1.

7. Wang, P.; Li, L.; Chen, L.; Cai, Z.; Zhu, D.; Lin, B.; Cao, Y.; Liu, Q.; Liu, T.; Sui, Z. Large language models are not fair evaluators. arXiv 2023, arXiv:2305.17926.

8. Stengel-Eskin, E.; Hase, P.; Bansal, M. LACIE: Listener-aware finetuning for calibration in large language models. Adv. Neural Inf. Process. Syst. 2024, 37, 43080–43106.

9. Long, Y.; Luo, H.; Zhang, Y. Evaluating large language models in analysing classroom dialogue. npj Sci. Learn. 2024, 9, 60.

10. Zhang, D.W.; Boey, M.; Tan, Y.Y.; Jia, A.H.S. Evaluating large language models for criterion-based grading from agreement to consistency. npj Sci. Learn. 2024, 9, 79.

11. Jia, L.; Sun, H.; Jiang, J.; Yang, X. High-Quality Classroom Dialogue Automatic Analysis System. Appl. Sci. 2025, 15, 1613.

12. Yuan, S. Design of a multimodal data mining system for school teaching quality analysis. In Proceedings of the 2024 2nd International Conference on Information Education and Artificial Intelligence, Kaifeng, China, 20–22 December 2024; pp. 579–583.

13. Huang, C.; Zhu, J.; Ji, Y.; Shi, W.; Yang, M.; Guo, H.; Ling, J.; De Meo, P.; Li, Z.; Chen, Z. A Multi-Modal Dataset for Teacher Behavior Analysis in Offline Classrooms. Sci. Data 2025, 12, 1115.

14. Lu, W.; Yang, Y.; Song, R.; Chen, Y.; Wang, T.; Bian, C. A Video Dataset for Classroom Group Engagement Recognition. Sci. Data 2025, 12, 644.

15. Hong, Y.; Lin, J. On convergence of adam for stochastic optimization under relaxed assumptions. Adv. Neural Inf. Process. Syst. 2024, 37, 10827–10877.

16. Mantios, J. Teaching Assistant Evaluation Dataset. Kaggle. Available online: https://www.kaggle.com/datasets/johnmantios/teaching-assistant-evaluation-dataset (accessed on 2 October 2025).

17. Choi, Y.; Lee, Y.; Shin, D.; Cho, J.; Park, S.; Lee, S.; Baek, J.; Bae, C.; Kim, B.; Heo, J. EdNet: A large-scale hierarchical dataset in education. arXiv 2019, arXiv:1912.03072.

18. Wang, X.; Li, Y.; Zhang, Z. MM-TBA. Figshare. 2025. Available online: https://figshare.com/articles/dataset/MM-TBA/28942505 (accessed on 2 October 2025).

19. Nexdata Technology Inc. 55 Hours—British Children Speech Data by Microphone. Nexdata. Available online: https://www.nexdata.ai/datasets/speechrecog/62 (accessed on 2 October 2025).

20. Gupta, R. Bidirectional encoders to state-of-the-art: A review of BERT and its transformative impact on natural language processing. Инфoрмaтикa. Экoнoмикa. Упрaвление/Informatics. Econ. Manag. 2024, 3, 311–320.

21. Wu, W.; Li, W.; Xiao, X.; Liu, J.; Li, S. Instructeval: Instruction-tuned text evaluator from human preference. In Findings of the Association for Computational Linguistics ACL; Association for Computational Linguistics: Bangkok, Thailand, 2024; pp. 13462–13474.

22. Gallifant, J.; Fiske, A.; Levites Strekalova, Y.A.; Osorio-Valencia, J.S.; Parke, R.; Mwavu, R.; Martinez, N.; Gichoya, J.W.; Ghassemi, M.; Demner-Fushman, D.; et al. Peer review of GPT-4 technical report and systems card. PLoS Digit. Health 2024, 3, e0000417.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).