Innovating Intrusion Detection Classification Analysis for an Imbalanced Data Sample

Abstract

1. Introduction

- How do IDC assessments direct the classifiers’ analysis domains related to cybersecurity aspects?

- What kinds of output dominate IDS classification when exploring the dataset?

- What implications do these findings have for IDS policy and strategy?

2. Related Works

- Use metrics that are capable of clarifying the model’s performance, particularly on the minority set. Such metrics include recall, precision, F1-score, and the ROC curve with its AUC.

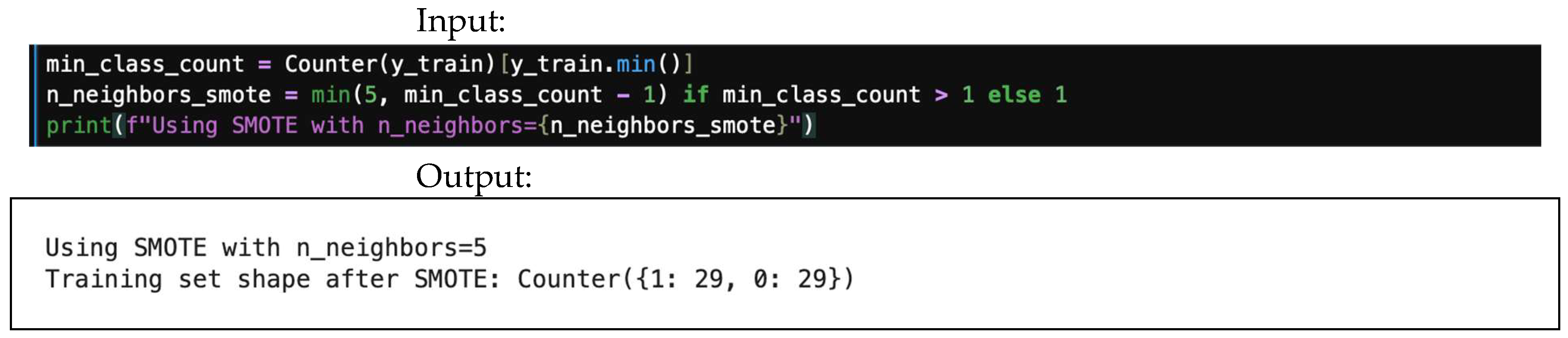

- Resampling is used to balance the class distribution. SMOTE, which stands for synthetic minority oversampling technique, generates synthetic minority samples, whereas undersampling reduces the number of majority samples. Undersampling is effective; however, it causes data loss.

- Algorithm-based tuning is used to balance the classes or assign higher weights to the minority set to minimize bias. It is a major approach that offers cost-sensitive learning and class-weighted models, and it reduces the influence of imbalance. Algorithms such as random forest and XGBoost are among the most effective ones.

- Data augmentation is an effective technique used to enlarge the minority set, for example, by rotating or flipping image samples, to balance the dataset.

- Anomaly detection treats the minority class as anomalies relative to the majority class.

- Domain understanding is useful, given that the minority class is rare.

- Experimental methods are used to examine different resampling methods, models, and measures.

- Cross-validation (CV)-based methods use different combinations/permutations of training and validation splits to validate the system’s performance and achieve generalization.

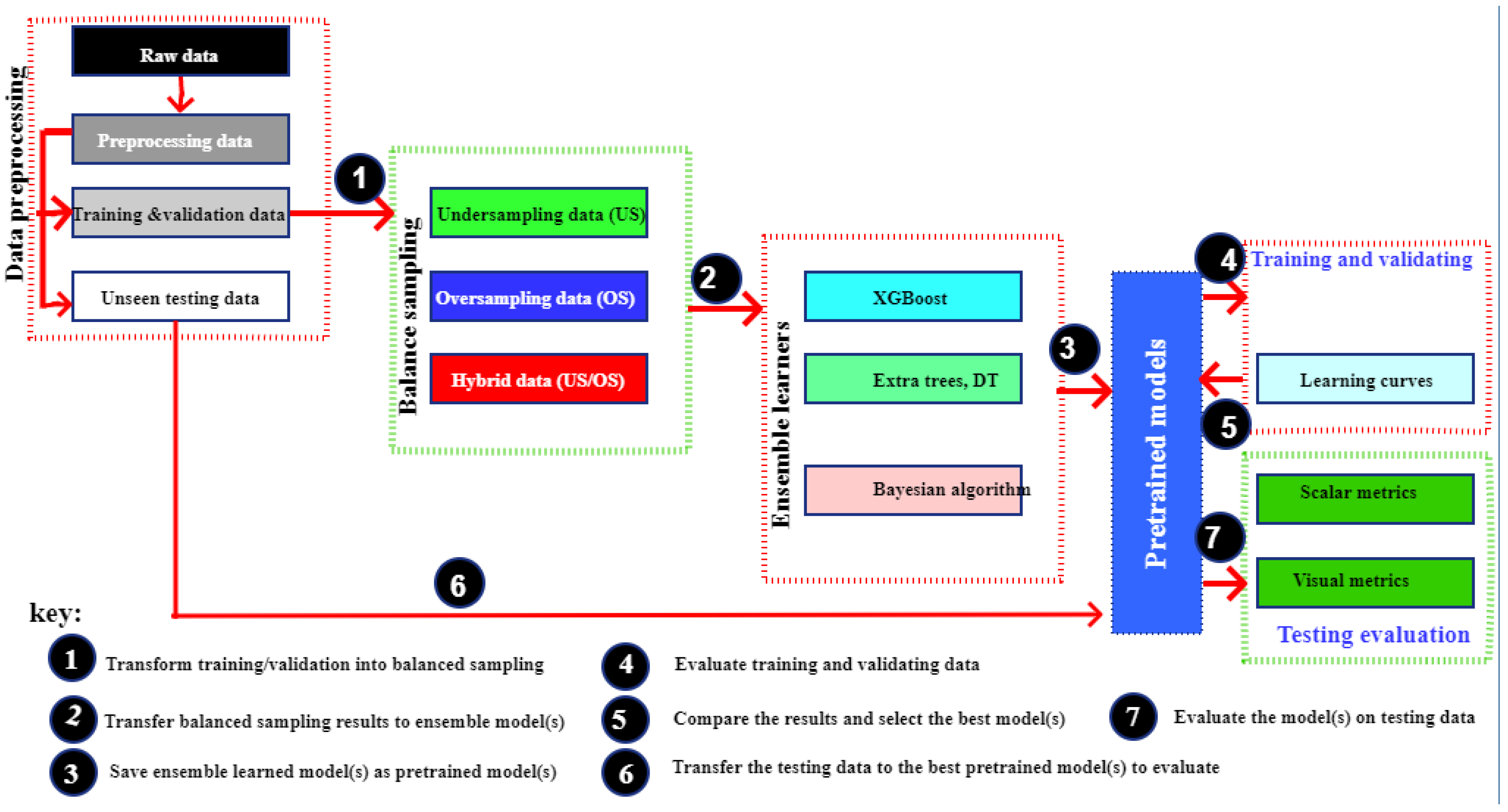
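The class-weighting idea in the list above can be illustrated with a minimal sketch. It assumes the common "balanced" heuristic, in which class c receives the weight N / (K · n_c) for N samples, K classes, and n_c samples in class c. The label counts are hypothetical and are not taken from the dataset used in this article.

```python
from collections import Counter


def balanced_class_weights(labels):
    """Return {class: weight} using weight = N / (K * n_c)."""
    counts = Counter(labels)
    n_samples = len(labels)
    n_classes = len(counts)
    return {c: n_samples / (n_classes * n) for c, n in counts.items()}


# Hypothetical imbalanced labels: 90 normal records (0) and 10 threats (1).
y = [0] * 90 + [1] * 10
weights = balanced_class_weights(y)
print(weights)  # the rare class receives the larger weight
```

With these counts, an error on the minority class costs nine times as much as an error on the majority class, which is how a class-weighted model counteracts the bias toward the majority set.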

3. Materials and Methods

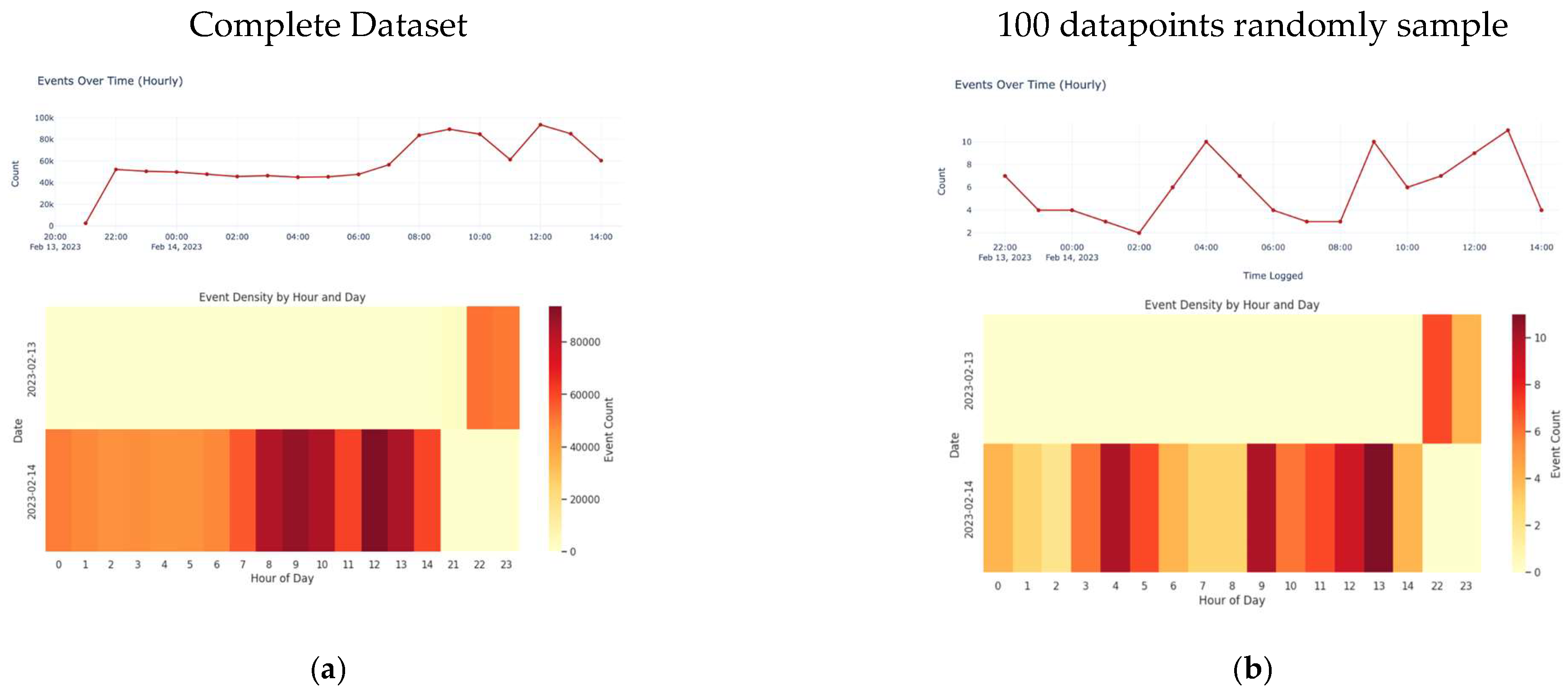

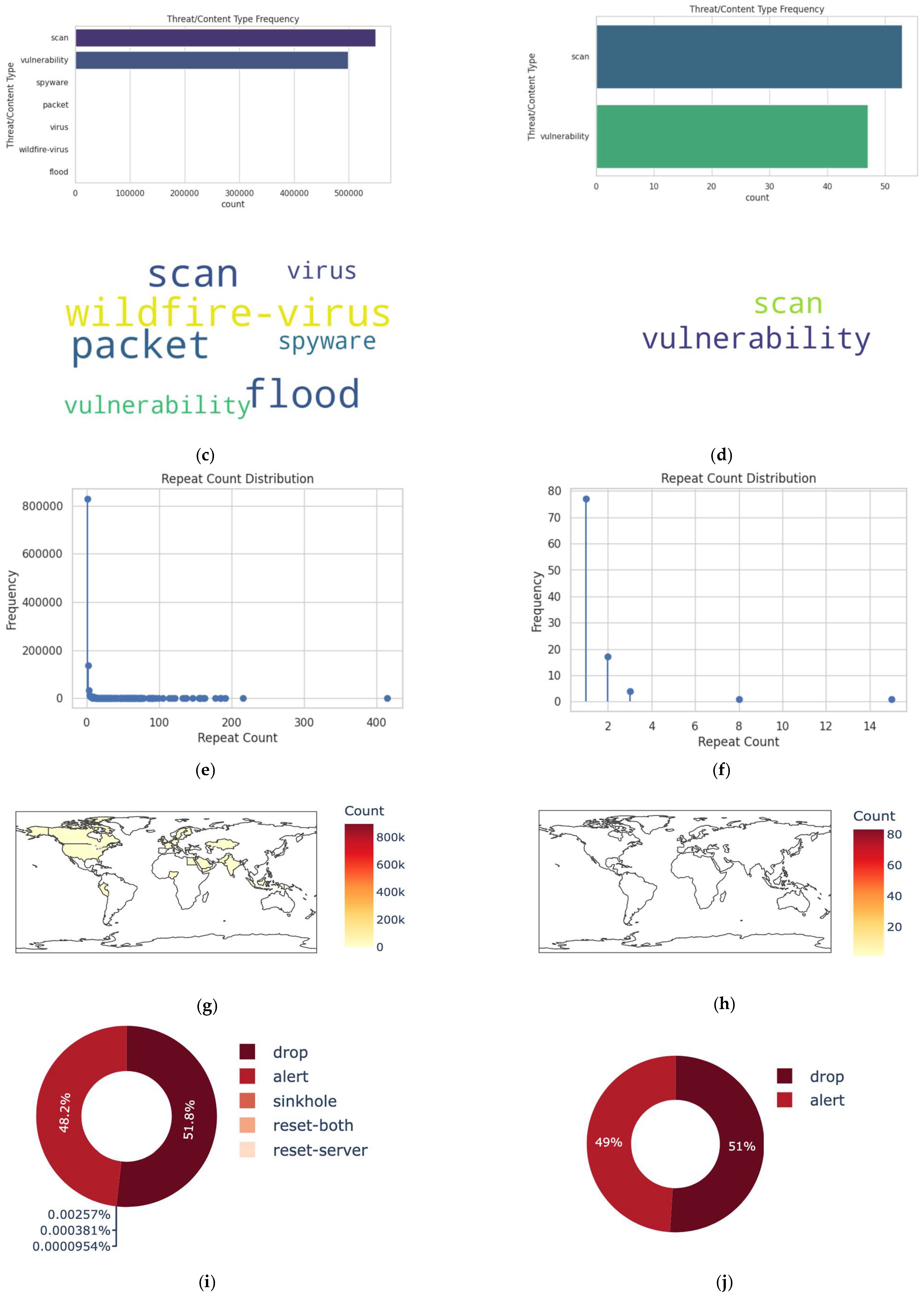

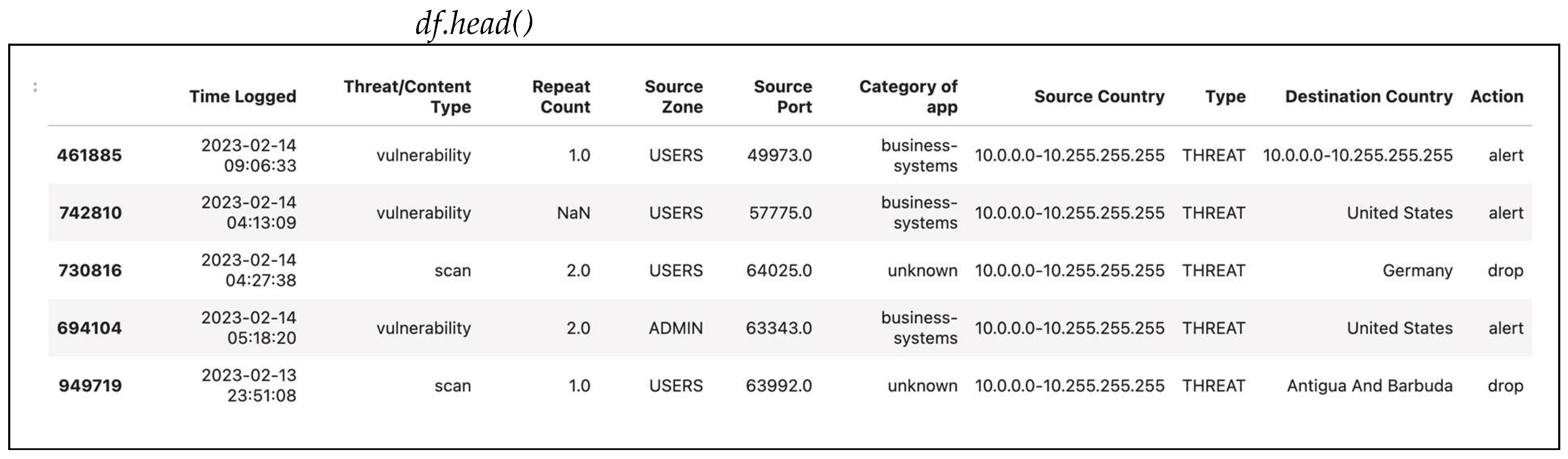

3.1. Overview of the Dataset

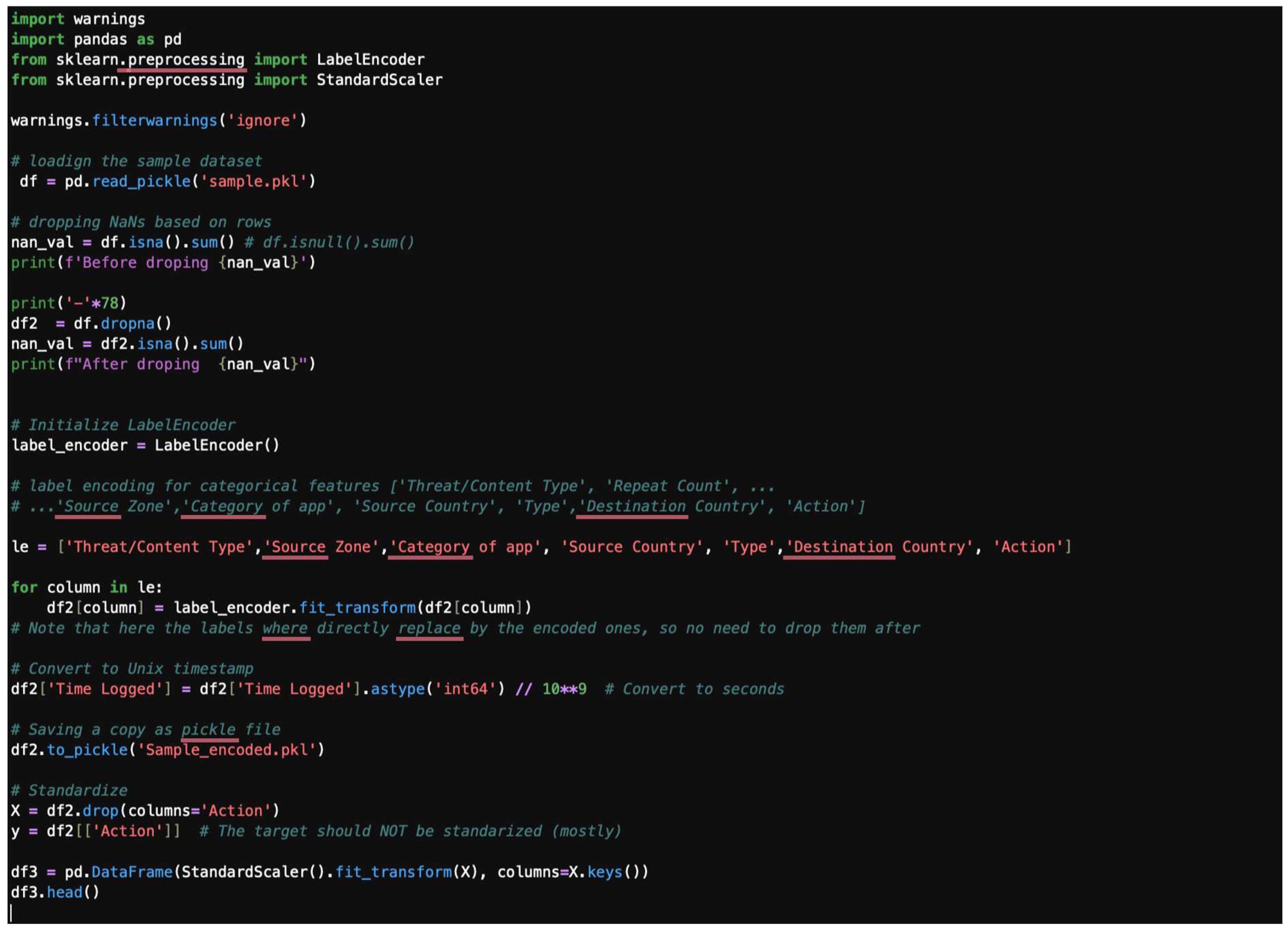

3.2. Preprocessing

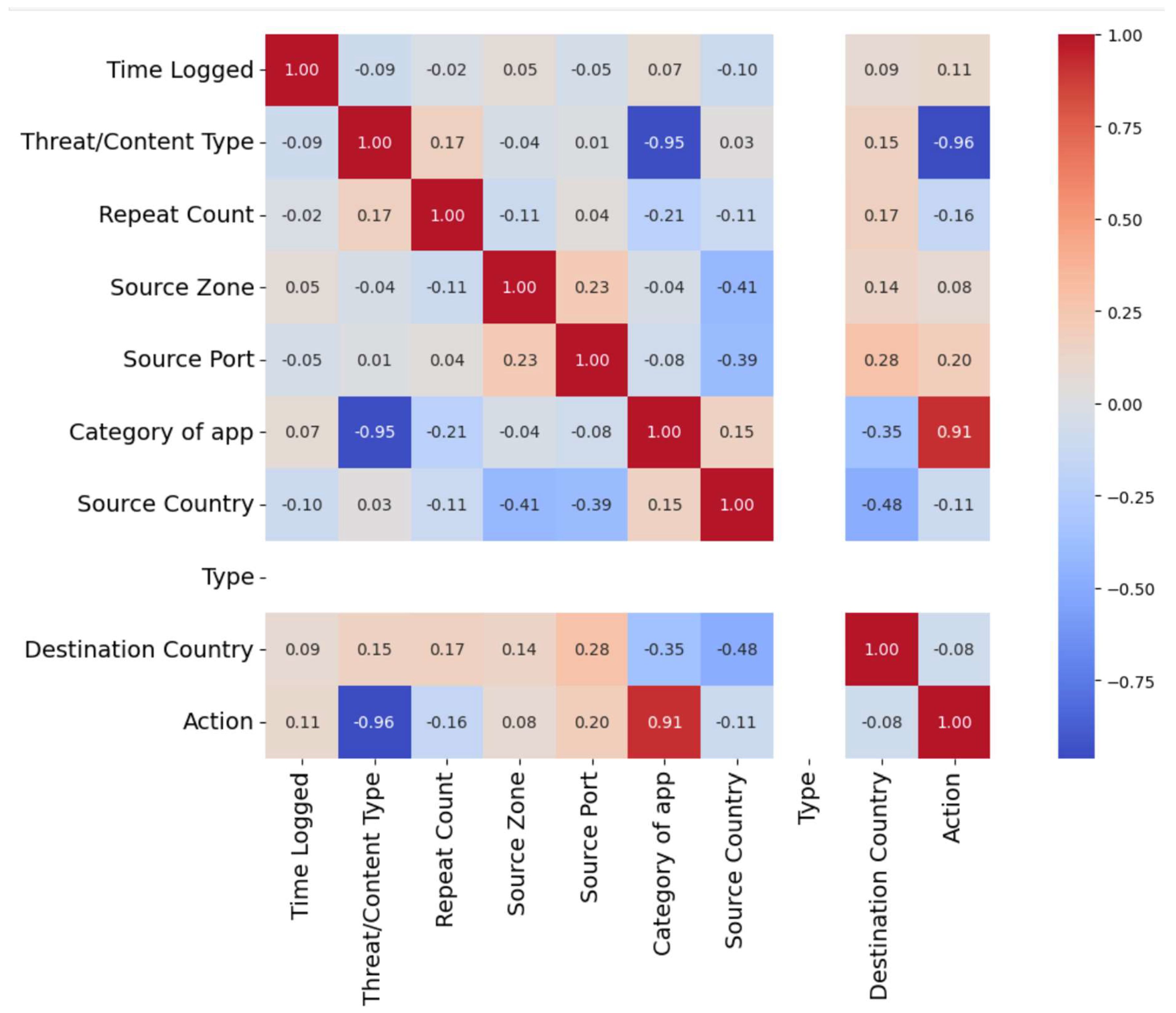

3.3. Visualization

3.4. Core Classification Formulas

- Linear classifiers separate classes with a linear decision boundary (hyperplane) of the following form:

- The decision rule is

- Logistic regression:

- Linear discriminant analysis (LDA) uses the class means (μ0, μ1) and a shared covariance, Σ:

- A linear support vector machine (SVM) maximizes the margin subject to constraints for

- Nonlinear classifiers separate data using a nonlinear decision function and take the form of

- Kernel SVM replaces the linear dot product with a kernel K(Xi, Xj):

- A Decision Tree splits the feature space recursively using thresholds:

- The k-Nearest Neighbor (k-NN) method assigns a class based on majority vote, as

- Neural Networks (MLP) use layers of weighted sums plus nonlinear activation functions:

- The output, i.e., softmax for multiclass, is calculated by

- A model learns the mapping as follows:

- Decision rule: y = 1 if P(y = 1 | x) ≥ 0.5.

- SMOTE generates synthetic samples for the minority class of an imbalanced dataset. For each minority sample, xi, perform the following:

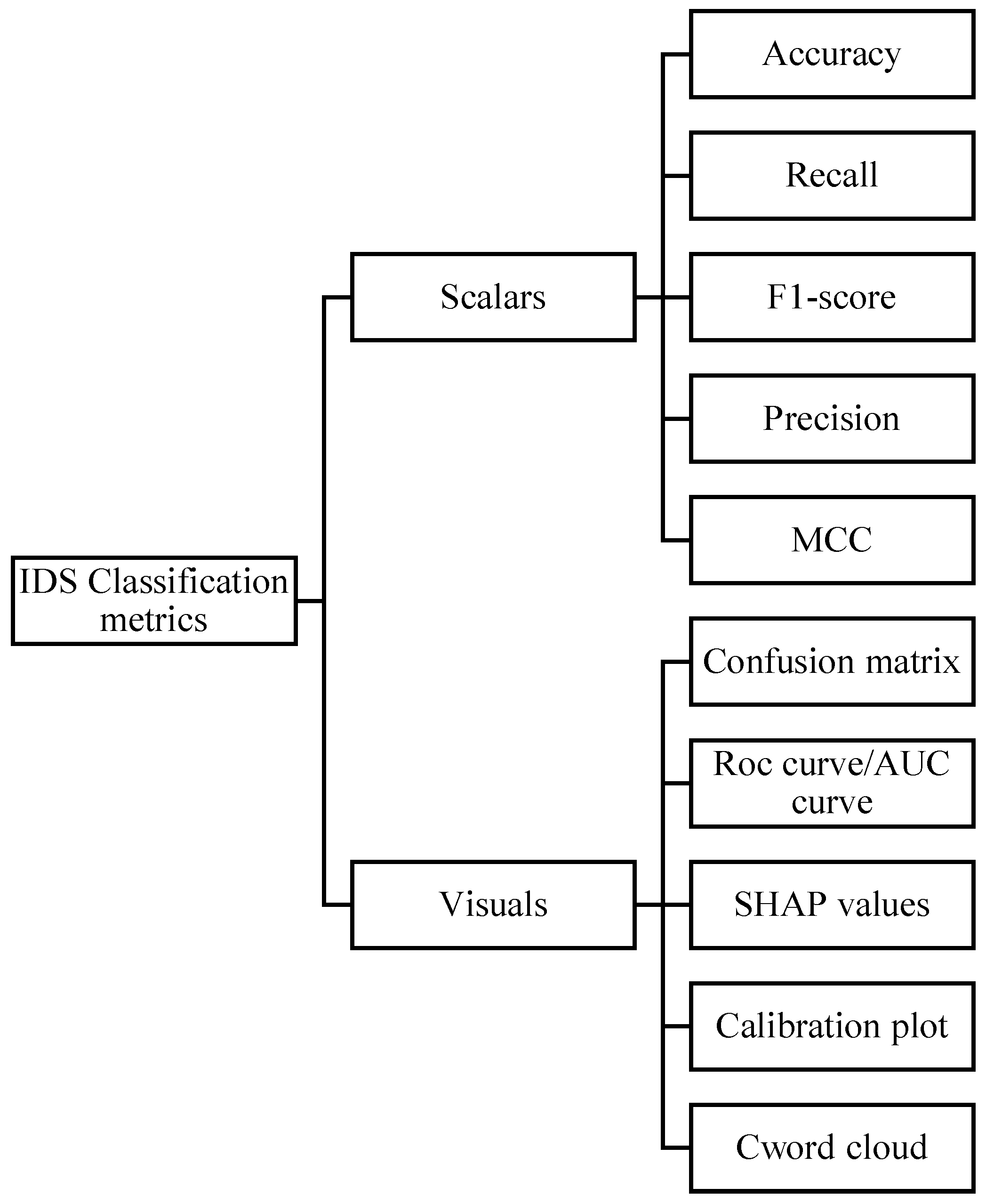
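For reference, the models listed in this subsection are commonly written in the following textbook forms. The notation (w, b, σ, α, λ, π) is an assumption and may differ from the authors' own equations. Logistic regression and the decision rule use labels in {0, 1}, while the SVM lines use the usual {−1, +1} convention.

```latex
\begin{align*}
f(\mathbf{x}) &= \mathbf{w}^{\top}\mathbf{x} + b
  && \text{(linear decision function)} \\
\hat{y} &= \begin{cases} 1, & f(\mathbf{x}) \ge 0 \\ 0, & \text{otherwise} \end{cases}
  && \text{(linear decision rule)} \\
P(y = 1 \mid \mathbf{x}) &= \frac{1}{1 + e^{-(\mathbf{w}^{\top}\mathbf{x} + b)}}
  && \text{(logistic regression)} \\
\delta_k(\mathbf{x}) &= \mathbf{x}^{\top}\Sigma^{-1}\mu_k
  - \tfrac{1}{2}\,\mu_k^{\top}\Sigma^{-1}\mu_k + \log \pi_k
  && \text{(LDA discriminant, } k \in \{0, 1\}\text{)} \\
\min_{\mathbf{w},\, b}\ \tfrac{1}{2}\lVert \mathbf{w} \rVert^{2}
  \quad &\text{s.t.}\quad y_i\,(\mathbf{w}^{\top}\mathbf{x}_i + b) \ge 1,\quad i = 1, \dots, n
  && \text{(hard-margin linear SVM)} \\
f(\mathbf{x}) &= \sum_{i=1}^{n} \alpha_i\, y_i\, K(\mathbf{x}_i, \mathbf{x}) + b
  && \text{(kernel SVM)} \\
&\ \text{go left if } x_j \le t,\ \text{otherwise right}
  && \text{(decision-tree split on feature } j\text{)} \\
\hat{y} &= \arg\max_{c} \sum_{i \in N_k(\mathbf{x})} \mathbb{1}[\,y_i = c\,]
  && \text{($k$-NN majority vote)} \\
\mathbf{h}^{(l)} &= \sigma\!\left(W^{(l)}\mathbf{h}^{(l-1)} + \mathbf{b}^{(l)}\right)
  && \text{(MLP layer)} \\
\operatorname{softmax}(\mathbf{z})_c &= \frac{e^{z_c}}{\sum_{j} e^{z_j}}
  && \text{(multiclass output)} \\
\mathbf{x}_{\text{new}} &= \mathbf{x}_i + \lambda\,(\mathbf{x}_{nn} - \mathbf{x}_i),
  \quad \lambda \sim U(0, 1)
  && \text{(SMOTE interpolation)}
\end{align*}
```

Here N_k(x) denotes the k nearest training samples to x, and x_nn is one of the k nearest minority-class neighbors of x_i.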

3.5. Classification Metrics

4. Experiments and Results

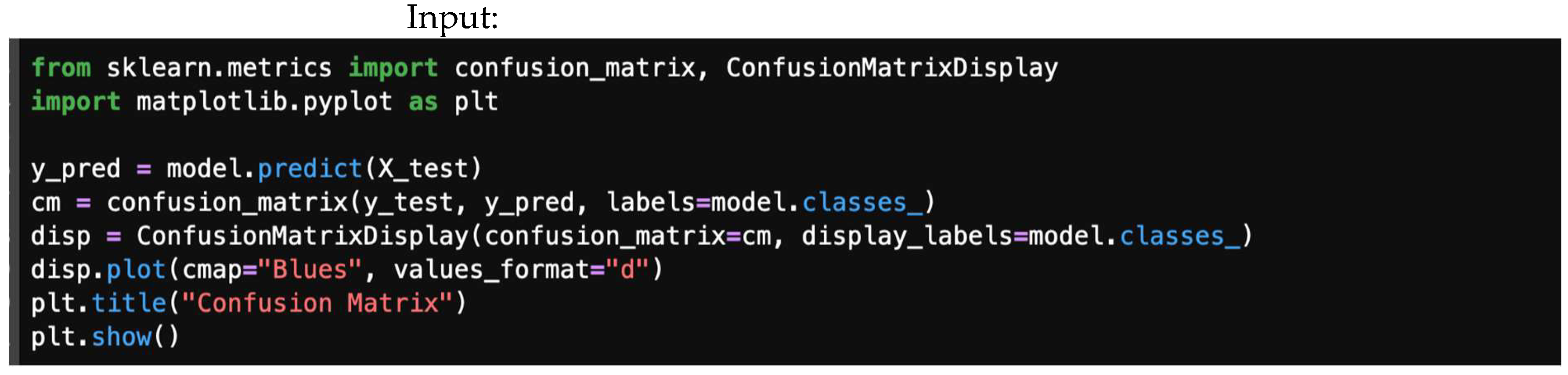

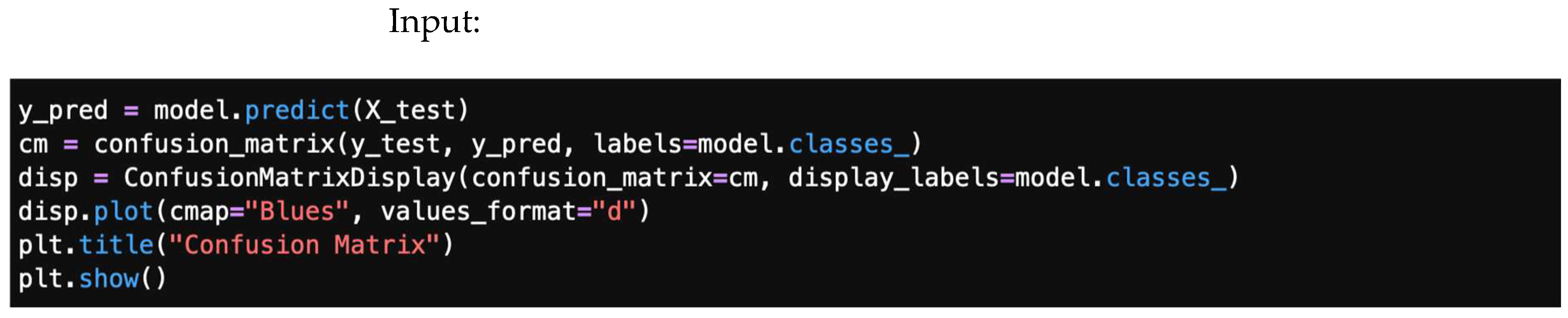

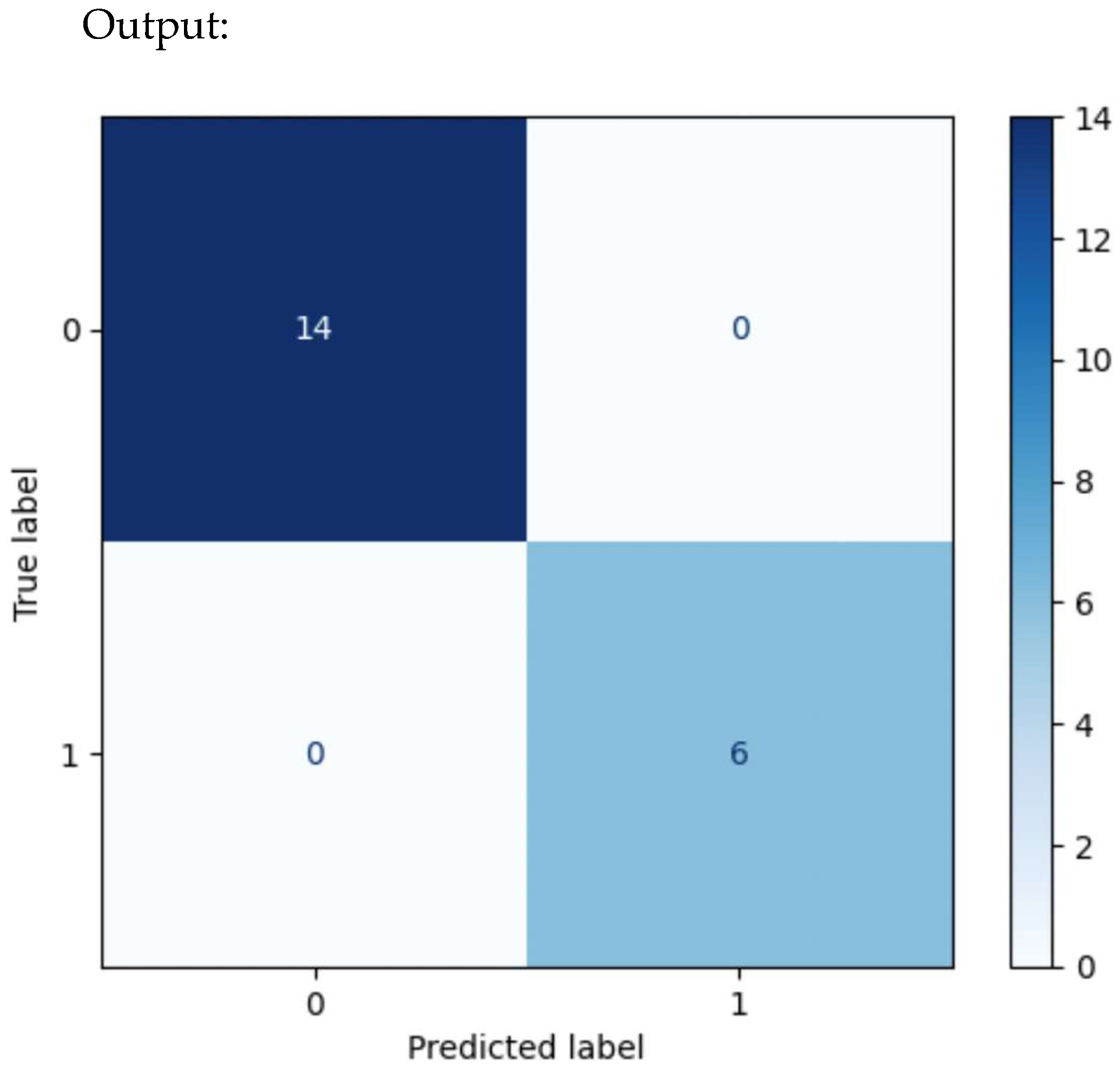

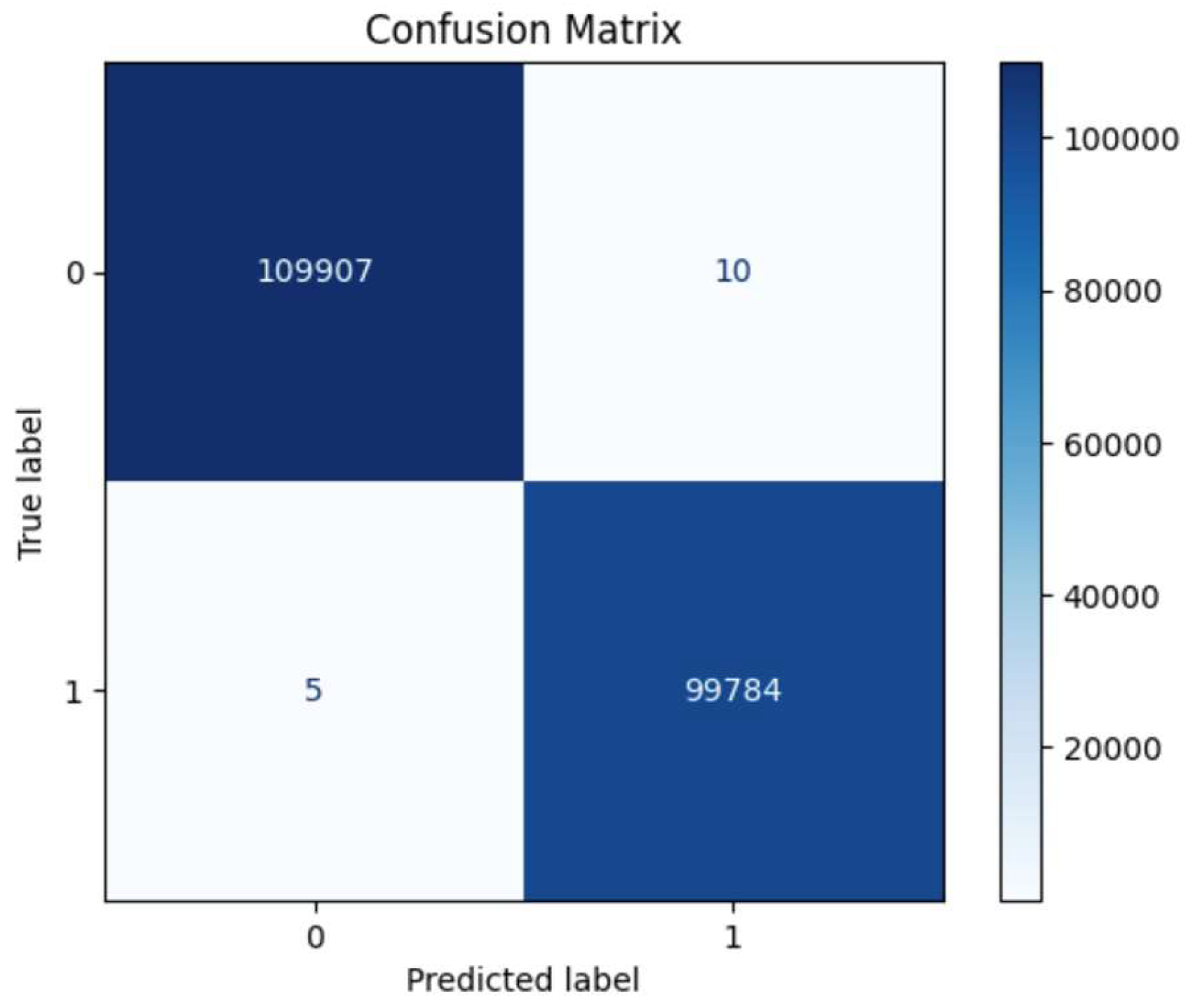

4.1. Confusion Matrix

- TP (true positive): Threat correctly detected.

- TN (true negative): Normal traffic correctly classified.

- FP (false positive): Normal traffic flagged as a threat (false alarm).

- FN (false negative): Threat missed, classified as normal.
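The four outcomes above can be counted directly, and the usual summary metrics follow from them. The sketch below uses hypothetical labels (1 = threat, 0 = normal traffic), not results from this article.

```python
def confusion_counts(y_true, y_pred, positive=1):
    """Count TP, TN, FP, and FN for a binary problem."""
    tp = tn = fp = fn = 0
    for truth, pred in zip(y_true, y_pred):
        if truth == positive and pred == positive:
            tp += 1  # threat correctly detected
        elif truth != positive and pred != positive:
            tn += 1  # normal traffic correctly classified
        elif truth != positive and pred == positive:
            fp += 1  # false alarm
        else:
            fn += 1  # missed threat
    return tp, tn, fp, fn


def summarize(tp, tn, fp, fn):
    """Derive accuracy, precision, recall, and F1 from the four counts."""
    accuracy = (tp + tn) / (tp + tn + fp + fn)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    return accuracy, precision, recall, f1


# Hypothetical ground truth and predictions for ten records.
y_true = [1, 1, 1, 0, 0, 0, 0, 0, 0, 0]
y_pred = [1, 1, 0, 1, 0, 0, 0, 0, 0, 0]

tp, tn, fp, fn = confusion_counts(y_true, y_pred)
accuracy, precision, recall, f1 = summarize(tp, tn, fp, fn)
print(tp, tn, fp, fn)
print(accuracy, precision, recall, f1)
```

In this example the accuracy is 0.8, yet one of the three threats is missed, which is why recall and precision on the minority class are reported alongside accuracy.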

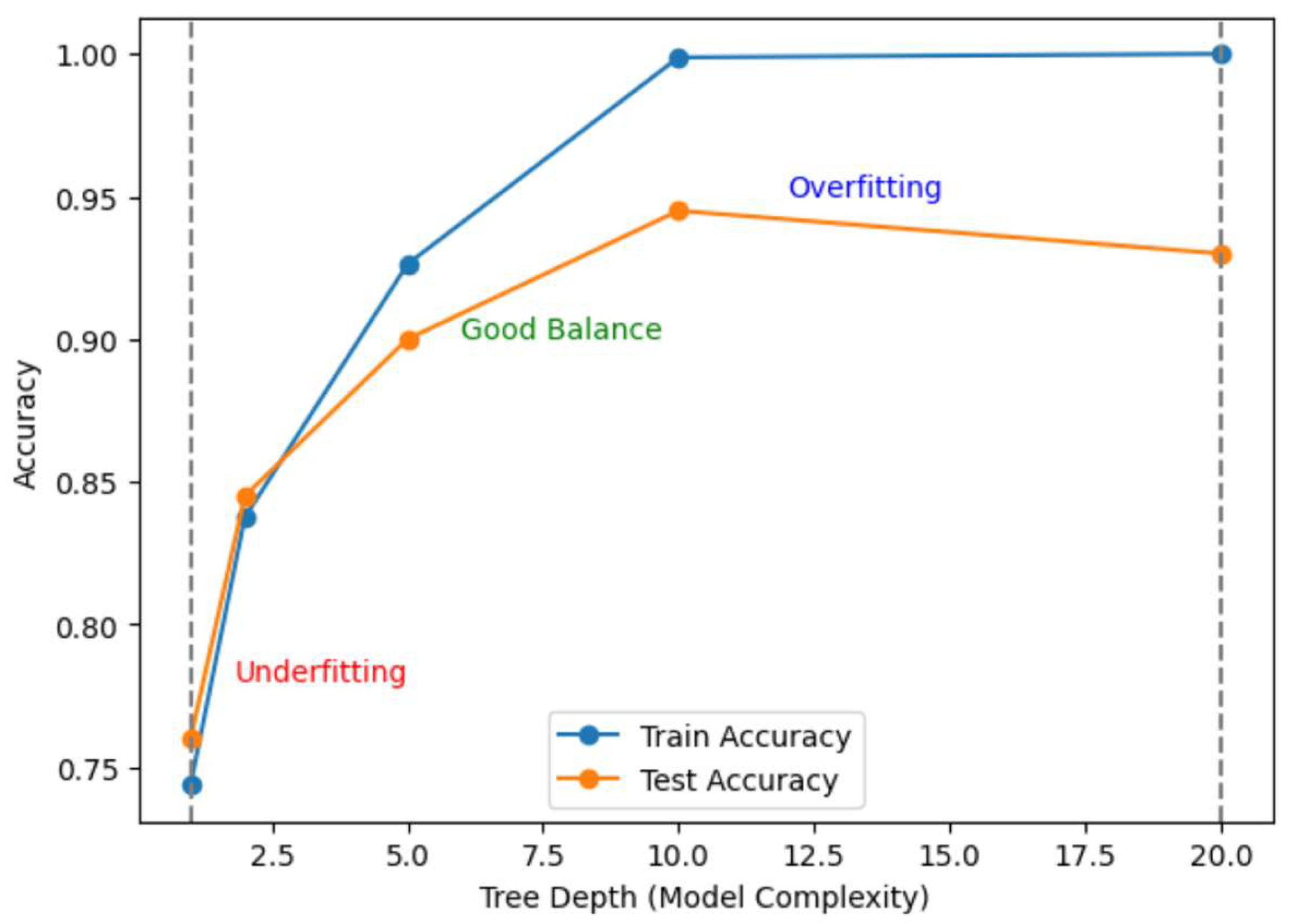

4.2. Accuracy

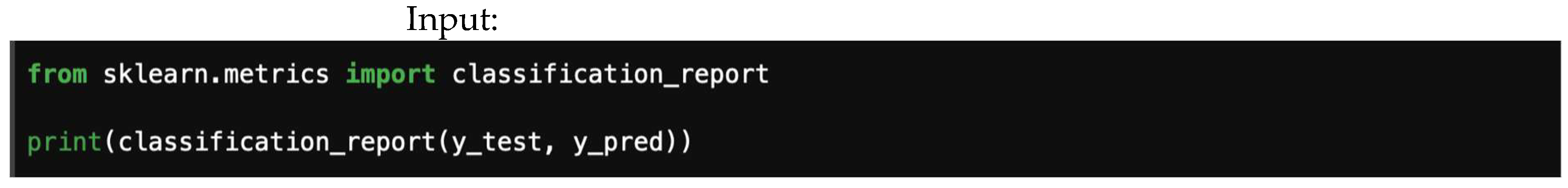

4.3. Precision, Recall, and F1-Score

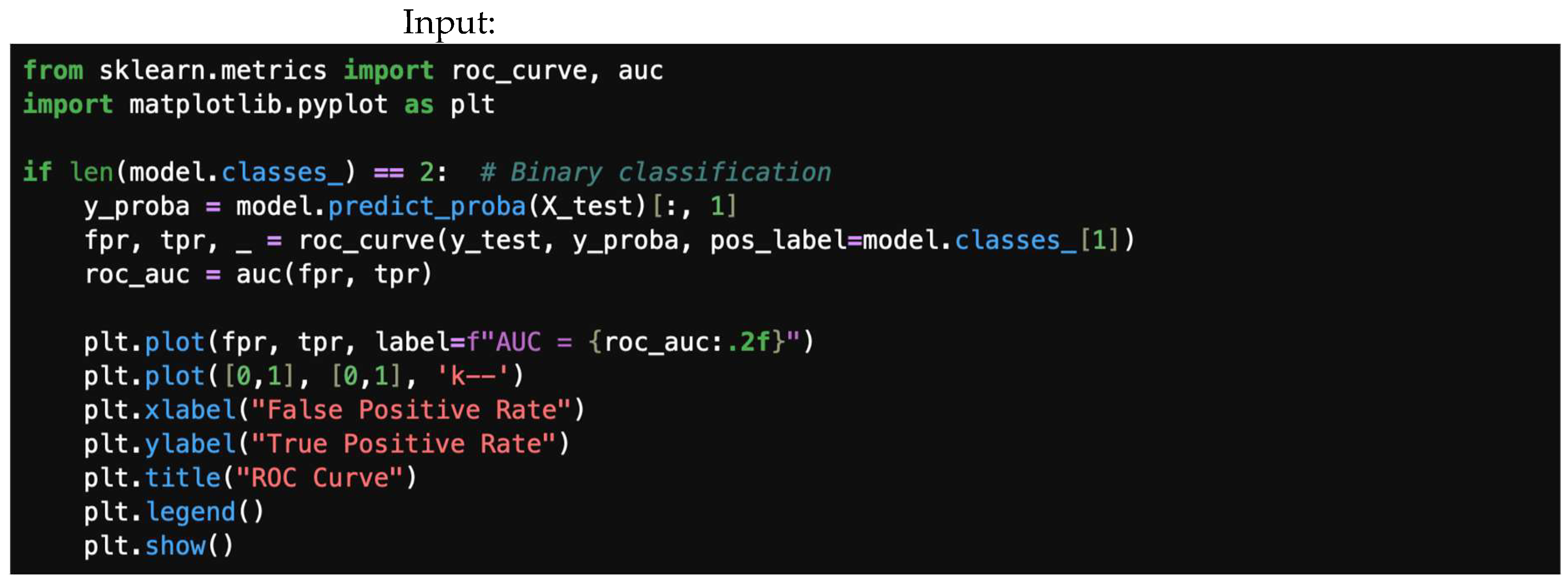

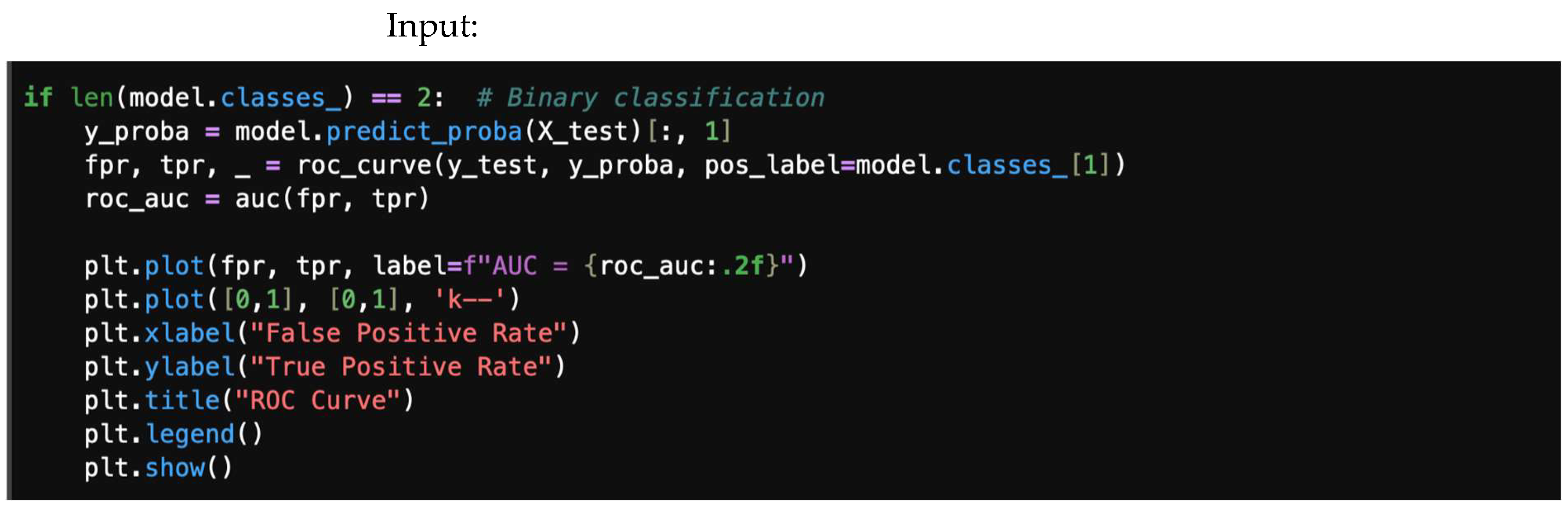

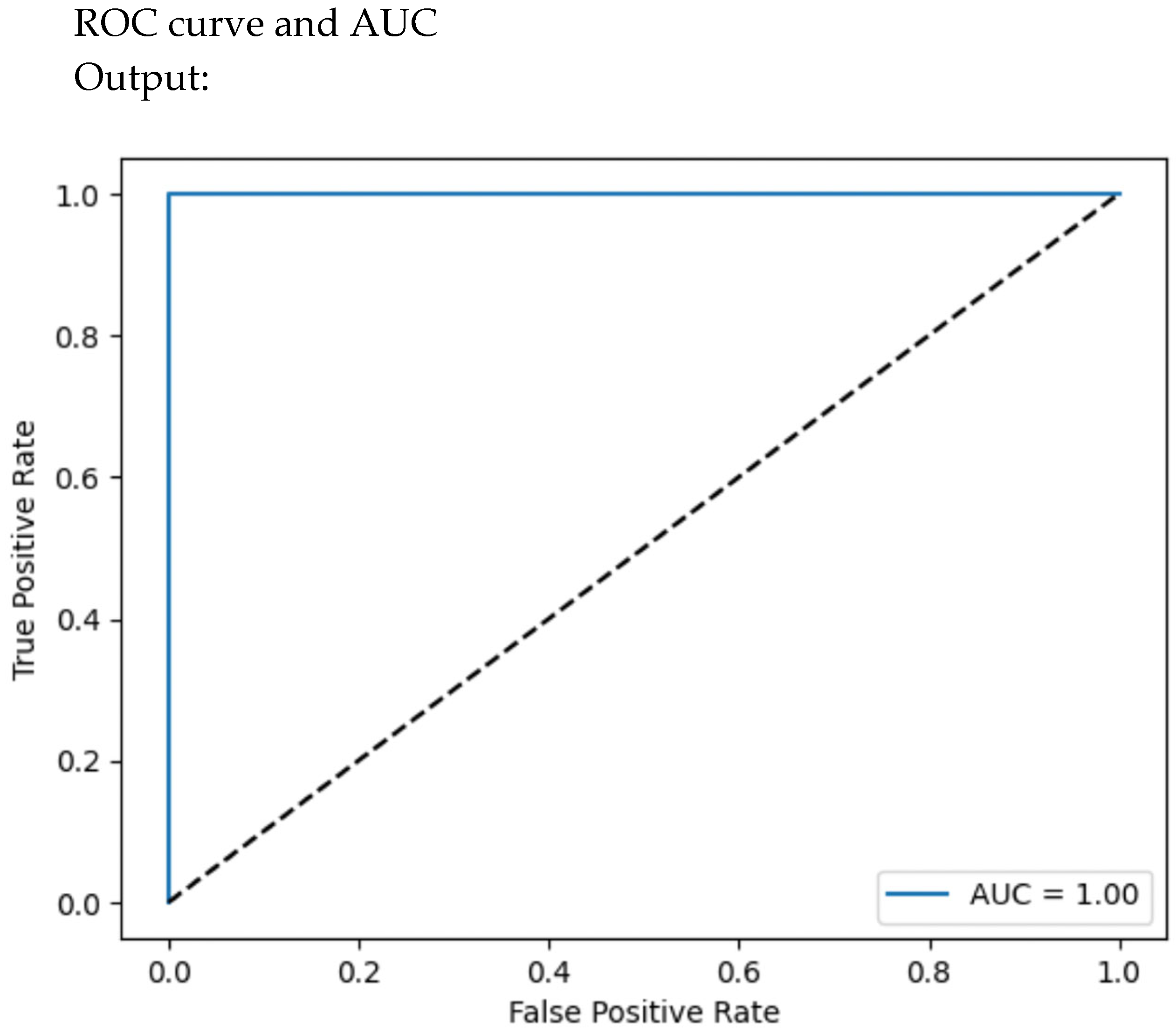

4.4. ROC Curve and AUC

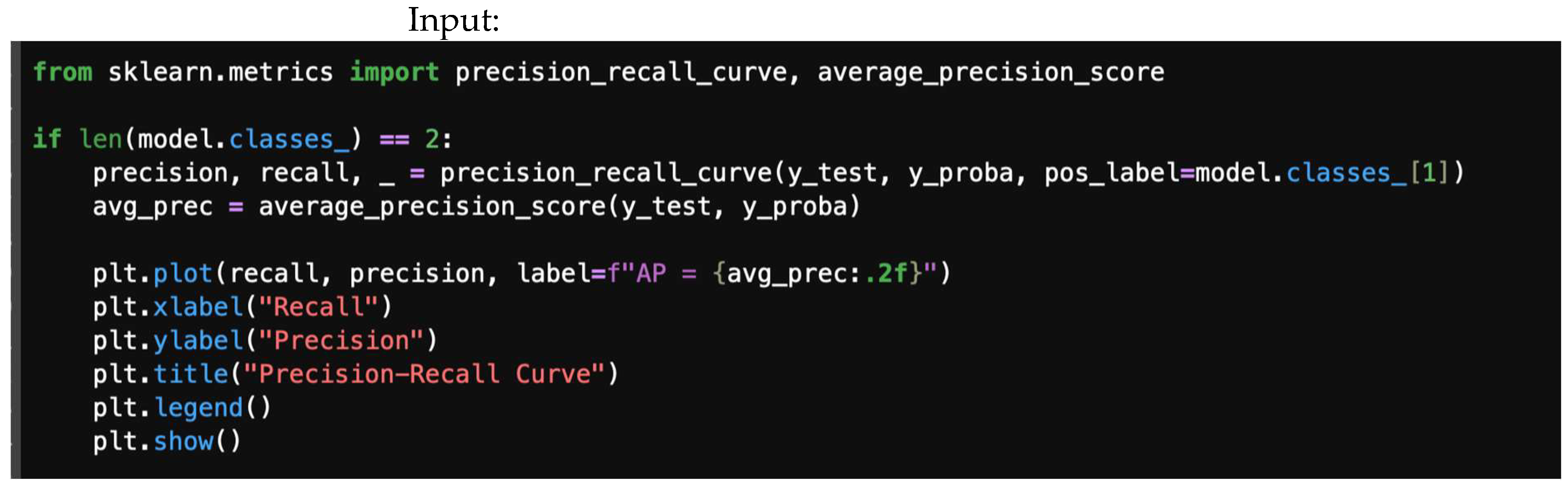

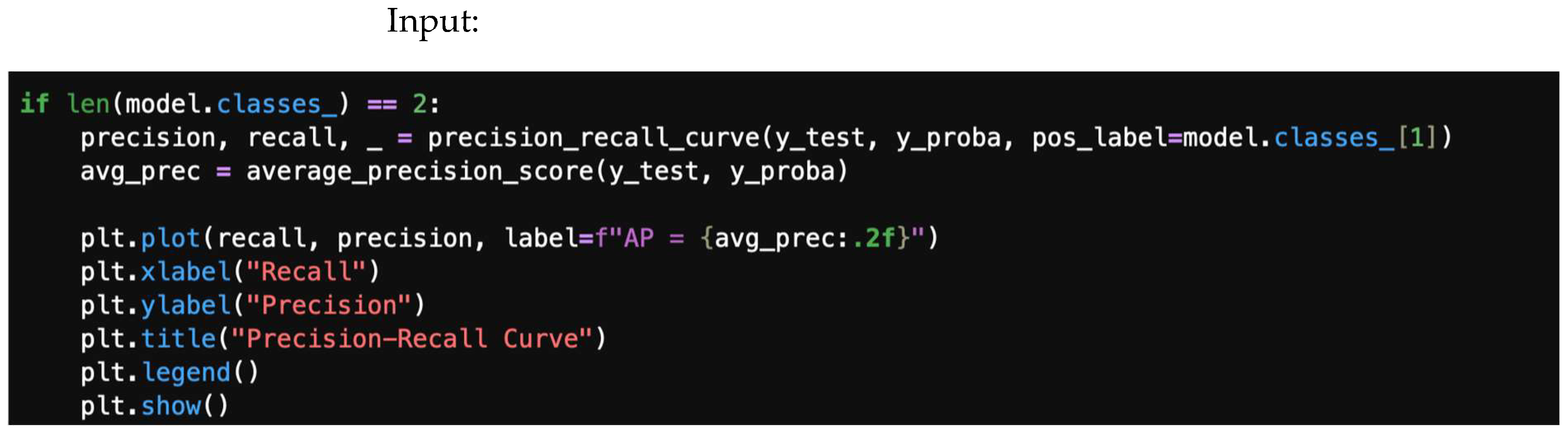

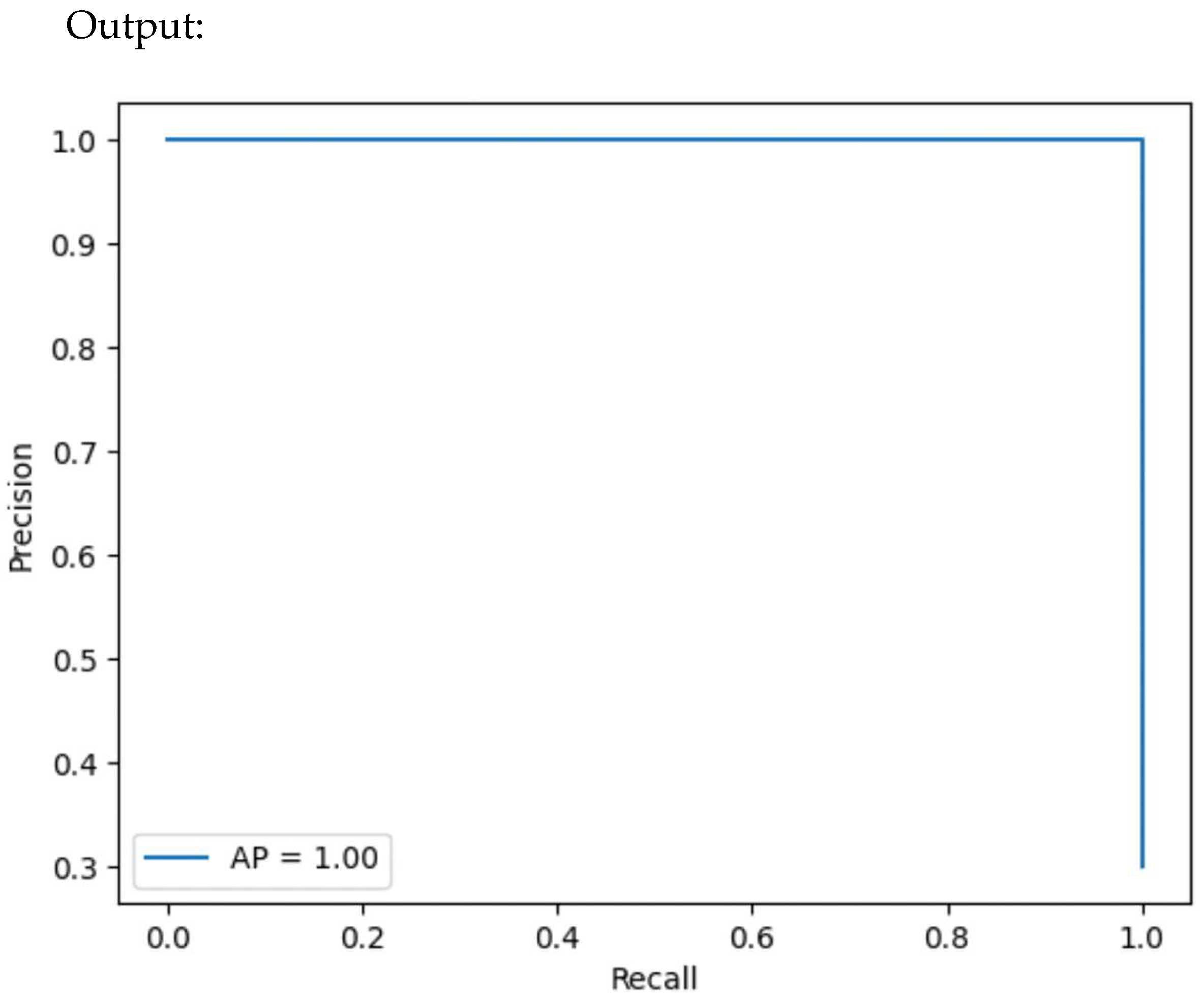

4.5. Precision–Recall Curve

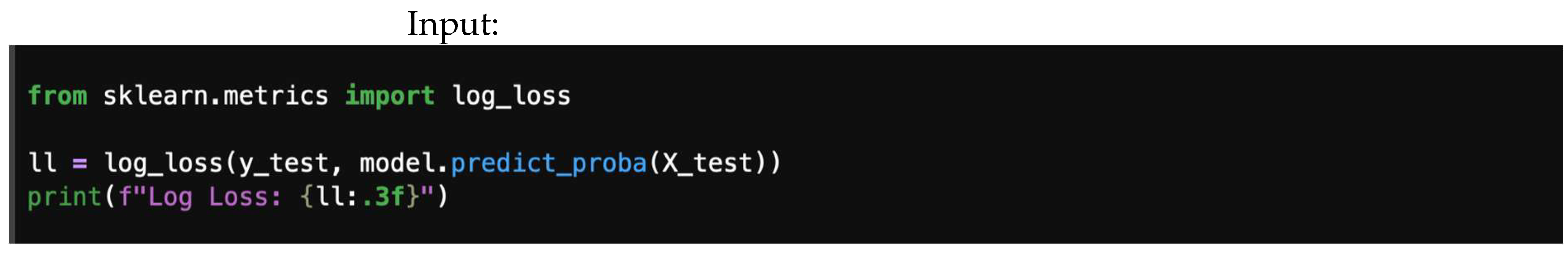

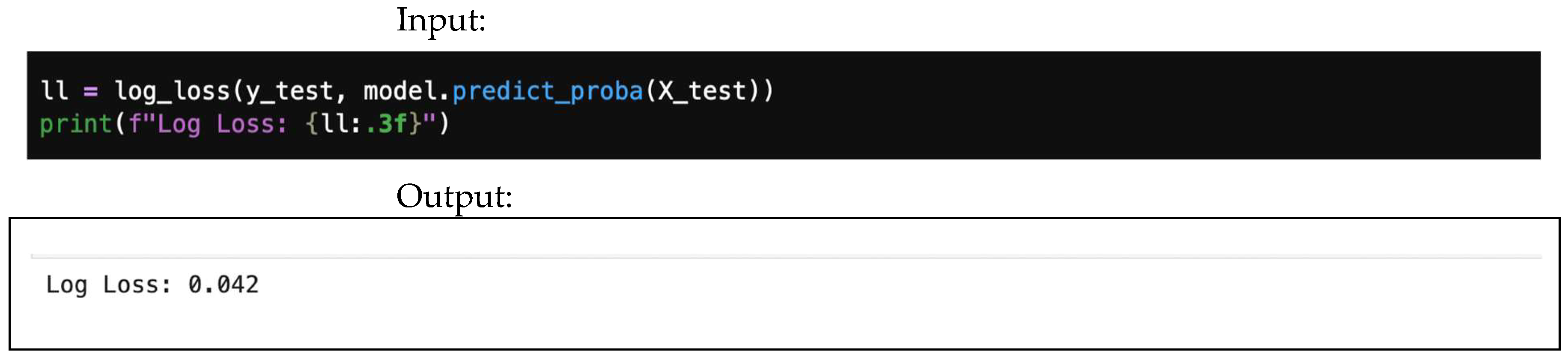

4.6. Logarithmic Loss (Log Loss)

- The true label indicator is 1 if the sample belongs to class c, and 0 otherwise.

- The predicted probability is the model’s estimate that the sample belongs to class c. It is obtained from the classifier’s predict_proba() method.
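A minimal sketch of the computation, assuming the standard definition of log loss as the negative mean, over samples, of the log-probability assigned to the true class. Because the label indicator is 1 only for the true class, the inner sum over classes reduces to a single term. The probabilities below are hypothetical stand-ins for rows returned by predict_proba().

```python
import math


def log_loss(y_true, proba, eps=1e-15):
    """Mean negative log-probability of the true class.

    y_true: list of integer class indices.
    proba:  list of rows, one probability per class.
    eps:    clipping bound that keeps log() finite for p = 0 or p = 1.
    """
    total = 0.0
    for label, row in zip(y_true, proba):
        p = min(max(row[label], eps), 1 - eps)
        total += -math.log(p)
    return total / len(y_true)


# Hypothetical predictions for three samples and two classes.
y_true = [0, 1, 1]
proba = [[0.9, 0.1], [0.2, 0.8], [0.6, 0.4]]
loss = log_loss(y_true, proba)
print(round(loss, 4))
```

The third sample dominates the loss because the model assigns only 0.4 to its true class; confident wrong predictions are penalized far more heavily than hesitant ones.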

4.7. Complete Example

4.7.1. Confusion Matrix

4.7.2. Accuracy

4.7.3. Precision, Recall, and F1-Score

4.7.4. ROC Curve and AUC

4.7.5. Precision–Recall Curve

4.7.6. Logarithmic Loss (Log Loss)

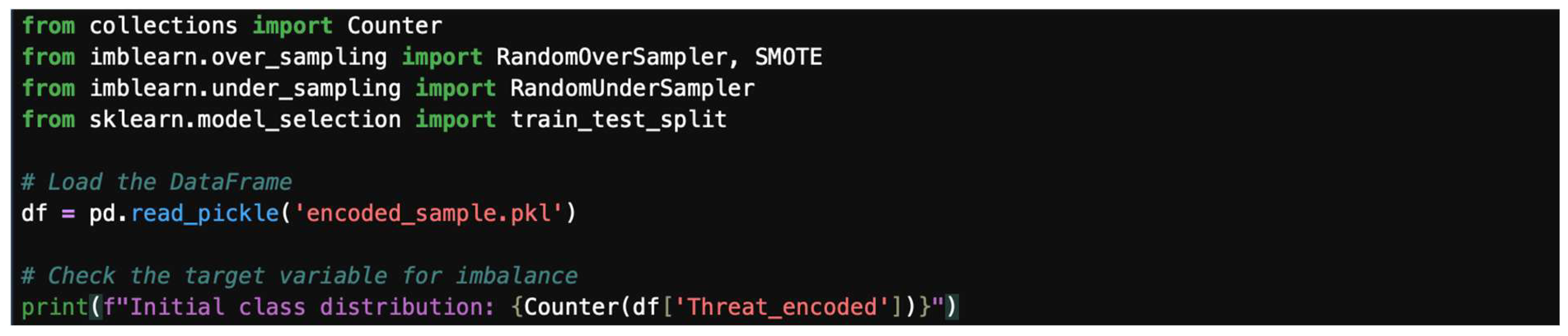

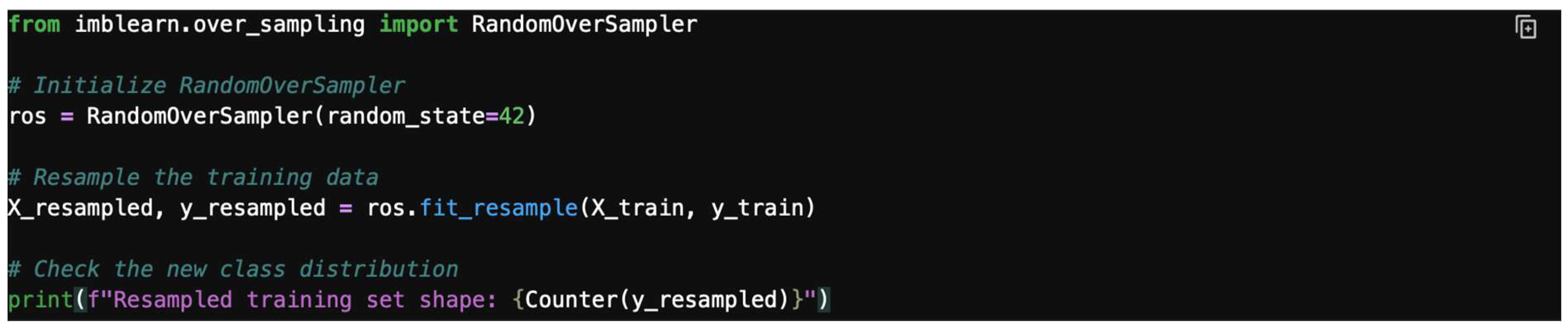

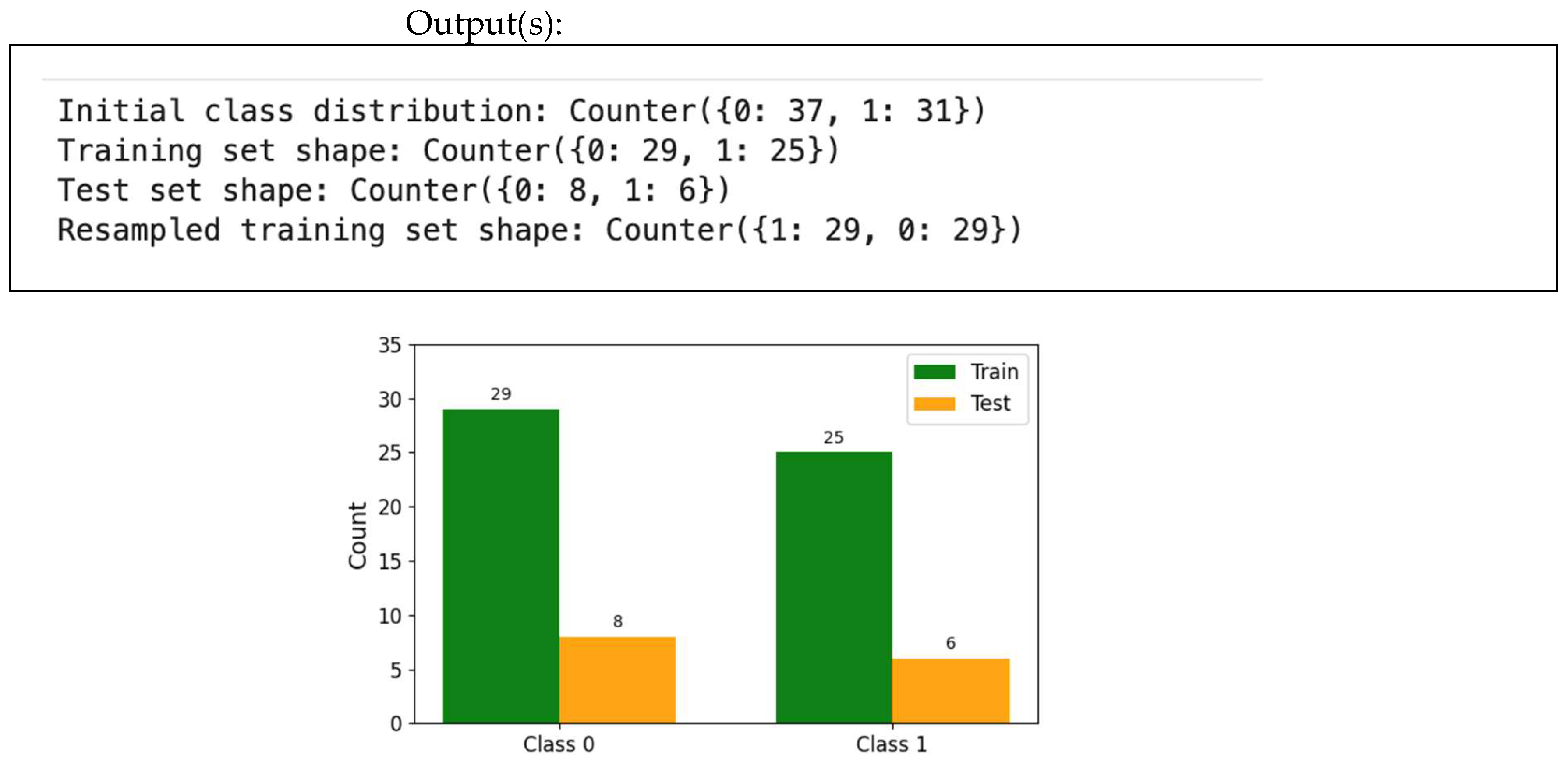

4.8. Handling Imbalanced Data

- Understand the problem of data imbalance.

- Learn various strategies to address imbalance (undersampling, oversampling, and hybrid).

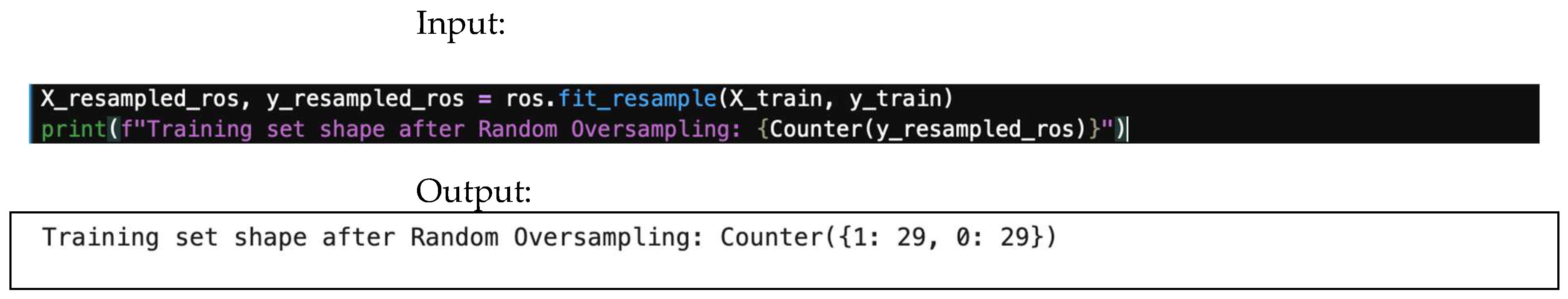

- Implement common techniques like RandomOverSampler and SMOTE.

- Understand the importance of applying these techniques after splitting data into train/test sets.

- Step 1: Load data and check for imbalance.

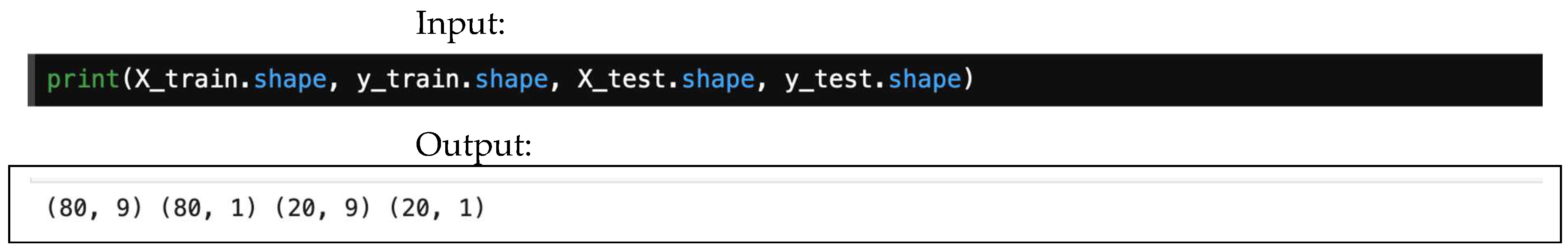

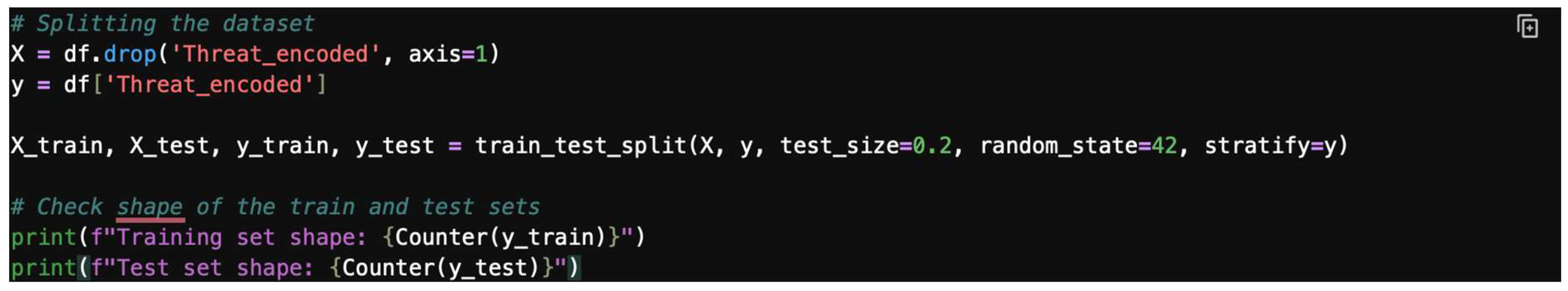

- Step 2: Split the data.

- Step 3: Resample to address imbalance.
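Steps 1 and 2 can be sketched without any library, assuming a binary label list. The helper `stratified_split` and the label counts are hypothetical; the seed 47 mirrors the random_state value listed in the SMOTE parameter table.

```python
import random
from collections import Counter, defaultdict


def stratified_split(labels, test_fraction=0.2, seed=47):
    """Split indices so each class keeps the same share in train and test."""
    rng = random.Random(seed)
    by_class = defaultdict(list)
    for idx, label in enumerate(labels):
        by_class[label].append(idx)

    train_idx, test_idx = [], []
    for indices in by_class.values():
        rng.shuffle(indices)
        n_test = round(len(indices) * test_fraction)
        test_idx.extend(indices[:n_test])
        train_idx.extend(indices[n_test:])
    return sorted(train_idx), sorted(test_idx)


# Step 1: check for imbalance (hypothetical labels, 90 vs. 10).
y = [0] * 90 + [1] * 10
print(Counter(y))

# Step 2: split first, so the test set keeps the original distribution.
train_idx, test_idx = stratified_split(y)
train_counts = Counter(y[i] for i in train_idx)
test_counts = Counter(y[i] for i in test_idx)
print(train_counts, test_counts)
```

Resampling (Step 3) is then applied to the training indices only. Resampling before the split would let copies or interpolations of test records leak into training and inflate the reported scores.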

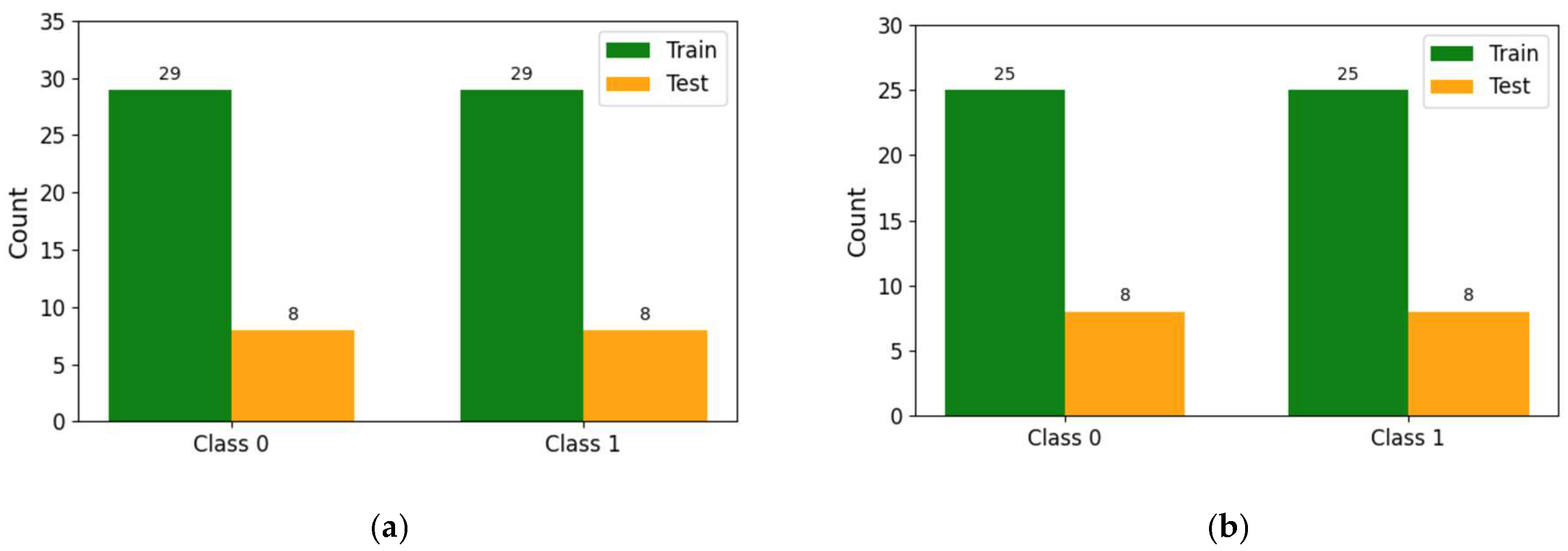

4.8.1. Random Oversampling
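As a rough illustration of the idea behind RandomOverSampler, the sketch below duplicates randomly chosen minority rows until every class matches the largest one. It is a simplified stand-in written for this explanation, not the library implementation, and the training data are hypothetical.

```python
import random
from collections import Counter


def random_oversample(X, y, seed=47):
    """Duplicate random rows of smaller classes up to the majority count."""
    rng = random.Random(seed)
    counts = Counter(y)
    target = max(counts.values())

    X_res, y_res = list(X), list(y)
    for label, n in counts.items():
        pool = [row for row, lab in zip(X, y) if lab == label]
        for _ in range(target - n):
            X_res.append(rng.choice(pool))  # exact copy of an existing row
            y_res.append(label)
    return X_res, y_res


# Hypothetical training split: 8 normal rows and 2 threat rows.
X_train = [[float(i)] for i in range(10)]
y_train = [0] * 8 + [1] * 2

X_res, y_res = random_oversample(X_train, y_train)
print(Counter(y_res))
```

Because the added rows are exact copies, the classifier sees no new information, which is the source of the overfitting risk noted for oversampling.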

4.8.2. SMOTE
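The core SMOTE step is an interpolation between a minority sample and one of its nearest minority neighbors. The sketch below shows only that step, under the assumption of numeric features and Euclidean distance; the function name and points are hypothetical, while the defaults k = 5 and seed 47 follow the parameter table.

```python
import math
import random


def smote_samples(minority, k=5, n_new=1, seed=47):
    """Create n_new synthetic points by interpolating toward a neighbor.

    Requires at least two minority samples.
    """
    rng = random.Random(seed)
    synthetic = []
    for _ in range(n_new):
        i = rng.randrange(len(minority))
        x_i = minority[i]
        # k nearest minority neighbors of x_i, excluding x_i itself.
        others = [j for j in range(len(minority)) if j != i]
        others.sort(key=lambda j: math.dist(x_i, minority[j]))
        x_nn = minority[rng.choice(others[:k])]
        lam = rng.random()  # interpolation factor in [0, 1)
        synthetic.append([a + lam * (b - a) for a, b in zip(x_i, x_nn)])
    return synthetic


# Hypothetical minority-class points on the unit square.
minority = [[0.0, 0.0], [1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
new_points = smote_samples(minority, k=2, n_new=5)
print(new_points)
```

Each synthetic point lies on the line segment between two real minority samples, so SMOTE adds variety that plain duplication cannot, at the cost of possibly placing points in regions that overlap the majority class.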

4.8.3. Random Undersampling (Use with Caution)
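Random undersampling can be sketched in the same style: rows of the larger classes are dropped at random until every class matches the smallest one. The helper and data below are hypothetical and only illustrate the mechanism.

```python
import random
from collections import Counter


def random_undersample(X, y, seed=47):
    """Keep a random subset of each class, sized to the minority count."""
    rng = random.Random(seed)
    counts = Counter(y)
    target = min(counts.values())

    keep = []
    for label in counts:
        indices = [i for i, lab in enumerate(y) if lab == label]
        keep.extend(rng.sample(indices, target))
    keep.sort()
    return [X[i] for i in keep], [y[i] for i in keep]


# Hypothetical training split: 8 normal rows and 2 threat rows.
X_train = [[float(i)] for i in range(10)]
y_train = [0] * 8 + [1] * 2

X_small, y_small = random_undersample(X_train, y_train)
print(Counter(y_small), len(X_small))
```

In this example six of the eight majority rows are discarded, which illustrates why the method should be used with caution: the balanced set is small, and the dropped rows may have carried useful information.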

4.9. Sampling Dataset

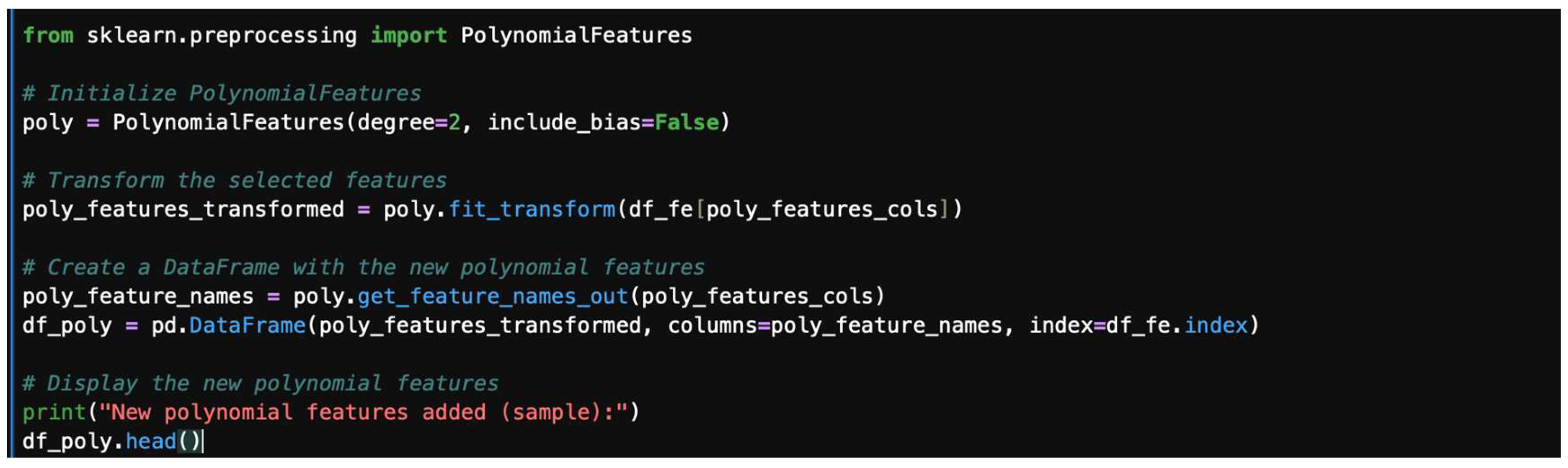

4.10. Advanced Feature Engineering

- Step 1: Copy the DataFrame.

- Step 2: Select features for polynomial transformation.

- Step 3: Generate polynomial features.
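Step 3 can be illustrated for a single row, assuming a degree-2 expansion with a bias term. In practice a library transformer such as scikit-learn's PolynomialFeatures would normally be applied to the selected DataFrame columns; the function and input values here are hypothetical.

```python
from itertools import combinations_with_replacement


def polynomial_features(row, degree=2):
    """Expand a numeric row into all monomials up to `degree`.

    For [a, b] and degree 2 the result is [1, a, b, a*a, a*b, b*b].
    """
    features = [1.0]  # bias term
    for d in range(1, degree + 1):
        for combo in combinations_with_replacement(range(len(row)), d):
            value = 1.0
            for idx in combo:
                value *= row[idx]
            features.append(value)
    return features


expanded = polynomial_features([2.0, 3.0])
print(expanded)  # [1.0, 2.0, 3.0, 4.0, 6.0, 9.0]
```

Two input features already produce six columns, and the count grows quickly with more features or a higher degree, which is one reason only a selected subset of features is transformed in Step 2.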

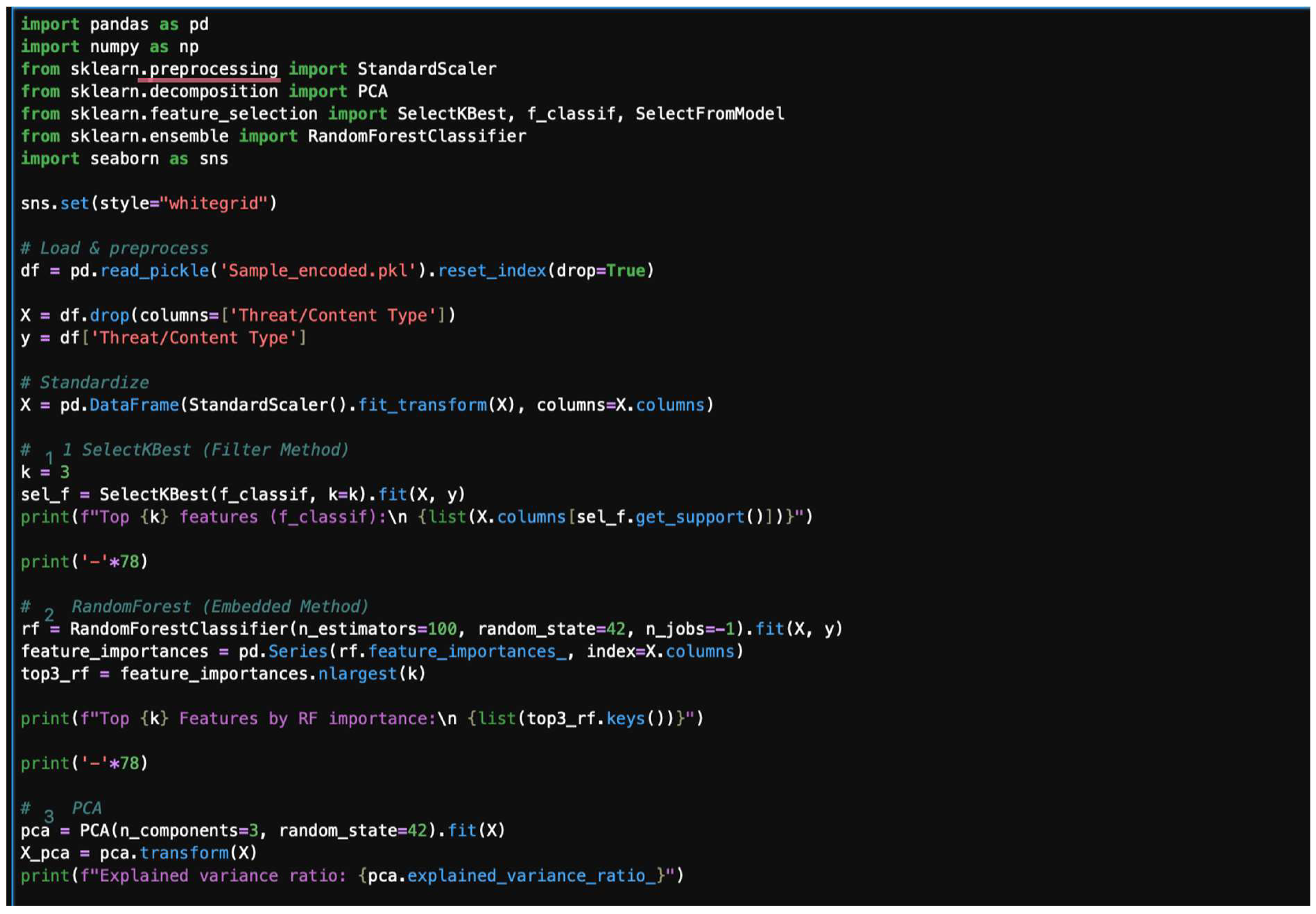

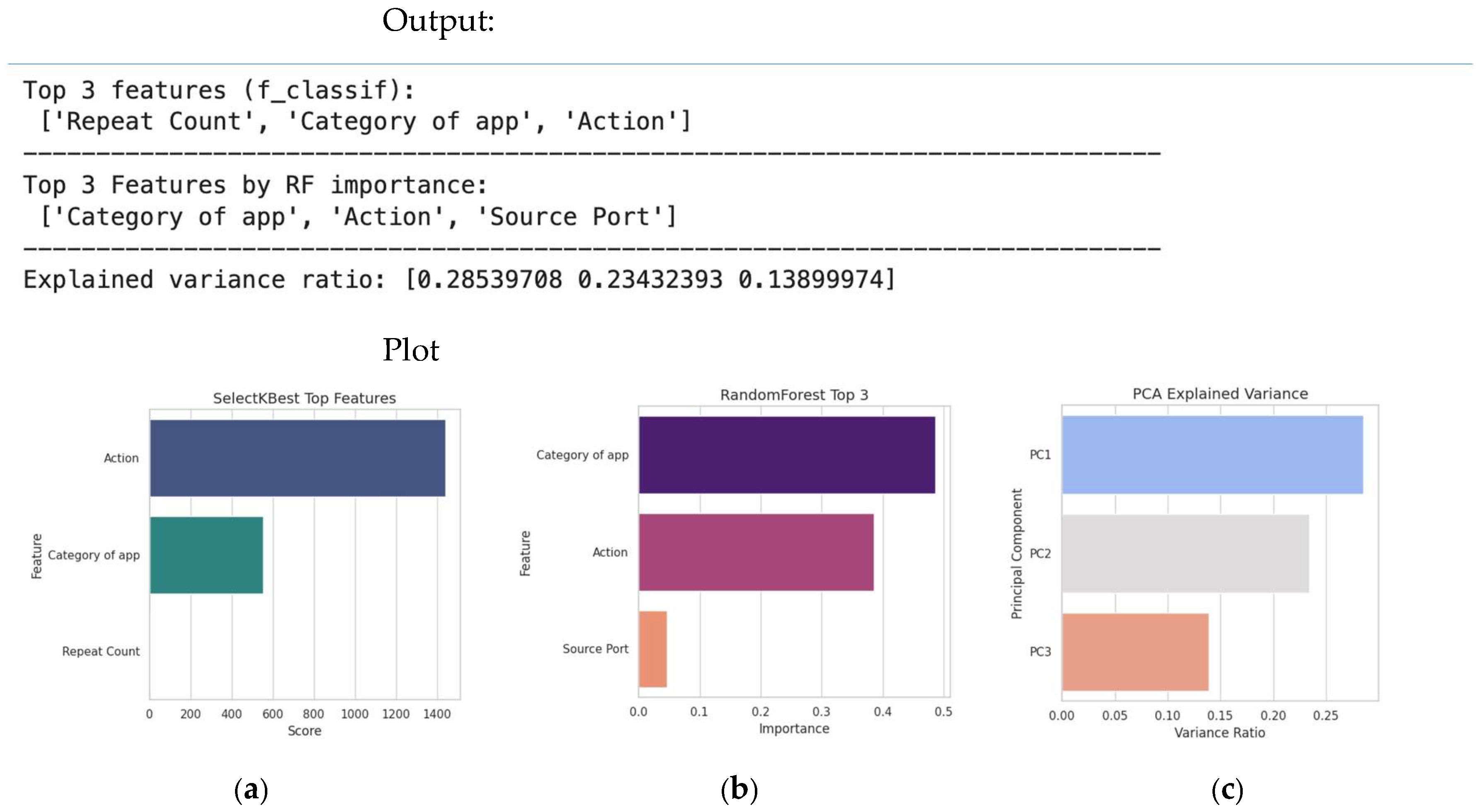

4.11. Advanced Dimensionality Reduction

5. Discussion and Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Uddin, A.; Aryal, S.; Bouadjenek, M.R.; Al-Hawawreh, M.; Talukder, A. Hierarchical classification for intrusion detection system: Effective design and empirical analysis. Ad Hoc Netw. 2025, 178, 103982.

2. Alotaibi, M.; Mengash, H.A.; Alqahtani, H.; Al-Sharafi, A.M.; Yahya, A.E.; Alotaibi, S.R.; Khadidos, A.O.; Yafoz, A. Hybrid GWQBBA model for optimized classification of attacks in Intrusion Detection System. Alex. Eng. J. 2025, 116, 9–19.

3. Suarez-Roman, M.; Tapiador, J. Attack structure matters: Causality-preserving metrics for Provenance-based Intrusion Detection Systems. Comput. Secur. 2025, 157, 104578.

4. Shana, T.B.; Kumari, N.; Agarwal, M.; Mondal, S.; Rathnayake, U. Anomaly-based intrusion detection system based on SMOTE-IPF, Whale Optimization Algorithm, and ensemble learning. Intell. Syst. Appl. 2025, 27, 200543.

5. Araujo, I.; Vieira, M. Enhancing intrusion detection in containerized services: Assessing machine learning models and an advanced representation for system call data. Comput. Secur. 2025, 154, 104438.

6. Devi, M.; Nandal, P.; Sehrawat, H. Federated learning-enabled lightweight intrusion detection system for wireless sensor networks: A cybersecurity approach against DDoS attacks in smart city environments. Intell. Syst. Appl. 2025, 27, 200553.

7. Le, T.-T.; Shin, Y.; Kim, M.; Kim, H. Towards unbalanced multiclass intrusion detection with hybrid sampling methods and ensemble classification. Appl. Soft Comput. 2024, 157, 111517.

8. He, H.; Garcia, E.A. Learning from imbalanced data. IEEE Trans. Knowl. Data Eng. 2009, 21, 1263–1284.

9. Othman, S.M.; Ba-Alwi, F.M.; Alsohybe, N.T.; Al-Hashida, A.Y. Intrusion detection model using machine learning algorithm on Big Data environment. J. Big Data 2018, 5, 34.

10. Rao, Y.N.; Babu, K.S. An Imbalanced Generative Adversarial Network-Based Approach for Network Intrusion Detection in an Imbalanced Dataset. Sensors 2023, 23, 550.

11. Sun, Z.; Zhang, J.; Zhu, X.; Xu, D. How Far Have We Progressed in the Sampling Methods for Imbalanced Data Classification? An Empirical Study. Electronics 2023, 12, 4232.

12. El-Gayar, M.M.; Alrslani, F.A.F.; El-Sappagh, S. Smart Collaborative Intrusion Detection System for Securing Vehicular Networks Using Ensemble Machine Learning Model. Information 2024, 15, 583.

13. Gong, W.; Yang, S.; Guang, H.; Ma, B.; Zheng, B.; Shi, Y.; Li, B.; Cao, Y. Multi-order feature interaction-aware intrusion detection scheme for ensuring cyber security of intelligent connected vehicles. Eng. Appl. Artif. Intell. 2024, 135, 108815.

14. Gou, W.; Zhang, H.; Zhang, R. Multi-Classification and Tree-Based Ensemble Network for the Intrusion Detection System in the Internet of Vehicles. Sensors 2023, 23, 8788.

15. Moulahi, T.; Zidi, S.; Alabdulatif, A.; Atiquzzaman, M. Comparative Performance Evaluation of Intrusion Detection Based on Machine Learning in In-Vehicle Controller Area Network Bus. IEEE Access 2021, 9, 99595–99605.

16. Wang, W.; Sun, D. The improved AdaBoost algorithms for imbalanced data classification. Inf. Sci. 2021, 563, 358–374.

17. Abedzadeh, N.; Jacobs, M. A Reinforcement Learning Framework with Oversampling and Undersampling Algorithms for Intrusion Detection System. Appl. Sci. 2023, 13, 11275.

18. Sayegh, H.R.; Dong, W.; Al-Madani, A.M. Enhanced Intrusion Detection with LSTM-Based Model, Feature Selection, and SMOTE for Imbalanced Data. Appl. Sci. 2024, 14, 479.

19. Palma, Á.; Antunes, M.; Bernardino, J.; Alves, A. Multi-Class Intrusion Detection in Internet of Vehicles: Optimizing Machine Learning Models on Imbalanced Data. Futur. Internet 2025, 17, 162.

20. Zayid, E.I.M.; Humayed, A.A.; Adam, Y.A. Testing a rich sample of cybercrimes dataset by using powerful classifiers’ competences. TechRxiv 2024.

21. Faul, A. A Concise Introduction to Machine Learning, 2nd ed.; Chapman and Hall/CRC: New York, NY, USA, 2025; pp. 88–151.

22. Tharwat, A. Classification assessment methods. Appl. Comput. Inform. 2018, 17, 168–192.

23. Wu, Y.; Zou, B.; Cao, Y. Current Status and Challenges and Future Trends of Deep Learning-Based Intrusion Detection Models. J. Imaging 2024, 10, 254.

24. Aljuaid, W.H.; Alshamrani, S.S. A Deep Learning Approach for Intrusion Detection Systems in Cloud Computing Environments. Appl. Sci. 2024, 14, 5381.

25. Isiaka, F. Performance Metrics of an Intrusion Detection System Through Window-Based Deep Learning Models. J. Data Sci. Intell. Syst. 2023, 2, 174–180.

26. Sajid, M.; Malik, K.R.; Almogren, A.; Malik, T.S.; Khan, A.H.; Tanveer, J.; Rehman, A.U. Enhancing Intrusion Detection: A Hybrid Machine and Deep Learning Approach. J. Cloud Comput. 2024, 13, 1–24.

27. Neto, E.C.P.; Iqbal, S.; Buffett, S.; Sultana, M.; Taylor, A. Deep Learning for Intrusion Detection in Emerging Technologies: A Comprehensive Survey and New Perspectives. Artif. Intell. Rev. 2025, 58, 1–63.

28. Nakıp, M.; Gelenbe, E. Online Self-Supervised Deep Learning for Intrusion Detection Systems (SSID). arXiv 2023, arXiv:2306.13030. Available online: https://arxiv.org/abs/2306.13030 (accessed on 1 September 2025).

29. Liu, J.; Simsek, M.; Nogueira, M.; Kantarci, B. Multidomain Transformer-based Deep Learning for Early Detection of Network Intrusion. arXiv 2023, arXiv:2309.01070. Available online: https://arxiv.org/abs/2309.01070 (accessed on 10 September 2025).

30. Zeng, Y.; Jin, R.; Zheng, W. CSAGC-IDS: A Dual-Module Deep Learning Network Intrusion Detection Model for Complex and Imbalanced Data. arXiv 2025, arXiv:2505.14027. Available online: https://arxiv.org/abs/2505.14027 (accessed on 10 September 2025).

31. Chen, Y.; Su, S.; Yu, D.; He, H.; Wang, X.; Ma, Y.; Guo, H. Cross-domain industrial intrusion detection deep model trained with imbalanced data. IEEE Internet Things J. 2022, 10, 584–596.

32. Sangaiah, A.K.; Javadpour, A.; Pinto, P. Towards data security assessments using an IDS security model for cyber-physical smart cities. Inf. Sci. 2023, 648, 119530.

33. Disha, R.A.; Waheed, S. Performance analysis of machine learning models for intrusion detection system using Gini Impurity-based Weighted Random Forest (GIWRF) feature selection technique. Cybersecurity 2022, 5, 1–22.

34. Sarhan, M.; Layeghy, S.; Portmann, M. Towards a standard feature set for network intrusion detection system datasets. Mob. Networks Appl. 2022, 27, 357–370.

| Approach | Implication | Advantages (+) | Disadvantages (−) | Domain(s) | Criteria | Review | Article(s), Year | Method | Imbalanced | Metrics Used | Contribution |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Data level [10,11] | Random oversampling, SMOTE, ADASYN; random undersampling, Tomek links | Simple, model-agnostic, balances class distribution | Oversampling may cause overfitting; undersampling may lose useful data | Medical diagnosis, text classification, fraud detection | F1-score, precision–recall (PR) curve, G-mean | Suited for PR curves and G-mean, since they directly affect class distributions | [10], 2023 | GAN-based | GAN synthetic oversampling | Acc, precision, recall, F1 | GANs for minority attack classes |
| | | | | | | | [11], 2023 | Empirical (under/over/hybrid-sampling) | Sampling metric | Precision, recall, F1, AUC | Comprehensive review |
| Algorithm level [12,13,14,15,16] | Cost-sensitive learning; Decision Trees with class weights, SVM penalties, boosting | Directly modifies algorithms, avoids altering data distribution | Requires algorithm-specific tuning, may increase computational cost | Intrusion detection, financial risk assessment, cybersecurity | ROC-AUC, MCC, balanced accuracy | Better assessed with ROC-AUC and MCC, as they optimize decision boundaries | [12], 2024 | Ensemble ML | Limited | Acc, recall, F1 | Collaborative IDS across edge systems |
| | | | | | | | [13], 2024 | DL | Indirect | Acc, precision, recall, F1 | Feature interaction modeling |
| | | | | | | | [14], 2023 | Tree-based ensemble | Algorithm-level imbalance robustness | Acc, recall, F1 | Multiclass ensemble IDS |
| | | | | | | | [15], 2021 | ML-based (SVM, RF, KNN) | Not addressed | Acc, detection rate | Comparative, baseline CAN-bus IDS |
| | | | | | | | [16], 2021 | AdaBoost | Algorithm-level | Acc, AUC, F1 | Improved AdaBoost for imbalance |
| Hybrid [17,18,19] | SMOTE, boosting, ensemble with resampling, GAN-based synthetic data + classifier | Combines strengths of both approaches, often best performance | More complex to implement, risk of high computational cost | Rare-disease detection, image recognition | ROC-AUC, F1-score, MCC | Typically evaluated with a mix (PR-AUC + ROC-AUC + MCC), since they combine both strategies | [17], 2023 | Reinforcement learning + sampling | Adaptive over/under sampling | Acc, precision, recall, F1 | RL-driven dynamic imbalance handling |
| | | | | | | | [18], 2024 | LSTM, feature selection, SMOTE | SMOTE oversampling | Acc, precision, recall, F1 | Merges DL plus resampling |
| | | | | | | | [19], 2025 | ML (RF, XGB, DL) | Evaluation | Acc, precision, recall, F1 | Top IDS handles multiclass imbalance |

| Column Name | Data Type | Unique Values Count | NaN Count |

|---|---|---|---|

| Domain | int64 | 1 | 0 |

| Receive Time | datetime64[ns] | 53,466 | 0 |

| Serial # | int64 | 1 | 0 |

| Type | object | 1 | 0 |

| Threat/Content Type | object | 7 | 0 |

| Config Version | int64 | 1 | 0 |

| Generate Time | datetime64[ns] | 53,466 | 0 |

| Source Address | object | 9744 | 0 |

| Destination Address | object | 6624 | 0 |

| NAT Source IP | object | 10 | 1,033,000 |

| NAT Destination IP | object | 818 | 1,033,000 |

| Rule | object | 17 | 549,532 |

| Source User | object | 4234 | 391,310 |

| Destination User | object | 1421 | 842,969 |

| Application | object | 15 | 0 |

| Virtual System | object | 1 | 0 |

| Source Zone | object | 5 | 0 |

| Destination Zone | object | 6 | 1 |

| Inbound Interface | object | 5 | 1 |

| Outbound Interface | object | 6 | 549,532 |

| Log Action | object | 2 | 1,037,148 |

| Time-Logged | datetime64[ns] | 53,466 | 0 |

| Session ID | int64 | 462,478 | 0 |

| Repeat Count | int64 | 102 | 0 |

| Source Port | int64 | 42,788 | 0 |

| Destination Port | int64 | 969 | 0 |

| NAT Source Port | int64 | 13,400 | 0 |

| NAT Destination Port | int64 | 7 | 0 |

| Flags | object | 11 | 0 |

| IP Protocol | object | 3 | 0 |

| Action | object | 5 | 0 |

| URL/Filename | object | 28 | 1,048,035 |

| Threat/Content Name | object | 43 | 0 |

| Category | object | 13 | 0 |

| Severity | object | 5 | 0 |

| Direction | object | 2 | 0 |

| Sequence Number | int64 | 1056 | 0 |

| Action Flags | object | 2 | 0 |

| Source Country | object | 35 | 0 |

| Destination Country | object | 79 | 0 |

| Unnamed: 40 | float64 | 0 | 1,048,575 |

| Contenttype | float64 | 0 | 1,048,575 |

| pcap_id | int64 | 1 | 0 |

| Filedigest | float64 | 0 | 1,048,575 |

| Cloud | float64 | 0 | 1,048,575 |

| url_idx | int64 | 9 | 0 |

| user_agent | float64 | 0 | 1,048,575 |

| Filetype | float64 | 0 | 1,048,575 |

| Xff | float64 | 0 | 1,048,575 |

| Referrer | float64 | 0 | 1,048,575 |

| Sender | float64 | 0 | 1,048,575 |

| Subject | float64 | 0 | 1,048,575 |

| Recipient | float64 | 0 | 1,048,575 |

| Reported | int64 | 1 | 0 |

| DG Hierarchy Level 1 | int64 | 1 | 0 |

| DG Hierarchy Level 2 | int64 | 1 | 0 |

| DG Hierarchy Level 3 | int64 | 1 | 0 |

| DG Hierarchy Level 4 | int64 | 1 | 0 |

| Virtual System Name | object | 1 | 0 |

| Device Name | object | 1 | 0 |

| file_url | float64 | 0 | 1,048,575 |

| Source VM UUID | float64 | 0 | 1,048,575 |

| Destination VM UUID | float64 | 0 | 1,048,575 |

| http_method | float64 | 0 | 1,048,575 |

| Tunnel ID/IMSI | int64 | 1 | 0 |

| Monitor Tag/IMEI | float64 | 0 | 1,048,575 |

| Parent Session ID | int64 | 1 | 0 |

| Parent Session Start Time | float64 | 0 | 1,048,575 |

| Tunnel | float64 | 0 | 1,048,575 |

| thr_category | object | 11 | 0 |

| Contentver | object | 5 | 0 |

| sig_flags | object | 2 | 0 |

| SCTP Association ID | int64 | 1 | 0 |

| Payload Protocol ID | int64 | 1 | 0 |

| http_headers | float64 | 0 | 1,048,575 |

| URL Category List | float64 | 0 | 1,048,575 |

| UUID for Rule | object | 17 | 549,532 |

| HTTP/2 Connection | int64 | 1 | 0 |

| dynusergroup_name | float64 | 0 | 1,048,575 |

| XFF Address | float64 | 0 | 1,048,575 |

| Source Device Category | float64 | 0 | 1,048,575 |

| Source Device Profile | float64 | 0 | 1,048,575 |

| Source Device Model | float64 | 0 | 1,048,575 |

| Source Device Vendor | float64 | 0 | 1,048,575 |

| Source Device OS Family | float64 | 0 | 1,048,575 |

| Source Device OS Version | float64 | 0 | 1,048,575 |

| Source Hostname | float64 | 0 | 1,048,575 |

| Source Mac Address | float64 | 0 | 1,048,575 |

| Destination Device Category | float64 | 0 | 1,048,575 |

| Destination Device Profile | float64 | 0 | 1,048,575 |

| Destination Device Model | float64 | 0 | 1,048,575 |

| Destination Device Vendor | float64 | 0 | 1,048,575 |

| Destination Device OS Family | float64 | 0 | 1,048,575 |

| Destination Device OS Version | float64 | 0 | 1,048,575 |

| Destination Hostname | float64 | 0 | 1,048,575 |

| Destination Mac Address | float64 | 0 | 1,048,575 |

| Container ID | float64 | 0 | 1,048,575 |

| POD Namespace | float64 | 0 | 1,048,575 |

| POD Name | float64 | 0 | 1,048,575 |

| Source External Dynamic List | float64 | 0 | 1,048,575 |

| Destination External Dynamic List | float64 | 0 | 1,048,575 |

| Host ID | float64 | 0 | 1,048,575 |

| Serial Number | float64 | 0 | 1,048,575 |

| domain_edl | float64 | 0 | 1,048,575 |

| Source Dynamic Address Group | float64 | 0 | 1,048,575 |

| Destination Dynamic Address Group | float64 | 0 | 1,048,575 |

| partial_hash | int64 | 1 | 0 |

| High-Res Timestamp | object | 85,581 | 0 |

| Reason | float64 | 0 | 1,048,575 |

| Justification | float64 | 0 | 1,048,575 |

| nssai_sst | float64 | 1 | 499,043 |

| Subcategory of App | object | 8 | 0 |

| Category of App | object | 6 | 0 |

| Technology of App | object | 4 | 0 |

| Risk of App | int64 | 4 | 0 |

| Characteristics of App | object | 9 | 549,532 |

| Container of App | object | 5 | 752,386 |

| Tunneled App | object | 9 | 0 |

| SaaS of App | object | 2 | 0 |

| Sanctioned State of App | object | 1 | 0 |

| | Predicted Positive | Predicted Negative |
|---|---|---|
| Actual Positive | TP | FN |
| Actual Negative | FP | TN |

| Metric | Precision | Recall | F1-Score | Support |

|---|---|---|---|---|

| 0 | 1.00 | 1.00 | 1.00 | 14 |

| 1 | 1.00 | 1.00 | 1.00 | 6 |

| Accuracy | | | 1.00 | 20 |

| Macro avg | 1.00 | 1.00 | 1.00 | 20 |

| Weighted avg | 1.00 | 1.00 | 1.00 | 20 |

| Article, Year | Algorithm(s) | Processes | Metrics | IDS | Imbalanced | Prediction | Learnability | IDAT | Best for |

|---|---|---|---|---|---|---|---|---|---|

| Wu, Y.; Zou, B.; Cao, Y., 2024 [23] | CNN, RNN, GANs | Summarize, preprocess, feature engineering, transform | Acc, recall, precision, F1, etc. | ✓ | ✓ | ✓ | 🗶 | 🗶 | Policy, strategy, framework |

| Wa’ad H. Aljuaid and Sultan S. Alshamrani, 2024 [24] | CNN, SMOTE | Balancing, feature selection | Acc, precision, recall | ✓ | ✓ | ✓ | ✓ | 🗶 | Cloud-based, example/scenario |

| Fatima Isiaka, 2023–2024 [25] | CNN, RNN | Autoencoder-based | Precision, recall, etc. | ✓ | ✓ | ✓ | ✓ | 🗶 | Metrics discussion (scalar vs. visual) |

| Sajid, M.; Malik, K.R.; Almogren, A. et al., 2024 [26] | ML, DL, hybrid | Preprocess, explore | Acc, precision, F1-score, etc. | ✓ | ✓ | ✓ | 🗶 | 🗶 | Comparison-based models |

| Neto, E.C.P.; Iqbal, S.; Buffett, S. et al., 2025 [27] | Review/survey | Explore emerging techs | FP, explore, F1, precision, etc. | ✓ | ✓ | ✓ | 🗶 | 🗶 | Justify metrics selection |

| Mert Nakıp, Erol Gelenbe, 2023 [28] | Self-supervised-based | Acquire online, auto-associate NN, trust evaluation | Acc, precision, recall, F1, etc. | ✓ | ✓ | ✓ | 🗶 | 🗶 | Useful in adaptive, streaming IDS |

| Jinxin Liu, Murat Simsek, Michele Nogueira, Burak Kantarci, 2023 [29] | Transformer architecture-based | Combine features and compare | F1-score | ✓ | ✓ | ✓ | 🗶 | 🗶 | Modern modeling, useful with metric trade-offs |

| Yifan Zeng et al., 2025 [30] | CNN, SC-CGAN | Generate, combine, oversample | Acc, F1, SHAP, LIME, cost-sensitive, attention | ✓ | ✓ | ✓ | 🗶 | 🗶 | Interpretability purposes |

| Ours, 2025 | ML, DL, SMOTE, visualization, empirical | Preprocessing, undersampling, oversampling, feature engineering, dimensionality reduction | Acc, F1, precision, recall, ROC-AUC, visualization, confusion matrix, coding | ✓ | ✓ | ✓ | ✓ | ✓ | Modeling assessment, methodology development |

| Parameter | Value |

|---|---|

| sampling_strategy | ‘auto’ |

| random_state | 47 |

| k_neighbors | 5 |

| Parameter | Value |

|---|---|

| penalty | ‘l2’ |

| tol | 0.0001 |

| C | 1 |

| solver | ‘lbfgs’ |

| max_iter | 100 |

| intercept_scaling | 1 |

| Metric | Precision | Recall | F1-Score | Support | Test Size | Accuracy |
|---|---|---|---|---|---|---|
| 0 | 1.00 | 1.00 | 1.00 | 109,917 | 10,000 | 1.000000 |
| 1 | 1.00 | 1.00 | 1.00 | | 20,000 | 1.000000 |
| accuracy | | | 1.00 | 209,706 | 30,000 | 1.000000 |
| macro avg | 1.00 | 1.00 | 1.00 | 209,706 | 40,000 | 0.999875 |
| weighted avg | 1.00 | 1.00 | 1.00 | 209,706 | 50,000 | 1.000000 |
| weighted avg | 1.00 | 1.00 | 1.00 | 209,706 | 60,000 | 0.999917 |
| | | | | | 70,000 | 1.000000 |
| | | | | | 80,000 | 1.000000 |
| | | | | | 90,000 | 0.999833 |
| | | | | | 100,000 | 0.999750 |
| | | | | | 110,000 | 0.999909 |
| | | | | | 120,000 | 0.999792 |
| | | | | | 130,000 | 0.999962 |
| | | | | | 140,000 | 0.999929 |
| | | | | | 150,000 | 1.000000 |
| | | | | | 160,000 | 0.999844 |
| | | | | | 170,000 | 0.999853 |
| | | | | | 180,000 | 0.999889 |
| | | | | | 190,000 | 0.999921 |

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Zayid, E.I.M.; Isah, I.; Humayed, A.A.; Adam, Y.A. Innovating Intrusion Detection Classification Analysis for an Imbalanced Data Sample. Information 2025, 16, 883. https://doi.org/10.3390/info16100883
