Evaluating the Effectiveness and Ethical Implications of AI Detection Tools in Higher Education

Abstract

1. Introduction

1.1. Generative AI in Higher Education: Evolution, Impact, and Institutional Response

1.2. Assessing the Effectiveness of AI Detection Tools and Ethical Issues in Teaching and Practice

1.3. Identifying Gaps in Existing Research and Rationale for Study

- Institutional Implementation of AI Detection Tools in Academic Integrity Frameworks and Assessment Systems;

- Evaluating the Effectiveness and Limitations of Current AI Detection Tools in Distinguishing AI-Generated Content from Student Work;

- The Ethical, Procedural, and Pedagogical Concerns that AI Detection Systems Raise for Students and Faculty in Higher Education.

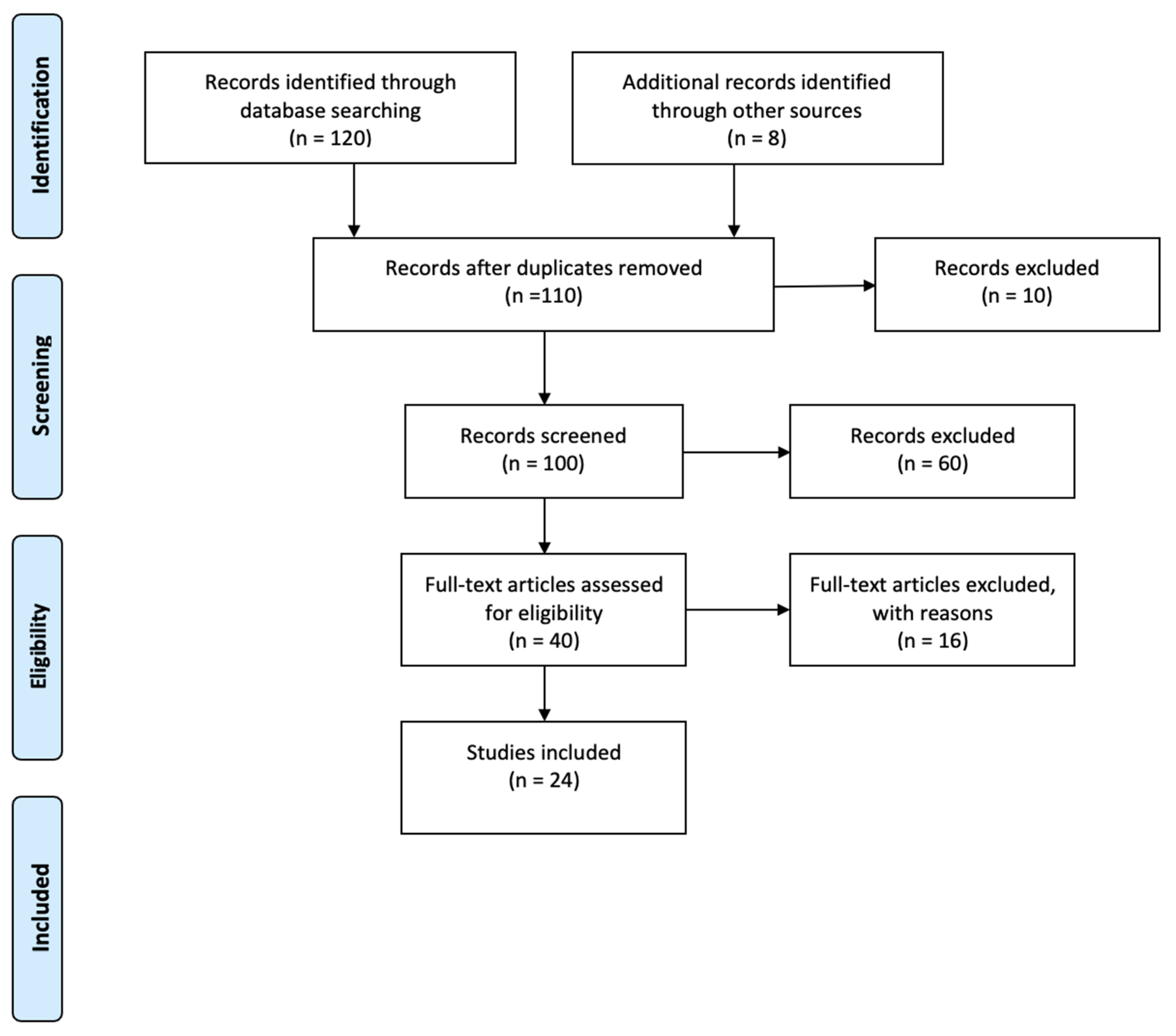

2. Materials and Methods

Review Process

3. Results

3.1. Screening Summary

3.2. Study Characteristics

3.3. Thematic Analysis

4. Discussion

4.1. Institutional Implementation of AI Detection Tools in Academic Integrity Frameworks and Assessment Systems

4.1.1. Policy Integration and Ethical Framing in the Age of AI

4.1.2. Technological Adoption and the Effectiveness of AI Detection Tools

4.1.3. Transformations in Assessment Design and Pedagogical Strategy

4.2. Evaluating the Effectiveness and Limitations of Current AI Detection Tools in Distinguishing AI-Generated Content from Student Work

4.2.1. Effectiveness and Shortcomings of Current AI Detection Tools

4.2.2. Emerging Innovations and Methodological Approaches in AI Detection

4.2.3. Ethical, Legal, and Educational Implications

4.3. Ethical, Procedural, and Pedagogical Concerns Raised by AI Detection Tools in Higher Education

4.3.1. Ethical Ambiguities and Student Vulnerabilities

4.3.2. Procedural Inconsistencies and Institutional Risks

4.3.3. Pedagogical Consequences and the Future of Assessment

5. Limitations of Current Research

6. Suggestions for Future Research

7. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Abbas, M.; Jam, F.A.; Khan, T.I. Is it harmful or helpful? Examining the causes and consequences of generative AI usage among university students. Int. J. Educ. Technol. High. Educ. 2024, 21, 10.

2. Alexander, K.; Savvidou, C.; Alexander, C. Who wrote this essay? Detecting AI-generated writing in second language education in higher education. Teach. Engl. Technol. 2023, 23, 25–43.

3. Davar, N.F.; Dewan, M.A.A.; Zhang, X. AI Chatbots in Education: Challenges and Opportunities. Information 2025, 16, 235.

4. Al-Zahrani, A.M. The impact of generative AI tools on researchers and research: Implications for academia in higher education. Innov. Educ. Teach. Int. 2024, 61, 1029–1043.

5. Lund, B.D.; Wang, T.; Mannuru, N.R.; Nie, B.; Shimray, S.; Wang, Z. ChatGPT and a new academic reality: Artificial Intelligence-written research papers and the ethics of the large language models in scholarly publishing. J. Assoc. Inf. Sci. Technol. 2023, 74, 570–581.

6. Revell, T.; Yeadon, W.; Cahilly-Bretzin, G.; Clarke, I.; Manning, G.; Jones, J.; Mulley, C.; Pascual, R.J.; Bradley, N.; Thomas, D.; et al. ChatGPT versus human essayists: An exploration of the impact of artificial intelligence for authorship and academic integrity in the humanities. Int. J. Educ. Integr. 2024, 20, 18.

7. Haenlein, M.; Kaplan, A. A brief history of artificial intelligence: On the past, present, and future of artificial intelligence. Calif. Manag. Rev. 2019, 61, 5–14.

8. Zhao, Y.; Borelli, A.; Martinez, F.; Xue, H.; Weiss, G.M. Admissions in the age of AI: Detecting AI-generated application materials in higher education. Sci. Rep. 2024, 14, 26411.

9. Yeo, M.A. Academic integrity in the age of Artificial Intelligence (AI) authoring apps. TESOL J. 2023, 14, e716.

10. Weber-Wulff, D.; Anohina-Naumeca, A.; Bjelobaba, S.; Foltýnek, T.; Guerrero-Dib, J.; Popoola, O.; Šigut, P.; Waddington, L. Testing of detection tools for AI-generated text. Int. J. Educ. Integr. 2023, 19, 26.

11. Ibrahim, K. Using AI-based detectors to control AI-assisted plagiarism in ESL writing: “The Terminator Versus the Machines”. Lang. Test. Asia 2023, 13, 46.

12. Gaumann, N.; Veale, M. AI providers as criminal essay mills? Large language models meet contract cheating law. Inf. Commun. Technol. Law 2024, 33, 276–309.

13. Perkins, M. Academic Integrity considerations of AI Large Language Models in the post-pandemic era: ChatGPT and beyond. J. Univ. Teach. Learn. Pract. 2023, 20, 1–24.

14. Waltzer, T.; Pilegard, C.; Heyman, G.D. Can you spot the bot? Identifying AI-generated writing in college essays. Int. J. Educ. Integr. 2024, 20, 11.

15. Ardito, C.G. Generative AI detection in higher education assessments. New Dir. Teach. Learn. 2025, 2025, 11–28.

16. Elkhatat, A.M.; Elsaid, K.; Almeer, S. Evaluating the efficacy of AI content detection tools in differentiating between human and AI-generated text. Int. J. Educ. Integr. 2023, 19, 17.

17. Birks, D.; Clare, J. Linking artificial intelligence facilitated academic misconduct to existing prevention frameworks. Int. J. Educ. Integr. 2023, 19, 20.

18. Kar, S.K.; Bansal, T.; Modi, S.; Singh, A. How Sensitive Are the Free AI-detector Tools in Detecting AI-generated Texts? A Comparison of Popular AI-detector Tools. Indian J. Psychol. Med. 2024, 47, 275–278.

19. Sukhera, J. Narrative Reviews: Flexible, Rigorous, and Practical. J. Grad. Med. Educ. 2022, 14, 414–417.

20. Das Deep, P.; Martirosyan, N.; Ghosh, N.; Rahaman, M.S. ChatGPT in ESL Higher Education: Enhancing Writing, Engagement, and Learning Outcomes. Information 2025, 16, 316.

21. Hao, J.; Von Davier, A.A.; Davier, V.; College, B.; Harris, D.J. Transforming Assessment: The Impacts and Implications of Large Language Models and Generative AI. Educ. Meas. Issues Pract. 2024, 43, 16–29.

22. Krawczyk, N.; Probierz, B.; Kozak, J. Towards AI-Generated Essay Classification Using Numerical Text Representation. Appl. Sci. 2024, 14, 9795.

23. Krishna, K.; Song, Y.; Karpinska, M.; Wieting, J.; Iyyer, M. Paraphrasing evades detectors of AI-generated text, but retrieval is an effective defense. In Advances in Neural Information Processing Systems. Neural Inf. Process. Syst. Found. 2023, 36, 27469–27500.

24. Nelson, A.S.; Santamaría, P.V.; Javens, J.S.; Ricaurte, M. Students’ Perceptions of Generative Artificial Intelligence (GenAI) Use in Academic Writing in English as a Foreign Language. Educ. Sci. 2025, 15, 611.

25. Pan, W.H.; Chok, M.J.; Wong, J.L.S.; Shin, Y.X.; Poon, Y.S.; Yang, Z.; Chong, C.Y.; Lo, D.; Lim, M.K. Assessing AI detectors in identifying AI-generated code: Implications for education. In Proceedings of the ACM Conference (Conference’17), Rio de Janeiro, Brazil, 14–20 April 2024; pp. 1–11.

26. Baethge, C.; Goldbeck-Wood, S.; Mertens, S. SANRA—A scale for the quality assessment of narrative review articles. Res. Integr. Peer Rev. 2019, 4, 5.

| Keyword/Concept | Search String (Boolean Operators) | Purpose/Focus |
|---|---|---|
| AI Detection Tools | “AI detection tools” OR “AI writing detectors” OR “AI content detection” | To identify literature discussing technologies designed to detect AI-generated content in educational contexts. |
| Higher Education Context | “Higher education” OR “university” OR “tertiary education” OR “college” | To narrow the scope to post-secondary education environments where such tools are being evaluated and implemented. |
| Academic Integrity | “Academic integrity” AND “plagiarism” OR “misconduct” OR “academic honesty” | To capture studies exploring how AI detection tools intersect with integrity frameworks and institutional misconduct policies. |
| Generative AI Tools | “Generative AI” OR “ChatGPT” OR “large language models” OR “GPT-3” OR “GPT-4” | To focus on content related to the technologies most relevant to recent shifts in academic writing and integrity concerns. |
| Specific Detection Tools | “Turnitin” OR “GPTZero” OR “ZeroGPT” OR “Copyleaks” OR “CrossPlag” | To retrieve empirical studies that tested or reviewed commonly used detection platforms in educational contexts. |
| Detection Accuracy & Effectiveness | “accuracy” OR “detection performance” AND “false positives” OR “false negatives” | To locate studies that evaluated the technical reliability of AI detectors, particularly in distinguishing human vs. machine-generated texts. |
| Student and Faculty Perceptions | “Student perception” OR “faculty perception” AND “AI detection” OR “AI surveillance” | To understand user attitudes, concerns, and ethical views toward institutional use of AI detection technologies. |
| Ethical Implications | “ethics” OR “ethical implications” AND “privacy” OR “fairness” OR “consent” OR “bias” OR “equity” | To uncover scholarship discussing AI detection’s moral and social dimensions, especially around data use, consent, and equity in enforcement. |
| Assessment and Curriculum Redesign | “Assessment design” OR “curriculum redesign” AND “AI-generated writing” OR “ChatGPT” | To identify pedagogical responses to AI, including innovative assessments that reduce reliance on detection and emphasize human-centered learning. |
| AI Literacy and Responsible Use | “AI literacy” OR “responsible AI use” AND “teaching practice” OR “student support” | To gather conceptual and practice-oriented papers promoting student engagement with AI tools in ethical and educationally beneficial ways. |
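
Several of the search strings above mix AND and OR without parentheses, so how a database reads them depends on its operator precedence. The following is a minimal Python sketch of one way to make the grouping explicit. It assumes synonyms for one concept are joined with OR and separate concepts are joined with AND; that grouping is an assumption for illustration, not a description of how the review's searches were actually run, and the helper names `or_group` and `build_query` are hypothetical.

```python
def or_group(terms):
    """Join synonyms with OR and wrap them in parentheses so precedence is explicit."""
    quoted = [f'"{term}"' for term in terms]
    return "(" + " OR ".join(quoted) + ")"


def build_query(*concepts):
    """AND together one parenthesized OR-group per concept."""
    return " AND ".join(or_group(terms) for terms in concepts)


# Terms copied from the first two rows of the table above.
detection_tools = ["AI detection tools", "AI writing detectors", "AI content detection"]
higher_education = ["Higher education", "university", "tertiary education", "college"]

query = build_query(detection_tools, higher_education)
print(query)
```

Parenthesizing each concept keeps the query's meaning the same whether a database evaluates AND before OR or reads strictly left to right.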

| Criteria | Inclusion | Exclusion | Rationale |
|---|---|---|---|
| Publication Date | Peer-reviewed articles published between 2021 and 2024. | Articles published before 2021. | Ensures the review reflects the post-ChatGPT era, which significantly accelerated the deployment and institutional scrutiny of AI detection tools in higher education. The 2021–2024 window aligns with the period of rapid technological and policy transformation in academia. |
| Language | Studies published in English. | Non-English publications. | English was selected as the review language to maintain linguistic consistency, avoid translation inaccuracies, and reflect the dominance of English in scholarly discourse, particularly in AI and higher education research domains. |
| Peer-Review Status | Only peer-reviewed journal articles and conference proceedings. | Preprints, white papers, blogs, media articles, and other non-peer-reviewed sources. | Peer-reviewed sources ensure methodological rigor and quality, in line with the SANRA (Scale for the Assessment of Narrative Review Articles) criteria for evaluating the included literature. |
| Focus on Higher Education | Articles focused on university-level or tertiary education settings, including institutional, student, or faculty perspectives. | Research on K–12 education, vocational training, or non-academic sectors. | Ensures alignment with the study’s scope: AI detection tools as used within university-level academic integrity systems, not at other educational levels or in other industries. |
| AI Detection Systems | Articles discussing AI writing detection tools (e.g., Turnitin AI, GPTZero, Copyleaks, ZeroGPT, CrossPlag, OpenAI classifiers). | Articles discussing AI in education generally without referencing detection tools. | The tool-specific review assesses detection systems’ technical, ethical, and procedural roles in enforcing academic integrity. |
| Thematic Relevance | Studies that explore: (a) institutional policy integration, (b) effectiveness and accuracy of detection tools, or (c) ethical and pedagogical impacts. | Studies lacking focus on institutional policy, accuracy, or ethical/pedagogical implications. | These themes correspond directly to the three research questions (RQ1–RQ3) guiding the review and structure the analysis across policy, performance, and pedagogy/ethics. |
| Methodological Clarity | Research with explicit methodological frameworks, such as case studies, comparative evaluations, theoretical models, or structured reviews. | Articles lacking a clear methodological approach or evaluative structure. | Ensures replicability, transparency, and analytical depth, especially where empirical claims about tool performance or institutional practice are made. |
| Stakeholder Involvement | Studies involving university stakeholders: students, faculty, administrators, policy makers, or institutional frameworks. | Articles focusing on AI tool developers, industry marketing, or technical benchmarking without a user perspective. | Focuses on the academic community, which is most affected by AI detection, so that findings remain grounded in educational practice, student experience, and faculty decision-making. |
| Ethical and Social Dimensions | Articles addressing fairness, surveillance, student rights, linguistic bias, transparency, or AI misuse/misidentification. | Papers focused purely on technical algorithms or architecture without discussing social or ethical implications. | Ethical risk is a significant pillar of the review. Including studies that engage with due process, trust, and academic freedom ensures a holistic assessment of the tools’ impact, beyond raw accuracy. |

| No. | Reference (In-Text) | Study Location | Target Group | Research Objective | Research Approach | Principal Outcomes |
|---|---|---|---|---|---|---|
| 1 | Abbas et al. (2024) [1] | Universities in Pakistan | University students | To examine the causes and consequences of ChatGPT usage among university students | Quantitative | Academic workload and time pressure increase ChatGPT use; reward-sensitive students use it less; use is linked to procrastination, memory loss, and lower CGPA. |
| 2 | Al-Zahrani (2024) [4] | Saudi Arabia | Higher education students | To explore students’ awareness, use, impact perception, and ethical considerations of Generative AI (GenAI) tools in academic research | Quantitative survey | Students reported high awareness and positive experiences with GenAI, optimism about its future, and strong ethical concerns. However, training/support was limited. |
| 3 | Alexander et al. (2023) [2] | Cyprus | ESL lecturers in higher education | Evaluate the effectiveness of AI detectors and human judgment in ESL assessment. | Qualitative | The paper reports that AI detectors (e.g., Turnitin, GPTZero, CrossPlag) were generally more accurate than the ESL lecturers, particularly in identifying fully AI-generated essays. |
| 4 | Ardito (2025) [15] | Global | Higher education faculty and students | Critically analyze generative AI detection tools’ effectiveness, vulnerabilities, and ethical implications in academic assessments. | Narrative analysis | AI detectors are unreliable, biased, and misaligned with educational goals. The study advocates replacing detection tools with robust, AI-inclusive assessment frameworks. |
| 5 | Birks & Clare (2023) [17] | Global | Commentary | Conceptual analysis using the situational crime prevention (SCP) framework, supplemented by academic misconduct literature. | Theoretical analysis | AI-misconduct can be tackled using opportunity-reduction strategies from SCP, including redesigning assessments, increasing detection risks, reducing temptations, and clarifying institutional policies. |
| 6 | Davar et al. (2025) [3] | Global | Students and Educators in Higher Education | To explore the benefits and challenges of AI chatbots (e.g., ChatGPT) in education | Narrative Review | AI chatbots can support adaptive, real-time, and personalized learning and teaching, but raise concerns about ethics, academic integrity, misinformation, and accessibility. |
| 7 | Deep et al. (2025) [20] | Global | Students and Educators in Higher Education | To evaluate the accuracy and ethical concerns surrounding AI-content detection tools | Narrative Review | Limitations include false positives, a lack of transparency, and fairness concerns; Turnitin AI, GPTZero, and Copyleaks were analyzed. |
| 8 | Elkhatat et al. (2023) [16] | UAE | University Students | To explore students’ perceptions of using ChatGPT in academic writing, particularly regarding fairness, bias, and trust | Quantitative | Most students acknowledged ChatGPT’s usefulness, but raised concerns over fairness, accuracy, and trustworthiness. |
| 9 | Gaumann & Veale (2024) [12] | Global | Legal scholars, policymakers, and education regulators. | Engaged in rigorous legal and policy analysis, reviewing statutes, AI services, and advertising practices | Conceptual Commentary | LLM providers may unintentionally fall under contract cheating laws; legal frameworks are unclear and need reform to protect legitimate AI use while targeting essay mills. |
| 10 | Hao et al. (2024) [21] | Not applicable (the study is conceptual) | Assessment professionals, educators, and students (both K–12 and higher education) | Explore how LLMs and generative AI affect educational assessment | Conceptual | AI improves test creation and scoring efficiency but introduces concerns around fairness, validity, and security. |
| 11 | Haenlein & Kaplan (2019) [7] | Not location-based; global focus | General readers, scholars, and business leaders | To provide a historical overview, current applications, and future outlook of AI in business and society | Narrative review/editorial | The article outlines the historical development of AI and its current applications in business contexts like HR, marketing, and decision-making. It also discusses future challenges related to ethics, regulation, and societal impact. |
| 12 | Ibrahim (2023) [11] | Kuwait | University ESL students | Test the effectiveness of AI detectors for identifying AI-generated plagiarism. | Empirical descriptive comparative study | CrossPlag showed greater sensitivity to machine-generated texts, as reflected by a higher concentration of scores. |
| 13 | Kar et al. (2025) [18] | India | Academic integrity experts | To assess how well free AI detection tools can identify AI-generated and AI-paraphrased academic texts | Quantitative | Detection capability varied across tools; a few accurately identified original and paraphrased AI-generated content, while others failed to detect AI-generated text reliably. |
| 14 | Krawczyk et al. (2024) [22] | Poland | AI-generated vs. student-written essays | To evaluate and compare different numerical text representation methods for classifying AI-generated essays | Quantitative | TF-IDF and Bag of Words with simple classifiers effectively detected AI-generated essays, while pretrained models performed poorly. |
| 15 | Krishna et al. (2023) [23] | Not location-specific | Higher education (implicitly) | To evaluate how paraphrasing affects the performance of AI-text detectors and to test retrieval-based defense mechanisms | Experimental | Paraphrasing weakens detection tools, but retrieval-based methods offer a more reliable way to identify AI-generated content. |
| 16 | Lund et al. (2023) [5] | Global | Scholars & publishers | To assess the ethical, practical, and academic implications of using ChatGPT in scholarly publishing. | Conceptual review | Identified benefits (efficiency, editing) and ethical concerns (bias, authorship, plagiarism). |
| 17 | Nelson et al. (2025) [24] | Ecuador | Higher Education (EFL Students) | To explore Ecuadorian EFL students’ perceptions of generative AI (e.g., ChatGPT) in academic writing, particularly regarding academic dishonesty, usage, and institutional responses. | Quantitative | Students partially understood AI misuse, overestimated detection tools, and favored ethical guidance. |
| 18 | Pan et al. (2024) [25] | Malaysia & Singapore | Higher education students | To assess the performance and limitations of AIGC detectors in identifying AI-generated code in education contexts | Empirical (Quantitative) | AIGC detectors struggled to distinguish between AI- and human-written code. Most tools frequently misclassified code, indicating a need for improved detection models tailored to programming content. Sapling and GLTR showed relatively better performance with specific code variations. |
| 19 | Perkins (2023) [13] | Vietnam | Higher Education Institutions (HEIs), academic staff, and students | Examine academic integrity issues from student use of AI/LLMs like ChatGPT | Literature review | LLMs like ChatGPT produce undetectable, fluent content. Misconduct occurs when their use isn’t disclosed. |
| 20 | Revell et al. (2024) [6] | UK | Higher education students | Compare the performance of ChatGPT-generated essays with human essays analyzing Old English poetry, and evaluate detection accuracy. | Mixed-methods approach | AI essays scored similarly to student essays but lacked depth and cultural insight. Human markers identified AI essays around 79% of the time; AI detectors like Quillbot were even more accurate, at around 95%. The study urges reassessing how academic integrity is upheld as AI tools advance. |
| 21 | Waltzer et al. (2024) [14] | USA | Higher Education (Students & instructors) | To find out if people can tell the difference between AI-generated and student-written essays | Quantitative | Instructors struggled to detect AI essays, experience didn’t help, and both groups had mixed views on AI use. |
| 22 | Weber-Wulff et al. (2023) [10] | Cross-country | Higher education academic integrity context | Evaluate detection tools for AI-generated text | Quantitative | Tools are mostly inaccurate; Turnitin was the most reliable. |
| 23 | Yeo (2023) [9] | Singapore | Higher Education (TESOL educators and second/foreign language learners) | To explore how AI writing tools affect academic integrity, authorship, teaching, and assessment in language education. | Conceptual analysis | AI challenges authorship and integrity. It carries a risk of misuse but also learning potential. Teachers should integrate AI ethically. |
| 24 | Zhao et al. (2024) [8] | USA | Higher Education | To detect AI-generated and AI-revised content in Letters of Recommendation (LORs) and Statements of Intent (SOIs) | Quantitative | Domain-specific AI detectors (e.g., BERT, DistilBERT) achieved near-perfect accuracy in distinguishing human vs. AI content in admissions materials; general detectors showed poor cross-domain performance. |

| Central Theme (Research Question) | Co-Themes | Structural Interpretation |
|---|---|---|
| 1. Institutional Policy and Implementation | a. Lack of AI-Specific Academic Integrity Policies | Many institutions have yet to update academic integrity policies to reflect generative AI, creating confusion among faculty and students. |
| | b. Policy Gaps and Faculty Uncertainty | Faculty members often rely on personal judgment in AI-related cases due to vague or missing guidelines, which can lead to inconsistency. |
| | c. Student Confusion and Inconsistent Enforcement | Students face unclear expectations, with institutional responses varying by instructor or department, increasing the risk of unfair accusations. |
| 2. Detection Accuracy and System Performance | a. False Positives and False Negatives | AI detectors often misclassify human writing as AI-generated and vice versa, undermining trust in academic decisions. |
| | b. Tool Performance with Evolving LLMs | Many detection tools are not trained to detect the most recent language models (e.g., GPT-4), limiting their effectiveness. |
| | c. Gaps in Validation Across Student Populations | Detection tools often perform poorly with diverse writing styles, especially those from multilingual or non-traditional students. |
| 3. Ethical and Pedagogical Implications | a. Transparency and Due Process Issues | Students are rarely informed how their work is analyzed, raising concerns about consent, privacy, and fairness in enforcement. |
| | b. Equity and Bias Concerns | Linguistic bias leads to disproportionate scrutiny of non-native English speakers, creating equity concerns. |
| | c. Need for Inclusive Assessment Design | Redesigning assessments (e.g., drafts, oral exams) can reduce reliance on detection tools and promote authentic student work. |
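
Theme 2a turns on false positives and false negatives. As a rough illustration of how those quantities relate, the Python sketch below computes error rates from a detector's confusion counts. The function name `detector_error_rates` and the class counts are hypothetical and chosen only for the arithmetic; they are not results from any study in this review.

```python
def detector_error_rates(tp: int, fp: int, tn: int, fn: int) -> dict:
    """Return basic error rates for a binary AI-text detector.

    "Positive" means the detector flagged a text as AI-generated.
    """
    human_total = fp + tn  # texts actually written by humans
    ai_total = tp + fn     # texts actually generated by AI
    flagged = tp + fp      # texts the detector flagged
    return {
        # Share of human-written texts wrongly flagged as AI.
        "false_positive_rate": fp / human_total if human_total else 0.0,
        # Share of AI-generated texts the detector missed.
        "false_negative_rate": fn / ai_total if ai_total else 0.0,
        # Share of flagged texts that really were AI-generated.
        "precision": tp / flagged if flagged else 0.0,
    }


# Hypothetical cohort: 190 human-written essays and 10 AI-generated ones.
rates = detector_error_rates(tp=8, fp=19, tn=171, fn=2)
for name, value in rates.items():
    print(f"{name}: {value:.2f}")
```

With these made-up counts the detector wrongly flags 10% of human essays and misses 20% of AI essays, yet only about 30% of its flags point at AI-generated work, because human-written essays far outnumber AI-generated ones in the cohort. That gap between a tool's headline error rates and the reliability of an individual flag is one way to read the trust concern in theme 2a.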

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Deep, P.D.; Edgington, W.D.; Ghosh, N.; Rahaman, M.S. Evaluating the Effectiveness and Ethical Implications of AI Detection Tools in Higher Education. Information 2025, 16, 905. https://doi.org/10.3390/info16100905
