5. Conclusions

Neural network-based methods have steadily improved object detection. However, existing detection models still struggle in complex scenes, particularly with small, occluded, and multiscale objects. These models also require high computational power, which limits their use on embedded systems. To address these issues, we propose a lightweight algorithm based on YOLOv8n.

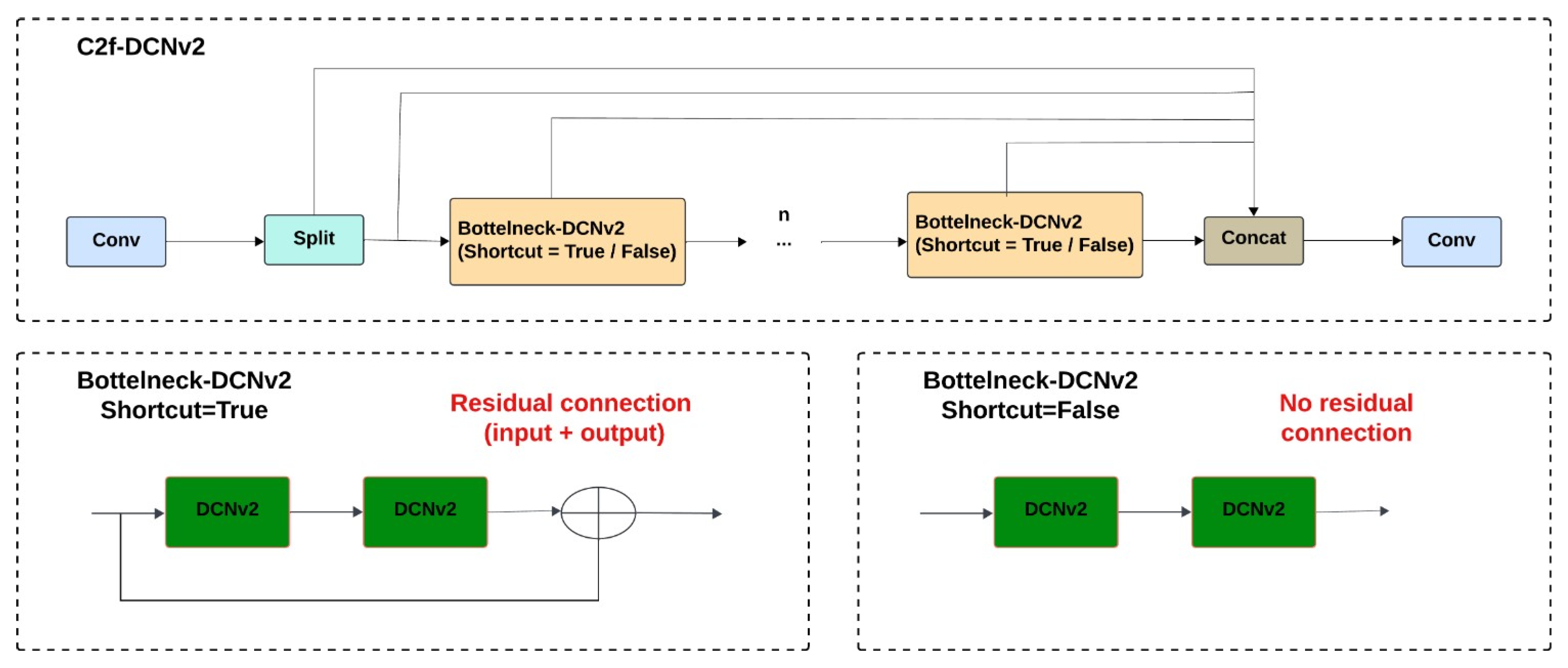

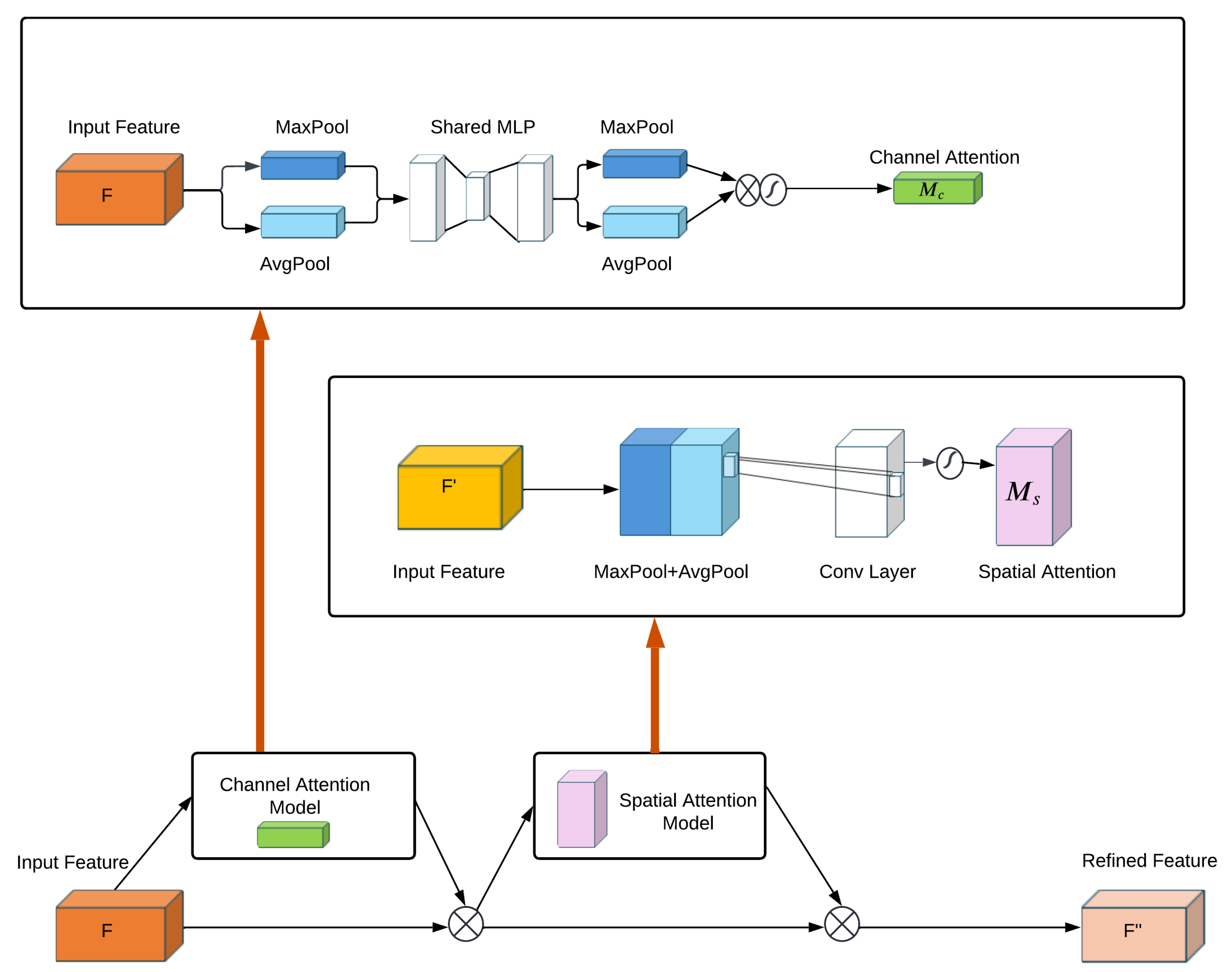

First, to strengthen the model’s ability to detect small targets accurately, a dedicated detection head is introduced, bringing the total number of detection layers to four. Second, the C2f layer in the backbone is replaced with the C2f-DCNv2 module to enhance feature extraction; this module relies on deformable convolution (DCNv2) to capture features from objects with complex appearances and shapes. Third, we integrate the lightweight CBAM attention module into the neck to address missed detections. CBAM combines channel attention and spatial attention, which helps emphasize informative features as they flow through the model and thereby improves the interpretation of complex scenes. Finally, to reduce the total number of parameters in the improved model, GhostConv, a lightweight convolutional layer, is used as an alternative to ordinary convolution in the neck while maintaining good detection performance.
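To make the parameter saving from a ghost-style convolution concrete, the following is a minimal sketch rather than the implementation used in this work. It assumes a ratio-2 ghost layer in the spirit of GhostNet: a primary convolution produces half of the output channels, and a depthwise 5×5 "cheap" convolution derives the other half. The function names `conv_params` and `ghost_conv_params`, the 5×5 cheap kernel, and the channel sizes are illustrative assumptions; batch normalization parameters are ignored.

```python
def conv_params(c_in: int, c_out: int, k: int, groups: int = 1, bias: bool = False) -> int:
    """Count learnable weights in a 2D convolution (BatchNorm ignored)."""
    if c_in % groups or c_out % groups:
        raise ValueError("channels must be divisible by groups")
    weights = (c_in // groups) * c_out * k * k
    return weights + (c_out if bias else 0)


def ghost_conv_params(c_in: int, c_out: int, k: int, cheap_k: int = 5) -> int:
    """Count weights in an assumed ratio-2 ghost layer.

    A primary conv produces half of the output channels; a depthwise
    'cheap' conv derives the other half from those feature maps.
    """
    if c_out % 2:
        raise ValueError("c_out must be even for a ratio-2 ghost layer")
    hidden = c_out // 2
    primary = conv_params(c_in, hidden, k)
    # Depthwise: one cheap_k x cheap_k filter per hidden channel.
    cheap = conv_params(hidden, hidden, cheap_k, groups=hidden)
    return primary + cheap


if __name__ == "__main__":
    # Illustrative channel sizes only; not taken from the proposed model.
    c_in, c_out, k = 128, 256, 3
    standard = conv_params(c_in, c_out, k)
    ghost = ghost_conv_params(c_in, c_out, k)
    print(f"standard conv: {standard} weights")
    print(f"ghost conv:    {ghost} weights ({ghost / standard:.1%} of standard)")
```

Under these assumptions the ghost layer needs roughly half the weights of the ordinary convolution it stands in for, because the depthwise branch adds only a small number of parameters. The actual saving in the proposed model depends on which neck layers are substituted and on their channel widths.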

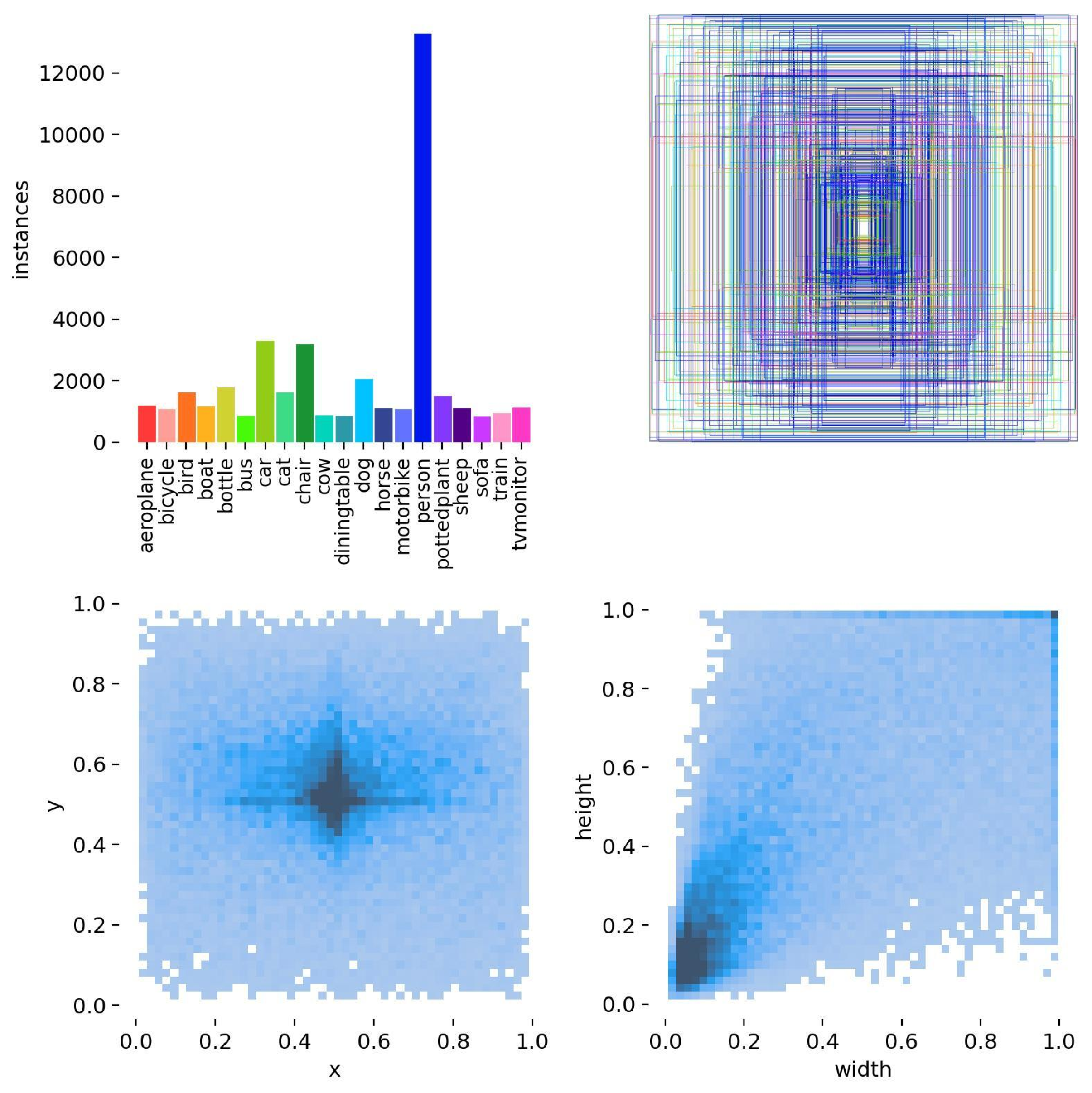

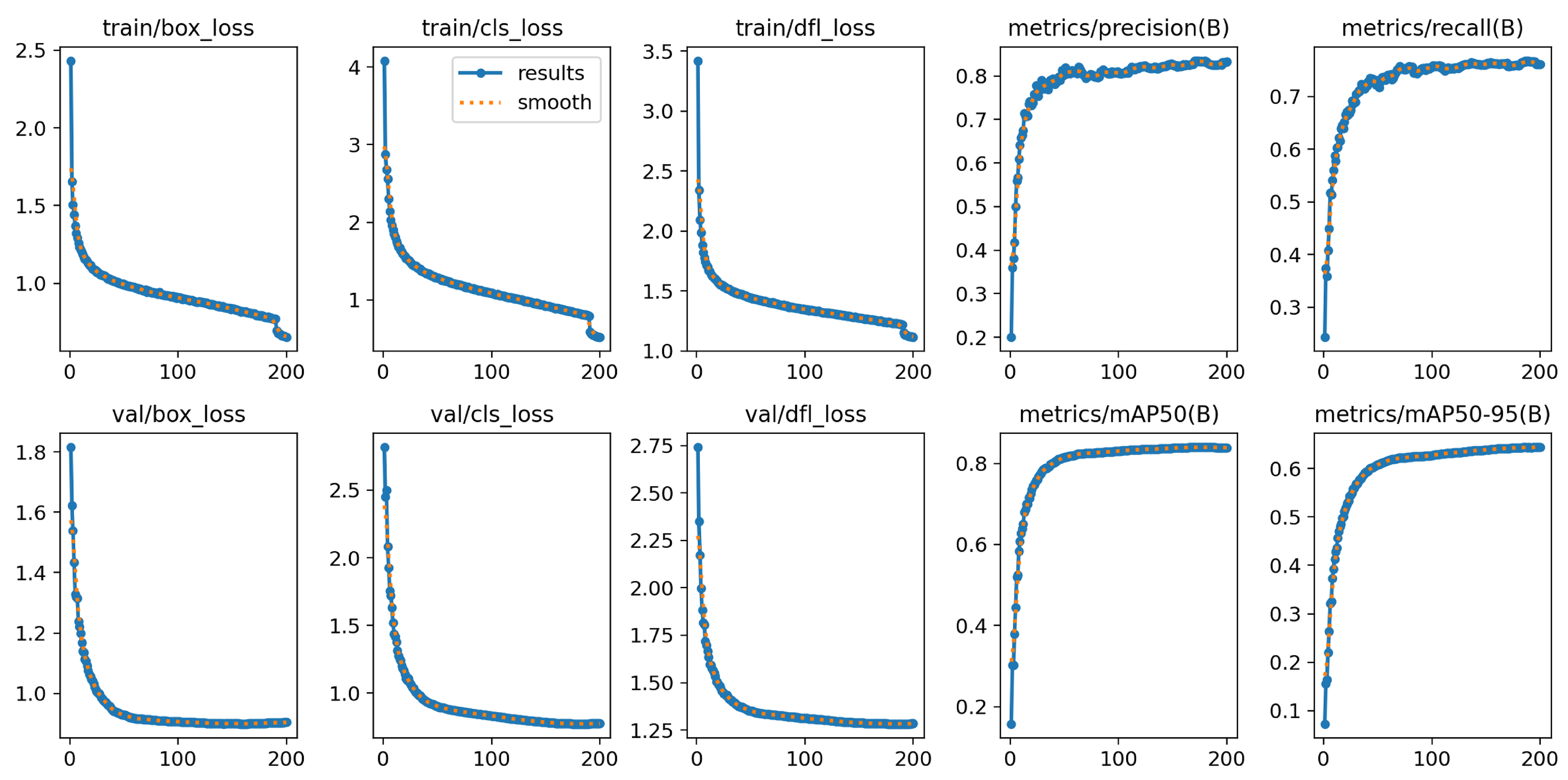

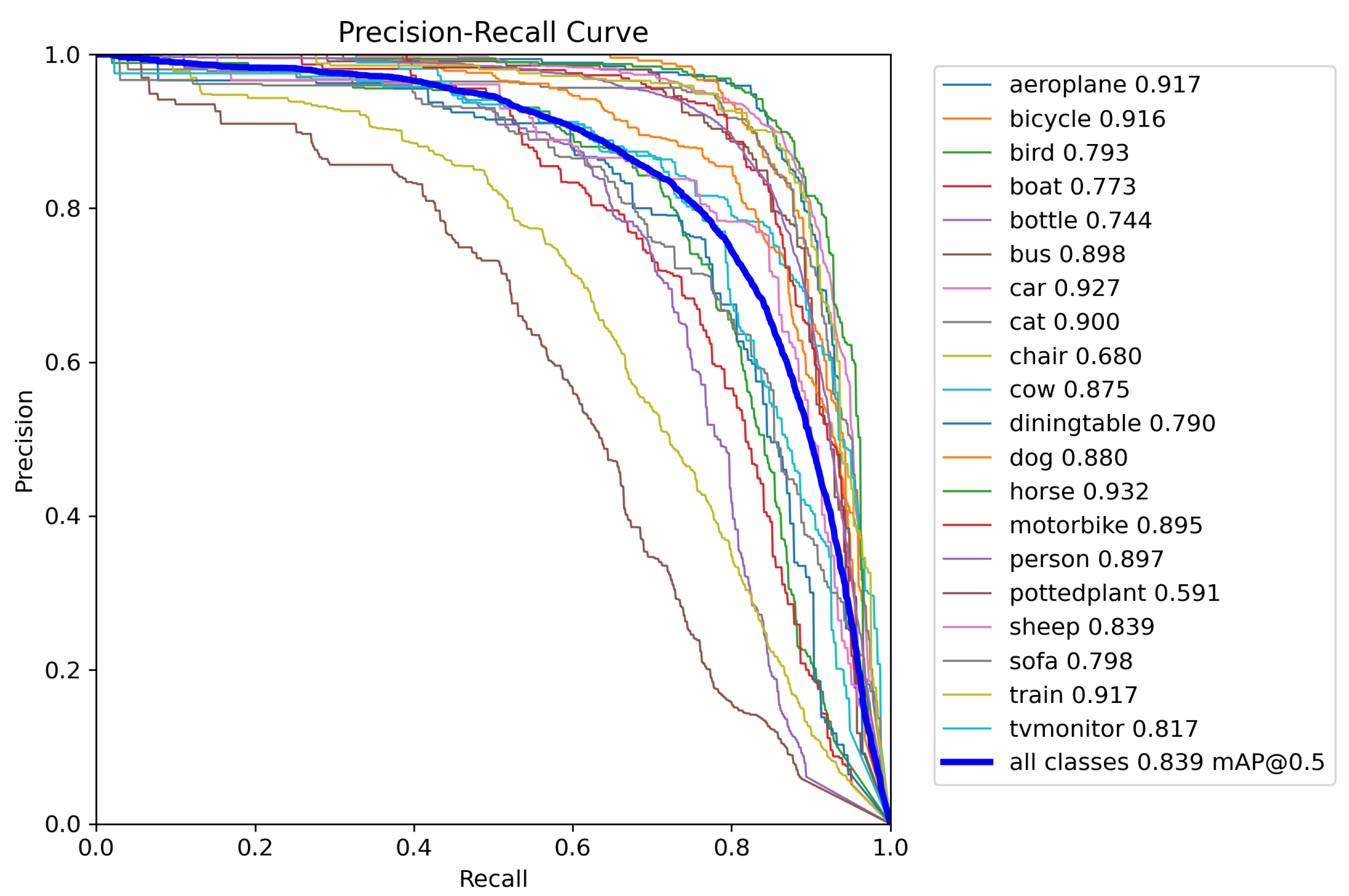

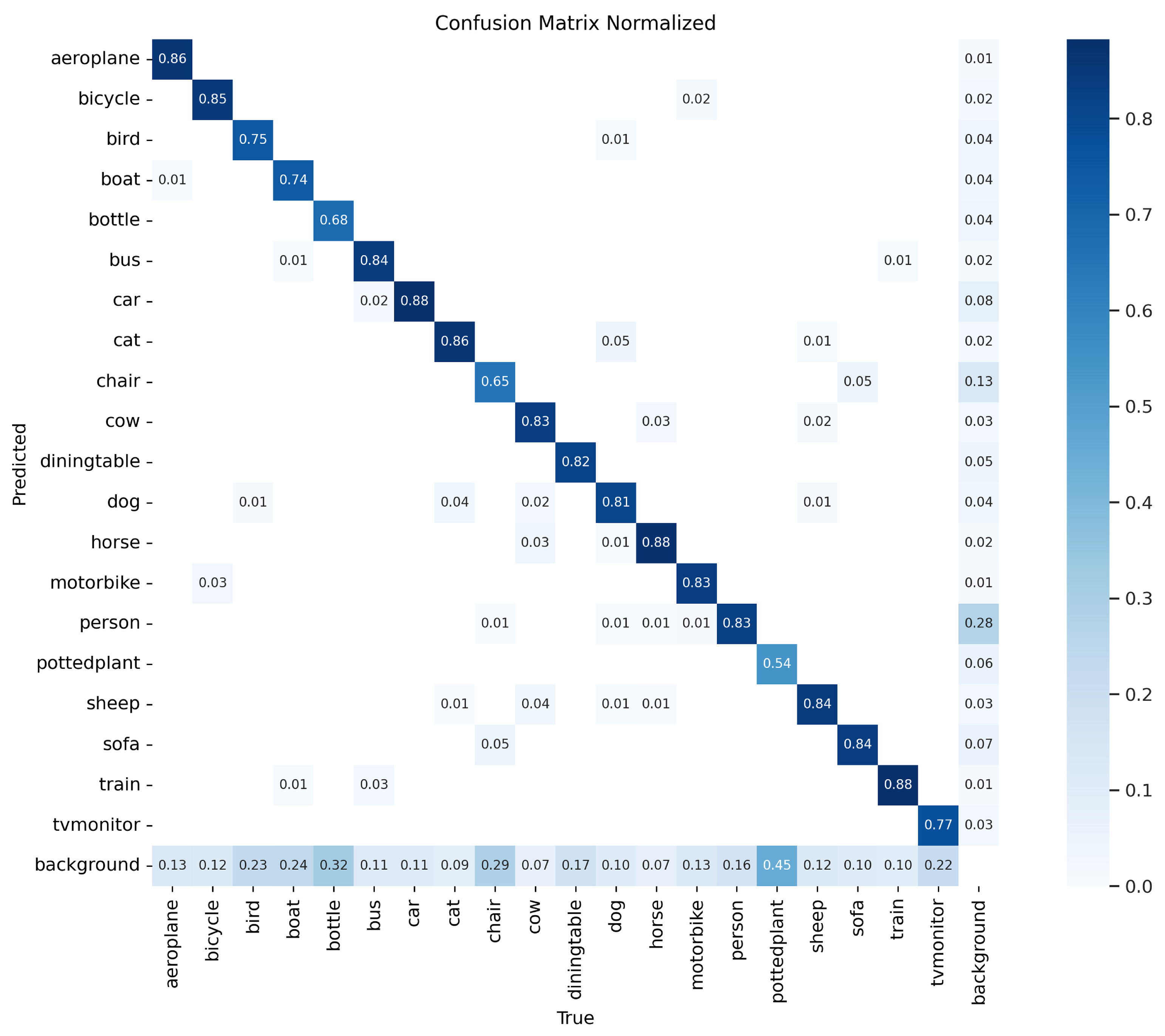

Experiments on the PASCAL VOC and KITTI datasets indicate that our model achieves higher detection accuracy while maintaining a low number of parameters. Its advantages over the baseline YOLOv8n and other existing methods are most apparent in complex scenes. These results indicate that the proposed modifications improve small, occluded, and multiscale object detection while preserving real-time performance. They also open new possibilities for applications that require accurate and efficient detection in complex scenarios.

Future research will proceed in several directions. First, we aim to adapt the model to a broader range of real-world scenarios, such as object detection under varying weather conditions. To further enhance robustness, we will investigate multimodal data fusion by integrating information from images, LiDAR, and radar sensors. Another important direction is to combine the model with supporting perception modules, including semantic segmentation for deeper scene understanding and natural language processing (NLP) for interaction through voice or text input. Adding NLP would allow the system to interpret voice commands and generate descriptive feedback, enabling collaboration between the vision and language modules. Overall, these improvements are intended to yield a more robust and intelligent perception system and to further improve the detection of small, occluded, and multiscale objects. Ultimately, this work is expected to benefit autonomous driving and other computer vision applications.

Author Contributions

Conceptualization, S.E.H., B.H. and S.E.F.; methodology, S.E.H.; validation, B.H. and S.E.F.; formal analysis, S.E.H., B.H. and S.E.F.; investigation, S.E.H., B.H. and S.E.F.; resources, S.E.H., B.H. and S.E.F.; data curation, S.E.H.; writing—original draft preparation, S.E.H.; writing—review and editing, S.E.H., B.H. and S.E.F.; visualization, B.H. and S.E.F.; supervision, B.H. and S.E.F. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Conflicts of Interest

The authors declare no conflicts of interest.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).