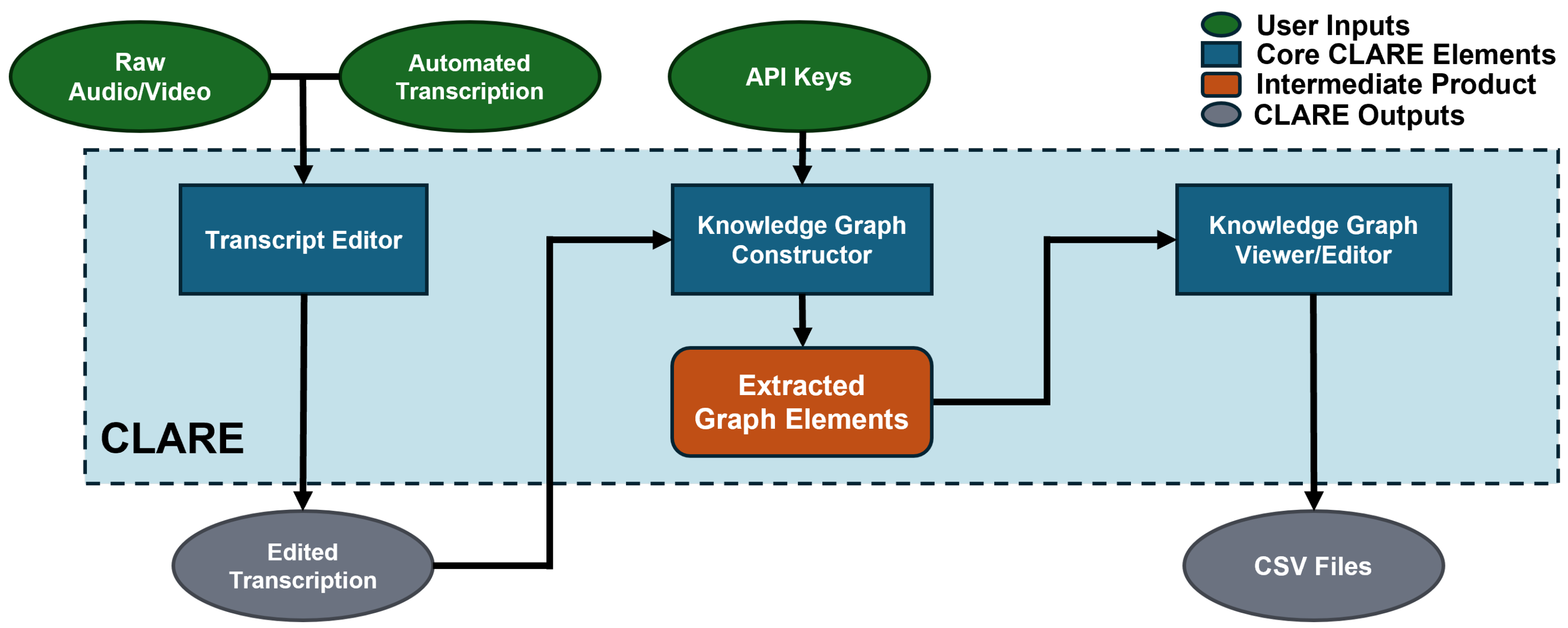

CLARE introduces a unified system for transcript-based KG construction composed of three modular, interoperable components. These are time-synchronized transcript correction, customizable KG construction, and interactive graph editing. Our primary contribution lies in extending AutoKG with a context-aware pipeline that transforms corrected transcripts into semantically labeled triples. We therefore begin by describing the KG construction process in detail. Afterward, we discuss the supporting components for transcript correction and graph refinement, which together enable seamless workflows for domain experts.

2.1. Knowledge Graph Construction

Our system’s KG construction uses a context-aware pipeline that extracts triples with awareness of surrounding discourse, which improves relation accuracy and coherence. In the “Knowledge Graph” tab, users can generate and visualize graphs from their transcripts within an integrated workspace that combines all generation, customization, and exploration tools. To accommodate diverse research needs, we provide model flexibility through the LiteLLM Python package, which supports independent selection of embedding models and LLMs from a variety of providers. These options include both cloud-based services and local execution through Ollama. With LiteLLM, users may run Ollama models on a local server for either embeddings or LLMs, gaining the privacy and cost benefits of on-device inference. In addition, bundled sentence-transformers models are available for local embeddings, which are lightweight enough to run directly within the application. These local choices reduce costs and improve speed while keeping sensitive data off external servers.

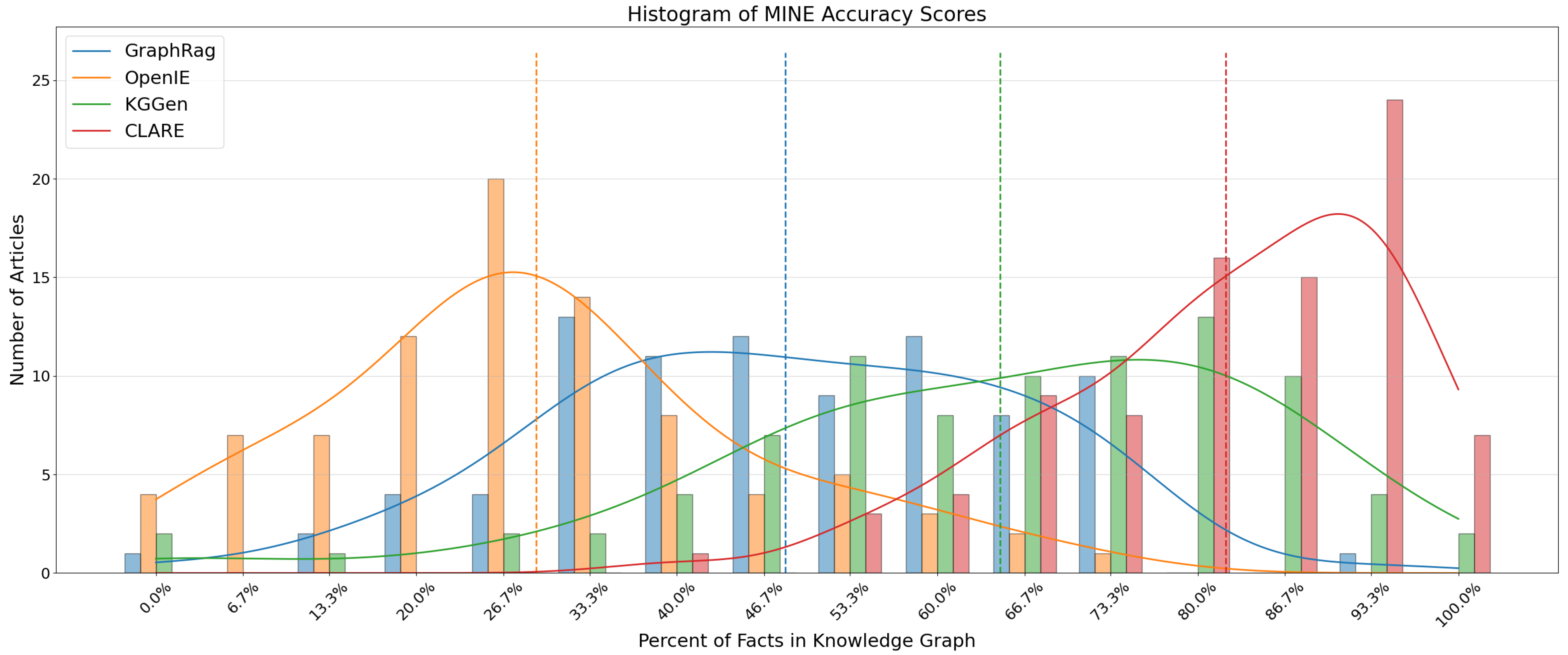

CLARE permits many model choices through LiteLLM and sentence-transformers. For the experiments reported in this paper, we used OpenAI’s gpt-4o-mini for all LLM prompting tasks (entity extraction, entity consolidation, and relation extraction) and sentence-transformers’ all-mpnet-base-v2 for text and entity embeddings. Relation extraction evaluated up to 30 candidate pairs per request, and the dynamic threshold was set to 50%. The LLM prompts and specific configurations used for our evaluation are available in the Supplementary Materials and on our GitHub page at https://github.com/ryanwaynehenry/CLARE (accessed on 26 September 2025), along with the full source code and executable for CLARE.

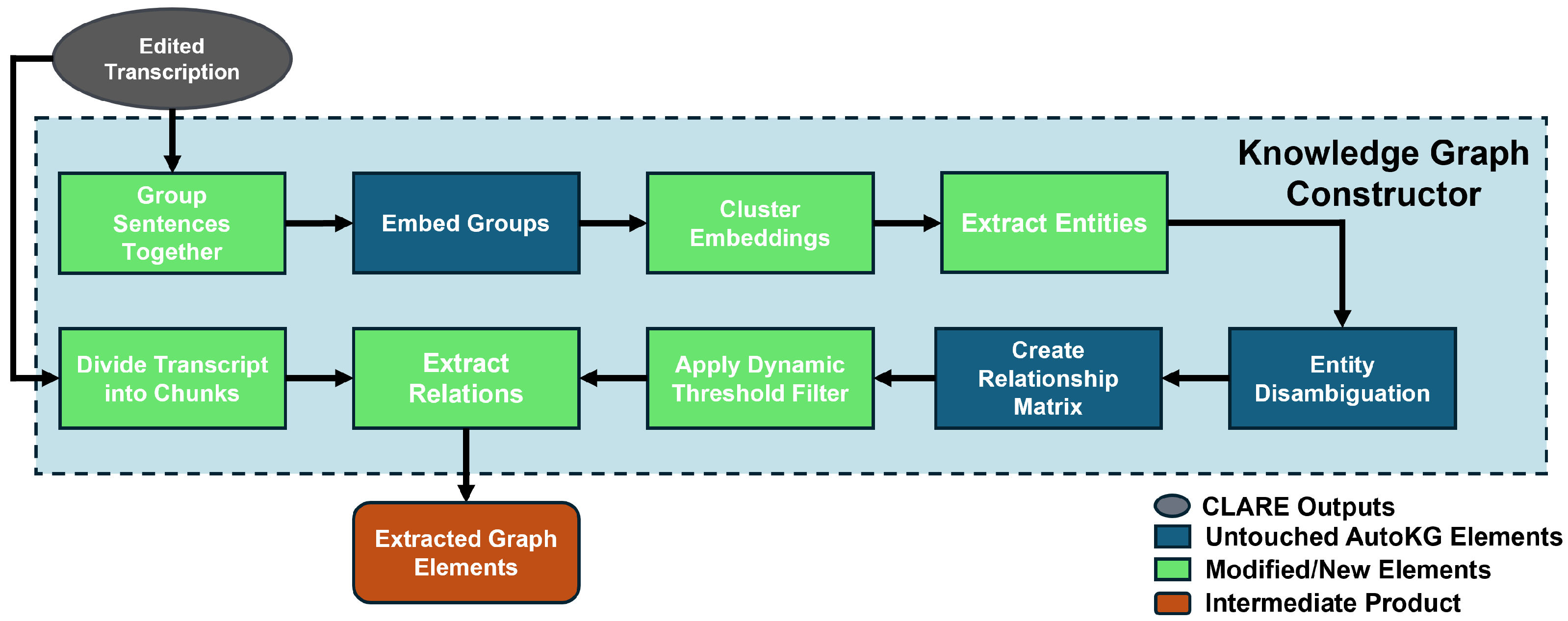

The knowledge graph construction process is initiated by the user within the interface. Before generating a graph, users may provide a central theme to filter irrelevant entities and may also set advanced parameters through the clustering options dialog box. Once ready, they can click the generate KG button to begin graph creation. Our system, illustrated in Figure 3, extends the AutoKG framework [23] by adding semantic relations between nodes and introducing modifications that improve context sensitivity and control over clustering behavior.

The KG process begins by preprocessing the input transcript through block-level segmentation and embedding to transform raw text into a form suitable for clustering. Adjacent transcription cells from the same speaker are concatenated to form discourse blocks. Let the sequence of raw utterances be denoted as $U = (u_1, \dots, u_n)$. The segmentation function $S$ produces a sequence of segments $S(U) = (s_1, \dots, s_N)$, where each $s_i$ satisfies a maximum-length constraint measured in words and aligns with sentence boundaries. Each segment is then passed through an embedding function $f : \mathcal{S} \to \mathbb{R}^d$, where $\mathcal{S}$ is the space of text segments and $d$ is the embedding dimension. This yields a set of vectors $X = \{x_1, \dots, x_N\}$ with $x_i = f(s_i)$. These vectors capture the semantic content of the transcript in a high-dimensional space.
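The segmentation step can be sketched in a few lines. This is a minimal illustration under stated assumptions, not CLARE's implementation: the row fields (`speaker`, `text`), the function names, the regex-based sentence splitter, and the `max_words` value are all hypothetical choices made for the example.

```python
import re

def merge_speaker_blocks(rows):
    """Concatenate adjacent rows that share a speaker into discourse blocks."""
    blocks = []
    for row in rows:
        if blocks and blocks[-1]["speaker"] == row["speaker"]:
            blocks[-1]["text"] += " " + row["text"]
        else:
            blocks.append({"speaker": row["speaker"], "text": row["text"]})
    return blocks

def split_block(text, max_words):
    """Split a block into segments of at most max_words words, cutting only at
    sentence boundaries. A single over-long sentence becomes its own segment."""
    sentences = [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]
    segments, current, count = [], [], 0
    for sentence in sentences:
        n = len(sentence.split())
        if current and count + n > max_words:
            segments.append(" ".join(current))
            current, count = [], 0
        current.append(sentence)
        count += n
    if current:
        segments.append(" ".join(current))
    return segments

# Hypothetical transcript rows; max_words=5 is chosen only to force a split.
rows = [
    {"speaker": "A", "text": "We tested the pump."},
    {"speaker": "A", "text": "It failed twice."},
    {"speaker": "B", "text": "Was the valve open?"},
]
blocks = merge_speaker_blocks(rows)
segments = [seg for b in blocks for seg in split_block(b["text"], max_words=5)]
```

Each resulting segment would then be passed to the selected embedding model to obtain one vector per segment.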

To identify thematically similar segments, the system applies the k-means clustering algorithm to the embedded set $X$. Users may specify the number of clusters $k$ and the maximum number of entities per cluster $l$ in the advanced options. If $l$ is not provided, a preset default is used. If $k$ is not provided, the system determines it automatically by evaluating several candidate values of $k$ and selecting the one that maximizes the average silhouette coefficient $\bar{s}$. The silhouette coefficient measures clustering quality by comparing the cohesion of a point within its own cluster against its separation from other clusters. It is defined as

$$s(i) = \frac{b(i) - a(i)}{\max\{a(i),\, b(i)\}},$$

where $a(i)$ is the average intra-cluster distance for point $i$ and $b(i)$ is the lowest average inter-cluster distance to any other cluster. A higher value of $\bar{s}$ indicates better-separated and more cohesive clusters [29]. The clustering procedure yields a set of $k$ clusters, either user-defined or chosen by silhouette-based selection, that are then processed independently.
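The silhouette-based choice of $k$ can be illustrated with a small pure-Python sketch. It assumes that cluster labels for each candidate $k$ have already been produced by some k-means run (not shown), uses Euclidean distance, and needs at least two clusters per labeling; the function names and toy data are hypothetical.

```python
import math

def mean_silhouette(points, labels):
    """Average of s(i) = (b - a) / max(a, b) over all points (needs >= 2 clusters)."""
    clusters = {}
    for idx, lab in enumerate(labels):
        clusters.setdefault(lab, []).append(idx)
    scores = []
    for i, lab in enumerate(labels):
        own = [j for j in clusters[lab] if j != i]
        if not own:  # singleton cluster: silhouette is taken to be 0
            scores.append(0.0)
            continue
        # a: mean distance to the other members of the point's own cluster
        a = sum(math.dist(points[i], points[j]) for j in own) / len(own)
        # b: lowest mean distance to the members of any other cluster
        b = min(
            sum(math.dist(points[i], points[j]) for j in members) / len(members)
            for other, members in clusters.items()
            if other != lab
        )
        denom = max(a, b)
        scores.append((b - a) / denom if denom > 0 else 0.0)
    return sum(scores) / len(scores)

def pick_k(points, labelings):
    """labelings maps each candidate k to its cluster labels; return the best k."""
    return max(labelings, key=lambda k: mean_silhouette(points, labelings[k]))

# Toy data: two well-separated groups, labeled once with k=2 and once with k=3.
points = [(0, 0), (0, 1), (10, 0), (10, 1)]
labelings = {2: [0, 0, 1, 1], 3: [0, 0, 1, 2]}
best_k = pick_k(points, labelings)
```

On this toy data the two-cluster labeling scores higher because splitting the second group into singletons contributes zero-valued silhouettes.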

After clustering the block embeddings, the system extracts entities (keywords) from each cluster using the AutoKG procedure [23]. Let cluster $C_j$ contain segments $s_{j,1}, \dots, s_{j,n_j}$ with embeddings $x_{j,1}, \dots, x_{j,n_j}$. From $C_j$, we sample $2c$ segments, with $c$ nearest to the cluster centroid and $c$ chosen at random, and prompt the LLM to propose up to $l$ candidate entities. This produces the candidate set $E_j$ with $|E_j| \le l$. The system maintains a global entity set $E$, to which each cluster’s candidate set $E_j$ is added. Once candidates have been extracted from all clusters, the LLM is given $E$ to consolidate duplicates, split overly broad items, and remove low-utility items from the concatenated list of candidates. The resulting entity set $E$ serves as the node vocabulary for downstream association.
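The per-cluster sampling described above (some segments nearest the centroid plus some chosen at random) might look like the following sketch. It assumes Euclidean distance to the centroid and draws the random segments from those not already selected; both choices, along with the function name and the seed parameter, are assumptions for illustration.

```python
import math
import random

def sample_segments(embeddings, c, seed=0):
    """Return (nearest, extra): indices of the c segments closest to the cluster
    centroid and up to c further segments drawn at random from the remainder."""
    n, dim = len(embeddings), len(embeddings[0])
    centroid = [sum(vec[axis] for vec in embeddings) / n for axis in range(dim)]
    by_distance = sorted(range(n), key=lambda i: math.dist(embeddings[i], centroid))
    nearest, rest = by_distance[:c], by_distance[c:]
    rng = random.Random(seed)  # seeded only to make the example repeatable
    extra = rng.sample(rest, min(c, len(rest)))
    return nearest, extra

# Toy 2-D embeddings for one cluster.
cluster_embeddings = [(0, 0), (2, 0), (0, 1), (5, 5), (1, 1)]
nearest, extra = sample_segments(cluster_embeddings, c=2)
```

The texts at the returned indices would then be placed in the entity-extraction prompt for that cluster.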

For each entity $e \in E$, we compute its embedding $x_e \in \mathbb{R}^d$. We use this embedding to identify which texts are most and least similar in semantic meaning to a given entity based on cosine similarity.

As in AutoKG, we apply Graph Laplacian Learning on $X$ to obtain association scores between an entity and each text [23]. In Graph Laplacian Learning, we first select seed nodes by labeling the $n_{+}$ closest blocks to the entity embedding $x_e$ as positive (score 1) to indicate a strong relationship and the $n_{-}$ farthest blocks as negative (score 0) to indicate no relation. The method then assigns scores to all remaining text blocks, requiring each score to equal the weighted average of its neighbors’ values. This iterative process propagates the seed labels through the graph until a stable equilibrium is reached [30]. Let $h$ be the function produced by Graph Laplacian Learning, so that $h(x_e, x_i)$ measures the association between entity embedding $x_e$ and block embedding $x_i$. To convert these continuous scores into binary associations, we define the indicator function $\mathbb{1}_e(x_i)$ as

$$\mathbb{1}_e(x_i) = \begin{cases} 1, & h(x_e, x_i) \ge \delta, \\ 0, & \text{otherwise}, \end{cases}$$

where $\delta$ is the association threshold, set so that only texts with high association scores are linked to the entity. We then form the set $X_e = \{x_i \in X : \mathbb{1}_e(x_i) = 1\}$ that encompasses all block embeddings from $X$ that are linked to entity $e$.
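The propagation idea behind Graph Laplacian Learning can be sketched as a simple fixed-point iteration: seed scores are held fixed and every other node is repeatedly replaced by the weighted average of its neighbors. This is an illustrative solver on a toy graph, not the AutoKG or CLARE code; the graph weights, the 0.5 cutoff, and all function names are assumptions.

```python
def propagate_labels(weights, seeds, tol=1e-9, max_iter=10_000):
    """weights: symmetric matrix of edge weights between text blocks.
    seeds: {node index: fixed score}, e.g. 1.0 for positive and 0.0 for negative.
    Non-seed nodes converge to the weighted average of their neighbours."""
    n = len(weights)
    scores = [seeds.get(i, 0.5) for i in range(n)]
    for _ in range(max_iter):
        change = 0.0
        for i in range(n):
            if i in seeds:
                continue  # seed labels never change
            total = sum(weights[i])
            if total == 0:
                continue  # isolated node keeps its initial score
            new = sum(w * s for w, s in zip(weights[i], scores)) / total
            change = max(change, abs(new - scores[i]))
            scores[i] = new
        if change < tol:
            break
    return scores

def associated_blocks(scores, threshold):
    """Binary association: indices of blocks whose score reaches the threshold."""
    return {i for i, s in enumerate(scores) if s >= threshold}

# Toy chain graph 0 - 1 - 2 - 3 with a positive seed at 0 and a negative seed at 3.
chain = [
    [0, 1, 0, 0],
    [1, 0, 1, 0],
    [0, 1, 0, 1],
    [0, 0, 1, 0],
]
scores = propagate_labels(chain, seeds={0: 1.0, 3: 0.0})
linked = associated_blocks(scores, threshold=0.5)  # threshold value is illustrative
```

On the chain, the two interior nodes settle at 2/3 and 1/3, so only the block nearer the positive seed passes the cutoff.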

Using these sets, we create an entity–entity adjacency matrix $W$, where

$$W_{ij} = |X_{e_i} \cap X_{e_j}|$$

counts how many blocks are jointly associated with $e_i$ and $e_j$ (here $X_e$ is the set of blocks linked to entity $e$). This yields symmetric, nonnegative integer weights between entities that indicate how strongly connected the two are likely to be. We remove self-edges by setting the diagonal to zero, $W_{ii} = 0$.
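As a small worked example, the shared-block counts can be computed directly from per-entity sets of block indices. The function name and the toy sets are hypothetical.

```python
def cooccurrence_matrix(entity_blocks):
    """entity_blocks: list with one set of associated block indices per entity.
    Returns a symmetric matrix of shared-block counts with a zero diagonal."""
    n = len(entity_blocks)
    W = [[0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1, n):
            shared = len(entity_blocks[i] & entity_blocks[j])
            W[i][j] = W[j][i] = shared
    return W

# Three entities: the first two share blocks 1 and 2, the third shares none.
W_example = cooccurrence_matrix([{0, 1, 2}, {1, 2, 3}, {4}])
```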

We use this matrix to identify which entities are likely to have relationships between them. The entities form the nodes of the KG, and the relationships are the edges that connect them. The user can set a dynamic threshold based on a selected percentile of the edges they would like to evaluate. Let $p$ be the user-selected retention percentile. The pipeline chooses a threshold $h_p$ so that $p\%$ of the nonzero off-diagonal entries of $W$ are at least $h_p$. We then overwrite $W$ in place by setting

$$W_{ij} \leftarrow \begin{cases} W_{ij}, & W_{ij} \ge h_p, \\ 0, & \text{otherwise}. \end{cases}$$

This keeps roughly the top $p\%$ of nonzero edges and zeros the rest, preventing those relationships from being considered. This pruning step is specific to our system and differs from AutoKG, which uses $W$ without pruning. We then form the candidate pair set for relation extraction

$$P = \{(e_i, e_j) : i < j,\ W_{ij} > 0\}.$$
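The percentile pruning can be sketched as follows. The sketch assumes the threshold is taken as the weight at rank $\lceil p\% \cdot n \rceil$ among the $n$ nonzero edges sorted in descending order, so ties at the threshold may keep slightly more than $p\%$; this ranking rule and the function name are assumptions, not the documented CLARE behavior.

```python
import math

def prune_by_percentile(W, p):
    """Zero every off-diagonal weight below the percentile threshold (in place)
    and return the surviving (i, j) pairs with i < j."""
    n = len(W)
    weights = sorted(
        (W[i][j] for i in range(n) for j in range(i + 1, n) if W[i][j] > 0),
        reverse=True,
    )
    if not weights:
        return []
    keep = max(1, math.ceil(len(weights) * p / 100))
    threshold = weights[keep - 1]  # smallest weight that is still retained
    pairs = []
    for i in range(n):
        for j in range(i + 1, n):
            if W[i][j] >= threshold:
                pairs.append((i, j))
            else:
                W[i][j] = W[j][i] = 0
    return pairs

# Five nonzero edges; p=50 keeps the three heaviest (weights 5, 4, 3).
W_toy = [
    [0, 5, 3, 1],
    [5, 0, 2, 0],
    [3, 2, 0, 4],
    [1, 0, 4, 0],
]
candidate_pairs = prune_by_percentile(W_toy, p=50)
```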

Rather than relying solely on the undirected weight $W_{ij}$ to represent relationships between entities as in AutoKG [23], the pipeline generates a short relation phrase by using the source text as context. The pipeline begins by querying an LLM to determine whether a meaningful relationship exists between entity pairs and to identify its form. First, the full transcript $T$ is divided into overlapping chunks $T_1, \dots, T_K$ of at most $t - m$ tokens, where $t$ denotes the model’s token limit and $m$ is a safety margin that ensures there are enough tokens for the prompt and entity pairs. These chunks are generated by the sliding-window procedure in Algorithm 1, which enforces an overlap ratio $o$ between the chunks. Within each chunk $T_k$, we extract the local candidate set

$$P_k = \{(e_i, e_j) \in P : e_i \text{ and } e_j \text{ both appear in } T_k\},$$

which contains all entity pairs from the candidate pair set $P$ that co-occur in that chunk. To balance efficiency and accuracy, we prompt the LLM to evaluate multiple potential relationships per query, partitioning $P_k$ into batches of at most $b_{\max}$ pairs. Each batch is sent to the user-selected LLM via LiteLLM with few-shot prompting [31]. The LLM responds with a list of triples $(e_i, r, e_j)$, where $r$ is the semantic relation identified by the model. The response also includes the direction of the relation and a brief explanation for each relationship, as we found that having the LLM explain its reasoning improves the result. The LLM may also indicate that no relationship exists or that multiple relationships are present between a pair of entities. In the latter case, each relationship for a pair is listed together with its corresponding direction and explanation.

In some cases, an entity pair $(e_i, e_j)$ may never appear together within any transcript chunk, leaving the system without direct co-occurrence evidence to evaluate their relationship. This absence raises the question of how to gather sufficient context for such pairs. Users may enable a fallback option for these entity pairs in the advanced options menu. We define this set of entity pairs as

$$P_{\mathrm{nc}} = P \setminus \bigcup_{k} P_k,$$

where $P$ is the candidate pair set, $P_k$ is the set of pairs co-occurring in chunk $k$, and $P_{\mathrm{nc}}$ represents the set of all entity pairs that never co-occur in the same transcript chunk.

When the fallback option is enabled, the system compiles, for each entity, a list of the sentence indices in which it appears within the transcript. Let the transcript be divided into sentences $\sigma_1, \dots, \sigma_q$. For an entity $e$, define the index set of mentions

$$M(e) = \{i \in \{1, \dots, q\} : e \text{ is mentioned in } \sigma_i\}.$$

To provide local context, the set of sentences associated with an entity is expanded by adding the immediately preceding and following sentences for each of its members. This helps resolve pronouns and nearby references. We define the one-step neighborhood

$$N(i) = \{i - 1,\, i,\, i + 1\} \cap \{1, \dots, q\}$$

and the contextual set for $e$

$$\Gamma(e) = \bigcup_{i \in M(e)} N(i).$$

For a pair $(e_a, e_b)$, we take the combined context $\Gamma(e_a) \cup \Gamma(e_b)$.
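The mention lookup and one-step neighborhood expansion can be illustrated with a short sketch. It uses 0-based sentence indices and a case-insensitive substring match to detect mentions; the matching rule, function names, and example sentences are assumptions for illustration.

```python
def mention_indices(sentences, entity):
    """Indices of the sentences that mention the entity (substring match)."""
    needle = entity.lower()
    return {i for i, s in enumerate(sentences) if needle in s.lower()}

def context_indices(mentions, num_sentences):
    """Add the previous and next sentence of every mention, clipped to the transcript."""
    return {
        j
        for i in mentions
        for j in (i - 1, i, i + 1)
        if 0 <= j < num_sentences
    }

def pair_context(sentences, first, second):
    """Union of the expanded contexts of both entities in a pair."""
    n = len(sentences)
    return (
        context_indices(mention_indices(sentences, first), n)
        | context_indices(mention_indices(sentences, second), n)
    )

sentences = [
    "The pump failed.",
    "It was replaced.",
    "Nothing happened.",
    "Work resumed.",
    "A day passed.",
    "Later the valve stuck.",
    "Operators closed it.",
]
context = pair_context(sentences, "pump", "valve")
```

Here the two entities never share a sentence, and the combined context skips the unrelated middle of the transcript.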

Algorithm 1. Sliding Window Transcript Chunking

Input: $T$ full transcript; $t$ token limit; $m$ safety margin; $o$ overlap ratio; a token counter.

Output: list of chunks.

- Set the maximum tokens per chunk to $t - m$.
- Split $T$ into a list of words.
- Initialize an empty chunk list and an empty buffer $B$.
- Take a sample of words, count the tokens in the sample, and derive a words-per-token estimate.
- Compute the overlap in tokens from the overlap ratio $o$, then convert it to words with the words-per-token estimate.
- While unprocessed words remain:
  - If the buffer together with the remaining words fits within the token budget, emit it as the final chunk.
  - Otherwise:
    - Binary search for the maximal number of words that fits within the budget, narrowing the search interval at each step according to whether the candidate fits.
    - Finalize the current chunk by appending $B$ to the chunk list.
    - Prepare the overlap buffer: compute the overlap size in words and retain that many of the last words in $B$.
- Return the chunk list.
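A simplified, runnable variant of the sliding-window idea is sketched below. It departs from Algorithm 1 in two labeled ways: the overlap is computed as a fraction of each chunk's word count rather than through a words-per-token estimate, and the token counter is passed in as a plain callable (a word counter in the example). It also assumes token counts never decrease as words are added.

```python
def chunk_transcript(text, max_tokens, overlap_ratio, count_tokens):
    """Split text into overlapping chunks whose token counts stay within max_tokens.
    If a single word alone exceeds the budget, it is still emitted as its own chunk."""
    words = text.split()
    chunks, start = [], 0
    while start < len(words):
        # Binary search for the largest end such that words[start:end] fits.
        lo, hi = start + 1, len(words)
        while lo < hi:
            mid = (lo + hi + 1) // 2
            if count_tokens(" ".join(words[start:mid])) <= max_tokens:
                lo = mid
            else:
                hi = mid - 1
        end = lo
        chunks.append(" ".join(words[start:end]))
        if end == len(words):
            break
        # Step back by the overlap, but always advance by at least one word.
        overlap_words = int((end - start) * overlap_ratio)
        start = max(end - overlap_words, start + 1)
    return chunks

def word_count(s):
    """Stand-in token counter for the example: one token per word."""
    return len(s.split())

overlapping = chunk_transcript("a b c d e f g h i j", 4, 0.5, word_count)
disjoint = chunk_transcript("a b c d e f g h i j", 4, 0.0, word_count)
```

In practice `max_tokens` would be the model limit minus the safety margin, and `count_tokens` would wrap the tokenizer of the selected model.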

We then group the non-co-occurring pairs in $P_{\mathrm{nc}}$ by a greedy procedure described in Algorithm 2. The goal is to reuse overlapping sentences and to keep the number of groups small. Each group $g$ must satisfy two constraints. The group size satisfies $|P_g| \le b_{\max}$, where $P_g$ is the set of pairs in $g$ and $b_{\max}$ is the maximum batch size. The concatenated context of its sentences must fit within the model budget of $t - m$ tokens. For each pair, the algorithm places it in the group that results in the minimal increase in token count, ensuring that all constraints remain satisfied. Once all the groups are finalized, sentences in each group are concatenated in transcript order. Ellipses are inserted between non-adjacent sentences to signal discontinuity to the model. If the context for a single pair exceeds the token budget, we truncate that context to fit while preserving the most relevant sentences. After all groups are formed, we send each group context $c_g$, together with its pair set $P_g$, to the selected LLM using few-shot prompting. The LLM responds with the same format of triples, direction, and explanation as it did for the co-occurring pairs.

Algorithm 2. Fallback Relationship Extraction for Non-Co-Occurring Pairs

Input: non-co-occurring pairs; transcript $T$; token limit $t$; safety margin $m$; max batch size; token counter; mention-indexing function; extraction procedure.

Output: triples for the non-co-occurring pairs.

- Set the token budget per query to $t - m$.
- If the full transcript fits within the budget, run the extraction procedure once on $T$ for all pending pairs and return the result.
- Split $T$ into sentences. For $i = 1$ to $q$, record the token count of sentence $i$.
- Initialize an empty list that will hold (pair, context indices, cost) tuples.
- For each non-co-occurring pair:
  - Find the indices where each entity appears.
  - Build the context indices using a one-step neighborhood.
  - Compute the token cost of the pair’s context.
  - If a single pair’s context exceeds the budget, trim it by selecting a subset that emphasizes indices near the mentions and fits the budget, then update the indices and cost.
  - Append the pair, its context indices, and its cost to the list.
- Sort the list by cost in descending order, packing larger contexts first to maximize reuse.
- Initialize an empty group list.
- For each tuple in the sorted list:
  - Find the group $g$ minimizing the marginal token increase, that is, the extra token cost of adding pair $p$ to $g$ given the set of entity pairs and the set of sentence indices already in $g$. Adding to a group is subject to two constraints:
    1. the group stays within the max batch size;
    2. the group’s context stays within the token budget.
  - If such a group $g$ exists, add the pair and its sentence indices to $g$ and update its token count (greedy packing with overlap reuse).
  - Otherwise, create a new group $g$ with that pair, its sentence indices, and its cost, and append it to the group list.
- For each group, build the context string and extract relations:
  - Let the group’s sentences be those indexed by its index set, ordered increasingly by index.
  - Build the context by concatenating these sentences, inserting “...” between nonadjacent indices.
  - Run the extraction procedure on the context and the group’s pairs.
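The greedy packing step can be sketched in a few lines. The sketch omits the trimming of over-budget single-pair contexts and the LLM call itself, and the data layout (dicts with `pairs`, `indices`, `tokens`), function names, and toy numbers are assumptions for illustration.

```python
def group_pairs(items, sentence_tokens, budget, max_pairs):
    """items: list of (pair, set of context sentence indices).
    Greedily place each pair in the group where it adds the fewest new tokens,
    subject to the pair cap and the token budget."""
    def cost(indices):
        return sum(sentence_tokens[i] for i in indices)

    groups = []
    # Pack larger contexts first so later pairs can reuse their sentences.
    for pair, indices in sorted(items, key=lambda it: cost(it[1]), reverse=True):
        best, best_extra = None, None
        for g in groups:
            extra = cost(indices - g["indices"])  # marginal token increase
            fits = len(g["pairs"]) < max_pairs and g["tokens"] + extra <= budget
            if fits and (best is None or extra < best_extra):
                best, best_extra = g, extra
        if best is None:
            groups.append({"pairs": [pair], "indices": set(indices), "tokens": cost(indices)})
        else:
            best["pairs"].append(pair)
            best["indices"] |= indices
            best["tokens"] += best_extra
    return groups

def build_context(sentences, indices):
    """Join sentences in transcript order, marking gaps with an ellipsis."""
    ordered = sorted(indices)
    parts = []
    for pos, i in enumerate(ordered):
        if pos > 0 and i != ordered[pos - 1] + 1:
            parts.append("...")
        parts.append(sentences[i])
    return " ".join(parts)

items = [(("a", "b"), {0, 1, 2}), (("a", "c"), {1, 2}), (("d", "e"), {4, 5})]
groups = group_pairs(items, sentence_tokens=[5] * 6, budget=20, max_pairs=2)
example_context = build_context(["s0", "s1", "s2", "s3", "s4", "s5"], {0, 1, 4})
```

In the example, the second pair joins the first group at no extra token cost because its sentences are already present, while the third pair starts a new group once the pair cap is reached.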

For final processing, we merge the outputs across all the groups, for both co-occurring and fallback pairs. For each candidate triple $(e_i, r, e_j)$ returned by the model with a direction tag, we proceed as follows. If the tag indicates the stated direction, we keep $(e_i, r, e_j)$. If the tag indicates the reverse direction, we swap the arguments and form $(e_j, r, e_i)$. If the tag indicates no relationship, we discard the item. The finalized set of directed triples defines the edges of the KG, denoted by $G$, where each node corresponds to an extracted keyword. We pass $G$ to the visualizer for interactive exploration and filtering.
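The direction handling amounts to a small normalization pass. In this sketch the tag values `"forward"`, `"reverse"`, and `"none"` are hypothetical placeholders for whatever tags the prompts actually use.

```python
def finalize_triples(candidates):
    """candidates: iterable of (head, relation, tail, direction) tuples.
    Returns directed (source, relation, target) edges."""
    edges = []
    for head, relation, tail, direction in candidates:
        if direction == "forward":
            edges.append((head, relation, tail))
        elif direction == "reverse":
            edges.append((tail, relation, head))  # swap the arguments
        # any other tag (for example "none") means no relationship: drop it
    return edges

candidates = [
    ("pump", "drives", "valve", "forward"),
    ("report", "wrote", "Alice", "reverse"),
    ("pump", "", "Alice", "none"),
]
edges = finalize_triples(candidates)
```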

2.2. Interface Design and Functionality

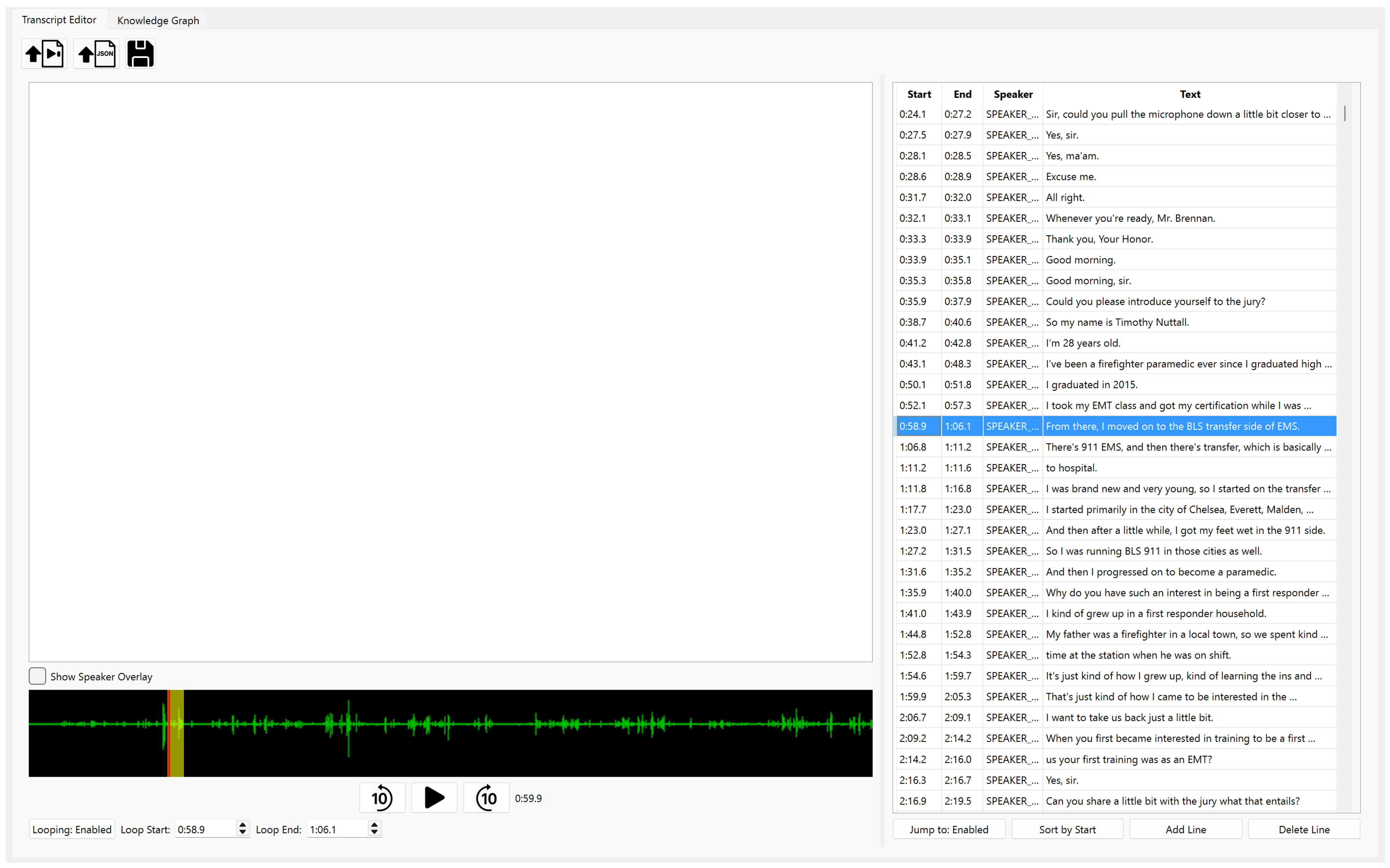

This section describes the two workspaces that support end-to-end analysis in our system. The “Transcript Editor” enables precise, time-aligned correction of source material. The “Knowledge Graph” serves as a workspace for graph generation, visualization, and post hoc editing. Together, they allow a workflow that begins with raw media and ends with an editable, exportable KG without external conversion utilities.

2.2.1. Transcript Editor

The transcript editor shown in Figure 4 presents the source media and its time-aligned transcript side by side so that users can verify and correct content with minimal effort. Two JavaScript Object Notation (JSON) formats are supported for import. The first is a simple per-line structure with start time, end time, speaker label, and text. The second is the JSON file produced by the aTrain GUI [8]. By supporting aTrain’s output, the editor lets users move from source media to an edited transcript and KG using only two applications. No intermediate conversion utilities are required. This lowers the technical barrier for domain experts outside computer science and aligns with our goal of reducing reliance on programmer workflows.

The design supports efficient transcription by placing playback tools and transcript editing in dedicated panels. The left panel contains a waveform visualization with standard playback controls and a timeline slider, allowing users to navigate recordings efficiently. If the media is a video, it is displayed in this panel above the waveform to keep the playback and visual context together. A vertical bar indicates the current playback time, giving users a clear reference point for precise positioning within the audio. When a transcript segment is selected in the right panel, the corresponding region of the waveform is automatically highlighted between its start and end times. The right panel contains an editable table of transcript rows with columns for start time, end time, speaker label, and text. By default, selecting a transcript row moves playback to the beginning of that segment, and it will automatically repeat the audio between its start and end times. This looping is especially helpful when passages are unclear or when speakers overlap. Simple toggles control both the seek and looping features, so users who prefer full manual navigation can turn them off at any time.

Editing operations are designed to be immediate and intuitive, allowing users to make corrections directly within the transcript without additional steps. Rows can be inserted, moved, or deleted. Edits are applied directly in place within the same interface, allowing users to adjust timing, text, or speaker labels without breaking their workflow. When users modify start or end times, the highlighted region of the waveform updates instantly to reflect the change, providing immediate visual feedback. When transcript rows are reordered or start and end times are adjusted, the transcript can drift out of chronological sequence. Users can restore the chronological sequence of the transcript by clicking the “Sort by Start Time” button below it. This ability to quickly recover a coherent timeline is particularly useful during heavy editing, where structural changes might otherwise leave the transcript fragmented or confusing. Users have the option to save the transcript at any stage, either for resuming editing later or for archiving as the finalized version.

The editor is integrated with the KG workflow so that graphs are generated directly from the transcript. In the intended CLARE process, the first steps are to refine the transcript thoroughly and then build the graph. If later exploration reveals a mistake such as a spurious node, a missing entity, or an ambiguous relation, the user can return to the editor, correct the transcript, and regenerate. When the issue is small, the user may instead apply a targeted fix in the graph view, which can be faster than a full rebuild for long transcripts. After each build, search and filtering in the graph view help verify the effect of changes. This process balances up-front transcript quality with selective corrections and produces a transcript that is accurate for archival use and well structured for entity extraction.

Transcript fidelity directly affects the reliability of the KG. Precise segment boundaries align entity mentions with the correct text blocks, which stabilizes association scores and reduces spurious co-occurrences. Consistent speaker labels reduce the likelihood of false merges during clustering, ensuring that utterances from different individuals remain properly separated. Clean punctuation and sentence boundaries improve segmentation and embeddings. The editor is not only a convenience. It serves as a control surface for improving downstream graph construction through small, targeted corrections that can be verified in the visualization.

2.2.2. Knowledge Graph Visualizer and Editor

The Knowledge Graph tab shown in Figure 1 includes a ribbon at the top of the window that groups KG generation controls, input and output options, and later editing tools. The KG generation controls section includes a Central Topic field that accepts a phrase that filters off-topic entities and edges. The adjacent Clustering Options button opens an advanced dialog for clustering and relation-extraction parameters. Users can set the number of clusters for grouping text segments and the per-cluster cap on candidate entities. Raising this cap increases the number of entities considered and typically produces a larger graph at the cost of a longer processing time. The dialog also includes a Dynamic Threshold setting that keeps only the top $p\%$ of candidate edges by score. Next to the Clustering Options button in the ribbon are the Model Selection menus. Users must choose an embedding model and a language model, then provide credentials through the API Keys button, which stores keys locally. Both the API keys used by hosted services and the server address required for local deployments are entered in the API Keys window. After the model selections and keys are provided and any optional parameters are set, the user clicks the Generate KG button to run the pipeline on the currently loaded transcript.

The Knowledge Graph view complements automated construction with an interactive workspace for exploration and refinement. Graphs can be generated directly from the transcript pipeline or imported from CSV files that contain node and edge lists. A search bar in the upper-right corner lets users locate entities by name. To manage visual complexity, two filters provide focused views. The Direct Connections filter shows each selected entity together with its immediate neighbors and hides unrelated edges. The Overlapping Connections filter uses a stricter rule, displaying only relationships between entities within the current selection. This focused visibility enables users to examine interaction patterns in densely connected regions without being distracted by superfluous information.
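The difference between the two filters can be made concrete with a small sketch over an edge list. It interprets Direct Connections as keeping every edge that touches a selected entity and Overlapping Connections as keeping only edges with both endpoints selected; this reading, the function names, and the toy edges are assumptions rather than CLARE's actual code.

```python
def direct_connections(edges, selected):
    """Edges that touch at least one selected entity."""
    return [(s, r, t) for s, r, t in edges if s in selected or t in selected]

def overlapping_connections(edges, selected):
    """Edges whose two endpoints are both in the selection."""
    return [(s, r, t) for s, r, t in edges if s in selected and t in selected]

graph_edges = [
    ("pump", "drives", "valve"),
    ("valve", "feeds", "tank"),
    ("operator", "inspects", "tank"),
]
selection = {"pump", "valve"}
direct = direct_connections(graph_edges, selection)
overlapping = overlapping_connections(graph_edges, selection)
```

With this selection, the direct view also shows the valve's link to the tank, while the overlapping view keeps only the pump and valve edge.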

At the core of the interface is the canvas, which displays the graph and allows users to manipulate it directly. Users can drag entities to improve legibility, uncover crossings, or group related concepts. Common editing actions are placed prominently in the top ribbon, allowing users to delete relationships, remove nodes, or reverse edge directions with minimal effort. The ribbon layout and single-click controls make these corrections efficient, so that graph adjustments remain a seamless part of the analytical process. A collapsible side panel exposes the advanced editing options. Researchers can add new nodes, rename existing nodes, merge duplicates into a single entity, and create or adjust relationships. The interface includes selection buttons that speed up filtering and advanced edits. Instead of typing names manually, users can click directly on nodes or relationships to add them to the active filter or editing panel.

KG construction is a complex task in which some errors are to be expected. Our interface addresses this reality by emphasizing efficient error detection and correction, enabling users to iteratively refine the graph instead of relying on a one-time, error-free output. Users explore the automatically generated structure with search and filters, then apply editing operations to refine it, including by removing spurious nodes, correcting edge directions, merging entities, and adding missing links. Small issues in the generated KG should be corrected using this interface. But for severe, cascading issues, the root cause is likely an error in the transcript itself, and it is likely more efficient to correct the issue there and regenerate the KG. This loop supports steady convergence toward a representation that matches the user’s understanding of the source material.

When a graph meets the user’s needs, it can be exported as CSV files for nodes and edges. Exported CSV files can be reloaded into CLARE at any time for continued editing and exploration, or imported into external environments such as Neo4j for extended analysis. Together, the transcript editor and graph viewer form a unified workflow; one ensures accurate input, and the other provides the means to refine and interpret its output. This integration allows users to iteratively improve both transcript quality and graph clarity within a single environment.