Abstract

This paper analyses methods for person classification based on signals from wearable IMU sensors during sports. While this problem has been investigated in prior work, existing approaches have not addressed it in few-shot or minimal-data scenarios. A few-shot scenario is especially relevant because the main use case for person identification is integration into personalised biofeedback systems in sports, which should provide personalised feedback that helps athletes learn faster. When a new user is introduced, it is impractical to expect them to first collect many recordings. We demonstrate that, using established methods, the problem can be solved with over 90% accuracy in both open-set and closed-set scenarios. The challenge arises when applying few-shot methods, which do not require retraining the model to recognise new people. Most few-shot methods perform poorly because their feature extractors learn dataset-specific representations, which limits their generalisability. To overcome this, we propose combining an unsupervised feature extractor with a prototypical network. This approach achieves 91.8% accuracy in the five-shot closed-set setting and 81.5% accuracy in the open-set setting, with a 99.6% rejection rate for unknown athletes.

1. Introduction

Professional sports are a massive industry with an annual revenue of USD 159 billion [1]. This leaves great market potential for any technology that could provide a competitive advantage during training. To improve the learning process of athletes, the concept of a biomechanical feedback loop was created [2]. It is a system designed to capture the movement of athletes, recognise mistakes, and provide feedback that athletes can use to improve their technique. It consists of four parts: the athlete who performs the movement; sensors that measure the movement; a processing unit that processes signals from the sensors, recognises useful information, and generates feedback; and actuators that provide the feedback to the athlete. The athlete is part of the loop, as they need to be able to respond to feedback and improve their technique for the next repetition of the movement.

The movement can be captured using either cameras or embedded and wearable sensors. Most studies that use deep learning techniques focus on movement captured by cameras [3]. However, cameras require setup and calibration, which are often too time-consuming for professional athletes. For applications aimed at recreational athletes, sensors have the additional advantage of lower cost, as inertial sensors are mass-produced and very cost-effective [4]. For these two reasons, we focus our research only on applications based on data from wearable or embedded sensors.

In a recent review of machine learning in sports, we found that a significant portion of studies used subject-specific models [5], highlighting the need for personalisation. This is not surprising, as each individual has their own personal style or technique when performing sports [6]. Few studies compare personalised and general approaches, and the results of those that do are inconsistent: one study concluded that a personalised model greatly outperformed a universal model [7], while another found no statistically significant difference between the two [8]. This suggests that in some applications, feedback can be based on a universal technique, whereas in others, it needs to be personalised to accommodate individual differences. This applies regardless of whether feedback is generated using machine learning. In such applications, it is useful to recognise the person performing the movement so that the correct personalised feedback can be provided. For example, gait rehabilitation is shifting towards more personalised assistance systems [9], a direction that is also worth exploring in sports.

We envision the personalised feedback system as a modular framework composed of several components. The first component is responsible for recognising the individual or even a specific group of individuals with similar personal techniques. The second component analyses the movements, considering the ideal movement patterns tailored to that individual. Additional components involve the sensors used to capture motion and the actuators that deliver feedback. This paper focuses on person identification needed as the first component.

Due to significant differences in personal techniques, recognising a person performing sports based only on signals from inertial measurement sensors or similar devices is relatively easy. Previous studies have achieved high accuracy: one study that recognised people based on their gait [10] reported a maximum accuracy of 97.09%, and another achieved 100% accuracy in recognising a small number of golfers from recorded golf swings [11]. A person can be identified using wearable IMU sensor signals not only during sports activities but also during a variety of other everyday tasks [12,13].

In this paper, we focus on methods with practical applications. The aforementioned studies leave two gaps: they do not explore the minimum data needed to build such models, and they require the model to be trained on data from every subject it recognises. We address these gaps by examining the minimum number of samples required per person and by evaluating few-shot methods that recognise new people without retraining. For practical applications, this is a key consideration. Person recognition is unusual in that the model cannot be trained without the final user's own data, yet users cannot be expected to record their movement many times before the application starts working. Eliminating the need to retrain the model on the user's device when adding a new person therefore significantly enhances the application's usability.

The challenge specific to few-shot person recognition from inertial measurement signals is that the signals vary greatly between individuals. A model trained on data from a subset of people easily learns the signal features needed to recognise these individuals, but these features can be highly specific to each person. The problem arises when we want the model to generalise to people whose data was not present during training.

Furthermore, in sports, data collection is often challenging, as each measurement typically requires the deliberate use of specialised sensors, which must be accurately positioned on the athlete’s body or equipment for each specific movement. We have found that the majority of machine learning research in sports is currently conducted on relatively small datasets [5]. This highlights the need to develop movement recognition methods in sports that remain effective with limited data, extending beyond the current focus on person identification.

The main objective of our paper is to develop methods that can recognise a person based on signals from an inertial measurement sensor using only a few samples per person. The final solution should support open-set classification, meaning it must be capable of recognising and correctly labelling samples from unknown individuals as unknown.

To summarise, the main contribution of this work is as follows:

- A task-specific combination of methods for person recognition, with strong performance in few-shot open-set classification.

The supporting contributions can be summarised as follows:

- A comprehensive analysis of person identification methods using IMU signals across four scenarios—closed-set classification, open-set classification, few-shot classification, and few-shot open-set classification;

- An in-depth investigation into the limitations of standard few-shot learning methods when applied to this task.

The paper is structured as follows. First, we describe the dataset and the data collection process. The Related Work section then introduces the machine learning methods evaluated in this study, followed by a summary of prior research on IMU-based person recognition. The Methods section details the preprocessing procedures, the proposed model, and the evaluation methodology. The Results section presents findings across four scenarios: classification, open-set classification, few-shot classification, and few-shot open-set classification. The proposed model is used in the few-shot classification and few-shot open-set classification scenarios. Finally, we conclude with a discussion of the results and a summary of key findings.

2. Dataset Collection

The experiments presented in this study were conducted using a custom dataset comprising 2850 recordings of dart throws collected from 20 individuals. All participants consented to participate in the study. All recordings were anonymous, and no personal data was collected.

Each recording corresponds to one throw, recorded in real time with a fixed duration of 0.5 s (100 samples), without any temporal normalisation. Throwing darts was selected as the example activity due to the short duration of each motion, which enables efficient data collection. Dart throwing also represents a typical sports-related arm motion, sharing characteristics with other throwing or swinging actions. Therefore, the database is relevant not only for this relatively uncommon sport, but also as a transferable benchmark for broader applications in sports motion analysis. Below, we provide a brief overview of the data collection process.

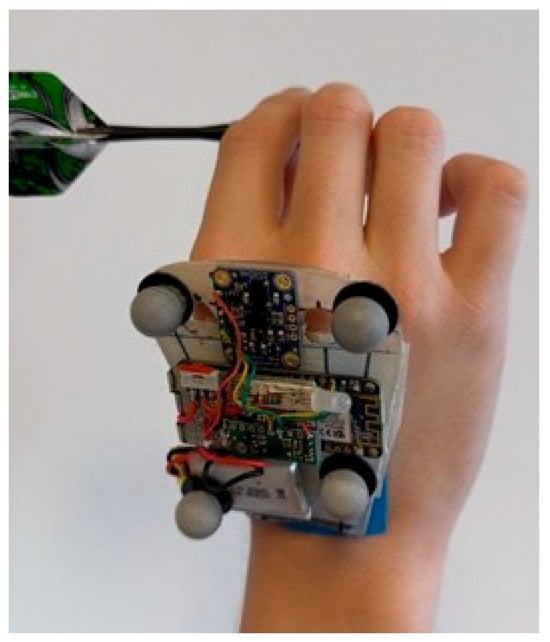

Throws were recorded using a custom-built device attached to the back of the athlete's hand. The device was attached with double-sided adhesive tape, with kinetic tape underneath to protect the athlete's hand. The holes in the device are aligned with the knuckles to reduce variation in sensor placement between measurement sessions. The device consists of an Adafruit Feather M0 microcontroller and a Bosch BNO085 sensor. The sensor can achieve a 100 Hz sampling rate in a configuration where all built-in sensors are turned on. It provides linear acceleration measurements with an accuracy of 0.35 m/s² and estimates the gravity vector with an angular error of 1.5° [14]. Based on our initial results, we concluded that a sampling rate of 100 Hz is sufficient for the identification of individuals. Moreover, the accelerations during the motion are significantly larger than the sensor's accuracy threshold, so the measurement accuracy is adequate as well. In Figure 1, we show the device and its attachment to the hand.

Figure 1.

Sensor device with microcontroller, two sensors, and reflective spheres for motion capture. It is glued to the blue kinetic tape protecting the hand.

We recorded raw data from the accelerometer, magnetometer, and gyroscope, as well as additional signals computed by the sensor's internal sensor fusion algorithms, which capture the device orientation, gravity-compensated 3D acceleration, and the gravity vector. Sensor data was transmitted wirelessly via Wi-Fi. The measurement system collected data at 200 Hz, while the sampling frequency of the sensor was 100 Hz. In addition to the inertial sensor data, the position and orientation of the measurement device were tracked using a Qualisys motion capture system for external reference and sensor validation; these motion capture data are not otherwise used in this paper. The Qualisys data confirm that the IMU measurements are correct: the velocity calculated from the path recorded by Qualisys closely matches the velocity calculated from the acceleration recorded by the IMU.

The experiment was designed to replicate a natural darts-playing experience with minimal changes from standard conditions. A standard-size dartboard (45.1 cm diameter, 173 cm centre height) was used, and players threw from the regular distance of 237 cm. To ensure consistent aiming, we used a concentric circle dartboard variant. The participants threw darts in sets of three. Figure 2 illustrates the hand motion during the throw.

Figure 2.

Hand motion during the throw.

In Table 1, we report the statistics of the dataset used. Participants took part in recording sessions over a span of three months. The number of recordings and recording sessions per participant varied. As we are performing person identification, the number of participants is equal to the number of classes.

Table 1.

Dataset statistics.

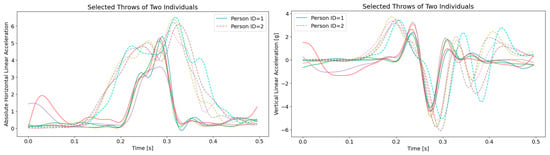

The collected signals show relatively large differences between individuals, which can be discerned by the naked eye. In Figure 3, we show signals for two individuals. The preprocessing steps outlined in Section 4.1 were applied to them.

Figure 3.

Comparison of collected signals between two individuals. Each line corresponds to one throw.

3. Related Work

In this section, we introduce established machine learning methods that we have tested on our data, grouped into subsections by the goal for which they are used. The first tests are performed with classifiers. We demonstrate that, regardless of the model used, good accuracy is achievable when a large dataset is available and the model is trained on all classes. We then introduce the problem of open-set classification, where more sophisticated methods are needed. Lastly, we introduce few-shot and few-shot open-set methods; for few-shot person identification, most of these methods fail.

3.1. Classifiers

Firstly, we tested a few common methods for time series classification. 1D CNNs are widely used for this task [15]. For the first experiment, we used a three-layer one-dimensional convolutional neural network with ReLU activation, batch normalisation, and max pooling, followed by adaptive average pooling, a fully connected layer, and SoftMax activation for classification.
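For readers less familiar with this architecture, the following NumPy sketch shows the forward pass of a three-layer 1D CNN of the kind described above. The channel widths (16, 32, 64), the kernel size of 5, the per-layer order (convolution, batch normalisation, ReLU, max pooling), and the random weights are illustrative assumptions; the sketch trains nothing and is not the exact model used in our experiments.

```python
import numpy as np

rng = np.random.default_rng(0)


def conv1d(x, w, b):
    """Valid 1D convolution (cross-correlation). x: (C_in, T), w: (C_out, C_in, K)."""
    k = w.shape[2]
    t_out = x.shape[1] - k + 1
    windows = np.stack([x[:, i:i + k] for i in range(t_out)], axis=1)  # (C_in, t_out, K)
    return np.einsum("oik,itk->ot", w, windows) + b[:, None]


def batch_norm(x, mean, var, gamma, beta, eps=1e-5):
    """Inference-mode batch normalisation with stored per-channel statistics."""
    return gamma[:, None] * (x - mean[:, None]) / np.sqrt(var[:, None] + eps) + beta[:, None]


def max_pool1d(x, size=2):
    t = x.shape[1] // size * size                      # drop a trailing odd sample
    return x[:, :t].reshape(x.shape[0], -1, size).max(axis=2)


def init_params(channels=(2, 16, 32, 64), kernel=5, n_classes=20):
    """Random placeholder weights; a real model would learn these."""
    layers = [dict(w=rng.normal(0, 0.1, (c_out, c_in, kernel)), b=np.zeros(c_out),
                   mean=np.zeros(c_out), var=np.ones(c_out),
                   gamma=np.ones(c_out), beta=np.zeros(c_out))
              for c_in, c_out in zip(channels[:-1], channels[1:])]
    return layers, rng.normal(0, 0.1, (n_classes, channels[-1])), np.zeros(n_classes)


def cnn1d_forward(x, layers, fc_w, fc_b):
    """x: (2, T) vertical/horizontal acceleration -> class probabilities."""
    for p in layers:
        x = conv1d(x, p["w"], p["b"])
        x = batch_norm(x, p["mean"], p["var"], p["gamma"], p["beta"])
        x = max_pool1d(np.maximum(x, 0.0))             # ReLU, then max pooling
    x = x.mean(axis=1)                                  # adaptive average pooling to length 1
    logits = fc_w @ x + fc_b                            # fully connected layer
    e = np.exp(logits - logits.max())
    return e / e.sum()                                  # SoftMax


layers, fc_w, fc_b = init_params()
probs = cnn1d_forward(rng.normal(size=(2, 100)), layers, fc_w, fc_b)  # one 100-sample throw
```

With 100 input samples and kernel size 5, the temporal length shrinks to 48, 22, and 9 after the three layers before global averaging.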

We tested two non-deep learning methods—K-nearest neighbour [16] and support vector machine [17]. We set the number of neighbours for KNN to K = 5, except where we use only one sample per class, where we used K = 1. A support vector machine was used with a linear kernel.
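The KNN rule, including the switch to K = 1 in the one-sample case, can be sketched in NumPy as below. This is an illustrative implementation rather than the library code we used; it assumes each throw is flattened into one feature vector (e.g., the vertical and horizontal signals concatenated), and the helper names are hypothetical.

```python
import numpy as np


def knn_predict(train_x, train_y, query_x, k=5):
    """Majority vote among the k nearest training samples (Euclidean distance).

    train_x: (N, D) flattened throws, train_y: (N,) non-negative integer labels,
    query_x: (M, D). Ties go to the smallest label (np.bincount + argmax).
    """
    d = np.linalg.norm(query_x[:, None, :] - train_x[None, :, :], axis=2)   # (M, N)
    nearest = np.argsort(d, axis=1)[:, :k]
    return np.array([np.bincount(votes).argmax() for votes in train_y[nearest]])


def choose_k(samples_per_class):
    """K = 5 in general, K = 1 when only one sample per class is available."""
    return 1 if samples_per_class == 1 else 5


# Toy data: two well-separated "players" with five throws each
rng = np.random.default_rng(1)
centres = np.array([[0.0, 0.0], [5.0, 5.0]])
train_x = np.vstack([c + rng.normal(0, 0.3, (5, 2)) for c in centres])
train_y = np.repeat([0, 1], 5)
pred = knn_predict(train_x, train_y, np.array([[0.1, -0.2], [4.8, 5.1]]), k=choose_k(5))
```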

As image classification methods are much more extensively studied than those for time series, it is worth transforming the time series into an image and using 2D CNN models designed for image classification. We tested the Gramian angular field transformation (GAF) [18] along with a three-layer two-dimensional convolutional neural network with ReLU activation, batch normalisation, dropout, and max pooling, followed by flattening, a fully connected hidden layer with batch normalisation and dropout, a final fully connected layer, and SoftMax activation for classification. The Gramian angular field transformation is commonly used and performs well in combination with image classification methods [19,20].
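The Gramian angular field rescales a series to [−1, 1], maps each value to an angle φ = arccos(x), and forms the T × T image cos(φ_i + φ_j) (summation field) or sin(φ_i − φ_j) (difference field). Which variant was used is not stated above, so this illustrative NumPy sketch defaults to the summation field as an assumption and stacks one image channel per acceleration signal.

```python
import numpy as np


def gramian_angular_field(x, method="summation"):
    """Gramian angular field of a 1D series x (length T) -> (T, T) image."""
    x = np.asarray(x, dtype=float)
    x_min, x_max = x.min(), x.max()
    # Min-max rescale to [-1, 1] so that arccos is defined
    x_scaled = 2 * (x - x_min) / (x_max - x_min) - 1 if x_max > x_min else np.zeros_like(x)
    phi = np.arccos(np.clip(x_scaled, -1.0, 1.0))       # polar-coordinate angle
    if method == "summation":
        return np.cos(phi[:, None] + phi[None, :])      # GASF
    return np.sin(phi[:, None] - phi[None, :])          # GADF


def gaf_image(vertical, horizontal):
    """One GAF channel per acceleration signal -> (2, T, T) input for a 2D CNN."""
    return np.stack([gramian_angular_field(vertical), gramian_angular_field(horizontal)])


t = np.linspace(0, 3, 100)
image = gaf_image(np.sin(t), np.cos(t))                 # shape (2, 100, 100)
```

The image grows quadratically with the series length, so a 100-sample throw becomes a 100 × 100 image per signal.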

The last classifier that we tested is based on a liquid neural network [21]. We used a liquid time-constant recurrent neural network with a single ungated liquid layer that processes sequential input through recurrent dynamics using learned time constants, followed by a fully connected output layer for classification.
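The core of a liquid time-constant network is an ODE whose effective time constant depends on the input: dx/dt = −(1/τ + f(x, u))·x + f(x, u)·A. The NumPy sketch below integrates one such layer with the fused semi-implicit Euler update x ← (x + h·f·A) / (1 + h·(1/τ + f)). The layer size, the single sigmoid layer used for f, the six sub-steps per input sample, and the random parameters are assumptions for illustration only, not the configuration we trained.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


class LTCCell:
    """Minimal liquid time-constant (LTC) cell with random placeholder parameters.

    Hidden state x follows dx/dt = -(1/tau + f) * x + f * A, where
    f = sigmoid(W_in u + W_rec x + b) depends on both the input u and the state.
    """

    def __init__(self, n_in, n_hidden, seed=0):
        rng = np.random.default_rng(seed)
        self.w_in = rng.normal(0, 0.5, (n_hidden, n_in))
        self.w_rec = rng.normal(0, 0.5, (n_hidden, n_hidden))
        self.b = np.zeros(n_hidden)
        self.tau = np.ones(n_hidden)             # time constants (learned in practice)
        self.A = rng.normal(0, 1.0, n_hidden)    # bias vector A (learned in practice)

    def step(self, x, u, dt=1.0, unfolds=6):
        h = dt / unfolds
        for _ in range(unfolds):
            f = sigmoid(self.w_in @ u + self.w_rec @ x + self.b)
            # Fused step: the denominator is always >= 1, which keeps x bounded
            x = (x + h * f * self.A) / (1.0 + h * (1.0 / self.tau + f))
        return x


def final_state(cell, seq):
    """Run the cell over seq: (T, n_in) and return the last hidden state."""
    x = np.zeros(cell.w_rec.shape[0])
    for u in seq:
        x = cell.step(x, u)
    return x


cell = LTCCell(n_in=2, n_hidden=16)
w_out = np.random.default_rng(1).normal(0, 0.1, (20, 16))     # fully connected output layer
state = final_state(cell, np.random.default_rng(2).normal(size=(100, 2)))
logits = w_out @ state
```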

3.2. Open-Set Methods

The simplest way to reject an unknown player is to set a threshold on the predicted class probability below which the model rejects the sample. Hendrycks and Gimpel [22] show that the maximum SoftMax probability tends to be lower for samples from unknown classes than for samples from known classes, so it can be used to reject unknown classes. However, they also found that more sophisticated methods perform better in many scenarios. We compare this method with several more sophisticated methods from the literature.
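The SoftMax-threshold baseline fits in a few lines of NumPy. The threshold value and the `UNKNOWN = -1` label below are illustrative assumptions; in practice, the threshold would be chosen on validation data.

```python
import numpy as np

UNKNOWN = -1


def predict_with_rejection(logits, threshold):
    """Arg-max class, or UNKNOWN if the maximum SoftMax probability is below threshold."""
    z = logits - logits.max(axis=1, keepdims=True)     # numerical stability
    probs = np.exp(z) / np.exp(z).sum(axis=1, keepdims=True)
    pred = probs.argmax(axis=1)
    pred[probs.max(axis=1) < threshold] = UNKNOWN
    return pred


logits = np.array([[5.0, 0.0, 0.0],      # confident prediction, kept
                   [0.2, 0.1, 0.0]])     # nearly uniform prediction, rejected
pred = predict_with_rejection(logits, threshold=0.7)
```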

Balasubramanian et al. [23] propose combining deep learned feature extraction with a random forest model. The distribution of votes is examined on a per-class basis and, using extreme value theory, the tail of the vote distribution is modelled with a Weibull distribution. This yields a rejection threshold for each class. We test this method with a single change: we use a 1D CNN instead of a 3D CNN, as our data are time series. The model was trained for 50 epochs to avoid overfitting. Increasing the tail percentage leads to more samples being classified as unknown, as a larger portion of the distribution is treated as outliers. After experimenting with tail percentages in 10% increments, we selected 60%, which yielded the best performance. This choice maximised the product of the rejection rate (correctly rejecting unknowns) and the accuracy on known classes; i.e., we selected the tail percentage with the highest value of score = accuracy × rejection rate.
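The tail-percentage selection described above is a simple grid search. The sketch assumes a hypothetical `evaluate(tail)` callable that rebuilds the 1D CNN + RF + EVT model for a given tail percentage and returns the accuracy on known classes and the rejection rate on unknown samples; it does not implement the Weibull fitting itself, and the toy trade-off curve is invented purely to exercise the function.

```python
import numpy as np


def select_tail_percentage(evaluate, candidates=tuple(i / 10 for i in range(1, 10))):
    """Grid search over tail percentages in 10% steps (0.1 ... 0.9).

    `evaluate(tail)` is a hypothetical callable returning
    (accuracy_on_known_classes, rejection_rate_on_unknown_samples).
    """
    best_tail, best_score = None, -np.inf
    for tail in candidates:
        accuracy, rejection_rate = evaluate(tail)
        score = accuracy * rejection_rate              # score = accuracy x rejection rate
        if score > best_score:
            best_tail, best_score = tail, score
    return best_tail, best_score


def toy_evaluate(tail):
    # Purely illustrative: a larger tail rejects more unknowns but costs accuracy
    return 1.0 - 0.5 * tail ** 2, tail


best_tail, best_score = select_tail_percentage(toy_evaluate)
```

Candidates are built from integers divided by 10 rather than with a floating-point range, so the grid contains exactly nine values.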

Another promising method for improving open-set classification is the open-set loss [24]. It simulates an open-set scenario during training and trains the model with a two-part loss function. The two parts are the Identification-Detection Loss (IDL), which optimises recognition and detection performance under varying thresholds, and Relative Threshold Minimisation (RTM), which reduces the highest similarity scores of non-mated probes. The authors made their code available on GitHub, so we used their loss function to train a model based on a 1D CNN.

3.3. Few-Shot Methods

Few-shot methods [25] are designed to work well when only a small number of samples is available. Typical approaches include adapting a feature extractor trained on one dataset to new tasks through transfer learning, or training a model on multiple tasks with limited data available for each; the latter is known as meta-learning. A very commonly used meta-learning approach is the prototypical network [26]. During training, the model learns representations in the embedding space over many episodes, each presenting a different task composed of varying classes and samples. For inference, a prototype of each class is calculated from the samples in the support set, typically five samples per class, and a query sample is assigned to the class whose prototype is nearest.
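To make the episode computation concrete, here is a NumPy sketch of how a prototypical network scores one episode, following the formulation of [26]: class probabilities are a SoftMax over negative squared Euclidean distances to the prototypes, and the training loss is the mean negative log-probability of the true class. Embeddings are assumed to be precomputed by the feature extractor; gradient-based training over many episodes, which would need an autodiff framework, is omitted.

```python
import numpy as np


def prototypical_episode(support_emb, support_y, query_emb, query_y, n_way):
    """Loss and predictions of a prototypical network for one episode.

    Labels are episode-local indices 0..n_way-1; embeddings are precomputed.
    """
    prototypes = np.stack([support_emb[support_y == k].mean(axis=0) for k in range(n_way)])
    sq_dist = ((query_emb[:, None, :] - prototypes[None, :, :]) ** 2).sum(axis=2)
    logits = -sq_dist                                    # closer prototype -> larger logit
    logits = logits - logits.max(axis=1, keepdims=True)  # numerical stability
    log_probs = logits - np.log(np.exp(logits).sum(axis=1, keepdims=True))
    loss = -log_probs[np.arange(len(query_y)), query_y].mean()
    return loss, log_probs.argmax(axis=1)


support = np.array([[0.0, 0.0], [0.2, 0.1], [10.0, 10.0], [9.8, 10.1]])
support_y = np.array([0, 0, 1, 1])
loss, pred = prototypical_episode(support, support_y,
                                  np.array([[0.5, 0.0], [9.0, 10.0]]), np.array([0, 1]),
                                  n_way=2)
```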

Matching networks [27] are a similar approach; the main difference is that, instead of measuring the distance to a calculated class prototype, they classify a query with an attention-weighted nearest-neighbour scheme over all individual support samples.
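For contrast with the prototypical network, the sketch below classifies queries as matching networks do at their core: a SoftMax over cosine similarities to every support sample acts as attention weights, and the predicted label distribution is the attention-weighted sum of the one-hot support labels. It omits the full context embeddings of [27] and assumes precomputed embeddings; it is an illustrative NumPy sketch, not the implementation we evaluated.

```python
import numpy as np


def matching_network_predict(support_emb, support_y, query_emb, n_way):
    """Attention-weighted nearest-neighbour classification (no full context embeddings)."""
    s = support_emb / np.linalg.norm(support_emb, axis=1, keepdims=True)
    q = query_emb / np.linalg.norm(query_emb, axis=1, keepdims=True)
    cos = q @ s.T                                       # (N_query, N_support)
    att = np.exp(cos - cos.max(axis=1, keepdims=True))
    att /= att.sum(axis=1, keepdims=True)               # SoftMax over support samples
    probs = att @ np.eye(n_way)[support_y]              # weighted sum of one-hot labels
    return probs.argmax(axis=1), probs


support = np.array([[1.0, 0.0], [0.9, 0.1], [0.0, 1.0], [0.1, 0.9]])
support_y = np.array([0, 0, 1, 1])
pred, probs = matching_network_predict(support, support_y,
                                       np.array([[1.0, 0.05], [0.05, 1.0]]), n_way=2)
```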

Both methods were originally developed for image classification tasks, necessitating modifications to the feature extraction process to accommodate our time series data. For this purpose, we employed a 1D convolutional neural network using raw vertical and horizontal acceleration signals and a 2D CNN applied to images generated from these signals using the Gramian Angular Field (GAF) transformation. These two feature extractors were selected based on their superior performance in our initial objective—training classifiers on the full dataset. Additionally, we evaluated transformer-based and liquid neural network (LNN) architectures as alternative feature extractors, hypothesising that suboptimal feature extraction may have contributed to lower performance in prior tests.

3.4. Few-Shot Open-Set

As other few-shot methods failed on our dataset even in the easier closed-set setting, we cannot expect them to perform well in this more challenging setting. For comparison only, we include the results of the prototypical network with a 1D CNN feature extractor. Furthermore, we test another method intended to improve open-set accuracy. The SnaTCHer [28] method promotes robustness by ensuring that both support and query embeddings remain consistent under input transformations, thus enhancing the model's ability to detect unknown classes. The transformations are performed on the embeddings using a transformer model.

3.5. IMU-Based Person Recognition

Multiple studies have successfully recognised a person by classifying movement data collected from inertial measurement sensors. Luo F. et al. [12] built a deep learning model that achieved 91% accuracy on a dataset of various everyday activities, using accelerometer and gyroscope signals. They found that during certain activities, such as walking, the accuracy can be even higher. Retsinas G. et al. [13] developed a deep neural network architecture that uses an accelerometer, a gyroscope, and an additional heart rate sensor to achieve an overall person-recognition accuracy of 88% across various activities. Libin J. et al. [29] found that, using only signals from an accelerometer attached to a golf club, a deep learning model can achieve 97% accuracy in recognising golfers as well as the shape of the golf swing. Andersson R. et al. [30] found that various classical machine learning models can achieve over 80% accuracy when recognising a person from IMU data captured during walking.

We found no papers that address few-shot IMU-based person recognition. This makes existing solutions impractical, as they require extensive data collection as well as model retraining for each new person the system needs to recognise.

4. Methods

4.1. Preprocessing

The main idea behind our preprocessing method is to make the data robust to small inaccuracies in sensor placement. It is impossible to ensure that the sensor device is attached to the hand in exactly the same way in every measurement session. Small differences in sensor orientation could not only introduce noise into the data but also provide unwanted session-specific information; i.e., the model could learn to recognise the player from the placement of the sensor rather than from their personal throwing technique. To address these concerns, we used the following preprocessing method for 3D linear acceleration.

The BNO085 sensor provides linear acceleration, excluding the component of gravity, in its local coordinate system, along with the gravity vector. We can use these data to calculate acceleration in the vertical ($a_v$) and horizontal ($a_h$) directions. This way, we obtain two acceleration signals that are both independent of any unwanted variation in sensor attachment. The two signals are calculated using the following formulas, where $\mathbf{a}$ is the vector of linear acceleration and $\mathbf{g}$ is the vector of gravity:

$$a_v = \frac{\mathbf{a} \cdot \mathbf{g}}{\lVert \mathbf{g} \rVert} \tag{1}$$

$$a_h = \left\lVert \mathbf{a} - a_v \, \frac{\mathbf{g}}{\lVert \mathbf{g} \rVert} \right\rVert \tag{2}$$
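As a minimal NumPy sketch of this decomposition (our own illustration, assuming both vectors arrive as rows of shape (T, 3) in the sensor frame), the vertical signal is the signed projection of the linear acceleration onto the unit gravity direction, and the horizontal signal is the magnitude of the remaining perpendicular component. The check at the end illustrates the property that motivates the method: re-expressing both vectors in a rotated sensor frame leaves the two signals unchanged. The sign convention follows the direction of the reported gravity vector, which is an assumption here.

```python
import numpy as np


def vertical_horizontal(lin_acc, gravity):
    """lin_acc, gravity: (T, 3) in the sensor frame -> (a_v, a_h), each of shape (T,)."""
    g_unit = gravity / np.linalg.norm(gravity, axis=1, keepdims=True)
    a_v = np.sum(lin_acc * g_unit, axis=1)             # signed projection onto gravity
    a_perp = lin_acc - a_v[:, None] * g_unit           # component perpendicular to gravity
    a_h = np.linalg.norm(a_perp, axis=1)               # horizontal magnitude
    return a_v, a_h


# Invariance check: express both vectors in a randomly rotated sensor frame
rng = np.random.default_rng(0)
lin_acc = rng.normal(size=(100, 3))
gravity = np.tile([0.0, 0.0, -9.81], (100, 1)) + rng.normal(0, 0.1, (100, 3))
rotation, _ = np.linalg.qr(rng.normal(size=(3, 3)))    # random orthogonal matrix
a_v, a_h = vertical_horizontal(lin_acc, gravity)
a_v_rot, a_h_rot = vertical_horizontal(lin_acc @ rotation.T, gravity @ rotation.T)
```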

In addition to this preprocessing method, we applied several other techniques to ensure data quality. Some recorded throws contained artefacts, such as repeated samples; these bad recordings were removed from the dataset before use, using a combination of automated and manual checks. Furthermore, a low-pass filter with a cutoff frequency of 20 Hz was applied to reduce measurement noise.
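The filter type and order are not specified above, so the following is only one assumed realisation: a second-order Butterworth low-pass (biquad coefficients from the widely used Audio EQ Cookbook formulas) applied forwards and then backwards for zero phase shift, with the sampling rate assumed to be the 200 Hz at which the recordings were stored. The function names are hypothetical.

```python
import numpy as np


def butter2_lowpass(cutoff_hz, fs_hz):
    """Second-order Butterworth low-pass biquad (Audio EQ Cookbook form, Q = 1/sqrt(2))."""
    w0 = 2 * np.pi * cutoff_hz / fs_hz
    q = 1 / np.sqrt(2)
    alpha = np.sin(w0) / (2 * q)
    c = np.cos(w0)
    b = np.array([(1 - c) / 2, 1 - c, (1 - c) / 2])
    a = np.array([1 + alpha, -2 * c, 1 - alpha])
    return b / a[0], a / a[0]


def _biquad(x, b, a):
    """Direct-form I filtering; past values start at x[0] (steady state for a constant)."""
    y = np.empty_like(x)
    x1 = x2 = y1 = y2 = x[0]
    for n in range(len(x)):
        y[n] = b[0] * x[n] + b[1] * x1 + b[2] * x2 - a[1] * y1 - a[2] * y2
        x2, x1 = x1, x[n]
        y2, y1 = y1, y[n]
    return y


def lowpass_zero_phase(signal, cutoff_hz=20.0, fs_hz=200.0):
    """Filter forwards, then backwards, so the filtered throw is not shifted in time."""
    b, a = butter2_lowpass(cutoff_hz, fs_hz)
    x = np.asarray(signal, dtype=float)
    forward = _biquad(x, b, a)
    return _biquad(forward[::-1].copy(), b, a)[::-1]


t = np.arange(400) / 200.0
noisy = np.sin(2 * np.pi * 2 * t) + 0.5 * np.sin(2 * np.pi * 90 * t)   # 2 Hz motion + 90 Hz noise
smooth = lowpass_zero_phase(noisy)
```

In a SciPy-based pipeline, `scipy.signal.butter` combined with `scipy.signal.filtfilt` would be the usual equivalent.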

4.2. Our Solution

We propose a novel combination of methods found in the literature. The new element of our approach is the simple idea of using a model trained without supervision as the feature extractor for the prototypical network. Unsupervised training here means that the feature extractor is trained without knowledge of the class labels. Such a model has to learn general features of the input signal, rather than only those needed to differentiate between the classes present during training.

We used the TS2Vec [31] model, which is designed to extract universal representations of time series. We trained this model on acceleration signals from 10 people, following the training procedure of the original paper, and then used it as a feature extractor for the prototypical network. For inference, we kept the idea of calculating a prototype of the support samples for each class in the embedding space and assigning the query sample to the class of the closest prototype. The episodic training of the original prototypical network [26] was replaced entirely by the pretrained TS2Vec [31] model. This works well because the differences in recorded signals between players are much more prominent than differences caused by any other factor. As a result, the solution is highly specific to this application.

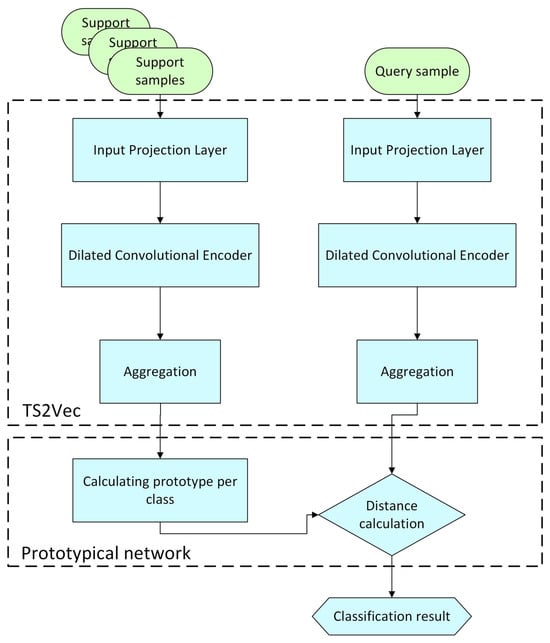

Figure 4.

Model architecture combining the TS2Vec model and a prototypical network.

In Figure 4, we show the full architecture of the solution. At inference, the support samples are passed through the following layers of the feature extractor:

- Input Projection Layer: Each timestamp’s raw input vector is passed through a fully connected layer to obtain latent embeddings.

- Dilated Convolutional Encoder: The projected inputs are fed into a stack of residual 1D dilated convolution blocks, producing contextualised timestamp-level representations.

- Aggregation: Max pooling over time is used to obtain a fixed-length vector representation of the time series.
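The three layers listed above can be sketched in NumPy as follows. The hidden size of 64, output size of 320, four residual blocks with dilations 1, 2, 4, and 8, GELU activations, and random weights are illustrative assumptions; in our solution the weights come from TS2Vec training, and this sketch is not the TS2Vec code itself. Because the final step is max pooling over time, inputs of any length map to an embedding of the same size.

```python
import numpy as np

rng = np.random.default_rng(0)


def gelu(x):
    # tanh approximation of the GELU activation
    return 0.5 * x * (1 + np.tanh(np.sqrt(2 / np.pi) * (x + 0.044715 * x ** 3)))


def dilated_conv(x, w, dilation):
    """Length-preserving dilated 1D convolution. x: (T, C_in), w: (K, C_in, C_out), K odd."""
    k, t = w.shape[0], x.shape[0]
    pad = (k - 1) // 2 * dilation
    xp = np.pad(x, ((pad, pad), (0, 0)))
    return sum(xp[j * dilation: j * dilation + t] @ w[j] for j in range(k))


def _weights(*shape):
    return rng.normal(0, 0.1, shape)


def init_encoder(n_in=2, hidden=64, out_dim=320, depth=4, k=3):
    """Random placeholder weights; in our solution these come from TS2Vec training."""
    return {"proj": _weights(n_in, hidden),
            "blocks": [(_weights(k, hidden, hidden), _weights(k, hidden, hidden))
                       for _ in range(depth)],
            "out": _weights(k, hidden, out_dim)}


def encode(series, enc):
    """series: (T, n_in) -> embedding of size out_dim, independent of T."""
    h = series @ enc["proj"]                             # input projection per timestamp
    for i, (w1, w2) in enumerate(enc["blocks"]):
        d = 2 ** i                                       # dilation doubles per block
        residual = h
        h = dilated_conv(gelu(h), w1, d)
        h = dilated_conv(gelu(h), w2, d) + residual      # residual connection
    h = dilated_conv(gelu(h), enc["out"], 2 ** len(enc["blocks"]))  # to output size
    return h.max(axis=0)                                 # max pooling over time


enc = init_encoder()
embedding = encode(rng.normal(size=(100, 2)), enc)      # one throw: 100 samples x 2 signals
```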

Next, the prototype for each class is obtained by averaging the representation vectors of its support samples element-wise. After the query sample is passed through the same feature extractor, we use a matrix multiplication approach to calculate the Euclidean distance between the class prototypes and the embedding vector of the query sample. The sample is assigned to the class of the closest prototype.
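The prototype averaging and the matrix-multiplication distance can be sketched as follows, using the identity ‖q − p‖² = ‖q‖² − 2 q·p + ‖p‖², which turns all query-to-prototype distances into a single matrix product. The helper names are hypothetical, and embeddings are assumed to be precomputed (here, random 320-dimensional vectors stand in for real ones).

```python
import numpy as np


def class_prototypes(support_emb, support_y, classes):
    """Element-wise mean of each class's support embeddings -> (n_classes, D)."""
    return np.stack([support_emb[support_y == c].mean(axis=0) for c in classes])


def euclidean_distances(queries, prototypes):
    """All query-prototype Euclidean distances with a single matrix product."""
    sq = ((queries ** 2).sum(axis=1)[:, None]
          - 2.0 * queries @ prototypes.T
          + (prototypes ** 2).sum(axis=1)[None, :])
    return np.sqrt(np.maximum(sq, 0.0))        # clip tiny negatives from rounding


def nearest_prototype(queries, prototypes, classes):
    """Predicted class and distance to the closest prototype for each query."""
    d = euclidean_distances(queries, prototypes)
    idx = d.argmin(axis=1)
    return np.asarray(classes)[idx], d[np.arange(len(queries)), idx]


rng = np.random.default_rng(0)
support = rng.normal(size=(25, 320))            # 5-way 5-shot support embeddings
support_y = np.repeat(np.arange(5), 5)
protos = class_prototypes(support, support_y, np.arange(5))
pred, min_dist = nearest_prototype(rng.normal(size=(10, 320)), protos, np.arange(5))
```

The returned nearest-prototype distance is what an open-set rejection threshold would be compared against.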

To adapt our method to the open-set scenario, we use a rejection threshold: samples whose distance from the nearest prototype exceeds the threshold are classified as unknown. The threshold is set to allow a 5% false positive ratio on a validation dataset. For this experiment, we use a subset of classes as the validation dataset; in total, 5 classes are used for training, 5 for validation, and 10 for testing. In addition, we try various thresholds and choose the one that maximises the product of the rejection rate and the accuracy on known classes. The result of this experiment is labelled “best threshold” in the Results section.
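Both threshold rules can be sketched in NumPy. The text does not define which error the 5% false positive ratio refers to; the sketch assumes it means that 5% of validation samples from known classes are wrongly rejected, which makes the threshold the 95th percentile of their nearest-prototype distances. The “best threshold” sweep maximises the accuracy on known classes times the rejection rate of unknowns. All names are hypothetical.

```python
import numpy as np

UNKNOWN = -1


def fpr_threshold(known_val_dist, fpr=0.05):
    """Assumed reading of the 5% rule: reject `fpr` of known validation samples."""
    return float(np.quantile(known_val_dist, 1.0 - fpr))


def reject_far(pred, min_dist, threshold):
    """Label as UNKNOWN every query whose nearest-prototype distance exceeds the threshold."""
    out = np.array(pred, copy=True)
    out[np.asarray(min_dist) > threshold] = UNKNOWN
    return out


def best_threshold(known_pred, known_true, known_dist, unknown_dist, candidates):
    """Pick the threshold maximising accuracy on known classes * rejection rate of unknowns."""
    best_thr, best_score = None, -1.0
    for thr in candidates:
        accuracy = np.mean(reject_far(known_pred, known_dist, thr) == np.asarray(known_true))
        rejection = np.mean(np.asarray(unknown_dist) > thr)
        if accuracy * rejection > best_score:
            best_thr, best_score = thr, accuracy * rejection
    return best_thr, best_score


# Toy numbers only, to exercise the functions
thr, score = best_threshold(known_pred=[0, 1, 2, 0], known_true=[0, 1, 2, 1],
                            known_dist=[0.5, 0.6, 0.4, 0.7], unknown_dist=[1.5, 2.0, 0.55],
                            candidates=[0.3, 0.65, 1.0, 1.6])
```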

4.3. Testing Methodology and Scenarios

Our testing methodology varied by scenario. The first scenario was regular classification. Models were tested using a random split [32] into training and testing subsets, with 70% of the samples used for training. A sample is defined as a recording of a single throw. Classifiers trained on five samples or one sample per class were tested 10 times, each time with a different random set of training samples, and the reported accuracy is the average of the ten runs. This reduces bias arising from the selection of training samples.
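The repeated evaluation with one or five training samples per class can be sketched as below. The `fit_predict(train_x, train_y, test_x)` interface and the nearest-centroid placeholder classifier are hypothetical, and the sketch assumes that all samples not drawn for training form the test set in each run, which the text does not specify.

```python
import numpy as np


def repeated_k_sample_accuracy(x, y, fit_predict, shots, runs=10, seed=0):
    """Mean accuracy over `runs` random draws of `shots` training samples per class.

    fit_predict(train_x, train_y, test_x) -> predicted labels (hypothetical interface).
    Assumption: every sample not drawn for training is used for testing in that run.
    """
    rng = np.random.default_rng(seed)
    accuracies = []
    for _ in range(runs):
        train_idx = np.concatenate([
            rng.choice(np.flatnonzero(y == c), size=shots, replace=False)
            for c in np.unique(y)
        ])
        test_mask = np.ones(len(y), dtype=bool)
        test_mask[train_idx] = False
        pred = fit_predict(x[train_idx], y[train_idx], x[test_mask])
        accuracies.append(np.mean(pred == y[test_mask]))
    return float(np.mean(accuracies))


def nearest_centroid(train_x, train_y, test_x):
    # Placeholder classifier used only to exercise the protocol
    classes = np.unique(train_y)
    centroids = np.stack([train_x[train_y == c].mean(axis=0) for c in classes])
    dist = np.linalg.norm(test_x[:, None, :] - centroids[None, :, :], axis=2)
    return classes[dist.argmin(axis=1)]


rng = np.random.default_rng(3)
x = np.vstack([rng.normal(m, 0.1, (20, 2)) for m in (0.0, 5.0, 10.0)])   # toy, separable
y = np.repeat([0, 1, 2], 20)
acc_one_shot = repeated_k_sample_accuracy(x, y, nearest_centroid, shots=1)
```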

An open-set scenario is when the model must also recognise a sample of an unknown player as unknown. For this scenario, we randomly selected two players as unknown, while the rest of the data was split using a random split.

A few-shot scenario refers to a setting in which only a limited support set is available, in our case consisting of one or five samples per class, and this limited set is then used to classify a new query sample into one of the classes. For few-shot methods, we reserved 10 classes for training the model, and the other 10 for testing. All feature extractors, including the TS2Vec model used in our solution, are therefore trained on data from 10 participants, whose data is not used for testing. We used episodic evaluation. We sample episodes, each consisting of a small number of support examples and a set of query examples drawn from the test subset of classes. Testing was performed in 5-way 5-shot episodes, meaning that the model needs to differentiate between 5 players based on 5 labelled samples from each. In an open-set few-shot scenario, the query examples also include samples from classes that are not present in the support set.
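A possible episode sampler for the 5-way 5-shot protocol, including the open-set variant, is sketched below. The number of query samples per class (5) and the number of unknown classes per episode (2 in the usage line) are assumptions, as they are not stated here; the function name is hypothetical.

```python
import numpy as np


def sample_episode(labels, rng, n_way=5, k_shot=5, n_query=5, n_unknown_classes=0):
    """Draw one N-way K-shot episode from the test classes.

    Returns (support_idx, query_idx, known_classes, unknown_classes). With
    n_unknown_classes > 0, queries also include classes absent from the support set.
    """
    classes = rng.choice(np.unique(labels), size=n_way + n_unknown_classes, replace=False)
    known, unknown = classes[:n_way], classes[n_way:]
    support, query = [], []
    for c in known:
        idx = rng.permutation(np.flatnonzero(labels == c))
        support.append(idx[:k_shot])                     # labelled support samples
        query.append(idx[k_shot:k_shot + n_query])       # disjoint query samples
    for c in unknown:
        query.append(rng.permutation(np.flatnonzero(labels == c))[:n_query])
    return np.concatenate(support), np.concatenate(query), known, unknown


labels = np.repeat(np.arange(10), 20)      # hypothetical: 10 test players, 20 throws each
support_idx, query_idx, known, unknown = sample_episode(
    labels, np.random.default_rng(0), n_unknown_classes=2)
```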

While the established way of testing few-shot models is to test the model on classes which were not present during the training phase, we have also separately tested it on classes that were present during the training to better understand what went wrong with the models that performed poorly on unseen classes. For this test, a random split was used to create training and testing subsets of samples within the 10 training classes.

5. Results

In this section, we provide the results of all conducted experiments. In the first subsection, we test our preprocessing method and conclude that it is appropriate. In the following subsections, we focus on various player classification scenarios, from straightforward classification by training the model using all available data to few-shot open-set scenarios. Our method is designed for the few-shot scenario, and its results are presented in Section 5.3 and Section 5.4. The results in Section 5.2 are also relevant, as they demonstrate that in scenarios with abundant data, person recognition performs well using established machine learning methods. This underlines that our approach is not universally optimal, but is particularly well-suited for few-shot scenarios, which are the most common in real-world applications.

5.1. Appropriateness of Preprocessing Method

We tested our preprocessing method on the base case of user classification, using a 1D CNN model trained on the full training dataset. The results are shown in Table 2.

Table 2.

Classification results for different input signals.

The results show that our preprocessing, which calculates acceleration in the vertical and horizontal directions, has a minimal or negligible impact on accuracy compared with the best combination of raw signals.

Incorporating the gravity vector as an input provides additional information about the sensor's orientation, which can vary slightly with sensor placement across measurement sessions. To account for this potential variability, we apply our preprocessing consistently in all experiments throughout the paper, ensuring that differences in sensor positioning do not introduce unintended bias.

5.2. Classification Results

In Table 3, we present results from tests performed with regular classifiers. Here, we consider a scenario in which a large amount of training data is available and the model can be retrained each time a new individual is added to the recognition system. Each model was trained with all classes present. Models were tested when trained on 70% of all samples, on five samples per class, and on only one sample per class. The 2D CNN was trained on GAF images of signals (1) and (2); the other models were trained on the signals directly.

Table 3.

Classification results.

As we can see, good results are not difficult to achieve with the full training dataset: all of the deep learning techniques tested achieve over 90% accuracy. As expected, deep learning techniques outperform traditional machine learning when enough data is available but fall behind when the number of training samples is limited. More complex models, such as the LNN and 2D CNN, are more sensitive to a lack of training data. Even so, just five samples per class were enough to train a 1D CNN to recognise players with over 90% accuracy. However, this solution is not ideal: the model needs to be trained on data from all players, so new players cannot simply be added, and it does not reject unknown players.

Open-Set Classification

In this section, we present results where classifiers are trained on 70% of the samples from the players selected for the training dataset but where, unlike in the previous section, the goal is now to reject any unknown player in addition to recognising known players. Two players are randomly selected as unknown.

The results in Table 4 show that combining deep learned feature extraction with a random forest model [23] achieves high accuracy in an open-set scenario. As this method outperforms the 1D CNN with a rejection threshold in both rejection rate and accuracy on known classes, we consider it appropriate. Experimenting with different thresholds could potentially improve the rejection of unknown samples in the 1D CNN + threshold method at the expense of accuracy on known samples, but as that method was outperformed in both metrics, we do not believe this is worth pursuing further. Similarly, in the 1D CNN + RF + EVT method, different balances between the rejection rate for unknown samples and the accuracy on known samples can be set by choosing different tail percentages of the vote distribution. We maximised the product of both metrics to demonstrate the method's effectiveness, but the appropriate balance depends on the intended application.

Table 4.

Open-set classification results.

The method with open-set loss was not successful on our data.

5.3. Few-Shot Methods

In a few-shot scenario, only a small number of samples is available for each class to be recognised. This scenario is important because collecting large amounts of labelled data for every new player is impractical; in real-world applications, new individuals must be added to the recognition system without gathering extensive training data for them. As the results in Table 5 show, the most commonly used few-shot methods fail at the task of recognising players.
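Few-shot methods are usually trained and evaluated in episodes. Each episode draws N classes and K labelled support samples per class to define the task, and further query samples from the same classes are then classified. The minimal NumPy sketch below implements such an episode sampler. The function name and the `n_way`, `k_shot` and `n_query` values are illustrative assumptions.

```python
import numpy as np


def sample_episode(labels, n_way, k_shot, n_query, rng):
    """Draw one N-way K-shot episode from an array of global class labels.

    Returns support/query sample indices, their episode-local labels (0..n_way-1)
    and the global class id behind each local label.
    """
    classes = rng.choice(np.unique(labels), size=n_way, replace=False)
    support_idx, support_y, query_idx, query_y = [], [], [], []
    for local_label, cls in enumerate(classes):
        idx = rng.permutation(np.flatnonzero(labels == cls))
        if len(idx) < k_shot + n_query:
            raise ValueError(f"class {cls} has only {len(idx)} samples")
        # Support and query samples of a class are disjoint.
        support_idx.extend(idx[:k_shot])
        support_y.extend([local_label] * k_shot)
        query_idx.extend(idx[k_shot:k_shot + n_query])
        query_y.extend([local_label] * n_query)
    return (np.array(support_idx), np.array(support_y),
            np.array(query_idx), np.array(query_y), classes)


# Synthetic labels for 20 players with 30 throws each (numbers chosen for illustration);
# one 5-way 5-shot episode with 10 queries per class.
rng = np.random.default_rng(0)
labels = np.repeat(np.arange(20), 30)
s_idx, s_y, q_idx, q_y, episode_classes = sample_episode(labels, 5, 5, 10, rng)
```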

Table 5.

Results for few-shot classification.

The comparison between common few-shot methods shows that prototypical networks significantly outperform matching networks. However, accuracy on classes unseen during training is too low for any practical application.
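Both approaches classify a query by comparing its embedding with the embeddings of the support samples. They differ in how that comparison is made. The NumPy sketch below shows the two inference rules on precomputed embeddings, following the original formulations [26,27] in simplified form. It omits the episodic training of the feature extractor and the full-context embeddings of matching networks. The function names are hypothetical.

```python
import numpy as np


def prototypical_predict(support, support_y, query, n_way):
    """Prototypical networks: nearest class mean under squared Euclidean distance."""
    prototypes = np.stack([support[support_y == c].mean(axis=0) for c in range(n_way)])
    dists = ((query[:, None, :] - prototypes[None, :, :]) ** 2).sum(axis=-1)
    return dists.argmin(axis=1)


def matching_predict(support, support_y, query, n_way):
    """Simplified matching networks: softmax attention over cosine similarities
    to individual support samples, summed per class (context embeddings omitted)."""
    s = support / np.linalg.norm(support, axis=1, keepdims=True)
    q = query / np.linalg.norm(query, axis=1, keepdims=True)
    sims = q @ s.T
    att = np.exp(sims - sims.max(axis=1, keepdims=True))  # numerically stable softmax
    att /= att.sum(axis=1, keepdims=True)
    class_probs = att @ np.eye(n_way)[support_y]           # sum attention per class
    return class_probs.argmax(axis=1)


support = np.array([[1.0, 0.0], [1.0, 0.2], [0.0, 1.0], [0.2, 1.0]])
support_y = np.array([0, 0, 1, 1])
query = np.array([[1.0, 0.1], [0.1, 1.0]])
print(prototypical_predict(support, support_y, query, 2).tolist())  # [0, 1]
print(matching_predict(support, support_y, query, 2).tolist())      # [0, 1]
```

In both cases, the classification stage has no trainable parameters of its own. The quality of the result therefore depends on the feature extractor.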

Our solution (bold) significantly outperforms all other methods in terms of classification accuracy. With five samples per class, it exceeds 90% accuracy, which is sufficient for practical applications. Its one-shot accuracy (77.8%) is also strong compared with the other tested methods.

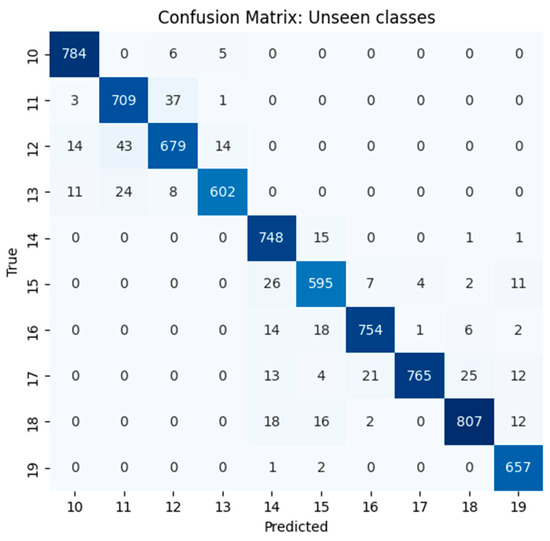

Figure 5 presents the confusion matrix, which shows that our approach performs well across all classes. Although some players with similar techniques are confused with one another more frequently, misclassifications remain rare. The confusion matrix was obtained by aggregating query predictions across all episodes, with episode-local labels mapped back to global dataset labels.

Figure 5.

Confusion matrix for TS2Vec + prototypical network model. Please note that the confusion matrix was collected in a later rerun of the experiment and the accuracy varies slightly from the one reported.
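Each episode relabels its classes as 0 to N-1. Predictions must therefore be mapped back to global player labels before they can be accumulated into a single confusion matrix. The minimal NumPy sketch below handles this bookkeeping. It assumes that the global class id behind each episode-local label is recorded when the episode is drawn, and the function name is hypothetical.

```python
import numpy as np


def accumulate_confusion(conf_mat, episode_classes, true_local, pred_local):
    """Add one episode's query predictions to a global confusion matrix in place.

    episode_classes[i] is the global class id of episode-local label i;
    rows index the true global class, columns the predicted global class.
    """
    true_global = episode_classes[true_local]
    pred_global = episode_classes[pred_local]
    np.add.at(conf_mat, (true_global, pred_global), 1)  # handles repeated index pairs
    return conf_mat


conf_mat = np.zeros((4, 4), dtype=int)
# Episode 1 used global classes 3 and 1; episode 2 used global classes 0 and 3.
accumulate_confusion(conf_mat, np.array([3, 1]), np.array([0, 0, 1]), np.array([0, 1, 1]))
accumulate_confusion(conf_mat, np.array([0, 3]), np.array([0, 1]), np.array([0, 1]))
```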

With the other methods, there is a large discrepancy in accuracy between seen and unseen classes. This suggests that the primary limitation lies in the trainable component of the model (the feature extractor) rather than in the fixed metric-based classification stage (e.g., prototypical or matching networks). The only exception was the liquid neural network, which performed similarly on seen and unseen classes, although its accuracy was relatively low.

To test this hypothesis, we analysed the embeddings obtained from the feature extractor. The results were similar for all feature extractors except the liquid neural network. For that model, embeddings from classes seen during training were also poorly separated by class, and its inter-class and intra-class distances differed by less than 10% between seen and unseen classes.

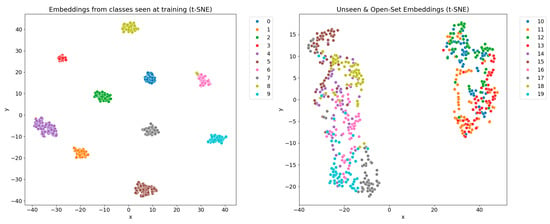

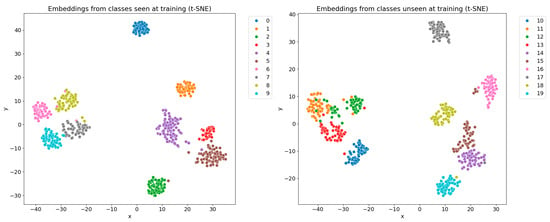

Here we provide a detailed analysis only for the 1D CNN feature extractor, as it outperformed the others and the conclusions drawn from the analysis are the same regardless of which feature extractor is used. In Figure 6, we use the dimensionality reduction technique t-SNE [33] to project the 64-dimensional embeddings onto two dimensions for plotting.

Figure 6.

Differences in embedding quality between classes seen and unseen during training are clearly visible for the 1D CNN feature extractor.

As the plot shows, embeddings of unseen classes cannot be separated visually, whereas embeddings from seen classes form clearly distinguishable clusters. To confirm that this is not an artefact of t-SNE dimensionality reduction, Table 6 reports the average distance between the centre of a class and the embeddings of the samples of that class, labelled “Intra-class distance”. The second metric, labelled “Inter-class distance”, is the average distance from the centre of each class to the centre of the closest other class. We also calculated p-values to test whether the differences in embedding quality between seen and unseen classes are statistically significant. The results show that groups of samples from classes seen during training are significantly more compact, whereas the differences in inter-class distances are not statistically significant. We conclude that the feature extractor does not generalise well to unseen classes and that their embeddings are of significantly lower quality.
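The two metrics in Table 6 follow directly from the definitions above. The minimal NumPy sketch below computes them under the assumption of Euclidean distance. The statistical test behind the reported p-values is not specified in this section. As one possible option, the sketch adds a two-sided permutation test on the difference of means, for example between the intra-class distances of seen and unseen classes. This test is an illustrative choice, not necessarily the one used for Table 6, and all function names are hypothetical.

```python
import numpy as np


def class_distances(emb, labels):
    """Per-class intra- and inter-class distances (Euclidean distance assumed).

    intra[i]: mean distance from the centre of class i to its own samples.
    inter[i]: distance from the centre of class i to the nearest other centre.
    """
    classes = np.unique(labels)
    centres = np.stack([emb[labels == c].mean(axis=0) for c in classes])
    intra = np.array([np.linalg.norm(emb[labels == c] - centres[i], axis=1).mean()
                      for i, c in enumerate(classes)])
    centre_dists = np.linalg.norm(centres[:, None, :] - centres[None, :, :], axis=-1)
    np.fill_diagonal(centre_dists, np.inf)  # ignore the distance of a centre to itself
    inter = centre_dists.min(axis=1)
    return intra, inter


def permutation_pvalue(a, b, n_perm=10000, seed=0):
    """Two-sided permutation test on the difference of means of samples a and b."""
    rng = np.random.default_rng(seed)
    pooled = np.concatenate([a, b])
    observed = abs(a.mean() - b.mean())
    count = 0
    for _ in range(n_perm):
        perm = rng.permutation(pooled)
        count += int(abs(perm[:len(a)].mean() - perm[len(a):].mean()) >= observed)
    return (count + 1) / (n_perm + 1)


emb = np.array([[0.0, 1.0], [0.0, -1.0], [10.0, 1.0], [10.0, -1.0]])
lab = np.array([0, 0, 1, 1])
intra, inter = class_distances(emb, lab)
print(intra.tolist(), inter.tolist())  # [1.0, 1.0] [10.0, 10.0]
# e.g. permutation_pvalue(intra_seen, intra_unseen) for the compactness comparison
```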

Table 6.

Embedding quality for 1D CNN feature extractor.

We tested multiple commonly used feature extractors and consistently observed poor embedding quality for unseen classes, regardless of the specific architecture. One exception was the liquid neural network, where embeddings for both seen and unseen classes were comparably poor. Our findings suggest that meta-training may not be well suited to the characteristics of our dataset.

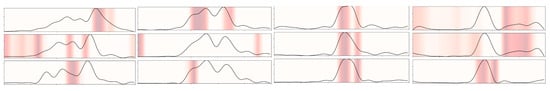

Furthermore, to determine why generalisation is poor, we visualised the attention of the model using Grad-CAM attention visualisation [34]. The results vary greatly by class (person). For some classes, the model focuses on the expected part of the signal, the main part of the throw, where acceleration is greatest. For other classes, attention may be on a minor detail or even inconsistent between samples.
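For a 1D CNN, Grad-CAM weights each channel of a chosen convolutional layer by the time-averaged gradient of the class score. It then keeps the positive part of the weighted channel sum. The sketch below implements this plain Grad-CAM rule in NumPy, not the guided variant of [34]. It assumes that the layer activations, and the gradients of the target class score with respect to them, have already been obtained from the deep learning framework's automatic differentiation. The function name is hypothetical.

```python
import numpy as np


def grad_cam_1d(activations, gradients, input_len):
    """Grad-CAM heat map for one sample of a 1D CNN.

    activations: (channels, time) feature maps of the chosen convolutional layer.
    gradients:   (channels, time) gradient of the target class score with respect
                 to those feature maps (assumed to come from autograd).
    input_len:   length of the input signal to which the map is stretched.
    """
    weights = gradients.mean(axis=1)              # channel weights: time-averaged gradients
    cam = np.maximum(weights @ activations, 0.0)  # ReLU of the weighted channel sum
    # Linearly stretch the (possibly downsampled) map to the input signal length.
    src = np.linspace(0.0, 1.0, cam.shape[0])
    dst = np.linspace(0.0, 1.0, input_len)
    cam = np.interp(dst, src, cam)
    peak = cam.max()
    return cam / peak if peak > 0 else cam        # normalise to [0, 1]


acts = np.array([[0.0, 1.0, 2.0, 1.0], [1.0, 0.0, 0.0, 0.0]])
grads = np.array([[1.0, 1.0, 1.0, 1.0], [-1.0, -1.0, -1.0, -1.0]])
print(grad_cam_1d(acts, grads, input_len=4).tolist())  # [0.0, 0.5, 1.0, 0.5]
```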

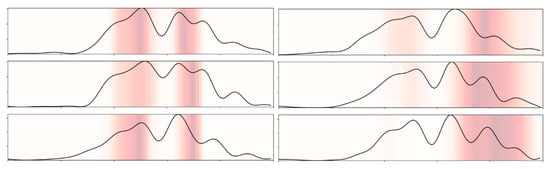

The four examples in Figure 7 illustrate this. Classes 14 and 0 show no consistent attention between samples, even though class 0 is among the seen classes and is classified well by the model. Class 7 has very consistent attention, exactly on the expected part of the throw. For class 12, attention falls on one of two regions depending on the sample: either the main part of the throw or its beginning and end. The model appears to learn specific features that are relevant for identifying seen classes but not unseen ones. We observe no consistent difference in attention between seen and unseen classes in general. However, the attention for a given class changes when that class is moved from the seen to the unseen set, as explored in Figure 8. We chose the example in which the change is most obvious.

Figure 7.

Attention for classes in order from left to right: 0 (seen), 14 (unseen), 7 (seen), and 12 (unseen).

Figure 8.

Attention for samples from class 18, when it was unseen during training (left) versus when it was seen during training (right).

Attention for class 18 was relatively consistent across the 10 randomly selected samples that we analysed. When class 18 was not in the training subset, the model focused on the main part of the throw. When the class was present during training, however, the attention was entirely on the last part of the throw. That part has a distinctive shape that is not present for any other player. This illustrates how the model uses some class-specific features only if it was trained on data from that specific class.

Our solution, the model presented in Section 4.2, directly addresses this issue, which affects most common few-shot learning methods. Its feature extractor is trained without knowing which class each sample belongs to. It therefore learns general features of the signal rather than only those needed to separate the classes seen during training.

However, this method has some limitations. As shown in Figure 9, the embeddings of each class are grouped together for both seen and unseen classes. While this confirms that the feature extractor is appropriate for our task, the extractor was trained without access to class labels. Consequently, embeddings of similar samples are grouped together regardless of what we want to classify. This behaviour is ideal for our application (person recognition), since inter-individual differences in the signal are more pronounced than those caused by other factors. However, it also limits transferability to other tasks. For instance, suppose we attempted to classify errors in throwing technique, which manifest as subtle signal variations. The prototype computed from the support samples would then be influenced more by the specific individuals in the support set than by the actual differences in technique.

Figure 9.

Embeddings from an unsupervised trained feature extractor are of much higher quality for unseen classes compared to Figure 6.

A slight difference in quality between seen and unseen embeddings remains, as visible in Figure 9 and reflected in 3% lower accuracy on unseen classes.

Table 7 shows that the embeddings of classes seen during training remain significantly more compact, indicating that the accuracy gap between seen and unseen classes is not due to chance. Classifiers trained on data from all players still outperform this method, as shown in Section 5.2. The advantage of this method lies in its practicality: the model does not need to be retrained for new players.

Table 7.

Embedding quality for unsupervised feature extractor.

5.4. Open-Set Few-Shot Scenario

This scenario differs from the closed-set few-shot setting in that a query sample may belong to a class not represented in the support set, in which case it should be identified as unknown. Table 8 presents the results achieved in the open-set few-shot scenario. As expected, methods based on a feature extractor trained with supervision fail, just as they did in the closed-set scenario. Our method (bold) performs well, with accuracy on known classes above 80% while keeping the rejection rate above 99%.

Table 8.

Few-shot open-set classification results.

Setting the correct threshold remains an issue. Because the threshold was calibrated on a separate subset of classes, it was not optimal for the test classes. The distance distributions differ enough that a false positive rate of 5% on the validation dataset (corresponding to a 95% rejection rate) yielded a rejection rate of 99.6% on the test dataset. The model therefore rejects more samples than it was calibrated to reject. We believe that a larger calibration dataset could mitigate this problem.
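The calibration step can be sketched as follows. A query is rejected when its distance to the nearest prototype exceeds a threshold. The threshold is chosen on validation classes so that only a target fraction of unknown validation queries (the false positive rate) would still be accepted. This minimal NumPy sketch assumes Euclidean distance to the prototypes and uses hypothetical function names. It does not account for the shift between validation and test distance distributions described above.

```python
import numpy as np


def calibrate_threshold(unknown_min_dists, target_fpr=0.05):
    """Distance threshold below which a fraction target_fpr of unknown
    validation queries lie (and would therefore be wrongly accepted).

    unknown_min_dists: distance of each unknown validation query to its
                       nearest prototype.
    """
    return float(np.quantile(unknown_min_dists, target_fpr))


def open_set_predict(query, prototypes, threshold, unknown=-1):
    """Nearest-prototype prediction; queries farther than `threshold` are rejected."""
    dists = np.linalg.norm(query[:, None, :] - prototypes[None, :, :], axis=-1)
    nearest = dists.argmin(axis=1)
    return np.where(dists.min(axis=1) > threshold, unknown, nearest)


val_unknown = np.arange(1.0, 101.0)           # toy distances of unknown validation queries
threshold = calibrate_threshold(val_unknown)  # 5% fall below it, i.e. ~95% rejected
prototypes = np.array([[0.0, 0.0], [10.0, 0.0]])
queries = np.array([[1.0, 0.0], [9.0, 0.0], [5.0, 20.0]])
print(open_set_predict(queries, prototypes, threshold).tolist())  # [0, 1, -1]
```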

6. Discussion

Person recognition based on signals from inertial measurement sensors recorded during sports is readily solvable with established methods, as long as the classifier is trained on data from all people to be recognised. For that purpose, even five samples per person are enough. The same applies to identifying an unknown person as unknown: established methods for open-set classification can be used effectively.

When the task is restricted to few-shot learning, the established few-shot methods fail. This is because the feature extractor learns to recognise players in the training set based on dataset-specific patterns. These patterns do not generalise well, mainly due to the significant variability in IMU signal shapes between individuals. The feature extractor learns a small set of features that are sufficient to recognise the players present during training but not new players. To force the model to learn more general features, the feature extractor must be trained without class labels.

We proposed a solution that leverages the substantial variability between individuals by using an unsupervised model to extract embeddings for a prototypical network. This approach significantly improves accuracy in the few-shot setting, achieving over 90% accuracy with only five samples per class. When evaluated in an open-set scenario, the method maintained high accuracy while also demonstrating a strong rejection rate for unknown players.

Nevertheless, the model still performs better on samples from the classes it was trained on. This, together with the results of the conventional classifiers, suggests that a model trained on data from all target players will generally outperform this method.

As a result of unsupervised training, embeddings of similar samples are naturally clustered in the feature space. This property is advantageous for classification tasks where the primary goal is to distinguish between samples exhibiting substantial inter-class signal differences, such as in person recognition. However, this same characteristic limits the method’s transferability to other tasks where inter-class variability may be more subtle or structured differently.

In summary, our approach demonstrates that an unsupervised feature extractor combined with prototypical classification is a promising direction for few-shot person recognition based on sensor signals, but its success is limited to applications where the variation in recorded signals between classes is greater than variation from any other source.

7. Conclusions

Our experiments show that person recognition and open-set detection are both feasible with established techniques when sufficient training data is available. Standard few-shot methods showed poor generalisation in our setup, highlighting the need for specialised techniques for highly variable IMU data.

The method described in this paper leverages an unsupervised trained feature extractor and prototypical networks to achieve accuracy over 90% in a few-shot scenario. Its main advantage lies in its practicality. It is well-suited to biofeedback assistance applications that rely on person recognition to recommend personalised movement styles. In this context, the model can be pretrained on data from any 10 athletes. The end user only needs to contribute a single dart throw recording to be recognised with nearly 80% accuracy. As more data is collected, the recognition accuracy improves, surpassing 90% when using five samples.
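In the enrolment workflow described above, the pretrained encoder stays fixed, so no retraining is needed. Each new recording only updates the stored prototype of its athlete. A minimal sketch of this workflow follows. The class and method names are hypothetical, and the embeddings are assumed to come from the frozen unsupervised feature extractor.

```python
import numpy as np


class PrototypeRegistry:
    """Hypothetical store of one running-mean prototype per enrolled athlete."""

    def __init__(self):
        self._sums = {}
        self._counts = {}

    def enrol(self, athlete_id, embedding):
        """Add one recording's embedding; the athlete's prototype becomes the new mean."""
        e = np.asarray(embedding, dtype=float)
        self._sums[athlete_id] = self._sums.get(athlete_id, 0.0) + e
        self._counts[athlete_id] = self._counts.get(athlete_id, 0) + 1

    def prototype(self, athlete_id):
        return self._sums[athlete_id] / self._counts[athlete_id]

    def identify(self, embedding):
        """Return the enrolled athlete whose prototype is nearest (squared Euclidean)."""
        ids = list(self._sums)
        protos = np.stack([self.prototype(i) for i in ids])
        d = ((protos - np.asarray(embedding, dtype=float)) ** 2).sum(axis=1)
        return ids[int(d.argmin())]


registry = PrototypeRegistry()
registry.enrol("athlete_a", [0.0, 0.0])    # a single throw is enough to enrol (one-shot)
registry.enrol("athlete_b", [10.0, 10.0])
registry.enrol("athlete_a", [2.0, 2.0])    # later throws refine the prototype
print(registry.prototype("athlete_a").tolist(), registry.identify([3.0, 3.0]))
# [1.0, 1.0] athlete_a
```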

Its high accuracy and low data requirements make this method suitable for real-time assistance systems. Most current assistance systems in sports use powerful computing devices (phones, PCs, cloud) to generate feedback [5]. On such devices, the model is fast enough to run in real time. The architecture would need to be adapted for deployment on microcontrollers, and the feasibility of running such systems on wearable devices remains uncertain due to additional constraints. For personalised feedback to function effectively, the system must not only identify the individual but also incorporate a dedicated feedback profile for each user. This requirement may make deployment on microcontrollers impossible. Consequently, we argue that future efforts should prioritise further improving accuracy and adaptation to other sports rather than microcontroller-based deployment.

While promising, the method is limited by its reliance on clear inter-class signal differences. Future work should explore domain adaptation strategies or hybrid models that combine unsupervised and supervised components for improved generalisation. The idea of using an unsupervised feature extractor for a prototypical network is also worth exploring further. For example, the current TS2Vec model could be replaced with one of the many other methods for unsupervised time series representation learning, such as Temporal Neighbourhood Coding [35] or Temporal and Contextual Contrasting [36]. Finally, future work could validate the method on additional datasets to strengthen the generalisability of the findings beyond the dataset of 20 participants.

Author Contributions

Conceptualization, V.V., A.K., R.B., L.J., H.W., A.U., Z.Z., and S.T.; methodology, V.V., R.B., L.J., H.W., and A.U.; software, V.V.; validation, A.U.; formal analysis, V.V., R.B., L.J., and H.W.; investigation, V.V., R.B., L.J., and H.W.; resources, V.V., A.K., and A.U.; data curation, V.V. and A.U.; writing—original draft preparation, V.V.; writing—review and editing, V.V., A.K., R.B., L.J., H.W., Z.Z., and A.U.; visualisation, V.V.; supervision, A.K., R.B., L.J., H.W., and A.U.; project administration, R.B. and A.U.; funding acquisition, S.T. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded in part by the Slovenian Research Agency within the research program ICT4QoL—Information and Communications Technologies for Quality of Life, grant number P2-0246, and in part by the Fundamental Research Funds for the Central Universities of China, grant number 2024ZKPYZN01.

Institutional Review Board Statement

All measurements were non-invasive, collected during regular leisure activity, and no sensitive or personally identifiable data were collected. The Rules for the Processing of Applications by the Committee of the University of Ljubljana for Ethics in Research that Includes Work with People (KERL UL) state that if “the research does not go beyond normal everyday (occupational, educational, leisure and other) activities of participants or requires only minimal participation of those involved in the research, and it does not involve the collection of identified personal data”, it does not require ethical assessment. Our study is therefore exempt under these rules. We confirm that all procedures complied with the ethical standards of the 2013 revision of the Declaration of Helsinki and the Code of Ethics of the University of Ljubljana, including respect for informed consent, privacy, and voluntary participation.

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study. Participants were informed of the study’s purpose and their right to withdraw at any time without consequence.

Data Availability Statement

The dataset, in Python pickle format, is available at https://github.com/vvec1/Few-shot-identification-of-individuals-in-sports-the-case-of-darts (accessed on 1 October 2025). For the original uncut measurements, contact the first author. The code is available at https://github.com/vvec1/Few-shot-identification-of-individuals-in-sports-the-case-of-darts (accessed on 1 October 2025).

Acknowledgments

During the preparation of this manuscript/study, the authors used Grammarly version 1.2.201.1767 and ChatGPT versions 4 and 5 for the purposes of grammar correction. The authors have reviewed and edited the output and take full responsibility for the content of this publication. Figure 2 was initially generated using AI and then edited.

Conflicts of Interest

The authors declare no conflicts of interest.

References

1. Sim, J. Global Sports Industry Revenues to Reach US$260bn by 2033. SportsPro. 2024. Available online: https://www.sportspro.com/news/global-sports-industry-revenue-projection-2033-two-circles/ (accessed on 1 May 2025).

2. Kos, A.; Umek, A. Biomechanical Biofeedback Systems and Applications; Springer: Cham, Switzerland, 2018; ISBN 978-3-319-91348-3.

3. Naik, B.T.; Hashmi, M.F.; Bokde, N.D. A Comprehensive Review of Computer Vision in Sports: Open Issues, Future Trends and Research Directions. Appl. Sci. 2022, 12, 4429.

4. Lee, J.; Wheeler, K.; James, D.A. Wearable Sensors in Sport: A Practical Guide to Usage and Implementation; Springer Briefs in Applied Sciences and Technology; Springer: Singapore, 2019; ISBN 9789811337765.

5. Vec, V.; Tomažič, S.; Kos, A.; Umek, A. Trends in Real-Time Artificial Intelligence Methods in Sports: A Systematic Review. J. Big Data 2024, 11, 148.

6. Schöllhorn, W.; Bauer, H. Identifying Individual Movement Styles in High Performance Sports by Means of Self-Organizing Kohonen Maps; Universitätsverlag Konstanz: Konstanz, Germany, 1998.

7. Sharma, A.; Arora, J.; Khan, P.; Satapathy, S.; Agarwal, S.; Sengupta, S.; Mridha, S.; Ganguly, N. CommBox: Utilizing Sensors for Real-Time Cricket Shot Identification and Commentary Generation. In Proceedings of the 2017 9th International Conference on Communication Systems and Networks (COMSNETS), Bengaluru, India, 4–8 January 2017; pp. 427–428.

8. Schneider, O.S.; MacLean, K.E.; Altun, K.; Karuei, I.; Wu, M.M.A. Real-Time Gait Classification for Persuasive Smartphone Apps: Structuring the Literature and Pushing the Limits. In Proceedings of the 2013 International Conference on Intelligent User Interfaces, Santa Monica, CA, USA, 19–22 March 2013; ACM: Santa Monica, CA, USA, 2013; pp. 161–172.

9. Wall, C.; McMeekin, P.; Walker, R.; Hetherington, V.; Graham, L.; Godfrey, A. Sonification for Personalised Gait Intervention. Sensors 2024, 24, 65.

10. Yao, Z.-M.; Zhou, X.; Lin, E.-D.; Xu, S.; Sun, Y.-N. A Novel Biometrie Recognition System Based on Ground Reaction Force Measurements of Continuous Gait. In Proceedings of the 3rd International Conference on Human System Interaction, Rzeszow, Poland, 13–15 May 2010; pp. 452–458.

11. Zhang, Z.; Zhang, Y.; Kos, A.; Umek, A. Strain Gage Sensor Based Golfer Identification Using Machine Learning Algorithms. Procedia Comput. Sci. 2018, 129, 135–140.

12. Luo, F.; Khan, S.; Huang, Y.; Wu, K. Activity-Based Person Identification Using Multimodal Wearable Sensor Data. IEEE Internet Things J. 2023, 10, 1711–1723.

13. Retsinas, G.; Filntisis, P.P.; Efthymiou, N.; Theodosis, E.; Zlatintsi, A.; Maragos, P. Person Identification Using Deep Convolutional Neural Networks on Short-Term Signals from Wearable Sensors. In Proceedings of the ICASSP 2020—2020 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Barcelona, Spain, 4–8 May 2020; pp. 3657–3661.

14. Datasheet—LCSC Electronics. Available online: https://www.lcsc.com/datasheet/C5189642.pdf (accessed on 24 September 2025).

15. Kiranyaz, S.; Avci, O.; Abdeljaber, O.; Ince, T.; Gabbouj, M.; Inman, D.J. 1D Convolutional Neural Networks and Applications: A Survey. Mech. Syst. Signal Process. 2021, 151, 107398.

16. Cover, T.; Hart, P. Nearest Neighbor Pattern Classification. IEEE Trans. Inf. Theory 1967, 13, 21–27.

17. Cortes, C.; Vapnik, V. Support-Vector Networks. Mach. Learn. 1995, 20, 273–297.

18. Wang, Z.; Oates, T. Imaging Time-Series to Improve Classification and Imputation. In Proceedings of the 24th International Conference on Artificial Intelligence, Buenos Aires, Argentina, 25–31 July 2015.

19. Mariani, M.; Appiah, P.; Tweneboah, O. Fusion of Recurrence Plots and Gramian Angular Fields with Bayesian Optimization for Enhanced Time-Series Classification. Axioms 2025, 14, 528.

20. Ni, J.; Zhao, Z.; Shen, C.; Tong, H.; Song, D.; Cheng, W.; Luo, D.; Chen, H. Harnessing Vision Models for Time Series Analysis: A Survey. In Proceedings of the Thirty-Fourth International Joint Conference on Artificial Intelligence, Montreal, QC, Canada, 16–22 August 2025.

21. Hasani, R.; Lechner, M.; Amini, A.; Rus, D.; Grosu, R. Liquid Time-Constant Networks. Proc. AAAI Conf. Artif. Intell. 2021, 35, 7657–7666.

22. Hendrycks, D.; Gimpel, K. A Baseline for Detecting Misclassified and Out-of-Distribution Examples in Neural Networks. arXiv 2018, arXiv:1610.02136.

23. Balasubramanian, L.; Kruber, F.; Botsch, M.; Deng, K. Open-Set Recognition Based on the Combination of Deep Learning and Ensemble Method for Detecting Unknown Traffic Scenarios. arXiv 2021, arXiv:2105.07635.

24. Su, Y.; Kim, M.; Liu, F.; Jain, A.; Liu, X. Open-Set Biometrics: Beyond Good Closed-Set Models. arXiv 2024, arXiv:2407.16133.

25. Wang, Y.; Yao, Q.; Kwok, J.T.; Ni, L.M. Generalizing from a Few Examples: A Survey on Few-Shot Learning. ACM Comput. Surv. 2020, 53, 1–34.

26. Snell, J.; Swersky, K.; Zemel, R.S. Prototypical Networks for Few-Shot Learning. In Proceedings of the 31st International Conference on Neural Information Processing Systems, Long Beach, CA, USA, 4–9 December 2017.

27. Vinyals, O.; Blundell, C.; Lillicrap, T.; Kavukcuoglu, K.; Wierstra, D. Matching Networks for One Shot Learning. In Proceedings of the 30th International Conference on Neural Information Processing Systems, Barcelona, Spain, 5–10 December 2016.

28. Jeong, M.; Choi, S.; Kim, C. Few-Shot Open-Set Recognition by Transformation Consistency. In Proceedings of the 2021 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, TN, USA, 20–25 June 2021.

29. Golf Swing Classification with Multiple Deep Convolutional Neural Networks. Available online: https://journals.sagepub.com/doi/epub/10.1177/1550147718802186 (accessed on 19 September 2025).

30. Andersson, R.; Bermejo-García, J.; Agujetas, R.; Cronhjort, M.; Chilo, J. Smartphone IMU Sensors for Human Identification through Hip Joint Angle Analysis. Sensors 2024, 24, 4769.

31. Yue, Z.; Wang, Y.; Duan, J.; Yang, T.; Huang, C.; Tong, Y.; Xu, B. TS2Vec: Towards Universal Representation of Time Series. Available online: https://arxiv.org/abs/2106.10466v4 (accessed on 20 June 2025).

32. Train_Test_Split. Available online: https://scikit-learn.org/stable/modules/generated/sklearn.model_selection.train_test_split.html (accessed on 11 June 2025).

33. TSNE. Available online: https://scikit-learn.org/stable/modules/generated/sklearn.manifold.TSNE.html (accessed on 18 June 2025).

34. Jiao, L.; Gao, W.; Bie, R.; Umek, A.; Kos, A. Golf Guided Grad-CAM: Attention Visualization within Golf Swings via Guided Gradient-Based Class Activation Mapping. Multimed. Tools Appl. 2024, 83, 38481–38503.

35. Tonekaboni, S.; Eytan, D.; Goldenberg, A. Unsupervised Representation Learning for Time Series with Temporal Neighborhood Coding. arXiv 2021, arXiv:2106.00750.

36. Eldele, E.; Ragab, M.; Chen, Z.; Wu, M.; Kwoh, C.K.; Li, X.; Guan, C. Time-Series Representation Learning via Temporal and Contextual Contrasting. In Proceedings of the Thirtieth International Joint Conference on Artificial Intelligence, Montreal, QC, Canada, 19–27 August 2021.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).