Factors Affecting Human-Generated AI Collaboration: Trust and Perceived Usefulness as Mediators

Abstract

1. Introduction

2. Theoretical Background

2.1. Generative AI

2.2. Collaboration with the AI

2.3. Trust and Its Antecedents

2.3.1. Calculative-Based Trust Antecedents

2.3.2. Cognition-Based Trust Antecedents

2.3.3. Knowledge-Based Trust Antecedents

2.3.4. Social Influence-Based Trust Antecedents

2.4. Perceived Usefulness

3. Research Model and Hypothesis Development

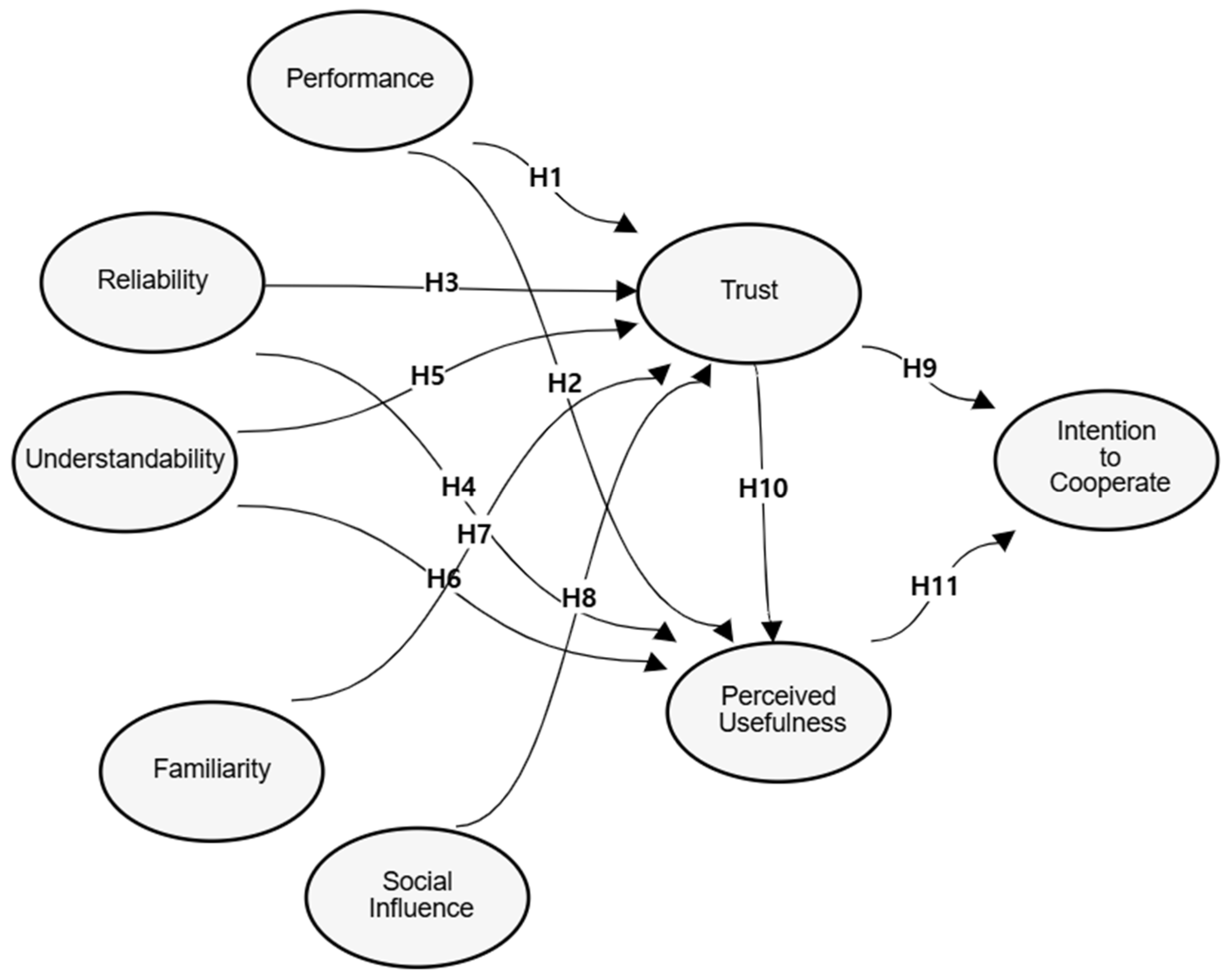

3.1. Research Model

3.2. Hypothesis

4. Research Methodology

4.1. Data Collection

4.2. Measurement Development

5. Results

5.1. Reliability and Validity of the Measurement Items

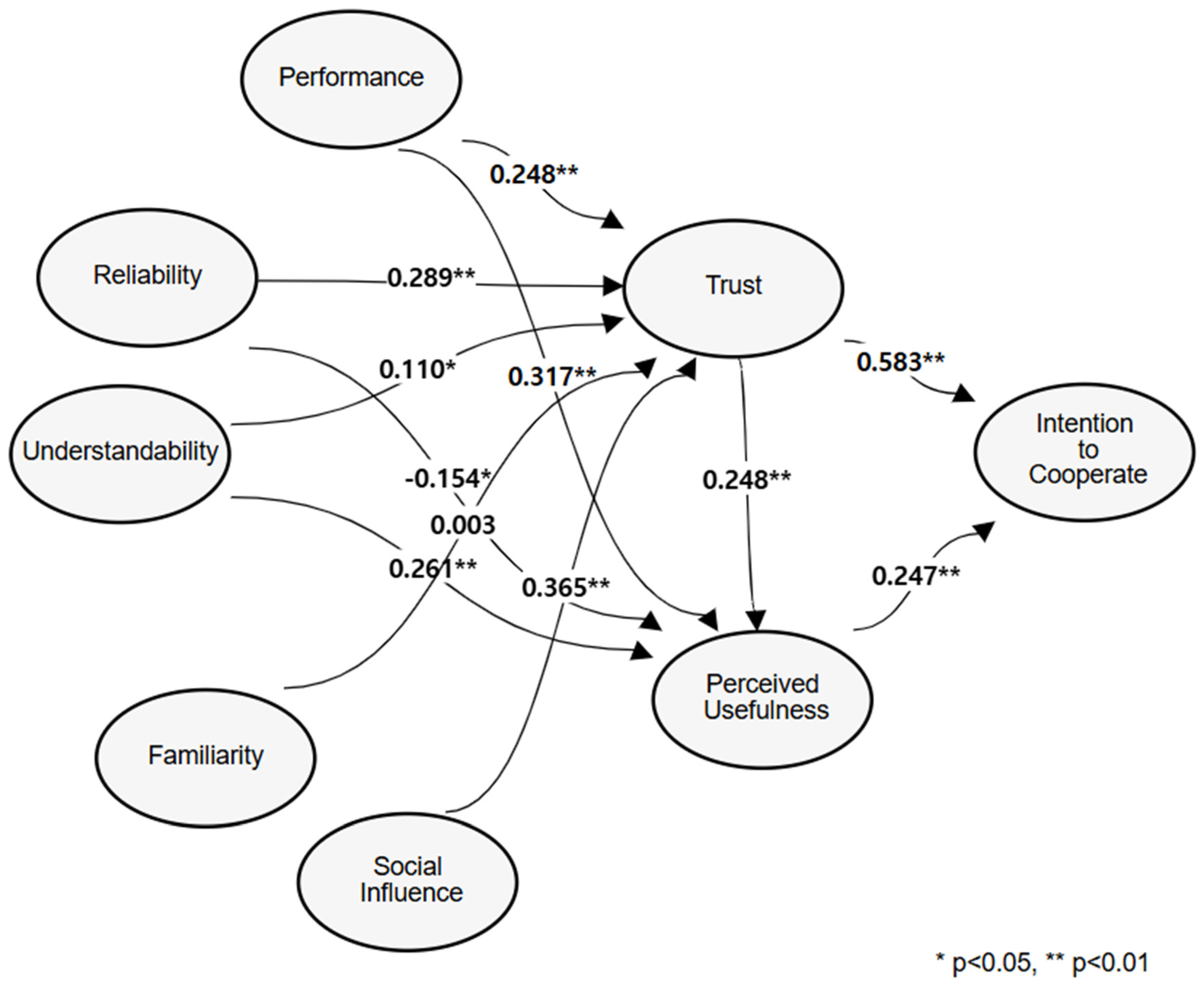

5.2. Hypothesis Testing

6. Discussion

6.1. Contributions and Implications

6.2. Limitations and Recommendations for Future Research

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Appendix A

- a1. Using generative AI improves work efficiency.
- a2. Using generative AI enhances productivity.
- a3. Using generative AI allows for quicker task completion.
- a4. Generative AI is useful for work.
- a5. The results of generative AI fit my requirements and objectives well.
- a6. Generative AI produces better results than humans.
- a7. Generative AI makes fewer errors and is more accurate than humans.
- a8. The outputs from generative AI are as excellent as those of a competent person.
- a9. Generative AI performs reliably.
- a10. Generative AI produces consistent outputs under the same conditions.
- a11. I can rely on generative AI to work properly.
- a12. Generative AI analyzes problems consistently.
- a13. The outputs generated by generative AI are clearly structured.
- a14. The results from generative AI are simple and easy to understand.
- a15. If I lack understanding of the results, I can get sufficient explanations through generative AI.
- a16. I have no difficulty understanding the outputs of generative AI.
- a17. I am familiar with using generative AI.
- a18. I am familiar with the work methods using generative AI.
- a19. I am familiar with working with generative AI.
- a20. People I consider wise prefer using generative AI.
- a21. My colleagues think it is desirable to use generative AI.
- a22. Many people believe that generative AI should be used in work whenever possible.
- a23. The general social atmosphere is positive towards using generative AI.
- a24. The outputs of generative AI are trustworthy.
- a25. As a partner for collaboration, generative AI is reliable.
- a26. Overall, I trust generative AI.
- a27. I will actively use generative AI in my work.
- a28. I will use generative AI in my work rather than working alone.
- a29. I will collaborate with generative AI whenever possible.


| Measure | Value | Frequency | Percentage (%) |
|---|---|---|---|
| Gender | Male | 153 | 50.2 |
| | Female | 152 | 49.8 |
| | Total | 305 | 100.0 |
| Age | 25–29 | 48 | 15.7 |
| | 30–39 | 67 | 22.0 |
| | 45–49 | 119 | 39.0 |
| | More than 50 | 71 | 23.3 |
| | Total | 305 | 100.0 |
| AI usage type | Language learning model | 237 | 77.7 |
| | Image generation program | 30 | 9.8 |
| | In-app AI | 38 | 12.5 |
| | Total | 305 | 100.0 |
| Profession | Student (including graduate students) | 31 | 10.2 |
| | Office worker | 95 | 31.1 |
| | Expert | 101 | 33.1 |
| | Self-employed | 27 | 8.9 |
| | Other | 51 | 16.7 |
| | Total | 305 | 100.0 |
| Paid usage experience | Yes | 107 | 35.1 |
| | No | 198 | 64.9 |
| | Total | 305 | 100.0 |

| Construct | No. of Items | Cronbach's Alpha | Composite Construct Reliability (CCR) | Average Variance Extracted (AVE) |
|---|---|---|---|---|
| Performance | 4 | 0.874 | 0.914 | 0.726 |
| Reliability | 4 | 0.877 | 0.916 | 0.731 |
| Understandability | 4 | 0.852 | 0.900 | 0.692 |
| Familiarity | 3 | 0.914 | 0.946 | 0.852 |
| Social Influence | 4 | 0.909 | 0.936 | 0.786 |
| Trust | 3 | 0.901 | 0.938 | 0.835 |
| Perceived Usefulness | 4 | 0.944 | 0.960 | 0.856 |
| Intention to Cooperate | 3 | 0.962 | 0.975 | 0.929 |
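The reliability figures above can be screened against commonly used rules of thumb. The following is a minimal sketch, assuming cutoffs of 0.70 for Cronbach's alpha and composite reliability and 0.50 for AVE; these thresholds are conventional values assumed for illustration and are not quoted from this article. The dictionary `MEASURES` and the function `failing_constructs` are hypothetical names introduced here.

```python
# Values transcribed from the reliability table:
# construct -> (Cronbach's alpha, composite reliability, AVE)
MEASURES = {
    "Performance": (0.874, 0.914, 0.726),
    "Reliability": (0.877, 0.916, 0.731),
    "Understandability": (0.852, 0.900, 0.692),
    "Familiarity": (0.914, 0.946, 0.852),
    "Social Influence": (0.909, 0.936, 0.786),
    "Trust": (0.901, 0.938, 0.835),
    "Perceived Usefulness": (0.944, 0.960, 0.856),
    "Intention to Cooperate": (0.962, 0.975, 0.929),
}

# Conventional cutoffs (an assumption of this sketch, not taken from the article).
ALPHA_MIN = 0.70
CR_MIN = 0.70
AVE_MIN = 0.50


def failing_constructs(measures):
    """Return the names of constructs that miss any of the three cutoffs."""
    return [
        name
        for name, (alpha, cr, ave) in measures.items()
        if alpha < ALPHA_MIN or cr < CR_MIN or ave < AVE_MIN
    ]


# An empty list means every construct clears all three assumed cutoffs.
print(failing_constructs(MEASURES))
```

Under these assumed cutoffs, an empty result would indicate that no construct raises a reliability or convergent validity concern.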

| Construct | PF | REL | US | FAM | SI | TR | PU | ITC |
|---|---|---|---|---|---|---|---|---|
| PF | (0.85) | | | | | | | |
| REL | 0.76 | (0.86) | | | | | | |
| US | 0.71 | 0.75 | (0.83) | | | | | |
| FAM | 0.43 | 0.49 | 0.52 | (0.92) | | | | |
| SI | 0.62 | 0.58 | 0.60 | 0.66 | (0.89) | | | |
| TR | 0.77 | 0.77 | 0.72 | 0.55 | 0.75 | (0.91) | | |
| PU | 0.58 | 0.48 | 0.55 | 0.39 | 0.52 | 0.56 | (0.93) | |
| ITC | 0.62 | 0.53 | 0.58 | 0.65 | 0.74 | 0.72 | 0.58 | (0.96) |
| Mean | 4.83 | 4.83 | 5.07 | 4.64 | 4.87 | 4.82 | 5.67 | 5.10 |
| SD | 1.26 | 1.23 | 1.08 | 1.43 | 1.23 | 1.27 | 1.16 | 1.34 |
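The parenthesized diagonal entries in the correlation matrix agree, to within rounding, with the square roots of the AVE values in the reliability table (for example, the square root of 0.726 is about 0.85 for PF). This suggests the matrix is laid out for a Fornell-Larcker style comparison, in which each inter-construct correlation should be smaller than the square root of the AVE of both constructs involved. The sketch below illustrates that comparison; the abbreviations are read as PF (Performance), REL (Reliability), US (Understandability), FAM (Familiarity), SI (Social Influence), TR (Trust), PU (Perceived Usefulness), and ITC (Intention to Cooperate), and all variable and function names are hypothetical.

```python
import math

CONSTRUCTS = ["PF", "REL", "US", "FAM", "SI", "TR", "PU", "ITC"]

# AVE values transcribed from the reliability table.
AVE = {
    "PF": 0.726, "REL": 0.731, "US": 0.692, "FAM": 0.852,
    "SI": 0.786, "TR": 0.835, "PU": 0.856, "ITC": 0.929,
}

# Lower triangle of the correlation matrix, one row per construct,
# with columns in CONSTRUCTS order (diagonal omitted).
LOWER = {
    "REL": [0.76],
    "US": [0.71, 0.75],
    "FAM": [0.43, 0.49, 0.52],
    "SI": [0.62, 0.58, 0.60, 0.66],
    "TR": [0.77, 0.77, 0.72, 0.55, 0.75],
    "PU": [0.58, 0.48, 0.55, 0.39, 0.52, 0.56],
    "ITC": [0.62, 0.53, 0.58, 0.65, 0.74, 0.72, 0.58],
}


def correlations():
    """Yield (row, column, r) for every off-diagonal pair."""
    for row, values in LOWER.items():
        for col, r in zip(CONSTRUCTS, values):
            yield row, col, r


def fornell_larcker_violations():
    """Return pairs whose correlation is not below both sqrt(AVE) values."""
    root = {name: math.sqrt(ave) for name, ave in AVE.items()}
    return [
        (a, b, r)
        for a, b, r in correlations()
        if r >= min(root[a], root[b])
    ]


# An empty list means no pair violates the criterion.
print(fornell_larcker_violations())
```

Because the inputs are the two-decimal values printed in the tables, this is only an approximate re-check and not a substitute for the analysis on the raw data.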

| Hypothesis | Path | Sign | Path Coefficient | t-Value | p-Value |
|---|---|---|---|---|---|
| H1. | Performance -> Trust | (+) | 0.248 | 3.055 | 0.001 |
| H2. | Performance -> Perceived Usefulness | (+) | 0.317 | 3.909 | 0.000 |
| H3. | Reliability -> Trust | (+) | 0.289 | 3.263 | 0.001 |
| H4. | Reliability -> Perceived Usefulness | (+) | −0.154 | −1.668 | 0.048 |
| H5. | Understandability -> Trust | (+) | 0.110 | 1.953 | 0.026 |
| H6. | Understandability -> Perceived Usefulness | (+) | 0.261 | 2.884 | 0.002 |
| H7. | Familiarity -> Trust | (+) | 0.003 | 0.068 | 0.473 |
| H8. | Social Influence -> Trust | (+) | 0.365 | 7.226 | 0.000 |
| H9. | Trust -> Intention to Cooperate | (+) | 0.583 | 10.266 | 0.000 |
| H10. | Trust -> Perceived Usefulness | (+) | 0.248 | 2.978 | 0.002 |
| H11. | Perceived Usefulness -> Intention to Cooperate | (+) | 0.247 | 4.047 | 0.000 |
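Since trust and perceived usefulness are positioned as mediators, the path coefficients above imply indirect effects of each antecedent on the intention to cooperate. The sketch below illustrates the product-of-coefficients arithmetic only: it multiplies coefficients along each directed path and sums over paths. It is not the authors' mediation analysis, it yields point estimates without any significance test, and it includes every listed path regardless of its p-value (for example, Familiarity -> Trust). The names `PATHS` and `effect` are hypothetical.

```python
# Path coefficients transcribed from the hypothesis-testing table.
# Keys are (source, target) using the abbreviations of the correlation matrix.
PATHS = {
    ("PF", "TR"): 0.248,
    ("PF", "PU"): 0.317,
    ("REL", "TR"): 0.289,
    ("REL", "PU"): -0.154,
    ("US", "TR"): 0.110,
    ("US", "PU"): 0.261,
    ("FAM", "TR"): 0.003,
    ("SI", "TR"): 0.365,
    ("TR", "ITC"): 0.583,
    ("TR", "PU"): 0.248,
    ("PU", "ITC"): 0.247,
}


def effect(source, target):
    """Sum, over all directed paths from source to target, of the
    product of the coefficients along the path.

    The model graph is acyclic, so the recursion terminates.
    """
    if source == target:
        return 1.0
    return sum(
        coef * effect(mid, target)
        for (start, mid), coef in PATHS.items()
        if start == source
    )


# Implied effect of each antecedent on Intention to Cooperate (ITC).
for antecedent in ["PF", "REL", "US", "FAM", "SI"]:
    print(antecedent, round(effect(antecedent, "ITC"), 3))
```

For instance, the implied effect of Trust on Intention to Cooperate combines the direct path (0.583) with the path through Perceived Usefulness (0.248 × 0.247), and every antecedent's effect is indirect because the table lists no direct antecedent-to-intention paths.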


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Chae, H.-S.; Yoon, C. Factors Affecting Human-Generated AI Collaboration: Trust and Perceived Usefulness as Mediators. Information 2025, 16, 856. https://doi.org/10.3390/info16100856
