Abstract

Image captioning aims at generating meaningful verbal descriptions of a digital image. This domain is rapidly growing due to the enormous increase in available computational resources. The most advanced methods are, however, resource-demanding. In our paper, we return to the encoder–decoder deep-learning model and investigate how replacing its components with newer equivalents improves overall effectiveness. The primary motivation of our study is to obtain the highest possible level of improvement of classic methods, which are applicable in less computational environments where most advanced models are too heavy to be efficiently applied. We investigate image feature extractors, recurrent neural networks, word embedding models, and word generation layers and discuss how each component influences the captioning model’s overall performance. Our experiments are performed on the MS COCO 2014 dataset. As a result of our research, replacing components improves the quality of generating image captions. The results will help design efficient models with optimal combinations of their components.

1. Introduction

Image captioning is an essential branch of modern artificial intelligence (AI). It aims to produce a verbal description of a digital image, usually as a single phrase. It combines computer vision and natural language processing, two significant fields in AI. Image captioning presents challenges such as understanding context, recognizing details, and generating coherent and relevant descriptions. It helps make visual content accessible to visually impaired people. By providing descriptive captions for images, AI can help them understand and interact with digital content more effectively. It can also automatically scan and describe large volumes of visual content, assisting in identifying inappropriate or harmful content on social media and other platforms. It improves user experience in various applications like photo organizing and searching. Automatically generated captions can help users find specific images without manually tagging every photo. Image captioning can enhance online catalogs, improve customer experience on retail sites, and provide better insights from visual data like social media trends and consumer behavior. In digitizing cultural and historical archives, AI-powered image captioning can help catalog and describe large volumes of visual data, making them more searchable and understandable.

As a result, image captioning is experiencing intense development nowadays. It is driven by developing deep-learning methods that are made possible by increasing computing power. More and more new models are continuously appearing. Novel methods are resource-consuming; they require powerful computing servers to learn successfully and perform efficient inference processes. A drastic increase in complexity often obtains a slight increase in efficiency. Most recent transformer models are computationally intensive, requiring significant hardware and energy resources for training and inference. The high computational cost is due to the model size and the complex attention mechanisms that scale quadratically with the input length. This complexity necessitates extensive hardware, such as TPUs or multi-GPU setups, to efficiently manage the memory and processing requirements. Transformer models also demand large and diverse datasets for effective training. This extensive data requirement ensures that the model can learn a wide array of patterns and nuances in the data, which happens in the case of image captioning. However, acquiring and annotating such large datasets is costly and needs additional computational efforts. Furthermore, the quality of the training data directly impacts the model’s performance, as any biases or errors in the data can be amplified in the model’s outputs. On the other hand, older models, much less complex, are readily available. They consist of multiple components that their more advanced and efficient substitutes may replace. Finally, such a substitution could improve the overall efficiency of models.

Our paper thoroughly investigates the encoder–decoder architecture for image captioning, which consists of an encoder that converts the image into a feature vector and a decoder that decodes it as a single phrase describing the image’s content. In our experiments, we make use of the classic approach, where the encoder employs the backbone model based on the convolutional neural network (CNN) that extracts features from the image. The decoder, in turn, is the recurrent neural network (RNN), which produces, word-by-word, an output sentence describing the image content. In addition, the captioning model includes supplementary components. The first is word embedding, which converts the one-hot-encoded word (vocabulary index) into a fixed-length vector that makes the RNN input. The second is the adaptation component, which reduces and generalizes the image feature vector. Finally, the third one is responsible for merging the output of the RNN with the image feature vector and processing it by fully connected layers to obtain the prediction of the next word.

We focus our experiments on choosing particular components of the encoder–decoder captioning model and their influence on its efficiency. We aim to enhance the well-known encoder–decoder image captioning architecture by manipulating parameters in the model. By experimenting with CNN extractors, we captured more nuanced visual features, improving the model’s understanding of complex scenes. Furthermore, integrating more sophisticated language models (Glove, FastText) can significantly enhance the generated captions’ linguistic quality and semantic coherence. Our approach involves systematically tuning hyperparameters and expanding the various image and text feature extractors. These improvements collectively contribute to higher performance without main changes in the leading architecture, demonstrating the potential of our approach to bust the already existing image captioning model.

2. Deep-Learning Captioning Models

The history of image captioning, a field that merges computer vision and natural language processing, has evolved significantly over the years. The methods combine text and visual data and belong to the multimodal machine-learning approaches [1,2,3]. Image captioning has been a field of active research and development, showcasing the rapid advancements in computer vision and natural language processing. It continues to evolve, with ongoing research focusing on improving accuracy, context awareness, and the ability to generate more detailed and nuanced captions. The history of captioning can be divided into several periods. Initial attempts at image captioning were rudimentary and primarily rule-based. These systems relied on manually crafted rules for image recognition and text generation. Due to some uncertainty level, among other techniques, the fuzzy logic approach has been used [4,5]. In [6,7,8], the authors applied fixed templates with blank slots filled with various objects, descriptive tokens, and situations extracted from images by the object detection systems. On the other hand, in [9], the authors used already existing, predefined sentences. They created space of meaning from image features and compared images with sentences to find the most appropriate ones. Despite semantic and grammatical correctness, captions from traditional methods often differ from the way a human describes the image content. This period was characterized by limited success due to the time constraints of the technology available.

In early 2000, researchers began to train deep-learning models to recognize image patterns and generate descriptions [10]. However, these models still needed to improve their capabilities, often requiring hand-crafted features and extensive domain knowledge. The breakthrough came in the 2010s with the application of deep learning, where convolutional neural networks (CNN) [11] and recurrent neural networks (RNN) were combined. CNN focused on extracting features from images, while RNNs effectively handled sequential data, such as phrases consisting of consecutive words. Developing end-to-end trainable models in the mid-2010s, where a single model could be simultaneously trained on image processing and caption generation tasks, was a significant milestone [12]. It was enabled by large datasets like MS COCO (Microsoft Common Objects in Context) 2014, which provided extensive image and caption pairs for training.

Scenes in the MS COCO 2014 dataset are complex and consist of animals and people in their everyday activities. Sentences are correct semantically and grammatically represent different aspects of an image. On the contrary, BreakingNews [13] and GoodNews [14] contain novel captions, therefore being taken from news articles. Conceptual Captions [15,16] are collections of 3.3 million and 12 million automatically collected from the internet, with weakly associated descriptions. According to the quantity, they are useful for pretraining, but the source is not guaranteed according to the URL source. Therefore, during our experiments, we utilized the MS COCO 2014 dataset. High-quality captions and contextual richness make it well-suited for training image captioning models. It also works as a standard benchmark of image captioning research, which helps in the consistent evaluation and advancement.

Evaluation of image captioning methods is still a task that requires simulating human judgment. Due to that, it is still a complicated and comprehensive task. In pioneering work [17], the authors suggested that neural networks can interpret deep semantics of images and word embedding. They proved that combined image features extracted by CNN and word embedding could hold semantic meaning. Inspired by the success of machine translation, ref. [12] proposed using an encoder–decoder framework in image captioning, which has recently become dominant in the image captioning field. The paper [18] by Karpathy et al. introduced an architecture similar to human perception. The method generates novel descriptions over image regions, with R-CNN (regional convolutional neural networks) [19] for extracting image features and the RNN to generate consecutive words of caption iteratively. The model uses the multimodal embedding space to find the parts of the sentence that best fit the image regions. Differently from other proposed methods [12,17,20], where a global image vector was used, Karpathy focused on image regions, and a separate caption described each region. Finally, a spatial map generates the target word for image regions. These image captioning approaches, which focus on generating captions for each region of an image, are called dense image captioning [21,22,23].

The encoder–decoder architecture [12,24,25,26] considers the task of image captioning as the sequence-to-sequence problem. Using the image feature extractor, the encoder encodes the image to the fixed-length vector. The most widely used are CNN networks such as VGG [27,28], ResNet [28,29,30], or Inception [31,32]. The decoder represents a language model in image captioning and generates natural language descriptions for the output. Most popular approaches used RNN [12,33,34,35].

There are two main approaches to controlling how the encoder and decoder are linked together [36]. The first, called injection, applies the image feature vector to initialize the state vector of the RNN [20,37,38,39]. The second approach is the merge architecture, where the image feature vector is combined with the output of the RNN. In our experiments, we use the latter approach, which proved to be more efficient [40,41,42,43].

The introduction of attention mechanisms has significantly improved the performance of the encoder–decoder image captioning models by enabling them to focus on different parts of an image when generating each word in the caption. Recently, transformer-based models have revolutionized image captioning. Vision transformers (ViTs) [44] leverage self-attention mechanisms to process image patches, achieving competitive performance compared to CNN-based models. Method [45] employs an entire transformer architecture for both the encoder and decoder, eliminating convolutional layers. In contrast, traditional CNN+transformer models typically use a CNN (e.g., ResNet) as the encoder to extract spatial features from images before passing them to a transformer decoder. The model’s encoder is initialized with weights from the VIT [44] model. In the [46], the authors used X-linear attention block, with bilinear pooling to capture second-order interactions between elements during attention weights computation. It is achieved by simultaneously exploiting both spatial and channel-wise attention distributions. Furthermore, the X-linear attention block is integrated into the image encoder and sentence decoder to facilitate multimodal reasoning.

This shift towards transformers has been further bolstered by large-scale vision-language pretraining paradigms, exemplified by models such as CLIP [47] and ALIGN [48], which use extensive datasets to pretrain on paired image–text data. Model [49] employs a shared multi-layer transformer network for fine-tuning encoding and decoding for image captioning tasks. The model is pretrained using two unsupervised vision-language prediction tasks: bidirectional masked language prediction and sequence-to-sequence (seq2seq) masked language prediction. This dual approach enables the model to learn a more universal contextualized vision-language representation. By unifying the pretraining procedure, the model eliminates the need for separate models for encoding and decoding.

Moreover, transformer-based captioning models like OSCAR [50] and VinVL [51] encode visual features and textual context, producing contextually enriched captions. Model [51] improved the transformer-based VL fusion model OSCAR by feeding it with a new object detection model and proved that visual features matter mostly in VL models. The latest model incorporates the ResNeXt-152 C4 architecture, which is tailored for generating object-centric representations and detecting objects and their attributes, thanks to the attribute branch during fine-tuning.

Recent advancements in 3D representation learning have also led to significant innovations across 3D image captioning [52]. Model [53] enhances 3D point-cloud classification and segmentation. Ref. [54] addresses the aggregation and transfer of local features in point clouds, achieving robust performance in shape classification and segmentation. Ref. [55] leverages 3D point clouds for person re-identification, utilizing global semantic guidance and local feature extraction to achieve high accuracy while reducing model parameters. Ref. [56] advances scalable multimodal 3D representation learning by automatically generating language descriptions for 3D shapes, demonstrating state-of-the-art results in zero-shot classification, fine-tuning, and image captioning. Finally, ref. [57] adapts GPT concepts to point clouds through an auto-regressive generation task. Collectively, these approaches highlight the potential of advanced neural network architectures and pretraining frameworks to enhance 3D data processing and image captioning. During the rapid development of image captioning methods, researchers have also investigated other aspects of captions that are not just comparable to human judgment. Researchers have focused on captions with a specific style. In [25], the authors improved the descriptiveness of the generated captions by combining CNN and LSTM. In [58], the authors focused on captions for visually impaired people. The developed model tends to create captions that describe the surrounding environment.

3. Preliminaries

3.1. Encoder–Decoder Image Captioning Model

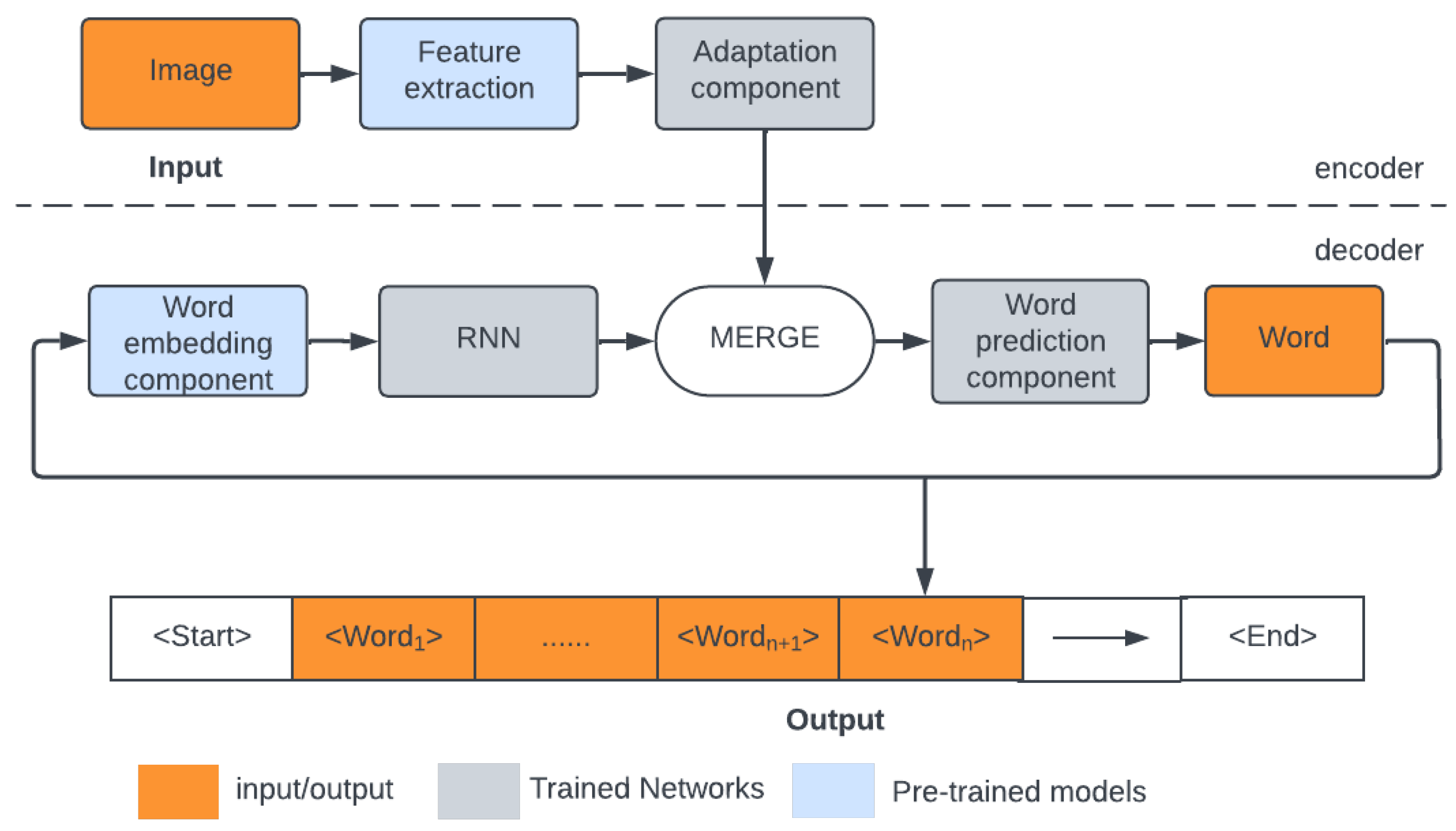

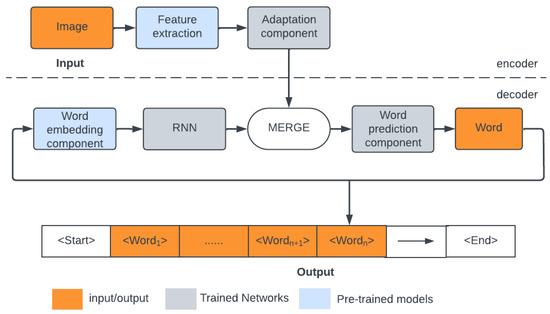

In our paper, we investigate the merge approach to encoder–decoder-based captioning. The complete structure of the model under study is shown in Figure 1. The encoder–decoder merge model consists of two main components. The first component is responsible for encoding the visual information from the image. The CNN-based encoder processes the input image to extract visual features. The output of the encoder is a feature vector that represents the content of the image. Following most approaches [18,24,25,59,60,61,62,63], in our experiments, we use the pretrained backbones. This convenient solution uses transfer learning to save time for learning image features (for details, see Section 3.2). The continuous development of backbones results in the increasing efficiency of image feature extractors—the first part of our research focuses on their influence on the final captioning quality. In the data processing pipeline, the pretrained feature extractor is followed by the adaptation component, which produces a shorter feature vector and—contrary to the pretrained extractor—is trained during the training process, thus adapting the feature vector, generated by the off-the-shelf backbone, to the particular form of data used by the captioning model.

Figure 1.

Encoder–decoder merge captioning model.

The decoder that generates a textual description is the second principal component of the captioning model. This component is based on a recurrent neural network. The RNN processes the feature vector sequentially, generating one word at a time and creating a coherent sentence. The decoder generates a caption, beginning with a <Start> token. Word by word, the decoder predicts the following word in the sequence until it reaches an <End> token. The decoder learns to translate the visual features into meaningful sequences of consecutive words. In our experiments, we mainly use the LSTM model, but we also validate its alternative—the GRU recurrent network (for details, see Section 3.3). As in many text generation models, we use, in addition, the word embedding models that present several advantages like dimensionality reduction and generalization properties. This issue is discussed in Section 3.4.

Linking the encoder and the decoder in the merge architecture is achieved by combining the image feature vector modified by the adaptation component with the output of the RNN. There are two ways of doing this: vectors of adapted image features and output of the RNN can be either added or concatenated. The result of this operation is next processed by the word prediction component, composed of fully connected layers. It outputs a vector of size equal to the vocabulary size predicting the next word in a sentence in a one-hot encoded manner. On the one hand, this word is added to the final sentence. On the other hand, it is used, along with previously generated words, as the decoder’s recurrent input to predict the next word of the sentence. In our experiments, we validate various configurations of the captioning model’s adaptation, merging, and word prediction components (for details, see Section 3.5).

During the experiments, we checked how the model’s choice of the above components affects the final results. We tested several configurations of adaptation, merging, and word prediction components of the image captioning model.

3.2. Image Feature Extractors

Image features are essential in image captioning. Following the majority of approaches, we used pretrained models (backbones) in our experiments to extract them. Pretraining makes it possible to focus on the captioning model and restrict training to its remainder, leaving apart the tedious training of the image feature extractor. Weights of pretrained backbones are not updated during training but are used as the frozen layers of the image captioning model to extract specifics of the image. The vector of extracted features is the input to the adaptation component, which updates weights during training. In our experiments [64], we selected several pretrained feature extractors (backbones) among many available, focusing on ResNet, Inception, InceptionV3, Xception, DenseNet, and MobileNet. We also applied the older VGG backbone used in pioneering deep-learning captioning models for comparative purposes.

VGG [65] is a group of convolutional neural networks widely used for image classification tasks. The most popular variants are VGG16 and VGG19. VGG16 consists of 13 convolutional and 3 dense layers and was trained to recognize 1000 object classes referring to objects depicted on input 224 × 224 × 3 color images from the ImageNet database [66]. The backbone network, which produces the image feature vector of length 4096, has been obtained by cutting out the dense layers. VGG19 has three more CNN layers than VGG16. That allows us to learn richer data representations and achieve higher prediction results. On the other hand, VGG19 is more exposed to the vanishing gradient problem than VGG16 and requires more computational power.

The ResNet [67] network was created to support many layers while preventing the phenomenon of vanishing gradients in deep neural networks. The most popular variants are ResNet18, ResNet50, and ResNet100, where the number in the name represents the number of layers. The fundamental concept behind ResNet is the utilization of residual blocks, which introduce skip connections (shortcuts across layers). These connections allow the network to bypass specific layers, enabling it to learn residual mappings instead of directly attempting to approximate the desired underlying mapping.

The network structure consists of two main phases. In the beginning, the stack of skip connections layers is built. Those layers are omitted, and the activation function from the previous layer is used. In the next stage, the network is learned again, layers are expanded, and other parts of the network (residual blocks) learn deeper features of the image. Residual blocks are the heart of residual convolutional networks. They add skip connections to the network, which preserve essential elements of the picture till the end of the training, simultaneously allowing smooth gradient flow.

The Inception [68] model was created to deal with overfitting in very deep neural networks by going wider in layers rather than deeper. It is built among Inception blocks that process input and repetitively pass the result to another Inception block. Each block consists of four parallel layers 1 × 1, 3 × 3, 5 × 5, and max-pooling. Here, 1 × 1 is used to reduce dimensions by channel-wise pooling. Thanks to that, the network can increase in depth without overfitting. Convolution is computed between each pixel and filter in the channel dimension to change the number of channels rather than the image size. Moreover, 3 × 3 and 5 × 5 filters learn spatial features of the image in different scales and act similarly to human perception. Final max-pooling reduces the dimensions of the feature map. The most popular versions of the Inception network are Inception, InceptionV2, and InceptionV3.

InceptionV3 [69] incorporates the best techniques to optimize and reduce the computational power needed for image feature extraction in the network. It is a deeper network than InceptionV2 and Inception, but its effectiveness is maintained. Also, auxiliary classifiers can be used to improve the convergence of very deep neural networks and combat the vanishing gradient problem. Factorized convolutions were used to reduce the number of parameters needed in the network, and smaller asymmetric convolutions allowed faster computations.

Xception [70] is a variation of an Inception [68] model that decouples cross-channel and spatial correlations. The architecture is based on depth-wise separable convolution layers and shortcuts between convolution blocks, as in ResNet. It consists of 36 convolutional layers divided into 14 modules. Each module is surrounded by residual connections, except the first and last modules. It has a simple and modular architecture and achieved better results than VGG16, ResNet, and InceptionV3 in classical classification challenges.

DenseNet [71] was created to overcome the vanishing gradient problem in very long deep neural networks by simplifying data flow between layers. The architecture is similar to ResNet, but thanks to the simple change in connection between layers, DenseNet allows the reuse of parameters within the network and produces models with high accuracy. The structure of DenseNet is based on a stack of connectivity, transition, and bottleneck layers grouped in dense blocks. Every layer is connected with every other layer in a dense way.

A dense block is a main part of DenseNet and reduces the size of feature maps by lowering their dimensions. In each dense block, the dimensions of feature maps are constant, but the number of filters changes. A transition layer is placed between each dense block to concatenate all previous inputs, reducing the number of channels and parameters needed in the network. Also, a bottleneck layer is placed between every layer to reduce the number of inputs, especially in far-away layers. DenseNet also introduced a growth rate parameter to regulate the quantity of information added in each layer. The most popular implementations are DenseNet121 and DenseNet201, where the number denotes the number of layers in the network.

MobileNet [72] is a small and efficient CNN designed for mobile computer vision tasks. It is built of layers of depth-wise separable convolutions, composed of depth-wise and point-wise layers. MobileNet also introduced width multiplier and resolution multiplier hyperparameters. The width multiplier decreases the computational power needed during training, and the resolution multiplier decreases the resolution of the input image during training. The most popular versions of MobileNet are MobileNetV1 and MobileNetV2. In comparison with MobileNet, MobileNetV2 introduced inverted residual blocks and linear bottlenecks. Also, the Relu activation function was replaced by Relu6 (ReLu with saturation at value 6), which improved the model’s accuracy.

3.3. Recurrent Neural Network

In our experiments, we applied two of the most popular recurrent neural network structures: LSTM and GRU. The RNN predicts the following word in the sentence at every time step based on its predecessors. LSTM and GRU are types of RNNs specially designed to capture long-range dependencies in sequential data, such as time series or natural language.

Long-short-term memory (LSTM) [73] was designed for long-sequence problems and can predict the next word in the sequence based on its predecessors. Each LSTM unit consists of three gates that control and monitor the information flow in LSTM cells. The forgetting gate decides which information from the previous iteration will be stored in the cell state or is irrelevant and can be forgotten. In the input gate, the cell attempts to learn new information. It quantifies the relevance of the new input value of the cell and decides whether to process it. The output gate transfers the updated information from the current iteration to the next iteration. The state of the cell also contains the information along with a timestamp.

A gated recurrent unit (GRU) [74] was introduced as the more straightforward alternative to LSTM. All the information is passed and maintained through hidden states across all the time steps. Forget and input gates were replaced by one update gate, which controls the amount of information from the previous and current steps used. The reset gate controls how much information is discarded. Compared to LSTM, GRU has fewer parameters because the forget and output gates are merged into one update gate. Thanks to that, training is faster, and structure is more straightforward, especially for modeling an information flow within a neural network. On the other hand, LSTM proved to be a better solution for modeling long dependencies than GRU. However, the neural network’s actual performance depends on the dataset’s task and specification.

3.4. Word Embedding Models

Word embedding is a vector representation of words fed to the RNN part of our deep-learning model that allows for the representation of words in the context. In our encoder–decoder merge captioning model, the word embedding component creates input for the RNN that directly predicts words based on previous ones.

One-hot encoding is the most straightforward word-encoding technique, where each word/token is encoded to the binary vector representation. The method is based on the dictionary created for all unique tokens in the corpus. A fixed-length binary vector of the size of a dictionary represents each word. Each word is replaced by its index in this dictionary encoded as ’1’ in the embedding vector, while its other elements are set to ’0’. It is a straightforward technique that captures various words but misses their semantic relation. Furthermore, fixed-length vectors are sparse, which could be more computationally efficient. More sophisticated approaches to word embedding have been proposed to obtain shorter vector representation that reflects relations between words.

The most common embedding systems used for natural language processing and image captioning are Glove, Word2Vec, and FastText. The Word2Vec [75] method simultaneously captures semantic relations between words. It is based on two techniques: continuous bag of words (CBOW), which allows the prediction of words from the context word list vector, and the continuous skip-gram model, a simple one-layer neural network that predicts context based on a given word. The most often used sizes of pretrained Word2Vec models are 100, 200, and 300.

FastText [76] comes from the Word2Vec model but analyzes words as n-grams. The algorithm is similar to CBOW from Word2Vec but focuses on a hierarchical structure, representing a word in a dense form. Each n-gram is a vector, and the whole phrase is a sum of those vectors. Training is similar to the CBOW to achieve a word embedding vector. The size of the vectors in pretrained FastText models typically ranges from size 100 to 300. Models trained on large corpora have higher-dimensional vectors, whereas small corpora are represented in lower-dimensional ones.

Glove [77] word embedding is based on unsupervised learning to capture words that occur together frequently. Thanks to global and local statistics, semantic relations are created based on the whole corpus. Furthermore, it uses global matrix factorization to represent the word or lack of words in the document. It is also called the count-based model because Glove tries to learn how the words co-occur with other words in the corpus, allowing it to reflect the meaning of the words conditionally of the different words. Most popular pretrained Glove vectors represent words in 200- or 300-dimensional space.

In our image captioning model, we utilized GLOVE and FastText word embedding, which were trained on a large corpus and allowed for the contextual modeling of words.

3.5. Adaptation, Merging, and Word Prediction

The encoder–decoder merge model as input consumes images encoded by backbones (see Section 3.2). An adaptation component is applied to transform features from the backbone into a format suitable for further processing. High-dimensional feature vectors are mapped into a lower-dimensional space, which helps to distill the most relevant visual information while reducing computational complexity, making it more manageable for subsequent stages of the captioning model.

Encoder and decoder are linked using merge architecture, meaning that features modified by the adaptation component and output from the RNN are combined. In our experiments, we tested two ways of doing that: addition and concatenation.

In the add mechanism, modified image features and the RNN output are added element-wise—each corresponding element of the image features and the RNN output vectors are summed together. In this case, the adapted image feature vector and RNN output lengths must be equal.

The concatenate method creates a joint feature representation of modified image features and the RNN output by concatenating along the feature dimension axis. The length of the output vector is thus a sum of the lengths of the adapted image feature vector and the output of the RNN. The method combines both modalities and creates a comprehensive representation in a single feature vector.

Merged features are processed via a word prediction component—a sequence of fully connected layers that first reduce the merged vector, transforming it into lower-dimensional space. It is a trimmer to the final feature matrix that coherently and equally represents visual and text features. Finally, the probability distribution of the next word in the sequence is calculated using another fully connected layer. Output is thus a vector of size equal to the vocabulary size. A separate track of our experiments focused on the word prediction component design.

3.6. Evaluation Metrics

Evaluating image captioning results is a complex task because it needs to determine if the machine’s descriptions are correct and also judge their quality, relevance, and clarity, which can be subjective and complex.

Evaluation metrics in image captioning measure the correlation of generated captions with human judgment. The latter is present in the training and test set metadata in the form of five human-written captions (ground truth). Metrics are used to compare the model output with these five captions. They estimate the similarity between predicted captions and ground-truth ones. Evaluation metrics apply their technique for computation and have distinct advantages. Standard evaluation metrics for image captioning are BLEU-1 to BLEU-4, CIDEr, and SPICE. They calculate word overlap between candidate and reference sentences. Higher values indicated better results.

The BLEU (Bilingual Evaluation Understudy) [78] metric measures the correlation between predicted and human-made captions. It compares n-grams in predicted and reference sentences, where more common n-grams result in higher metric values. It is worth mentioning that metrics exclusively count n-grams; locations of the n-grams in sentences are not considered. Metric also allows additional weights for specific n-grams to prioritize longer, common sequences of words. Usually, 1 to 4 g is used when computing the metric—the respective variants are called BLEU-1 up to BLEU-4.

CIDEr (Consensus-based Image Description Evaluation) [79] metric calculates correspondence between candidate and reference captions. It is based on the TF-IDF metric, calculated for each n-gram. It is widely used for SCST [33] training, where the strategy is to optimize the model for a specific metric. It results in higher results during the testing phase compared with [33]. Furthermore, CIDEr optimization during training impacts high BLEU and SPICE metrics scores.

The Consensus-based Image Description Evaluation (CIDEr) [79] assesses how well a predicted caption aligns with the consensus among a set of reference image descriptions. It emphasizes similarity to human captions without requiring subjective judgments about factors such as content, grammar, or saliency.

All the above metrics are used in various NLP tasks. However, according to some investigations [80], they do not correlate with a human judgment, which makes them not adequate to measure the similarity of image captions The authors of [80] propose their metric, but because it is much lesser (more than 10×) in popularity compared with SPICE, we decided to use the latter in the current study. Among the known metrics, the one that correlates with human judgment is SPICE (Semantic Propositional Image Caption Evaluation) [81]. This metric measures the similarity between sentences, represented by a directed graph. The SPICE algorithm at the beginning creates two directed graphs. The first one is for all reference captions, and the second is for the candidate sentence. Graph elements can belong to three groups. The first group is objects and activity performers, the second group consists of descriptive tokens (adjectives adverbs), and the last group represents relations between objects and links other groups of tokens on the graph. Based on this representation, sentences are compared.

4. Training and Evaluation

4.1. Dataset and Testing Procedure

During our experiments, we used the model for the evaluation and training of the MS COCO 2014 dataset [82,83]. It consists of more than 120,000 images from various everyday scenes. Five captions describe each photo in natural language. We also used the Karpathy split [18], where there are 113,000 images in training, 5000 in validation, and 5000 in test disjoint subsets. It is the most popular split in the image captioning area, allowing comparison models and results for them.

We divided our experiment into three stages to investigate each part of our encoder–decoder merge captioning model. Initially, we experimented with pretrained image and text encoders to find the optimal pair for our model architecture. We trained various models using each image encoder and text encoder mentioned previously (see Table 1 for specific combinations of feature extraction and word embedding components). At that stage adaptation, RNN, and word prediction components are excluded from the experimental process, and their parameters are configured permanently as not part of this experimental stage. Here, we look for the pair of feature extraction and word embedding components that produce the most accurate captions.

Table 1.

Evaluation results for MSCOCO 2014 test dataset. Metrics’ values are averaged over the whole test dataset.

In the next step, we investigated the generalization of image and text features extracted via feature extraction and word embedding components. Here, responsible for that are the adaptation, RNN, word prediction, and MERGE components. We set up permanent input and output features of the feature extraction and word embedding components and experimented with the parameters and structure of the adaptation, RNN, word prediction, MERGE components. The word prediction component takes merged features from the MERGE component, so we experimented, respectively, with the add and concatenate method as the merging strategy, dividing this area of experiments into two separate stages.

GRU is a popular alternative to LSTM in language modeling tasks, so we experimented with GRU as the basis of a language model. As in the previous scenario, we set up permanent input and output features of the feature extraction and word embedding components and checked combinations of the adaptation, RNN, and word prediction components.

At each stage of our experimental setup, we evaluated the results with the BLEU-1–BLEU-4, CIDEr, and SPICE metrics. The complete process will allow us to achieve a comprehensive perspective of the performance of different image encoders along with different embedding methods, separately to the influence of parameters in particular components of our image captioning model.

4.2. Training

Before training starts, captions are preprocessed—all words are converted to lowercase and tokenized. We removed punctuation, hanging single-letter words, and discarded rare words that occurred less than five times. Next, the list of processed words is appended with start and stop tokens to mark the beginning and end of the sentence, respectively. As a result, we achieved the MS COCO 2014 vocabulary (dictionary) that will be used to create an embedding matrix that contains embedding vectors. Before being handled by RNN, word sequences must be represented in word embedding vectors. We adopted pretrained versions of FastText and Glove word embedding to extract the text features and finally achieved a vocabulary size of 7293. Each word is embedded into a 200-element vector for Glove and a 300-element vector for FastText word embedding space.

During training, the model processes combined RNN (LSTM or GRU) output and image feature vectors based on the backbone for a given image and adaptation component. We applied different image feature extractor described in Section 3.2 and variants of the adaptation component, MERGE component, word prediction component, which are described in Section 3.5. At each time step, the word prediction component predicts a word for the processed image and compares it with the ground-truth word from the corresponding training set. The predicted words and ground-truth words (from the training set) are compared using the cross-entropy measure.

In our experiments, we use the Adam [85] optimizer with a base learning rate of 0.001. We set the batch size to be 480 and train for up to 150 epochs with early stopping if the loss score had not improved over 0.001.

4.3. Evaluation

During the testing, the image captioning model is fed by a preprocessed photo based on the backbone of a given image. In the beginning, at the 0 time step, there is no previously predicted word. Therefore, to denote the start of prediction, a start of sentence token start is used. Words are replaced by their embeddings. Next, the image captioning model predicts words recursively until the sentence’s end (marked by stop token) or the maximum length of the sentence has been reached and adds it to the word list. At each step, the chance of the occurrence of one word next to another is calculated using embedding specific to the tested text features. Finally, a full caption for the tested image is generated and compared with ground-truth phrases for the tested image using specific metrics mentioned in Section 3.6.

During the evaluation, we used a greedy search algorithm when sampling the caption for the MS COCO 2014 dataset. We report results using the MS COCO captioning evaluation tool [83], which reports the following metrics: BLEU-1–BLEU-4, CIDEr, and SPICE.

5. Results

5.1. Feature Extraction and Word Embedding

Motivated by [18], and considering the variety of available pretrained object detection models and language processing models, at first, we conducted experiments to enhance the accuracy of a baseline captioning model via changes in encoding of an input data—the choice of the backbone in the feature extraction component with two types of word processing—the word embedding component.

Images from the dataset are resized and normalized before entering the image captioning model to be compatible with one of the image feature extractors. For VGG16, VGG19, ResNet152V2, ResNet50, DenseNet121, DenseNet201, MobileNet, and MobileNetV2 input shape is 224 × 224 × 3, and for InceptionV3 and Xception, it is equal to 299 × 299 × 3. As a result, we obtained feature vectors with the following sizes, corresponding to the preprocessed input image: 4096-element vector for VGG16 and VGG19; 2048-element for InceptionV3, Xception, and ResNet152V2; 1024-element for DenseNet121; 1920-element for DenseNet201; 1000-element for MobileNet; and finally, 1280-element for MobileNetV2. We used backbones pretrained on the ImageNet dataset, where the network’s fully connected layers are removed since we do not need the probability distribution on 1000 image categories from ImageNet. The adaptation component in this experiment consists of a single fully connected layer where the size of an input equals the length of a feature vector (which depends on the backbone used) and the output, the size of which equals the size of the RNN, which is, in this experiment, the LSTM network.

The word prediction component consists of two fully connected layers: the first keeps the same input and output size equal to 256, and the second outputs the probability of words. The size of the latter’s input equals 256, while the output is the vocabulary size.

Table 1 shows the results of image captioning metrics calculated for different image extractors (Image Features column) and text feature extractors (Embeddings column). We analyzed all models using the BLEU-1–BLEU-4, CIDEr, and SPICE metrics. Following the literature, we used the most recent CIDEr and SPICE metrics to evaluate the performance, keeping the remainder for comparative purposes. We also added information about the number of parameters in the neural network (column “No. of model parameters (mln)”) and the average time of sentence generation by the model (column “Time of sentence generation (ms)”). For the same purposes, we added four reference methods in the last four rows of Table 1.

From the obtained results, we can see that model performance depends mainly on the backbone used. Considering the CIDEr metric, the best results have been achieved for the Xception backbone feature extractor; second place belongs to DenseNet201. The spread between the highest (Xception with Glove, 78.13) and the lowest (VGG with Glove, 67.35) metrics value equals 10.78 points difference, which makes the model strongly dependent on the image backbone feature extractor. The evaluated quality of caption extractors is correlated with the backbones’ accuracy. The average time of sentence generation is not correlated with the model complexity (number of model params). Differences in execution time spread from 1748 to 3860 ms. The fastest is DenseNet201, which is also the second-best model. All the execution times reported in this paper have been measured for a single caption on the computer with the following parameters: NVIDIA GeForce RTX 4070 with 12GB VRAM; AMD Ryzen 5 3600 6-core processor; 32 GB RAM.

One cannot observe any remarkable superiority of one embedding model over another. For some metrics, the Glove model performs better, while for the remainder, the FastText model. In most cases, FastText embedding achieves higher results than Glove for the same image feature extractor. This suggests that FastText adapts more easily to different backbones than Glove. Longer feature vectors do not imply higher performance. The longest feature vectors VGG backbones generate do not mean higher measure values. The winning models use 2048- (Xception) and 1920-element (DenseNet201) vectors. The average time of sequence generation is not correlated with the model complexity (no. of model parameters). Differences in execution time between models spread from 874 to 1417 ms. The fastest is DenseNet201, which is also the second-best model.

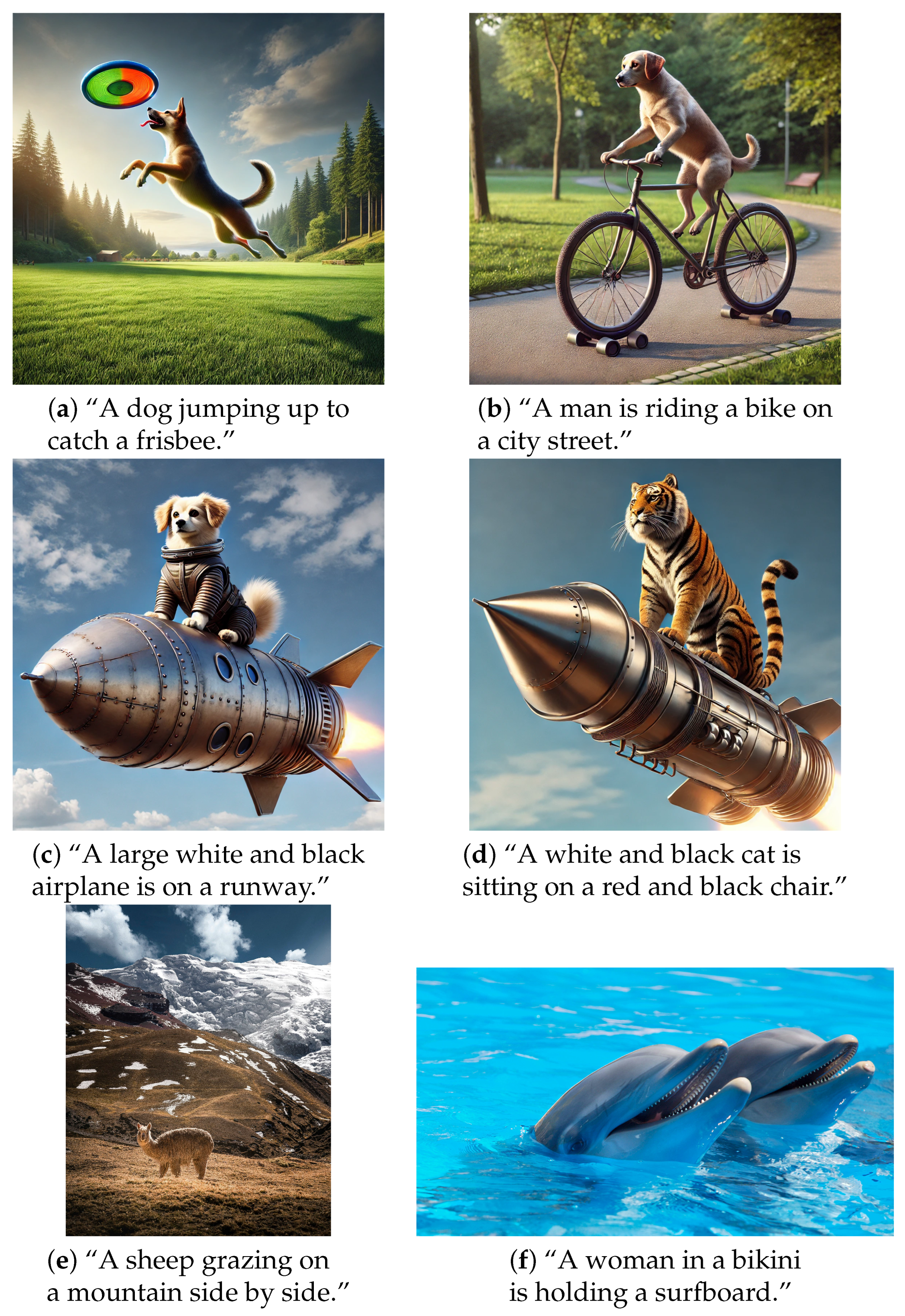

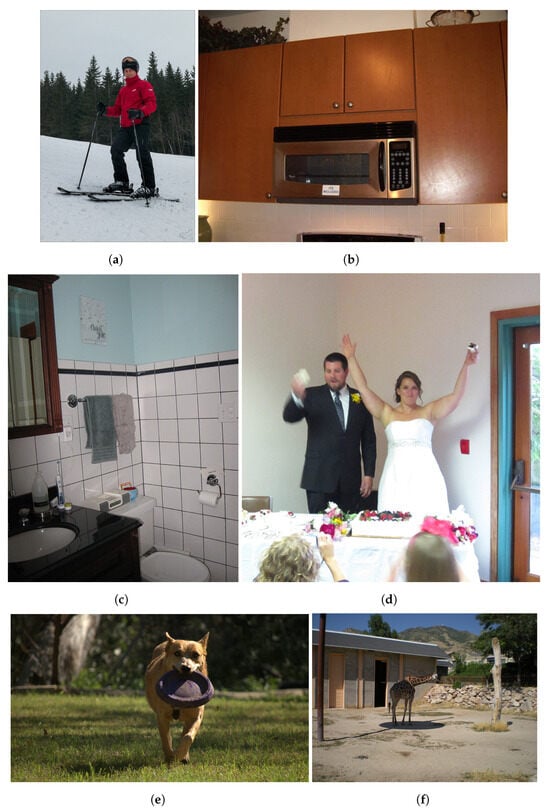

In Table 2, we present example correct captions obtained using various image feature extractors and Glove embedding; respective images are shown in Figure 2. The table contains ground-truth five captions from the dataset metadata, captions obtained from the model, and values of metrics calculated for particular predicted sentences. Generated captions sound good, are grammatically correct, and are consistent with the image content. However, in picture shown in Figure 2b, we can observe the hallucination phenomenon [86], despite no presence of the word “stove” in the groundtruth captions model, which calculated that in the kitchen near the microwave there must be a stove, even though there is no such object on the image.

Table 2.

Example images with predicted captions and achieved scores. For pictures, see Figure 2.

Figure 2.

Images (with COCO IDs) with properly predicted captions (see Table 2 for details). (a) ID = 95051; (b) ID = 309933; (c) ID = 570138; (d) ID = 105234; (e) ID = 347950; (f) ID = 27440.

In contrast to the above, in Table 3, we compare the caption for Figure 2d produced by the model, which achieved the overall highest CIDEr score in our research (Xception + Glove), with the model based on DenseNet201. The presented metrics were explicitly calculated for predicted sentences. Comparing them, we can see that the model based on DenseNet201 does not correctly recognize objects in the image (bride and groom) and relations between them (cutting wedding cake). Human judgment is also reflected in the CIDEr metric. Despite grammatical correctness, the CIDEr metric rated the sentence 11.12 as unrelated to the five reference sentences. The result for the same sentence, produced by the model based on Xception, is 146.85 points higher, whereas in the produced sentence, we can point to all of the relations and objects from reference sentences.

Table 3.

Comparison of predicted captions for Figure 2d, with DenseNet201 and Xception as image feature extractors, along with Glove as word embeddings.

5.2. Merging and Word Prediction

In the next step, we kept the best-performing feature extraction and word embedding components, and we focused on the components that generalize image and text features in our image captioning model. The adaptation component as input consumes 2048 image features vector obtained via the Xception backbone in the feature extraction component. Images before entering the network are resized to 229 × 229 × 3 to be compatible with the Xception architecture. The adaptation component reduces the length of a feature vector. Four cases are considered in our research: 128, 256, and 512. In addition, we also consider the case when the image feature vector is delivered directly to the MERGE component without any preprocessing.

The word embedding component uses Glove embedding and, as input, consumes a vector of size 7293—equal to the vocabulary size and output vector of size 200—equal to the Glove embedding size. Next, the RNN component consumes embedded words and processes them through RNN, which in this experimental scenario is LSTM. The LSTM output size equals the size of the adaptation component’s output. However, in some of the experiments, we disregarded the adaptation component (marked as “-” in the column “Adaptation component size” in Table 4). In that case, concatenation directly merges Xception image features with LSTM output in the MERGE component.

The word prediction component comprises two fully connected layers. The first has input and output sizes equal to the output from MERGE component, while the second output is the probability of words vector—the vocabulary size. In some of the experiments, we disregarded the first fully connected layer(marked as “-” in the column “Word prediction component size” in Table 4 and Table 5) and directly sent merged image and text features to the layer that outputs the probability of words. This allowed us to test whether the output of the MERGE component needs to be trained in the word prediction component to train the whole captioning model efficiently.

Table 4.

Evaluation results for MSCOCO 2014 test dataset using LSTM as RNN and merging via concatenate method. Bold indicates the model with highest metrics values.

Table 4.

Evaluation results for MSCOCO 2014 test dataset using LSTM as RNN and merging via concatenate method. Bold indicates the model with highest metrics values.

| RNN Size |

Adaptation Component Size |

Word Prediction Component Size | No. of Model Parameters (mln) | Time of Sentence Generation (ms) | BLEU-1 | BLEU-2 | BLEU-3 | BLEU-4 | CIDEr | SPICE | |

|---|---|---|---|---|---|---|---|---|---|---|---|

| 1 | 512 | 512 | 1024 | 33.35 | 6024 | 66.31 | 48.47 | 34.29 | 24.22 | 79.05 | 15.57 |

| 2 | 256 | 256 | - | 27.04 | 2814 | 66.96 | 48.74 | 34.32 | 24.28 | 79.07 | 15.54 |

| 3 | 128 | 128 | 256 | 24.68 | 2647 | 67.27 | 49.69 | 35.36 | 25.04 | 80.44 | 15.71 |

| 4 | 256 | 256 | 512 | 27.31 | 2812 | 67.51 | 49.75 | 35.56 | 25.36 | 82.49 | 16.08 |

| 5 | 256 | - | - | 25.56 | 2765 | 65.36 | 47.02 | 32.72 | 22.77 | 75.93 | 14.90 |

| 6 | 256 | - | 512 | 26.66 | 2513 | 66.22 | 48.39 | 34.32 | 24.27 | 78.71 | 15.09 |

| 7 | 256 | 256 | 256 | 25.32 | 2247 | 67.56 | 49.72 | 35.48 | 25.24 | 81.85 | 15.63 |

| 8 | 256 | 256 | 128 | 24.31 | 1905 | 67.18 | 49.47 | 35.19 | 24.90 | 80.73 | 15.40 |

| 9 | 512 | 512 | - | 32.29 | 2393 | 65.24 | 47.33 | 33.30 | 23.54 | 75.86 | 14.88 |

Table 5.

Evaluation results for MSCOCO 2014 test dataset using LSTM as RNN and merging via add method.

Table 5.

Evaluation results for MSCOCO 2014 test dataset using LSTM as RNN and merging via add method.

| RNN Size |

Adaptation Component Size |

Word Prediction Component Size | No. of Model Parameters (mln) | Time of Sentence Generation (ms) | BLEU-1 | BLEU-2 | BLEU-3 | BLEU-4 | CIDEr | SPICE | |

|---|---|---|---|---|---|---|---|---|---|---|---|

| 1 | 512 | 512 | 512 | 28.82 | 5565 | 67.53 | 49.67 | 35.39 | 25.19 | 82.48 | 15.75 |

| 2 | 256 | 256 | - | 25.18 | 4195 | 66.87 | 48.61 | 34.17 | 24.00 | 78.55 | 15.39 |

| 3 | 128 | 128 | 128 | 23.7 | 5100 | 65.86 | 47.94 | 33.54 | 23.40 | 74.92 | 14.77 |

| 4 | 256 | 256 | 256 | 25.2 | 6336 | 66.59 | 48.63 | 34.34 | 24.33 | 78.13 | 15.16 |

In Table 4 and Table 5, we show results of the experiments on the choice of parameters in adaptation, RNN, and word prediction components for the add and concatenate method, as the merging strategy in the MERGE component, respectively. Each row represents a variant of the model.

The first four rows of Table 4 and Table 5 show comparable results for the add and concatenate merging methods. Comparing them, we can see that using the concatenate merge method better aggregates local features into global features. We can achieve the same results as for the add–merge method but with fewer parameters in the network and a significant reduction in training time. Best results considering the CIDEr metric 82.49 were achieved for RNN and the adaptation component of size 256 (row 4 in Table 4), with 27.31 mln parameters in the network. On the other hand, the model based on the add method (see Table 5) requires a size of the RNN and the adaptation component equal to 512 units, with 28.82 mln parameters in the network. Also, comparing the time of sentence generation, the concatenate method performs faster. For our best results, the concatenate method needs on average 2812 ms and 5565 ms for the comparable add model.

In row 9 in Table 4, we have a model trained without a fully connected layer after the merge component (“Word prediction component size” column equal to “-”), comparable with row 1 from Table 5, where that layer was used. A 3.19 difference in the CIDEr metric clearly shows the importance of a fully connected layer that makes merged text and image features more understandable for the LSTM during the word prediction stage. Despite 3.47 more parameters and twice as much time for sentence generation, the method with a fully connected layer after the merge component achieved better results. The ReLu activation function present in the fully connected layer introduces non-linearity, which allows us to learn complex mapping between input features and output, which is the probability of words.

5.3. Recurrent Neural Network Model

In all experiments performed up to this moment, we used LSTM as the RNN component—part of the model that directly produces sentence word by word. Motivated by [74], we reproduced a few experiments by learning a model with GRU as the RNN to check to which extent the results depends on the type of the RNN used in the captioning model.

We used a pretrained Xception image feature extractor with a 2048 output size in the feature extraction component. As the word embedding component, we utilized Glove, which serves a 200-element vector to the RNN component—here, the GRU. We considered the concatenate method as a merging strategy in the MERGE component.

Comparing rows 4, 7, and 8 from Table 4 and 1, 2, and 3 from Table 6, respectively, one can observe that we achieved similar CIDEr results for LSTM and GRU, with the tendency of GRU to achieve higher CIDEr results in the models with fewer parameters. Also, the number of model parameters and time of sentence generation are similar for models based on LSTM and GRU. Comparing row 7 from Table 4 and row 2 from Table 6, we achieved results of 0.34 better in the GRU. Also, longer word prediction component sizes do not imply higher measure values. Winning configuration utilizes a 256-word prediction component size, which means that GRUs perform better on less complex dependency tasks than LSTMs, which are fit for deep neural networks. From the design perspective, GRUs perform well on short sequences, but in the image captioning domain, we produce sequences with a maximum of 50 tokens, which is short.

Table 6.

Evaluation results for MSCOCO 2014 test dataset using GRU as RNN.

5.4. Experiments on External Images

Methods for generating image captions consist of multiple components that cooperate. Depending on their choice, models produce different captions, even for the same picture. For example, in Table 7, we present captions produced for Figure 2f. One may easily observe how the predicted caption differs from one model to another—each caption is different from the remainder, and so is the CIDEr measure. The overall quality of a captioning model is evaluated by measures (like CIDEr) averaged over the complete test dataset.

Table 7.

Example predicted captions and achieved scores for picture shown in Figure 2f.

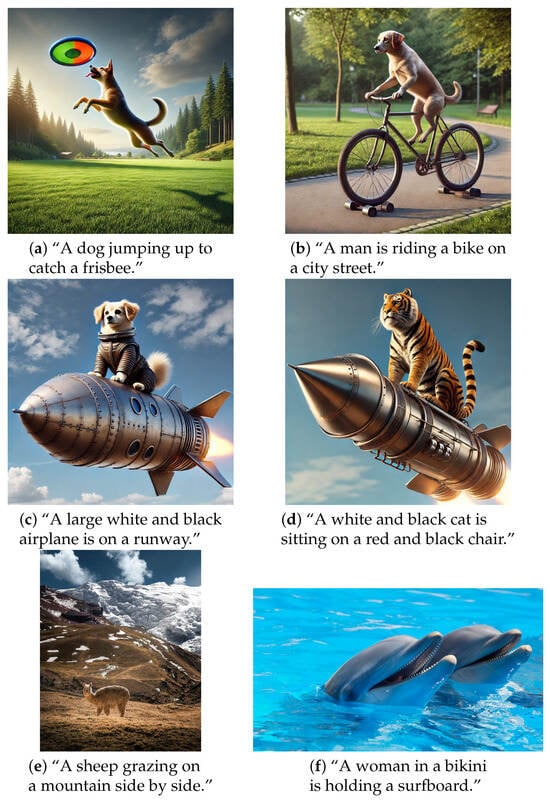

The image captioning models (as usual in machine learning) are intended to be applied to external images, i.e., images that are not present in the database that were used neither for training nor for validating the model. To investigate the ability of models to work correctly with such images, we also tested the best of our models on external data. This way, we validated if the model can generalize its expertise to unknown data. Our experiments revealed that the ability to generalize strongly depends on the image’s content. In particular, if within the scene presented on an image are regions, objects similar to those included in some image in the training set, the generated caption is more correct. On the other hand, objects and scenes that have not been seen within the image in the training set are poorly described, primarily by irrational and incorrect captions.

In Figure 3e, the model falsely recognized “sheep” the object present on the scene. It is caused by the lack of “lama” object representation in the MSCOCO 2014 dataset; however, the surrounding scene is correct. In Figure 3e, the predicted caption is totally pointless, “dolphins” are not present in the training dataset.

Figure 3.

Images with predicted captions generated artificially: (a) “Dog with frisbee”, (b) “Dog on bike”, (c) “Dog on rocket”, (d) “Tiger on rocket”; real images with objects not present in the MS COCO dataset (e,f).

To further investigate the relation between the correctness of captions and the image content, artificial images were used. To generate them, the model DALL-E3 [87] in ChatGPT4o [88] was used. As we may see, in Figure 3a, the model correctly predicted the caption and recognized objects present in the MSCOCO 2014 categories frisbee and dog. On the other hand, in Figure 3c,d, where the categories “rocket” and “tiger” are not included in the MSCOCO 2014 categories, the captions do not correctly describe images. Also, in Figure 3b, we can see that the category “dog” that was perfectly described in Figure 3b does not always guarantee the correct object category in the predicted caption. Object “dog” was mistaken for “man”, but all relations (riding a bike) between other objects were perfectly recognized.

5.5. Comparison with Transformer-Based Approaches

Despite promising results, most recent transformer-based models have certain disadvantages in image captioning. One of the primary issues is their high computational complexity and resource intensity [89]. The self-attention mechanism in transformers has a quadratic complexity concerning the sequence length, leading to substantial memory requirements for training, mainly when dealing with high-resolution images and long captions [44,90]. The training process is computationally expensive and time-consuming, necessitating powerful hardware such as GPUs or TPUs. Transformers also require large amounts of well-annotated data to learn effectively. Insufficient data can lead to overfitting and poor generalization, resulting in models that perform well on training data but poorly on unseen data [91].

Transformer models struggle with interpretability and explainability. Understanding and interpreting why a model generates a specific caption for an image can be challenging, which is a significant drawback in applications that can not work as a “black-box” [92]. Integrating visual and text features in transformers is another complex task. While vision transformers (ViTs) have been developed, combining these with language models for image captioning remains less mature than traditional CNNs coupled with RNNs [93]. Furthermore, the performance of transformers can degrade with very long sequences, which can be an issue for complex and detailed image descriptions. Moreover, the transformer architecture has not been initially designed for image processing tasks and requires substantial computational resources, significantly limiting their applicability.

Let us focus on examples to compare the results obtained with some transformer-based approaches on the COCO database. In the method [45], the transformer architecture is used for both the encoder and decoder, eliminating convolutional layers. The authors achieved a 129.4 CIDEr score, 81.7 BLEU-1, and 40.0 BLEU-4, with 138.5 mln parameters in the network [89]. Solution [46] focused on multimodal reasoning. It achieved a 132.8 CIDEr score, 80.9 BLEU-1, and 39.7 BLEU-4, with 137.5 mln parameters in the network [89]. Model [49] focused on pretraining to capture more sophisticated relations between objects on images and achieved 80.9 BLEU-1, 39.5 BLEU-4, and 129.3 CIDEr, with 138.2 mln parameters in the network [89]. In the model [51], the authors improved the results of an existing image captioning model by developing a new visual feature extractor. The new image captioning model consists of 369.6 mln parameters in the network [89] and obtained 82.0 BLEU-1, 41.0 BLEU-4, and 140.9 CIDEr.

On the other hand, our best model achieved 82.49 CIDEr metric, 67.51 BLEU-1, and 25.36 BLEU-4, with only 27.31 mln parameters in the network. One can see that an increase in the metrics’ value less than 1.6× has been obtained by the model of the complexity higher by one order of magnitude, i.e., almost 13×. Despite lower CIDEr results, sentences produced by our best model are grammatically and semantically correct while obtained using models of several times lower complexity (number of parameters).

6. Conclusions

Most recent transformer models are computationally intensive, requiring significant hardware and energy resources for training and inference. Classical models, which are the research subjects, are more straightforward and, therefore, less resource-demanding. In the outcomes of our research, we showed that the substitution of the components of the classic model improves the overall efficiency. This paper analyzed how image features, word encoding, and manipulation of the generalization layers can enhance the encoder–decoder image captioning model results.

Experimenting with pretrained image and text feature extractors (word embedding component, image feature extractor) proved that encoding input data plays the primary role in this area. During our research, we recognized that image captioning involves merging features from different modalities. Because of that, encoding images and features must cooperate, so finding the optimal pair for specific model architecture is crucial. We can significantly improve the results of the model predictions with that principle. The influence of the image feature extractor by the backbone is essential in this captioning model; it affects the performance more than the word embedding scheme. According to our experiment, the Xception with Glove and DenseNet201 with FastText are the best combinations of models’ components.

Moreover, our investigation delved into the nuanced process of merging image and text modalities, recognizing its potential to streamline network parameters and, by extension, expedite the training time of the image captioning model. The strategic selection of the merging function emerged as a pivotal consideration, with our analysis conclusively demonstrating the superior efficiency of the “concatenate” method. This choice yielded comparable results to the “add” approach and exhibited a notable reduction in parameters within the whole neural network, further optimizing model training time and time of sentence generation.

During our exploration of various model components and methodologies, we encountered no limitations in the efficacy of the GRU in addressing the challenges posed by image captioning tasks. We achieved convergent CIDER results for LSTM and GRU, as RNNs, with the tendency of GRUs to perform better on less complex tasks. Also, the number of model parameters and time of sentence generation are similar for configurations comparable to our image captioning models. We observed that models leveraging GRU architecture generate grammatically coherent captions aligned with the content depicted in the images. It is worth further investigating GRUs as the language decoder in the image captioning models, especially for the models that must be easy to understand along with the results.

The image captioning model heavily relies on the quality and diversity of training data, which can lead to biases and poor generalization across diverse real-world scenarios. The model’s adaptability to entirely new domains or significantly different image types without extensive retraining is limited. Additionally, the computational complexity involved restricts its real-time application and scalability. From this point of view, simpler models exhibit advantages over more sophisticated ones. As a result of our research, we showed that replacing their components enhances the quality of generating image captions. Our study’s outcomes apply to all the research works that have led to the development of the optimal encoder–decoder image captioning model.

Author Contributions

Methodology, M.B. and M.I.; Software, M.B.; Validation, M.B.; Formal analysis, M.I.; Investigation, M.B. and M.I.; Writing—original draft, M.B. and M.I.; Supervision, M.I.; Project administration, M.I. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

In our experiments we used COCO2014 dataset, which is publicly available and licensed under a Creative Commons Attribution 4.0 License.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Ramachandram, D.; Taylor, G.W. Deep Multimodal Learning: A Survey on Recent Advances and Trends. IEEE Signal Process. Mag. 2017, 34, 96–108. [Google Scholar] [CrossRef]

- Zhang, X.; He, S.; Song, X.; Lau, R.W.; Jiao, J.; Ye, Q. Image captioning via semantic element embedding. Neurocomputing 2020, 395, 212–221. [Google Scholar] [CrossRef]

- Janusz, A.; Kałuża, D.; Matraszek, M.; Grad, Ł.; Świechowski, M.; Ślęzak, D. Learning multimodal entity representations and their ensembles, with applications in a data-driven advisory framework for video game players. Inf. Sci. 2022, 617, 193–210. [Google Scholar] [CrossRef]

- Zhang, W.; Sugeno, M. A fuzzy approach to scene understanding. In Proceedings of the [Proceedings 1993] Second IEEE International Conference on Fuzzy Systems, San Francisco, CA, USA, 28 March–1 April 1993; Volume 1, pp. 564–569. [Google Scholar] [CrossRef]

- Iwanowski, M.; Bartosiewicz, M. Describing images using fuzzy mutual position matrix and saliency-based ordering of predicates. In Proceedings of the 2021 IEEE International Conference on Fuzzy Systems (FUZZ-IEEE), Luxembourg, 11–14 July 2021; pp. 1–8. [Google Scholar] [CrossRef]

- Kuznetsova, P.; Ordonez, V.; Berg, A.; Berg, T.; Choi, Y. Collective Generation of Natural Image Descriptions. In Proceedings of the 50th Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers), Jeju Island, Republic of Korea, 10 July 2012; pp. 359–368. [Google Scholar]

- Li, S.; Kulkarni, G.; Berg, T.L.; Berg, A.C.; Choi, Y. Composing Simple Image Descriptions using Web-scale N-grams. In Proceedings of the Fifteenth Conference on Computational Natural Language Learning, Portland, OR, USA, 23–24 June 2011; pp. 220–228. [Google Scholar]

- Mitchell, M.; Han, X.; Dodge, J.; Mensch, A.; Goyal, A.; Berg, A.; Yamaguchi, K.; Berg, T.; Stratos, K.; Daumé, H. Midge: Generating Image Descriptions from Computer Vision Detections. In Proceedings of the 13th Conference of the European Chapter of the Association for Computational Linguistics (EACL ’12), Avignon, France, 23–27 April 2012; pp. 747–756. [Google Scholar]

- Farhadi, A.; Hejrati, M.; Sadeghi, M.A.; Young, P.; Rashtchian, C.; Hockenmaier, J.; Forsyth, D. Every Picture Tells a Story: Generating Sentences from Images. In Proceedings of the Computer Vision—ECCV 2010; Daniilidis, K., Maragos, P., Paragios, N., Eds.; Springer: Berlin/Heidelberg, Germany, 2010; pp. 15–29. [Google Scholar]

- Barnard, K.; Duygulu, P.; Forsyth, D.; Blei, D.; Kandola, J.; Hofmann, T.; Poggio, T.; Shawe-Taylor, J. Matching Words and Pictures. J. Mach. Learn. Res. 2003, 3, 1107–1135. [Google Scholar] [CrossRef][Green Version]

- Krizhevsky, A.; Sutskever, I.; Hinton, G.E. ImageNet Classification with Deep Convolutional Neural Networks. In Proceedings of the Advances in Neural Information Processing Systems; Pereira, F., Burges, C., Bottou, L., Weinberger, K., Eds.; Curran Associates, Inc.: New York, NY, USA, 2012; Volume 25. [Google Scholar]

- Vinyals, O.; Toshev, A.; Bengio, S.; Erhan, D. Show and tell: A neural image caption generator. In Proceedings of the 2015 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 7–12 June 2015; pp. 3156–3164. [Google Scholar]

- Ramisa, A.; Yan, F.; Moreno-Noguer, F.; Mikolajczyk, K. BreakingNews: Article Annotation by Image and Text Processing. IEEE Trans. Pattern Anal. Mach. Intell. 2018, 40, 1072–1085. [Google Scholar]

- Biten, A.F.; Gómez, L.; Rusiñol, M.; Karatzas, D. Good News, Everyone! Context Driven Entity-Aware Captioning for News Images. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019; pp. 12458–12467. [Google Scholar]

- Sharma, P.; Ding, N.; Goodman, S.; Soricut, R. Conceptual Captions: A Cleaned, Hypernymed, Image Alt-text Dataset For Automatic Image Captioning. In Proceedings of the ACL, Melbourne, Australia, 15–20 July 2018. [Google Scholar]

- Changpinyo, S.; Sharma, P.; Ding, N.; Soricut, R. Conceptual 12M: Pushing Web-Scale Image-Text Pre-Training to Recognize Long-Tail Visual Concepts. In Proceedings of the CVPR, Los Alamitos, CA, USA, 10 June 2021. [Google Scholar]

- Kiros, R.; Salakhutdinov, R.; Zemel, R. Multimodal Neural Language Models. In Proceedings of the 31st International Conference on Machine Learning, Bejing, China, 22–24 June 2014; pp. 595–603. [Google Scholar]

- Karpathy, A.; Fei-Fei, L. Deep visual-semantic alignments for generating image descriptions. In Proceedings of the 2015 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Los Alamitos, CA, USA, 7–12 June 2015; pp. 3128–3137. [Google Scholar] [CrossRef]

- Girshick, R.; Donahue, J.; Darrell, T.; Malik, J. Rich Feature Hierarchies for Accurate Object Detection and Semantic Segmentation. In Proceedings of the 2014 IEEE Conference on Computer Vision and Pattern Recognition, Los Alamitos, CA, USA, 23–28 June 2014; pp. 580–587. [Google Scholar] [CrossRef]

- Donahue, J.; Hendricks, L.A.; Rohrbach, M.; Venugopalan, S.; Guadarrama, S.; Saenko, K.; Darrell, T. Long-Term Recurrent Convolutional Networks for Visual Recognition and Description. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 677–691. [Google Scholar] [CrossRef]

- Johnson, J.; Karpathy, A.; Fei-Fei, L. Densecap: Fully convolutional localization networks for dense captioning. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 18–20 June 2016; pp. 4565–4574. [Google Scholar]

- Xiao, X.; Wang, L.; Ding, K.; Xiang, S.; Pan, C. Dense semantic embedding network for image captioning. Pattern Recognit. 2019, 90, 285–296. [Google Scholar] [CrossRef]

- Toshevska, M.; Stojanovska, F.; Zdravevski, E.; Lameski, P.; Gievska, S. Exploration into Deep Learning Text Generation Architectures for Dense Image Captioning. In Proceedings of the 2020 15th Conference on Computer Science and Information Systems (FedCSIS), Sofia, Bulgaria, 6–9 September 2020; pp. 129–136. [Google Scholar] [CrossRef]

- Xu, K.; Ba, J.; Kiros, R.; Cho, K.; Courville, A.; Salakhudinov, R.; Zemel, R.; Bengio, Y. Show, Attend and Tell: Neural Image Caption Generation with Visual Attention. In Proceedings of the 32nd International Conference on Machine Learning, Lille, France, 7–9 July 2015; Volume 37, pp. 2048–2057. [Google Scholar]

- Anderson, P.; He, X.; Buehler, C.; Teney, D.; Johnson, M.; Gould, S.; Zhang, L. Bottom-Up and Top-Down Attention for Image Captioning and Visual Question Answering. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 6077–6086. [Google Scholar]

- Guo, L.; Liu, J.; Tang, J.; Li, J.; Luo, W.; Lu, H. Aligning Linguistic Words and Visual Semantic Units for Image Captioning. In Proceedings of the 27th ACM International Conference on Multimedia (MM ’19), New York, NY, USA, 21–25 October 2019; pp. 765–773. [Google Scholar] [CrossRef]

- Gu, J.; Wang, G.; Cai, J.; Chen, T. An Empirical Study of Language CNN for Image Captioning. In Proceedings of the 2017 IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; pp. 1231–1240. [Google Scholar]

- Liu, S.; Bai, L.; Hu, Y.; Wang, H. Image Captioning Based on Deep Neural Networks. MATEC Web Conf. 2018, 232, 01052. [Google Scholar] [CrossRef]

- Xu, K.; Wang, H.; Tang, P. Image captioning with deep LSTM based on sequential residual. In Proceedings of the 2017 IEEE International Conference on Multimedia and Expo (ICME), Los Alamitos, CA, USA, 10–14 July 2017; pp. 361–366. [Google Scholar] [CrossRef]

- Mao, J.; Xu, W.; Yang, Y.; Wang, J.; Yuille, A.L. Explain Images with Multimodal Recurrent Neural Networks. arXiv 2014, arXiv:1410.1090. [Google Scholar]

- Dong, H.; Zhang, J.; McIlwraith, D.; Guo, Y. I2T2I: Learning Text to Image Synthesis with Textual Data Augmentation. In Proceedings of the 2017 IEEE International Conference on Image Processing (ICIP), Beijing, China, 17–20 September 2017; IEEE Press: Piscataway, NJ, USA, 2017; pp. 2015–2019. [Google Scholar] [CrossRef]

- Xian, Y.; Tian, Y. Self-Guiding Multimodal LSTM-When We Do Not Have a Perfect Training Dataset for Image Captioning. IEEE Trans. Image Process. 2019, 28, 5241–5252. [Google Scholar] [CrossRef]

- Rennie, S.J.; Marcheret, E.; Mroueh, Y.; Ross, J.; Goel, V. Self-Critical Sequence Training for Image Captioning. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Los Alamitos, CA, USA, 21–26 July 2017; pp. 1179–1195. [Google Scholar]

- Lu, J.; Xiong, C.; Parikh, D.; Socher, R. Knowing When to Look: Adaptive Attention via a Visual Sentinel for Image Captioning. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Los Alamitos, CA, USA, 21–26 July 2017; pp. 3242–3250. [Google Scholar]

- Delbrouck, J.; Dupont, S. Bringing back simplicity and lightliness into neural image captioning. arXiv 2018, arXiv:1810.06245. [Google Scholar]

- Tanti, M.; Gatt, A.; Camilleri, K. What is the Role of Recurrent Neural Networks (RNNs) in an Image Caption Generator? In Proceedings of the 10th International Conference on Natural Language Generation, Santiago de Compostela, Spain, 4–7 September 2017; pp. 51–60. [Google Scholar] [CrossRef]

- Zhou, L.; Xu, C.; Koch, P.A.; Corso, J.J. Image Caption Generation with Text-Conditional Semantic Attention. arXiv 2016, arXiv:1606.04621. [Google Scholar]

- Chen, X.; Zitnick, C.L. Mind’s eye: A recurrent visual representation for image caption generation. In Proceedings of the 2015 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Los Alamitos, CA, USA, 7–12 June 2015; pp. 2422–2431. [Google Scholar] [CrossRef]

- Hessel, J.; Savva, N.; Wilber, M. Image Representations and New Domains in Neural Image Captioning. arXiv 2015, arXiv:1508.02091. [Google Scholar] [CrossRef]

- Song, M.; Yoo, C.D. Multimodal representation: Kneser-ney smoothing/skip-gram based neural language model. In Proceedings of the 2016 IEEE International Conference on Image Processing (ICIP), Phoenix, AZ, USA, 25–28 September 2016; pp. 2281–2285. [Google Scholar] [CrossRef]

- Hendricks, L.; Venugopalan, S.; Rohrbach, M.; Mooney, R.; Saenko, K.; Darrell, T. Deep Compositional Captioning: Describing Novel Object Categories without Paired Training Data. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Los Alamitos, CA, USA, 27–30 June 2016; pp. 1–10. [Google Scholar] [CrossRef]

- You, Q.; Jin, H.; Wang, Z.; Fang, C.; Luo, J. Image Captioning with Semantic Attention. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Los Alamitos, CA, USA, 27–30 June 2016; pp. 4651–4659. [Google Scholar] [CrossRef]

- Mao, J.; Xu, W.; Yang, Y.; Wang, J.; Yuille, A.L. Deep Captioning with Multimodal Recurrent Neural Networks (m-RNN). arXiv 2014, arXiv:1412.6632. [Google Scholar]

- Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Dehghani, M.; Minderer, M.; Heigold, G.; Gelly, S.; et al. An Image is Worth 16 × 16 Words: Transformers for Image Recognition at Scale. arXiv 2020, arXiv:2010.11929. [Google Scholar]

- Liu, W.; Chen, S.; Guo, L.; Zhu, X.; Liu, J. CPTR: Full Transformer Network for Image Captioning. arXiv 2021, arXiv:2101.10804. [Google Scholar]

- Pan, Y.; Yao, T.; Li, Y.; Mei, T. X-linear attention networks for image captioning. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Los Alamitos, CA, USA, 14–19 June 2020; pp. 10971–10980. [Google Scholar]

- Radford, A.; Kim, J.W.; Hallacy, C.; Ramesh, A.; Goh, G.; Agarwal, S.; Sastry, G.; Askell, A.; Mishkin, P.; Clark, J.; et al. Learning Transferable Visual Models From Natural Language Supervision. In Proceedings of the International Conference on Machine Learning; Available online: http://proceedings.mlr.press/v139/radford21a/radford21a.pdf (accessed on 12 July 2024).