One-Shot Learning from Prototype Stock Keeping Unit Images

Abstract

1. Introduction

- A novel modification of the Variational Prototyping Encoder (VPE) algorithm was introduced, in which prototypes are incorporated as a signal at the encoder input;

- The modified VPE was adapted for product recognition on retail shelves;

- The impact of data diversity and quality was analyzed, focusing on key aspects such as augmentation techniques, background uniformity, and optimal prototype selection;

- A comprehensive optimization of parameters and techniques for the VPE was conducted. This included methods for stopping network training, distance metrics in the latent space, network architecture, and various implemented loss functions.

2. Related Works

3. Method

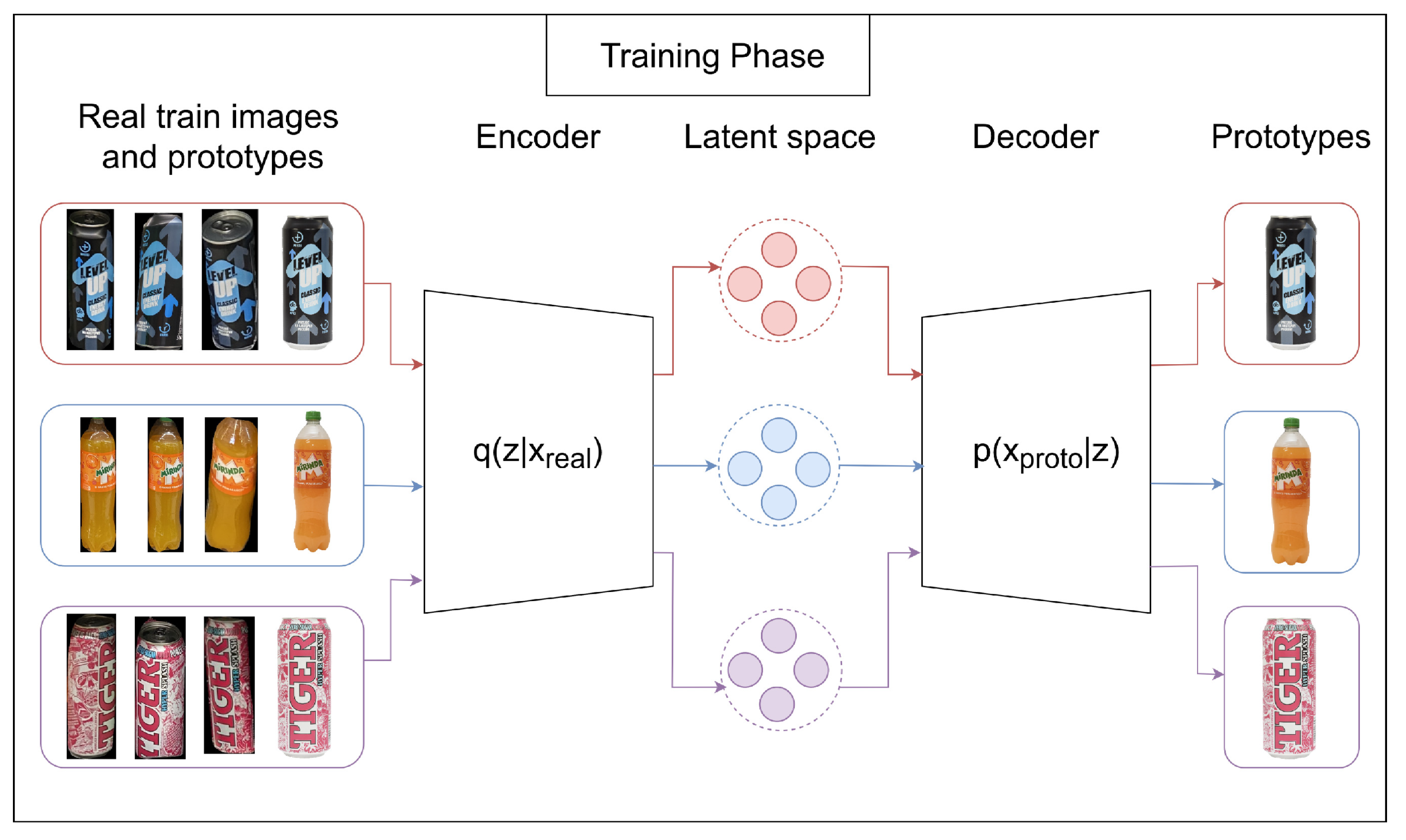

3.1. Variational Prototyping Encoder

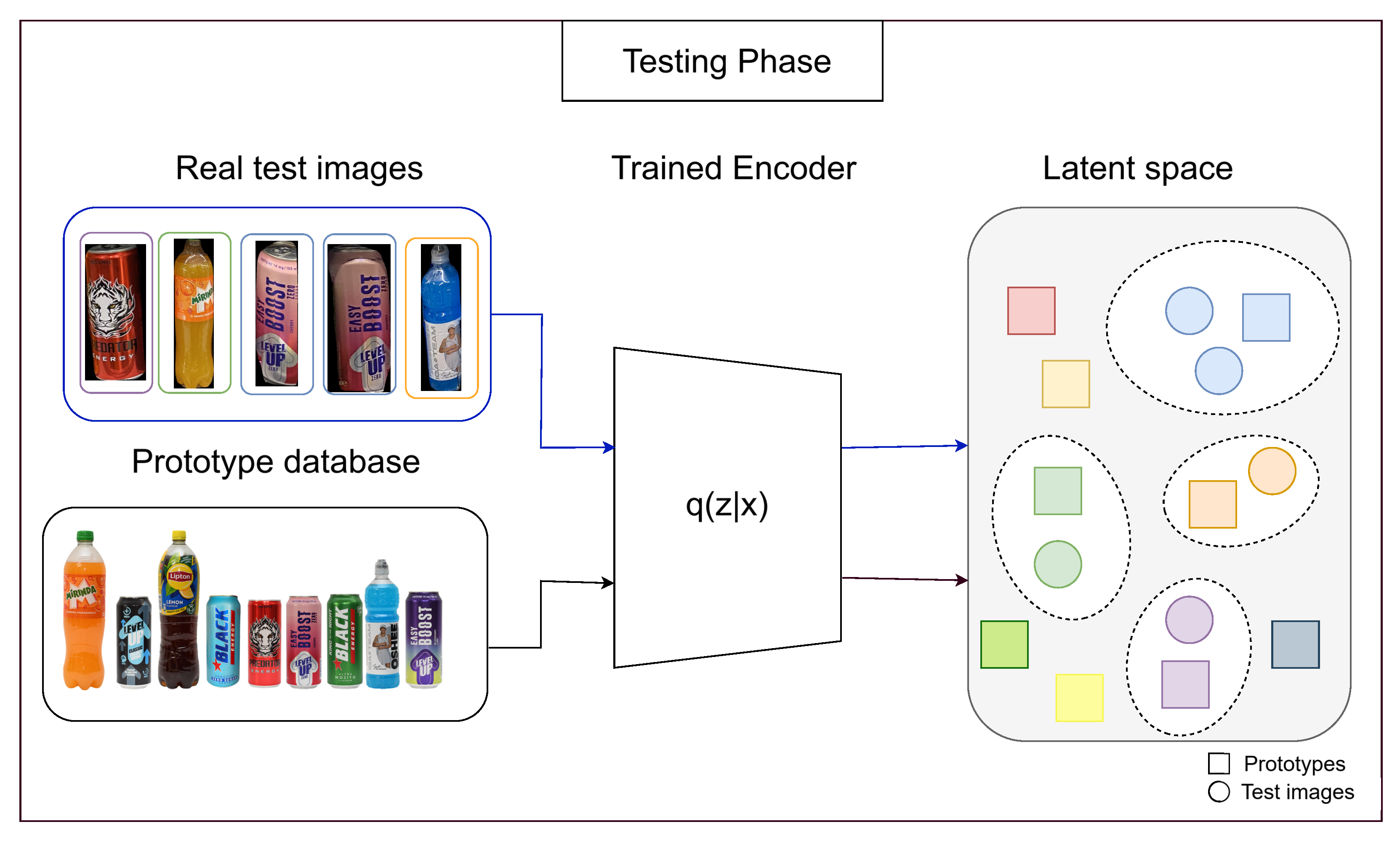

3.2. Training and Testing Phases

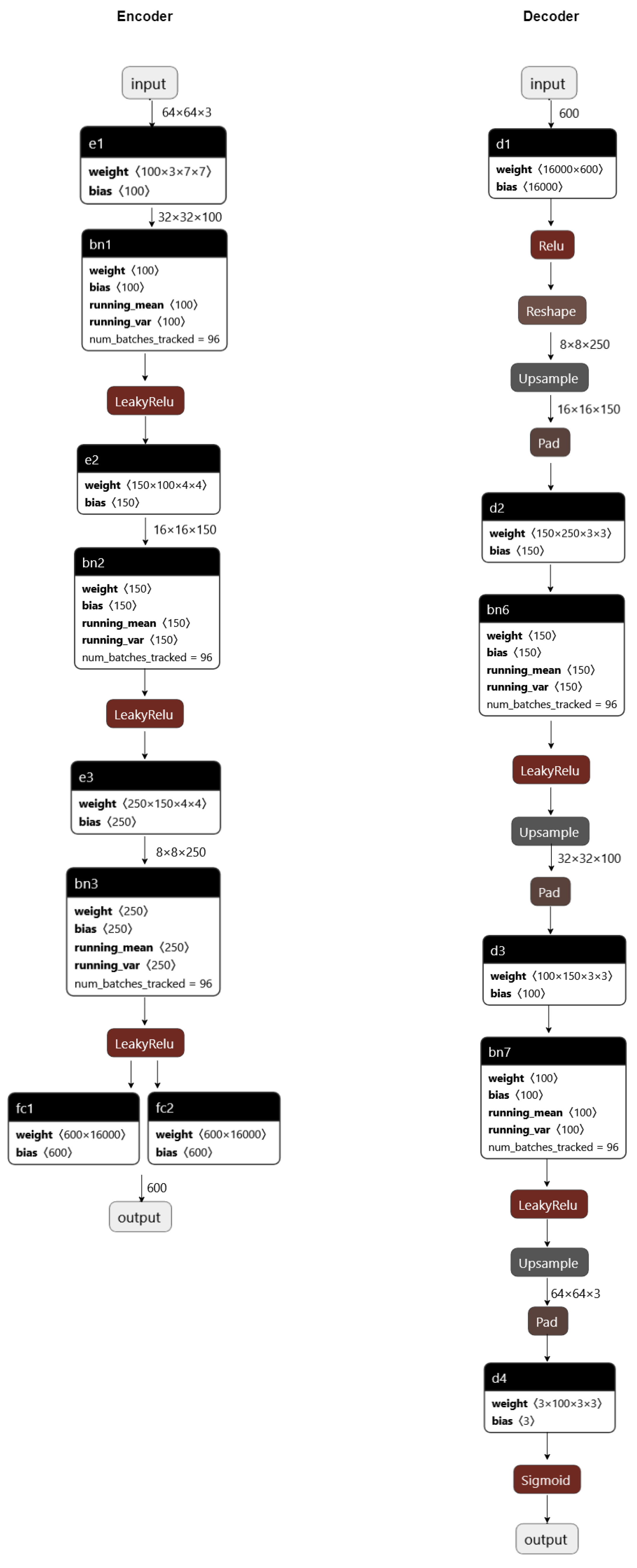

3.3. Network Architecture

3.4. Loss Functions

- Sum of two components: Binary Cross Entropy (BCE) and Kullback–Leibler Divergence (KLD) [11]:

  $$\mathcal{L}_{BCE} = -\frac{1}{K}\sum_{i=1}^{K}\left[x_i \log \hat{x}_i + (1 - x_i)\log\left(1 - \hat{x}_i\right)\right]$$

  where $x$ is the original image, $\hat{x}$ is the reconstructed image, and $K$ is the number of elements in the tensor, i.e., the product of the number of photos in the given batch, the number of RGB channels and the size of the photo.

  $$\mathcal{L}_{KLD} = -\frac{1}{2L}\sum_{j=1}^{L}\left(1 + \log \sigma_j^{2} - \mu_j^{2} - \sigma_j^{2}\right)$$

  where $\mu$ is the mean vector of the latent variables in VPE, $\sigma$ is the standard deviation vector, and $L$ is the number of elements in a tensor whose first dimension is the number of photos in the given batch and whose second dimension is the declared size of the latent space.

  The total loss function is the sum of these two components:

  $$\mathcal{L} = \mathcal{L}_{BCE} + \mathcal{L}_{KLD}$$

- Root mean square error (RMSE) measures the difference in pixel values between a band of the original image $x$ and the same band of the reconstructed image $\hat{x}$. $N$ and $M$ are the image width and height, respectively. This error is determined using the following formula [25]:

  $$RMSE = \sqrt{\frac{1}{MN}\sum_{i=1}^{M}\sum_{j=1}^{N}\left(x(i,j) - \hat{x}(i,j)\right)^{2}}$$

  The desired value of this error is zero.

- Relative average spectral error (RASE) is computed from the RMSE values using the following equation [25]:

  $$RASE = \frac{100}{\mu_x}\sqrt{\frac{1}{B}\sum_{k=1}^{B} RMSE_k^{2}}$$

  where $\mu_x$ is the mean value across all $B$ spectral bands of the original image $x$, and $RMSE_k$ is the root mean square error of the $k$-th band between the original and the reconstructed images. The desired value of this parameter is zero.

- Relative dimensionless global error in synthesis (ERGAS) is a global quality factor. This error is affected by variations in the average pixel value of the image and by its dynamic range. It can be expressed as [25]:

  $$ERGAS = 100\, r \sqrt{\frac{1}{B}\sum_{k=1}^{B}\left(\frac{RMSE_k}{\mu_k}\right)^{2}}$$

  where $r$ is the ratio of the number of the original image’s pixels to the reconstructed image’s pixels, $B$ is the total number of spectral bands, $RMSE_k$ is the root mean square error of the $k$-th band between the original and the reconstructed images, and $\mu_k$ is the mean value of the $k$-th band of the original image $x$. The optimal value for this error is close to zero.

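- Pearson’s correlation coefficient (PCC) shows the spectral correlation between two images. The value of this coefficient for the reconstructed image $\hat{x}$ and the original image $x$ is calculated as follows [25]:

  $$PCC = \frac{\sum_{i=1}^{M}\sum_{j=1}^{N}\left(x(i,j) - \bar{x}\right)\left(\hat{x}(i,j) - \bar{\hat{x}}\right)}{\sqrt{\sum_{i=1}^{M}\sum_{j=1}^{N}\left(x(i,j) - \bar{x}\right)^{2}\,\sum_{i=1}^{M}\sum_{j=1}^{N}\left(\hat{x}(i,j) - \bar{\hat{x}}\right)^{2}}}$$

  where $\bar{x}$ and $\bar{\hat{x}}$ are the average values of the $x$ and $\hat{x}$ images, while $M$ and $N$ denote the shape of the images. The desired value of this coefficient is one.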
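The loss functions listed above can be sketched in NumPy as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: images are assumed to be arrays of shape `(B, M, N)` with values in `[0, 1]`, the function names and the `EPS` guard are hypothetical, and BCE and KLD are averaged over $K$ and $L$ elements, respectively. For training, PCC would presumably be turned into a quantity to minimise (for example `1 - pcc`), which is also an assumption.

```python
import numpy as np

EPS = 1e-8  # numerical guard against log(0); an assumption, not from the paper


def bce_kld_loss(x, x_hat, mu, sigma):
    """Mean BCE over all image elements plus mean KLD over all latent elements."""
    x_hat = np.clip(x_hat, EPS, 1.0 - EPS)
    bce = -np.mean(x * np.log(x_hat) + (1.0 - x) * np.log(1.0 - x_hat))
    kld = -0.5 * np.mean(1.0 + np.log(sigma**2 + EPS) - mu**2 - sigma**2)
    return bce + kld


def band_rmse(x, x_hat):
    """Per-band RMSE for images of shape (B, M, N); returns an array of length B."""
    return np.sqrt(np.mean((x - x_hat) ** 2, axis=(1, 2)))


def rase(x, x_hat):
    """Relative average spectral error, in percent of the mean of x."""
    return 100.0 / np.mean(x) * np.sqrt(np.mean(band_rmse(x, x_hat) ** 2))


def ergas(x, x_hat, ratio=1.0):
    """ERGAS; `ratio` is the pixel-count ratio (1.0 for equally sized images)."""
    band_means = np.mean(x, axis=(1, 2))
    return 100.0 * ratio * np.sqrt(np.mean((band_rmse(x, x_hat) / band_means) ** 2))


def pcc(x, x_hat):
    """Pearson correlation coefficient between two images of equal shape."""
    xc = x - np.mean(x)
    yc = x_hat - np.mean(x_hat)
    return np.sum(xc * yc) / np.sqrt(np.sum(xc**2) * np.sum(yc**2))


# Toy example: a random 3-band image and a slightly noisy "reconstruction".
rng = np.random.default_rng(0)
x = rng.uniform(0.1, 0.9, size=(3, 8, 8))
x_hat = np.clip(x + rng.normal(0.0, 0.05, size=x.shape), 0.0, 1.0)
mu, sigma = np.zeros((1, 16)), np.ones((1, 16))

print("BCE + KLD:", bce_kld_loss(x, x_hat, mu, sigma))
print("RMSE per band:", band_rmse(x, x_hat))
print("RASE:", rase(x, x_hat), "ERGAS:", ergas(x, x_hat), "PCC:", pcc(x, x_hat))
```

For a perfect reconstruction, the RMSE, RASE and ERGAS sketches return zero and the PCC sketch returns one, which matches the desired values stated for each measure.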

4. Experiments

4.1. Dataset Overview

4.2. Implementation Overview

4.3. Metrics

- Recall, defined as the ratio of the number of instances correctly assigned to their class ($TP$) to the sum of $TP$ and the number of instances of that class incorrectly assigned to another class ($FN$), represents the model’s ability to correctly identify instances of a given class, as follows [26]:

  $$Recall = \frac{TP}{TP + FN}$$

- Precision, defined as the ratio of the number of instances correctly assigned to a class ($TP$) to the sum of $TP$ and the number of instances of other classes incorrectly assigned to that class ($FP$), measures the model’s ability to correctly classify instances as positive, as follows [26]:

  $$Precision = \frac{TP}{TP + FP}$$

- F1-score is a measure of accuracy that takes into account both precision and recall. It is the harmonic mean of the two, so it ranges from 0 to 1 and is high only when both precision and recall are high, as follows [26]:

  $$F1 = 2 \cdot \frac{Precision \cdot Recall}{Precision + Recall}$$
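The three metrics above can be computed per class from lists of true and predicted labels. The following is a minimal sketch; the function name `per_class_metrics` and the toy labels are hypothetical, and classes with an empty denominator are assigned 0.0 by convention (an assumption).

```python
from collections import Counter


def per_class_metrics(y_true, y_pred):
    """Return {class: {"recall", "precision", "f1"}} from two label sequences."""
    tp, fp, fn = Counter(), Counter(), Counter()
    for t, p in zip(y_true, y_pred):
        if t == p:
            tp[t] += 1   # correctly assigned to its class
        else:
            fn[t] += 1   # instance of class t assigned to another class
            fp[p] += 1   # instance of another class assigned to class p

    metrics = {}
    for c in sorted(set(y_true) | set(y_pred)):
        recall = tp[c] / (tp[c] + fn[c]) if tp[c] + fn[c] else 0.0
        precision = tp[c] / (tp[c] + fp[c]) if tp[c] + fp[c] else 0.0
        denom = precision + recall
        f1 = 2 * precision * recall / denom if denom else 0.0
        metrics[c] = {"recall": recall, "precision": precision, "f1": f1}
    return metrics


# Toy example with three classes; one instance of class 0 is mistaken for class 1.
y_true = [0, 0, 0, 1, 1, 2]
y_pred = [0, 0, 1, 1, 1, 2]
for cls, m in per_class_metrics(y_true, y_pred).items():
    print(cls, m)
```

As a check against the per-class results table, a class with recall 1.000 and precision 0.917 gives F1 = 2 · 0.917 / 1.917 ≈ 0.957, which is the value reported for Class 8.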

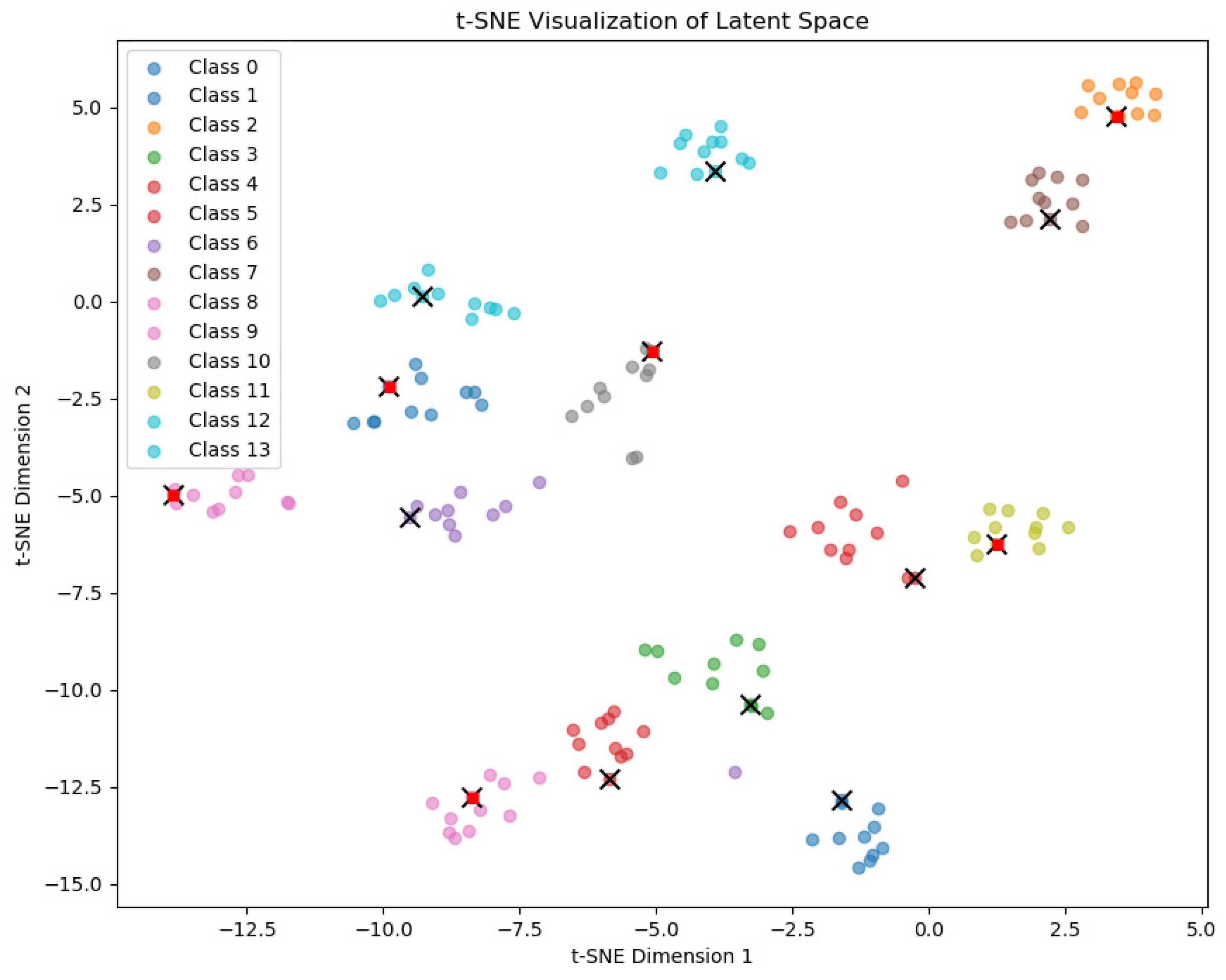

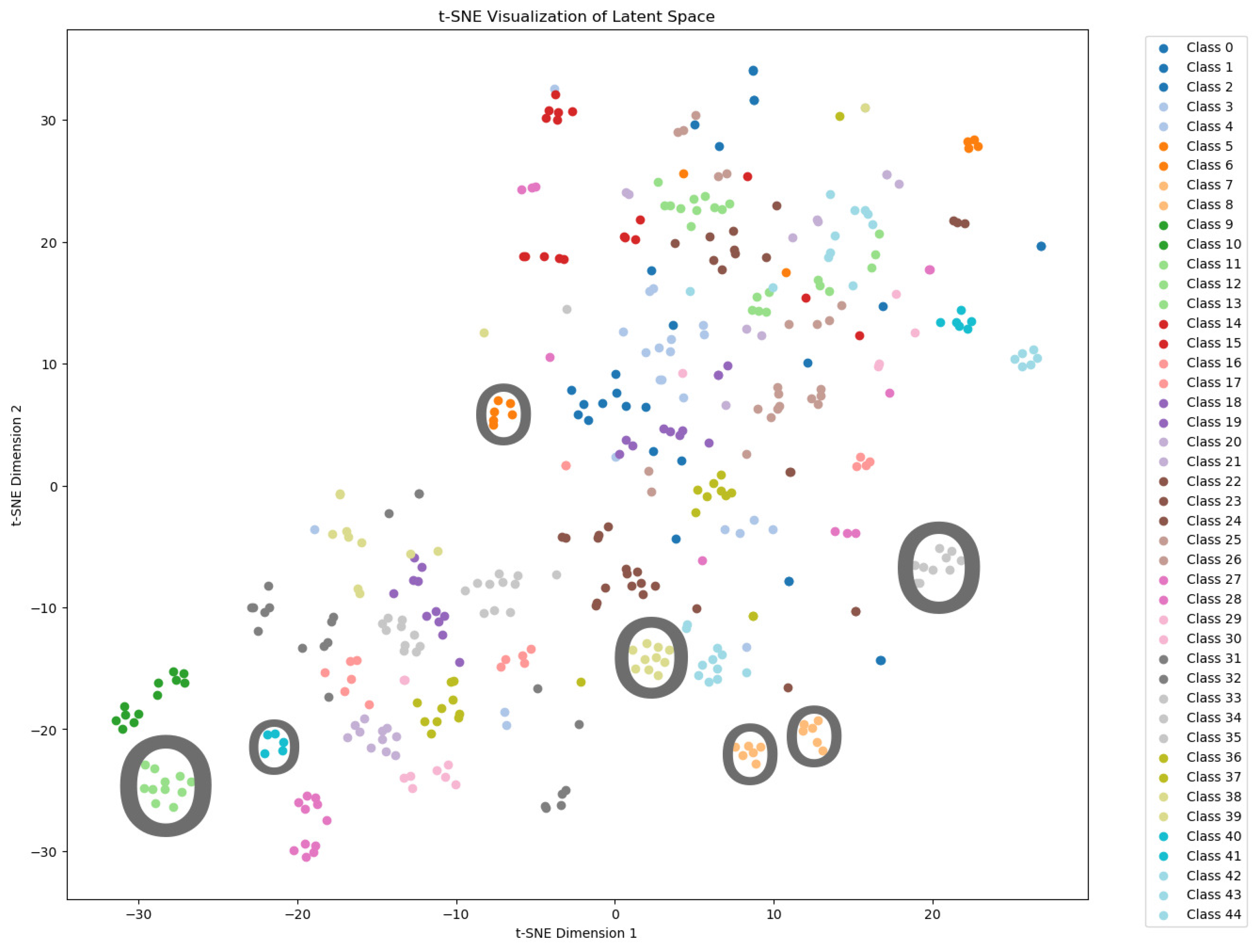

4.4. Results

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A. Network Architecture

References

1. Merler, M.; Galleguillos, C.; Belongie, S. Recognizing Groceries in situ Using in vitro Training Data. In Proceedings of the 2007 IEEE Conference on Computer Vision and Pattern Recognition, Minneapolis, MN, USA, 17–22 June 2007; pp. 1–8.

2. George, M.; Mircic, D.; Sörös, G.; Floerkemeier, C.; Mattern, F. Fine-Grained Product Class Recognition for Assisted Shopping. In Proceedings of the 2015 IEEE International Conference on Computer Vision Workshop (ICCVW), Santiago, Chile, 7–13 December 2015; pp. 546–554.

3. Melek, C.G.; Sonmez, E.B.; Albayrak, S. A survey of product recognition in shelf images. In Proceedings of the 2017 International Conference on Computer Science and Engineering (UBMK), Antalya, Turkey, 5–8 October 2017; pp. 145–150.

4. Tonioni, A.; Serra, E.; Di Stefano, L. A deep learning pipeline for product recognition on store shelves. In Proceedings of the 2018 IEEE International Conference on Image Processing, Applications and Systems (IPAS), Sophia Antipolis, France, 12–14 December 2018; pp. 25–31.

5. Geng, W.; Han, F.; Lin, J.; Zhu, L.; Bai, J.; Wang, S.; He, L.; Xiao, Q.; Lai, Z. Fine-Grained Grocery Product Recognition by One-Shot Learning. In Proceedings of the 26th ACM International Conference on Multimedia, Seoul, Republic of Korea, 22–26 October 2018; MM’18. pp. 1706–1714.

6. Leo, M.; Carcagnì, P.; Distante, C. A Systematic Investigation on end-to-end Deep Recognition of Grocery Products in the Wild. In Proceedings of the 2020 25th International Conference on Pattern Recognition (ICPR), Milan, Italy, 10–15 January 2021; pp. 7234–7241.

7. Chen, S.; Liu, D.; Pu, Y.; Zhong, Y. Advances in deep learning-based image recognition of product packaging. Image Vis. Comput. 2022, 128, 104571.

8. Selvam, P.; Faheem, M.; Dakshinamurthi, V.; Nevgi, A.; Bhuvaneswari, R.; Deepak, K.; Abraham Sundar, J. Batch Normalization Free Rigorous Feature Flow Neural Network for Grocery Product Recognition. IEEE Access 2024, 12, 68364–68381.

9. Goldman, E.; Herzig, R.; Eisenschtat, A.; Goldberger, J.; Hassner, T. Precise Detection in Densely Packed Scenes. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019; pp. 5222–5231.

10. Melek, C.G.; Battini Sönmez, E.; Varlı, S. Datasets and methods of product recognition on grocery shelf images using computer vision and machine learning approaches: An exhaustive literature review. Eng. Appl. Artif. Intell. 2024, 133, 108452.

11. Kim, J.; Oh, T.H.; Lee, S.; Pan, F.; Kweon, I.S. Variational Prototyping-Encoder: One-Shot Learning With Prototypical Images. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019; pp. 9454–9462.

12. Fei-Fei, L.; Fergus, R.; Perona, P. A Bayesian approach to unsupervised one-shot learning of object categories. In Proceedings of the Ninth IEEE International Conference on Computer Vision, Nice, France, 13–16 October 2003; Volume 2, pp. 1134–1141.

13. Lake, B.M.; Salakhutdinov, R.; Tenenbaum, J.B. Human-level concept learning through probabilistic program induction. Science 2015, 350, 1332–1338.

14. Vinyals, O.; Blundell, C.; Lillicrap, T.; Kavukcuoglu, K.; Wierstra, D. Matching Networks for One Shot Learning. In Proceedings of the Advances in Neural Information Processing Systems; Lee, D., Sugiyama, M., Luxburg, U., Guyon, I., Garnett, R., Eds.; Curran Associates, Inc.: Red Hook, NY, USA, 2016; Volume 29.

15. Sung, F.; Yang, Y.; Zhang, L.; Xiang, T.; Torr, P.H.; Hospedales, T.M. Learning to Compare: Relation Network for Few-Shot Learning. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 1199–1208.

16. Li, Z.; Zhou, F.; Chen, F.; Li, H. Meta-SGD: Learning to Learn Quickly for Few Shot Learning. arXiv 2017, arXiv:1707.09835.

17. Finn, C.; Abbeel, P.; Levine, S. Model-agnostic meta-learning for fast adaptation of deep networks. In Proceedings of the 34th International Conference on Machine Learning, Volume 70, Sydney, Australia, 6–11 August 2017; JMLR.org; pp. 1126–1135.

18. Chen, T.; Xie, G.S.; Yao, Y.; Wang, Q.; Shen, F.; Tang, Z.; Zhang, J. Semantically Meaningful Class Prototype Learning for One-Shot Image Segmentation. IEEE Trans. Multimed. 2022, 24, 968–980.

19. Snell, J.; Swersky, K.; Zemel, R. Prototypical Networks for Few-shot Learning. In Proceedings of the Advances in Neural Information Processing Systems; Guyon, I., Luxburg, U.V., Bengio, S., Wallach, H., Fergus, R., Vishwanathan, S., Garnett, R., Eds.; Curran Associates, Inc.: Red Hook, NY, USA, 2017; Volume 30.

20. Wang, C.; Huang, C.; Zhu, X.; Zhao, L. One-Shot Retail Product Identification Based on Improved Siamese Neural Networks. Circuits Syst. Signal Process. 2022, 41, 1–15.

21. Kingma, D.P.; Welling, M. Auto-Encoding Variational Bayes. In Proceedings of the 2nd International Conference on Learning Representations, ICLR 2014, Banff, AB, Canada, 14–16 April 2014; Conference Track Proceedings. Available online: https://arxiv.org/abs/1312.6114v11 (accessed on 1 July 2024).

22. Kang, J.S.; Ahn, S.C. Variational Multi-Prototype Encoder for Object Recognition Using Multiple Prototype Images. IEEE Access 2022, 10, 19586–19598.

23. Liu, Y.; Shi, D. SS-VPE: Semi-Supervised Variational Prototyping Encoder With Student’s-t Mixture Model. IEEE Trans. Instrum. Meas. 2023, 72, 1–9.

24. Xiao, C.; Madapana, N.; Wachs, J. One-Shot Image Recognition Using Prototypical Encoders with Reduced Hubness. In Proceedings of the 2021 IEEE Winter Conference on Applications of Computer Vision (WACV), Waikoloa, HI, USA, 3–8 January 2021; pp. 2251–2260.

25. Panchal, S. Implementation and Comparative Quantitative Assessment of Different Multispectral Image Pansharpening Approaches. Signal Image Process. Int. J. 2015, 6, 35.

26. Bansal, A.; Singhrova, A. Performance Analysis of Supervised Machine Learning Algorithms for Diabetes and Breast Cancer Dataset. In Proceedings of the 2021 International Conference on Artificial Intelligence and Smart Systems (ICAIS), Coimbatore, India, 25–27 March 2021; pp. 137–143.

27. Kirillov, A.; Mintun, E.; Ravi, N.; Mao, H.; Rolland, C.; Gustafson, L.; Xiao, T.; Whitehead, S.; Berg, A.C.; Lo, W.Y.; et al. Segment Anything. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Paris, France, 2–3 October 2023; pp. 4015–4026.

28. Hu, R.; Hu, W.; Li, J. Saliency Driven Nonlinear Diffusion Filtering for Object Recognition. In Proceedings of the 2013 2nd IAPR Asian Conference on Pattern Recognition, Naha, Japan, 5–8 November 2013; pp. 381–385.

29. Lowe, D.G. Distinctive Image Features from Scale-Invariant Keypoints. Int. J. Comput. Vis. 2004, 60, 91–110.

30. Bay, H.; Ess, A.; Tuytelaars, T.; Van Gool, L. Speeded-Up Robust Features (SURF). Comput. Vis. Image Underst. 2008, 110, 346–359.

| Distance | Method | Recall (All) | Recall (Train) | Recall (Test) | Top-nn (2-nn) | Top-nn (3-nn) |
|---|---|---|---|---|---|---|
| Euclidean | Reach defined number of epochs | 0.888 | 0.894 | 0.883 | 0.972 | 0.986 |
| Euclidean | Trigger after validation accuracy is achieved | 0.769 | 0.939 | 0.623 | 0.825 | 0.839 |
| Cosine | Reach defined number of epochs | 0.916 | 0.909 | 0.922 | 0.986 | 0.993 |
| Cosine | Trigger after validation accuracy is achieved | 0.888 | 0.955 | 0.831 | 0.986 | 0.986 |
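The table above compares Euclidean and cosine distances in the latent space and reports 2-nn and 3-nn results. A minimal sketch of such an evaluation is given below, assuming that each query embedding is compared with one prototype embedding per class, that prototype index `i` stands for class `i`, and that "top-n" counts a query as correct when its true class is among the `n` nearest prototypes. The function names and toy embeddings are hypothetical, and embeddings are assumed to be non-zero vectors.

```python
import numpy as np


def pairwise_distance(queries, prototypes, metric="euclidean"):
    """Distance matrix of shape (num_queries, num_prototypes)."""
    if metric == "euclidean":
        diff = queries[:, None, :] - prototypes[None, :, :]
        return np.linalg.norm(diff, axis=2)
    if metric == "cosine":
        q = queries / np.linalg.norm(queries, axis=1, keepdims=True)
        p = prototypes / np.linalg.norm(prototypes, axis=1, keepdims=True)
        return 1.0 - q @ p.T  # cosine distance = 1 - cosine similarity
    raise ValueError(f"unknown metric: {metric}")


def top_n_recall(queries, labels, prototypes, n=1, metric="euclidean"):
    """Fraction of queries whose true class is among the n nearest prototypes."""
    dist = pairwise_distance(queries, prototypes, metric)
    nearest = np.argsort(dist, axis=1)[:, :n]  # indices of the n closest prototypes
    hits = (nearest == np.asarray(labels)[:, None]).any(axis=1)
    return hits.mean()


# Toy latent vectors: three class prototypes and three query embeddings.
prototypes = np.array([[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0]])
queries = np.array([[0.9, 0.1], [0.2, 0.8], [0.6, 0.7]])
labels = [0, 1, 0]

for metric in ("euclidean", "cosine"):
    for n in (1, 2):
        print(metric, n, top_n_recall(queries, labels, prototypes, n, metric))
```

In this toy example the third query lies slightly closer to the prototype of class 1 than to its own class 0, so it is missed at `n=1` but counted as correct at `n=2` under both distances.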

| Image Size | Algorithm’s Version | One-Shot Classification Recall (Classes Seen) | One-Shot Classification Recall (Classes Unseen) |
|---|---|---|---|
| | VPE | 0.939 | 0.713 |
| | VPE + aug | 0.939 | 0.896 |
| | VPE + aug + rotate | 0.576 | 0.818 |
| | VPE + stn | 0.939 | 0.948 |
| | VPE + aug + stn | 0.955 | 0.896 |
| | VPE | 0.924 | 0.740 |
| | VPE + aug | 0.970 | 0.909 |
| | VPE + aug + rotate | 0.712 | 0.909 |
| | VPE + stn | 0.939 | 0.935 |
| | VPE + aug + stn | 0.909 | 0.922 |

| Loss Function | One-Shot Classification Recall (Classes Seen) | One-Shot Classification Recall (Classes Unseen) |
|---|---|---|
| BCE + KLD | 0.970 | 0.949 |
| RMSE | 0.970 | 0.949 |
| ERGAS | 0.939 | 0.970 |
| CC | 0.955 | 0.949 |
| RASE | 0.924 | 0.929 |

| Classes | Recall | Precision | F1-Score |
|---|---|---|---|
| Seen | | | |
| Class 1, Black Energy, ultra mango, can, orange | 1.000 | 1.000 | 1.000 |
| Class 2, Coca-cola, bottle | 1.000 | 1.000 | 1.000 |
| Class 8, Easy boost, zero sugar, cherry, can, pink | 1.000 | 0.917 | 0.957 |
| Class 9, Easy boost, blueberry and lime, can, purple | 1.000 | 1.000 | 1.000 |
| Class 10, Level up Classic Energy Drink, can, blue | 1.000 | 1.000 | 1.000 |
| Class 11, Dzik, tropic, can, green | 1.000 | 1.000 | 1.000 |
| Unseen | | | |
| Class 0, Black Energy, zero sugar, paradise, can, light-blue | 1.000 | 1.000 | 1.000 |
| Class 3, Tiger Pure, passion fruit-lemon, can, light-yellow | 0.909 | 0.909 | 0.909 |
| Class 4, Tiger Hyper Splash, exotic, can, pink | 0.909 | 0.909 | 0.909 |
| Class 5, Black Energy, ultra mojito, can, green | 1.000 | 1.000 | 1.000 |
| Class 6, Red Bull Purple Edition, sugarfree, açai, can, purple | 0.909 | 1.000 | 0.952 |
| Class 7, Lipton Ice Tea, lemon, bottle | 1.000 | 1.000 | 1.000 |
| Class 12, Oshee Isotonic Drink, multifruit, narrow bottle, blue | 1.000 | 1.000 | 1.000 |
| Class 13, Oshee Vitamin Water, lemon-orange, bottle, blue | 1.000 | 1.000 | 1.000 |

| Category | One-Shot Classification Recall (Classes Seen) | One-Shot Classification Recall (Classes Unseen) |
|---|---|---|
| Beverages | 0.939 | 0.725 |
| Dairy | 0.924 | 0.613 |
| Snacks | 0.954 | 0.754 |

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Kowalczyk, A.; Sarwas, G. One-Shot Learning from Prototype Stock Keeping Unit Images. Information 2024, 15, 526. https://doi.org/10.3390/info15090526